Abstract

Modern healthcare practice, especially in intensive care units, produces a vast amount of multivariate time series of health-related data, e.g., multi-lead electrocardiogram (ECG), pulse waveform, and blood pressure waveform. By exploring such semantically rich time series, timely and accurate prediction of medical interventions (e.g., intravenous injection) becomes possible. Existing works mainly focus on onset prediction at the granularity of hours, which is not suitable for medication interventions in emergency medicine. This research proposes a Multi-Variable Hybrid Attentive Model (MVHA) to predict the impending need for medical intervention by jointly mining multiple time series. Specifically, a two-level attention mechanism is designed to capture the fluctuation and trend patterns of different time series. MVHA is applied to predicting the impending need for intravenous injection among critical patients in intensive care units. Experiments on the MIMIC Waveform Database demonstrate that the proposed model achieves a prediction accuracy of 0.8475 and an ROC-AUC of 0.8318, outperforming all baseline models.

Keywords: medical intervention, multivariate time series, hybrid attentive model, attention mechanism

1. Introduction

Intensive care units (ICUs) play a pivotal role in caring for the most severely ill hospitalized patients [1], where clinicians must anticipate patient care needs from a set of fast-changing physiological signals, and then provide aggressive life-saving treatments or interventions [2]. To assist clinicians with supporting evidence for timely and accurate medical interventions, an effective approach is to analyze time series that contain representative information related to health status, e.g., physiological, respiratory and neurological function [3,4,5,6,7,8]. In other words, early event prediction plays an important role in ICUs, as it ensures that hospital staff are prepared for interventions [9].

To provide high level supportive analytics, numerous predictive models and computer-aided diagnostic solutions were proposed [10]. For example, different medical scoring systems (e.g., SOFA, SAPS, APACHE [11]) have been developed to provide computer assisted decision support. Usually, these scoring systems are based on some type of routine physiological measurements followed by logic-based regression techniques. However, these scoring systems are not able to discover the rich semantics of the vital physiological time series and are not well calibrated in predicting results [12].

Although medical scoring systems are still widely used for evaluating various clinical probabilities in ICUs [13,14,15], machine learning approaches have been attracting more and more attention in the recent literature. In addition to predictive models based on logistic regression, more sophisticated approaches (e.g., random forests and clustering techniques) are employed to improve the predictive performance for early detection of emergency clinical events [15,16,17]. Nevertheless, one main drawback of existing approaches is that they depend on a set of a priori features, which are designed manually based on domain knowledge and treat the multivariate time series as uncorrelated inputs. Thus, they fail to leverage the complex correlations among multivariate time series for the extraction of latent features [18]. Furthermore, none of these approaches can deal with time-varying data in ICUs [10].

Recently, deep models [19,20] show powerful data representation and feature extraction advantages, which have been successfully applied to different medical scenarios [21,22,23] and achieved significant performance improvements over traditional models [24,25,26]. For example, convolutional neural network (CNN) is capable of obtaining a compact latent representation [27], while a long short-term memory network (LSTM) can effectively learn long dependencies of time series [28]. Meanwhile, attention mechanisms have shown great promise of providing interpretable learning results [29], while preserving the versatility and flexibility of deep models. Specifically, such deep models have been successfully used for the prediction of ICU interventions, e.g., ventilation, vasopressors, colloid/crystalloid boluses [30,31].

This work aims to predict the impending need for medical interventions (intravenous injection, to be specific) by exploring the patient's physiological recordings in ICUs. Intravenous administration has become one of the most common interventions in ICUs and emergency settings. Each day, in acute and critical care conditions, over 30% of patients receive intravenous therapy [32], and a wealth of information about each hospitalized patient is recorded through pervasive sensing, including measurements of high-resolution physiological signals (such as respiration rate, pulse, blood pressure, and temperature), complete clinical information in electronic health records, and various laboratory tests. For these acutely ill patients, medical staff are required to make lifesaving decisions under strict time constraints while dealing with a high level of uncertainty in clinical data and a high volume of complex physiological signals.

Thus, for this purpose, there are two important issues to be addressed. First, changes in one or more vital signs prior to a serious adverse event are well documented, and early checking of vital signs is key to timely intervention. However, a vast amount of data with disparate types is continuously captured in real-time as patients stay at ICUs, including static variables (such as gender and age), time-varying vital signals (such as electrocardiogram and oxygen saturation), and clinical notes. Therefore, to achieve timely and accurate intervention prediction, this research needs to select a compact but useful collection of vital time series. Second, the characteristics of biomedical signals before serious adverse events can vary drastically, thus it is difficult to build classifiers based on feature engineering. Moreover, to support clinical decision making, an interpretable model is needed, which should provide easy-to-understand predictions. Therefore, considering the complex correlations among multivariate time series, how to build an interpretable prediction model is the second challenge.

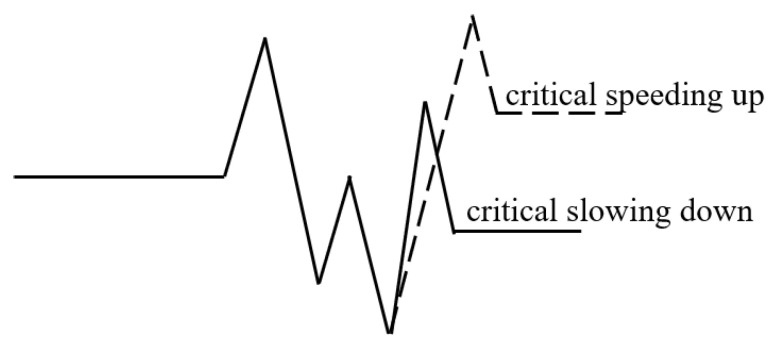

To address these challenges, this research designs a Multi-Variable Hybrid Attentive Model (MVHA) to facilitate timely prediction of medical interventions, using the fluctuation and trend characteristics of the time series of physiological signals. In other words, the model relies on the assumption that one or more physiological signals are altered prior to a medical intervention [33] and reflect potentially life-threatening conditions [34]. In ICUs, acute fluctuations and abrupt trends are typical abnormal patterns of physiological signals, which are driven by the patients’ internal perturbations (e.g., disease). Discovering and understanding such abnormal and hidden implications is critical for timely decision-making in an emergency. On one hand, the time series of vital signs can exhibit oscillations on the order of seconds to minutes, and significant prognostic value can be obtained by tracking patient-specific fluctuations. On the other hand, extra benefits can be acquired by considering the temporal trends of vital signs, which can help improve the prediction accuracy and decrease the false alarm rate. Figure 1 shows a typical abnormal period of a certain signal, which illustrates two kinds of abnormal fluctuations, i.e., abnormal speeding up and abnormal slowing down.

Figure 1.

Conceptual illustration of abnormal fluctuations.

Among the physiological signals recorded in ICUs, ECG is one of the most important vital signs [35]. By analyzing the ECG time series, researchers can not only reveal the respiratory rate, heart rate and variability, but also reduce false alarms in ICUs [36,37,38]. Thus, ECG provides a good opportunity for understanding the patient’s physiological status. Recently, a number of models have been developed for end-to-end ECG diagnosis and have shown superior performance [39,40,41,42]. However, these models were directly fed with raw ECG waveforms, without exploring the fine-grained temporal fluctuations or trends, which are key to ECG-based medical diagnoses [31], especially for the treatment of acute heart attacks, acute coronary syndromes, and other life-threatening symptoms in ICUs [43,44,45]. Moreover, it should be noted that other physiological signals can be important supplements for ECG-based analysis. As a result, to explore the temporal nature of multiple physiological signals, this study proposes to build a hybrid model by combining the convolutional neural network and the recurrent neural network, aiming to take full advantage of CNN’s ability to extract local features and LSTM’s capability of mining long dependencies of the time series. Specifically, in this work we mainly consider the following signals: arterial blood pressure (ABP), peripheral arterial oxygen saturation (SpO2), heart rate (HR), pulse, and respiration rate (RESP). Then, to further improve the model’s interpretability, the model incorporates a fluctuation attention mechanism for CNN and a multi-channel trend attention mechanism for LSTM. Based on attentive modeling of the hidden characteristics of multivariate signals, the model can identify the inputs that have more significant influences on its output.

To sum up, the contributions of this paper are three-fold:

First, to characterize the abnormal patterns of physiological variables more accurately, this work proposes a novel hybrid neural architecture that combines a CNN and an LSTM. In particular, the CNN finds compact latent features in each wave component, and the LSTM learns long dependencies of the time series to model the overall variation patterns.

Second, to enhance the interpretability of the proposed model, this work designs two attention mechanisms, including a fluctuation attention mechanism for CNN and a multi-channel trend attention mechanism for LSTM. Moreover, this work performs attention fusion across fluctuations and trends of different time series to characterize variation patterns according to their importance.

Third, this study achieves state-of-the-art results in the forward-facing prediction of emergency rescue medications in ICUs, which can help ensure that hospital staff are prepared for interventions as early as possible.

The remainder of this paper is organized as follows. Section 2 reviews the related work. Section 3 describes the proposed approach in detail. Experimental results are presented in Section 4. Finally, Section 5 concludes the paper.

2. Related Work

This section will briefly review the related work, which can be grouped into three categories.

2.1. ICU Scoring Models

The medical scoring model gives an assessment of the patient’s health status in the form of a score [46], which refers to the clinical severity of the patient. Forecasting such scores can help caregivers be aware of patients at risk and take appropriate actions in advance to prevent these patients from deteriorating [47]. For instance, the sequential organ failure assessment score (SOFA score), which is based on six different scores, is useful in predicting the clinical outcomes of critically ill patients [48]. In the logistic organ dysfunction system (LODS), logistic regression techniques are used to determine severity levels and provide an objective tool for identifying the organ dysfunction level (from 1 to 3) for six different organ systems [14].

Specifically, there are two widely used ICU scoring models at present. The Simplified Acute Physiology Score (SAPS) model calculates the severity of disease for patients admitted to intensive care units, by using 12 routine physiological measurements of the past 24 h [49]. The Acute Physiology And Chronic Health Evaluation (APACHE) model is used to calculate the probability of death independent of diagnosis, based on markers for the extent of the abnormality of 12 common physiological and laboratory values [50].

In general, the outputs of these models are ordinal, i.e., a higher score corresponds to a higher severity. However, all of them are based on fixed time intervals, and they consider neither the evolving clinical information nor non-linearly constructed latent features [10,30].

2.2. ICU Interventions

Intensive care unit interventions refer to medical treatments given to seriously or critically ill patients who are at risk of conditions that may be potential or established organ failures [51]. Existing studies mainly relate to the content of emergency airway care, respiratory failure and so on [52].

Mechanical ventilation (i.e., assisted respiration) is one of the most common interventions implemented in intensive care medicine [53]. For instance, a number of studies have been conducted to determine the factors that could help predict the possibility of mechanical ventilation and weaning [54,55,56]. Vasopressor administration is another commonly used intervention in the medical intensive care unit [57]. For example, Wu et al. [58] used a switching-state autoregressive model to predict the need for a vasopressor. Similarly, to make the intervention model more applicable, unsupervised switching-state autoregressive models [9] have been developed by combining waveform recordings with demographic information, aiming to simultaneously provide in-hospital early detection for five different clinical interventions.

Nevertheless, existing works mainly focus on improving the prediction performance for actionable interventions several hours ahead of onset, and none of them have explored the prediction problem of immediate intravenous injections, which is a core focus of our work.

2.3. Deep Learning on ICU Data

Intensive care treatment is highly challenging due to the continuous generation of large amounts of heterogeneous health-related data. Consequently, more and more attention is being paid to deep learning based data processing and assisted decision-making, aiming to improve the accuracy of clinical identification and prediction [24,29]. For example, Rajpurkar et al. [59] developed a multi-layer CNN model to detect arrhythmias based on ECG time series. Similarly, a deep learning based model was built to classify 12 rhythm classes [60], which achieved state-of-the-art performance.

However, these studies mainly explored the time series of a single vital sign, and could not provide a more comprehensive characterization of the patient’s status in clinical environments (especially in ICUs). A better choice is to fuse multiple simultaneously collected time series with deep models. Recently, a set of models have been proposed to combine vital physiological time series with demographic information (including age, gender, lab test results and so on) to provide clinical predictions [30,61]. Similarly, Lipton et al. [62] have shown promising results using multivariate time series of clinical measurements for learning and prediction.

Nevertheless, the timeliness and interpretability of existing models are still not good enough for the prediction of impending medication intervention needs in ICUs. Therefore, a more effective model is needed, which should be able to provide timely and interpretable predictions, by exploring the fine-grained temporal trends and fluctuations of multivariate time series.

3. Methodology

This section describes the proposed multi-variable hybrid CNN-LSTM model, which is mainly composed of a multivariate input processing layer, a hybrid attentive model layer and a predictive output layer.

3.1. Overview of MVHA

This subsection first briefly describes the framework of MVHA and introduces the notations used in this article. We denote multivariate physiological signals as $S = \{G, L\}$, where $G$ represents the high-frequency waveforms (such as ECG) and $L$ represents the numerical waveforms (such as HR). Aligned with the i-th intravenous intervention, we denote the multi-channel high-frequency waveforms $G$ at time step $t$ as $G_t = [g^{1}, g^{2}, \dots, g^{CG}]$, where $g^{cg} \in \mathbb{R}^{ng}$, $cg = 1, 2, \dots, CG$, $CG = |G|$, and $ng$ denotes the length of $g^{cg}$. Similarly, the numerical signals $L$ at time step $t$ are defined as $L_t = [l^{1}, l^{2}, \dots, l^{CL}]$, where $l^{cl} \in \mathbb{R}^{nl}$, $cl = 1, 2, \dots, CL$, $CL = |L|$, and $nl$ denotes the length of $l^{cl}$. Particularly, $T$ represents the time steps used for the prediction of a medical intervention, $g^{cg}$ is the continuously monitored high-frequency waveform of channel $cg$, and $l^{cl}$ denotes the numerical sign sampled by channel $cl$. The notations used are summarized in Table 1.

Table 1.

Notations for MVHA.

| Notation | Description |
|---|---|
| $S$, $s$, $s_k$ | multivariate physiological signals ($G$ and $L$), one of $g^{cg}$ or $l^{cl}$, the k-th segment in $s$ |
| $G$, $g^{cg}$, $g_k$ | high-frequency waveforms, the cg-th channel in $G$, the k-th segment in $g$ |
| $L$, $l^{cl}$, $l_k$ | numerical waveforms, the cl-th channel in $L$, the k-th segment in $l$ |
| $P \in \mathbb{R}^{U \times J}$, $p_j \in \mathbb{R}^{U}$ | the convolutional features, the j-th column in $P$ |
| $O \in \mathbb{R}^{U \times M}$, $o \in \mathbb{R}^{U}$, | output of the CNN layer, the sum of $p_j$, output of the fluctuant level attention |
| , , , | weights of the fluctuant level attention, the k-th value in , weights of the trend level attention, the k-th value in |
| $H$, $h_k$ | output of the Bi-LSTM layer, the k-th column in $H$ |
| $Z$, $z$ | combination of $H$, the sum of |
| $X$, $x$ | output of the fully connected layer, the k-th column of $X$ |
| , | feature weights of the fluctuant level attention, feature weights of the trend level attention |
| $d$, , () | output of the trend level attention, difference between and , max, mean or min of |
| | prediction result of the i-th segment |

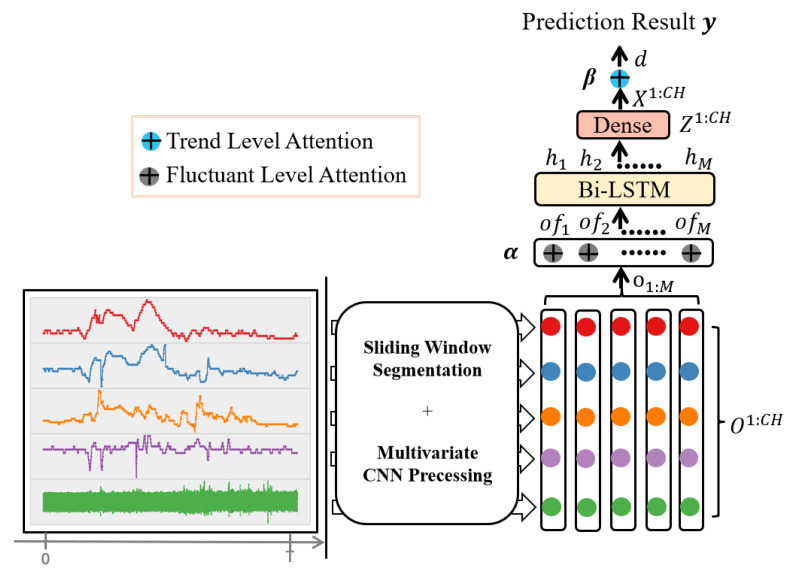

Given a time step t and an observation window W for the i-th intervention, this work takes the observed multivariate time series S(t − W, t] (including both G and L) as input, aiming to predict the outcome of the i-th instance. With a pre-defined step size, S(t − W, t] is first split into M equal-length segments (e.g., given a step with a length of 1 min, a high-frequency waveform segment sampled at 125 Hz contains 7500 samples and a numerical waveform segment sampled at 1 Hz contains 60 values). Next, a CNN is applied to these segments to obtain the convolutional output O and the output of the fluctuant level attention, followed by a Bi-LSTM that transforms this output into sequentially embedded vectors H and Z, and then a fully connected layer is adopted to convert Z into X. After that, this work uses a weighted average to integrate X across all CH = |G| + |L| channels to obtain the output of the trend level attention d, which is concatenated with a time-varying feature and used for prediction. This feature is a difference between segment statistics (i.e., the max, mean or min of a segment). Specifically, to improve the model’s accuracy and interpretability, this study designs a two-level attention mechanism (i.e., a fluctuant level attention and a trend level attention). Figure 2 depicts the framework of the proposed model.

Figure 2.

An overview of the MVHA model.

3.2. Details of MVHA

3.2.1. Multi-Variate Attentive Model

To enable effective prediction of medical interventions, this work mainly considers the abnormal wave fluctuations and trends of multivariate signals. To locate such abnormal patterns in the signals, this research proposes a hybrid attentive CNN-LSTM model to simultaneously exploit local fluctuations and global trends of physiological waveforms. Specifically, we design two attention mechanisms (i.e., fluctuant level attention and trend level attention) and embed them into the hybrid model. More details of the proposed model are shown in Algorithm 1.

| Algorithm 1 Multi-Variable Hybrid Attentive Model |

|

For a multivariate time series, to exploit the local dependency patterns among different channels, this study adopts convolutional neural networks to encode the time series and map them to the latent space. Formally, the study first splits G and L of S(t − W, t] into a sequence of equal-length segments. In particular, the segments of S(t − W, t] are defined as follows.

| (1) |
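As a rough illustration of the segmentation step, the following Python sketch splits one channel of the window S(t − W, t] into M equal-length segments. The function name `split_into_segments`, the 15-minute window, and the choice to drop trailing samples that do not fill a segment are assumptions made for illustration; only the 1 min step, the 125 Hz and 1 Hz sampling rates, and the resulting 7500 and 60 samples per segment come from the text.

```python
from typing import List, Sequence


def split_into_segments(signal: Sequence[float], fs_hz: int, step_seconds: int) -> List[List[float]]:
    """Split a 1-D signal into consecutive, non-overlapping, equal-length segments.

    Trailing samples that do not fill a whole segment are dropped
    (an assumption; the text does not say how remainders are handled).
    """
    seg_len = fs_hz * step_seconds
    if seg_len <= 0:
        raise ValueError("fs_hz and step_seconds must be positive")
    n_segments = len(signal) // seg_len
    return [list(signal[k * seg_len:(k + 1) * seg_len]) for k in range(n_segments)]


# Assumed observation window of 15 minutes, split with a 1-minute step.
window_seconds = 15 * 60
ecg = [0.0] * (125 * window_seconds)  # placeholder 125 Hz high-frequency waveform
hr = [0.0] * (1 * window_seconds)     # placeholder 1 Hz numerical waveform

ecg_segments = split_into_segments(ecg, fs_hz=125, step_seconds=60)
hr_segments = split_into_segments(hr, fs_hz=1, step_seconds=60)
print(len(ecg_segments), len(ecg_segments[0]))  # 15 7500
print(len(hr_segments), len(hr_segments[0]))    # 15 60
```

Both channels yield the same number of segments M, which is what allows the later layers to align high-frequency and numerical channels segment by segment.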

Next, 1-D convolution is applied to the obtained segments to extract features P = conv(s), where s stands for one channel of G or L, $P \in \mathbb{R}^{U \times J}$, U is the number of filters, and J is the length of the segment after convolution (a hyperparameter of CNN [29,34]). Then, the columns of P are summed along the J axis to obtain o, i.e., $o = \sum_{j=1}^{J} p_j$ with $o \in \mathbb{R}^{U}$. The dimension of the output for the M segments is finally fixed at $U \times M$, in which the first dimension corresponds to the number of filters and the second dimension corresponds to the number of segments. Therefore, the output of the CNN layer is defined as:

| (2) |

where , , and .
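The shape bookkeeping of this step can be sketched in plain Python as follows. This is a deliberately simplified, single-layer illustration under stated assumptions: the helper names `conv1d_valid` and `cnn_layer_output` are hypothetical, the filters are fixed toy values rather than learned ones, and batch normalization, ReLU and pooling are omitted. It only shows how U filters applied to M segments, followed by a sum along the J axis, give a U × M output.

```python
from typing import List, Sequence


def conv1d_valid(segment: Sequence[float], kernel: Sequence[float], stride: int = 1) -> List[float]:
    """1-D 'valid' cross-correlation of one segment with one filter (length J output)."""
    k = len(kernel)
    return [
        sum(segment[start + i] * kernel[i] for i in range(k))
        for start in range(0, len(segment) - k + 1, stride)
    ]


def cnn_layer_output(segments: Sequence[Sequence[float]],
                     filters: Sequence[Sequence[float]],
                     stride: int = 1) -> List[List[float]]:
    """Return O as a U x M nested list: O[u][k] is the feature map of
    segment k under filter u, summed along the J axis."""
    return [
        [sum(conv1d_valid(seg, f, stride)) for seg in segments]
        for f in filters
    ]


# Toy input: M = 3 segments of length 4, U = 2 filters of length 2.
segments = [[1.0, 2.0, 3.0, 4.0], [0.0, 1.0, 0.0, 1.0], [2.0, 2.0, 2.0, 2.0]]
filters = [[1.0, -1.0], [0.5, 0.5]]
O = cnn_layer_output(segments, filters)
print(len(O), len(O[0]))  # 2 3
print(O)                  # [[-3.0, -1.0, 0.0], [7.5, 1.5, 6.0]]
```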

Fluctuant Level Attentive Layer. To extract fluctuant level patterns, this study proposes a fluctuant-specific weight vector (with a size of 1 × M) to aggregate the physiological feature maps. Thus, the model obtains a better fluctuant level interpretation, in which the k-th entry of the weight vector represents the weight of the k-th fluctuant level feature. Then, to sequentially represent the history information of the physiological time series, we adopt LSTM to characterize the long-term temporal dependencies. Specifically, the LSTM units include a set of gates to control when information should be maintained in the memory cell, when it should be forgotten and when it should be output. For a given input at time t, the encoder layer employs the input gate $i_t$, the output gate $o_t$ and the forget gate $f_t$ to jointly control the cell state $c_t$ and the output $h_t$ as follows:

| (3) |

| (4) |

| (5) |

| (6) |

| (7) |

where the group of tensors W and b are the weight matrices and bias parameters to be learned during training, $x_t$ is the current input, $h_{t-1}$ corresponds to the previous state, and $c_t$ is the cell state vector at the current time step. Owing to the use of different gates, LSTM can overcome the vanishing gradient problem and capture the long-term dependencies of time series. Specifically, this model uses a standard configuration of the bidirectional LSTM network, due to its ability to capture temporal dependencies. Finally, by concatenating the forward and backward outputs of the LSTM, we obtain the sequential encoding features.
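For reference, a commonly used formulation of the LSTM update that is consistent with the gates described above is sketched below. It is the textbook form and is an assumption here, not necessarily the exact parameterization used in this work; $\sigma$ is the logistic sigmoid, $[h_{t-1}, x_t]$ denotes concatenation, and within this block $\odot$ denotes the element-wise product.

```latex
\begin{aligned}
i_t &= \sigma\left(W_i\,[h_{t-1}, x_t] + b_i\right) \\
f_t &= \sigma\left(W_f\,[h_{t-1}, x_t] + b_f\right) \\
o_t &= \sigma\left(W_o\,[h_{t-1}, x_t] + b_o\right) \\
c_t &= f_t \odot c_{t-1} + i_t \odot \tanh\left(W_c\,[h_{t-1}, x_t] + b_c\right) \\
h_t &= o_t \odot \tanh\left(c_t\right)
\end{aligned}
```

In a bidirectional network, the same update is run once over the segment sequence in forward order and once in reverse order, and the two hidden states for each segment are concatenated.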

Trend Level Attentive Layer. The trend level attentive layer is designed to obtain a more comprehensive view of the multivariate signals, by fusing attentions across all the channels. First, a fully-connected transformation is performed on the LSTM feature map as follows:

| (8) |

Then, considering that different signal channels play different roles and have different importance, this model introduces a trend-specific weight vector to fuse the trend level attentions across channels. Finally, given the encoded state d and the time-varying variable, the model predicts a categorical output based on multivariate regression as follows:

| (9) |

Specifically, the model adopts the cross-entropy loss function as follows:

| (10) |

where the loss is accumulated over the instances in a mini-batch and compares the true label of each instance with its predicted label.
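As a minimal sketch of such a loss for the binary task considered here, the following Python function computes the mean binary cross-entropy over a mini-batch. Averaging over the batch, the clipping constant `eps` and the function name `cross_entropy_loss` are assumptions made for illustration rather than details taken from the text.

```python
import math
from typing import Sequence


def cross_entropy_loss(y_true: Sequence[int], y_prob: Sequence[float], eps: float = 1e-12) -> float:
    """Mean binary cross-entropy between true labels (0/1) and predicted probabilities."""
    if len(y_true) != len(y_prob) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    total = 0.0
    for y, p in zip(y_true, y_prob):
        p = min(max(p, eps), 1.0 - eps)  # clip to avoid log(0)
        total += y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return -total / len(y_true)


# An uninformative prediction of 0.5 for every instance costs ln(2) per instance.
print(round(cross_entropy_loss([1, 0], [0.5, 0.5]), 4))  # 0.6931
```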

3.2.2. Hybrid Attention Mechanisms

The above section has described the framework of the proposed model. To further explain the design principle of the model, this subsection presents the details of the proposed hybrid attention mechanisms.

In order to better characterize fluctuation and trend changes, this study incorporates two attention mechanisms into the proposed model, i.e., a fluctuant attention and a trend attention. To obtain the fluctuant attention vector and the trend attention vector, the model uses a two-layer neural network. Specifically, the first fully connected layer calculates the scores used for computing the weights, and the second fully connected layer computes the weights via Softmax activation.

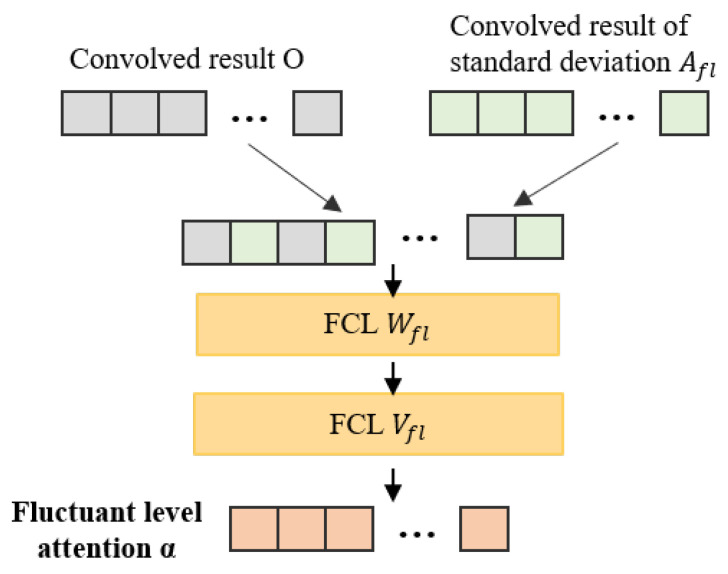

Fluctuant Attention Mechanism. To characterize fluctuations with attention weights, the model first computes the standard deviation of each obtained segment s, and obtains the fluctuant level knowledge feature vector as follows:

| (11) |

where the standard deviation is calculated for each segment s of the time series S. Afterwards, the model concatenates the knowledge features with the output of the CNN layer to obtain the attention weights:

| (12) |

where the first layer is parameterized by a weight matrix and a bias vector, the second layer by a weight vector, and ⊙ denotes an addition with broadcasting. The fluctuant attention is presented in more detail in Algorithm 2, and Figure 3 shows its structure.

| Algorithm 2 Fluctuant Attention Mechanism. |

|

Figure 3.

The structure of fluctuant attention mechanism.
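The fluctuant attention described above can be approximated by the following Python sketch. It is one possible reading, not the authors' implementation: the per-segment standard deviation is appended to the corresponding column of the CNN output, a first layer produces a hidden vector, a second layer produces one score per segment, and Softmax turns the scores into weights. The `tanh` activation, the use of the population standard deviation, the parameter names `W1`, `b1`, `w2`, and the decision to return reweighted columns are all assumptions.

```python
import math
from statistics import pstdev
from typing import List, Sequence, Tuple


def softmax(scores: Sequence[float]) -> List[float]:
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def fluctuant_attention(segments: Sequence[Sequence[float]],
                        O: Sequence[Sequence[float]],
                        W1: Sequence[Sequence[float]],
                        b1: Sequence[float],
                        w2: Sequence[float]) -> Tuple[List[float], List[List[float]]]:
    """Return (weights over the M segments, O with each column scaled by its weight).

    segments: M raw segments; O: U x M CNN output.
    W1 (hidden x (U + 1)), b1 (hidden) and w2 (hidden) are hypothetical parameters.
    """
    M = len(segments)
    std = [pstdev(seg) for seg in segments]  # knowledge feature: std of each segment
    scores = []
    for k in range(M):
        v = [row[k] for row in O] + [std[k]]  # column k of O concatenated with its std
        hidden = [math.tanh(sum(w * x for w, x in zip(w_row, v)) + b)
                  for w_row, b in zip(W1, b1)]
        scores.append(sum(w * h for w, h in zip(w2, hidden)))
    alpha = softmax(scores)
    weighted = [[alpha[k] * row[k] for k in range(M)] for row in O]
    return alpha, weighted


# Toy example: three segments with standard deviations 0, 1 and 2.
segments = [[1.0, 1.0, 1.0, 1.0], [0.0, 2.0, 0.0, 2.0], [0.0, 4.0, 0.0, 4.0]]
O = [[1.0, 1.0, 1.0]]                    # U = 1, M = 3
W1, b1, w2 = [[0.0, 1.0]], [0.0], [1.0]  # hypothetical weights that look only at the std
alpha, weighted = fluctuant_attention(segments, O, W1, b1, w2)
print([round(a, 3) for a in alpha])
```

With these toy weights, segments that fluctuate more receive larger attention weights, which is the behaviour the mechanism is meant to encourage; in a trained model the parameters would be learned jointly with the rest of the network.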

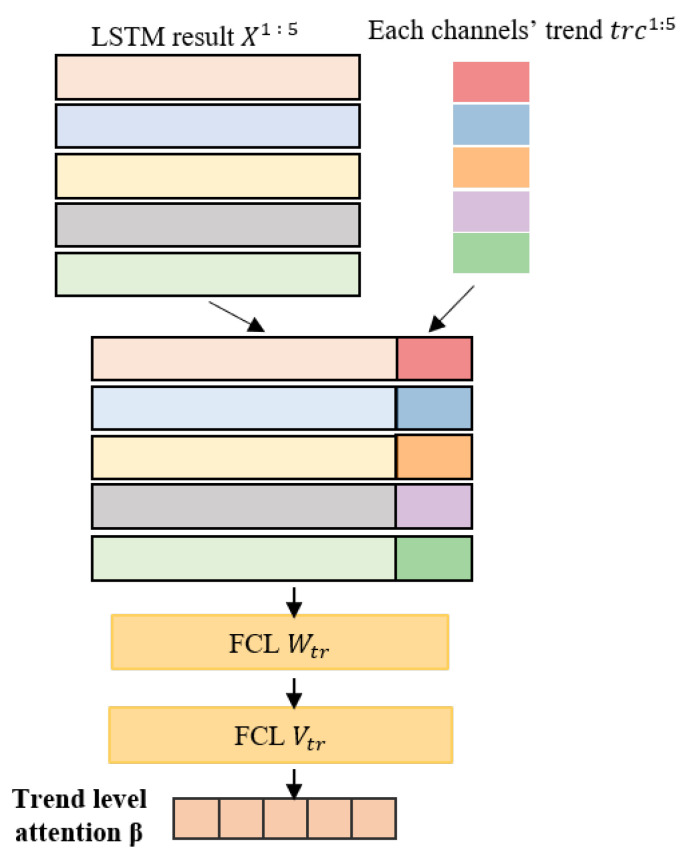

Trend Attention Mechanism. Intuitively, signals with significant changes are likely to contain more important information, and should be given more attention. However, as different channels of the multivariate time series usually have different amplitudes, this study first adopts min-max scaling to normalize the time series, based on which the model further extracts the trend level knowledge feature weights of each channel as:

| (13) |

Based on the above formula, the model obtains the trend level knowledge feature vector, and then calculates the attention weights as follows:

| (14) |

where the first and second layers are parameterized by a weight matrix and a weight vector, respectively, together with a bias vector, and ⊙ represents an addition with broadcasting. The proposed trend attention is presented in more detail in Algorithm 3, and Figure 4 shows its structure.

| Algorithm 3 Trend Attention Mechanism. |

|

Figure 4.

The structure of trend attention mechanism.
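A simplified Python sketch of the trend attention idea is given below. It normalizes each channel with min-max scaling, derives a per-channel trend feature, and fuses per-channel vectors by a weighted average. Several parts are assumptions made for illustration: the trend feature is taken as the statistic of the last segment minus that of the first segment, the learned two-layer scoring network is replaced by a Softmax over the absolute trend features, and the names `trend_feature` and `fuse_channels` are hypothetical.

```python
import math
from typing import Callable, List, Sequence, Tuple


def min_max_scale(series: Sequence[float]) -> List[float]:
    """Scale a series to [0, 1]; a constant series maps to all zeros."""
    lo, hi = min(series), max(series)
    if hi == lo:
        return [0.0 for _ in series]
    return [(v - lo) / (hi - lo) for v in series]


def mean(values: Sequence[float]) -> float:
    return sum(values) / len(values)


def trend_feature(series: Sequence[float], n_segments: int,
                  stat: Callable[[Sequence[float]], float] = mean) -> float:
    """Assumed trend feature: stat(last segment) - stat(first segment) on the scaled series."""
    scaled = min_max_scale(series)
    seg_len = len(scaled) // n_segments
    first = scaled[:seg_len]
    last = scaled[(n_segments - 1) * seg_len:n_segments * seg_len]
    return stat(last) - stat(first)


def softmax(scores: Sequence[float]) -> List[float]:
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def fuse_channels(channel_vectors: Sequence[Sequence[float]],
                  trend_features: Sequence[float]) -> Tuple[List[float], List[float]]:
    """Weighted average of per-channel vectors; weights = softmax(|trend feature|)."""
    beta = softmax([abs(t) for t in trend_features])
    dim = len(channel_vectors[0])
    d = [sum(b * vec[i] for b, vec in zip(beta, channel_vectors)) for i in range(dim)]
    return beta, d


# Toy example: heart rate rises sharply, SpO2 stays almost flat.
hr = [60.0, 60.0, 60.0, 60.0, 80.0, 90.0, 100.0, 110.0]
spo2 = [98.0, 98.0, 98.0, 98.0, 98.0, 97.0, 98.0, 98.0]
t_hr = trend_feature(hr, n_segments=2)
t_spo2 = trend_feature(spo2, n_segments=2)
beta, d = fuse_channels([[1.0, 0.0], [0.0, 1.0]], [t_hr, t_spo2])
print(round(t_hr, 2), round(t_spo2, 2))  # 0.7 -0.25
```

Min-max scaling is what makes the two trend features comparable even though heart rate and SpO2 live on different amplitude ranges; `stat` can be swapped for `max` or `min` to mirror the statistics mentioned in the text.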

4. Experiments

This section first describes the dataset and baseline models used in this work, and then presents the experimental results.

4.1. Dataset

To evaluate the performance of the proposed model, this research uses the MIMIC-III (Multi-parameter Intelligent Monitoring in Intensive Care) Waveform Database Matched Subset [63]. MIMIC is a publicly available benchmark dataset that contains over 58,000 hospital admissions from approximately 38,600 adults, whose physiological signals were recorded continuously in ICUs. The waveform records include thousands of recordings of waveforms (such as one or more channels of ECG signals) and time series of vital signs (such as heart and respiration rates). This research chose 18 rescue intravenous drugs frequently used in the critical care unit (CCU) [64], which is a special department of the ICU, and obtained 19,608 experimental records. These medications include sodium nitroprusside, nitroglycerin, dopamine, dobutamine, norepinephrine, milrinone, amiodarone, lidocaine, epinephrine, adenosine, alteplase, esmolol, diltiazem, phenylephrine, hydralazine, nesiritide, procainamide, and isoproterenol.

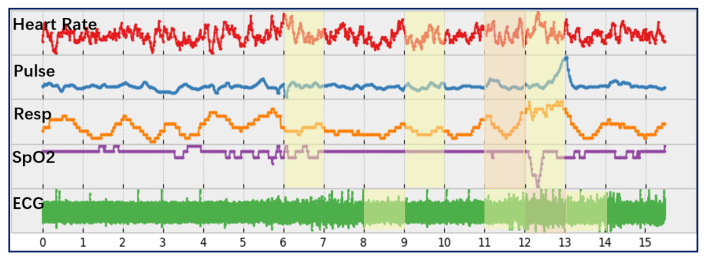

In the experiment, this work aimed to predict whether an intravenous injection of the mentioned drugs is needed. Specifically, this research formulates the prediction issue as a binary classification problem, i.e., whether the patient needs an injection within a certain time period. Normally, the medical staff of the emergency treatment in ICUs would inject a variety of drugs into patients in a relatively short time period. Therefore, this work takes all drugs that were injected 2 min before and after a certain time point as the same group. For example, as shown in Figure 5, the subject was given a group of injections, including three doses of norepinephrine and one dose of lorazepam.

Figure 5.

One subject’s multi-channel time series which includes a group of intravenous injections.
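The grouping rule can be illustrated with a short Python sketch. It assumes that injection times are available as seconds from the start of a record and that injections are chained into one group whenever consecutive injections are at most 2 min apart; the chaining interpretation and the function name `group_injections` are assumptions, since the text only states that drugs injected within 2 min of a time point are treated as one group.

```python
from typing import List, Sequence


def group_injections(times_s: Sequence[float], max_gap_s: float = 120.0) -> List[List[float]]:
    """Group injection timestamps so that consecutive injections no more than
    max_gap_s seconds apart fall into the same group."""
    groups: List[List[float]] = []
    for t in sorted(times_s):
        if groups and t - groups[-1][-1] <= max_gap_s:
            groups[-1].append(t)
        else:
            groups.append([t])
    return groups


# Six injections that form three groups.
print(group_injections([900, 0, 50, 110, 960, 3000]))
# [[0, 50, 110], [900, 960], [3000]]
```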

Accordingly, this work identified 18,792 groups of intravenous injections. For each injection event, 30 min of time series were extracted from the dataset by taking the event as the endpoint. With the constraint that there should be only one group of intravenous injections in the extracted time series, a total of 14,465 groups were obtained. The experiment took the first half of each time series as a negative sample and the second half as a positive sample. Specifically, the obtained time series consisted of five vital signs, i.e., heart rate (HR), pulse, respiration rate (RESP), peripheral capillary oxygen saturation (SpO2) and ECG. Missing values were imputed using piecewise cubic spline interpolation.
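The sample construction described above can be sketched as follows. The helper names `isolated_events` and `make_samples`, the representation of event times as integer seconds, and the treatment of boundary cases are assumptions made for illustration; the 30 min window ending at the event and the negative/positive split into halves follow the text.

```python
from typing import List, Optional, Sequence, Tuple

Sample = Tuple[List[float], int]  # (time series, label)


def isolated_events(event_times_s: Sequence[int], window_s: int = 30 * 60) -> List[int]:
    """Keep events that have no other event within the window_s seconds before them."""
    events = sorted(event_times_s)
    return [t for i, t in enumerate(events) if i == 0 or t - events[i - 1] >= window_s]


def make_samples(series: Sequence[float], fs_hz: int, event_time_s: int,
                 window_s: int = 30 * 60) -> Optional[Tuple[Sample, Sample]]:
    """Cut the window ending at the event; first half is negative (0), second half positive (1).

    Returns None when the record does not cover the whole window.
    """
    end = event_time_s * fs_hz
    start = end - window_s * fs_hz
    if start < 0 or end > len(series):
        return None
    window = list(series[start:end])
    half = len(window) // 2
    return (window[:half], 0), (window[half:], 1)


# Toy 1 Hz record in which each value equals its own timestamp.
hr = [float(i) for i in range(4000)]
print(isolated_events([3600, 3700, 9000]))  # [3600, 9000]
negative, positive = make_samples(hr, fs_hz=1, event_time_s=3600)
print(len(negative[0]), negative[1], len(positive[0]), positive[1])  # 900 0 900 1
```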

4.2. Experimental Setup and Baseline Models

Training and Implementation Details. For the training of CNNs, various numbers of convolutional layers (ranging from 1 to 5) and filters (ranging from 8 to 64) were tried, with the stride set to 1 or 2. Similar to existing studies [29,65,66], this study uses batch normalization, rectified linear unit (ReLU) activation and max pooling between convolutional layers to prevent overfitting. Specifically, the model utilizes a 3-layer CNN for the high-frequency time series (i.e., ECG) with the filter size ranging from 10 to 3, and a 2-layer CNN for the other time series with the filter size varying from 5 to 2.

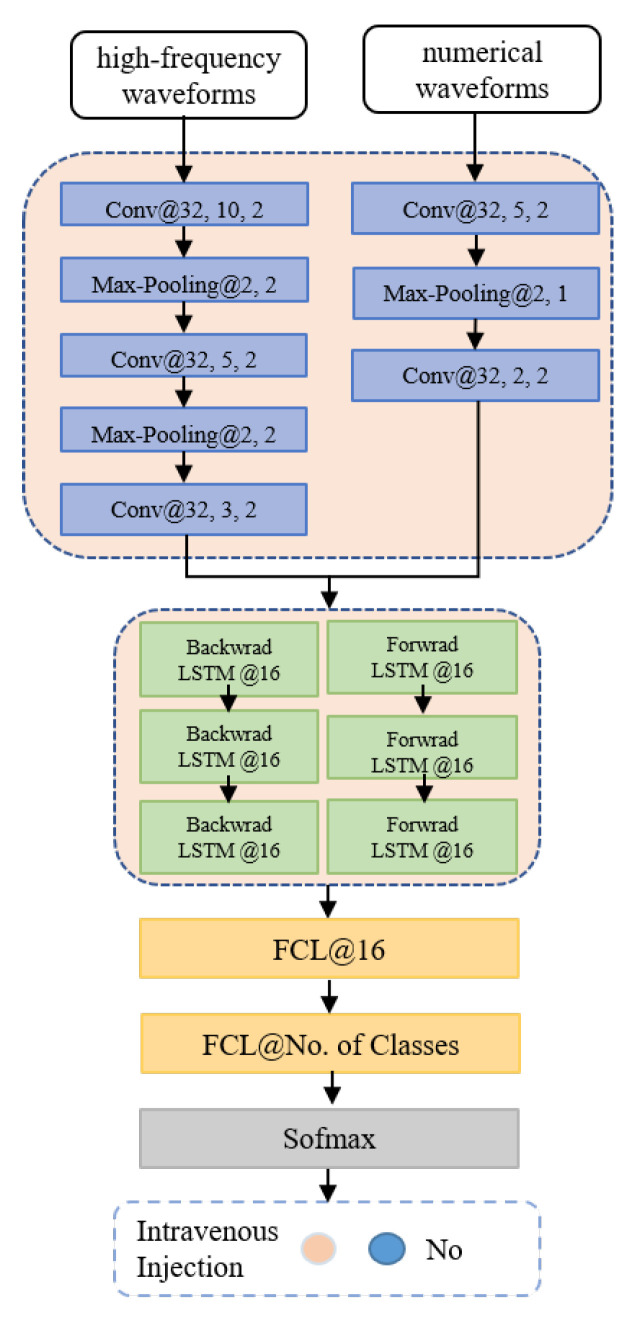

Furthermore, this work explores Bi-LSTMs with one to eight layers and 8 to 64 hidden units. Meanwhile, different configurations are tested, including different mini-batch sizes (16, 32 and 128) and different optimizers (stochastic gradient descent, Adagrad and Adam). The final model uses a 3-layer Bi-LSTM with 16 hidden units. The model’s initial weights are set randomly, and the learnable parameters are updated in each iteration by the Adam optimizer with a learning rate of 0.002. The dropout rate is set to 0.5 in the fully connected prediction layer. The model is trained with a mini-batch size of 128 samples, and the dataset is randomly divided into three subsets, i.e., a training set (70%), a validation set (10%) and a test set (20%). In our experiments, all models are implemented with PyTorch 1.1.0, and the machine used is equipped with an Intel Xeon E5-2640, 256 GB RAM, 8 Nvidia Titan-X GPUs and CUDA 8.0. The workflow of the proposed hybrid CNN-LSTM model is shown in Figure 6.

Figure 6.

The concrete architecture of the hybrid model. In each layer, the values around the symbol ‘@’ indicate the size of the convolution filter, the number of neurons and the stride of the filter, or the size and the stride of the pooling layer, respectively.
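The random 70%/10%/20% division described in the training details can be sketched as below. The function name `split_indices`, the fixed seed and the use of integer percentages are illustrative assumptions; the text does not state whether the split was made per sample or per patient, so this sketch simply shuffles sample indices.

```python
import random
from typing import List, Tuple


def split_indices(n: int, train_pct: int = 70, val_pct: int = 10,
                  seed: int = 0) -> Tuple[List[int], List[int], List[int]]:
    """Randomly split range(n) into training, validation and test index lists."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)  # seeded for reproducibility
    n_train = n * train_pct // 100
    n_val = n * val_pct // 100
    return idx[:n_train], idx[n_train:n_train + n_val], idx[n_train + n_val:]


train_idx, val_idx, test_idx = split_indices(1000)
print(len(train_idx), len(val_idx), len(test_idx))  # 700 100 200
```

If several samples come from the same patient, splitting by patient identifier instead of by sample index would avoid leakage between the subsets; whether that was done here is not stated.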

Baseline Models. In this work, different baselines are employed to compare with the proposed model MVHA.

- (a) CNN (ECG): The CNN model is applied to one-minute ECG segments, followed by a fully connected layer and a Softmax layer for prediction;
- (b) CNN-LSTM: The vanilla CNN and Bi-LSTM are trained using the full time series, with a fully connected layer and a Softmax layer on top of the hidden layers;
- (c) CNN-FAttn: The CNN model is used to encode all the time series, with the fluctuant level attention mechanism for better representation;
- (d) CLSTM-FAttn: The fluctuant level attention mechanism is introduced to the CNN-LSTM model;
- (e) CLSTM-TAttn: The trend level attention mechanism is introduced to the CNN-LSTM model.

4.3. Experimental Results

The experiments measure the models’ performance based on accuracy (ACC), area under the ROC curve (ROC-AUC) and F1 score.
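For concreteness, the three metrics can be computed as in the following self-contained Python sketch. The function names and the 0.5 decision threshold in the toy example are assumptions for illustration; the ROC-AUC is computed through its rank interpretation, i.e., the probability that a randomly chosen positive instance receives a higher score than a randomly chosen negative one, with ties counted as one half.

```python
from typing import Sequence


def accuracy(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """Fraction of instances whose predicted label equals the true label."""
    return sum(int(t == p) for t, p in zip(y_true, y_pred)) / len(y_true)


def f1_score(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """Harmonic mean of precision and recall for the positive class."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    return 2 * tp / (2 * tp + fp + fn) if tp else 0.0


def roc_auc(y_true: Sequence[int], y_score: Sequence[float]) -> float:
    """Probability that a random positive outranks a random negative (ties count 0.5)."""
    pos = [s for t, s in zip(y_true, y_score) if t == 1]
    neg = [s for t, s in zip(y_true, y_score) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


y_true = [0, 0, 1, 1]
y_score = [0.1, 0.4, 0.35, 0.8]
y_pred = [int(s >= 0.5) for s in y_score]  # assumed threshold of 0.5
print(accuracy(y_true, y_pred), roc_auc(y_true, y_score), round(f1_score(y_true, y_pred), 4))
# 0.75 0.75 0.6667
```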

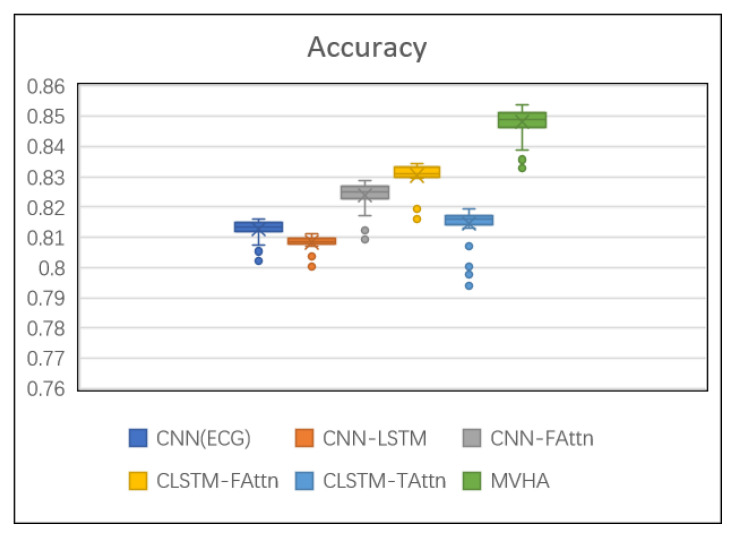

Table 2 reports the performance of each model on the prediction task. The results show that the proposed model MVHA outperforms all other models on all three metrics. Meanwhile, every attention-based model outperforms the plain CNN-LSTM on all three metrics, which agrees with the premise that attention mechanisms help distinguish between samples more clearly. For a better view of the results, a boxplot of the accuracy is shown in Figure 7.

Table 2.

Performance comparison of different models.

| Model | ACC | ROC-AUC | F1 |
|---|---|---|---|
| CNN (ECG) | 0.8129 | 0.7917 | 0.7630 |
| CNN-LSTM | 0.8090 | 0.7845 | 0.7417 |
| CNN-FAttn | 0.8257 | 0.8119 | 0.7672 |
| CLSTM-FAttn | 0.8314 | 0.8181 | 0.7581 |
| CLSTM-TAttn | 0.8137 | 0.7931 | 0.7617 |
| MVHA | 0.8475 | 0.8318 | 0.7831 |

Figure 7.

The boxplot diagram of accuracy.

CNN (ECG) achieves a relatively satisfactory classification result, and two main reasons are speculated: first, the samples came from the CCU, which treats patients with severe cardiac diseases, and these acute diseases influence the ECG directly; second, high-density signals contain enough information for certain tasks, and the designed CNN can utilize these multidimensional inputs efficiently. However, its performance was inferior to that of other models (such as CNN-FAttn), perhaps suggesting that ECG needs to be integrated with other time series data for prediction tasks. CNN-FAttn uses all the time series together with the fluctuant level attention mechanism to improve performance. In particular, CNN-FAttn surpasses CNN (ECG) by up to 1.5% for ACC, which indicates that representations from wider signal sources help improve performance.
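The comparisons in this discussion can be checked directly against Table 2. The short sketch below copies the table values into a dictionary and computes per-metric winners and absolute gaps between models. The helper names `best_model` and `absolute_gain` are illustrative only.

```python
# Values copied from Table 2.
TABLE_2 = {
    "CNN (ECG)":   {"ACC": 0.8129, "ROC-AUC": 0.7917, "F1": 0.7630},
    "CNN-LSTM":    {"ACC": 0.8090, "ROC-AUC": 0.7845, "F1": 0.7417},
    "CNN-FAttn":   {"ACC": 0.8257, "ROC-AUC": 0.8119, "F1": 0.7672},
    "CLSTM-FAttn": {"ACC": 0.8314, "ROC-AUC": 0.8181, "F1": 0.7581},
    "CLSTM-TAttn": {"ACC": 0.8137, "ROC-AUC": 0.7931, "F1": 0.7617},
    "MVHA":        {"ACC": 0.8475, "ROC-AUC": 0.8318, "F1": 0.7831},
}


def best_model(metric):
    """Name of the model with the highest value for the given metric."""
    return max(TABLE_2, key=lambda name: TABLE_2[name][metric])


def absolute_gain(model, baseline, metric):
    """Absolute difference between two models, rounded to table precision."""
    return round(TABLE_2[model][metric] - TABLE_2[baseline][metric], 4)


if __name__ == "__main__":
    for metric in ("ACC", "ROC-AUC", "F1"):
        print(metric, "best:", best_model(metric))
    print("CNN-FAttn vs CNN (ECG), ACC:",
          absolute_gain("CNN-FAttn", "CNN (ECG)", "ACC"))
```

Rounding to four decimals matches the precision of the table and avoids floating-point noise in the reported gaps.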

The remaining models incorporate both CNN and LSTM. CNN-LSTM gives relatively poorer results than the other models. A possible explanation is that a suitably short span of waveform before the injection point provides sufficient contextual information, whereas an overly long span may dilute the information already mined from earlier parts of the time series. In further studies, shorter waveforms may be used for such research. With multi-channel trend level attention added, CLSTM-TAttn achieves higher scores than CNN-LSTM but does not beat CNN-FAttn and CLSTM-FAttn, which may indicate that, compared with the trend variation over a short time, the violent fluctuation of signals is more significant for the impending need for intravenous injection. Furthermore, whichever type of attention is used, it improves the classification performance. Lastly, the proposed model MVHA, which incorporates both fluctuation and trend information, reaches the best prediction performance. That is, mining the fluctuation pattern and the overall variation trends retains more useful information for the classification.

To validate the interpretability of the proposed attentive model, Figure 8 presents the predicted risk level for an intravenous injection of an unseen patient. It can be seen that the patient is predicted to have a higher-than-average risk of intervention during the 11th–13th min (cells highlighted in yellow and orange). A time slice receives higher attention if it is closer to the time point of an intravenous injection or contains significant fluctuations, which supports the effectiveness of the proposed fluctuation level attention mechanism.

Figure 8.

The risk level for an intravenous injection predicted by MVHA. The learned attention cells are highlighted in orange (above 0.15) and yellow (between 0.1 and 0.15).

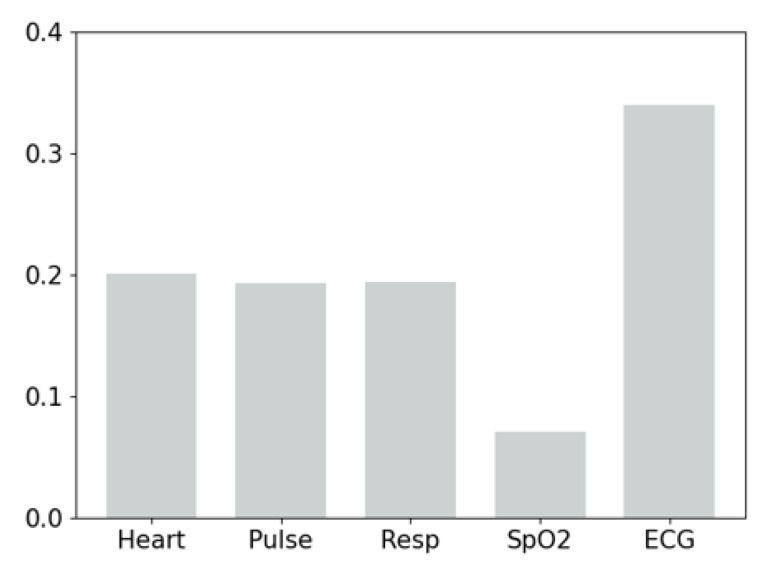
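The highlighting rule used in Figure 8 can be expressed in a few lines. The sketch below assumes that attention weights over time slices are obtained by a softmax over per-slice scores, which is a common choice but is an assumption here, and that the 0.1 boundary is inclusive for yellow. The function names are illustrative.

```python
import math


def softmax(scores):
    """Normalize raw scores into attention weights that sum to 1."""
    peak = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def highlight_level(weight, yellow=0.10, orange=0.15):
    """Map an attention weight to the color bands used in Figure 8."""
    if weight > orange:
        return "orange"
    if weight >= yellow:
        return "yellow"
    return "none"


def highlight_slices(scores):
    """Return (weight, color band) for every time slice."""
    return [(w, highlight_level(w)) for w in softmax(scores)]
```

Because the weights sum to 1, fixed thresholds such as 0.1 and 0.15 are only meaningful relative to the number of time slices; with 15 one-minute slices, a uniform weight would be about 0.067, so both bands mark above-average attention.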

In addition, for the trend level attention (as shown in Figure 9), we find that the ECG channel receives the highest attention weight, the other three channels (i.e., Heart, Pulse and Resp) attract slightly lower attention, and the SpO2 channel has the lowest attention. This indicates that, on the one hand, ECG provides the most important evidence for the prediction of intravenous injections. On the other hand, while high-frequency time series contain abundant information, it is still necessary to take into account other vital signs to enable timely and accurate medical interventions.

Figure 9.

The trend level attention of different channels.

5. Conclusions and Future Work

This paper proposed a hybrid deep model to enable timely medical intervention by exploring health-related multivariate time series. Specifically, CNNs were utilized to mine local features and LSTM to capture time-dependent features. Furthermore, to improve the interpretability of the prediction result, a two-level attention mechanism (i.e., fluctuant level attention and trend level attention) was developed to focus on key time slices and key channels. The final MVHA configuration consists of 3-layer CNNs for high-frequency time series and 2-layer CNNs for numerical waveforms, plus a 3-layer Bi-LSTM. The total number of learnable parameters in our model is 3392. Experiments on the MIMIC dataset showed that the proposed model significantly outperformed baseline models. In the future, we plan to extend the proposed model by taking into account multi-modality data, such as medical text and medical images. Another possible future direction is to study other kinds of medical interventions. Meanwhile, sparse neural networks obtained through network pruning could be adopted in a future model to reduce the computational load.
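To make the pruning idea concrete, the following is a minimal sketch of magnitude-based pruning, one common form of network pruning. It is not part of the proposed model, and the paper does not specify which pruning scheme would be used. The function name `magnitude_prune` and the flat-list weight representation are assumptions for illustration.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the fraction `sparsity` of weights with the smallest magnitude.

    `weights` is a flat list of floats; a new list is returned and the
    input is left unchanged.
    """
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be between 0 and 1")
    n_prune = int(len(weights) * sparsity)
    # Indices ordered from smallest to largest absolute value.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:n_prune]:
        pruned[i] = 0.0
    return pruned
```

In practice, pruning is usually followed by fine-tuning so that the remaining weights can compensate for the removed ones.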

Further, in this work, a hybrid attentive neural network exploiting multi-channel waveforms was used to predict whether an intravenous injection is needed. On the other hand, related studies (such as Chen et al. [67]) have demonstrated that a rule-based system in the ICU, with proper training for tagging critical events, can execute decisions much faster. However, in the context of this work, rule-based systems have the following limitations: first, when complex and high-density databases are involved in one decision, it can be hard for humans to formulate detailed and complete rules; second, successful rule-based systems depend on domain expertise, which is not fully known at design time. While deep learning is better suited to analyzing the data and looking for correlations, rule-based systems are relatively simple and their output is easy for a human to debug. Because data from a rule engine can help improve the performance of a deep learning algorithm [68], future work will consider neural networks and rule-based systems in tandem, which could benefit the framework more than replacing rules entirely.

Author Contributions

J.X. and Z.W. conceived the idea and designed the experiments; J.X. built the experiments and interpreted the results; J.X. and Z.W. wrote the original paper; Z.W. and Z.Y. participated in the review and editing; Z.Y. and B.G. provided valuable suggestions. All authors have read and agreed to the published version of the manuscript.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

Funding Statement

This work is partially supported by the National Natural Science Foundation of China (No. 61960206008, 62072375), and the Fundamental Research Funds for the Central Universities (No. 3102019AX10).

Footnotes

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1. Ervin J.N., Kahn J.M., Cohen T.R., Weingart L.R. Teamwork in the intensive care unit. Am. Psychol. 2018;73:468. doi: 10.1037/amp0000247.

- 2. Vincent J.-L. Critical care-where have we been and where are we going? Crit. Care. 2013;17:1–6. doi: 10.1186/cc11500.

- 3. Tonekaboni S., Mazwi M., Laussen P., Eytan D., Greer R., Goodfellow S.D., Goodwin A., Brudno M., Goldenberg A. Prediction of cardiac arrest from physiological signals in the pediatric ICU; Proceedings of the Machine Learning for Healthcare Conference PMLR; Palo Alto, CA, USA. 17–18 August 2018.

- 4. Boashash B. Time-Frequency Signal Analysis and Processing: A Comprehensive Reference. Academic Press; Cambridge, MA, USA: 2015.

- 5. Orphanidou C. A review of big data applications of physiological signal data. Biophys. Rev. 2019;11:83–87. doi: 10.1007/s12551-018-0495-3.

- 6. Lehman L.-w.H., Nemati S., Adams R.P., Mark R.G. Discovering shared dynamics in physiological signals: Application to patient monitoring in ICU; Proceedings of the 2012 Annual International Conference of the IEEE Engineering in Medicine and Biology Society; San Diego, CA, USA. 28 August–1 September 2012.

- 7. Huvanandana J., Thamrin C., Tracy M.B., Hinder M., Nguyen C.D., McEwan A.L. Advanced analyses of physiological signals in the neonatal intensive care unit. Physiol. Meas. 2017;38:R253. doi: 10.1088/1361-6579/aa8a13.

- 8. Chen W., Wang S., Long G., Yao L., Sheng Q.Z., Li X. Dynamic illness severity prediction via multi-task rnns for intensive care unit; Proceedings of the 2018 IEEE International Conference on Data Mining (ICDM); Singapore. 17–20 November 2018.

- 9. Ghassemi M., Wu M., Hughes M.C., Szolovits P., Doshi-Velez F. Predicting intervention onset in the ICU with switching state space models. AMIA Summits Transl. Sci. Proc. 2017;2017:82.

- 10. Kaji D.A., Zech J.R., Kim J.S., Cho S.K., Dangayach N.S., Costa A.B., Oermann E.K. An attention based deep learning model of clinical events in the intensive care unit. PLoS ONE. 2019;14:e0211057. doi: 10.1371/journal.pone.0211057.

- 11. Soares M., Fontes F., Dantas J., Gadelha D., Cariello P., Nardes F., Amorim C., Toscano L., Rocco J.R. Performance of six severity-of-illness scores in cancer patients requiring admission to the intensive care unit: A prospective observational study. Crit. Care. 2004;8:1–10. doi: 10.1186/cc2870.

- 12. Cowen S.J., Kelley M.A. Errors and bias in using predictive scoring systems. Crit. Care Clin. 1994;10:53–72. doi: 10.1016/S0749-0704(18)30144-1.

- 13. Granholm A., Christiansen C.F., Christensen S., Perner A., Møller M.H. Performance of SAPS II according to ICU length of stay: Protocol for an observational study. Acta Anaesthesiol. Scand. 2019;63:122–127. doi: 10.1111/aas.13233.

- 14. Le Gall J.R., Klar J., Lemeshow S., Saulnier F., Alberti C., Artigas A., Teres D. The Logistic Organ Dysfunction system: A new way to assess organ dysfunction in the intensive care unit. JAMA. 1996;276:802–810. doi: 10.1001/jama.1996.03540100046027.

- 15. Kotani Y., Fujii T., Uchino S., Doi K., JAKID Study Group Modification of sequential organ failure assessment score using acute kidney injury classification. J. Crit. Care. 2019;51:198–203. doi: 10.1016/j.jcrc.2019.02.026.

- 16. Churpek M.M., Yuen T.C., Winslow C., Meltzer D.O., Kattan M.W., Edelson D.P. Multicenter comparison of machine learning methods and conventional regression for predicting clinical deterioration on the wards. Crit. Care Med. 2016;44:368. doi: 10.1097/CCM.0000000000001571.

- 17. Johnson A.E.W., Pollard T.J., Shen L., Lehman L.W.H., Feng M., Ghassemi M., Moody B., Szolovits P., Anthony L., Mark R.G. MIMIC-III, a freely accessible critical care database. Sci. Data. 2016;3:1–9. doi: 10.1038/sdata.2016.35.

- 18. Du S., Li T., Yang Y., Horng S.J. Multivariate time series forecasting via attention-based encoder–decoder framework. Neurocomputing. 2020;388:269–279. doi: 10.1016/j.neucom.2019.12.118.

- 19. LeCun Y., Bengio Y., Hinton G. Deep learning. Nature. 2015;521:436–444. doi: 10.1038/nature14539.

- 20. Liu F., Zhou X., Cao J., Wang Z., Wang T., Wang H., Zhang Y. Anomaly detection in quasi-periodic time series based on automatic data segmentation and attentional LSTM-CNN. IEEE Trans. Knowl. Data Eng. 2020;34:2626–2640. doi: 10.1109/TKDE.2020.3014806.

- 21. Liang Z., Zhang G., Huang J.X., Hu Q.V. Deep learning for healthcare decision making with EMRs; Proceedings of the 2014 IEEE International Conference on Bioinformatics and Biomedicine (BIBM); Belfast, UK. 2–5 November 2014.

- 22. Cheng Y., Wang F., Zhang P., Hu J. Risk prediction with electronic health records: A deep learning approach; Proceedings of the 2016 SIAM International Conference on Data Mining; Miami, FL, USA. 5–7 May 2016.

- 23. Pham T., Tran T., Phung D., Venkatesh S. Deepcare: A deep dynamic memory model for predictive medicine; Proceedings of the Pacific-Asia Conference on Knowledge Discovery and Data Mining; Auckland, New Zealand. 19–22 April 2016; Cham, Switzerland: Springer; 2016.

- 24. Shamout F.E., Zhu T., Sharma P., Watkinson P.J., Clifton D.A. Deep interpretable early warning system for the detection of clinical deterioration. IEEE J. Biomed. Health Inform. 2019;24:437–446. doi: 10.1109/JBHI.2019.2937803.

- 25. Choi E., Bahadori M.T., Schuetz A., Stewart W.F., Sun J. Doctor ai: Predicting clinical events via recurrent neural networks; Proceedings of the Machine Learning for Healthcare Conference PMLR; Los Angeles, CA, USA. 19–20 August 2016.

- 26. Choi E., Schuetz A., Stewart W.F., Sun J. Using recurrent neural network models for early detection of heart failure onset. J. Am. Med. Inform. Assoc. 2017;24:361–370. doi: 10.1093/jamia/ocw112.

- 27. Singh A.R., Panicker M.R. Subject independent emotion recognition using EEG signals employing attention driven neural networks. Biomed. Signal Process. Control. 2022;75:103547.

- 28. Han S., Dong H., Teng X., Li X., Wang X. Correlational graph attention-based Long Short-Term Memory network for multivariate time series prediction. Appl. Soft Comput. 2021;106:107377. doi: 10.1016/j.asoc.2021.107377.

- 29. Xu Y., Biswal S., Deshpande S.R., Maher K.O., Sun J. Raim: Recurrent attentive and intensive model of multimodal patient monitoring data; Proceedings of the 24th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining; London, UK. 19–23 August 2018.

- 30. Suresh H., Hunt N., Johnson A., Celi L.A., Szolovits P., Ghassemi M. Clinical intervention prediction and understanding using deep networks. arXiv. 2017. arXiv:1705.08498.

- 31. Xiao C., Choi E., Sun J. Opportunities and challenges in developing deep learning models using electronic health records data: A systematic review. J. Am. Med. Inform. Assoc. 2018;25:1419–1428. doi: 10.1093/jamia/ocy068.

- 32. Finfer S., Myburgh J., Bellomo R. Intravenous fluid therapy in critically ill adults. Nat. Rev. Nephrol. 2018;14:541–557. doi: 10.1038/s41581-018-0044-0.

- 33. Charbonnier S., Gentil S. On-line adaptive trend extraction of multiple physiological signals for alarm filtering in intensive care units. Int. J. Adapt. Control. Signal Process. 2010;24:382–408. doi: 10.1002/acs.1123.

- 34. Lehman L.-W.H., Adams R.P., Mayaud L., Moody G.B., Malhotra A., Mark R.G., Nemati S. A physiological time series dynamics-based approach to patient monitoring and outcome prediction. IEEE J. Biomed. Health Inform. 2014;19:1068–1076. doi: 10.1109/JBHI.2014.2330827.

- 35. Balaji S., Ellenby M., McNames J., Goldstein B. Update on intensive care ECG and cardiac event monitoring. Card. Electrophysiol. Rev. 2002;6:190–195. doi: 10.1023/A:1016300202560.

- 36. Bashar S.K., Ding E., Walkey A.J., McManus D.D., Chon K.H. Noise detection in electrocardiogram signals for intensive care unit patients. IEEE Access. 2019;7:88357–88368. doi: 10.1109/ACCESS.2019.2926199.

- 37. Bashar S.K., Ding E., Albuquerque D., Winter M., Binici S., Walkey A.J., McManus D.D., Chon K.H. Atrial fibrillation detection in icu patients: A pilot study on mimic iii data; Proceedings of the 2019 41st Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC); Berlin, Germany. 23–27 July 2019.

- 38. Dai J., Sun Z., He X. Feature Engineering and Computational Intelligence in ECG Monitoring. Springer; Singapore: 2020. False Alarm Rejection for ICU ECG Monitoring; pp. 215–226.

- 39. Yildirim O. A novel wavelet sequence based on deep bidirectional LSTM network model for ECG signal classification. Comput. Biol. Med. 2018;96:189–202. doi: 10.1016/j.compbiomed.2018.03.016.

- 40. Andreotti F., Carr O., Pimentel M.A., Mahdi A., De Vos M. Comparing feature-based classifiers and convolutional neural networks to detect arrhythmia from short segments of ECG; Proceedings of the 2017 Computing in Cardiology Conference (CinC); Rennes, France. 24–27 September 2017.

- 41. Liu F., Zhou X., Cao J., Wang Z., Wang H., Zhang Y. Arrhythmias classification by integrating stacked bidirectional LSTM and two-dimensional CNN; Proceedings of the Pacific-Asia Conference on Knowledge Discovery and Data Mining; Macau, China. 14–17 April 2019; Cham, Switzerland: Springer; 2019.

- 42. Zihlmann M., Perekrestenko D., Tschannen M. Convolutional recurrent neural networks for electrocardiogram classification; Proceedings of the 2017 Computing in Cardiology Conference (CinC); Rennes, France. 24–27 September 2017.

- 43. Thoren A., Rawshani A., Herlitz J., Engdahl J., Kahan T., Gustafsson L., Djarv T. ECG-monitoring of in-hospital cardiac arrest and factors associated with survival. Resuscitation. 2020;150:130–138. doi: 10.1016/j.resuscitation.2020.03.002.

- 44. Sharma R., Bews H., Mahal H., Asselin C.Y., OBrien M., Koley L., Hiebert B., Ducas J., Jassal D.S. In-hospital cardiac arrest in the cardiac catheterization laboratory: Effective transition from an ICU-to CCU-led resuscitation team. J. Interv. Cardiol. 2019;2019:1686350. doi: 10.1155/2019/1686350.

- 45. Al-Ghamdi M.A. Morbidity pattern and outcome of patients admitted in a coronary care unit: A report from a secondary hospital in southern region, Saudi Arabia. J. Commun. Hosp. Intern. Med. Perspect. 2018;8:191–194. doi: 10.1080/20009666.2018.1500421.

- 46. Rapsang G.A., Shyam D.C. Scoring systems in the intensive care unit: A compendium. Indian J. Crit. Care Med. 2014;18:220. doi: 10.4103/0972-5229.130573.

- 47. El Adi A. Master’s Thesis. University of Tampere; Tampere, Finland: 2018. Deep Neural Networks to Forecast Cardiac and Respiratory Deterioration of Intensive Care Patients.

- 48. Vincent J.L., De Mendonça A., Cantraine F., Moreno R., Takala J., Suter P.M., Sprung C.L., Colardyn F., Blecher S. Use of the SOFA score to assess the incidence of organ dysfunction/failure in intensive care units: Results of a multicenter, prospective study. Crit. Care Med. 1998;26:1793–1800. doi: 10.1097/00003246-199811000-00016.

- 49. Le Gall J.-R., Lemeshow S., Saulnier F. A new simplified acute physiology score (SAPS II) based on a European/North American multicenter study. JAMA. 1993;270:2957–2963. doi: 10.1001/jama.1993.03510240069035.

- 50. Chhangani N.P., Amandeep M., Choudhary S., Gupta V., Goyal V. Role of acute physiology and chronic health evaluation II scoring system in determining the severity and prognosis of critically ill patients in pediatric intensive care unit. Indian J. Crit. Care Med. 2015;19:462. doi: 10.4103/0972-5229.162463.

- 51. Singer M., Little R. ABC of intensive care: Cutting edge. BMJ. 1999;319:501. doi: 10.1136/bmj.319.7208.501.

- 52. Nee P., Andrews F., Rivers E. Critical care in the emergency department: Introduction. Emerg. Med. J. 2006;23:560. doi: 10.1136/emj.2005.029942.

- 53. Spieth M.P., Thea K., de Abreu M.G. Approaches to ventilation in intensive care. Dtsch. Arztebl. Int. 2014;111:714. doi: 10.3238/arztebl.2014.0714.

- 54. Sanabria A., Gomez X., Vega V., Dominguez L.C., Osorio C. Prediction of prolonged mechanical ventilation for intensive care unit patients: A cohort study. Colomb. Medica. 2013;44:184–188. doi: 10.25100/cm.v44i3.1285.

- 55. Yang L.K., Tobin M.J. A prospective study of indexes predicting the outcome of trials of weaning from mechanical ventilation. N. Engl. J. Med. 1991;324:1445–1450. doi: 10.1056/NEJM199105233242101.

- 56. Guo L., Wang W., Zhao N., Guo L., Chi C., Hou W., Wu A., Tong H., Wang Y., Wang C., et al. Mechanical ventilation strategies for intensive care unit patients without acute lung injury or acute respiratory distress syndrome: A systematic review and network meta-analysis. Crit. Care. 2016;20:1–11. doi: 10.1186/s13054-016-1396-0.

- 57. Srivali N., Thongprayoon C., Cheungpasitporn W., Kashani K. Trends of vasopressor using in medical intensive care unit: A 7-year cohort study. Intensive Care Med. Exp. 2015;3:1–2. doi: 10.1186/2197-425X-3-S1-A960.

- 58. Wu M., Ghassemi M., Feng M., Celi L.A., Szolovits P., Doshi-Velez F. Understanding vasopressor intervention and weaning: Risk prediction in a public heterogeneous clinical time series database. J. Am. Med. Inform. Assoc. 2017;24:488–495. doi: 10.1093/jamia/ocw138.

- 59. Rajpurkar P., Hannun A.Y., Haghpanahi M., Bourn C., Ng A.Y. Cardiologist-level arrhythmia detection with convolutional neural networks. arXiv. 2017. arXiv:1707.01836.

- 60. Hannun A.Y., Rajpurkar P., Haghpanahi M., Tison G.H., Bourn C., Turakhia M.P., Ng A.Y. Cardiologist-level arrhythmia detection and classification in ambulatory electrocardiograms using a deep neural network. Nat. Med. 2019;25:65–69. doi: 10.1038/s41591-018-0268-3.

- 61. Zhang D., Yin C., Zeng J., Yuan X., Zhang P. Combining structured and unstructured data for predictive models: A deep learning approach. BMC Med. Inform. Decis. Mak. 2020;20:1–11. doi: 10.1186/s12911-020-01297-6.

- 62. Lipton Z.C., Kale D.C., Elkan C., Wetzel R. Learning to diagnose with LSTM recurrent neural networks. arXiv. 2015. arXiv:1511.03677.

- 63. Syed M., Syed S., Sexton K., Syeda H.B., Garza M., Zozus M., Syed F., Begum S., Syed A.U., Sanford J., et al. Application of machine learning in intensive care unit (ICU) settings using MIMIC dataset: Systematic review. Informatics. 2021;8:16. doi: 10.3390/informatics8010016.

- 64. Dabbagh A., Talebi Z., Rajaei S. Congenital Heart Disease in Pediatric and Adult Patients. Springer; Cham, Switzerland: 2017. Cardiovascular pharmacology in pediatric patients with congenital heart disease; pp. 117–195.

- 65. Ioffe S., Szegedy C. Batch normalization: Accelerating deep network training by reducing internal covariate shift; Proceedings of the International Conference on Machine Learning, PMLR; Lille, France. 6–11 July 2015.

- 66. Kuruvila I., Muncke J., Fischer E., Hoppe U. Extracting the auditory attention in a dual-speaker scenario from EEG using a joint CNN-LSTM model. Front. Physiol. 2021;12:700655. doi: 10.3389/fphys.2021.700655.

- 67. Chen O., Lipsky A.M., Forgacs A., Celniker G., Lilly C.M., Pessach I.M. Validation of an Automatic Tagging System for Identifying Respiratory and Hemodynamic Deterioration Events in the Intensive Care Unit. Healthc. Inform. Res. 2021;27:241–248. doi: 10.4258/hir.2021.27.3.241.

- 68. Ramesh J., Aburukba R., Sagahyroon A. A remote healthcare monitoring framework for diabetes prediction using machine learning. Healthc. Technol. Lett. 2021;8:45–57. doi: 10.1049/htl2.12010.
