Abstract

To improve the monitoring of the electrical power grid, it is necessary to evaluate how contamination influences the leakage current and its progression to a disruptive discharge. In this paper, insulators were tested in a saline chamber to simulate the increase of salt contamination on their surface. From the time series forecasting of the leakage current, it is possible to evaluate the development of the fault before a flashover occurs. For a complete evaluation, the long short-term memory (LSTM), group method of data handling (GMDH), adaptive neuro-fuzzy inference system (ANFIS), bootstrap aggregation (bagging), sequential learning (boosting), random subspace, and stacked generalization (stacking) ensemble learning models are analyzed. From the results of the best structure of the models, the hyperparameters are evaluated and the wavelet transform is used to obtain an enhanced model. The contribution of this paper is the improvement of well-established models using the wavelet transform, thus obtaining hybrid models that can be used for several applications. The results showed that using the wavelet transform leads to an improvement in all the used models, especially the wavelet ANFIS model, which had the best result, with a mean RMSE of 1.58. Furthermore, the standard deviation was 2.18, showing that the model is stable and robust for the application under study. Future work can be performed using other components of the distribution power grid that are susceptible to contamination because they are installed outdoors.

Keywords: LSTM, GMDH, ANFIS, ensemble learning models, wavelet, time series forecasting

1. Introduction

Power distribution insulators are responsible for the electrical insulation and mechanical support of the cables of electrical power transmission and distribution aerial networks [1]. Because they are usually installed outdoors, they are constantly exposed to adverse weather conditions [2]. External agents must be considered when choosing the type of insulator to be used in a network because depending on the environment and the insulator chosen, they can compromise the proper operation of the grid and the life of the insulator itself. Typically, the insulators are manufactured using materials such as porcelain, glass, or polymers [3].

Contamination (pollution, salinity, biological agents) can make the surface of the insulator more conductive, increasing the possibility of partial discharges (PDs) and dry band arcing, and consequently decreasing the efficiency of the system [4]. Contamination that may accumulate on the surface of the insulators is a problem in places close to industries, agriculture, mining, coastal regions, or unpaved streets [5]. Therefore, in some critical locations, cleaning or washing the insulators is required to mitigate insulation failure in the power system [6]. The layer of contamination deposited on the insulator is not very conductive when dry, but in the presence of moisture (light rain, fog), its conductivity can be increased by the formation of an electrolyte [7]. As the conductivity increases, PDs become more frequent and more intense, which can damage the surface of the insulator and evolve into dry band arcing that in turn may develop into a complete rupture known as flashover [8].

When a layer of wet contamination and leakage current are present on the surface of the insulator, heat is dissipated, which can evaporate portions of the wet layer and dry out part of the conductive path [9]. This dry, non-conductive region is known as the dry band. These dry regions, which interrupt the conductive path, can concentrate an intense electric field, which can, in turn, lead to disruption of the air over the dry band. This is called dry band arcing, and it may damage the insulation [10]. One way to prevent a fault from becoming irreversible is to predict the increase in leakage current through time series forecasting.

The major difficulty in using methods for time series forecasting is defining which model to use. Many authors support proposals for hybrid models in which several techniques are combined; however, a complete comparison to determine if the approach is the most adequate is usually not performed. In this paper, the models long short-term memory (LSTM) [11], group method of data handling (GMDH) [12], adaptive neuro fuzzy inference system (ANFIS) [13], bagging [14], boosting [15], random subspace [16], and stacking ensemble learning models [17] are compared. After defining the structure of each model that has the best performance for the used data set, the hyperparameters of the models and the use of wavelet transform, which has wide application for time series, are evaluated [18].

The remainder of this paper is structured as follows: Section 2 discusses the problem of contamination in insulators and the laboratory procedure used to perform the simulation of contamination accumulation. Section 3 presents the methods used for time series forecasting. Section 4 analyzes and discusses the results. Section 5 presents the conclusion.

2. Insulators Contamination

Since the power distribution insulators are usually installed outdoors, they are exposed to external agents such as sunlight, rain, and wind [19]. As time passes, it is natural for small amounts of particles such as dust or salt to be deposited on the insulator. This layer of dirt on the surface of the insulator is called contamination [20]. Contamination is one of the causes of failure in power grid insulators [21]. This happens mainly in coastal areas due to the salinity present in the sea air, on unpaved streets, and in industrial regions with mining activities or chemical industries that generate suspended dirt. This contamination is distributed on the surface of the insulator in a non-uniform way [22].

The presence of contamination on the insulator’s surface does not mean that it needs to be replaced and normally has no harmful effect as long as moisture is not present [23]. However, in the presence of moisture, a conductive path, which decreases the insulation between the high-voltage phases and the ground, can be generated [24]. When cracks and fissures occur in the insulator, the rate of contamination deposition may accelerate and consequently increase its leakage current, making it more susceptible to partial discharge events and flashovers [25].

For polymeric insulators, Maraaba et al. [26] showed that insulators with up to 2 years of use have a better hydrophobic level compared to equivalent equipment with 15 years of operation. According to their study, there is a gradual reduction in hydrophobicity over time, which impairs the performance of the insulator. It is even recommended to replace the insulators after a field period longer than 19.2 years due to changes in their electrical and mechanical characteristics. The improvement of the network in the design phase has helped obtain more robust power systems [27,28].

In the presence of a contaminated and wet filament, dry band arcing occurs. This arcing dissipates energy through the joule effect, which can generate dry bands since the evaporation capacity is greater than the capacity to fill the affected region with water [29]. These dry bands bring the high voltage point closer to the earth, concentrating intense electric fields, which in turn can give rise to a series of dry band arcing [30]. The discharges can damage the surface of the polymeric insulator, giving rise to cracks that diminish its hydrophobic property and, in the long term, can lead to a complete rupture of the insulation [31].

Among the techniques for assessing contamination, the equivalent salt deposit density (ESDD) evaluates the amount of salt (NaCl) dissolved in a certain area, measured in mg/cm$^2$. This value is defined by washing the insulator with a specific amount of water and then measuring the conductivity of the water [32]. From the ESDD measurement, it is possible to assess the current state of a specific insulator, which can be extrapolated to several insulators in the same region with equivalent exposure time [33]. The major disadvantage of the ESDD method is the need to remove the insulator from the transmission line to perform an accurate measurement [34]. An alternative is to estimate the ESDD through the information of the leakage current [35], a consequence of the salt deposition, thus not requiring the removal of the insulator to perform the evaluation.

The accumulation of contamination and the resulting PDs can put the equipment at risk and lead to outages, which makes monitoring these insulators essential for electrical utilities [36,37]. If maintenance is not performed and the insulation fails, a technician must perform corrective maintenance, locating and replacing the insulator in the field on an emergency basis [38].

One of the most effective ways to assess surface insulation degradation is by monitoring the leakage current [39]. According to Ghunem et al. [40], the leakage current is the main cause of fires on poles, which may result in wildfires. When an insulator is contaminated, there may be an increase in leakage current until there is a disruptive failure [41]. The contamination accumulates over time and becomes embedded in the surface of the insulator [42]. An increase in leakage current can therefore be an indication that a disruptive failure will occur [43].

There are a variety of techniques and equipment specialized in the detection of defective insulators. This analysis can be performed from visual inspection techniques [44] or even taking insulator samples for bench tests. The equipment commonly used for inspection of the network are ultrasound detectors [45], acoustic sensors [46], infrared [47], and ultra-violet cameras. Software with a focus on protection and security has been increasingly used [48], which can also be an alternative for use in monitoring the electrical power system. This maintenance is performed by field technicians who, when detecting possible defective insulators, clean or, if necessary, replace the insulator.

The use of machine learning to predict the increase in contamination levels, partial discharges, and/or faulty insulators has been growing recently. Because the failures do not follow a linear pattern, their monitoring is a challenging task. Models that use deep layers for time series prediction as well as models that combine simpler models to create a more robust structure are becoming popular [49]. Among the ensemble learning methods for time series forecasting, the highlights are bootstrap aggregation (bagging) [50], sequential learning (boosting) [51], random subspace [52], random forest [53], and stacked generalization [54].

Laboratory Setup

To evaluate contamination in the laboratory, tests are performed in saline chambers, where controlled contamination situations are simulated on the surface of the insulator [55]. The experiments can be performed in two ways: The first method consists of using salty water to generate saline spray. In the second method, the contamination is applied directly to the surface of the insulator. In both methods, the salt levels on the surface of the insulator can be increased until the dielectric breakdown.

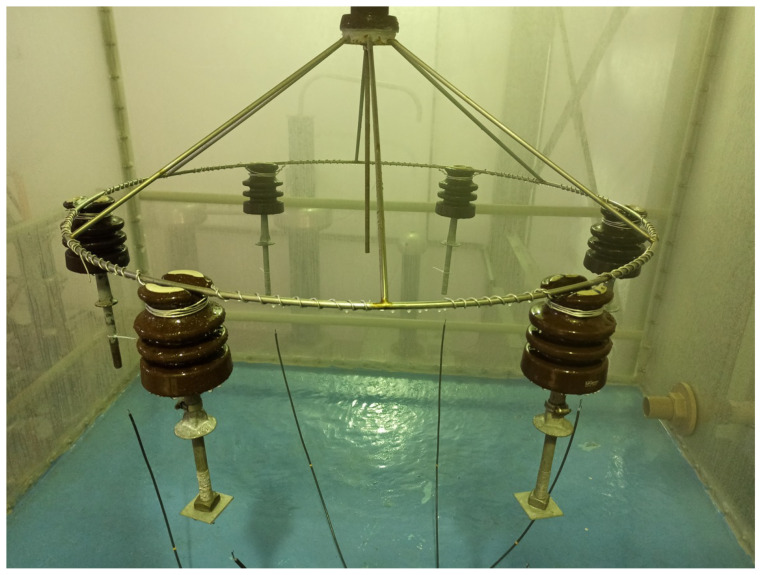

The experiment conducted in this paper uses the first method, salt spray. To simulate salt contamination that accumulates over time on the surface of the insulators, six insulators were mounted in a saline chamber. A voltage of 8.66 kV RMS at 60 Hz was applied to the insulators (same phase), and the salt concentration was increased gradually. This voltage level is defined by the NBR 10621 (similar to IEC 60507) standard used by the electrical power utility. Specifically, this standard deals with the determination of the withstand characteristics under artificial pollution for insulators in electric power grids for the 15 kV class. The arrangement of this experiment is shown in Figure 1.

Figure 1.

Insulators in a saline chamber experiment.

Saline contamination was used in this paper because it is one of the contaminants that has the greatest impact on leakage current since salinity increases the surface conductivity of insulators, thus reducing their insulating capacity. With reduced insulation and increased leakage current, there is a greater chance of a flashover occurring [56]. Specifically, a saline spray chamber was used because it is an automated method of contamination that facilitates the evaluation of the experiment in relation to time, especially when it is necessary to carry out a prolonged experiment.

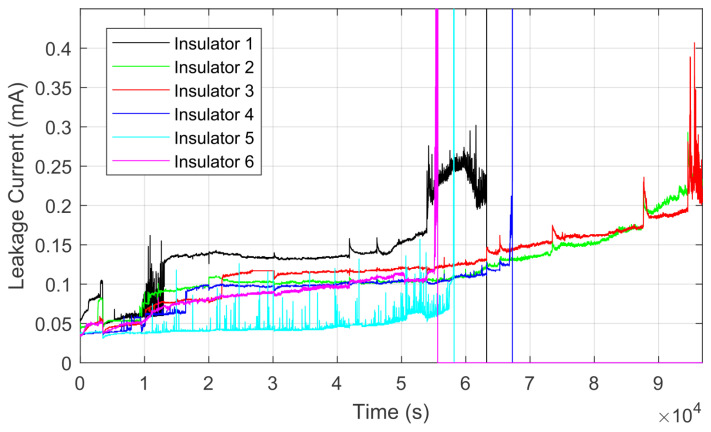

To monitor and record the applied voltage and resulting leakage current, an interface was developed in LabVIEW software. Each of the insulators was individually connected to the ground to measure the leakage current through a shunt resistor. Figure 2 presents the measured values of the leakage current during the experiment.

Figure 2.

Leakage current of the contaminated insulators.

Of the six analyzed insulators, two did not have flashover, and the respective leakage current values were measured until the end of the experiment. The other insulators were monitored only until the surface breakdown occurred. Using the signal recorded during the laboratory experiment, time series forecasting models were applied to evaluate the capability of predicting the development of a failure in relation to the increase in leakage current.

The discharges due to the increase in leakage current occur randomly. The purpose of the experiment was to subject the insulators to an extreme contamination condition, thus simulating insulators installed in the field and exposed to adverse conditions for several years. Since the discharge is random, there is no certainty that it will occur. For this reason, six insulators were used in an experiment under controlled conditions. The evolution of the leakage current in one of the insulators is enough to perform the time series prediction when there is a flashover. This means that at least one faulty insulator would be necessary to perform the evaluation. If there were no failures during the experiment, the experiment would have to be prolonged.

The experiment was conducted from 18 April 2022 to 29 April 2022, and more than 90,000 measurements were recorded. In this period, four insulators presented flashover, so the analysis could be performed in any of these components.

3. Time Series Forecasting

The values of the time series up to time $t$ are used to predict the value at a future time, $t + P$ [57]. Thus, a mapping is created from $n$ sample points, sampled every $\Delta$ units in time,

$$x(t-(n-1)\Delta), \ldots, x(t-\Delta), x(t) \tag{1}$$

to a predicted value,

$$x(t+P) \tag{2}$$

To predict the values of future time steps, the one-step-ahead approach is used: each input sequence learns to predict the value of the next time step [58]. To obtain the expected values of future time steps, the output of the training sequences is shifted by a single time step [59]. From the time series evaluation, it is feasible to predict the development of flashover voltage, considering contamination conditions on electrical power insulators [60].
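The one-step shift between input and target sequences can be illustrated with a minimal sketch. The function name `make_one_step_pairs`, the window length, and the sample values are hypothetical and chosen only for illustration; they are not taken from the experiment.

```python
def make_one_step_pairs(series, window):
    """Build (input window, next value) pairs for one-step-ahead forecasting."""
    inputs, targets = [], []
    for start in range(len(series) - window):
        inputs.append(series[start:start + window])  # values up to time t
        targets.append(series[start + window])       # value at time t + 1
    return inputs, targets


# Hypothetical leakage-current samples (arbitrary units), for illustration only.
leakage = [0.10, 0.12, 0.15, 0.19, 0.24, 0.30]
X, y = make_one_step_pairs(leakage, window=3)
```

Each target is simply the sample that follows its input window, so any regressor trained on these pairs learns a one-step-ahead mapping.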

Several models can be used in time series forecasting, making the choice of the appropriate model a difficult task. LSTM has been applied in deep learning by several authors due to its promising features in dealing with nonlinear data [61]. GMDH has performance advantages because it is an adaptive model that disregards neurons that do not help in the training process [62].

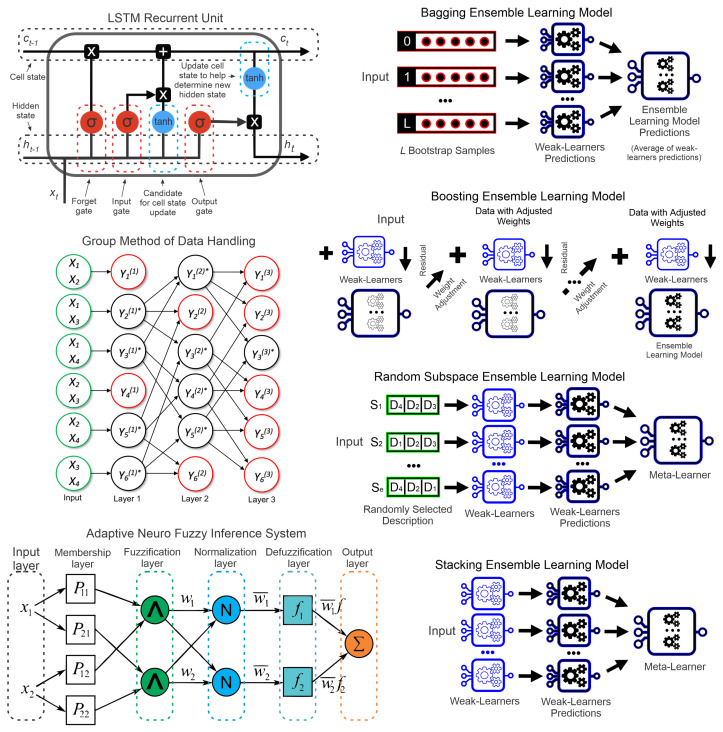

ANFIS has the advantages of fuzzy logic for time series forecasting [63]. The combination of simpler models makes the ensemble learning approach a promising alternative for forecasting [64], such as the bagging, boosting, random subspace, and stacking. These approaches will be explained and compared in this paper. The structure of these models is presented in Figure 3 and will be explained in this section.

Figure 3.

Structure of the considered models.

3.1. LSTM

LSTM is a recurrent neural network used in deep learning that has become increasingly popular [65]. The major advantage of using the LSTM is that it can learn long-term dependencies, being able to handle nonlinear variations of the system, which is an important feature for time series forecasting [66].

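An LSTM unit consists of a cell with an input gate, an output gate, and a forget gate [67]. The unit remembers values at arbitrary time intervals and the three gates control the flow of information in and out of the cell [68]. The LSTM can be calculated according to the equations:

$$\begin{aligned} i_t &= \sigma_g(W_i x_t + R_i h_{t-1} + b_i), \\ f_t &= \sigma_g(W_f x_t + R_f h_{t-1} + b_f), \\ g_t &= \tanh(W_g x_t + R_g h_{t-1} + b_g), \\ o_t &= \sigma_g(W_o x_t + R_o h_{t-1} + b_o), \\ c_t &= f_t \odot c_{t-1} + i_t \odot g_t, \\ h_t &= o_t \odot \tanh(c_t), \end{aligned} \tag{3}$$

where $i_t$, $f_t$, and $o_t$ are the input, forget, and output gates, $g_t$ is the cell candidate, $\sigma_g$ is the gate activation function, $W$ and $R$ are weight matrices, and $b$ is the bias. These values are assigned during network training [69].

In an LSTM recurrent unit, $h_{t-1}$ is the hidden state at the previous time step (short-term memory), $c_{t-1}$ is the cell state at the previous time step (long-term memory), $x_t$ is the input vector at the current time step $t$, $h_t$ is the hidden state at the current time step $t$, and $c_t$ is the cell state at the current time step $t$ [70].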
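As an illustration of how the gates update the cell and hidden states, the following is a minimal sketch of one forward step of a standard LSTM cell. It assumes the common formulation with a sigmoid gate activation and a tanh state activation; the dictionary layout `params` and the zero-valued weights are hypothetical and only keep the example easy to check by hand.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def affine(W, x, R, h, b):
    """Return W x + R h + b, with matrices stored as lists of rows."""
    return [
        sum(w * v for w, v in zip(w_row, x))
        + sum(r * v for r, v in zip(r_row, h))
        + bias
        for w_row, r_row, bias in zip(W, R, b)
    ]


def lstm_step(x_t, h_prev, c_prev, params):
    """One forward step; params maps 'i', 'f', 'g', 'o' to (W, R, b)."""
    pre = {name: affine(W, x_t, R, h_prev, b) for name, (W, R, b) in params.items()}
    i_t = [sigmoid(z) for z in pre["i"]]    # input gate
    f_t = [sigmoid(z) for z in pre["f"]]    # forget gate
    o_t = [sigmoid(z) for z in pre["o"]]    # output gate
    g_t = [math.tanh(z) for z in pre["g"]]  # cell candidate
    # Long-term memory: keep part of the old state, add part of the candidate.
    c_t = [f * c + i * g for f, c, i, g in zip(f_t, c_prev, i_t, g_t)]
    # Short-term memory: gated view of the updated cell state.
    h_t = [o * math.tanh(c) for o, c in zip(o_t, c_t)]
    return h_t, c_t


# One input feature, two hidden units, all weights and biases set to zero
# (assumed values), so every gate equals 0.5 and the candidate equals 0.
zero = ([[0.0], [0.0]], [[0.0, 0.0], [0.0, 0.0]], [0.0, 0.0])
params = {"i": zero, "f": zero, "g": zero, "o": zero}
h_t, c_t = lstm_step([0.3], [0.0, 0.0], [1.0, -2.0], params)
```

With all gates at 0.5, the cell state is simply halved, which makes the role of the forget gate visible in isolation.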

During the training phase, several optimizers can be used; the most popular are stochastic gradient descent with momentum (SGDM) [71], adaptive moment estimation (ADAM) [72], and RMS propagation (RMSProp) [73]. In this paper, the preliminary evaluation will be performed using the SGDM with 50 hidden units, and after the best structure is defined, all the mentioned optimizers will be evaluated.

3.2. GMDH

The group method of data handling is distinguished by being an inductive approach that ranks increasingly complex polynomial models and selects the best possible solution using an external criterion [74]. The external criterion is one major feature of GMDH, as it describes the requirements of the model. In this model, the number of hidden layers and the number of their neurons are determined automatically [75].

The GMDH selects the structure that results in the best performance to obtain an optimized network. When the network prediction error is no longer reduced relative to the previous layer, the addition of layers stops [76]. For a comparison of the models, a maximum of 50 neurons was initially used. The coefficients of GMDH are solved with regression methods for each pair of input variables $x_i$ and $x_j$, where:

| (4) |

| (5) |

In this paper, the coefficients are estimated by the least-squares error (LSE) function:

| (6) |

To make the analysis easier, the results can be expressed in matrix form as:

| (7) |

where

| (8) |
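The least-squares estimation of the coefficients of a single GMDH neuron can be sketched as follows. This sketch assumes the commonly used quadratic (Ivakhnenko) polynomial of two inputs, $a_0 + a_1 x_i + a_2 x_j + a_3 x_i x_j + a_4 x_i^2 + a_5 x_j^2$, and solves the normal equations directly; the function names and the synthetic data are hypothetical, and the authors' exact polynomial form and solver may differ.

```python
def design_row(xi, xj):
    """Regressors of the assumed quadratic polynomial for one sample."""
    return [1.0, xi, xj, xi * xj, xi * xi, xj * xj]


def solve(A, b):
    """Solve A a = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [rhs] for row, rhs in zip(A, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    a = [0.0] * n
    for r in range(n - 1, -1, -1):
        a[r] = (M[r][n] - sum(M[r][c] * a[c] for c in range(r + 1, n))) / M[r][r]
    return a


def fit_gmdh_neuron(x_i, x_j, y):
    """Least-squares coefficients from the normal equations (A^T A) a = A^T y."""
    rows = [design_row(u, v) for u, v in zip(x_i, x_j)]
    AtA = [[sum(r[p] * r[q] for r in rows) for q in range(6)] for p in range(6)]
    Aty = [sum(r[p] * t for r, t in zip(rows, y)) for p in range(6)]
    return solve(AtA, Aty)


# Synthetic data on a 3 x 3 grid generated from known coefficients.
grid = [(float(u), float(v)) for u in range(3) for v in range(3)]
x_i = [u for u, _ in grid]
x_j = [v for _, v in grid]
y = [1.0 + 2.0 * u - 1.0 * v + 0.5 * u * v for u, v in grid]
coefficients = fit_gmdh_neuron(x_i, x_j, y)
```

In a full GMDH network, such a neuron would be fitted for every candidate pair of inputs, and only the neurons that satisfy the external criterion would be kept for the next layer.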

3.3. ANFIS

An ANFIS is a neural network based on the Takagi–Sugeno–Kang inference model. This method unites both the benefits of neural networks and fuzzy systems in the same structure. By using both characteristics of these methods, this approach can deal with systems involving imprecise and nonlinear data [77].

The behavior of ANFIS can be understood by observing variables related to membership functions, the relationship from inputs to outputs, and fuzzy rules. Given these features, the ANFIS model might be adopted for chaotic time series forecasting [78]. The model optimization was evaluated in two ways: backpropagation, which uses gradient descent to calculate all parameters, and the hybrid method, which combines backpropagation to calculate the input membership parameters with least-squares estimation to calculate the output membership parameters.

3.4. Ensemble Learning Models

Ensemble learning modeling is based on the divide-and-conquer principle and is dedicated to enhancing the accuracy of models. Several weak learners individually perform a particular task, and when their results are combined, a more accurate model is achieved [79].

The ensemble learning approach has better results because every base model learns distinct features of the data, and then when the outputs are combined, the entire pattern of the data is learned by aggregating the weak learners (base models) [80]. Because of the suitability of the ensemble learning method to handle different types of data, applications can be found in various fields such as energy [81], security [82], public health [83], industry [84], and the environment [85].

The weak learners used in this paper are support vector regression (SVR). These base models were used, considering that they are an efficient approach for ensemble learning models [86]. The form of the SVR is defined by a convex optimization problem with linear constraints, denoted by:

| (9a) |

| (9b) |

| (9c) |

| (9d) |

where and b are the normal vector and the bias, respectively, of a training dataset (, ), and is a margin of tolerance. The is employed to transform (9b) into a soft constraint (penalized by C), letting the optimization problem satisfy the constraint, even in ambiguous cases. To accomplish the relationship between input and output data,

| (10) |

where the forecasting values are , and the mapping of the input vector is ; b and are the coefficients calculated by the minimization of the risk function (R):

| (11) |

and the loss function () is used to penalize the training errors, evaluated by:

| (12) |

Using the in the regularized function, the task becomes a quadratic programming problem. The minimization of this function can be rewritten as an equivalent optimization problem, referred to as the primal problem:

| (13) |

subject to

| (14) |

where

| (15) |

Rewriting the dual problem:

| (16) |

subject to

| (17) |

The kernel functions (K) used in the SVR for this paper are the radial basis function (RBF) (18), linear (19), and polynomial (20). For an overall evaluation, the quadratic programming (L1QP) [87], iterative single data algorithm optimization (ISDA) [88], and sequential minimal optimization (SMO) [89] optimizers were used. For the initial comparison of ensemble models, the linear kernel function and the L1QP optimizer were adopted. After the best-fit model was defined, all the presented kernel functions and optimizers were evaluated.

| (18) |

| (19) |

| (20) |
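For reference, the three kernel families named above can be sketched in their common textbook forms. The parameters `gamma`, `degree`, and `coef0` are assumptions introduced for illustration; the exact parameterization used in the paper may differ.

```python
import math


def linear_kernel(x, z):
    """Inner product of the two input vectors."""
    return sum(a * b for a, b in zip(x, z))


def rbf_kernel(x, z, gamma=1.0):
    """Gaussian kernel; gamma (assumed parameter) scales the squared distance."""
    squared_distance = sum((a - b) ** 2 for a, b in zip(x, z))
    return math.exp(-gamma * squared_distance)


def polynomial_kernel(x, z, degree=2, coef0=1.0):
    """Polynomial kernel of the given degree with offset coef0 (assumed)."""
    return (linear_kernel(x, z) + coef0) ** degree


k_lin = linear_kernel([1.0, 2.0], [3.0, 4.0])
k_rbf = rbf_kernel([1.0, 2.0], [1.0, 2.0])
k_poly = polynomial_kernel([1.0, 2.0], [3.0, 4.0])
```

The RBF kernel of a point with itself is always 1, and it decays toward 0 as the points move apart, which is why it can model nonlinear relationships that the linear kernel cannot.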

Several models are employed in the field of ensemble learning, among which bootstrap aggregation (bagging) [90], sequential learning (boosting) [91], random subspace [92], and stacked generalization [93] can be highlighted. This grouping is intended to combine multiple weak models to reduce their overall susceptibility to the bias-variance trade-off, therefore making the prediction more robust.

3.4.1. Bagging

The bagging ensemble method is a parallel method aimed at generating a set of models that is more robust than the individual models that compose it (weak learners). Bagging is focused on reducing the variance of the resulting model. In this method, the weak learners are considered independent of each other, so it is possible to train them simultaneously [94]. The bagging method is also called bootstrap aggregation since a bootstrap sample is first created for each model, and afterwards the models are aggregated, combined by the mean rule (in regression cases) [95].
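The bootstrap and mean-rule steps can be sketched as follows. To keep the example short and dependency-free, a least-squares line stands in for the SVR weak learner used in this paper; this substitution, the function names, and the synthetic data are assumptions made only for illustration.

```python
import random


def fit_line(xs, ys):
    """Least-squares line y = a + b x; a stand-in for the SVR weak learner."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0.0:  # degenerate bootstrap sample: fall back to a constant
        return mean_y, 0.0
    slope = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / sxx
    return mean_y - slope * mean_x, slope


def bagging_fit(xs, ys, n_models=25, seed=0):
    """Train each weak learner on its own bootstrap sample."""
    rng = random.Random(seed)
    models = []
    for _ in range(n_models):
        idx = [rng.randrange(len(xs)) for _ in xs]  # sample with replacement
        models.append(fit_line([xs[i] for i in idx], [ys[i] for i in idx]))
    return models


def bagging_predict(models, x):
    """Aggregate the weak learners with the mean rule."""
    return sum(a + b * x for a, b in models) / len(models)


xs = [float(v) for v in range(10)]
ys = [2.0 * x + 1.0 for x in xs]  # synthetic, noise-free data
models = bagging_fit(xs, ys)
forecast = bagging_predict(models, 12.0)
```

Because the learners do not depend on each other, the loop in `bagging_fit` could be run in parallel without changing the result.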

3.4.2. Boosting

The boosting ensemble learning is a process characterized as a sequential learning approach. Weak learners are not independently trained, focusing on the reduction of the bias of the individual models [96]. Indeed, for the regression tasks, the effectiveness of the boosting approach is due to the fact that after the result of the first weak model, the following models try to improve accuracy by fitting models to the residual of the previous models [97].

Using the regularization parameter, overfitting is avoided. In fact, the boosting paradigm trains new models iteratively, concentrating on observations that the prior models had more difficulty in predicting, which therefore makes the predictive model less biased [98]. Since the goal is to reduce the bias of simpler predictors, it is proper to use a simpler model with higher bias and lower variance [99].
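The residual-fitting idea behind boosting for regression can be sketched as follows. The weak learner here is a single-split regression stump rather than the SVR used in this paper, and the regularization parameter is interpreted as a shrinkage factor (`learning_rate`); both choices, along with the synthetic data, are assumptions for illustration.

```python
def fit_stump(xs, residuals):
    """Best single-split regressor (lowest squared error) on a 1-D input."""
    best = None
    for threshold in sorted(set(xs))[:-1]:
        left = [r for x, r in zip(xs, residuals) if x <= threshold]
        right = [r for x, r in zip(xs, residuals) if x > threshold]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        sse = (sum((r - left_mean) ** 2 for r in left)
               + sum((r - right_mean) ** 2 for r in right))
        if best is None or sse < best[0]:
            best = (sse, threshold, left_mean, right_mean)
    return best[1:]


def stump_output(stump, x):
    threshold, left_mean, right_mean = stump
    return left_mean if x <= threshold else right_mean


def boost_fit(xs, ys, n_rounds=30, learning_rate=0.5):
    """Sequentially fit stumps to the residuals of the current ensemble."""
    base = sum(ys) / len(ys)
    preds = [base] * len(xs)
    stumps = []
    for _ in range(n_rounds):
        residuals = [y - p for y, p in zip(ys, preds)]
        stump = fit_stump(xs, residuals)
        stumps.append(stump)
        # Shrinkage: add only a fraction of each correction.
        preds = [p + learning_rate * stump_output(stump, x)
                 for x, p in zip(xs, preds)]
    return base, stumps, learning_rate


def boost_predict(model, x):
    base, stumps, learning_rate = model
    return base + learning_rate * sum(stump_output(s, x) for s in stumps)


xs = [float(v) for v in range(8)]
ys = [x * x for x in xs]  # synthetic nonlinear target
model = boost_fit(xs, ys)
sse_mean_only = sum((y - model[0]) ** 2 for y in ys)
sse_boosted = sum((y - boost_predict(model, x)) ** 2 for x, y in zip(xs, ys))
```

Each stump on its own is a high-bias model, but the sequence of corrections progressively lowers the training error of the combined predictor.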

3.4.3. Random Subspace

The random subspace ensemble learning model is a popular random sampling method that was introduced by Ho [100] to improve the performance of weak classifiers and to improve the classification accuracy of individual classifiers [101]. According to Pham et al. [102], random subspace is an ensemble approach where the original high-dimensional feature vector is randomly sampled to create the low-dimensional subspaces, and multiple classifiers are then combined on these random subspaces for the final decision.
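A minimal sketch of the random subspace idea, adapted here to regression, is given below. Each base model sees only a random subset of the features, and the outputs are averaged. The 1-nearest-neighbor base model, the function names, and the data are hypothetical choices for illustration, not the configuration used in this paper.

```python
import random


def nearest_neighbor_predict(X, y, features, x):
    """1-nearest-neighbor prediction using only the selected feature indices."""
    def distance(row):
        return sum((row[f] - x[f]) ** 2 for f in features)

    nearest = min(range(len(X)), key=lambda i: distance(X[i]))
    return y[nearest]


def draw_subspaces(n_features, n_models, subspace_size, seed=0):
    """Randomly sample one low-dimensional feature subset per base model."""
    rng = random.Random(seed)
    return [sorted(rng.sample(range(n_features), subspace_size))
            for _ in range(n_models)]


def random_subspace_predict(X, y, subspaces, x):
    """Combine the base models (one per subspace) with the mean rule."""
    outputs = [nearest_neighbor_predict(X, y, features, x)
               for features in subspaces]
    return sum(outputs) / len(outputs)


X = [[0.0, 0.0, 0.0], [1.0, 2.0, 3.0], [2.0, 4.0, 6.0], [3.0, 6.0, 9.0]]
y = [0.0, 1.0, 2.0, 3.0]
subspaces = draw_subspaces(n_features=3, n_models=5, subspace_size=2)
prediction = random_subspace_predict(X, y, subspaces, [2.0, 4.0, 6.0])
```

For time series forecasting, the features would typically be lagged values of the signal, so each base model would see a different subset of lags.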

3.4.4. Stacked Generalization

Stacking ensemble learning combines several different predictive models into a single model working in layers or levels. This concept introduces meta-learning, which represents an asymptotically optimum learning system and is intended to minimize generalization errors by reducing the bias of its generalizers [103]. In fact, a stacked model is created from the predictions of weak learners, which are used as features. The characteristics allow the resulting model to cluster the initial models, so the model disregards the results that performed poorly [104].
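The use of base-model predictions as features for a meta-learner can be sketched as follows. Two simple, parameter-free base forecasters (last value and window mean) stand in for the weak learners, and the meta-learner is a least-squares combination of their outputs; all names and data are hypothetical. With trainable base models, the meta-learner would normally be fitted on out-of-fold predictions to avoid leakage.

```python
def base_last(window):
    """Level-0 model A: persistence forecast (repeat the last value)."""
    return window[-1]


def base_mean(window):
    """Level-0 model B: mean of the input window."""
    return sum(window) / len(window)


def fit_meta_weights(P, y):
    """Least-squares weights for two base predictions (2 x 2 normal equations)."""
    s11 = sum(p[0] * p[0] for p in P)
    s12 = sum(p[0] * p[1] for p in P)
    s22 = sum(p[1] * p[1] for p in P)
    b1 = sum(p[0] * t for p, t in zip(P, y))
    b2 = sum(p[1] * t for p, t in zip(P, y))
    det = s11 * s22 - s12 * s12
    return (b1 * s22 - b2 * s12) / det, (b2 * s11 - b1 * s12) / det


def stacked_predict(weights, window):
    """Level-1 model: weighted combination of the base forecasts."""
    return weights[0] * base_last(window) + weights[1] * base_mean(window)


# Synthetic linear trend; windows of three samples predict the next one.
series = [float(v) for v in range(1, 9)]
windows = [series[i:i + 3] for i in range(len(series) - 3)]
targets = [series[i + 3] for i in range(len(series) - 3)]
P = [(base_last(w), base_mean(w)) for w in windows]
weights = fit_meta_weights(P, targets)
next_value = stacked_predict(weights, series[-3:])
```

Neither base model can follow the trend alone, but the meta-learner finds a combination of the two that does, which is the effect stacking relies on.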

3.5. Wavelet

For the purpose of comparing the proposed model, the signal will be filtered using the wavelet transform (WT) to assess whether the use of filters is promising for the application. In the WT, information is extracted from each signal segment and treated [105]. Initially, the wavelet energy coefficient is obtained after the signal decomposition by the wavelet packets transform (WPT), considering that the information on both sides of the spectrum is considered in this procedure [106].

The WPT performs a new decomposition in each iteration based on the coefficients of the previous iterations, so the final number of coefficients depends on the number of iterations [107]. The orthogonal wavelet is decomposed into wavelet packets (WP); thus a vector tree structure is created. The structure is divided into two parts, the first being an approximation coefficient vector and the second a detail coefficient vector [108]. The WP function can be obtained by:

| (21) |

where k represents the translation operator, j is a scalable parameter, and n is the oscillation parameter. The first two WP functions for and are:

| (22) |

Equation (21) represents the scale function, and Equation (22) represents the main function. The equations for , can be defined according to the following relations:

| (23) |

| (24) |

where is a high-pass filter, and is a low-pass filter [109]. The coefficients can be calculated by the product of by , expressed by:

| (25) |

Each WP coefficient can be determined according to its frequency level. While the wavelet decomposes the elements of low frequency, the WPT decomposes the elements of all frequencies, so its use results in components of low and high frequencies [110]. Using the tree structure generated by the decomposition coefficients of approximation, an optimal binary value is obtained. The resulting subtree can be much smaller than the original; thus it can make the algorithm more efficient [111].
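The difference between the wavelet packet tree and the ordinary wavelet decomposition can be illustrated with a minimal sketch that uses the Haar filters. The choice of the Haar wavelet, the function names, and the test signal are assumptions for illustration; the sketch also returns nodes in natural (not frequency) order and requires a signal length divisible by $2^{\text{depth}}$.

```python
import math


def haar_split(signal):
    """One analysis step: low-pass (approximation) and high-pass (detail)."""
    s = 1.0 / math.sqrt(2.0)
    approx = [s * (signal[i] + signal[i + 1]) for i in range(0, len(signal) - 1, 2)]
    detail = [s * (signal[i] - signal[i + 1]) for i in range(0, len(signal) - 1, 2)]
    return approx, detail


def wavelet_packet(signal, depth):
    """Full packet tree: every node, not only the approximation, is split."""
    level = [list(signal)]
    for _ in range(depth):
        next_level = []
        for node in level:
            next_level.extend(haar_split(node))
        level = next_level
    return level


def energy(values):
    return sum(v * v for v in values)


signal = [4.0, 6.0, 10.0, 12.0, 8.0, 6.0, 5.0, 5.0]  # hypothetical samples
nodes = wavelet_packet(signal, depth=2)
node_energies = [energy(node) for node in nodes]
```

Since the Haar filters are orthonormal, the node energies sum to the energy of the original signal, which is what makes per-node wavelet energy coefficients comparable across nodes.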

3.6. Considered Measures

Aiming to forecast the leakage current of the contaminated insulators, it is promising to evaluate the evolution of the failure through time series analysis, thus efficiently estimating the moment when the component is vulnerable to suffering a disruptive discharge [112].

The most commonly used measures of performance in relation to forecast error are root-mean-square error (RMSE), mean absolute percentage error (MAPE), and mean absolute error (MAE) [113], calculated as follows:

| (26) |

| (27) |

| (28) |

where the error is calculated by the difference in the observed value to the predicted output [114].
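These error measures can be computed as in the following sketch, which uses their standard definitions. MAPE is returned here as a percentage, which is an assumption about the scaling convention; the sample values are hypothetical.

```python
import math


def rmse(observed, predicted):
    """Root-mean-square error."""
    n = len(observed)
    return math.sqrt(sum((o - p) ** 2 for o, p in zip(observed, predicted)) / n)


def mape(observed, predicted):
    """Mean absolute percentage error, in percent; observed values must be nonzero."""
    n = len(observed)
    return 100.0 * sum(abs((o - p) / o) for o, p in zip(observed, predicted)) / n


def mae(observed, predicted):
    """Mean absolute error."""
    n = len(observed)
    return sum(abs(o - p) for o, p in zip(observed, predicted)) / n


observed = [2.0, 4.0]
predicted = [1.0, 5.0]
scores = (rmse(observed, predicted), mape(observed, predicted), mae(observed, predicted))
```

RMSE penalizes large errors more strongly than MAE, while MAPE is scale-free but undefined when an observed value is zero.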

Typically for classification tasks, the metrics are based on the confusion matrix [115]; however, for prediction, the metrics are based on the error [116]. The coefficient of determination ($R^2$) measures the adjustment of a statistical model to the observed values of a random variable. This is another widely used metric for evaluating regressions, calculated by:

$$R^2 = 1 - \frac{SS_{res}}{SS_{tot}} \tag{29}$$

where $SS_{res}$ is the residual sum of squares, and $SS_{tot}$ is the total sum of squares, given by:

$$SS_{res} = \sum_{i=1}^{n} (y_i - \hat{y}_i)^2 \tag{30}$$

$$SS_{tot} = \sum_{i=1}^{n} (y_i - \bar{y})^2 \tag{31}$$

where $y_i$ is the observed value, $\hat{y}_i$ is the predicted output, and $\bar{y}$ is the average of the observed values [117]. When the best model configurations were found, 100 runs were performed to evaluate the mean (Equation (32)), median (Equation (33)), standard deviation (Equation (34)), and variance (Equation (35)). The simulations were run on an Intel Core i5-7400 with 20 GB of random-access memory, using MATLAB software, version R2019.

| (32) |

| (33) |

| (34) |

| (35) |

where m is the number of performed runs (100 in this paper), is the result of the RMSE of each run (i), and is the mean result of all the simulations.
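The coefficient of determination and the statistics over repeated runs can be computed as in the following sketch. The RMSE values per run are hypothetical, and the sample (m - 1) normalization used by `statistics.stdev` and `statistics.variance` is an assumption; `statistics.pstdev` and `statistics.pvariance` give the population versions.

```python
import statistics


def r_squared(observed, predicted):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_observed = sum(observed) / len(observed)
    ss_res = sum((o - p) ** 2 for o, p in zip(observed, predicted))
    ss_tot = sum((o - mean_observed) ** 2 for o in observed)
    return 1.0 - ss_res / ss_tot


def summarize_runs(rmse_per_run):
    """Mean, median, standard deviation, and variance across runs."""
    return {
        "mean": statistics.mean(rmse_per_run),
        "median": statistics.median(rmse_per_run),
        "std": statistics.stdev(rmse_per_run),
        "variance": statistics.variance(rmse_per_run),
    }


perfect_fit = r_squared([1.0, 2.0, 3.0], [1.0, 2.0, 3.0])
mean_only_fit = r_squared([1.0, 2.0, 3.0], [2.0, 2.0, 2.0])
summary = summarize_runs([1.0, 2.0, 3.0, 4.0])  # hypothetical RMSE values
```

A model that always predicts the mean of the observations scores 0, so values close to 1 indicate that most of the variation in the signal is explained.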

4. Analysis of Results

The leakage current of the insulators was recorded with a time interval of one second between each record. During the experiment, 100,000 records were made, corresponding to approximately 27 h and 46 min of evaluation. Because the time series is long, it was downsampled by a factor of five to reduce its length. During the experiment, the water salinity went from 142.3 to 133,600.0 (S), the pressure from 3 to 5 (BAR), and the water flow from 10.4 to 20.8 (mL/s); there was no significant change in temperature, humidity, or applied voltage.
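The record duration and the effect of the order-five reduction can be checked with a short sketch. Plain decimation (keeping every fifth sample, with no anti-aliasing filter) is assumed here; the function name and placeholder series are hypothetical.

```python
def downsample(series, factor):
    """Keep every `factor`-th sample (plain decimation, no filtering)."""
    return series[::factor]


n_records = 100_000                       # one record per second
hours, remainder = divmod(n_records, 3600)
minutes = remainder // 60

placeholder = list(range(n_records))      # stands in for the measured signal
reduced = downsample(placeholder, 5)
reduced_length = len(reduced)
```

After the reduction, consecutive samples are five seconds apart, so a one-step-ahead forecast on the reduced series looks five seconds into the future.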

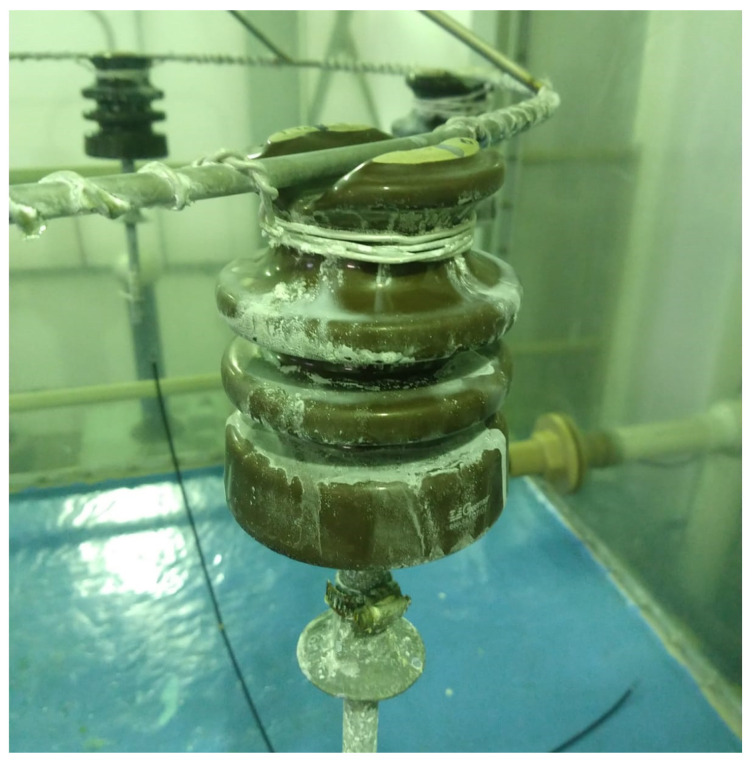

The dielectric breakdown occurred in four out of the six tested insulators. Only the insulators that resulted in a disruptive discharge were considered for the analysis since this is the condition that should be predicted when an increase in insulator contamination occurs. One of the insulators at the end of the experimental analysis is shown in Figure 4. After recording the variation of leakage current over time due to the increased accumulation of contamination on the insulator surface, time series forecasting models were applied to evaluate the prediction capacity of the failure development. The best results for each structure are highlighted in bold in this section.

Figure 4.

Insulator with salt contamination accumulated on its surface at the end of the experiment.

4.1. Time Series Forecasting Analysis

In this section, initially all models are evaluated with different structure configurations. After defining the best structure for the model, the hyperparameters of each model are evaluated. From the configuration definition that has better performance, a statistical analysis is conducted using the standard models. Then, based on the best model’s configuration, the depth of the wavelet transform is evaluated in each model. From the best use of wavelet, a final statistical analysis is performed to compare the results of the proposed hybrid models.

The comparison between the models regarding their structure in the LSTM and GMDH is related to the increase in the size of the neural network through the inclusion of more layers. The results are presented in Table 1.

Table 1.

Overall comparison of the models.

| Model | Structure | RMSE | MAPE | MAE | R$^2$ | Time (s) |
|---|---|---|---|---|---|---|
| LSTM | 1 Deeper Layer | 6.60 | 1.56 | | 0.7104 | 75.86 |
| | 2 Deeper Layers | 3.01 | 8.07 | 1.95 | 0.5269 | 107.22 |
| | 3 Deeper Layers | 4.49 | 1.37 | 3.17 | 0.0506 | 155.01 |
| | 4 Deeper Layers | 5.22 | 1.63 | 3.75 | 0.4208 | 182.07 |
| GMDH | 1 Max. Layer | 4.79 | 3.32 | 7.18 | 0.9880 | 2.21 |
| | 2 Max. Layers | 4.35 | 1.90 | 4.70 | 0.9901 | 2.90 |
| | 3 Max. Layers | 5.10 | 9.06 | 2.10 | 0.9864 | 1.92 |
| | 4 Max. Layers | 6.00 | 3.11 | 7.30 | 0.8819 | 4.68 |
| ANFIS | FCM | 1.15 | 2.38 | 4.85 | 0.9304 | 25.08 |
| | Grid Partitioning | 5.23 | 5.68 | 1.45 | 0.9857 | 92.53 |
| | Subt. Clustering | 4.48 | 3.29 | 3.43 | 0.9895 | 73.75 |
| Ensemble | Bagging | 4.19 | 1.88 | 3.16 | 0.9909 | 4443.30 |
| | Boosting | 3.40 | 1.27 | 2.73 | 0.3971 | 31,874.70 |
| | Random Subsp. | 4.94 | 1.09 | 2.21 | 0.9872 | 25,155.34 |
| | Stacking | 5.04 | 1.56 | 3.59 | 0.3255 | 1295.48 |

All ensemble models required a higher computational effort than the other models until convergence, resulting in a considerably longer processing time. The GMDH was the fastest model. This occurred because it uses only the number of layers and nodes that the task requires, making it an efficient adaptive model. When a greater maximum number of layers is allowed, the GMDH needs more time to converge, similar to what occurs with the LSTM model when deeper layers are used. In this evaluation, the GMDH model was superior to the LSTM model in terms of the considered error metrics, coefficient of determination, and time to convergence.

The coefficients of determination of the ensemble bagging, the ANFIS using subtractive clustering, and the GMDH with a maximum of two layers were higher than those of the other models and structures. In the LSTM, using a deeper network did not progressively improve performance with respect to the error metrics. This shows that a model based on deep learning may not always be the best alternative.
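For reference, the four performance measures reported in the tables can be computed as in the following minimal sketch. It uses the textbook definitions of RMSE, MAPE, MAE, and the coefficient of determination; the exact normalization used in the experiments (for example, whether MAPE is expressed in percent) is an assumption, and the function name `forecast_metrics` and the sample values are illustrative only.

```python
import math

def forecast_metrics(observed, predicted):
    """Return RMSE, MAPE (in percent), MAE and R^2 for two equal-length sequences."""
    n = len(observed)
    errors = [o - p for o, p in zip(observed, predicted)]
    ss_res = sum(e * e for e in errors)            # residual sum of squares
    rmse = math.sqrt(ss_res / n)
    mae = sum(abs(e) for e in errors) / n
    # MAPE is undefined when an observed value is zero; this sketch assumes none are.
    mape = 100.0 * sum(abs(e / o) for e, o in zip(errors, observed)) / n
    mean_obs = sum(observed) / n
    ss_tot = sum((o - mean_obs) ** 2 for o in observed)
    r2 = 1.0 - ss_res / ss_tot
    return {"rmse": rmse, "mape": mape, "mae": mae, "r2": r2}

# Made-up values, not measurements from the experiment.
observed = [1.0, 2.0, 3.0, 4.0]
predicted = [1.1, 1.9, 3.2, 3.8]
metrics = forecast_metrics(observed, predicted)
```

Because the RMSE squares each error before averaging, it penalizes occasional large deviations more strongly than the MAE, which is one reason the two measures can rank models differently.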

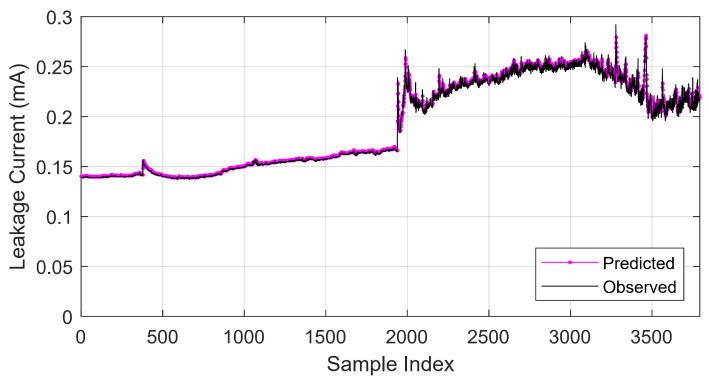

Regarding the MAPE and MAE, the model that had the lowest error result was the ANFIS subtractive clustering followed by the ensemble bagging and GMDH (max of two layers). The RMSE of these models was also lower compared to other structure configurations. These structures had the best results in this comparison. For this reason, their hyperparameters will be modified for a more complete evaluation of their capabilities. A comparison of the original (observed) signal and the predicted signal is presented in Figure 5.

Figure 5.

Predicted signal compared to observed signal.

4.2. Hyperparameter Optimization

To obtain models with better performance, the main configuration parameters of the best-performing structure of each model were varied and evaluated. Table 2 presents the results of the LSTM model using the SGDM, ADAM, and RMSprop optimizers with a varying number of hidden units.

Table 2. Evaluation of LSTM hyperparameters.

| Optimizer | Hidden Units | RMSE | MAPE | MAE | R² | Time (s) |
|---|---|---|---|---|---|---|
| SGDM | 10 | 5.40 | 1.67 | 3.85 | 0.5226 | 81.53 |
| | 20 | 3.73 | 1.12 | 2.60 | 0.2737 | 78.02 |
| | 30 | 2.32 | 7.09 | 1.63 | 0.7180 | 71.09 |
| | 40 | 2.77 | 8.48 | 1.95 | 0.5981 | 70.62 |
| | 50 | 1.78 | 4.82 | 1.15 | 0.8339 | 74.71 |
| ADAM | 10 | 5.61 | 1.79 | 4.08 | 0.6419 | 71.29 |
| | 20 | 5.64 | 1.76 | 4.05 | 0.6601 | 72.94 |
| | 30 | 3.33 | 1.02 | 2.34 | 0.4206 | 74.13 |
| | 40 | 2.97 | 8.85 | 2.06 | 0.5396 | 74.16 |
| | 50 | 1.82 | 4.84 | 1.16 | 0.8274 | 74.43 |
| RMSprop | 10 | 5.13 | 1.68 | 3.79 | 0.3732 | 72.47 |
| | 20 | 5.13 | 1.75 | 3.90 | 0.3762 | 73.32 |
| | 30 | 4.50 | 1.51 | 3.38 | 0.0566 | 73.74 |
| | 40 | 3.10 | 9.16 | 2.14 | 0.4995 | 75.56 |
| | 50 | 3.73 | 1.33 | 2.92 | 0.2722 | 73.08 |
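The search space behind Table 2 (three optimizers combined with five hidden-unit settings, giving 15 configurations) can be enumerated with a small helper such as the one sketched below. The names `grid_search` and `placeholder_score` are hypothetical; in particular, `placeholder_score` does not train an LSTM and only stands in for a routine that would train the network and return its test RMSE.

```python
from itertools import product

def grid_search(grid, score_fn):
    """Evaluate every combination in `grid` and return (best_config, all_results).

    `grid` maps a hyperparameter name to its candidate values; `score_fn`
    takes one configuration dict and returns an error to minimize (e.g., RMSE).
    """
    names = list(grid)
    results = []
    for values in product(*(grid[name] for name in names)):
        config = dict(zip(names, values))
        results.append((score_fn(config), config))
    # Pick the configuration with the lowest score (first one wins on ties).
    best_score, best_config = min(results, key=lambda item: item[0])
    return best_config, results

# Search space taken from Table 2.
lstm_grid = {
    "optimizer": ["sgdm", "adam", "rmsprop"],
    "hidden_units": [10, 20, 30, 40, 50],
}

def placeholder_score(config):
    # Hypothetical stand-in for "train the LSTM and return its test RMSE".
    # It simply rewards more hidden units so that the example runs end to end.
    return 1.0 / config["hidden_units"]

best_config, results = grid_search(lstm_grid, placeholder_score)
```

Keeping the scoring routine separate from the enumeration makes it straightforward to reuse the same loop for the other models by swapping the grid and the scoring function.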

Varying the hyperparameters of the LSTM model brought no significant improvement, considering that its best and worst results were closer to each other than in the other compared models. Table 3 presents the results of varying the maximum number of neurons in the GMDH model. This parameter was evaluated specifically because the model is adaptive and only the maximum number of layers and neurons needs to be defined.

Table 3. Evaluation of GMDH hyperparameters.

| Max Neurons | RMSE | MAPE | MAE | R² | Time (s) |
|---|---|---|---|---|---|
| 10 | 4.15 | 7.79 | 2.04 | 0.9910 | 0.26 |
| 20 | 4.58 | 5.61 | 1.31 | 0.9891 | 0.34 |
| 30 | 4.49 | 1.41 | 2.92 | 0.9903 | 0.25 |
| 40 | 4.59 | 5.89 | 1.30 | 0.9890 | 0.24 |
| 50 | 4.30 | 2.57 | 6.23 | 0.9903 | 0.24 |
| 60 | 4.22 | 4.44 | 1.28 | 0.9907 | 0.24 |
| 70 | 4.42 | 3.43 | 8.15 | 0.9905 | 0.26 |
| 80 | 4.09 | 3.75 | 1.22 | 0.9912 | 0.26 |
| 90 | 4.45 | 3.70 | 8.18 | 0.9897 | 0.24 |
| 100 | 4.34 | 1.08 | 2.80 | 0.9902 | 0.23 |

The results varied little when the maximum number of neurons used by the GMDH was changed. The best result was obtained with a maximum of 80 neurons. This shows that the GMDH is promising for this application, given that its error remains lower than that of the LSTM even when its hyperparameters are varied. The high value of the coefficient of determination makes it one of the fastest models to converge. The superior results of this model are due to the fact that it is an optimized model in which neurons that do not help in the learning phase are disregarded.
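The pruning behavior described above can be illustrated with a sketch of a single GMDH layer. This is not the implementation used in the experiments: it assumes quadratic partial descriptions over pairs of inputs, a least-squares fit through the normal equations, and selection by validation RMSE. All function names, the train/validation split, and the synthetic data are illustrative.

```python
import math
import random
from itertools import combinations

def solve_linear(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [rhs] for row, rhs in zip(A, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for r in reversed(range(n)):
        tail = sum(M[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (M[r][n] - tail) / M[r][r]
    return x

def quad_terms(u, v):
    """Terms of the quadratic partial description of two inputs."""
    return [1.0, u, v, u * v, u * u, v * v]

def fit_neuron(u, v, y):
    """Least-squares coefficients of one neuron via the normal equations."""
    rows = [quad_terms(a, b) for a, b in zip(u, v)]
    k = len(rows[0])
    gram = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    rhs = [sum(r[i] * t for r, t in zip(rows, y)) for i in range(k)]
    return solve_linear(gram, rhs)

def neuron_output(coef, u, v):
    return [sum(c * t for c, t in zip(coef, quad_terms(a, b))) for a, b in zip(u, v)]

def gmdh_layer(columns, y, n_train, max_neurons):
    """Fit one neuron per input pair, rank by validation RMSE, keep the best."""
    candidates = []
    for i, j in combinations(range(len(columns)), 2):
        u, v = columns[i], columns[j]
        coef = fit_neuron(u[:n_train], v[:n_train], y[:n_train])
        pred = neuron_output(coef, u[n_train:], v[n_train:])
        val = y[n_train:]
        rmse = math.sqrt(sum((p - t) ** 2 for p, t in zip(pred, val)) / len(val))
        candidates.append((rmse, (i, j), coef))
    candidates.sort(key=lambda item: item[0])
    return candidates[:max_neurons]  # neurons beyond the limit are discarded

# Synthetic data: the target depends on x0 and x1 only; x2 is irrelevant.
rng = random.Random(0)
x0 = [rng.random() for _ in range(60)]
x1 = [rng.random() for _ in range(60)]
x2 = [rng.random() for _ in range(60)]
target = [a * b for a, b in zip(x0, x1)]
kept = gmdh_layer([x0, x1, x2], target, n_train=40, max_neurons=2)
```

In this toy setting the neuron built on the pair (x0, x1) reproduces the target almost exactly and is ranked first, while the weakest candidate is dropped, which mirrors how the maximum number of neurons limits the size of each layer.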

The next model whose hyperparameter configuration was evaluated is the ANFIS. For the subtractive clustering structure, the results for the training method and influence radius are presented in Table 4.

Table 4. Evaluation of ANFIS subtractive clustering hyperparameters.

| Method | Radius | RMSE | MAPE | MAE | R² | Time (s) |
|---|---|---|---|---|---|---|
| Hybrid | 0.2 | 4.15 | 5.92 | 9.44 | 0.9910 | 28.34 |
| | 0.4 | 4.48 | 3.29 | 3.43 | 0.9895 | 28.81 |
| | 0.6 | 4.16 | 4.86 | 7.06 | 0.9910 | 29.39 |
| | 0.8 | 4.16 | 1.25 | 2.39 | 0.9910 | 25.68 |
| | 1.0 | 4.17 | 6.83 | 1.14 | 0.9909 | 25.74 |
| Backpropag. | 0.2 | 7.60 | 3.57 | 6.41 | 0.9698 | 25.18 |
| | 0.4 | 4.09 | 2.00 | 3.98 | 0.9913 | 24.96 |
| | 0.6 | 4.06 | 1.74 | 3.02 | 0.9914 | 25.12 |
| | 0.8 | 7.66 | 3.58 | 6.52 | 0.9693 | 30.52 |
| | 1.0 | 8.17 | 3.89 | 7.12 | 0.9651 | 25.51 |

The ANFIS subtractive clustering model showed only minor variation when the influence radius was changed under the hybrid optimization method. The lowest MAPE and MAE values occurred using the hybrid method with an influence radius of 0.4. With the same method, the lowest RMSE was achieved with an influence radius of 0.2, which also ranked among the best coefficients of determination. Considering that the MAPE and MAE of the classical backpropagation method were higher and that the differences in RMSE and coefficient of determination were small, the hybrid method proved to be more promising.
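To make the role of the influence radius more concrete, the following sketch shows a simplified form of subtractive clustering. It is an illustration under stated assumptions rather than the routine used in the experiments: the data are assumed to be scaled to the unit range, the squash factor of 1.5 and the single rejection threshold of 0.15 are illustrative choices, and the function name `subtractive_clustering` is hypothetical.

```python
import math

def subtractive_clustering(points, radius, squash=1.5, reject_ratio=0.15):
    """Return cluster centers chosen among `points` (tuples scaled to [0, 1])."""
    ra2 = radius ** 2                  # influence radius, squared
    rb2 = (squash * radius) ** 2       # wider radius used when subtracting potential

    def sqdist(p, q):
        return sum((a - b) ** 2 for a, b in zip(p, q))

    # Potential of each point: high when many other points lie within the radius.
    potential = [
        sum(math.exp(-4.0 * sqdist(p, q) / ra2) for q in points) for p in points
    ]
    first_peak = max(potential)
    centers = []
    while True:
        k = max(range(len(points)), key=potential.__getitem__)
        peak = potential[k]
        if peak < reject_ratio * first_peak:
            break                      # remaining points are too weak to form a cluster
        center = points[k]
        centers.append(center)
        # Remove the influence of the new center from every point's potential.
        potential = [
            value - peak * math.exp(-4.0 * sqdist(x, center) / rb2)
            for value, x in zip(potential, points)
        ]
    return centers

# Two tight groups of one-dimensional samples (made-up values).
points = [(0.10,), (0.12,), (0.11,), (0.90,), (0.88,), (0.89,)]
centers = subtractive_clustering(points, radius=0.4)
```

Each center found this way would typically seed one fuzzy rule, so the influence radius indirectly controls how many rules the ANFIS receives.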

Table 5 shows the results of using the L1QP, ISDA, and SMO optimizers for the ensemble bagging model. For these optimizers, the linear, RBF, and polynomial Kernel functions are evaluated.

Table 5. Evaluation of ensemble bagging hyperparameters.

| Optimizer | Kernel | RMSE | MAPE | MAE | R² | Time (s) |
|---|---|---|---|---|---|---|
| L1QP | Linear | 4.19 | 1.88 | 3.16 | 0.9909 | 4443.30 |
| | RBF | 1.05 | 3.42 | 7.76 | 0.7926 | 5256.65 |
| | Polynomial | 2.98 | 1.43 | 3.18 | 0.5363 | 5763.47 |
| ISDA | Linear | 4.17 | 1.27 | 1.98 | 0.9909 | 25.33 |
| | RBF | 9.70 | 3.14 | 7.14 | 0.9143 | 11.52 |
| | Polynomial | 9.68 | 3.14 | 7.13 | 0.8935 | 11.83 |
| SMO | Linear | 4.23 | 3.19 | 5.74 | 0.9906 | 8.09 |
| | RBF | 1.06 | 3.44 | 7.82 | 0.8680 | 6.06 |
| | Polynomial | 1.06 | 3.44 | 7.80 | 0.8132 | 6.67 |

The ensemble bagging model took much longer to converge using the L1QP optimizer. This shows that an inadequate configuration can result in low performance and a high computational effort. The kernel function with the best coefficient of determination and lowest error was the linear function, so the combination of the ISDA optimizer and the linear kernel had the best result in this analysis considering the metrics evaluated.
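The bootstrap aggregation principle itself can be sketched independently of the base learner. The optimizers and kernel functions in Table 5 point to kernel-based base learners; in the sketch below an ordinary least-squares line is used instead as a deliberately simpler stand-in, so the code illustrates only the resampling and averaging steps. The function names and data are illustrative.

```python
import random

def fit_line(xs, ys):
    """Ordinary least-squares line; falls back to the mean if all xs coincide."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    if sxx == 0.0:
        return 0.0, my
    slope = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sxx
    return slope, my - slope * mx

def bagging_fit(xs, ys, n_learners=10, seed=0):
    """Train each base learner on a bootstrap resample (drawn with replacement)."""
    rng = random.Random(seed)
    n = len(xs)
    models = []
    for _ in range(n_learners):
        idx = [rng.randrange(n) for _ in range(n)]
        models.append(fit_line([xs[i] for i in idx], [ys[i] for i in idx]))
    return models

def bagging_predict(models, x):
    """Aggregate by averaging the predictions of all base learners."""
    return sum(slope * x + intercept for slope, intercept in models) / len(models)

# Noise-free synthetic data following y = 2x + 1.
xs = [float(i) for i in range(20)]
ys = [2.0 * x + 1.0 for x in xs]
models = bagging_fit(xs, ys)
prediction = bagging_predict(models, 25.0)
```

With noisy data the individual learners would disagree, and averaging them is what reduces the variance of the final forecast.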

The best configurations found in this section were: LSTM with one deeper layer and 50 hidden units using the SGDM optimizer, GMDH with a maximum of 2 layers and 80 neurons, ANFIS subtractive clustering with the hybrid method and an influence radius of 0.2, and ensemble bagging with the ISDA optimizer and a linear kernel function. These settings were used for the following analysis in this paper. The statistical evaluation of the RMSE using these settings is presented in Table 6. In this evaluation, the ANFIS and ensemble models had better results, with lower error, variance, and standard deviation than GMDH and LSTM.

Table 6. Statistical assessment.

| Model | Mean | Median | Std. Dev. | Variance |
|---|---|---|---|---|
| LSTM | 2.17 | 2.11 | 3.30 | 1.09 |
| GMDH | 4.45 | 4.38 | 3.02 | 9.12 |
| ANFIS | 4.15 | 4.15 | 8.72 | 7.60 |
| Ensemble | 4.20 | 4.19 | 5.77 | 3.33 |

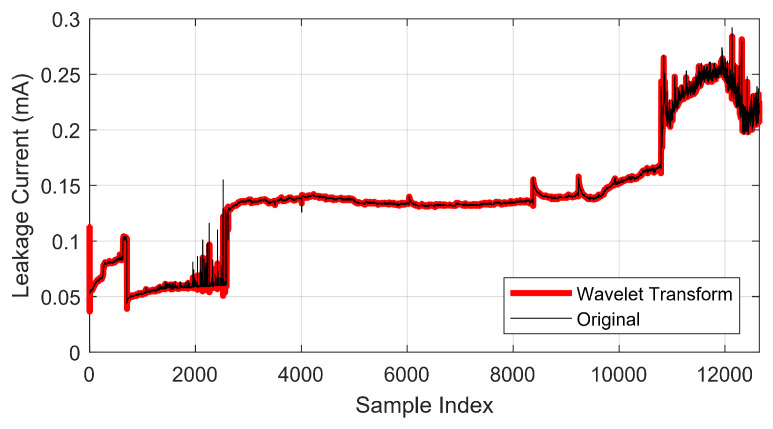

4.3. Application of Wavelet Transform

The results of the use of the wavelet transform on insulator 1 are presented in Figure 6. These results are presented in relation to a generic index, considering that after the use of the down-sample algorithm, the samples do not follow the time sequence of the experiment since the focus of the evaluation is the variation in the amplitude of the leakage current.

Figure 6.

Wavelet transform evaluation.

To evaluate the use of the wavelet transform, all models were tested using different combinations of depth for the wavelet packet tree. The results of this evaluation are presented in Table 7. The use of more than one node results in a loss of time series features, so this configuration was standardized.
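The effect of keeping a single node of the wavelet packet tree at a given depth can be illustrated as follows. This is a minimal sketch that assumes the Haar wavelet and keeps only the lowest-frequency node; the mother wavelet and node actually used in the experiments are not restated here, so both choices, as well as the function names, are assumptions. The signal length is assumed to be divisible by 2 raised to the depth.

```python
import math

SQRT2 = math.sqrt(2.0)

def haar_step(signal):
    """One Haar analysis step: returns (approximation, detail) coefficients."""
    pairs = range(0, len(signal) - 1, 2)
    approx = [(signal[i] + signal[i + 1]) / SQRT2 for i in pairs]
    detail = [(signal[i] - signal[i + 1]) / SQRT2 for i in pairs]
    return approx, detail

def first_packet_node(signal, depth):
    """Coefficients of the lowest-frequency packet node at the given depth."""
    node = list(signal)
    for _level in range(depth):
        node, _detail = haar_step(node)   # details are discarded
    return node

def reconstruct_from_first_node(node, depth):
    """Invert the decomposition with every other node set to zero."""
    signal = list(node)
    for _level in range(depth):
        # With zero detail, each coefficient a maps to the pair (a/sqrt(2), a/sqrt(2)).
        signal = [value / SQRT2 for value in signal for _copy in range(2)]
    return signal

# Made-up signal: two amplitude levels with a small ripple.
noisy = [1.0, 1.2, 0.8, 1.0, 3.0, 3.2, 2.8, 3.0]
node = first_packet_node(noisy, depth=2)
smoothed = reconstruct_from_first_node(node, depth=2)
```

In this example the ripple is removed while the step between the two amplitude levels is preserved; a larger depth averages over longer blocks and therefore removes more of the fast variation.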

Table 7. Analysis using the wavelet transform.

| Model | Depth | RMSE | MAPE | MAE | R² | Time (s) |
|---|---|---|---|---|---|---|
| Wavelet LSTM | 1 | 3.83 | 1.18 | 2.72 | 0.2314 | 86.24 |
| | 2 | 3.72 | 1.13 | 2.61 | 0.2776 | 70.33 |
| | 3 | 3.28 | 1.12 | 2.48 | 0.4376 | 73.87 |
| | 4 | 4.01 | 1.46 | 3.17 | 0.1595 | 77.55 |
| Wavelet GMDH | 1 | 3.98 | 5.79 | 1.35 | 0.9917 | 0.22 |
| | 2 | 3.01 | 4.09 | 9.50 | 0.9953 | 0.29 |
| | 3 | 5.81 | 1.01 | 2.30 | 0.9824 | 0.23 |
| | 4 | 3.94 | 6.11 | 1.42 | 0.9919 | 0.24 |
| Wavelet ANFIS | 1 | 1.56 | 5.31 | 1.17 | 0.9987 | 35.93 |
| | 2 | 1.58 | 5.48 | 1.28 | 0.9987 | 42.54 |
| | 3 | 1.57 | 1.76 | 3.90 | 0.9987 | 33.86 |
| | 4 | 1.57 | 2.03 | 4.52 | 0.9987 | 33.05 |
| Wavelet Ensemble | 1 | 2.64 | 8.46 | 1.71 | 0.9964 | 21.03 |
| | 2 | 3.62 | 1.44 | 2.88 | 0.9931 | 17.65 |
| | 3 | 2.46 | 7.01 | 1.43 | 0.9968 | 20.08 |
| | 4 | 3.12 | 1.15 | 2.31 | 0.9949 | 19.90 |

The results of using the wavelet transform to reduce signal noise were promising in all models except the LSTM, for which predicting the original signal achieved a better coefficient of determination, a shorter time to convergence, and lower error. In the GMDH, the wavelet transform with two depth levels gave better results than predicting the original signal. In the ANFIS model with two levels of depth, the RMSE was close to the best result and the model had the best performance considering the other error metrics. For this reason, a depth of two levels was used in the ANFIS model, as in the GMDH.

The ensemble model had a promising result using three levels in the wavelet transform. Only MAE and MAPE had superior results on the original signal after using the down-sample algorithm. The wavelet transform gave promising results when it was applied after the down-sample algorithm. When the order was reversed and the down-sample was applied after the wavelet transform, all models had inferior results, so that order is not a suitable strategy for this analysis.

Considering the use of the best configuration of the wavelet transform, 100 runs were performed, and the statistical results regarding RMSE are presented in Table 8. As can be observed, the models that had the best performance are the ANFIS model and the ensemble, which were the same models that had the best performance without the use of the wavelet transform.

Table 8. Statistical evaluation of models with wavelet transform.

| Model | Mean | Median | Std. Dev. | Variance |
|---|---|---|---|---|
| Wavelet LSTM | 2.08 | 2.06 | 3.48 | 1.21 |
| Wavelet GMDH | 4.39 | 4.29 | 1.45 | 2.10 |
| Wavelet ANFIS | 1.58 | 1.58 | 2.18 | 4.75 |
| Wavelet Ensemble | 2.94 | 2.91 | 3.12 | 9.76 |

An interesting result is that the LSTM had the worst performance in both analyses, being a model that is not suitable for this evaluation. Many authors have used the LSTM; however, this model may not be the most appropriate for forecasting depending on the signal used, as presented in this paper.
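The summary statistics reported in Tables 6 and 8 can be obtained from the RMSE of each repeated run as sketched below. The sketch assumes sample (n − 1) estimators for the standard deviation and variance, which may differ from the estimator used in the experiments; the function name `summarize_runs` and the values in `example_runs` are made up for illustration.

```python
import statistics

def summarize_runs(rmse_per_run):
    """Summarize the RMSE values collected over repeated runs of one model."""
    return {
        "mean": statistics.mean(rmse_per_run),
        "median": statistics.median(rmse_per_run),
        "std_dev": statistics.stdev(rmse_per_run),      # sample standard deviation
        "variance": statistics.variance(rmse_per_run),  # sample variance
    }

# Made-up RMSE values, not results from the experiment.
example_runs = [1.5, 1.6, 1.7, 1.6, 1.6]
summary = summarize_runs(example_runs)
```

Since the variance is the square of the standard deviation, the two columns carry the same information about stability; a model is considered stable when both are small relative to its mean error.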

5. Conclusions

The use of time series prediction models to evaluate the development of faults in insulators based on leakage current shows promise. Several models can be successfully applied to accomplish this task. The increase in contamination results in a consequent increase in leakage current until an electrical discharge occurs, so the leakage current is an adequate means of assessing how an adverse condition develops into a failure. For this reason, leakage current must be monitored to keep the electrical power system operational.

The ANFIS subtractive clustering and the ensemble bagging stood out in predicting the leakage current, considering that they had lower error and a better coefficient of determination. The results show that the structure of the model has a major influence on its performance. It is therefore necessary to perform a comparative analysis of all variations of a model to obtain an optimized algorithm. The statistical results showed that ANFIS is a stable model, resulting in low variance when several simulations are performed.

The application of the wavelet transform resulted in an improvement in the predictive ability of the evaluated models, being a promising technique for noise reduction without the loss of signal characteristics. With a mean RMSE of 1.58, the wavelet ANFIS had the best results with lower error and variance; comparatively, its mean RMSE was 53.74% of that of the wavelet ensemble (that is, 46.26% lower), which was the second-best model in this study. Most of the models presented stability when several simulations were performed, showing that these methods are reliable for the application presented in this paper. In particular, the wavelet ANFIS had a standard deviation of 2.18, as reported in Table 8, which supports its stability over repeated runs.
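As a check on this comparison, the relative difference can be worked out from the mean RMSE values printed in Table 8 (1.58 for the wavelet ANFIS and 2.94 for the wavelet ensemble), assuming both values are expressed on the same scale:

```latex
\frac{\mathrm{RMSE}_{\text{wavelet ANFIS}}}{\mathrm{RMSE}_{\text{wavelet ensemble}}}
  = \frac{1.58}{2.94} \approx 0.5374,
\qquad
1 - \frac{1.58}{2.94} \approx 0.4626
```

Under that assumption, the wavelet ANFIS error is about 53.74% of the wavelet ensemble error, which corresponds to a reduction of roughly 46.26%.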

Based on the results, future work can be performed to develop an embedded system for monitoring leakage current and indicating vulnerability to a disruptive discharge in distribution insulators. The leakage current proves to be a suitable indicator for monitoring the conditions of the electrical power system; this measurement can also be applied to other insulating components of the electrical power grid.

Author Contributions

Investigation, writing—Original draft, formal analysis, methodology, N.F.S.N.; software, validation, S.F.S.; supervision, writing—Review and editing, project administration, L.H.M.; supervision, R.G.O. and V.R.Q.L. All authors have read and agreed to the published version of the manuscript.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

For future comparisons, the recorded data are available at https://github.com/SFStefenon/LeakageCurrent (accessed on 1 August 2022).

Conflicts of Interest

The authors declare no conflict of interest.

Funding Statement

This work was supported by national funds through the Foundation for Science and Technology, I.P. (Portuguese Foundation for Science and Technology) by the project UIDB/05064/2020 (VALORIZA—Research Center for Endogenous Resource Valorization), and Project UIDB/04111/2020, ILIND—Lusophone Institute of Investigation and Development, under project COFAC/ILIND/COPELABS/3/2020.

Footnotes

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1.Kim T., Sanyal S., Koo J.B., Son J.A., Choi I.H., Yi J. Analysis of Thermal Sensitivity by High Voltage Insulator Materials. IEEE Access. 2020;8:75586–75591. doi: 10.1109/ACCESS.2020.2987705. [DOI] [Google Scholar]

- 2.Stefenon S.F., Seman L.O., Pavan B.A., Ovejero R.G., Leithardt V.R.Q. Optimal design of electrical power distribution grid spacers using finite element method. IET Gener. Transm. Distrib. 2022;16:1865–1876. doi: 10.1049/gtd2.12425. [DOI] [Google Scholar]

- 3.Meyer L., Pintarelli R. Inclined Plane Test for Erosion of Polymeric Insulators under AC and DC Voltages. IEEE Lat. Am. Trans. 2020;18:1455–1461. doi: 10.1109/TLA.2020.9111682. [DOI] [Google Scholar]

- 4.Ilomuanya C., Nekahi A., Farokhi S. A Study of the Cleansing Effect of Precipitation and Wind on Polluted Outdoor High Voltage Glass Cap and Pin Insulator. IEEE Access. 2022;10:20669–20676. doi: 10.1109/ACCESS.2022.3148709. [DOI] [Google Scholar]

- 5.Wang S., Wu Y. Effect of salty fog on flashover characteristics of OCS composite insulators. Chin. J. Electr. Eng. 2019;5:59–66. doi: 10.23919/CJEE.2019.000021. [DOI] [Google Scholar]

- 6.Stefenon S.F., Seman L.O., Sopelsa Neto N.F., Meyer L.H., Nied A., Yow K.C. Echo state network applied for classification of medium voltage insulators. Int. J. Electr. Power Energy Syst. 2022;134:107336. doi: 10.1016/j.ijepes.2021.107336. [DOI] [Google Scholar]

- 7.Nan J., Li H., Wan X., Xu T., Huo F., Lin F. Study on Fast Contamination Characteristics of Cap and Pin Insulators in Straight Flow Wind Tunnel Simulation. IEEE Access. 2021;9:125912–125919. doi: 10.1109/ACCESS.2021.3110802. [DOI] [Google Scholar]

- 8.Sezavar H.R., Fahimi N., Shayegani-Akmal A.A. An Improved Dynamic Multi-Arcs Modeling Approach for Pollution Flashover of Silicone Rubber Insulator. IEEE Trans. Dielectr. Electr. Insul. 2022;29:77–85. doi: 10.1109/TDEI.2022.3146531. [DOI] [Google Scholar]

- 9.Stefenon S.F., Ribeiro M.H.D.M., Nied A., Mariani V.C., Coelho L.D.S., Leithardt V.R.Q., Silva L.A., Seman L.O. Hybrid Wavelet Stacking Ensemble Model for Insulators Contamination Forecasting. IEEE Access. 2021;9:66387–66397. doi: 10.1109/ACCESS.2021.3076410. [DOI] [Google Scholar]

- 10.Alqudsi A.Y., Ghunem R.A., David E. Analyzing the Role of Filler Interface on the Erosion Performance of Filled RTV Silicone Rubber under DC Dry-band Arcing. IEEE Trans. Dielectr. Electr. Insul. 2021;28:788–796. doi: 10.1109/TDEI.2021.009337. [DOI] [Google Scholar]

- 11.Sagheer A., Kotb M. Time series forecasting of petroleum production using deep LSTM recurrent networks. Neurocomputing. 2019;323:203–213. doi: 10.1016/j.neucom.2018.09.082. [DOI] [Google Scholar]

- 12.Elbaz K., Shen S.L., Zhou A., Yin Z.Y., Lyu H.M. Prediction of Disc Cutter Life During Shield Tunneling with AI via the Incorporation of a Genetic Algorithm into a GMDH-Type Neural Network. Engineering. 2021;7:238–251. doi: 10.1016/j.eng.2020.02.016. [DOI] [Google Scholar]

- 13.Kardani N., Bardhan A., Kim D., Samui P., Zhou A. Modelling the energy performance of residential buildings using advanced computational frameworks based on RVM, GMDH, ANFIS-BBO and ANFIS-IPSO. J. Build. Eng. 2021;35:102105. doi: 10.1016/j.jobe.2020.102105. [DOI] [Google Scholar]

- 14.Meira E., Cyrino Oliveira F.L., de Menezes L.M. Forecasting natural gas consumption using Bagging and modified regularization techniques. Energy Econ. 2022;106:105760. doi: 10.1016/j.eneco.2021.105760. [DOI] [Google Scholar]

- 15.Lu H., Cheng F., Ma X., Hu G. Short-term prediction of building energy consumption employing an improved extreme gradient boosting model: A case study of an intake tower. Energy. 2020;203:117756. doi: 10.1016/j.energy.2020.117756. [DOI] [Google Scholar]

- 16.Talukdar S., Eibek K.U., Akhter S., Ziaul S., Towfiqul Islam A.R.M., Mallick J. Modeling fragmentation probability of land-use and land-cover using the bagging, random forest and random subspace in the Teesta River Basin, Bangladesh. Ecol. Indic. 2021;126:107612. doi: 10.1016/j.ecolind.2021.107612. [DOI] [Google Scholar]

- 17.Ribeiro M.H.D.M., da Silva R.G., Moreno S.R., Mariani V.C., Coelho L.S. Efficient bootstrap stacking ensemble learning model applied to wind power generation forecasting. Int. J. Electr. Power Energy Syst. 2022;136:107712. doi: 10.1016/j.ijepes.2021.107712. [DOI] [Google Scholar]

- 18.Zhang K., Gençay R., Ege Yazgan M. Application of wavelet decomposition in time-series forecasting. Econ. Lett. 2017;158:41–46. doi: 10.1016/j.econlet.2017.06.010. [DOI] [Google Scholar]

- 19.Stefenon S.F., Corso M.P., Nied A., Perez F.L., Yow K.C., Gonzalez G.V., Leithardt V.R.Q. Classification of insulators using neural network based on computer vision. IET Gener. Transm. Distrib. 2021;16:1096–1107. doi: 10.1049/gtd2.12353. [DOI] [Google Scholar]

- 20.Salem A.A., Abd-Rahman R., Al-Gailani S.A., Kamarudin M.S., Ahmad H., Salam Z. The Leakage Current Components as a Diagnostic Tool to Estimate Contamination Level on High Voltage Insulators. IEEE Access. 2020;8:92514–92528. doi: 10.1109/ACCESS.2020.2993630. [DOI] [Google Scholar]

- 21.Salem A.A., Abd-Rahman R., Rahiman W., Al-Gailani S.A., Al-Ameri S.M., Ishak M.T., Sheikh U.U. Pollution Flashover Under Different Contamination Profiles on High Voltage Insulator: Numerical and Experiment Investigation. IEEE Access. 2021;9:37800–37812. doi: 10.1109/ACCESS.2021.3063201. [DOI] [Google Scholar]

- 22.Stefenon S.F., Neto C.S.F., Coelho T.S., Nied A., Yamaguchi C.K., Yow K.C. Particle swarm optimization for design of insulators of distribution power system based on finite element method. Electr. Eng. 2022;104:615–622. doi: 10.1007/s00202-021-01332-3. [DOI] [Google Scholar]

- 23.Sopelsa Neto N.F., Stefenon S.F., Meyer L.H., Bruns R., Nied A., Seman L.O., Gonzalez G.V., Leithardt V.R.Q., Yow K.C. A Study of Multilayer Perceptron Networks Applied to Classification of Ceramic Insulators Using Ultrasound. Appl. Sci. 2021;11:1592. doi: 10.3390/app11041592. [DOI] [Google Scholar]

- 24.Araya J., Montaña J., Schurch R. Electric Field Distribution and Leakage Currents in Glass Insulator Under Different Altitudes and Pollutions Conditions using FEM Simulations. IEEE Lat. Am. Trans. 2021;19:1278–1285. doi: 10.1109/TLA.2021.9475858. [DOI] [Google Scholar]

- 25.Medeiros A., Sartori A., Stefenon S.F., Meyer L.H., Nied A. Comparison of artificial intelligence techniques to failure prediction in contaminated insulators based on leakage current. J. Intell. Fuzzy Syst. 2022;42:3285–3298. doi: 10.3233/JIFS-211126. [DOI] [Google Scholar]

- 26.Maraaba L.S., Soufi K.Y.A., Alhems L.M., Hassan M.A. Performance Evaluation of 230 kV Polymer Insulators in the Coastal Area of Saudi Arabia. IEEE Access. 2020;8:164292–164303. doi: 10.1109/ACCESS.2020.3022521. [DOI] [Google Scholar]

- 27.Stefenon S.F., Americo J.P., Meyer L.H., Grebogi R.B., Nied A. Analysis of the Electric Field in Porcelain Pin-Type Insulators via Finite Elements Software. IEEE Lat. Am. Trans. 2018;16:2505–2512. doi: 10.1109/TLA.2018.8795129. [DOI] [Google Scholar]

- 28.Corso M.P., Stefenon S.F., Couto V.F., Cabral S.H.L., Nied A. Evaluation of Methods for Electric Field Calculation in Transmission Lines. IEEE Lat. Am. Trans. 2018;16:2970–2976. doi: 10.1109/TLA.2018.8804264. [DOI] [Google Scholar]

- 29.Azizi S., Momen G., Ouellet-Plamondon C., David E. Performance improvement of EPDM and EPDM/Silicone rubber composites using modified fumed silica, titanium dioxide and graphene additives. Polym. Test. 2020;84:106281. doi: 10.1016/j.polymertesting.2019.106281. [DOI] [Google Scholar]

- 30.Yamashita T., Ishimoto R., Furusato T. Influence of series resistance on dry-band discharge characteristics on wet polluted insulators. IEEE Trans. Dielectr. Electr. Insul. 2018;25:154–161. doi: 10.1109/TDEI.2018.007005. [DOI] [Google Scholar]

- 31.Madi A.M., He Y., Jiang L. Design and testing of an improved profile for silicone rubber composite insulators. IEEE Trans. Dielectr. Electr. Insul. 2017;24:2930–2936. doi: 10.1109/TDEI.2017.006170. [DOI] [Google Scholar]

- 32.Cao B., Wang L., Yin F. A Low-Cost Evaluation and Correction Method for the Soluble Salt Components of the Insulator Contamination Layer. IEEE Sens. J. 2019;19:5266–5273. doi: 10.1109/JSEN.2019.2902192. [DOI] [Google Scholar]

- 33.Yin C., Guo Y., Zhang X., Huang G., Wu G. A Novel Method for Visualizing the Pollution Distribution of Insulators. IEEE Trans. Instrum. Meas. 2021;70:1–8. doi: 10.1109/TIM.2021.3098793. [DOI] [Google Scholar]

- 34.Corso M.P., Perez F.L., Stefenon S.F., Yow K.C., García Ovejero R., Leithardt V.R.Q. Classification of Contaminated Insulators Using k-Nearest Neighbors Based on Computer Vision. Computers. 2021;10:112. doi: 10.3390/computers10090112. [DOI] [Google Scholar]

- 35.Salem A.A., Abd-Rahman R., Al-Gailani S.A., Salam Z., Kamarudin M.S., Zainuddin H., Yousof M.F.M. Risk Assessment of Polluted Glass Insulator Using Leakage Current Index Under Different Operating Conditions. IEEE Access. 2020;8:175827–175839. doi: 10.1109/ACCESS.2020.3026136. [DOI] [Google Scholar]

- 36.Stefenon S.F., Singh G., Yow K.C., Cimatti A. Semi-ProtoPNet Deep Neural Network for the Classification of Defective Power Grid Distribution Structures. Sensors. 2022;22:4859. doi: 10.3390/s22134859. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 37.Lan L., Zhang G., Wang Y., Wen X., Wang W., Pei H. The Influence of Natural Contamination on Pollution Flashover Voltage Waveform of Porcelain Insulators in Heavily Polluted Area. IEEE Access. 2019;7:121395–121406. doi: 10.1109/ACCESS.2019.2936868. [DOI] [Google Scholar]

- 38.Stefenon S.F., Oliveira J.R., Coelho A.S., Meyer L.H. Diagnostic of Insulators of Conventional Grid Through LabVIEW Analysis of FFT Signal Generated from Ultrasound Detector. IEEE Lat. Am. Trans. 2017;15:884–889. doi: 10.1109/TLA.2017.7910202. [DOI] [Google Scholar]

- 39.Palangar M.F., Amin U., Bakhshayesh H., Ahmad G., Abu-Siada A., Mirzaie M. Identification of Composite Insulator Criticality Based on a New Leakage Current Diagnostic Index. IEEE Trans. Instrum. Meas. 2021;70:1–10. doi: 10.1109/TIM.2021.3096869. [DOI] [Google Scholar]

- 40.Ghunem R.A., El-Hag A.H., Marzinotto M., Nazir M.T., Wong K.L., Jayaram S.H. Overhead Lines and Wildfires: Role of Outdoor Insulators: Prepared by a Task Force of the IEEE DEIS Outdoor Insulation Technical Committee. IEEE Electr. Insul. Mag. 2022;38:14–25. doi: 10.1109/MEI.2022.9797260. [DOI] [Google Scholar]

- 41.Yeh C.T., Thanh P.N., Cho M.Y. Real-Time Leakage Current Classification of 15kV and 25kV Distribution Insulators Based on Bidirectional Long Short-Term Memory Networks With Deep Learning Machine. IEEE Access. 2022;10:7128–7140. doi: 10.1109/ACCESS.2022.3140479. [DOI] [Google Scholar]

- 42.Gouda O.E., Darwish M.M.F., Mahmoud K., Lehtonen M., Elkhodragy T.M. Pollution Severity Monitoring of High Voltage Transmission Line Insulators Using Wireless Device Based on Leakage Current Bursts. IEEE Access. 2022;10:53713–53723. doi: 10.1109/ACCESS.2022.3175515. [DOI] [Google Scholar]

- 43.Liu Y., Du B.X., Farzaneh M. Self-Normalizing Multivariate Analysis of Polymer Insulator Leakage Current Under Severe Fog Conditions. IEEE Trans. Power Deliv. 2017;32:1279–1286. doi: 10.1109/TPWRD.2017.2650214. [DOI] [Google Scholar]

- 44.Wang S., Liu Y., Qing Y., Wang C., Lan T., Yao R. Detection of Insulator Defects With Improved ResNeSt and Region Proposal Network. IEEE Access. 2020;8:184841–184850. doi: 10.1109/ACCESS.2020.3029857. [DOI] [Google Scholar]

- 45.Stefenon S.F., Bruns R., Sartori A., Meyer L.H., Ovejero R.G., Leithardt V.R.Q. Analysis of the Ultrasonic Signal in Polymeric Contaminated Insulators Through Ensemble Learning Methods. IEEE Access. 2022;10:33980–33991. doi: 10.1109/ACCESS.2022.3161506. [DOI] [Google Scholar]

- 46.Polisetty S., El-Hag A., Jayram S. Classification of common discharges in outdoor insulation using acoustic signals and artificial neural network. High Volt. 2019;4:333–338. doi: 10.1049/hve.2019.0113. [DOI] [Google Scholar]

- 47.Jin L., Tian Z., Ai J., Zhang Y., Gao K. Condition Evaluation of the Contaminated Insulators by Visible Light Images Assisted With Infrared Information. IEEE Trans. Instrum. Meas. 2018;67:1349–1358. doi: 10.1109/TIM.2018.2794938. [DOI] [Google Scholar]

- 48.Pereira F., Crocker P., Leithardt V.R. PADRES: Tool for PrivAcy, Data REgulation and Security. SoftwareX. 2022;17:100895. doi: 10.1016/j.softx.2021.100895. [DOI] [Google Scholar]

- 49.Stefenon S.F., Kasburg C., Nied A., Klaar A.C.R., Ferreira F.C.S., Branco N.W. Hybrid deep learning for power generation forecasting in active solar trackers. IET Gener. Transm. Distrib. 2020;14:5667–5674. doi: 10.1049/iet-gtd.2020.0814. [DOI] [Google Scholar]

- 50.Kadiyala A., Kumar A. Applications of python to evaluate the performance of bagging methods. Environ. Prog. Sustain. Energy. 2018;37:1555–1559. doi: 10.1002/ep.13018. [DOI] [Google Scholar]

- 51.Sauer J., Mariani V.C., dos Santos Coelho L., Ribeiro M.H.D.M., Rampazzo M. Extreme gradient boosting model based on improved Jaya optimizer applied to forecasting energy consumption in residential buildings. Evol. Syst. 2021;13:577–588. doi: 10.1007/s12530-021-09404-2. [DOI] [Google Scholar]

- 52.Stefenon S.F., Ribeiro M.H.D.M., Nied A., Yow K.C., Mariani V.C., dos Santos Coelho L., Seman L.O. Time series forecasting using ensemble learning methods for emergency prevention in hydroelectric power plants with dam. Electr. Power Syst. Res. 2022;202:107584. doi: 10.1016/j.epsr.2021.107584. [DOI] [Google Scholar]

- 53.Saha S., Saha M., Mukherjee K., Arabameri A., Ngo P.T.T., Paul G.C. Predicting the deforestation probability using the binary logistic regression, random forest, ensemble rotational forest, REPTree: A case study at the Gumani River Basin, India. Sci. Total. Environ. 2020;730:139197. doi: 10.1016/j.scitotenv.2020.139197. [DOI] [PubMed] [Google Scholar]

- 54.da Silva R.G., Ribeiro M.H.D.M., Moreno S.R., Mariani V.C., dos Santos Coelho L. A novel decomposition-ensemble learning framework for multi-step ahead wind energy forecasting. Energy. 2021;216:119174. doi: 10.1016/j.energy.2020.119174. [DOI] [Google Scholar]

- 55.Ilhan S., Cherney E.A. Comparative tests on RTV silicone rubber coated porcelain suspension insulators in a salt-fog chamber. IEEE Trans. Dielectr. Electr. Insul. 2018;25:947–953. doi: 10.1109/TDEI.2018.006968. [DOI] [Google Scholar]

- 56.Ren A., Liu H., Wei J., Li Q. Natural Contamination and Surface Flashover on Silicone Rubber Surface under Haze—Fog Environment. Energies. 2017;10:1580. doi: 10.3390/en10101580. [DOI] [Google Scholar]

- 57.Stefenon S.F., Kasburg C., Freire R.Z., Silva Ferreira F.C., Bertol D.W., Nied A. Photovoltaic power forecasting using wavelet Neuro-Fuzzy for active solar trackers. J. Intell. Fuzzy Syst. 2021;40:1083–1096. doi: 10.3233/JIFS-201279. [DOI] [Google Scholar]

- 58.Tealab A. Time series forecasting using artificial neural networks methodologies: A systematic review. Future Comput. Inform. J. 2018;3:334–340. doi: 10.1016/j.fcij.2018.10.003. [DOI] [Google Scholar]

- 59.Abbasimehr H., Shabani M., Yousefi M. An optimized model using LSTM network for demand forecasting. Comput. Ind. Eng. 2020;143:106435. doi: 10.1016/j.cie.2020.106435. [DOI] [Google Scholar]

- 60.Salem A.A., Lau K.Y., Abdul-Malek Z., Al-Gailani S.A., Tan C.W. Flashover voltage of porcelain insulator under various pollution distributions: Experiment and modeling. Electr. Power Syst. Res. 2022;208:107867. doi: 10.1016/j.epsr.2022.107867. [DOI] [Google Scholar]

- 61.Cao J., Li Z., Li J. Financial time series forecasting model based on CEEMDAN and LSTM. Phys. Stat. Mech. Its Appl. 2019;519:127–139. doi: 10.1016/j.physa.2018.11.061. [DOI] [Google Scholar]

- 62.Wang W., Du Y., Chau K., Chen H., Liu C., Ma Q. A Comparison of BPNN, GMDH, and ARIMA for Monthly Rainfall Forecasting Based on Wavelet Packet Decomposition. Water. 2021;13:2871. doi: 10.3390/w13202871. [DOI] [Google Scholar]

- 63.Zardkoohi M., Fatemeh Molaeezadeh S. Long-term prediction of blood pressure time series using ANFIS system based on DKFCM clustering. Biomed. Signal Process. Control. 2022;74:103480. doi: 10.1016/j.bspc.2022.103480. [DOI] [Google Scholar]

- 64. Zhang S., Chen Y., Zhang W., Feng R. A novel ensemble deep learning model with dynamic error correction and multi-objective ensemble pruning for time series forecasting. Inf. Sci. 2021;544:427–445. doi: 10.1016/j.ins.2020.08.053.

- 65. Liu F., Cai M., Wang L., Lu Y. An Ensemble Model Based on Adaptive Noise Reducer and Over-Fitting Prevention LSTM for Multivariate Time Series Forecasting. IEEE Access. 2019;7:26102–26115. doi: 10.1109/ACCESS.2019.2900371.

- 66. Abbasimehr H., Paki R. Improving time series forecasting using LSTM and attention models. J. Ambient. Intell. Humaniz. Comput. 2022;13:673–691. doi: 10.1007/s12652-020-02761-x.

- 67. Smyl S. A hybrid method of exponential smoothing and recurrent neural networks for time series forecasting. Int. J. Forecast. 2020;36:75–85. doi: 10.1016/j.ijforecast.2019.03.017.

- 68. Sagheer A., Kotb M. Unsupervised pre-training of a deep LSTM-based stacked autoencoder for multivariate time series forecasting problems. Sci. Rep. 2019;9:19038. doi: 10.1038/s41598-019-55320-6.

- 69. Stefenon S.F., Freire R.Z., Meyer L.H., Corso M.P., Sartori A., Nied A., Klaar A.C.R., Yow K.C. Fault detection in insulators based on ultrasonic signal processing using a hybrid deep learning technique. IET Sci. Meas. Technol. 2020;14:953–961. doi: 10.1049/iet-smt.2020.0083.

- 70. Smagulova K., James A.P. A survey on LSTM memristive neural network architectures and applications. Eur. Phys. J. Spec. Top. 2019;228:2313–2324. doi: 10.1140/epjst/e2019-900046-x.

- 71. Han Y., Wang C., Ren Y., Wang S., Zheng H., Chen G. Short-Term Prediction of Bus Passenger Flow Based on a Hybrid Optimized LSTM Network. ISPRS Int. J. Geo Inf. 2019;8:366. doi: 10.3390/ijgi8090366.

- 72. Chang Z., Zhang Y., Chen W. Electricity price prediction based on hybrid model of adam optimized LSTM neural network and wavelet transform. Energy. 2019;187:115804. doi: 10.1016/j.energy.2019.07.134.

- 73. Lin J., Li H., Liu N., Gao J., Li Z. Automatic Lithology Identification by Applying LSTM to Logging Data: A Case Study in X Tight Rock Reservoirs. IEEE Geosci. Remote. Sens. Lett. 2021;18:1361–1365. doi: 10.1109/LGRS.2020.3001282.

- 74. Dodangeh E., Panahi M., Rezaie F., Lee S., Tien Bui D., Lee C.W., Pradhan B. Novel hybrid intelligence models for flood-susceptibility prediction: Meta optimization of the GMDH and SVR models with the genetic algorithm and harmony search. J. Hydrol. 2020;590:125423. doi: 10.1016/j.jhydrol.2020.125423.

- 75. Roshani M., Sattari M.A., Muhammad Ali P.J., Roshani G.H., Nazemi B., Corniani E., Nazemi E. Application of GMDH neural network technique to improve measuring precision of a simplified photon attenuation based two-phase flowmeter. Flow Meas. Instrum. 2020;75:101804. doi: 10.1016/j.flowmeasinst.2020.101804.

- 76. Roshani M., Phan G., Hossein Roshani G., Hanus R., Nazemi B., Corniani E., Nazemi E. Combination of X-ray tube and GMDH neural network as a nondestructive and potential technique for measuring characteristics of gas-oil-water three phase flows. Measurement. 2021;168:108427. doi: 10.1016/j.measurement.2020.108427.

- 77. Al-qaness M.A., Ewees A.A., Fan H., Abualigah L., Elaziz M.A. Boosted ANFIS model using augmented marine predator algorithm with mutation operators for wind power forecasting. Appl. Energy. 2022;314:118851. doi: 10.1016/j.apenergy.2022.118851.

- 78. Suparta W., Samah A.A. Rainfall prediction by using ANFIS times series technique in South Tangerang, Indonesia. Geod. Geodyn. 2020;11:411–417. doi: 10.1016/j.geog.2020.08.001.

- 79. Ribeiro G.T., Mariani V.C., dos Santos Coelho L. Enhanced ensemble structures using wavelet neural networks applied to short-term load forecasting. Eng. Appl. Artif. Intell. 2019;82:272–281. doi: 10.1016/j.engappai.2019.03.012.

- 80. Ribeiro M.H.D.M., Mariani V.C., dos Santos Coelho L. Multi-step ahead meningitis case forecasting based on decomposition and multi-objective optimization methods. J. Biomed. Inform. 2020;111:103575. doi: 10.1016/j.jbi.2020.103575.

- 81. Divina F., Gilson A., Gómez-Vela F., García Torres M., Torres J.F. Stacking Ensemble Learning for Short-Term Electricity Consumption Forecasting. Energies. 2018;11:949. doi: 10.3390/en11040949.

- 82. Gao X., Shan C., Hu C., Niu Z., Liu Z. An Adaptive Ensemble Machine Learning Model for Intrusion Detection. IEEE Access. 2019;7:82512–82521. doi: 10.1109/ACCESS.2019.2923640.

- 83. da Silva R.G., Ribeiro M.H.D.M., Mariani V.C., dos Santos Coelho L. Forecasting Brazilian and American COVID-19 cases based on artificial intelligence coupled with climatic exogenous variables. Chaos Solitons Fractals. 2020;139:110027. doi: 10.1016/j.chaos.2020.110027.

- 84. Tan M., Yuan S., Li S., Su Y., Li H., He F. Ultra-Short-Term Industrial Power Demand Forecasting Using LSTM Based Hybrid Ensemble Learning. IEEE Trans. Power Syst. 2020;35:2937–2948. doi: 10.1109/TPWRS.2019.2963109.

- 85. Sun S., Wang S., Zhang G., Zheng J. A decomposition-clustering-ensemble learning approach for solar radiation forecasting. Sol. Energy. 2018;163:189–199. doi: 10.1016/j.solener.2018.02.006.

- 86. Ribeiro M.H.D.M., Stefenon S.F., de Lima J.D., Nied A., Mariani V.C., Coelho L.S. Electricity Price Forecasting Based on Self-Adaptive Decomposition and Heterogeneous Ensemble Learning. Energies. 2020;13:5190. doi: 10.3390/en13195190.

- 87. Boiroux D., Jørgensen J.B. Sequential L1 Quadratic Programming for Nonlinear Model Predictive Control. IFAC Symp. Dyn. Control. Process. Syst. 2019;52:474–479. doi: 10.1016/j.ifacol.2019.06.107.

- 88. Vijayan S.V., Mohanta H.K., Pani A.K. Support vector regression modeling in recursive just-in-time learning framework for adaptive soft sensing of naphtha boiling point in crude distillation unit. Pet. Sci. 2021;18:1230–1239. doi: 10.1016/j.petsci.2021.07.001.

- 89. Gu B., Shan Y., Quan X., Zheng G. Accelerating Sequential Minimal Optimization via Stochastic Subgradient Descent. IEEE Trans. Cybern. 2021;51:2215–2223. doi: 10.1109/TCYB.2019.2893289.

- 90. Lv Y., Peng S., Yuan Y., Wang C., Yin P., Liu J., Wang C. A classifier using online bagging ensemble method for big data stream learning. Tsinghua Sci. Technol. 2019;24:379–388. doi: 10.26599/TST.2018.9010119.

- 91. Tanha J., Abdi Y., Samadi N., Razzaghi N., Asadpour M. Boosting methods for multi-class imbalanced data classification: An experimental review. J. Big Data. 2020;7:70. doi: 10.1186/s40537-020-00349-y.

- 92. Yaman M.A., Subasi A., Rattay F. Comparison of Random Subspace and Voting Ensemble Machine Learning Methods for Face Recognition. Symmetry. 2018;10:651. doi: 10.3390/sym10110651.

- 93. Wen L., Hughes M. Coastal Wetland Mapping Using Ensemble Learning Algorithms: A Comparative Study of Bagging, Boosting and Stacking Techniques. Remote. Sens. 2020;12:1683. doi: 10.3390/rs12101683.

- 94. Kim D., Baek J.G. Bagging ensemble-based novel data generation method for univariate time series forecasting. Expert Syst. Appl. 2022;203:117366. doi: 10.1016/j.eswa.2022.117366.

- 95. Truong X.L., Mitamura M., Kono Y., Raghavan V., Yonezawa G., Truong X.Q., Do T.H., Tien Bui D., Lee S. Enhancing Prediction Performance of Landslide Susceptibility Model Using Hybrid Machine Learning Approach of Bagging Ensemble and Logistic Model Tree. Appl. Sci. 2018;8:1046. doi: 10.3390/app8071046.

- 96. Jiang Y., Ge H., Zhang Y. Quantitative analysis of wheat maltose by combined terahertz spectroscopy and imaging based on Boosting ensemble learning. Food Chem. 2020;307:125533. doi: 10.1016/j.foodchem.2019.125533.

- 97. Kumari P., Toshniwal D. Extreme gradient boosting and deep neural network based ensemble learning approach to forecast hourly solar irradiance. J. Clean. Prod. 2021;279:123285. doi: 10.1016/j.jclepro.2020.123285.

- 98. Basaran K., Özçift A., Kılınç D. A new approach for prediction of solar radiation with using ensemble learning algorithm. Arab. J. Sci. Eng. 2019;44:7159–7171. doi: 10.1007/s13369-019-03841-7.

- 99. Liang W., Sari A., Zhao G., McKinnon S.D., Wu H. Short-term rockburst risk prediction using ensemble learning methods. Nat. Hazards. 2020;104:1923–1946. doi: 10.1007/s11069-020-04255-7.

- 100. Ho T.K. The random subspace method for constructing decision forests. IEEE Trans. Pattern Anal. Mach. Intell. 1998;20:832–844. doi: 10.1109/34.709601.

- 101. Pham B.T., Tien Bui D., Prakash I., Dholakia M. Hybrid integration of Multilayer Perceptron Neural Networks and machine learning ensembles for landslide susceptibility assessment at Himalayan area (India) using GIS. Catena. 2017;149:52–63. doi: 10.1016/j.catena.2016.09.007.

- 102. Pham B.T., Prakash I., Tien Bui D. Spatial prediction of landslides using a hybrid machine learning approach based on Random Subspace and Classification and Regression Trees. Geomorphology. 2018;303:256–270. doi: 10.1016/j.geomorph.2017.12.008.

- 103. Li G., Zheng Y., Liu J., Zhou Z., Xu C., Fang X., Yao Q. An improved stacking ensemble learning-based sensor fault detection method for building energy systems using fault-discrimination information. J. Build. Eng. 2021;43:102812. doi: 10.1016/j.jobe.2021.102812.

- 104. Wu T., Zhang W., Jiao X., Guo W., Alhaj Hamoud Y. Evaluation of stacking and blending ensemble learning methods for estimating daily reference evapotranspiration. Comput. Electron. Agric. 2021;184:106039. doi: 10.1016/j.compag.2021.106039.

- 105. Stefenon S.F., Freire R.Z., Coelho L.S., Meyer L.H., Grebogi R.B., Buratto W.G., Nied A. Electrical Insulator Fault Forecasting Based on a Wavelet Neuro-Fuzzy System. Energies. 2020;13:484. doi: 10.3390/en13020484.

- 106. El-Hendawi M., Wang Z. An ensemble method of full wavelet packet transform and neural network for short term electrical load forecasting. Electr. Power Syst. Res. 2020;182:106265. doi: 10.1016/j.epsr.2020.106265.

- 107. Sui X., Wan K., Zhang Y. Pattern recognition of SEMG based on wavelet packet transform and improved SVM. Optik. 2019;176:228–235. doi: 10.1016/j.ijleo.2018.09.040.

- 108. Tayab U.B., Zia A., Yang F., Lu J., Kashif M. Short-term load forecasting for microgrid energy management system using hybrid HHO-FNN model with best-basis stationary wavelet packet transform. Energy. 2020;203:117857. doi: 10.1016/j.energy.2020.117857.

- 109. Stefenon S.F., Ribeiro M.H.D.M., Nied A., Mariani V.C., Coelho L.S., da Rocha D.F.M., Grebogi R.B., Ruano A.E.B. Wavelet group method of data handling for fault prediction in electrical power insulators. Int. J. Electr. Power Energy Syst. 2020;123:106269. doi: 10.1016/j.ijepes.2020.106269.

- 110. Yesilli M.C., Khasawneh F.A., Otto A. On transfer learning for chatter detection in turning using wavelet packet transform and ensemble empirical mode decomposition. CIRP J. Manuf. Sci. Technol. 2020;28:118–135. doi: 10.1016/j.cirpj.2019.11.003.

- 111. Wang L., Liu Z., Cao H., Zhang X. Subband averaging kurtogram with dual-tree complex wavelet packet transform for rotating machinery fault diagnosis. Mech. Syst. Signal Process. 2020;142:106755. doi: 10.1016/j.ymssp.2020.106755.

- 112. Arshad, Ahmad J., Tahir A., Stewart B.G., Nekahi A. Forecasting Flashover Parameters of Polymeric Insulators under Contaminated Conditions Using the Machine Learning Technique. Energies. 2020;13:3889. doi: 10.3390/en13153889.

- 113. Ribeiro M.H.D.M., dos Santos Coelho L. Ensemble approach based on bagging, boosting and stacking for short-term prediction in agribusiness time series. Appl. Soft Comput. 2020;86:105837. doi: 10.1016/j.asoc.2019.105837.

- 114. Kasburg C., Stefenon S.F. Deep Learning for Photovoltaic Generation Forecast in Active Solar Trackers. IEEE Lat. Am. Trans. 2019;17:2013–2019. doi: 10.1109/TLA.2019.9011546.

- 115. de Lima R.R., Fernandes A.M.R., Bombasar J.R., da Silva B.A., Crocker P., Leithardt V.R.Q. An Empirical Comparison of Portuguese and Multilingual BERT Models for Auto-Classification of NCM Codes in International Trade. Big Data Cogn. Comput. 2022;6:8. doi: 10.3390/bdcc6010008.

- 116. Fernandes F., Stefenon S.F., Seman L.O., Nied A., Ferreira F.C.S., Subtil M.C.M., Klaar A.C.R., Leithardt V.R.Q. Long short-term memory stacking model to predict the number of cases and deaths caused by COVID-19. J. Intell. Fuzzy Syst. 2022;42:6221–6234. doi: 10.3233/JIFS-212788.

- 117. Chicco D., Warrens M.J., Jurman G. The coefficient of determination R-squared is more informative than SMAPE, MAE, MAPE, MSE and RMSE in regression analysis evaluation. PeerJ Comput. Sci. 2021;7:e623. doi: 10.7717/peerj-cs.623.

Data Availability Statement