Abstract

Background:

Telemedicine video consultations are rapidly increasing globally, accelerated by the COVID-19 pandemic. This presents opportunities to use computer vision technologies to augment clinician visual judgement, because video cameras are ubiquitous in personal devices and new techniques, such as DeepLabCut (DLC), can precisely measure human movement from smartphone videos. However, the accuracy of DLC in tracking human movements in videos obtained from laptop cameras, which have a much lower frame rate (frames per second, FPS), has never been investigated; this is a critical gap because patients use laptops for most telemedicine consultations.

Objectives:

To determine the validity and reliability of DLC applied to laptop videos to measure finger tapping, a validated test of human movement.

Method:

Sixteen adults completed finger-tapping tests at 0.5 Hz, 1 Hz, 2 Hz, 3 Hz and at maximal speed. Hand movements were recorded simultaneously by a laptop camera at 30 frames per second (FPS) and by Optotrak, a 3D motion analysis system at 250 FPS. Eight DLC neural network architectures (ResNet50, ResNet101, ResNet152, MobileNetV1, MobileNetV2, EfficientNetB0, EfficientNetB3, EfficientNetB6) were applied to the laptop video and extracted movement features were compared to the ground truth Optotrak motion tracking.

Results:

Nearly 96% (529/552) of DLC measures were within 0.5 Hz of the Optotrak measures. At tapping frequencies >4 Hz, there was a progressive decline in accuracy, attributed to motion blur associated with the laptop camera’s low FPS. Computer vision methods hold potential for moving us towards intelligent telemedicine by providing human movement analysis during consultations. However, further developments are required to accurately measure the fastest movements.

Keywords: Telemedicine, DeepLabCut, Finger tapping, Motor control, Computer vision

1. Introduction

The assessment of human movement by visual observation is a fundamental part of clinical assessments in all areas of medicine. The clinician’s visual judgement of patient movement plays a key role in diagnosis and assessment across a multitude of conditions throughout the life course of their patients. For example, clinicians evaluate how a baby grasps an object for child development assessments, the range of eye movements after surgery, the amplitude of arm movements after rehabilitation, the speed of walking after a stroke, and the benefits (and side effects) of medications for hand tremor. These are just a few examples of the medical consultations taking place all over the world every hour of every day.

However, the accuracy of clinicians, even those expert in movement analysis, is constrained by the limits of human perception, which cannot accurately measure subtle changes. Numerous publications have proposed technological methods to objectively measure human movement [1], [2], [3]. These have the theoretical benefit of allowing remote monitoring and assessment, but a requirement for specialist equipment, wearable sensors or patient engagement with specific apps likely explains why none have entered routine clinical practice.

Wearable sensors are commonly used to extract finger tapping features in the laboratory. In prior studies, machine learning methods have been applied to classify finger tapping patterns. For instance, Shima et al. [4] applied Log-linearized Gaussian Mixture Networks to sensor data to extract finger tapping movements. Wissel et al. [5] applied a hidden Markov model and a support-vector machine (SVM) to classify finger movement patterns into different groups using electroencephalogram data. Khan et al. [6] applied a random forests model to classify individual finger movements using functional near-infrared spectroscopy data. These wearable sensor or neurophysiological signal based methods can extract accurate finger tapping features; however, they require participants to have sensors or other equipment physically attached to them, which limits implementation in any environment outside of a specialized laboratory. In contrast, camera-based methods are non-touch and can be used remotely, thus providing wider accessibility.

The COVID-19 pandemic has highlighted the reach but also the challenges of remote medicine delivery [7]. Telemedicine consultations remain severely limited compared to standard face-to-face consultations, by the fact that clinicians cannot accurately examine patients’ movements remotely. This was highlighted in a study of neurology clinics during the COVID-19 pandemic, that found telemedicine consultations were much better suited to conditions that were based on describing symptoms (e.g. headaches, epilepsy) than those that required doctors to observe abnormalities in movement (e.g. Parkinson’s, Multiple Sclerosis [8]). This inability of clinicians to accurately evaluate human movements remotely, combined with the swift uptake of telemedicine, results in a significant risk to patient safety.

Thus, there is a growing and urgent need to extend the capabilities of telemedicine so that intelligent video technologies can provide accurate measurement of movement that is clinically relevant. Recent developments in computer vision deep learning methods open up the opportunity for remote healthcare assessments to include precise measures of human movement. DeepLabCut (DLC) is a new artificial intelligence software that was originally designed to perform marker-less tracking of research mice and insects [9] and has since been used in research-related fields in human movement. The coming age of video consultation presents opportunities to use technology to aid clinician visual judgement, because video cameras are so ubiquitous in personal devices (no special equipment is required) and computing techniques have the potential to precisely measure human movement from video. This could provide a new form of augmented or automatic remote healthcare assessment.

However, if similar methods are to be successfully applied to telemedicine, it is necessary to evaluate their accuracy when applied to videos obtained from standard laptop cameras (with a relatively low FPS) because this is one of the most common computer cameras used by patients for telemedicine consultations, including older adults [10] and carers, and especially for conditions that have a central focus on movement assessment [11]. So far, there has not been a published comparison of human movements measured via analysis of video collected through a laptop camera with a ‘gold standard’ measure obtained through wearable movement sensors.

In this study, for the first time, we determine the validity and reliability of DLC computer vision methods applied to 2D video collected via a standard laptop camera at 30 FPS, compared to a 3D gold-standard wearable sensor method collected at 250 FPS. To clarify, the objective of this study is not to classify participants into positive and negative controls, but rather to validate whether computer vision methods can extract accurate hand movement features from relatively low frame rate (30 FPS) laptop video, compared with the gold-standard Optotrak method. We use a well-validated clinical test of human movement control, finger tapping, during which a person is asked to repetitively tap index finger and thumb together. This test is easy for participants to perform whilst seated and allows for a range of different frequencies and component measures to be evaluated. Additionally, the finger tapping test is widely used to aid the diagnosis of neurological diseases, and tracking small objects (such as a hand or finger) in videos or images is challenging in the computer vision domain. A validity and reliability study showing how accurately computer vision can measure finger tapping, compared with the gold-standard wearable sensor method, is therefore timely and necessary. Our approach recognizes the need to loosen specific requirements, such as patient positioning and high-quality camera equipment, if objective measures of movement are to be successfully integrated into real-world telemedicine consultations.

2. Materials and methods

2.1. Participants

A convenience sample of sixteen staff and students (9 female, mean age 34.5 years; range 24–52) at the University of Tasmania were recruited via an email invitation. Assessments took place at the University of Tasmania Sensorimotor Neuroscience and Ageing Laboratory. The study was approved by the institutional ethics review board at the University of Tasmania (Project ID: 21660) and participants provided written informed consent in accordance with the Declaration of Helsinki. Information on gender, age, dominant hand, and whether there was any history of neurological disorders, was collected from each participant.

2.2. Experiment design

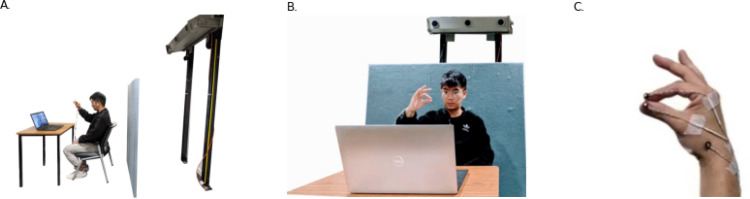

Participants sat facing a Dell Laptop (Model Precision 5540) placed on a table approximately 60 cm in front of them. The laptop 2D camera captured video images at 30 FPS with a resolution of 1280 × 720 pixels. A high-speed 3D Optotrak camera system (Northern Digital Inc.) was fixed to the wall approximately 2 m behind the participant (Fig. 1A and B). The Optotrak uses three co-linear detectors to record 3D (x, y, z) positional data of infrared Light Emitting Diodes (LEDs, active markers) at 250 FPS with an accuracy of 0.1 mm (Fig. 1C [12]). A plain blue board placed 50 cm behind the participant provided a uniform background for the laptop camera (Fig. 1B). Standard ambient lighting was used.

Fig. 1.

Experiment design. 1A: Lateral view with the participant sitting approximately 60 cm from the laptop and approximately 2 m from the Optotrak camera. 1B: Experimental set up from the front view, with a plain light blue board placed behind the participant and the hand visible to both the Optotrak camera and the laptop camera. 1C: The positions of the LED sensors with one secured on the index fingertip, one on the thumb-tip and one over the radial styloid process, each secured with adhesive tape. In this position, the sensors were visible to the Optotrak camera but not to the laptop camera, so as not to interfere with DLC video image analysis.

Three small (5 mm diameter) lightweight (1 g) LED sensors were attached to the participant’s right hand: one sensor on the lateral aspect of the index fingertip, one on the dorsal aspect of the thumb-tip and one over the radial styloid process (Fig. 1C). The sensor positions were chosen so they were visible to the Optotrak camera while invisible to the laptop camera. In this way, both cameras could record the movement simultaneously, while the image of the sensors did not affect how the deep learning algorithms detected key points on the hand.

2.3. Protocol

The participant flexed their right elbow and held their right hand steady with the index finger and thumb opposed and their little finger facing towards the laptop camera. The researcher checked that the participant’s hand position was captured by both the laptop camera (visible on the screen) and the Optotrak system (sensor positions detected) and then started recording from both systems. The participant was instructed to hold their hand still for a few seconds of recording and begin finger tapping when the researcher gave the ‘start’ command. The delayed start allowed time-synchronization between cameras during offline analysis.

The participant was instructed to tap their index finger against their thumb ‘as big and fast as possible’ (internally-paced). For the next four conditions, an electronic metronome (auditory tone) externally paced each period of finger tapping at 0.5 Hz, 1 Hz, 2 Hz and 3 Hz. After 20 s of finger tapping, the participant was instructed to stop. Each condition recorded thus comprised a preparation period of 3–5 s and a ‘tapping’ period of 20 s.

The laptop and table were then moved 20 cm further away from the participant and the same set of recordings were repeated. Thus, each participant completed ten recordings in total (see Table 1). After each recording, the participant had a 30-second period to rest. The order of conditions was fixed.

Six recruited participants (3 female, mean age 28.7 years; range 24–36) agreed to complete the full protocol twice. They had a five-minute break between the first and second recordings. In total, this resulted in 220 2D videos of 23–25 s each, collected via the 30 FPS laptop camera (around 165,000 frames in total), with paired 3D positional data collected via the 250 FPS 3D wearable sensor system.
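The recording counts in this paragraph follow from simple arithmetic, which the short sketch below reproduces. The constant and function names are illustrative only, and the frame total assumes the upper bound of 25 s for every video.

```python
# Recording counts implied by the protocol (all names are illustrative).
PARTICIPANTS = 16          # every participant completed the protocol once
REPEAT_PARTICIPANTS = 6    # six participants completed it a second time
CONDITIONS = 10            # 5 tapping conditions x 2 laptop distances
FPS = 30                   # laptop camera frame rate
MAX_VIDEO_SECONDS = 25     # assumption: 5 s preparation + 20 s tapping


def total_videos() -> int:
    """Number of videos across the first and repeat sessions."""
    return (PARTICIPANTS + REPEAT_PARTICIPANTS) * CONDITIONS


def approx_total_frames() -> int:
    """Approximate frame count, assuming every video lasts 25 s."""
    return total_videos() * MAX_VIDEO_SECONDS * FPS


print(total_videos())         # 220
print(approx_total_frames())  # 165000
```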

Table 1.

Finger tapping protocol.

| Condition number | Distance of participant’s hand from laptop | Tapping frequency |
|---|---|---|
| 1 | 60 cm | as fast as possible |
| 2 | 60 cm | 0.5 Hz |
| 3 | 60 cm | 1 Hz |
| 4 | 60 cm | 2 Hz |
| 5 | 60 cm | 3 Hz |
| 6 | 80 cm | as fast as possible |
| 7 | 80 cm | 0.5 Hz |
| 8 | 80 cm | 1 Hz |
| 9 | 80 cm | 2 Hz |
| 10 | 80 cm | 3 Hz |

2.4. Key point detection on hand through 2D video

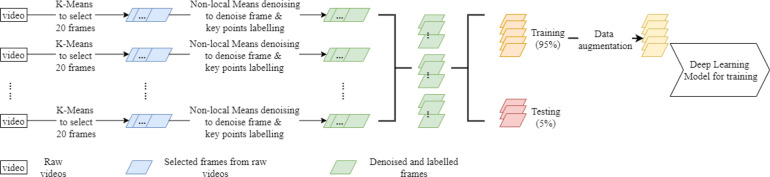

We implemented 5 steps to detect key points (index fingertip and thumb-tip) on the hands through 2D videos, i.e., data pre-processing, data augmentation, model training, model evaluation and key point inference by using the DLC framework [13]. Fig. 2 shows the whole process and details are given in the following sub-sections.

Fig. 2.

The process of key point detection on the hands using 2D video data.

2.4.1. Data pre-processing

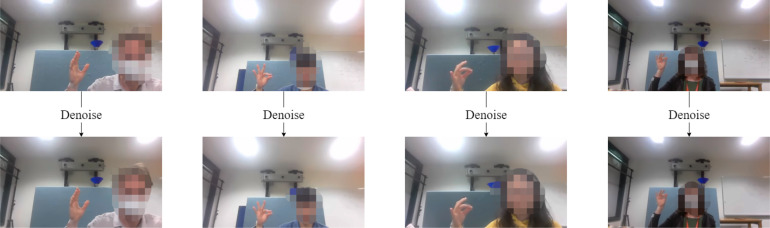

In the data pre-processing step, initially 20 frames from each of the 220 finger tapping videos were selected by a K-means (K=10) clustering algorithm and the positions of the index fingertip and thumb-tip were manually labelled. These 20 selected frames were regarded as representative of the video, which contained 600 frames of tapping in total (30 FPS × 20 s). To reduce the noise on individual frames, we applied a non-local means denoising algorithm [14]. Fig. 3 shows sample frames before and after denoising using this technique, demonstrating that the background behind the hand becomes cleaner after denoising.

Fig. 3.

Using the non-local means denoising algorithm to denoise the raw frames. This produced a clearer background. Faces have been covered with mosaics on the frames.

2.4.2. Data augmentation

Data augmentation settings in the DLC framework were implemented. Specifically, the probability of adding augmentation to a frame was set at 0.5, the scale crop ratio was 0.4, the rotation degree was +/−25 degrees, the fraction of applying rotation was 0.4, and the scale rate ranged between 0.5 and 1.25.
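As a rough illustration of what these settings mean for a single training frame, the sketch below samples augmentation parameters in plain Python. It is not DLC code: the dictionary keys and the function `sample_augmentation` are hypothetical names, and the interpretation of each setting (for example, drawing rotation and scale uniformly from their ranges) is an assumption made for illustration.

```python
import random

# Settings reported in the text; the key names are hypothetical, not DLC's.
AUGMENTATION = {
    "apply_prob": 0.5,          # probability that a frame is augmented at all
    "crop_ratio": 0.4,          # scale crop ratio (recorded, unused in this sketch)
    "rotation_deg": 25.0,       # rotation drawn from [-25, +25] degrees
    "rotation_fraction": 0.4,   # fraction of augmented frames that are rotated
    "scale_range": (0.5, 1.25)  # lower and upper scale rate
}


def sample_augmentation(rng, cfg=AUGMENTATION):
    """Draw illustrative augmentation parameters for one frame."""
    params = {"augment": False, "rotation_deg": 0.0, "scale": 1.0}
    if rng.random() >= cfg["apply_prob"]:
        return params  # this frame is left unchanged
    params["augment"] = True
    if rng.random() < cfg["rotation_fraction"]:
        limit = cfg["rotation_deg"]
        params["rotation_deg"] = rng.uniform(-limit, limit)
    low, high = cfg["scale_range"]
    params["scale"] = rng.uniform(low, high)
    return params


rng = random.Random(0)  # fixed seed so the sketch is repeatable
samples = [sample_augmentation(rng) for _ in range(1000)]
augmented = sum(s["augment"] for s in samples)
print(f"{augmented} of 1000 frames augmented")
```

With an application probability of 0.5, roughly half of the sampled frames should be flagged for augmentation.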

2.4.3. Model training

In the model training step, the 4,400 denoised and labelled frames were randomly split into training (95%) and testing (5%) datasets. Of the many neural network based key point detection methods available, we selected three typical networks to be trained for fingertip detection: ResNet50 [15], EfficientNetB0 [16] and MobileNetV2 [17]. All three are commonly used as backbone networks for different computer vision tasks, including key point detection [18], [19]. ResNet50 introduces the concept of skip connections, which address the vanishing gradient problem in neural networks. As a result, a ResNet can be very deep, allowing it to learn deeper features without suffering from vanishing gradients. EfficientNetB0 is well known for its scaling method, which uniformly scales network depth, width and resolution using a compound coefficient [16]. MobileNetV2 is a lightweight network that can provide real-time key point detection while still achieving good performance in key point detection tasks [20]. All these networks are state-of-the-art methods in the computer vision field, and they represent different types of novelty in terms of neural network theory. Additionally, these networks have been embedded into the DLC software, which is convenient for neuroscientists and other non-computer scientists who may wish to use these methods to track movements. The output layer of each network architecture comprised 2 score maps (2D grids with values of 0–1 in each pixel) indicating the presence of the thumb-tip or index fingertip. The Adam optimizer [21] was applied in the learning process. To take advantage of transfer learning, training started from an ImageNet pre-trained model and ended after 50,000 epochs. The loss function was calculated as the cross-entropy between ground truth score maps and predicted score maps.
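The loss described above can be illustrated with a pixel-wise cross-entropy between a target score map and a predicted score map. The sketch below is plain Python rather than DLC code; the function name `score_map_cross_entropy` is hypothetical, and the use of a binary cross-entropy averaged over all pixels is an assumption about the exact form of the loss.

```python
import math


def score_map_cross_entropy(target, predicted, eps=1e-7):
    """Mean binary cross-entropy between two score maps (lists of rows)."""
    total = 0.0
    count = 0
    for target_row, predicted_row in zip(target, predicted):
        for t, p in zip(target_row, predicted_row):
            p = min(max(p, eps), 1.0 - eps)  # keep log() finite
            total += -(t * math.log(p) + (1.0 - t) * math.log(1.0 - p))
            count += 1
    return total / count


# Toy 3 x 3 score maps: the key point sits in the centre cell.
target = [[0.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 0.0]]
good = [[0.1, 0.1, 0.1], [0.1, 0.9, 0.1], [0.1, 0.1, 0.1]]
poor = [[0.1, 0.9, 0.1], [0.1, 0.1, 0.1], [0.1, 0.1, 0.1]]

# A prediction that peaks in the right cell gives the lower loss.
print(score_map_cross_entropy(target, good) < score_map_cross_entropy(target, poor))  # True
```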

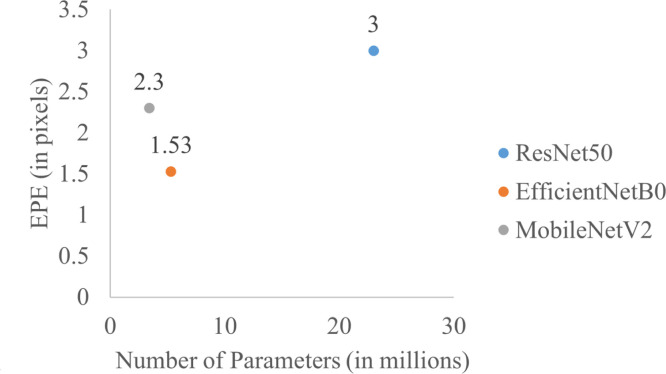

2.4.4. Model evaluation

To evaluate the performance of the model, we calculated the End Point Error (EPE) between the predicted point position and the real point position on the testing dataset for each of the three neural network architectures (Table 2). Training results for additional neural networks are included in the supplementary material. Eq. (1) shows the calculation of EPE.

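$$\mathrm{EPE} = \frac{1}{NK}\sum_{i=1}^{N}\sum_{k=1}^{K}\left\lVert \hat{p}_{k}^{(i)} - p_{k}^{(i)} \right\rVert_{2} \tag{1}$$

where $N$ is the number of images, $K$ is the number of types of hand key points (here $K = 2$, for index fingertip and thumb-tip), $\hat{p}_{k}^{(i)}$ is the predicted position of the $k$th hand key point on the $i$th image and $p_{k}^{(i)}$ is the true position of the $k$th key point on the $i$th image.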
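As a worked illustration of Eq. (1), the sketch below computes the mean Euclidean pixel distance between predicted and labelled key points. The function name and the toy coordinates are hypothetical, and averaging over both key points and images is an assumption about how the error is aggregated.

```python
import math


def end_point_error(predicted, actual):
    """Mean Euclidean distance in pixels.

    Both arguments are lists with one entry per image; each entry is a
    list of (x, y) positions, one per key point.
    """
    distances = [
        math.dist(p, a)
        for predicted_image, actual_image in zip(predicted, actual)
        for p, a in zip(predicted_image, actual_image)
    ]
    return sum(distances) / len(distances)


# Two images, two key points each (index fingertip, thumb-tip); made-up values.
predicted = [[(103.0, 204.0), (50.0, 60.0)], [(10.0, 10.0), (20.0, 26.0)]]
actual = [[(100.0, 200.0), (50.0, 60.0)], [(10.0, 12.0), (20.0, 20.0)]]

print(end_point_error(predicted, actual))  # (5 + 0 + 2 + 6) / 4 = 3.25
```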

Table 2.

End Point Error (EPE) for the three deep learning neural networks.

| Deep learning neural network | EPE on training dataset | EPE on testing dataset |
|---|---|---|
| ResNet50 | 2.98 pixels | 3.00 pixels |
| EfficientNetB0 | 1.52 pixels | 1.53 pixels |
| MobileNetV2 | 2.21 pixels | 2.30 pixels |

To evaluate the complexity of the models, we plotted EPE against the number of parameters for the different networks (Fig. 4) to compare their efficiency. Complexity evaluations for additional neural networks are included in the supplementary material. Overall, the fingertip tracking errors of all networks are very small (around 1.5 to 3 pixels), while EfficientNetB0 achieves the lowest EPE with a relatively small number of parameters.

Fig. 4.

The performance (measured in EPE) vs number of parameters for different networks.

2.4.5. Key point inference

In the key point inference stage, the trained neural network architectures were used to predict key points on frames from the original videos, and the output was a set of 2D (x, y) coordinates of the index fingertip and thumb-tip in pixels.
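To make the step from score map to pixel coordinates concrete, the sketch below picks the highest-scoring cell of a score map and maps it back to image pixels. This is a deliberate simplification and not DLC's implementation: the function name, the `stride` value of 8 and the cell-centre mapping are all assumptions, and any sub-pixel refinement is ignored.

```python
def score_map_to_pixel(score_map, stride=8.0):
    """Return (x, y, confidence) for the highest-scoring cell.

    `score_map` is a list of rows; `stride` is the assumed number of image
    pixels covered by one score-map cell.
    """
    best_row, best_col, best_score = 0, 0, float("-inf")
    for row_index, row in enumerate(score_map):
        for col_index, score in enumerate(row):
            if score > best_score:
                best_row, best_col, best_score = row_index, col_index, score
    # Map the centre of the winning cell back to image pixels.
    x = (best_col + 0.5) * stride
    y = (best_row + 0.5) * stride
    return x, y, best_score


# Toy score map for the index fingertip; the peak is in the centre cell.
index_map = [[0.01, 0.02, 0.01], [0.03, 0.95, 0.04], [0.01, 0.02, 0.01]]
print(score_map_to_pixel(index_map))  # (12.0, 12.0, 0.95)
```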

2.5. Extraction of hand movement features

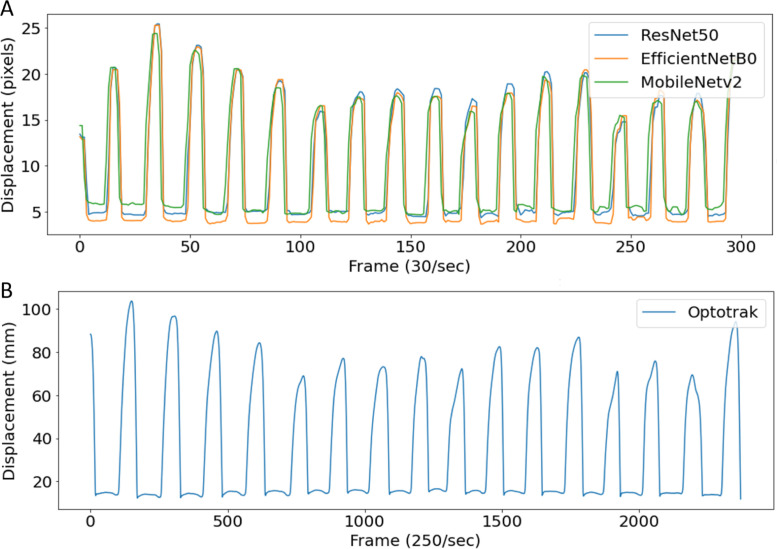

For the Optotrak system, the Euclidean distance (D) (Eq. (2)) was calculated between the fingertip sensor and thumb-tip sensor in 3D space and measured in millimetres. For the computer vision methods, displacement between the index fingertip and thumb-tip was measured in 2D space in pixels (Eq. (3)). Fig. 5 shows displacement vs time graphs for both 2D space (based on 2D video) and 3D space (based on Optotrak).

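$$D_{3D} = \sqrt{(x_{t} - x_{i})^{2} + (y_{t} - y_{i})^{2} + (z_{t} - z_{i})^{2}} \tag{2}$$

$$D_{2D} = \sqrt{(x_{t} - x_{i})^{2} + (y_{t} - y_{i})^{2}} \tag{3}$$

where thumb-tip position is $(x_{t}, y_{t}, z_{t})$ and $(x_{t}, y_{t})$ for 3D and 2D space respectively, while index fingertip position is $(x_{i}, y_{i}, z_{i})$ and $(x_{i}, y_{i})$ for 3D and 2D space respectively.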
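The two distance measures differ only in dimensionality, so a single helper can cover both. The sketch below is a minimal illustration with a hypothetical function name and made-up coordinates; note that the 3D result is in millimetres and the 2D result in pixels, so the two are not directly comparable in magnitude.

```python
import math


def fingertip_distance(thumb, index):
    """Euclidean distance between thumb-tip and index fingertip.

    Accepts 3D (x, y, z) positions in millimetres or 2D (x, y) positions
    in pixels, as long as both points have the same dimension.
    """
    if len(thumb) != len(index):
        raise ValueError("thumb and index must have the same dimension")
    return math.dist(thumb, index)


# Made-up positions for illustration.
print(round(fingertip_distance((0.0, 0.0, 0.0), (20.0, 30.0, 60.0)), 3))  # 70.0 (mm)
print(round(fingertip_distance((100.0, 200.0), (103.0, 204.0)), 3))       # 5.0 (pixels)
```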

Fig. 5.

A shows an example of the distance between the index fingertip and thumb-tip measured by computer vision methods (DLC) during the 1 Hz condition. B shows the same finger-tapping motion measured by the Optotrak method.

The Optotrak and computer vision data were time-synchronized using the peak (i.e. index finger and thumb maximally separated) of the second tap cycle, and the subsequent 10 s period of data was included in the analysis. The Mean Tapping Frequency (M-TF) was calculated as the average of the reciprocals of the time differences between consecutive peaks (Eq. (4)). The Variation of TF (Var-TF) was calculated as the coefficient of variation, i.e. the ratio of the standard deviation of those reciprocals to the M-TF (Eq. (5)).

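$$\text{M-TF} = \frac{1}{n-1}\sum_{j=1}^{n-1}\frac{1}{t_{j+1} - t_{j}} \tag{4}$$

$$\text{Var-TF} = \frac{\mathrm{SD}\left(\left\{\dfrac{1}{t_{j+1} - t_{j}}\right\}_{j=1}^{n-1}\right)}{\text{M-TF}} \tag{5}$$

where $n$ refers to the number of peaks, $t_{j}$ refers to the time point at the $j$th peak and $\mathrm{SD}$ denotes the standard deviation.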
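The feature extraction described in this section can be sketched in a few lines of Python. This is an illustration under stated assumptions rather than the study's code: the function names are hypothetical, the peak detector is a naive local-maximum test (real recordings would likely need smoothing first), and the population standard deviation is used because the text does not say which form was applied.

```python
import statistics


def find_peaks(distances, times):
    """Times of local maxima (strictly greater than both neighbours)."""
    return [
        times[i]
        for i in range(1, len(distances) - 1)
        if distances[i - 1] < distances[i] > distances[i + 1]
    ]


def tapping_features(peak_times, window_s=10.0):
    """M-TF and Var-TF over the 10 s window that starts at the second peak."""
    start = peak_times[1]  # peak of the second tap cycle
    window = [t for t in peak_times if start <= t <= start + window_s]
    # Instantaneous frequency for each pair of consecutive peaks.
    freqs = [1.0 / (later - earlier) for earlier, later in zip(window, window[1:])]
    m_tf = statistics.fmean(freqs)
    var_tf = statistics.pstdev(freqs) / m_tf  # coefficient of variation
    return m_tf, var_tf


# Perfectly regular 1 Hz tapping: peaks at 0.5 s, 1.5 s, 2.5 s, ...
peaks = [0.5 + k for k in range(15)]
print(tapping_features(peaks))  # (1.0, 0.0)
```

Because both systems are analysed from the same reference peak, the same function can be applied to the Optotrak distances (in millimetres) and the video distances (in pixels) without converting units, since only peak timing is used.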

2.6. Statistical analysis

M-TF and Var-TF outcomes were compared between the three DLC computer vision neural network architectures and the gold standard measure. The reliability of the computer vision methods for tracking hand motion at different distances from the laptop camera was assessed by comparing Near-To-Laptop (60 cm) with Far-From-Laptop (80 cm) recordings. Bland Altman [22] plots and paired Welch’s t-tests measured the level of agreement, with +/−0.5 Hz as a clinically acceptable error margin [23].

To evaluate the validity of the computer vision methods, we compared each of the three artificial neural network architectures from the DLC platform [9] (i.e., ResNet50, EfficientNetB0 and MobileNetV2) separately with the Optotrak measurements, using Bland Altman [22] plots to measure the degree of error. To evaluate whether the distance from the camera had a significant impact on the features extracted by each method, we compared, for each participant, the finger tapping tests completed Near-To-Laptop (60 cm) with the repeat tests completed Far-From-Laptop (80 cm), using Bland Altman [22] plots and paired Welch’s t-tests to measure the degree of error. We considered 0.5 Hz a clinically acceptable error margin, in line with previous similar publications [23].
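The agreement statistics behind a Bland Altman plot are straightforward to compute. The sketch below is a minimal illustration, not the study's analysis code: the function name and the example frequencies are made up, and the sample standard deviation is assumed for the ±1.96 SD limits.

```python
import statistics


def bland_altman(method_a, method_b, margin=0.5):
    """Bias, +/-1.96 SD limits and share of pairs within the error margin."""
    diffs = [a - b for a, b in zip(method_a, method_b)]
    bias = statistics.fmean(diffs)
    sd = statistics.stdev(diffs)  # sample standard deviation (assumption)
    within = sum(abs(d) <= margin for d in diffs) / len(diffs)
    return {
        "bias": bias,
        "lower": bias - 1.96 * sd,
        "upper": bias + 1.96 * sd,
        "within_margin": within,
    }


# Made-up mean tapping frequencies (Hz), for illustration only.
vision = [0.5, 1.0, 2.1, 3.0, 4.1]
optotrak = [0.5, 1.0, 2.0, 3.0, 5.0]

result = bland_altman(vision, optotrak)
print(result["within_margin"])  # 0.8
```

In this toy example four of the five pairs differ by no more than 0.5 Hz, while the fastest pair falls outside the margin.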

3. Results

3.1. Validation of computer vision methods compared to the gold standard system

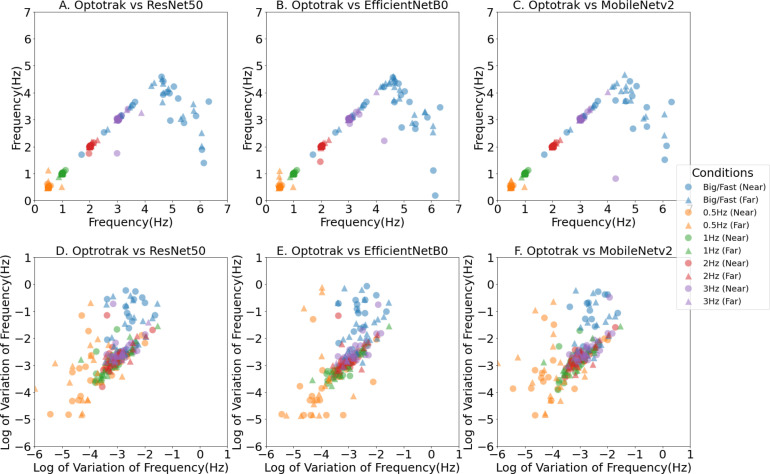

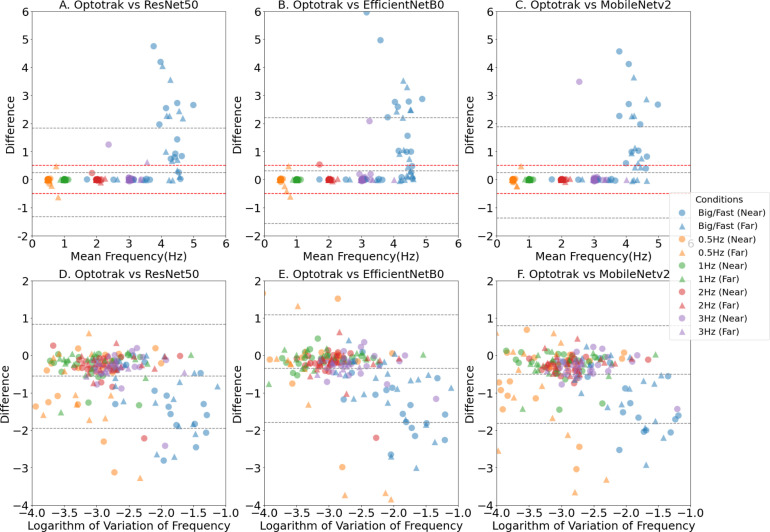

When the participants finger tapped between 0.5 Hz and 4 Hz, the mean tapping frequencies obtained from the three computer vision methods correlated highly with the Optotrak measures; see Fig. 6, Fig. 7, and Table 3. Almost all (95.8%; 529/552) of the computer vision measures were within +/−0.5 Hz of the Optotrak measures in this frequency range; specifically 95.7% (176/184) for ResNet50, 92.9% (171/184) for EfficientNetB0 and 98.9% (182/184) for MobileNetV2. However, as can be seen in Fig. 6, when participants tapped at frequencies higher than 4 Hz, there was a decline in the accuracy of the computer vision methods, with significant differences between the computer vision and Optotrak methods (Table 3). The computer vision methods progressively under-estimated the tapping frequencies with fast movements, giving falsely low measures of frequency compared to the benchmark. On viewing the videos at higher tapping frequencies, it was noted that they had considerable motion blur on some frames. It was hard to manually label the correct positions of key points on these blurred frames, and this blur led to inaccurate key point detection by the computer vision methods at higher speeds. Further validation assessments using a range of other neural networks, namely ResNet101, ResNet152, MobileNetV1, EfficientNetB3 and EfficientNetB6, are presented in the Supplementary Materials. In summary, all the neural networks generally extracted hand movement features accurately at low tapping frequencies, but inaccurately at tapping frequencies above 4 Hz.
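One way to see why faster tapping is harder to track at a low frame rate is to count how many frames cover a single tap cycle. The sketch below is an illustrative calculation only, not an analysis from the study: the function name is hypothetical, the 6 Hz entry is an arbitrary example of a fast self-paced rate, and motion blur also depends on camera exposure time, which is not captured here.

```python
def frames_per_tap_cycle(fps: float, tapping_hz: float) -> float:
    """Average number of frames recorded during one open-close tap cycle."""
    if fps <= 0 or tapping_hz <= 0:
        raise ValueError("fps and tapping_hz must be positive")
    return fps / tapping_hz


LAPTOP_FPS = 30     # laptop camera
OPTOTRAK_FPS = 250  # Optotrak system

for hz in (0.5, 1, 2, 3, 4, 6):
    laptop = frames_per_tap_cycle(LAPTOP_FPS, hz)
    optotrak = frames_per_tap_cycle(OPTOTRAK_FPS, hz)
    print(f"{hz} Hz: laptop {laptop:.1f} frames/cycle, Optotrak {optotrak:.1f} frames/cycle")
```

At 4 Hz the laptop camera records 7.5 frames per cycle, compared with 60 frames per cycle at 0.5 Hz, so each frame spans a much larger share of the finger's excursion.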

Fig. 6.

Tapping Frequency x–y scatter plot between different computer vision methods (y axis) and the Optotrak method (x axis). A, B and C show the scatter plots of mean tapping frequency. D, E and F show the scatter plots of the logarithm of variation in the tapping frequency. The coloured marks represent the different finger tapping conditions with blue denoting the ‘Big/Fast’ self-paced conditions, and yellow, green, red and purple the externally paced conditions at frequencies of 0.5 Hz, 1 Hz, 2 Hz and 3 Hz respectively. Circles are for conditions performed Near-To-Laptop (60 cm) and triangles are for conditions performed Far-From-Laptop (80 cm).

Fig. 7.

Validity of computer measures of Tapping Frequency, demonstrated by the Bland Altman Plots, with the representation of the limits of agreement (red dashed lines), and from −1.96 standard deviation to +1.96 standard deviation (lower and upper grey dashed lines). A, B and C show the mean tapping frequency comparison between the Optotrak system and the three computer vision methods. D, E and F show a measure of tapping rhythm — the logarithm of variation in the tapping frequency. The coloured marks represent the different finger tapping conditions with blue denoting the ‘Big/Fast’ self-paced conditions, and yellow, green, red and purple the externally paced conditions at frequencies of 0.5 Hz, 1 Hz, 2 Hz and 3 Hz respectively. Circles are for conditions performed Near-To-Laptop (60 cm) and triangles are for conditions performed Far-From-Laptop (80 cm).

Table 3.

Accuracy of each computer vision method compared with the Optotrak measures.

| Methods | M-TF test statistic (p-value) | Var-TF test statistic (p-value) |
|---|---|---|
| ‘As fast as possible’ condition with frequency < 4 Hz | | |
| ResNet50 | 0.52 (0.48) | 1.48 (0.24) |
| EfficientNetB0 | 0.72 (0.47) | 2.60 (0.01) |
| MobileNetV2 | 0.39 (0.54) | 1.89 (0.19) |
| ‘As fast as possible’ condition with frequency > 4 Hz | | |
| ResNet50 | 107.61 (0) | 59.40 (0) |
| EfficientNetB0 | 7.44 (0) | 6.57 (0) |
| MobileNetV2 | 109.67 (0) | 41.56 (0) |
| 0.5 Hz condition | | |
| ResNet50 | 0.39 (0.53) | 6.41 (0.02) |
| EfficientNetB0 | 0.84 (0.40) | 1.81 (0.08) |
| MobileNetV2 | 0.02 (0.88) | 7.63 (0.01) |
| 1 Hz condition | | |
| ResNet50 | 0.02 (0.90) | 3.40 (0.70) |
| EfficientNetB0 | 0.02 (0.99) | 0.58 (0.56) |
| MobileNetV2 | 0.05 (0.82) | 3.63 (0.06) |
| 2 Hz condition | | |
| ResNet50 | 0.02 (0.89) | 6.10 (0.02) |
| EfficientNetB0 | 0.93 (0.36) | 1.43 (0.16) |
| MobileNetV2 | 0.01 (0.92) | 4.94 (0.03) |
| 3 Hz condition | | |
| ResNet50 | 4.26 (0.04) | 3.92 (0.05) |
| EfficientNetB0 | 1.61 (0.11) | 1.79 (0.08) |
| MobileNetV2 | 2.16 (0.15) | 3.63 (0.06) |

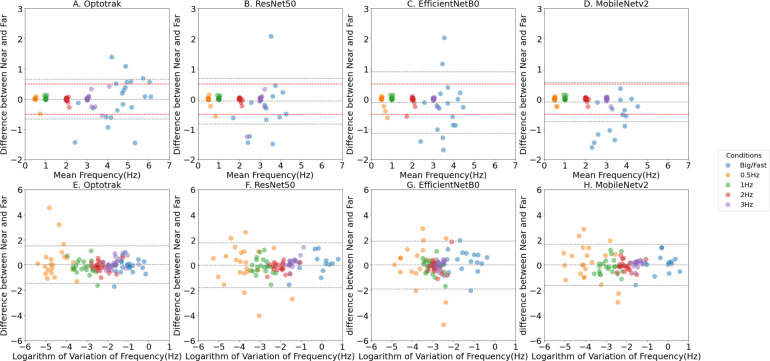

3.2. Reliability of computer vision methods at two different distances from camera

For each of the three computer vision methods, as for the Optotrak system, there were no significant differences in tapping frequency, or its variation, between measures taken Near-To-Laptop and Far-From-Laptop (p > 0.05 for all comparisons); see Fig. 8 and Table 4. It is important to note that there will be natural variation between a participant’s performance of the same condition (e.g. 1 Hz paced) in the Near-To-Laptop and Far-From-Laptop positions, as humans very rarely reproduce movements 100% precisely at two different time points, even when paced. This is especially the case for internally paced ‘Big/Fast’ conditions, as exemplified by the variation in the Optotrak measures at higher frequencies too. The reliability assessments using a range of other neural networks, namely ResNet101, ResNet152, MobileNetV1, EfficientNetB3 and EfficientNetB6, are presented in the Supplementary Materials. In summary, all the neural networks generally showed that the distance between participant and camera (in a range of 60 to 80 cm) does not affect the feature extraction.

Fig. 8.

Reliability of computer measures of Tapping Frequency at two distances from camera, demonstrated by the Bland Altman Plots, with the representation of the limits of agreement (red dashed lines), and from −1.96 standard deviation to +1.96 standard deviation (lower and upper grey dashed lines). A, B, C and D show the mean tapping frequency scatter plots between Near-To-Laptop (60 cm camera to hand) and Far-From-Laptop (80 cm camera to hand) conditions for the Optotrak system and the three computer vision methods respectively. E, F, G and H show a measure of tapping rhythm — the logarithm of variation in the tapping frequency. The coloured marks represent the different finger tapping conditions with blue denoting the ‘Big/Fast’ self-paced conditions, and yellow, green, red and purple are the externally paced conditions at frequencies of 0.5 Hz, 1 Hz, 2 Hz and 3 Hz respectively. The middle grey dashed line represents the mean difference between Near-To-Laptop and Far-From-Laptop conditions. The upper and lower grey dashed lines represent the upper and lower borders at 95% confidence level. The upper and lower red dashed lines represent the +/−0.5 Hz agreement levels.

Table 4.

Difference between Near-To-Laptop and Far-From-Laptop computer measures compared with the Optotrak.

| Methods | M-TF test statistic (p-value) | Var-TF test statistic (p-value) |
|---|---|---|
| ‘As fast as possible’ condition | | |
| Optotrak | 0 (1) | 0.43 (0.52) |
| ResNet50 | 0.55 (0.46) | 0.36 (0.55) |
| EfficientNetB0 | 1.86 (0.07) | 0.45 (0.66) |
| MobileNetV2 | 3.63 (0.07) | 0.94 (0.34) |
| 0.5 Hz condition | | |
| Optotrak | 0.48 (0.5) | 2.64 (0.12) |
| ResNet50 | 1.07 (0.31) | 0.64 (0.43) |
| EfficientNetB0 | 1.50 (0.15) | 1.33 (0.20) |
| MobileNetV2 | 1.07 (0.31) | 0.13 (0.72) |
| 1 Hz condition | | |
| Optotrak | 2.87 (0.1) | 1.71 (0.2) |
| ResNet50 | 2.16 (0.15) | 0.29 (0.6) |
| EfficientNetB0 | 1.39 (0.17) | 1.30 (0.20) |
| MobileNetV2 | 1.76 (0.19) | 0.45 (0.51) |
| 2 Hz condition | | |
| Optotrak | 0.84 (0.37) | 0.52 (0.48) |
| ResNet50 | 1.66 (0.21) | 0.03 (0.85) |
| EfficientNetB0 | 1.39 (0.18) | 0.26 (0.80) |
| MobileNetV2 | 0.62 (0.44) | 0.32 (0.57) |
| 3 Hz condition | | |
| Optotrak | 0 (0.93) | 1.86 (0.18) |
| ResNet50 | 0.98 (0.34) | 1.33 (0.26) |
| EfficientNetB0 | 1.30 (0.20) | 1.63 (0.12) |
| MobileNetV2 | 1.28 (0.27) | 2.18 (0.16) |

4. Discussion

Our results demonstrate that when tapping frequencies were between 0.5 Hz and 4 Hz, the accuracy of the computer vision methods employing 2D video data collected at 30 FPS was comparable to the ‘gold standard’ wearable sensor method. These computer vision methods were also reliable when the hand was at different distances from the laptop camera. This is the first study to apply DLC methods to videos from a standard laptop camera to measure human hand movements. The three DLC deep learning models, ResNet50, EfficientNetB0 and MobileNetV2, showed similar validity and test–retest reliability.

The implication of this study is that existing hardware currently used for video consultations may be sufficient to objectively measure movement in order to augment clinician judgement. The accuracy of the method reduced above 4 Hz due to inaccurate fingertip tracking on some blurred frames related to using the low FPS laptop camera. However, this may have little relevance for clinical use, as few patients are likely to tap at such high frequencies; for example a study [24] that quantified Parkinson’s finger tapping frequency found that the mean tapping frequency was around 2 Hz.

Our study extends existing understanding of methods to quantify the finger tapping examination. A variety of studies have shown that devices can be used to record finger tapping and extract clinically useful information. For example, Djuric et al. [25] proposed a method to assess the finger tapping task using 3D gyroscopes; Summa et al. [26] used magneto-inertial devices to record hand motor tasks (including finger tapping tests) to assess motor symptoms. There are several reports of using video tracking to measure finger tapping [23], [27], [28], [29], including one with laptop cameras, but none have validated their method against precise wearable sensors. A particular strength of the work presented here is the use of a gold standard kinematic measure as the benchmark to test laptop camera validity. Optotrak can accurately measure movement at different distances from the infrared cameras with no constraints on ambient lighting or a cluttered background [30]. Optotrak markers collected position data in the x, y and z directions at a high frequency with an accuracy of 0.1 mm. The fast and accurate data ensured the reliability of the ‘ground truth’ data. It is technically challenging to compare computer vision with established technology to record clinical examination, since ‘wearables’ will add relevant markers to the video, potentially improving the performance of computer vision tracking. However, we avoided this problem by novel positioning of the Optotrak markers and camera on the opposite side to the laptop camera, making the markers invisible on the video.

This is a timely study, as telemedicine use is increasing dramatically around the world and clinicians and researchers need accurate methods to measure hand movements. Limitations of our study include the relatively small number of participants and the homogeneity of our sample, i.e. younger adults accustomed to using technology who did not have any cognitive deficit or motor impairment. Future steps would include assessing movement tracking in a wider range of participants with positive and negative controls, validating the method in other types of movement, and undertaking a classification-based research study using different computer vision methods.

5. Conclusion

Remote video consultation forms an expanding part of healthcare systems globally. Widespread availability of devices that allow remote video assessment is alleviating the burden of frequent travel to clinic appointments for people living with frailty and impaired mobility [31], [32] and reducing the inequity of access to healthcare systems for people who live in rural or remote locations. There is potential for computer vision techniques to provide precise objective measures of movement to augment clinician judgement during video calls. Webcams are standard hardware for video consultation, but the accuracy of computer vision tracking of clinical examination using that low-cost equipment has never been tested before now [33], [34]. Our study provides evidence that deep learning technologies have advanced to the stage where it is now feasible to integrate computer vision into remote healthcare systems using standard computer equipment. This could improve the clinical consultation, not only remotely but also face-to-face if a camera were used to video record the examination, as it would allow clinicians to view an overlay of live extracted movement features during their clinical evaluation.

6. Summary

- This is the first study to compare tracking of human hand movements using deep learning methods applied to 2D laptop videos to a 3D wearable sensor method.

- The deep learning video methods were able to accurately measure finger tapping frequency in the 0 to 4 Hz range.

Declaration of Competing Interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Acknowledgements

The authors would like to thank all the participants for taking part in the study. We also acknowledge support from the National Health and Medical Research Council (2004051), the J.O. and J.R. Wicking Trust (Equity Trustees) and the University of Tasmania. The funding bodies have no direct role in the study design, data collection, analysis, and interpretation or manuscript preparation.

Footnotes

Supplementary material related to this article can be found online at https://doi.org/10.1016/j.compbiomed.2022.105776. Comparative accuracy of 8 computer vision methods to track finger tapping in videos collected with 30 fps laptop camera.

Appendix A. Supplementary data

The following is the Supplementary material related to this article.


References

- 1. Currie Jon, Ramsden Ben, McArthur Cheryl, Maruff Paul. Validation of a clinical antisaccadic eye movement test in the assessment of dementia. Arch. Neurol. 1991;48(6):644–648. doi: 10.1001/archneur.1991.00530180102024.

- 2. Benecke R, Rothwell JC, Dick JPR, Day BL, Marsden CD. Performance of simultaneous movements in patients with Parkinson’s disease. Brain. 1986;109(4):739–757. doi: 10.1093/brain/109.4.739.

- 3. Li Renjie, Wang Xinyi, Lawler Katherine, Garg Saurabh, Bai Quan, Alty Jane. Applications of artificial intelligence to aid detection of dementia: a scoping review on current capabilities and future directions. J. Biomed. Inform. 2022 doi: 10.1016/j.jbi.2022.104030.

- 4. Shima Keisuke, Tsuji Toshio, Kandori Akihiko, Yokoe Masaru, Sakoda Saburo. Measurement and evaluation of finger tapping movements using log-linearized Gaussian mixture networks. Sensors. 2009;9(3):2187–2201. doi: 10.3390/s90302187.

- 5. Wissel Tobias, Pfeiffer Tim, Frysch Robert, Knight Robert T, Chang Edward F, Hinrichs Hermann, Rieger Jochem W, Rose Georg. Hidden Markov model and support vector machine based decoding of finger movements using electrocorticography. J. Neural Eng. 2013;10(5) doi: 10.1088/1741-2560/10/5/056020.

- 6. Khan Haroon, Noori Farzan M, Yazidi Anis, Uddin Md Zia, Khan MN, Mirtaheri Peyman. Classification of individual finger movements from right hand using fNIRS signals. Sensors. 2021;21(23):7943. doi: 10.3390/s21237943.

- 7. Greenhalgh Trisha, Koh Gerald Choon Huat, Car Josip. Covid-19: A remote assessment in primary care. BMJ. 2020;368 doi: 10.1136/bmj.m1182.

- 8. Kristoffersen Espen Saxhaug, Sandset Else Charlotte, Winsvold Bendik Slagsvold, Faiz Kashif Waqar, Storstein Anette Margrethe. Experiences of telemedicine in neurological out-patient clinics during the COVID-19 pandemic. Ann. Clin. Transl. Neurol. 2021;8(2):440–447. doi: 10.1002/acn3.51293.

- 9. Mathis Alexander, Mamidanna Pranav, Cury Kevin M, Abe Taiga, Murthy Venkatesh N, Mathis Mackenzie Weygandt, Bethge Matthias. DeepLabCut: markerless pose estimation of user-defined body parts with deep learning. Nature Neurosci. 2018;21(9):1281–1289. doi: 10.1038/s41593-018-0209-y.

- 10. Duan-Porter Wei, Van Houtven Courtney H, Mahanna Elizabeth P, Chapman Jennifer G, Stechuchak Karen M, Coffman Cynthia J, Hastings Susan Nicole. Internet use and technology-related attitudes of veterans and informal caregivers of veterans. Telemed. E-Health. 2018;24(7):471–480. doi: 10.1089/tmj.2017.0015.

- 11. Durner Gregor, Durner Joachim, Dunsche Henrike, Walle Etzel, Kurzreuther Robert, Handschu René. 24/7 live stream telemedicine home treatment service for Parkinson’s disease patients. Mov. Disord. Clin. Pract. 2017;4(3):368–373. doi: 10.1002/mdc3.12436.

- 12. States R.A., Pappas E. Precision and repeatability of the Optotrak 3020 motion measurement system. J. Med. Eng. Technol. 2006;30(1):11–16. doi: 10.1080/03091900512331304556.

- 13. Nath Tanmay, Mathis Alexander, Chen An Chi, Patel Amir, Bethge Matthias, Mathis Mackenzie Weygandt. Using DeepLabCut for 3D markerless pose estimation across species and behaviors. Nat. Protoc. 2019;14(7):2152–2176. doi: 10.1038/s41596-019-0176-0.

- 14. Buades Antoni, Coll Bartomeu, Morel Jean-Michel. Non-local means denoising. Image Processing on Line. 2011;1:208–212.

- 15. He Kaiming, Zhang Xiangyu, Ren Shaoqing, Sun Jian. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2016. Deep residual learning for image recognition; pp. 770–778.

- 16. Tan Mingxing, Le Quoc. International Conference on Machine Learning. PMLR; 2019. EfficientNet: Rethinking model scaling for convolutional neural networks; pp. 6105–6114.

- 17. Howard Andrew G, Zhu Menglong, Chen Bo, Kalenichenko Dmitry, Wang Weijun, Weyand Tobias, Andreetto Marco, Adam Hartwig. 2017. MobileNets: Efficient convolutional neural networks for mobile vision applications. arXiv preprint arXiv:1704.04861.

- 18. Wu Shaoen, Xu Junhong, Zhu Shangyue, Guo Hanqing. A deep residual convolutional neural network for facial keypoint detection with missing labels. Signal Process. 2018;144:384–391.

- 19. Hong Feng, Lu Changhua, Liu Chun, Liu Ruru, Jiang Weiwei, Ju Wei, Wang Tao. Pgnet: Pipeline guidance for human key-point detection. Entropy. 2020;22(3):369. doi: 10.3390/e22030369.

- 20. Colaco Savina, Han Dong Seog. Facial landmarks detection with MobileNet blocks. Proceedings of the Korea Telecommunications Society Conference. 2020:1198–1200.

- 21. Kingma Diederik P., Ba Jimmy. 2014. Adam: A method for stochastic optimization. arXiv preprint arXiv:1412.6980.

- 22. Giavarina Davide. Understanding Bland Altman analysis. Biochemia Medica. 2015;25(2):141–151. doi: 10.11613/BM.2015.015.

- 23. Williams Stefan, Fang Hui, Relton Samuel D, Wong David C, Alam Taimour, Alty Jane E. Accuracy of smartphone video for contactless measurement of hand tremor frequency. Mov. Disord. Clin. Pract. 2021;8(1):69–75. doi: 10.1002/mdc3.13119.

- 24. Agostino Rocco, Currà Antonio, Giovannelli Morena, Modugno Nicola, Manfredi Mario, Berardelli Alfredo. Impairment of individual finger movements in Parkinson’s disease. Mov. Disord. 2003;18(5):560–565. doi: 10.1002/mds.10313.

- 25. Djurić-Jovičić Milica, Jovičić Nenad S, Roby-Brami Agnes, Popović Mirjana B, Kostić Vladimir S, Djordjević Antonije R. Quantification of finger-tapping angle based on wearable sensors. Sensors. 2017;17(2):203. doi: 10.3390/s17020203.

- 26. Summa Susanna, Tosi Jacopo, Taffoni Fabrizio, Di Biase Lazzaro, Marano Massimo, Rizzo A Cascio, Tombini Mario, Di Pino Giovanni, Formica Domenico. 2017 International Conference on Rehabilitation Robotics (ICORR) IEEE; 2017. Assessing bradykinesia in Parkinson’s disease using gyroscope signals; pp. 1556–1561.

- 27. Khan Taha, Nyholm Dag, Westin Jerker, Dougherty Mark. A computer vision framework for finger-tapping evaluation in Parkinson’s disease. Artif. Intell. Med. 2014;60(1):27–40. doi: 10.1016/j.artmed.2013.11.004.

- 28. Criss Kjersten, McNames James. 2011 Annual International Conference of the IEEE Engineering in Medicine and Biology Society. IEEE; 2011. Video assessment of finger tapping for Parkinson’s disease and other movement disorders; pp. 7123–7126.

- 29. Wong David C, Relton Samuel D, Fang Hui, Qahwaji Rami, Graham Christopher D, Alty Jane, Williams Stefan. 2019 IEEE 32nd International Symposium on Computer-Based Medical Systems (CBMS) IEEE; 2019. Supervised classification of bradykinesia for Parkinson’s disease diagnosis from smartphone videos; pp. 32–37.

- 30. Schmidt Jill, Berg Devin R, Ploeg Heidi-Lynn, Ploeg Leone. Precision, repeatability and accuracy of Optotrak® optical motion tracking systems. Int. J. Exp. Comput. Biomech. 2009;1(1):114–127.

- 31. Contreras Carlo M, Metzger Gregory A, Beane Joal D, Dedhia Priya H, Ejaz Aslam, Pawlik Timothy M. Telemedicine: patient-provider clinical engagement during the COVID-19 pandemic and beyond. J. Gastrointest. Surg. 2020;24(7):1692–1697. doi: 10.1007/s11605-020-04623-5.

- 32. Roy Bhaskar, Nowak Richard J, Roda Ricardo, Khokhar Babar, Patwa Huned S, Lloyd Thomas, Rutkove Seward B. Teleneurology during the COVID-19 pandemic: A step forward in modernizing medical care. Journal of the Neurological Sciences. 2020;414 doi: 10.1016/j.jns.2020.116930.

- 33. Williams Stefan, Zhao Zhibin, Hafeez Awais, Wong David C, Relton Samuel D, Fang Hui, Alty Jane E. The discerning eye of computer vision: Can it measure Parkinson’s finger tap bradykinesia? Journal of the Neurological Sciences. 2020;416 doi: 10.1016/j.jns.2020.117003.

- 34. Williams Stefan, Relton Samuel D, Fang Hui, Alty Jane, Qahwaji Rami, Graham Christopher D, Wong David C. Supervised classification of bradykinesia in Parkinson’s disease from smartphone videos. Artif. Intell. Med. 2020;110 doi: 10.1016/j.artmed.2020.101966.
