Abstract

In recent times, nutrition recommendation systems have gained increasing attention because of their importance for healthy living. Current studies in the food domain deal with recommendation systems that focus on users and their health problems but lack nutritional advice tailored to individual users. The proposed system suggests nutritional food to people based on the age and gender predicted from their face image. The methodology preprocesses the input image and then performs feature extraction using a deep convolutional neural network (DCNN). This network extracts D-dimensional features from the source face image, which are then passed to a feature selection stage. The distinctive and identifiable traits of the face are selected using a hybrid particle swarm optimization (HPSO) technique. A support vector machine (SVM) classifies the person's age and gender, and the nutrition recommendation system relies on these age and gender classes. The proposed system is evaluated in terms of classification rate, precision, and recall on the Adience dataset, the UTKFace dataset, and real-world images, and it achieves good prediction results and computation time.

1. Introduction

In recent years, many real-life applications such as social media, security control, advertising, and entertainment have made use of the information contained in a human face. Automatic prediction of age [1] and gender [2] from facial images plays a vital role in interpersonal communication and remains a significant area of computer vision research [3]. Facial age and gender recognition is an important aspect of face analysis that has attracted researchers in areas such as demographic information collection, surveillance, human-computer interaction, marketing intelligence, and security. Recently, nutrition recommendation has gained attention among both healthy and unhealthy people. This paper focuses on recommending nutritional advice to people based on their age and gender.

Different methodologies are available to identify gender based on human biometric traits, mannerisms, and behaviours. A face provides distinguishing information about a person, including age, gender, expression, mood, and ethnicity. Gender identification from a face image is a difficult task in computer vision, image analysis, and artificial intelligence; it recognises gender based on masculine and feminine facial characteristics. It is a binary classification problem that assigns a gender class to an individual. Gender identification is one part of facial analysis [4, 5], which focuses on classifying images captured in a controlled environment. Gender classification in an uncontrolled environment is also needed and is proposed in [6]. Humans can readily use a person's gender as supplementary information for fast and accurate retrieval, whereas inferring it remains a challenging problem for computers.

Research efforts have been made to predict age automatically from a person's face [7]. The proposed method obtains age-specific characteristics from the face image and then performs age classification. Human age can be estimated from the ageing cues present in the face image, and skin changes also help in perceiving the age of adults. Age identification [8] is a complex process that depends on gender, race, ethnicity, lifestyle, make-up, and other external factors. Accurate facial age prediction remains challenging because the predicted age often differs from the actual age. Some public age recognition datasets use categories such as child, teenager, adolescent, intermediate, and senior citizen.

The recommendation system suggests nutritional advice for the individual user based on their choice. The World Health Organization [9] estimated that in 2016 more than 650 million adults aged 18 and over were obese. Diet-related conditions, mainly overweight and obesity, are becoming a leading cause of death throughout the world [10, 11]. A proper dietary plan is required to enhance people's standard of living. Therefore, a recommendation system for nutritional food consumption would be an appropriate solution for people with a busy lifestyle.

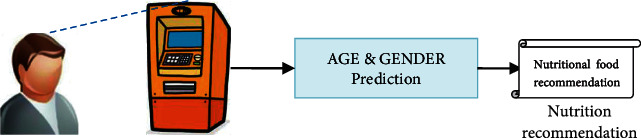

The proposed nutrition recommendation system is depicted in Figure 1. The system captures a person's face and predicts their age and gender. A nutrition recommendation is then provided based on this prediction.

Figure 1.

Overview of proposed nutrition recommendation system.

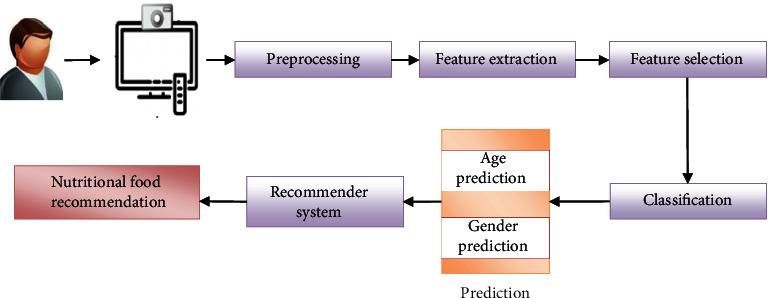

The proposed system obtains its input from the dataset or from a real-time camera. Preprocessing is carried out to prepare the image for further processing. DCNN is applied to the preprocessed image to extract the important features. Following that, feature selection is performed using hybrid particle swarm optimization (HPSO). A support vector machine (SVM) then classifies gender into 2 classes (male and female) and age into 8 classes: “0–2,” “4–6,” “8–13,” “15–20,” “25–32,” “38–43,” “48–53,” and “60+.” The recommendation system provides nutritional advice based on the age and gender predicted for the individual. The main ideas of this research are summarised below:

A nutrition recommendation framework is developed to provide nutritional advice based on the user's age and gender

DCNN is utilized for feature extraction, which learns relevant characteristics by retrieving distinctive features

HPSO is used to select the best features in the image

Combining DCNN with HPSO improves the computation time and accuracy

SVM classifies age and gender

The model is robust and surpasses conventional schemes in terms of classification rate, precision, and recall, as demonstrated by experiments on the Adience dataset and real-world images

The remainder of this paper is organised as follows. Section 2 reviews relevant research on age and gender prediction. The proposed methodology is detailed in Section 3. Section 4 presents the experimental results, and Section 5 elaborates on the performance evaluation. Section 6 concludes the research.

2. Literature Survey

People's interest in nutrition recommendation systems has grown in recent years due to their relevance to healthy living. Existing nutrition recommendation systems suggest nutritional food based on people's health conditions and individual preferences, which are obtained as input from the user. The proposed work automatically captures a person's face and predicts their age and gender. Nutritional advice is then recommended based on the person's age category and gender. Existing research related to face analysis is discussed in detail below.

2.1. Face Detection and Identification

Face detection is an important module of any face recognition system and should be both accurate and fast. Face detection algorithms are mainly inspired by object detection approaches. Region-based object detectors generate object proposals, and a classifier labels each proposal as face or nonface. HyperFace [12] is a multitask learning architecture for face detection, landmark localisation, pose estimation, and gender recognition. Faster R-CNN [13] employs the region proposal network (RPN) [14], a small CNN that slides over the last feature map, predicts whether an object is present at each location, and predicts the boundaries of those objects. RPN helps to reduce the number of unnecessary face proposals and to improve their quality. Sliding-window approaches instead generate face detections at every location of a feature map at a particular scale. They are based on a feed-forward convolutional network with small filters that predict object classes and perform detection at multiple scales. Several facial tasks, such as facial attribute inference [15], face verification [16–19], and face recognition [20, 21], require the detection and labelling of facial landmarks.

2.2. Gender Identification

Gender identification can be performed using a variety of data, including face photographs, hand skin images, and physiological movements; [22, 23] survey gender recognition systems that use face images. According to [24, 25], gender identification can be divided into two categories: (i) geometry-oriented recognition and (ii) texture-oriented recognition. Golomb et al. [26] proposed a neural network approach to human gender detection. Neural networks [27] have been commonly employed in gender detection for feature extraction and classification, and backpropagation neural networks are used in [28–30] for gender recognition. Furthermore, CNNs have since been found to be effective in obtaining discriminative features and distinguishing genders [31, 32]. SVM, LDA, and AdaBoost are some of the classification algorithms used in visual gender detection.

2.3. Age Identification

A person's face carries a great deal of information, including individuality, emotion, attitude, maturity level, ethnicity, race, and gender; [33] provides a detailed study of age modelling approaches using face images. Kwon and Lobo [34] suggested a strategy for classifying images into distinct age categories based on facial features by computing ratios of different measurements. This strategy, however, may not be appropriate for images with large variations in pose, lighting, expression, or occlusion. Feature extraction is an important step in predicting human age. Active appearance models (AAM) [35], local binary patterns (LBP) [36–38], anthropometric features [39], and biologically inspired features (BIF) [40] are some of the feature extraction approaches that have been developed.

2.4. Deep Learning Methods

The first deep learning technique used in machine learning algorithms [41–43] was the deep neural network (DNN) [44, 45]. However, DNNs are prone to overfitting and take a long time to train. DNN training was improved by using restricted Boltzmann machines (RBMs) and the deep belief network (DBN) [41, 46, 47]. DBN learning is quicker than DNN learning because of the inclusion of RBMs. A deep Boltzmann machine (DBM) is a stack of RBMs with undirected connections between the layers [48–53].

2.5. Feature-Based Methods

He et al. [54] proposed a linear appearance-based method called principal component analysis (PCA). PCA is unsuitable for classification because it retains undesired intra-person differences when used for biometrics.

Babu et al. [55] proposed another linear appearance-based method, linear discriminant analysis (LDA), which classifies objects based on sets of measurable object features. LDA is more sensitive to the specific choice of training set, which results in poorer outcomes than PCA.

To represent diverse facial expressions, Donato [56] employed independent component analysis (ICA) features with support vectors. Several researchers use it to analyse faces and facial expressions [57, 58]. Tanaka et al. [59] proposed kernel PCA (KPCA), a nonparametric technique that determines the principal directions in the data and reduces high dimensionality.

Several nature-inspired techniques, such as particle swarm optimization (PSO) [60], the genetic algorithm (GA) [61], and ant colony optimization (ACO) [62], have recently been employed for feature selection. In comparison with these techniques, grey wolf optimization (GWO) [63] is a newer methodology based on the hunting strategy of wolves. Wolf populations are created at random, which can lead to a lack of diversity among wolves throughout the search process. This has a significant influence on the global convergence rate and efficiency of the final solution. Thus, a novel approach is proposed to overcome this drawback.

2.6. Nutrition Recommendation System

Many food recommendation systems have been proposed that obtain information from users based on their preferences [64]. The collaborative filtering method [65] considers users' interests and makes predictions. However, most of these systems do not recommend healthy, dietary food. Krizhevsky et al. proposed a dietary advice system [66] for individuals with diabetes. This system uses k-means and SOM to cluster foods and suggests substitutes based on nutrition and food.

3. Proposed Work

The research work includes preprocessing, feature extraction, feature selection, age and gender categorization, and nutrition recommendation. Figure 2 depicts the planned framework's block diagram, which is explored in depth below.

Figure 2.

Proposed nutrition recommendation system.

3.1. Image Preprocessing

Image preprocessing has a strong positive impact on the quality of feature extraction and the outcomes of image analysis. It is a combination of enhancement steps required in a face recognition pipeline. Image preprocessing tasks include noise suppression, contrast enhancement, and removal of undesirable capture effects such as motion blur and colour alterations.

3.1.1. Noise Removal Using Mean Filter

Filtering is a technique for modifying and enhancing an image. The main objective of the filters considered here is to reduce noise, but they can also be used to accentuate specific characteristics. In image processing, 2D filtering techniques are typically considered an extension of 1D signal processing theory. The type of task, as well as the kind and characteristics of the data, frequently influences the choice of filter. A mean filter is a basic linear filter that is both intuitive and straightforward to use as a means of image smoothing. It reduces the amount of intensity variation between neighbouring pixels and is commonly used to reduce image noise. The primary principle behind mean filtering is to replace each pixel value in an image with the mean value of its neighbours, including itself. This removes pixel values that are unrepresentative of their surroundings. The filter is built around a kernel that defines the shape and size of the neighbourhood sampled when computing the mean. A 3 × 3 square kernel is often employed, although a 5 × 5 square kernel can be used for stronger smoothing. The two main difficulties with mean filtering are as follows:

A single pixel with a very unrepresentative value can significantly distort the mean of all the pixels in its neighbourhood

When the kernel straddles an edge, the edge becomes blurred

Both of these concerns are addressed by the median filter, which is usually a better noise-reduction filter than the mean filter, although it takes longer to compute.
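As a minimal sketch of the filtering described above (not the paper's implementation), the following pure-Python code applies a 3 × 3 mean filter and, for comparison, a median filter to a small grey-level image stored as a list of rows. The function name `filter_image`, the toy image, and the choice to use only in-bounds neighbours at the image border are illustrative assumptions.

```python
from statistics import median


def mean(values):
    """Arithmetic mean of a non-empty list of pixel values."""
    return sum(values) / len(values)


def filter_image(image, reducer, radius=1):
    """Replace every pixel by reducer(neighbourhood), including the pixel itself.

    radius=1 gives a 3 x 3 kernel and radius=2 a 5 x 5 kernel.
    Border pixels use only the neighbours that lie inside the image
    (an assumption; other border policies are possible).
    """
    rows, cols = len(image), len(image[0])
    result = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            window = [
                image[rr][cc]
                for rr in range(max(0, r - radius), min(rows, r + radius + 1))
                for cc in range(max(0, c - radius), min(cols, c + radius + 1))
            ]
            result[r][c] = reducer(window)
    return result


# Toy image: a flat region with one noisy (unrepresentative) pixel.
noisy = [
    [10, 10, 10],
    [10, 255, 10],
    [10, 10, 10],
]
mean_out = filter_image(noisy, mean)
median_out = filter_image(noisy, median)
print(round(mean_out[1][1], 2), median_out[1][1])
```

In this toy example the single bright pixel pulls the mean at the centre up to about 37, whereas the median stays at 10, which illustrates why one unrepresentative pixel is a problem for the mean filter.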

3.1.2. Face Detection and Alignment Using Landmark Localisation

Face detection is the fundamental phase in any face recognition process. A face detection method finds the face regions in an image. A face detection system must be robust to changes in pose, lighting, expression, scale, skin colour, occlusions, disguises, make-up, and so on. The proposed method identifies the 68 landmark points in the face using the Dlib library.

Facial keypoints include the nose tip, ear margins, mouth corners, eye contours, and so on. Certain facial landmarks are required to determine the face orientation, which in turn is needed for facial registration. Face alignment utilizes the eye positions and the centre point of the face [67]. Based on these factors, the input image is cropped and scaled to a size of 110 × 110. Face detection and alignment are crucial steps in biometrics, gender classification, and age estimation.
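One common way to use eye positions for alignment is to measure how far the line joining the two eye centres is tilted from the horizontal. The sketch below is an assumption about how such a step could look, not the paper's code. It computes that tilt angle and the midpoint between the eyes from two lists of (x, y) landmark points. The helper names and the sample coordinates are hypothetical; in practice the points would come from the landmark detector.

```python
import math


def eye_center(points):
    """Average a list of (x, y) landmark points into a single centre point."""
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    return sum(xs) / len(xs), sum(ys) / len(ys)


def alignment_parameters(first_eye, second_eye):
    """Return (tilt in degrees, midpoint) for two eyes given as landmark lists.

    first_eye is the eye that appears further left in the image.
    The tilt is the angle of the eye line relative to the horizontal axis,
    measured in image coordinates (y grows downwards).
    """
    x1, y1 = eye_center(first_eye)
    x2, y2 = eye_center(second_eye)
    tilt = math.degrees(math.atan2(y2 - y1, x2 - x1))
    midpoint = ((x1 + x2) / 2, (y1 + y2) / 2)
    return tilt, midpoint


# Hypothetical landmark points for the two eyes of a slightly tilted face.
eye_a = [(30, 40), (34, 40)]
eye_b = [(70, 50), (74, 50)]
tilt, midpoint = alignment_parameters(eye_a, eye_b)
print(round(tilt, 2), midpoint)
```

A tilt of zero means the eyes are already level; the midpoint can serve as the centre about which the image is rotated and cropped.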

3.2. Deep Convolutional Neural Network (DCNN)

DCNN is a deep neural network architecture that extracts unique and distinguishing features from the preprocessed input. It reduces the original dimension of the image and represents it in a more compact form. Even at the reduced dimension, the features generated by the DCNN retain the information needed to represent the source image.

Studies show that DCNN [68], with its multilayer neural network architecture, captures image features very well. DCNN [69] has a variety of uses in face recognition and is highly effective at learning the properties of an image. Adopting DCNN in the proposed work to extract age [70] and gender [71] features from the face provides promising results.

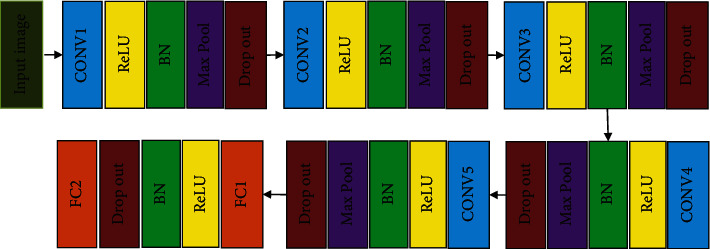

The proposed age and gender recognition problem is solved using the designed DCNN architecture. This network has a seven-layer architecture that comprises 5 convolutional layers and 2 fully connected layers. The DCNN is a deep learning network that performs the feature extraction and classification tasks. The input image given to the proposed system is preprocessed and cropped to a size of 110 × 110 based on the detected landmarks. The input to the network is 5 × 112 × 112 owing to the inclusion of zero padding in the matrices. There are 5 convolutional layers, each followed by ReLU, batch normalisation (BN), and max-pooling, as well as a dropout layer. After the fifth set of convolutional layers comes the first fully connected layer, followed by ReLU, BN, dropout, and a second fully connected layer. The network's second fully connected layer outputs 512 features. For the dropout layer, the dropout ratio is chosen as 0.5. The conv1 layer has a filter of size 7 × 7 and a stride of 4 × 4. All the max-pool layers have a filter of size 3 × 3 and a stride of 2 × 2. The conv2 layer has a 5 × 5 filter, while the final convolutional layer has a 3 × 3 filter. The 512 features are passed to the softmax function for normalisation. Figure 3 depicts a conceptual illustration of the proposed DCNN architecture.
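The spatial sizes quoted above can be checked with the usual output-size formula for convolution and pooling layers, floor((n + 2p − k) / s) + 1, where n is the input size, k the kernel size, s the stride, and p the padding. The sketch below is only a worked calculation under assumptions: one pixel of zero padding on each side of the 110 × 110 crop (which gives 112 × 112), and no additional padding inside conv1 or the first max-pool layer. The padding of the later layers is not stated, so they are not traced here.

```python
def output_size(n, kernel, stride, padding=0):
    """Spatial size after a convolution or pooling layer (floor convention)."""
    return (n + 2 * padding - kernel) // stride + 1


crop = 110
padded = crop + 2 * 1                              # assumed 1-pixel zero padding per side
conv1 = output_size(padded, kernel=7, stride=4)    # conv1: 7 x 7 filter, stride 4
pool1 = output_size(conv1, kernel=3, stride=2)     # max-pool: 3 x 3 filter, stride 2
print(padded, conv1, pool1)  # prints: 112 27 13
```

Under these assumptions the first convolution and pooling stage already reduces the 112 × 112 input to a 13 × 13 feature map per filter.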

Figure 3.

Schematic diagram of the proposed network.

Therefore, feature extraction employing DCNN retrieves the distinctive, exact, and informative characteristics found in an individual's facial picture, which aids in classification.

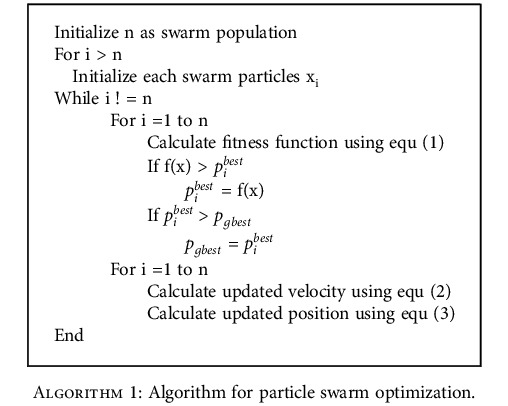

3.3. Particle Swarm Optimization (PSO)

PSO [72] is a well-known optimization approach for finding an optimal solution from a set of available alternatives. It is inspired by the cooperative behaviour of a flock of birds, or swarm. While searching for food, the members of a swarm can estimate the distance of the food from their present location. PSO is composed of a collection of particles. Every particle is represented as a point that searches for an optimum. The particles travel about the search space based on their individual best position as well as the best position of the swarm or of nearby neighbours. In PSO, the population is called the “swarm,” and the potential solutions are called “particles.” Initially, each particle has a position and a velocity at time t, since the particles are moving. The particles change their positions by comparing their own best solution with the best solution of their neighbours. Each particle remembers the position where it had its best solution. PSO has previously been used in artificial neural network training. In the proposed method, the PSO algorithm is incorporated to select the relevant features for the classification tasks, and it provides iteratively better candidate solutions, that is, feature subsets.

Every particle is initialized with a random position and velocity. The fitness value f(x) is calculated for the particles using equation (1).

| (1) |

where xi is the particle and n is the number of particles. The fitness value is then compared with the best of the particle's previous fitness values, which is named the personal best (pbest). The global best (gbest) value is obtained from the personal bests. This process continues until the stopping criterion is met. The pbest, gbest, and old velocities are used to update the velocity, as shown in equation (2).

| (2) |

where t represents time, vi(t + 1) is the new velocity, c1 and c2 are the weighting coefficients, pi(t) is the particle position, pgbest is the best known location of the swarm, and pibest is the best known location of the ith particle. rand() returns uniformly distributed random values. Equation (3) is used to update the particle's location.

| (3) |

where pi(t + 1) is the newly updated position, pi is the recent position, and vi is the recent velocity. The particles try to change their positions using factors like present position (pi), present velocity (vi), distance between present position (pi) and pbest (pibest), and the distance between present position (pi) and gbest (pgbest). The algorithm of PSO is given below.

The features of the face image, which come from the CNN framework, are considered as particles for training. Applying PSO throughout the training phase improves the resulting solution vector and shortens the execution time. Premature convergence is the fundamental drawback of PSO, and it is mitigated by hybrid PSO.
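The PSO loop described in this section can be sketched as follows. This is a minimal illustration that assumes the canonical update without an inertia term, v ← v + c1·rand()·(pbest − p) + c2·rand()·(gbest − p) followed by p ← p + v, which may differ in detail from equations (2) and (3). The fitness function `toy_fitness`, the velocity clamp `v_max`, and all parameter values are hypothetical choices for the example and are not taken from the paper.

```python
import random


def pso_maximise(fitness, dim, n_particles=20, iterations=50,
                 c1=2.0, c2=2.0, v_max=0.5, seed=0):
    """Canonical PSO (no inertia term); returns (gbest, gbest_fitness)."""
    rng = random.Random(seed)
    positions = [[rng.uniform(-1.0, 1.0) for _ in range(dim)]
                 for _ in range(n_particles)]
    velocities = [[0.0] * dim for _ in range(n_particles)]

    # Personal bests start at the initial positions.
    pbest = [p[:] for p in positions]
    pbest_fit = [fitness(p) for p in positions]
    best_index = max(range(n_particles), key=lambda i: pbest_fit[i])
    gbest, gbest_fit = pbest[best_index][:], pbest_fit[best_index]

    for _ in range(iterations):
        for i in range(n_particles):
            for d in range(dim):
                # Old velocity plus pulls towards pbest and gbest.
                v = (velocities[i][d]
                     + c1 * rng.random() * (pbest[i][d] - positions[i][d])
                     + c2 * rng.random() * (gbest[d] - positions[i][d]))
                # Velocity clamp: an added safeguard, not part of the paper.
                velocities[i][d] = max(-v_max, min(v_max, v))
                positions[i][d] += velocities[i][d]
            fit = fitness(positions[i])
            if fit > pbest_fit[i]:
                pbest[i], pbest_fit[i] = positions[i][:], fit
                if fit > gbest_fit:
                    gbest, gbest_fit = positions[i][:], fit
    return gbest, gbest_fit


def toy_fitness(x):
    """Hypothetical fitness: largest (zero) at the origin."""
    return -sum(v * v for v in x)


best, best_fit = pso_maximise(toy_fitness, dim=3)
print(best_fit)
```

The sketch maximises the fitness, in line with the "greater than pibest" comparison used in the hybrid variant; a minimising version would only flip the two comparisons.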

3.4. Hybrid Particle Swarm Optimization (HPSO)

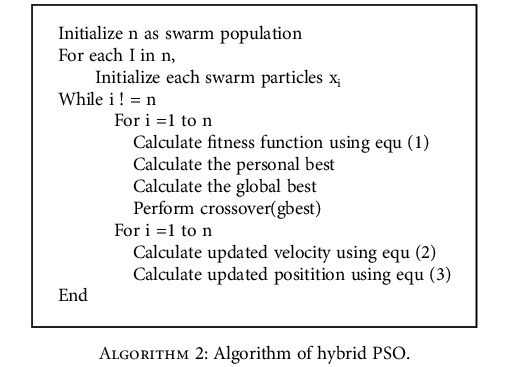

Hybrid PSO is a feature selection approach that integrates PSO with the genetic algorithm (GA) [73, 74]. PSO reaches a local optimum quickly and cannot escape from it, so it converges prematurely at an early stage and remains in the local optimum region. To overcome this drawback, PSO is combined with GA. Combining GA and PSO is advantageous because information is shared among the particles, which helps in the computation steps. The hybrid PSO is obtained by performing a crossover operation on the global best particles obtained from PSO. Problem-dependent performance is one of the disadvantages of stochastic approaches, so different parameter settings are needed to achieve high performance. The variation of the velocity with the inertia setting indicates that PSO is problem-dependent, and hybrid PSO helps to avoid this. The algorithm of the hybrid PSO is explained below.

The population is initialized, and the fitness value is calculated for the population using equation (1). If the computed fitness value of a particle is greater than pibest, pibest is set to that value; otherwise, pibest is assigned to pgbest. The velocity is updated using equation (2), and the position is updated using equation (3). A crossover operation is added to the PSO algorithm to make it a hybrid. Crossover, also called recombination, is an operator of the genetic algorithm. There are various types of crossover in genetic algorithms; here, single-point crossover is used. In single-point crossover, a random point is chosen, and the segments of the two parents beyond that point are exchanged to produce the offspring. The crossover is executed over the best solutions of PSO. The hybrid PSO algorithm used for training reduces the execution time.

Hybrid PSO is carried out by applying the crossover operation to the best particles obtained from PSO. The best particles are obtained by calculating the fitness of each particle and comparing the particles with one another to obtain the pbest values, or features. These personal best values are compared across particles to acquire the global best particles. These global best particles are then given as input to the crossover. The results are thus obtained, and the velocities and positions are updated.
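The crossover step can be illustrated with a short sketch. It assumes, for illustration only, that each particle is a binary mask over the extracted features (1 means the feature is kept) and that two of the best particles are recombined. The encoding is an assumption, and the function name and sample masks are hypothetical.

```python
import random


def single_point_crossover(parent_a, parent_b, rng):
    """Swap the tails of two equal-length parents at one random cut point."""
    if len(parent_a) != len(parent_b) or len(parent_a) < 2:
        raise ValueError("parents must have the same length of at least 2")
    point = rng.randint(1, len(parent_a) - 1)  # cut strictly inside the vector
    child_a = parent_a[:point] + parent_b[point:]
    child_b = parent_b[:point] + parent_a[point:]
    return child_a, child_b, point


rng = random.Random(42)
best_one = [1, 1, 1, 1, 1, 1, 1, 1]  # hypothetical best particle (feature mask)
best_two = [0, 0, 0, 0, 0, 0, 0, 0]  # hypothetical second-best particle
child_a, child_b, point = single_point_crossover(best_one, best_two, rng)
print(point, child_a, child_b)
```

Each child keeps the head of one parent and the tail of the other, so every feature decision in the children comes from one of the two best particles.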

3.5. Age and Gender Classifications

Identity refers to the factors which differentiate one face from another. It can be age, gender, facial expression, and facial landmarks. The proposed system considers identity as the age and gender classifications. The proposed method uses classification to determine the age and gender of a human based on the input image. To categorize the age and gender, the classification procedure uses SVM [75].

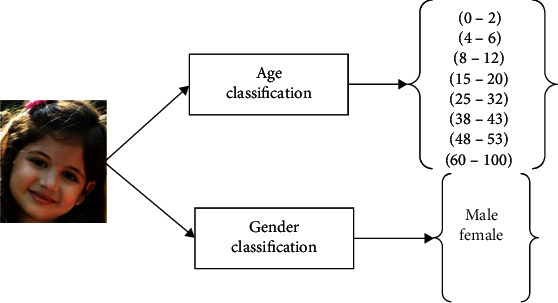

SVM learns the attributes present in the image and carries out the classification. SVM constructs an optimal hyperplane in a multidimensional space, which separates the images into two classes for gender classification and eight classes for age classification. The results of HPSO are mapped into the multidimensional space. The maximum marginal hyperplane (MMH) helps in distinguishing the classes. Figure 4 shows the classification based on age and gender.

Figure 4.

Classification of age and gender.

4. Experimental Results

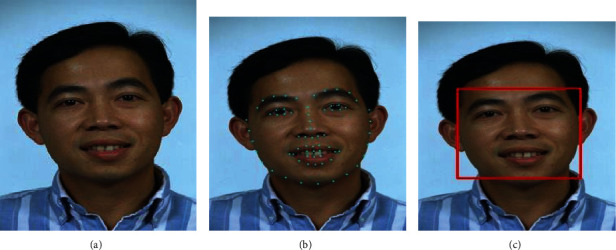

The proposed system classifies age and gender well, with reduced computation time and higher accuracy, and also suggests nutritional advice for the user. The system receives the input image either from the dataset or in real time via a webcam. The source image is preprocessed to improve the efficiency of the matching process. The input to the convolutional network is 5 × 112 × 112, owing to the addition of zero padding to the matrix of size 110 × 110. The landmark points in the face are detected, which helps to align and localize the face regions. The preprocessing procedures for the input facial image are depicted in Figure 5: (a) input image, (b) face landmarks, and (c) face detection.

Figure 5.

Image preprocessing: (a) input image; (b) face landmark; (c) face detection.

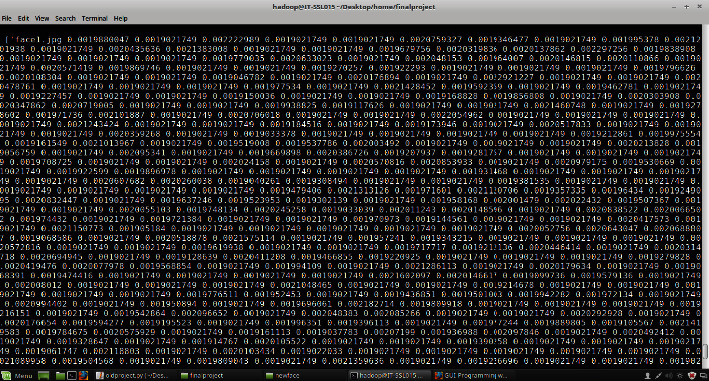

The next phase in the proposed framework is feature extraction, which finds the characteristics that represent the face image. The distinctive features collected from the image enhance the precision and performance of the system. These image characteristics are retrieved using DCNN and are distinct, allowing one class to be distinguished from another (for both age and gender). This network has a seven-layer architecture that comprises 5 convolutional layers and 2 fully connected layers. These layers are followed by a final softmax layer, and the network extracts 512 unique features. Figure 6 depicts the features retrieved by DCNN as a result of the feature extraction method.

Figure 6.

Features extracted from DCNN.

Once the features are extracted, feature selection is performed using HPSO which reduces the number of features extracted by DCNN. This helps in further reducing the image dimension and helps in improving the execution time.

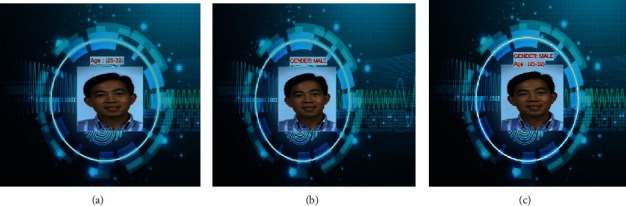

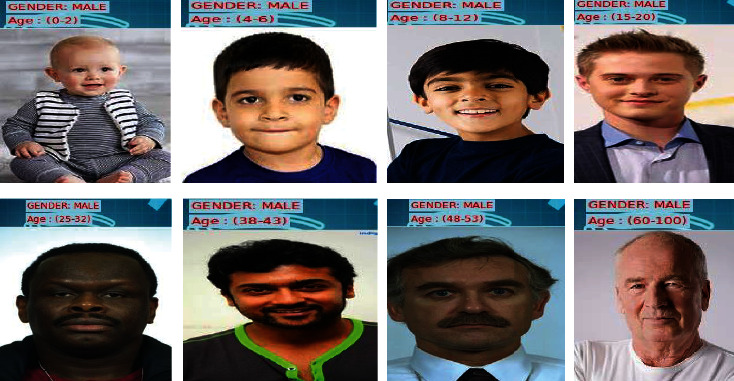

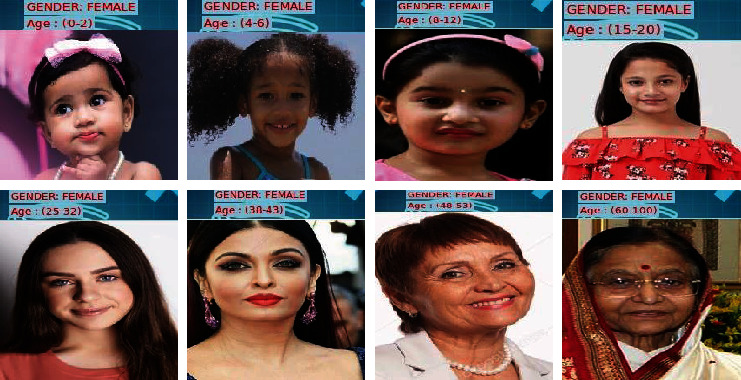

Feature selection is followed by the classification process, which classifies the face image in terms of age and gender. Age is categorized into 8 classes, (0–2), (4–6), (8–12), (15–20), (25–32), (38–43), (48–53), and (60–100), and gender is categorized as male or female. Figure 7 shows the result of classification.

Figure 7.

Classification results: (a) predicted age; (b) predicted gender; (c) combined output.

Figure 8 depicts the classification output for men across all eight categories. Figure 9 depicts the classification output for females across all eight categories.

Figure 8.

Classification results of males across all ages.

Figure 9.

Classification results of females across all ages.

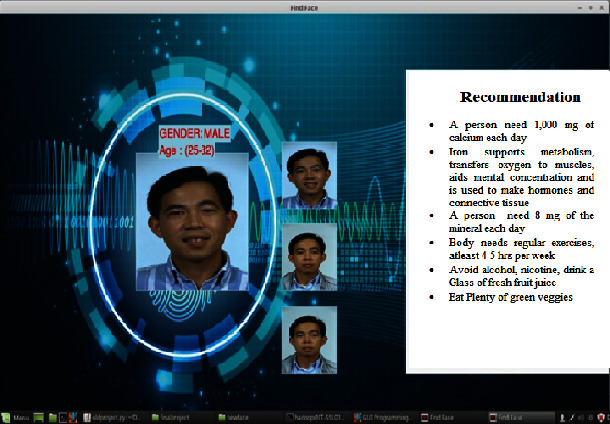

Figure 10 illustrates the system's output for the provided source image, which displays the matching image as well as the relevant age and gender category.

Figure 10.

Nutrition recommendation based on predicted age and gender.

The recommendation system suggests nutritional advice for the individual user based on their age and gender. Figure 10 depicts the system's recommendations based on predicted age and gender class.
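The final step can be pictured as a lookup keyed by the predicted age class and gender. The sketch below is an assumption about one simple way to organise such a lookup, not the system's actual recommendation logic. The advice strings are deliberately placeholders, because the specific recommendations are not listed in the text, and the names `ADVICE` and `recommend` are hypothetical.

```python
AGE_CLASSES = ["0-2", "4-6", "8-13", "15-20", "25-32", "38-43", "48-53", "60+"]
GENDERS = ["female", "male"]

# Placeholder texts only; real advice would come from a nutrition source.
ADVICE = {
    (age, gender): f"<nutritional advice for {gender}, age group {age}>"
    for age in AGE_CLASSES
    for gender in GENDERS
}


def recommend(age_class, gender):
    """Look up the advice entry for a predicted (age class, gender) pair."""
    key = (age_class, gender.lower())
    if key not in ADVICE:
        raise KeyError(f"unknown age/gender combination: {key}")
    return ADVICE[key]


print(recommend("25-32", "Male"))
```

With 8 age classes and 2 gender classes, the table has 16 entries, one for each combination the classifier can output.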

5. Performance Evaluation

In this section, the performance of the proposed system is assessed and compared against existing methodologies. To assess the effectiveness of the proposed work, the publicly available Adience dataset and real-world images are used for experimentation. Experiments are conducted to determine the age and gender from an input facial image.

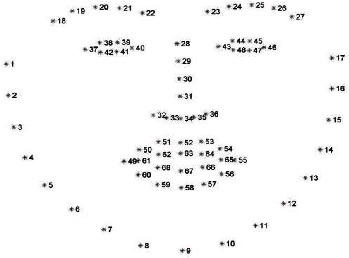

5.1. Implementation Details

The proposed system is implemented in Python using the TensorFlow framework. The input images are loaded using OpenCV, and the dataset is split into train and test sets. Image preprocessing is the first step, and it is performed on all images in the dataset to generate images of size 112 × 112. Facial landmarks are detected and extracted using dlib and OpenCV. The locations of 68 (x, y) coordinate points that map to structures on the face are estimated using the dlib library. Figure 11 shows the 68 coordinate points detected in the input image using the dlib library. The system then proceeds with alignment and localization of the keypoints. The deep convolutional neural network (DCNN) is constructed and implemented in Python with the TensorFlow framework. The number of filters starts at 32 and doubles in each convolutional layer until it reaches 512. Each max-pool layer is made up of 2 × 2 filters with a stride of 2. The dropout rate is set to 0.5. To test the efficiency of the proposed system, the publicly available Adience dataset and real-time photos are employed.

Figure 11.

Visualization of 68 facial landmark coordinates in the dataset.

5.2. Dataset Description

The Adience dataset [76] is made up of photographs that were automatically uploaded to Flickr from smartphones. The Adience dataset, which serves as a benchmark of face images, is primarily used for age and gender recognition. The collection includes photographs with varying appearance, noise, pose, and lighting, since the images were obtained without careful preparation or posing. The dataset contains images in 8 age categories (0-2, 4-6, 8-13, 15-20, 25-32, 38-43, 48-53, and 60+) and covers both genders. Table 1 shows the Adience face dataset, with the distribution of faces across age categories and the total number of images per category for males and females. Real-world images are also used, including publicly available face images and images captured in real time using a web camera.

Table 1.

Adience dataset.

Columns 0-2 to 60+ are age labels in years.

| Gender | 0-2 | 4-6 | 8-13 | 15-20 | 25-32 | 38-43 | 48-53 | 60+ | Total |
|---|---|---|---|---|---|---|---|---|---|
| Female | 682 | 1234 | 1360 | 919 | 2589 | 1056 | 433 | 427 | 9411 |
| Male | 745 | 928 | 934 | 734 | 2308 | 1294 | 392 | 442 | 8192 |
| Both | 1427 | 2162 | 2294 | 1653 | 4897 | 2350 | 825 | 869 | 19487 |

5.3. Performance Metrics

The intended work's performance is measured in terms of classification rate, precision, and recall.

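The classification rate is the proportion of correctly classified samples and is represented as

$$\text{Classification rate} = \frac{TP + TN}{TP + TN + FP + FN} \tag{4}$$

Further, the precision and recall are calculated using

$$\text{Precision} = \frac{TP}{TP + FP} \tag{5}$$

$$\text{Recall} = \frac{TP}{TP + FN} \tag{6}$$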

where TP represents the true positive, TN represents the true negative, FP represents the false positive, and FN represents the false negative.
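As a small worked example, the three metrics can be computed from the four counts, assuming the standard definitions: classification rate as the fraction of correct predictions, precision as TP / (TP + FP), and recall as TP / (TP + FN). The counts below are hypothetical and are not results from the experiments.

```python
def classification_rate(tp, tn, fp, fn):
    """Fraction of all samples that were classified correctly."""
    return (tp + tn) / (tp + tn + fp + fn)


def precision(tp, fp):
    """Fraction of positive predictions that are truly positive."""
    return tp / (tp + fp)


def recall(tp, fn):
    """Fraction of truly positive samples that were found."""
    return tp / (tp + fn)


# Hypothetical counts for one class, for illustration only.
tp, tn, fp, fn = 90, 85, 10, 15
rate = classification_rate(tp, tn, fp, fn)
prec = precision(tp, fp)
rec = recall(tp, fn)
print(f"{rate:.3f} {prec:.3f} {rec:.3f}")  # prints: 0.875 0.900 0.857
```

For the eight age classes, these counts would typically be taken per class (one class against the rest) and then averaged; that averaging convention is an assumption, since it is not stated here.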

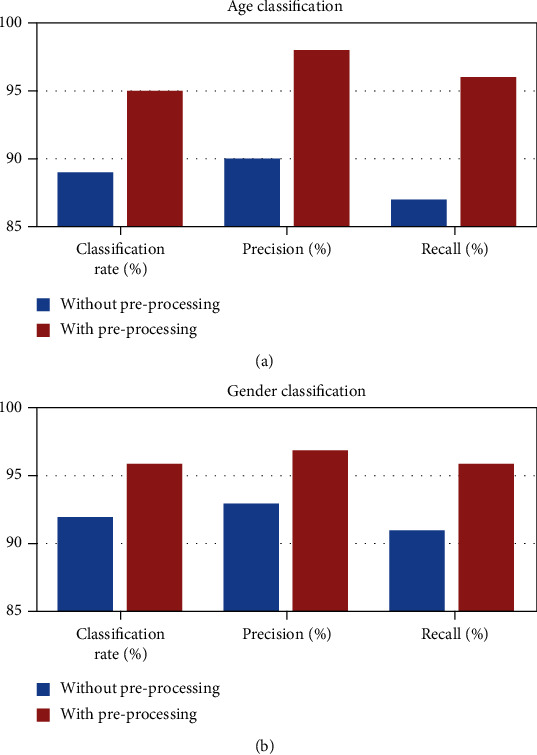

5.4. Image Preprocessing

Image preprocessing enhances the input image and prepares it for the next step in the recommendation system. The preprocessing step improves accuracy, sensitivity, and specificity. Using efficient preprocessing algorithms for image filtering, face detection, and face alignment thus makes the proposed system robust. Figure 12 shows that performance increases when the image preprocessing module is incorporated in the proposed system.

Figure 12.

Performance of image preprocessing module for (a) age and (b) gender classifications.

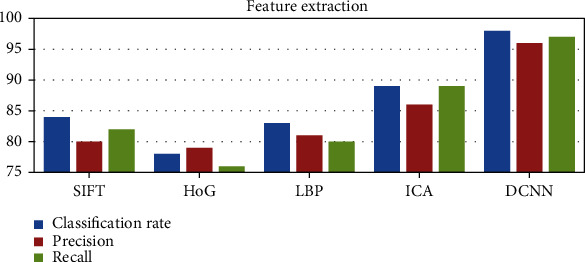

5.5. Feature Extraction

Feature extraction is an essential step in automated methods; it extracts unique features from the given image. It supports dimensionality reduction by mapping from a multidimensional space into a space of fewer dimensions. The proposed DCNN-based feature extraction technique is compared with existing techniques such as SIFT [77], histogram of oriented gradients [78], LBP [79], and ICA [80]. The empirical findings for the feature extraction strategies are shown in Figure 13.

Figure 13.

Experimental results of feature extraction techniques.

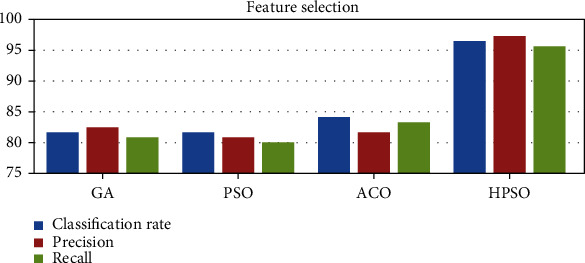

5.6. Feature Selection

Feature selection is also a dimensionality reduction method; it discards irrelevant features and retains only the discriminative ones. The proposed hybrid PSO-based feature selection strategy is compared against several feature selection approaches, namely GA, PSO, and ACO. The empirical findings for these feature selection strategies are shown in Figure 14.

Figure 14.

Experimental results of feature selection techniques.
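To make the idea of PSO-based feature selection with a crossover step concrete, the following is a minimal sketch of a binary PSO in which each particle is a 0/1 feature mask and, after every move, the particle is crossed with the global best and the child is kept if it scores higher. This is an illustrative assumption about how such a hybrid could look, not the authors' Algorithm 2. The fitness function `toy_fitness`, the helper names, and all parameter values are hypothetical; in practice the fitness would be a classifier score on the selected features.

```python
import math
import random


def toy_fitness(mask, relevant, penalty=0.01):
    """Hypothetical stand-in for classifier accuracy: reward selecting
    relevant features and penalize the size of the selected subset."""
    hits = sum(1 for i, bit in enumerate(mask) if bit and i in relevant)
    return hits / len(relevant) - penalty * sum(mask)


def uniform_crossover(a, b, rng):
    """Build a child mask by taking each bit from a or b with equal chance."""
    return [x if rng.random() < 0.5 else y for x, y in zip(a, b)]


def hybrid_pso_select(n_features, fitness, n_particles=12, n_iters=40,
                      w=0.7, c1=1.5, c2=1.5, seed=0):
    """Binary PSO with a crossover step; returns (best_mask, best_fitness)."""
    rng = random.Random(seed)
    pos = [[rng.randint(0, 1) for _ in range(n_features)]
           for _ in range(n_particles)]
    vel = [[0.0] * n_features for _ in range(n_particles)]
    pbest = [p[:] for p in pos]
    pbest_fit = [fitness(p) for p in pos]
    g = max(range(n_particles), key=lambda i: pbest_fit[i])
    gbest, gbest_fit = pbest[g][:], pbest_fit[g]

    for _ in range(n_iters):
        for i in range(n_particles):
            for d in range(n_features):
                r1, r2 = rng.random(), rng.random()
                vel[i][d] = (w * vel[i][d]
                             + c1 * r1 * (pbest[i][d] - pos[i][d])
                             + c2 * r2 * (gbest[d] - pos[i][d]))
                # Sigmoid transfer: velocity becomes the probability of a 1 bit.
                prob = 1.0 / (1.0 + math.exp(-vel[i][d]))
                pos[i][d] = 1 if rng.random() < prob else 0
            # Hybrid step (assumed): cross with the global best, keep if better.
            child = uniform_crossover(pos[i], gbest, rng)
            if fitness(child) > fitness(pos[i]):
                pos[i] = child
            fit = fitness(pos[i])
            if fit > pbest_fit[i]:
                pbest[i], pbest_fit[i] = pos[i][:], fit
                if fit > gbest_fit:
                    gbest, gbest_fit = pos[i][:], fit
    return gbest, gbest_fit


relevant = {0, 3, 5}  # hypothetical indices of discriminative features
mask, fit = hybrid_pso_select(10, lambda m: toy_fitness(m, relevant))
print("selected features:", [i for i, bit in enumerate(mask) if bit])
```

The size penalty in the toy fitness is what pushes the swarm toward smaller feature subsets, which is the property that would shorten downstream classification time.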

5.7. Evaluation with State-of-the-Art Methods

The developed age and gender classification approach was evaluated using the Adience dataset and some real-world images. Simonyan and Zisserman [80] introduced the VGG network architecture, a simple network of 3 × 3 convolutional layers stacked one above another, together with max pooling, two fully connected layers, and a softmax classifier. The 16 and 19 in VGG16 and VGG19 denote the total number of weight layers. The VGG network converges slowly, takes a long time to train, and has a very large architecture. Szegedy et al. [81] introduced the inception network, which extracts multilevel features using convolutional layers of sizes 1 × 1, 3 × 3, and 5 × 5.

The proposed system is compared with the VGG16, VGG19, and InceptionV3 models using classification rate, precision, and recall. Table 2 shows that the developed method achieves superior results on all three metrics.

Table 2.

Performance comparison of the proposed model with existing models.

| Model | Classification rate (%) | Precision (%) | Recall (%) |
|---|---|---|---|
| VGG16 | 89.25 | 87.93 | 87.34 |
| VGG19 | 90.71 | 89.48 | 90.83 |
| InceptionV3 | 93.61 | 93.78 | 92.86 |
| Proposed age classification module | 96.90 | 97.03 | 96.80 |
| Proposed gender classification module | 97.38 | 97.31 | 96.43 |
| Proposed age and gender module | 98.87 | 98.89 | 98.34 |

5.8. Execution Time

The execution time of various methods is compared.

5.8.1. Measure of Execution Time with CNN Only

Using the CNN alone results in a high execution time of approximately 68 seconds. This is because the CNN uses all the extracted features, of which there are comparatively many; the numerous feature comparisons make the CNN slower than the other methods.

5.8.2. Measure of Execution Time with CNN + PSO Method

In this variant, PSO selects the best features from those extracted by the CNN, which reduces the number of features per face image. It takes less time than the CNN alone because face retrieval uses fewer features. CNN-PSO takes approximately 45 seconds for face retrieval of the top 10 images.

5.8.3. Measure of Execution Time with CNN + Hybrid PSO Method

The execution time is further reduced by CNN + hybrid PSO because of the crossover operation. The resulting execution time is approximately 4 seconds to classify the input image, since the set of face image features is further optimized. The execution times of the three methods are listed in Table 3.

Table 3.

Execution time of various methods.

| Method | Execution time (in seconds) |
|---|---|
| Convolution neural network (CNN) | 68 (approx.) |
| Convolution neural network with particle swarm optimization (CNN-PSO) | 45 (approx.) |
| Convolution neural network with hybrid particle swarm optimization (CNN-hybrid PSO) | 4 (approx.) |
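The relative speedups implied by Table 3 follow from simple ratios of the approximate timings. The short sketch below works them out; the dictionary keys and the `speedup` helper are illustrative names, and the results inherit the approximate nature of the reported times.

```python
# Approximate execution times in seconds, as reported in Table 3.
times = {"CNN": 68.0, "CNN-PSO": 45.0, "CNN-hybrid PSO": 4.0}


def speedup(baseline_s, method_s):
    """Return how many times faster a method is than the baseline."""
    return baseline_s / method_s


# Speedup of every method relative to the CNN-only baseline.
speedups = {name: speedup(times["CNN"], t) for name, t in times.items()}
for name, s in speedups.items():
    print(f"{name}: {s:.2f}x relative to CNN alone")
```

Under these timings, the hybrid PSO variant is about 17 times faster than the CNN alone and roughly 11 times faster than CNN-PSO.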

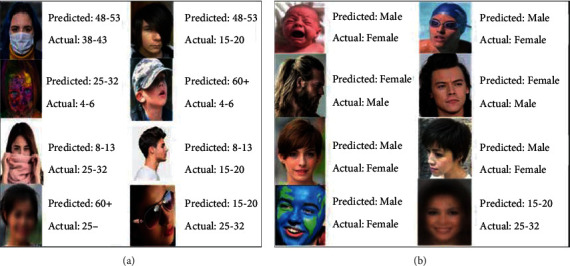

5.9. Misclassifications

The misclassification rate, also called the error rate, is the proportion of images whose age or gender is wrongly classified. Some examples of age and gender misclassifications are provided in Figures 15(a) and 15(b), respectively. The results show that these misclassifications are due to the highly variable conditions in the dataset as well as in the real-time images collected. Most misclassifications are caused by occlusions of the face, low-resolution or blurred images, and side views of the face. Gender misclassification occurs mainly for babies and children, and sometimes because of hairstyle, where the gender attributes are not clearly distinguishable. The misclassification rate is defined as

$$\text{Misclassification rate} = \frac{FP + FN}{TP + TN + FP + FN}. \tag{7}$$

Figure 15.

Misclassification results of (a) age and (b) gender.

The misclassification rate produced in the proposed system is 1.315%.
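For illustration, the error rate can be computed from confusion-matrix counts under the standard definition (wrongly classified samples divided by all samples), which is assumed here. The function name and the example counts are hypothetical and do not come from the experiments.

```python
def misclassification_rate(tp, tn, fp, fn):
    """Fraction of all samples that were classified wrongly."""
    total = tp + tn + fp + fn
    return (fp + fn) / total if total else 0.0


# Hypothetical counts (not taken from the paper).
rate = misclassification_rate(tp=90, tn=85, fp=10, fn=15)
print(f"misclassification rate: {100 * rate:.2f}%")
```

Under this definition, the misclassification rate and the classification rate always sum to 1.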

6. Conclusion and Future Work

Age and gender are critical factors in a wide range of applications, and the scientific community has become increasingly interested in estimating them from facial photographs. This research offers a novel nutrition recommendation system based on age and gender detection from a facial image. Most existing nutrition recommendation systems provide nutritional advice based on information entered manually by the user or taken from pathological reports. In this context, the current paper presents a recommender system that automatically captures the face and classifies the age and gender of an individual without any physical contact. Based on the classification results, nutritional recommendations are presented to the user. The system combines DCNN-based feature extraction, HPSO-based feature selection, and SVM classification. Experiments reveal that the proposed system's age and gender recognition approaches exceed existing methods in accuracy and computational efficiency. In future, a group recommendation system is planned for groups of users in public places.

Algorithm 1.

Algorithm for particle swarm optimization.

Algorithm 2.

Algorithm of hybrid PSO.

Data Availability

The data used to support the findings of this study are included within the article.

Conflicts of Interest

The authors declare that there is no conflict of interest regarding the publication of this article.

References

- 1. Wang X., Liang L., Wang Z., Hu S. Age estimation by facial images: a survey. China Journal of Image and Graphics. 2012;17(6):603–622.

- 2. Cottrell G. W., Metcalfe J. Face emotion and gender recognition using holons. Proceedings of Conference on Advances in Neural Information Processing Systems. 1990;3:564–571.

- 3. Zhao W., Chellappa R., Phillips P. J., Rosenfeld A. Face recognition. ACM Computing Surveys. 2003;35(4):399–458. doi: 10.1145/954339.954342.

- 4. Baluja S., Rowley H. Boosting sex identification performance. International Journal of Computer Vision. 2007;71(1):111–119. doi: 10.1007/s11263-006-8910-9.

- 5. Moghaddam B., Yang M. Gender classification with support vector machines. Proceedings of the 4th IEEE International Conference on Automatic Face and Gesture Recognition; 2000; Grenoble, France. pp. 306–311.

- 6. Lapedriza A., Maryn-Jimenez M., Vitria J. Gender recognition in non controlled environments. Proceedings of the 18th International Conference on Pattern Recognition. 2006;3:834–837.

- 7. Geng X., Zhou Z. H., Smith-Miles K. Automatic age estimation based on facial aging patterns. IEEE Transactions on Pattern Analysis and Machine Intelligence. 2007;29(12):2234–2240. doi: 10.1109/TPAMI.2007.70733.

- 8. Guo G., Mu G. Human age estimation: what is the influence across race and gender? 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition-Workshops; 2010; San Francisco, CA, USA. pp. 71–78.

- 9. Chandran V., Patil C. K., Karthick A., Ganeshaperumal D., Rahim R., Ghosh A. State of charge estimation of lithium-ion battery for electric vehicles using machine learning algorithms. World Electric Vehicle Journal. 2021;12(1):p. 38. doi: 10.3390/wevj12010038.

- 10. Chandran V., Sumithra M. G., Karthick A., et al. Diagnosis of cervical cancer based on ensemble deep learning network using colposcopy images. BioMed Research International. 2021;2021:15. doi: 10.1155/2021/5584004.

- 11. Srinivasu P. N., JayaLakshmi G., Jhaveri R. H., Praveen S. P. Ambient assistive living for monitoring the physical activity of diabetic adults through body area networks. Mobile Information Systems. 2022;2022:18. doi: 10.1155/2022/3169927.

- 12. Ranjan R., Patel V. M., Chellappa R. Hyperface: a deep multi-task learning framework for face detection, landmark localization, pose estimation, and gender recognition. IEEE Transactions on Pattern Analysis and Machine Intelligence. 2019;41(1):121–135. doi: 10.1109/TPAMI.2017.2781233.

- 13. Kumar P. M., Saravanakumar R., Karthick A., Mohanavel V. Artificial neural network-based output power prediction of grid-connected semitransparent photovoltaic system. Environmental Science and Pollution Research. 2022;29(7):10173–10182. doi: 10.1007/s11356-021-16398-6.

- 14. Chen D., Hua G., Wen F., Sun J. Supervised transformer network for efficient face detection. European Conference on Computer Vision; 2016; Cham. pp. 122–138.

- 15. Kogilavani S. V., Prabhu J., Sandhiya R., et al. COVID-19 detection based on lung CT scan using deep learning techniques. Computational and Mathematical Methods in Medicine. 2022;2022:13. doi: 10.1155/2022/7672196. [Retracted]

- 16. Lu C., Tang X. Surpassing human-level face verification performance on LFW with GaussianFace. Twenty-Ninth AAAI Conference on Artificial Intelligence; 2014; Austin Texas, USA.

- 17. Sun Y., Wang X., Tang X. Deep learning face representation by joint identification-verification. Advances in Neural Information Processing Systems. 2014;27.

- 18. Sun Y., Wang X., Tang X. Deep learning face representation from predicting 10,000 classes. Proceedings of the IEEE conference on computer vision and pattern recognition; 2014; Austin Texas, USA. pp. 1891–1898.

- 19. Zhu Z., Luo P., Wang X., Tang X. Recover canonical-view faces in the wild with deep neural networks. 2014. https://arxiv.org/abs/1404.3543.

- 20. Zhu Z., Luo P., Wang X., Tang X. Deep learning identity-preserving face space. Proceedings of the IEEE international conference on computer vision; 2013; pp. 113–120.

- 21. Zhu Z., Luo P., Wang X., Tang X. Deep learning multi-view representation for face recognition. 2014. https://arxiv.org/abs/1406.6947.

- 22. Kaliappan S., Saravanakumar R., Karthick A., et al. Hourly and day ahead power prediction of building integrated semitransparent photovoltaic system. International Journal of Photoenergy. 2021;2021:8. doi: 10.1155/2021/7894849.

- 23. Haseena S., Bharathi S., Padmapriya I., Lekhaa R. Deep learning based approach for gender classification. 2018 Second International Conference on Electronics, Communication and Aerospace Technology (ICECA); 2018; Coimbatore, India. pp. 1396–1399.

- 24. Subramaniam U., Subashini M. M., Almakhles D., Karthick A., Manoharan S. An expert system for COVID-19 infection tracking in lungs using image processing and deep learning techniques. BioMed Research International. 2021;2021:17. doi: 10.1155/2021/1896762.

- 25. Kanchan T., Bhoyar K. K. A Neural Network Approach to Gender Classification Using Facial Images. International Conference on Industrial Automation and Computing; 2014.

- 26. Golomb B. A., Lawrence D. T., Sejnowski T. J. Sexnet: a neural network identifies sex from human faces. NIPS. 1990;1:572–579.

- 27. Ganesh S. S., Kannayeram G., Karthick A., Muhibbullah M. A novel context aware joint segmentation and classification framework for glaucoma detection. Computational and Mathematical Methods in Medicine. 2021;2021:19. doi: 10.1155/2021/2921737.

- 28. Jaswante A., Khan A. U., Gour B. Back propagation neural network based gender classification technique based on facial features. International Journal of Computer Science and Network Security. 2014;14(11):91–96.

- 29. Khan A., Gour B. Gender classification technique based on facial features using neural network. International Journal of Computer Science and Information Technologies. 2013;4:839–843.

- 30. Tamura S., Kawai H., Mitsumoto H. Male/female identification from 8 × 6 very low resolution face images by neural network. Pattern Recognition. 1996;29(2):331–335. doi: 10.1016/0031-3203(95)00073-9.

- 31. Zhang K., Tan L., Li Z., Yu Q. Y. Gender and smile classification using deep convolutional neural networks. IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPRW); 2016; Las Vegas, NV, USA. pp. 34–38.

- 32. Hmidet A., Subramaniam U., Elavarasan R. M., et al. Design of efficient off-grid solar photovoltaic water pumping system based on improved fractional open circuit voltage MPPT technique. International Journal of Photoenergy. 2021;2021:18. doi: 10.1155/2021/4925433.

- 33. Fu Y., Guo G., Huang T. S. Age synthesis and estimation via faces: a survey. IEEE Transactions on Pattern Analysis & Machine Intelligence. 2010;32:1955–1976. doi: 10.1109/TPAMI.2010.36.

- 34. Kwon Y. H., Lobo N. D. V. Age classification from facial images. Computer Vision and Pattern Recognition, Proceedings CVPR '94., IEEE Computer Society Conference on; 1994; Seattle, WA, USA. pp. 762–767.

- 35. Cootes T. F., Taylor C. J., Cooper D. H., Graham J. Active shape models-their training and application. Computer Vision & Image Understanding. 1995;61(1):38–59. doi: 10.1006/cviu.1995.1004.

- 36. Gunay A., Nabiyev V. V. Automatic age classification with LBP. 2008 23rd international symposium on computer and information sciences; 2008; Istanbul, Turkey. pp. 1–4.

- 37. Patel A., Swathika O. V., Subramaniam U., et al. A practical approach for predicting power in a small-scale off-grid photovoltaic system using machine learning algorithms. International Journal of Photoenergy. 2022;2022:21. doi: 10.1155/2022/9194537.

- 38. Ylioinas J., Hadid A., Pietikainen M. Age classification in unconstrained conditions using LBP variants. Proceedings of the 21st International Conference on Pattern Recognition (ICPR2012); 2012; Tsukuba, Japan. pp. 1257–1260.

- 39. Gunay A., Nabiyev V. V. Automatic detection of anthropometric features from facial images. 2007 IEEE 15th Signal Processing and Communications Applications; 2007; Eskisehir, Turkey. pp. 1–4.

- 40. Guo G., Mu G., Fu Y., Huang T. S. Human age estimation using bio-inspired features. 2009 IEEE conference on computer vision and pattern recognition; 2009; Miami, FL, USA. pp. 112–119.

- 41. Vulli A., Srinivasu P. N., Sashank M. S., Shafi J., Choi J., Ijaz M. F. Fine-tuned DenseNet-169 for breast cancer metastasis prediction using FastAI and 1-cycle policy. Sensors. 2022;22(8):p. 2988. doi: 10.3390/s22082988.

- 42. Haseena S., Saroja S., Revathi T. A fuzzy approach for multi criteria decision making in diet plan ranking system using cuckoo optimization. Neural Computing and Applications. 2022. doi: 10.1007/s00521-022-07163-y.

- 43. Nanmaran R., Srimathi S., Yamuna G., et al. Investigating the role of image fusion in brain tumor classification models based on machine learning algorithm for personalized medicine. Computational and Mathematical Methods in Medicine. 2022;2022:13. doi: 10.1155/2022/7137524.

- 44. Subramanian M., Kumar M. S., Sathishkumar V. E., et al. Diagnosis of retinal diseases based on Bayesian optimization deep learning network using optical coherence tomography images. Computational Intelligence and Neuroscience. 2022;2022:15. doi: 10.1155/2022/8014979.

- 45. Hinton G. E., Osindero S., Teh Y.-W. A fast learning algorithm for deep belief nets. Neural Computation. 2006;18(7):1527–1554. doi: 10.1162/neco.2006.18.7.1527.

- 46. Chidambaram S., Ganesh S. S., Karthick A., Jayagopal P., Balachander B., Manoharan S. Diagnosing breast cancer based on the adaptive neuro-fuzzy inference system. Computational and Mathematical Methods in Medicine. 2022;2022:11. doi: 10.1155/2022/9166873.

- 47. Salakhutdinov R., Hinton G. Deep Boltzmann machines. Proceedings of the thirteenth international conference on artificial intelligence and statistics; 2009; Florida USA. pp. 448–455.

- 48. Dash S., Verma S., Bevinakoppa S., Wozniak M., Shafi J., Ijaz M. F. Guidance image-based enhanced matched filter with modified thresholding for blood vessel extraction. Symmetry. 2022;14(2):p. 194. doi: 10.3390/sym14020194.

- 49. Aamir K. M., Sarfraz L., Ramzan M., Bilal M., Shafi J., Attique M. A fuzzy rule-based system for classification of diabetes. Sensors. 2021;21(23):p. 8095. doi: 10.3390/s21238095.

- 50. Mandal M., Singh P. K., Ijaz M. F., Shafi J., Sarkar R. A tri-stage wrapper-filter feature selection framework for disease classification. Sensors. 2021;21(16):p. 5571. doi: 10.3390/s21165571.

- 51. Kumar Y., Koul A., Sisodia P. S., et al. Heart failure detection using quantum-enhanced machine learning and traditional machine learning techniques for Internet of artificially intelligent medical things. Wireless Communications and Mobile Computing. 2021;2021:16. doi: 10.1155/2021/1616725.

- 52. Shafi J., Obaidat M. S., Krishna P. V., Sadoun B., Pounambal M., Gitanjali J. Prediction of heart abnormalities using deep learning model and wearabledevices in smart health homes. Multimedia Tools and Applications. 2022;81(1):543–557. doi: 10.1007/s11042-021-11346-5.

- 53. Saroja S., Madavan R., Haseena S., et al. Human centered decision-making for COVID-19 testing center location selection: Tamil Nadu—a case study. Computational and Mathematical Methods in Medicine. 2022;2022:13. doi: 10.1155/2022/2048294.

- 54. He X., Yan S., Hu Y., Niyogi P., Zhang H. J. Face recognition using Laplacianfaces. IEEE Transactions on Pattern Analysis and Machine Intelligence. 2005;27(3):328–340. doi: 10.1109/TPAMI.2005.55.

- 55. Babu J. C., Kumar M. S., Jayagopal P., et al. IoT-based intelligent system for internal crack detection in building blocks. Journal of Nanomaterials. 2022;2022:8. doi: 10.1155/2022/3947760.

- 56. Donato G., Bartlett M. S., Hager J. C., Ekman P., Sejnowski T. J. Classifying facial actions. IEEE Transactions on Pattern Analysis and Machine Intelligence. 1999;21(10):974–989. doi: 10.1109/34.799905.

- 57. Fasel B., Luettin J. Recognition of asymmetric facial action unit activities and intensities. Proceedings of the International Conference on Pattern Recognition (ICPR 2000); 2000; Barcelona, Spain. pp. 1100–1103.

- 58. Ebied H. M. Feature extraction using PCA and Kernel-PCA for face recognition. 2012 8th International Conference on Informatics and Systems (INFOS); May 2017; Giza, Egypt. pp. 72–77.

- 59. Tanaka K., Kurita T., Kawabe T. Selection of import vectors via binary particle swarm optimization and cross-validation for kernel logistic regression. Proceedings of the International Joint Conference on Neural Networks (IJCNN '07); August 2007; Orlando, Fla, USA. pp. 1037–1042.

- 60. Raymer M. L., Punch W. F., Goodman E. D., Kuhn L. A., Jain A. K. Dimensionality reduction using genetic algorithms. IEEE Transactions on Evolutionary Computation. 2000;4(2):164–171. doi: 10.1109/4235.850656.

- 61. Dorigo M., Birattari M., Stutzle T. Ant colony optimization. IEEE Computational Intelligence Magazine. 2006;1(4):28–39. doi: 10.1109/MCI.2006.329691.

- 62. Forbes P., Zhu M. Content-boosted matrix factorization for recommender systems: experiments with recipe recommendation. Proceedings of the fifth ACM conference on Recommender systems; October 2011; Chicago. pp. 261–264.

- 63. Phanich M., Pholkul P., Phimoltares S. Food recommendation system using clustering analysis for diabetic patients. 2010 International Conference on Information Science and Applications; April 2010; Seoul, Korea (South). pp. 1–8.

- 64. Asanov D. A. Algorithms and Methods in Recommender Systems. Berlin, Germany: Berlin Institute of Technology; 2011.

- 65. Sikkandar H., Thiyagarajan R. Deep learning based facial expression recognition using improved cat swarm optimization. Journal of Ambient Intelligent and Humanized Computing. 2021;12(2):3037–3053. doi: 10.1007/s12652-020-02463-4.

- 66. Krizhevsky A., Sutskever I., Hinton G. E. Imagenet classification with deep convolutional neural networks. Advances in Neural Information Processing Systems. 2012;25:1097–1105.

- 67. Sikkandar H., Thiyagarajan R. Soft biometrics-based face image retrieval using improved grey wolf optimisation. IET Image Processing. 2020;14(3):451–461. doi: 10.1049/iet-ipr.2019.0271.

- 68. Ramanathan N., Chellappa R. Modeling age progression in young faces. 2006 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR'06); 2006; New York, NY, USA. pp. 387–394.

- 69. Guo G., Mu G. A framework for joint estimation of age, gender and ethnicity on a large database. Image and Vision Computing. 2014;32(10):761–770. doi: 10.1016/j.imavis.2014.04.011.

- 70. Sakaue K., Amano A., Yokoya N. Optimization approaches in computer vision and image processing. IEICE Transactions on Information and Systems. 1999;82(3):534–547.

- 71. Braik M., Sheta A. F., Ayesh A. Image enhancement using particle swarm optimization. World Congress on Engineering. 2007.

- 72. Hole K. R., Gulhane V. S., Shellokar N. D. Application of genetic algorithm for image enhancement and segmentation. International Journal of Advanced Research in Computer Engineering & Technology (IJARCET). 2013;2(4):p. 1342.

- 73. Tsai H. H., Chang Y. C. Facial expression recognition using a combination of multiple facial features and support vector machine. Soft Computing. 2018;22:4389–4405.

- 74. https://talhassner.github.io/home/projects/Adience/Adience-data.html.

- 75. Biswas S., Aggarwal G., Ramanathan N., Chellappa R. A non generative approach for face recognition across aging. 2008 IEEE Second International Conference on Biometrics: Theory, Applications and Systems; October 2008; Arlington, Virginia. pp. 1–6.

- 76. Navneet D. Histogram of oriented gradients (HOG) for object detection. 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR'05); June, 2005; San Diego, CA, USA. pp. 886–893.

- 77. Wang X., Han T. X., Yan S. An HOG-LBP human detector with partial occlusion handling. 2009 IEEE 12th International Conference on Computer Vision; 2009; Kyoto, Japan. pp. 32–39.

- 78. Bhele S. G., Mankar V. H. A review paper on face recognition techniques. International Journal of Advanced Research in Computer Engineering & Technology. 2012;1(8):923–925.

- 79. Kanan H. R., Faez K., Hosseinzadeh M. Face recognition system using ant colony optimization-based selected features. Proceedings of the 2007 IEEE symposium on computational intelligence in security and defense applications (CISDA 2007); 2007; Honolulu, HI, USA. pp. 57–62.

- 80. Simonyan K., Zisserman A. Very deep convolutional networks for large-scale image recognition. 2014. https://arxiv.org/abs/1409.1556.

- 81. Szegedy C., Liu W., Jia Y., et al. Going deeper with convolutions. 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR); 2015; Boston, MA, USA. pp. 1–9.
