This cross-sectional study assesses whether generative models can synthesize circumpapillary optic nerve head optical coherence tomography (OCT) images of normal and glaucomatous eyes and determines the usability of synthetic images for training deep learning models for glaucoma detection.

Key Points

Question

Can realistic circumpapillary optical coherence tomography scans of normal and glaucomatous eyes be generated, and can these be used in the training of a deep learning (DL) glaucoma detection model?

Findings

In this cross-sectional study of 990 normal eyes and 862 glaucomatous eyes, identification of real images from synthetic images by 2 clinicians appeared similar. DL detection models for glaucoma trained exclusively on synthetic images resulted in comparable performance on independent internal and external test sets with a model trained exclusively on real images.

Meaning

Generative models can synthesize realistic optical coherence tomography scans, which can be used to train DL glaucoma detection models and for data sharing.

Abstract

Importance

Deep learning (DL) networks require large data sets for training, which can be challenging to collect clinically. Generative models could be used to generate large numbers of synthetic optical coherence tomography (OCT) images to train such DL networks for glaucoma detection.

Objective

To assess whether generative models can synthesize circumpapillary optic nerve head OCT images of normal and glaucomatous eyes and determine the usability of synthetic images for training DL models for glaucoma detection.

Design, Setting, and Participants

Progressively growing generative adversarial network models were trained to generate circumpapillary OCT scans. Image gradeability and authenticity were evaluated on a clinical set of 100 real and 100 synthetic images by 2 clinical experts. DL networks for glaucoma detection were trained with real or synthetic images and evaluated on independent internal and external test data sets of 140 and 300 real images, respectively.

Main Outcomes and Measures

Evaluations of the clinical set between the experts were compared. Glaucoma detection performance of the DL networks was assessed using area under the curve (AUC) analysis. Class activation maps provided visualizations of the regions contributing to the respective classifications.

Results

A total of 990 normal and 862 glaucomatous eyes were analyzed. Evaluations of the clinical set were similar for gradeability (expert 1: 92.0%; expert 2: 93.0%) and authenticity (expert 1: 51.8%; expert 2: 51.3%). The best-performing DL network trained on synthetic images had AUC scores of 0.97 (95% CI, 0.95-0.99) on the internal test data set and 0.90 (95% CI, 0.87-0.93) on the external test data set, compared with AUCs of 0.96 (95% CI, 0.94-0.99) on the internal test data set and 0.84 (95% CI, 0.80-0.87) on the external test data set for the network trained with real images. An increase in the AUC for the synthetic DL network was observed with the use of larger synthetic data set sizes. Class activation maps showed that the regions of the synthetic images contributing to glaucoma detection were generally similar to those of real images.

Conclusions and Relevance

DL networks trained with synthetic OCT images for glaucoma detection were comparable with networks trained with real images. These results suggest potential use of generative models in the training of DL networks and as a means of data sharing across institutions without patient information confidentiality issues.

Introduction

Glaucoma is a progressive eye disease that causes irreversible damage to the retinal ganglion cells,1 leading to blindness.2 Because of the asymptomatic nature of the disease, loss of sight is often undetected until advanced stages. By 2040, an estimated 111.8 million people3 will have glaucoma, which makes earlier detection critical.

Artificial intelligence–based algorithms4,5,6,7 have emerged as a potential tool to improve clinical workflows and management8 in ophthalmology by detecting patterns associated with conditions such as age-related macular degeneration,9 diabetic retinopathy,5,6 and glaucoma.7 Development of deep learning (DL) models for disease detection requires large amounts of high-quality labeled data. Patient data acquisition is often hindered because of time constraints, privacy concerns, and institutional restrictions. Recently, generative adversarial networks (GANs)9 have been extensively used in the artificial intelligence community for the synthesis of artificial images and have emerged as a potential technique to address the challenge of data scarcity in biomedical applications.10 In ophthalmic studies, GAN models have been demonstrated in segmentation,11,12,13,14 data augmentation,15,16,17,18,19 domain transfer,20,21,22 image enhancement,23,24,25,26 and others.27 In data augmentation with GANs, prior studies on synthetic fundus15,28,29 and optical coherence tomography (OCT) image generation16,30 have largely focused on retinal disorders, which are assessed by the presence of lesions. However, studies on the use of GANs for image synthesis in glaucoma, a disease of the optic nerve characterized by progressive loss, have been limited to fundus photography18 and anterior segment OCT imaging17 and have not been reported for optic nerve head OCT images (eTable 3 in the Supplement), in which nerve fiber layer thinning is routinely assessed in glaucoma.31,32 In this study, we evaluate the use of GANs for the generation of circumpapillary OCT scans for normal and glaucomatous eyes and their potential to train deep neural networks for glaucoma detection.

Methods

Participants

This retrospective cross-sectional study was conducted from January 2012 to March 2021 and included Chinese participants with glaucoma from 4 clinical studies (2009-2021)32,33 and controls from the Singapore Epidemiology of Eye Disease program,34 a population-based study (2009-2021) performed at the Singapore Eye Research Institute in Singapore. Additional data from a clinical study of non-Hispanic White participants from the Carol Davila University of Medicine and Pharmacy, Bucharest, Romania (2020-2021), were also included. Both studies were approved by the respective review boards and performed in accordance with the tenets of the Declaration of Helsinki,35 and written informed consent was obtained from all participants. Travel allowances were provided to study participants. This study followed the Transparent Reporting of a Multivariable Prediction Model for Individual Prognosis or Diagnosis (TRIPOD) reporting guideline.

Study methodologies were identical for the Singapore and Bucharest sites. Sex and ethnicity were self-reported. Participants were excluded if they exhibited signs of retinal or optic neuropathies other than glaucoma, had a history of retinal operation or laser treatments, or had systemic diseases that might affect the retina or visual field. The diagnosis of glaucoma was based on clinical assessment and required both glaucomatous optic neuropathy and glaucomatous visual field loss. Severity of glaucoma was based on mean deviation (MD) and defined as mild glaucoma (MD, ≥−6 dB), moderate glaucoma (MD, −6.01 to −12.00 dB), or advanced glaucoma (MD, <−12 dB). Normal controls were individuals free from glaucoma and other clinically relevant eye conditions such as macular or vitreoretinal diseases, including epiretinal membrane, diabetic retinopathy, age-related macular degeneration, and other retinopathies that might affect retinal thickness.
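The MD-based severity grading described above can be illustrated with a minimal sketch. The function name `glaucoma_severity` is hypothetical and is not taken from the study code; treating values between −6.00 and −6.01 dB as moderate is an assumption made so that the thresholds cover the whole range.

```python
def glaucoma_severity(mean_deviation_db: float) -> str:
    """Map a visual field mean deviation (MD, in dB) to a severity grade.

    Thresholds follow the definitions in the text:
    mild (MD >= -6 dB), moderate (-6.01 to -12.00 dB), advanced (MD < -12 dB).
    """
    if mean_deviation_db >= -6.0:
        return "mild"
    if mean_deviation_db >= -12.0:
        # Assumption: any value below -6 dB and down to -12 dB counts as moderate.
        return "moderate"
    return "advanced"


# Illustrative MD values only, not study data.
grades = [glaucoma_severity(md) for md in (-3.2, -6.01, -12.0, -15.4)]
```

Here `grades` would hold one severity label per example MD value.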

OCT Imaging

Cirrus spectral-domain OCT (Carl Zeiss Meditec) was performed using a 6 × 6-mm scan protocol centered on the optic nerve head after pupil dilation. Circumpapillary cross-sectional OCT images were obtained with a 3.46-mm diameter circle centered on the optic nerve head. Each scan was manually checked and excluded if the signal strength was below 6 or if it contained substantial movement artefacts.

Workflow and Development

In this study, we examined the use of GANs to produce synthetic images. From participants enrolled at the Singapore study site, a validation set of 84 normal and 84 glaucomatous eyes and an internal test set of 70 normal and 70 glaucomatous eyes were first randomly selected and set aside to be used during the development of the DL models. Eyes from the same participant were not allowed to be in different subsets, so each participant was wholly in either the validation set or the internal test set. The remaining 990 healthy eyes and 862 glaucomatous eyes were used to develop 2 separate GAN models to generate synthetic normal or glaucoma circumpapillary OCT images. DL models for glaucoma detection were trained using either exclusively real or exclusively synthetic images. Detection performance of these DL models was evaluated on the internal test set and on an independent external test set based on the Bucharest data. An overall workflow of the study is provided in eFigure 1 in the Supplement.
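The constraint that both eyes of a participant stay in the same subset can be sketched as follows. This is an illustration under stated assumptions, not the study's code: the function `split_by_participant`, the participant identifiers, and the eye labels are hypothetical, and the sketch holds out a fixed number of participants rather than the fixed number of eyes used in the study.

```python
import random
from collections import defaultdict


def split_by_participant(eyes, n_holdout_participants, seed=0):
    """Split (participant_id, eye_id) pairs so no participant spans both subsets."""
    eyes_by_participant = defaultdict(list)
    for participant_id, eye_id in eyes:
        eyes_by_participant[participant_id].append((participant_id, eye_id))

    # Shuffle participants, not eyes, so both eyes of a person move together.
    participant_ids = sorted(eyes_by_participant)
    random.Random(seed).shuffle(participant_ids)
    holdout_ids = set(participant_ids[:n_holdout_participants])

    holdout, remaining = [], []
    for participant_id in participant_ids:
        target = holdout if participant_id in holdout_ids else remaining
        target.extend(eyes_by_participant[participant_id])
    return holdout, remaining


# Toy example: 6 hypothetical participants, some contributing both eyes.
toy_eyes = [("P1", "OD"), ("P1", "OS"), ("P2", "OD"), ("P3", "OD"),
            ("P3", "OS"), ("P4", "OS"), ("P5", "OD"), ("P6", "OD"), ("P6", "OS")]
holdout, remaining = split_by_participant(toy_eyes, n_holdout_participants=2)
```

Splitting on participant identifiers rather than on individual eyes is what prevents correlated eyes from leaking between the held-out sets and the GAN training data.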

Image Synthesis

For image synthesis, a type of generative model known as progressive GAN (PGGAN) was used.36 GANs use adversarial training, wherein the probability distribution of the generated images is matched to that of the real images as the training progresses, using a generator, which takes in a random latent noise vector, and a discriminator, which classifies images as real or synthetic. PGGAN includes incremental addition of layers, which allows for more stable training to generate high-resolution synthetic images. In this study, an output image resolution of 256 × 256 pixels was defined. The development of PGGAN models was done in PyTorch. PGGAN models were trained for approximately 72 hours using an NVIDIA DGX Workstation (Lambda Labs) with 4-T V100 graphics processing units.

Once trained, a latent vector fed as input to the PGGAN model generates a synthetic image and this approach can be extended to produce any number of synthetic images. Two different PGGAN models, 1 for the generation of normal OCT images and 1 for the generation of glaucoma OCT images, were developed separately.

Clinician Evaluation Methodology

Two hundred OCT images comprising real glaucoma images (n = 50), real normal images (n = 50), synthetic glaucoma images (n = 50), and synthetic normal images (n = 50) were randomly selected and were manually evaluated by 2 expert clinician-scientists with more than 10 years (expert 1: R.S.C.) and 20 years (expert 2: J.G.S.) of experience. The experts were allowed to review the images at a setting and time of their convenience. No prior information regarding the data distribution was given to the clinicians to avoid any bias. The images were prepared on a digital grading form with inputs requested for gradeability and authenticity as follows: (1) gradeability evaluation: the objective of this experiment was to evaluate the quality of the OCT images, including both real and synthetic images, as gradable or nongradable and (2) authenticity evaluation: the 2 clinicians were asked to determine if the OCT images appeared real or synthetic.
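The accuracy, sensitivity, and specificity of the authenticity judgments can be computed from paired labels, as in the minimal sketch below. It assumes that "real" is treated as the positive class, which the text does not state; the function name `authenticity_metrics` and the toy labels are hypothetical.

```python
def authenticity_metrics(is_real, judged_real):
    """Return (accuracy, sensitivity, specificity) for real-vs-synthetic judgments.

    Assumption: a real image is the positive class, so sensitivity is the
    fraction of real images judged real and specificity is the fraction of
    synthetic images judged synthetic.
    """
    pairs = list(zip(is_real, judged_real))
    true_pos = sum(1 for truth, guess in pairs if truth and guess)
    true_neg = sum(1 for truth, guess in pairs if not truth and not guess)
    n_real = sum(1 for truth, _ in pairs if truth)
    n_synthetic = len(pairs) - n_real

    accuracy = (true_pos + true_neg) / len(pairs)
    sensitivity = true_pos / n_real
    specificity = true_neg / n_synthetic
    return accuracy, sensitivity, specificity


# Toy labels only: 2 real and 2 synthetic images, one error in each group.
toy_metrics = authenticity_metrics(
    is_real=[True, True, False, False],
    judged_real=[True, False, False, True],
)
```

An accuracy near 50% on a balanced set, as in this toy case, corresponds to judgments that are no better than chance.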

DL Evaluation Methodology

To assess whether synthetic images generated using the PGGAN model for normal and glaucomatous eyes were good enough to be used for training DL algorithms, we compared the diagnostic performance of DL classification models trained only with real images or only with synthetic images. Both DL models used the VGG 11 architecture37 and were trained from scratch. The models were optimized with stochastic gradient descent (learning rate, 0.001; momentum, 0.9) using a cross-entropy loss function and a batch size of 16. For training, early stopping with a patience factor of 10 was used, and the checkpoint with the best performance on the validation data set was saved as the final model.
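The early stopping rule described above can be sketched independently of any DL framework. In this illustration the hyperparameter values come from the text, while the names `TRAINING_CONFIG` and `select_checkpoint`, the use of a "higher is better" validation score, and the counting of patience in epochs are assumptions.

```python
# Hyperparameters as reported in the text; the dictionary itself is illustrative.
TRAINING_CONFIG = {
    "optimizer": "sgd",
    "learning_rate": 0.001,
    "momentum": 0.9,
    "loss": "cross_entropy",
    "batch_size": 16,
    "patience": 10,
}


def select_checkpoint(validation_scores, patience=TRAINING_CONFIG["patience"]):
    """Return (best_epoch, best_score) under early stopping.

    validation_scores holds one score per epoch (assumed higher is better).
    Training stops once `patience` consecutive epochs fail to improve on the
    best score; the best epoch seen so far is kept as the final model.
    """
    best_epoch, best_score = -1, float("-inf")
    epochs_without_improvement = 0
    for epoch, score in enumerate(validation_scores):
        if score > best_score:
            best_epoch, best_score = epoch, score
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                break  # later epochs are never run
    return best_epoch, best_score


# Toy curve: improvement for 3 epochs, then a 10-epoch plateau.
toy_scores = [0.5, 0.6, 0.7] + [0.65] * 10 + [0.9]
chosen = select_checkpoint(toy_scores)
```

In the toy curve, the final score of 0.9 is never reached because training stops at the end of the plateau, which shows the trade-off that a patience setting makes between training time and late improvements.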

The DL classification model based on real images was trained using 600 normal eyes and 600 glaucomatous eyes, randomly sampled from the GAN training data. For the DL models trained with synthetic images generated from the GAN models, different data set sizes were used to evaluate the effect of synthetic data set size on DL detection performance. Total data set sizes of 1200, 10 000, 60 000, and 200 000 were generated using the GAN models, with an equal split between the generated synthetic normal and glaucomatous eyes at each data set size. A summary of the DL training data can be found in eTable 2 in the Supplement. Both real and synthetic image-based DL models were tested and evaluated on the unseen real internal and external test images. To avoid any bias, PGGAN model training did not include any of the images from the test sets. A baseline area under the curve (AUC) score was obtained for glaucoma detection using the circumpapillary retinal nerve fiber layer (cpRNFL) thickness.
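A thickness-based baseline of this kind can be summarized as a sensitivity at a fixed specificity, the operating point reported alongside the AUC. The sketch below is one plausible way to compute it and is not taken from the study: it assumes that an eye is called glaucomatous when its cpRNFL thickness falls below a cutoff, and the function name and thickness values are hypothetical.

```python
def sensitivity_at_specificity(normal_thickness, glaucoma_thickness, target_specificity):
    """Best sensitivity achievable while keeping specificity >= target.

    Assumption: an eye is classified as glaucomatous when its cpRNFL
    thickness (in micrometers) is strictly below the cutoff.
    """
    best_sensitivity = 0.0
    candidate_cutoffs = sorted(set(normal_thickness) | set(glaucoma_thickness))
    for cutoff in candidate_cutoffs:
        # Specificity: fraction of normal eyes correctly left above the cutoff.
        specificity = sum(t >= cutoff for t in normal_thickness) / len(normal_thickness)
        if specificity >= target_specificity:
            sensitivity = sum(t < cutoff for t in glaucoma_thickness) / len(glaucoma_thickness)
            best_sensitivity = max(best_sensitivity, sensitivity)
    return best_sensitivity


# Toy thickness values in micrometers, not study data.
toy_normal = [90, 95, 100, 105, 110]
toy_glaucoma = [60, 70, 80, 92, 101]
sens_at_80 = sensitivity_at_specificity(toy_normal, toy_glaucoma, 0.8)
```

Relaxing the specificity target allows a higher cutoff and therefore a higher sensitivity, which is why sensitivity is reported at more than one specificity level.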

Additional Analysis

To evaluate the distribution of the generated synthetic images and real images, a DL-based UNet segmentation38 model was used to measure the thickness of the retinal nerve fiber layer (RNFL). Additionally, similarity of the generated images to real images was evaluated using the Fréchet Inception Distance (FID) score,39 where a lower FID score indicates a greater similarity. Class activation maps (CAMs)40 were generated and visualized as heat maps overlaid on the OCT images to visualize the regions that influenced the classifications of the DL models.
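For reference, the FID is conventionally defined from the means and covariances of Inception-network features of the real and generated images. The formula below is the standard definition given as background. The symbols $\mu_r, \Sigma_r$ (real images) and $\mu_g, \Sigma_g$ (generated images) are introduced here for illustration and do not appear in the source.

```latex
\mathrm{FID} = \lVert \mu_r - \mu_g \rVert_2^2
  + \operatorname{Tr}\!\left( \Sigma_r + \Sigma_g - 2\left( \Sigma_r \Sigma_g \right)^{1/2} \right)
```

Both terms are zero when the two feature distributions have identical means and covariances, which is why a lower score indicates greater similarity.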

Statistical Analysis

Evaluation metrics for glaucoma detection were the AUC, sensitivity, and specificity. The 95% CIs were generated using bootstrapping clustered at the participant level to adjust for intereye correlations. Differences in AUC between models were compared by 2-sided DeLong tests,41 with P values less than .05 considered statistically significant. Comparisons were performed between individual models, and P values were not corrected for multiple comparisons. All statistical analyses were performed using Python and the scikit-learn library.
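A participant-level (clustered) bootstrap for the AUC can be sketched as follows. This is a minimal pure-Python illustration rather than the study's scikit-learn code: the function names, the percentile method for the interval, the number of resamples, and the toy data are assumptions, and the DeLong test is not implemented here.

```python
import random


def auc(labels, scores):
    """AUC as the probability that a positive outscores a negative (ties count 0.5)."""
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    wins = sum((p > n) + 0.5 * (p == n) for p in positives for n in negatives)
    return wins / (len(positives) * len(negatives))


def clustered_bootstrap_ci(participants, labels, scores, n_boot=1000, alpha=0.05, seed=0):
    """Percentile CI for the AUC, resampling whole participants with replacement.

    The input must contain both classes, otherwise no valid resample exists.
    """
    rng = random.Random(seed)
    eyes_by_participant = {}
    for pid, y, s in zip(participants, labels, scores):
        eyes_by_participant.setdefault(pid, []).append((y, s))
    ids = list(eyes_by_participant)

    estimates = []
    while len(estimates) < n_boot:
        # Draw participants, then take all of their eyes together.
        sample = [eye for pid in rng.choices(ids, k=len(ids))
                  for eye in eyes_by_participant[pid]]
        ys = [y for y, _ in sample]
        if 0 < sum(ys) < len(ys):  # skip resamples that lack one class
            estimates.append(auc(ys, [s for _, s in sample]))

    estimates.sort()
    lower = estimates[int((alpha / 2) * n_boot)]
    upper = estimates[int((1 - alpha / 2) * n_boot) - 1]
    return lower, upper


# Toy data: hypothetical participants, labels (1 = glaucoma), and model scores.
toy_ids = ["a", "a", "b", "b", "c", "d", "e", "f"]
toy_labels = [1, 1, 0, 0, 1, 0, 1, 0]
toy_scores = [0.9, 0.8, 0.2, 0.4, 0.35, 0.1, 0.7, 0.5]
point_estimate = auc(toy_labels, toy_scores)
ci_low, ci_high = clustered_bootstrap_ci(toy_ids, toy_labels, toy_scores, n_boot=200)
```

Resampling participants instead of eyes keeps the 2 eyes of one person together in every resample, so the interval reflects the correlation between them.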

Results

Human Evaluation Results by Clinical Experts

A total of 1016 glaucomatous eyes from 728 Asian Chinese participants and 1144 eyes from 806 Asian Chinese normal controls were enrolled from the Singapore site, while 150 glaucomatous eyes from 77 non-Hispanic White participants and 150 eyes from 83 non-Hispanic White normal controls were enrolled from the Bucharest site. Further characteristics of the participants can be found in eTable 1 in the Supplement.

The results of image gradeability, as evaluated by the 2 clinicians, are presented in Table 1. Image quality as graded by both clinical experts was similar: 92.0% for clinical expert 1 and 93.0% for clinical expert 2. However, synthetic images were assessed to have better image quality compared with the real images, which was largely because of the poorer-quality glaucoma images in the real image data set. Furthermore, all the synthetic images generated for normal control eyes using the PGGAN method were gradable. The results of the authenticity evaluation are shown in Table 2, with a similar accuracy score of 51.8% for clinical expert 1 and 51.3% for clinical expert 2, indicating that both clinicians found it difficult to distinguish the synthetic images from the real images.

Table 1. Human Assessment of Gradeability of Circumpapillary Optical Coherence Tomography (OCT) Image Quality^a

| Variable | No. of images | Image quality, clinical expert 1, No. (%) | Image quality, clinical expert 2, No. (%) |
|---|---|---|---|
| All | 200 | 184 (92.0) | 186 (93.0) |
| Real | 100 | 88 (88.0) | 90 (90.0) |
| Real, normal | 50 | 49 (98.0) | 46 (92.0) |
| Real, glaucoma | 50 | 39 (78.0) | 44 (88.0) |
| Synthetic | 100 | 96 (96.0) | 96 (96.0) |
| Synthetic, normal | 50 | 50 (100.0) | 50 (100.0) |
| Synthetic, glaucoma | 50 | 46 (92.0) | 46 (92.0) |

^a To avoid any evaluation bias, image type (real glaucoma/real normal/synthetic glaucoma/synthetic normal) of the 200 images was not revealed to either of the clinicians. Image quality of each of the 200 images was graded by both clinicians. Values indicate the number (%) of images graded as good quality.

Table 2. Human Assessment of Authenticity of Real and Synthetic Optical Coherence Tomography (OCT) Images^a

| Variable | Clinical expert 1 accuracy, % | Clinical expert 1 sensitivity, % | Clinical expert 1 specificity, % | Clinical expert 2 accuracy, % | Clinical expert 2 sensitivity, % | Clinical expert 2 specificity, % |
|---|---|---|---|---|---|---|
| All | 51.8 | 51.5 | 52.0 | 51.4 | 48.9 | 53.5 |
| Normal | 41.0 | 60.0 | 22.0 | 55.2 | 60.4 | 50.0 |
| Glaucoma | 62.0 | 42.8 | 82.0 | 47.6 | 38.0 | 57.1 |

^a The ability of clinicians to differentiate whether each of the images is real or synthetic on visual inspection is reported. Accuracy, sensitivity, and specificity scores of discriminations were calculated for all 200 images, including 100 normal and 100 glaucoma images.

DL Classification Model Results

The glaucoma detection performance for the different DL models is presented in Table 3. The mean cpRNFL thickness resulted in a baseline AUC of 0.92 for the internal test data set (mean [SD] cpRNFL: normal eyes, 03.0 [14.0] µm; glaucomatous eyes, 71.4 [17.7] µm) and 0.83 for the external test data set (mean [SD] cpRNFL: normal eyes, 103.0 [8.7] µm; glaucomatous eyes, 83.5 [17.7] µm). AUCs for the DL model trained on real images were 0.96 for the internal test data set and 0.84 for the external test data set, whereas the AUCs for the DL models trained on synthetic images ranged from 0.95 to 0.97 for the internal test data set and 0.86 to 0.90 for the external test data set. On the external test data set, the best-performing DL model trained with synthetic images (n = 200 000) resulted in a higher AUC of 0.90 (95% CI, 0.87-0.93; P = .002) compared with the DL model (AUC, 0.84 [95% CI, 0.80-0.87]) trained with real images. However, on the internal test data set, the AUC of the best-performing DL model trained with synthetic images (AUC, 0.97 [95% CI, 0.95-0.99]) was not statistically different (P = .49) from that of the DL model (AUC, 0.96 [95% CI, 0.94-0.99]) trained with real images.

Table 3. Diagnostic Performance of Deep Learning (DL) Classifier Models for Glaucoma Detection

Internal test data set: 70 normal eyes, 70 glaucomatous eyes. External test data set: 150 normal eyes, 150 glaucomatous eyes.

| Model | Internal: AUC (95% CI) | Internal: sensitivity at 80% specificity, % | Internal: sensitivity at 90% specificity, % | External: AUC (95% CI) | External: sensitivity at 80% specificity, % | External: sensitivity at 90% specificity, % |
|---|---|---|---|---|---|---|
| cpRNFL (baseline)^a | 0.92 (0.88-0.96) | 87.1 | 75.7 | 0.83 (0.79-0.87) | 61.3 | 40.7 |
| Real images^b | 0.96 (0.94-0.99) | 95.7 | 88.6 | 0.84 (0.80-0.87) | 74.7 | 42 |
| Synthetic images, n = 1200^c | 0.95 (0.92-0.97) | 91.4 | 84.3 | 0.86 (0.82-0.89) | 70.7 | 53.3 |
| Synthetic images, n = 10 000^c | 0.96 (0.94-0.98) | 94.3 | 90.0 | 0.88 (0.85-0.91) | 74.7 | 58.9 |
| Synthetic images, n = 60 000^c | 0.97 (0.94-0.99) | 98.6 | 94.3 | 0.87 (0.84-0.91) | 69.6 | 56.7 |
| Synthetic images, n = 200 000^c | 0.97 (0.95-0.99) | 95.7 | 91.4 | 0.90 (0.87-0.93) | 86.7 | 69.4 |

Abbreviations: AUC, area under the curve; cpRNFL, circumpapillary retinal nerve fiber layer.

^a Model based on cpRNFL thickness.

^b DL model trained using only real images.

^c Four DL models trained using synthetic images of varying data set sizes.

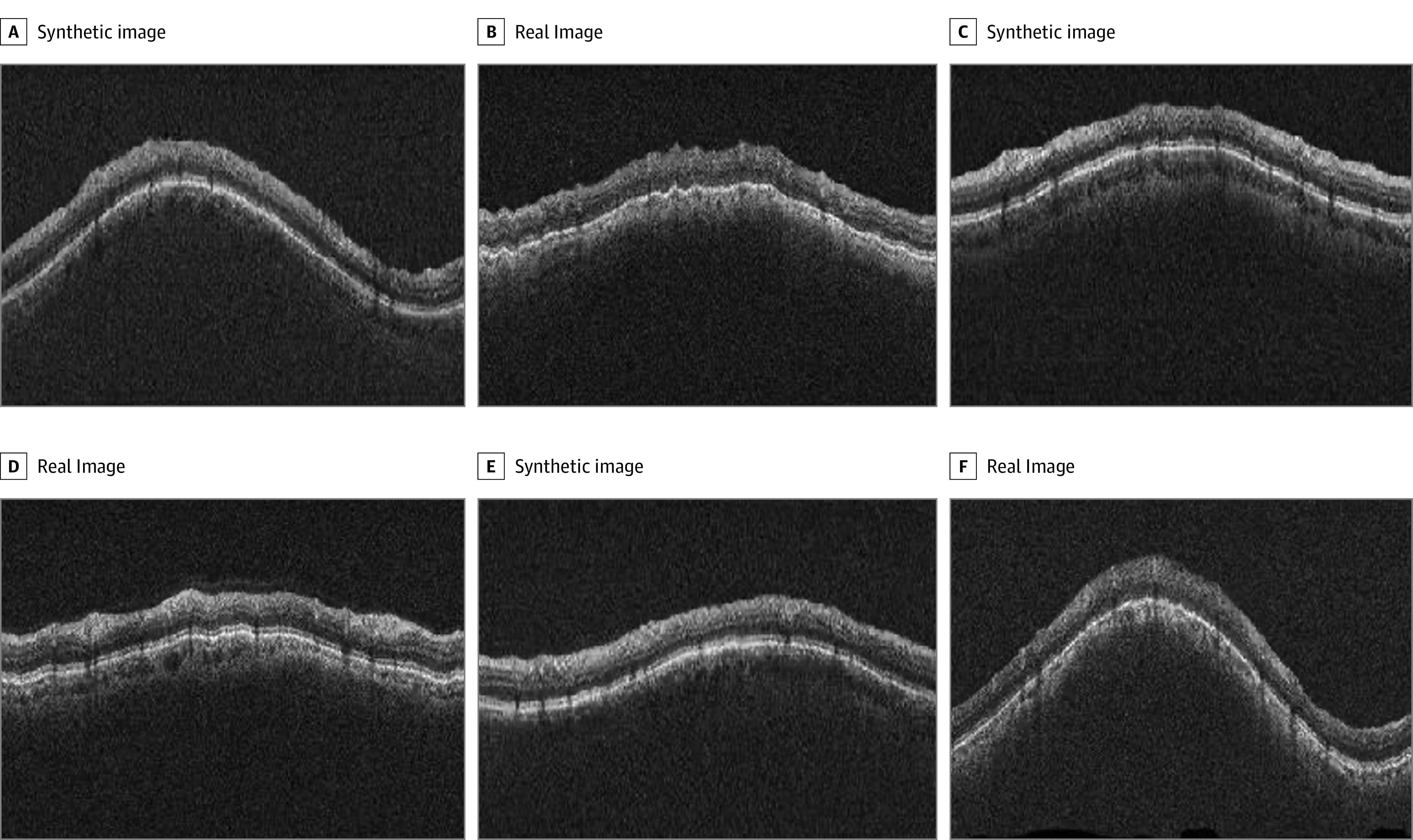

Samples of the real and generated images are shown in the Figure (glaucomatous eyes) and in eFigure 2 of the Supplement (normal eyes). To assess the quantitative similarity of the generated synthetic images with the real images, the FID scores were calculated.24 For the 4 synthetic data sets with increasing data set sizes, FID scores ranged from 15.8 to 16.7 for normal eyes and 12.0 to 15.6 for glaucomatous eyes (eTable 2 in the Supplement). Additionally, CAMs were generated to visually indicate regions that contributed to the respective classifications for the DL networks. The DL models were relatively consistent for both real and synthetic images, with major regions typically located at the temporal and inferior regions of the circumpapillary images, and largely at the RNFL, which is consistent with the expected regions of glaucomatous damage42 as highlighted in red in the CAMs (eFigure 4 in the Supplement).

Figure. Circumpapillary Optical Coherence Tomography (OCT) Images of Real and Synthetic Glaucomatous Eyes.

Examples of circumpapillary OCT images from real participants and synthetically generated circumpapillary OCT images from the generative adversarial network (GAN) model for glaucomatous eyes. Circumpapillary OCT images of real glaucomatous eyes are located in panels B, D, and E. All other images were synthetically generated from the GAN model for glaucomatous eyes.

Discussion

In this study, we developed and evaluated GANs for generating high-resolution circumpapillary OCT images for normal and glaucomatous eyes. The results of the quality evaluation demonstrated that synthetic OCT images can be difficult to discern from real images by experienced clinicians. When the synthetic images were used to train a DL model for glaucoma detection, the results showed that the glaucoma detection performance of a model trained with enough synthetic images was comparable with that of a model trained using real data, on both internal test and external test data sets. The development of deep networks for glaucoma detection based on synthetically generated circumpapillary OCT images is a first-of-its-kind approach and, to our knowledge, has not been reported elsewhere. These findings suggest the potential of generating realistic-looking synthetic images for the training of deep networks for glaucoma, as well as a possible means of privacy-agnostic data sharing between institutions. In addition, the technology developed for glaucoma in the present work may be translated for DL approaches in rare diseases such as hereditary retinal diseases.

Deep models largely depend on the quantity and quality of the input data provided, with a larger amount of high-quality data typically associated with improved performance. However, large-scale data acquisition is often resource intensive and involves patient data confidentiality concerns, and an alternative model that efficiently and effectively produces usable data for DL development independent of such constraints is desirable. GANs have seen rapidly increasing interest for their ability to generate realistic synthetic images. Specifically, PGGANs36 have been reported in previous ophthalmology-related studies for the generation of fundus images for referable and nonreferable age-related macular degeneration15 and macula OCT scans for urgent and nonurgent referrals16 of eyes with drusen, choroidal neovascularization, and diabetic macular edema. A recent study also demonstrated the use of synthetic image generation in anterior segment–OCT imaging for angle assessment in glaucoma.17 The promising results of the methodology described in these previous works encouraged us to adopt PGGANs for this study. In the gradeability assessment, overall image quality as evaluated by the 2 clinicians was similar. Image quality of the synthetic images was higher than that of the real images, largely because of the poorer gradeability of real glaucoma images. The clinicians also performed similarly in evaluating the authenticity of the images, which suggests that the generated images were realistic enough to be difficult to discern from real data.

A critical challenge to the development of DL models is the requirement of large data sets. While low-shot approaches could be used as viable strategies to address bias through better data representation with smaller data sets,43 generative models were evaluated in this study. We generated synthetic images for normal and glaucomatous eyes, with data set sizes ranging from 1200 to 200 000, and the results of our experiments show that glaucoma detection improved as data set size increased, achieving comparable performance to that of real images in training DL models. The FID scores (range, 12.0-16.7), although moderately higher than values reported on natural images,36 provided an indication of the quality of the synthetic images. CAMs shown in eFigure 4 in the Supplement help to identify the regions for classifying the cpRNFL OCT scans as normal or glaucomatous, and it can be observed that for both real and synthetic images, the highlighted regions are consistent, focusing largely on the RNFL at the superior and inferior regions. This in general agrees with the patterns of glaucomatous damage, with progressive thinning of the RNFL in eyes with glaucoma.42,44

Previous studies15,16 reported a decrease in performance when models were trained exclusively on synthetic images and tested on real images. In our study, the DL model trained on 200 000 synthetic images resulted in similar classification performance to that of the DL model trained on 1200 real images on the internal test data set. To evaluate the generalizability of the DL models in our study, we evaluated the performance of DL models on an independent external data set. We observed an increase in performance when the DL model trained on 200 000 synthetic images was tested on the external data set, compared with the cpRNFL baseline model and the glaucoma detection model trained on real images. A possible explanation for the improvement in performance when applied on an external data set could be that extraction of the intrinsic feature space by a GAN followed by usage of feature space representations for task-specific training improves abstraction of generalizable latent space glaucomatous features for training a DL model. However, further experiments are needed to better evaluate this in a more rigorous fashion.

Limitations

Two separate GAN models for normal and glaucoma images were developed, and future studies could involve the use of conditional GAN-based models to produce synthetic images for specific severities and normal eyes in a single GAN model. Because GANs learn a latent space manifold, focal changes in the development of glaucoma may be difficult to model. Although models were evaluated on both internal and independent external test sets, the number of test images is relatively small, and studies on larger data sets, with a particular emphasis on nonobvious confounding cases, would be useful. Only circumpapillary images at the optic nerve head from OCTs (Cirrus) were used. While the approach used in this study could also work with data from other OCTs and at the macula in glaucoma, this was not evaluated, nor were the effects of combining data from different OCTs. In addition, it is currently difficult to generate 3-dimensional volumes based on a GAN approach, which is why we included circumpapillary OCT scans rather than volumetric optic nerve head data. Synthetic images may also contain intrinsic embedded characteristics that are not visually perceivable but may bias discriminatory performance, and such effects may need to be evaluated in studies combining both real and synthetic data for model development.

Conclusions

Evaluations of the synthetic circumpapillary OCT images by clinical experts and the glaucoma detection models developed with these images suggest that GANs can generate realistic synthetic images that can be used for training deep networks. Synthetic images generated with GANs could potentially facilitate privacy-agnostic data sharing.

eTable 1. Summary of the Study Participants

eTable 2. Summary of Data used for Training Deep Learning Glaucoma Detection Models

eTable 3. Summary of Search Terms

eFigure 1. Workflow of the study

eFigure 2. Real and synthetic circumpapillary OCT images of normal eyes

eFigure 3. Distribution of the extracted circumpapillary RNFL thicknesses from the (a) real normal and real glaucoma images used for GAN training, and (b) synthetic 100,000 normal and synthetic 100,000 glaucoma images generated from the normal and glaucoma GAN models respectively

eFigure 4. Class Activation Maps of the glaucoma detection models

References

- 1.Weinreb RN, Aung T, Medeiros FA. The pathophysiology and treatment of glaucoma: a review. JAMA. 2014;311(18):1901-1911. doi: 10.1001/jama.2014.3192 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Budenz DL, Barton K, Whiteside-de Vos J, et al. ; Tema Eye Survey Study Group . Prevalence of glaucoma in an urban West African population: the Tema Eye Survey. JAMA Ophthalmol. 2013;131(5):651-658. doi: 10.1001/jamaophthalmol.2013.1686 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Tham YC, Li X, Wong TY, Quigley HA, Aung T, Cheng CY. Global prevalence of glaucoma and projections of glaucoma burden through 2040: a systematic review and meta-analysis. Ophthalmology. 2014;121(11):2081-2090. doi: 10.1016/j.ophtha.2014.05.013 [DOI] [PubMed] [Google Scholar]

- 4.Ting DSW, Peng L, Varadarajan AV, et al. Deep learning in ophthalmology: The technical and clinical considerations. Prog Retin Eye Res. 2019;72:100759. doi: 10.1016/j.preteyeres.2019.04.003 [DOI] [PubMed] [Google Scholar]

- 5.Gulshan V, Peng L, Coram M, et al. Development and validation of a deep learning algorithm for detection of diabetic retinopathy in retinal fundus photographs. JAMA. 2016;316(22):2402-2410. doi: 10.1001/jama.2016.17216 [DOI] [PubMed] [Google Scholar]

- 6.Bellemo V, Lim ZW, Lim G, et al. Artificial intelligence using deep learning to screen for referable and vision-threatening diabetic retinopathy in Africa: a clinical validation study. Lancet Digit Health. 2019;1(1):e35-e44. doi: 10.1016/S2589-7500(19)30004-4 [DOI] [PubMed] [Google Scholar]

- 7.Ran AR, Tham CC, Chan PP, et al. Deep learning in glaucoma with optical coherence tomography: a review. Eye (Lond). 2021;35(1):188-201. doi: 10.1038/s41433-020-01191-5 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Ting DS, Cheung GC, Wong TY. Diabetic retinopathy: global prevalence, major risk factors, screening practices and public health challenges: a review. Clin Exp Ophthalmol. 2016;44(4):260-277. doi: 10.1111/ceo.12696 [DOI] [PubMed] [Google Scholar]

- 9.Dong L, Yang Q, Zhang RH, Wei WB. Artificial intelligence for the detection of age-related macular degeneration in color fundus photographs: a systematic review and meta-analysis. EClinicalMedicine. 2021;35:100875. doi: 10.1016/j.eclinm.2021.100875 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.Yi X, Walia E, Babyn P. Generative adversarial network in medical imaging: a review. Med Image Anal. 2019;58:101552. doi: 10.1016/j.media.2019.101552 [DOI] [PubMed] [Google Scholar]

- 11.Heisler M, Bhalla M, Lo J, et al. Semi-supervised deep learning based 3D analysis of the peripapillary region. Biomed Opt Express. 2020;11(7):3843-3856. doi: 10.1364/BOE.392648 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Yildiz E, Arslan AT, Yıldız Taş A, et al. Generative adversarial network based automatic segmentation of corneal subbasal nerves on in vivo confocal microscopy images. Transl Vis Sci Technol. 2021;10(6):33-33. doi: 10.1167/tvst.10.6.33 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Wang S, Yu L, Yang X, Fu CW, Heng PA. Patch-based output space adversarial learning for joint optic disc and cup segmentation. IEEE Trans Med Imaging. 2019;38(11):2485-2495. doi: 10.1109/TMI.2019.2899910 [DOI] [PubMed] [Google Scholar]

- 14.Park KB, Choi SH, Lee JY. M-GAN: retinal blood vessel segmentation by balancing losses through stacked deep fully convolutional networks. IEEE Access. 2020;8:146308-146322. doi: 10.1109/ACCESS.2020.3015108 [DOI] [Google Scholar]

- 15.Burlina PM, Joshi N, Pacheco KD, Liu TYA, Bressler NM. Assessment of deep generative models for high-resolution synthetic retinal image generation of age-related macular degeneration. JAMA Ophthalmol. 2019;137(3):258-264. doi: 10.1001/jamaophthalmol.2018.6156 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16.Zheng C, Xie X, Zhou K, et al. Assessment of generative adversarial networks model for synthetic optical coherence tomography images of retinal disorders. Transl Vis Sci Technol. 2020;9(2):29-29. doi: 10.1167/tvst.9.2.29 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Zheng C, Bian F, Li L, et al. Assessment of generative adversarial networks for synthetic anterior segment optical coherence tomography images in closed-angle detection. Transl Vis Sci Technol. 2021;10(4):34. doi: 10.1167/tvst.10.4.34

- 18.Diaz-Pinto A, Colomer A, Naranjo V, Morales S, Xu Y, Frangi AF. Retinal image synthesis and semi-supervised learning for glaucoma assessment. IEEE Trans Med Imaging. 2019;38(9):2211-2218. doi: 10.1109/TMI.2019.2903434

- 19.Abdelmotaal H, Abdou AA, Omar AF, El-Sebaity DM, Abdelazeem K. Pix2pix conditional generative adversarial networks for scheimpflug camera color-coded corneal tomography image generation. Transl Vis Sci Technol. 2021;10(7):21. doi: 10.1167/tvst.10.7.21

- 20.Tavakkoli A, Kamran SA, Hossain KF, Zuckerbrod SL. A novel deep learning conditional generative adversarial network for producing angiography images from retinal fundus photographs. Sci Rep. 2020;10(1):21580. doi: 10.1038/s41598-020-78696-2

- 21.Zhao H, Li H, Maurer-Stroh S, Cheng L. Synthesizing retinal and neuronal images with generative adversarial nets. Med Image Anal. 2018;49:14-26. doi: 10.1016/j.media.2018.07.001

- 22.Costa P, Galdran A, Meyer MI, et al. End-to-end adversarial retinal image synthesis. IEEE Trans Med Imaging. 2018;37(3):781-791. doi: 10.1109/TMI.2017.2759102

- 23.Cheong H, Devalla SK, Pham TH, et al. DeshadowGAN: a deep learning approach to remove shadows from optical coherence tomography images. Transl Vis Sci Technol. 2020;9(2):23. doi: 10.1167/tvst.9.2.23

- 24.Huang Y, Lu Z, Shao Z, et al. Simultaneous denoising and super-resolution of optical coherence tomography images based on generative adversarial network. Opt Express. 2019;27(9):12289-12307. doi: 10.1364/OE.27.012289

- 25.Yoo TK, Choi JY, Kim HK. CycleGAN-based deep learning technique for artifact reduction in fundus photography. Graefes Arch Clin Exp Ophthalmol. 2020;258(8):1631-1637. doi: 10.1007/s00417-020-04709-5

- 26.Lazaridis G, Lorenzi M, Mohamed-Noriega J, et al; United Kingdom Glaucoma Treatment Study Investigators. OCT signal enhancement with deep learning. Ophthalmol Glaucoma. 2021;4(3):295-304. doi: 10.1016/j.ogla.2020.10.008

- 27.You A, Kim JK, Ryu IH, Yoo TK. Application of generative adversarial networks (GAN) for ophthalmology image domains: a survey. Eye Vis (Lond). 2022;9(1):6. doi: 10.1186/s40662-022-00277-3

- 28.Chen JS, Coyner AS, Chan RVP, et al. Deepfakes in ophthalmology: applications and realism of synthetic retinal images from generative adversarial networks. Ophthalmol Sci. 2021;1(4). doi: 10.1016/j.xops.2021.100079

- 29.Zhou Y, Wang B, He X, Cui S, Shao L. DR-GAN: conditional generative adversarial network for fine-grained lesion synthesis on diabetic retinopathy images. IEEE J Biomed Health Inform. 2022;26(1):56-66. doi: 10.1109/JBHI.2020.3045475

- 30.He X, Fang L, Rabbani H, Chen X, Liu Z. Retinal optical coherence tomography image classification with label smoothing generative adversarial network. Neurocomputing. 2020;405:37-47. doi: 10.1016/j.neucom.2020.04.044

- 31.Medeiros FA, Zangwill LM, Bowd C, Vessani RM, Susanna R Jr, Weinreb RN. Evaluation of retinal nerve fiber layer, optic nerve head, and macular thickness measurements for glaucoma detection using optical coherence tomography. Am J Ophthalmol. 2005;139(1):44-55. doi: 10.1016/j.ajo.2004.08.069

- 32.Wong D, Chua J, Baskaran M, et al. Factors affecting the diagnostic performance of circumpapillary retinal nerve fibre layer measurement in glaucoma. Br J Ophthalmol. 2021;105(3):397-402. doi: 10.1136/bjophthalmol-2020-315985

- 33.Chua J, Tan B, Ke M, et al. Diagnostic ability of individual macular layers by spectral-domain OCT in different stages of glaucoma. Ophthalmol Glaucoma. 2020;3(5):314-326. doi: 10.1016/j.ogla.2020.04.003

- 34.Lavanya R, Jeganathan VSE, Zheng Y, et al. Methodology of the Singapore Indian Chinese Cohort (SICC) eye study: quantifying ethnic variations in the epidemiology of eye diseases in Asians. Ophthalmic Epidemiol. 2009;16(6):325-336. doi: 10.3109/09286580903144738

- 35.World Medical Association. World Medical Association Declaration of Helsinki: ethical principles for medical research involving human subjects. JAMA. 2013;310(20):2191-2194. doi: 10.1001/jama.2013.281053

- 36.Karras T, Aila T, Laine S, Lehtinen J. Progressive growing of GANs for improved quality, stability, and variation. arXiv. Preprint posted online October 27, 2017. doi: 10.48550/arXiv.1710.10196

- 37.Simonyan K, Zisserman A. Very deep convolutional networks for large-scale image recognition. arXiv. Preprint posted online September 4, 2014. doi: 10.48550/arXiv.1409.1556

- 38.Ronneberger O, Fischer P, Brox T. U-Net: convolutional networks for biomedical image segmentation. Medical Image Computing and Computer-Assisted Intervention–MICCAI 2015. Springer; 2015. doi: 10.1007/978-3-319-24574-4_28

- 39.Heusel M, Ramsauer H, Unterthiner T, Nessler B, Hochreiter S. GANs trained by a two time-scale update rule converge to a local Nash equilibrium. Proceedings of the 31st International Conference on Neural Information Processing Systems; 2017; Long Beach, California.

- 40.Selvaraju RR, Cogswell M, Das A, Vedantam R, Parikh D, Batra D. Grad-CAM: visual explanations from deep networks via gradient-based localization. Paper presented at: 2017 IEEE International Conference on Computer Vision (ICCV); October 22-29, 2017.

- 41.DeLong ER, DeLong DM, Clarke-Pearson DL. Comparing the areas under two or more correlated receiver operating characteristic curves: a nonparametric approach. Biometrics. 1988;44(3):837-845. doi: 10.2307/2531595

- 42.Bowd C, Weinreb RN, Williams JM, Zangwill LM. The retinal nerve fiber layer thickness in ocular hypertensive, normal, and glaucomatous eyes with optical coherence tomography. Arch Ophthalmol. 2000;118(1):22-26. doi: 10.1001/archopht.118.1.22

- 43.Burlina P, Paul W, Mathew P, Joshi N, Pacheco KD, Bressler NM. Low-shot deep learning of diabetic retinopathy with potential applications to address artificial intelligence bias in retinal diagnostics and rare ophthalmic diseases. JAMA Ophthalmol. 2020;138(10):1070-1077. doi: 10.1001/jamaophthalmol.2020.3269

- 44.Leung CK, Cheung CY, Weinreb RN, et al. Evaluation of retinal nerve fiber layer progression in glaucoma: a study on optical coherence tomography guided progression analysis. Invest Ophthalmol Vis Sci. 2010;51(1):217-222. doi: 10.1167/iovs.09-3468

Associated Data

Supplementary Materials

eTable 1. Summary of the Study Participants

eTable 2. Summary of Data Used for Training Deep Learning Glaucoma Detection Models

eTable 3. Summary of Search Terms

eFigure 1. Workflow of the study

eFigure 2. Real and synthetic circumpapillary OCT images of normal eyes

eFigure 3. Distribution of the extracted circumpapillary RNFL thicknesses from (a) the real normal and real glaucoma images used for GAN training, and (b) the 100,000 synthetic normal and 100,000 synthetic glaucoma images generated from the normal and glaucoma GAN models, respectively

eFigure 4. Class Activation Maps of the glaucoma detection models