Abstract

In 2020, the Medical Council of Canada created a task force to make recommendations on the modernization of its practices for granting licensure to medical trainees. This task force solicited papers on this topic from subject matter experts. As outlined within this Concept Paper, our proposal would shift licensure away from the traditional focus on high-stakes summative exams and toward a model that integrates training, clinical practice, and reflection. Specifically, we propose a model of graduated licensure with three stages: a trainee license for trainees who have demonstrated adequate medical knowledge to begin training as a closely supervised resident; a transition to practice license for trainees who have compiled a reflective educational portfolio demonstrating the clinical competence required to begin independent practice with limitations and support; and a fully independent license for unsupervised practice for attendings who have demonstrated competence through a reflective portfolio of clinical analytics. This proposal was reviewed by a diverse group of 30 trainees, practitioners, and administrators in medical education. Their feedback was analyzed and summarized to provide an overview of the likely reception that this proposal would receive from the medical education community.

Résumé (English translation)

In 2020, the Medical Council of Canada created a working group tasked with making recommendations on the modernization of its practices for granting licensure to medical trainees. To this end, the working group solicited contributions from authors with expertise in the subject. In this concept article, we propose reorienting the traditional approach, centred on summative assessment through high-stakes examinations, toward the integration of training, clinical practice, and reflection. More specifically, we propose a three-stage model of graduated licensure: a license for trainees who have demonstrated that they possess the knowledge necessary to begin their training as a closely supervised resident; a transitional license for trainees with a reflective learning portfolio that demonstrates the clinical competence required to begin independent practice with support and certain limitations; and a license permitting fully independent, unsupervised practice for those whose reflective portfolio demonstrates competence in clinical analysis. This proposal was reviewed by a diverse group of 30 trainees, practitioners, and administrators in medical education. Their comments were analyzed and summarized to give an idea of the reception the proposal would be likely to receive from the medical education community.

Introduction

Increasing amounts of educational and clinical data are collected on medical learners and practitioners. Within the context of competency-based assessment systems, our institutions collect frequent low-stakes, workplace-based assessments of our trainees.1–3 At the same time, the proliferation of electronic health records has made data related to clinical performance readily available.4 Historically, medical licensing authorities have struggled to obtain relevant data on the trainees and clinicians that they oversee. The greater challenge now is to collect, aggregate, analyze, and visualize educational and clinical data in a way that is meaningful to clinicians and to these bodies, and that supports the development of our trainees and clinicians.2

Overlaying this challenge is the increasingly sophisticated evidence base supporting effective learning and assessment strategies. Traditionally, licensing bodies relied on intermittent, high-stakes examinations and the tracking of attendance at educational events to grant and maintain licensure.5,6 Unfortunately, these are not effective strategies for ensuring the competence or supporting the development of our trainees and clinicians.6,7 Strategies better aligned with modern educational theory would present assessment and/or clinical practice data to our trainees and clinicians in a way that supports reflection, self-assessment, learning, and quality improvement.2

Within the context of these challenges, the Medical Council of Canada (MCC) created a task force to provide recommendations on the modernization of its licensure practices. This Concept Paper was submitted as part of the task force’s consultation with subject matter experts. Within it, we review key concepts that we feel should be considered as Canadian medical licensure is reimagined, outline the challenges inherent in the historical paradigm, propose a reimagined system of graduated licensure, and detail feedback on this proposal that we obtained from a diverse group of educators and trainees.

Key concepts

Before presenting our proposed solution, we first define some key concepts in clinical practice and medical education. These concepts form the basis for our proposal for the future of medical licensure in Canada.

Audit and feedback

To maintain competence throughout their careers, physicians need a mechanism to identify perceived and unperceived learning needs. Audit and feedback techniques are used for this purpose in many jurisdictions and may be required of practicing physicians in the future. For instance, some forecast that the College of Physicians and Surgeons of Alberta will mandate audit and feedback for maintenance of licensure by 2024.8 Similarly, the Royal College of Physicians and Surgeons of Canada has announced an initiative to encourage all members to maintain their competence via a continuous quality improvement (CQI) format that would inevitably involve some form of audit and feedback process.9

Audit and feedback techniques summarize clinical performance over a specified period to identify how a physician (or a group of physicians) can improve the quality of the care that they provide to their patients. Ivers et al. summarized the most recent literature around audit and feedback in 2012, finding that these processes often lead to “small but potentially important improvements in professional practice.”10 More recent evidence suggests that audit and feedback processes are effective when systems are developed to further potentiate change in light of their results.11,12 Researchers exploring an audit and feedback program in Calgary found that it prompted physicians to question the data and reflect on their practice. This led to discussions around how to enact change in clinical practice.11 The Calgary Audit and Feedback Framework has been developed to foster feedback for physicians in a group environment.13

Indeed, audit and feedback techniques are increasingly being viewed as a foundational concept within the Canadian continuous professional development (CPD) space. The Future of Medical Education in Canada’s report from April 1, 2019 states that physicians will be expected to participate in a “continuous cycle of practice improvement that is supported by understandable, relevant, and trusted individual or aggregate practice data with facilitated feedback for the benefit of patients.”14 However, this has not yet come to fruition in most jurisdictions.

Moving forward, stronger links will be needed between the educational practices espoused by the CFPC Triple C15,16 and RCPSC Competence By Design17 programs and the continuous quality improvement processes that are beginning to be implemented within CPD. It is likely that tools derived from the competency-based assessment literature will inform the effective provision of feedback to trainees participating in audit and feedback processes18 and that coaching discussions will be richer with the benefit of real clinical data.

Educational dashboards

The field of learning analytics analyzes and presents data about learners with the goal of improving education.19 These analytics can be presented in educational dashboards that help learners to reflect on their performance20 and stakeholders to gain insight on their trainees and educational programs.20–23 Such dashboards have recently been described within undergraduate24 and postgraduate20–23 medical education and are becoming more relevant as we collect increasing amounts of data about our learners.21,25 Preclinically, data about learners’ norm- and criterion-referenced performance on assignments, written examinations, and clinical examinations can be visualized to support reflection, coaching, and goal-setting.26 Clinically, competency based medical education programs use dashboards to highlight learning needs, visualize learning trajectories, and facilitate the review of large and complex assessment datasets.1,2,20–23 Additional insights can be gained from competence-based assessment data by analyzing and visualizing faculty member, rotation, program, and institution-based metrics.2,22,23

Clinical dashboards

With the advent of electronic health records (EHRs), there has been an explosion in the number of available data points that are collected regarding clinical care and performance.4 Clinical dashboards are being increasingly utilized to help visualize this data.27,28 Depending on the data and IT infrastructure that exists, the type of data and the granularity of information provided to physicians regarding their metrics can vary dramatically between hospitals and health care systems. Ideally, clinicians would receive real time, clinically relevant, evidence-based data at the individual level that is contextualized with either peer comparators or achievable benchmarks.29

There are several challenges with clinical dashboards. Firstly, encouraging physicians to engage meaningfully with them can be challenging. It is important to ensure that they receive data that they believe is meaningful and clinically relevant.26 Historically, physicians were provided with metrics that were easily measurable, even though the presented metrics may not have been clinically important and could be misleading. This can lead to disengagement and mistrust that can be mitigated by integrating a process that allows physicians to question the validity of the data and understand the comparator data.13 Even when physicians trust the data they are presented, they may be unsure of how to enact change in their clinical practice.11

Despite these challenges, the barriers to clinical dashboards are surmountable. Socializing residents during their training into the process of interacting with their clinical data, reflecting on it, and enacting practice change is likely to increase the chances that they will continue to use such data for continuous practice improvement as independent practitioners.

A need for change: integrating educational and clinical data within licensure

Until recently, physicians seeking independent licensure within the Canadian medical education system generally completed three examinations. The MCCQE1 is a standardized knowledge examination that is written at the end of medical school. The MCCQE2 was a clinical skills examination, generally taken during the first two years of residency training, that was recently discontinued by the Medical Council of Canada.30 Lastly, prior to independent licensure, candidates write a specialty-specific certification examination overseen by the College of Family Physicians of Canada or the Royal College of Physicians and Surgeons of Canada. Having completed these examinations successfully, physicians are recognized as independent practitioners by provincial medical regulatory authorities and maintain their license by complying with a maintenance of competence program.

Box 1. Historical path to medical licensure in Canada

MCCQE Part 1 - knowledge test

+MCCQE Part 2 - clinical skills

+RCPSC or CCFP certification examination

License and mandate to practice

Maintenance of Competence mechanisms (e.g. CME credits, assessment-based)

Change has become more urgent with the formal discontinuation of the MCCQE part 2 examination. However, there are numerous reasons to reimagine this system.

Medical school quality and the relevance of the MCCQE Part 1 examination to residency training

Historically, the MCCQE part 1 examination played a key role in assessing an individual trainee’s readiness for practice as an intern. While there may still be a need to ensure a base level of knowledge for trainees at the end of medical school, it is important to acknowledge the incredibly high pass rate for trainees prepared within Canadian medical schools31 and the high accreditation standards to which these institutions are held.32 Given that the examination is taken at a point in time that is years away from independent practice and does not correlate with clinical performance in residency,33 it is unclear what value this provides to medical training. Notably, the focus of medical schools on preparing senior students for this examination diverts the resources of both trainees and institutions that could be directed to other tasks.

The historical context and role of the MCCQE Part 2 has changed

The historical context under which this program was designed has changed. The MCCQE part 2 was introduced in 1992 by the MCC at the request of the provincial medical licensing authorities to assess the basic clinical skills of physicians who were entering practice.34 At this time, trainees performed a rotating internship and had to pass the examination before receiving a general practice license from their medical regulatory authority. With the development of family medicine as a specialty and phasing out of general practice licenses by medical regulatory authorities, the successful completion of the MCCQE part 2 no longer conveys independent practice privileges and is increasingly seen as a relic of another time.

The lack of alignment between the MCCQE Part 1 and 2 with independent practice

Many medical trainees study areas of medicine that are not well represented by the clinical content examined by the MCCQE (e.g. pathology, pediatrics, and genetics). In particular, the MCCQE Part 2 was written after a year of training in a residency program that may not have provided trainees with learning experiences relevant to its content. This required residents to divert their attention to the study of areas of medicine that would not play a role in either their training or their eventual practice. Notably, this criticism has been levelled at the examination for as long as it has existed.35

The structural bias inherent in standardized examinations

There is a significant body of evidence which suggests that standardized examinations contribute to systemic bias, disadvantaging physicians from marginalized groups or underrepresented minorities.36 While the MCCQE part 1 and 2 have not generally been used for competitive purposes, they may still impact the progression of competent trainees through their training.

Maintenance of Competence programs do not integrate data that could inform practice

Current Maintenance of Competence programs do not integrate clinical data or practice analytics that could be used to inform practice enhancement. Electronic health record data on practice patterns and outcomes are not used to inform the development of individualized learning plans. Ideally, ongoing licensing requirements should support the enhancement or development of skills that are relevant to an individual physician’s practice.

The reimagined state: integrated licensure portfolios with facilitated reflection

Recognizing the limitations of the current system, our reimagined state attempts to best align licensure practices with the reality of our trainees. We have designed our proposed licensure system in keeping with three key principles:

Principle #1: Better integration between residency training and licensure practices

Medical school is no longer the terminal checkpoint for practice. In most jurisdictions within Canada, the general license no longer exists. Instead, we have residency and subspecialty training. Consequently, the need to have the MCCQE take place at the end of medical school and far from independent clinical practice makes little sense. To use an analogy, we essentially have a driving test without graduated licensure. There is a real opportunity to link licensure to competence assessed via educational assessments and clinical practice metrics that are more relevant to independent practice.

Principle #2: Better integration between reflective practice and licensure during training

In the current state, there are limited links between licensure and educational data. If we expect independent practitioners to review their performance data, reflect upon it, and make changes in their clinical practice, we should begin this process during training with educational data. Indeed, the College of Family Physicians of Canada (CFPC) has incorporated educational practice improvement into its Field Notes assessment program,37 which requires trainees to regularly review their assessments with their academic supervisors. More recently, all 40 of the current Royal College of Physicians and Surgeons of Canada (RCPSC) Competence by Design programs have built in practices that more firmly link educational reflection to practice.38 While this is already happening to varying degrees within these programs, this reflection is not tied to licensure.

Principle #3: Better integration between reflective practice and licensure during clinical practice

While still in its nascent stage in many Canadian jurisdictions, clinical practice review is increasingly de rigueur. There is an opportunity for the MCC to accelerate this positive change by better linking clinical practice improvement to licensure. Early in training, linking clinical data to individual learners is problematic because of the interdependence between supervisor and trainee.39,40 Near the end of training (e.g. during phases of training focused on transitioning learners to practice), however, it should be possible to use licensing procedures to lay the groundwork for examining one's own performance metrics and clinical dashboards/report cards and for determining self-improvement plans based on them.

By inculcating a culture of self-reflection and facilitated self-regulated learning using practice driven data during all phases of residency training, we will ease the transition from residency to independent practice and increase comfort with this process. Capitalizing on the fact that residents are used to receiving frequent feedback and enacting action plans for future learning objectives, we feel that integrating this process into MCC licensure would help new staff for several reasons.

Firstly, as mentioned above, physicians are going to be increasingly expected to participate in some form of continuous practice improvement, whether through Section 3 credits from the Royal College or as outlined in the FMEC report.14 Secondly, incorporating data-driven practice change into residency will promote the notion that self-reflection with data is a key element of a “growth mindset”41,42 rather than a source of data that will be used punitively to “evaluate” their performance as clinicians. Finally, creating a culture amongst all physicians in which clinical practice review is an expectation of a learning health care system will encourage the healthy discussions and sharing of practice improvement ideas identified in the study by Cooke et al.11

Our proposal for MCC Licensure

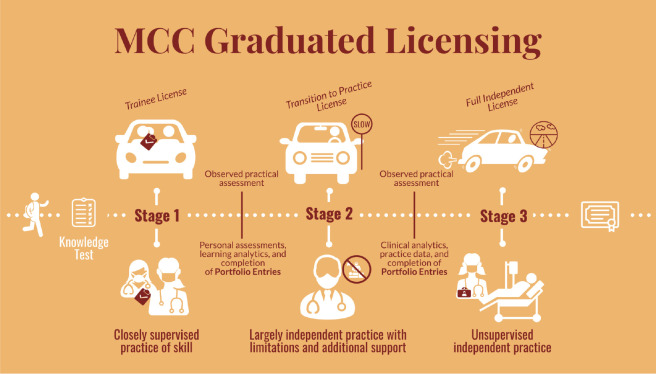

In keeping with the above principles, we propose a reimagined state wherein the MCC uses a “graduated licensing” approach with three steps (Figure 1). Broadly speaking, our reimagined state aligns with the current movement towards the incorporation of workplace-based observations, clinical data, and reflection into licensing practices.

Figure 1.

Outline of our proposed path to MCC licensure described using a ‘driver’s license’ analogy

Stage 0 (no graduated license)

Following the successful completion of a knowledge test at the end of medical school training, trainees would be granted a ‘trainee license.’ This examination would be the equivalent of taking the “paper test” for a driving license. It would ensure that trainees have adequate baseline knowledge required to practice medicine. To decrease the harm caused by the retention of a high stakes examination,6 it should allow for multiple attempts throughout the final phase of medical school training, acknowledging the formative nature of the graduated licensing. Once trainees pass this test, they would progress to stage 1.

Graduated license stage 1

Individuals at this stage will enter a transitional stage of graduated licensing that would allow regulators to understand their level of training and ensure that their educational license reflects their current level of competency. During this stage (usually within the years where most programs would consider these individuals “junior residents”), assessment data would be regularly compiled and reviewed by the trainee, who would then draft formal learning plans with the support of mentors within their program. Since clinical data for junior residents are strongly influenced by teachers and senior trainees (the interdependence problem),39 educational assessment data would serve as a surrogate marker of competency that could inform trainees’ reflections on their educational progress.

To graduate to stage 2, trainees would generate reflective portfolios attesting to at least three individual learning plans they have developed and completed based on their educational data. Developmentally, this stage aligns with work that is being done with existing educational portfolios but adds on a layer of integration and sophistication through formalized reflection. Advanced programs may also be able to provide clinical data for reflection through the tracking of some resident-sensitive clinical metrics such as those previously described by Schumacher and colleagues.43

These reflections could be compiled into a trainee portfolio that would also contain an attestation from a local mentor (e.g. program director, associate program director, mentor, or academic coach/advisor) regarding the trainee’s ability to engage in reflection and guided self-reflection. This mentor would review and provide feedback on each portfolio element as it is produced. The mentor then might draft a brief narrative describing the trainee’s ability to turn data into learning plans, confirm the creation of learning plans, and attest to the level of achievement for each learning plan. The review of these educational artifacts by the program’s Competence Committee would incorporate a developmental model to help trainees adjust to using external data for calibrating competence and confidence in a supported and scaffolded environment,44 while still being supported by educators who would provide them external feedback on their own insights.

Graduated license stage 2

Within stage 2, trainees would begin to use real clinical data to assess their practice. Developmentally, this stage would occur near the end of training (e.g., the last 6-12 months) in alignment with the Transition to Practice period of Competence By Design training programs such that senior trainees would be able to review their own practice patterns. The clinical metrics that are used should align closely with what they should expect in their eventual early practice setting. While these metrics may still have shades of the interdependence problem,43 we hope the creation of such an assessment requirement will shift the conversation nationally to ensure that all senior trainees begin to have access to personal practice data analytics and/or feedback about their practice from patients and colleagues. Reviews of clinical metrics would be similar to Quality Assurance audits that are required by some regulators both within Canada and internationally, but could also include more qualitative data points (e.g. chart audits of referral and consultations by a colleague, patient experience surveys or focus groups). Ideally, this stage would extend the end-of-training assessments that have been incorporated into the end of competency-based training programs to help senior trainees to understand how they function within their clinical setting and the greater health system while also contributing to their clinical development. The review of metrics would help trainees calibrate their competence and confidence.44 Facilitated feedback of the clinical metrics (such as the use of the Calgary audit and feedback framework13) by the trainee’s mentor would help them understand the goal of clinical metrics, normalize their reactions to receiving practice-specific data, and develop learning plans that address clinical areas in need of improvement. 
Tools that facilitate the self-reflection process and encourage development of action plans are available and could be used asynchronously by learners.10 The trainee’s mentor would once again review each portfolio element as it is produced, provide feedback, and draft a brief narrative outlining their perspectives on their trainee’s ability to incorporate clinical data into their practice which would contextualize the review of the portfolio by the Competence Committee.

Table 1.

Summary table describing our proposed stages and the points where trainees would enter and exit each stage

| Stage | Entry into this Stage | Exit from this Stage |
|---|---|---|
| Stage 0 | Medical School Training | Completion of a knowledge test (e.g. the MCCQE1) within the final 6 months of medical school training. This examination should allow multiple attempts over this final period of 6-12 months. |
| Stage 1: Translating Educational Data into Action | Successful completion of the MCCQE1 during the final months of medical school training. | Completion of a portfolio that incorporates the analysis of educational data by the learner to develop, complete, and reflect upon a learning plan. See Supplemental Data 1 and 2 for samples of what these documents could look like. The portfolio would be assessed by the training program’s Competence Committee (or equivalent) and should contain: 3 learning plans, with a short reflection on the rationale of the learning plan and its linkage to the educational data analyzed; 3 reflections on the achievement or adjustment of completed learning plans; 1 supervisor narrative (describing the trainee’s ability to turn data into learning plans, confirming the creation of each learning plan, and attesting to the level of achievement for each learning plan). |
| Stage 2: Translating Clinical Data into Action | Completion of reflective educational portfolio +/- milestone assessment per their training program (e.g. PGY4 examination for many RCPSC specialty programs). | Completion of a portfolio that incorporates the analysis of clinical data by the learner to develop, complete, and reflect upon a learning plan. See Supplemental Data 1 and 2 for samples of what these documents could look like. The portfolio would be assessed by the training program’s Competence Committee (or equivalent) and should contain: 2 learning plans, with a short reflection on the rationale of the learning plan and its linkage to the clinical data analyzed; 2 reflections on the achievement or adjustment of completed learning plans; 1 supervisor narrative (describing the trainee’s ability to turn data into learning plans, confirming the creation of each learning plan, and attesting to the level of achievement for each learning plan). |
| Unsupervised practice: Continued maintenance of Clinical Data Analysis and Translation into Action | Completion of reflective clinical portfolio. | This will be governed by the provincial regulators and the maintenance of competence programs from the CFPC and RCPSC and would be beyond the purview of the MCC. |
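For readers who think in terms of data structures, the exit requirements in Table 1 can be expressed as a simple completeness check. The following is only an illustrative sketch: the class name, field names, and functions are hypothetical and are not part of the proposal, and the check counts portfolio elements without judging their quality, which would remain the role of the Competence Committee.

```python
from dataclasses import dataclass

# Minimum portfolio contents per stage, taken from Table 1.
# The key names are hypothetical; the proposal does not define a data model.
REQUIREMENTS = {
    "stage_1": {"learning_plans": 3, "reflections": 3, "supervisor_narratives": 1},
    "stage_2": {"learning_plans": 2, "reflections": 2, "supervisor_narratives": 1},
}


@dataclass
class Portfolio:
    """Counts of the elements a trainee has submitted for one stage."""
    stage: str
    learning_plans: int = 0
    reflections: int = 0
    supervisor_narratives: int = 0


def missing_elements(portfolio: Portfolio) -> dict:
    """Return how many of each element are still needed for this stage."""
    required = REQUIREMENTS[portfolio.stage]
    return {
        name: needed - getattr(portfolio, name)
        for name, needed in required.items()
        if getattr(portfolio, name) < needed
    }


def ready_for_committee_review(portfolio: Portfolio) -> bool:
    """A portfolio is complete when no required element is missing."""
    return not missing_elements(portfolio)


# Example: a Stage 1 trainee who still owes one reflection.
draft = Portfolio(stage="stage_1", learning_plans=3, reflections=2,
                  supervisor_narratives=1)
print(missing_elements(draft))            # {'reflections': 1}
print(ready_for_committee_review(draft))  # False
```

A check of this kind would only confirm that a portfolio is complete enough to be forwarded; the assessment of each learning plan and reflection would still rest with the mentor and the Competence Committee.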

Stakeholder consultation and feedback

The approach to licensure that we have outlined would represent a major shift in the oversight and credentialing of physicians. To gauge how it would be received, we sought reactions from a diverse group of trainees and medical educators. In total, we invited 51 individuals with a focus on ensuring representation in geographic location, gender, and level of training/seniority. We asked participants to watch an embedded video (https://youtu.be/vwNhFbdfrLM) with an explanation of the proposal (~24 minutes in length) then complete a short Google Form requesting their reactions.

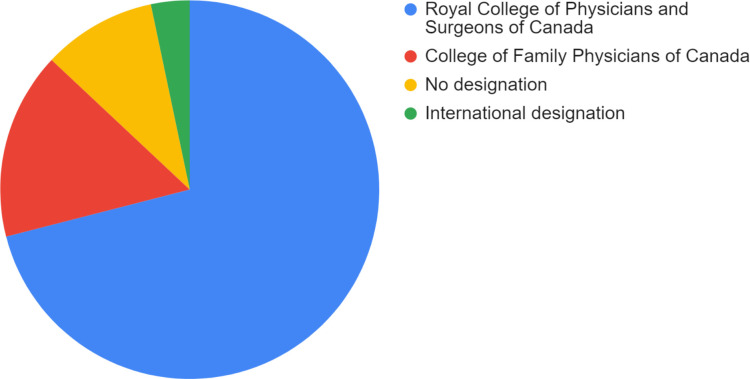

The 51 individuals invited to participate included representatives from all 10 provinces and the United States, a balance of men and women (52.9% female), and a mix of trainees (three medical students and seven residents) and faculty (seven junior, 15 mid-career, and 19 senior). Thirty-one individuals responded (response rate = 60.8%). In accordance with the terms of our institutional review board exemption for this process, we have presented only the aggregate feedback in the results below and Supplemental Data 3. As consultation participants were not required to provide their names, we do not have full demographic data beyond knowing that most hold designations with either the Royal College of Physicians and Surgeons of Canada (71%) or the College of Family Physicians of Canada (16.1%) (Figure 2).
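The invitation and response figures reported above can be checked with a few lines of arithmetic. This is only a verification sketch using the counts stated in the text; the variable names are ours.

```python
# Invitee counts by level of training/seniority, as reported in the text.
invited = {
    "medical students": 3,
    "residents": 7,
    "junior faculty": 7,
    "mid-career faculty": 15,
    "senior faculty": 19,
}
respondents = 31  # number of individuals who responded, as reported

total_invited = sum(invited.values())
response_rate = round(100 * respondents / total_invited, 1)

print(total_invited)   # 51
print(response_rate)   # 60.8
```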

Figure 2.

Pie chart outlining the designations of the consultation participants.

Figure 3.

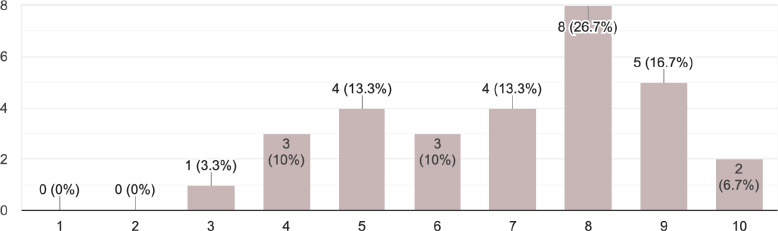

Frequency histogram of the consultation participants’ sentiment towards the proposal, rated from 1 (hate this idea) to 10 (love this idea)

We gauged the sentiment for our proposal with a single question asking participants to rate their excitement about the idea from a level of 1 (hate this idea) to 10 (love this idea). Figure 3 presents our results in a frequency histogram. Half of the respondents (50%) rated the idea between 8 and 10 (mean 7.0, SD 1.9).

The participants also provided qualitative feedback. We conducted a content analysis with a focus on identifying advantages and disadvantages to the proposed approach. Table 2 contains a summary of their feedback. The disadvantages identified describe resource constraints and implementation challenges that should be considered by the MCC if it moves ahead with the implementation of some or all elements of this proposal. Appendix A contains selected quotes exemplifying the response to each of the questions that they were asked.

Table 2.

Summary of the advantages and disadvantages of this approach to medical licensure

| Advantages | Disadvantages |
|---|---|
| Developmentally aligned to help individuals form habits around examining, reflecting, and establishing plans to improve their practice. | Introduces a layer of complexity and new work on top of specialty-based requirements from CFPC/RCPSC. |
| Requirement and demonstration of the use of practice metrics to inform evidence-based practice. | Will require cooperation between multiple important groups (CFPC, RCPSC, AFMC, FMRAC). |
| Demonstration of what high-yield CME looks like / requires. | Requires integration between clinical and educational systems to collect practice data. This will require more resources and training. |
| Demonstrates existing CME processes (e.g. Royal College section MOC 3 credits / CCFP Mainpro 3 credits). | Asks of programs to create a “transition to practice” period where senior trainees can gather their OWN clinical data about their own performance (e.g. faculty have to “lay back” from influencing decisions, etc. to prevent the interdependence problem). |
| Greater alignment with the clinical practice of independent physicians. | There will be an upfront platform development cost for housing and submitting portfolio entries, as well as ongoing costs for maintaining such a platform. |
| Potentially cheaper to implement and oversee than the existing MCCQE2 examination. | Will require extensive trainee and faculty development to ensure these are done well. |
| Reviewing portfolios could be done asynchronously by assessors rather than requiring a physical plant and co-location. | Some trainees and/or faculty may not have the innate capacity to fully engage in a reflective practice required by this program. |
| Could support the transition to nationwide certification and credentialing. | Subspecialty training programs do not fit nicely within this model as they move from one discipline (e.g. internal medicine) to a sub-discipline (e.g. cardiology) without independent practice. |
| | Current proposed model does not reflect the diversity of ways in which international medical graduates (IMGs) with partially validated previous training would enter clinical practice. For primary entry into concurrent residency training, there would be no difference, but if trainees are being slotted into other more senior levels, there may be different routes for licensing equivalency to be completed. This would need customization and further articulation. |

Alignment with future practice

The CPD landscape is moving towards audit and feedback, workplace-based assessment, and practice-based improvement. The proposed changes to licensure would normalize and support reflective practice by tying it to the reflective review of educational and clinical data and the development of relevant learning plans. By connecting licensure to a program that encourages the use of data to generate useful practice-driven change and feedback processes, we hope to see trainees transition into practice more effectively, with habits that support self-regulated learning, foster a growth mindset, and result in the improvement of the broader healthcare system.

Appendix A. Key quotes outlining the feedback received from the proposal consultation

| What are your first impressions of this idea? What aspects do you like? |

| I like the analogy. It aligns with CBD and its goals. It emphasizes movement of the exam earlier in training which a lot of disciplines have not done. It supports “growth mindset” principles. I think it sunsets part 2 in residency when the focus should be on training. Initially I liked the graded model a lot. It inherently makes sense. Additionally it really transitions the way to nationwide certification and credentialing which we really need. FINALLY an initiative to tie in clinical performance with licensing. It's great to begin to encourage reflection on one's own performance and patient metrics before the training wheels fully come off! Using learning and clinical analytics to inform residency progress is fundamental in this day and age. I do not see a role for the MCC in residency education. I [one-hundred percent] agree that MCCQE2 could be replaced with something more aligned with the needs of today in a more practical format that is significantly less expensive and less high stakes. Love the idea of a graduated license but am a little unclear how these transitions can be squeezed into FM. I would be curious on the data of trainees and staff physicians who currently would self-identify as people who actively identify learning deficits or areas where they can improve their competence and how they address this. I like the idea of having specific benchmarks and a sequential, graduated approach - rather than testing the same thing over and over again. The analogy to graduated licensing for new drivers is appropriate - I appreciated the historical perspective on the traditional licensing route - and would love to see a new route that better matches current healthcare systems in Canada. At a system level: it creates efficiency and decreases waste of time and resources used to conduct exams that do not add or change our management. 
I like the idea of having a gradual increase in learner responsibility and the ability to get an early first taste of independent practice while still having supervision and people to turn to during difficult times. I also like the fact that learners will start out with personal analytics and then move on to clinical analytics and learn the nitty gritty gradually, rather than having no direction in terms of how learning needs to be structured. Although I think it would be an improvement on the current MCC process, I worry about it adding extra “hoops” for residents to jump through. More reflective of the needs and progression of a trainee after medical school to individual practice. Continuous assessments, clinical dashboards, and practice data are more data points to more accurately assess physicians as they progress through their training and develop independence. |

| Do you have any concerns about this proposed model? |

| Two and three tiered specialty and subspecialty training does not nicely fit within this model (e.g. cardiology residency and then AFC training in a subspecialty). The duration of that license may become important - as if less than 1 year, a massive infrastructure for the regulators to stand up for a couple of months, with their own costs (maybe offset by a fee - but then may also need to allow trainee to bill to offset that increased fee). My first concern (surgery in particular) is how will the transition license translate into the ability of attendings to bill. Currently to the best of my knowledge, you have to be in the OR and scrubbed for the case to bill. If this remains the case, it functionally won't change anything or won't be able to get off the ground if it is tied directly to attending reimbursement or have a limited roll out based on fields. Most surgical outcomes are based on 90 day readmission rates/infection in the literature, largely driven by US Medicare reimbursement implications. In order to get usable analytics for most of the data in Sx, you would likely need to have the transitional license by the end of PGY 4 at the latest. In fields like ortho[pedics] where surgeons are not retiring and forcing new grads to do the bulk of the call, this system of intergenerational abuse could be easily propagated by the graded responsibility model. Additionally, the call burden could be abused: “you do the case, I am not coming in” would prevent some age-related retirements. This is very field specific. Additionally, for some specialities it may be more difficult to define valid and reliable clinical analytics. Who would be defining what the appropriate analytics or markers are? I worry about the risk of this being too narrowly defined by administrative bodies rather than something that is actually driven by learners and their teachers who understand the nuances of their particular practice environment. 
I am also concerned about the “how to” for this part and think it may require a major culture shift and/or building infrastructure/support in some cases. For some specialities and centres, these are pretty easy to define and the data is readily available. For some hospitals and training sites, this might be harder to come by. I would be curious about how the last stage will be implemented in family medicine, given the shorter time frame, and what those clinical practice metrics would be, but that does not in any way seem insurmountable to me. The biggest barrier that I foresee is getting buy in from staff / evaluators. Despite the support for staff physicians in how to better evaluate learners in the roll out of CBME, there still is a paucity of information that does not appear on written feedback (though it is sometimes verbally communicated with the learner). While many sites and preceptors are really great at providing rich feedback, certain sites or preceptors are challenging to win over / convince to spend the time providing good feedback (or even learning how to do so). I wonder if the people providing the feedback may be the weakest link in this new proposed system. This [proposal] requires FMRAC to come together to put this into practice. Federation of Medical Regulatory Authorities of Canada [FMRAC] does not have a good track record (see national licensure proposals). Is the white paper intended to persuade FMRAC? Perhaps the “license” you are talking about is simply a formalization of what is supposed to be happening in residency programs anyway? Trustworthiness of programs and preceptors to give objective and difficult assessments is a major flaw because they do not - failure to fail would undermine much of your model without guaranteed objective and critical measures in the system. Having MCC resources deployed to help facilitate the data required might be welcomed but it is hard to say. 
Lots of questions about governance, ethics, ownership of data, etc. So my concern is too many license transitions for family medicine but I love the PLP and practice data use with focus on QI that is demonstrated and made a normal expectation in residency because it is totally expected for future practice. The future plans for CPD are definitely in line with this proposal and I think that would be great. The Triple C curriculum does differ across programs and in those that still use block rotations in the final 6 mo of FM residency there may not be much family medicine except for a few half days back and that would likely hamper appropriate data collection. The proposed graduated license approach would potentially work better in versions of Triple C with more consistent longitudinal experiences in FM but this varies from program to program. Additionally, for Family Medicine residents planning to practice rurally, I do wonder how the “junior attending” status will work in areas where there may not be a lot or any other “senior attending” around. I understand from your video that this “junior attending” area may be done as part of the end of residency, in which case, this concern would be a nonissue, but if it extended beyond residency and depending on the flexibility of the model, I think this would have to be considered. Similarly, any family physician planning to go into independent practice (albeit few these days but a possibility), how would this model then be applied in those instances, again if the “junior attending” status extended beyond the formal residency training period. I state all of this while fully recognizing that new-to-practice Family Physicians often do rely on a lot of informal mentorship and relationships in their first few years of practice (and realistically more than a few years..) 
and think a supportive model is welcome to facilitate this transition -- whether that needs to be formalized or not, I am unsure. For the learners to be able to complete their portfolios and choose the indicator metrics, they will need coaching and mentorship support. Hence, faculty development would be necessary for the success of this change. As for stage 3, I suspect many competency-based programs are already using such a quality improvement framework for residents to reflect on their practice as part of their transition to practice stage. It may be challenging in some specialties to define what the metrics are. I am also a bit concerned that this might duplicate work already being done as part of the transition to practice stage. I do see the value in teaching good reflective practice habits, however, and the TTP stage seems like the right time to do that. I am not convinced that portfolio entries and practice quality improvement reviews are an improvement on just removing the MCC2. Having a core knowledge test at the end of medical school is still important - agree with continuing it. However, would obtaining the “trainee license” be contingent on passing the exam? In some jurisdictions the MD graduate can enter residency without having passed the MCCQE1. The model, as presented, suggests that one would have to pass the exam before being issued the “trainee license”. I would agree with this approach, but then the medical schools would have to have some kind of “remediation” program, to prepare the graduate to pass subsequent attempts at the exam and move on. On the other hand, we are required to make a “terminal” or summative decision about successful completion of the program (med school or residency). There is a huge risk of “failing to fail”. So, one value of the MCC is that it is a body OUTSIDE of the educational system that provides that summative evaluation. 
So, would the MCC have any role in evaluating the evaluations generated for the resident as they move through the residency? We need to keep in mind the original mandate of the MCC, which is to provide an assessment for licensure that is acceptable to all of the Canadian regulators. The Part II examination is imperfect and the timing of it is no longer relevant, however, at least some of the communication and other skills assessed by it would likely be considered relevant by the regulators. So, what process would reassure the regulators that the skills / attitudes that they are concerned about would be evaluated? I'm not sure that leaving it up to the universities to do that evaluation would be deemed sufficient - if it was, the MCCQE2 would not have been created. The idea of portfolios/reflections etc works for those learners who are willing to make use of them. However, the regulators are going to be concerned about those who DON'T. They are likely to be the ones who will get into regulatory trouble when in practice - because they just don't get it. So, again, some kind of external evaluation I think is still likely to be needed to reassure the regulators that someone has been watching/monitoring the learner. I think that implementing any kind of graduated licensing process through 13 provincial and territorial regulators will be close to impossible. I think the bottom line for me has less to do with the ideas themselves, but more that I do not feel the MCC is the right body for this work. This seems like a lot of added work for residents - will their programs provide them with protected time to do this work or will this be done on their own time? I am concerned about the added time/effort/expectations on a group of trainees who already have significantly high level of burnout. What does the special license afford [trainees]? For example, would they be able to bill with a transition to practice license? 
Who is going to fund the supervisors for the “largely independent practice”? Need for system to be able to gather clinical data, possible difficulty with implementing consistently across various training programs, especially those with distributed sites, remote locations, etc. May also be difficult to objectively determine if the reflection in the portfolio is sufficient for progression. |

| What are some advantages to the proposed system? (Feel free to compare it to the current state of MCCQE 1 then MCCQE2) |

| High stakes single day exams that are only available once a year are an archaic and cruel process that have no role in the 21st century. If they are existing, they do need to be done in the way you propose earlier on to prevent dramatic impacts on the lives of learners. It can financially destroy you to do it later. I agree that it is important to include a knowledge test at the end of medical school training for the provisional “G1” license. I like that, compared to the MCCQE2, it has the flexibility to be tailored to each individual specialty and assess competency within that speciality. I like that it is focused more on learning processes rather than outcomes (though I think outcomes are also captured in it and in a more meaningful way with the clinical analytics QI compared to an artificial SP encounter), because the Royal College and CCFP already cover that through tailored knowledge and skill tests at the end of residency. Dr. Chan's comment about equity also really resonated with me and I think that this system would be more equitable than the current MCCQE2 - which is only offered a couple times a year in certain locations and with seemingly rigid policies that surround it. I think having a historical test (part 2) that is not well aligned with the current training programs does really warrant review, so very glad to see this. I also think people can do okay on part 2 and still have a lot of concerns that the residency needs to sort out so it is really great that your proposal is more integrated and reflective of our current reality. Multiple dates of sitting offered for MCCQE1 (I suggest maybe quarterly but I imagine most medical students will just want to complete it at the end of medical school). An approach that embraces programmatic assessment is a good thing - just seems a little timid in going full PA - why? Analytics have great potential but current evidence suggests that connection of EHR data to education is weak. 
Less high stakes, allows evaluation at multiple touch points which will be far more valid, fosters development of skills for lifelong learning; does away with an exam (MCCQE2) that is not relevant in our current system and replaces it with a relevant assessment. The idea that MCC would be doing something that assesses residents for full licensure that is grounded in the real skills they need for lifelong learning and practice is very exciting and ties the PGME and CPD skills appropriately as a “developmental” step from one to the other. This would modernize the role for the MCC and make it relevant and give it a legitimate role rather than what feels now like a competitive one that is disconnected. The “driver's license” comparison is not quite accurate as the driving test evaluator is not the person teaching the learner to drive. Would keeping these functions separate have more credibility/validity? Graduated makes sense! I do not think readiness to practice is a yes/no or on/off switch. I think it is a dovetail/process. |

| What are some potential problems with this proposed system? |

| What happens to those who never pass RC exam? Currently, they can practice in some underserviced areas because they have an independent practice license. The MCC isn't the one issuing any of the licenses. It is all well and good but if say, QC decides to not join in or say BC, what happens then? However this could be a great way to transition out of the colleges to a national licensure. The nice thing about the graded system is that it allows for transition into independence etc. However, when you compare it to other types of graded exams like the USMLE, once you complete the exams and have a medical license, you can practice independently without your board exams or FRCSC/PC/CFPC equivalent. They are desired and often workplace-required but you can financially work without one. The question I have here is why do we not transition that way? With CBME, accreditation, the RC/CFPC exams, and the MCC why do we need all of them? Dovetailing stage 2 with the FRCPC / CCFP exams and managing learner burnout. R2 is an easier time to study for the MCCQE (in the emergency medicine model) than later on in training. Still has single high stakes exams. Is very dependent on questionable data (EHR etc) in terms of its educational utility and reliability. Would need substantive research to show this was sufficient, reliability, validity etc. EHRs tend to be designed in very education-unfriendly ways. So, graduated and heterogeneous and longitudinal? Absolutely. I would be a bit more critical of the current environment and cultures that could and would undermine a model like this. Seems complicated, layered on top of an already huge disruption by CBME. Seems to have increased involvement with regulating colleges which will be costly. Practical deployment of the resources - but honestly this could be an impetus for some major change that is needed. 
There may be challenges securing funding and expertise in departments to implement the IT strategies to report on learning analytics and even practice information to track information needed. Also the issues around ethics, data use etc. with so many jurisdictions seems crazy. Lots of hurdles but still worth exploring. I think a large percent of this model's actual success in improving clinical care and patient care hinges on changing a culture in medicine towards being okay to say “I don't know this,” “I don't feel comfortable with my skills related to X”, “This is an area I have typically struggled with and actually found myself avoiding but now would like to tackle head-on and learn more about”... I.e. moving away from e.g. “ECGs.... ahhhhhhh,” or highlighting what you are good at and not challenging one's self to look at areas of weakness. This also then in turn hinges on evaluation in medical school and residency/fellowship training to not penalize candidates for identifying areas of weakness and then not looking like a rockstar at all times... to put it in simple terms... the issue is complex but I hope you can catch my drift with what I am trying to get across. What level of supervision is needed for the transition of practice for medico-legal responsibility? You will run into problems with the existing feudal system we have to implement this. Too many “masters in medical education”. Too much redundancy. Not enough integration. Too feudal. Start identifying the competencies that are core... Customized and tailored to specialty. Change can be a problem. So getting national buy-in, changing mindsets about the traditional, high stakes licensing exam route, getting medical schools to work together around sharing analytics perhaps (if that becomes necessary). 
Beyond that, I think it makes sense. Not convinced all training locations would easily be able to provide the practice metrics for practice quality review. Given their potentially conflicted role, I'm not sure that med schools and residencies can be relied upon to provide the kind of assessment that would be acceptable to the regulators for the purpose of licensure - given that the focus of the regulators is on public/patient safety. I expect they would still want some kind of external evaluation of the learner THROUGHOUT their undergrad and residency education. This would be consistent with the progression toward an independent “driver's license.” It would also potentially be more valid than a one-off Part II examination. |

Conflicts of Interest

None of the authors have a conflict of interest to declare.

Funding

No funding was received to support the work outlined within this manuscript.
