Abstract

Colour vision represents a vital aspect of perception that ultimately enables a wide variety of species to thrive in the natural world. However, unified methods for constructing chromatic visual stimuli in a laboratory setting are lacking. Here, we present stimulus design methods and an accompanying programming package to efficiently probe the colour space of any species in which the photoreceptor spectral sensitivities are known. Our hardware-agnostic approach incorporates photoreceptor models within the framework of the principle of univariance. This enables experimenters to identify the most effective way to combine multiple light sources to create desired distributions of light, and thus easily construct relevant stimuli for mapping the colour space of an organism. We include methodology to handle uncertainty of photoreceptor spectral sensitivity as well as to optimally reconstruct hyperspectral images given recent hardware advances. Our methods support broad applications in colour vision science and provide a framework for uniform stimulus designs across experimental systems.

This article is part of the theme issue ‘Understanding colour vision: molecular, physiological, neuronal and behavioural studies in arthropods’.

Keywords: Python, colour vision, colour management, colour space, univariance, nonlinear optimization

1. Introduction

From insects to primates, colour vision represents a vital aspect of perception that ultimately enables a wide variety of species to thrive in the natural world. Each animal is equipped with an array of photoreceptors expressing various opsin types and optical filters that define the range of wavelengths the animal is sensitive to. But opsin expression alone does not predict how an animal might ‘see’ colours. The downstream neural circuit mechanisms that process photoreceptor signals are critical in shaping colour perception. Interrogating these neural mechanisms in a laboratory setting necessitates experimentalists to construct and present chromatic visual stimuli that are relevant to the animal in question. Outside of trichromatic primates, for which studies in human perception and psychophysics have led the way, there is a lack of unifying methodology to assay colour vision across species using disparate laboratory visual stimulation systems. Here, we describe standardized methods to create chromatic stimuli, using a minimal set of light sources, that can continuously span a wavelength spectrum and be flexibly applied to photoreceptor systems in various species.

Light, the input to a photoreceptor, comprises two components: wavelength and intensity. Importantly, a photon of light of any wavelength elicits the same response once absorbed by a photoreceptor. This principle of univariance limits a single photoreceptor from distinguishing between wavelength and intensity, as different wavelength–intensity combinations can elicit the same response, rendering single photoreceptors ‘colour-blind’ [1,2]. By combining outputs from different types of photoreceptors in downstream neural circuits, animals can separate wavelength and intensity information to ultimately allow colour discrimination. As a result of univariance, particular wavelength–intensity combinations remain indistinguishable if they produce an equivalent set of photoreceptor responses in an animal. To the human eye, a red–green mixture is perceived as identical to pure yellow, as both of these sources equally activate the three cones. This metamerism is taken advantage of in red–green–blue (RGB) screens, which can display many colours using only three light sources. However, out-of-the-box RGB screens cannot easily be used to investigate colour processing across animals. This is because the softwares that operate them are based on experimentally measured colour matching functions (i.e. ‘matching’ ratios of R/B/G to perceptual colours) that are specific to the set opsins expressed in human cones and the neural processing of their signals in the human brain. Even though such colour matching functions are not available for most animals, it is still possible to leverage fundamental concepts of metamerism to construct chromatic stimuli [3,4]. The use of such methods has been limited, in part because of a lack of a practical framework to apply a wide range of well-established colour theory concepts.

Here, we present a set of algorithms, and accompanying Python software package drEye, for designing chromatic stimuli, that allow the simulation of arbitrary spectra using only a minimal set of light sources. Our framework is founded on established colour theory [1,5,6] and is applicable to any animal for which the photoreceptor spectral sensitivities are known. Our approach allows the reconstruction of a variety of stimuli, including some that are hard to reproduce in a laboratory setting, such as natural images. Importantly, our methods are flexible to various modelling parameters and can account for uncertainties in the spectral sensitivities. Even though we provide mathematical tools to select appropriate light sources, our methods are ultimately agnostic of the hardware used for visual stimulation. For this reason, our method can be used as a colour management tool to control conversion between colour representations of various stimulus devices. We illustrate basic principles as well as examples of our algorithms using the colour systems of mice, bees, humans, fruit flies and zebrafish.

2. Colour theory and colour spaces

As colour scientists, we aim to understand how a given animal processes spectral information and thus perceives colour. A ‘perceptual colour’ space gives an approximation of how physical properties of light are experienced by the viewer. CIE 1931 colour spaces, for instance, are defined mathematical relationships between spectral distributions of light and physiologically perceived colours in human vision [7]. These spaces were derived from psychophysical colour matching experiments [7,8], and are an essential tool when dealing with colour displays, printers and image recording devices. Because the underlying quantitative transformation from the spectral distributions of light to the perceived colours in humans is dependent on both the cone spectral sensitivities and the neural mechanisms that process them, human colour spaces do not transfer to other animals.

Can we approximate the perceptual space of animals using available physiological and/or behavioural information? A perceptual colour space is the result of a series of transformations starting from the stimulus itself. The stimulus can be represented in ‘spectral space’, simply describing the spectral distribution of light. This space, however, is high-dimensional, and therefore difficult to work with. Instead, a lower-dimensional colour space can be constructed, by taking into account the photoreceptor spectral sensitivities of the viewer. A photoreceptor’s spectral sensitivity defines the relative likelihood of photon absorption across wavelengths (e.g. figure 1a–c; electronic supplementary material, figure S1a,b). Weighting a total light power spectrum by the photoreceptor’s spectral sensitivities renders n effective values of a stimulus—with n being the number of photoreceptor types. The n values compose an n-stimulus specification of the objective colour of the light spectrum for an animal, called the photon capture. This results in an n-dimensional receptor-based ‘capture space’. For dichromats such as New World monkeys and mice, this receptor-based space is two-dimensional (figure 1d). For trichromats, such as humans and bees, it is three-dimensional (figure 1e) and for tetrachromats, such as zebrafish and fruit flies, it is four-dimensional (figure 1f).

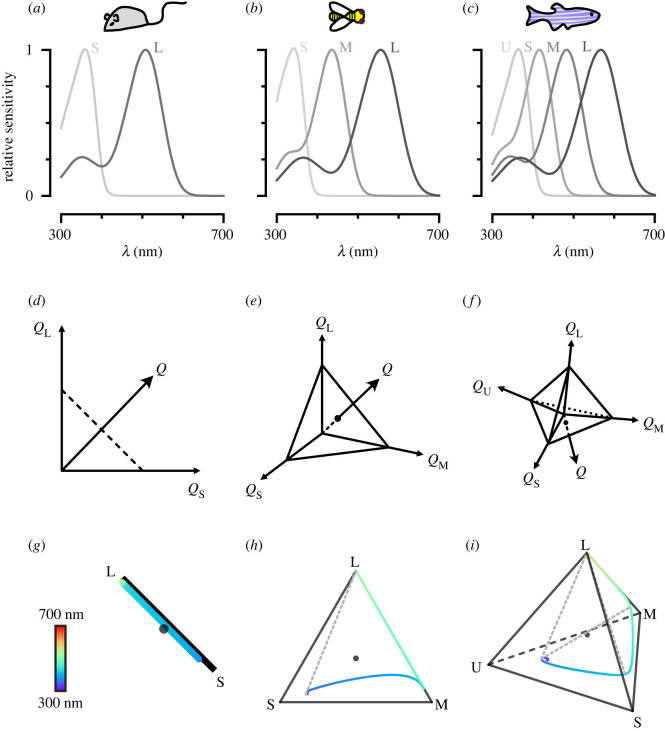

Figure 1.

Colour and chromatic spaces of di-, tri- and tetrachromatic animals. (a–c) Spectral sensitivity functions for the different opsins expressed in the photoreceptors of the mouse, the honeybee and the zebrafish, respectively. Photoreceptors are assigned the labels long (L), medium (M), short (S), ultrashort (U) from the longest to shortest wavelength-sensitive photoreceptors. (d–f) Schematic of receptor-based colour spaces of di-, tri- and tetrachromatic animals, respectively. Q denotes capture. (g–i) Chromatic diagrams for the mouse, the honeybee and the zebrafish, respectively. The coloured line indicates the loci of single wavelengths in the chromatic diagram. The dotted lines indicate hypothetical non-spectral colour lines that connect the points along the single wavelength colour line that maximally excite non-consecutive photoreceptors.

In addition, within this n-dimensional receptor-based capture space, it is often useful to define a hyperplane, where vector points sum up to 1, and where colour is therefore represented independently of intensity. The resulting ‘chromaticity diagram’ is the n − 1 simplex where a point represents the proportional capture of each photoreceptor (figure 1g–i; electronic supplementary material, figure S1c,d). For dichromats, this visualization simplifies to a line, for trichromats it is a triangle, and for tetrachromats, a tetrahedron. The loci of single wavelengths can be mapped onto these spaces as a one-dimensional manifold, as can theoretical ‘non-spectral’ colour lines. Non-spectral colours result from the predominant excitation of photoreceptor pairs that are not adjacent along the single wavelength manifold [9,10].

Although the number of photoreceptors, which determines the dimensionality of the receptor-based space, is not always equal to the effective dimensionality of perceived colours [11], it provides its theoretical maximum. The effective dimensionality depends on the processing of photoreceptor signals in the brain. In fact, neural processing effectively distorts the shape of receptor-based spaces, to eventually produce a perceptual space, where distances do not necessarily match the distances measured in receptor-based spaces.

Receptor-based spaces are, however, a good starting point to mathematically approximate the transformations that the brain applies to photoreceptor inputs. They can in particular serve to design relevant chromatic stimuli to interrogate these transformations experimentally. Throughout this paper, we will use receptor-based colour spaces as the foundation for a unified framework for developing such chromatic stimuli.

3. Reconstructing arbitrary light spectra: a general framework

Probing an animal’s colour vision requires measuring behavioural or physiological responses to relevant chromatic stimuli. Among these are artificial stimuli that are constructed to probe specific aspects of visual processing, such as a set of Gaussian spectral distributions to measure spectral tuning, naturalistic stimuli, such as measured natural reflectances, or randomly drawn spectral stimuli, akin to achromatic noise stimuli. Current methods to display such stimuli often do not take into account the visual system of the animal under examination, and instead focus on spectral space, which is often not as relevant functionally. Instead, we have developed a method that allows the reconstruction of a wide range of chromatic stimuli, with only a limited number of light sources, that can be applied across animals for which spectral sensitivities are known. Here, we describe the core method for light spectra reconstruction, followed by highlights of important considerations regarding the stimulus system and aspects of the fitting procedure. An overview of the method is illustrated in figure 2 for an idealized dichromatic animal chosen for ease of visualization.

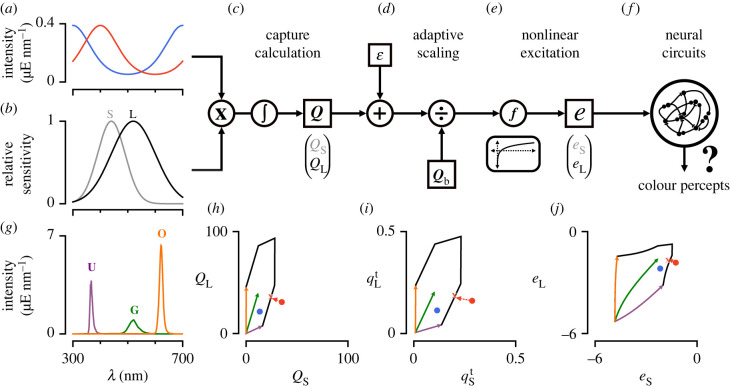

Figure 2.

Schematic of the photoreceptor model. (a) Two example spectral distributions of light constructed artificially. Red: exp(sin (2π(λ − 300 nm)/400 nm)); blue: exp(cos (2π(λ − 300 nm)/400 nm)). (b) Artificial spectral sensitivities constructed using a Gaussian distribution with mean 440 and 520 nm and standard deviation 50 and 80 nm for the shorter (S) and longer (L) wavelength-sensitive photoreceptor, respectively. (c) To calculate capture, the lights in (a) hitting the photoreceptors in (b) are each multiplied by the spectral sensitivities of each photoreceptor and integrated across wavelengths. A small baseline capture value ε can be added to the light-induced capture value. (d) To calculate the relative capture, the absolute capture calculated in (c) is divided by the background capture according to von Kries adaptation. (e) A nonlinear transformation is applied to the relative capture values to obtain photoreceptor excitations. (f) Photoreceptor signals are further processed in downstream circuits to give rise to colour percepts. (g) Example stimulation system consisting of a set of three LED light sources at their maximum intensity (violet, green and orange). (h–j) Capture space, relative capture space and excitation space of photoreceptors in (b). The coloured vectors represent the integration of the LED spectra in (g) with the spectral sensitivities in (b). The colours match the colours of the LEDs in (g). These vectors can be combined arbitrarily up to their maximum LED intensities and define the gamut of the stimulation system (black lines). The red and blue circles are the calculated captures, relative captures and excitation values for the spectra in (a). The red-coloured spectrum is out-of-gamut for the stimulation system defined in (g). Projection of this out-of-gamut spectrum onto the gamut of the stimulation system gives different solutions when done in capture, relative capture, or excitation space (red line). The red X drawn at the edge of the stimulation system’s gamut corresponds to the projection of the red-coloured spectrum onto the gamut in excitation space (i.e. the fit in excitation space).

(a) . Building receptor-based colour spaces

The light-induced photon capture Q elicited by any arbitrary stimulus j is calculated by integrating its spectral distribution Ij(λ) (in units of photon flux: ) with the effective spectral sensitivity Si(λ) of photoreceptor i across wavelengths (figure 2a–c). Even when no photons hit a photoreceptor, it randomly produces dark events [2,12,13]. Mathematically, we can add these dark events as a baseline capture εi to the light-induced capture. By multiplying this sum by the absolute sensitivity of photoreceptor i (Ci), we obtain the total absolute capture :

| 3.1 |

When we calculate the total capture for all n photoreceptor types present in an animal, we get a vector that can be represented as a point in the receptor-based capture space (figure 2c,h):

| 3.2 |

Equations (3.1) and (3.2) assume we know the spectral sensitivity of each photoreceptor and two more quantities: the absolute sensitivity Ci and the baseline capture εi. Unlike the spectral sensitivities of photoreceptors, both Ci and εi are usually unknown (and difficult to estimate) for most model organisms. In many conditions, it is assumed that the photoreceptors adapt to a constant background light according to von Kries adaptation [2,14]. This removes Ci from the equation, and we obtain the relative light-induced capture q(i,j) and baseline capture η(i,b) for background b:

| 3.3 |

For all n photoreceptor types, we obtain a vector (qt = q + η) representing a point in relative receptor-based capture space (figure 2d,i). Note that equation (3.3) is mathematically equivalent to setting Ci to 1/(Q(i,b) + εi). Thus, the relative photon capture is simply a form of multiplicative scaling that has been shown to approximate adaptational mechanisms within isolated photoreceptors [2,15,16].

Finally, if we assume that the light-induced capture is much larger than the baseline capture, we can drop η, so that q = qt. However, we will show in a later example why setting a baseline capture value to a specific low value can have practical uses for designing colour stimuli even when we lack knowledge of the exact biophysical quantity ascribed to it.

Receptor-based photon capture spaces do not take into account the neural transformation applied by the photoreceptors themselves once photons are absorbed to give rise to electrical signals. It can therefore be beneficial to further convert our relative capture values to photoreceptor excitations e by applying a transformation f that approximates the change in the response in photoreceptors (figure 2e,j):

| 3.4 |

Common functions used for animal colour vision models are the identity, the log, or a hyperbolic function [6,17–19]. Applying any of these functions—except the identity function—will change the geometry of the colour space and thus distances measured between points (figure 2h–j). If the transfer function is not known for an animal’s photoreceptors, the identity function (i.e. the linear case) can be used or a transfer function can be reasonably assumed given measured transfer functions in other animals or photoreceptor types. Photoreceptor excitation values are the de facto inputs to the visual nervous system of the organism. We will therefore consider spectral stimuli within photoreceptor excitation spaces as a foundation for our subsequent fitting procedures.

(b) . Fitting procedure

The goal of our method is to enable experimenters to use only a limited set of light sources to create metamers that ‘simulate’ arbitrary light spectra for a given animal or animals. In order to do so, we use a generalized linear model of photoreceptor responses (figure 2a–e) to adjust the intensities of a set of the light sources in order to map intended spectral distributions onto calculated excitations of each photoreceptor type. Using equations (3.1)–(3.4), we can calculate photoreceptor excitation for any desired light stimulus. This results in an n-dimensional vector e that represents the effect of this visual stimulus on the assortment of photoreceptors of the animal. Instead of presenting this particular arbitrary distribution of light, we can use a visual stimulus system composed of a limited set of light sources to approximate this vector e and thus match the responses to our desired light stimulus. In figure 2, this corresponds to finding a coefficient for each light source vector to approximate the coordinates of the visual stimulus points in the two-dimensional excitation space. This operation could theoretically be done in capture or relative capture space. However, given that each transformation distorts the distances between points, it is more appropriate to perform this operation in excitation space, given that it is closer to perceptual space.

Given an animal’s n photoreceptors and m available light sources, we can construct a normalized capture matrix A using equation (3.3):

| 3.5 |

Here, q(i,j) is the relative light-induced photon capture of photoreceptor i given the light source j at an intensity of one unit photon flux. Calculating A requires knowledge of the spectral distribution of each light source, which can be obtained using standard methods in spectrophotometry [20,21]. This will also yield the intensity bounds of each light source. We denote the lower bound intensity vector as ℓ and the upper bound intensity vector as u. The theoretical minimum value for ℓ is 0 as a light source cannot show negative intensities.

To match the desired photoreceptor excitations, we need to find the intensity vector x for the available light sources so that the calculated excitations of the system match the desired photoreceptor excitations e:

| 3.6 |

To find the optimal x, we first consider two points. First, x needs to be constraint by the lower bound ℓ and upper bound u. If an experimenter wants to find the best-fit independent of the intensity range of the stimulation system, we only need to have a non-negativity constraint for x (theoretical minimum of ℓ). Second, an experimenter may want to weight each target photoreceptor excitation differently, if certain photoreceptors are thought to be less involved in colour processing (see e.g. [20]). Thus, we obtain a constrained objective function that minimizes the weighted (w) difference between our desired excitations (e) and possible excitations (f(Ax + η)) subject to the intensity bound constraints ℓ and u:

| 3.7 |

To ensure that we consistently find the same x, we use a deterministic two-step fitting procedure. First, we fit the relative capture values—before applying any nonlinear transformation—using constrained convex optimization algorithms. Next, we initialize x to the value found during linear optimization and use nonlinear least-squares fitting (trust region reflective algorithm) to find an optimal set of intensities that match the desired excitations. This two-step fitting procedure is deterministic and ensures that the nonlinear solution found is the closest to the linear solution, if the nonlinear optimization problem is not convex. If the transformation function is the identity function, the second step is skipped completely as the first step gives the optimal solution. There are a few important points to note regarding this fitting procedure, which we address below.

(c) . Gamut and visual stimulation systems

So far, we have not considered the hardware and the ability of a visual stimulus system to represent colours. In human colour vision, the ‘gamut’ represents the total subset of colours that can be accurately represented by an output device, such as an LED-stimulation system [22]. In order to generalize this concept and use it to design stimulus systems that are adequate for our fitting procedures, we have derived a gamut metric that corresponds to the ‘percentage of (animal) colours reproduced by a stimulation system’. To calculated this metric, we separately consider a ‘perfect’ stimulation system, where the intensity of each unit wavelength along the (animal) visible spectrum can be varied independently, and a ‘real’ stimulation system, composed of a combination of light sources. We derive a measure of size that each system occupies in an animal’s corresponding chromaticity diagram and calculate their ratio (see the electronic supplementary material for details). This mathematical tool can be applied to any set of light sources for which the spectra have been measured, and can be used to optimally select a set of light sources in the context of our fitting procedure.

For illustration purposes, we consider a set of commercially available LEDs (electronic supplementary material, figure S2a–g) which can be combined to create a stimulus system [20]. We vary the composition and number of LEDs of a stimulus system, and calculate the metric for mice, bees, humans, fruit flies and zebrafish. We find that if the number of LEDs is below the number of photoreceptors, each LED added to the system significantly increases the fraction of colours that can be represented (electronic supplementary material, figure S2h–l). Adding more LEDs than this only minimally improves the system (electronic supplementary material, figure S2h–l). Examining the distribution of all n-sized LED stimulus systems (with n being the number of photoreceptor types of the animal) highlights that different animals allow more or less freedom of LED choice (electronic supplementary material, figure S2m–q). Interestingly, LED combinations that would be chosen according to the peak of the sensitivities, a commonly used strategy when designing stimulus systems [23,24], most often are not included in the 10% largest gamuts of all n-LED combinations (electronic supplementary material, figure S2m–q). This is due to the fact that our metric takes into account the shape and overlap of the sensitivities and LEDs, in addition to the peak of the sensitivities and LEDs.

Finally, a desired property of a given stimulus system may be to enable experiments across vastly different intensity regimes. As stimulus intensities are increased, LEDs will reach their maximal intensities (electronic supplementary material, figure S2b) and the gamut of the stimulation system will decrease (electronic supplementary material, figure S2h–l). At higher intensities of a stimulus, including additional LEDs can enable reconstruction of more colours. This gamut metric is therefore a useful tool for assessing the suitability of an existing visual stimulation system or selecting light sources for de novo assembly.

(d) . In- and out-of-gamut fitting

A desired capture value of light can be within the gamut of the stimulation system or out-of-gamut (e.g. figure 2h). If the desired captures of a stimulus set are within the gamut of the stimulus system, applying any excitation transformation or changing the weighting factor w will have no effect on the fitted intensities as an ideal solution exists (figure 2h–j). In this case, the second step of the fitting procedure (i.e. the nonlinear optimization) will be skipped to improve efficiency. Conversely, the intensities found when fitting captures outside the system’s gamut can vary depending on the chosen nonlinearity and weighting factor w (electronic supplementary material, figure S3). In these cases, it is especially important to consider the light conditions during experiments (photopic, mesopic and scotopic). According to various models of photoreceptor noise, the noise of photoreceptors is constant in dark-adapted conditions and becomes proportional to the capture in light-adapted conditions (Weber’s Law) [2,25]. Thus the monotonic transformation function f chosen for each condition should be the identity or the log, respectively, in order to ensure homogeneity of variance. We have found that using a log transformation and adding a small constant baseline capture ε provide a good prediction of photoreceptor responses across intensities for the fruit fly in the dark-adapted state (electronic supplementary material, figure S4). This nonlinearity effectively rectifies the calculated captures for small values that are indistinguishable from dark, and smoothly transitions between a linear and logarithmic regime. Furthermore, this transformation approximates the measured responses of other photoreceptors [26] and prevents a zero division error in dark-adapted or close to dark-adapted conditions when using a log transformation.

(e) . Gamut correction prior to fitting

For humans, many displays use gamut correction algorithms to adjust how out-of-gamut colours are represented [27]. For example, an image that is too intense will be scaled down in overall intensity in order to fit within the gamut. This can also be achieved with our method by fitting the image without any upper intensity bounds and then rescaling the fitted intensities to fit within the gamut of the stimulation system. Alternatively, capture values can be scaled prior to fitting, so that they are within the intensity bounds of the stimulation system (electronic supplementary material, §C for details). For values that are completely outside of the colour gamut—they cannot be reproduced by scaling the intensities—values are usually scaled and clipped in a way to minimize ‘burning’ of the image [27]. An image is burned when it contains uniform blobs of colour that should have more detail. Procedures to minimize burning of an image for humans usually involve preserving relative distances between values along dimensions that are most relevant for colour perception. But these procedures are imperfect and will ultimately distort some of the colours. To generalize such procedures to non-primate animals, we have implemented an algorithm that assesses which capture values are outside a system’s gamut and adjusts the capture values across the whole image to minimize ‘burning-like’ effects by preserving relative distances between target values (electronic supplementary material, §C for details). Our gamut-corrective procedures can be applied before fitting or optimized during fitting using our package. Applying gamut-corrective procedures will ensure that the relative distribution of capture values of the fitted image resembles the distribution of the original image, thus minimizing burning-like effects. Additionally, gamut correction does not require a specification of the nonlinear transformation function as all capture values are projected into the system’s gamut (as discussed in §3d).

(f) . Underdetermined stimulus system

If the stimulus system is underdetermined—i.e. there are more light sources to vary than there are types of photoreceptors—then a space of target excitations can be matched using different combinations of intensities (electronic supplementary material, figure S3). By default, the intensities that have smallest L2-norm within the intensity constraints are chosen. This will choose a set of light source intensities that generally have a low overall intensity and similar proportions. However, in our package drEye, we provide alternative options such as maximizing/minimizing the intensity of particular light sources or minimizing the differences between the intensities of particular light sources. An underdetermined system can also be leveraged in other ways, as we discuss in §5.

4. Example applications

(a) . Targeted stimuli

To illustrate our approach, we have applied our fitting procedure to two sets of targeted stimuli using the five example animals and LED-stimulation systems composed of the LEDs introduced previously (electronic supplementary material, figure S2a,b). The first stimulus is a set of Gaussian spectral distributions. Simulating this set of stimuli is similar to exciting the eye using a monochromator and mapping responses along a one-dimensional manifold in colour space. We have simulated such a set of stimuli previously to map the tuning properties of photoreceptor axons in the fruit fly [20]. The second stimulus is a set of natural reflectances of flowers multiplied with a standard daylight spectrum [28–31] (figure 3a). In both cases, we assume that photoreceptors are adapted to the mean of either stimulus set. We include a small baseline ε of 10−3 μE and a log transformation in our photoreceptor model. Each photoreceptor type is equally weighted. To compare the results for different stimulation systems, we calculated their R2 values as a measure of goodness-of-fit. For the single wavelength stimulus set, a good fit is usually achieved with an n-size LED stimulus system, although for tetrachromatic animals some wavelengths may require more LEDs for a good reconstruction (figure 3b–d,h–j). Furthermore, more LEDs become necessary for high-intensity simulations (electronic supplementary material, figure S5a–f). For the natural reflectance stimulus set, an n-size LED stimulus system usually gives a perfect fit, if an appropriate LED set is chosen (figure 3e–j). However, more LEDs than this improve fits for high-intensity spectra (electronic supplementary material, figure S5a–f). The naturalistic stimulus set is more correlated across wavelengths. Thus, these types of stimuli are usually easier to simulate given different stimulation systems, as they are covered by a stimulus system’s gamut—especially when adapted to the mean.

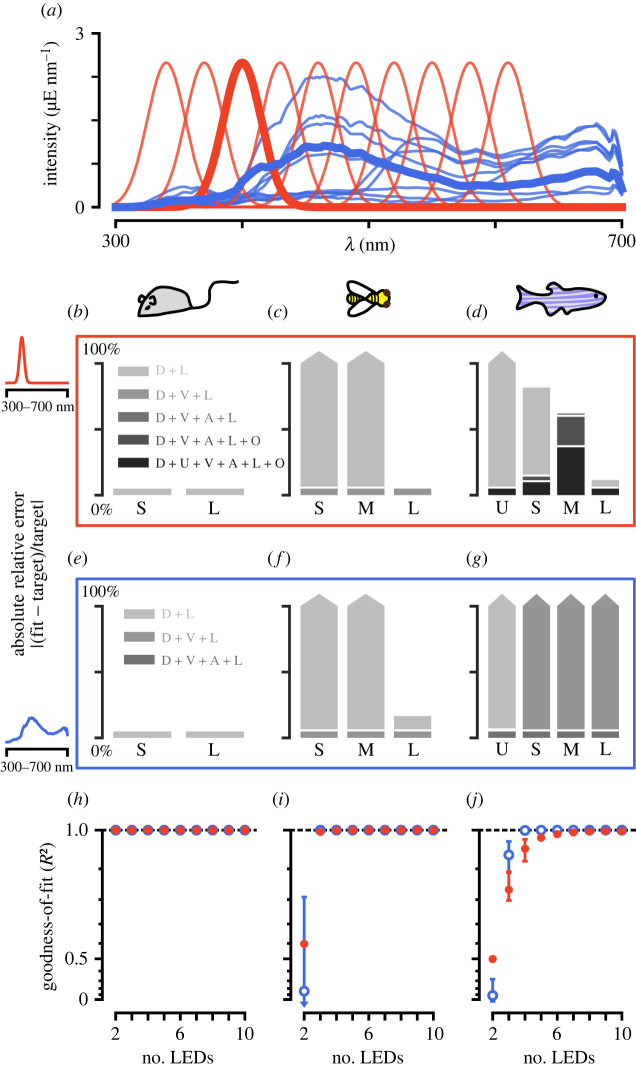

Figure 3.

Fitting targeted stimuli to different model organisms. (a) Example target spectra to be reconstructed: a set of natural spectral distributions (blue) and a set of Gaussian spectral distributions (red). (b–d) Absolute relative error of fitting the 400 nm spectrum to the mouse, honeybee and zebrafish, respectively. For the mouse, two LEDs are sufficient to recreate the spectrum, but for the zebrafish, a perfect recreation is not even possible with six LEDs. (e–g) Absolute relative error of fitting a natural spectrum to the mouse, honeybee and zebrafish, respectively. For the mouse, two LEDs, for the honeybee, three LEDs and for the zebrafish, four LEDs are sufficient to perfectly simulate the spectrum. (h–j) Goodness-of-fit (R2) values for the best LED sets (top 10%) across different number of LED combinations for the mouse, honeybee and zebrafish, respectively. The bars for each point correspond to the range of R2 values achieved for the top 10% of LED combinations. The y-axis is plotted on an exponential scale to highlight differences in the goodness-of-fit close to 1.

(b) . Random stimuli

So far, we have assumed we have a set of spectral distributions to simulate. However, this is not necessarily the case. Random sampling of a colour space can be a useful way to probe a chromatic system. Similar to stimulating the eye using artificial achromatic stimuli, such as random square or white noise stimuli [32,33], we can stimulate the eye using artificial chromatic stimuli to extract detailed chromatic receptive fields. This can theoretically be done in either spectral- or receptor-based colour space. To compare both methods, we will consider the excitation space of the medium- and long-wavelength photoreceptors of our five animals—the mouse, honeybee, zebrafish, human and fruit fly. For this example, the stimulus system consists of violet and lime LEDs (electronic supplementary material, figure S2a). Photoreceptors are adapted to the sum of 1 μE photon flux of light for both LEDs, and the photoreceptor model incorporates a small ε of 10−3 μE and a log transformation. We sample 121 individual stimuli that equally span a two-dimensional plane from −1 to 1. The samples are drawn either from relative LED intensity space—log(i/i b) with i being the LED intensities and i b being the background intensities—or from photoreceptor excitation space. In the latter case, we fit LED intensities using our fitting procedure to best match the desired excitations. Points outside of the system’s gamut will be clipped as per the fitting procedure. No fitting is required when directly drawing LED intensities. When drawing equally spaced samples in relative LED space, the samples are highly correlated along the achromatic dimension of the excitation space (i.e. eL = eM) and do not span the available gamut in excitation space (electronic supplementary material, figure S6a–c). Consequently, any neural or behavioural tuning extracted from such a stimulus set can be biased towards specific directions in colour space [32,34]. On the other hand, drawing samples in excitation space and then fitting them to the given LED stimulus system will always ensure that as much of the available colour space is tested (electronic supplementary material, figure S6d–f). Colours outside the system’s gamut will be clipped, but if the system is chosen to efficiently span colour space, clipping can be reduced significantly. Since photoreceptor sensitivities always have some overlap, the photoreceptor axes are not completely independent as is the case with the spatial dimensions of width and height. Thus, clipping would even occur in a perfect stimulus system. Our drEye Python package includes various ways to efficiently draw samples within the gamut of a stimulation system to avoid clipping.

5. Dealing with uncertain spectral sensitivities

So far, we have assumed that the spectral sensitivities are uniquely described. However, measured sensitivities can vary depending on the experimental methods used [35,36]. Furthermore, eye pigments and the optics of photoreceptors can change the effective sensitivities of photoreceptors [36,37]. This can lead to uncertainty in the measured sensitivity of photoreceptors within the experimental conditions of interest. For example, recent measurements of fly photoreceptors show a shift in the peak of the Rh6-expressing photoreceptor and a general broadening in the photoreceptors, when compared with original measurements [36]. These differences likely reflect differences in sample preparation and measurement technique. Instead of having to (re-)measure the sensitivities within the experimental context of interest, previous measurements can be taken into account to build a prior distribution of photoreceptor sensitivities.

In order to take into account the distribution of photoreceptor properties, we first construct a normalized variance matrix :

| 5.1 |

Here, is the estimated variance of the relative light-induced capture of photoreceptor i given the light source j at an intensity of one unit photon flux. We can estimate each variance by drawing samples from the distribution of photoreceptor properties, then determining the light-induced capture for each sample given each light source at one unit photon flux, and finally calculating the empirical variance across samples. Given a particular set of light source intensities x, we can approximate the total variance of the calculated excitations by propagating using Taylor expansions:

| 5.2 |

A large value of indicates that the chosen intensities x result in calculated excitations that vary considerably between different samples from the prior distribution of photoreceptor properties. We are less certain that the calculated excitations match the desired target values. Conversely, a smaller value of indicates that we are more certain that the calculated values match the desired target excitations. Thus, we wish to minimize while also matching the target excitations. To do this, we apply a two-step procedure. The first step uses the mean spectral sensitivities and fits the excitation values as described in equation (3.7). We use the fitted x from the first step as our initial guess for the second step. In the second step, we minimize , while constraining our solution for x to not deviate significantly from our fit in the first step:

| 5.3 |

δ is the value of the objective function after optimizing x in the first step plus some added small value. δ may even be zero in an underdetermined system as multiple solutions can exist that give an optimal fit but have different values for the overall uncertainty .

As an example, we consider two photoreceptors with spectral sensitivities that follow a Gaussian distribution (figure 4a) and an underdetermined stimulus system consisting of a UV, green and orange LED (figure 2g). The widths of the sensitivities vary between 30 and 70 nm and between 60 and 100 nm for each photoreceptor, respectively. The peaks of the sensitivities vary between 420 and 460 nm and between 500 and 540 nm for each photoreceptor, respectively. Changing the width and/or peak of the spectral sensitivity of either photoreceptor will affect the calculated capture for each LED differently. As an example of fitting using equation (5.3), we take a look at four different relative capture values (figure 4b). All four values are within the gamut of the stimulation system for the expected spectral sensitivities. As this is an underdetermined system, we can find multiple LED intensities x that fit the desired capture values for the expected sensitivities (figure 4c). Using only the standard fitting procedure from §3b, we find a set of intensities that has the smallest L2-norm (X symbols in figure 4c). If we subsequently optimize to minimize the variance , the fitted intensities x can differ significantly from the first fitting procedure (open squares in figure 4c). However, the overall goodness-of-fit for the expected target excitations is not affected as the solution has simply moved within the space of possible optimal solutions (lines in figure 4c). To get a better idea of how fits differ between the original approach and this variance minimization approach, we drew many random capture values that are inside and outside the system’s gamut (figure 4d). After fitting, we calculated the average R2 scores of the two approaches for all samples from the prior distribution of spectral sensitivities. We find that on average the variance minimization approach improves the R2 score when considering a distribution of possible spectral sensitivities (figure 4e).

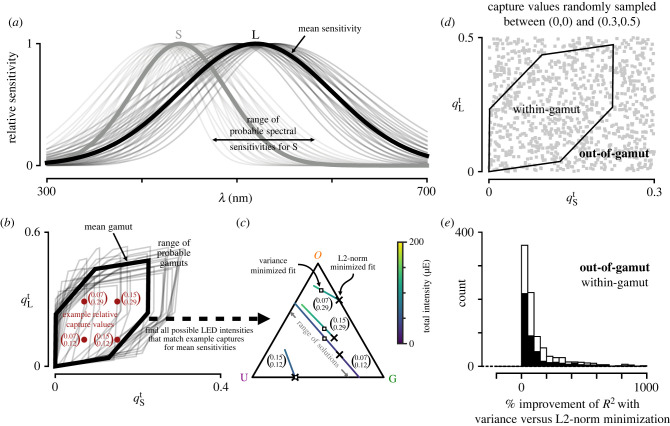

Figure 4.

Minimizing the variance in excitation values due to uncertainty improves the average fit. (a) Variance in the spectral sensitivity for the short and long photoreceptors from the example in figure 2b by varying the mean between 420 and 460 nm and between 500 and 540 nm for each photoreceptor in steps of 10 nm and varying the standard deviation between 30 and 70 nm and between 60 and 100 nm in steps of 10 nm for each photoreceptor, respectively. (b) Relative capture space of the photoreceptors in (a) adapted to a flat background spectrum. Gamut of the LED set in figure 2g (thick line) and the resulting variance of the gamut due to the variance in the spectral sensitivities (thin lines). X symbols correspond to example capture values that are within the gamut given the expected sensitivities in (a) (thick lines). (c) Possible LED proportions that result in the same calculated capture for the four examples in (b) using the expected sensitivities and the stimulation system from figure 2g. Each coloured line corresponds to the set of proportions that result in the same capture. The colour indicates the overall intensities of the set of LEDs. Xs indicate the fitted LED intensities using the fitting procedure defined by equation (3.7). The open squares indicate the fitted intensities after minimizing the variance according to equation (5.3) given the uncertainty in the spectral sensitivities as shown in (a). (d) Randomly drawn captures that are in- and out-of-gamut (grey squares). (e) Average improvement in the R2 score for all possible samples of the spectral sensitivities in (a) when fitting the points in (d) with the additional variance optimization step. The black bars correspond to within-gamut samples and open bars correspond to out-of-gamut samples.

The variance minimization approach works well when the goal is to fit particular excitation values (e.g. to span the excitation colour space, as in §4b). However, if the goal is instead to fit particular spectral distributions, the method can lack accuracy because the corresponding target excitation values are not unique owing to uncertainty of photoreceptor sensitivities. To deal with this problem, we can increase the number of photoreceptors we use to fit artificially by adding different samples of sensitivities to the estimation procedure and weighting them by their prior probability. We can still perform variance minimization as a second step, if the sampled sensitivities cover a range of possible excitation values.

A final approach to dealing with uncertainty of the spectral sensitivities is to update the prior distribution of the sensitivities with different behavioural and/or physiological data. Besides the more classical approaches to assessing the spectral sensitivity of photoreceptors and the dimensionality of colour vision, sets of metameric stimuli can be designed to probe the responses of various neurons responding to visual inputs. For example, the lines in figure 4c correspond to a range of metameric stimuli that match the four example target captures in figure 4b. In the drEye package, we provide various ways to design metameric stimuli. For another example, we have measured the responses of photoreceptor axons in the fruit fly to stimuli that simulate the spectrum of one LED and then compared this response with the response of the neuron to the actual LED (electronic supplementary material, figure S7). If the responses match, the sensitivities used are a good approximation within the wavelength range tested. However, care should still be taken as different sensitivities can still produce the same response in a (randomly) chosen neuron or behaviour. Thus, many different types of neurons should be measured and stimuli tested for validation purposes.

6. Application to patterned stimuli

So far, we have not explicitly considered the spatial aspect of chromatic stimuli. Our method can be used to display not only full field stimuli but also patterned stimuli, simply by applying it pixel by pixel. However, specific considerations need to be taken into account when it comes to these types of stimuli, which depend both on the animal and on the hardware.

Our method can directly be applied to LCD screens and any other screens that pack small LEDs onto single pixels [38], as long as the set of light sources is adequate for the animal in question (see §3c). However, if the gamut afforded by the available set of LEDs used in a particular display is small, it will require a change in the LED set at every pixel, a time-consuming and expensive task. In such cases, projectors offer a more flexible and affordable solution, as these only require replacing a single set of light sources by either swapping one or more LEDs or filters or using fibre optics to couple an external light source [21,39]. However, the effective use of our method in the context of this type of hardware depends on two factors: the dimensionality of colour vision and the flicker fusion rate of the animal of interest.

Indeed, the core principle of projector design relies on ‘temporally’ mixing light sources in different ratios in repeating patterns of subframes, and doing so at a higher frequency than the flicker fusion rate of the viewer (figure 5a). In most modern video projectors, this mixing occurs independently at each pixel, owing to an array of mirrors that are synced with each subframe and thus allow a patterned image to be formed. This method at its core is equivalent to the algorithm we presented above, mixing in time instead of space.

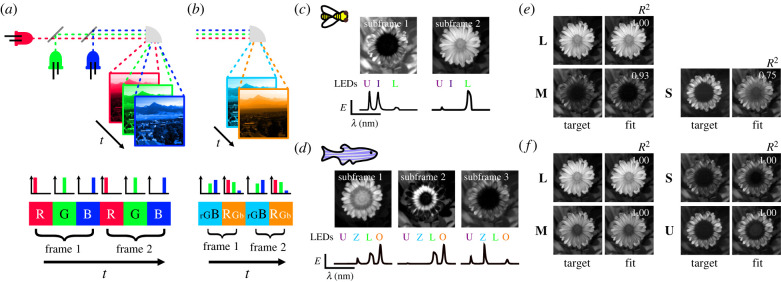

Figure 5.

Reconstructing hyperspectral images with fewer subframes and number of photoreceptor types in the honeybee and zebrafish. (a) Schematic of the subframe structure in traditional RGB projectors. (b) Schematic of a subframe structure with fewer subframes than LEDs. (c,d) Reconstruction of a hyperspectral flower [40] in the honeybee and zebrafish with two or three subframes and three or four LEDs, respectively. The top images are the 8-bit mask for each subframe and the bottom are the normalized LED intensities used for each subframe. (e,f) Comparison of target photoreceptor captures and fitted captures for each photoreceptor type for the honeybee and zebrafish, respectively. The R2 value for each photoreceptor type is indicated in the image of fitted values.

Importantly, in such systems, each subframe is dedicated to one light source. Therefore this subframe structure typically limits the experimenter to use only up to a number of independent light sources equal to the number of subframes, to reconstruct a light spectrum at each pixel. If this number is equal to or larger than the number of photoreceptor types of a given animal, and if the refresh rate of the hardware is higher than the flicker fusion rate of the animal, the algorithm detailed above can be applied to reconstruct patterned images. However, when either or both of these conditions are not met, the method is not suitable. If there are fewer subframes than photoreceptor types, the gamut of the system will be too small to properly reconstruct most images. If the flicker fusion rate of the animal is higher than the refresh rate of the hardware, the temporal mixing will not work, and the subframes will be seen as flickering.

For such cases, we have instead developed a different algorithm that can alleviate either problem, by allowing the use of a higher number of light sources than subframes and using the high spatial-spectral correlations existing in natural images, to optimally mix light sources in each subframe. We take advantage of modifications to some projector systems that allow a more flexible use of their subframe structure. This flexibility in practice lifts the requirement of one dedicated light source per subframe, giving the user control over the spectral composition of each subframe [21,39].

As pixel intensity and light source intensities can be manipulated independently, the aim of our algorithm is to find the best light source intensities X (sources × subframes) and pixel intensities P (subframes × pixels) for each independent subframe, so that they match the target photoreceptor excitations E (photoreceptors × pixels) of the whole image:

| 6.1 |

The light sources are constrained by the lower ℓ and upper u bound intensities they can reach, whereas the pixel intensities are parameterized, so that 1 allows all light from the light sources to go through and 0 does not allow any light to go through (i.e. luminosity). We fit P and X using an iterative approach similar to common electron microscopy-type algorithms, where we fix either P or X at each iteration while fitting the other using the same nonlinear least-squares approach as previously. To initialize both P and X, we first decompose the relative capture matrix of the image Q (photoreceptors × pixels) using standard non-negative matrix factorization. This returns two non-negative matrices P0 (subframes × pixels) and Q0 (photoreceptors × subframes), whose dot product approximates Q. P0 is normalized so that its maximum is 1 and used as the initial matrix for P. For each column—i.e. each subframe—in Q0, we apply the nonlinear transformation to obtain excitation values and then fit light source intensities according to our objective function in equation (3.7). Using the initial values for P and X, only a few iterations are needed (usually fewer than 10) to obtain a good fit for reconstructing the image.

As an example, we fit a hyperspectral image of a calendula flower [40] given the bee and the zebrafish photoreceptor sensitivities and their corresponding optimal LED sets (figure 5). For both animals, we set the number of subframes to be smaller than the number of photoreceptor types (2 subframes for the trichromatic bee and 3 subframes for the tetrachromatic zebrafish), allowing a high projector frame rate and high image bit-depth to be set given the experimenter’s hardware. Despite having fewer subframes than photoreceptors, we are able to achieve good fits by mixing LEDs available in each subframe at different intensities, showing that we can effectively increase the refresh rate of a projector system for trichromatic animals, or use four LEDs for tetrachromatic animals, without sacrificing our fits. It is important to note that, although this method works well in most cases, it may sometimes be impossible to achieve perfect fits for every photoreceptor, depending on the given photoreceptor sensitivities and the spectral correlations of the hyperspectral image. An example of this is clear for the fitting of the S photoreceptor of the bee in our example image, only reaching an R2 value of 0.753. In such cases, hardware limitations may prompt the experimenter to use more subframes at the cost of the projector frame rate.

7. Conclusion

While studies in trichromatic primates have benefited from the wide adoption of consistent methods for designing chromatic stimuli, studies in other animals have suffered from a lack of uniform methodology. This has resulted in difficulties in comparing experimental results both within and between animals. More generally used chromatic stimuli—e.g. using monochromators or standard RGB displays—also do not take into account the colour space of the animal under investigation and usually give an incomplete description of the properties of a colour vision system. Furthermore, with the currently available techniques, it has been challenging to design more natural stimuli, especially natural images, and thus understand the role of spectral information in processing ecologically relevant scenes.

Here, we present a method for designing chromatic stimuli, founded on colour theory, that resolves these issues and can suit any animal where the spectral sensitivities of photoreceptors are known using a minimal visual stimulation system. Specifically, we provide a series of tools to reconstruct a wide range of chromatic stimuli such as targeted and random stimuli as well as hyperspectral images. We offer refinements to our methods to handle various nuances of colour vision, such as uncertainty in spectral sensitivities or handling out-of-gamut colour reconstruction. Even though our methods are hardware agnostic, we provide guidelines for assessing the suitability of a given stimulus system or selecting de novo light sources. Because our methods do not depend on the stimulation device itself, they can serve as a colour management tool to control stimulus systems within and between laboratories and therefore improve reproducibility of experimental results.

In addition to the tools that we present here, our Python package drEye contains other tools that we have only mentioned briefly or omitted. These include efficient and even sampling of the available gamut, designing metameric pairs in underdetermined stimulation systems, and finding silent substitution pairs [42] (e.g. electronic supplementary material, §D). In addition, we have focused here on receptor spaces as a foundation for building stimuli; however, if further transformations of receptor excitation, such as opponent processing, are known, these can also be included using our package, allowing the user to work in a space that might be ‘closer’ to the animal’s perceptual space. Future updates will include new features such as the possibility of taking into account the varying spatial distribution of photoreceptor types across the eye of many animals [41]. With the aim of making adoption of our methods effortless, we provide our open-source drEye API and have created an accessible web application, which will be easy to use, regardless of coding proficiency.

Acknowledgements

We thank Larry Abbott, Darcy Peterka, Elizabeth Hillman and Sharon Su for their comments on the manuscript.

Contributor Information

Matthias P. Christenson, Email: mpc2171@columbia.edu.

Rudy Behnia, Email: rb3161@columbia.edu.

Data accessibility

The drEye Python package is available at https://github.com/gucky92/dreye, and a list of the essential dependencies is provided as electronic supplementary material, table S1 [43]. Tutorials for using the different methods mentioned in the paper and additional approaches that were omitted can be found in the documentation for the package at https://dreye.readthedocs.io/en/latest/. The Web app associated with drEye is available at https://share.streamlit.io/gucky92/dreyeapp/main/app.py.

Authors' contributions

M.P.C.: conceptualization, data curation, formal analysis, methodology, software, validation, visualization, writing—original draft, writing—review and editing; S.N.M.: data curation, validation, visualization, writing—original draft; O.E.: ‘conceptualization’; S.L.H.: investigation, writing—original draft; R.B.: conceptualization, funding acquisition, project administration, supervision, writing—original draft, writing—review and editing.

All authors gave final approval for publication and agreed to be held accountable for the work performed hherein.

Conflict of interest declaration

We declare we have no competing interests.

Funding

M.P.C. was supported by NIH grant no. 5T32EY013933 and NIH grant no. R01EY029311. S.L.H. acknowledges support from NSF grant no. GRF DGE-1644869 and NIH grant no. F31EY030319. S.N.M. was supported by NIH grant no. R01EY029311. R.B. was supported by NIH grant no. R01EY029311, the McKnight Foundation, the Grossman Charitable Trust, the Pew Charitable Trusts and the Kavli Foundation.

References

- 1.Rushton WAH. 1972. Review lecture. Pigments and signals in colour vision. J. Physiol. 220, 1-31. ( 10.1113/jphysiol.1972.sp009719) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Stockman A, Brainard DH. 2010. Color vision mechanisms. In The OSA handbook of optics, 3rd edn (ed. M. Bass), pp. 11.1-11.104. New York, NY: McGraw-Hill. [Google Scholar]

- 3.Fleishman LJ, McClintock WJ, D’Eath RB, Brainard DH, Endler JA. 1998. Colour perception and the use of video playback experiments in animal behaviour. Anim. Behav. 56, 1035-1040. ( 10.1006/anbe.1998.0894) [DOI] [PubMed] [Google Scholar]

- 4.Tedore C, Johnsen S. 2017. Using RGB displays to portray color realistic imagery to animal eyes. Cur. Zool. 63, 27-34. ( 10.1093/cz/zow076) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Kelber A, Osorio D. 2010. From spectral information to animal colour vision: experiments and concepts. Proc. R. Soc. B 277, 1617-1625. ( 10.1098/rspb.2009.2118) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Hempel de Ibarra N, Vorobyev M, Menzel R. 2014. Mechanisms, functions and ecology of colour vision in the honeybee. J. Comp. Physiol. A 200, 411-433. ( 10.1007/s00359-014-0915-1) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Smith T, Guild J. 1931. The C.I.E. colorimetric standards and their use. Trans. Opt. Soc. 33, 73-134. ( 10.1088/1475-4878/33/3/301) [DOI] [Google Scholar]

- 8.Wright WD. 1929. A re-determination of the trichromatic coefficients of the spectral colours. Trans. Opt. Soc. 30, 141-164. ( 10.1088/1475-4878/30/4/301) [DOI] [Google Scholar]

- 9.Thompson E, Palacios A, Varela FJ. 1992. Ways of coloring: comparative color vision as a case study for cognitive science. Behav. Brain Sci. 15, 1-26. ( 10.1017/S0140525X00067248) [DOI] [Google Scholar]

- 10.Stoddard MC, Eyster HN, Hogan BG, Morris DH, Soucy ER, Inouye DW. 2020. Wild hummingbirds discriminate nonspectral colors. Proc. Natl Acad. Sci. USA 117, 15 112-15 122. ( 10.1073/pnas.1919377117) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Jacobs GH. 2018. Photopigments and the dimensionality of animal color vision. Neurosci. Biobehav. Rev. 86, 108-130. ( 10.1016/j.neubiorev.2017.12.006) [DOI] [PubMed] [Google Scholar]

- 12.Chu B, Liu CH, Sengupta S, Gupta A, Raghu P, Hardie RC. 2013. Common mechanisms regulating dark noise and quantum bump amplification in Drosophila photoreceptors. J. Neurophysiol. 109, 2044-2055. ( 10.1152/jn.00001.2013) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Barlow RB, Birge RR, Kaplan E, Tallent JR. 1993. On the molecular origin of photoreceptor noise. Nature 366, 64-66. ( 10.1038/366064a0) [DOI] [PubMed] [Google Scholar]

- 14.Kelber A, Vorobyev M, Osorio D. 2003. Animal colour vision - behavioural tests and physiological concepts. Biol. Rev. Camb. Phil. Soc. 78, 81-118. ( 10.1017/S1464793102005985) [DOI] [PubMed] [Google Scholar]

- 15.Juusola M. 1993. Linear and non-linear contrast coding in light-adapted blowfly photoreceptors. J. Comp. Physiol. A 172, 511-521. ( 10.1007/BF00213533) [DOI] [Google Scholar]

- 16.Clark DA, Benichou R, Meister M, Azeredo da Silveira R. 2013. Dynamical adaptation in photoreceptors. PLoS Comput. Biol. 9, e1003289. ( 10.1371/journal.pcbi.1003289) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Chittka L. 1992. The colour hexagon: a chromaticity diagram based on photoreceptor excitations as a generalized representation of colour opponency. J. Comp. Physiol. A 170, 533-543. ( 10.1016/s0042-6989(02)00122-0) [DOI] [Google Scholar]

- 18.Vorobyev M, Osorio D. 1998. Receptor noise as a determinant of colour thresholds. Proc. R. Soc. Lond. B 265, 351-358. ( 10.1098/rspb.1998.0302) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19.Clark RC, Santer RD, Brebner JS. 2017. A generalized equation for the calculation of receptor noise limited colour distances in n-chromatic visual systems. R. Soc. Open Sci. 4, 170712. ( 10.1098/rsos.170712) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 20.Heath SL, Christenson MP, Oriol E, Saavedra-Weisenhaus M, Kohn JR, Behnia R. 2020. Circuit mechanisms underlying chromatic encoding in Drosophila photoreceptors. Curr. Biol. 30, 264-275.e8. ( 10.1016/j.cub.2019.11.075) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 21.Franke K, Chagas AM, Zhao Z, Zimmermann MJ, Bartel P, Qiu Y, Szatko KP, Baden T, Euler T. 2019. An arbitrary-spectrum spatial visual stimulator for vision research. eLife 8, e48779. ( 10.7554/eLife.48779) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 22.Balasubramanian R, Dalal E. 1997. Method for quantifying the color gamut of an output device. In Proc. SPIE, Color Imaging: Device-Independent Color, Color Hard Copy, and Graphic Arts II, 4 April 1997, San Jose, California (eds R Eschbach, GG Marcu), vol. 3018, pp.10–116. Bellingham, WA: Society of Photo-Optical Instrumentation Engineers (SPIE). ( 10.1117/12.271580) [DOI]

- 23.Schnaitmann C, Haikala V, Abraham E, Oberhauser V, Thestrup T, Griesbeck O, Reiff DF. 2018. Color processing in the early visual system of Drosophila. Cell 172, 318-330.e18. ( 10.1016/j.cell.2017.12.018) [DOI] [PubMed] [Google Scholar]

- 24.Zimmermann MJ, Nevala NE, Yoshimatsu T, Osorio D, Nilsson DE, Berens P, Baden T. 2018. Zebrafish differentially process color across visual space to match natural scenes. Curr. Biol. 28, 2018-2032.e5. ( 10.1016/j.cub.2018.04.075) [DOI] [PubMed] [Google Scholar]

- 25.Lakshminarayanan V. 2016. Light detection and sensitivity. In Handbook of visual display technology (eds J Chen, W Cranton, M Fihn), pp. 105–112. Cham, Switzerland: Springer. ( 10.1007/978-3-319-14346-0_5) [DOI] [Google Scholar]

- 26.Kawasaki M, Kinoshita M, Weckström M, Arikawa K. 2015. Difference in dynamic properties of photoreceptors in a butterfly, Papilio xuthus: possible segregation of motion and color processing. J. Comp. Physiol. A 201, 1115-1123. ( 10.1007/s00359-015-1039-y) [DOI] [PubMed] [Google Scholar]

- 27.Bae Y, Jang JH, Ra JB. 2010. Gamut-adaptive correction in color image processing. In Proc. 2010 IEEE Int. Conf. Image Processing, 26–29 September 2010, Hong Kong, China, pp. 3597–3600. New York, NY: IEEE. ( 10.1109/ICIP.2010.5652000Y) [DOI]

- 28.Gumbert A, Kunze J, Chittka L. 1999. Floral colour diversity in plant communities, bee colour space and a null model. Proc. R. Soc. Lond. B 266, 1711-1716. ( 10.1098/rspb.1999.0836) [DOI] [Google Scholar]

- 29.Hernández-Andrés J, Romero J, Nieves JL, Lee RL. 2001. Color and spectral analysis of daylight in southern Europe. J. Opt. Soc. Am. A 18, 1325. ( 10.1364/JOSAA.18.001325) [DOI] [PubMed] [Google Scholar]

- 30.Chittka L, Shmida A, Troje N, Menzel R. 1994. Ultraviolet as a component of flower reflections, and the colour perception of Hymenoptera. Vision Res. 34, 1489-1508. ( 10.1016/0042-6989(94)90151-1) [DOI] [PubMed] [Google Scholar]

- 31.Chittka L. 1997. Bee color vision is optimal for coding flower color, but flower colors are not optimal for being coded–why? Israel J. Plant Sci. 45, 115-127. ( 10.1080/07929978.1997.10676678) [DOI] [Google Scholar]

- 32.Meyer AF, Williamson RS, Linden JF, Sahani M. 2017. Models of neuronal stimulus-response functions: elaboration, estimation, and evaluation. Front. Syst. Neurosci. 10, 109. ( 10.3389/fnsys.2016.00109) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 33.Zhuang J, Ng L, Williams D, Valley M, Li Y, Garrett M, Waters J. 2017. An extended retinotopic map of mouse cortex. eLife 6, e18372. ( 10.7554/eLife.18372) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 34.Weller JP, Horwitz GD. 2018. Measurements of neuronal color tuning: procedures, pitfalls, and alternatives. Vision Res. 151, 53-60. ( 10.1016/j.visres.2017.08.005) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 35.Salcedo E, Zheng L, Phistry M, Bagg EE, Britt SG. 2003. Molecular basis for ultraviolet vision in invertebrates. J. Neurosci. 23, 10 873–10 878. ( 10.1523/JNEUROSCI.23-34-10873.2003) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 36.Sharkey CR, Blanco J, Leibowitz MM, Pinto-Benito D, Wardill TJ. 2020. The spectral sensitivity of Drosophila photoreceptors. Scient. Rep. 10, 18242. ( 10.1038/s41598-020-74742-1) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 37.Hart NS. 2001. The visual ecology of avian photoreceptors. Prog. Retin. Eye Res. 20, 675-703. ( 10.1016/S1350-9462(01)00009-X) [DOI] [PubMed] [Google Scholar]

- 38.Powell SB, Mitchell LJ, Phelan AM, Cortesi F, Marshall J, Cheney KL. 2021. A five-channel LED display to investigate UV perception. Methods Ecol. Evol. 12, 602-607. ( 10.1111/2041-210X.13555) [DOI] [Google Scholar]

- 39.Bayer FS, Paulun VC, Weiss D, Gegenfurtner KR. 2015. A tetrachromatic display for the spatiotemporal control of rod and cone stimulation. J. Vis. 15, 15. ( 10.1167/15.11.15) [DOI] [PubMed] [Google Scholar]

- 40.Resonon. 2021. Hyperspectral images of Calendula flower. See https://downloads.resonon.com.

- 41.Wernet MF, Perry MW, Desplan C. 2015. The evolutionary diversity of insect retinal mosaics: common design principles and emerging molecular logic. Trends Genet. 31, 316-328. ( 10.1016/j.tig.2015.04.006) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 42.Estévez O, Spekreijse H. 1982. The ‘silent substitution’ method in visual research. Vision Res. 22, 681-691. ( 10.1016/0042-6989(82)90104-3) [DOI] [PubMed] [Google Scholar]

- 43.Christenson MP, Mousavi SN, Oriol E, Heath SL, Behnia R. 2022. Exploiting colour space geometry for visual stimulus design across animals. Figshare. ( 10.6084/m9.figshare.c.6098630) [DOI] [PMC free article] [PubMed] [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.

Data Availability Statement

The drEye Python package is available at https://github.com/gucky92/dreye, and a list of the essential dependencies is provided as electronic supplementary material, table S1 [43]. Tutorials for using the different methods mentioned in the paper and additional approaches that were omitted can be found in the documentation for the package at https://dreye.readthedocs.io/en/latest/. The Web app associated with drEye is available at https://share.streamlit.io/gucky92/dreyeapp/main/app.py.