Abstract

Background

In this systematic review we sought to characterize practice effects on traditional in-clinic or digital performance outcome measures commonly used in one of four neurologic disease areas (multiple sclerosis; Huntington's disease; Parkinson's disease; and Alzheimer's disease, mild cognitive impairment and other forms of dementia), describe mitigation strategies to minimize their impact on data interpretation and identify gaps to be addressed in future work.

Methods

Fifty-eight original articles (49 from Embase, an additional 4 from PubMed and 5 from other sources; cut-off date January 13, 2021) describing practice effects or their mitigation strategies were included.

Results

Practice effects observed in healthy volunteers do not always translate to patients living with neurologic disorders. Mitigation strategies include reliable change indices that account for practice effects, or a run-in period. While the former requires data from a reference sample showing similar practice effects, the latter requires a sufficient number of tests in the run-in period to reach steady-state performance. However, many studies included only 2 or 3 test administrations, which is insufficient to define the number of tests needed in a run-in period.

Discussion

Several gaps have been identified. In particular, the assessment of practice effects at the individual patient level and the temporal dynamics of practice effects remain largely unaddressed. Digital tests, which allow much higher testing frequency over prolonged periods of time, can be used in future work to gain a deeper understanding of practice effects and to develop new metrics for assessing and accounting for practice effects in clinical research and clinical trials.

Keywords: Practice effects, Multiple sclerosis, Huntington disease, Parkinson disease, Dementia, Alzheimer disease, Mild cognitive impairment


1. Introduction

Chronic neurological diseases such as multiple sclerosis, Huntington's disease, Parkinson's disease or dementia may manifest as impairment in one or several functional domains (Lees et al., 2009; Roos, 2010; Sosnoff et al., 2014). Assessing these domains regularly can provide valuable insights into both the subject's disease status and disease course, and can also inform treatment and disease management (Tur et al., 2018). Repeated performance assessments over time may, however, be susceptible to practice effects. Practice effects (sometimes also known as learning effects; see Panel for definition) are any change or improvement that results from repetition of tasks or activities, including repeated exposure to an instrument, rather than from a true change in a patient's ability (Heilbronner et al., 2010; McCaffrey and Westervelt, 1995). For example, patients may perform better in subsequent tests as they fully comprehend the tasks (context memory or context effects) or gain knowledge of the sequence of tasks (episodic memory or content effects) and map the stimulus to the response (Goldberg et al., 2015). Over time, this familiarity with the test could lead the subject to develop strategies that result in inflated test performance compared with a subject exposed to the test for the first time (Goldberg et al., 2015). The overall improvement in performance, or practice effect, is the result of consecutive gains that tend to be largest at first and gradually become smaller as the number of assessments increases (Figure 1) (Bartels et al., 2010; Falleti et al., 2006). Particularly at short inter-test intervals, practice effects are often much greater than normative functional change over a similar interval (Jones, 2015).

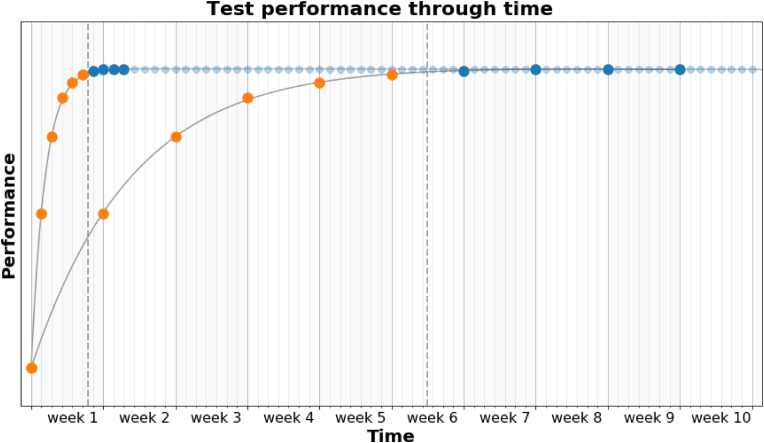

Figure 1.

Schematic representation of the evolution of test performance over time solely due to task repetition (practice effects) for different assessment frequencies. The two curves represent test performance over time for a daily (top curve) and a weekly (bottom curve) assessment schedule. Each individual test is represented by a dot, colored orange if it is part of the practice (or run-in) period, or blue if it is part of the steady-state period. During the practice period, the performance gain between consecutive tests is largest at first and gradually decreases as the number of assessments increases. The assessment frequency does not alter the overall performance gain or the number of iterations required to reach a steady state suitable for reliable assessment, but it shortens or lengthens the time needed to reach that state (e.g. 7 days vs 7 weeks). The subject's abilities are assumed to be constant over the period considered.
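To illustrate the point made in Figure 1, the sketch below assumes a hypothetical negative-exponential practice curve in which the expected score depends only on how many tests have already been taken. The parameters `p0`, `p_inf` and `rate`, and the 95% criterion for reaching the plateau, are illustrative assumptions rather than estimates from any reviewed study. Under these assumptions the number of tests needed to realise most of the practice gain is fixed, and the assessment interval only changes how many days elapse before that point.

```python
import math


def expected_score(k, p0=40.0, p_inf=50.0, rate=0.5):
    """Expected score on the k-th test (k = 0 is the first test) under a
    hypothetical negative-exponential practice curve. The score depends only
    on the number of prior tests, not on calendar time; higher is better."""
    return p_inf - (p_inf - p0) * math.exp(-rate * k)


def tests_to_plateau(rate=0.5, fraction=0.95):
    """Smallest number of prior tests k after which at least `fraction` of the
    total practice gain (p_inf - p0) is realised: 1 - exp(-rate * k) >= fraction."""
    return math.ceil(math.log(1.0 / (1.0 - fraction)) / rate)


k = tests_to_plateau()
# Gains between consecutive tests shrink as the number of tests increases.
gains = [expected_score(i + 1) - expected_score(i) for i in range(k)]
print("gains per test:", [round(g, 2) for g in gains])

# Same number of tests for both schedules; only the elapsed time differs.
for schedule, interval_days in [("daily", 1), ("weekly", 7)]:
    print(f"{schedule}: plateau at test {k + 1}, {k * interval_days} days after the first test")
```

Real practice curves need not be exponential; the key assumption illustrated here is only that gains are indexed by test number rather than by time.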

Practice effects are often considered to introduce unwanted variance and thus complicate the interpretation of repeated clinical assessments (McCaffrey and Westervelt, 1995). If not accounted for, practice effects can lead to misdiagnosis or misinterpretation of clinical data, resulting in delayed access to the most effective treatment option (Elman et al., 2018; Marley et al., 2017). Despite a large body of literature on practice effects, their impact on the subject's performance is seldom addressed, and they have been described as “large, pervasive and underappreciated” (Jones, 2015). Current study designs typically do not adequately estimate or mitigate their impact on test performance, even though most repeated assessments are affected by practice effects to a varying degree (Johnson et al., 1991; McCaffrey and Westervelt, 1995). Addressing this challenge therefore requires not only characterizing practice effects and their underlying mechanisms, but also implementing mitigation strategies.

| Panel. Definitions |
|---|
| Practice effects: Practice effects are any change or improvement that results from practice or repetition of task items or activities, including repeated exposure to an instrument, rather than from a true change in an individual's ability. Many studies, however, consider such improvements to be practice effects only if they resulted in improved test scores. Practice effects are sometimes also known as learning effects. |
| Longitudinal effects: Unlike practice effects, longitudinal effects describe changes in test performance resulting from functional changes, treatment intervention, or changes in motivation or fatigue levels. These longitudinal effects typically occur at larger timescales but may be confounded with practice effects. |
| Run-in period: A period (or number of test iterations) during which large practice effects are allowed to occur, until the magnitude of change from one test to the next is negligible. The run-in assessments are discarded, and the subsequent test iteration is considered a measure of baseline performance. Sometimes also known as a ‘familiarization’ or ‘massed practice’ period. |
| Iterations: Number of times a subject undertakes an assessment, irrespective of the interval between test repetitions. |
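The run-in rule in the Panel can be written as a short procedure. The sketch below is a minimal illustration under stated assumptions: the hypothetical helper `split_run_in` treats a test-to-test change smaller than a user-chosen tolerance `tol` as negligible, takes the first test whose change from the preceding test is negligible as baseline, and discards all earlier tests as run-in. The tolerance and the example scores are hypothetical.

```python
def split_run_in(scores, tol):
    """Split one patient's repeated scores into run-in tests and a baseline.

    A test-to-test change smaller than `tol` (in score units) is treated as
    negligible. The first test whose change from the preceding test is
    negligible is returned as baseline; all earlier tests are the run-in and
    are discarded. If no change is ever negligible, all tests are run-in and
    the baseline is None (the run-in was too short).
    """
    for k in range(1, len(scores)):
        if abs(scores[k] - scores[k - 1]) < tol:
            return scores[:k], scores[k]
    return scores, None


# Hypothetical scores from one patient (higher is better), tolerance of 1 point
run_in, baseline = split_run_in([38, 43, 46, 47.5, 48, 48.2], tol=1.0)
print("discarded run-in:", run_in, "baseline:", baseline)
```

Requiring two or more consecutive negligible changes would make the rule less sensitive to a single noisy test; that variant is not shown.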

This systematic review aims to evaluate the presence and magnitude of practice effects associated with commonly used performance outcome measures in patients living with one of the four neurologic disorders: multiple sclerosis; Huntington's disease; Parkinson's disease; and Alzheimer's disease, mild cognitive impairment and other forms of dementia. In addition, this review discusses the different mitigation strategies that have been applied to minimize the impact of practice effects. Finally, it identifies gaps in our understanding of practice effects in patients with neurologic disorders, which should be addressed in future research.

2. Methods

A systematic literature search was conducted on Embase and PubMed according to PRISMA guidelines to identify original articles that discuss practice effects in patients with neurologic disorders (cut-off date: January 13, 2021). Three separate search strings were used: one to identify original articles on commonly used performance outcome measures, one to identify original articles on practice effects, and one to identify original articles on multiple sclerosis, Parkinson's disease, Huntington's disease, or mild cognitive impairment, Alzheimer's disease or other forms of dementia (Table 1). Combining these three search strings with a Boolean “AND” yielded the list of publications considered for this systematic review. Additional relevant records were identified through clinicaltrials.gov and from our own collection of references.

Table 1.

Search string.

| Search string 1: Performance outcome measures | Search string 2: Practice effects | Search string 3: Neurologic disorders |
|---|---|---|

While ‘neuropsychological test’ was included in the search string, this was only used to identify original articles that reported practice effects on at least one of the other performance outcome measures.

Publications were excluded if they were not original articles (for example, congress abstracts or review articles); were written in a language other than English; were duplicates; did not report practice effects or mitigation strategies in one of the four disease areas specified in the search string; or did not report practice effects or mitigation strategies for one of the performance outcome measures specified in the search string. ‘Neuropsychological test’ was included in the search string to identify original studies that investigated practice effects or mitigation strategies in test batteries that include at least one of the other performance outcome measures defined in the search string. This eligibility assessment was performed by the first author.

To minimize the impact of bias, only improvements in test performance that resulted from practice or repetition of tasks and could not be explained by other means were considered to be practice effects. Thus, whenever possible, practice effects were assessed in the non-interventional cohort. Risk of publication bias and selective reporting was assessed by identifying the number of completed and potentially relevant studies listed on clinicaltrials.gov for which results had not yet been published.

In this systematic review, we aim to address the following five questions:

- Which metrics were used to identify possible practice effects?
- Were practice effects observed in patients with neurologic disorders, and how common were they?
- Which mitigation strategies were applied to minimize the impact of practice effects?
- Do practice effects carry any clinically meaningful information?
- Are there any gaps in our current understanding of practice effects in patients with neurologic disorders?

3. Results

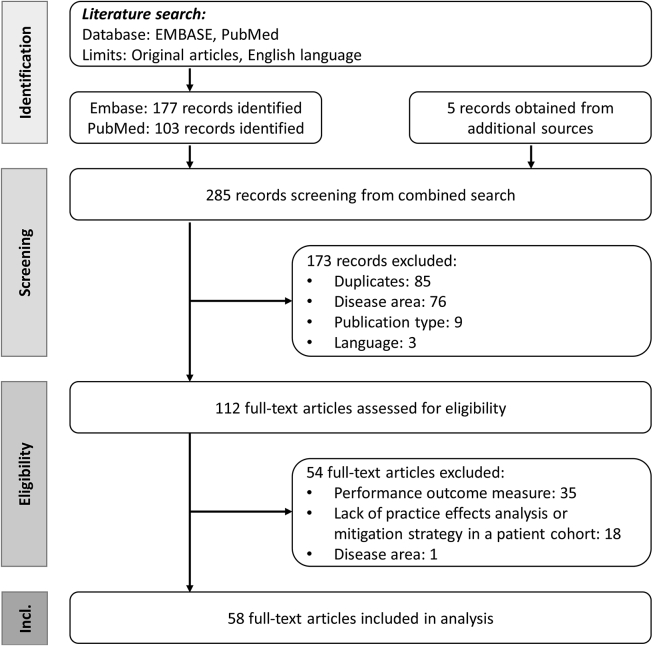

The literature search on Embase and PubMed identified a total of 177 and 103 records, respectively. An additional 5 studies identified through clinicaltrials.gov or our own collection of references were included in the analysis. Of the 285 records, 58 were considered eligible (Figure 2). Records were excluded during screening for the following reasons: duplicates (n = 85), disease area (n = 76), publication type other than original article (n = 9) and language other than English (n = 1). During full-text assessment for eligibility, an additional 54 records were excluded (performance outcome measures: n = 35; no report of practice effects or mitigation strategies: n = 18; disease area: n = 1). Only two completed and potentially relevant studies listed on clinicaltrials.gov had not published results of practice effect analyses (NCT02225314 and NCT02476266). Table 2 summarizes the functional domains assessed by each performance outcome measure included in the analysis.

Figure 2.

PRISMA flow diagram. Incl, Inclusion.

Table 2.

Performance outcome measures and their functional domain.

| Performance outcome measure | Functional domain | Reference |
|---|---|---|
| SDMT | Information processing speed, working memory | Smith (1982), Toh et al. (2014) |
| PASAT | Information processing speed, working memory | Gronwall (1977), Rao et al. (1989) |
| SRT | Sequential learning | Schendan et al. (2003) |
| TMT | | |
| TMT-A | Information processing speed | Salthouse (2011), Duff et al. (2018) |
| TMT-B | Executive function | Toh et al. (2014) |
| Stroop Test | | |
| Stroop Word Test | Information processing speed | Stroop (1935), Toh et al. (2014) |
| Stroop Color Test | Information processing speed | Stroop (1935), Toh et al. (2014) |
| Stroop Interference Test | Executive function | Stroop (1935), Toh et al. (2014) |
| BVMT-R | Visuospatial memory | Benedict (1997) |
| CVLT | Learning and memory | Delis et al. (1987), Elwood (1995) |
| HVLT | Learning and memory | Brandt (1991) |
| WAIS | | |
| Coding/Digit Symbol | Information processing speed | Wechsler (2008) |
| Digit Span | Working memory | Wechsler (2008) |
| Letter-Number Sequencing | Working memory | Wechsler (2008) |
| Similarities | Verbal comprehension | Wechsler (2008) |
| Matrix Reasoning | Perceptual organization | Wechsler (2008) |
| WMS | | |
| Spatial Span | Working memory | Wechsler (2009) |
| Logical Memory | Episodic memory | Wechsler (2009) |
| Visual Reproduction | Episodic memory | Wechsler (2009) |
| Paired Associations | Verbal comprehension | Wechsler (2009) |
| MMSE | Global cognition | Folstein et al. (1975) |
| T25FW | Gait | Motl et al. (2017) |
| 2MWT | Gait | Rossier and Wade (2001) |
| TUG | Gait, dynamic and static balance | Podsiadlo and Richardson (1991) |
| 9HPT | Hand-motor function, manual dexterity | Feys et al. (2017) |
| Purdue Pegboard | Hand-motor function, manual dexterity | Tiffin (1968) |
| Speeded Tapping/Alternating Tapping | Hand-motor function, manual dexterity | Stout et al. (2014), Prince et al. (2018), Westin et al. (2010) |
| Paced Tapping | Hand-motor function, manual dexterity | Stout et al. (2014) |
| Smartphone-based SDMT | Information processing speed, working memory | Pham et al. (2021) |
| Memory Test | Short-term memory | Prince et al. (2018) |
| Brain on Track | | |
| Attention task III | Attention, information processing speed | Ruano et al. (2020) |
| Visual memory task II | Visual memory, attention | Ruano et al. (2020) |
| Delayed verbal memory | Verbal memory | Ruano et al. (2020) |
| Calculus task | Calculus | Ruano et al. (2020) |
| Colour interference task | Executive function | Ruano et al. (2020) |
| Verbal memory II | Verbal memory | Ruano et al. (2020) |
| Opposite task | Executive function, inhibitory control | Ruano et al. (2020) |
| Written comprehension | Language comprehension, information processing speed | Ruano et al. (2020) |
| Word categories | Language | Ruano et al. (2020) |
| Sequences | Executive function | Ruano et al. (2020) |
| Puzzles | Visuospatial abilities | Ruano et al. (2020) |
| CANTAB | | |
| One Touch Stockings of Cambridge | Executive function | Giedraitiene and Kaubrys (2019) |
| Spatial Working Memory | Working memory | Giedraitiene and Kaubrys (2019) |
| Reaction Time Task | Information processing speed | Giedraitiene and Kaubrys (2019) |
| Paired Associates Learning | Visual memory | Giedraitiene and Kaubrys (2019) |
| MSReactor | | |
| Simple Reaction Time | Information processing speed | Merlo et al. (2019) |
| Choice Reaction Time | Visual attention | Merlo et al. (2019) |
| One-Back Test | Working memory | Merlo et al. (2019) |
| CogState | | |
| Detection Task | Information processing speed | Hammers et al. (2011) |
| Identification Task | Visual attention | Hammers et al. (2011) |
| One-Back Task | Working memory | Hammers et al. (2011) |
| One Card Learning | Visual recognition | Hammers et al. (2011) |
| Divided Attention | Divided attention | Hammers et al. (2011) |
| Associative Learning | Associative learning | Hammers et al. (2011) |
| Visual Search | Cognitive function, motor behavior | Utz et al. (2013) |
| MSPT | | |
| Manual Dexterity Test | Hand-motor function, manual dexterity | Rao et al. (2020) |
| Contrast Sensitivity Test | Vision | Rao et al. (2020) |
| Walking Speed Test | Gait | Rao et al. (2020) |
| Driving Simulator | Visual information integration | Teasdale et al. (2016) |

2MWT, Two-Minute Walk Test; 9HPT, Nine-Hole Peg Test; BVMT-R, Brief Visuospatial Memory Test-Revised; CANTAB, Cambridge Neuropsychological Test Automated Battery; CVLT, California Verbal Learning Test; HVLT, Hopkins Verbal Learning Test; MMSE, Mini-Mental State Examination; MSPT, Multiple Sclerosis Performance Test; PASAT, Paced Auditory Serial Addition Test; SDMT, Symbol Digit Modalities Test; SRT, Serial Reaction Time; T25FW, Timed 25-Foot Walk; TMT, Trail-Making Test; TUG, Timed Up and Go; WAIS, Wechsler Adult Intelligence Scale; WMS, Wechsler Memory Scale.

3.1. Identifying and quantifying practice effects

Several different approaches and metrics have been applied to identify practice effects, to quantify their magnitude and temporal dynamics, and to address potential biases in the interpretation of the data. These different approaches and metrics are summarized in Table S1 in the supplementary appendix.

3.1.1. Identifying practice effects

Descriptive statistics have been used to compare the change in performance between baseline and retest (Cohen et al., 2000, 2001; Duff et al., 2007, 2012; Duff and Hammers, 2022). However, it is more common to test the difference for statistical significance. Depending on the study design and the distribution of data, t-tests, Friedman's test, Wilcoxon rank test, ANOVA, ANCOVA or other general linear models have been used to identify practice effects (Bachoud-Lévi et al., 2001; Barker-Collo, 2005; Beglinger et al., 2014a, 2014b; Benedict, 2005; Benedict et al., 2008; Benninger et al., 2011, 2012; Bever et al., 1995; Buelow et al., 2015; Campos-Magdaleno et al., 2017; Claus et al., 1991; Duff et al., 2017, 2018; Eshaghi et al., 2012; Frank et al., 1996; Fuchs et al., 2020; Gallus and Mathiowetz, 2003; Gavett et al., 2016; Giedraitiene and Kaubrys, 2019; Glanz et al., 2012; Hammers et al., 2011; Merlo et al., 2019; Meyer et al., 2020; Nagels et al., 2008; Patzold et al., 2002; Pliskin et al., 1996; Rao et al., 2020; Reilly and Hynes, 2018; Rosti-Otajärvi et al., 2008; Ruano et al., 2020; Snowden et al., 2001; Solari et al., 2005; Sormani et al., 2019; Stout et al., 2014; Teasdale et al., 2016; Toh et al., 2014; Vogt et al., 2009; Westin et al., 2010). Alternatively, longitudinal data can be fitted with cubic splines to detect improvements over time that indicate practice effects (Merlo et al., 2019).
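As a minimal sketch of the baseline-versus-retest comparison, the code below computes a paired t statistic on retest minus baseline differences. To stay within the Python standard library, it pairs this with an exact sign-flip permutation p-value, which is a swapped-in technique rather than one reported in the studies reviewed here; in practice, `scipy.stats.ttest_rel` or `scipy.stats.wilcoxon` would typically be used. Higher scores are assumed to indicate better performance, and the data are hypothetical.

```python
import itertools
import math
import statistics


def paired_t(baseline, retest):
    """Paired t statistic for retest - baseline differences; returns (t, df)."""
    d = [r - b for b, r in zip(baseline, retest)]
    n = len(d)
    return statistics.mean(d) / (statistics.stdev(d) / math.sqrt(n)), n - 1


def sign_flip_p(baseline, retest):
    """Exact two-sided sign-flip permutation p-value for the mean difference.

    Under the null hypothesis of no practice effect, each paired difference is
    equally likely to be positive or negative, so all 2**n sign patterns are
    enumerated. This is only practical for small samples.
    """
    d = [r - b for b, r in zip(baseline, retest)]
    observed = abs(sum(d))
    extreme = 0
    for signs in itertools.product((1, -1), repeat=len(d)):
        if abs(sum(s * x for s, x in zip(signs, d))) >= observed - 1e-12:
            extreme += 1
    return extreme / 2 ** len(d)


baseline = [40, 35, 50, 42, 38, 45, 47, 39]  # hypothetical scores, higher is better
retest = [44, 37, 53, 45, 38, 49, 50, 42]
t, df = paired_t(baseline, retest)
print(f"t({df}) = {t:.2f}, sign-flip p = {sign_flip_p(baseline, retest):.4f}")
```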

Practice effects may also be identified indirectly. For example, a change in clinical diagnosis (for example, from mild cognitive impairment to cognitively healthy) can indicate the presence of practice effects (Duff et al., 2011). Similarly, patients who showed functional disability at baseline may, due to practice, improve their performance at retest sufficiently to no longer be classified as impaired (Schwid et al., 2007). Practice effects may also be inferred indirectly from a reliable change index analysis (Rosas et al., 2020). Because the reliable change index captures the expected change based on the change observed in a reference population, an improvement beyond this index suggests that the patient improved more than expected, which may indicate practice effects.

For the purpose of this review, any improvements in test performance that cannot be explained by other means such as interventional effects, functional recovery or decline etc. were considered to be practice effects.

3.1.2. Quantifying the magnitude of practice effects

A common approach to quantify practice effects is to compute their effect size. Available effect size metrics include Cohen's d, repeated-measures effect size, η² and partial η² (Beglinger et al., 2014a; Benedict, 2005; Benedict et al., 2008; Campos-Magdaleno et al., 2017; Duff et al., 2017; Eshaghi et al., 2012; Giedraitiene and Kaubrys, 2019; Gross et al., 2018; Hammers et al., 2011; Higginson et al., 2009; Rao et al., 2020; Stout et al., 2014; Vogt et al., 2009). Similarly, the change in test scores can be quantified in SD units (Elman et al., 2018; Erlanger et al., 2014; Gavett et al., 2016). Practice effects can also be quantified by computing the ratio between the test scores at retest and at baseline to obtain a progression ratio (Prince et al., 2018). An alternative approach is to estimate a reliable change index from a normative or reference population (Duff et al., 2017; Rosas et al., 2020; Turner et al., 2016; Utz et al., 2016). The reliable change index can be applied at the individual patient level and compared against the observed change. This results in a z-score that informs about the magnitude of practice effects relative to the expected practice effects. Z-scores greater than 1 indicate greater than expected practice effects. Non-parametric statistics can then be applied to assess between-group differences (Duff et al., 2017). Alternatively, cut-off values can be defined to classify patients into one of three groups: significant improvement, significant worsening or stable response (Duff et al., 2017; Rosas et al., 2020; Turner et al., 2016; Utz et al., 2016). Similarly, regression-based models can be used to predict test scores at retest. Such models can be built either with data obtained from a normative or reference population (Duff et al., 2014, 2018; Duff and Hammers, 2022) or from baseline scores and demographic data of the studied patient population (Duff et al., 2015, 2017). Comparing the predicted with the observed test scores at retest results in a z-score, similar to the reliable change index approach. Finally, slope-intercept models can be fitted to the test scores to estimate the average change over time (Britt et al., 2011).
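The metrics described above can be sketched in a few functions. This is an illustration under assumptions, not the exact formulas of the cited studies: Cohen's d is computed as mean change over baseline SD (one of several variants); the reliable change index follows a Chelune-style practice-adjusted form, with the standard error of the difference derived from a reference sample's baseline SD and test-retest correlation; the regression-based z uses an ordinary least-squares fit of retest on baseline scores in a reference sample; and the ±1.645 cut-off for the three-group classification is an illustrative value. All function names and numbers are hypothetical, and higher scores are assumed to be better.

```python
import math
import statistics


def cohens_d_change(baseline, retest):
    """Mean change divided by the baseline SD (one of several d variants)."""
    change = statistics.mean(retest) - statistics.mean(baseline)
    return change / statistics.stdev(baseline)


def progression_ratio(baseline_score, retest_score):
    """Retest score relative to baseline score (> 1 means a higher retest score)."""
    return retest_score / baseline_score


def practice_adjusted_rci(x1, x2, ref_mean_change, ref_sd_baseline, ref_retest_r):
    """Chelune-style reliable change index: the observed change minus the mean
    practice effect in a reference sample, divided by the standard error of
    the difference derived from the reference baseline SD and test-retest r."""
    sem = ref_sd_baseline * math.sqrt(1.0 - ref_retest_r)
    se_diff = math.sqrt(2.0) * sem
    return ((x2 - x1) - ref_mean_change) / se_diff


def regression_based_z(x1, x2, ref_baseline, ref_retest):
    """Standardized difference between the observed retest score and the score
    predicted by an OLS regression of retest on baseline in a reference sample."""
    mx, my = statistics.mean(ref_baseline), statistics.mean(ref_retest)
    sxx = sum((x - mx) ** 2 for x in ref_baseline)
    sxy = sum((x - mx) * (y - my) for x, y in zip(ref_baseline, ref_retest))
    slope = sxy / sxx
    intercept = my - slope * mx
    residuals = [y - (intercept + slope * x) for x, y in zip(ref_baseline, ref_retest)]
    see = math.sqrt(sum(e ** 2 for e in residuals) / (len(residuals) - 2))
    return (x2 - (intercept + slope * x1)) / see


def classify(z, cutoff=1.645):
    """Three-group classification; the cut-off is an illustrative value."""
    if z >= cutoff:
        return "significant improvement"
    if z <= -cutoff:
        return "significant worsening"
    return "stable"


# Hypothetical reference statistics and patient scores (higher is better)
z_rci = practice_adjusted_rci(40, 50, ref_mean_change=2.0, ref_sd_baseline=8.0, ref_retest_r=0.84)
z_reg = regression_based_z(40, 46, [30, 35, 40, 45, 50], [33, 37, 43, 47, 54])
print(round(z_rci, 2), classify(z_rci))
print(round(z_reg, 2), classify(z_reg))
print(cohens_d_change([40, 42, 44], [43, 45, 47]), progression_ratio(40, 50))
```

For timed tests, where lower values indicate better performance, the sign of the change would need to be reversed before classification.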

3.1.3. Estimating the temporal dynamics of practice effects

Besides quantifying the magnitude of practice effects, a few studies have also estimated the duration until steady-state performance is reached. In Prince et al. (2018), the steady-state index was computed as the first confirmed test iteration at which performance reached a predefined threshold. In contrast, Pham et al. (2021) fitted a biphasic, linear + linear learning curve model to the data, with the first phase capturing the practice phase and the second phase the steady-state performance phase. Using non-linear regression, they identified the change point that marked the end of the practice phase.
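Both approaches to temporal dynamics can be sketched as follows. The confirmation rule in the hypothetical `steady_state_index` (the score must stay at or above the threshold for `n_confirm` further tests) and the threshold itself are assumptions, since the exact definitions used by Prince et al. (2018) are not given here. The hypothetical `fit_hinge` fits a continuous linear + linear model and locates the change point by exhaustive search over the observed test iterations, with ordinary least squares at each candidate; this is a simple substitute for the non-linear regression used by Pham et al. (2021), not their method. Function names and data are hypothetical, and higher scores are assumed to be better.

```python
def steady_state_index(scores, threshold, n_confirm=1):
    """1-based index of the first test at which the score reaches `threshold`
    and stays there for `n_confirm` further tests (an assumed confirmation
    rule); None if this never happens. Higher scores are assumed to be better."""
    for i in range(len(scores) - n_confirm):
        if all(s >= threshold for s in scores[i:i + n_confirm + 1]):
            return i + 1
    return None


def _solve(a, b):
    """Solve the small linear system a @ x = b (Gaussian elimination, partial pivoting)."""
    n = len(b)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def fit_hinge(x, y):
    """Continuous linear + linear fit: y = a + b*x + c*max(0, x - cp).

    The change point cp is searched over the observed iterations, keeping at
    least two iterations up to and including cp and two after it. For each
    candidate, a, b and c are estimated by ordinary least squares. Returns
    (sse, cp, [a, b, c]); the practice-phase slope is b and the steady-state
    slope is b + c.
    """
    best = None
    for cp in x[1:-2]:
        rows = [[1.0, xi, max(0.0, xi - cp)] for xi in x]
        xtx = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
        xty = [sum(r[i] * yi for r, yi in zip(rows, y)) for i in range(3)]
        params = _solve(xtx, xty)
        sse = sum((yi - sum(p * v for p, v in zip(params, r))) ** 2 for r, yi in zip(rows, y))
        if best is None or sse < best[0]:
            best = (sse, cp, params)
    return best


iterations = list(range(1, 13))  # hypothetical weekly test iterations
scores = [23, 27, 29, 33, 34, 35, 36, 35, 36, 36, 35, 36]
sse, change_point, (a, b, c) = fit_hinge(iterations, scores)
print("change point:", change_point, "slopes:", round(b, 2), round(b + c, 2))
print("steady-state index:", steady_state_index(scores, threshold=34))
```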

3.1.4. Addressing biases

Finally, a few analyses attempted to account for various biases. These include the attrition effect (Elman et al., 2018), which describes the bias introduced by patients lost to follow-up, and the reduced capacity for practice effects among patients with high test performance at baseline (Gross et al., 2018; Sormani et al., 2019).

3.2. Practice effects

Across all four disease areas, certain performance outcome measures, or tests, were more prone to practice effects than others (Table 3). Among tests assessing information processing speed, the Paced Auditory Serial Addition Test (PASAT) was most strongly associated with practice effects, possibly because of its stronger working memory component and greater complexity. Among tests of executive function, the Trail-Making Test B (TMT-B) was more likely to produce practice effects than the Stroop Interference Test; the inhibitory processes involved in performing the Stroop Interference Test might make it less prone to practice effects. On tests of learning and memory or visuospatial memory, such as the Hopkins Verbal Learning Test (HVLT) or the Brief Visuospatial Memory Test-Revised (BVMT-R), practice effects were more common when the same form was used, suggesting that practice effects on these tests are mostly driven by item learning. In addition, on the BVMT-R, delayed recall was more often associated with practice effects than immediate recall; this was not observed on the HVLT.

Table 3.

Number of publications reporting practice effects.

| Performance outcome measureᵃ | Functional domain | Practice effectsᵇ: continuous or initial | Practice effectsᵇ: inconclusive | Practice effectsᵇ: no |
|---|---|---|---|---|
| SDMT | Information processing speed, working memory | 7 | 5 | 5 |
| PASAT | Information processing speed, working memory | 13 | 1 | 1 |
| TMT-A | Information processing speed | 2 | 4 | 2 |
| TMT-B | Executive function | 3 | 5 | 4 |
| Stroop Word | Information processing speed | 3 | 1 | 2 |
| Stroop Color | Information processing speed | 3 | 0 | 2 |
| Stroop Interference | Executive function | 1 | 0 | 4 |
| BVMT-R total recall | Visuospatial memory | 3 | 5 | 1 |
| BVMT-R delayed recall | Visuospatial memory | 5 | 3 | 1 |
| CVLT total recall | Learning & memory | 2 | 1 | 3 |
| CVLT delayed recall | Learning & memory | 3 | 1 | 2 |
| HVLT total recall | Learning & memory | 3 | 4 | 0 |
| HVLT delayed recall | Learning & memory | 2 | 3 | 0 |
| Digit Span | Working memory | 2 | 1 | 3 |
| Logical Memory | Learning & memory | 2 | 0 | 4 |
| MMSE | Global cognition | 1 | 2 | 1 |
| T25FW | Gait | 1 | 0 | 5 |
| 9HPT | Hand-motor function, manual dexterity | 4 | 0 | 1 |

9HPT, Nine-Hole Peg Test; BVMT-R, Brief Visuospatial Memory Test-Revised; CVLT, California Verbal Learning Test; HVLT, Hopkins Verbal Learning Test; MMSE, Mini-Mental State Examination; PASAT, Paced Auditory Serial Addition Test; SDMT, Symbol Digit Modalities Test; T25FW, Timed 25-Foot Walk; TMT, Trail-Making Test; VR, Visual Reproduction.

ᵃ Only performance outcome measures reported in at least 4 studies are included in this analysis.

3.3. Practice effects in patients with multiple sclerosis

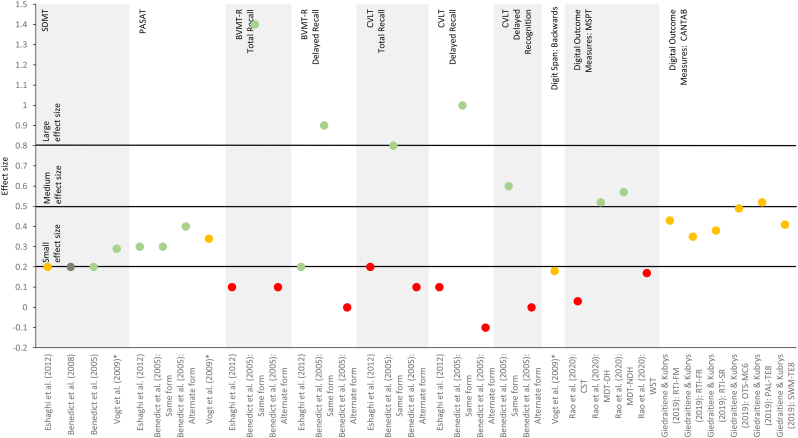

The literature search revealed 27 studies on practice effects in multiple sclerosis. Details on study design and presence of practice effects are summarized in Table 4. Effect sizes (Cohen's d) are depicted in Figure 3.

Table 4.

Practice effects in patients with multiple sclerosis.

| Study | Sample size (cohort of interest) | Sample size (add. cohort) | Study type | Follow-up duration | # test iterations | Practice effects in cohort of interestᵃ: continuous effects | Practice effects in cohort of interestᵃ: initial effects | Practice effects in cohort of interestᵃ: inconclusive | Practice effects in cohort of interestᵃ: no improvement | Comment |
|---|---|---|---|---|---|---|---|---|---|---|
| Barker-Collo (2005) | | | LO | Single session | 2 | | | | | Practice effects on the PASAT were observed for the 2.0-, 1.6-, and 1.2-second presentation, but not for the 2.4-second presentation. |
| Benedict et al. (2005) | | | LO | 1 week | 2 | | | | | Practice effects on the BVMT-R and CVLT were observed only with the same form. |
| Benedict et al. (2008) | | | LO | 5 months | 6 | | | | | An ANOVA was conducted to investigate the main effect over time among patients with multiple sclerosis. |
| Bever et al. (1995) | | | RCT | 16 weeks | 5 | | | | | All patients randomized to the active treatment arm had been off the study drug (3,4-diaminopyridine) for at least 30 days at the time of each evaluation. |
| Cohen et al. (2000) | | | LO | 6 months | 8 | | | | | |
| Cohen et al. (2001) | | | RCT | 28 days | 4 | | | | | Practice effects were assessed during a run-in period prior to randomization. |
| Erlanger et al. (2014) | | | LO | 45 days | 2 | | | | | Results are reported for a composite score. |
| Eshaghi et al. (2012) | | | LO | Mean (SD) of 10.8 (3.78) days | 2 | | | | | A total of 158 patients were recruited, of whom 41 were included in the practice effects analysis. A trend towards improvement was observed on the SDMT. |
| Fuchs et al. (2020) | | | LO | 16 years | ≤10 | | | | | |
| Gallus and Mathiowetz (2003) | | | LO | 1 week | 2 | | | | | |
| Giedraitiene and Kaubrys (2019) | | | LO | 3 months | 3 | | | | | Practice effects were only assessed in patients with relapsing MS. Functional recovery and practice effects may have occurred concurrently in relapsing MS. |
| Glanz et al. (2012) | | | LO | 5 years | 7 | | | | | |
| Merlo et al. (2019) | | | LO | 18 months | ≤10 | | | | | A total of 450 patients with MS were recruited and completed their baseline assessment; practice effects were assessed in a subset of 328 patients with MS who completed up to 10 assessments. |
| Meyer et al. (2020) | | | LO | 4–5 weeks | 4 | | | | | Practice effects are reported for 8 patients with MS (2 patients with MS were excluded due to muscle exhaustion/pain). |
| Nagels et al. (2008) | | | LO | Single session | 2 | | | | | |
| Patzold et al. (2002) | | | NRI | 20 days | 3 | | | | | |
| Pham et al. (2021) | | | LO | ≥20 weeks | ≥20 | | | | | A total of 154 patients and 39 healthy controls were recruited, of whom 15 patients and 1 healthy control were included in the practice effects analysis. |
| Pliskin et al. (1996)ᵇ | | | RCT | 2 years | 2 | | | | | A main effect of time was observed for improvement on the Stroop Word Test and WMS Visual Reproduction (immediate recall); improvement on WMS Visual Reproduction (delayed recall) was associated with high-dose IFN-β-1b. |
| Rao et al. (2020) | | | LO | Single session | 2 | | | | | |
| Reilly and Hynes (2018) | | | NRI | 18 weeks | 3 | | | | | Observed improvements on were associated with cognitive rehabilitation; improvements on the SDMT and the TMT-A did not reach statistical significance. |
| Rosti-Otajärvi et al. (2008) | | | LO | 4 weeks | 5 | | | | | |
| Ruano et al. (2020) | | | LO | 3 months | 4 | | | | | |
| Schwid et al. (2007) | | | RCT with OLE | 10 years | 3 | | | | | A total of 251 patients were initially randomized, of whom 153 had 10-year follow-up data and were included in the analyses. Improvements at year 2 were independent of initial treatment allocation. |
| Solari et al. (2005) | | | LO | Single session | 6 | | | | | T25FW |
| Sormani et al. (2019) | | | RCT | 2 weeks | 3 | | | | | Practice effects were assessed during a run-in period prior to randomization. |
| Utz et al. (2016) | | | NRI | 1 year | 3 | | | | | Initially 73 patients were recruited, of whom 41 had follow-up data and did not switch therapy. |
| Vogt et al. (2009) | | | NRI | 4–8 weeks | 3 | | | | WMS: Digit Span (forward) | Improvements on PASAT and Digit Span (backwards) were associated with additional cognitive training. |

9HPT, Nine-Hole Peg Test; Add., additional; Approx., approximately; BVMT-R, Brief Visuospatial Memory Test-Revised; CANTAB, Cambridge Neuropsychological Test Automated Battery; CVLT, California Verbal Learning Test; HC, healthy controls; MS, multiple sclerosis; MSPT, Multiple Sclerosis Performance Test; PASAT, Paced Auditory Serial Addition Test; RCT, randomized controlled trial; SDMT, Symbol Digit Modalities Test; T25FW, Timed 25-Foot Walk; TMT-A/B, Trail-Making Test A/B; TUG, Timed Up and Go; WMS, Wechsler Memory Scale.

‘Continuous effects’ is defined as a continuous improvement in test performance for ≥4 test administrations, with test performance continuing to improve up to the last test administered. By definition this can only be applied to studies that administered the test at least 4 times. In all other instances, practice effects are described as either ‘initial effect’ if clear signs of practice effects were evident; ‘inconclusive’ if practice effects were observed for a selection of test metrics, in a subgroup of patients only, or if other reasons may explain the improvement in test performance (for example, due to contribution of other tests in composite scores, or association with additional training or treatment etc.); or ‘no effect’ if no improvement in test performance was observed.

Results of the repeated assessments were not consistently reported for the placebo cohort; hence outcomes for the total cohort are reported.
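The categories defined above were assigned by qualitative review of each study. Purely as an illustration, the sketch below shows one way they could be operationalized for a sequence of group-mean scores across administrations. The function name, the `min_gain` tolerance and the `confounded` flag (a stand-in for the judgement that additional training or treatment, composite scoring or a subgroup-only effect may explain an improvement) are hypothetical and do not reproduce the review's procedure.

```python
from typing import Sequence


def classify_practice_effect(
    scores: Sequence[float],
    higher_is_better: bool = True,
    confounded: bool = False,
    min_gain: float = 0.0,
) -> str:
    """Hypothetical mapping of mean scores across administrations to the review's categories."""
    if len(scores) < 2:
        raise ValueError("at least two test administrations are required")
    # Orient scores so that a positive difference always means better performance
    # (e.g. shorter completion times on timed tests count as improvement).
    oriented = [s if higher_is_better else -s for s in scores]
    steps = [b - a for a, b in zip(oriented, oriented[1:])]
    improved = (oriented[-1] - oriented[0]) > min_gain or max(steps) > min_gain
    if not improved:
        return "no effect"
    if confounded:
        # Improvement is present, but other explanations cannot be excluded.
        return "inconclusive"
    # 'Continuous' requires >= 4 administrations with every step improving up to the last one.
    if len(oriented) >= 4 and all(step > min_gain for step in steps):
        return "continuous"
    return "initial"


# Example: a timed test (lower is better) administered three times.
print(classify_practice_effect([30.0, 28.0, 27.0], higher_is_better=False))  # prints "initial"
```

Because only three administrations are available in this example, the steady improvement can at most be labelled an initial effect, which mirrors the rule that continuous effects require at least four test administrations.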

Figure 3.

Effect sizes (Cohen's d, unless otherwise noted) for observed changes between test iterations in patients with multiple sclerosis. Studies (horizontal axis) that reported effect sizes for individual performance outcome measures are shown in the figure. Studies that did not report effect sizes or reported effect sizes for composite scores are not included in this figure. Dark green dots indicate continuous practice effects, light green dots initial practice effects, yellow dots inconclusive effects and red dots absence of practice effects, as defined in Table 4. Small, medium and large effect sizes are defined as d = 0.2, d = 0.5 and d = 0.8, respectively, and apply to Cohen's d only (Cohen, 1992). ∗Partial η². BVMT-R, Brief Visuospatial Memory Test-Revised; CANTAB, Cambridge Neuropsychological Test Automated Battery; CST, Contrast Sensitivity Test; CVLT, California Verbal Learning Test; MDT-DH, Dominant-handed Manual Dexterity Test; MDT-NDH, Non-dominant-handed Manual Dexterity Test; MSPT, Multiple Sclerosis Performance Test; OTS-MC6, One Touch Stockings of Cambridge with 6 moves; PAL-TE8, Total error at 8-figure stage of the Paired Associates Learning; PASAT, Paced Auditory Serial Addition Test; RTI-FM, Five-choice movement time; RTI-FR, Five-choice reaction time; RTI-SR, Simple reaction time; SDMT, Symbol Digit Modalities Test; SWM-TE8, Total error for 8 boxes of Spatial Working Memory; WST, Walking Speed Test.

Repeated assessment of information processing speed was likely to result in practice effects. Both the traditional, clinician-administered and the smartphone-based Symbol Digit Modalities Test (SDMT) produced practice effects in most studies, although these were minimal and smaller than those observed in healthy controls (Cohen's d: 0.2 vs 0.8) (Benedict, 2005; Benedict et al., 2008; Eshaghi et al., 2012; Glanz et al., 2012; Pham et al., 2021; Reilly and Hynes, 2018; Schwid et al., 2007; Vogt et al., 2009). Only Fuchs et al. (2020) and Bever et al. (1995) noted an absence of practice effects. However, in Fuchs et al. (2020), all patients had been exposed to the SDMT before enrolment, which might have limited the ability to detect practice effects. Practice effects were also common on the PASAT, with both short inter-test intervals (every two weeks or shorter) and long inter-test intervals (every month or longer) (Barker-Collo, 2005; Benedict, 2005; Bever et al., 1995; Cohen et al., 2000, 2001; Eshaghi et al., 2012; Glanz et al., 2012; Nagels et al., 2008; Rosti-Otajärvi et al., 2008; Schwid et al., 2007; Solari et al., 2005; Sormani et al., 2019; Utz et al., 2016). Compared with the SDMT, PASAT-related practice effects were larger in magnitude (Cohen's d: 0.3–0.4 vs 0.2) (Benedict, 2005; Eshaghi et al., 2012). A trend towards improved test scores was also noted on the TMT-A in Reilly and Hynes (2018); however, all patients underwent cognitive rehabilitation before retest. Practice effects were also discernible on the Word but not the Color subtest of the Stroop Test (Pliskin et al., 1996).
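The effect sizes quoted here are Cohen's d values interpreted against Cohen's (1992) benchmarks. Several conventions exist for paired test–retest data, so the minimal sketch below assumes the d_av variant (mean change divided by the average of the standard deviations at the two time points); the reviewed studies may have used other variants, such as d_z (based on the standard deviation of the difference scores), which can give noticeably different values for the same data. The function names are illustrative.

```python
import statistics
from typing import Sequence


def cohens_d_av(first: Sequence[float], second: Sequence[float]) -> float:
    """Standardized mean change between two test iterations (d_av convention, an assumption)."""
    if len(first) != len(second) or len(first) < 2:
        raise ValueError("need paired samples with n >= 2")
    mean_change = statistics.fmean(second) - statistics.fmean(first)
    # Average the two variances; other conventions (e.g. d_z) would use the SD of the differences.
    sd_av = ((statistics.stdev(first) ** 2 + statistics.stdev(second) ** 2) / 2) ** 0.5
    return mean_change / sd_av


def label_effect(d: float) -> str:
    """Cohen's (1992) benchmarks: 0.2 small, 0.5 medium, 0.8 large."""
    magnitude = abs(d)
    if magnitude >= 0.8:
        return "large"
    if magnitude >= 0.5:
        return "medium"
    if magnitude >= 0.2:
        return "small"
    return "negligible"


# Hypothetical scores at the first and second administration for five participants.
d = cohens_d_av([40, 45, 50, 55, 60], [42, 47, 52, 57, 62])
print(round(d, 2), label_effect(d))  # prints "0.25 small"
```

For timed tests, where lower values mean better performance, a practice effect appears as a negative d with this sign convention; the magnitude label is unaffected.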

There was little evidence of practice effects on tests of executive function. On the TMT-B, subtle practice effects were observed in Reilly and Hynes (2018), but not in Pliskin et al. (1996). By comparison, repeated testing with the Stroop Interference test did not result in practice effects (Pliskin et al., 1996).

Practice effects were common on assessments of learning and memory or visuospatial memory. Both the California Verbal Learning Test (CVLT) and the BVMT-R produced practice effects. This was particularly evident if the same form was used (Benedict, 2005; Eshaghi et al., 2012). On the Visual Reproduction test, improvement in performance was independent of treatment allocation only on immediate recall but not on delayed recall (Pliskin et al., 1996). On the latter, improved test scores were observed only in patients receiving high-dose interferon-β. Finally, the digital Visual Search test was not associated with practice effects (Utz et al., 2016).

On the Digit Span test, a measure of working memory, improved test scores were only observed in the backward condition (Vogt et al., 2009). However, this improvement was associated with additional cognitive training.

On digital cognitive batteries, practice effects were observed on the Brain on Track test (Ruano et al., 2020), the MSReactor (Merlo et al., 2019) and the Cambridge Neuropsychological Test Automated Battery (CANTAB) (Giedraitiene and Kaubrys, 2019), with larger practice effects associated with more demanding tasks (Giedraitiene and Kaubrys, 2019; Merlo et al., 2019).

On gait and balance tests, short-term practice effects were reported on the Timed Up and Go (Meyer et al., 2020). In contrast, most studies showed an absence of practice effects on the Timed 25-Foot Walk (T25FW) (Cohen et al., 2000, 2001; Patzold et al., 2002; Rosti-Otajärvi et al., 2008; Solari et al., 2005), Two-Minute Walk Test (2MWT) (Meyer et al., 2020) and the digital Walking Speed Test (Rao et al., 2020); only Meyer et al. (2020) demonstrated discernable practice effects on the T25FW.

On assessments of hand-motor function, practice effects were observed on the digital Manual Dexterity Test (Rao et al., 2020) and Nine-Hole Peg Test (9HPT) (Cohen et al., 2000, 2001; Rosti-Otajärvi et al., 2008; Solari et al., 2005). However, in Patzold et al. (2002), 9HPT-related improvements were only observed in those patients receiving active treatment for acute relapse. By comparison, practice effects were unlikely to occur on the Purdue Pegboard, especially when each hand was considered separately (Gallus and Mathiowetz, 2003; Pliskin et al., 1996).

Finally, there was no evidence of practice effects on the Contrast Sensitivity Test (Rao et al., 2020).

3.4. Practice effects in patients with Parkinson's disease

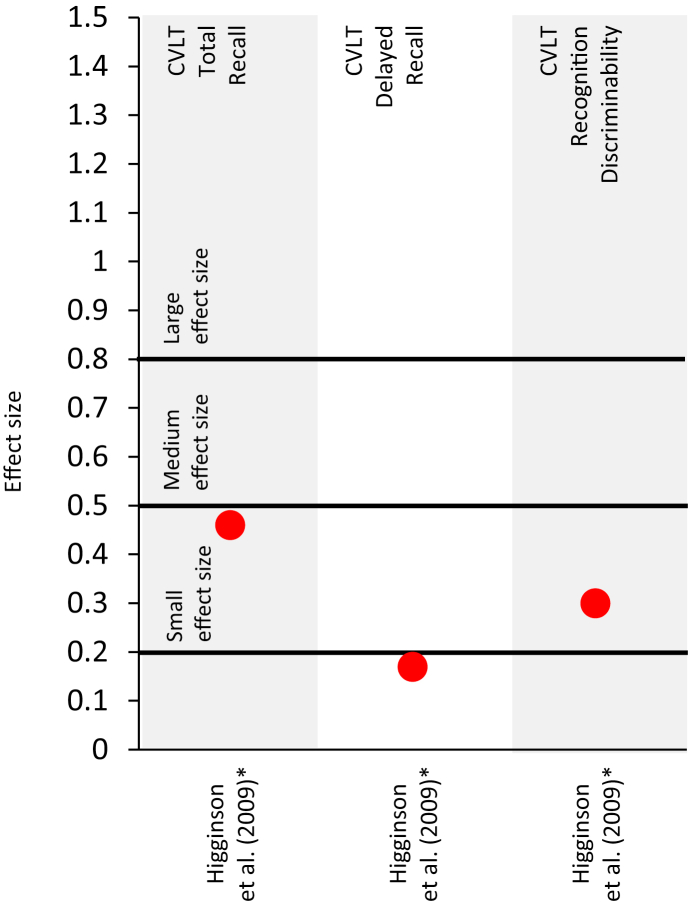

The literature search revealed seven studies on practice effects in Parkinson's disease. Details on study design and presence of practice effects are summarized in Table 5. Only one study reported effect sizes (Cohen's d), which are depicted in Figure 4.

Table 5.

Practice effects in patients with Parkinson's disease.

| Study | Study type | Follow-up duration | # test iterations | Comment |
|---|---|---|---|---|
| Benninger et al. (2011) | RCT | 1 month | 3 | Practice effects were independent of the intervention. |
| Benninger et al. (2012) | RCT | 1 month | 3 | Practice effects were independent of the intervention. |
| Buelow et al. (2015) | RCT | 10–16 weeks | 2 | Practice effects were only assessed in the placebo cohort. |
| Higginson et al. (2009) | NRI | Mean (SD) of 15.7 (5.6) months | 2 | |
| Prince et al. (2018) | LO | 6 months | ≥20 Tapping tests; ≥10 memory tests | |
| Turner et al. (2016) | RCT | 10 weeks | 2 | Practice effects were only assessed in the placebo cohort. |
| Westin et al. (2010) | NRI | 1–6 weeks | 28–168 (4× per day) | No difference was observed between the first three days and the remaining days. |

Add., additional; CVLT, California Verbal Learning Test; HC, healthy controls; iTBS, intermittent theta-burst stimulation; LO, longitudinal observational; MCI, mild cognitive impairment; NRI, non-randomized interventional; PD, Parkinson's disease; RCT, randomized controlled trial; rTMS, repetitive transcranial magnetic stimulation; SDMT, Symbol Digit Modalities Test; WAIS, Wechsler Adult Intelligence Scale; YHC, young healthy controls.

‘Continuous effects’ is defined as a continuous improvement in test performance for ≥4 test administrations, with test performance continuing to improve up to the last test administered. By definition this can only be applied to studies that administered the test at least 4 times. In all other instances, practice effects are described as either ‘initial effect’ if clear signs of practice effects were evident; ‘inconclusive’ if practice effects were observed for a selection of test metrics, in a subgroup of patients only, or if other reasons may explain the improvement in test performance (contribution of other tests in composite scores, association with additional training or treatment etc.); or ‘no effect’ if no improvement in test performance was observed.

Figure 4.

Effect sizes (Cohen's d) for observed changes between test iterations in patients with Parkinson's disease. Studies that did not report effect sizes or reported effect sizes for composite scores are not included in this figure. Red dots indicate absence of practice effects, as defined in Table 5. Small, medium and large effect sizes are defined as d = 0.2, d = 0.5 and d = 0.8, respectively (Cohen, 1992). CVLT, California Verbal Learning Test. ∗Effect size indicates a worsening in test performance.

Most of these studies did not reveal any practice effects, whether on the CVLT (Higginson et al., 2009), the Digit Span Test (Turner et al., 2016), the Similarities Test (Turner et al., 2016) or the digital Tapping Test (Westin et al., 2010). On the Serial Reaction Time test, two studies reported improvements (reduced reaction times) suggestive of practice effects (Benninger et al., 2011, 2012), whereas Buelow et al. (2015) noted a worsening at retest.

This does not preclude the possibility that a subgroup of patients shows signs of practice effects. In fact, Prince et al. (2018) identified three subgroups of patients on both the Alternating Tapping Test and the Memory Test included in the mPower dataset: those who improved over time by at least 20%, those who deteriorated over time by at least 20% and those who remained stable.
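A minimal sketch of this kind of trajectory-based subgrouping follows. The 20% threshold comes from the text; comparing the mean of the first and last `window` sessions, the default of three sessions and the function name are assumptions for illustration and not necessarily how Prince et al. (2018) defined change.

```python
import statistics
from typing import Sequence


def trajectory_group(
    scores: Sequence[float],
    threshold: float = 0.20,
    window: int = 3,
    higher_is_better: bool = True,
) -> str:
    """Hypothetical rule: relative change between early and late session means."""
    if len(scores) < 2 * window:
        raise ValueError("need at least 2 * window sessions")
    early = statistics.fmean(scores[:window])
    late = statistics.fmean(scores[-window:])
    if early == 0:
        raise ValueError("early mean must be non-zero for a relative change")
    rel_change = (late - early) / abs(early)
    if not higher_is_better:
        # For timed tests a decrease in time is an improvement.
        rel_change = -rel_change
    if rel_change >= threshold:
        return "improver"
    if rel_change <= -threshold:
        return "decliner"
    return "stable"


# Hypothetical tapping counts over six sessions: early mean 100, late mean 125.
print(trajectory_group([100, 102, 98, 120, 125, 130]))  # prints "improver"
```

Averaging over a few sessions at each end is one way to damp day-to-day variability in frequent remote testing; a single first-versus-last comparison would be more sensitive to noisy individual sessions.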

3.5. Practice effects in patients with Huntington's disease

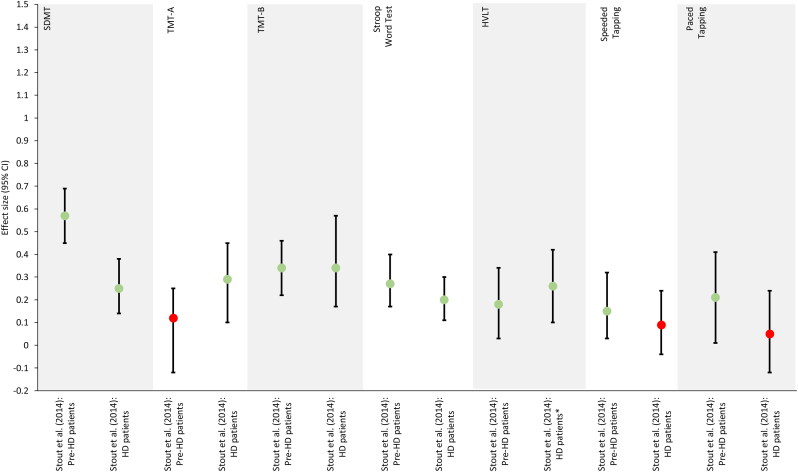

The literature search revealed seven studies on practice effects in Huntington's disease. Details on study design and presence of practice effects are summarized in Table 6. Only one study reported effect sizes (Cohen's d), which are depicted in Figure 5.

Table 6.

Practice effects in patients with Huntington's disease.

| Study | Study type | Follow-up duration | # test iterations | Comment |
|---|---|---|---|---|
| Bachoud-Lévi et al. (2001) | LO | 2–4 years | 3–5 | |
| Beglinger et al. (2014a) | RCT | ≤24 hours and ≥6 days (mean [SE]: 20.4 [2.2] days) | 2 | Practice effects were assessed prior to randomization. Initial effects on the SDMT were observed only with the longer inter-test interval. |
| Beglinger et al. (2014b) | RCT | 20 weeks | 6 | Results are reported for a composite score. |
| Duff et al. (2007) | LO | Mean (SD) of 220 (122) days | 2 | |
| Snowden et al. (2001) | LO | 1–3 years | 2–4 | |
| Stout et al. (2014) | LO | 5–7 weeks | 3 | Practice effects were only assessed in patients with HD or pre-HD, but not in HC. Practice effects on the TMT-A were observed only in HD patients, while practice effects on the Speeded Tapping and Paced Tapping tests were only observed in pre-HD patients. |
| Toh et al. (2014) | LO | 12 months | 2 | Composite scores were computed based on performances on the SDMT, TMT-A/B, Stroop Test, BVMT-R, CVLT and Digit Span test. |

Add., additional; BVMT-R, Brief Visuospatial Memory Test-Revised; CVLT, California Verbal Learning Test; HC, healthy controls; HD, Huntington's disease; HVLT, Hopkins Verbal Learning Test; LO, longitudinal observational; MMSE, Mini-Mental State Examination; pre-HD, pre-manifest Huntington's disease; RCT, randomized controlled trial; SDMT, Symbol Digit Modalities Test; TMT, Trail-Making Test; WAIS, Wechsler Adult Intelligence Scale.

‘Continuous effects’ is defined as a continuous improvement in test performance for ≥4 test administrations, with test performance continuing to improve up to the last test administered. By definition this can only be applied to studies that administered the test at least 4 times. In all other instances, practice effects are described as either ‘initial effect’ if clear signs of practice effects were evident; ‘inconclusive’ if practice effects were observed for a selection of test metrics, in a subgroup of patients only, or if other reasons may explain the improvement in test performance (contribution of other tests in composite scores, association with additional training or treatment etc.); or ‘no effect’ if no improvement in test performance was observed.

Figure 5.

Repeated-measures effect sizes of the observed changes between test iterations in Huntington's disease obtained from Stout et al. (2014). Light green dots indicate initial practice effects. Red dots indicate an absence of practice effects, as defined in Table 6. ∗Effect size reported for the change observed between the second and third test iteration rather than between the first and second test iteration. CVLT, California Verbal Learning Test; HD, Huntington's disease; HVLT, Hopkins Verbal Learning Test; pre-HD, pre-manifest Huntington's disease; SDMT, Symbol Digit Modalities Test; TMT, Trail-Making Test.

The practice effects analyses revealed mostly mixed results. On the SDMT, for example, practice effects were observed in two studies, with patients with pre-manifest Huntington's disease showing larger practice effects than patients with manifest Huntington's disease (Beglinger et al., 2014a; Stout et al., 2014). However, one study did not find any discernable practice effects (Duff et al., 2007). On the TMT-A, larger practice effects were observed in patients with manifest Huntington's disease as opposed to pre-manifest Huntington's disease (Stout et al., 2014). Mixed results were also obtained on the Stroop Word Test (Beglinger et al., 2014a; Snowden et al., 2001; Stout et al., 2014) and the Stroop Color Test (Beglinger et al., 2014a; Snowden et al., 2001).

By comparison, the initial improvement on the TMT-B observed within 1–3 days was of similar magnitude in patients with pre-manifest or with manifest Huntington's disease (Stout et al., 2014). When assessed annually, the initial gain was followed by a decline in performance, which likely reflected a progression of the disease (Bachoud-Lévi et al., 2001). Analyses of the Stroop Interference Test revealed mixed results (Beglinger et al., 2014a; Duff et al., 2007; Snowden et al., 2001).

On the HVLT, practice effects were found for patients with either pre-manifest or manifest Huntington's disease (Stout et al., 2014). On the Speeded Tapping Test and Paced Tapping Test, however, practice effects were observed in patients with pre-manifest but not with manifest Huntington's disease (Stout et al., 2014).

The Digit Span test was generally not associated with practice effects, in particular in the forward condition (Bachoud-Lévi et al., 2001; Snowden et al., 2001), although practice effects were reported in the backward condition (Bachoud-Lévi et al., 2001). Similarly, repeated testing with the Mini-Mental State Examination (MMSE) did not result in practice effects (Toh et al., 2014).

A few studies also examined practice effects on composite scores. Practice effects were observed for a composite score that included the Letter-Number Sequencing Test (Beglinger et al., 2014b). Mixed results were obtained for composite scores that included the SDMT, the TMT or the Stroop Test (Beglinger et al., 2014b; Toh et al., 2014). Finally, neither the BVMT-R, the CVLT nor the Digit Span Test was associated with practice effects when included in composite scores (Toh et al., 2014).

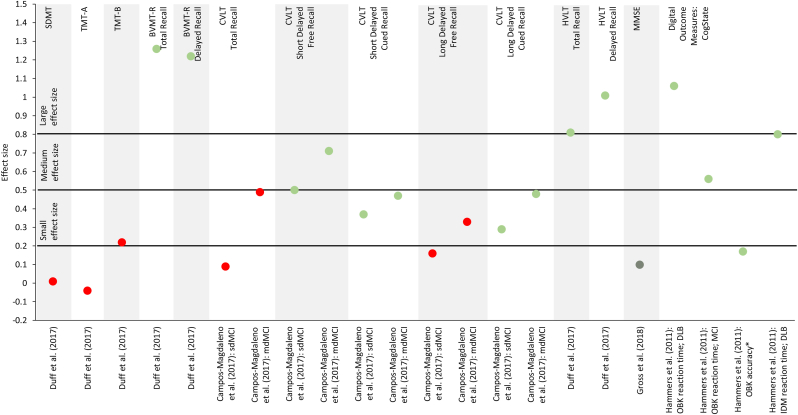

3.6. Practice effects in patients with mild cognitive impairment, Alzheimer's disease or other forms of dementia

The literature search revealed 18 studies on practice effects in mild cognitive impairment, Alzheimer's disease and other forms of dementia. Details on study design and presence of practice effects are summarized in Table 7, and effect sizes (Cohen's d) are depicted in Figure 6.

Table 7.

Practice effects in patients with either mild cognitive impairment, Alzheimer's disease or other forms of dementia.

| Study | Study type | Follow-up duration | # test iterations | Comment |
|---|---|---|---|---|
| Britt et al. (2011) | LO | 60 months | 2–8 | |
| Campos-Magdaleno et al. (2017) | LO | 18 months | 2 | Practice effects for total recall and long delayed free recall were observed only in patients with SMC, but not in patients with MCI. |
| Claus et al. (1991) | LO | 2 weeks | 3 | |
| Duff and Hammers (2022) | LO | Mean (SD) of 1.3 (0.1) years | 2 | All observed follow-up scores were compared against predicted scores. |
| Duff et al. (2007) | LO | 2 weeks | 2 | Lack of statistical testing. |
| Duff et al. (2011) | LO | 1 week | 2 | Additional cohort: HC (n = 57). Lack of statistical testing. |
| Duff et al. (2012) | LO | Single session | 2 | Lack of statistical testing. |
| Duff et al. (2014) | LO | 1 week | 2 | |
| Duff et al. (2015) | LO | Approx. 1 week | 2 | Lack of statistical testing. |
| Duff et al. (2017) | LO | 1 week | 2 | |
| Duff et al. (2018) | LO | Approx. 1 week | 2 | |
| Elman et al. (2018) | LO | 6 years | 2 | A trend towards improvement was observed on the Stroop Word Test, Digit Span (forward condition only), Visual Reproduction Test (immediate recall only) and the Matrix Reasoning test. |
| Frank et al. (1996) | LO | Approx. 2.4 years | 2 | Practice effects on the Visual Reproduction test were only observed in patients with MCI, but not in patients at risk of developing AD. On the TMT-B, Visual Reproduction (delayed recall in AD patients) and MMSE, practice effects were only observed in specific sex subgroups (male vs female). |
| Gavett et al. (2016) | LO | 5 years | 5 | Practice effects were only observed in patients with MCI, but not in patients with AD. |
| Gross et al. (2018) | LO | 2.4 years | ≤7 | A random effects model analysis revealed an overall main retest (practice) effect over time. |
| Hammers et al. (2011) | LO | Single session | 2 | Practice effects on the OBK reaction time task were observed only in patients with MCI or DLB, while practice effects on the IDM reaction time task were observed only in patients with DLB. |
| Rosas et al. (2020) | LO | Mean (SD) of 25.96 (11.28) months | 2 | Practice effects were indirectly inferred from reliable change index analyses. |
| Teasdale et al. (2016) | LO | 6 months | 6 | Practice effects were observed only during the training phase, in which feedback was given. |

AD, Alzheimer's disease; Approx., approximately; BVMT-R, Brief Visuospatial Memory Test-Revised; CVLT, California Verbal Learning Test; DLB, dementia with Lewy bodies; FTD, frontotemporal dementia; HC, healthy controls; HVLT, Hopkins Verbal Learning Test; IDM, divided attention task; LO, longitudinal observational; MCI, mild cognitive impairment; MMSE, Mini-Mental State Examination; OBK, One-Back Test; SCD, subjective cognitive decline; SDMT, Symbol Digit Modalities Test; SMC, subjective memory complaint; TMT, Trail-Making Test; WAIS, Wechsler Adult Intelligence Scale; WASI, Wechsler Abbreviated Scale of Intelligence; WMS, Wechsler Memory Scale.

‘Continuous effects’ is defined as a continuous improvement in test performance for ≥4 test administrations, with test performance continuing to improve up to the last test administered. By definition this can only be applied to studies that administered the test at least 4 times. In all other instances, practice effects are described as either ‘initial effect’ if clear signs of practice effects were evident; ‘inconclusive’ if practice effects were observed for a selection of test metrics, in a subgroup of patients only, or if other reasons may explain the improvement in test performance (contribution of other tests in composite scores, association with additional training or treatment etc.); or ‘no effect’ if no improvement in test performance was observed.

Based on clinical rating at the end of the study.

Data from 1,220 and 995 patients were available for visits 1 and 2, respectively, of whom 11.0% and 15.2% (after correcting for practice effects) were diagnosed with mild cognitive impairment.

At follow-up, 48 participants were diagnosed with Alzheimer's disease, 200 with mild cognitive impairment and 64 with subjective cognitive decline, while 46 participants were considered as cognitively healthy.

Figure 6.

Effect sizes (Cohen's d, unless otherwise noted) for observed changes between test iterations in patients with mild cognitive impairment, Alzheimer's disease or other forms of dementia. Studies that did not report effect sizes or reported effect sizes for composite scores are not included in this figure. Dark green dots indicate continuous practice effects, light green dots initial practice effects, yellow dots inconclusive effects and red dots absence of practice effects, as defined in Table 7. Small, medium and large effect sizes are defined as d = 0.2, d = 0.5 and d = 0.8, respectively, and apply to Cohen's d only (Cohen, 1992). ∗η². BVMT-R, Brief Visuospatial Memory Test-Revised; CVLT, California Verbal Learning Test; DLB, dementia with Lewy bodies; HVLT, Hopkins Verbal Learning Test; IDM, divided attention task; LDFR, long delayed free recall; LM, Logical Memory; LNS, Letter-Number Sequencing; MCI, mild cognitive impairment; mdMCI, multi-domain mild cognitive impairment; MMSE, Mini-Mental State Examination; OBK, One-Back Test; SDFR, short delayed free recall; sdMCI, single-domain mild cognitive impairment; SDMT, Symbol Digit Modalities Test; TMT, Trail-Making Test; TR, total recall; VR, Visual Reproduction; WAIS, Wechsler Adult Intelligence Scale; WMS, Wechsler Memory Scale.

Compared with the other disease areas, practice effects were less common on tests of information processing speed, especially on the SDMT (Duff et al., 2017, 2018; Duff and Hammers, 2022) and TMT-A (Duff et al., 2017, 2018; Duff and Hammers, 2022). When present, they tended to be smaller than those observed in healthy controls (Duff et al., 2015), and their magnitude correlated significantly with hippocampal volume (r = 0.73; P < 0.01) (Duff et al., 2018). Practice effects on the SDMT and the Word and Color subtests of the Stroop Test were more likely to be observed in patients with greater levels of cognitive impairment (Rosas et al., 2020). Moreover, a trend towards improved test scores was observed on the Stroop Word test, and subtle practice effects on the Stroop Color test, in a mixed cohort of patients with mild cognitive impairment and healthy volunteers even after a long inter-test interval of six years (Elman et al., 2018). Practice effects on the Digit-Symbol or Coding Test were also more likely to occur with increasing levels of cognitive impairment (Rosas et al., 2020). However, Duff et al. (2012) reported an inverse correlation between the magnitude of practice effects and dementia severity measured by MMSE (partial r = 0.26; P = 0.046; Cohen's d = 0.54), even after controlling for baseline performance.
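Partial correlations such as the one reported by Duff et al. (2012) quantify the association between two variables after removing the linear influence of a third, here baseline performance. The sketch below uses the standard first-order partial correlation formula, r_xy·z = (r_xy − r_xz·r_yz) / √((1 − r_xz²)(1 − r_yz²)). How the original study defined practice-effect magnitude and which covariates it entered are not reproduced here; the function names are illustrative.

```python
import math
from typing import Sequence


def pearson(a: Sequence[float], b: Sequence[float]) -> float:
    """Pearson product-moment correlation of two equally long samples."""
    if len(a) != len(b) or len(a) < 3:
        raise ValueError("need two samples of equal length (n >= 3)")
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    s_ab = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    s_aa = sum((x - mean_a) ** 2 for x in a)
    s_bb = sum((y - mean_b) ** 2 for y in b)
    if s_aa == 0 or s_bb == 0:
        raise ValueError("correlation is undefined for a constant sample")
    return s_ab / math.sqrt(s_aa * s_bb)


def partial_corr(x: Sequence[float], y: Sequence[float], z: Sequence[float]) -> float:
    """First-order partial correlation of x and y, controlling for z."""
    r_xy, r_xz, r_yz = pearson(x, y), pearson(x, z), pearson(y, z)
    return (r_xy - r_xz * r_yz) / math.sqrt((1 - r_xz**2) * (1 - r_yz**2))
```

In the setting described above, x would hold each patient's practice-effect magnitude (for example, retest minus baseline score), y the MMSE score and z the baseline test score, all as hypothetical per-patient lists of equal length.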

For tests assessing executive function such as the TMT-B or the Interference subtest of the Stroop Test, there was little evidence of practice effects (Britt et al., 2011; Duff et al., 2015, 2017, 2018; Duff and Hammers, 2022; Elman et al., 2018). Nonetheless, practice effects were more likely to occur with increasing levels of cognitive impairment (Rosas et al., 2020) or in specific subgroups (Frank et al., 1996).

Repeated testing with the CVLT, a measure of learning and memory, resulted in practice effects, especially on less demanding tasks such as short delayed free or cued recall and long delayed cued recall (Campos-Magdaleno et al., 2017; Elman et al., 2018). The lack of practice effects on the more memory-demanding tasks of the CVLT, including long delayed free recall, suggests that explicit memory deteriorates in amnestic mild cognitive impairment while implicit memory involved in practice effects is still preserved (Campos-Magdaleno et al., 2017). Practice effects were also observed on both total and delayed recall of the HVLT when retested within a week (Duff et al., 2017, 2018). In patients with probable Alzheimer's disease, stronger practice effects correlated inversely with disease severity measured by MMSE after controlling for baseline performance (partial r = 0.47; P < 0.001; Cohen's d = 1.016) (Duff et al., 2012).

On the Visual Reproduction test, improvements suggestive of practice effects were observed in patients at risk of developing Alzheimer's disease for both delayed and immediate recall, while performance on both tasks tended to remain stable or worsen in patients diagnosed with Alzheimer's disease (Frank et al., 1996). By comparison, a mixed cohort of patients with mild cognitive impairment and healthy volunteers showed definite practice effects only on delayed recall (Elman et al., 2018). Finally, on the Logical Memory test, practice effects were more common in patients with mild cognitive impairment than in patients with Alzheimer's disease (Britt et al., 2011; Claus et al., 1991; Elman et al., 2018; Gavett et al., 2016).

On the BVMT-R, a measure of visuospatial memory, practice effects were reported on both total and delayed recall, in particular with short inter-test intervals (Duff et al., 2007, 2015, 2017, 2018). However, there were few or no signs of practice effects when the inter-test interval was increased to one year or longer (Duff and Hammers, 2022). Furthermore, the magnitude of practice effects on delayed recall correlated with ¹⁸F-flutemetamol uptake in amyloid plaques (r = −0.45; P = 0.02; Cohen's d = 1.1) (Duff et al., 2014).

Working memory was assessed with several different tests. Digit Span, in particular in the backward condition, Spatial Span and Letter-Number Sequencing were all associated with practice effects (Elman et al., 2018). Similarly, repeated testing with CogState's One-Back Test resulted in reduced reaction times in patients with either mild cognitive impairment or dementia with Lewy bodies and in improved accuracy scores in the entire study cohort (Hammers et al., 2011).

A few studies also examined practice effects on other cognitive abilities. Reduced reaction times indicative of practice effects were reported in patients with dementia with Lewy bodies on CogState's Divided Attention test (Hammers et al., 2011). Practice effects were also observed on the Driving Simulator of Teasdale et al. (2016) during the training phase, when live feedback was provided. However, the gain from practice was lost during the recall phase, in which no feedback was provided. A trend towards improved scores was observed on the Matrix Reasoning test (Elman et al., 2018). Finally, no practice effects were found on Verbal Comprehension (Claus et al., 1991).

The MMSE showed little-to-no signs of practice effects (Duff et al., 2007; Frank et al., 1996; Toh et al., 2014), although they cannot be entirely ruled out (Gross et al., 2018).

3.7. Mitigation strategies

Mitigation strategies help to account for and control practice effects, thereby supporting accurate interpretation of longitudinal data on functional ability. Several different approaches to mitigate and minimize the impact of practice effects have been implemented (Table S2; supplementary appendix).

3.7.1. Reliable change index

One approach is to compute a reliable change index that corrects for practice effects by determining whether an observed change is clinically relevant and greater than the expected practice effect (Duff et al., 2017; Higginson et al., 2009; Turner et al., 2016; Utz et al., 2016). However, this approach has some limitations. To compute a reliable change index, data on practice effects obtained from a reference population are required (Utz et al., 2016). Typically, a normative, healthy population serves as the reference population. It is therefore crucial that the studied patient population and the reference population show practice effects of similar magnitude; otherwise, the computed reliable change index cannot effectively account for practice effects. If practice effects are underestimated in the reference population, the threshold for detecting clinically relevant change will be lowered (Utz et al., 2016). As a result, some patients whose improvement reflects only practice effects would be falsely identified as showing a clinically relevant change. Conversely, overestimation of practice effects in the reference population would push the lower bound for detecting functional decline further down, so that a larger decline would be needed before it is detected in the studied patient population (Turner et al., 2016). To circumvent these potential limitations, it has been suggested that data collected from a comparable but separate patient population be used as the reference instead (Higginson et al., 2009).
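To make the dependence on the reference population concrete, the sketch below implements one widely used practice-adjusted formulation of the reliable change index: the reference sample's mean retest gain is subtracted from the patient's observed change, and the result is divided by the standard error of the difference. This is an illustrative sketch, not the exact computation used in the cited studies; the function names, the 1.645 cut-off and all numbers are hypothetical assumptions.

```python
import math


def practice_adjusted_rci(baseline, retest, ref_mean_t1, ref_mean_t2,
                          ref_sd_t1, ref_retest_r):
    """Reliable change index adjusted for a reference sample's practice effect.

    Assumes the reference sample was tested twice under the same conditions as
    the patient; its mean gain is taken as the expected practice effect.
    """
    # Standard error of measurement from the reference baseline SD and the
    # test-retest correlation.
    sem = ref_sd_t1 * math.sqrt(1.0 - ref_retest_r)
    # Standard error of the difference between two measurements.
    se_diff = math.sqrt(2.0 * sem ** 2)
    expected_gain = ref_mean_t2 - ref_mean_t1
    return ((retest - baseline) - expected_gain) / se_diff


def classify_change(rci, z_crit=1.645):
    """Label the change; z_crit = 1.645 (90% two-sided) is an assumed cut-off."""
    if rci <= -z_crit:
        return "reliable decline"
    if rci >= z_crit:
        return "reliable improvement"
    return "no reliable change"


# Hypothetical numbers: reference SD 10 and r = 0.82 give an SE of the difference of 6.
# The same 12-point gain is flagged if the reference shows no practice gain,
# but not if the reference gains 3 points.
print(classify_change(practice_adjusted_rci(50, 62, 50, 50, 10, 0.82)))  # reliable improvement
print(classify_change(practice_adjusted_rci(50, 62, 50, 53, 10, 0.82)))  # no reliable change
```

The two calls mirror the underestimation scenario described above. When the reference sample's practice gain is too small, the same patient change crosses the threshold and is labeled a reliable improvement.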

Additionally, the reliable change index assumes that the gain resulting from practice effects remains constant over time. With multiple test repetitions, however, the gain from practice effects can vary as a function of time or number of test iterations (Glanz et al., 2012). A constant reliable change index will therefore not accurately identify those who show clinically meaningful change beyond practice effects, and this problem grows with an increasing number of test repetitions. An adaptive reliable change index that takes the temporal dynamics of practice effects into account could help address this limitation.
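One possible form of such an adaptive index, offered only as a hypothetical sketch, replaces the single expected gain with a session-specific gain taken from a reference sample's mean score at each session. The example contrasts it with a constant index that keeps the first-retest gain. The function names, the shared standard error of the difference across sessions, and the numbers are illustrative assumptions.

```python
def constant_rci(scores, ref_session_means, se_diff):
    """RCI with the expected practice gain fixed at its first-retest value."""
    gain = ref_session_means[1] - ref_session_means[0]
    return [(s - scores[0] - gain) / se_diff for s in scores[1:]]


def adaptive_rci(scores, ref_session_means, se_diff):
    """Hypothetical adaptive RCI: the expected gain at session k is the
    reference sample's mean change from session 0 to session k."""
    return [
        (scores[k] - scores[0] - (ref_session_means[k] - ref_session_means[0])) / se_diff
        for k in range(1, len(scores))
    ]


# Hypothetical reference sample whose practice gain keeps growing after the first retest.
ref_means = [50, 53, 55, 56, 56.5]
patient = [50, 53, 55, 56, 56.5]  # follows the reference learning curve exactly
print(constant_rci(patient, ref_means, se_diff=2.0))  # [0.0, 1.0, 1.5, 1.75]
print(adaptive_rci(patient, ref_means, se_diff=2.0))  # [0.0, 0.0, 0.0, 0.0]
```

Although this patient shows nothing beyond the expected practice effect, the constant index drifts upward with every repetition, while the adaptive index stays at zero.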

Finally, ceiling or floor effects may prevent the detection of clinically meaningful changes if the difference between the baseline score and the maximum or minimum score, respectively, is smaller than the change required by the reliable change index (Benedict, 2005).
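The ceiling and floor argument reduces to simple arithmetic on the scale range. The sketch below assumes a practice-adjusted index of the form (change − expected gain) / SE of the difference. It checks whether a score high enough (or low enough) to cross the threshold lies within the scale at all. The function names, the 0 to 30 scale and the other numbers are hypothetical.

```python
def improvement_detectable(baseline, max_score, expected_gain, se_diff, z_crit=1.645):
    """True if a reliable improvement is still reachable below the scale maximum."""
    # Smallest retest score that would exceed the practice-adjusted threshold.
    needed = baseline + expected_gain + z_crit * se_diff
    return needed <= max_score


def decline_detectable(baseline, min_score, expected_gain, se_diff, z_crit=1.645):
    """True if a reliable decline is still reachable above the scale minimum."""
    # Largest retest score that would fall below the practice-adjusted threshold.
    needed = baseline + expected_gain - z_crit * se_diff
    return needed >= min_score


# Hypothetical 0-30 scale, expected practice gain of 1 point, SE of the difference of 2.
print(improvement_detectable(28, 30, 1, 2.0))  # False: near the ceiling
print(improvement_detectable(20, 30, 1, 2.0))  # True
print(decline_detectable(1, 0, 1, 2.0))        # False: near the floor
```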

3.7.2. Standardized regression-based models

A similar approach is to apply standardized regression-based models to predict the test scores at retest (Duff et al., 2017). Unlike the reliable change index, the standardized regression-based model uses information from the studied cohort to predict their test performance at retest. In its simplest form, this prediction is based solely on baseline test performance. More complex models use additional covariates such as age, gender, level of education or inter-test interval. The z-scores computed from the difference between the predicted and observed scores at retest can be used to define a threshold for detecting functional decline or functional recovery beyond the expected practice effect.
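A minimal sketch of the simplest, baseline-only standardized regression-based model follows. It fits retest scores on baseline scores by ordinary least squares and expresses an individual's observed retest score as a z-score relative to the prediction, using the standard error of the estimate. Covariates such as age or education are omitted. The function names and the five-participant data set are hypothetical.

```python
import math


def fit_srb(baseline_scores, retest_scores):
    """Fit retest = b0 + b1 * baseline by ordinary least squares.

    Returns (b0, b1, see), where see is the standard error of the estimate.
    """
    n = len(baseline_scores)
    mean_x = sum(baseline_scores) / n
    mean_y = sum(retest_scores) / n
    sxx = sum((x - mean_x) ** 2 for x in baseline_scores)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(baseline_scores, retest_scores))
    b1 = sxy / sxx
    b0 = mean_y - b1 * mean_x
    residuals = [y - (b0 + b1 * x) for x, y in zip(baseline_scores, retest_scores)]
    # n - 2 degrees of freedom, because two parameters were estimated.
    see = math.sqrt(sum(r ** 2 for r in residuals) / (n - 2))
    return b0, b1, see


def srb_z(baseline, observed_retest, b0, b1, see):
    """z-score of the observed retest score relative to the model's prediction."""
    return (observed_retest - (b0 + b1 * baseline)) / see


# Hypothetical cohort of five participants tested twice.
b0, b1, see = fit_srb([40, 45, 50, 55, 60], [44, 48, 55, 58, 65])
# A participant scoring 50 at both visits falls well below the predicted score of 54.
print(round(srb_z(50, 50, b0, b1, see), 2))
```

A negative z-score beyond the chosen threshold would suggest decline beyond what the expected practice effect predicts. A positive one would suggest recovery.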

3.7.3. Replacement method

The replacement method of Elman et al. (2018) estimates group-level, attrition-corrected practice effects. With this method, the cohort at retest (i.e., the returnee cohort) is compared against a test-naïve, age-matched cohort (i.e., the replacement cohort). Any difference observed between these two cohorts is assumed to be a combination of attrition and practice effects. Attrition-corrected practice effects are obtained by subtracting the attrition effect (the difference between the mean baseline score of the returnee cohort and that of the overall cohort, i.e., returnees plus those lost to follow-up) from the difference score (the difference between the mean retest score of the returnee cohort and the mean baseline score of a separate, test-naïve replacement cohort). These estimated practice effects can then be subtracted from the test scores of the returnee cohort obtained at retest, resulting in practice-effect-corrected retest scores. This methodology is more robust with larger samples in the overall cohort and in the cohort lost to follow-up. Depending on the drop-out rate, the replacement cohort can be small (and the returnee cohort correspondingly large), resulting in instability in the calculation of the difference score, which is a key component of the attrition-corrected practice effect. Furthermore, this methodology has only been demonstrated for a single retest. While it could be applied to more than one retest, this would require managing multiple cohorts across retests (i.e., multiple replacement and returnee cohorts). Finally, data from a test-naïve, age-matched replacement cohort may not always be available for less established tests.
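The arithmetic of the replacement method can be sketched for a single retest as follows. The sign convention (returnees minus overall cohort for the attrition effect, returnees at retest minus replacements for the difference score) is our reading of the description above. The function name and all scores are hypothetical.

```python
from statistics import mean


def replacement_practice_effect(all_baseline, returnee_baseline,
                                returnee_retest, replacement_baseline):
    """Group-level, attrition-corrected practice effect for a single retest."""
    # Attrition effect: returnees at baseline vs. the full baseline cohort
    # (returnees plus those lost to follow-up).
    attrition = mean(returnee_baseline) - mean(all_baseline)
    # Difference score: returnees at retest vs. a test-naive replacement cohort.
    difference = mean(returnee_retest) - mean(replacement_baseline)
    return difference - attrition


# Hypothetical scores; the participant lost to follow-up scored lowest at baseline.
all_baseline = [10, 12, 14, 16]
returnee_baseline = [12, 14, 16]
returnee_retest = [15, 17, 19]
replacement_baseline = [12, 13, 14]

pe = replacement_practice_effect(all_baseline, returnee_baseline,
                                 returnee_retest, replacement_baseline)
# Practice-effect-corrected retest scores for the returnee cohort.
corrected_retest = [s - pe for s in returnee_retest]
print(pe, corrected_retest)
```

Here the raw difference score of 4 points is partly explained by the lower-scoring participant dropping out (attrition effect of 1 point), leaving an estimated practice effect of 3 points.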

3.7.4. Alternative forms

A few studies purposely administered the same form to maximize practice effects (Duff et al., 2011, 2017, 2018). Conversely, the use of alternative forms, if available, can help reduce practice effects if they are driven by learning a specific sequence of items (Beglinger et al., 2014b; Benedict, 2005). In fact, several studies reported an absence of practice effects when alternative forms were used, including on the SDMT (Fuchs et al., 2020), the CVLT (Eshaghi et al., 2012) and the Wechsler Memory Scale (Claus et al., 1991).

Moreover, in a direct comparison, the use of an alternative form prevented practice effects on all CVLT and BVMT-R metrics (Benedict, 2005). This contrasts with the practice effects observed on both measures by Eshaghi et al. (2012) and Reilly and Hynes (2018), suggesting that alternative forms may reduce, but not fully prevent, practice effects. Mixed results were also obtained on the HVLT, where only patients with pre-manifest, but not manifest, Huntington's disease showed signs of practice effects on the alternative form (Stout et al., 2014).

Consistent with the literature (Bever et al., 1995; Cohen et al., 2000, 2001; Eshaghi et al., 2012; Glanz et al., 2012; Nagels et al., 2008; Rosti-Otajärvi et al., 2008), the direct comparison of Benedict (2005) revealed practice effects on both the SDMT (group-by-time interaction effect: P > 0.05) and the PASAT (Cohen's d for same form: 0.3; for alternative form: 0.4), irrespective of whether the same or an alternative form was used. This suggests that patients may develop more effective test-taking strategies over time and that these strategies drive the practice effects seen despite the use of alternative forms (Beglinger et al., 2014a; Gross et al., 2018). Consequently, other strategies are needed to minimize the impact of practice effects.

3.7.5. Run-in period

Since improvements due to practice are typically largest over the first few test iterations, a run-in or familiarization period prior to the baseline assessment has been suggested to reduce the magnitude of practice effects (Beglinger et al., 2014a; Beglinger et al., 2014b; Cohen et al., 2000; Stout et al., 2014; Sormani et al., 2019). Such a run-in period would allow patients to become fully familiar with the test and test conditions and to reach steady-state performance prior to their baseline assessment, thereby preventing post-baseline practice effects (Patzold et al., 2002). For a run-in period to succeed, it is therefore critical to administer a sufficient number of tests prior to the baseline assessment. However, many studies included in this analysis administered only two or three test iterations (Tables 4, 5, 6, and 7). This makes it difficult to establish the minimum number of tests required for the run-in period in each of the four disease areas. For instance, in patients with multiple sclerosis, two to three pre-baseline assessments have been recommended for the PASAT (Cohen et al., 2000; Rosti-Otajärvi et al., 2008). This may not be sufficient, considering that Glanz et al. (2012) observed a trend of continuous improvement beyond the third test iteration. Similarly, Gavett et al. (2016) argued that the previously recommended 2 or 3 pre-baseline assessments with the Wechsler Memory Scale may not be sufficient to prevent further practice effects, as patients with mild cognitive impairment showed continuous improvements over all 5 test iterations. In addition, the inter-test interval during the run-in period can also affect the likelihood of post-baseline practice effects (Beglinger et al., 2014a). Finally, implementing a run-in period will increase patient burden as well as the cost and time needed to run clinical trials.
Time and cost constraints may also limit its use in clinical practice, in particular for clinician-administered tests. By comparison, digital tests that can be administered remotely at home without supervision by a healthcare professional offer a potential means to minimize the additional patient burden and cost associated with multiple pre-baseline assessments, thereby making a run-in period more feasible.
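Why two or three administrations are insufficient can be illustrated with a simple plateau rule applied to mean scores per session. In the hypothetical rule sketched below, steady state begins at the first session after which every remaining session-to-session gain is at most a chosen tolerance, and at least two such gains are required as confirmation. The function name, the tolerance and the confirmation requirement are assumptions for illustration, not a published criterion.

```python
def run_in_sessions_needed(session_means, tolerance, min_confirming_gains=2):
    """Number of run-in sessions before steady-state performance, or None.

    Steady state (hypothetical definition): the first session after which every
    remaining session-to-session gain is at most `tolerance`, confirmed by at
    least `min_confirming_gains` gains.
    """
    gains = [b - a for a, b in zip(session_means, session_means[1:])]
    for k in range(len(gains)):
        remaining = gains[k:]
        if len(remaining) >= min_confirming_gains and all(g <= tolerance for g in remaining):
            return k  # sessions 1..k serve as run-in; session k + 1 is the baseline
    return None  # a plateau cannot be confirmed from the observed sessions


# Hypothetical group means over six sessions: three run-in sessions would be needed.
print(run_in_sessions_needed([40, 46, 49, 50, 50.3, 50.4], tolerance=0.5))  # 3
# With only the first three sessions, no plateau can be confirmed.
print(run_in_sessions_needed([40, 46, 49], tolerance=0.5))  # None
```

The same rule could be applied to each patient's own trajectory rather than to group means, but only if enough sessions are available, which is where frequent remote digital testing may help.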

4. Discussion and outlook

Practice effects are a common phenomenon associated with the repeated administration of performance outcome measures (Tables 4, 5, 6, and 7). Despite the research conducted on practice effects, some gaps still remain:

• Many different approaches to identify, assess and study practice effects have been applied, which complicates comparisons across studies.

• Most studies defined practice effects on the basis of improved test performance at retest. This definition does not account for the possibility that practice effects coincide with functional decline (and other longitudinal effects), which is commonly observed in patients with chronic neurologic disorders. Such changes in functional ability limit the ability to detect and account for practice effects. Thus, optimal methods to distinguish practice effects from functional decline, as well as from changes in motivation and fatigue, functional recovery and treatment effects, need to be further investigated.

• The possible impact of previous exposure on the ability to detect further practice effects has remained largely unaddressed.

• The temporal dynamics of practice effects have not been studied in detail, and further research could expand our understanding of how practice effects vary over time.

• Practice effects on an individual patient level have not been fully characterized.

• The clinically meaningful information contained within practice effects remains unclear, and further research is needed to establish their usefulness in guiding disease management.

• Finally, further research is needed into the possible impact of the more granular datasets collected with digital performance outcome measures on practice effects.

4.1. Comparing practice effects across studies