Abstract

Objective:

The aim of this study is to develop an artificial intelligence model that automatically detects cephalometric landmarks, enabling the automatic analysis of cephalometric radiographs, which have a very important place in dental practice and are used routinely in the diagnosis and treatment of dental and skeletal disorders.

Methods:

In this study, 1620 lateral cephalograms were obtained and 21 landmarks were included. The coordinates of all landmarks in the 1620 films were obtained to establish a labeled data set: 1360 were used as a training set, 140 as a validation set, and 180 as a testing set. A convolutional neural network-based artificial intelligence algorithm for automatic cephalometric landmark detection was developed. Mean radial error and successful detection rate within 2 mm, 2.5 mm, 3 mm, and 4 mm were used to evaluate the performance of the model.

Results:

The presented artificial intelligence system (CranioCatch, Eskişehir, Turkey) could detect 21 anatomic landmarks in a lateral cephalometric radiograph. The highest successful detection rates within 2 mm, 2.5 mm, 3 mm, and 4 mm were obtained for the sella point (98.3%, 99.4%, 99.4%, and 99.4%, respectively), with a mean radial error ± standard deviation of 0.616 ± 0.43. The lowest successful detection rates within 2 mm, 2.5 mm, 3 mm, and 4 mm were obtained for the gonion point (48.3%, 62.8%, 73.9%, and 87.2%, respectively), with a mean radial error ± standard deviation of 8.304 ± 2.98.

Conclusion:

Although the success of automatic landmark detection using the developed artificial intelligence model was insufficient for clinical use, artificial intelligence-based cephalometric analysis systems seem promising for cephalometric analysis, which provides a basis for diagnosis, treatment planning, and follow-up in clinical orthodontic practice.

Keywords: Anatomic landmark, lateral cephalometric radiograph, deep learning, artificial intelligence

Main Points

Artificial intelligence (AI)-based cephalometric analysis systems can show clinically acceptable performance.

As clinical decision support systems, AI-based systems can help orthodontists with diagnosis, treatment planning, and follow-up in clinical orthodontic practice.

Automatic cephalometric analysis software will save orthodontists time and make their work easier.

Introduction

Orthodontics is one of the specialties of dentistry that mainly deals with the diagnosis of malocclusions and ultimately aims to prevent and correct them, including the correction of defects in the craniofacial skeleton and dentoalveolar structures. Correct diagnosis and treatment planning are considered the key elements in the success of orthodontic treatment, so orthodontists must be very precise in both. Orthodontic diagnosis is mainly based on the patient’s dental and medical history, clinical examination, study models, and cephalometric radiographs, which are considered the most useful tool for orthodontic diagnosis. Cephalometric radiography is a standard diagnostic imaging technique in orthodontics.1,2 It is the most important tool for detecting problems in craniofacial skeletal structures and disharmony between related anatomical structures. The skeletal relationship between the cranial base and the maxilla or mandible, the relationship between the maxilla and the mandible, and the dentoalveolar relationship are quantitatively evaluated using cephalometric radiographs. They also serve to determine the growth pattern through quantitative and qualitative assessments and superimposition of serial radiographs. In addition, cephalometric radiographs are required to plan orthognathic surgery.3-6 Identifying anatomical points on cephalometric radiographs is the initial step of cephalometric analysis and is crucial for its accuracy. However, detecting cephalometric anatomical points is a tedious, difficult, and time-consuming process that is subject to intra- and interobserver variability, which may arise from differences in education and clinical experience. Moreover, a clear projection of the craniofacial area onto a 2-dimensional image is difficult because of the overlapping of complex anatomical structures and the diversity of dentofacial morphology from patient to patient.1-8

In the last few decades, artificial intelligence (AI) technology, which is based on the principle of imitating the functioning of the human brain, has led to important developments in the field of dentistry.9-11 Artificial intelligence has many sub-fields that are widely used in different areas, especially in biological and medical diagnostics, namely machine learning (ML), artificial neural networks (ANNs), deep learning (DL), and convolutional neural networks (CNNs). Machine learning, one of the main sub-fields of AI, includes ANNs and DL. Artificial neural networks have been developed by imitating biological neural networks through computer programs that model the way the brain performs a function. The multi-layered network structure formed by combining artificial neurons and connecting layers of artificial neurons with mathematical operations is called DL. The CNN is one of the most popular and successful DL models for image classification. These neural networks are mathematical computational models designed to simulate the functioning of biological neurons. Such automated technologies are expected to become powerful tools for predicting diagnoses and assisting clinicians in treatment planning.9-11 These models can be trained with clinical data sets and used for a variety of diagnostic tasks in dentistry.

A considerable number of studies in the literature have assessed the performance of AI algorithms on different problems in dentistry, such as tooth detection and numbering, caries and restoration detection, detection of periapical lesions and jaw pathologies, dental implant planning, and impacted tooth detection.12-18 Moreover, AI-based automatic systems, and semi-automatic systems that can serve as an alternative to fully automatic ones, offer advantages such as faster and easier point identification. Although they have some disadvantages, including loss of standardization, they have great potential for developing tools that assist orthodontists in providing standardized patient care and maximizing the chances of meeting treatment goals. Orthodontists can benefit from AI technology for better clinical decision-making and can also save time by using AI-based systems.9-11

The aim of this study is to develop an AI model for the automatic detection of cephalometric landmarks, enabling the automatic analysis of cephalometric radiographs, which have a very important place in dental practice and are routinely used in the diagnosis and treatment of dental and skeletal disorders.

Methods

Radiographic Images Data Sets

Lateral cephalometric images of patients aged between 9 and 20 years, in the mixed or permanent dentition, were obtained from the radiology archive of the Department of Orthodontics, Faculty of Dentistry, Eskişehir Osmangazi University. Cephalometric radiographs with positioning errors; missing, unerupted, or developmentally abnormal central incisors or first molars; metal artifacts caused by orthodontic appliances, implants, etc.; or a history of trauma or maxillofacial surgery were excluded from the study data. The Eskişehir Osmangazi University Faculty of Medicine Clinical Research Ethics Committee approved the study protocol (approval number: August 6, 2019/14), and all procedures were carried out in accordance with the principles of the Declaration of Helsinki. All lateral cephalometric radiographs were taken with the patient sitting upright in a natural head position using a Planmeca ProMax dental imaging unit (Planmeca, Helsinki, Finland) with the following parameters: 58 kVp, 4 mA, and 5 s.

Ground Truth Labeling

M.U., an orthodontist with 9 years of experience, labeled the lateral cephalometric radiographs with CranioCatch Annotation Software (CranioCatch, Eskisehir, Turkey) for 21 different cephalometric landmarks using the point identification tool. The following cephalometric landmarks were annotated: sella (S), nasion (N), orbitale (Or), porion (Po), Mx1r, B point, pogonion (Pg), menton (Me), gnathion (Gn), gonion (Go), Md1c, Mx1c, labiale superior (Ls), labiale inferior (Li), subnasale (Sn), soft tissue pogonion (Pg’), posterior nasal spine (PNS), anterior nasal spine (ANS), articulare (Ar), A point, and Md1r (Table 1).

Table 1.

Definitions of Cephalometric Landmarks

| No. | Landmark | Definition |
|---|---|---|
| 1 | Sella (S) | The midpoint of sella turcica |
| 2 | Nasion (N) | The extreme anterior point of the frontonasal suture/junction of frontonasal suture |
| 3 | Orbitale (Or) | Inferior border of orbit |
| 4 | Porion (Po) | Top of external auditory meatus |
| 5 | Mx1r | The tip of the upper incisor root |
| 6 | B point | The deepest point in the curvature of the mandibular alveolar process |
| 7 | Pogonion (Pg) | The extreme anterior point of the chin |
| 8 | Menton (Me) | The extreme inferior point of the chin |
| 9 | Gnathion (Gn) | The midpoint between pogonion and menton |
| 10 | Gonion (Go) | The midpoint of the mandibular angle between ramus and the mandibular corpus |
| 11 | Md1c | The tip of the lower incisor |
| 12 | Mx1c | The tip of the upper incisor |
| 13 | Labiale superior (Ls) | Most anterior point on outline of upper lip (vermilion border) |
| 14 | Labiale inferior (Li) | Most anterior point on outline of the lower lip (vermilion border) |
| 15 | Subnasale (Sn) | Junction of nasal septum and upper lip in midsagittal plane |
| 16 | Soft tissue pogonion (Pg’) | Most anterior point on outline of soft tissue chin |
| 17 | Posterior nasal spine (PNS) | The extreme posterior point of the maxilla |
| 18 | Anterior nasal spine (ANS) | The extreme anterior point of the maxilla |
| 19 | Articulare (Ar) | A point on the posterior border of the ramus at the intersection with the basilar portion of the occipital bone |
| 20 | A point | The deepest point in the curvature of the maxillary alveolar process |
| 21 | Md1r | The tip of the lower incisor root |

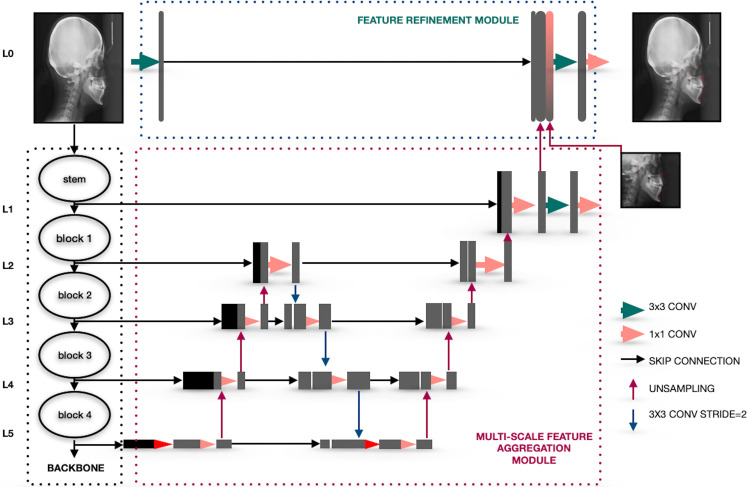
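Because the labels are later stored as 21 points in a fixed order, it can help to make that order explicit in code. The following is a minimal sketch, assuming the order matches the annotation list and Table 1; the names `LANDMARKS` and `landmark_index`, and the short forms "B" and "A" for B point and A point, are illustrative and not taken from the CranioCatch software.

```python
# Landmark abbreviations in the order given in the text and Table 1.
# "B" and "A" stand for B point and A point (shortened here for illustration).
LANDMARKS = [
    "S", "N", "Or", "Po", "Mx1r", "B", "Pg", "Me", "Gn", "Go", "Md1c",
    "Mx1c", "Ls", "Li", "Sn", "Pg'", "PNS", "ANS", "Ar", "A", "Md1r",
]


def landmark_index(abbreviation: str) -> int:
    """Return the 1-based position of a landmark in the labeling order."""
    return LANDMARKS.index(abbreviation) + 1
```

With this mapping, row 10 of a label file would correspond to gonion (Go) and row 21 to Md1r, under the stated assumption about ordering.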

Deep Learning Architecture

The feature aggregation and refinement network (FARNet) proposed by Ao and Wu19 was used as the CNN-based deep learning model for cephalometric landmark detection. FARNet comprises 3 main components: a backbone network, a multi-scale feature aggregation (MSFA) module, and a feature refinement (FR) module. The backbone network is an architecture pre-trained on ImageNet that computes a hierarchy of feature maps at several scales. Feature maps are extracted from the input image with a scaling step of 2, and the backbone serves as the first down-sampling path. The MSFA module consists of an up-sampling path and a down-sampling path, followed by another up-sampling path, to fuse the multi-scale features. In each feature fusion block, features with different resolutions are combined through higher resolution-dominant coupling, in which higher resolution features are emphasized by retaining more channels than lower resolution ones. Feature maps obtained from the MSFA module have half the resolution of the input image. To obtain a more accurate prediction, the FR module is used to generate feature maps with the same resolution as the input image (Figure 1).

Figure 1.

The system architecture of the CNN-based AI algorithm

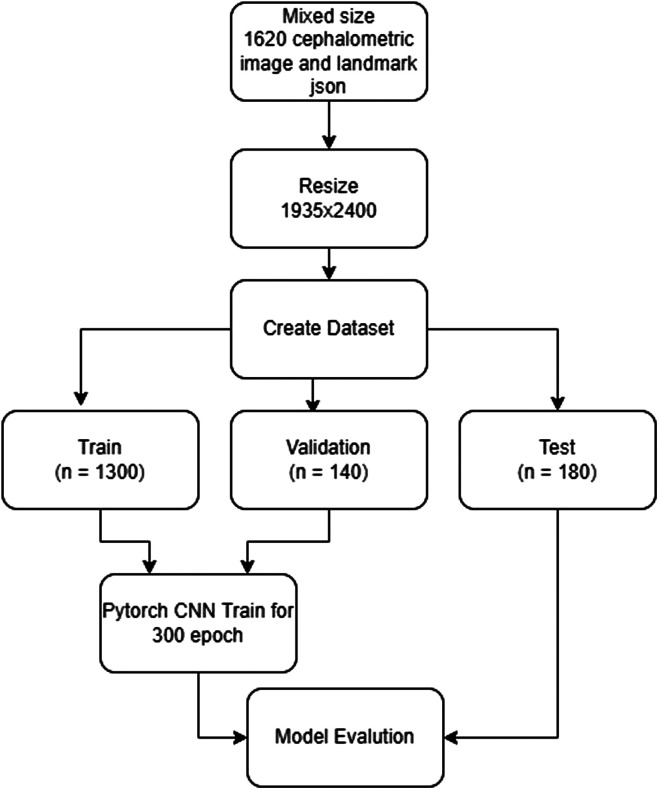
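The resolution bookkeeping described for the backbone, the MSFA module, and the FR module can be illustrated with a small sketch. It only tracks spatial sizes and is not an implementation of FARNet. The input size of 800 × 640, the number of levels (5), and the rounding rule for odd sizes are assumptions chosen for illustration.

```python
def halve(size):
    """Spatial size after one down-sampling step with a scaling step of 2.

    Odd sizes are rounded up, which is an assumption about padding.
    """
    height, width = size
    return ((height + 1) // 2, (width + 1) // 2)


def feature_hierarchy(input_size, num_levels):
    """List the (height, width) of each feature map in the backbone hierarchy."""
    sizes = []
    current = input_size
    for _ in range(num_levels):
        current = halve(current)
        sizes.append(current)
    return sizes


# Hypothetical input size and depth, not taken from the study.
input_size = (800, 640)
levels = feature_hierarchy(input_size, num_levels=5)

msfa_output_size = levels[0]  # MSFA output: half the input resolution
fr_output_size = input_size   # FR output: same resolution as the input
```

Under these assumptions the hierarchy runs from 400 × 320 down to 25 × 20, the MSFA output is 400 × 320, and the FR module restores 800 × 640.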

Model Development

Model development was carried out in the Dental-AI Laboratory of the Faculty of Dentistry at Eskişehir Osmangazi University on a Precision 3640 Tower CTO BASE workstation (Dell, Texas, USA) with an Intel(R) Xeon(R) W-1250P processor (6 cores, 12 MB cache, 4.1 GHz base frequency, 4.8 GHz maximum turbo frequency), 64 GB (4 × 16 GB) DDR4-2666 UDIMM ECC memory, a 256 GB SATA SSD, an Nvidia Quadro P620 (2 GB) and an NVIDIA Tesla V100 graphics card, and a 27-inch, 1920 × 1080 pixel IPS LCD monitor (Dell, Texas, USA). The open-source Python programming language (v.3.6.1; Python Software Foundation, Wilmington, Del, USA) and the PyTorch library were used for model development. A total of 1620 cephalometric images of mixed sizes, each labeled with 21 points, were obtained. Each point was assigned its own row, and the labels were saved in txt format as 21 points in the specified order. The images and labels were resized to 1935 × 2400 pixels. The data set was divided into 3 parts for training, validation, and testing:

Training: 1360 images with 21 point labels

Validation: 140 images with 21 point labels

Test: 180 images with 21 point labels

The data obtained from the testing group were not reused. The AI model was trained for 300 epochs using the CNN-based deep learning method implemented in PyTorch. The learning rate of the model was set to 0.0001 (Figure 2).
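Since the labels are stored one point per row and resized together with the images, the coordinate handling can be sketched as below. This is only an illustration: the comma-separated `x,y` row layout, the reading of 1935 × 2400 as width × height, and the function names `parse_labels` and `rescale_points` are all assumptions, not details confirmed by the study.

```python
def parse_labels(text):
    """Parse label text with one 'x,y' pair per row (assumed layout)."""
    points = []
    for row in text.strip().splitlines():
        x_str, y_str = row.split(",")
        points.append((float(x_str), float(y_str)))
    return points


def rescale_points(points, original_size, target_size=(1935, 2400)):
    """Scale (x, y) landmarks from the original image size to the target size.

    Sizes are (width, height); treating 1935 as the width is an assumption.
    """
    scale_x = target_size[0] / original_size[0]
    scale_y = target_size[1] / original_size[1]
    return [(x * scale_x, y * scale_y) for x, y in points]
```

For example, a landmark at (100, 200) in a hypothetical 3870 × 4800 image would move to (50, 100) after resizing, because both axes are scaled by 0.5.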

Figure 2.

AI model pipeline of automatic landmark detection (JSON: JavaScript Object Notation)

Evaluation of the Model Performance

The point-to-point error of each landmark was measured as the absolute distance between the position estimated by the model and the manually marked position in each image, and it was averaged over the whole test data set. The mean radial error (MRE) and standard deviation (SD) were reported for all landmarks. The radial error (R) was computed as

$$R = \sqrt{\Delta x^{2} + \Delta y^{2}}$$

where ∆x is the distance between the estimated position and the manually localized standard position in the x direction, and ∆y is the distance between the estimated position and the manually localized standard position in the y direction, in the horizontal (x) and vertical (y) coordinate system.2

MRE and SD were computed using the following formulas2:

$$\mathrm{MRE} = \frac{1}{N}\sum_{i=1}^{N} R_i, \qquad \mathrm{SD} = \sqrt{\frac{\sum_{i=1}^{N}\left(R_i - \mathrm{MRE}\right)^{2}}{N-1}}$$

where N is the number of test images and R_i is the radial error of the landmark in the i-th image.

The successful detection rate (SDR) was also measured; it indicates the percentage of estimated points that fall within each precision range of 2 mm, 2.5 mm, 3 mm, and 4 mm. For each cephalometric landmark, if the distance between the position determined automatically by the AI and the ground truth is no greater than a given value d, the automatic localization is considered successful, and the SDR for the precision d can be calculated.2
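The evaluation metrics described above can be sketched in a few lines of Python. This is a minimal illustration, not the code used in the study: the function names, the `mm_per_pixel` conversion factor, and the use of the sample form of the SD (division by N − 1) are assumptions.

```python
import math


def radial_errors(predicted, ground_truth, mm_per_pixel=1.0):
    """Radial error R = sqrt(dx^2 + dy^2) for each predicted/ground-truth pair.

    mm_per_pixel is a hypothetical conversion factor from pixels to mm.
    """
    return [
        math.hypot(px - gx, py - gy) * mm_per_pixel
        for (px, py), (gx, gy) in zip(predicted, ground_truth)
    ]


def mean_radial_error(errors):
    """Mean of the radial errors (MRE)."""
    return sum(errors) / len(errors)


def standard_deviation(errors):
    """Sample standard deviation of the radial errors (N - 1 assumed)."""
    mre = mean_radial_error(errors)
    return math.sqrt(sum((r - mre) ** 2 for r in errors) / (len(errors) - 1))


def successful_detection_rate(errors, threshold_mm):
    """Percentage of radial errors that are no greater than threshold_mm."""
    hits = sum(1 for r in errors if r <= threshold_mm)
    return 100.0 * hits / len(errors)


# Toy data for one landmark across four images (not from the study).
predicted = [(3.0, 4.0), (0.0, 0.0), (0.0, 1.0), (6.0, 8.0)]
ground_truth = [(0.0, 0.0)] * 4
errors = radial_errors(predicted, ground_truth)
```

On this toy data the radial errors are 5, 0, 1, and 10, so the MRE is 4.0 and the SDR within 2 mm is 50%, because two of the four errors are no greater than 2.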

Results

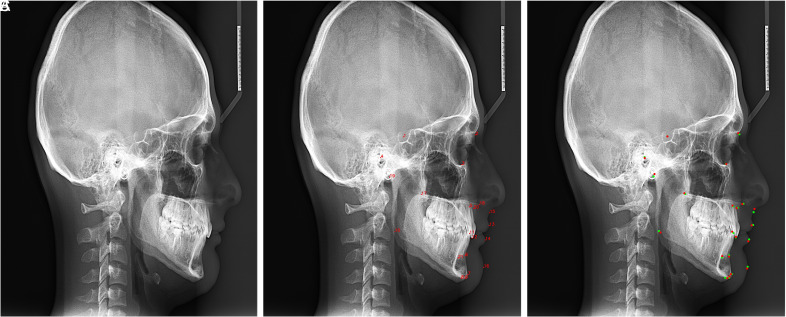

The presented AI system (CranioCatch, Eskisehir, Turkey) could detect 21 anatomic landmarks in a lateral cephalometric radiograph (Figure 3). The highest SDRs within 2 mm, 2.5 mm, 3 mm, and 4 mm were obtained for the S point (98.3%, 99.4%, 99.4%, and 99.4%, respectively), with an MRE ± SD of 0.616 ± 0.43. The lowest SDRs within 2 mm, 2.5 mm, 3 mm, and 4 mm were obtained for the Go point (48.3%, 62.8%, 73.9%, and 87.2%, respectively), with an MRE ± SD of 8.304 ± 2.98. The MRE and SDR values of each anatomic landmark obtained from the test data are summarized in Table 2.

Figure 3. A-C.

Automatic detection of cephalometric points by the AI model. (A) Original image (B) Automatic landmark detection by AI model. (C) The comparison of landmark detection by expert and AI. Red: landmark location detected by expert. Green: landmark location detected by AI

Table 2.

The MRE and SDR value of landmarks obtained from test data

| Anatomic Landmark | SDR 2 mm (%) | SDR 2.5 mm (%) | SDR 3 mm (%) | SDR 4 mm (%) | MRE ± SD |
|---|---|---|---|---|---|
| Sella (S) | 98.3 | 99.4 | 99.4 | 99.4 | 0.616 ± 0.43 |
| Nasion (N) | 77.8 | 83.9 | 89.4 | 94.4 | 1.391 ± 1.26 |
| Orbitale (Or) | 66.1 | 73.3 | 83.3 | 92.2 | 2.070 ± 1.63 |
| Porion (Po) | 65.0 | 75.6 | 80.6 | 90.6 | 3.963 ± 1.78 |
| Mx1r | 72.2 | 82.2 | 87.8 | 93.9 | 4.870 ± 1.84 |
| B point | 66.1 | 79.4 | 85.0 | 91.1 | 3.416 ± 1.82 |
| Pogonion (Pg) | 73.9 | 80.6 | 87.2 | 93.3 | 1.579 ± 1.31 |
| Menton (Me) | 67.8 | 75.0 | 83.9 | 92.8 | 1.429 ± 1.33 |
| Gnathion (Gn) | 88.9 | 93.3 | 96.1 | 97.8 | 2.172 ± 1.13 |
| Gonion (Go) | 48.3 | 62.8 | 73.9 | 87.2 | 8.304 ± 2.98 |
| Md1c | 91.7 | 93.3 | 95.0 | 95.6 | 5.318 ± 1.62 |
| Mx1c | 94.4 | 95.0 | 95.6 | 95.6 | 1.774 ± 0.86 |
| Labiale superior (Ls) | 90.6 | 94.4 | 95.6 | 96.7 | 2.519 ± 1.10 |
| Labiale inferior (Li) | 86.7 | 89.4 | 92.2 | 95.6 | 2.110 ± 1.18 |
| Subnasale (Sn) | 90.6 | 94.4 | 96.1 | 97.2 | 2.028 ± 1.08 |
| Soft tissue pogonion (Pg’) | 53.9 | 66.1 | 70.0 | 78.9 | 4.045 ± 2.32 |
| Posterior nasal spine (PNS) | 66.1 | 78.3 | 84.4 | 90.6 | 5.780 ± 2.24 |
| Anterior nasal spine (ANS) | 78.3 | 86.1 | 90.6 | 95.6 | 4.187 ± 1.68 |
| Articulare (Ar) | 69.4 | 77.2 | 82.2 | 90.0 | 5.570 ± 2.03 |
| A point | 76.1 | 83.3 | 87.8 | 94.4 | 5.124 ± 1.67 |
| Md1r | 81.7 | 89.4 | 95.6 | 97.8 | 3.524 ± 1.41 |
| Mean | 76.2 | 83.5 | 88.2 | 93.4 | 3.400 ± 1.57 |

MRE, mean radial error; SD, standard deviation.
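To make the SDR percentages in Table 2 more concrete, they can be converted back into approximate numbers of test images. The sketch below assumes that every SDR was computed over all 180 test images, which the text does not state per landmark; the helper name `detections_from_sdr` is illustrative.

```python
TEST_SET_SIZE = 180  # number of test images reported in the Methods


def detections_from_sdr(sdr_percent, n_images=TEST_SET_SIZE):
    """Approximate number of images in which a landmark fell within the threshold."""
    return round(sdr_percent * n_images / 100)


# SDR values within 2 mm, 2.5 mm, 3 mm, and 4 mm from Table 2.
sella_counts = [detections_from_sdr(p) for p in (98.3, 99.4, 99.4, 99.4)]
gonion_counts = [detections_from_sdr(p) for p in (48.3, 62.8, 73.9, 87.2)]
```

Under this assumption, sella was located within 2 mm in 177 of 180 images, whereas gonion was within 2 mm in 87 of 180 images and within 4 mm in 157 of 180.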

Discussion

Deep learning-based AI algorithms are commonly used in medical image analysis. Cephalometric images are routinely used in orthodontic practice to evaluate the relationship between the mandible, the maxilla, and the dentoalveolar structures and to detect dental and skeletal anomalies. Although the analysis of cephalometric images is so important, it is a time-consuming and demanding procedure, and its results can vary from person to person. For this reason, automatic cephalometric analysis using AI algorithms has been considered useful, and many studies using different methods are available in the literature. In this context, automatic cephalometric landmark detection challenges were organized by the International Symposium on Biomedical Imaging (ISBI) and the Institute of Electrical and Electronics Engineers, which created a public data set comprising 19 cephalometric landmarks. Using this data set, different AI methods such as decision trees, random forests, Bayesian convolutional neural networks, and cascaded CNNs have been applied to the detection of cephalometric landmarks.20-26 Zeng et al.20 proposed an original approach based on cascaded CNNs for the automatic detection of 19 cephalometric landmarks on the ISBI 2015 challenge test 1 data set. In that study, the highest SDRs within 2 mm, 2.5 mm, 3 mm, and 4 mm were obtained for the incision superius point (95.33%, 96.00%, 98.00%, and 100.0%, respectively), with an MRE ± SD of 0.96 ± 0.61. The lowest SDRs within 2 mm, 2.5 mm, 3 mm, and 4 mm were obtained for P (54.67%, 68.67%, 80.67%, and 94.00%, respectively), with an MRE ± SD of 2.02 ± 1.25. The average SDRs within 2 mm, 2.5 mm, 3 mm, and 4 mm were 81.37%, 89.09%, 93.79%, and 97.86%, respectively, and the average MRE ± SD was 1.34 ± 0.92.

Lee et al.21 developed a new network for detecting cephalometric points with confidence regions using Bayesian CNNs. Their AI model was also trained with the public data set from the ISBI 2015 grand challenge in dental x-ray image analysis. The highest SDRs within 2 mm, 2.5 mm, 3 mm, and 4 mm were obtained for the lower lip point (97.33%, 98.67%, 98.67%, and 99.33%, respectively), with a landmark error ± SD of 1.28 ± 0.85. The lowest SDRs within 2 mm, 2.5 mm, 3 mm, and 4 mm were obtained for the A point (52.00%, 62.00%, 74.00%, and 87.33%, respectively), with a landmark error ± SD of 2.07 ± 2.53. The average SDRs within 2 mm, 2.5 mm, 3 mm, and 4 mm were 82.11%, 88.63%, 92.28%, and 95.96%, respectively, and the average landmark error ± SD was 1.53 ± 1.74. Bulatova et al.22 compared the accuracy of cephalometric landmark detection by the CNN-based You Only Look Once, Version 3 (YOLOv3) algorithm with that of manual identification. No statistically significant differences were found between manual identification and AI for 11 of 16 points. Significant differences (>2 mm) were found for U1 apex, L1 apex, Basion, Go, and Or. They concluded that AI may increase the efficiency of cephalometric point identification in routine clinical practice. Kim et al.7 investigated the accuracy of automated detection of cephalometric points using cascaded CNNs on lateral cephalograms obtained from multiple centers in South Korea. A total of 3150 lateral cephalograms were used for training, and 100 lateral cephalograms were used as the external validation data set. The mean identification error for each point ranged from 0.46 ± 0.37 mm for the maxillary incisor crown tip to 2.09 ± 1.91 mm for the distal root tip of the mandibular first molar.

According to the literature, many cephalometric points, including A point, Ar, Go, Pg’, and Or, are difficult to detect, and these points show higher errors or lower SDR values than other points. In the present study, the Go point had the lowest SDRs: 48.3%, 62.8%, 73.9%, and 87.2% within 2 mm, 2.5 mm, 3 mm, and 4 mm, respectively. The points Pg’, PNS, Me, Or, B, Ar, and Po also had SDR values lower than 70.0% within 2 mm. The SDRs within 2 mm, 2.5 mm, 3 mm, and 4 mm for A point were 76.1%, 83.3%, 87.8%, and 94.4%, respectively, and its MRE ± SD was 5.124 ± 1.67. Although the performance of the model in automatic landmark detection was not clinically acceptable, the system seems promising and open to improvement. The present study has several limitations: the images were obtained from only 1 center with the same exposure parameters, the labeling was performed by a single orthodontist, the model was not tested on an external data set, and the number of cephalometric landmarks available for cephalometric analysis was limited. Nevertheless, the results obtained are promising in terms of localizing cephalometric landmarks.

Conclusion

Convolutional neural network-based AI algorithms show promising success in medical image evaluation. Although the success of automatic landmark detection using the developed AI model was insufficient for clinical use, AI-based cephalometric analysis systems seem promising for cephalometric analysis, which provides a basis for diagnosis, treatment planning, and follow-up in clinical orthodontic practice.

Footnotes

Ethics Committee Approval: The Eskişehir Osmangazi University Faculty of Medicine Clinical Research Ethics Committee approved the study protocol (approval number: 06.08.2019/14), and all procedures followed the principles of the Declaration of Helsinki.

Informed Consent: Verbal informed consent was obtained from all participants who participated in this study.

Peer-review: Externally peer-reviewed.

Acknowledgments: I would like to thank Ozer Celik, Ibrahim Sevki Bayrakdar, and Elif Bilgir for allowing me to use the radiological archive, creating the concept of the study, and developing the AI models.

Declaration of Interests: The authors have no conflict of interest to declare.

Funding: This work has been supported by Eskişehir Osmangazi University Scientific Research Projects Coordination Unit under grant number 202045E06.

References

- 1. Yue W, Yin D, Li C, Wang G, Xu T. Automated 2-D cephalometric analysis on X-ray images by a model-based approach. IEEE Trans Bio Med Eng. 2006;53(8):1615-1623. doi:10.1109/TBME.2006.876638

- 2. Yao J, Zeng W, He T, et al. Automatic localization of cephalometric landmarks based on convolutional neural network. Am J Orthod Dentofac Orthop. 2021;S0889-5406(21)00695-00698. doi:10.1016/j.ajodo.2021.09.012

- 3. Kim H, Shim E, Park J, Kim YJ, Lee U, Kim Y. Web-based fully automated cephalometric analysis by deep learning. Comput Methods Programs Biomed. 2020;194:105513. doi:10.1016/j.cmpb.2020.105513

- 4. Hutton TJ, Cunningham S, Hammond P. An evaluation of active shape models for the automatic identification of cephalometric landmarks. Eur J Orthod. 2000;22(5):499-508. doi:10.1093/ejo/22.5.499

- 5. Rueda S, Alcañiz M. An approach for the automatic cephalometric landmark detection using mathematical morphology and active appearance models. Med Image Comput Comput Assist Interv. 2006;9(1):159-166. doi:10.1007/11866565_20

- 6. Proffit WR, Fields HW, Sarver DM. Contemporary Orthodontics. Amsterdam: Elsevier Health Sciences; 2006.

- 7. Kim J, Kim I, Kim YJ, et al. Accuracy of automated identification of lateral cephalometric landmarks using cascade convolutional neural networks on lateral cephalograms from nationwide multi-centres. Orthod Craniofac Res. 2021;24(suppl 2):59-67. doi:10.1111/ocr.12493

- 8. Lindner C, Wang CW, Huang CT, Li CH, Chang SW, Cootes TF. Fully automatic system for accurate localisation and analysis of cephalometric landmarks in lateral cephalograms. Sci Rep. 2016;6:33581. doi:10.1038/srep33581

- 9. Schwendicke F, Samek W, Krois J. Artificial intelligence in dentistry: chances and challenges. J Dent Res. 2020;99(7):769-774. doi:10.1177/0022034520915714

- 10. Shan T, Tay FR, Gu L. Application of artificial intelligence in dentistry. J Dent Res. 2021;100(3):232-244. doi:10.1177/0022034520969115

- 11. Monill-González A, Rovira-Calatayud L, d'Oliveira NG, Ustrell-Torrent JM. Artificial intelligence in orthodontics: where are we now? A scoping review. Orthod Craniofac Res. 2021;24(suppl 2):6-15. doi:10.1111/ocr.12517

- 12. Tuzoff DV, Tuzova LN, Bornstein MM, et al. Tooth detection and numbering in panoramic radiographs using convolutional neural networks. Dento Maxillo Fac Radiol. 2019;48(4):20180051. doi:10.1259/dmfr.20180051

- 13. Başaran M, Çelik Ö, Bayrakdar IS, et al. Diagnostic charting of panoramic radiography using deep-learning artificial intelligence system. Oral Radiol. 2021. doi:10.1007/s11282-021-00572-0

- 14. Bayrakdar IS, Orhan K, Akarsu S, et al. Deep-learning approach for caries detection and segmentation on dental bitewing radiographs. Oral Radiol. 2021. doi:10.1007/s11282-021-00577-9

- 15. Kurt Bayrakdar S, Orhan K, Bayrakdar IS, et al. A deep learning approach for dental implant planning in cone-beam computed tomography images. BMC Med Imaging. 2021;21(1):86. doi:10.1186/s12880-021-00618-z

- 16. Orhan K, Bilgir E, Bayrakdar IS, Ezhov M, Gusarev M, Shumilov E. Evaluation of artificial intelligence for detecting impacted third molars on cone-beam computed tomography scans. J Stomatol Oral Maxillofac Surg. 2021;122(4):333-337. doi:10.1016/j.jormas.2020.12.006

- 17. Yang H, Jo E, Kim HJ, et al. Deep learning for automated detection of cyst and tumors of the jaw in panoramic radiographs. J Clin Med. 2020;9(6):1839. doi:10.3390/jcm9061839

- 18. Bayrakdar IS, Orhan K, Çelik Ö, et al. A U-net approach to apical lesion segmentation on panoramic radiographs. BioMed Res Int. 2022;2022:7035367. doi:10.1155/2022/7035367

- 19. Ao Y, Wu H. Feature aggregation and refinement network for 2D anatomical landmark detection. arXiv preprint. 2021. arXiv:2111.00659

- 20. Zeng M, Yan Z, Liu S, Zhou Y, Qiu L. Cascaded convolutional networks for automatic cephalometric landmark detection. Med Image Anal. 2021;68:101904. doi:10.1016/j.media.2020.101904

- 21. Lee JH, Yu HJ, Kim MJ, Kim JW, Choi J. Automated cephalometric landmark detection with confidence regions using Bayesian convolutional neural networks. BMC Oral Health. 2020;20(1):270. doi:10.1186/s12903-020-01256-7

- 22. Bulatova G, Kusnoto B, Grace V, Tsay TP, Avenetti DM, Sanchez FJC. Assessment of automatic cephalometric landmark identification using artificial intelligence. Orthod Craniofac Res. 2021;24(suppl 2):37-42. doi:10.1111/ocr.12542

- 23. Ibragimov B, Likar B, Pernus F, Vrtovec T. Automatic cephalometric X-ray landmark detection by applying game theory and random forests. In: Proc ISBI Int Symp Biomed Imaging. Automat. Cephalometric X-Ray Landmark Detection Challenge. Springer-Verlag Berlin Heidelberg; Beijing, China; 2014:1-8.

- 24. Zhong Z, Li J, Zhang Z, Jiao Z, Gao X. An attention-guided deep regression model for landmark detection in cephalograms. In: Medical Image Computing and Computer Assisted Intervention – MICCAI 2019. Lecture Notes in Computer Science. Cham: Springer; 2019:540-548. doi:10.1007/978-3-030-32226-7_60

- 25. Wang CW, Huang CT, Lee JH, et al. A benchmark for comparison of dental radiography analysis algorithms. Med Image Anal. 2016;31:63-76. doi:10.1016/j.media.2016.02.004

- 26. Oh K, Oh IS, Le VNT, Lee DW. Deep anatomical context feature learning for cephalometric landmark detection. IEEE J Biomed Health Inform. 2021;25(3):806-817. doi:10.1109/JBHI.2020.3002582