Abstract

To extract blue roofs (BRs) from remote sensing images quickly, this study used WorldView-2 and WorldView-3 imagery as the data sources and created a new BR spectral area model (BRSAM) based on the polygon area difference between the spectral curves of BRs and other image categories in bands 2–8. The extraction effect of BRSAM was then compared with those of the blue ground object spectral index (BGOSI), the blue object spectrum index (BOSI) and maximum likelihood classification. The results showed that BRSAM overcomes the shortcomings of BGOSI and BOSI, i.e. the erroneous extraction of shadow and white and yellow ground objects as BRs. However, BRSAM has the disadvantage of erroneously extracting some vegetation and green plastic playgrounds as BRs. Considering that the disadvantage of any one of BRSAM, BGOSI and BOSI in extracting BRs can be compensated by the two other spectral models/indices, we combined the three and used the resulting synthetic spectral model to extract BRs. The synthetic spectral model overcomes the shortcomings of the three spectral models in BR extraction, and its effect is better than that of any one of them used separately. Moreover, the spectral model/index method performs better than the classification method in BR extraction. The spectral model/index method is therefore a convenient and effective method for BR extraction and could serve as a reference for the classification of other data.

Keywords: WorldView-2 and WorldView-3 imagery, Blue roof, Spectral area model creation, Spectral model synthesis, Target object extraction, Performance comparison


1. Introduction

Blue ground objects, similar to red, white, and yellow ground objects, are typical ground features in geographic space and are widely distributed in the urban surface environment. Blue ground objects in cities are mostly iron sheet houses with blue top surfaces (painted with blue paint); they are distributed mostly in special urban environments, such as urban villages (e.g. the roof is wrapped with a blue iron sheet to prevent water leakage or the coloured steel house is stored outside a room), factories (e.g. large production workshop) and construction zones (e.g. temporary accommodation for workers). These areas are some of the most important areas for urban environmental change monitoring. Based on high-spatial-resolution remote sensing images, blue ground object extraction can provide some basic data for specific target detection, illegal building monitoring and urban development quality assessment, which is of considerable significance.

At present, aside from our previous research on red, blue, white and cyan ground object extraction in cities (Liu and An, 2020; Liu et al., 2020), no dedicated research on extracting ground objects of a specific colour has been conducted. Ground objects with different colours in cities are mainly building roofs with different colours. For roof extraction, scholars usually extract the buildings in the city as a whole, and the extracted results contain buildings with different colours on the top surface. For building roof extraction, the data used in previous research include low/high-resolution multispectral images and single-layer grayscale images. The methods used include pixel-based unsupervised/supervised classification and spectral index thresholding. Recently, machine/deep learning technology has been widely used in building roof extraction. Representative studies are as follows. Zhang (2001) used the unsupervised ISODATA clustering method for extracting large buildings (i.e. 10–20 m wide) in urban areas by fusing TM multispectral data with SPOT panchromatic data and obtained an average accuracy of 86%. Erener (2013) used the maximum likelihood classifier (MLC) and support vector machine (SVM) to extract buildings from QuickBird images and achieved good results. However, over-extraction and under-extraction occurred in both methods. Notarangelo et al. (2021) used high-resolution raster digital surface model (DSM) data to extract buildings, and their results showed that the use of only high-resolution raster DSM data could achieve high accuracy. Several scholars used radar data to extract the top surface of buildings using methods such as classification, filtering, segmentation, clustering and interpolation and achieved extraction accuracies of up to 95% (Zhu et al., 2006; Li et al., 2016; Zhao et al., 2017; Gilani et al., 2018; Zhang et al., 2018). Schlosser et al. (2020) combined spectral bands with texture data to extract buildings using machine learning technologies, and through feature selection, the building extraction accuracy exceeded 95% at the pixel level. Several scholars built a convolutional neural network (CNN) and used deep learning to extract building roofs. Their results showed that CNN has good recognition performance, and its extraction accuracy was more than 90% (Siddula et al., 2016; Liu et al., 2018; Alidoost and Arefi, 2019; Zhou et al., 2020; Wang et al., 2021). You et al. (2018) proposed a building detection method based on the morphological building index to detect buildings in multiple regional Gaofen-2 satellite images and achieved an overall accuracy (OA) of more than 92%. Samsudin et al. (2016) used the normalised difference concrete condition index (NDCCI) and the normalised difference metal condition index (NDMCI) to extract building roofs, and these spectral indices achieved higher extraction accuracy than supervised classification: the extraction accuracies of NDCCI and NDMCI were 84.44% and 94.17%, respectively, both higher than that of SVM. By reviewing the methods used in building roof extraction, we determined that the index method is simpler, faster and easier to operate than the classification method and deep learning technology, which makes it convenient for practical application. Therefore, we need to create or combine some spectral indices to realise the extraction of specific types of ground objects.

In our previous research, we created several new spectral indices, and the threshold method of these spectral indices can be used to realise the extraction of specific colour ground objects. Blue ground object spectral index (Liu et al., 2020) (BGOSI) and blue object spectrum index (Liu and An, 2020) (BOSI) are two of the typical spectral indices that we have created, which can extract blue roofs (BRs) with high accuracy. However, these spectral indices have the disadvantage of erroneously extracting shadow and white and yellow ground objects as BR; thus, their performance is imperfect. Therefore, we need to conduct an in-depth analysis of the spectral reflectance characteristics of BRs and other image categories in remote sensing data and identify new indicators and methods that can characterise their differences to fully reduce the probability of these image categories being erroneously extracted as BRs and improve BR recognition accuracy.

Recently, we determined that the polygon area formed by the spectral curves of BRs and other image categories in some bands of WorldView-3 is different, which may lead to the development of a new spectral model for BR extraction. Therefore, in this study, we used WorldView-3 and WorldView-2 as modelling and test data, respectively. From a new perspective, by analysing the polygon area difference between the spectral curves of the BR and other image categories in the coordinate system, we can create a new spectral model that can enhance the information on the BR and weaken the information of other image categories. Then, we use the new spectral model to extract the BR and further analyse the advantages and disadvantages of the new spectral model, as well as BGOSI and BOSI. We fully combine the advantages of the three spectral models to compensate for their shortcomings. Moreover, we intend to extract BRs using their combination and propose an improved method for BR extraction. This study will contribute a more reliable, simple and feasible method for extracting BRs from high-spatial-resolution remote sensing data, such as WorldView-3 and WorldView-2.

2. Materials and methods

2.1. Data source and preprocessing

We used the data source derived from a WorldView-3 image of Luoyang City taken on October 27, 2017, as the modelling data. The WorldView-3 image had a panchromatic band (with a spatial resolution of 0.3 m) and eight multispectral bands (with a spatial resolution of 1.24 m). The detailed parameters of WorldView-3 are shown in Table 1.

Table 1.

Band parameters of WorldView-3 imagery.

| Band number | Band name | Spatial resolution (m) | Wavelength range (nm) |
|---|---|---|---|
| 1 | Coastal blue | 1.24 | 400–450 |
| 2 | Blue | 1.24 | 450–510 |
| 3 | Green | 1.24 | 510–580 |
| 4 | Yellow | 1.24 | 585–625 |
| 5 | Red | 1.24 | 630–690 |
| 6 | Red edge | 1.24 | 706–745 |
| 7 | Near-infrared 1 | 1.24 | 770–895 |
| 8 | Near-infrared 2 | 1.24 | 860–1040 |
| 9 | Panchromatic | 0.3 | 450–800 |

The entire WorldView-3 image had a longitude and latitude range of 112°24′28.87″–112°28′05.56″ E and 34°37′35.01″–34°40′35.23″ N, respectively, and covered an area of 25.00 km². The data were calibrated (i.e. the absCalFactor in the .imd file is identified for WorldView radiance calibration), fused (i.e. Gram–Schmidt spectral sharpening) and subjected to atmospheric correction (i.e. FLAASH atmospheric correction) using the ENVI 5.4 software before use. To reduce the amount of data computation, we cropped a small image with a rich BR distribution from the entire WorldView-3 image as the test area. This small test area covered 1.83 km².

The other data are the WorldView-2 data of Hohhot used in previous studies (Liu and An, 2020; Liu et al., 2020), which have the same band setting and preprocessing as WorldView-3 data. However, WorldView-2 data have a different spatial resolution from WorldView-3 data (the resolutions of panchromatic and multispectral images are 0.5 and 2 m, respectively). We have taken WorldView-2 as the test data. The images of the model building and test area used in this study are shown in Figure 1 (true colour, RGB532 combination).

Figure 1.

Images of model building area (WorldView-3) and test area (WorldView-2). (a) Test area image; (b) model building image.

2.2. Methods

2.2.1. Sample collection and spectral curve fitting

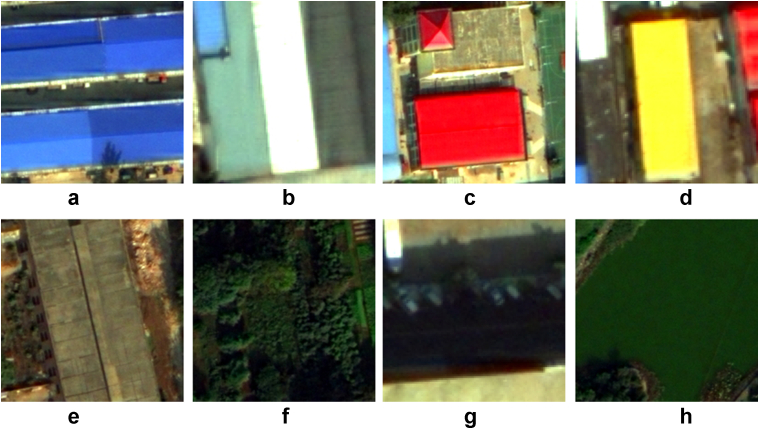

We displayed the WorldView-3/2 images in true colour (RGB532), observed the colour of ground objects in the images and collected samples of typical image categories, such as roofs of different colours, vegetation, shadow and water. The survey results showed that there are five roof colours in the WorldView-3 image, i.e. red, white, blue, yellow (cyan in the WorldView-2 image) and grey. The pixel numbers of the samples used in spectral curve fitting and classification training are 867 (216 for WorldView-2; the same convention applies below), 831 (215), 840 (217), 857 (204) and 876 (210) for red objects (ROs), white objects (WOs), BRs, yellow/cyan objects (YOs/COs) and grey objects (GOs), respectively, and 883 (218), 845 (223) and 881 (215) for vegetation (VEG), shadow (SHA) and waterbody (WB), respectively. The pixel numbers of the samples used in accuracy verification for BRs and non-BRs are 5041 (2541) and 5030 (2581), respectively. The detailed sample collection information is shown in Table 2, and the typical images (for WorldView-3) of each image category are shown in Figure 2.

Table 2.

Spectral curve fitting/training and accuracy verification samples.

| Data | Sample | Blue roofs | Others | Image categories included in Others |
|---|---|---|---|---|
| WorldView-3 | Fitting/training | 840 | 6040 | Red, white, yellow and grey roofs and vegetation, shadow and waterbody |
| WorldView-3 | Validation | 5041 | 5030 | Red, white, yellow and grey roofs and vegetation, shadow and waterbody |
| WorldView-2 | Fitting/training | 217 | 1501 | Red, white, cyan and grey roofs and vegetation, shadow and waterbody |
| WorldView-2 | Validation | 2541 | 2581 | Red, white, cyan and grey roofs and vegetation, shadow and waterbody |

Figure 2.

Collected samples of typical WorldView-3 image categories. (a)–(e) represent blue, white, red, yellow and grey roofs; (f) vegetation; (g) shadow; (h) waterbody.

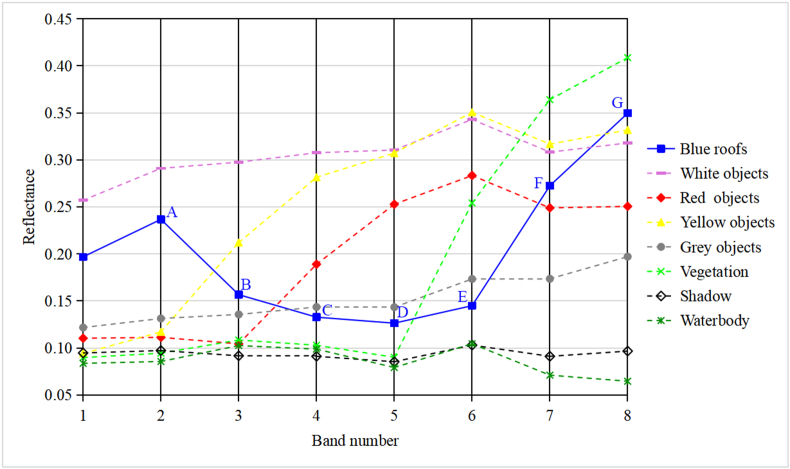

We use the samples of different image categories to count their average reflection values (mean) and standard deviation (stdev) in each band of WorldView-3, and the statistical results are shown in Table 3. Then, we use the mean values in Table 3 to fit the spectral curves of different image categories, and the results are shown in Figure 3.

Table 3.

Mean and standard deviation of spectral reflection values of different ground objects and shadows.

| Image classes | Band 1 mean | Band 1 stdev | Band 2 mean | Band 2 stdev | Band 3 mean | Band 3 stdev | Band 4 mean | Band 4 stdev | Band 5 mean | Band 5 stdev | Band 6 mean | Band 6 stdev | Band 7 mean | Band 7 stdev | Band 8 mean | Band 8 stdev |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Blue roofs | 0.197 | 0.017 | 0.237 | 0.020 | 0.157 | 0.025 | 0.133 | 0.023 | 0.126 | 0.022 | 0.145 | 0.021 | 0.273 | 0.041 | 0.350 | 0.060 |
| White objects | 0.257 | 0.013 | 0.291 | 0.013 | 0.298 | 0.013 | 0.308 | 0.015 | 0.310 | 0.016 | 0.343 | 0.019 | 0.308 | 0.019 | 0.318 | 0.022 |
| Red objects | 0.110 | 0.022 | 0.111 | 0.023 | 0.104 | 0.025 | 0.189 | 0.026 | 0.253 | 0.041 | 0.283 | 0.037 | 0.249 | 0.027 | 0.251 | 0.032 |
| Yellow objects | 0.094 | 0.009 | 0.117 | 0.009 | 0.212 | 0.012 | 0.281 | 0.017 | 0.307 | 0.029 | 0.351 | 0.048 | 0.317 | 0.062 | 0.332 | 0.077 |
| Grey objects | 0.122 | 0.014 | 0.131 | 0.012 | 0.136 | 0.008 | 0.144 | 0.007 | 0.143 | 0.010 | 0.173 | 0.014 | 0.174 | 0.023 | 0.197 | 0.029 |
| Vegetation | 0.090 | 0.005 | 0.094 | 0.005 | 0.108 | 0.009 | 0.103 | 0.009 | 0.090 | 0.010 | 0.254 | 0.042 | 0.364 | 0.068 | 0.409 | 0.067 |
| Shadow | 0.095 | 0.004 | 0.097 | 0.004 | 0.092 | 0.005 | 0.091 | 0.005 | 0.085 | 0.006 | 0.103 | 0.007 | 0.091 | 0.009 | 0.097 | 0.013 |
| Waterbody | 0.084 | 0.002 | 0.086 | 0.001 | 0.103 | 0.002 | 0.099 | 0.002 | 0.079 | 0.002 | 0.104 | 0.002 | 0.071 | 0.002 | 0.065 | 0.002 |

Figure 3.

Spectral curves of different image categories.

2.2.2. Creating a new spectral model for blue roofs

As shown in Figure 3, the intersection points of the mean reflectivity of BRs in bands 2–8 and the axes of the bands are A, B, C, D, E, F and G, respectively. The polygon A–B–C–D–E–F–G formed by these points is defined as the BR spectral area model (BRSAM). The area of the polygon A–B–C–D–E–F–G can be obtained by subtracting the areas of the trapezoids A–2–3–B, B–3–4–C, C–4–5–D, D–5–6–E, E–6–7–F and F–7–8–G from the area of the trapezoid A–2–8–G. The calculation formula is expressed in Eq. (1) as follows:

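$$\mathrm{BRSAM} = \frac{(R_2 + R_8)(\lambda_8 - \lambda_2)}{2} - \sum_{i=2}^{7} \frac{(R_i + R_{i+1})(\lambda_{i+1} - \lambda_i)}{2} \tag{1}$$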

where i = 2, 3, 4, 5, 6, 7; λi is the central wavelength of band i; and R1, R2, R3, R4, R5, R6, R7 and R8 are the reflectances of coastal blue, blue, green, yellow, red, red edge, Near-infrared 1 and Near-infrared 2 bands of WorldView-3 (or WorldView-2), respectively (the same below).

By combining the spectral curves shown in Figure 3 and the operation rules of Formula (1), we observe that, among the polygons formed by the spectral curves of different image categories in bands 2–8, the area of BRs is the largest and has a positive value; the area of vegetation is the second largest and also has a positive value; the areas of white, red and yellow ground objects and waterbody all have negative values; and the areas of grey ground objects and shadow are all close to 0. Notably, the polygon area of BRs is different from that of other image categories. Therefore, according to the area difference, the created spectral model can enhance the information of BRs and weaken the information of other image categories.
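The area comparison above can be checked numerically. The following minimal Python sketch applies the trapezoid subtraction described in this section to the band 2–8 mean reflectances listed in Table 3. The central wavelengths are an assumption: they are taken here as the midpoints of the band ranges in Table 1, which may differ from the values used in the study. The names `CENTRAL_NM`, `MEAN_REFLECTANCE` and `brsam` are illustrative only.

```python
import numpy as np

# ASSUMPTION: central wavelengths (nm) of bands 2-8, taken as midpoints of the Table 1 ranges.
CENTRAL_NM = np.array([480.0, 545.0, 605.0, 660.0, 725.5, 832.5, 950.0])

# Mean reflectance in bands 2-8, copied from Table 3.
MEAN_REFLECTANCE = {
    "Blue roofs":     [0.237, 0.157, 0.133, 0.126, 0.145, 0.273, 0.350],
    "White objects":  [0.291, 0.298, 0.308, 0.310, 0.343, 0.308, 0.318],
    "Red objects":    [0.111, 0.104, 0.189, 0.253, 0.283, 0.249, 0.251],
    "Yellow objects": [0.117, 0.212, 0.281, 0.307, 0.351, 0.317, 0.332],
    "Grey objects":   [0.131, 0.136, 0.144, 0.143, 0.173, 0.174, 0.197],
    "Vegetation":     [0.094, 0.108, 0.103, 0.090, 0.254, 0.364, 0.409],
    "Shadow":         [0.097, 0.092, 0.091, 0.085, 0.103, 0.091, 0.097],
    "Waterbody":      [0.086, 0.103, 0.099, 0.079, 0.104, 0.071, 0.065],
}


def brsam(reflectance, wavelengths=CENTRAL_NM):
    """Signed polygon area; the last axis of `reflectance` holds bands 2-8."""
    r = np.asarray(reflectance, dtype=float)
    # Large trapezoid A-2-8-G, defined by the reflectances of bands 2 and 8 only.
    outer = 0.5 * (r[..., 0] + r[..., -1]) * (wavelengths[-1] - wavelengths[0])
    # Sum of the six small trapezoids under the spectral curve between adjacent bands.
    inner = np.sum(0.5 * (r[..., :-1] + r[..., 1:]) * np.diff(wavelengths), axis=-1)
    return outer - inner


areas = {name: float(brsam(values)) for name, values in MEAN_REFLECTANCE.items()}
for name, area in sorted(areas.items(), key=lambda item: item[1], reverse=True):
    print(f"{name:15s} {area:8.2f}")
```

Under these assumed wavelengths, the sketch gives the largest positive area for BRs, a smaller positive area for vegetation, negative areas for white, red and yellow objects and waterbody, and areas close to 0 for grey objects and shadow. Because the function works on the last axis, the same call could be applied to an image array of shape (rows, columns, 7).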

2.2.3. Blue roof extraction

Taking BRSAM of WorldView-3 as an example (the other spectral models of WorldView-3/2 were processed in the same way), the grayscale histogram of the BRSAM image was first calculated to obtain a curve with two peaks, and the grayscale value corresponding to the valley between the two peaks was selected as the initial threshold for binary classification of the image. We then combined visual observation with threshold adjustment to extract BRs accurately. When BRs were well extracted and other typical image categories were not erroneously extracted as BRs, the current threshold was taken as the final threshold for BR extraction. Finally, we used the final threshold value to detect BRs in the BRSAM images.
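The initial threshold selection described above can be sketched as follows. This is only one possible way to locate the valley of a bimodal histogram, not necessarily the procedure used in the study: the smoothing window, the number of bins and the rule for picking the second peak (height weighted by squared distance from the first peak) are all assumptions, and the function name `valley_threshold` is hypothetical. The subsequent visual adjustment of the threshold is not modelled.

```python
import numpy as np


def valley_threshold(values, bins=64, smooth=5):
    """Return the grey value at the histogram valley between the two main peaks."""
    counts, edges = np.histogram(np.ravel(values), bins=bins)
    centers = 0.5 * (edges[:-1] + edges[1:])
    # Moving-average smoothing so that bin-to-bin noise is not mistaken for a peak.
    smoothed = np.convolve(counts, np.ones(smooth) / smooth, mode="same")
    first = int(np.argmax(smoothed))
    # Second peak: favour bins that are both tall and far away from the first peak.
    distance_sq = (np.arange(bins) - first) ** 2
    second = int(np.argmax(smoothed * distance_sq))
    lo, hi = sorted((first, second))
    # Valley: lowest smoothed count between the two peaks.
    valley = lo + int(np.argmin(smoothed[lo:hi + 1]))
    return float(centers[valley])


# Synthetic stand-in for an index image: a large background mode and a smaller target mode.
rng = np.random.default_rng(0)
pixels = np.concatenate([rng.normal(0.0, 5.0, 5000), rng.normal(40.0, 5.0, 1000)])
initial = valley_threshold(pixels)
mask = pixels >= initial  # binary classification with the initial threshold
print(f"initial threshold: {initial:.2f}, pixels above it: {int(mask.sum())}")
```

In practice, the value returned by such a routine would only be a starting point; the final threshold is fixed after the visual check described above.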

Meanwhile, we adopted the same threshold approach to extract BRs from BGOSI and BOSI images to compare and evaluate the performance of BRSAM in BR extraction. BGOSI is constructed using the subtraction, addition, and ratio operations of two characteristic bands (i.e. band b2 with the largest difference and band b7 with the smallest difference between the reflectance values of BR, vegetation and green plastic playground) and other bands, and its calculation formula is expressed in Eq. (2). BOSI is constructed using a combination of the addition, subtraction and multiplication operations in some key bands to enhance the information of BRs and weaken the information of other image categories. The constructed spectral index is beneficial to the extraction of urban BRs, and its calculation formula is expressed in Eq. (3).

| (2) |

| (3) |

2.2.4. Performance test of blue roof extraction

From the BRSAM, BGOSI, and BOSI images, we used the samples that fitted the spectral curves to perform statistical analysis of the minimum, maximum, mean, and stdev data values (pixel digital number) of blue, white, red, yellow/cyan, and grey ground objects and vegetation, shadow, and water to produce the box plots of different image categories. Then, we utilised the box plots to analyse the separability of BRs and the image categories in the three spectral models.
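A minimal sketch of this separability check is given below. It computes the four box-plot statistics per image category and the gap between the minimum of BRs and the largest maximum of the other categories. The sample values are hypothetical and serve only to illustrate the calculation; the function names and the use of the sample standard deviation (`ddof=1`) are assumptions.

```python
import numpy as np


def class_summary(samples):
    """Minimum, maximum, mean and standard deviation for each image category."""
    return {
        name: {
            "min": float(np.min(values)),
            "max": float(np.max(values)),
            "mean": float(np.mean(values)),
            "stdev": float(np.std(values, ddof=1)),  # ASSUMPTION: sample standard deviation
        }
        for name, values in samples.items()
    }


def separation_gap(summary, target="BR"):
    """Target minimum minus the largest maximum among the other categories.

    A positive gap means that a single threshold can isolate the target category.
    """
    other_max = max(stats["max"] for name, stats in summary.items() if name != target)
    return summary[target]["min"] - other_max


# Hypothetical index values for three categories (not taken from the study).
samples = {
    "BR": np.array([30.1, 41.5, 52.0, 38.7]),
    "VEG": np.array([5.2, 10.3, 18.9, 12.4]),
    "SHA": np.array([-1.0, 1.5, 3.2, 0.8]),
}
summary = class_summary(samples)
gap = separation_gap(summary)
print(f"gap between BR minimum and other maxima: {gap:.1f}")
```

A small or negative gap for a given spectral model would indicate that some pixels of another category are likely to pass the BR threshold.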

After the BRs were extracted using these spectral models, we analysed the extraction effect of the spectral model/index threshold methods by observing the completeness of the BR extraction results (i.e. whether a BR can be completely extracted) and the over-extraction and under-extraction phenomena. We then summarised their advantages and disadvantages in BR extraction. When the shortcomings of some spectral models can be compensated by the advantages of other spectral models, we consider combining these spectral models (i.e. BRSAM + BGOSI + BOSI) to extract BRs and analyse whether the combined form can further improve the extraction results.

Furthermore, we used the samples that fitted the spectral curves of different image categories as the training samples, employed the MLC (using ENVI 5.4 software, all parameters were default) to classify the WorldView-3 and WorldView-2 images, kept the BR as one class, and merged the other image categories as another class. Then, we used the validation samples to generate the confusion matrix and evaluate the accuracy of the classification results. The OA, kappa coefficient (KC), producer accuracy (PA) and user accuracy (UA) of the confusion matrix were used to quantitatively evaluate the classification results. The extraction results of BRs obtained by the classification method were compared with those obtained by the spectral model threshold methods to further evaluate the performance of the spectral model method in BR extraction. The extraction effects of different spectral models and their combinations on BRs were also quantitatively evaluated.
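For reference, the four accuracy measures can be computed from a two-class confusion matrix as sketched below. The function name `binary_accuracy` is illustrative, and the layout (rows as classified labels, columns as reference labels) is an assumption that matches the row and column totals of Table 4. The example counts are those reported for BRSAM on WorldView-3 in Table 4.

```python
import numpy as np


def binary_accuracy(matrix):
    """Accuracy measures for a confusion matrix.

    matrix[i, j] = number of pixels classified as class i whose reference class is j
    (index 0 = blue roof, index 1 = non-blue roof).
    """
    m = np.asarray(matrix, dtype=float)
    n = m.sum()
    correct = np.diag(m)
    pa = correct / m.sum(axis=0)  # producer accuracy: correct / reference (column) total
    ua = correct / m.sum(axis=1)  # user accuracy: correct / classified (row) total
    oa = correct.sum() / n        # overall accuracy
    # Agreement expected by chance, from the row and column totals.
    expected = (m.sum(axis=0) * m.sum(axis=1)).sum() / n**2
    kappa = (oa - expected) / (1.0 - expected)
    return {"OA": 100 * oa, "KC": kappa, "PA": 100 * pa, "UA": 100 * ua}


# BRSAM counts for WorldView-3 from Table 4.
brsam_wv3 = [[4876, 86], [165, 4944]]
result = binary_accuracy(brsam_wv3)
print(f"OA = {result['OA']:.4f}%, KC = {result['KC']:.4f}")
print("PA (BR, non-BR) =", np.round(result["PA"], 2))
print("UA (BR, non-BR) =", np.round(result["UA"], 2))
```

With these counts, the sketch reproduces the BRSAM values listed in Table 4 (OA of 97.5077%, KC of 0.9502, PA of 96.73% and 98.29%, and UA of 98.27% and 96.77%).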

3. Results and discussion

3.1. Image of spectral model/index extraction

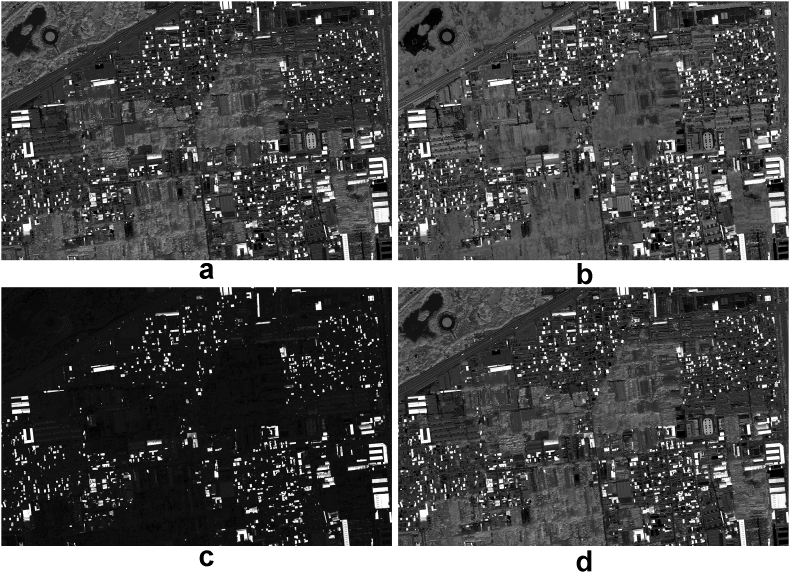

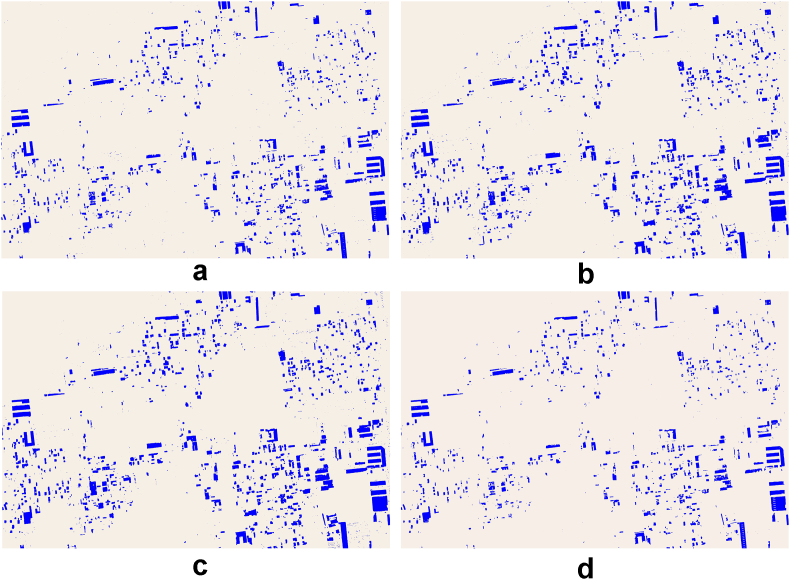

From the WorldView-3 and WorldView-2 data, the BRSAM, BGOSI and BOSI images were extracted using Formulas (1) to (3). The images extracted from the WorldView-3 data are shown in Figure 4.

Figure 4.

Images of the BR spectral models of WorldView-3. (a) BRSAM image, the value range of pixels is [−57.016, 85.615]; (b) BGOSI image, the value range of pixels is [−2.882, 4.545]; (c) BOSI image, the value range of pixels is [−0.061, 0.240]; (d) image obtained by BRSAM + BGOSI + BOSI, the value range of pixels is [−36.859, 83.013].

In Figure 4, the BR in the BRSAM, BGOSI and BOSI images are all displayed in white, indicating that the information on BRs in these images has been enhanced, and the other image categories are displayed in grey or black. In terms of the visual effect, Figure 4 shows that the vegetation in BRSAM is brighter than that in BGOSI, BOSI and their combinations. However, in WorldView-2, the vegetation in BGOSI is brighter than that in BOSI and BRSAM. The vegetation, green plastic playground and shadow in BRSAM and BGOSI are also shown in bright colours. Whether the BR in the BRSAM and BGOSI images can be effectively extracted by the threshold method needs statistical analysis and image classification observation.

3.2. Separation test of blue roofs in the spectral models

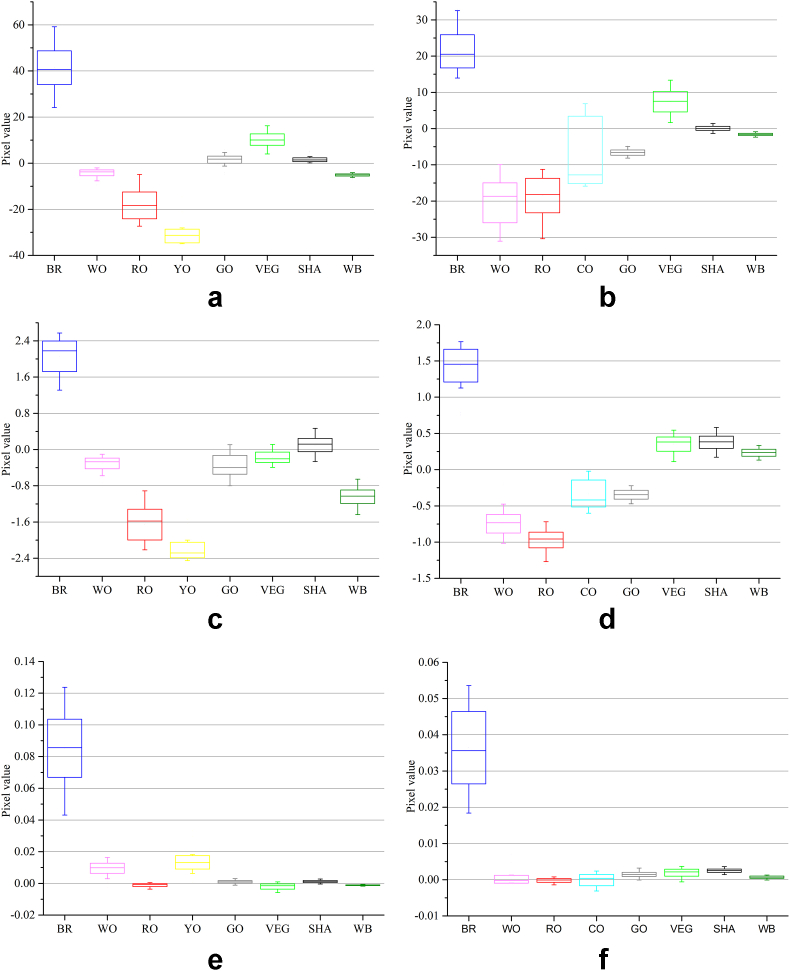

The box plots of the eight typical image categories generated after the statistical analysis of the BRSAM, BGOSI and BOSI images of WorldView-3 and WorldView-2 are shown in Figure 5.

Figure 5.

Box plots of different image categories on different spectral model/index images of WorldView-3/2. (a) BRSAM of WorldView-3; (b) BRSAM of WorldView-2; (c) BGOSI of WorldView-3; (d) BGOSI of WorldView-2; (e) BOSI of WorldView-3; (f) BOSI of WorldView-2.

As shown in Figure 5, in the BRSAM image of WorldView-3, the maximum values of all other image categories are less than the minimum value of BRs. In the BGOSI and BOSI images of WorldView-3, the maximum values of all other image categories and the minimum value of BRs are also relatively different. The mean value of BRs in the BRSAM, BGOSI and BOSI images is positive, whereas the mean value of each of the other image categories is negative, indicating that the newly created BRSAM, as well as the previously created BGOSI and BOSI, can distinguish BRs from other image categories.

However, we determined that, in the BRSAM, BGOSI and BOSI images, the minimum value of BRs is close to the maximum values of vegetation, shadow and yellow ground objects, which indicates that the threshold method used for extracting BRs from these spectral models erroneously extracted vegetation, shadow and yellow ground objects as BRs.

Moreover, in the BRSAM, BGOSI and BOSI images of WorldView-2, the maximum values of all other image categories and the minimum value of BRs follow patterns similar to those of WorldView-3. In the BRSAM and BGOSI images, the minimum value of BRs is also close to the maximum values of vegetation and shadow. Because there are no yellow ground objects in WorldView-2 (there are cyan ground objects instead), the separation of blue and yellow ground objects in the BOSI images cannot be compared.

3.3. Blue roof extraction using a separate spectral model/index

In the BRSAM, BGOSI and BOSI images of WorldView-3, for BR extraction, the suitable thresholds are [22.914, 85.615], [0.955, 4.545] and [0.02, 0.257], respectively, and their extraction results are shown in Figure 6(a) to (c).

Figure 6.

BR extraction results obtained using WorldView-3 data. (a) Extraction results of BRSAM; (b) extraction results of BGOSI; (c) extraction results of BOSI; (d) extraction results of BRSAM + BGOSI + BOSI.

As shown in Figure 6, when BRSAM, BGOSI and BOSI are used separately to extract BRs from WorldView-3, most of the BR can be well extracted. Moreover, each spectral model/index has a comparatively good effect on BR extraction. However, it is unclear which spectral model/index has the best extraction effect on BR. Therefore, we focus on the more detailed parts of the classification results so that we can identify which BRs have not been detected and which non-BRs have been erroneously extracted as BR. We use this method to measure the performance of the three spectral models in BR extraction.

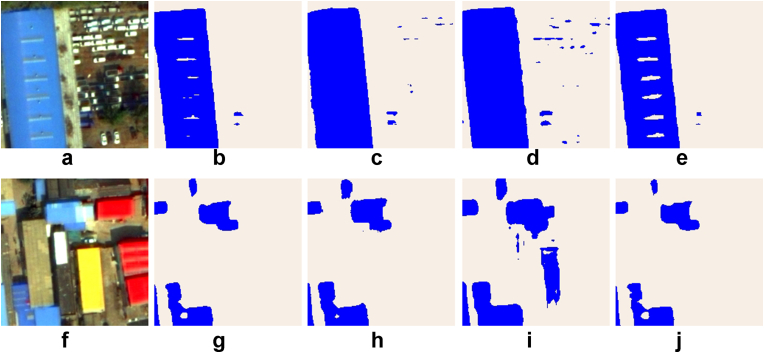

We determined that all three spectral model/index threshold methods can detect BR in the WorldView-3 and WorldView-2 images. However, in terms of extraction completeness, BOSI is the best, BGOSI is the second best, and BRSAM is the worst. When using the three spectral model/index threshold methods to extract BR, other ground objects are erroneously extracted as BR. Taking WorldView-3 as an example, the observation results are shown in Figure 7.

Figure 7.

Examples of over-extraction of BRs by the three spectral models. (a) Original image; (b) vegetation was erroneously extracted as BRs by BRSAM; (c) original image; (d) green plastic playground was erroneously extracted as BRs by BRSAM; (e) original image; (f) shadow was erroneously extracted as BRs by BGOSI; (g) original image; (h) the white parts of cars were erroneously extracted as BRs by BGOSI; (i) original image; (j) yellow ground objects were erroneously extracted as BRs by BOSI; (k) original image; (l) white ground objects were erroneously extracted as BRs by BOSI.

As shown in Figure 7(a) to (d), in BR extraction based on BRSAM, sporadic vegetation and green plastic playground were erroneously extracted as BRs. In BR extraction based on BGOSI, shadow and the white parts of cars were erroneously extracted as BRs (Figure 7(e) to (h)). In BR extraction based on BOSI, yellow and white ground objects (including the white parts of cars) were erroneously extracted as BRs (Figure 7(i) to (l)). In WorldView-2, we observed that BRSAM would extract vegetation and blue plastic playground as BRs, and BGOSI and BOSI would erroneously extract the white parts of cars as BRs. This false extraction phenomenon was similar to that in WorldView-3.

From the perspective of non-BRs being erroneously extracted as BRs, it is difficult to evaluate which of the three spectral models has the best performance. However, we determined that vegetation was correctly excluded by BOSI but erroneously extracted by BRSAM, and white and yellow ground objects were correctly excluded by BRSAM but erroneously extracted by BGOSI and BOSI. If the three spectral models are synthesised, then the shortcomings of each could be compensated by the advantages of the others, and the accuracy of BR extraction could be improved.

3.4. Blue roof extraction using a synthetic spectral model

We combined three spectral models (BRSAM + BGOSI + BOSI) to generate a new grayscale image, as shown in Figure 4(d). We used a threshold of [27.097, 83.013] to extract BR in the image of the model building area, and the extraction result is shown in Figure 6(d). From Figures 6(b) and 6(c), we selected small block images of the inaccurate extraction results obtained by BGOSI and BOSI and compared them with those of the extraction results of the synthetic spectral model, and the comparison results are shown in Figure 8.
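The combination step can be illustrated with a short sketch. It assumes that the synthetic image is the plain pixel-wise sum of the three layers, as the notation BRSAM + BGOSI + BOSI suggests, and applies the interval thresholds reported for WorldView-3. The 2 × 2 layers are hypothetical values chosen for illustration, and the function names are not from the study.

```python
import numpy as np


def synthetic_model(brsam, bgosi, bosi):
    """Pixel-wise sum of the three index layers (ASSUMPTION: unweighted addition)."""
    return np.asarray(brsam, float) + np.asarray(bgosi, float) + np.asarray(bosi, float)


def extract_in_range(layer, lower, upper):
    """Binary mask of pixels whose value lies within the closed threshold interval."""
    return (layer >= lower) & (layer <= upper)


# Hypothetical 2x2 layers: top-left behaves like a BR, top-right like vegetation,
# bottom-left like a white object, bottom-right like background.
brsam = np.array([[40.0, 25.0], [-10.0, 5.0]])
bgosi = np.array([[2.0, -1.5], [1.2, -0.5]])
bosi = np.array([[0.10, -0.02], [0.05, -0.01]])

combined = synthetic_model(brsam, bgosi, bosi)
mask_combined = extract_in_range(combined, 27.097, 83.013)  # threshold of the synthetic model
mask_brsam = extract_in_range(brsam, 22.914, 85.615)        # threshold of BRSAM alone
mask_bgosi = extract_in_range(bgosi, 0.955, 4.545)          # threshold of BGOSI alone
print(mask_combined)
```

In this toy example, the top-right pixel passes the BRSAM threshold and the bottom-left pixel passes the BGOSI threshold, but neither passes the threshold of the combined layer, so only the top-left pixel is kept.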

Figure 8.

Comparison of the extraction results of the synthetic spectral model and other models for BRs. (a) Original image; (b) BRSAM extraction results; (c) BGOSI extraction results; (d) BOSI extraction results; (e) BRSAM + BGOSI + BOSI extraction results; (f) original image; (g) BRSAM extraction results; (h) BGOSI extraction results; (i) BOSI extraction results; (j) Synthetic spectral index extraction results.

As shown in Figure 8, BGOSI and BOSI erroneously extracted the white parts of cars and yellow ground objects as BRs; the synthetic spectral model did not extract them, and the synthetic spectral model better controls the details of BRs than BRSAM. Furthermore, in the extraction results obtained by the synthetic spectral model, nearly no vegetation, green plastic playground and shadow were erroneously extracted as BRs. Thus, the combination of the three models can effectively exert their respective advantages and improve the accuracy of BR extraction.

3.5. Quantitative comparison of different extraction results

We used the spectral model/index threshold methods and MLC classification method to extract BR, and the confusion matrices of the WorldView-3 and WorldView-2 image classification results are shown in Tables 4 and 5, respectively.

Table 4.

Blue roof extraction results of different methods based on WorldView-3 imagery.

| Methods | Type | BO | Non-BO | Row total | PA (%) | UA (%) | OA (%) | KC |
|---|---|---|---|---|---|---|---|---|
| BRSAM | BO | 4876 | 86 | 4962 | 96.73 | 98.27 | 97.5077 | 0.9502 |
| | Non-BO | 165 | 4944 | 5109 | 98.29 | 96.77 | | |
| | Column total | 5041 | 5030 | 10,071 | | | | |
| BGOSI | BO | 5018 | 405 | 5423 | 99.54 | 92.58 | 95.7502 | 0.9150 |
| | Non-BO | 23 | 4625 | 4648 | 91.95 | 99.51 | | |
| | Column total | 5041 | 5030 | 10,071 | | | | |
| BOSI | BO | 4967 | 352 | 5319 | 98.53 | 93.38 | 95.7700 | 0.9154 |
| | Non-BO | 74 | 4678 | 4752 | 93.00 | 98.44 | | |
| | Column total | 5041 | 5030 | 10,071 | | | | |
| BRSAM + BGOSI + BOSI | BO | 4817 | 17 | 4834 | 95.56 | 99.65 | 97.6070 | 0.9521 |
| | Non-BO | 224 | 5013 | 5237 | 99.66 | 95.72 | | |
| | Column total | 5041 | 5030 | 10,071 | | | | |
| MLC | BO | 5038 | 430 | 5468 | 99.94 | 92.14 | 95.7005 | 0.9140 |
| | Non-BO | 3 | 4600 | 4603 | 91.45 | 99.93 | | |
| | Column total | 5041 | 5030 | 10,071 | | | | |

Table 5.

Blue roof extraction results of different methods based on WorldView-2 imagery.

| Methods | Type | BO | Non-BO | Row total | PA (%) | UA (%) | OA (%) | KC |
|---|---|---|---|---|---|---|---|---|
| BRSAM | BO | 2462 | 102 | 2564 | 96.89 | 96.02 | 96.4662 | 0.9293 |
| | Non-BO | 79 | 2479 | 2558 | 96.05 | 96.91 | | |
| | Column total | 2541 | 2581 | 5122 | | | | |
| BGOSI | BO | 2498 | 143 | 2641 | 98.31 | 94.59 | 96.3686 | 0.9274 |
| | Non-BO | 43 | 2438 | 2481 | 94.46 | 98.27 | | |
| | Column total | 2541 | 2581 | 5122 | | | | |
| BOSI | BO | 2540 | 347 | 2887 | 99.96 | 87.98 | 93.2058 | 0.8643 |
| | Non-BO | 1 | 2234 | 2235 | 86.56 | 99.96 | | |
| | Column total | 2541 | 2581 | 5122 | | | | |
| BRSAM + BGOSI + BOSI | BO | 2463 | 77 | 2540 | 96.93 | 96.97 | 96.9738 | 0.9395 |
| | Non-BO | 78 | 2504 | 2582 | 97.02 | 96.98 | | |
| | Column total | 2541 | 2581 | 5122 | | | | |
| MLC | BO | 2541 | 578 | 3119 | 100.00 | 81.47 | 88.7153 | 0.7747 |
| | Non-BO | 0 | 2003 | 2003 | 77.61 | 100.00 | | |
| | Column total | 2541 | 2581 | 5122 | | | | |

As shown in Table 4, in the WorldView-3 data, the three spectral models used to extract BRs achieved a high OA. The OA of BRSAM (97.51%) was higher than those of the two other spectral indices (95.75% for BGOSI and 95.77% for BOSI). However, the PA of BRs obtained with BRSAM (96.73%) was lower than those of BGOSI (99.54%) and BOSI (98.53%). BRSAM produced similar PAs for BRs and non-BRs (96.73% and 98.29%), whereas the two other spectral indices produced clearly different PAs (99.54% and 91.95% for BGOSI; 98.53% and 93.00% for BOSI). When the three spectral models were combined to distinguish BRs and non-BRs, the OA (97.61%) was slightly higher than that of BRSAM alone. Although the PA of BRs obtained with the combined spectral model (95.56%) was lower than that of each of the three spectral models used separately, the PA of non-BRs (99.66%) was considerably improved.

The maximum likelihood classification method also achieved a high OA (95.70%) in distinguishing BRs and non-BRs, but it was slightly lower than those of the spectral model/index threshold methods. Moreover, the PA of non-BRs obtained with the classification method was low (91.45%), indicating that the use of this method for BR extraction leads to over-extraction.

Table 5 shows that, in the WorldView-2 data, each spectral model/index can also achieve a high OA in distinguishing BRs and non-BRs. BRSAM and the combined spectral index in the WorldView-2 data have the same excellent performance as in the WorldView-3 data when distinguishing BRs and non-BRs. The classification method erroneously extracted non-BRs, which indicates the occurrence of the over-extraction phenomenon of BRs.

Usually, we see objects as blue because such objects have high reflectivity in the blue band of the visible range. Therefore, we naturally think that the blue band is an important band for detecting such objects. However, BRs also have strong reflectivity in the two near-infrared bands, so these bands are also important for blue object detection. The high reflectance of the iron roof coated with blue paint in the blue and near-infrared bands and its low reflectance in the green, yellow, red and red edge bands give the spectral curve of such objects a concave shape, which is different from that of other ground objects. This makes it possible to build models that express the differences between the spectral curves of BRs and other objects and thereby detect BRs.

The BRSAM created in this study has the physical dimensions of reflectance multiplied by wavelength; BGOSI is a ratio of reflectances and is therefore a dimensionless index; BOSI has the physical dimensions of reflectance squared. The three spectral models thus have different physical dimensions. From this perspective, it seems that the image layers derived from the three spectral models cannot be added together. However, all three models enhance the signal of the BR and weaken the signal of other objects (including shadows), and their combination can offset their respective shortcomings. When their image layers are added together, the signal of the BR in the composite image is still enhanced, and the signal of other objects that is not well weakened by an individual model can be further weakened. Therefore, in practical applications, the combination of the three spectral models could be considered to achieve accurate extraction of BRs.
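The layer combination discussed above can be sketched as follows. The source states only that the image layers are added together. The min-max rescaling applied before the addition is an assumption introduced here to put layers with different dimensions on a comparable scale. The pixel values and the threshold are hypothetical.

```python
def min_max_rescale(layer):
    """Rescale a 2-D list of values to the range [0, 1] (assumed preprocessing step)."""
    flat = [v for row in layer for v in row]
    lo, hi = min(flat), max(flat)
    span = hi - lo
    if span == 0:
        return [[0.0 for _ in row] for row in layer]
    return [[(v - lo) / span for v in row] for row in layer]


def combine_layers(layers):
    """Add the rescaled layers pixel by pixel."""
    rescaled = [min_max_rescale(layer) for layer in layers]
    rows, cols = len(layers[0]), len(layers[0][0])
    return [[sum(layer[r][c] for layer in rescaled) for c in range(cols)]
            for r in range(rows)]


def threshold(layer, t):
    """Mark pixels whose combined value exceeds t as BR."""
    return [[v > t for v in row] for row in layer]


# Hypothetical 2 x 2 layers. Pixel (0, 0) is a BR; pixel (0, 1) is a
# false alarm that is strong in the first layer only.
brsam_layer = [[60.0, 55.0], [5.0, 8.0]]      # reflectance x wavelength
bgosi_layer = [[0.80, 0.10], [0.20, 0.15]]    # dimensionless
bosi_layer = [[0.09, 0.01], [0.02, 0.015]]    # reflectance squared

combined = combine_layers([brsam_layer, bgosi_layer, bosi_layer])
mask = threshold(combined, 2.0)  # hypothetical threshold
print(mask)
```

In this toy case the false alarm at pixel (0, 1) is high in one layer only, so its combined value stays below the threshold, whereas the BR pixel is high in all three layers and is retained.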

4. Conclusions

In this study, a new spectral model, i.e. BRSAM, was created based on the polygon area difference between the spectral curves of BRs and other typical image categories in bands 2–8 of WorldView-3 (including WorldView-2). Then, we compared its extraction effect on BRs with that of the BGOSI and BOSI threshold methods and the classification method. The results showed that BRSAM erroneously extracted some vegetation and green plastic playgrounds as BRs, BGOSI erroneously extracted shadows and the white parts of cars as BRs, and BOSI erroneously extracted white ground objects (including the white parts of cars) and yellow objects as BRs. All three spectral models therefore had shortcomings when used to extract BRs. However, the disadvantages of each model can be compensated by the two other spectral models. The combination of the three spectral models was used to extract BRs; to a certain extent it overcame the disadvantages of the three spectral models when used separately, and its extraction effect was better than that of any one of them. Meanwhile, the model/index method was better than the classification method in BR extraction.

The BRSAM proposed in this study was designed according to the polygon area difference formed by the spectral curves of BRs and other image categories in bands 2–8 of WorldView-3. Because the spectral reflection of ground objects in images is affected by many factors and is unstable, in some cases, the polygons formed by the spectral curves of BRs and other image categories in these characteristic bands do not always have obvious area differences. Furthermore, there may be other image categories with spectral reflection characteristics similar to those of BRs. When these situations occur, they will affect the accuracy of BR extraction by the newly designed spectral model. Therefore, BR extraction using the newly designed spectral model may be limited by certain factors.

To evaluate the performance of the newly created spectral model, we used the threshold method to extract BRs. In follow-up studies, the performance of BRSAM, BGOSI, BOSI and their combination in extracting BRs should be tested on more data, and their calculation forms should be refined as the complexity of the data and the variety of ground object types increase.

Declarations

Author contribution statement

Huaipeng Liu: Conceived and designed the experiments; Performed the experiments; Analyzed and interpreted the data; Contributed reagents, materials, analysis tools or data; Wrote the paper.

Xiaoqing Zuo: Performed the experiments; Contributed reagents, materials, analysis tools or data.

Funding statement

This work was supported by the Scientific and Technological Project of Henan Province, China [202102210363] and the National Natural Science Foundation of China [32001250]. Both grants are from China. The former is a provincial project and the latter is a national project.

Data availability statement

Data will be made available on request.

Declaration of interest’s statement

The authors declare no conflict of interest.

Additional information

No additional information is available for this paper.

References

- Alidoost F., Arefi H. A CNN-Based approach for automatic building detection and recognition of roof types using a single aerial image. PFG-J. Photogramm. Rem. 2019;86:235–248. [Google Scholar]

- Erener A. Classification method, spectral diversity, band combination and accuracy assessment evaluation for urban feature detection. Int. J. Appl. Earth Obs. Geoinf. 2013;21:397–408. [Google Scholar]

- Gilani S.A.N., Awrangjeb M., Lu G. Segmentation of airborne point cloud data for automatic building roof extraction. GIScience Remote Sens. 2018;55:63–89. [Google Scholar]

- Li L., Wang C., Li S.H., Xi X.H. Building roof point extraction based on airborne LiDAR data. J. Univ. Chin. Acad. Sci. 2016;33:537–541. [Google Scholar]

- Liu H.P., An H.J. Extraction of four types of urban ground objects based on a newly created WorldView-2 multi-colour spectral index. J. Indian. Soc. Remote Sens. 2020;48:1091–1100. [Google Scholar]

- Liu H.P., Zhang Y.X., An H.J. Urban blue ground object extraction by using a new spectral index from WorldView-2. Fresenius Environ. Bull. 2020;29:9654–9660. [Google Scholar]

- Liu W.T., Li S.H., Qin Y.C. Automatic building roof extraction with fully convolutional neural network. J. Geo-Inf. Sci. 2018;20:1562–1570. [Google Scholar]

- Notarangelo N.M., Mazzariello A., Albano R., Sole A. Comparing three machine learning techniques for building extraction from a digital surface model. Appl. Sci. 2021;11:6072. [Google Scholar]

- Samsudin S.H., Shafri H.Z.M., Hamedianfar A. Development of spectral indices for roofing material condition status detection using field spectroscopy and WorldView-3 data. J. Appl. Remote Sens. 2016;10 [Google Scholar]

- Schlosser A.D., Szabó G., Bertalan L., Varga Z., Enyedi P., Szabó S. Building extraction using orthophotos and dense point cloud derived from visual band aerial imagery based on machine learning and segmentation. Rem. Sens. 2020;12:2397. [Google Scholar]

- Siddula M., Dai F., Ye Y., Fan J. Unsupervised feature learning for objects of interest detection in cluttered construction roof site images. Procedia Eng. 2016;145:428–435. [Google Scholar]

- Wang Y.H., Gu L.J., Li X.F., Ren R.Z. Building extraction in multitemporal high-resolution remote sensing imagery using a multifeature LSTM network. Geosci. Rem. Sens. Lett. IEEE. 2021;18:1645–1649. [Google Scholar]

- You Y., Wang S., Ma Y., Chen G., Wang B., Shen M., Liu W. Building detection from VHR remote sensing imagery based on the morphological building index. Rem. Sens. 2018;10:1287. [Google Scholar]

- Zhang C.S., He Y.X., Fraser C.S. Spectral clustering of straight-line segments for roof plane extraction from airborne lidar Point clouds. Geosci. Rem. Sens. Lett. IEEE. 2018;15:267–271. [Google Scholar]

- Zhang Y. Detection of urban housing development by fusing multisensor satellite data and performing spatial feature post-classification. Int. J. Rem. Sens. 2001;22:3339–3355. [Google Scholar]

- Zhao C., Zhang B.M., Chen X.W., Guo H.T., Lu J. Accurate and automatic billing roof extraction using neighborhood information of point clouds. Acta Geod. Cartogr. Sinica. 2017;46:1123–1144. [Google Scholar]

- Zhou D.J., Wang G.Z., He G.J., Long T.F., Yin R.Y., Zhang Z.M., Chen S.B., Luo B. Robust building extraction for high spatial resolution remote sensing images with self-attention network. Sensors. 2020;20:7241. doi: 10.3390/s20247241. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Zhu J.J., Fan X.T., Shao Y. Building’s roof extraction by fusing high-resolution SAR with optical images. J. Grad. Sch. Chin. Acad. Sci. 2006;23:178–185. [Google Scholar]
