Abstract

We introduce a region-specific diffeomorphic metric mapping (RDMM) registration approach. RDMM is non-parametric, estimating spatio-temporal velocity fields which parameterize the sought-for spatial transformation. Regularization of these velocity fields is necessary. In contrast to existing non-parametric registration approaches using a fixed spatially-invariant regularization, for example, the large displacement diffeomorphic metric mapping (LDDMM) model, our approach allows for spatially-varying regularization which is advected via the estimated spatio-temporal velocity field. Hence, not only can our model capture large displacements, it does so with a spatio-temporal regularizer that keeps track of how regions deform, which is a more natural mathematical formulation. We explore a family of RDMM registration approaches: 1) a registration model where regions with separate regularizations are pre-defined (e.g., in an atlas space or for distinct foreground and background regions), 2) a registration model where a general spatially-varying regularizer is estimated, and 3) a registration model where the spatially-varying regularizer is obtained via an end-to-end trained deep learning (DL) model. We provide a variational derivation of RDMM, showing that the model can assure diffeomorphic transformations in the continuum, and that LDDMM is a particular instance of RDMM. To evaluate RDMM performance we experiment 1) on synthetic 2D data and 2) on two 3D datasets: knee magnetic resonance images (MRIs) of the Osteoarthritis Initiative (OAI) and computed tomography images (CT) of the lung. Results show that our framework achieves comparable performance to state-of-the-art image registration approaches, while providing additional information via a learned spatio-temporal regularizer. Further, our deep learning approach allows for very fast RDMM and LDDMM estimations. Code is available at https://github.com/uncbiag/registration.

1. Introduction

Quantitative analysis of medical images frequently requires the estimation of spatial correspondences, i.e.image registration. For example, one may be interested in capturing knee cartilage changes over time, localized changes of brain structures, or how organs at risk move between planning and treatment for radiation treatment. Specifically, image registration seeks to estimate the spatial transformation between a source image and a target image, subject to a chosen transformation model.

Transformations can be parameterized via low-dimensional parametric models (e.g., an affine transformation), but more flexible models are required to capture subtle local deformations. Such registration models [3, 32] may have large numbers of parameters, e.g., a large number of B-spline control points [30] or may even be non-parametric where vector fields are estimated [5, 22]. Spatial regularity can be achieved by appropriate constraints on displacement fields [14] or by parameterizing the transformation via integration of a sufficiently regular stationary or time-dependent velocity field [5, 15, 38, 8, 41]. Given sufficient regularization, diffeomorphic transformations can be assured in the continuum. A popular approach based on time-dependent velocity fields is LDDMM [5, 15]. Optimal LDDMM solutions are geodesics and minimize a geodesic distance. Consequentially, one may directly optimize over a geodesic’s initial conditions in a shooting approach [40].

Most existing non-parametric image registration approaches use spatially-invariant regularizers. However, this may not be realistic. E.g., when registering inhale to exhale images of a lung one expects large deformations of the lung, but not of the surrounding tissue. Hence, a spatially-varying regularization would be more appropriate. As the regularizer encodes the deformation model this then allows anticipating different levels of deformation at different image locations.

While spatially-varying regularizers may be used in LDDMM variants [31] existing approaches do not allow for time-varying spatially-varying regularizers. However, such regularizers would be natural for large displacement as they can move with a deforming image. Hence, we propose a family of registration approaches with spatio-temporal regularizers based on advecting spatially-varying regularizers via an estimated spatio-temporal velocity field. Specifically, we extend LDDMM theory, where the original LDDMM model becomes a special case. In doing so, our entire model, including the spatio-temporal regularizer is expressed via the initial conditions of a partial differential equation. We propose three different approaches based on this model: 1) A model for which the regularizer is specified region-by-region. This would, for example, be natural when registering a labeled atlas image to a target image, as illustrated in Fig. 1. 2) A model in which the regularizer is estimated jointly with the spatio-temporal velocity fields. 3) A deep learning model which predicts the regularizer and the initial velocity field, thereby resulting in a very fast registration approach.

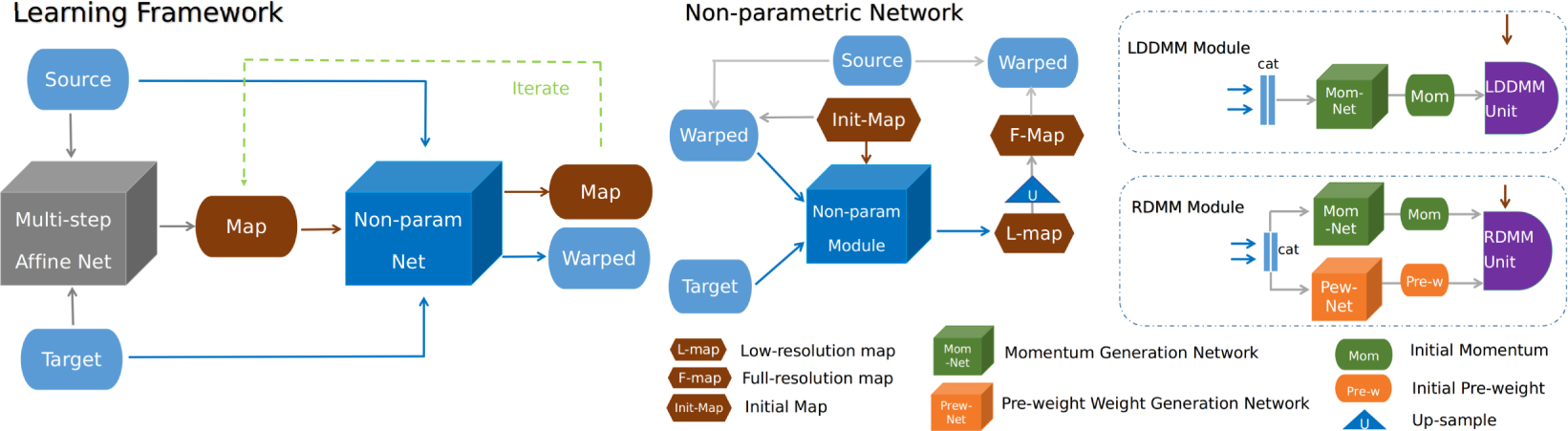

Figure 1:

RDMM registration example. The goal is to register the dark blue area with high fidelity (i.e., allowing large local deformations), while assuring small deformations in the cyan area. Initially (t = 0), a spatially-varying regularizer (fifth column) is specified in the source image space, where dark blue indicates small regularization and yellow large regularization. Specifically, regularizer values indicate effective local standard deviations of a local multi-Gaussian regularizer. Since the transformation map and the regularizer are both advected according to the estimated velocity field, the shape of the regularizer follows the shape of the deforming dark blue region and is of the same shape as the region of interest in the target space at the final time (t = 1) (as can be seen in the second and the last columns). Furthermore, objects inside the dark blue region are indeed aligned well, whereas objects in the cyan region were not strongly deformed due to the larger regularization there.

Related Work

Only limited work [26, 27, 36, 39] on spatially-varying regularizers exists. The most related work [23] learns a spatially-varying regularizer for a stationary velocity field registration model. In [23] both the velocity field and the regularizer are assumed to be constant in time. In contrast, both are time-dependent in RDMM. Spatially-varying regularization has also been addressed from a Bayesian view in [34] by putting priors on B-spline transformation parameters. However, metric estimation in [34] is in a fixed atlas-space, whereas RDMM addresses general pairwise image registration.

Contributions:

1) We propose RDMM, a new registration model for large diffeomorphic deformations with a spatio-temporal regularizer capable of following deforming objects and thereby providing a more natural representation of deformations than existing non-parametric models, such as LDDMM. 2) Via a variational formulation we derive shooting equations that allow specifying RDMM solutions entirely based on their initial conditions: an initial momentum field and an initial spatially-varying regularizer. 3) We prove that diffeomorphisms can be obtained for RDMM in the continuum for sufficiently regular regularizers. 4) We explore an entire new family of registration models based on RDMM and provide optimization-based and very fast deep-learning-based approaches to estimate the initial conditions of these registration models. 5) We demonstrate the utility of our approach via experiments on synthetic data and on two 3D medical image datasets.

2. Standard LDDMM Model

LDDMM [5] is a non-parametric registration approach based on principles from fluid mechanics. It is based on the estimation of a spatio-temporal velocity field v(t, x) from which the sought-for spatial transformation φ can be computed via integration of ∂tφ (t, x) = v(t, φ(t, x)). For appropriately regularized velocity fields [13], diffeomorphic transformations can be guaranteed. The optimization problem underlying LDDMM for images can be written as (∇ being the gradient; 〈·,·〉 indicating the inner product)

| (2.1) |

Here, the goal is to register the source image I0 to the target image I1 in unit time. Sim(A, B) is a similarity measure between images, often sum of squared differences, normalized cross correlation, or mutual information. Furthermore, we note that I(1, y) = I0 ◦ φ−1(1, y), where φ−1 denotes the inverse of φ in the target image space. The evolution of this map can be expressed as

| (2.2) |

where D denotes the Jacobian. Equivalently, in Eq. (2.1), we directly advect the image [15, 40] via ∂tI + 〈∇I, v〉 = 0. To assure smooth transformations, LDDMM penalizes non-smooth velocity fields via the norm , where L is a differential operator.

At optimality of Eq. (2.1) the Euler-Lagrange equations are (div denoting the divergence)

| (2.3) |

Here, λ is the adjoint variable to I, also known as the scalar momentum [15, 40]. As L−1 is a smoother it is often chosen as a convolution, i.e., v = K ⋆ (λ∇I). Note that m(t, x) := λ∇I is the vector-valued momentum and thus v = K ⋆ m. One can directly optimize over v solving Eq. (2.3) [5] or regard Eq. (2.3) as a constraint defining a geodesic path [40] and optimize over all such solutions subject to a penalty on the initial scalar momentum as well as the similarity measure. Alternatively, one can express 1 these equations entirely with respect to the vector-valued momentum, m, resulting in the Euler-Poincaré equation for diffeomorphisms (EPDiff) [44]:

| (2.4) |

which defines the evolution of the spatio-temporal velocity field based on the initial condition, m0, of the momentum, from which the transformation φ can be computed using Eq. (2.2). Both (2.3) and (2.4) can be used to implement shooting-based LDDMM [40, 35]. As LDDMM preserves the momentum, is constant over time and hence a shooting formulation can be written as

| (2.5) |

subject to the EPDiff equation (2.4) including the advection of φ−1, where I(1) = I0 ◦ φ−1(1).

A shooting-formulation has multiple benefits: 1) it allows for a compact representation of φ via its initial conditions; 2) as the optimization is w.r.t. the initial conditions, a solution is a geodesic by construction; 3) these initial conditions can be predicted via deep-learning, resulting in very fast registration algorithms which inherit the theoretical properties of LDDMM [43, 42]. We therefore use this formulation as the starting point for RDMM in Sec 3.

3. Region-Specific Diffeomorphic Metric Mapping (RDMM)

In standard LDDMM approaches, the regularizer L (equivalently the kernel K) is spatially invariant. While recent work introduced spatially-varying metrics in LDDMM, for stationary velocity fields, or for displacement-regularized registration [27, 31, 23, 26], all of these approaches use a temporally fixed regularizer. Hereafter, we generalize LDDMM by advecting a spatially-varying regularizer via the estimated spatio-temporal velocity field. Standard LDDMM is a special case of our model.

Following [23], we introduce (Vi)i=0,…,N−1 a finite family of reproducing kernel Hilbert spaces (RKHS) which are defined by the pre-defined Gaussian kernels with σ0 < … < σN−1. We use a partition of unity wi(x, t), i = 0, … ,N − 1, on the image domain. As we want the kernel to be active on the region determined by wi we introduce the vector field for vi ∈ Vi On this new space of vector fields, there exists a natural RKHS structure defined by

| (3.1) |

whose kernel is . Thus the velocity reads (see suppl. 7.1 for the derivation)

| (3.2) |

which can capture multi-scale aspects of deformation [28]. In LDDMM, the weights are constant and pre-defined. Here, we allow spatially-dependent weights wi(x). In particular (see formulation below), we advect them via, v(t, x) thereby making them spatio-temporal, i.e., wi(t, x). In this setup, weights only need to be specified at initial time t = 0. As the Gaussian kernels are fixed convolutions can still be efficiently computed in the Fourier domain.

We prove (see suppl. 7.4, for the proof) that, for sufficiently smooth weights wi, the velocity field is bounded and its flow is a diffeomorphism. Following [23], to assure the smoothness of the initial weights we instead optimize over initial pre-weights, hi(0, x) ≥ 0, s.t. wi(0, x) = Gσ ⋆ hi(0, x), where Gσ is a fixed Gaussian with a small standard deviation, σ. In addition, we constrain to locally sum to one. The optimization problem for our RDMM model then becomes

| (3.3) |

subject to the constraints

| (3.4) |

As for LDDMM, we can compute the optimality conditions for Eq. (3.3) which we use for shooting.

Theorem 1 (Image-based RDMM optimality conditions).

With the adjoints γi (for hi) and λ (for I) and the momentum the optimality conditions for (3.3) are:

| (3.5) |

| (3.6) |

| (3.7) |

subject to

| (3.8) |

Theorem 2 (Momentum-based RDMM optimality conditions).

The RDMM optimality conditions of Thm. (1) can be written entirely w.r.t. the momentum (as defined in Thm (1)). They are:

| (3.9) |

| (3.10) |

where hi(t, x) = hi(0, x) ◦ φ(t, x)−1 and subject to the constraints of Eq. (3.8) which define the relationship between the velocity and the momentum.

For spatially constant pre-weights, we recover EPDiff from the momentum-based RDMM optimality conditions. Instead of advecting hi via φ(t, x)−1, we can alternatively advect the pre-weights directly, as ∂thi + 〈∇hi, v〉 = 0, hi(0) = (hi)0. For the image-based and the momentum-based formulations, the velocity field v is obtained by smoothing the momentum, ..

Regularization of the Regularizer

Given the standard deviations σ0 < … < σN−1 of Eq. (3.2), assigning larger weights to the Gaussians with larger standard deviation will result in smoother (i.e., more regular) and therefore simpler transformations. To encourage choosing simpler transformations we follow [23], where a simple optimal mass transport (OMT) penalty on the weights is used. Such an OMT penalty is sensible as the non-negative weights, {wi}, sum to one and can therefore be considered a discrete probability distribution. The chosen OMT penalty is designed to measure differences from the probability distribution assigning all weight to the Gaussian with the largest standard deviation, i.e., wN−1 = 1 and all other weights being zero. Specifically, the OMT penalty of [23] is , where s is a chosen power. To make the penalty more consistent with penalizing weights for a standard multi-Gaussian regularizer (as our regularizer contains effectively the weights squared) we do not penalize the weights directly, but instead penalize their squares using the same form of OMT penalty. Further, as the regularization only affects the initial conditions for the pre-weights, the evolution equations for the optimality conditions (i.e., the modified EPDiff equation) do not change. Additional regularizers, such as total variation terms as proposed in [23], are possible and easy to integrate into our RDMM framework as they only affect initial conditions. For simplicity, we focus on regularizing via OMT.

Shooting Formulation

As energy is conserved (see suppl. 7.3 for the proof) the momentum-based shooting formulation becomes

| (3.11) |

subject to the evolution equations of Thm. 2. Similarly, the shooting formulation can use the image-based evolution equations of Thm. 1 where optimization would be over λ(0) instead of m(0).

4. Learning Framework

The parameters for RDMM, i.e., the initial momentum and the initial pre-weights, can be obtained by numerical optimization, either over the momentum and the pre-weights or only over the momentum if the pre-weights are prescribed. Just as for the LDDMM model, such a numerical optimization is computationally costly. Consequentially, various deep-learning (DL) approaches have been proposed to instead predict displacements [4, 6], stationary velocity [29] or momentum fields [43, 23]. Supervised [43, 42] and unsupervised [16, 11, 18, 4, 10] DL registration approaches exist. All of them are fast as only a regression solution needs to be evaluated at test time and no further numerical optimization is necessary. Additionally, such DL models benefit from learning over an entire population instead of relying only on information from given image-pairs.

Most non-parametric registration approaches are not invariant to affine transformations based on the chosen regularizers. Hence, for such non-parametric methods, affine registration is typically performed first as a pre-registration step to account for large, global displacements or rotations. Similarly, we make use of a two-step learning framework (Fig. 2 (left)) learning affine transformations and subsequent non-parametric deformations separately. For the affine part, a multi-step affine network is used to predict the affine transformation following [33]. For the non-parametric part, we use two different deep learning approaches, illustrated in the right part of Fig. 2, to predict 1) the LDDMM initial momentum, m0, in Eq. (2.5) and 2) the RDMM initial momentum, m0, and the pre-weights, {hi(0)*}, in Eq. (3.11). Overall, including the affine part, we use two networks for LDDMM prediction and three networks for RDMM prediction.

Figure 2:

Illustration of the learning framework (left) and its non-parametric registration component (right). A multi-step affine-network first predicts the affine transformation map [33] followed by an iterable non-parametric registration to estimate the final transformation map. The LDDMM component uses one network to generate the initial momentum. RDMM also uses a second network to predict the initial regularizer pre-weights. We integrate the RDMM evolution equations at low-resolution (based on the predicted initial conditions) to save memory. The final transformation map is obtained via upsampling. See suppl. 7.6 for the detailed structure of the LDDMM/RDMM units.

We use low-resolution maps and map compositions for the momentum and the pre-weight networks. This reduces computational cost significantly. The final transformation map is obtained via upsampling, which is reasonable as we assume smooth transformations. We use 3D UNets [9] for momentum and pre-weight prediction. Both the affine and the non-parametric networks can be iterated to refine the prediction results: i.e. the input source image and the initial map are replaced with the currently warped image and the transformation map respectively for the next iteration.

During training of the non-parametric part, the gradient is first backpropagated through the differentiable interpolation operator, then through the LDDMM/RDMM unit, followed by the momentum generation network and the pre-weight network.

Inverse Consistency:

For the DL approaches we follow [33] and compute bidirectional (source to target denoted as st and target to source denoted as ts) registration losses and an additional symmetry loss, , where id refers to the identity map. This encourages symmetric consistency.

5. Experimental Results and Setup

Datasets:

To demonstrate the behavior of RDMM, we evaluate the model on three datasets: 1) a synthetic dataset for illustration, 2) a 3D computed tomography dataset (CT) of a lung, and 3) a large 3D magnetic resonance imaging (MRI) dataset of the knee from the Osteoarthritis Initiative (OAI). The synthetic dataset consists of three types of shapes (rectangles, triangles, and ellipses). There is one foreground object in each image with two objects inside and at most five objects outside. Each source image object has a counterpart in the target image; the shift, scale, and rotations are random. We generated 40 image pairs of size 2002 for evaluation. Fig. 1 shows example synthetic images. The lung dataset consists of 49 inspiration/expiration image pairs with lung segmentations. Each image is of size 1603. We register from the expiration phase to the inspiration phase for all 49 pairs. The OAI dataset consists of 176 manually labeled MRI from 88 patients (2 longitudinal scans per patient) and 22,950 unlabeled MR images from 2,444 patients. Labels are available for femoral and tibial cartilage. We divide the patients into training (2,800 pairs), validation (50 pairs) and testing groups (300 pairs), with the same evaluation settings as for the cross-subject experiments in [33].

Deformation models:

Affine registration is performed before each LDDMM/RDMM registration.

Affine model:

We implemented a multi-scale affine model solved via numerical optimization and a multi-step deep neural network to predict the affine transformation parameters.

Family of non-parametric models:

We implemented both optimization and deep-learning versions of a family of non-parametric registration methods: a vector-momentum based stationary velocity field model (vSVF) (v(x), w = const), LDDMM (v(t, x), w = const), and RDMM (v(t, x), w(t, x)). We use the dopri5 solver using the adjoint sensitivity method [7] to integrate the evolution equations in time. For solutions based on numerical optimization, we use a multi-scale strategy with L-BGFS [19] as the optimizer. For the deep learning models, we compute solutions for a low-resolution map (factor of 0.5) which is then upsampled. We use Adam [17] for optimization.

Image similarity measure:

We use multi-kernel Localized Normalized Cross Correlation (mkLNCC) [33]. mk-LNCC computes localized normalized cross correlation (NCC) with different window sizes and combines these measures via a weighted sum.

Weight visualization:

To illustrate the behavior of the RDMM model, we visualize the estimated standard deviations, i.e.the square root of the local variance .

Estimation approaches:

To illustrate different aspects of our approach we perform three types of RDMM registrations: 1) registration with a pre-defined regularizer (Sec. 5.1), 2) registration with simultaneous optimization of the regularizer, via optimization of the initial momentum and pre-weights (Sec. 5.2), and 3) registration via deep learning predicting the initial momentum and regularizer pre-weights (Sec. 5.3). Detailed settings for all approaches are in the suppl. 7.8.

5.1. Registration with a pre-defined regularizer

To illustrate the base capabilities of our models, we prescribe an initial spatially-varying regularizer in the source image space. We show experimental results for pair-wise registration of the synthetic data as well as for the 3D lung volumes.

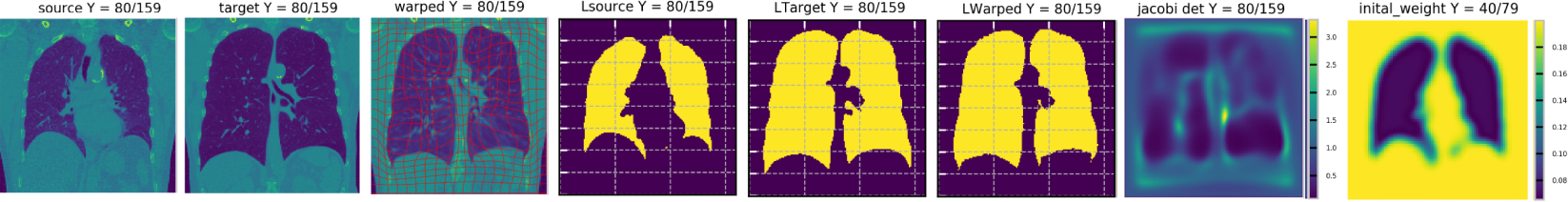

Fig. 1 shows the registration result for an example synthetic image pair. We use small regularization in the blue area and large regularization in the surrounding area. As expected, most of the deformations occur inside the blue area as the regularizer is more permissive there. We also observe that the regularizer is indeed advected with the image. For the real lung image data we use a small regularizer inside the lung (as specified by the given lung mask) and a large regularizer in the surrounding tissue. Fig. 3 shows that most of the deformations are indeed inside the lung area while the deformation outside the lung is highly regularized as desired. We evaluate the Dice score between the warped lung and the target lung, achieving 95.22% on average (over all inhalation/exhalation pairs). Fig. 3 also shows the determinant of the Jacobian of the transformation map Jφ−1 (x) := ∣Dφ−1(x)∣ (defined in target space): the lung region shows small values (illustrating expansion) while other region are either volume preserved (close to 1) or compressed (bigger than 1). Overall the deformations are smooth.

Figure 3:

RDMM lung registration result with a pre-defined regularizer. Lung images at expiration (source) are registered to the corresponding inspiration (target) images. Last column: Inside the lung a regularizer with small standard deviation (σi={0.04, 0.06, 0.08}, ) and outside the lung with large standard deviation is used (σi={0.2}, ). Deformations are largely inside the lung, the surrounding tissue is well regularized as expected. Columns 1 to 3 show results in image space while columns 4 to 6 refer to the results in label space. The second to last column shows the determinant of the Jacobian of the spatial transformation, φ−1.

5.2. Registration with an optimized regularizer

In contrast to the experiments in Sec. 5.1, we jointly estimate the initial momentum and the initial regularizer pre-weights for pairwise registration. We use the synthetic data and the knee MRIs.

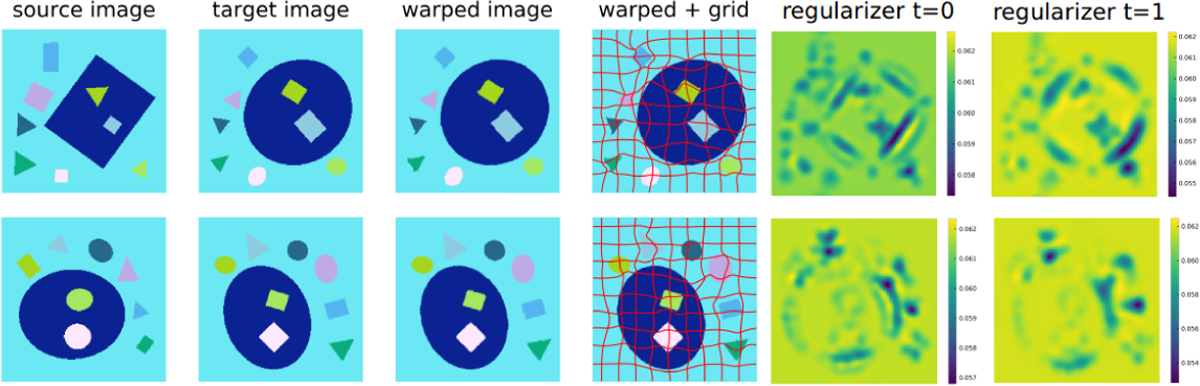

Fig. 4 shows that the registration warped every object in the source image to the corresponding object in the target image. The visualization of the initial regularizer shows that low regularization is assigned close to object edges making them locally deformable. The visualizations of the regularizer at the initial time point (t = 0) and the final time point (t = 1) show that it deforms with the image. That low regularization values are localized is sensible as the OMT regularizer prefers spatially sparse solutions (in the sense of sparsely assigning low levels of regularity). If the desired deformation model is piecewise constant our RDMM model could readily be combined with a total variation penalty as in [23]. Fig. 5 compares average Dice scores for all objects for vSVF, LDDMM and RDMM separately. They all achieve high and comparable performance indicating good registration quality. But only RDMM provides additional information about local regularity.

Figure 4:

Illustration of the RDMM registration results with an optimized regularizer on the synthetic dataset. All objects are warped from the source image space to the target image space. The last two columns show the regularizer (σ(x)) at t = 0 and t = 1 respectively.

Figure 5:

Average Dice scores for all objects. Left to right: SVF, LD-DMM and RDMM.

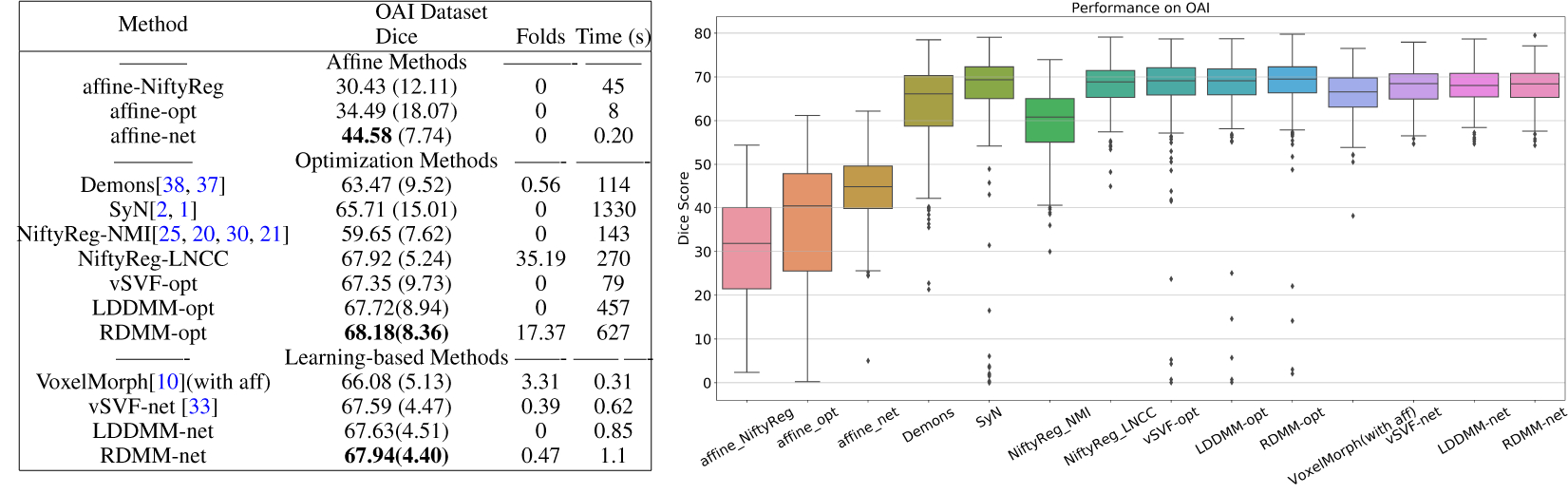

We further evaluate RDMM on 300 images pairs from the OAI dataset. The optimization methods section of Tab. 6 compares registration performance for different optimization-based algorithms. RDMM achieves high performance. While RDMM is in theory diffeomorphic, we observe some foldings, whereas no such foldings appear for LDDMM and SVF. This is likely due to inaccuracies when discretizing the evolution equations and when discretizing the determinant of the Jacobian. Further, RDMM may locally exhibit stronger deformations than LDDMM or vSVF, especially when the local regularization (via OMT) is small, making it numerically more challenging. Most of the folds appear at the image boundary (and are hence likely due to boundary discretization artifacts) or due to anatomical inconsistency in the source and target images, where large deformations may be estimated.

5.3. Registration with a learnt regularizer via deep learning

Finally, we evaluate our learning framework for non-parametric registration approaches on the OAI dataset. For vSVF, we follow [33] to predict the momentum. We implement the same approach for LDDMM, where the vSVF inference unit is replaced by one for LDDMM (i.e., instead of advecting via a stationary velocity field we integrate EPDiff). Similarly, for RDMM, the inference unit is replaced by the evolution equation for RDMM and we use an additional network to predict the regularizer pre-weights. Fig. 6 shows the results. The non-parametric DL models achieve comparable performance to their optimization counterparts, but are much faster, while learning-based RDMM simultaneously predicts the initial regularizer which captures aspects of the knee anatomy. Fig. 7 shows the determinant of the Jacobian of the transformation map. It is overall smooth and folds are much less frequent than for the corresponding optimization approach, because the DL model penalizes transformation asymmetry. Fig. 7 also clearly illustrates the benefit of training the DL model based on a large image dataset: compared with the optimization approach (which only works on individual image pairs), the initial regularizer predicted by the deep network captures anatomically meaningful information much better: the bone (femur and tibia) and the surrounding tissue show large regularity.

Figure 6:

Comparison of registration methods for cross-subject registrations on the OAI dataset based on Dice scores. -opt and -net refer to optimization- and DL-based methods respectively. For all DL methods, we report performance after two-step refinement. Folds refers to the absolute value of the sum of the determinant of the Jacobian in the folding area (i.e., where the determinant of the Jacobian is negative); Time refers to the average registration time for a single image pair.

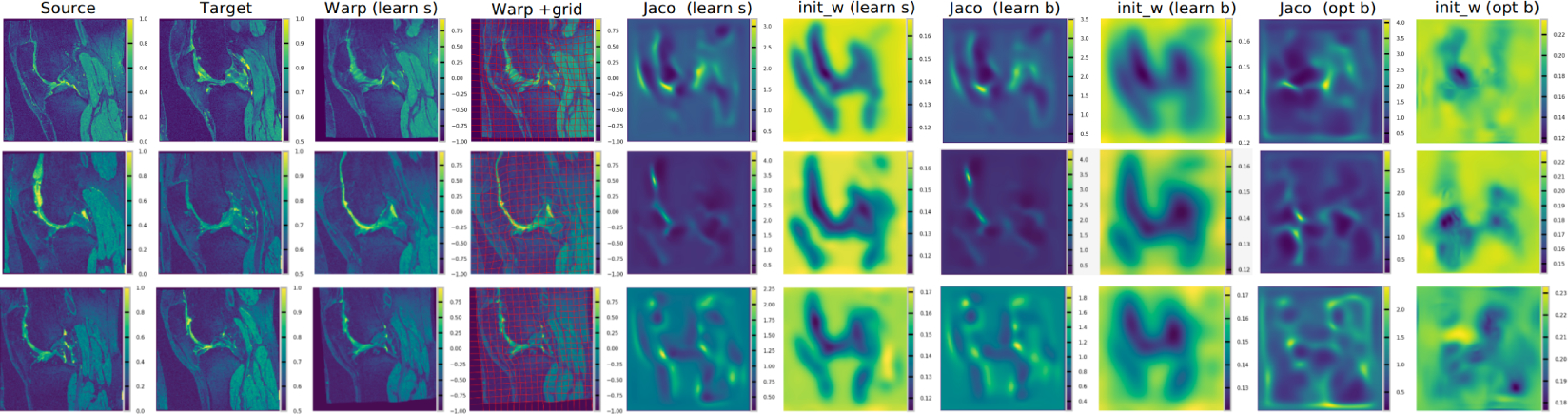

Figure 7:

Illustration of RDMM registration results on the OAI dataset. “* s” and “* b” refer to the regularizer with σ in Gσ set to 0.04 and 0.06 respectively; “learn *” and “opt *” refer to a learnt regularizer and an optimized one respectively; “Jaco” refers to the absolute value of the determinant of the Jacobian; “init_w” refers to the initial weight map of the regularizer (as visualized via σ(x)). The first four columns refer to registration results in image space.

6. Conclusion and Future Work

We introduced RDMM, a generalization of LDDMM registration which allows for spatially-varying regularizers advected with a deforming image. In RDMM, both the estimated velocity field and the estimated regularizer are time- and spatially-varying. We used a variational approach to derive shooting equations which generalize EPDiff and allow the parameterization of RDMM using only the initial momentum and regularizer pre-weights. We also prove that diffeomorphic transformation can be obtained for RDMM with sufficiently regular regularizers. Experiments with pre-defined, optimized, and learnt regularizers show that RDMM is flexible and its solutions can be estimated quickly via deep learning.

Future work could focus on numerical aspects and explore different initial constraints, such as total-variation constraints, depending on the desired deformation model. Indeed, a promising avenue of research consists in learning regularizers which include more physical/mechanical a-priori information in the deformation model. For instance, a possible first step in this direction consists in parameterizing non-isotropic kernels to favor deformations in particular directions.

Supplementary Material

Acknowledgements

Research reported in this work was supported by the National Institutes of Health (NIH) and the National Science Foundation (NSF) under award numbers NSF EECS-1711776 and NIH 1R01AR072013. The content is solely the responsibility of the authors and does not necessarily represent the official views of the NIH or the NSF. We would also like to thank Dr. Raúl San José Estépar for providing the lung data.

Footnotes

33rd Conference on Neural Information Processing Systems (NeurIPS 2019), Vancouver, Canada.

Thm. (2) in suppl. 7.2 provides a more generalized derivation.

Contributor Information

Zhengyang Shen, UNC Chapel Hill.

François-Xavier Vialard, LIGM, UPEM.

Marc Niethammer, UNC Chapel Hill.

References

- [1].Avants BB, Epstein CL, Grossman M, and Gee JC. Symmetric diffeomorphic image registration with cross-correlation: evaluating automated labeling of elderly and neurodegenerative brain. Medical image analysis, 12(1):26–41, 2008. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [2].Avants BB, Tustison N, and Song G. Advanced normalization tools (ANTS). Insight j, 2:1–35, 2009. [Google Scholar]

- [3].Bajcsy R and Kovačič S. Multiresolution elastic matching. CVGIP, 46(1):1–21, 1989. [Google Scholar]

- [4].Balakrishnan G, Zhao A, Sabuncu MR, Guttag J, and Dalca AV. An unsupervised learning model for deformable medical image registration. In CVPR, pages 9252–9260, 2018. [Google Scholar]

- [5].Beg MF, Miller MI, Trouvé A, and Younes L. Computing large deformation metric mappings via geodesic flows of diffeomorphisms. IJCV, 61(2):139–157, 2005. [Google Scholar]

- [6].Cao X, Yang J, Zhang J, Wang Q, Yap P-T, and Shen D. Deformable image registration using a cue-aware deep regression network. IEEE Transactions on Biomedical Engineering, 65(9):1900–1911, 2018. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [7].Chen TQ, Rubanova Y, Bettencourt J, and Duvenaud DK. Neural ordinary differential equations. In Advances in Neural Information Processing Systems, pages 6571–6583, 2018. [Google Scholar]

- [8].Chen Z, Jin H, Lin Z, Cohen S, and Wu Y. Large displacement optical flow from nearest neighbor fields. In CVPR, pages 2443–2450, 2013. [Google Scholar]

- [9].Çiçek Ö, Abdulkadir A, Lienkamp SS, Brox T, and Ronneberger O. 3D U-Net: learning dense volumetric segmentation from sparse annotation. In International conference on medical image computing and computer-assisted intervention, pages 424–432. Springer, 2016. [Google Scholar]

- [10].Dalca AV, Balakrishnan G, Guttag J, and Sabuncu MR. Unsupervised learning for fast probabilistic diffeomorphic registration. MICCAI, 2018. [DOI] [PubMed] [Google Scholar]

- [11].de Vos BD, Berendsen FF, Viergever MA, Staring M, and Išgum I. End-to-end unsupervised deformable image registration with a convolutional neural network. In DLMIA, pages 204–212. Springer, 2017. [Google Scholar]

- [12].Delon J, Salomon J, and Sobolevski A. Local matching indicators for transport problems with concave costs. SIAM Journal on Discrete Mathematics, 26(2):801–827, 2012. [Google Scholar]

- [13].Dupuis P, Grenander U, and Miller MI. Variational problems on flows of diffeomorphisms for image matching. Quarterly of applied mathematics, pages 587–600, 1998. [Google Scholar]

- [14].Haber E and Modersitzki J. Image registration with guaranteed displacement regularity. International Journal of Computer Vision, 71(3):361–372, 2007. [Google Scholar]

- [15].Hart GL, Zach C, and Niethammer M. An optimal control approach for deformable registration. In CVPR, pages 9–16. IEEE, 2009. [Google Scholar]

- [16].Jaderberg M, Simonyan K, Zisserman A, et al. Spatial transformer networks. In NIPS, pages 2017–2025, 2015. [Google Scholar]

- [17].Kingma DP and Ba J. Adam: A method for stochastic optimization. ICLR, 2014. [Google Scholar]

- [18].Li H and Fan Y. Non-rigid image registration using fully convolutional networks with deep self-supervision. ISBI, 2018. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [19].Liu DC and Nocedal J. On the limited memory BFGS method for large scale optimization. Mathematical programming, 45(1–3):503–528, 1989. [Google Scholar]

- [20].Modat M, Cash DM, Daga P, Winston GP, Duncan JS, and Ourselin S. Global image registration using a symmetric block-matching approach. Journal of Medical Imaging, 1(2):024003, 2014. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [21].Modat M, Ridgway GR, Taylor ZA, Lehmann M, Barnes J, Hawkes DJ, Fox NC, and Ourselin S. Fast free-form deformation using graphics processing units. Computer methods and programs in biomedicine, 98(3):278–284, 2010. [DOI] [PubMed] [Google Scholar]

- [22].Modersitzki J. Numerical methods for image registration. Oxford University Press on Demand, 2004. [Google Scholar]

- [23].Niethammer M, Kwitt R, and Vialard F-X. Metric learning for image registration. CVPR, 2019. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [24].Nocedal J and Wright S. Numerical optimization. Springer Science & Business Media, 2006. [Google Scholar]

- [25].Ourselin S, Roche A, Subsol G, Pennec X, and Ayache N. Reconstructing a 3D structure from serial histological sections. Image and vision computing, 19(1–2):25–31, 2001. [Google Scholar]

- [26].Pace DF, Aylward SR, and Niethammer M. A locally adaptive regularization based on anisotropic diffusion for deformable image registration of sliding organs. IEEE transactions on medical imaging, 32(11):2114–2126, 2013. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [27].Risser L, Vialard F-X, Baluwala HY, and Schnabel JA. Piecewise-diffeomorphic image registration: Application to the motion estimation between 3D CT lung images with sliding conditions. Medical image analysis, 17(2):182–193, 2013. [DOI] [PubMed] [Google Scholar]

- [28].Risser L, Vialard F-X, Wolz R, Holm DD, and Rueckert D. Simultaneous fine and coarse diffeomorphic registration: application to atrophy measurement in Alzheimer’s disease. In MICCAI, pages 610–617. Springer, 2010. [DOI] [PubMed] [Google Scholar]

- [29].Rohé M-M, Datar M, Heimann T, Sermesant M, and Pennec X. SVF-Net: Learning deformable image registration using shape matching. In MICCAI, pages 266–274. Springer, 2017. [Google Scholar]

- [30].Rueckert D, Sonoda LI, Hayes C, Hill DL, Leach MO, and Hawkes DJ. Nonrigid registration using free-form deformations: application to breast MR images. TMI, 18(8):712–721, 1999. [DOI] [PubMed] [Google Scholar]

- [31].Schmah T, Risser L, and Vialard F-X. Left-invariant metrics for diffeomorphic image registration with spatially-varying regularisation. In International Conference on Medical Image Computing and Computer-Assisted Intervention, pages 203–210. Springer, 2013. [DOI] [PubMed] [Google Scholar]

- [32].Shen D and Davatzikos C. HAMMER: hierarchical attribute matching mechanism for elastic registration. TMI, 21(11):1421–1439, 2002. [DOI] [PubMed] [Google Scholar]

- [33].Shen Z, Han X, Xu Z, and Niethammer M. Networks for joint affine and non-parametric image registration. CVPR, 2019. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [34].Simpson IJ, Cardoso MJ, Modat M, Cash DM, Woolrich MW, Andersson JL, Schnabel JA, Ourselin S, Initiative ADN, et al. Probabilistic non-linear registration with spatially adaptive regularisation. Medical image analysis, 26(1):203–216, 2015. [DOI] [PubMed] [Google Scholar]

- [35].Singh N, Hinkle J, Joshi S, and Fletcher PT. A vector momenta formulation of diffeomorphisms for improved geodesic regression and atlas construction. In 2013 IEEE 10th International Symposium on Biomedical Imaging, pages 1219–1222. IEEE, 2013. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [36].Stefanescu R, Pennec X, and Ayache N. Grid powered nonlinear image registration with locally adaptive regularization. Medical image analysis, 8(3):325–342, 2004. [DOI] [PubMed] [Google Scholar]

- [37].Vercauteren T, Pennec X, Perchant A, and Ayache N. Symmetric log-domain diffeomorphic registration: A demons-based approach. In MICCAI, pages 754–761. Springer, 2008. [DOI] [PubMed] [Google Scholar]

- [38].Vercauteren T, Pennec X, Perchant A, and Ayache N. Diffeomorphic demons: Efficient non-parametric image registration. NeuroImage, 45(1):S61–S72, 2009. [DOI] [PubMed] [Google Scholar]

- [39].Vialard F-X and Risser L. Spatially-varying metric learning for diffeomorphic image registration: A variational framework. In International Conference on Medical Image Computing and Computer-Assisted Intervention, pages 227–234. Springer, 2014. [DOI] [PubMed] [Google Scholar]

- [40].Vialard F-X, Risser L, Rueckert D, and Cotter CJ. Diffeomorphic 3D image registration via geodesic shooting using an efficient adjoint calculation. International Journal of Computer Vision, 97(2):229–241, 2012. [Google Scholar]

- [41].Wulff J and Black MJ. Efficient sparse-to-dense optical flow estimation using a learned basis and layers. In CVPR, pages 120–130, 2015. [Google Scholar]

- [42].Yang X, Kwitt R, and Niethammer M. Fast predictive image registration. In DLMIA, pages 48–57. Springer, 2016. [Google Scholar]

- [43].Yang X, Kwitt R, Styner M, and Niethammer M. Quicksilver: Fast predictive image registration-a deep learning approach. NeuroImage, 158:378–396, 2017. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [44].Younes L, Arrate F, and Miller MI. Evolutions equations in computational anatomy. NeuroImage, 45(1):S40–S50, 2009. [DOI] [PMC free article] [PubMed] [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.