Abstract

Background

Breast cancer is a type of cancer that starts in the epithelial tissue of the breast. Its incidence has been rising in recent years, and younger women are increasingly affected. Magnetic resonance imaging (MRI) plays an important role in breast tumor detection and treatment planning in current clinical practice. Because manual segmentation is time-consuming and the imaged cases are increasingly diverse, automated segmentation is increasingly attractive.

Methodology

For MRI breast tumor segmentation, we propose a CNN-SVM network, in which the label output of a trained convolutional neural network is fed into a support vector machine (SVM). During the testing phase, the label output of the convolutional neural network, together with the test grayscale image, is passed to the SVM classifier for accurate segmentation.

Results

Tested on the collected breast tumor dataset, our proposed CNN-SVM combined network achieved a Dice similarity coefficient (DSC) of 0.93, a positive predictive value (PPV) of 0.95, and a sensitivity of 0.92. We also compared it with the segmentation frameworks of other papers, and the results show that our CNN-SVM network performs better and can accurately segment breast tumors.

Conclusion

Our proposed CNN-SVM combined network achieves good segmentation results on the breast tumor dataset. The method adapts to variations among breast tumors and segments them accurately and efficiently. It is expected to be of great significance for identifying triple-negative breast cancer in the future.

1. Introduction

Breast cancer accounts for as much as 29% of new cases of malignant tumors in women, and its mortality rate ranks second among cancers [1]. Breast cancer is a systemic disease with local manifestations. There is currently no effective method for preventing breast cancer, and early detection and early treatment are the only effective means of improving postoperative survival [2]. Breast cancer relies mainly on a microvascular oxygen supply. However, in early diagnosis, because early malignant lesions show only subtle manifestations while benign lesions may also enhance, diagnostic specificity is poor [3–5]. In recent years, molecular typing of breast cancer has become a research hotspot, because the molecular types of breast cancer differ significantly in disease expression, response to treatment, prognosis, and survival outcomes. Triple-negative breast cancer (TNBC) is defined as breast cancer that is immunohistochemically negative for estrogen receptor (ER), progesterone receptor (PR), and human epidermal growth factor receptor 2 (HER2). TNBC accounts for 15% to 20% of all breast cancer pathology types. Low-grade special variants such as secretory carcinoma and adenoid cystic carcinoma have a good prognosis, whereas high-grade malignancies such as spindle cell metaplastic carcinoma and basaloid carcinoma have a poor prognosis.

Triple-negative breast cancer is more common in premenopausal women and is a distinct molecular subtype of breast cancer in clinical practice [6]. TNBC progresses quickly and is invasive. The cancerous breast cells are loosely connected, so cancer cells readily spread throughout the body via the lymphatic system and blood circulation, resulting in metastasis, which both increases the difficulty of treatment and seriously affects patients' health [7–9]. Furthermore, modern women are under more physical and emotional stress, which has led to an increase in the prevalence of breast cancer, particularly triple-negative breast cancer. In TNBC, the estrogen receptor, progesterone receptor, and human epidermal growth factor receptor 2 are all negative [10–12]. TNBC is aggressive, with a high risk of recurrence, distant metastasis, and visceral and bone metastases, and it has a poorer prognosis than other types of breast cancer [13–15]. Because there is no known targeted treatment for TNBC, it is currently usually treated with chemotherapy. Once metastasis and dissemination have occurred, TNBC patients have a 5-year survival rate of less than 30%. Early detection is therefore critical for patients with triple-negative breast cancer [16, 17].

In clinical practice, there are several diagnostic options for triple-negative breast cancer, but X-ray-based examinations expose the body to a significant amount of radiation. Magnetic resonance imaging (MRI), by contrast, is a noninvasive examination. 3.0 T MRI can determine the morphology of breast cancer and evaluate tumor function and the blood vessels surrounding the tumor [18–20], and it has high application value for triple-negative breast cancer [21–24]. The molecular type of breast cancer is generally diagnosed by immunohistochemical examination, which is complicated and invasive. Therefore, some researchers have tried to predict the molecular type of breast cancer from patient images, mainly mammography, breast ultrasound, positron emission tomography, and dynamic contrast-enhanced MRI. These approaches generally rely on manually extracted features, which are subjective and can hardly reflect the essential characteristics of breast cancer objectively [25, 26].

Deep learning methods are now applied to many pattern recognition tasks with good results; in particular, convolutional neural networks can learn image features automatically [27]. Men et al. [28] presented DD-ResNet, an end-to-end deep learning model that allows fast training and testing, evaluated it on an extensive dataset of 800 patients receiving breast-conserving therapy, and found that the model achieved good segmentation results. El Adoui et al. [29] proposed two deep learning techniques for automatic breast tumor segmentation in dynamic contrast-enhanced magnetic resonance imaging (DCE-MRI), based on two fully convolutional neural networks (CNNs) built on SegNet and U-Net. Lei et al. [30] built a mask scoring region convolutional neural network (R-CNN) consisting of a backbone network, a region proposal network, an R-CNN head, a mask head, and a scoring head, which accurately segments breast tumors. Singh et al. [31] proposed a breast tumor segmentation method based on a contextual-information-aware conditional generative adversarial learning framework; it extends deep adversarial learning by capturing texture information and contextual dependencies in tumor images to achieve successful breast tumor segmentation.

This study presents a fully automated segmentation method based on a convolutional neural network and an SVM. The framework has two stages. In the first stage, the CNN is trained to learn the mapping from image space to tumor label space. In the testing phase, the CNN's predicted label output is combined with the test grayscale image in an SVM classifier, which produces a more accurate binary segmentation image. Algorithms combining CNNs and SVMs have been proposed in much recent literature; the method described here differs from these integrated algorithms mainly in its CNN design and training. The N4ITK method is employed as a preprocessing technique, and the CNN uses ReLU as the activation function, the negative log-likelihood as the loss function, and stochastic gradient descent as the optimization strategy. The features extracted by the CNN are then passed to the SVM classifier to improve classification. This paper also includes an intermediate processing stage that improves segmentation.

2. Materials and Methods

2.1. Construction of the Dataset

2.1.1. Data Acquisition

We collected breast MRI data from 272 patients at the Second Affiliated Hospital of Fujian Medical University: 107 cases of triple-negative breast cancer and 165 cases of other molecular subtypes. The inclusion criteria were as follows: (1) breast MRI examination was performed before surgery, and (2) the postoperative pathology report accurately indicated the molecular subtype of breast cancer. MR images were acquired on a 3.0 T MRI scanner (PHILIPS, Ingenia 3.0 T) using a dedicated 8-channel phased-array breast coil, with patients in the prone position. The same imaging protocol was used for all patients. The breast DCE-MRI protocol included an axial T2-weighted Fast Spin Echo (T2-FSE) sequence (Repetition Time/Echo Time (TR/TE) = 3600/100 ms, flip angle = 90°, matrix size = 512 × 512, and slice thickness = 4.0 mm) and an axial Short Tau Inversion Recovery (STIR) sequence (TR/TE = 3900/90 ms, flip angle = 90°, and matrix size = 512 × 512). The diffusion-weighted imaging (DWI) protocol consisted of a DWI sequence (TR/TE = 6000/90 ms, flip angle = 90°, matrix size = 256 × 256, slice thickness = 4.0 mm, and b values of 0 and 850 s/mm²) acquired before the contrast agent was injected. Figure 1 shows examples of the scanned images.

Figure 1.

Example scan images from the (a) T2-weighted sequence and (b) DWI sequence.

2.1.2. Data Annotation

Two senior radiologists specializing in breast imaging diagnosis manually delineated the tumors in the collected images. The molecular subtype labels were taken from the pathology reports, which served as the gold standard. In addition, corresponding contour labels were created from the boundaries of the tumor labels: pixels on the boundary are labeled 1, and pixels outside the boundary are labeled 0. Because the bias field distorts MR images, the same tissue can have different gray values at different locations in the image. We used the N4ITK method to correct this issue.

We also performed patch extraction on the data. Each pixel in the image is taken as the center of a two-dimensional patch, which is used as training data. According to the gold standard for tumor segmentation, the extracted patches are divided into positive and negative samples based on the label of the center pixel: positive samples correspond to tumor areas, and negative samples correspond to the nontumor region, i.e., the normal tissue structure of the chest. Because the lesion area is in practice much smaller than the normal tissue region, negative samples far outnumber positive samples, so a random downsampling strategy is used to balance the numbers of positive and negative samples fed to the training model. The patch size is 17 × 17 pixels.
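A minimal NumPy sketch of the patch extraction and random downsampling described above follows. It assumes a 2D slice `image` with a binary mask `label` (1 = tumor), reflect padding so that border pixels can also serve as patch centers, and downsampling of both classes to the size of the smaller one; the function name `extract_balanced_patches` and these choices are illustrative assumptions, not the authors' code.

```python
import numpy as np

def extract_balanced_patches(image, label, patch_size=17, rng=None):
    """Extract patch_size x patch_size patches centered on pixels and
    randomly downsample the majority class (hypothetical helper)."""
    rng = np.random.default_rng(rng)
    half = patch_size // 2
    # Assumption: reflect padding lets border pixels act as patch centers.
    padded = np.pad(image, half, mode="reflect")
    pos = np.argwhere(label == 1)  # tumor centers -> positive samples
    neg = np.argwhere(label == 0)  # normal-tissue centers -> negative samples
    # Random downsampling so both classes have the same number of samples.
    n = min(len(pos), len(neg))
    pos = pos[rng.choice(len(pos), n, replace=False)]
    neg = neg[rng.choice(len(neg), n, replace=False)]
    centers = np.concatenate([pos, neg])
    labels = np.concatenate([np.ones(n, dtype=int), np.zeros(n, dtype=int)])
    # Pixel (r, c) sits at (r + half, c + half) in the padded image,
    # so the patch centered on it starts at (r, c) in padded coordinates.
    patches = np.stack([padded[r:r + patch_size, c:c + patch_size]
                        for r, c in centers])
    return patches, labels
```

For an odd `patch_size`, the center pixel of each returned patch is at index `[half, half]`, so its class matches the label of the original center pixel.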

2.2. Convolutional Neural Network Model Implementation

A convolutional neural network [32] is essentially a multilayer perceptron with additional layers, i.e., a multilayer neural network. It consists of an input layer, hidden layers, and an output layer, and the hidden part may contain several layers. Each layer is made up of a number of two-dimensional planes, each containing a number of neurons, and the hidden layers stack feature extraction (convolution) layers and subsampling (pooling) layers. Figure 2 depicts the structure of a convolutional neural network. The most important components of the CNN are described below.

Figure 2.

CNN network structure diagram.

The activation function is the rectified linear unit (ReLU), which is defined as

$$f(x) = \max(0, x) \tag{1}$$

ReLU has been found to achieve better results and faster training than the traditional sigmoid or hyperbolic tangent functions [33]. When the CNN alone is used for segmentation, a softmax classifier performs the 2-class classification.
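For reference, ReLU and the softmax output used for the 2-class decision can be written in a few lines of NumPy. This is an illustrative sketch; subtracting the row maximum in `softmax` is a standard numerical-stability trick and is not something the paper states.

```python
import numpy as np

def relu(x):
    # Element-wise max(0, x), i.e., equation (1).
    return np.maximum(0.0, x)

def softmax(logits):
    # Subtract the per-row maximum for numerical stability; each row sums to 1.
    z = logits - logits.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)
```

With two output units per patch, each row of `softmax(logits)` gives the probabilities of the tumor and nontumor classes.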

This paper uses max pooling [34], which computes the maximum value of a feature over an image region. Pooling addresses the problem that, if all the features obtained by convolution were used for training, the computational cost would increase and the model would be prone to overfitting.
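A sketch of nonoverlapping 2 × 2 max pooling on a single 2D feature map follows. When a side length is odd, the last row or column is dropped (floor behavior), which is how a 13 × 13 map becomes 6 × 6. The helper name `max_pool_2x2` is hypothetical.

```python
import numpy as np

def max_pool_2x2(fmap):
    """Nonoverlapping 2x2 max pooling of an (H, W) feature map.
    Odd trailing rows/columns are dropped, e.g. 13x13 -> 6x6."""
    h, w = fmap.shape[0] // 2 * 2, fmap.shape[1] // 2 * 2
    f = fmap[:h, :w]
    # After reshaping, element [i, a, j, b] is f[2i + a, 2j + b];
    # taking the max over axes 1 and 3 pools each 2x2 block.
    return f.reshape(h // 2, 2, w // 2, 2).max(axis=(1, 3))
```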

Regularization is used in this research to reduce overfitting. When the CNN alone is used for segmentation, dropout is applied in the fully connected (FC) layer: the output of each hidden node is set to zero with a probability of 0.2, which reduces co-dependency between hidden nodes and helps prevent overfitting.
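The dropout step might look like the sketch below. It uses inverted dropout, rescaling the kept activations by 1/(1 − p) during training so that nothing needs to change at test time; this is a common convention and an assumption here, since the paper only states the drop probability of 0.2.

```python
import numpy as np

def dropout(h, p=0.2, training=True, rng=None):
    """Inverted dropout (assumed convention): zero each activation with
    probability p during training and rescale survivors by 1/(1 - p)."""
    if not training or p == 0.0:
        return h  # identity at test time
    rng = np.random.default_rng(rng)
    mask = rng.random(h.shape) >= p  # keep each unit with probability 1 - p
    return h * mask / (1.0 - p)
```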

The loss function measures the error of the trained model, and training aims to minimize it. This study uses the negative log-likelihood loss, defined as

$$L = -\frac{1}{N}\sum_{i=1}^{N} \log p\left(y_i \mid x_i\right) \tag{2}$$

where N is the number of training samples and p(y_i | x_i) is the softmax probability that the network assigns to the true label y_i of sample x_i.
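A sketch of the negative log-likelihood over a batch of softmax outputs follows. Averaging over the batch (rather than summing) and the clipping constant `eps`, which avoids log(0), are assumptions of this sketch.

```python
import numpy as np

def nll_loss(probs, targets, eps=1e-12):
    """Mean negative log-likelihood.
    probs: (N, K) softmax outputs; targets: (N,) integer class labels."""
    # Probability assigned to the true class of each sample.
    p_true = probs[np.arange(len(targets)), targets]
    return float(-np.mean(np.log(np.clip(p_true, eps, 1.0))))
```

A completely uncertain 2-class prediction (0.5, 0.5) costs log 2 ≈ 0.693 per sample, while a confident correct prediction costs close to 0.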

2.3. SVM Principle

Based on statistical learning theory, VC dimension theory, and the structural risk minimization principle, the support vector machine is a supervised learning method that seeks the best trade-off between model complexity and learning capacity, which gives it low actual risk and strong generalization ability [35]. When an SVM solves a nonlinear classification problem, a kernel function replaces the explicit nonlinear mapping to a high-dimensional space: inner products in that space are computed directly from the original samples, so the computational cost depends on the number of samples rather than on the dimension of the feature space. This avoids the "curse of dimensionality" that traditional learning classifiers encounter in nonlinear high-dimensional problems. The goal of the SVM is to find the optimal hyperplane dividing the feature space, based on the principle of maximizing the classification margin. The objective function for finding the maximum-margin hyperplane and its constraints are given by equations (3) and (4).

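$$\min_{w,\,b}\ \frac{1}{2}\lVert w \rVert^{2} \tag{3}$$

subject to the constraints

$$y_i\,(w \cdot x_i + b) \ge 1, \qquad i = 1, \dots, n, \tag{4}$$

where w is the normal vector of the hyperplane, b is the threshold (bias), x_i is the i-th sample, y_i ∈ {−1, +1} is its class label, and n is the number of samples.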

The optimal classification surface or optimal classification line should separate the two types of samples without error and maximize the classification interval of the two types, which is the essential requirement of the structural risk minimization criterion. The purpose of separating the two types of samples without errors is to minimize the empirical risk; maximizing the classification interval of the two types ensures that the classifier has the smallest VC dimension and thus obtains the smallest confidence range, so that the classifier has the least real risk. The optimal classification surface of the SVM method is unique.
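The link between maximizing the classification margin and minimizing the objective in equation (3) follows from a short standard derivation, assuming the canonical scaling in which the samples closest to the hyperplane satisfy the constraints in (4) with equality:

```latex
\begin{aligned}
&\text{Canonical boundary hyperplanes: } w \cdot x + b = +1 \quad\text{and}\quad w \cdot x + b = -1,\\
&\text{distance between them (margin): } \frac{\lvert (+1) - (-1) \rvert}{\lVert w \rVert} = \frac{2}{\lVert w \rVert},\\
&\max_{w,\,b}\ \frac{2}{\lVert w \rVert}
\;\Longleftrightarrow\;
\min_{w,\,b}\ \tfrac{1}{2}\lVert w \rVert^{2}
\quad \text{s.t. } y_i\,(w \cdot x_i + b) \ge 1,\ i = 1, \dots, n.
\end{aligned}
```

Minimizing the squared norm instead of maximizing 2/‖w‖ gives a convex quadratic program with the same solution.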

The support vector machine in this work uses the Gaussian radial basis kernel function, which is defined as

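$$K(x, x_i) = \exp\left(-\frac{\lVert x - x_i \rVert^{2}}{2\sigma^{2}}\right) \tag{5}$$

where σ is the kernel width. With this kernel, the support vector machine becomes a radial basis function classifier; unlike a traditional radial basis function network, its centers (the support vectors) and weights are determined automatically during training.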
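The Gaussian kernel can be evaluated for all sample pairs at once. The minimal NumPy sketch below assumes the common form K(x, x_i) = exp(−‖x − x_i‖²/(2σ²)) with samples stored as rows of `X` and `Y`; small negative rounding errors in the squared distances are clipped to zero. The function name is illustrative.

```python
import numpy as np

def rbf_kernel(X, Y, sigma2):
    """Gaussian RBF kernel matrix: K[i, j] = exp(-||X_i - Y_j||^2 / (2 sigma^2))."""
    # ||x - y||^2 = ||x||^2 + ||y||^2 - 2 x.y, computed for all pairs.
    sq = (np.sum(X**2, axis=1)[:, None]
          + np.sum(Y**2, axis=1)[None, :]
          - 2.0 * X @ Y.T)
    return np.exp(-np.maximum(sq, 0.0) / (2.0 * sigma2))
```

The diagonal of `rbf_kernel(X, X, sigma2)` is always 1, and entries decay toward 0 as samples move apart; a smaller σ² makes the decay faster.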

2.4. Breast Tumor Segmentation Based on the CNN-SVM Combined Model

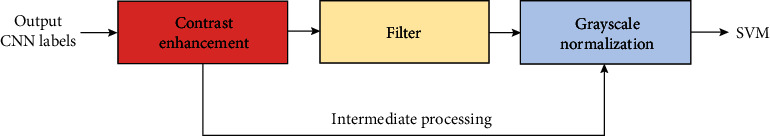

This study proposes a breast tumor segmentation approach based on a convolutional neural network and SVM. As shown in Figure 3, the major steps of the proposed architecture are preprocessing, feature extraction, CNN and SVM training, and testing to produce the final segmentation results.

Figure 3.

Algorithm framework of breast tumor segmentation based on the combination of CNN and SVM.

In the first stage, the convolutional neural network and the support vector machine are trained separately to learn the mapping from the grayscale image domain to the tumor label domain. During the testing phase, the SVM classifier receives the label output of the convolutional neural network together with the test grayscale image to produce an accurate segmentation. As illustrated in Figure 4, a simple intermediate processing step is introduced to create relevant features for CNN training: each pixel is represented by first-order features (grayscale value, mean, and median). During the training phase, the CNN is trained on these features to learn a nonlinear mapping between the input information and the labels. The SVM classifier is trained separately, using the CNN label map and the same features.
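The per-pixel first-order features and the assembly of SVM inputs can be sketched as follows. The 5 × 5 neighborhood for the local mean and median is borrowed from the window size reported in Section 2.5 and is an assumption here, as are edge padding and the hypothetical helper names `first_order_features` and `svm_inputs`. The second function appends the CNN label map so that each pixel becomes one SVM input row. `sliding_window_view` requires NumPy 1.20 or later.

```python
import numpy as np

def first_order_features(image, win=5):
    """Per-pixel grayscale value, local mean, and local median (win x win window)."""
    half = win // 2
    padded = np.pad(image, half, mode="edge")  # assumption: edge padding at borders
    # One win x win neighborhood per original pixel: shape (H, W, win, win).
    windows = np.lib.stride_tricks.sliding_window_view(padded, (win, win))
    mean = windows.mean(axis=(-2, -1))
    median = np.median(windows, axis=(-2, -1))
    return np.stack([image, mean, median], axis=-1)  # (H, W, 3)

def svm_inputs(image, cnn_label_map, win=5):
    """Stack first-order features with the CNN label map: one row per pixel."""
    feats = first_order_features(image, win)
    x = np.concatenate([feats, cnn_label_map[..., None]], axis=-1)
    return x.reshape(-1, x.shape[-1])  # (H * W, 4)
```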

Figure 4.

Intermediate processing steps.

2.5. Model Design Details

The convolutional neural network proposed in this paper consists of convolutional layers, pooling layers, a fully connected layer, and a softmax classification layer. Softmax is a multiclass classifier, used here for 2-class classification, whose output is a conditional probability between 0 and 1.

The network uses ReLU as its activation function, and the network parameters are obtained with stochastic gradient descent by minimizing the loss function. The input to the network is a 17 × 17 pixel image patch. Convolution layer C1 applies 32 filters of size 5 × 5 to obtain 32 feature maps of size 13 × 13, which are then max pooled: sampling layer S2 uses nonoverlapping 2 × 2 pooling to obtain 32 feature maps of size 6 × 6. Convolution layer C3 then applies 64 filters of size 3 × 3 to obtain 64 feature maps of size 4 × 4, which sampling layer S4 downsamples with 2 × 2 max pooling to 2 × 2. Next, convolution with 128 filters of size 2 × 2 converts the feature maps into single neuron nodes, all of which are connected in the fully connected layer F6. Through this multilayer learning, the network acquires tumor edge and texture features and converts low-level features into high-level ones. Finally, intermediate processing is performed, and the resulting high-level features are passed to the SVM classifier. Each pixel is then assigned to one of two classes, tumor or normal chest tissue, according to its class probability, which yields the binary segmentation image of the tumor.
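The feature-map sizes above follow from "valid" (unpadded) stride-1 convolutions and nonoverlapping 2 × 2 pooling, which is what the 17 → 13 step with a 5 × 5 kernel implies. The short Python check below traces them layer by layer; the name C5 for the 128-filter layer is our assumption.

```python
def conv_out(size, kernel, stride=1):
    # "Valid" convolution without padding.
    return (size - kernel) // stride + 1

def pool_out(size, window=2):
    # Nonoverlapping pooling; an odd trailing row/column is dropped.
    return size // window

def trace_shapes(input_size=17):
    """Return (layer, channels, spatial size) after each layer."""
    layers = [("C1", "conv", 5, 32), ("S2", "pool", 2, 32),
              ("C3", "conv", 3, 64), ("S4", "pool", 2, 64),
              ("C5", "conv", 2, 128)]  # "C5" is an assumed name
    size, shapes = input_size, []
    for name, kind, k, channels in layers:
        size = conv_out(size, k) if kind == "conv" else pool_out(size, k)
        shapes.append((name, channels, size))
    return shapes

print(trace_shapes())
```

The trace ends at 128 maps of size 1 × 1, i.e., one neuron per filter, which is the 128-dimensional vector that feeds the fully connected layer F6.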

As the window size increases, the accuracy of SVM sample classification decreases, so smaller windows give better results. At the same window size, SVMs with different kernel functions produce similar segmentation results.

The SVM with a polynomial kernel, however, gives slightly poorer results than the SVMs with linear and radial basis kernels. For sample training, we also tested C = 1, 10, 100, 1000, and 10000 and σ² = 0.01, 0.125, 0.5, 1.5, and 1.0, and the experimental data showed that cross-validation accuracy was highest when C was between 100 and 1000 and σ² was between 0.01 and 0.125. Thus, for the radial basis kernel to give the best segmentation results, the penalty factor C and the kernel parameter σ² should be neither too small nor too large. We therefore chose C = 1000, σ² = 0.01, a 5 × 5 window, and 2000 training samples.
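The search space above can be enumerated as a small grid. Libraries such as scikit-learn's `SVC` parameterize the RBF kernel as exp(−γ‖x − x′‖²), so σ² maps to γ = 1/(2σ²); this conversion assumes the kernel form with 2σ² in the denominator. The sketch omits the cross-validation loop itself, and the function and constant names are illustrative.

```python
from itertools import product

C_VALUES = [1, 10, 100, 1000, 10000]
SIGMA2_VALUES = [0.01, 0.125, 0.5, 1.5, 1.0]

def sigma2_to_gamma(sigma2):
    # exp(-||x - y||^2 / (2 sigma^2)) == exp(-gamma ||x - y||^2)  =>  gamma = 1 / (2 sigma^2)
    return 1.0 / (2.0 * sigma2)

def grid(c_values=C_VALUES, sigma2_values=SIGMA2_VALUES):
    """All (C, sigma^2, gamma) combinations to evaluate with cross-validation."""
    return [(c, s2, sigma2_to_gamma(s2)) for c, s2 in product(c_values, sigma2_values)]
```

Under this conversion, the chosen setting C = 1000, σ² = 0.01 corresponds to γ = 50.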

3. Results

3.1. Evaluation Metrics

The Dice Similarity Coefficient (DSC) measures the overlap between the manual and automatic segmentations. It is defined as follows:

$$\mathrm{DSC} = \frac{2\,TP}{2\,TP + FP + FN} \tag{6}$$

where TP, FP, and FN denote the numbers of true-positive, false-positive, and false-negative tumor points, respectively.

Positive predictive value (PPV) is the ratio of correctly segmented tumor points to all points segmented as tumor, and is defined as

$$\mathrm{PPV} = \frac{TP}{TP + FP} \tag{7}$$

Sensitivity is the ratio of correctly segmented tumor points to the ground-truth tumor points, and is defined as

$$\mathrm{Sensitivity} = \frac{TP}{TP + FN} \tag{8}$$
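The three metrics can be computed directly from a predicted and a ground-truth binary mask. The minimal NumPy sketch below uses a hypothetical function name; note that PPV or sensitivity is undefined (NaN, with a runtime warning) when the corresponding denominator is zero.

```python
import numpy as np

def segmentation_metrics(pred, truth):
    """DSC, PPV, and sensitivity for binary masks (1 = tumor)."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    tp = np.sum(pred & truth)   # tumor points found by the method
    fp = np.sum(pred & ~truth)  # normal points labeled as tumor
    fn = np.sum(~pred & truth)  # tumor points that were missed
    dsc = 2 * tp / (2 * tp + fp + fn)
    ppv = tp / (tp + fp)
    sensitivity = tp / (tp + fn)
    return float(dsc), float(ppv), float(sensitivity)
```

For example, a prediction `[1, 1, 1, 0]` against ground truth `[1, 1, 0, 1]` has TP = 2, FP = 1, and FN = 1, so all three metrics equal 2/3.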

3.2. Segmentation Results of the CNN-SVM Model

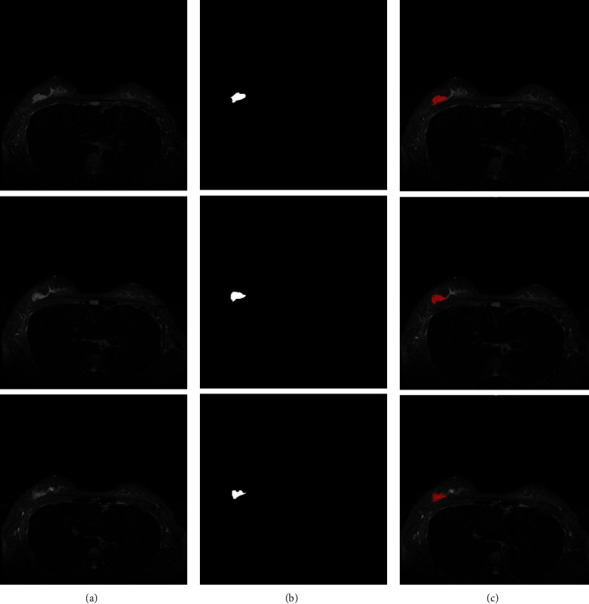

To demonstrate the segmentation performance of the CNN-SVM model, we trained the model on the collected breast tumor images, segmented them, and present the experimental results. Figure 5 shows the segmentation results for image slices of triple-negative breast cancer samples; the segmentation quality is clearly visible, demonstrating the efficacy of our strategy.

Figure 5.

Image slice segmentation results of triple-negative breast cancer samples: (a) raw MRI scan image, (b) CNN-SVM segmentation result, and (c) ground-truth segmentation.

To further verify the effectiveness of our method, we randomly selected several samples from the scans of other types of breast cancer for testing. Figure 6 shows the segmentation results for image slices of these samples.

Figure 6.

Image slice segmentation results of other types of breast cancer samples: (a) raw MRI scan image, (b) CNN-SVM segmentation result, and (c) ground-truth segmentation.

As the figures show, our network segments breast tumors successfully.

3.3. Comparison with Existing Methods

To demonstrate the usefulness of our approach, we compare it with methods proposed by other researchers; the results are presented in Table 1. Byra et al. [36] designed a Selective Kernel (SK) U-Net convolutional neural network to segment breast tumors. Rouhi et al. [37] used convolutional neural networks for breast tumor segmentation. Haq et al. [38] proposed an automatic breast tumor segmentation method using a conditional GAN (cGAN). Table 1 shows that the CNN-SVM combined model developed in this study outperforms the other approaches in DSC, PPV, and sensitivity, demonstrating the practicality of our strategy.

Table 1.

Comparison of different algorithms.

4. Discussion

The incidence of breast cancer is increasing by 3% to 4% every year, and it has become a leading cause of cancer-related death among women. Most patients seek hospital treatment only after symptoms appear, and awareness of screening is poor. The etiological factors of this disease include oral contraceptives, a high-fat diet, heavy drinking, obesity, long-term smoking, and unstable ovarian function; the disease seriously affects patients' quality of life and increases their economic burden. It is therefore vital to diagnose and treat patients with breast cancer or suspected breast tumors as early as possible. In recent years, MRI has often been used to diagnose breast cancer. Most studies have shown that 3D MRI offers multiparametric and multiplanar imaging with higher soft tissue resolution. Compared with mammography, MRI can more accurately display the type of lesion and the tumor stage. MRI dynamic contrast-enhanced scanning also has high diagnostic sensitivity and accuracy and can clearly depict lesions located high in the breast, deep lesions, and multifocal lesions. In addition, this examination shows morphological changes of the mass and, through dynamic enhanced scanning, reflects its microvascular perfusion and angiogenesis, which helps evaluate the malignancy of the mass and supports grading diagnosis.

We present a hybrid network for fully automatic segmentation based on a convolutional neural network and SVM. The network consists of two linked stages. First, the CNN is trained to learn the mapping from image space to tumor label space. The predicted label output of the CNN is then fed, together with the test grayscale image, into an SVM classifier, which produces a more accurate binary segmentation image.

Like other studies, ours has certain limitations. First, the accuracy of data annotation needs to be improved, and the dataset needs to be expanded. Existing medical imaging data are primarily labeled by hand, which requires considerable human and material resources. Labeling accuracy depends closely on the expertise of the doctors, so dataset quality is uneven. In addition, the private nature of medical images makes data difficult to obtain. Our dataset was annotated by hospital doctors using general-purpose drawing software, so annotation quality needs to be improved and the number of MRI images increased. Second, network performance also needs to be improved by reducing the number of parameters and accelerating training. In addition, the segmented breast tumor could be reconstructed into a three-dimensional model [39] for better visualization, and extreme learning techniques [40, 41] could be applied to segmentation in future work for comparison with our proposed method.

5. Conclusion

This research presents a CNN-SVM network for MRI breast tumor segmentation, in which the label output of a trained convolutional neural network is fed into a support vector machine. In the training phase, the convolutional neural network and the support vector machine are trained separately to learn the mapping from the grayscale image domain to the tumor label domain. In the testing stage, the SVM classifier receives the label output of the convolutional neural network together with the test grayscale image to produce an accurate segmentation. Our CNN-SVM combined network achieves a DSC of 0.93, a PPV of 0.95, and a sensitivity of 0.92, outperforming the segmentation frameworks of previous studies, which indicates that it can accurately segment breast tumors. In future work, we will investigate convolutional neural networks paired with other robust classifiers. The diagnosis of breast cancer by medical elastography could be further enhanced by applying optical flow motion analysis to MRI scans [42, 43] to identify regions of abnormal stiffness.

Acknowledgments

This work was supported by the Nursery Fund Project of the Second Affiliated Hospital of Fujian Medical University (No. 2021MP06). The authors acknowledge technical assistance from the Deep Red AI platform.

Data Availability

Data are available on request from the authors due to privacy/ethical restrictions.

Consent

All human subjects in this study gave written consent to participate in this research.

Conflicts of Interest

The authors declare that there is no conflict of interest for this paper.

Authors' Contributions

Ying-Ying Guo and Yin-Hui Huang contributed equally to this work and are co-first authors.

References

- 1.Harbeck N., Penault-Llorca F., Cortes J., et al. Breast cancer. Nature Reviews Disease Primers . 2019;5(1):1–31. doi: 10.1038/s41572-019-0111-2. [DOI] [PubMed] [Google Scholar]

- 2.Sun Y. S., Zhao Z., Yang Z. N., et al. Risk factors and preventions of breast cancer. International Journal of Biological Sciences . 2017;13(11):1387–1397. doi: 10.7150/ijbs.21635. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Akram M., Iqbal M., Daniyal M., Khan A. U. Awareness and current knowledge of breast cancer. Biological Research . 2017;50(1):1–23. doi: 10.1186/s40659-017-0140-9. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Waks A. G., Winer E. P. Breast cancer Treatment. JAMA . 2019;321(3):288–300. doi: 10.1001/jama.2018.19323. [DOI] [PubMed] [Google Scholar]

- 5.Sharma G. N., Dave R., Sanadya J., Sharma P., Sharma K. K. Various types and management of breast cancer: an overview. Journal of Advanced Pharmaceutical Technology & Research . 2010;1(2):109–126. [PMC free article] [PubMed] [Google Scholar]

- 6.Anders C. K., Johnson R., Litton J., Phillips M., Bleyer A. Breast cancer before age 40 years. Seminars in Oncology . 2009;36(3):237–249. doi: 10.1053/j.seminoncol.2009.03.001. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Stephenson G. D., Rose D. P. Breast cancer and obesity: an update. Nutrition and Cancer . 2003;45(1):1–16. doi: 10.1207/S15327914NC4501_1. [DOI] [PubMed] [Google Scholar]

- 8.Pike M. C., Krailo M. D., Henderson B. E., Casagrande J. T., Hoel D. G. ‘Hormonal’ risk factors, ‘breast tissue age’ and the age-incidence of breast cancer. Nature . 1983;303(5920):767–770. doi: 10.1038/303767a0. [DOI] [PubMed] [Google Scholar]

- 9.McDonald E. S., Clark A. S., Tchou J., Zhang P., Freedman G. M. Clinical diagnosis and management of breast cancer. Journal of Nuclear Medicine . 2016;57(Supplement 1):9S–16S. doi: 10.2967/jnumed.115.157834. [DOI] [PubMed] [Google Scholar]

- 10.Engel J., Eckel R., Kerr J., et al. The process of metastasisation for breast cancer. European Journal of Cancer . 2003;39(12):1794–1806. doi: 10.1016/S0959-8049(03)00422-2. [DOI] [PubMed] [Google Scholar]

- 11. JNCI: Journal of the National Cancer Institute . Oxford Academic; Etiology of human breast cancer: a review. https://academic.oup.com/jnci/paper-abstract/50/1/21/926518 . [DOI] [PubMed] [Google Scholar]

- 12.Benson J. R., Jatoi I., Keisch M., Esteva F. J., Makris A., Jordan V. C. Early breast cancer. The Lancet . 2009;373(9673):1463–1479. doi: 10.1016/S0140-6736(09)60316-0. [DOI] [PubMed] [Google Scholar]

- 13.Gunasinghe N. P. A., Wells A., Thompson E. W., Hugo H. J. Mesenchymal–epithelial transition (MET) as a mechanism for metastatic colonisation in breast cancer. Cancer and Metastasis Reviews . 2012;31(3-4):469–478. doi: 10.1007/s10555-012-9377-5. [DOI] [PubMed] [Google Scholar]

- 14.Karagiannis G. S., Pastoriza J. M., Wang Y., et al. Neoadjuvant chemotherapy induces breast cancer metastasis through a TMEM-mediated mechanism. Science Translational Medicine . 2017;9(397, article eaan0026) doi: 10.1126/scitranslmed.aan0026. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.Liang Y., Zhang H., Song X., Yang Q. Metastatic heterogeneity of breast cancer: molecular mechanism and potential therapeutic targets. Seminars in Cancer Biology . 2020;60:14–27. doi: 10.1016/j.semcancer.2019.08.012. [DOI] [PubMed] [Google Scholar]

- 16.Hayward J. L., Carbone P. P., Heusen J. C., Kumaoka S., Segaloff A., Rubens R. D. Assessment of response to therapy in advanced breast cancer. British Journal of Cancer . 1977;35(3):292–298. doi: 10.1038/bjc.1977.42. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Howell A., Anderson A. S., Clarke R. B., et al. Risk determination and prevention of breast cancer. Breast Cancer Research . 2014;16(5):1–19. doi: 10.1186/s13058-014-0446-2. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18.Sheth D., Giger M. L. Artificial intelligence in the interpretation of breast cancer on MRI. Journal of Magnetic Resonance Imaging . 2020;51(5):1310–1324. doi: 10.1002/jmri.26878. [DOI] [PubMed] [Google Scholar]

- 19.Lehman C. D., Gatsonis C., Kuhl C. K., et al. MRI evaluation of the contralateral breast in women with recently diagnosed breast cancer. New England Journal of Medicine . 2007;356(13):1295–1303. doi: 10.1056/NEJMoa065447. [DOI] [PubMed] [Google Scholar]

- 20.Morris E. A. Breast cancer imaging with MRI. Radiologic Clinics . 2002;40(3):443–466. doi: 10.1016/S0033-8389(01)00005-7. [DOI] [PubMed] [Google Scholar]

- 21.Moffa G., Galati F., Collalunga E., et al. Can MRI biomarkers predict triple-negative breast cancer? Diagnostics . 2020;10(12):p. 1090. doi: 10.3390/diagnostics10121090. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 22.Uematsu T., Kasami M., Yuen S. Triple-negative breast cancer: correlation between MR imaging and pathologic findings. Radiology . 2009;250(3):638–647. doi: 10.1148/radiol.2503081054. [DOI] [PubMed] [Google Scholar]

- 23.Chen J.-H., Agrawal G., Feig B., et al. Triple-negative breast cancer: MRI features in 29 patients. Annals of Oncology . 2007;18(12):2042–2043. doi: 10.1093/annonc/mdm504. [DOI] [PubMed] [Google Scholar]

- 24.Dogan B. E., Turnbull L. W. Imaging of triple-negative breast cancer. Annals of Oncology . 2012;23:vi23–vi29. doi: 10.1093/annonc/mds191. [DOI] [PubMed] [Google Scholar]

- 25. Wang Z. P., Liu G., Chen Y., et al. Correlation between multimodality MRI findings and molecular typing of breast cancer. The Journal of Practical Medicine. 2018;24:1725–1729.

- 26. Wang G.-S., Zhu H., Bi S.-J. Pathological features and prognosis of different molecular subtypes of breast cancer. Molecular Medicine Reports. 2012;6(4):779–782. doi: 10.3892/mmr.2012.981.

- 27. Song Y., Ren S., Lu Y., Fu X., Wong K. K. Deep learning-based automatic segmentation of images in cardiac radiography: a promising challenge. Computer Methods and Programs in Biomedicine. 2022;220: article 106821. doi: 10.1016/j.cmpb.2022.106821.

- 28. Men K., Zhang T., Chen X., et al. Fully automatic and robust segmentation of the clinical target volume for radiotherapy of breast cancer using big data and deep learning. Physica Medica. 2018;50:13–19. doi: 10.1016/j.ejmp.2018.05.006.

- 29. El Adoui M., Mahmoudi S. A., Larhmam M. A., Benjelloun M. MRI breast tumor segmentation using different encoder and decoder CNN architectures. Computers. 2019;8(3). doi: 10.3390/computers8030052.

- 30. Lei Y., He X., Yao J., et al. Breast tumor segmentation in 3D automatic breast ultrasound using mask scoring R-CNN. Medical Physics. 2021;48(1):204–214. doi: 10.1002/mp.14569.

- 31. Singh V. K., Abdel-Nasser M., Akram F., et al. Breast tumor segmentation in ultrasound images using contextual-information-aware deep adversarial learning framework. Expert Systems with Applications. 2020;162: article 113870. doi: 10.1016/j.eswa.2020.113870.

- 32. Yamashita R., Nishio M., Do R. K. G., Togashi K. Convolutional neural networks: an overview and application in radiology. Insights into Imaging. 2018;9(4):611–629. doi: 10.1007/s13244-018-0639-9.

- 33. Agarap A. F. Deep learning using rectified linear units (ReLU). arXiv:1803.08375. 2018. https://arxiv.org/abs/1803.08375.

- 34. Giusti A., Cireşan D. C., Masci J., Gambardella L. M., Schmidhuber J. Fast image scanning with deep max-pooling convolutional neural networks. 2013 IEEE International Conference on Image Processing; 2013; Melbourne, VIC, Australia. pp. 4034–4038.

- 35. Hearst M. A., Dumais S. T., Osuna E., Platt J., Scholkopf B. Support vector machines. IEEE Intelligent Systems and their Applications. 1998;13(4):18–28. doi: 10.1109/5254.708428.

- 36. Byra M., Jarosik P., Szubert A., et al. Breast mass segmentation in ultrasound with selective kernel U-Net convolutional neural network. Biomedical Signal Processing and Control. 2020;61: article 102027. doi: 10.1016/j.bspc.2020.102027.

- 37. Rouhi R., Jafari M., Kasaei S., Keshavarzian P. Benign and malignant breast tumors classification based on region growing and CNN segmentation. Expert Systems with Applications. 2015;42(3):990–1002. doi: 10.1016/j.eswa.2014.09.020.

- 38. Haq I. U., Ali H., Wang H. Y., Cui L., Feng J. BTS-GAN: computer-aided segmentation system for breast tumor using MRI and conditional adversarial networks. Engineering Science and Technology, an International Journal. 2022;36: article 101154. doi: 10.1016/j.jestch.2022.101154.

- 39. Zhao C., Lv J., Shichang D. Geometrical deviation modeling and monitoring of 3D surface based on multi-output Gaussian process. Measurement. 2022;199: article 111569. doi: 10.1016/j.measurement.2022.111569.

- 40. Tang Z., Wang S., Chai X., Cao S., Ouyang T., Li Y. Auto-encoder-extreme learning machine model for boiler NOx emission concentration prediction. Energy. 2022;256: article 124552. doi: 10.1016/j.energy.2022.124552.

- 41. Lu Y., Fu X., Chen F., Wong K. K. Prediction of fetal weight at varying gestational age in the absence of ultrasound examination using ensemble learning. Artificial Intelligence in Medicine. 2020;102: article 101748. doi: 10.1016/j.artmed.2019.101748.

- 42. Wong K. K. L., Kelso R. M., Worthley S. G., Sanders P., Mazumdar J., Abbott D. Theory and validation of magnetic resonance fluid motion estimation using intensity flow data. PLoS One. 2009;4(3): article e4747. doi: 10.1371/journal.pone.0004747.

- 43. Wong K. K. L., Sun Z., Tu J. Y., Worthley S. G., Mazumdar J., Abbott D. Medical image diagnostics based on computer-aided flow analysis using magnetic resonance images. Computerized Medical Imaging and Graphics. 2012;36(7):527–541. doi: 10.1016/j.compmedimag.2012.04.003.

Data Availability Statement

Data are available on request from the authors due to privacy/ethical restrictions.