Abstract

The rapid adoption of electronic health records (EHRs) has created extensive repositories of digitized data that can be used to inform improvements in care delivery, processes, and patient outcomes. While the clinical data captured in EHRs are widely used for such efforts, EHRs also capture audit log data that reflect how users interact with the EHR to deliver care. Automatically collected audit log data provide a unique opportunity for new insights into EHR user behavior and decision‐making processes. Here, we provide an overview of audit log data and examples that could be used to improve oncology care and outcomes in four domains: diagnostic reasoning and consumption, care team collaboration and communication, patient outcomes and experience, and provider burnout/fatigue. This data source could identify gaps in performance and care, physician uptake of EHR features that enhance decision‐making, and integration of data trends for oncology. Ensuring that researchers and oncologists are familiar with the data's potential, and developing the data engineering capacity to utilize this rich data source, will expand the breadth of research to improve cancer care.

Keywords: audit log, decision‐making, digital health, electronic health records, informatics, quality and safety

This review discusses the current literature base for research using audit logs, which automatically capture electronic health record (EHR) user behavior and interactions with patient records. We place this work in context and propose questions to guide future oncology research to improve care decisions, quality, and outcomes.


1. INTRODUCTION

The electronic health record (EHR) contains readily available, highly detailed data that have the potential to generate clinical insights. 1 , 2 Current cancer informatics research has focused on the clinical patient information contained within the EHR. 3 , 4 , 5 While these data are clinically useful, automatically collected, unprocessed data are also available for research and quality improvement purposes and have been underutilized. We introduce the reader to methodologies for using the audit log dataset in the field of oncology. As domain experts, oncologists can play a role in guiding the development and use of this methodology with health administrators and informatics researchers. This review can facilitate collaboration between oncologists, health administrators, and data scientists to better understand clinical EHR behaviors and, accordingly, improve the EHR and patient care.

1.1. Defining the audit log dataset

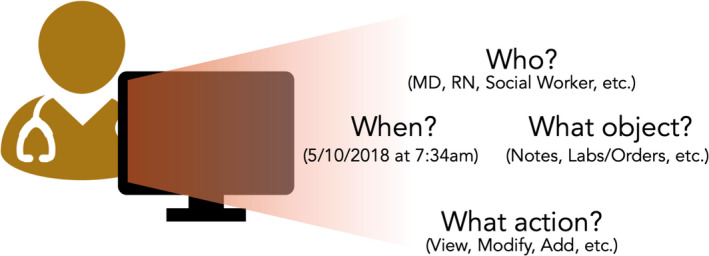

The audit log is defined as the metadata and details of every user interaction within the EHR. This information is automatically collected by the EHR system. Historically, these data have been used to audit any security breaches to key information technology (IT) infrastructure. Figure 1 shows how the audit log tracks information about different aspects of patient record access: the viewer, the action, the timestamp, and the patient record. 6

FIGURE 1.

Automatic data collection in the audit log dataset. The information in this figure is collected automatically as part of the audit log metadata. While initially collected solely for security reasons, these data can be repurposed for informatics and health services research into EHR user behavior.
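As a minimal sketch of the four elements described above (viewer, action, timestamp, and patient record), a single audit log row could be represented as follows. The class name, field names, and example values are hypothetical; actual audit log schemas differ by EHR vendor and institution.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class AuditEvent:
    """One audit log row; all field names here are hypothetical."""
    user_id: str         # the viewer (who accessed the record)
    action: str          # what was done, e.g. "open_note"
    timestamp: datetime  # when the action occurred
    patient_id: str      # which patient record was accessed


def parse_row(row: dict) -> AuditEvent:
    """Convert a raw dict (e.g. from csv.DictReader) into an AuditEvent."""
    return AuditEvent(
        user_id=row["user_id"],
        action=row["action"],
        timestamp=datetime.fromisoformat(row["timestamp"]),
        patient_id=row["patient_id"],
    )


# Illustrative, fabricated row; not taken from any real system.
example_event = parse_row({
    "user_id": "clinician_017",
    "action": "open_note",
    "timestamp": "2022-03-07T14:05:31",
    "patient_id": "patient_0042",
})
```

Parsing timestamps into `datetime` objects at this stage makes later time‐based measures (such as session length or after‐hours use) straightforward to compute.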

2. THE AUDIT LOG IN ONCOLOGY

Advances in health information technology over the past two decades have enabled these highly detailed audit log data to be collected. The Health Information Technology for Economic and Clinical Health (HITECH) Act in 2009 led to the broad adoption of electronic health records, which are now used ubiquitously by clinicians. In anticipation of the importance of privacy protections in this software, collection of the EHR audit log was required under the Health Insurance Portability and Accountability Act (HIPAA) Security Rule of 2005. 7

In contrast with research conducted on clinical data entered by a health care professional into the EHR, audit log research relies on data automatically derived from user behaviors. The audit log allows researchers to study physician behaviors because it systematically captures data that reflect a user's behavior in a software system. 8 The benefit of using the audit log for data collection, aggregation, and analysis is that no independent human observer is necessary to conduct the collection. 8 However, as discussed later in this review, the drawback of this data type is that clinicians and researchers are needed to interpret the audit log data elements and verify that the conclusions reflect real‐world behaviors. The gold standard for validation is manual data collection through observation‐based time and motion studies, which have been used in the past to validate audit log measures of user behavior. 9 , 10

2.1. A non‐traditional dataset for research

EHR audit log data represent a class of data that has rarely been used in research yet holds potential insights for cancer care improvement. Specifically, they can extend current research by better elucidating clinical decision‐making and health care processes that contribute to the quality and health services improvement missions of cancer care. This underutilized dataset, accessible to most organizations using EHRs, has the potential to fuel an integrated learning ecosystem between clinical practice and research. 5 , 11 Many audit log datasets can also be used in conjunction with other user‐level, time‐level, or patient‐level datasets and variables, which makes them a versatile tool for generating health services research insights.
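To illustrate how audit log rows might be combined with a user‐level dataset, the sketch below joins events to a lookup table of user roles and counts events per role. The function name, the dictionary keys, and the role labels are assumptions made for illustration only.

```python
from collections import Counter


def events_per_role(events, role_by_user):
    """Count audit events per user role.

    events: iterable of dicts with a hypothetical "user_id" key.
    role_by_user: hypothetical user-level table mapping user_id -> role.
    Users missing from the table are grouped under "unknown".
    """
    counts = Counter()
    for event in events:
        role = role_by_user.get(event["user_id"], "unknown")
        counts[role] += 1
    return dict(counts)


# Fabricated example data.
sample_events = [
    {"user_id": "u1", "action": "open_chart"},
    {"user_id": "u2", "action": "open_note"},
    {"user_id": "u1", "action": "view_labs"},
    {"user_id": "u9", "action": "open_chart"},
]
sample_roles = {"u1": "oncologist", "u2": "nurse"}

role_counts = events_per_role(sample_events, sample_roles)
```

The same pattern extends to patient‐level or time‐level tables by joining on the patient identifier or on a date derived from the timestamp.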

3. OPPORTUNITIES FOR RESEARCH

The use of the audit log in oncology has been limited. We characterize themes by research question, identifying four oncology research areas that could be advanced using EHR audit log data: diagnostic reasoning and consumption, care team collaboration and communication, patient outcomes and experience, and EHR use and burnout. For those seeking a more technical review, Rule et al. 6 provide a list of previous work organized by technique and specialty.

3.1. Diagnostic reasoning and consumption

It is cognitively challenging for oncologists to find and consume multiple streams of data that are available in the EHR (radiology, pathology, labs, and notes) in an efficient manner. 12 Current EHRs have been designed for coding and structuring forms, rather than for streamlining doctor or caregiver use to make and interpret decisions. 13 , 14 While chart review of historical data is important, many clinicians worry about overlooking relevant information due to the volume of data and differing EHR configurations.

The audit log can assist in tracking a user's information consumption pattern, which can be a proxy for a physician's decision‐making. Audit log studies can be useful to confirm self‐reported survey data collected about EHR review by physicians. One survey study found that while nearly all intensive care unit (ICU) clinicians surveyed perform an EHR review when admitting new patients, nearly half (49%) of clinicians indicated that a significant amount of time was spent reviewing EHR charts “haphazardly,” with searches going back three or more years to find relevant information. 12 Information‐seeking behaviors also differed among residents, nurse practitioners, and physician assistants in critical care settings. 15

Prior studies have examined how physicians consume or add information. A task analysis study of 16 physicians sought to understand which notes are relevant to review for particular appointments, given the cognitive burden of current charts, for both acute and chronic clinic visit types. 16 Note review and input method varied by specialty: specialists tended to prefer using a voice recorder, while primary care physicians tended to use templates tailored for visit types (e.g., “well visit” vs. urgent problem‐based visit). 17 Consumption can also include information seeking and charting. Commonly searched terms can be used to improve the EHR's ability to conveniently retrieve this information and to train providers more effectively. 18 The same study also determined that most physicians used the search feature, but its use was generally low among nonphysicians and pharmacists.

Given the cognitive burden of excessive information, access log studies can also be used to predict EHR user roles based on their behavior and to assess whether particular roles are given access appropriate for their level and usage. 19 , 20 This is important because if users are presented with data that their role does not need, the presence of those data may overwhelm them and prevent them from properly performing their tasks.

These studies have implications for cancer care since audit log studies could identify optimal review strategies to improve workflows and make it more efficient for physicians to find relevant information in large patient files. These could also impact the design and development of new features in the EHR to make it easier to find the information that is needed specifically for cancer patients.

3.2. Care team collaboration and communication

Cancer care is an inherently collaborative and interdisciplinary effort, with many different care providers offering distinct support to cancer patients. In the literature, high intensity of interprofessional collaboration is associated with increased patient satisfaction, which is associated with improved care, cost control, and reduction in clinical errors. 21 , 22 Cancer care often is centered around multidisciplinary patient tumor boards or team huddles to review patient records. Improving interprofessional, team‐based performance can potentially improve care quality, patient diagnoses and treatments, and outcomes. 23 , 24 , 25 , 26 , 27 However, understanding how different members of a care team might interact with one another, especially through an EHR, is presently a complex undertaking. For example, each team member may interact with notes asynchronously or access different information panels. It is also not known whether certain team interactions may occur only in an EHR and not in person, which is how collaboration is conventionally defined.

Assessing team‐based collaborations and the logistics of care administration is a possible use case for audit log data. For example, audit log studies to understand collaboration and communication have been conducted in obstetrics/gynecology and neonatal care. 28 , 29 Audit log studies outside of the oncology context have found that as team size increased, so too did the use of electronic actions, daily hours of use, and the number of computer sessions. 30 This means that larger teams are associated with increased interactions with the EHR. Another study found that greater delegation of tasks within clinician teams is associated with improved productivity, as measured by work relative value units (RVUs). 31 Studies have also been conducted to determine which members of the care team collaborate at different times in the patient's visit cycle.

Of the domains discussed in this paper, collaboration and communication have been the most robustly studied in the oncology context using the audit log. Some examples include network analyses to better understand the different physicians who contributed notes to patient encounters. A study of surgical colorectal cancer audit log records found that a given health care professional was on average connected with six other professionals for each patient record. 32 These studies can assess not only who is on a particular patient team, but also to what extent each team member plays a role. These data could also be used to understand whether certain principal care team members share increased responsibilities in communicating with one another and interacting on the patient record. 33 Future research might consider which roles tend to have higher EHR use burden, and identify areas in which communication and interfaces are lacking, especially for patient supportive care services and social work. If these deficiencies exist, these studies could allow programs to prioritize cross‐training and familiarity between roles to ensure linkages and continuity of care. These audit log studies can determine if collaboration on patient records occurs at a given point in time. 34 , 35 These studies could complement prior research that attempts to understand the order in which events take place when providing clinical pathways for treatment. 36
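The network analyses described above can be illustrated with a simplified co‐access network, in which two professionals are linked when they accessed the same patient record. This is a sketch under stated assumptions and not the method of the cited studies; the function names, the input format, and the example data are hypothetical.

```python
from collections import defaultdict
from itertools import combinations


def build_coaccess_network(accesses):
    """Build an undirected co-access network.

    accesses: iterable of (user_id, patient_id) pairs from the audit log.
    Returns a dict mapping each user pair (a, b), with a < b, to the
    number of patient records both users accessed.
    """
    users_by_patient = defaultdict(set)
    for user_id, patient_id in accesses:
        users_by_patient[patient_id].add(user_id)

    edge_weights = defaultdict(int)
    for users in users_by_patient.values():
        # Link every pair of users who touched the same record.
        for a, b in combinations(sorted(users), 2):
            edge_weights[(a, b)] += 1
    return dict(edge_weights)


def degree_by_user(edge_weights):
    """Number of distinct colleagues each user is connected to."""
    neighbors = defaultdict(set)
    for a, b in edge_weights:
        neighbors[a].add(b)
        neighbors[b].add(a)
    return {user: len(others) for user, others in neighbors.items()}


# Fabricated example: three professionals, two patient records.
sample_accesses = [
    ("oncologist", "p1"), ("nurse", "p1"), ("social_worker", "p1"),
    ("oncologist", "p2"), ("nurse", "p2"),
]
network = build_coaccess_network(sample_accesses)
degrees = degree_by_user(network)
```

Edge weights give a rough indication of how often two roles work on the same patients, while degree indicates how many colleagues a given professional shares records with. Co‐access alone does not establish that communication occurred, so such measures would still need validation.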

3.3. Patient outcomes and experience

Oncology is characterized as a long‐term journey with the potential for many touchpoints with the medical system. Further research is necessary to study patterns in EHR use and downstream outcomes, such as patient outcomes (e.g., emergency care events) or patient‐reported outcomes (e.g., satisfaction). Though the literature is sparse, we describe how work in other care contexts could guide research in the oncology context.

These studies could be conducted in an inpatient environment specifically studying those who care for hospitalized oncology patients. For example, a study conducted in a general medicine ward found an association between EHR‐measured work hours and a patient outcome composite variable of mortality, transfer to the ICU, or 30‐day same‐hospital readmission. 30 This study confirmed previous literature which suggested that high workload (including EHR use burden) can lead to worse patient outcomes, such as increased length of stay and ICU transfers. 37 , 38

The audit log literature has found contradictory effects of EHR usage on patient outcomes, and these effects are likely specific to the ambulatory context. For the emergency department, relevant outcomes could include door‐to‐disposition, throughput efficiency, or readmissions. For example, a study of physician EHR usage activities in the emergency department found positive correlations between physician EHR review time and door‐to‐disposition. 39 In contrast, overall emergency department throughput efficiency decreased with increased time spent on physician note review. 39 Another study found that review of notes by an emergency physician led to a 6% decrease in 7‐day readmission. 40 This work would continue efforts underway in the outpatient cancer care context, where researchers have already started studying oncology treatment‐based acute care needs or unplanned care visits. 41 , 42 , 43

Another example of an outpatient oncology study could be one examining EHR use and patient satisfaction. A pilot study found that general internists' and medical subspecialists' daytime EHR usage was inversely related to patient satisfaction metrics. 44 Audit log data could also be used to study the efficacy and patient satisfaction metrics of physicians who are early adopters of new EHR features, such as an interoperable integrated view. 45 These types of studies could be conducted in an outpatient oncology context to study informatics initiatives designed to improve patient care.

Ultimately, further research is needed to disentangle whether patient outcomes or patient‐reported outcomes are impacted positively or negatively by a physician's EHR usage. In oncology, it is not yet known if the audit log could provide a useful dataset for further investigation of patient outcomes or patient experience.

3.4. EHR use and burnout

The causes of burnout are multifaceted and well documented in oncology. 46 , 47 , 48 Burnout and emotional fatigue can affect individuals differently, but one way to study these differences is through EHR use patterns. For instance, clinicians at an academic medical center spent about one‐third of their EHR usage after hours. 49 Workload and task demand could heterogeneously impact specific members of the cancer care team. In this section, we describe how differing EHR user behavior could have implications for burnout.

Audit log studies have found differences in EHR behavior by gender. In an ambulatory setting, female physicians spent more time than male physicians in the EHR on weekends and after hours. 50 Audit log studies of physician EHR usage have also been conducted across specialties. General surgery residents spent more time per patient than orthopedic surgery residents on overall EHR use, chart review, and documentation. 51 EHR use behaviors were also dependent on the service through which residents were rotating and the attending on each team. 52 , 53 Prior studies have considered a diverse range of specialties (e.g., radiation oncology, dermatology), but these papers often do not provide disaggregated analyses of these groups. 54
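As an illustration of how after‐hours and weekend EHR use might be derived from audit log timestamps, the sketch below computes the share of a user's events that fall outside an assumed workday. The 8:00 to 18:00 weekday window and the function names are assumptions; published studies define after‐hours work in different ways, and this sketch counts events rather than elapsed time.

```python
from datetime import datetime, time

# Assumed workday boundaries; institutions and studies differ.
WORKDAY_START = time(8, 0)
WORKDAY_END = time(18, 0)


def is_after_hours(ts: datetime) -> bool:
    """True for weekend events or weekday events outside the workday."""
    if ts.weekday() >= 5:  # 5 = Saturday, 6 = Sunday
        return True
    return not (WORKDAY_START <= ts.time() < WORKDAY_END)


def after_hours_share(timestamps) -> float:
    """Fraction of events that occurred after hours (0.0 if no events)."""
    timestamps = list(timestamps)
    if not timestamps:
        return 0.0
    flagged = sum(is_after_hours(ts) for ts in timestamps)
    return flagged / len(timestamps)


# Fabricated events for one user.
sample_timestamps = [
    datetime(2022, 3, 7, 10, 0),   # Monday morning, within the workday
    datetime(2022, 3, 7, 20, 30),  # Monday evening, after hours
    datetime(2022, 3, 5, 11, 0),   # Saturday, counted as after hours
    datetime(2022, 3, 8, 9, 15),   # Tuesday morning, within the workday
]
share = after_hours_share(sample_timestamps)
```

Computing this share per user allows comparisons across groups such as gender, specialty, or training level, provided the workday definition is applied consistently.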

Future research could disaggregate EHR use burden and present a complement to published literature on burnout and charting burden in oncology. For example, one paper found that surgical oncologists spent the most time using the EHR compared to other surgeons with a mean usage time of 2.5 h. When disaggregated, breast surgeons' use was even higher than other surgical oncologists. 55

The research priorities are to focus on different cancer specialties and training levels within each (e.g., internal medicine residents and fellows vs. radiation oncology residents and fellows) to better understand their behaviors. A next step might be to consider how these behaviors might be correlated with burnout and job satisfaction specifically in the oncology context. Furthermore, additional studies are needed to better understand the causal pathway between EHR use and burnout, in consideration of other variables known to contribute to burnout. For example, one recent study found that, after adjustment, clinician sex and work culture played a more significant role in predicting burnout than EHR use. 56

Though differences in EHR usage are not inherently good or bad, they could play a role in explaining burnout on an oncology care team. Identifying those users' behaviors and characteristics (e.g., if they spend excessive time on EHRs after hours or bear a disproportionate burden) could be useful to improve the health and well‐being of physicians. 47 , 57 , 58 These studies could be correlated with job satisfaction data and burnout, and help inform institutional initiatives and policy.

4. THE CLINICAL ONCOLOGIST'S ROLE

Because audit log research has implications for practice, domain experts will play an indispensable role throughout the research cycle, from project conception to EHR redesign based on findings in these data.

4.1. Project conception

Informaticians and health system administrators often lack the context to understand how to improve the physician experience in the EHR. The oncologist in audit log research could collaborate with these individuals to propose appropriate research questions (e.g., with the domains mentioned above) or tie usage to EHR user behaviors or meaningful patient outcomes. The sheer volume of data being automatically collected needs to be better leveraged to improve care, so oncologists could propose to repurpose the data to answer pressing clinical questions.

4.2. Data validation

The audit log dataset will also need to be properly validated, as the use of these data to answer clinical questions remains in its nascence. For example, the EHR log may consider a window of time that either overestimates (e.g., physician steps away from EHR to see patient with the session open) or underestimates (e.g., physician has multiple windows or panels open at the same time) user actions.
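The overestimation problem described above can be made concrete with a small sketch that estimates active EHR time from event timestamps, ignoring gaps longer than an inactivity threshold. The 5‐minute threshold and the function name are assumptions chosen for illustration; any threshold would need to be validated against observed behavior.

```python
from datetime import datetime, timedelta


def estimate_active_time(timestamps, max_gap=timedelta(minutes=5)):
    """Sum the gaps between consecutive events, skipping long gaps.

    Gaps longer than max_gap are treated as time away from the EHR
    (e.g. seeing a patient with the session left open) and excluded.
    """
    ordered = sorted(timestamps)
    active = timedelta(0)
    for earlier, later in zip(ordered, ordered[1:]):
        gap = later - earlier
        if gap <= max_gap:
            active += gap
    return active


# Fabricated session: activity, a 36-minute pause, then more activity.
session = [
    datetime(2022, 3, 7, 9, 0),
    datetime(2022, 3, 7, 9, 2),
    datetime(2022, 3, 7, 9, 4),
    datetime(2022, 3, 7, 9, 40),
    datetime(2022, 3, 7, 9, 41),
]
naive_span = max(session) - min(session)   # first to last event
active_time = estimate_active_time(session)
```

In this fabricated session the naive first‐to‐last span is 41 minutes, whereas the threshold‐based estimate is 5 minutes. The gap between the two shows why the choice of time window matters. The sketch does not address the underestimation case of multiple windows open at once.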

These differences may also make it difficult to generalize the data across institutions or clinical sites with different EHR features. Therefore, it is in the interests of oncologists and those curating these data to ensure that physician EHR use is measured accurately. Oncologists can collaborate with researchers on in‐person observational time and motion studies, which have previously been used to validate EHR audit log measures. 9 , 10

4.3. Contextualizing findings with other research spheres

As leaders in their clinical environments, oncologists can collaborate with informaticians and health administrators to contextualize the findings of audit log studies in their daily clinical environments and translate them into quality improvement. For example, research has found that the EHR burden disproportionately impacts women and younger trainees, so experts and clinical leaders can better contextualize and understand where disparities in their care team's EHR usage could be occurring. Oncologists can also ensure synergies with current precision medicine and implementation science efforts in cancer care. Ensuring synergy could support effective practices. 2

4.4. EHR redesign

Beyond providing qualitative data to EHR developers on how best they could redesign EHR infrastructure, oncologist‐researchers can propose to test hypotheses on the most effective ways of navigating the EHR or facilitating searches. These findings, if proven quantitatively, could perhaps lead to changes in future EHR systems for their health system. By participating in research on audit log usage, oncologists could help to redesign the EHR to reduce their clicks or overall time in the EHR.

5. FUTURE OPPORTUNITIES

The study of the audit log, especially in oncology, is in its infancy. While the EHR audit log dataset has been studied in various contexts as described in the four domains, there are opportunities to tie this work more closely to the cancer context. While treatment and systems‐level interventions appear most obviously at the top of the cancer research agenda, there remains a substantial research gap in understanding the role of physician decision‐making in health and health care outcomes. Some considerations have already been studied elsewhere, including the use of heuristics, patient/physician decision‐making models, and time spent by physicians with patients. 56 , 59 The current cancer research agenda can be enhanced by considering the role that physician behavior might play in contributing to the quality of care, behavioral and gender care disparities, and physician/patient well‐being and clinical outcomes.

Any work conducted in the oncology community specifically would be part of a burgeoning literature base studying audit log usage. Utilizing the audit log would enable researchers to study how oncologists access relevant information in the EHR to make their treatment decisions, enhance team‐based collaboration, and improve patient outcomes in the process.

CONFLICT OF INTEREST

Julia Adler‐Milstein is on the Board and holds shares in Project Connect that are unrelated to the current work. Julian Hong reported a patent for systems and methods for predicting acute care visits during outpatient cancer therapy pending that is unrelated to the current work. Yash S. Huilgol and Susan L. Ivey have no conflict to disclose.

AUTHOR CONTRIBUTION

Conceptualization (YSH, JCH), methodology and design (YSH, JCH), supervision (JAM, SLI, and JCH), writing – original draft preparation (YSH), writing – review & editing (all authors).

ETHICS STATEMENT

This review does not meet the criteria for human subject research by UC Berkeley Committee for Protection of Human Subjects and did not require ethics approval.

ACKNOWLEDGMENTS

The authors acknowledge Ziad Obermeyer, MD, MPhil for comments and suggestions to improve the review. The authors thank Michael Sholinbeck, MLIS, and Jen Gong, PhD for their advice on conducting the literature review. Finally, we appreciate the advising and support of Coco Auerswald, MD, MS, Ronald Dahl, MD, Jonae Boatwright, MSW, and Deanine Johnson, MA.

Huilgol YS, Adler‐Milstein J, Ivey SL, Hong JC. Opportunities to use electronic health record audit logs to improve cancer care. Cancer Med. 2022;11(17):3296–3303. doi: 10.1002/cam4.4690

Funding information

UC Berkeley‐UCSF Joint Medical Program (Helen Marguerite Schoeneman Fund),

UC Berkeley‐UCSF Joint Medical Program (Joint Medical Program Thesis Grant)

University of California, Berkeley School of Public Health Alumni Association (William Griffiths Fellowship), Publication made possible in part by support from the UCSF Open Access Publishing Fund.

DATA AVAILABILITY STATEMENT

Data sharing not applicable ‐ no new data generated, or the article describes entirely theoretical research.

REFERENCES

- 1. Wallace PJ. Reshaping cancer learning through the use of health information technology. Health Aff. 2007;26(Supplement 1):w169‐w177. doi: 10.1377/hlthaff.26.2.w169

- 2. Chambers DA, Feero WG, Khoury MJ. Convergence of implementation science, precision medicine, and the learning health care system: a new model for biomedical research. JAMA. 2016;315(18):1941‐1942. doi: 10.1001/jama.2016.3867

- 3. Hesse BW, Suls JM. Informatics enabled behavioral medicine in oncology. Cancer J. 2011;17(4):222‐230. doi: 10.1097/PPO.0b013e318227c811

- 4. Sinha S, Garriga M, Naik N, et al. Disparities in electronic health record patient portal enrollment among oncology patients. JAMA Oncol. 2021;8:935‐937. doi: 10.1001/jamaoncol.2021.0540

- 5. Alfano CM, Mayer DK, Beckjord E, et al. Mending disconnects in cancer care: setting an agenda for research, practice, and policy. JCO Clin Cancer Inform. 2020;4:539‐546. doi: 10.1200/CCI.20.00046

- 6. Rule A, Chiang MF, Hribar MR. Using electronic health record audit logs to study clinical activity: a systematic review of aims, measures, and methods. J Am Med Inform Assoc. 2020;27(3):480‐490. doi: 10.1093/jamia/ocz196

- 7. Office for Civil Rights. Summary of the HIPAA security rule. HHS.gov. Published November 20, 2009. Accessed August 3, 2020. https://www.hhs.gov/hipaa/for‐professionals/security/laws‐regulations/index.html

- 8. Zheng K, Hanauer DA, Weibel N, Agha Z. Computational ethnography: automated and unobtrusive means for collecting data in situ for human–computer interaction evaluation studies. In: Patel VL, Kannampallil TG, Kaufman DR, eds. Cognitive Informatics for Biomedicine: Human Computer Interaction in Healthcare. Health Informatics. Springer International Publishing; 2015:111‐140. doi: 10.1007/978-3-319-17272-9_6

- 9. Sinsky C, Colligan L, Li L, et al. Allocation of physician time in ambulatory practice: a time and motion study in 4 specialties. Ann Intern Med. 2016;165(11):753‐760. doi: 10.7326/M16-0961

- 10. Mamykina L, Vawdrey DK, Hripcsak G. How do residents spend their shift time? A time and motion study with a particular focus on the use of computers. Acad Med. 2016;91(6):827‐832. doi: 10.1097/ACM.0000000000001148

- 11. National Cancer Institute. NCI Cancer Research Data Ecosystem. Accessed August 7, 2020. cancer.gov/research/infrastructure/bioinformatics/cancer‐research‐data‐ecosystem‐infographic

- 12. Nolan ME, Cartin‐Ceba R, Moreno‐Franco P, Pickering B, Herasevich V. A multisite survey study of EMR review habits, information needs, and display preferences among medical ICU clinicians evaluating new patients. Appl Clin Inform. 2017;8(4):1197‐1207. doi: 10.4338/ACI-2017-04-RA-0060

- 13. Cimino JJ. Putting the “why” in “EHR”: capturing and coding clinical cognition. J Am Med Inform Assoc. 2019;26(11):1379‐1384. doi: 10.1093/jamia/ocz125

- 14. Weed LL. Medical records that guide and teach. N Engl J Med. 1968;278(11):593‐600. doi: 10.1056/NEJM196803142781105

- 15. Kannampallil TG, Jones LK, Patel VL, Buchman TG, Franklin A. Comparing the information seeking strategies of residents, nurse practitioners, and physician assistants in critical care settings. J Am Med Inform Assoc. 2014;21(e2):e249‐e256. doi: 10.1136/amiajnl-2013-002615

- 16. Koopman RJ, Steege LMB, Moore JL, et al. Physician information needs and electronic health records (EHRs): time to reengineer the clinic note. J Am Board Fam Med. 2015;28(3):316‐323. doi: 10.3122/jabfm.2015.03.140244

- 17. Pollard SE, Neri PM, Wilcox AR, et al. How physicians document outpatient visit notes in an electronic health record. Int J Med Inform. 2013;82(1):39‐46. doi: 10.1016/j.ijmedinf.2012.04.002

- 18. Ruppel H, Bhardwaj A, Manickam RN, et al. Assessment of electronic health record search patterns and practices by practitioners in a large integrated health care system. JAMA Netw Open. 2020;3(3):e200512. doi: 10.1001/jamanetworkopen.2020.0512

- 19. Zhang W, Gunter CA, Liebovitz D, Tian J, Malin B. Role prediction using electronic medical record system audits. AMIA Annu Symp Proc. 2011;2011:858‐867.

- 20. Ash JS, Berg M, Coiera E. Some unintended consequences of information Technology in Health Care: the nature of patient care information system‐related errors. J Am Med Inform Assoc. 2004;11(2):104‐112. doi: 10.1197/jamia.M1471

- 21. Mahdizadeh M, Heydari A, Karimi MH. Clinical interdisciplinary collaboration models and frameworks from similarities to differences: a systematic review. Global J Health Sci. 2015;7(6):170‐180. doi: 10.5539/gjhs.v7n6p170

- 22. Will KK, Johnson ML, Lamb G. Team‐based care and patient satisfaction in the hospital setting: a systematic review. J Patient Cent Res Rev. 2019;6(2):158‐171. doi: 10.17294/2330-0698.1695

- 23. Baker A. Crossing the quality chasm: a new health system for the 21st century. BMJ. 2001;323(7322):1192. doi: 10.1136/bmj.323.7322.1192

- 24. Levit LA, Balogh E, Nass SJ, Ganz P. Delivering High‐Quality Cancer Care: Charting a New Course for a System in Crisis. National Academies Press; 2013.

- 25. Pillay B, Wootten AC, Crowe H, et al. The impact of multidisciplinary team meetings on patient assessment, management and outcomes in oncology settings: a systematic review of the literature. Cancer Treat Rev. 2016;42:56‐72. doi: 10.1016/j.ctrv.2015.11.007

- 26. Specchia ML, Frisicale EM, Carini E, et al. The impact of tumor board on cancer care: evidence from an umbrella review. BMC Health Serv Res. 2020;20(1):73. doi: 10.1186/s12913-020-4930-3

- 27. Fleissig A, Jenkins V, Catt S, Fallowfield L. Multidisciplinary teams in cancer care: are they effective in the UK? Lancet Oncol. 2006;7(11):935‐943. doi: 10.1016/S1470-2045(06)70940-8

- 28. Gray JE, Feldman H, Reti S, et al. Using digital crumbs from an electronic health record to identify, study and improve health care teams. AMIA Annu Symp Proc. 2011;2011:491‐500.

- 29. Gray JE, Davis DA, Pursley DM, Smallcomb JE, Geva A, Chawla NV. Network analysis of team structure in the neonatal intensive care unit. Pediatrics. 2010;125(6):e1460‐e1467. doi: 10.1542/peds.2009-2621

- 30. Ouyang D, Chen JH, Krishnan G, Hom J, Witteles R, Chi J. Patient outcomes when Housestaff exceed 80 hours per week. Am J Med. 2016;129(9):993‐999.e1. doi: 10.1016/j.amjmed.2016.03.023

- 31. Adler‐Milstein J, Huckman RS. The impact of electronic health record use on physician productivity. Am J Manag Care. 2013;19(10 Spec No):SP345‐352. [PubMed] [Google Scholar]

- 32. Yao N, Zhu X, Dow A, Mishra VK, Phillips A, Tu SP. An exploratory study of networks constructed using access data from an electronic health record. J Interprof Care. 2018;32(6):666‐673. doi: 10.1080/13561820.2018.1496902

- 33. Zhu X, Tu SP, Sewell D, et al. Measuring electronic communication networks in virtual care teams using electronic health records access‐log data. Int J Med Inform. 2019;128:46‐52. doi: 10.1016/j.ijmedinf.2019.05.012

- 34. Caron F, Vanthienen J, Vanhaecht K, Limbergen EV, De Weerdt J, Baesens B. Monitoring care processes in the gynecologic oncology department. Comput Biol Med. 2014;44:88‐96. doi: 10.1016/j.compbiomed.2013.10.015

- 35. Huang Z, Dong W, Ji L, Gan C, Lu X, Duan H. Discovery of clinical pathway patterns from event logs using probabilistic topic models. J Biomed Inform. 2014;47:39‐57. doi: 10.1016/j.jbi.2013.09.003

- 36. Huang Z, Lu X, Duan H. On mining clinical pathway patterns from medical behaviors. Artif Intell Med. 2012;56(1):35‐50. doi: 10.1016/j.artmed.2012.06.002

- 37. Leroyer E, Romieu V, Mediouni Z, Bécour B, Descatha A. Extended‐duration hospital shifts, medical errors and patient mortality. Br J Hosp Med (Lond). 2014;75(2):96‐101. doi: 10.12968/hmed.2014.75.2.96

- 38. Landrigan CP, Rothschild JM, Cronin JW, et al. Effect of reducing interns' work hours on serious medical errors in intensive care units. N Engl J Med. 2004;351(18):1838‐1848. doi: 10.1056/NEJMoa041406

- 39. Kannampallil TG, Denton CA, Shapiro JS, Patel VL. Efficiency of emergency physicians: insights from an observational study using EHR log files. Appl Clin Inform. 2018;9(1):99‐104. doi: 10.1055/s-0037-1621705

- 40. Ben‐Assuli O, Shabtai I, Leshno M. Using electronic health record systems to optimize admission decisions: the creatinine case study. Health Informatics J. 2014;1:73‐88. doi: 10.1177/1460458213503646

- 41. Jairam V, Lee V, Park HS, et al. Treatment‐related complications of systemic therapy and radiotherapy. JAMA Oncol. 2019;5(7):1028‐1035. doi: 10.1001/jamaoncol.2019.0086

- 42. Handley NR, Schuchter LM, Bekelman JE. Best practices for reducing unplanned acute care for patients with cancer. J Oncol Pract. 2018;14(5):306‐313. doi: 10.1200/JOP.17.00081

- 43. Hong JC, Eclov NCW, Dalal NH, et al. System for high‐intensity evaluation during radiation therapy (SHIELD‐RT): a prospective randomized study of machine learning‐directed clinical evaluations during radiation and chemoradiation. J Clin Oncol. 2020;38(31):3652‐3661. doi: 10.1200/JCO.20.01688

- 44. Marmor RA, Clay B, Millen M, Savides TJ, Longhurst CA. The impact of physician EHR usage on patient satisfaction. Appl Clin Inform. 2018;9(1):11‐14. doi: 10.1055/s-0037-1620263

- 45. Legler A, Price M, Parikh M, et al. Effect on VA patient satisfaction of provider's use of an integrated viewer of multiple electronic health records. J Gen Intern Med. 2019;34(1):132‐136. doi: 10.1007/s11606-018-4708-z

- 46. Copur MS. Burnout in oncology. Cancer Netw. 2019;33(11):451‐453. Accessed June 9, 2021. https://www.cancernetwork.com/view/burnout-oncology

- 47. Gardner RL, Cooper E, Haskell J, et al. Physician stress and burnout: the impact of health information technology. J Am Med Inform Assoc. 2019;26(2):106‐114. doi: 10.1093/jamia/ocy145

- 48. Hlubocky FJ, Back AL, Shanafelt TD. Addressing burnout in oncology: why cancer care clinicians are at risk, what individuals can do, and how organizations can respond. Am Soc Clin Oncol Educ Book. 2016;35:271‐279. doi: 10.1200/EDBK_156120

- 49. McPeek‐Hinz E, Boazak M, Sexton JB, et al. Clinician burnout associated with sex, clinician type, work culture, and use of electronic health records. JAMA Netw Open. 2021;4(4):e215686. doi: 10.1001/jamanetworkopen.2021.5686

- 50. Gupta K, Murray SG, Sarkar U, Mourad M, Adler‐Milstein J. Differences in ambulatory EHR use patterns for male vs. female physicians. NEJM Catalyst. 2019;5(6):1. doi: 10.1056/CAT.19.0690

- 51. Maloney SR, Peterson S, Kao AM, Sherrill WC, Green JM, Sachdev G. Surgery resident time consumed by the electronic health record. J Surg Educ. 2020;77(5):1056‐1062. doi: 10.1016/j.jsurg.2020.03.008

- 52. Wang JK, Ouyang D, Hom J, Chi J, Chen JH. Characterizing electronic health record usage patterns of inpatient medicine residents using event log data. PLoS One. 2019;14(2):e0205379. doi: 10.1371/journal.pone.0205379

- 53. Chi J, Bentley J, Kugler J, Chen JH. How are medical students using the electronic health record (EHR)?: an analysis of EHR use on an inpatient medicine rotation. PLoS One. 2019;14(8):e0221300. doi: 10.1371/journal.pone.0221300

- 54. Tait SD, Oshima SM, Ren Y, et al. Electronic health record use by sex among physicians in an academic health care system. JAMA Intern Med. 2021;181(2):288‐290. doi: 10.1001/jamainternmed.2020.5036

- 55. Cox ML, Risoli T, Peskoe SB, Turner DA, Migaly J. Quantified electronic health record (EHR) use by academic surgeons. Surgery. 2021;169:1386‐1392. doi: 10.1016/j.surg.2020.12.009

- 56. Tariman JD, Berry DL, Cochrane B, Doorenbos A, Schepp K. Physician, patient and contextual factors affecting treatment decisions in older adults with cancer: a literature review. Oncol Nurs Forum. 2012;39(1):E70‐E83. doi: 10.1188/12.ONF.E70-E83

- 57. Kannampallil T, Abraham J, Lou SS, Payne PRO. Conceptual considerations for using EHR‐based activity logs to measure clinician burnout and its effects. J Am Med Inform Assoc. 2020;22:1032‐1037. doi: 10.1093/jamia/ocaa305

- 58. Tajirian T, Stergiopoulos V, Strudwick G, et al. The influence of electronic health record use on physician burnout: cross‐sectional survey. J Med Internet Res. 2020;22(7):e19274. doi: 10.2196/19274

- 59. Marewski JN, Gigerenzer G. Heuristic decision making in medicine. Dialogues Clin Neurosci. 2012;14(1):77‐89.

Data Availability Statement

Data sharing not applicable ‐ no new data generated, or the article describes entirely theoretical research.