Abstract

Systematic reviews are rapidly increasing in prevalence in the toxicology and environmental health literature. However, how well these complex research projects are being conducted and reported is unclear. Since editors have an essential role in ensuring the scientific quality of manuscripts being published in their journals, a workshop was convened where editors, systematic review practitioners, and research quality control experts could discuss what editors can do to ensure the systematic reviews they publish are of sufficient scientific quality. Interventions were explored along four themes: setting standards; reviewing protocols; optimizing editorial workflows; and measuring the effectiveness of editorial interventions. In total, 58 editorial interventions were proposed. Of these, 26 were shortlisted for being potentially effective, and 5 were prioritized as short-term actions that editors could relatively easily take to improve the quality of published systematic reviews. Recent progress in improving systematic reviews is summarized, and outstanding challenges to further progress are highlighted.

1. Introduction and objectives

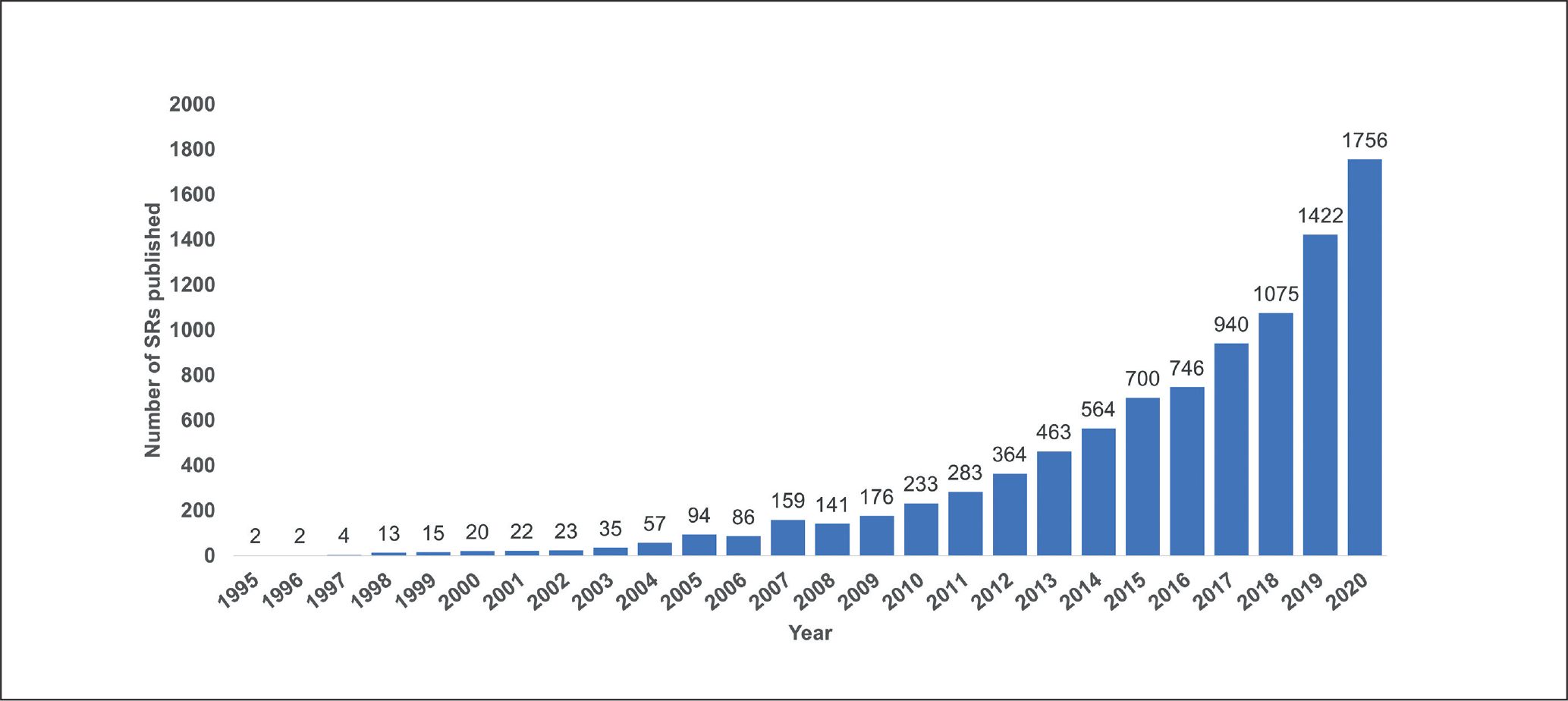

Systematic review is a methodology for minimizing risk of systematic and random error, and maximizing transparency of decision-making, when using existing evidence to answer specific research questions (Higgins et al., 2019b; Whaley et al., 2020b), and represents an increasingly prevalent type of publication in the toxicological and environmental health literature. For example, the total number of systematic reviews in toxicology indexed in Web of Science approximately doubled from 2016 to 2020 (see Fig. 1). This trend is likely driven by a combination of factors, including: the recognition of the value of a systematic approach to research and evidence-based decision-making in toxicology and environmental health; the potential prestige associated with authoring a type of publication perceived as being of an academic “gold standard”; the high citation rates associated with systematic reviews; and the ability to carry out a systematic review without experimental work or laboratory access.

Fig. 1: Estimated annual frequency of environmental health and toxicology systematic reviews indexed in Web of Science.

Results of search string as follows: TITLE: (“systematic review”). Refined by: [excluding] DOCUMENT TYPES: ( MEETING ABSTRACT ) AND WEB OF SCIENCE CATEGORIES: ( PUBLIC ENVIRONMENTAL OCCUPATIONAL HEALTH OR TOXICOLOGY ) AND [excluding] WEB OF SCIENCE CATEGORIES: ( PHARMACOLOGY PHARMACY ). Timespan: All years. Indexes: SCI-EXPANDED, SSCI, A&HCI, CPCI-S, CPCI-SSH, BKCI-S, BKCI-SSH, ESCI, CCR-EXPANDED, IC.

Systematic reviews are, however, complex projects that require a distinct methodological skill set and often take hundreds of hours to complete. They consist of multiple steps requiring input from a range of different domains of expertise (Whaley et al., 2016; Woodruff and Sutton, 2014; Rooney et al., 2014). These steps include defining the specific research objective(s), developing a detailed protocol, conducting comprehensive database searches, screening and extracting data, assessing the propensity for systematic error in the included studies, and using quantitative, qualitative and narrative methods to synthesize the included evidence to answer the research question (Hoffmann et al., 2017). How well these complex projects are being performed and documented in the field of toxicology and environmental health is unclear: To our knowledge only three reviews of this have been conducted, all of which found important shortcomings in the systematic reviews that they analyzed (Sutton et al., 2021; Sheehan and Lam, 2015; Sheehan et al., 2016). Indirect evidence from reviews of biomedical systematic reviews also suggests there may be room for improvement (Ioannidis, 2016; Page et al., 2016).

Editors of scientific journals have a fundamental role in ensuring that the published scholarly literature is of sufficient scientific quality that it is useful and can be replicated. They are involved in setting quality standards that submitted manuscripts must meet, overseeing the peer review process, and deciding when a submission has met a requisite standard for publication. Editors also have an important role in incentivizing the scientific community to employ good practices in conducting research. Given the value of systematic reviews in evaluating and summarizing evidence, their increased use in decision-making, and their rapidly increasing prevalence, ensuring that systematic reviews are reliable and trustworthy should be a high priority issue for editors of toxicology and environmental health journals.

The Evidence-based Toxicology Collaboration (EBTC) is an organization whose goal is to promote the uptake of good research practices in the toxicological and environmental health sciences and encourage the utilization of good-quality evidence in decision-making. Given the importance for its strategic agenda of promoting high quality standards for systematic reviews, EBTC convened a workshop in May 2019 to advance understanding of the major issues that journal editors face in publishing systematic reviews of environmental health and toxicological research, and to develop a joint strategy among journal editors as to how to ensure their scientific quality.

The workshop had three objectives:

To develop a common understanding of the challenges that editors of environmental health and toxicology journals face in ensuring that published systematic reviews meet an acceptable standard of scientific quality;

To articulate a set of strategic editorial interventions (i.e., actions that can be taken by editors to improve publishing outcomes) that would be expected by the participants to improve the scientific quality of published systematic reviews;

If possible, to identify and commit to five or more actions (an “action-plan”) that can be implemented short-term at the editors’ respective journals, which would have an immediate impact on the scientific quality of published systematic reviews.

The workshop was held May 29–31, 2019 in Research Triangle Park, NC, USA. It was conducted under the Chatham House Rule “according to which information disclosed during a meeting may be reported by those present, but the source of that information may not be explicitly or implicitly identified” (Simpson and Weiner, 1989). The agenda was designed to maximize participant interaction and maintain energy levels over an intense, extended period of discussion (see SM1).

Participants were recruited to provide three types of expertise: editors, as publishing experts and the target audience of the workshop; systematic review practitioners, to provide practical insight, as researchers, into the conduct of systematic reviews; and specialists in the quality management of research, to provide expert insight and advice on approaches for identifying and addressing challenges in improving publishing standards. Discussions were focused on four themes:

Setting standards and providing guidance, including how to ensure the methods and results of systematic reviews are fully reported; the utility of conduct and reporting guidelines in this context; and the difference between endorsement and enforcement of guidelines.

Preventing mistakes before they happen, including how to create more opportunities for engagement with researchers prior to submission of completed systematic reviews (e.g., by publishing protocols); and results-free publication models such as registered reports and their application to publishing systematic reviews.

Optimizing editorial workflows, including how to extract maximum value from the peer review process; integrating reporting standards into editorial workflows; identifying and utilizing editorial competencies; and training.

Measuring the efficacy of editorial interventions, including conducting observational and randomized studies of the efficacy of editorial interventions intended to improve the quality of published systematic reviews.

The workshop was prefaced by two presentations that introduced general issues relating to publication of systematic reviews, potential cause for concern about the quality of published systematic reviews raised by the experiences of the biomedical research community, and the objectives and themes of the workshop. Each thematic topic was introduced by a short presentation from a relevant domain expert, leading into a focused breakout session. On the final day, the results of the breakout sessions were themselves discussed to come to overall conclusions and recommendations from the workshop.

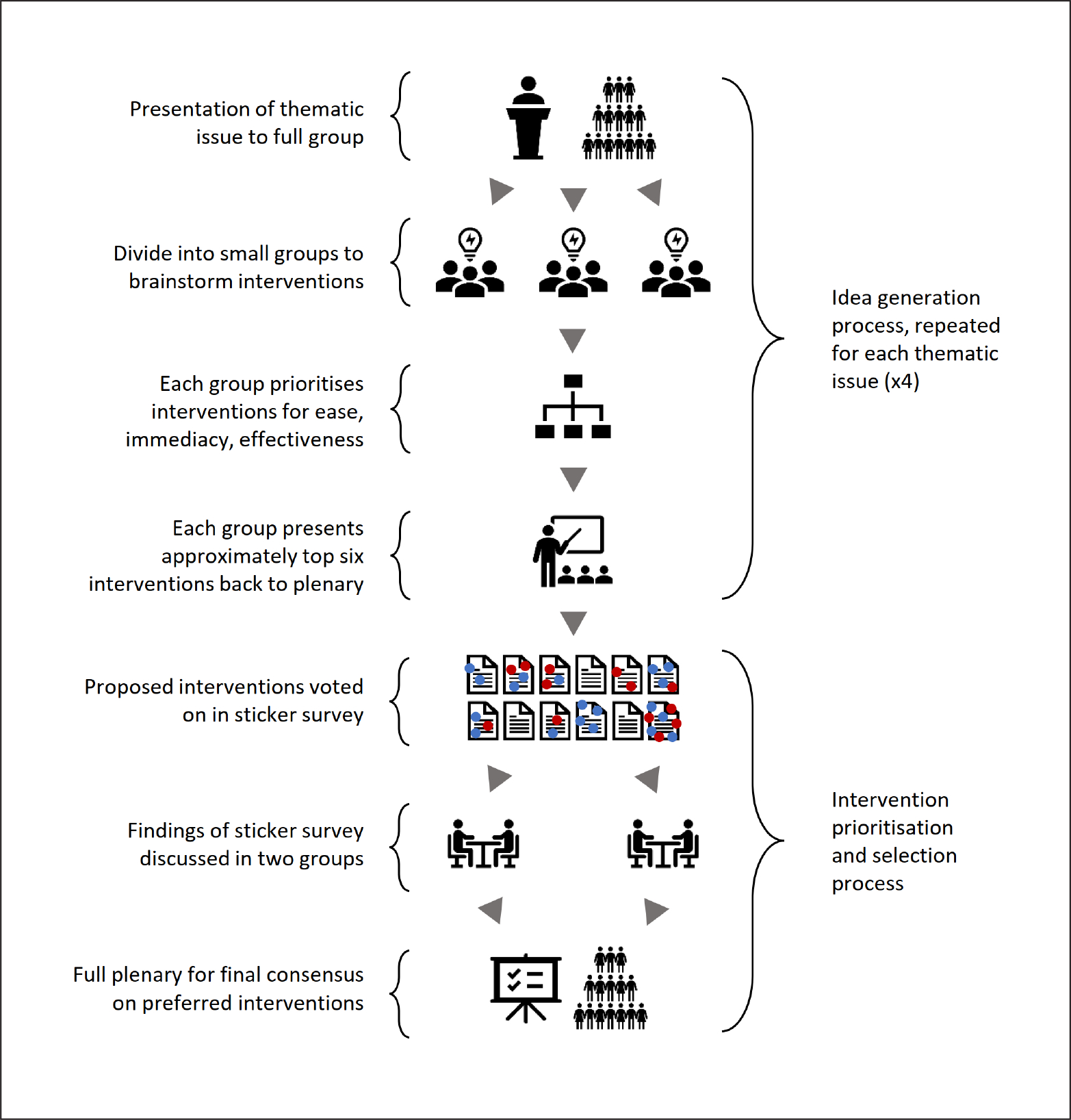

The first four breakout sessions focused on brainstorming a list of potential interventions for further evaluation and prioritization later in the workshop, with one session for each theme listed above. Each breakout session was prefaced by a presentation from one of the specialists in research quality management in order to introduce the same basic level of understanding of the issues across participants and stimulate discussion on a range of ideas and questions. Discussants were then split into three groups, each constructed to balance the number of editors with approximately the same number of systematic review or quality management experts, plus one facilitator. Each group brainstormed possible theme-related challenges to publishing high-quality systematic reviews and ideas for interventions that might address them, and was asked to score the interventions for ease of implementation, likely effectiveness, and immediacy of result. The approximately six top-scoring or preferred interventions chosen by each group were presented to the whole workshop. This process, and the steps described below, are illustrated in Figure 2.

Fig. 2: Illustration of the procedure for breakout discussion of each thematic issue covered by the workshop.

The fifth breakout session generated a shortlist of priority interventions from those put forward by the first four sessions. This was conducted via a voting process. Each unique intervention from the first four sessions was summarized on a post-it note. Each participant was asked to vote for their 10 preferred interventions (those they thought likely to be most effective in a one-year time window) by applying a sticker to a post-it note. Votes were color-coded, red for editors and blue for other participants. Participants were instructed to vote a maximum of once per intervention and were allowed to discuss their voting intention with other participants. Votes were not blinded. The process is shown in Photograph 1. Votes were then tallied, and the top six interventions favored by the editors were shortlisted for the action-plan.

Photograph 1: The prioritization exercise conducted as the fifth breakout session.

Each post-it note describes an intervention proposed during the previous day’s breakout sessions. Participants were given 10 stickers (red for editors, blue for other participants) to indicate their 10 favored interventions. The results of the voting exercise fed into the subsequent consensus-building breakouts (sixth breakout) and final plenary session (seventh breakout). Credit: Paul Whaley

In the sixth breakout session, the workshop participants were split into two groups, with an even distribution of editors, systematic reviewers, and research quality management specialists in each group. The task for each group was to discuss whether they agreed with the shortlist produced by the voting exercise and come to a consensus on what the final action-plan ought to be. In the seventh and final breakout session, the two groups’ revised action-plans were presented to plenary for discussion and to reach a final consensus on the action-plan.

To facilitate discussions, a workshop microsite was created to summarize instructions to participants for each theme and breakout session, provide links to useful reading material, store presentation slide decks for easy access during the workshop, and store rapporteurs’ notes and the raw data from the breakout groups and plenary exercises.

Twenty-one people participated in the workshop. For the purposes of running the workshop and distributing expertise evenly across breakout groups, participants were classified in three categories: nine editors-in-chief of toxicology and environmental health journals (ECH, TBK, MS, AFO, BJB, DW, KH, WB, SK); five experts in toxicology and environmental health systematic reviews (TW, AAR, CK, ER, JB); and five experts in research quality management (DTM, LS, JR, PT, MJP). There were also two facilitators (PW, KT). PW participated in voting due to their role as an editor at an environmental health journal. Several participants in fact covered multiple areas of expertise.

Overall, 12 journals in the fields of toxicology and environmental health were represented. These are listed along with a full agenda and record of participants in the supplemental material SM1. Links to the slide decks for all the presentations can be accessed from the workshop microsite and are available in SM2–SM8. The slide decks contain considerably more detail and ideas for improving publishing standards than the brief summaries of the main thematic issues that are presented below.

2. Overview of the thematic issues: summary of presentations

PW introduced the concept of systematic review as a research methodology for “testing a hypothesis using existing evidence instead of conducting a novel experiment” and consisting of a sequence of steps intended to minimize potential for systematic error and maximize transparency in the evidence review process. PW emphasized that while systematic review is a well-regarded and potentially powerful methodology, it should not be taken for granted that any particular systematic review is sufficiently scientifically rigorous. Poor-quality systematic reviews can misinform or misdirect policymakers, leading to sub-optimal decisions and a potential hesitancy in the future to rely on what ought to be the most robust methodology currently available for summarizing evidence to answer a specific research question. PW then presented an overview of the purpose of the workshop and how it was to be structured. For the full presentation, see SM2.

Next, MJP presented on the quality of systematic reviews published in medicine and the potential implications for environmental health. MJP distinguished between quality of conduct of a systematic review (how well it was done) versus quality of reporting of a systematic review (how well what was done was written up). Judgements of the quality of conduct are necessarily mediated by the quality of write-up but are not the same. MJP then presented evidence of systemic challenges in health research related to the widespread publication of weak systematic reviews that use inadequate methodology at multiple stages of the process. For example, Pussegoda et al. (2017) found that approximately one-third of published systematic reviews do not use comprehensive search strategies and one-third do not use appropriate statistical techniques for meta-analysis. Overall, many systematic reviews fail to adhere to conduct and reporting guidelines that would help ensure their validity and utility, and fail to report their methods in a way that would allow users to reproduce their findings. For the full presentation and citations, see SM3.

2.1. Theme 1: Standards and guidance

For Theme 1, MJP presented on the use of reporting and conduct guidelines in systematic reviews. Reporting standards are numerous, widespread, and can cover the whole systematic review process or individual steps thereof. While reporting standards are assumed to be a contributor to improving publishing standards, research has, for example, found that journal endorsement of the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) statement (Moher et al., 2009) is only modestly associated with improved reporting quality of a systematic review (Page and Moher, 2017; Stevens et al., 2014). This highlights the importance of the difference between endorsement of a standard (recommending its use by authors) and enforcement of a standard (taking positive steps to ensure manuscripts adhere to the standard).

Research into measures taken by journals to improve adherence to reporting guidelines shows that only a limited number of a wide range of possible interventions have actually been attempted. In general, passive strategies encouraging adherence to a guideline are by far the most prevalent intervention, and the effectiveness of passive strategies is underwhelming (Blanco et al., 2019). In contrast, Cochrane has implemented a range of measures to enforce adherence to its conduct and reporting guidelines for systematic reviews (Methodological Expectations of Cochrane Intervention Reviews, MECIR) (Higgins et al., 2019a) and consistently publishes higher quality systematic reviews than the average for the biomedical field (Pollock et al., 2017; Goldkuhle et al., 2018). For the full presentation, see SM4.

2.2. Theme 2: Preventing mistakes before they happen

The concept of Theme 2 was to consider how journal editors and peer reviewers might be able to engage with researchers to improve the methods of a systematic review before it is conducted. Participants were encouraged to consider novel workflows outside the traditional manuscript handling process, whereby interactions with editors might begin before a final manuscript is submitted. Engagement by journals in the planning process of a systematic review should allow potential issues to be identified and corrected before it is too late for them to be addressed, preventing a situation where a completed systematic review may turn out to be critically compromised by, e.g., a search strategy that overlooked relevant evidence or a failure to assess study quality.

On this theme, DTM presented the registered reports model for publishing research (Nosek and Lakens, 2014). The registered reports model was introduced in response to how a strong bias toward statistically significant findings, combined with post-hoc decision-making in data analysis, results in irreproducible research. Under the model, research is preregistered with a research plan that consists of the study hypotheses, data collection procedures, manipulated and measured variables, and an analysis plan. “Preregistered” means that the research plan has been time-stamped, is read-only, created before the study, and submitted to a public registry. Preregistration allows a report of the study plans to be submitted to a journal for peer review and revision in advance of data collection, thereby allowing any methodological issues to be corrected with a view to generating results that are valid rather than merely significant. DTM reported that preregistration seems to be effective in addressing publication bias, increasing the proportion of null findings in the literature. For the full presentation, see SM5.

2.3. Theme 3: Optimizing editorial workflows

The concept of Theme 3 was to consider how the value of editorial and peer review processes can be maximized in improving the scientific quality of a systematic review submission. This includes understanding the core competencies of the academic editor to help ensure editors are selected and trained for the specific requirements of the role; integrating conduct and reporting guidelines into the publishing process; and ensuring that the peer review process does as much as it can to raise the overall standard of published manuscripts. Two presentations were given.

For the first presentation, PT focused on editorial competencies, based on the findings of a scoping review and consensus process by a group of researchers who had collectively examined this issue (Galipeau et al., 2016; Moher et al., 2017). The core competencies cover editor qualities and skills (n = 5), publication ethics and research integrity (n = 3), and editorial principles and process (n = 6). These competencies include: acting with leadership, integrity and accountability; demonstrating knowledge related to the integrity of research and publishing; and evaluating the scientific rigor and integrity of manuscripts. PT also presented editorial competencies that can be viewed as specific to systematic reviews, using the example of how the Campbell Collaboration defined six core competencies for editors of Campbell Systematic Reviews. These include having specific knowledge of systematic review methods and understanding of how systematic reviews are used in practice. Finally, PT presented on how an editor’s competencies can be developed through the themes of resources (e.g., training material), actions (e.g., apprenticeship and hands-on experience in conducting systematic reviews), and assessment (e.g., checklists for guideline compliance). For the full presentation, see SM6.

For the second presentation, JR presented seven universal steps toward launching an in-journal process for improving reporting standards. The steps are intended to address the phenomenon of there being little improvement in the reporting quality of research despite there being many highly cited papers on its generally poor standard. Although developed for primary studies, these steps are generalizable to systematic reviews. While this is a detailed strategy that is challenging to implement, it does yield successful outcomes. Key steps include: identifying the needs of the journal (to cover addressing a potential lack of interest in change, entrenched practices, and misinterpretation of standards); selecting in-journal “champions” who are willing to promote the adoption and enforcement of reporting checklists (echoing the lessons of Theme 1); and securing the buy-in of senior members of the management and editorial team to policy changes via a launch plan and clear communication to all journal stakeholders of the change in process and its benefits. For the full presentation, see SM7.

2.4. Theme 4: Evaluating the effectiveness of editorial interventions

Theme 4 concerned metrics for measuring the effectiveness of editorial interventions. LS introduced the concept of theory-informed behavior change interventions. This uses evidence of how well an intervention translates into changes in practice that increase the number of people using effective interventions (French et al., 2012). This approach to changing community or organizational practices consists of four elements: identifying who needs to do what differently; identifying barriers to and enablers of change that are both modifiable and necessary to address; identifying intervention components that overcome barriers and enhance enablers; and measuring behavior change. An important aspect of the model is that it posits that systems are “like Swiss cheese”: they fail only when structural weaknesses align. As such, it is only necessary to plug the hole in one slice for a systemic weakness to be addressed.

In terms of assessing the effectiveness of interventions, LS presented the results of research by Heim et al. (2018). In this survey, research methodologists were asked about their favored interventions for improving peer review, and their favored study designs for assessing the effectiveness of the proposed interventions. Randomized controlled trials of an intervention, with randomization of manuscripts or peer reviewers, were found to be the favored study design for evaluating all interventions. Interventions included training peer reviewers, adding an expert to the peer review process, using reporting guidelines, peer review that is blinded to results, incentivized peer review, and post-publication peer review, among others. For the full presentation, see SM8.

3. Prioritization of potential editorial interventions

3.1. Results from thematic breakout discussions

In total, 58 unique interventions were suggested. Of these, 46 were directly related to the four themes of the workshop, and a further 12 were related to other themes. Of the 46 theme-related interventions, 18 were suggested for Theme 1 (standards and guidance), 8 for Theme 2 (preventing mistakes), 17 for Theme 3 (editorial workflows), and 3 for Theme 4 (evaluating effectiveness). The full results of the brainstorming sessions, organized according to workshop themes, are available in SM9. Raw data from the rapporteurs’ records of group discussions are available from the workshop microsite.

3.2. Results from voting (fifth break-out session)

From the four thematic break-out sessions, the groups put forward 26 favored interventions for discussion and final prioritization. The votes for each suggested intervention are shown in Table 1.

Tab. 1: Results of voting on suggested interventions

| Suggested intervention | Votes from editors (n = 10) | Votes from specialists (n = 11) |
|---|---|---|
| Develop a common statement on a shared standard for systematic reviews | 10 | 8 |
| Set up a bank of methods reviewers for systematic reviews | 8 | 4 |
| Publish an editorial about journal desires and objectives for systematic reviews | 6 | 2 |
| Accept systematic review protocols as a submission type | 5 | 7 |
| Provide educational resources for peer-reviewers in systematic review protocols and methods | 5 | 3 |
| Use an appraisal tool for triage decisions | 5 | 0 |
| Appoint specialist editors to handle systematic review submissions | 4 | 6 |
| Assign a systematic review specialist to each systematic review submission | 4 | 5 |
| Require a completed PRISMA checklist for systematic review submissions | 4 | 5 |
| Develop and implement Transparency and Openness Promotion (TOP) guidelines (Nosek et al., 2015) for environmental health and toxicology journals | 4 | 5 |
| Build an author support toolbox | 4 | 4 |
| Add to reviewer databases classifications of systematic review expertise for reviewers, e.g., search strategies | 4 | 3 |
| Endorse Registered Reports for systematic review submissions | 4 | 3 |
| Build an editor support toolbox | 4 | 2 |
| Implement a data-sharing standard such as Minimum Information About a Microarray Experiment (MIAME) (Brazma et al., 2001) but for systematic review | 3 | 1 |
| Provide education for editors and journal Editorial Boards in systematic review protocols and methods | 3 | 1 |
| Develop written materials and guidance on systematic reviews | 3 | 0 |
| Run RCTs to evaluate effectiveness of interventions | 2 | 2 |
| Use manuscript quality control software such as StatReviewer | 2 | 1 |
| Run workshops for upstream stakeholders | 2 | 0 |
| Institute badges for papers that are compliant with good practices | 1 | 2 |
| Provide examples of good systematic reviews and good protocols | 1 | 1 |
| Develop case studies of good practices around systematic review editing | 1 | 1 |
| Provide detailed, constructive feedback to authors about editor’s decision on a systematic review | 1 | 1 |
| Run Open Science Framework (OSF) workshops for stakeholders | 0 | 2 |
| Run community-building exercises | 0 | 0 |
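The shortlisting step of the fifth breakout session (tallying votes and taking the six interventions most favored by editors) can be sketched in a few lines of Python. This is an illustration only, not the procedure used at the workshop, which was carried out by hand with stickers. The names `Tally`, `TALLIES`, and `shortlist` are hypothetical, the intervention labels are abbreviated, and only the first nine rows of Table 1 are included. The workshop report does not say how ties were handled, so the tie-breaking rule here (keep table order) is an assumption.

```python
from typing import List, NamedTuple


class Tally(NamedTuple):
    """Vote counts for one intervention (hypothetical helper type)."""
    intervention: str
    editor_votes: int
    specialist_votes: int


# First nine rows of Table 1, with abbreviated labels.
TALLIES = [
    Tally("Common statement on a shared standard", 10, 8),
    Tally("Bank of methods reviewers", 8, 4),
    Tally("Editorial on journal objectives", 6, 2),
    Tally("Protocols as a submission type", 5, 7),
    Tally("Educational resources for peer reviewers", 5, 3),
    Tally("Appraisal tool for triage decisions", 5, 0),
    Tally("Specialist editors for systematic reviews", 4, 6),
    Tally("Specialist assigned to each submission", 4, 5),
    Tally("Completed PRISMA checklist required", 4, 5),
]


def shortlist(tallies: List[Tally], size: int = 6) -> List[Tally]:
    """Return the `size` interventions with the most editor votes.

    Assumption: ties keep their original table order. Python's sort is
    stable, including with reverse=True, so equal counts are not reordered.
    """
    ranked = sorted(tallies, key=lambda t: t.editor_votes, reverse=True)
    return ranked[:size]


if __name__ == "__main__":
    for row in shortlist(TALLIES):
        print(f"{row.editor_votes:>2}  {row.intervention}")
```

Ranking on editor votes alone follows the workshop's stated rule. Specialist votes are carried in the data structure but do not affect the shortlist, which is why an intervention with no specialist votes can still be shortlisted.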

3.3. The five-point action-plan for improving published systematic reviews

From the sixth breakout session and final plenary discussion, five of the suggested interventions were prioritized as being both relatively straightforward to implement by journal editors and likely to be reasonably effective in improving the quality of published systematic reviews. This formed the near-term “action plan” output of the workshop and is presented in Table 2.

Tab. 2: Five editorial interventions for short-term improvement in quality of published systematic reviews

| # | Intervention | Explanatory notes |
|---|---|---|
| 1 | Develop a common statement or editorial across multiple journals on a shared standard for systematic reviews | All editors voted for this intervention. The statement should cover a minimum set of elements that journals should expect a systematic review to include and be accompanied by a commitment from the undersigning journals to enforce these expectations. This should help better communicate to both journal teams and authors the basic requirements of systematic review submissions. |
| 2 | Provide the option for systematic review protocols as a submission type | It was recognized that protocols are important to the scientific quality of a systematic review, and it is beneficial if they are peer-reviewed; however, no consensus was reached as to how protocols should be handled. As such, giving journals flexibility in accommodating protocols was seen as important. |
| 3 | Set up a bank of peer reviewers with methodological expertise in systematic reviews | Systematic reviews employ specific methods of which non-specialist reviewers and editors do not have comprehensive knowledge. Furthermore, since non-specialist editors may not be integrated into systematic review research networks, they may not know who to invite to peer-review a systematic review submission. Therefore, a general cross-journal “bank” of specialist peer-reviewers would be valuable for improving the scientific quality of systematic reviews. |
| 4 | Create a set of systematic review “toolboxes” | Authors, editors, and peer-reviewers would all benefit from a curated repository of good examples of systematic reviews, protocols, systematic evidence maps, guidance documents, training materials, appraisal tools, etc. that facilitate the planning, peer-review and editing of systematic review manuscripts. These need not be created from scratch – links to existing resources would suffice. A toolbox website should be created. |
| 5 | Assign a systematic review specialist to submissions | Journals should ensure a specialist (either editor or reviewer) is assigned to systematic review submissions. While toolboxes and banks of specialist reviewers are helpful, their role is to compensate for lack of specialist expertise in systematic reviews at journals. |

Proposed interventions that were considered important but did not form part of the action-plan included: education for peer reviewers, editors and journal editorial boards; the implementation of data sharing standards; classifying people as having systematic review expertise in peer reviewer databases; introducing the registered reports model for systematic review submissions; using a critical appraisal tool for systematic review submissions; specifically appointing specialist systematic review editors; requiring completed PRISMA checklists be provided with systematic review submissions; and implementing the Transparency and Openness Promotion (TOP) guidelines at journals.

Interventions that were not considered a priority were: the use of badges for indicating papers that are compliant with good practices in publishing; running randomized controlled trials to assess the effectiveness of editorial interventions; the use of quality control software to assess manuscripts; and running workshops for upstream stakeholders.

3.4. Protocol publication as a major point of discussion

Peer-reviewed, pre-published protocols are a recognized mainstay of Cochrane systematic reviews (Higgins et al., 2019b) and were a major point of discussion at the workshop. While the introduction of protocols as a submission type at environmental health and toxicology journals was not universally supported by the participating editors, there was consensus that the joint editorial to be produced after the workshop should recommend that systematic reviews be based on a protocol and that such protocols, as a minimum, be made public in a preprint archive, a systematic review protocols registry (e.g., PROSPERO), or equivalent.

Uncertainty about introducing protocol publication policies at journals derived from a lack of clarity about the effect that publishing protocols has on journal impact factor, whether a journal should spend time editing a low-impact protocol for which author teams could take the final systematic review elsewhere, and whether it is consistent to require that systematic reviews, but not primary studies, be based on peer-reviewed protocols. Journals operating on page-count publishing models viewed protocols as a type of publication that does not fit with the limited number of pages they can publish each year. Potential solutions to these problems included creating specialist journals for protocols or, since protocols need not be formally published by journals so long as they are publicly available, offering in-principle acceptance to protocols that are then posted on pre-print repositories (although concerns were raised that the journal may then get little credit for peer review and editorial work on these papers). Additionally, as more journals become online-only titles, page and word limits may become less of a barrier to publishing detailed protocols.

4. Outlook

Follow-up on implementing the five priority actions from the workshop has been slowed by the COVID-19 pandemic on top of general constraints in editorial processes and budgets. Nonetheless, some important general milestones relating to themes discussed at the workshop have since been reached. More than one environmental health journal is now accepting protocol publications (Sgargi et al., 2020; Romeo et al., 2021; van Luijk et al., 2019), and at least three journals are putting in place specialist systematic review editors (DW, personal communication). The first set of formal recommendations for conduct of environmental health systematic reviews, “COSTER”, has been published (Whaley et al., 2020a), and an online tool (footnote 3) for facilitating consistent editorial triage against a basic set of expectations for systematic reviews has been created, “CREST_Triage” (Whaley and Isalski, 2020). While a bank of specialist peer reviewers for systematic reviews has not yet been created, there is now an online database (footnote 4) of specialist peer reviewers for search strategies in systematic reviews (Nyhan et al., 2020). The first commentary focusing on advancing systematic review methodology for exposure science, authored by one of the workshop participants (Cohen Hubal et al., 2020), has been published. This was followed by the first peer-reviewed, pre-published protocol for a systematic review of exposure studies (Sanchez et al., 2020). The World Health Organization is conducting an increasing number of environmental health systematic reviews according to peer-reviewed, pre-published protocols (Pachito et al., 2021; Verbeek et al., 2021; Pega et al., 2021).

The above developments have contributed an important set of resources that can be compiled into a domain-specific toolbox for supporting editors, authors, and peer reviewers of environmental health systematic reviews (Intervention #4). They also provide further experience for development of systematic review protocols in environmental health (Intervention #2). A common statement of expectations for systematic reviews (Intervention #1) is currently under development.

Several challenges remain in implementing our strategy for improving the quality of systematic reviews in toxicology and environmental health. Many editors lack specific expertise in systematic review, and it is likely that submission rates will increase faster than this expertise can be acquired or recruited. Many toxicology and environmental health journals are low-budget, specialist titles run with minimal staff, where day-to-day work tends to leave little time or opportunity to implement workflow and structural changes. As a result, training editorial staff is a low priority, if training is available at all, and longer-term strategic planning and capacity building tend to be displaced by the need to respond to relatively large numbers of submissions handled by relatively small teams of editors. A key issue observed at the workshop is the lack of an overarching organization, similar in function to Cochrane in healthcare or the Campbell Collaboration in the social sciences, that can provide a governance structure and consistent quality-control checkpoints built around improving systematic reviews in environmental health and toxicology.

5. Conclusions

The workshop proposed five priority interventions (see Tab. 2) for improving the scientific quality of systematic reviews. These are expected by the editors, in consultation with the specialist expert participants, to have a significant, near-term impact on the quality of systematic reviews being published in environmental health and toxicology. A further nine interventions were recognized as being of strategic value but more challenging to implement. A total of 46 unique interventions, which may be further explored and potentially implemented by the research community, were collected and provide a wide variety of ideas for improving the quality of published systematic reviews. Overall, systematic reviews of high scientific quality remain critical, not only because of their role in decision-making, but also because they can identify issues in the primary literature to which editors could potentially respond as well.

Supplementary Material

Acknowledgements

The workshop was sponsored by the Doerenkamp-Zbinden Foundation. Writing of this report was funded by the Evidence-based Toxicology Collaboration. We would like to thank Dr Jason Roberts (Origin Editorial, Arvada, CO, 80005), Prof. Malcolm Sim (School of Public Health and Preventive Medicine, Monash University, Melbourne, Australia), and Dr Windy Boyd (Environmental Health Perspectives, National Institute of Environmental Health Sciences, Durham, NC, USA) for their contributions to the workshop.

Footnotes

Disclaimer

The views expressed in this article are those of the authors and do not necessarily reflect the views or policies of the U.S. Environmental Protection Agency or the U.S. National Toxicology Program.

A report of t4 – the transatlantic think tank for toxicology, a collaboration of the toxicologically oriented chairs in Baltimore, Konstanz and Utrecht sponsored by the Doerenkamp-Zbinden Foundation.

https://sites.google.com/whaleyresearch.uk/editors-workshop-2019/home (accessed 11.06.2021)

3 https://crest-tools.site/ (accessed 11.06.2021)

4 https://sites.google.com/view/mlprdatabase/ (accessed 04.04.2021)

References

- Blanco D, Altman D, Moher D et al. (2019). Scoping review on interventions to improve adherence to reporting guidelines in health research. BMJ Open 9, e026589. doi: 10.1136/bmjopen-2018-026589

- Brazma A, Hingamp P, Quackenbush J et al. (2001). Minimum information about a microarray experiment (MIAME)-toward standards for microarray data. Nat Gen 29, 365–371. doi: 10.1038/ng1201-365

- Cohen Hubal EA, Frank JJ, Nachman R et al. (2020). Advancing systematic-review methodology in exposure science for environmental health decision making. J Exp Sci Environ Epidemiol 30, 906–916. doi: 10.1038/s41370-020-0236-0

- French SD, Green SE, O’Connor DA et al. (2012). Developing theory-informed behaviour change interventions to implement evidence into practice: A systematic approach using the theoretical domains framework. Implement Sci 7, 38. doi: 10.1186/1748-5908-7-38

- Galipeau J, Barbour V, Baskin P et al. (2016). A scoping review of competencies for scientific editors of biomedical journals. BMC Med 14, 16. doi: 10.1186/s12916-016-0561-2

- Goldkuhle M, Narayan VM, Weigl A et al. (2018). A systematic assessment of Cochrane reviews and systematic reviews published in high-impact medical journals related to cancer. BMJ Open 8, e020869. doi: 10.1136/bmjopen-2017-020869

- Heim A, Ravaud P, Baron G et al. (2018). Designs of trials assessing interventions to improve the peer review process: A vignette-based survey. BMC Med 16, 191. doi: 10.1186/s12916-018-1167-7

- Higgins JP, Lasserson T, Chandler J et al. (2019a). Methodological Expectations of Cochrane Intervention Reviews (MECIR). Cochrane. https://community.cochrane.org/mecir-manual

- Higgins JP, Thomas J, Chandler J et al. (2019b). Cochrane Handbook for Systematic Reviews of Interventions. Wiley. doi: 10.1002/9781119536604. https://training.cochrane.org/handbook

- Hoffmann S, de Vries RBM, Stephens ML et al. (2017). A primer on systematic reviews in toxicology. Arch Toxicol 91, 2551–2575. doi: 10.1007/s00204-017-1980-3

- Ioannidis JPA (2016). The mass production of redundant, misleading, and conflicted systematic reviews and meta-analyses. Milbank Q 94, 485–514. doi: 10.1111/1468-0009.12210

- Moher D, Liberati A, Tetzlaff J et al. (2009). Preferred reporting items for systematic reviews and meta-analyses: The PRISMA statement. PLoS Med 6, e1000097. doi: 10.1371/journal.pmed.1000097

- Moher D, Galipeau J, Alam S et al. (2017). Core competencies for scientific editors of biomedical journals: Consensus statement. BMC Med 15, 167. doi: 10.1186/s12916-017-0927-0

- Nosek BA and Lakens D (2014). Registered reports: A method to increase the credibility of published results. Soc Psychol 45, 137–141. doi: 10.1027/1864-9335/a000192

- Nosek BA, Alter G, Banks GC et al. (2015). Scientific standards. Promoting an open research culture. Science 348, 1422–1425. doi: 10.1126/science.aab2374

- Nyhan K, Haugh D, Nardini HG et al. (2020). Librarian Peer Reviewer Database. Connecting experts in systematic searching to the journal editors who need them. https://sites.google.com/view/mlprdatabase/

- Pachito DV, Pega F, Bakusic J et al. (2021). The effect of exposure to long working hours on alcohol consumption, risky drinking and alcohol use disorder: A systematic review and meta-analysis from the WHO/ILO joint estimates of the work-related burden of disease and injury. Environ Int 146, 106205. doi: 10.1016/j.envint.2020.106205

- Page MJ, Shamseer L, Altman DG et al. (2016). Epidemiology and reporting characteristics of systematic reviews of biomedical research: A cross-sectional study. PLoS Med 13, e1002028. doi: 10.1371/journal.pmed.1002028

- Page MJ and Moher D (2017). Evaluations of the uptake and impact of the preferred reporting items for systematic reviews and meta-analyses (PRISMA) statement and extensions: A scoping review. Syst Rev 6, 263. doi: 10.1186/s13643-017-0663-8

- Pega F, Momen NC, Ujita Y et al. (2021). Systematic reviews and meta-analyses for the WHO/ILO joint estimates of the work-related burden of disease and injury. Environ Int 155, 106605. doi: 10.1016/j.envint.2021.106605

- Pollock M, Fernandes RM and Hartling L (2017). Evaluation of AMSTAR to assess the methodological quality of systematic reviews in overviews of reviews of healthcare interventions. BMC Med Res Methodol 17, 48. doi: 10.1186/s12874-017-0325-5

- Pussegoda K, Turner L, Garritty C et al. (2017). Systematic review adherence to methodological or reporting quality. Syst Rev 6, 131. doi: 10.1186/s13643-017-0527-2

- Romeo S, Zeni O, Sannino A et al. (2021). Genotoxicity of radiofrequency electromagnetic fields: Protocol for a systematic review of in vitro studies. Environ Int 148, 106386. doi: 10.1016/j.envint.2021.106386

- Rooney AA, Boyles AL, Wolfe MS et al. (2014). Systematic review and evidence integration for literature-based environmental health science assessments. Environ Health Perspect 122, 711–718. doi: 10.1289/ehp.1307972

- Sanchez KA, Foster M, Nieuwenhuijsen MJ et al. (2020). Urban policy interventions to reduce traffic emissions and traffic-related air pollution: Protocol for a systematic evidence map. Environ Int 142, 105826. doi: 10.1016/j.envint.2020.105826

- Sgargi D, Adam B, Budnik LT et al. (2020). Protocol for a systematic review and meta-analysis of human exposure to pesticide residues in honey and other bees’ products. Environ Res 186, 109470. doi: 10.1016/j.envres.2020.109470

- Sheehan MC and Lam J (2015). Use of systematic review and meta-analysis in environmental health epidemiology: A systematic review and comparison with guidelines. Curr Environ Health Rep 2, 272–283. doi: 10.1007/s40572-015-0062-z

- Sheehan MC, Lam J, Navas-Acien A et al. (2016). Ambient air pollution epidemiology systematic review and meta-analysis: A review of reporting and methods practice. Environ Int 92–93, 647–656. doi: 10.1016/j.envint.2016.02.016

- Simpson JA and Weiner ES (1989). The Oxford English Dictionary. 2nd edition. Oxford, UK: Clarendon.

- Stevens A, Shamseer L, Weinstein E et al. (2014). Relation of completeness of reporting of health research to journals’ endorsement of reporting guidelines: Systematic review. BMJ 348, g3804. doi: 10.1136/bmj.g3804

- Sutton P, Chartres N, Rayasam SD et al. (2021). Reviews in environmental health: How systematic are they? Environ Int 152, 106473. doi: 10.1016/j.envint.2021.106473

- van Luijk JAKR, Popa M, Swinkels J et al. (2019). Establishing a health-based recommended occupational exposure limit for nitrous oxide using experimental animal data – A systematic review protocol. Environ Res 178, 108711. doi: 10.1016/j.envres.2019.108711

- Verbeek J, Oftedal G, Feychting M et al. (2021). Prioritizing health outcomes when assessing the effects of exposure to radiofrequency electromagnetic fields: A survey among experts. Environ Int 146, 106300. doi: 10.1016/j.envint.2020.106300

- Whaley P, Halsall C, Ågerstrand M et al. (2016). Implementing systematic review techniques in chemical risk assessment: Challenges, opportunities and recommendations. Environ Int 92–93, 556–564. doi: 10.1016/j.envint.2015.11.002

- Whaley P and Isalski M (2020). CREST_Triage. https://crest-tools.site/

- Whaley P, Aiassa E, Beausoleil C et al. (2020a). Recommendations for the conduct of systematic reviews in toxicology and environmental health research (COSTER). Environ Int 143, 105926. doi: 10.1016/j.envint.2020.105926

- Whaley P, Edwards SW, Kraft A et al. (2020b). Knowledge organization systems for systematic chemical assessments. Environ Health Perspect 128, 125001. doi: 10.1289/EHP6994

- Woodruff TJ and Sutton P (2014). The navigation guide systematic review methodology: A rigorous and transparent method for translating environmental health science into better health outcomes. Environ Health Perspect 122, 1007–1014. doi: 10.1289/ehp.1307175
