Abstract

Spike sorting is a fundamental step in extracting single-unit activity from neural ensemble recordings, which play an important role in basic neuroscience and neurotechnologies. A few algorithms have been applied in spike sorting. However, when noise level or waveform similarity becomes relatively high, their robustness still faces a big challenge. In this study, we propose a spike sorting method combining Linear Discriminant Analysis (LDA) and Density Peaks (DP) for feature extraction and clustering. Relying on the joint optimization of LDA and DP: DP provides more accurate classification labels for LDA, LDA extracts more discriminative features to cluster for DP, and the algorithm achieves high performance after iteration. We first compared the proposed LDA-DP algorithm with several algorithms on one publicly available simulated dataset and one real rodent neural dataset with different noise levels. We further demonstrated the performance of the LDA-DP method on a real neural dataset from non-human primates with more complex distribution characteristics. The results show that our LDA-DP algorithm extracts a more discriminative feature subspace and achieves better cluster quality than previously established methods in both simulated and real data. Especially in the neural recordings with high noise levels or waveform similarity, the LDA-DP still yields a robust performance with automatic detection of the number of clusters. The proposed LDA-DP algorithm achieved high sorting accuracy and robustness to noise, which offers a promising tool for spike sorting and facilitates the following analysis of neural population activity.

Subject terms: Neuroscience, Computational neuroscience

Introduction

The development of neuroscience has put forward high requirements for analyzing neural activity at both single neuron1–4 and population levels5–7. The basis of neural data analysis is the correct assignment of each detected spike to the appropriate units, a process called spike sorting8–11. Spike sorting methods will encounter difficulties in the face of noise and perturbation. Since the algorithms commonly fall into two processes12,13, feature extraction and clustering, an outstanding spike sorting algorithm needs to be highly robust in the feature extraction and clustering process.

For feature extraction methods, the extracted features are descriptions of spikes in low-dimensional space. An appropriate feature extraction method can reduce data dimensions while ensuring the degree of differentiation1. Currently, extracting the geometric features of waveforms is the simplest way, including peak-to-peak value, width, zero-crossing feature, etc.14. Although this method is easy to operate with extremely low complexity, it has a low degree of differentiation for similar spikes and is highly sensitive to noise12,13. First and Second Derivative Extrema (FSDE) calculates the first and second derivative extrema of spike waveform, which is relatively simple and has certain robustness to noise15. Other methods like Principal Components Analysis (PCA)13,16 and Discrete Wavelet Transform (DWT)17–19 have certain robustness to noise. In recent years, deep learning methods have been proposed, such as the 1D-CNNs20 and the Autoencoder (AE)21. However, the deep learning methods are limited in practical use because of their high computational complexity and high demands on the training set.

So far, many previous methods tend to be perturbed by noise or the complexity of the data. They cannot effectively extract the features with high differentiation, resulting in poor effect in the subsequent clustering, especially in the case of high noise level and data similarity. For solving this problem, some studies improved the robustness by using supervised feature extraction and clustering iteration to get the optimal subspace with strong clustering discrimination22–25.

Clustering algorithms are also developing with the update of data analysis methods. Early on, the commonly used method was manually26 segmenting clusters. When more channels come, the workload of operations becomes higher, so it is less used later. K-means (Km)13 is a widely used clustering method because it is simple to calculate. However, it requires users to determine the number of clusters in advance. Thus, it is sensitive to the initial parameters and lacks robustness27,28. Some distribution-based methods, such as Bayesian Clustering13 and Gaussian Mixture Model29–31, represent the data with Gaussian-distribution assumptions. Some methods based on neighboring relations can avoid assumptions, for example, the Superparamagnetic Clustering19,32. In addition, Neural Networks33, T-distribution34, Hierarchical Clustering35, and Support Vector Machines36,37 are also used in spike sorting.

For supervised feature extraction methods, the clustering method has a powerful influence on the performance of feature extraction and further affects the performance of the whole algorithm. Ding et al. proposed the LDA-Km algorithm, which used K-means to obtain classification labels and LDA to find the feature space based on the labels and then continuously iterated the two algorithms to convergence24,25. Keshtkaran et al. introduced a Gaussian Mixture Model (GMM) based on LDA-Km and put forward the LDA-GMM algorithm22, which had high accuracy and strong robustness against noise and outliers. LDA-GMM needs to iterate several times by changing the initial value of important parameters (such as the initial projection matrix) to obtain the optimal result. Its operation also calls LDA-Km which brings additional computation complexity. Recently, the concept of joint optimization of feature extraction and clustering has been adopted to construct a unified optimization model of PCA and Km-like procedures38, which integrates the feature extraction and clustering steps for spike sorting.

Inspired by these efforts, we proposed a framework that integrates the supervised feature extraction and the clustering to make them benefit each other. Thus, a remarkable clustering method is also crucial. Density Peaks (DP) proposed by Rodriguez et al. define the cluster centers as local maxima in the density of data points39. This algorithm does not assume the data distribution and can well adapt to the nonspherical distribution, which is more applicable to the complex distribution of spikes in vivo, making it a win–win for robustness and computation cost. Therefore, this paper integrates the LDA and DP as a joint optimization model LDA-DP for spike sorting.

Methods

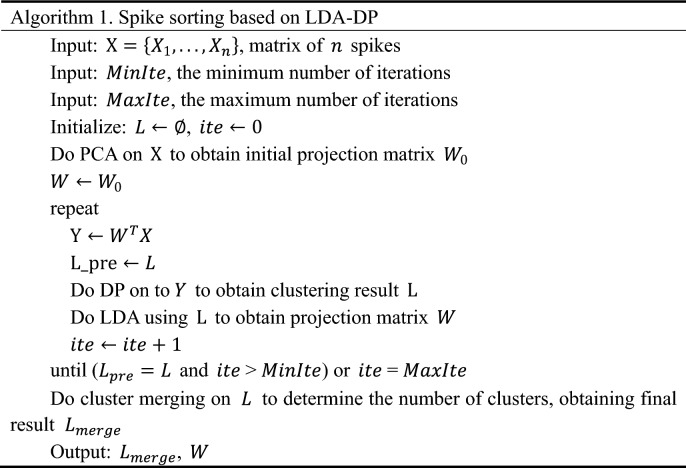

An overview of the LDA-DP algorithm

This study proposed a spike sorting algorithm combining LDA with DP. LDA is a supervised machine learning method that requires prior information about cluster labels. The data is initially projected into an initial subspace and then clustered by Density Peaks to obtain cluster labels. Thus, we need an initial projection matrix . As summarized in Algorithm 1, the projection matrix is initialized by executing PCA on spike matrix , cutting the first coefficients, and assigning it to . We chose = 3 for overall consideration to maintain performance and computation complexity, in line with the other feature extraction methods compared in this study. In each iteration, the algorithm updates a clustering result . When the updated is relatively consistent with the result in the previous iteration () or the number of iterations reaches the upper limit , the iteration ends. The minimum number of iterations ensures that the algorithm iterates adequately. The suggested value for minimum iteration is 5 and maximum iteration is 50. Finally, in the last step of the algorithm, the similar clusters are merged and we obtain the sorting result  .

.

Discriminative feature extraction using Linear Discriminant Analysis

Linear Discriminant Analysis (LDA), also known as "Fisher Discriminant Analysis", is a linear learning method proposed by Fisher23. LDA is a supervised machine learning method that finds an optimal feature space, where the intra-class scatters are relatively small and inter-class scatters are relatively large.

For a multi-cluster dataset, the quality of clusters can be measured by the intra-class scatter metric and the inter-class scatter metric , as shown in Formula (1) and (2):

| 1 |

| 2 |

denotes the th data point in the th cluster , denotes the mean value of data points in , denotes the number of data points in , denotes the mean value of all data points, and denotes the total number of data points.

To calculate the projection matrix , LDA performs optimization by maximizing objective function (Formula (3)).

| 3 |

Then data points can be projected to a -dimensional subspace which captures discriminative features by the obtained projection matrix . In this study, was fixed to 3 by default.

Clustering features based on Density Peaks

The principle of the Density Peaks Algorithm (DP)39 is very simple. For each data point, two parameters are calculated, the local density of the point and the minimum distance between the current point and the data point with a larger local density. The DP algorithm assumes that if a point is a cluster center, it will satisfy two conditions: (1) its local density is high; (2) it is far away from another point that has a larger local density. That is, the center of the cluster is large for both and . After the cluster centers are identified, the remaining data points are allocated according to the following principle: each point falls into the same cluster with its nearest neighbor point who has a higher local density.

In this study, the Gaussian kernel is adopted to calculate local density. Local density of the th point is shown in Formula (4):

| 4 |

denotes the Euclidean distance between the sample and , as is shown in Formula (5):

| 5 |

denotes the cutoff distance. In this study, we defined cutoff distance by selecting a value in ascending sorted sample distances :

| 6 |

is the cutoff distance index, and as a rule of thumb, it generally ranges from 0.01 to 0.02, and denotes the rounding function.

The minimum distance and the nearest neighbor point is calculated in Formula (7) and (8) where denotes the maximum local density:

| 7 |

| 8 |

To automate39 the search for the cluster centers where both and are large, we creatively defined the DP index as the product of and , as shown in Formula (9).

| 9 |

The algorithm selects data points with the largest DP index as the clustering centers. If the data is randomly distributed, then the distribution of is in line with the monotonically decreasing power function, and the DP index of the cluster centers is significantly higher, which makes it feasible to select cluster centers according to the DP index . As iteration times increase, the DP index difference between the center and the non-center increases. As the value is determined, the method can be automated. denotes the initial number of clusters, its value actually affects the performance of the algorithm. Since one channel in sparse electrodes could commonly record no more than 4 single units, the default value of in this study is 4, for an overall consideration of sorting accuracy and running time. See Supplementary Fig. S1 for information on the effects of different initial on the sorting accuracy and running time. In some rare instances, more units might be recorded if the probes are thinner, in which case the value should be adjusted according to the electrodes.

For dataset which contains data points , giving cutoff distance index and the initial number of clusters , the flow of the DP clustering algorithm is as follows:

- Step 1:

Calculate the distance between every two data points , as shown in Formula (5).

- Step 2:

Calculate the cutoff distance as shown in Formula (6).

- Step 3:

For each data point, calculate the local density , the minimum distance and nearest neighbor point , as shown in Formulas (4) (7) and (8).

- Step 4:

For each data point, calculate the DP index , as shown in Formula (9).

- Step 5:

Select cluster centers: the point with the largest is the center of Cluster 1, the point with the second-largest is the center of Cluster 2, and so on to get centers.

- Step 6:

- Classify non-center points: rank the non-center points in descending order according to their local density , traversing each non-center point, and then the label of the th point is calculated as Formula (10):

where denotes the cluster label of the nearest neighbor point of the th point.10

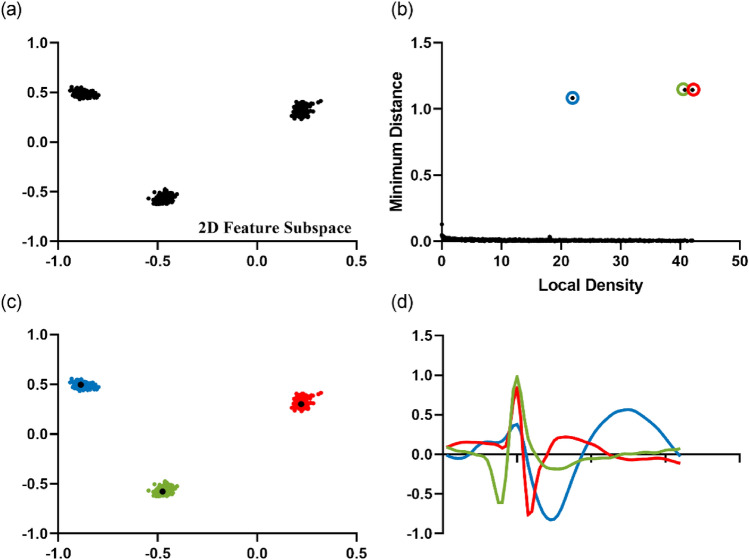

Here, we demonstrated the process using the testing set C1_005 (see “Evaluation”). In the feature extraction step, LDA finds out the feature subspace with the optimal clustering discrimination through continuous iteration (Fig. 1a). For each data point, our algorithm calculates its local density () and the minimum distance (). As described in the previous section, cluster centers are the points whose and are relatively large. DP screens the cluster centers using a previously defined DP index that is the product of and . Figure 1b shows a schematic diagram where and are set as the horizontal and vertical axes in the case of screening three cluster centers. The screened centers are the three points with the largest . They are circled in three colors corresponding to three clusters. The defined DP index is competent for center point screening for the screened points all have a large and value. As a result, Density Peaks clustering obtains the three clusters (Fig. 1c), and Fig. 1d shows the waveforms of each cluster center.

Figure 1.

Running scenario of the DP clustering stage using Dataset C1_005. (a) Data points projected in a two-dimensional feature subspace after LDA. This subspace is considered to be optimal for the within-class scatters are relatively small and intra-class scatters are relatively large. (b) Schematic diagram with the local density and the minimum distance as the horizontal and vertical axes. The points circled in three colors are the screened cluster centers. (c) Scatter plot of 3 clusters obtained by DP. The three colors of red, green and blue respectively represent the clustering results of cluster 1, cluster 2 and cluster 3. The black dots represent the cluster centers. (d) The waveforms of three cluster centers.

Automatic detection of the number of clusters

The last step of the algorithm is a cluster merging step, through which the number of clusters will be determined. The purpose of cluster merging is to avoid similar clusters being over-split. After the merging step, the number of clusters is determined automatically. The number of clusters is a critical parameter required by many spike sorting algorithms. However, manually setting the number of clusters in advance relies heavily on the experience of operators and may cause problems in practice. Thus, a merging step is crucial to automatically determine the number of clusters, in order to reduce the workload and artificial error of manual operation. The cluster merging finds similar clusters, combines them, and repeats the process. Here, a threshold is used to end the cluster merging process. Once the similarity between the most similar clusters goes below the threshold, the merging is stopped, and thus the number of clusters is automatically determined.

The similarity between clusters can be measured in several ways. Common distance metrics include the Minkowski distance, the cosine distance and the inner product distance40. According to the Davis-Bouldin Index (DBI)40–43, we defined cluster similarity as the ratio of the compactness and the separation .

Intra-class distance is a parameter to evaluate the internal compactness of a cluster. Thus, the compactness can be calculated by (11).

| 11 |

denotes the within-class distance of the cluster , denotes the th data point in the th cluster , denotes the center of .

Inter-class distance is a parameter to evaluate the separation of clusters. Thus, the separation can be calculated by (12).

| 12 |

denotes the inter-class distance between the cluster and , and and denotes the center of the cluster and , respectively.

Similarity metric is shown in Formula (13)

| 13 |

If two clusters have high similarity, the two clusters are merged. We set the threshold as a proportional function of the mean value of , as is shown in Formula (14)

| 14 |

denotes the threshold coefficient. The threshold should be significantly higher than the mean value, and as a rule of thumb, is above 1.4.

The flow of the cluster merging process is as follows:

- Step 1:

Calculate the compactness for each cluster, as shown in Formula (11)

- Step 2:

Calculate the separation for every two clusters, as shown in Formula (12)

- Step 3:

Calculate similarity metric for for every two clusters, as shown in Formula (13)

- Step 4:

Calculate the threshold , as shown in Formula (14)

- Step 5:

Find the maximum similarity . If , merge cluster and cluster , set the center of cluster as the new center, , return to step 1; Otherwise, stop merging.

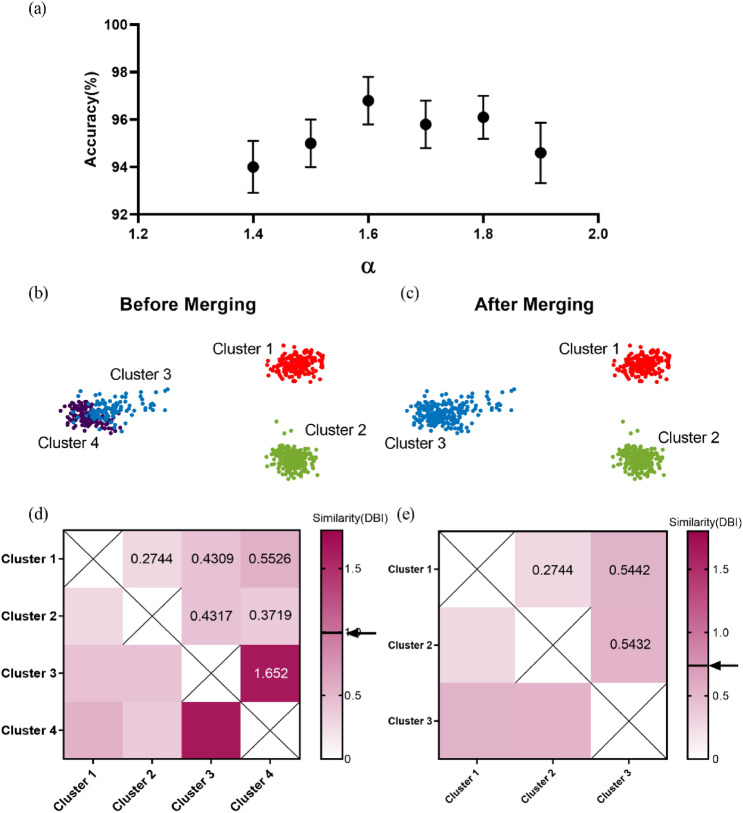

Notably, the threshold coefficient largely affects the merging results. Figure 2a shows the influence of the threshold coefficient on algorithm performance (accuracy) on Dataset A (see “Evaluation”). The mean accuracy reaches the highest when . Therefore, we found an appropriate value of = 1.6 to make the algorithm achieve a general optimal performance on all datasets. In subsequent evaluation, we fixed as 1.6.

Figure 2.

An illustration of determining the number of clusters. (a) Accuracy on all testing sets in dataset A versus threshold coefficient . The error bars represent the standard error of the mean. (b,c) The results of classification before and after merging. Before merging, data points are clustered into four clusters (denoted by four colors: red, violet, green and blue), while after merging, we obtain three clusters (denoted by three colors: red, green and blue). (d) Heatmap of similarity between clusters before merging. The black solid line and the arrow mark the threshold (threshold = 0.99, see Formula (14)). It can be seen from the heatmap, the similarity between cluster 3 and cluster 4 is above the threshold, thus merging cluster 3 and cluster 4. (e) Heatmap of similarity between clusters after merging. At this time the number of clusters is 3, and the similarity between clusters is below the threshold (threshold = 0.73, see Formula (14)), the merging stage terminates. The number of clusters is finally determined to be 3.

To visualize the effects of the merging process, we selected the testing set C1_020 (see “Evaluation”) for illustration. We obtained the threshold in Formula (14) with . The number of clusters is 4 before merging in Fig. 2b and is 3 after merging in Fig. 2c. Figure 2d,e show the similarity between every two clusters measured by DBI40 before and after merging respectively. It is worth noting that the cluster similarity between cluster 3 and 4 is above the threshold (threshold = 0.99) before the merging process (Fig. 2d), while all cluster similarity reaches below the threshold (threshold = 0.73) after the merging (Fig. 2e). Thus, our proposed algorithm can automatically determine the number of clusters through the cluster merging process.

Evaluation

Datasets

Spike waveform data containing cluster information are generally obtained in two ways. One way is to use simulated data that quantifies algorithm performance and compares different algorithms. The other way is in-vivo extracellular recordings capturing the variability inherent in spike waveforms, which lacks in the simulated data.

Dataset A: simulated dataset wave_clus

In this study, we used one common simulated dataset provided by Quiroga et al.19. In the simulation study, spike waveforms have a Poisson distribution of interspike intervals, and the noise is similar to the spikes in the power spectrum. In addition, the spike overlapping, electrode drift and explosive discharge under real conditions are simulated. To date, has been used by many spike sorting algorithms for evaluating sorting performance19–22,24.

Dataset A contains four sets of data C1, C2, C3 and C4. Each testing set contains three distinct spike waveform templates, in which template similarity levels are significantly different (C2, C3 and C4 > C1) and the background noise levels are represented in terms of their standard deviation: 0.05, 0.10, 0.15, 0.20 (C1, C2, C3 and C4), 0.25, 0.30, 0.35, 0.40 (C1). Both similarity levels and noise levels will affect the classification performance. In this study, the correlation coefficient (CC) was used to evaluate the similarity levels of spike waveforms. The higher the correlation of the two templates, the higher the similarity of the waveforms and the more difficult it will be to distinguish the two clusters.

According to spike time information, the waveforms were extracted from the dataset, and then the spike alignment was conducted. Each waveform lasts about 2.5 ms and is composed of 64 sample points. The peak value was aligned at the 20 th sample point.

Dataset B: public in-vivo real recordings HC1

HC1 is a publicly available in-vivo dataset, which contains the extracellular and intracellular signals from rat hippocampal neurons with silicon probes44. It is a widely used benchmark recorded with sparse electrodes22,31,38. We used the simultaneous intracellular recording as the label information of extracellular recording to obtain partial ground truth44. In a recent study, SpikeForest, a validation platform has evaluated the performance of ten major spike sorting toolboxes on HC145.

For all the datasets, raw data were filtered by a Butterworth bandpass filter (filter frequency band 300–3000 Hz), and the extracellular spikes were detected by double thresholding using Formula (15).

| 15 |

Since intracellular recording had little noise, single threshold detection was adopted to obtain intracellular action potentials. If the difference between the extracellular spike time and the intracellular peak time is within 0.3 ms, they are regarded as the same action potential. After analysis, we obtained some spikes in the extracellular recording, which corresponded to the action potentials in the intracellular recording. Thereafter we call them the marked spikes, and the rest spikes are called unmarked spikes. With regard to the typical dataset d533101 in HC1, d533101:6 contains the intracellular potential of a single neuron, while the dataset d533101:4 contains simultaneous waveforms of this single neuron as well as some other neurons. We detected 3000 extracellular spikes from extracellular recording (dataset d533101:4) and 849 intracellular action potentials from intracellular recording (dataset d533101:6). After alignment, 800 marked spikes in the extracellular recording corresponded to the action potential in the intracellular recording and were used as ground truth. The rest 2200 spikes are unmarked spikes.

Dataset C:in-vivo real recordings from a non-human primate

We also compared the performance of spike sorting algorithms on in-vivo recordings from a macaque performing a center-out task in a previous study46. All methods involved in the experiment on macaques are reported in accordance with the ARRIVE guidelines. In brief, the 96-channel intracortical microelectrode array (Blackrock Microsystems, US) was chronically implanted in the primary motor cortex (M1) of a male rhesus monkey (Macaca mulatta). The monkey took roughly a week to recover from the surgery, after which the in-vivo neural signals were recorded through the Cerebus multichannel data acquisition system (Blackrock Microsystems, US) at a sample rate of 30 kHz. Testing sets were obtained from 30 stable channels by measuring the stationarity of spike waveforms and the interspike interval (ISI) distribution. All experimental procedures involving animal models described in this study were approved by the Animal Care Committee of Zhejiang University.

Performance measure metrics

One of the performance measure metrics is the sorting accuracy which is the percentage of the detected spikes labeled correctly. For sample set , the accuracy of classification algorithm is defined as the ratio of the number of spikes correctly classified to the total number of spikes used for classification. The calculation is shown in formula (16):

| 16 |

Another metric is DBI40 that does not require prior information of clusters. DBI calculates the worst-case separation of each cluster and takes the mean value, as shown in Formula (17).

| 17 |

denotes the number of clusters. denotes the similarity between the clusters for quantitatively evaluating cluster quality. A small DBI index indicates a high quality of clustering.

To evaluate algorithm performance on real dataset HC1 with partial ground truth45, we considered it as a binary classification problem. The classification results were divided into four cases: True Positive (TP), False Positive (FP), True Negative (TN), and False Negative (FN). We evaluated the performance of the algorithm in terms of precision rate and recall rate, as shown in Formula (18) and Formula (19), referring to the validation platform SpikeForest45.

| 18 |

| 19 |

Ethics

All surgical and experimental procedures conformed to the Guide for The Care and Use of Laboratory Animals (China Ministry of Health) and were approved by the Animal Care Committee of Zhejiang University, China (No. ZJU20160353).

Results

In this study, our LDA-DP algorithm was compared with five typical spike sorting methods on one simulated dataset and two real datasets concerning several performance measure metrics. For comparison, we choose the algorithm LDA-GMM22, which has the same feature extraction method as LDA-DP, and choose the algorithm PCA-DP, which has the same clustering method. Then we select two classic and widely-used spike sorting algorithms, PCA-Km13 and LE-Km27, along with a recently proposed algorithm GMMsort31. SpikeForest has compared the performance of ten spike sorting methods on several neural datasets from both high-density probes (like neuropixel) and sparse probes (like classical tetrodes or microwire arrays). IronClust outperforms the other 9 methods on Dataset B47. Thus we quoted these results and compared them with our LDA-DP and the above-mentioned 5 algorithms. These algorithms are all unsupervised and automated, except that GMMsort needs some manual operation in the last step of clustering. In comparison, the feature subspace dimension was fixed as 5 for GMMsort31 by default and 3 for the rest algorithms13,22,27.

Performance comparison in the simulated Dataset A

A prominent feature extraction method can find the low-dimensional feature subspace with a high degree of differentiation, which is the basis of the high performance of the whole algorithm. Thus, we compared the robustness of different feature extraction methods.

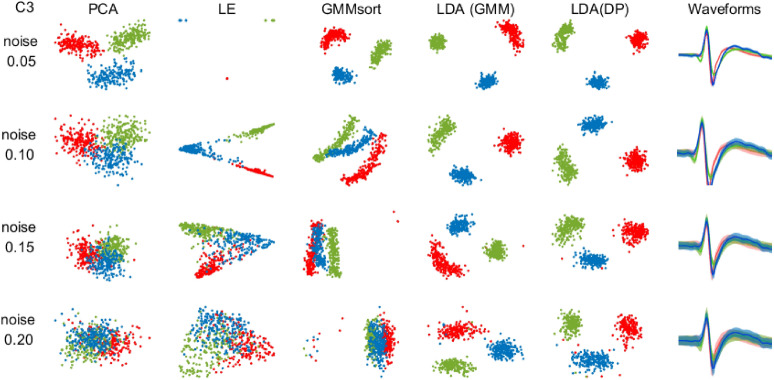

As the noise level or waveform similarity increases, the feature points of different clusters will gradually get closer in the feature subspace, the inter-class distance will decrease, and the boundary will be blurred, increasing classification difficulty. Thus, we chose the testing set C3 with high waveform similarity as the testing set to compare the performance and the noise resistance for five feature extraction methods (PCA-KM and PCA-DP used the same feature extraction method PCA).

When the noise level increases, the standard deviation of each waveform template increases (Waveforms column in Fig. 3), bringing difficulties to feature extraction. In this case, feature points extracted by the LDA method in LDA-DP and LDA-GMM are clustered separately, while in the contrast, feature points from the rest three methods are overlapped to some degree. Even under the worst condition when the noise level rises to 0.20, the proposed LDA-DP algorithm has the least overlapped feature points among the five methods (see Supplementary Fig. S2 for feature subspace during the iteration process). It notes that the feature extracted by the LDA-DP algorithm has high robustness to noise and waveform similarity.

Figure 3.

Two-dimensional feature subspace for algorithms on dataset C3 under four noise levels. For comparing the feature extraction capability of each algorithm, the data points were colored according to the ground truth. Red, green and blue dots represent the features extracted from three clusters, respectively. The last column shows the average spike waveforms of three clusters obtained by LDA-DP, and the shaded part represents the standard deviation.

We examined the performance of the 6 algorithms (PCA-Km, LE-Km, PCA-DP, LDA-GMM, GMMsort, and LDA-DP) on the Dataset A, excluding the overlapping spikes. In order to compare the robustness of each algorithm, two performance metrics were employed in this study: sorting accuracy and cluster quality. For each testing set, fivefold cross-validation was performed. For PCA-Km and LE-Km, the number of clusters was set to 3; And for PCA-DP, LDA-GMM, GMMsort, and LDA-DP, the number of clusters can be determined automatically.

Table 1 presents the average and the standard deviation (std) of the sorting accuracy. It is worth noting that the average sorting accuracy of LDA-DP on most of the testing sets is higher than that of the other methods. At the same time, LDA-DP also achieves a lower standard deviation of the average accuracy on most of the testing sets.

Table 1.

Average sorting accuracy percentage on the Dataset A (simulated dataset ) (with no overlapping spikes) for 6 algorithms.

| Dataset | PCA-Km | LE-Km | PCA-DP | GMMsort | LDA-GMM | LDA-DP |

|---|---|---|---|---|---|---|

| C1_005 | 100 (0.0) | 100 (0.0) | 100 (0.0) | 100 (0.0) | 100 (0.0) | 100 (0.0) |

| C1_010 | 100 (0.0) | 100 (0.0) | 100 (0.0) | 99.9 (0.2) | 100 (0.0) | 100 (0.0) |

| C1_015 | 99.9 (0.1) | 99.8 (0.1) | 99.9 (0.1) | 99.9 (0.1) | 99.9 (0.1) | 99.9 (0.1) |

| C1_020 | 99.4 (0.3) | 98.8 (0.6) | 99.2 (0.4) | 97.5 (2.3) | 92.9 (10.3) | 99.4 (0.3) |

| C1_025 | 97.6 (0.2) | 96.7 (0.6) | 97.2 (0.3) | 96.5 (0.9) | 91.7 (12.6) | 97.7 (1.0) |

| C1_030 | 93.8 (1.5) | 81.8 (22.9) | 81.7 (21.0) | 92.0 (2.9) | 97.5 (1.0) | 96.4 (2.3) |

| C1_035 | 66.9 (22.7) | 78.6 (19.7) | 76.1 (11.9) | 76.7 (15.8) | 93.3 (2.3) | 94.9 (3.2) |

| C1_040 | 62.5 (21.7) | 79.8 (13.6) | 67.3 (12.8) | 69.7 (12.4) | 83.3 (16.6) | 90.7 (8.5) |

| C2_005 | 100 (0.0) | 100 (0.0) | 100 (0.0) | 100 (0.0) | 100 (0.0) | 100 (0.0) |

| C2_010 | 98.2 (0.6) | 99.9 (0.1) | 98.2 (0.6) | 81.6 (16.7) | 86.8 (16.2) | 93.2 (13.5) |

| C2_015 | 87.5 (1.2) | 96.4 (0.8) | 79.5 (9.6) | 66.3 (17.3) | 85.4 (18.0) | 86.3 (16.7) |

| C2_020 | 72.9 (1.1) | 82.3 (1.6) | 36.8 (4.9) | 61.9 (5.2) | 96.5 (6.4) | 97.0 (5.5) |

| C3_005 | 99.6 (0.3) | 100 (0.0) | 100 (0.0) | 93.4 (13.1) | 96.2 (7.5) | 100 (0.0) |

| C3_010 | 89.9 (0.9) | 97.2 (0.9) | 73.9 (12.4) | 77.2 (14.2) | 95.6 (8.8) | 91.8 (11.7) |

| C3_015 | 76.3 (2.1) | 85.0 (0.8) | 42.3 (9.7) | 80.9 (12.2) | 93.2 (12.7) | 99.6 (0.3) |

| C3_020 | 55.7 (9.3) | 62.6 (6.9) | 37.2 (6.5) | 43.9 (8.5) | 70.3 (29.1) | 91.1 (11.0) |

| C4_005 | 99.6 (0.4) | 100 (0.0) | 99.8 (0.1) | 100 (0.0) | 100 (0.0) | 100 (0.0) |

| C4_010 | 94.1 (1.0) | 97.2 (0.7) | 73.1 (10.7) | 94.5 (7.7) | 96.5 (7.0) | 99.8 (0.3) |

| C4_015 | 63.4 (19.6) | 68.2 (22.7) | 65.7 (4.5) | 68.7 (8.1) | 96.5 (5.9) | 96.9 (1.2) |

| C4_020 | 50.9 (15.0) | 58.6 (4.9) | 43.5 (10.8) | 49.0 (15.0) | 90.3 (13.3) | 88.4 (4.9) |

| Average | 85.4 (5.4) | 89.1 (5.4) | 78.5 (6.5) | 82.4 (8.5) | 93.3 (9.3) | 96.2 (4.5) |

The standard deviation of the accuracy is in parenthesis. The bold number represents the best performance in each testing set.

In the testing set C1 whose classification difficulty is low, most algorithms achieve high accuracy. As the noise level rises to 0.40, the sorting accuracy of the rest five algorithms drops below 90%, but the accuracy of LDA-DP is still up to 90.7%. Moreover, in the testing set C2, C3 and C4, when both the waveform similarity and the noise level increase, only LDA-DP and LDA-GMM can maintain high accuracy relatively. The comparative results indicate that these two algorithms have a great power to distinguish waveforms and are highly resistant to noise. LDA-DP is especially outstanding because it maintains a higher sorting accuracy steadily (> 85%). On average, the mean accuracy of LDA-DP reaches 96.2%, which is the highest in all 6 algorithms. At the same time, LDA-DP achieves the lowest mean standard deviation (std = 4.5).

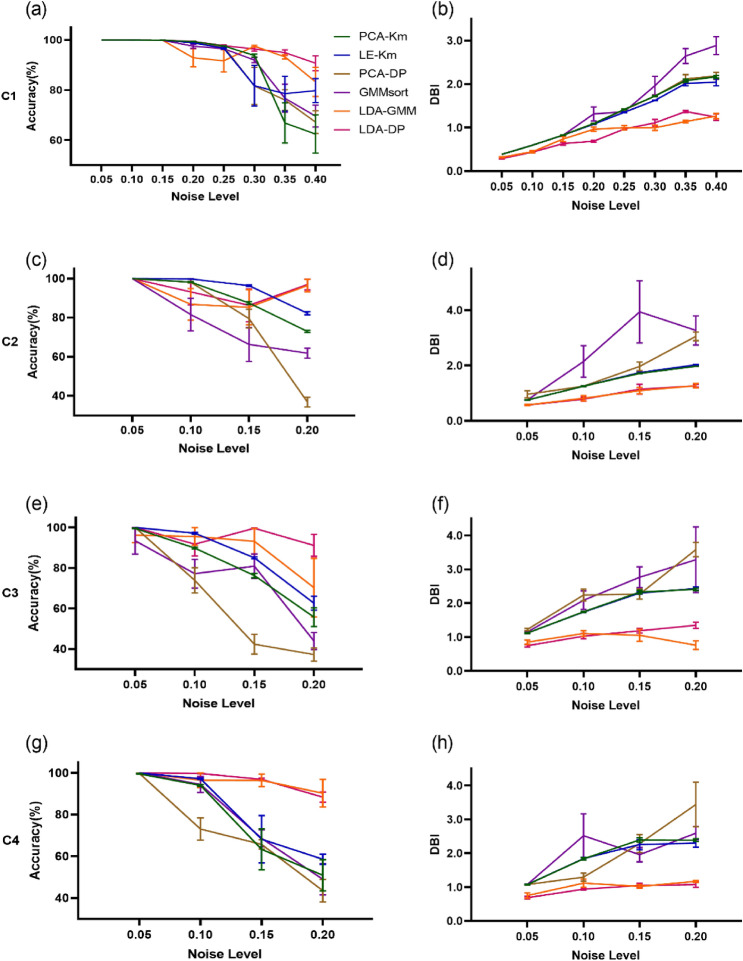

To further visualize the robustness of each algorithm concerning noise, we plotted the changing curve of performance with four or eight noise levels on 4 simulated datasets (C1, C2, C3 and C4). Figure 4a,c,e,g show the accuracy curve, while Fig. 4b,d,f,h show the DBI curve. In Fig. 4, as the noise level increases, the performance of all algorithms drops (The sorting accuracy decreases and the DBIs increase). When the noise level is low, all of the 6 algorithms get high accuracy and low DBI. The gaps between algorithms are not obvious. However, when the noise levels increase, the performance of PCA-Km, LE-Km, PCA-DP and GMMsort deteriorates. And in most cases, LDA-DP performs better than LDA-GMM. In all simulated data, LDA-DP displays a high level of performance: the sorting accuracy rate is above 85%, and the DBI is below 1.5, which is generally superior to other algorithms and shows high robustness to noise.

Figure 4.

The sorting performance of 6 spike sorting algorithms on Dataset A concerning noise levels. (a) We compared sorting accuracy of PCA-Km, LE-Km, PCA-DP, LDA-GMM, GMMsort and LDA-DP with respect to noise on the testing set C1 (noise level: 0.05, 0.1, 0.15, 0.2, 0.25, 0.3, 0.35, 0.4). Error bars represent s.e.m.. (b) DBI comparison concerning noise on the testing set C1. The evaluation was performed as in a. (c–h) Sorting accuracy and DBI concerning noise on the testing set C2, C3 and C4 respectively.

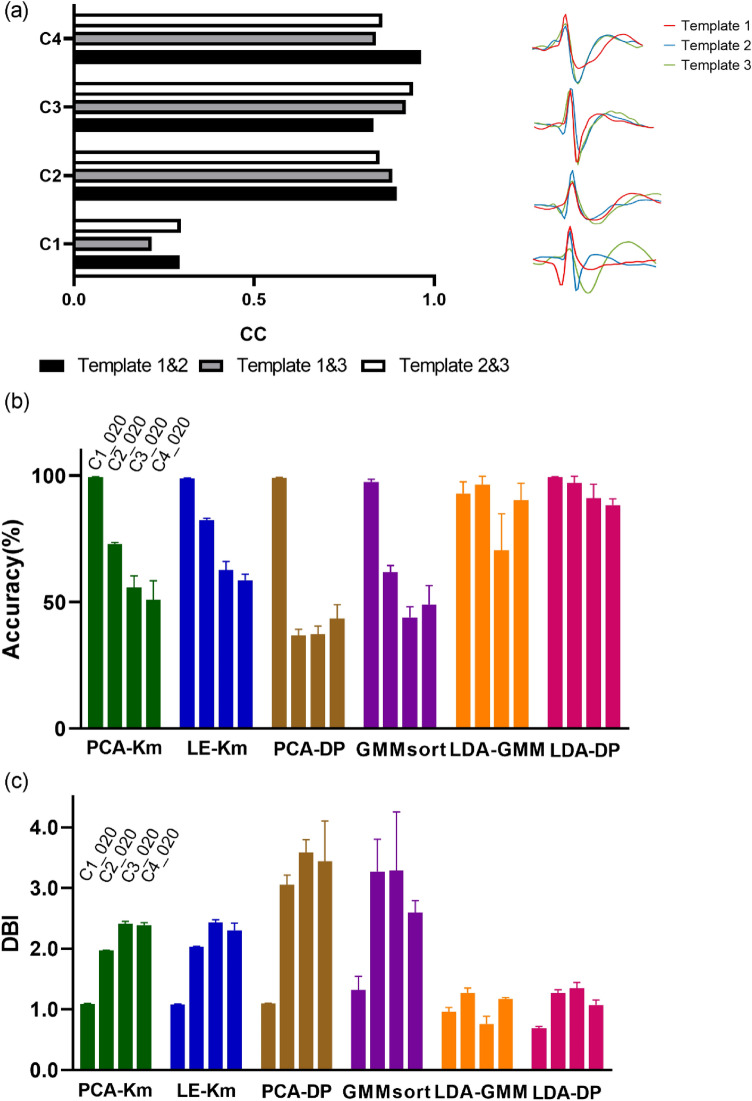

We also compared the robustness concerning waveform similarity. In the right side of Fig. 5a, the shapes of the three waveform templates were plotted for four testing sets. The correlation coefficients (CC) of the three templates were used to measure the similarity level in each testing set (Fig. 5a left). The results indicate that the waveform similarity in C2, C3 and C4 is significantly higher than that in C1 (Paired t test, p < 0.01), thus the classification of C2, C3 and C4 is relatively more difficult. In order to intuitively show the algorithm performance differences, we chose to plot the accuracy and DBIs of 6 algorithms on the four testing sets: C1_020, C2_020, C3_020 and C4_020, in which the waveform similarity is diverse and the noise level remains the same (Fig. 5b,c). In Fig. 5b, LDA-DP is superior to the other algorithms in terms of sorting accuracy. In the case of high waveform similarity, the accuracy of other algorithms fluctuates somewhat, while the accuracy of LDA-DP still maintained at a high level. For the DBIs (Fig. 5c), the cluster quality of LDA-DP is also promising.

Figure 5.

The sorting performance of 6 spike sorting algorithms concerning waveform similarity using the testing set C1_020, C2_020, C3_020 and C4_020. (a) Comparing the similarity of waveform templates in four testing sets. Left: bar graph of the correlation coefficient (CC) of the three waveform templates for four datasets. Black, gray and white respectively represent CC of template 1 and template 2, CC of template 1 and template 3, and CC of template 2 and template 3. Right: Waveform templates of each testing set. Red, blue and green represent template 1, template 2 and template 3, respectively. (b) Bar graph of sorting accuracy of 6 spike sorting algorithms on the testing set C1_020, C2_020, C3_020 and C4_020. Error bars represent s.e.m. (c) Bar graph of DBI of 6 spike sorting algorithms on the testing set C1_020, C2_020, C3_020 and C4_020. Error bars represent s.e.m.

Performance comparison in the in-vivo Dataset B

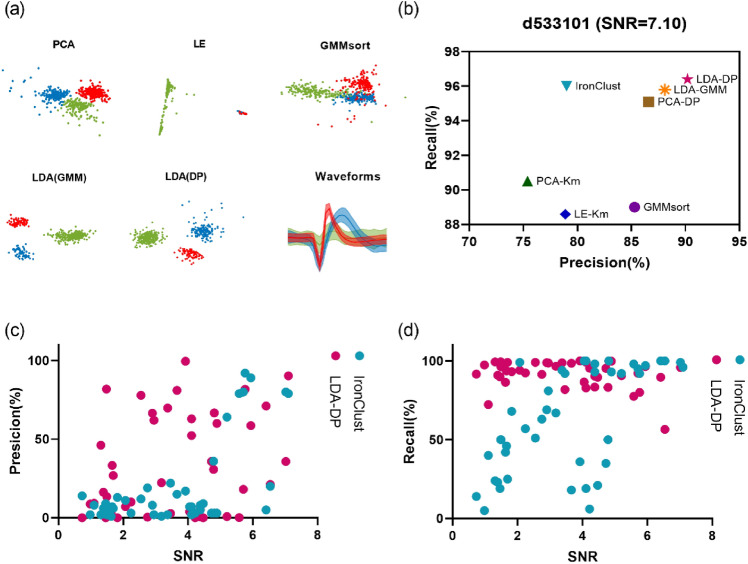

To further evaluate the performance of our algorithm on in-vivo datasets, we compared the performance of LDA-DP and the above 5 algorithms on Dataset B. For PCA-Km and LE-Km, the number of clusters was manually set to 3; And for PCA-DP, LDA-GMM, GMMsort, and LDA-DP, the number of clusters can be determined automatically. Firstly, one dataset d533101 in Dataset B, which was widely adopted in previous studies22,31,38, was chosen for illustration. Figure 6a shows the two-dimensional feature subspace extracted by each method. Data points are grouped into three clusters in the subspace and the waveform panel shows the average spike waveforms of the three clusters obtained by LDA-DP. The figure suggests that the LDA method successfully extracts optimal feature subspace, benefiting from the credible feedback of the clustering method (DP) through several iterations. The three clusters are much more distinct in the subspace of LDA (GMM) and LDA (DP) than in other methods.

Figure 6.

The sorting results on the in-vivo Dataset B when comparing 6 spike sorting algorithms. (a) Two-dimensional feature subspace extracted by each method. The data points are colored according to the sorting results, in which red, green and blue represent cluster 1, cluster 2 and cluster 3 respectively. The waveform panel shows the average spike waveforms of three clusters obtained by LDA-DP, and the shaded part represents the standard deviation. (b) The precision rate and the recall rate obtained according to partial ground truth on dataset d533101 in HC1. (c,d) The precision rate and recall rate comparison with regard to SNR on all the 43 datasets in Dataset B.

According to the partial ground truth, we analyzed the classification results of algorithms by evaluating the precision rate and the recall rate on the d533101 (SNR = 7.10). Figure 6b shows the classification results of 6 algorithms, along with one outstanding spike sorting software package, IronClust47, which is benchmarked by the SpikeForest45. Compared with other methods, LDA-DP has a maximum precision rate, as well as a maximum recall rate. Although IronClust is a density-based sorter and shows the top average precision and recall rate among the 10 toolboxes validated in SpikeForest, its precision rate on the sparse recording is inferior to some algorithms developed for sparse neural recording.

For further performance evaluation, we compared LDA-DP and IronClust on all the 43 datasets in Dataset B, with regard to signal-to-noise ratio (SNR). In Fig. 6c, LDA-DP has the higher precision rate on 25 datasets among all 43 datasets. Moreover, under a lower SNR (SNR < 4.0), the precision of LDA-DP is higher on 17 datasets out of 23 datasets. In Fig. 6d, LDA-DP has the higher recall rate on 28 datasets among all 43 datasets. Especially, under the lower SNR (SNR < 4.0), the recall rate of LDA-DP is higher on 21 datasets out of 23 datasets, demonstrating robustness convincingly. The results show that the state-of-art high-density spike sorters are still inferior to the specialized sparse algorithm, such as LDA-DP, in the face of sparse electrode data. Our results indicate that LDA-DP also outperforms other algorithms on Dataset B.

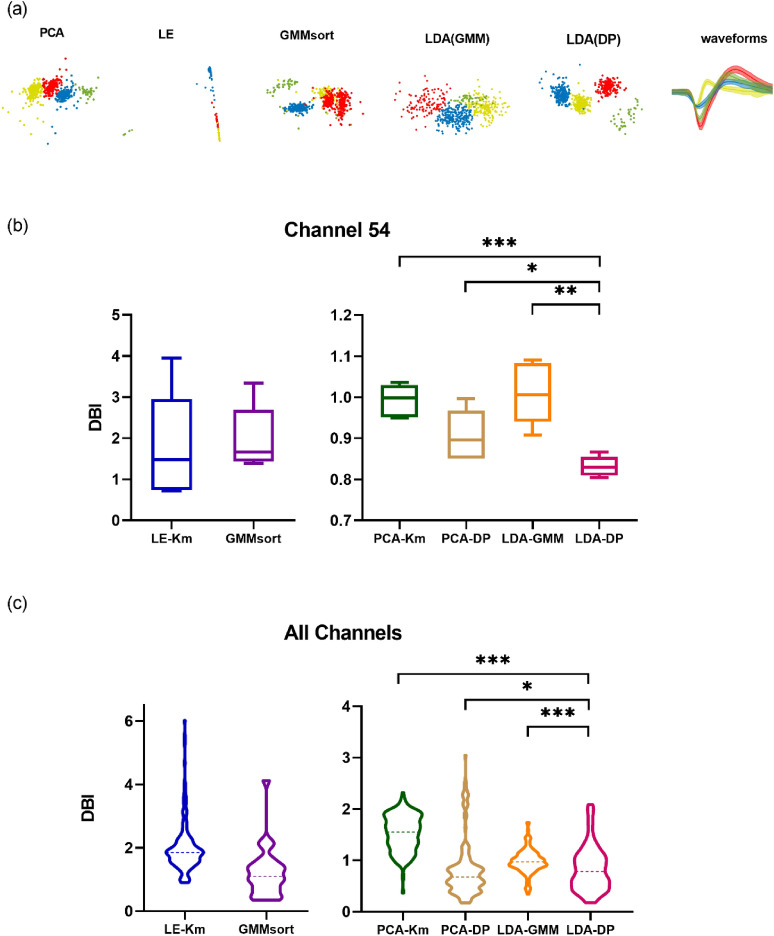

Performance comparison in the in-vivo Dataset C

In order to test the robustness of the algorithm in the real case with more complex distribution characteristics, we also compared the performance of 6 algorithms on the in-vivo Dataset C (Fig. 7 and Supplementary Fig. S3). In particular, as shown in Fig. 7a, spikes from one typical channel 54 in dataset C present a more messy distribution in the two-dimensional feature subspaces extracted by each method. The data points are colored by the sorting results and are grouped into four clusters. The Waveforms column shows the shapes of the four spikes due to the sorting results of LDA-DP. It indicates that these four spikes have highly similar shapes. The above complication may pose huge challenges to feature extraction. As Fig. 7a suggests, features extracted by LDA(DP) are apparently more separable than all other methods, leading the subsequent clustering to be more accurate. We notice that the data points show nonspherical-distributed in the LE subspace. Since clustering methods, such as K-means, have poor performance in identifying the clusters of nonspherical distribution, LE-Km may encounter difficulties in clustering features. We further compared the cluster quality of 6 algorithms using the spikes in this channel. For PCA-Km and LE-Km, the number of clusters was manually set to 4; And for PCA-DP, LDA-GMM, GMMsort, and LDA-DP, the number of clusters can be determined automatically. In Fig. 7b, the DBI index of LDA-DP is significantly lower than those of other algorithms (*p < 0.05, **p < 0.01, Kruskal–Wallis test), indicating that LDA has higher cluster quality and better performance than other algorithms. Moreover, we conducted a comparison on all 30 channels in Dataset C. The results are presented in Fig. 7c, the median of the DBI index for the LDA-DP is lower. Although the performance of PCA-DP and LDA-DP is similar in median value, in one channel with SNR = 2.82, the DBI of LDA-DP is 1.56, while for PCA-DP is 3.05 in one of the folds of cross-validation. The PCA-DP has some high DBIs, which are up to 3, indicating bad clustering. Our LDA-DP presented a more stable performance (the std of PCA-DP is 0.54, while the std of LDA-DP is 0.43). In general, LDA-DP has a significantly higher cluster quality (*p < 0.05, ***p < 0.001, Kruskal–Wallis test). Thus, LDA-DP also demonstrates outstanding robustness advantages on Dataset C, which is consistent with the results from the previous two datasets.

Figure 7.

The sorting results on Dataset C when comparing 6 spike sorting algorithms. (a) Two-dimensional feature subspace extracted by each method. The data points are colored according to the sorting results, in which red, green, yellow and blue represent cluster 1, cluster 2, cluster 3 and cluster 4 respectively. The last column shows the average spike waveforms of four clusters obtained by LDA-DP, and the shaded part represents the standard deviation. (b) Comparison of cluster quality using spikes from one particular channel 54 in Dataset C. We conducted a fivefold cross-validation and draw boxplots of the DBI index for 6 algorithms. *p < 0.05, **p < 0.01, ***p < 0.001, Kruskal–Wallis test. (c) Comparison of cluster quality using spikes from all 30 channels in Dataset C. We conducted a fivefold cross-validation for each channel and then drew the violin plot. The dashed lines represent the median of the DBI. *p < 0.05, ***p < 0.001, Kruskal–Wallis test.

Discussion

In this study, our proposed LDA-DP competed with six algorithms on both simulated and real datasets. The LDA-DP exhibits high robustness on both simulated and real datasets. For the simulated dataset wave_clus19, the LDA-DP maintains an outstanding sorting accuracy and cluster quality, indicating high robustness to noise and waveform similarity. And on the real dataset HC144, the comparison further illustrates the robustness of LDA-DP under low SNR. We finally evaluated the algorithm on real data from non-human primates46. The LDA-DP presents a more stable performance, especially under low SNR. Therefore, the performance of LDA-DP also exceeds other algorithms when facing more complex data distributions.

In this study, the performance of LDA-DP and LDA-GMM is significantly better than the other 4 algorithms (Figs. 4, 5, 6, 7). We can see the gap between LDAs and non-LDAs, for example, LDA-DP and PCA-DP. It is probably because the LDAs are supervised methods while the other methods are unsupervised. Through multiple iterations, LDA finds the optimal feature subspace based on the feedback provided by the clustering method, while unsupervised methods get no feedback. Therefore, the advantages of LDA-DP and LDA-GMM benefit from the combination of the feature extraction method LDA and the cluster method (DP or GMM). This kind of advantage might not be obvious in some cases when SNR is high, but the gap between the LDAs and the non-LDAs is significant when under low SNR (Fig. 7c). Our results are consistent with previous studies22,24.

Although two LDAs have high performance, LDA-DP has a better performance than LDA-GMM (Figs. 6, 7) on in-vivo datasets. Due to the joint optimization framework, the feature extraction method LDA also benefits from an outstanding clustering method. Since DP does not make any assumption about data distribution, the DP39 method has more advantages when processing real data with more complex characteristics. GMM48, as its name implies, assumes that data follows the Mixture Gaussian Distribution. This sort of fitting often encounters difficulties in dealing with more complex situations, where real data are not perfectly Gaussian distributed. Several studies in other fields have encountered similar problems49–53.

Additionally, some classic clustering methods, such as K-means, specify the cluster centers and then assign each point to the nearest cluster center28. Thus, this kind of methods perform poorly when applied to nonspherical data. In the contrast, the DP algorithm is based on the assumption that the cluster center is surrounded by points with lower density than it, and the cluster centers are relatively far apart. According to this assumption, DP identifies cluster centers and assigns cluster labels to the rest points. Therefore, it can well deal with the distribution of nonspherical data. This is one of the potential reasons for the outstanding performance of LDA-DP.

It is worth noting that LDA-DP is an automated algorithm. Although the values of some parameters may affect the final results, we can still preset some optimized values to avoid manual intervention during operation. For example, in this study, we fixed the threshold coefficient as 1.6 and verified its high performance on one simulation dataset and two in-vivo real datasets. Although whether this optimal value fits all datasets needs to be tested and evaluated with more data, the current evaluation has fully demonstrated the general applicability of this value.

Most methods compared in our study were employed to sort the spike data from a single microelectrode. These spike data can be collected through sparse probes such as Utah arrays and microwire arrays that are widely used in neuroscience and have advantages in the stability of long-term recording. Thus our algorithm cannot be verified on the data from high-density electrodes. The comparison with IronClust is a supplement to the evaluations. The results show that the state-of-art high-density spike sorters are still not as good as the specialized sparse sorters when facing the data from sparse electrodes. As a supplement, we also compared the LDA-DP with 4 state-of-art high-density algorithms in SpikeForest (Supplementary Fig. S6). The comparison results further illustrated this point. Actually, it is not the main issue of this paper. In the future, we will try to swap out the clustering steps in high-density spike sorters such as Kilosort54 or Mountainsort10 with DP to see whether the DP would bring an accuracy improvement in the neural recordings from high-density probes. Moreover, The advantages of our LDA-DP lie in its high robustness. For some cases that may cause waveform deformation, such as electrode drifts and spike overlapping, the study did not propose a novel solution yet. It is also the target improvement of the algorithm in the future.

Conclusion

By combining LDA and DP to construct a joint optimization framework, we proposed an automated spike sorting algorithm and found it is highly robust to noise. Based on the iteration of LDA and DP, the algorithm makes the feature extraction and clustering benefit from each other, continuously improving the differentiation of feature subspace and finally achieves high spike sorting performance. After evaluation on both simulated and in-vivo datasets, we demonstrate that the LDA-DP meets the requirements of high robustness for sparse spikes in the cortical recordings.

Supplementary Information

Acknowledgements

The study was supported by the National Key R&D Program of China (2022ZD0208600, 2017YFE0195500, 2021YFF1200805), the Key Research & Development Program for Zhejiang (2021C03003, 2022C03029, 2021C03050), the National Natural Science Foundation of China (31371001).

Author contributions

Y.Z. and J.H. contributed equally to this work. Y.Z., J.H. and S.Z. conceived and designed the experiment. Y.Z., J.H. and T.L. conducted the experiment. Y.Z.and Z.Y. analysed the results. Y.Z. drafted the manuscript. Y.Z., J.H. and S.Z. commented and revised the manuscript. W.C. supervised the project. All authors reviewed the manuscript.

Data availability

The code of the LDA-DP used in the current study is available at https://github.com/EveyZhang/LDA-DP.

Competing interests

The authors declare no competing interests.

Footnotes

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

The online version contains supplementary material available at 10.1038/s41598-022-19771-8.

References

- 1.Quiroga RQ. Spike sorting. Curr. Biol. 2012;22:R45–R46. doi: 10.1016/j.cub.2011.11.005. [DOI] [PubMed] [Google Scholar]

- 2.Ganguly K, Dimitrov DF, Wallis JD, Carmena JM. Reversible large-scale modification of cortical networks during neuroprosthetic control. Nat. Neurosci. 2011;14:662–667. doi: 10.1038/nn.2797. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Quiroga RQ. Concept cells: The building blocks of declarative memory functions. Nat. Rev. Neurosci. 2012;13:587–597. doi: 10.1038/nrn3251. [DOI] [PubMed] [Google Scholar]

- 4.Rey HG, Ison MJ, Pedreira C, Valentin A, Alarcon G, Selway R, Richardson MP, Quian Quiroga R. Single-cell recordings in the human medial temporal lobe. J. Anat. 2015;227:394–408. doi: 10.1111/joa.12228. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Todorova S, Sadtler P, Batista A, Chase S, Ventura V. To sort or not to sort: The impact of spike-sorting on neural decoding performance. J. Neural Eng. 2014;11:056005. doi: 10.1088/1741-2560/11/5/056005. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Buzsáki G. Large-scale recording of neuronal ensembles. Nat. Neurosci. 2004;7:446–451. doi: 10.1038/nn1233. [DOI] [PubMed] [Google Scholar]

- 7.Gibson S, Judy JW, Marković D. Spike sorting: The first step in decoding the brain: The first step in decoding the brain. IEEE Signal Process. Mag. 2011;29:124–143. doi: 10.1109/MSP.2011.941880. [DOI] [Google Scholar]

- 8.Pillow JW, Shlens J, Chichilnisky EJ, Simoncelli EP. A model-based spike sorting algorithm for removing correlation artifacts in multi-neuron recordings. PLoS ONE. 2013;8:e62123. doi: 10.1371/journal.pone.0062123. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Bestel R, Daus AW, Thielemann C. A novel automated spike sorting algorithm with adaptable feature extraction. J. Neurosci. Methods. 2012;211:168–178. doi: 10.1016/j.jneumeth.2012.08.015. [DOI] [PubMed] [Google Scholar]

- 10.Chung JE, Magland JF, Barnett AH, Tolosa VM, Tooker AC, Lee KY, Shah KG, Felix SH, Frank LM, Greengard LF. A fully automated approach to spike sorting. Neuron. 2017;95:1381–1394. doi: 10.1016/j.neuron.2017.08.030. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Wang, P. A Real-Time Neural Spike Sorting System and Its Application on Neural Decoding. Doctoral dissertation (University of Macau, 2021).

- 12.Hennig MH, Hurwitz C, Sorbaro M. Scaling spike detection and sorting for next-generation electrophysiology. In vitro Neuronal Netw. 2019;22:171–184. doi: 10.1007/978-3-030-11135-9_7. [DOI] [PubMed] [Google Scholar]

- 13.Lewicki MS. A review of methods for spike sorting: The detection and classification of neural action potentials. Netw. Comput. Neural Syst. 1998;9:R53. doi: 10.1088/0954-898X_9_4_001. [DOI] [PubMed] [Google Scholar]

- 14.Kamboh AM, Mason AJ. Computationally efficient neural feature extraction for spike sorting in implantable high-density recording systems. IEEE Trans. Neural Syst. Rehabil. Eng. 2012;21:1–9. doi: 10.1109/TNSRE.2012.2211036. [DOI] [PubMed] [Google Scholar]

- 15.Paraskevopoulou SE, Barsakcioglu DY, Saberi MR, Eftekhar A, Constandinou TG. Feature extraction using first and second derivative extrema (FSDE) for real-time and hardware-efficient spike sorting. J. Neurosci. Methods. 2013;215:29–37. doi: 10.1016/j.jneumeth.2013.01.012. [DOI] [PubMed] [Google Scholar]

- 16.Abeles M, Goldstein MH. Multispike train analysis. Proc. IEEE. 1977;65:762–773. doi: 10.1109/PROC.1977.10559. [DOI] [Google Scholar]

- 17.Chan HL, Wu T, Lee ST, Lin MA, He SM, Chao PK, Tsai YT. Unsupervised wavelet-based spike sorting with dynamic codebook searching and replenishment. Neurocomputing. 2010;73:1513–1527. doi: 10.1016/j.neucom.2009.11.006. [DOI] [Google Scholar]

- 18.Lieb F, Stark HG, Thielemann C. A stationary wavelet transform and a time-frequency based spike detection algorithm for extracellular recorded data. J. Neural Eng. 2017;14:036013. doi: 10.1088/1741-2552/aa654b. [DOI] [PubMed] [Google Scholar]

- 19.Quiroga RQ, Nadasdy Z, Ben-Shaul Y. Unsupervised spike detection and sorting with wavelets and superparamagnetic clustering. Neural Comput. 2004;16:1661–1687. doi: 10.1162/089976604774201631. [DOI] [PubMed] [Google Scholar]

- 20.Li Z, Wang Y, Zhang N, Li X. An accurate and robust method for spike sorting based on convolutional neural networks. Brain Sci. 2020;10:835. doi: 10.3390/brainsci10110835. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 21.Eom J, Park IY, Kim S, Jang H, Park S, Huh Y, Hwang D. Deep-learned spike representations and sorting via an ensemble of auto-encoders. Neural Netw. 2021;134:131–142. doi: 10.1016/j.neunet.2020.11.009. [DOI] [PubMed] [Google Scholar]

- 22.Keshtkaran MR, Yang Z. Noise-robust unsupervised spike sorting based on discriminative subspace learning with outlier handling. J. Neural Eng. 2017;14:036003. doi: 10.1088/1741-2552/aa6089. [DOI] [PubMed] [Google Scholar]

- 23.Fisher RA. The use of multiple measurements in taxonomic problems. Ann. Eugen. 1936;7:179–188. doi: 10.1111/j.1469-1809.1936.tb02137.x. [DOI] [Google Scholar]

- 24.Ding, C. & Li, T. Adaptive dimension reduction using discriminant analysis and k-means clustering. In Proceedings of the 24th international conference on Machine learning (2007).

- 25.Keshtkaran, M. R. & Yang, Z. Unsupervised spike sorting based on discriminative subspace learning. In 2014 36th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (IEEE, 2014). [DOI] [PubMed]

- 26.Gray CM, Maldonado PE, Wilson M, McNaughton B. Tetrodes markedly improve the reliability and yield of multiple single-unit isolation from multi-unit recordings in cat striate cortex. J. Neurosci. Methods. 1995;63:43–54. doi: 10.1016/0165-0270(95)00085-2. [DOI] [PubMed] [Google Scholar]

- 27.Chah E, Hok V, Della-Chiesa A, Miller JJH, O'Mara SM, Reilly RB. Automated spike sorting algorithm based on Laplacian eigenmaps and k-means clustering. J. Neural Eng. 2011;8:016006. doi: 10.1088/1741-2560/8/1/016006. [DOI] [PubMed] [Google Scholar]

- 28.Jain AK. Data clustering: 50 years beyond K-means. Pattern Recogn. Lett. 2010;31:651–666. doi: 10.1016/j.patrec.2009.09.011. [DOI] [Google Scholar]

- 29.Harris KD, Henze DA, Csicsvari J, Hirase H, Buzsaki G. Accuracy of tetrode spike separation as determined by simultaneous intracellular and extracellular measurements. J. Neurophysiol. 2000;84:401–414. doi: 10.1152/jn.2000.84.1.401. [DOI] [PubMed] [Google Scholar]

- 30.Pouzat C, Mazor O, Laurent G. Using noise signature to optimize spike-sorting and to assess neuronal classification quality. J. Neurosci. Methods. 2002;122:43–57. doi: 10.1016/S0165-0270(02)00276-5. [DOI] [PubMed] [Google Scholar]

- 31.Souza BC, Lopes-dos-Santos V, Bacelo J, Tort AB. Spike sorting with Gaussian mixture models. Sci. Rep. 2019;9:1–14. doi: 10.1038/s41598-018-37186-2. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 32.Ott T, Kern A, Steeb WH, Stoop R. Sequential clustering: Tracking down the most natural clusters. J. Stat. Mech. Theory Exp. 2005;2005:P11014. doi: 10.1088/1742-5468/2005/11/P11014. [DOI] [Google Scholar]

- 33.Ding W, Yuan J. Spike sorting based on multi-class support vector machine with superposition resolution. Med. Biol. Eng. Comput. 2008;46:139–145. doi: 10.1007/s11517-007-0248-0. [DOI] [PubMed] [Google Scholar]

- 34.Shoham S, Fellows MR, Normann RA. Robust, automatic spike sorting using mixtures of multivariate t-distributions. J. Neurosci. Methods. 2003;127:111–122. doi: 10.1016/S0165-0270(03)00120-1. [DOI] [PubMed] [Google Scholar]

- 35.Fee MS, Mitra PP, Kleinfeld D. Automatic sorting of multiple unit neuronal signals in the presence of anisotropic and non-Gaussian variability. J. Neurosci. Methods. 1996;69:175–188. doi: 10.1016/S0165-0270(96)00050-7. [DOI] [PubMed] [Google Scholar]

- 36.Werner T, Vianello E, Bichler O, Garbin D, Cattaert D, Yvert B, De Salvo B, Perniola L. Spiking neural networks based on OxRAM synapses for real-time unsupervised spike sorting. Front. Neurosci. 2016;10:474. doi: 10.3389/fnins.2016.00474. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 37.Pathak, R., Dash, S., Mukhopadhyay, A. K., Basu, A. & Sharad, M. Low power implantable spike sorting scheme based on neuromorphic classifier with supervised training engine. In 2017 IEEE Computer Society Annual Symposium on VLSI (ISVLSI), 266–271 (IEEE, 2017).

- 38.Huang L, Gan L, Ling BWK. A unified optimization model of feature extraction and clustering for spike sorting. IEEE Trans. Neural Syst. Rehabil. Eng. 2021;29:750–759. doi: 10.1109/TNSRE.2021.3074162. [DOI] [PubMed] [Google Scholar]

- 39.Rodriguez A, Laio A. Clustering by fast search and find of density peaks. Science. 2014;344:1492–1496. doi: 10.1126/science.1242072. [DOI] [PubMed] [Google Scholar]

- 40.Davies DL, Bouldin DW. A cluster separation measure. IEEE Trans. Pattern Anal. Mach. Intell. 1979;2:224–227. doi: 10.1109/TPAMI.1979.4766909. [DOI] [PubMed] [Google Scholar]

- 41.Coelho, G. P., Barbante, C. C., Boccato, L., Attux, R. R., Oliveira, J. R. & Von Zuben, F. J. Automatic feature selection for BCI: an analysis using the davies-bouldin index and extreme learning machines. In The 2012 International Joint Conference on Neural Networks (IJCNN), 1–8 (IEEE, 2012).

- 42.Sato, T., Suzuki, T. & Mabuchi, K. Fast automatic template matching for spike sorting based on Davies-Bouldin validation indices. In 2007 29th Annual International Conference of the IEEE Engineering in Medicine and Biology Society, 3200–3203 (IEEE, 2007). [DOI] [PubMed]

- 43.Vesanto J, Alhoniemi E. Clustering of the self-organizing map. IEEE Trans. Neural Netw. 2000;11:586–600. doi: 10.1109/72.846731. [DOI] [PubMed] [Google Scholar]

- 44.Mizuseki, K., Diba, K., Pastalkova, E., Teeters, J., Sirota, A. & Buzsáki, G. Neurosharing: large-scale data sets (spike, LFP) recorded from the hippocampal-entorhinal system in behaving rats. F1000Research, 3 (2014). [DOI] [PMC free article] [PubMed]

- 45.Magland J, Jun JJ, Lovero E, Morley AJ, Hurwitz CL, Buccino AP, Garcia S, Barnett AH. SpikeForest, reproducible web-facing ground-truth validation of automated neural spike sorters. Elife. 2020;9:e55167. doi: 10.7554/eLife.55167. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 46.Zhang, Y., Wan, Z., Wan, G., Zheng, Q., Chen, W. & Zhang, S. Changes in modulation characteristics of neurons in different modes of motion control using brain-machine interface. In Annual International Conference of the IEEE Engineering in Medicine and Biology Society. IEEE Engineering in Medicine and Biology Society. Annual International Conference, 2021, 6445–6448 (2021). [DOI] [PubMed]

- 47.Jun, J. J., Mitelut, C., Lai, C., Gratiy, S. L., Anastassiou, C. A. & Harris, T. D. Real-time spike sorting platform for high-density extracellular probes with ground-truth validation and drift correction. Preprint at 10.1101/101030v2 (2017).

- 48.Anzai Y. Pattern Recognition and Machine Learning. Elsevier; 2012. [Google Scholar]

- 49.Haykin SS. Adaptive Filter Theory. Pearson Education India; 2008. [Google Scholar]

- 50.Julier, S. J. & Uhlmann, J. K. New extension of the Kalman filter to nonlinear systems. Signal processing, sensor fusion, and target recognition VI, Vol. 3068, 182–193 (International Society for Optics and Photonics, 1997.

- 51.Wan, E. A. & Van Der Merwe, R. The unscented Kalman filter for nonlinear estimation. In Proceedings of the IEEE 2000 Adaptive Systems for Signal Processing, Communications, and Control Symposium (Cat. No. 00EX373), 153–158 (IEEE, 2000).

- 52.Gomez-Uribe CA, Karrer B. The decoupled extended kalman filter for dynamic exponential-family factorization models. J. Mach. Learn. Res. 2021;22:5–1. [Google Scholar]

- 53.Xue Z, Zhang Y, Cheng C, Ma G. Remaining useful life prediction of lithium-ion batteries with adaptive unscented kalman filter and optimized support vector regression. Neurocomputing. 2020;376:95–102. doi: 10.1016/j.neucom.2019.09.074. [DOI] [Google Scholar]

- 54.Pachitariu M, Steinmetz NA, Kadir SN, Carandini M, Harris KD. Fast and accurate spike sorting of high-channel count probes with KiloSort. Adv. Neural Inf. Process. Syst. 2016;29:4448–4456. [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.

Supplementary Materials

Data Availability Statement

The code of the LDA-DP used in the current study is available at https://github.com/EveyZhang/LDA-DP.