Significance

Linking observed states of neural circuits to beliefs, actions, and plans is a core goal of systems neuroscience, but doing so presents substantial challenges for experimental design, analysis, and theory. Particularly in the domain of delay tasks, the representational logic of neural ensembles has been widely debated. Using a delay task that allows independent control of computational demands on a trial-wise basis, we identify a putative delay representation that reflects plans regarding upcoming expected stimuli. Contingency representations enable efficient mappings to responses for a variety of disparate delay tasks. In task-optimized neural networks, we explore how contingency representations relate to observed phenomena from the neurophysiological working memory literature. Lastly, we demonstrate that human behavior is consistent with contingency representations.

Keywords: working memory, computational model, neural network, representational geometry

Abstract

Real-world tasks require coordination of working memory, decision-making, and planning, yet these cognitive functions have disproportionately been studied as independent modular processes in the brain. Here, we propose that contingency representations, defined as mappings for how future behaviors depend on upcoming events, can unify working memory and planning computations. We designed a task capable of disambiguating distinct types of representations. In task-optimized recurrent neural networks, we investigated possible circuit mechanisms for contingency representations and found that these representations can explain neurophysiological observations from the prefrontal cortex during working memory tasks. Our experiments revealed that human behavior is consistent with contingency representations and not with traditional sensory models of working memory. Finally, we generated falsifiable predictions for neural data that can identify contingency representations and dissociate different models of working memory. Our findings characterize a neural representational strategy that can unify working memory, planning, and context-dependent decision-making.

In time-varying environments, flexible cognition requires the ability to store and combine information across time to appropriately guide behavior. In commonly used delay task paradigms, a transient sensory stimulus provides information, which the agent must maintain internally across a seconds-long mnemonic delay to guide a future response (1–4). Working memory is a core cognitive function for the active maintenance and manipulation of task-relevant information for subsequent use. To guide flexible behavior, contents of working memory must interface with other cognitive functions, such as planning and context-dependent decision-making. Yet within neuroscience, these functions have been studied largely independently, and it remains poorly understood how the brain coordinates these processes in the service of goal-directed behavior.

Internal representations related to cognitive states can be revealed through recording neural activity during delay tasks in animals and humans. Neurons in the prefrontal cortex exhibit content-selective activity patterns during mnemonic delays of working memory tasks (5, 6). Selective delay activity during working memory is most commonly interpreted and implemented in computational models as representing features of sensory stimuli, which can be processed to guide a later response. In the dominant conceptual framework, the proposed cognitive strategy thereby uses working memory representations that are fundamentally sensory in nature (5, 6). The sensory strategy for working memory has been challenged by observations of prefrontal delay activity that better correlate with diverse task variables, including actions, expected stimuli, and rules (2, 3, 7). Furthermore, a growing literature characterizes substantial nonlinear mixed selectivity of features in the prefrontal cortex, which can in principle support context-dependent behavior (8–10). These diverse observations suggest that a more unified framework is needed to account for the computational roles of working memory, planning, and context-dependent decision-making.

Computational modeling has been fruitfully applied to examine potential neural circuit mechanisms supporting cognitive functions, including working memory and decision-making (11). Working memory functions are commonly modeled with distinct modular circuits, which do not account for mixed selectivity in the prefrontal cortex and assume that working memory maintains sensory representations (11, 12). A complementary modeling approach utilizes artificial neural network models, which are trained to perform cognitive tasks (13). In contrast to hand-designed models, task-trained recurrent neural network (RNN) models can perform delay tasks without requiring assumptions about structured circuit architectures or the form of working memory representations (14). This approach is, therefore, well suited to examine computational mechanisms through which working memory representations can support flexible computations (4, 15–18).

In this study, we investigate the implications of a cognitive strategy in which internal states represent plans rather than perceptions or actions. Specifically, our theoretical framework defines contingency states based on how future behaviors depend on upcoming events. We designed a task paradigm, conditional delayed logic (CDL), to dissociate contingency-based strategies from sensory- or action-based strategies for working memory. RNN models trained on the CDL task develop enrichment of contingency in their state representations, which is reflected in the geometric structure of population activity patterns. The model’s internal representations capture diverse phenomena of neural activity observed during working memory. We tested human behavior on the CDL task and found key signatures of a contingency-based strategy in response-time behavior. Lastly, we provide falsifiable predictions for neural activity to distinguish contingency representations from alternative computational schemes. Taken together, our study presents a theoretical framework for how working memory supports planning for temporally extended cognition and flexible behavior, which explains disparate behavioral and neural observations and is experimentally testable.

Results

CDL Task.

In many commonly used delay tasks, there are correlations among sensory stimuli, responses, computational demands, or rules that prevent dissociable attribution of neural activity to specific cognitive task variables (1, 19). We, therefore, sought a task that involves 1) computation on informational inputs separated by a delay; 2) exact intermediate computational states that reoccur frequently; 3) a correct response that can be predicted during the delay on some trials but not on others; and 4) decorrelation of action, sensory, rule, and computational-state information.

To meet these demands, we designed the CDL task, which applies a binary classification to two binary stimuli separated by a delay (Fig. 1A). We refer to the predelay and postdelay stimuli as “cue A” and “cue B,” respectively. The rules can be defined as Boolean logical operations, and one rule is presented tonically throughout each trial’s duration. On each trial, the agent is presented with a task rule, cue A, and cue B and must generate an associated response. For example, in the OR rule, the agent’s response should be “one” if either cue is equal to one. From 16 possible two-bit binary classifications, we will focus on 10 rules (Fig. 1B) for which the response is dependent on cues but independent of cue order. The final 10 rules include the logical operators (N)OR, (N)AND, and X(N)OR as well as (Anti-)Memory and (Anti-)Report. In Memory, the agent must respond with the cue A stimulus for that trial, and in Report, the agent must respond with the cue B stimulus for that trial. In both cases, “Anti-” signals an inversion of the output. Rule, cue A, and cue B are varied randomly across trials.
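As an illustration, the 10 rules can be written as Boolean functions of the two cues. The following Python sketch is not taken from the study's code: the dictionary name `RULES`, the rule abbreviations used as keys, and the helper functions are hypothetical, and stimuli and responses are assumed to be encoded as 0 or 1.

```python
# Hypothetical encoding of the 10 CDL rules as functions of (cue_a, cue_b).
# Cues and responses are assumed to be the integers 0 or 1.
RULES = {
    "OR":   lambda a, b: a | b,
    "NOR":  lambda a, b: 1 - (a | b),
    "AND":  lambda a, b: a & b,
    "NAND": lambda a, b: 1 - (a & b),
    "XOR":  lambda a, b: a ^ b,
    "XNOR": lambda a, b: 1 - (a ^ b),
    "MEM":  lambda a, b: a,        # Memory: respond with cue A
    "AMEM": lambda a, b: 1 - a,    # Anti-Memory: inverted cue A
    "REP":  lambda a, b: b,        # Report: respond with cue B
    "AREP": lambda a, b: 1 - b,    # Anti-Report: inverted cue B
}


def response(rule, cue_a, cue_b):
    """Correct response for one trial of the given rule."""
    return RULES[rule](cue_a, cue_b)


def truth_table(rule):
    """Map every (cue A, cue B) pair to the correct response."""
    return {(a, b): response(rule, a, b) for a in (0, 1) for b in (0, 1)}
```

Under this encoding, every rule has a distinct truth table, and none is constant, so the response always depends on at least one cue.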

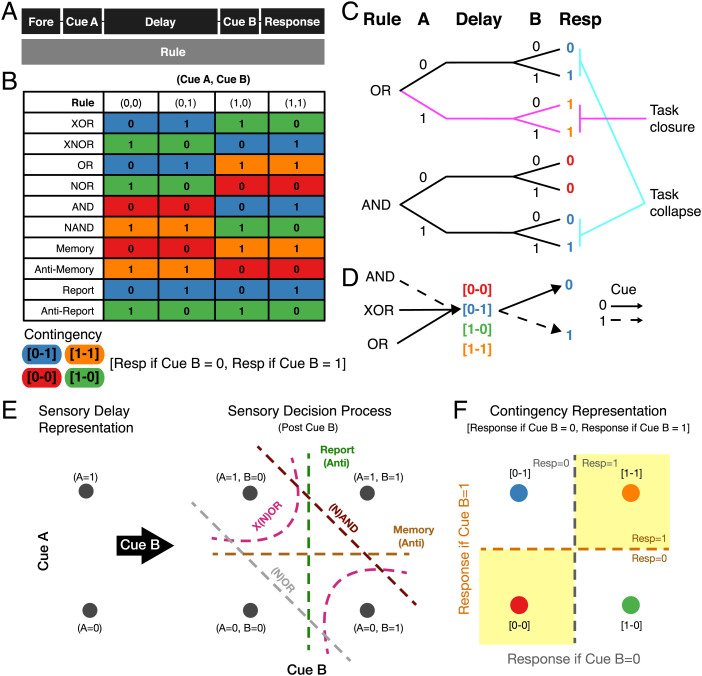

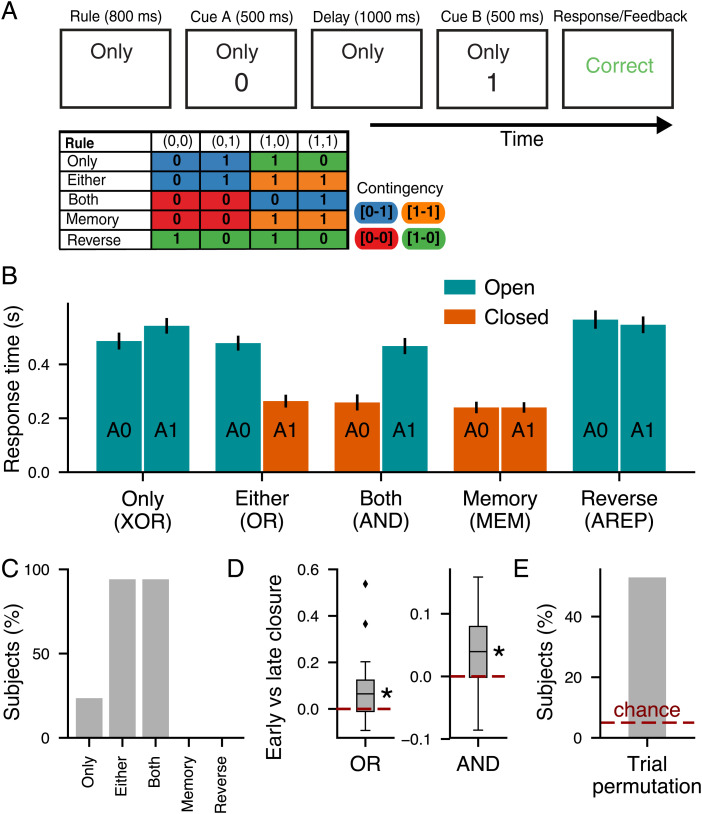

Fig. 1.

CDL task and contingency states. (A) Time course of events in the CDL task. (B) The contingency table of responses by rule and (cue A, cue B) pair. Color indicates the contingency of that condition. (C) A schematic example of task closure and collapse between tasks and conditions. (C, Upper) Tree structure of an OR trial. (C, Lower) Tree structure of an AND trial. The magenta lines indicate a condition (OR, cue A = 1) in which the trial is termed closed because the correct response is independent of cue B. The cyan lines indicate two conditions (OR, cue A = 0; AND, cue A = 1) that collapse during the delay because, after cue A, their response patterns to cue B become identical; in both cases, if B = 0, the correct response is zero, and if B = 1, the correct response is one. The color of responses indicates the associated contingency. (D) Example mappings of the CDL task through contingency states. Solid lines represent a cue of zero, and dashed lines represent a cue of one. All three tasks (AND, cue A = 1; XOR, cue A = 0; OR, cue A = 0) have a [0-1] contingency, and therefore, responses can be generated by the same mapping from the contingency state according to cue B after the delay. (E) A schematic of the stimulus-based sensory representation of the CDL task. (E, Left) The sensory delay representation comprises just two states representing different values of observed cue A. (E, Right) Following cue B, four states form, each representing a specific sensory combination (cue A, cue B). Each dashed line forms a separatrix that performs one of the rule operations in the sensory representation space. Each line represents two rules because the negation of a rule is simply the inverse mapping of the points on either side of the separatrix. (F) A schematic of the contingency representation. Each point represents a specific contingency state [response to B = 0–response to B = 1]. The dashed lines are separatrices capable of solving the 10 tasks from the contingency representation.

Contingency Representations.

To perform the CDL task, the agent must maintain a representation of task information across the delay. One might expect the agent to maintain the stimulus identity of cue A, which would be sufficient to solve all trials. In this “sensory strategy,” which is commonly assumed in models of working memory, the task-relevant information is maintained across the delay to guide a response in conjunction with information in cue B and rule (5, 20). An alternative “action strategy” is possible for trial conditions in which the response can be preplanned during the delay (2, 21). Furthermore, the agent may represent the rule identity, which is presented during the delay (3). These representations are not exclusive.

In contrast to sensory, action, or rule representations, an alternative strategy for the CDL task and other working memory tasks uses what we call “contingency representations.” Contingencies are defined by mappings from the upcoming cues to responses (Fig. 1B). For example, if the rule is OR and cue A is “zero,” then the contingency state during the delay is the mapping from cue B being zero or one to the correct response being zero or one, respectively. Throughout this paper, we will refer to contingency using the notation [R0-R1], where R0 and R1 are the response targets for cue B being zero and one, respectively. We would, therefore, say that the above trial has a [0-1] contingency state, which could be represented in working memory.
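The [R0-R1] notation can be made concrete with a short Python sketch. It is an illustration only: the table name `TABLE`, the rule abbreviations, and the string encoding (responses listed for (cue A, cue B) = (0,0), (0,1), (1,0), (1,1)) are assumptions introduced here, not part of the study.

```python
# Hypothetical truth tables: each string lists the correct response for
# (cue A, cue B) = (0,0), (0,1), (1,0), (1,1), in that order.
TABLE = {
    "OR": "0111", "NOR": "1000", "AND": "0001", "NAND": "1110",
    "XOR": "0110", "XNOR": "1001", "MEM": "0011", "AMEM": "1100",
    "REP": "0101", "AREP": "1010",
}


def contingency(rule, cue_a):
    """Return (R0, R1): the target response if cue B is 0 or 1."""
    row = TABLE[rule]
    return (int(row[2 * cue_a]), int(row[2 * cue_a + 1]))


def label(state):
    """Format a contingency state in the [R0-R1] notation."""
    return f"[{state[0]}-{state[1]}]"


print(label(contingency("OR", 0)))   # rule OR with cue A = 0
```

For the example in the text (rule OR, cue A of zero), this lookup returns the state (0, 1), written [0-1].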

The CDL task provides two important features that can be utilized by contingency representations: task “closure,” which gives insight into conditional computational demands, and task “collapse,” which enables identification of specific computationally relevant states. Closure describes the extent to which all information necessary to perform the next step of the task is already presented. In the CDL task, closure is defined as a condition in which the correct response can be decided from the rule and cue A alone, and therefore, the response can be preplanned during the delay before cue B. For example, if the rule is OR and cue A is one, then regardless of cue B the correct response will always be one (Fig. 1C). This enables the agent to plan the response directly following cue A.

Collapse describes the condition in which the agent reaches the same computational branch point in a multistage problem independent of the prior path. In the CDL task, collapse describes when two different rule and cue A conditions have the same contingency state with regard to cue B. One example of this is in the OR and AND tasks. The [0-1] contingency state is reached both by the OR rule with cue A of zero and by the AND rule with cue A of one (Fig. 1D). In this way, two trials that share neither rule nor cue A can share a contingency state, demonstrating functional collapse. As described later, this property will be crucial in dissociating contingency representations from sensory representations in neural delay activity.
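Closure and collapse both follow directly from the contingency state, as the following Python sketch illustrates. The names `TABLE`, `is_closed`, and `collapse_groups` are hypothetical, and the truth-table encoding (responses listed for (cue A, cue B) = (0,0), (0,1), (1,0), (1,1)) is an assumption made for this example.

```python
from collections import defaultdict

# Hypothetical truth tables, ordered (cue A, cue B) = (0,0), (0,1), (1,0), (1,1).
TABLE = {
    "OR": "0111", "NOR": "1000", "AND": "0001", "NAND": "1110",
    "XOR": "0110", "XNOR": "1001", "MEM": "0011", "AMEM": "1100",
    "REP": "0101", "AREP": "1010",
}


def contingency(rule, cue_a):
    """Return (R0, R1): the target response if cue B is 0 or 1."""
    row = TABLE[rule]
    return (int(row[2 * cue_a]), int(row[2 * cue_a + 1]))


def is_closed(rule, cue_a):
    """Closed: the response is the same whichever cue B arrives."""
    r0, r1 = contingency(rule, cue_a)
    return r0 == r1


def collapse_groups():
    """Group all (rule, cue A) conditions by their shared contingency state."""
    groups = defaultdict(list)
    for rule in TABLE:
        for cue_a in (0, 1):
            groups[contingency(rule, cue_a)].append((rule, cue_a))
    return dict(groups)


groups = collapse_groups()
print(is_closed("OR", 1))   # closed: response is one regardless of cue B
print(groups[(0, 1)])       # all conditions that collapse onto [0-1]
```

In this enumeration, the 20 (rule, cue A) conditions fall onto only four contingency states, and the conditions (OR, cue A of zero) and (AND, cue A of one) appear in the same group.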

Computing through Contingency Representations.

We found that one advantage of the contingency representation is that it reduces the classifier complexity of the task at the time of cue B onset. Here, we contrast a sensory working memory strategy with a contingency strategy in performing the CDL task.

In the sensory strategy, cue A is retained mnemonically through the delay, and an orthogonal cue B component is added to the representation at the end of the delay. To this representation, we can then apply a “conditional classifier,” defined as a set of classifiers that are applied to the representation conditionally on some trial feature. The number of linear classifiers is one way to quantify the relative complexity of mapping responses to output using the conditioned feature. Here, we apply a conditional classifier conditioned on rule, the trial feature not already included in the sensory representation. Notably, such a conditional classifier requires 12 classifier hyperplanes to implement the 10 rules as response mappings (one linear hyperplane for each rule except for XOR and XNOR, which each require two hyperplanes due to their nonlinearity) (Fig. 1E).

In contrast, the contingency strategy makes multiple rules linearly separable by the same hyperplanes (Fig. 1D). Contingency representations break the CDL task into two steps: a first step that maps nonlinearly from rule and cue A to a contingency state and a second step in which a conditional classifier, as above, applies a different linear separatrix to contingency states, here conditioned on cue B. Such a conditional classifier requires only two classifier hyperplanes to implement the 10 rules as response mappings from the contingency representation (Fig. 1F). This decomposition thereby turns a single complex classification problem into two simple ones. Moreover, this decomposition efficiently utilizes the available computational resource of time during the delay period itself such that only the minimum necessary computation remains to be done at cue B onset. Interestingly, this complexity reduction can be formalized using a straightforward linear matrix decomposition between predelay features (rule and cue A) and postdelay features (cue B and target) (SI Appendix, Fig. S1), which results in the contingency basis as described above (Fig. 1F).
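The hyperplane counts for the two strategies can be checked with a small Python sketch. This is an illustration under stated assumptions, not the analysis used in the study: the names `TABLE`, `linearly_separable`, and `respond_from_contingency` are hypothetical, and the search over a small grid of weights is a shortcut that suffices only because the inputs are two binary cues.

```python
from itertools import product

# Hypothetical truth tables, ordered (cue A, cue B) = (0,0), (0,1), (1,0), (1,1).
TABLE = {
    "OR": "0111", "NOR": "1000", "AND": "0001", "NAND": "1110",
    "XOR": "0110", "XNOR": "1001", "MEM": "0011", "AMEM": "1100",
    "REP": "0101", "AREP": "1010",
}


def linearly_separable(row):
    """Search a small weight grid for one hyperplane w_a*a + w_b*b + bias > 0."""
    grid = product((-1, 0, 1), (-1, 0, 1), (-1.5, -0.5, 0.5, 1.5))
    for w_a, w_b, bias in grid:
        if all((w_a * a + w_b * b + bias > 0) == (row[2 * a + b] == "1")
               for a in (0, 1) for b in (0, 1)):
            return True
    return False


# Sensory strategy: one hyperplane per separable rule, two for XOR and XNOR.
sensory_planes = sum(1 if linearly_separable(row) else 2 for row in TABLE.values())


def contingency(rule, cue_a):
    """Step 1: nonlinear map from (rule, cue A) to the state (R0, R1)."""
    row = TABLE[rule]
    return (int(row[2 * cue_a]), int(row[2 * cue_a + 1]))


def respond_from_contingency(state, cue_b):
    """Step 2: one threshold per cue B value, so two hyperplanes in total."""
    r0, r1 = state
    return int(r0 > 0.5) if cue_b == 0 else int(r1 > 0.5)


print(sensory_planes)
```

The second step uses the same two thresholds for every rule, which is the sense in which the contingency strategy needs only two hyperplanes.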

It is important to note that the use of contingency states is separable from the organization into a two-dimensional contingency subspace as represented above (Fig. 1F). Contingency states could still be used, either in an alternative organizational geometry or through nonlinear downstream readout from a mixed representation that preserves cue A and rule information. Nevertheless, the above analysis shows how using contingency enables efficient geometric organization with respect to multirule problems, such as the CDL task.

Task-Trained RNNs Utilize Contingency Representations.

To explore how contingency-based computations may be realized in a distributed recurrent circuit, we trained RNN models to perform the full CDL task (Fig. 2A). Our trained RNN model reached high accuracy across all subtasks (99.3% average accuracy, with all >95%). In order to identify the structure of contingency information in our trained RNNs, we used a simple subspace identification procedure (22). Using the network-state vector from the late-delay epoch (just before cue B onset), we identified two contingency-coding axes maximally capturing variance in neural states across units in the RNN explained by the target response conditioned on each cue B stimulus (Materials and Methods). We then projected the late-delay state from each trial into this two-dimensional contingency subspace. This representation was found to cleanly separate the four possible contingency conditions using two linear separatrices (Fig. 2B). We refer to this two-dimensional subspace as the “contingency subspace.”
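A regression-based subspace identification of this kind can be sketched in Python with NumPy. The sketch below is an assumption-laden illustration rather than the procedure in Materials and Methods: the late-delay states are synthetic stand-ins (not RNN output), and the variable names, noise level, train/test split, and QR orthonormalization step are all choices made here for clarity.

```python
import numpy as np

rng = np.random.default_rng(0)
n_trials, n_units = 400, 200

# Synthetic stand-in for late-delay states (NOT output of a trained RNN).
r0 = rng.integers(0, 2, n_trials)        # target response if cue B = 0
r1 = rng.integers(0, 2, n_trials)        # target response if cue B = 1
true_axes = rng.normal(size=(2, n_units))
states = np.outer(r0, true_axes[0]) + np.outer(r1, true_axes[1])
states = states + 0.5 * rng.normal(size=(n_trials, n_units))

# Regress every unit's activity on [R0, R1, intercept] using training trials.
design = np.column_stack([r0, r1, np.ones(n_trials)])
train, test = slice(0, 300), slice(300, None)
coef, *_ = np.linalg.lstsq(design[train], states[train], rcond=None)

# Orthonormalize the two regression axes to obtain a 2D subspace basis.
basis, _ = np.linalg.qr(coef[:2].T)      # shape (n_units, 2)

# Project held-out trials into the subspace.
centered = states[test] - states[train].mean(axis=0)
projected = centered @ basis             # shape (n_test_trials, 2)

# Fraction of held-out across-trial variance captured by the subspace.
captured = projected.var(axis=0).sum() / states[test].var(axis=0).sum()
print(round(float(captured), 2))
```

Because the sign of each QR column is arbitrary, the projected axes may be flipped relative to the regression coefficients; only the spanned subspace is meaningful.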

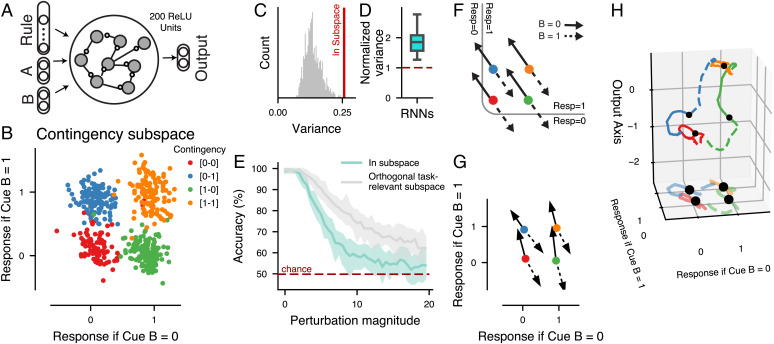

Fig. 2.

Functional contingency subspace in CDL-trained RNNs. (A) Schematic of the RNN model structure. The hidden layer is composed of 200 fully connected ReLU (rectified linear unit) neurons. (B) We defined a contingency subspace through a linear regression of unit states during the late-delay epoch to define two axes along which “Response to B = 0” and “Response to B = 1” were projected. The contingency subspace was identified on a train set of trials, and we used held-out test trials for plots and analysis for an example RNN. (C) A permutation test comparing the amount of variance in the contingency subspace with random two-dimensional task-relevant subspaces (described in Materials and Methods). (D) The analysis in B is replicated for 20 RNNs over random initializations, and the distribution of variance captured by the contingency subspace compared with the mean variance of random task-relevant subspaces of equal dimension is plotted. (E) Mean CDL performance (accuracy) across replicates for a perturbation analysis in which the RNN state was perturbed at the time point prior to cue B onset by a random vector of a given norm either within the contingency subspace (cyan line) or in an orthogonal task-relevant subspace (gray line). The perturbation magnitude (the norm of the perturbation vector) was sequentially increased. Shaded regions represent the SEMs across replicate RNNs. The red dashed line represents chance behavior. (F) Theoretical schematic of CDL response selection utilizing a contingency representation. Solid arrows represent trajectories caused by cue B = 0, and dashed arrows represent trajectories caused by cue B = 1. The nonlinear separatrix boundary (gray) divides regions of state space from which the network will transition to a response state of zero or one. (G) Mean contingency subspace trajectories of the example RNN model from onset to offset of cue B. Trajectories are divided by contingency and cue B. (H) Three-dimensional mean RNN state-space trajectories from cue B onset to trial end. The x and y axes represent the contingency subspace, while the z axis is the output axis of the example RNN model (i.e., the difference between the magnitude of the two output units).

To test the importance of the contingency subspace, we measured the amount of trial-wise variance in the late delay–state vectors captured by the contingency subspace and found greater variance compared with random two-dimensional task-relevant subspaces (one-way ANOVA, ) (Fig. 2 C and D). In a complementary state-space approach, we measured the alignment between the contingency subspace and the dominant eigenmodes of between-trial covariance in the late delay–state vector. We found that the contingency subspace is also significantly more highly aligned with the sources of state variance when using this state-space approach (SI Appendix, Fig. S2). We tested the functional relevance of the subspace for task performance through a perturbation approach. Just prior to cue B onset, we shifted the state of the network by a given magnitude in a random direction, which was either within the contingency subspace or in an orthogonal task-relevant subspace. We found that RNNs suffered greater performance deficits from perturbations within the contingency plane, indicating the functional relevance of the subspace for the CDL task (t test, P < 0.05 for all perturbation magnitudes of more than one) (Fig. 2E). Lastly, we analyzed error trials in our RNN replicate models, finding that behavioral errors in our RNN predicted misalignment between the true contingency and the representation in the contingency subspace on error trials (SI Appendix, Fig. S3).
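The construction of such perturbation vectors can be sketched in Python with NumPy. This is a simplified illustration, not the study's procedure: the function name `perturbation` is hypothetical, the subspace basis is assumed to be orthonormal, and the comparison direction is drawn from the full orthogonal complement rather than from the orthogonal task-relevant subspace described in the text.

```python
import numpy as np


def perturbation(basis, norm, rng, within=True):
    """Random vector of length `norm` inside span(basis) or orthogonal to it.

    `basis` is assumed to be an (n_units, k) matrix with orthonormal columns.
    """
    n_units, k = basis.shape
    if within:
        # Random combination of the subspace axes.
        vec = basis @ rng.normal(size=k)
    else:
        # Random direction with its in-subspace component removed.
        vec = rng.normal(size=n_units)
        vec = vec - basis @ (basis.T @ vec)
    return norm * vec / np.linalg.norm(vec)


rng = np.random.default_rng(1)
# Placeholder orthonormal basis standing in for a fitted contingency subspace.
basis, _ = np.linalg.qr(rng.normal(size=(200, 2)))

state = rng.normal(size=200)                     # placeholder network state
shifted_in = state + perturbation(basis, 2.0, rng, within=True)
shifted_out = state + perturbation(basis, 2.0, rng, within=False)
```

Matching the norm of the two perturbation types keeps the comparison fair, so any difference in task accuracy reflects direction rather than magnitude.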

One advantage of the contingency representation is that it organizes delay representations such that they are already separable by mappings from cue B to response. Although task computations can be conceptualized as the conditional classifiers described above (Fig. 1 E and F), for RNNs a more intuitive theoretical schematic comes from considering state-space dynamics. If each cue B input perturbs the system’s state in an opposing direction within the contingency subspace, a nonlinear separatrix can be defined such that the B-perturbed state maps to the correct behavioral response (Fig. 2F). We analyzed the average displacements in the RNN’s contingency subspace for trials in each of the contingencies during cue B and found that they closely correspond to this theoretical schematic (Fig. 2G). As a dynamical system, our RNN’s responses are made through the transition to readout states determined by the trained readout weights. In further analysis (Fig. 2H), we specifically examined the dynamics that carry the network activity to those readout-related states. The state-space dynamics following cue B offset demonstrate a nonlinear consolidation trajectory from contingency to the appropriate response state (Fig. 2H), revealing that while the response mapping problem can be defined within the RNN’s contingency subspace, response-state generation in the RNN relies on higher-dimensional nonlinear dynamics. This nonlinear step is fundamental to the structure of our RNN models based on their construction, as their responses are a function of the projection of neural activity along fixed readout weights. This contrasts with the theoretical contingency schematic (Fig. 1F) in which responses can be mapped from states via linear classifiers conditional on cue B.

Control analyses investigated the computational properties leading to contingency representations in artificial network models trained on CDL task variants. First, we trained an RNN on a task variant in which the rule is not presented until the cue B epoch (SI Appendix, Fig. S4). This RNN performed the late-rule task with high accuracy, but it did not develop enrichment in contingency variance during the delay, a contingency subspace with separability for contingency states, or selective vulnerability to perturbations within the contingency subspace. This demonstrates that RNNs can perform the CDL task using a sensory strategy and that contingency representations are not an artifact of our analysis method. In a second CDL task variant, we provided time following cue B for the computation to take place before the network was asked to respond. In this variant, we saw a substantial reduction in evidence for a contingency strategy (SI Appendix, Fig. S4 E–H). This is in line with our predictions from Fig. 1 E and F that contingency may be important to reduce computational complexity, as enrichment of contingency in the network delay representation is reduced when the pressure of limited computational time is alleviated.

In a second control analysis, we trained a three-layer feed-forward network in contrast to an RNN, providing task inputs into different layers of the network (SI Appendix, Fig. S5). Contingency coding only emerged as a dominant middle-layer representation when cue A and rule inputs were provided upstream and cue B was provided downstream, which is the feed-forward analog of the temporal structure in the CDL task. These analyses suggest that computational stages of information processing, not specific choice of model architecture, give rise to emergent contingency tuning. Further, the ability of this structurally distinct model to recapitulate the main results of contingency representation in the combination of cue A and rule during the CDL tasks demonstrates the strong robustness of this representation to choice of model.

Model Neural Activity Captures Neurophysiological Findings.

While at the population level, our trained RNNs can be structurally and functionally identified to be utilizing contingency representations, it is unclear how that is instantiated at the level of individual units and therefore, how it might relate to the findings in single-neuron recordings from animals performing working memory tasks. We profiled the selectivity properties of our unit activity vector (Fig. 3A). While the cue A–averaged traces showed substantial tuning within a rule, we found that between rules, the apparent cue A tuning would invert. For many units, however, this inversion across rules could be substantially explained by contingency. Using a linear model, we identified the percentage of variance explained in unit state by cue A, rule, and contingency. While we found that most units predominantly encoded cue A during the stimulus epoch, by the late delay this pattern had changed to primarily encode contingency (Fig. 3B). Similarly, rule tuning peaked during the stimulus epoch and declined during the delay, even though the tonic input of rule information persisted throughout the entire trial (Fig. 3B). Nonlinear dimensionality reduction via UMAP (uniform manifold approximation and projection) recapitulated the transition from sensory to contingency representations and showed that sensory and contingency tuning are the dominant factors driving unit states in the early- and late-delay epochs, respectively (SI Appendix, Fig. S6). This transition in information coding from retrospectively oriented to prospectively oriented during the delay matches prior analyses of single-neuron recordings from prefrontal cortex in monkeys performing working memory tasks (7).

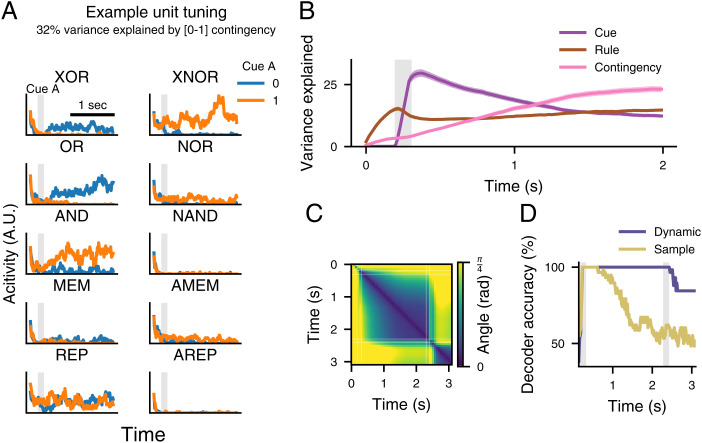

Fig. 3.

Contingency tuning captures neurophysiological responses in the prefrontal cortex. (A) An example RNN unit with traces representing mean activity in trials divided by cue A identity and rule for each of the 10 rules (XOR, XNOR, OR, NOR, AND, NAND, Memory [MEM], Anti-Memory [AMEM], Report [REP], and Anti-Report [AREP]); 32% of unit activity variance was explained by a binary predictor of whether it was a [0-1] contingency trial. Shaded gray regions indicate the time of cue A presentation. Traces are plotted from trial onset through delay. Firing rates are in arbitrary units (A.U.). (B) Mean percentage of unit variance explained by rule, cue A, and contingency over time. Solid lines represent means and shaded regions represent the SEMs across replicate RNNs. (C) Analysis of tuning dynamics across time. Activity in the example RNN was averaged within each condition, and then, principal component analysis (PCA) was used to identify the dominant axis of condition tuning. The angle between these axes was measured across each pair of time points. Gray shaded areas indicate cue A and cue B epochs. (D) A linear decoder was trained to read out cue A identity from sample epoch RNN activity and tested throughout the delay epoch. This was compared with a “dynamic” decoder trained and tested on data from the same time point. The cross-validated accuracy for each decoder is plotted.

This type of dynamic activity in persistent populations has drawn considerable interest in recent years, with many studies identifying a dynamic decoding axis of perceptual information from sample to delay epochs (23–25). The contingency representation as implemented by our RNN models provides one possible explanation for this phenomenon. Because the network shifts during the delay from being dominated by sensory variance to being dominated by contingency variance, examining only a single task would make it appear that the axis along which cue A is encoded has shifted. We used two analyses to detect dynamic working memory activity. In the first, we evaluated change in the principal tuning axis over time using principal component analysis (25), and in the second, we trained static and dynamic decoders, showing a shift in the separatrix necessary to correctly classify the response from trial-state vectors (Fig. 3 C and D). In both cases, our network matches phenomena previously interpreted as dynamic memory.

Contingency Subspace Explains Heterogeneous Neuronal Tuning.

One question that arises from the above results is how contingency representations would be reflected in typical analyses that measure tuning of neural activity, predominantly firing rates, for task variables, such as stimulus or rule identity. As a proof of concept, we demonstrate that, using subtasks of the CDL paradigm, we can generate tuning for cue A, rule, or both features from contingency representations within the same fixed network by altering the correlations between cue or rule information and contingency states. These correlations can exist even when cue A, cue B, and responses are all independently varied in the task, as they are induced by the nonlinear map from cue A and rule to contingency. We examined this in CDL variants with only two rules. For example, the rule pair of Memory and XOR is such that contingency representations yield neural tuning to cue A but not to rule due to averaging over contingency states for those subtasks (Fig. 4A).

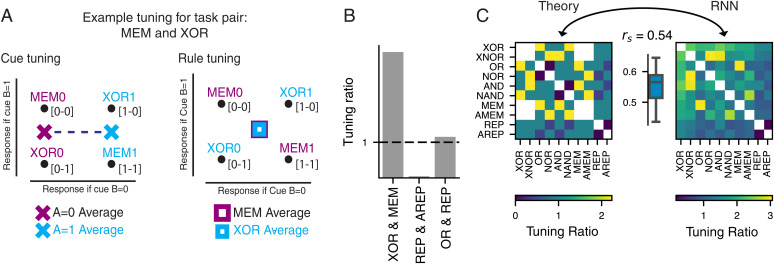

Fig. 4.

Contingency explains interactions of sensory and rule tuning for pairs of tasks. (A) Schematic of cue and rule tuning for an example pair of rules (MEM and XOR) utilizing a contingency representation. Task names are followed by a zero or one indicating the cue A on that trial and are placed in the appropriate contingency for that rule and cue A pair. (A, Left) X marks denote cue A averages, with purple for cue A = 0 and blue for cue A = 1. (A, Right) Square marks denote rule averages, with purple for MEM and blue for XOR. The dotted line in cue tuning indicates the difference between average cue states and therefore, cue tuning. In contrast, the overlap of rule averages indicates that this task pair will show little or no rule tuning. (B) The cue/rule tuning ratio for three example pairs of rules. Bars show the measured tuning in the RNN when just that rule pair was analyzed. The dashed line represents equal cue and rule tuning. (C, Left) Contingency-based predictions for the cue/rule tuning ratio for each pair of rules in the conditional delayed logic (CDL) family defined as the Euclidean distance between cue averages and rule averages. A value greater than one indicates more cue tuning, a value less than one indicates more rule tuning, and a value of one represents equal tuning. (C, Right) Tuning ratio between Euclidean distances of cue and rule averages of state-space representations of RNNs when analyzed for each pair of rules. Distances are averaged across replicate RNNs. (C, Inset) The Spearman correlation of (off-diagonal) predicted and measured tunings ().

The nonlinear mapping from cue A and rule to contingency can cause the same unit to be identified as rule or cue tuned depending on precisely which task the agent is performing (Fig. 4 A and B). To demonstrate this, we analyzed our network units separately for each possible rule pair in the CDL task, forming 100 possible pairs of rules. For each task pair, we could use the expected contingency states to determine the theoretical prediction for cue and rule tuning (SI Appendix, Fig. S7). We found that the theoretical contingency-predicted cue/rule tuning ratio significantly correlated with the ratio of tuning measured from the full CDL-trained RNN model in the late-delay epoch (Fig. 4C).
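The theoretical prediction for a rule pair can be sketched in a few lines of Python. This is one reading of the prediction, offered as an assumption: cue tuning is taken as the Euclidean distance between the two cue A averages in contingency space, and rule tuning as the distance between the two rule averages. The names `TABLE`, `centroid`, and `predicted_tuning` are hypothetical, and the two distances are returned separately because their ratio is undefined when rule tuning is zero.

```python
from math import dist

# Hypothetical truth tables, ordered (cue A, cue B) = (0,0), (0,1), (1,0), (1,1).
TABLE = {
    "OR": "0111", "NOR": "1000", "AND": "0001", "NAND": "1110",
    "XOR": "0110", "XNOR": "1001", "MEM": "0011", "AMEM": "1100",
    "REP": "0101", "AREP": "1010",
}


def contingency(rule, cue_a):
    """Return (R0, R1): the target response if cue B is 0 or 1."""
    row = TABLE[rule]
    return (int(row[2 * cue_a]), int(row[2 * cue_a + 1]))


def centroid(points):
    """Coordinate-wise mean of a list of 2D points."""
    return tuple(sum(axis) / len(points) for axis in zip(*points))


def predicted_tuning(rule_x, rule_y):
    """Return (cue tuning, rule tuning) predicted for a two-rule task."""
    pair = (rule_x, rule_y)
    state = {(r, a): contingency(r, a) for r in pair for a in (0, 1)}
    cue_means = [centroid([state[(r, a)] for r in pair]) for a in (0, 1)]
    rule_means = [centroid([state[(r, a)] for a in (0, 1)]) for r in pair]
    return dist(*cue_means), dist(*rule_means)


print(predicted_tuning("MEM", "XOR"))   # cue tuning without rule tuning
print(predicted_tuning("OR", "NOR"))    # rule tuning without cue tuning
```

Under this reading, the Memory and XOR pair yields cue tuning with no rule tuning, consistent with Fig. 4A, whereas the OR and NOR pair yields the opposite pattern from the same contingency states.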

Together, these results show how linear analyses for neural tuning of contingency-coding units can yield apparent tuning to cues and rules. While in any real system, this simplified theory of pure contingency tuning will not fully explain all sources of variance, the extent to which even a simple theory captures between-condition tuning variance in our trained high-dimensional and nonlinear RNN demonstrates the robustness of the predictions made by contingency representations. Further, since tuning predictions can be made with small subsets of the CDL task, it opens the door to experiments in animals for which it may not be feasible to train on a larger set of rules.

Human Behavior Matches the Contingency Strategy.

While there are theoretical advantages to computing with contingency representations compared with sensory representations and we find that trained RNN models develop and use contingency representations, it is unclear whether humans would use contingency as a strategy to perform the CDL task. To identify whether contingency is present in human behavior, we investigated the impact that closure and collapse had on response times (RTs) in a five-rule variant of the CDL task (Fig. 5A). We hypothesized that “closed” trial conditions with task closure would elicit shorter RTs than “open” conditions without closure because on closed trials, participants can preplan their motor response during the delay, whereas on open trials, they must wait for cue B to form their response.
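The distinction between closed and open conditions can be made concrete with a small sketch. It assumes that a condition is closed when the response is the same for both possible values of cue B, and it uses hypothetical rule definitions (MEM reports cue A; XOR, OR, and AND are the usual logical operations). The fifth rule of the behavioral variant is omitted because its mapping is not spelled out here.

```python
# Hypothetical rule table: each rule maps (cue_a, cue_b) to a binary response.
RULES = {
    "MEM": lambda a, b: a,      # assumed: report cue A regardless of cue B
    "XOR": lambda a, b: a ^ b,
    "OR": lambda a, b: a | b,
    "AND": lambda a, b: a & b,
}


def is_closed(rule, cue_a):
    """True when the response is already determined before cue B arrives."""
    respond = RULES[rule]
    return respond(cue_a, 0) == respond(cue_a, 1)


# Closure status for every (rule, cue A) condition.
closure_table = {
    (rule, cue_a): is_closed(rule, cue_a)
    for rule in RULES
    for cue_a in (0, 1)
}
```

In this sketch, OR and AND each contain one closed and one open condition depending on cue A, MEM is always closed, and XOR is always open.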

Fig. 5.

Human behavior on the CDL task supports contingency-based strategies. (A, Upper) The procession of stimuli shown to participants during an example trial [Only (XOR), (cue A 0, cue B 1)]. (A, Lower) The table of responses by rule and (cue A, cue B) pair. Color indicates the contingency of that condition. (B) Mean RT by cue A and rule. Error bars represent SEMs across participants. Blue bars are open trial conditions, and orange bars are closed trial conditions. Logical rule names as well as Memory (MEM) and Anti-Report (AREP) are in parentheses below labels. (C) Percentage of participants with a significant (P < 0.05) difference in mean RT between cue A 0 and cue A 1 within a given rule. (D) The difference in closure effect, defined as the difference between the mean RT of the open condition and closed condition, between early trials (the first two blocks of the task) and late trials (the last two blocks) plotted separately for the OR and AND rules. (E) The percentage of participants for whom contingency explained more RT variance than would be expected by chance for the linear model permutations. Dashed lines represent chance level, and asterisks indicate P < 0.05 (one-sided t test).

We tested 17 human participants on the CDL task, measuring accuracies and RTs. Participants learned to perform the task with a high mean accuracy (97 ± 1%) across all rules (SI Appendix, Fig. S8). To investigate cognitive and computational strategies underlying performance of the CDL task, we measured and compared RTs across task conditions both between rules and between cues A (Fig. 5B). We found that RTs varied strongly across trial conditions, and we broadly sort them into two groups according to closed vs. open conditions, with closed trials having a significantly shorter mean RT than open trials ().

Crucially, the OR and AND subtasks, which vary on their closure status on a trial-by-trial basis, indicate that this RT difference between closed and open trials is not due to rule or cue differences. Within OR and AND, there remained a significant difference in RT between open and closed trials (OR: ; AND: ) (Fig. 5B). Within-individual analysis found that only for OR and AND was there a significant effect of cue A on RT for the majority of participants (Fig. 5C), which would be expected if closure was driving RT. We observed that the closure effect, the impact of closure on RT, grew over the course of the session (OR: P = 0.018; AND: P = 0.009) (Fig. 5D and SI Appendix, Fig. S8D), which suggests that the effect of closure can be learned through experience and be enhanced through training. The difference in RT between closed vs. open trials implies that participants were capable of flexible updating of plans within a trial as they processed information from the rule and cue A. This conditionally variant RT pattern is opposed to sensory strategies for which subtasks of similar complexity ought to take similar times to complete independent of how cue A interacts with the rule (Fig. 1E).

While the results above show strong evidence for participant response times being impacted by closure, this is not definitive proof of adoption of a contingency strategy with collapse across task conditions as there exist potential alternative explanations for closure (26). One way we investigated the presence of contingency collapse in our human RT behavior is through a linear modeling approach to measure the proportions of variance in RTs explainable by contingency while controlling for the effects of closure, response target, rule, and congruency between cue B and response (Materials and Methods). If a participant has idiosyncratic differences in their facility with a specific contingency, we would expect that this difference would be shared across conditions with that contingency in a contingency-based strategy but not if each condition was represented independently. Importantly, while this test can be used to identify unexpected contingency variance in RT, absence of such variance does not imply the participants were not using contingency as they may respond to different contingency conditions with the same RT even if they are internally distinct states.

We utilized a permutation method to measure individual-level significance (Fig. 5E). We found that 53% of our participants (9 of 17) had significant explainable RT variance attributable to contingency and that such a proportion was substantially higher than would be expected by chance () (Fig. 5E).

It is important to note that the analyses above are designed to test the theory that participants are using contingency-based strategies in the broad sense that there are intermediate internal states computed during the delay, which are associated with upcoming contingencies. Importantly, our behavior alone cannot speak to the geometric organization of those states: for example, whether it matches the two-dimensional contingency subspaces identified theoretically (Fig. 1F) and in our trained RNNs (Fig. 2B).

Collectively, our behavioral findings provide support that in the CDL task, humans use contingency-based strategies and not sensory working memory strategies. Furthermore, our behavioral results establish the viability of the CDL task to test the neural bases of working memory representational strategies in humans through noninvasive neuroimaging modalities (27–31).

Predictions for Neural Data and Model Comparison.

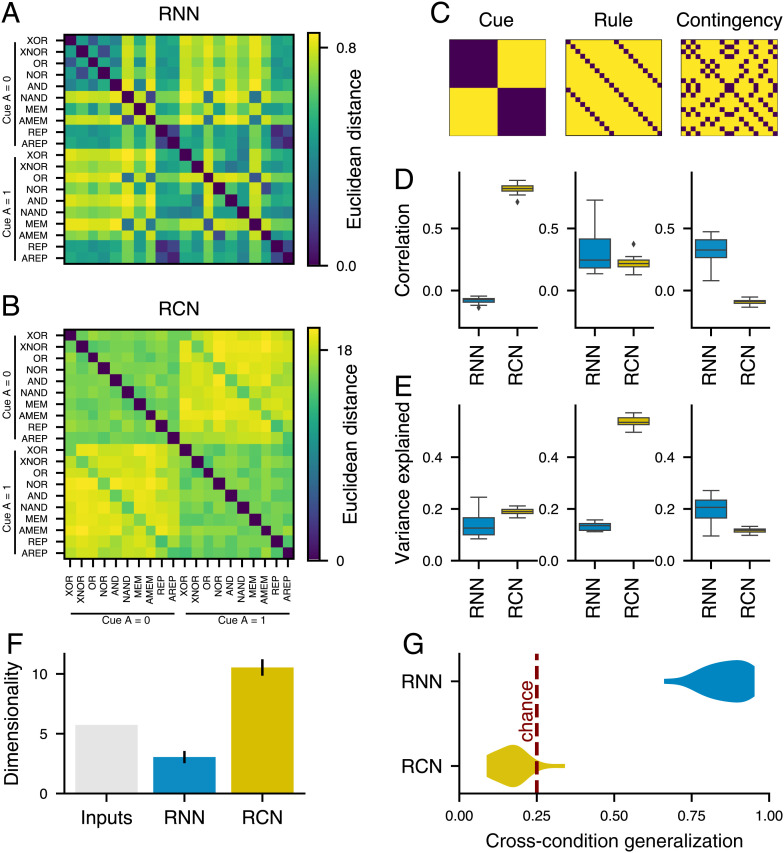

To gain insight into how our theory of contingency representation differs from alternative theories of neural representations and how these theories may be tested experimentally, we compared our contingency-based account against two alternative models. The first is a pure sensory encoding model, which we call an Inputs model, in which neural states uniquely identify different sensory cues presented during the first stimulus epoch and the tonic rule input. The second is a randomly connected network (RCN) model, which generates high-dimensional linearly separable representations of rule and cue A in order to enable arbitrary classification (8, 32). These alternative model classes represent two ends of a continuum from the most input-structured representation (Inputs) to the least structured (RCN). Furthermore, they serve as important controls because both have been used to model prefrontal computations in the literature (8, 9, 33).

Due to our focus on between-condition representational structure, one useful analysis is representational similarity analysis (RSA) (28). RSA enables the abstraction of high-dimensional data into a set of comparisons between conditions called a representational similarity matrix (RSM). We use cue A, rule, and contingency as theoretical intercondition templates (e.g., in the cue A template, all conditions with the same cue A should be similar) (Fig. 6C). The correlation between RSMs and theoretical templates measures the extent to which activity is structured by those features, as demonstrated in studies using functional magnetic resonance imaging (fMRI) in humans and single-neuron recordings in monkeys (28, 34).
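The template comparison can be sketched as follows. This is a minimal illustration rather than the published pipeline: it assumes that between-condition structure is summarized by pairwise Euclidean distances between condition-averaged states, that each template assigns 0 to condition pairs sharing a feature and 1 otherwise, and that agreement is scored by a Spearman correlation over condition pairs. The toy states sit at idealized contingency coordinates, and the helper names are hypothetical.

```python
import math
from itertools import combinations


def average_ranks(values):
    """Rank values from 1..n, giving tied values their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def pearson(x, y):
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def spearman(x, y):
    """Spearman correlation as the Pearson correlation of average ranks."""
    return pearson(average_ranks(x), average_ranks(y))


def pairwise(conditions, dissimilarity):
    """Apply a dissimilarity function to every unordered pair of conditions."""
    return [dissimilarity(a, b) for a, b in combinations(sorted(conditions), 2)]


# Toy condition-averaged states: (rule, cue A) -> idealized contingency coordinates.
states = {
    ("MEM", 0): (0.0, 0.0), ("MEM", 1): (1.0, 1.0),
    ("OR", 0): (0.0, 1.0), ("OR", 1): (1.0, 1.0),
    ("AND", 0): (0.0, 0.0), ("AND", 1): (0.0, 1.0),
}

observed = pairwise(states, lambda a, b: math.dist(states[a], states[b]))
templates = {
    "cue A": pairwise(states, lambda a, b: float(a[1] != b[1])),
    "rule": pairwise(states, lambda a, b: float(a[0] != b[0])),
    "contingency": pairwise(states, lambda a, b: float(states[a] != states[b])),
}
rho = {name: spearman(observed, template) for name, template in templates.items()}
```

Because the toy states are built from contingency coordinates, the contingency template correlates best with the observed distances here; with recorded or simulated activity, `states` would instead hold condition-averaged population vectors.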

Fig. 6.

Model comparison and predictions. (A and B) RSMs for (A) our example RNN model and for (B) an RCN model capable of performing the CDL task. Model similarity was calculated as the Euclidean distance between averaged late-delay epoch unit activity across conditions for the RNN model and mixed-layer activity for the RCN model. (C) Candidate RSMs for representational schemas organized by cue A, rule, and contingency. (D) Spearman correlation between the lower triangular portions of the candidate matrices and the RNN and RCN RSMs for each of our 20 replicate networks. (E) Mean fraction of variance explained by cue A, rule, and contingency across unit states during the late-delay epoch for the RNN and RCN. The distribution of averages across replicate networks is plotted. (F) Dimensionality of late-delay activity of the RNN model, the RCN model, and a “pure” model, in which the network represents the cue A and rule information orthogonally. Error bars represent SEMs across replicate networks. (G) Distribution of cross-condition generalization (CCG) measured across the replicate networks for our RNN and RCN models. CCG was defined using a contingency subspace classifier. The contingency subspace (Fig. 2B) was fit as described above with one task condition held out. Then, that condition was projected into the subspace, and trials were classified based on the quadrant they fell in. The red dashed line represents chance classification.

The Inputs model by definition only contains input along the cue A and rule dimensions, but we calculated RSMs for the task-trained RNN and the RCN model (Fig. 6 A and B). We then compared these observed similarity matrices with our candidate expected similarity structures constructed by cue A, rule, and contingency (Fig. 6C). For the RCN model, cue A predicts the most between-condition similarity, with rule explaining a lesser fraction and a small negative correlation with contingency. In contrast, the RNN model has intercondition correlation best explained by contingency (Fig. 6D). These model predictions for population-level analyses were recapitulated in single-unit analyses by examining the amount of late-delay activity variance captured by a linear model including cue A, rule, and contingency regressors (Fig. 6E).

To generate single-neuron predictions, we fit a linear model for cue A, rule, and contingency to each unit’s activity in the late-memory epoch to determine what fraction of variance in activity each regressor explains. The sensory model, by definition, has single-unit tuning toward cue A and rule. The RCN model has a majority of units tuned to rule followed by cue A, with few units having substantial variance explained by contingency. The RNN model has the most variance explained by contingency followed by rule and cue A (Fig. 6E).

The dimensionality of model-state representations provides a complementary view into their geometric structure (35–37). We found that the three models make starkly different predictions regarding the dimensionality of the delay representations. The sensory model has dimensionality governed by the inputs directly, and the RCN substantially expands that dimensionality. In contrast, the RNN contracts representations into a lower effective dimensionality, which is only slightly higher than the two dimensions of the contingency code itself (Fig. 6F).

While the RCN model is designed for information to be generically decodable for a diversity of possible tasks, its high-dimensional representations are relatively unstructured and therefore, ought not generalize across conditions. We utilized a cross-condition generalization (CCG) analysis (38) to measure the extent to which the geometry across rule by cue A pairs was preserved in both our RNN and RCN models. We first fit a contingency subspace, with all trials of a given task condition (rule × cue A pair) held out. We then projected the held-out trials into the subspace and defined a correct classification as a trial falling within the correct subspace quadrant. We found that our RNN models had a mean CCG of 86%, substantially higher than chance (, one-sided t test), whereas our RCN models had a mean CCG score of 17%, which does not exceed the chance level of 25% (Fig. 6G). This CCG analysis, which uses linear classifiers, indicates that our RNN hidden state maintains the linear generalization across multiple rules in accord with the contingency representation theory described above (Fig. 1F).

Testing Contingency Coding in Neural Data.

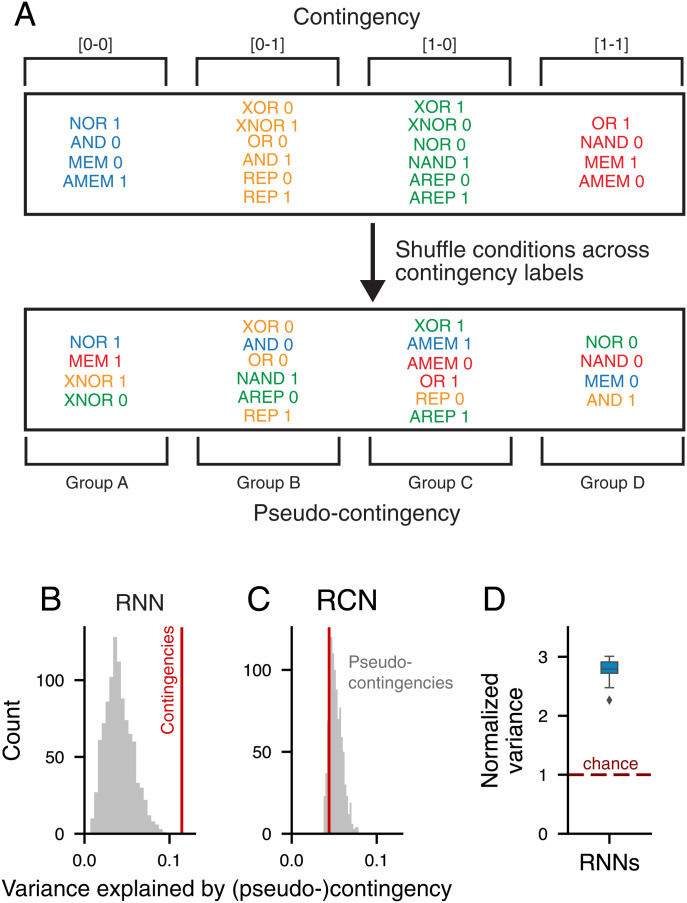

The analyses above are sufficient to characterize contingency encoding data generated for a known model class. We next examined how to statistically test for the presence of contingency coding in neural data in which we cannot know the process by which representations are generated (e.g., in experimental datasets of neural recordings). To avoid distributional assumptions about a neural dataset, we devised a nonparametric partitioned variance approach to test whether a given dataset has more contingency-based nonlinear structure than would be expected based on the amount of other nonlinear structure in the data (Fig. 7A).

Fig. 7.

Partitioned variance analysis to test contingency coding. (A) Schematic of the structure-preserving contingency shuffling method. We construct pseudocontingencies of equal size to our actual contingencies but with random conditions. (B and C) The reshuffling test for the proportion of contingency explained compared with shuffled pseudocontingencies from A. The red lines represent the mean variances explained by contingency for unit states in the RNN (RCN) model, while the gray bars represent the same measures for pseudocontingency replications. The RNN had significantly more variance explained by contingency than would be expected by chance, while the RCN model did not. (D) The ratio of variance explained by contingency compared with mean pseudocontingency shuffling over 20 replicate RNNs. The dashed line represents chance level.

By shuffling contingency mappings across conditions (here, rule–cue pairs) into “pseudocontingencies” and testing their ability to explain mean unit-state variance, we can build a null hypothesis for how well we would expect a nonlinear interaction similar in structure, but different in specific conditions, to contingency to explain the data. Comparing random permutations with the measured quantity from the actual contingency mappings tests whether the dataset is better explained by contingency than would be expected by chance independent of the general nonlinearity, feature mixing, or noise structure in the representation. We validated this approach with our RNN and RCN models, finding that our RNN model was better explained by contingency than chance (P < 0.001), while our RCN model was not (Fig. 7 B and C). Each replicate RNN model exhibited at least twice as much mean unit variance explained by contingency as the mean of its pseudocontingency null distribution (Fig. 7D).
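A simplified version of this shuffle test is sketched below. It is a sketch under stated assumptions rather than the method in SI Appendix: variance explained is taken as the between-group share of variance in each unit's condition-averaged activity, pseudocontingencies are formed by permuting the contingency labels across conditions (which preserves group sizes), and the P value is the proportion of shuffles that match or exceed the observed value. All function names and the toy data are hypothetical.

```python
import random


def variance_explained(values, labels):
    """Between-group share of the variance of one unit's condition means."""
    grand = sum(values) / len(values)
    total = sum((v - grand) ** 2 for v in values)
    if total == 0:
        return 0.0
    groups = {}
    for value, label in zip(values, labels):
        groups.setdefault(label, []).append(value)
    between = sum(
        len(g) * (sum(g) / len(g) - grand) ** 2 for g in groups.values()
    )
    return between / total


def mean_variance_explained(unit_means, labels):
    """Average the variance explained over all units."""
    return sum(variance_explained(u, labels) for u in unit_means) / len(unit_means)


def pseudocontingency_test(unit_means, labels, n_shuffles=1000, seed=0):
    """Compare true contingency labels with size-matched shuffled labels."""
    rng = random.Random(seed)
    observed = mean_variance_explained(unit_means, labels)
    null = []
    for _ in range(n_shuffles):
        shuffled = list(labels)
        rng.shuffle(shuffled)  # same group sizes, random condition membership
        null.append(mean_variance_explained(unit_means, shuffled))
    p_value = (1 + sum(n >= observed for n in null)) / (1 + n_shuffles)
    ratio = observed / (sum(null) / len(null))  # analogous to the ratio in Fig. 7D
    return observed, p_value, ratio


# Toy data: 8 conditions in 4 contingency groups, 2 units tuned to those groups.
labels = [0, 0, 1, 1, 2, 2, 3, 3]
unit_means = [
    [0, 0, 1, 1, 0, 0, 1, 1],
    [0, 0, 0, 0, 1, 1, 1, 1],
]
observed, p_value, ratio = pseudocontingency_test(unit_means, labels)
```

In this toy case, the units are constructed to depend only on the contingency group, so the true labels explain all of the between-condition variance and shuffled labels rarely do as well.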

Discussion

In this study, we defined a representational schema for delay tasks in which network states encode the mapping between expected stimuli and actions termed the contingency representation. We developed a task, the CDL task, capable of dissociating contingency representations from stimulus or response representations. RNN models trained on the CDL task developed enrichment of contingency in the representations used to perform the task. The structure of contingency representations in the model naturally captures experimentally observed phenomena, including context-dependent tuning, mixed selectivity, and dynamic coding for working memory. Human performance on the CDL task exhibited interconditional RT variance consistent with a contingency-based strategy and largely inconsistent with a stimulus memory strategy. Lastly, we generated falsifiable predictions for neural recordings of animals or humans performing the CDL task and compared results with alternative models.

Contingency representations can potentially unify memory, planning, and cognitive control in a manner that does not require an external system to control the interactions between these subprocesses. In delay tasks where cues have a one-to-one correspondence with contingencies, the contingency representation is essentially indistinguishable from a sensory representation. In tasks where responses can be directly inferred from predelay cues, contingency states divide along future motor responses. Interestingly, tasks such as the CDL can exhibit both of the above cases. This directly links working memory to cognitive control through representational structure rather than positing an exogenous controller of inputs as in prior modeling (39). Importantly, in neural systems, contingency need not work as a stand-alone representation but rather, can form part of a representation useful for the completion of a given task in combination with other features. In such cases, we might expect to see contingency enrichment in representations rather than a representation that is purely encoding contingency, as we observe in our trained RNN models.

Contingency representations form a specific instantiation of the more broad concept of task sets (40). Prior research has indicated that human learning is consistent with the application and reuse of one-stage task sets as contingencies (41). Our behavioral data show that humans can update their internal plans on the fly during a working memory task between conditions of open contingency and closed contingency. We note that other models, such as selective output gating, could also produce similar behavioral closure effects (26). While our analysis of RT variance provides some evidence for human collapse of contingency states, further experimental investigation is needed.

While contingency representations share the prospective aspects of prior predictive models of working memory, they differ in several crucial aspects. In contrast to predictive sensory theories of working memory (7, 42), it is the expected stimuli–action interactions rather than expected stimuli themselves that are encoded and selected in the establishment of contingency tuning. Further, where prior models of action-focused prospective working memory (43, 44) will treat states with the same expected distribution of actions and states as indistinguishable, such states can have different contingency representations. For example, the [0-1] contingency and the [1-0] contingency have no difference in the probability of either the upcoming sensory cues or actions, yet in the contingency framework, they remain distinct because what is encoded are the relations between predicted upcoming stimuli and actions. Lastly, in contrast to prior models that utilize prospective information as a way to perform task-adaptive stimulus selection for working memory encoding (42, 45), contingency tuning requires an intermediate computation on inputs rather than mere selection. Additionally, while both classes of models predict differences in tuning across tasks, in the contingency framework these are epiphenomena of the correlations between contingency and task variables, whereas in adaptive encoding models they represent true transitions in neural tuning.

One intriguing property of contingency representations is that they can help to unify differing interpretations of neural activity in working memory delay tasks. Prior studies using neuronal recordings from primate association cortex sought to dissociate retrospective sensory and more prospective signals for upcoming responses or expected stimuli (2, 7, 21) and found tuning for task rules (3). Using our RNN model and top-down theory generated from the contingency subspace of the CDL task, we offer a putative explanation for some of the diversity of signals observed. By analyzing the network as it performed pairs of tasks with different geometric relationships in the contingency subspace, we demonstrate that units can appear tuned to rule or stimulus. This is due to the fact that nonlinear transformations from rule and cue A lead to different forms of collinearity in the contingency subspace.

The hypothesis that contingency could explain these results is bolstered by our behavioral results indicating that RTs in our delay task are well explained by contingency. RTs have been shown to reflect cognitive processes (46), including difference in preparation and intention (47), and have been related closely to neural activity across the cortex (48, 49). The observations that RT was strongly modulated by condition (closed vs. open) and that idiosyncratic RT variance could be explained by contingency in the CDL task provide evidence that participants utilized contingency during task performance.

Empirical analyses of unbiased samples of neurons have found that substantial populations are tuned to multiple features in a task. This phenomenon has been termed mixed selectivity, with one hypothesis being that mixed selectivity generates a high-dimensional and mostly unstructured basis to facilitate flexible computation (8, 32). Here, we find that since many nonlinear combinations of rule and stimuli can lead to the same contingency state, units tuned to contingency can appear mixed. While we do find that some units in our RNN are tuned to cue A and rule, units are consistently better tuned to contingency and therefore, would appear as mixed to traditional linear analysis methods. This contingency tuning, however, is highly structured. Our finding is, therefore, better matched to recent research exploring the trade-off between generalization and flexibility as a function of between-condition structure (38).

Recent studies have indicated that there are substantial temporal dynamics in the pattern of activity seen during cue onset and early delay compared with late delay (24, 50–52). One hypothesis is that the mechanisms of neural persistence are intrinsically dynamic (53). In our RNN, representations demonstrate what appears to be dynamics as the network moves from a stimulus-dominant representation in the cue epoch to a contingency representation by the late-delay epoch. In many experimental contexts, contingencies are correlated with stimuli. In such cases, the stimuli to contingency dynamics may appear as a change or rotation in stimulus coding (Fig. 2).

One important advantage to utilizing task-trained RNN modeling in this study is that we do not impose any specific internal representation, thus allowing for emergent phenomena constrained by the task itself (14, 17). This strongly contrasts with most of the prior working memory modeling, in which the structure of delay states is prespecified by the researchers (12, 54). Further, observability and controllability over the RNN during information processing enabled us to implement precise perturbations to measure the causal role of contingency representations in behavior. These features enabled us to expand on recent work identifying shared modules in a trained multitask network performing many tasks previously identified with prefrontal activity (17). In complement to the heuristic modularity they observed, the precision of the CDL task in sharing common computational intermediate stages allowed us to produce a top-down theory of modularity that we could then use to guide investigation and measure exact modular overlap in our RNN.

Our study opens substantial theoretical and experimental questions for future research. One important question is whether differences in task demands would lead to alternative strategies to contingency being preferred. For tasks like CDL, in which stimulus dimensionality is higher than response dimensionality, the overall complexity of the problem can be reduced by computing through contingencies rather than stimuli. For tasks with higher response dimensionality than stimulus dimensionality, however, it is unclear whether contingency representations would be advantageous. Furthermore, contingency is not fully invertible as it is not isomorphic with cue A and rule and therefore, may be unsuitable in contexts in which it is often necessary to return to the previous cue information. Of note, while contingency does reduce the CDL problem complexity, it does not reduce the amount of information stored mnemonically as contingency must be stored as two bits: one to encode a response if cue B is zero and one if cue B is one. In contrast, the sensory strategy can function with a one-bit memory, combining with the tonically present rule information only at cue B onset. To study the impact of working memory capacity on representational structure, the CDL task could be generalized to arbitrary dimensionalities of cue and action spaces to systematically examine how capacity in both memory and problem complexity impacts strategies in human participants and in task-trained artificial neural network models. Even within the original CDL tasks, it is unclear whether all neural network architectures will develop contingency representations. As we identify theoretically and in our RNNs, for the CDL task contingencies provide a set of states that can be jointly divided by the same two hyperplanes. RNNs, however, are capable of performing robust nonlinear computation. For this reason, open questions include why linearly separable contingency states develop in networks during training with these task designs and whether these representations may not develop in networks with different architectural constraints.

Future experiments can record neural activity patterns from human participants or animals performing CDL tasks to test whether internal representations are governed by contingency (27–30). Monkeys can be trained to perform logical operations of the type presented in the CDL task (55). Task feature conjunctions necessary for contingency representations can be identified in humans using electroencephalogram (EEG) recordings and associated with RT behavior (31). Neuroimaging could localize representational heterogeneity among cortical regions during the CDL task and explore how changes in information flow between sensory, frontal, and motor areas accompany the differential information routing for which we found behavioral evidence (56). Furthermore, such experiments could be augmented by modulating the computational constraints imposed by the specific task design. As we demonstrate in the “late-rule” and “output gap” task variants (SI Appendix, Fig. S4) as well as in the feed-forward network analysis (SI Appendix, Fig. S5), the appearance of contingency coding can be shaped by controllable task demands. In turn, future modeling can incorporate aspects of known neurobiology, which are potentially important for neural circuit computation, to extend beyond the relatively simple architecture used here. Neurobiologically motivated properties, such as Dale’s principle (57, 58), short-term synaptic plasticity (59), or multiregional connectivity constraints (60), could be explored for their effects on the emergence or structure of contingency representations.

In conclusion, we introduced a representational schema, contingency representations, capable of unifying working memory and planning without the use of an external controller. We found evidence of contingency-based strategies in human behavior. In a task-trained neural network model, we identified ways in which contingency representations can explain findings on tuning from neurophysiological experiments on working memory as well as provide testable hypotheses for future studies of neural activity during cognitive tasks.

Materials and Methods

Methods for analyses and models are provided in SI Appendix, SI Text.

Acknowledgments

We thank members of the laboratory of J.D.M. for useful discussions and Rishidev Chaudhuri for feedback on an earlier version of the manuscript. This research was supported by the Gruber Foundation (D.B.E.), NIH Grant R01MH112746 (to J.D.M.), and NSF NeuroNex Grant 2015276 (to J.D.M.).

Footnotes

The authors declare no competing interest.

This article is a PNAS Direct Submission. S.F. is a guest editor invited by the Editorial Board.

This article contains supporting information online at https://www.pnas.org/lookup/suppl/doi:10.1073/pnas.2115610119/-/DCSupplemental.

Data Availability, Materials, and Software

Choice and RT data from human subjects as well as RNN model code have been deposited in a publicly available GitHub repository (https://github.com/murraylab/conditionalDelayedLogicTask) (61).

References

- 1.Funahashi S., Bruce C. J., Goldman-Rakic P. S., Mnemonic coding of visual space in the monkey’s dorsolateral prefrontal cortex. J. Neurophysiol. 61, 331–349 (1989). [DOI] [PubMed] [Google Scholar]

- 2.Quintana J., Fuster J. M., From perception to action: Temporal integrative functions of prefrontal and parietal neurons. Cereb. Cortex 9, 213–221 (1999). [DOI] [PubMed] [Google Scholar]

- 3.Wallis J. D., Anderson K. C., Miller E. K., Single neurons in prefrontal cortex encode abstract rules. Nature 411, 953–956 (2001). [DOI] [PubMed] [Google Scholar]

- 4.Wu Z., et al., Context-dependent decision making in a premotor circuit. Neuron 106, 316–328.e6 (2020). [DOI] [PubMed] [Google Scholar]

- 5.Goldman-Rakic P. S., Cellular basis of working memory. Neuron 14, 477–485 (1995). [DOI] [PubMed] [Google Scholar]

- 6.Constantinidis C., Procyk E., The primate working memory networks. Cogn. Affect. Behav. Neurosci. 4, 444–465 (2004). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Rainer G., Rao S. C., Miller E. K., Prospective coding for objects in primate prefrontal cortex. J. Neurosci. 19, 5493–5505 (1999). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Rigotti M., et al., The importance of mixed selectivity in complex cognitive tasks. Nature 497, 585–590 (2013). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Lindsay G. W., Rigotti M., Warden M. R., Miller E. K., Fusi S., Hebbian learning in a random network captures selectivity properties of the prefrontal cortex. J. Neurosci. 37, 11021–11036 (2017). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.Bouchacourt F., Buschman T. J., A flexible model of working memory. Neuron 103, 147–160.e8 (2019). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Wang X. J., Decision making in recurrent neuronal circuits. Neuron 60, 215–234 (2008). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Brunel N., Wang X. J., Effects of neuromodulation in a cortical network model of object working memory dominated by recurrent inhibition. J. Comput. Neurosci. 11, 63–85 (2001). [DOI] [PubMed] [Google Scholar]

- 13.Kriegeskorte N., Deep neural networks: A new framework for modeling biological vision and brain information processing. Annu. Rev. Vis. Sci. 1, 417–446 (2015). [DOI] [PubMed] [Google Scholar]

- 14.Barak O., Recurrent neural networks as versatile tools of neuroscience research. Curr. Opin. Neurobiol. 46, 1–6 (2017). [DOI] [PubMed] [Google Scholar]

- 15.Remington E. D., Narain D., Hosseini E. A., Jazayeri M., Flexible sensorimotor computations through rapid reconfiguration of cortical dynamics. Neuron 98, 1005–1019.e5 (2018). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16.Mastrogiuseppe F., Ostojic S., Linking connectivity, dynamics, and computations in low-rank recurrent neural networks. Neuron 99, 609–623.e29 (2018). [DOI] [PubMed] [Google Scholar]

- 17. Yang G. R., Joglekar M. R., Song H. F., Newsome W. T., Wang X. J., Task representations in neural networks trained to perform many cognitive tasks. Nat. Neurosci. 22, 297–306 (2019).

- 18. Cueva C. J., et al., Low-dimensional dynamics for working memory and time encoding. Proc. Natl. Acad. Sci. U.S.A. 117, 23021–23032 (2020).

- 19. Miller E. K., Erickson C. A., Desimone R., Neural mechanisms of visual working memory in prefrontal cortex of the macaque. J. Neurosci. 16, 5154–5167 (1996).

- 20. Wang X. J., Synaptic reverberation underlying mnemonic persistent activity. Trends Neurosci. 24, 455–463 (2001).

- 21. Funahashi S., Chafee M. V., Goldman-Rakic P. S., Prefrontal neuronal activity in rhesus monkeys performing a delayed anti-saccade task. Nature 365, 753–756 (1993).

- 22. Mante V., Sussillo D., Shenoy K. V., Newsome W. T., Context-dependent computation by recurrent dynamics in prefrontal cortex. Nature 503, 78–84 (2013).

- 23. Rossi-Pool R., et al., Decoding a decision process in the neuronal population of dorsal premotor cortex. Neuron 96, 1432–1446.e7 (2017).

- 24. Spaak E., Watanabe K., Funahashi S., Stokes M. G., Stable and dynamic coding for working memory in primate prefrontal cortex. J. Neurosci. 37, 6503–6516 (2017).

- 25. Cavanagh S. E., Towers J. P., Wallis J. D., Hunt L. T., Kennerley S. W., Reconciling persistent and dynamic hypotheses of working memory coding in prefrontal cortex. Nat. Commun. 9, 3498 (2018).

- 26. Chatham C. H., Frank M. J., Badre D., Corticostriatal output gating during selection from working memory. Neuron 81, 930–942 (2014).

- 27. Haynes J. D., Rees G., Decoding mental states from brain activity in humans. Nat. Rev. Neurosci. 7, 523–534 (2006).

- 28. Kriegeskorte N., Mur M., Bandettini P., Representational similarity analysis - connecting the branches of systems neuroscience. Front. Syst. Neurosci. 2, 4 (2008).

- 29. Soon C. S., He A. H., Bode S., Haynes J. D., Predicting free choices for abstract intentions. Proc. Natl. Acad. Sci. U.S.A. 110, 6217–6222 (2013).

- 30. Mok R. M., Love B. C., Abstract neural representations of category membership beyond information coding stimulus or response. J. Cogn. Neurosci., 10.1162/jocn_a_01651 (2020).

- 31. Kikumoto A., Mayr U., Conjunctive representations that integrate stimuli, responses, and rules are critical for action selection. Proc. Natl. Acad. Sci. U.S.A. 117, 10603–10608 (2020).

- 32. Barak O., Rigotti M., Fusi S., The sparseness of mixed selectivity neurons controls the generalization-discrimination trade-off. J. Neurosci. 33, 3844–3856 (2013).

- 33. Wong K. F., Wang X. J., A recurrent network mechanism of time integration in perceptual decisions. J. Neurosci. 26, 1314–1328 (2006).

- 34. Hunt L. T., et al., Triple dissociation of attention and decision computations across prefrontal cortex. Nat. Neurosci. 21, 1471–1481 (2018).

- 35. Ahlheim C., Love B. C., Estimating the functional dimensionality of neural representations. Neuroimage 179, 51–62 (2018).

- 36. Farrell M., Recanatesi S., Moore T., Lajoie G., Shea-Brown E., Recurrent neural networks learn robust representations by dynamically balancing compression and expansion. bioRxiv [Preprint] (2019). https://www.biorxiv.org/content/10.1101/564476v2.full (Accessed 23 August 2021).

- 37. Badre D., Bhandari A., Keglovits H., Kikumoto A., The dimensionality of neural representations for control. Curr. Opin. Behav. Sci. 38, 20–28 (2021).

- 38. Bernardi S., et al., The geometry of abstraction in the hippocampus and prefrontal cortex. Cell 183, 954–967.e21 (2020).

- 39. Cohen J. D., Servan-Schreiber D., McClelland J. L., A parallel distributed processing approach to automaticity. Am. J. Psychol. 105, 239–269 (1992).

- 40. Sakai K., Task set and prefrontal cortex. Annu. Rev. Neurosci. 31, 219–245 (2008).

- 41. Collins A. G. E., Frank M. J., Cognitive control over learning: Creating, clustering, and generalizing task-set structure. Psychol. Rev. 120, 190–229 (2013).

- 42. Rowe J. B., Toni I., Josephs O., Frackowiak R. S., Passingham R. E., The prefrontal cortex: Response selection or maintenance within working memory? Science 288, 1656–1660 (2000).

- 43. Parr T., Rikhye R. V., Halassa M. M., Friston K. J., Prefrontal computation as active inference. Cereb. Cortex 30, 682–695 (2020).

- 44. Procyk E., Goldman-Rakic P. S., Modulation of dorsolateral prefrontal delay activity during self-organized behavior. J. Neurosci. 26, 11313–11323 (2006).

- 45. Duncan J., An adaptive coding model of neural function in prefrontal cortex. Nat. Rev. Neurosci. 2, 820–829 (2001).

- 46. Ratcliff R., McKoon G., The diffusion decision model: Theory and data for two-choice decision tasks. Neural Comput. 20, 873–922 (2008).

- 47. Rosenbaum D. A., Human movement initiation: Specification of arm, direction, and extent. J. Exp. Psychol. Gen. 109, 444–474 (1980).

- 48. Churchland M. M., Yu B. M., Ryu S. I., Santhanam G., Shenoy K. V., Neural variability in premotor cortex provides a signature of motor preparation. J. Neurosci. 26, 3697–3712 (2006).

- 49. Snyder L. H., Dickinson A. R., Calton J. L., Preparatory delay activity in the monkey parietal reach region predicts reach reaction times. J. Neurosci. 26, 10091–10099 (2006).

- 50. Stokes M. G., et al., Dynamic coding for cognitive control in prefrontal cortex. Neuron 78, 364–375 (2013).

- 51. Murray J. D., et al., Stable population coding for working memory coexists with heterogeneous neural dynamics in prefrontal cortex. Proc. Natl. Acad. Sci. U.S.A. 114, 394–399 (2017).

- 52. Parthasarathy A., et al., Time-invariant working memory representations in the presence of code-morphing in the lateral prefrontal cortex. Nat. Commun. 10, 4995 (2019).

- 53. Barak O., Sussillo D., Romo R., Tsodyks M., Abbott L. F., From fixed points to chaos: Three models of delayed discrimination. Prog. Neurobiol. 103, 214–222 (2013).

- 54. Machens C. K., Romo R., Brody C. D., Flexible control of mutual inhibition: A neural model of two-interval discrimination. Science 307, 1121–1124 (2005).

- 55. Buschman T. J., Machon M., Miller E. K., Comparison of AND, OR, and XOR rules in monkeys. Program No. 63.16. Neuroscience 2006 Abstracts (Society for Neuroscience, Atlanta, GA, 2006). Online.

- 56. Siegel M., Buschman T. J., Miller E. K., Cortical information flow during flexible sensorimotor decisions. Science 348, 1352–1355 (2015).

- 57. Song H. F., Yang G. R., Wang X. J., Training excitatory-inhibitory recurrent neural networks for cognitive tasks: A simple and flexible framework. PLoS Comput. Biol. 12, e1004792 (2016).

- 58. Ehrlich D. B., Stone J. T., Brandfonbrener D., Atanasov A., Murray J. D., PsychRNN: An accessible and flexible python package for training recurrent neural network models on cognitive tasks. eNeuro 8, ENEURO.0427-20.2020 (2021).

- 59. Masse N. Y., Yang G. R., Song H. F., Wang X. J., Freedman D. J., Circuit mechanisms for the maintenance and manipulation of information in working memory. Nat. Neurosci. 22, 1159–1167 (2019).

- 60. Pinto L., et al., Task-dependent changes in the large-scale dynamics and necessity of cortical regions. Neuron 104, 810–824.e9 (2019).

- 61. Ehrlich D. B., Murray J. D., conditionalDelayedLogicTask. GitHub. https://github.com/murraylab/conditionalDelayedLogicTask. Deposited 17 August 2022.

Data Availability Statement

Choice and RT data from human subjects as well as RNN model code have been deposited in a publicly available GitHub repository (https://github.com/murraylab/conditionalDelayedLogicTask) (61).