Abstract

Given the costliness of HIV drug therapy research, it is important not only to maximize the true positive rate (TPR) by identifying which genetic markers are related to drug resistance, but also to minimize the false discovery rate (FDR) by reducing the number of incorrectly selected markers that are unrelated to drug resistance. In this study, we propose a multiple testing procedure that unifies key concepts in computational statistics, namely Model-free Knockoffs, Bayesian variable selection, and the local false discovery rate. We develop an algorithm that uses the augmented data-knockoff matrix and implements the Bayesian Lasso. We then identify signals using test statistics based on Markov chain Monte Carlo outputs and the local false discovery rate. We test our proposed methods against non-Bayesian methods such as Benjamini–Hochberg (BHq) and Lasso regression in terms of TPR and FDR. Using numerical studies, we show that the proposed method yields lower FDR than BHq and Lasso in certain cases, such as the low- and equi-dimensional cases. We also discuss an application to an HIV-1 data set; in future work, we aim to apply the method to analyzing genetic markers linked to drug-resistant HIV in the Philippines.

Keywords: Bayesian variable selection, Model-free Knockoffs, False discovery control, Drug resistant HIV-1

Introduction

Through the continued development of modern computing technology, it has become easier to store, synthesize, and extract insights from large-scale data. A major focus of these developments is variable selection, which deals with identifying the subset of characteristics, features, or covariates that are important in describing an observed phenomenon. For instance, regression has been a go-to technique in many fields for determining significant features from a diverse set of variables. Several methods have been developed over the years for specialized purposes such as high-dimensional and sparse data, among them Bayesian statistical methods (Guan and Stephens 2011; Sindhu et al. 2017, 2019). These techniques aim to increase the accuracy of variable selection and, in turn, to provide more insight into the outcome of interest.

This development is particularly evident in genomics, the field of study concerned with the structure, function, evolution, and mapping of genomes, the genetic material that describes an organism (McKusick and Ruddle 1987). Advancements in the discovery of genetic markers for applications such as studying genetic variability, species identification, and medicine have flourished over the past decade. Moreover, the detection of significant single-nucleotide polymorphisms (SNPs), which are genetic variations in DNA, has paved the way for specialized drug delivery by detecting genetic markers that point to drug resistance (Metzner 2016). It is now typical to observe thousands or millions of covariates in genome-wide association studies (GWAS). In a typical GWAS, a large number of genetic markers such as SNPs are measured from thousands of individuals, and the primary goal is to identify which parts of the genome harbor markers that affect a physical characteristic, such as drug resistance (Guan and Stephens 2011).

One particularly important application of genomics is the study of the global Human Immunodeficiency Virus (HIV) pandemic. Researchers agree that understanding the virus’ genetic sequence is key to developing both vaccines and treatments for those affected. Yet one problem that arises in developing treatments for HIV drug resistance is that genetic markers may be incorrectly detected as significant contributors to drug resistance (false positives). This is especially risky because of possible complications and side effects from taking the wrong HIV drug. For example, if genomic traits identified in an individual falsely indicate resistance to a certain drug, then the wrong drug may be administered, leading to various side effects. Also, given the wrong drug, patients may have to take larger doses to compensate for the resistance (Jaymalin 2018). Meanwhile, undetected drug resistance may lead to an increase in viral load in the host’s body, severely weakening the immune system and potentially leading to full-blown Acquired Immunodeficiency Syndrome (AIDS) (Nasir et al. 2017; Kuritzkes 2011; National Institutes of Health 2020).

The study is motivated by the growing HIV problem in the Philippines, one of the few countries in the world where the HIV infection rate is accelerating (World Health Organization 2018) and which is also experiencing a rise in drug-resistant HIV. Unfortunately, drug resistance testing is not routine because testing kits are unavailable or expensive (Jaymalin 2018). Given that the country has limited access to both testing and a wide range of anti-retroviral drugs, the University of the Philippines National Institutes of Health (UP NIH) is developing a cheap and accessible diagnostic kit for HIV drug resistance testing (Macan 2019). The authors aim to develop a methodology for analyzing genetic markers linked to drug-resistant HIV in the Philippines, to be applied once the diagnostic kit is publicly available and mass testing allows data to be collected.

In summary, this study proposes a method to detect true signals among nulls while ensuring false discovery control. We bridge concepts in computational statistics, namely false discovery control (Efron et al. 2001) and Bayesian Lasso regression (Park and Casella 2008), with the newer concept of “knockoffs” introduced by Barber and Candès (2015). We use both numerically simulated data and a real-world HIV-1 dataset to demonstrate the proposed method’s potential for detecting genetic markers with false discovery control, compared with existing methodologies.

Review of related literature

False discovery rate and Knockoffs

Multiple testing procedures have become increasingly important in the era of big data. To sift through large datasets and find the tiny nuggets of gold that are significant variables, innovations in multiple testing procedures have been introduced since the 1990s (Westfall and Young 1993; Efron et al. 2001; Dudoit et al. 2003; Efron 2008). This is especially important in fields like genomics, where minimizing false discoveries in gene and mutation detection allows for more accurate drug delivery and specialized treatments, among other important discoveries. The primary objective is to test p pairs of null and alternative hypotheses (Efron et al. 2001), $H_{0j}: \beta_j = 0$ versus $H_{1j}: \beta_j \neq 0$ for $j = 1, \dots, p$, using a decision rule based on a pre-defined test statistic that decides whether each hypothesis is null or non-null. Our goal is to minimize the false discovery proportion $Q = V/(V + S)$, with $Q = 0$ when $V + S = 0$, where V and S are the numbers of false and true discoveries, respectively, among the rejected null hypotheses.

Benjamini and Hochberg (1995) proposed the concept of the False Discovery Rate (FDR), defined as the expected proportion of false rejections among rejected hypotheses, $\mathrm{FDR} = E[Q] = E\left[V / \max(R, 1)\right]$, where $R = V + S$ is the number of discoveries, i.e., the number of variables tagged as significant. They also proposed a distribution-free, linear step-up method that controls the FDR.
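As a minimal sketch of the linear step-up procedure just described (assuming independent p-values, the setting of the original proof), the function below sorts the p-values, finds the largest k with $p_{(k)} \leq kq/m$, and rejects the k smallest. The function name `benjamini_hochberg` and the default level q = 0.10 are illustrative choices, not taken from the study.

```python
import numpy as np


def benjamini_hochberg(pvalues, q=0.10):
    """Return a boolean mask of hypotheses rejected by the BH step-up rule at level q."""
    p = np.asarray(pvalues, dtype=float)
    m = p.size
    order = np.argsort(p)                      # indices of p-values in ascending order
    critical = np.arange(1, m + 1) * q / m     # k * q / m for k = 1, ..., m
    below = p[order] <= critical
    reject = np.zeros(m, dtype=bool)
    if below.any():
        k = np.nonzero(below)[0].max()         # largest (0-based) k meeting its bound
        reject[order[: k + 1]] = True          # step-up: reject every smaller p-value too
    return reject


# Step-up in action: 0.02 fails its own bound (1 * 0.05 / 3), but it is still
# rejected because a larger p-value (0.04 <= 3 * 0.05 / 3) passes.
print(benjamini_hochberg([0.04, 0.03, 0.02], q=0.05))
```

The "step-up" character is the design point: once the largest qualifying rank is found, all hypotheses with smaller p-values are rejected, even if they individually miss their own critical value.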

While the method of Benjamini and Hochberg (1995) has since been the standard for false discovery control, the landmark framework of Barber and Candès (2015) introduced a key innovation: constructing a “fake”, or knockoff, design matrix $\tilde{X}$ that mimics the correlation structure of the original design matrix $X$. With $\tilde{X}$ in hand, it becomes possible to create test statistics, say $W_j$, for each variable $j$ such that the differences between the original variable and its knockoff are large for non-null cases and small for null cases (Barber and Candès 2015). They proved theoretically that the procedure controls the FDR using a data-dependent threshold. By keeping the FDR below a target level, the selected discoveries are more likely to be true and replicable. Implementing the knockoffs framework can generally be summarized in three steps:

Construct knockoffs from the original design matrix

Compute knockoff statistics

Find the knockoff threshold
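The first step can be illustrated with a minimal sketch of Gaussian model-X knockoffs (Candès et al. 2018) using the equicorrelated choice $D = s I$, $s = \min(2\lambda_{\min}(\Sigma), 1)$. It assumes the rows of $X$ are i.i.d. $N(0, \Sigma)$ with $\Sigma$ known and scaled to a correlation matrix; in practice $\Sigma$ must be estimated, and this is not necessarily the construction used in this study. Knockoffs are drawn from $\tilde{X} \mid X \sim N(X - X\Sigma^{-1}D,\; 2D - D\Sigma^{-1}D)$; the helper name `gaussian_knockoffs` is hypothetical.

```python
import numpy as np


def gaussian_knockoffs(X, Sigma, rng):
    """Sample equicorrelated Gaussian model-X knockoffs (assumes rows of X ~ N(0, Sigma))."""
    p = Sigma.shape[0]
    s = min(2.0 * np.linalg.eigvalsh(Sigma).min(), 1.0)   # equicorrelated s_j = s
    D = s * np.eye(p)
    Sigma_inv_D = np.linalg.solve(Sigma, D)               # Sigma^{-1} D
    cond_mean = X - X @ Sigma_inv_D                       # E[X_tilde | X], row-wise
    cond_cov = 2.0 * D - D @ Sigma_inv_D                  # Cov[X_tilde | X]
    # Symmetric square root; clip tiny negative eigenvalues caused by round-off.
    w, Q = np.linalg.eigh(cond_cov)
    root = Q @ np.diag(np.sqrt(np.clip(w, 0.0, None))) @ Q.T
    return cond_mean + rng.standard_normal(X.shape) @ root


rng = np.random.default_rng(0)
Sigma = np.array([[1.0, 0.5, 0.25], [0.5, 1.0, 0.5], [0.25, 0.5, 1.0]])  # AR(1), rho = 0.5
X = rng.multivariate_normal(np.zeros(3), Sigma, size=20_000)
X_knock = gaussian_knockoffs(X, Sigma, rng)
X_aug = np.hstack([X, X_knock])   # the n x 2p augmented matrix [X, X_tilde]
```

By construction the knockoffs share the covariance $\Sigma$ of the originals, while $\mathrm{Cov}(X, \tilde{X}) = \Sigma - D$, so each knockoff is less correlated with its own original than the original is with itself.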

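Our proposed method primarily focuses on creating new test statistics for controlled variable selection. To compute the test statistics, 2p statistics $Z_1, \dots, Z_{2p}$ are first computed from the augmented data matrix $[X, \tilde{X}]$ (an $n \times 2p$ matrix), and each $W_j$, $j = 1, \dots, p$, is then computed from the pair $Z_j$ and $Z_{j+p}$. A large positive value of $W_j$ means that the original variable $j$ enters the model before its knockoff (index $j + p$). The crux of the knockoff method’s guaranteed FDR control is the choice of a data-dependent threshold. We select variable $j$ when $W_j$ is positive and at least the threshold $T$, i.e., $W_j \geq T$, where, for a target FDR level $q$, Barber and Candès (2015) define

$$T = \min\left\{ t > 0 : \frac{\#\{j : W_j \leq -t\}}{\max\left(\#\{j : W_j \geq t\}, 1\right)} \leq q \right\},$$

with $t$ ranging over the nonzero values of $|W_j|$. The knockoff+ threshold $T_{+}$ is defined in the same way, with 1 added to the numerator.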
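The third step (finding the threshold) can be sketched as follows, given any vector of knockoff statistics $W_1, \dots, W_p$. This follows the knockoff and knockoff+ definitions of Barber and Candès (2015); the function names and the toy statistics are illustrative assumptions.

```python
import numpy as np


def knockoff_threshold(W, q=0.10, plus=False):
    """Data-dependent threshold of Barber and Candès (2015).

    plus=False gives the knockoff threshold T; plus=True gives knockoff+ T+.
    Returns np.inf when no candidate t meets the bound, so nothing is selected.
    """
    W = np.asarray(W, dtype=float)
    offset = 1.0 if plus else 0.0
    for t in np.sort(np.abs(W[W != 0])):        # candidate thresholds, smallest first
        fdp_hat = (offset + np.sum(W <= -t)) / max(1, np.sum(W >= t))
        if fdp_hat <= q:
            return t
    return np.inf


def knockoff_select(W, q=0.10, plus=False):
    """Indices j with W_j at or above the chosen threshold."""
    W = np.asarray(W, dtype=float)
    return np.nonzero(W >= knockoff_threshold(W, q, plus))[0]


W = np.array([5.0, 4.0, 3.0, 2.0, 1.5, -1.0, 0.5, 0.2, -0.1, 0.0])
print(knockoff_select(W, q=0.2))             # knockoff threshold
print(knockoff_select(W, q=0.2, plus=True))  # knockoff+ threshold (more conservative)
```

Negative $W_j$ act as an estimate of how many null variables would cross $t$ by chance; the "+1" in knockoff+ makes that estimate slightly pessimistic, which is what buys exact FDR control at the cost of fewer selections.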

While the Benjamini–Hochberg procedure is phrased in terms of classical p-values, in large-scale testing, where thousands of p-values are computed at once, it is important that each outcome is judged on its own terms and not with respect to the hypothetical possibility of more extreme results (Efron 2012). Thus, Efron et al. (2001) introduced the local false discovery rate (lfdr), motivated by a Bayesian idea and implemented using empirical Bayes methods for large-scale testing. The local false discovery rate measures confidence that each effect is non-zero among a large number of imprecise measurements in large-scale multiple testing (Korthauer et al. 2019). Efron et al. (2001) defined the local false discovery rate as:

$$\mathrm{lfdr}(z) = \Pr(\text{null} \mid z) = \frac{\pi_0 f_0(z)}{f(z)}, \qquad f(z) = \pi_0 f_0(z) + \pi_1 f_1(z), \tag{1}$$

where $\pi_0$ is the proportion of nulls, $\pi_1 = 1 - \pi_0$ is the proportion of non-nulls, $f_0$ is the null density, and $f_1$ is the non-null density. The null density $f_0$ is assumed known, while the mixture density $f$ can be estimated. Consequently, we can either estimate the mixture density $f$ directly, or estimate $\pi_1$ and $f_1$ and then plug them into $f = \pi_0 f_0 + \pi_1 f_1$ (Efron 2012). The local FDR is interpreted analogously to the frequentist p-value: smaller local FDR values provide stronger evidence against the null hypothesis, which is rejected when its local FDR falls below a specified level.

Bayesian Lasso

Regression methods are ubiquitous in statistics for their ability to relate a dependent variable $y$ to a design matrix of independent variables $X$. While many types of regression methods are available, from simple linear to non-linear regression and even nonparametric methods, Bayesian methods have gained ground in recent years due to increased access to computational facilities (O’Hara and Sillanpää 2009; Bijak and Bryant 2016; Robert and Casella 2011). In conjunction with the knockoffs framework discussed in Sect. 2.1, it is fitting to implement relatively straightforward Bayesian regression methods. These methods are advantageous because most end-users find them easier to understand and use than sophisticated adaptive approaches.

Park and Casella (2008) suggested that, based on the form of Tibshirani (1996), the Lasso may be interpreted as a Bayesian posterior mode estimate when the regression parameters have independent and identical double-exponential (Laplace) priors. They formulated a hierarchical specification of the prior distribution for $\beta$ as follows:

| 2 |

| 3 |

| 4 |

| 5 |

| 6 |

Since the intercept $\mu$ may be integrated out, the joint density (marginal only over $\mu$) is proportional to

| 7 |

where (7) depends on $y$ only through $\tilde{y} = y - \bar{y}\mathbf{1}_n$. It is then simpler to form a Gibbs sampler for $\beta$, $\sigma^2$, and $\tau_1^2, \dots, \tau_p^2$ based on this density, because the conjugacy of the other parameters remains unaffected (Park and Casella 2008).
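The double-exponential prior in this hierarchy arises as a normal scale mixture: if $\tau^2 \sim \mathrm{Exp}(\text{rate } \lambda^2/2)$ and $\beta \mid \tau^2 \sim N(0, \sigma^2 \tau^2)$, then marginally $\beta$ is Laplace with scale $\sigma/\lambda$. The Monte Carlo sketch below checks this numerically by comparing empirical tail probabilities with the Laplace tail $e^{-t/b}$; the values of $\lambda$ and $\sigma^2$ are arbitrary illustrative choices.

```python
import numpy as np


def sample_scale_mixture(lam, sigma2, size, rng):
    """Draw beta via tau^2 ~ Exp(rate = lam^2 / 2), then beta | tau^2 ~ N(0, sigma2 * tau^2)."""
    tau2 = rng.exponential(scale=2.0 / lam**2, size=size)   # NumPy's scale is 1 / rate
    return rng.normal(0.0, np.sqrt(sigma2 * tau2))


rng = np.random.default_rng(42)
lam, sigma2 = 1.5, 2.0
beta = sample_scale_mixture(lam, sigma2, 1_000_000, rng)
b = np.sqrt(sigma2) / lam                     # scale of the implied Laplace prior
for t in (0.5, 1.0, 2.0):
    # Laplace tail probability: P(|beta| > t) = exp(-t / b)
    print(t, np.mean(np.abs(beta) > t).round(4), np.exp(-t / b).round(4))
```

This representation is what makes the Gibbs sampler tractable: conditional on the latent $\tau_j^2$, the prior on $\beta$ is normal and therefore conjugate.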

Methodology

As mentioned previously, the primary objective of GWAS is to identify the relevant genetic markers. Statistically, this translates to identifying the nonzero regression coefficients of $\beta$. To test our p pairs of null and alternative hypotheses, $H_{0j}: \beta_j = 0$ versus $H_{1j}: \beta_j \neq 0$ for $j = 1, \dots, p$, we propose a multiple testing procedure based on the Bayesian Lasso and the Knockoffs framework. In conjunction with Bayesian regression methods, the local false discovery rate is used to distinguish signals from noise and to control the FDR appropriately.

Existing literature on the knockoffs framework implementing Bayesian methods is currently scarce (Gimenez et al. 2018; Candès et al. 2018). This paper aims to show that it is feasible to apply computationally intensive Bayesian methods to create knockoff statistics for accurate inference. Combining the techniques of Barber and Candès (2015) and Efron et al. (2001) with Bayesian methods also allows us to incorporate appropriate prior information on the data through the choice of prior distribution. Our use of the local false discovery rate (Efron et al. 2001) also yields easily interpretable measures of importance, which are not available from penalized regression approaches such as the Lasso. Most importantly, in response to the challenge posed by Barber and Candès (2015) to understand and choose statistics that yield high power with FDR control, we aim to show that the proposed feature importance statistics obtain the desired FDR control and display statistical power comparable to that of more commonly used frequentist methods.

To distinguish non-null hypotheses from nulls, we calculate the posterior probability that $\beta_j = 0$ given the observed data $(y, X)$ and the knockoffs $\tilde{X}$. We use the local false discovery rate of Efron et al. (2001) to compute this probability.

However, the local FDR formulation contains unknown quantities, namely the proportion of nulls and the densities of the null and non-null components, which must be estimated. First, we assume that the proportion of nulls follows a Beta distribution and estimate it from the draws generated by the Gibbs sampler. Second, we assume that the null and non-null densities are normal. Using the draws generated by the Gibbs sampler, we estimate the unknown parameters of the null and the non-null distributions, respectively.

We specify that the null distribution is centered at zero, while the non-null distribution is shifted away from zero. This follows Efron (2007)’s “zero assumption”, under which observations around the central peak of the distribution consist mainly of null cases. We also assume that the null and non-null distributions have the same variance.
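Under these assumptions, equation (1) has a closed form. The sketch below evaluates it for a zero-centred null $N(0, \sigma^2)$ and a location-shifted non-null $N(\mu_1, \sigma^2)$ with a shared variance. Here $\pi_0$, $\mu_1$, and $\sigma$ are treated as plug-in values (for instance, estimates derived from Gibbs draws); how the paper obtains them is not reproduced, and the function names and example numbers are hypothetical.

```python
import numpy as np


def normal_pdf(z, mean, sd):
    return np.exp(-0.5 * ((z - mean) / sd) ** 2) / (sd * np.sqrt(2.0 * np.pi))


def local_fdr(z, pi0, mu1, sd):
    """lfdr(z) = pi0 f0(z) / f(z) with f0 = N(0, sd^2) and f1 = N(mu1, sd^2)."""
    z = np.asarray(z, dtype=float)
    f0 = normal_pdf(z, 0.0, sd)           # null: centred at zero (zero assumption)
    f1 = normal_pdf(z, mu1, sd)           # non-null: location shift, same variance
    f = pi0 * f0 + (1.0 - pi0) * f1       # two-group mixture density
    return pi0 * f0 / f


z = np.array([0.0, 1.0, 2.0, 3.0])
print(local_fdr(z, pi0=0.9, mu1=3.0, sd=1.0))
```

With a common variance the density ratio simplifies to $f_1/f_0 = \exp(\mu_1 z/\sigma^2 - \mu_1^2/(2\sigma^2))$, so for $\mu_1 > 0$ the local FDR decreases monotonically in $z$: values near the central peak are almost surely null, while values near $\mu_1$ are likely signals.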

Algorithm for Bayesian Lasso with Knockoffs

Following the discussion of the Bayesian Lasso in Sect. 2.2, we draw the error variance from the inverse gamma distribution. Its conditional posterior uses the augmented data vector, the augmented parameter vector, and hyperparameters A and B:

| 8 |

| 9 |

Meanwhile, we draw the augmented parameter vector from the multivariate normal (MVN) distribution, as in the standard Bayesian Lasso. The augmented data vector, the augmented tuning parameter vector, and the current iterate of the error variance are used to specify the parameters of the MVN as shown below:

| 10 |

| 11 |

| 12 |

To update the latent scale parameters in step 6, we use the previous iterate of the tuning parameter and the current iterates of the regression coefficients and the error variance to get

| 13 |

Finally, to update the tuning parameter in step 7, we use the current iterates of the latent scale parameters, as well as the hyperparameters C and D, to get

| 14 |
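The update steps above follow the structure of the Park and Casella (2008) Gibbs sampler. As a hedged sketch (not the authors' implementation), the function below uses the standard Bayesian Lasso full conditionals for a generic design matrix; in this paper's setting the design would be the augmented matrix $[X, \tilde{X}]$. The priors $\sigma^2 \sim \mathrm{InvGamma}(a, b)$ and $\lambda^2 \sim \mathrm{Gamma}(r, \text{rate } \delta)$, their default values, and the function name are assumptions; (a, b) and (r, δ) are presumably analogous to the hyperparameters A, B and C, D, but the paper's exact parameterization is not reproduced here.

```python
import numpy as np


def bayesian_lasso_gibbs(X, y, n_iter=5000, a=1.0, b=1.0, r=1.0, delta=1.0, seed=0):
    """Gibbs sampler for the Bayesian Lasso (Park and Casella 2008).

    Assumed priors: sigma^2 ~ InvGamma(a, b) and lambda^2 ~ Gamma(r, rate=delta).
    X and y are centred so that a flat-prior intercept is integrated out.
    """
    rng = np.random.default_rng(seed)
    X = X - X.mean(axis=0)
    y = y - y.mean()
    n, p = X.shape
    XtX, Xty = X.T @ X, X.T @ y
    sigma2, inv_tau2, lam2 = 1.0, np.ones(p), 1.0
    beta_draws = np.empty((n_iter, p))
    sigma2_draws = np.empty(n_iter)
    lam2_draws = np.empty(n_iter)
    for t in range(n_iter):
        # beta | rest ~ N(A^{-1} X'y, sigma^2 A^{-1}) with A = X'X + diag(1 / tau^2)
        A = XtX + np.diag(inv_tau2)
        L = np.linalg.cholesky(A)                              # A = L L'
        noise = np.linalg.solve(L.T, rng.standard_normal(p))  # ~ N(0, A^{-1})
        beta = np.linalg.solve(A, Xty) + np.sqrt(sigma2) * noise
        # sigma^2 | rest ~ InvGamma(a + (n-1)/2 + p/2, b + RSS/2 + beta' D^{-1} beta / 2)
        resid = y - X @ beta
        shape = a + (n - 1) / 2 + p / 2
        scale = b + 0.5 * (resid @ resid + beta @ (inv_tau2 * beta))
        sigma2 = 1.0 / rng.gamma(shape, 1.0 / scale)
        # 1/tau_j^2 | rest ~ InverseGaussian(mean = sqrt(lam2 * sigma2 / beta_j^2), shape = lam2)
        inv_tau2 = rng.wald(np.sqrt(lam2 * sigma2 / beta**2), lam2)
        # lambda^2 | rest ~ Gamma(p + r, rate = sum(tau_j^2) / 2 + delta)
        lam2 = rng.gamma(p + r, 1.0 / (0.5 * np.sum(1.0 / inv_tau2) + delta))
        beta_draws[t], sigma2_draws[t], lam2_draws[t] = beta, sigma2, lam2
    return beta_draws, sigma2_draws, lam2_draws


rng = np.random.default_rng(1)
X = rng.standard_normal((100, 5))
y = X @ np.array([3.0, 0.0, 0.0, -2.0, 0.0]) + rng.standard_normal(100)
beta_draws, _, _ = bayesian_lasso_gibbs(X, y, n_iter=2000)
print(beta_draws[500:].mean(axis=0))   # posterior means after a 500-iterate burn-in
```

Sampling $\beta$ through a Cholesky factor of $A$ avoids forming $A^{-1}$ explicitly, and updating $1/\tau_j^2$ (rather than $\tau_j^2$) keeps that step an inverse Gaussian draw, which NumPy provides as `wald`.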

To detect non-null covariates using the Gibbs sampler iterates from the Bayesian regression models, we use the local false discovery rate introduced in Sect. 2.1, computing it at each iteration t:

| 15 |

where

| 16 |

| 17 |

| 18 |

After computing the local false discovery rate , we use it to compute for , defined below. We will use for computing the test statistics, and it also provides a connection between and .

| 19 |

Moreover, in the general case where the number of non-null covariates is truly unknown, a computational procedure based on the local false discovery rate is used to estimate it. Suppose

| 20 |

The full conditional posterior of given is

| 21 |

The steps of the algorithm are outlined below:

Proposed test statistics

In this section, we present two distinct test statistics and two thresholds for the decision rule. These statistic-threshold combinations yield four different decision rules for deciding whether to reject the null hypothesis, which for simplicity we refer to as test statistics. The four decision rules are summarized in Table 1 in the Annex.

Table 1.

Test statistics using iterates of Gibbs samples

| Test statistic | Reject if . | |

|---|---|---|

| . | ||

| . | ||

| . | ||

| . |

After drawing T samples and discarding the first U iterates as burn-in, we are left with V = T − U draws. The proposed test statistics are based on the posterior means of the remaining V iterates. Each statistic is paired with two thresholds: the knockoff threshold defined by Barber and Candès (2015), which guarantees control of a modified FDR, and the knockoff+ threshold, which guarantees control of the FDR itself.
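As a sketch of the post-processing described above, the code below discards U burn-in iterates, averages the remaining V = T − U draws of the augmented coefficient vector, and forms a knockoff statistic. The exact statistics of Table 1 are not reproduced here; as a hypothetical stand-in, it uses a coefficient-difference statistic $W_j = |\bar{b}_j| - |\bar{b}_{j+p}|$ on posterior means, in the spirit of the Lasso coefficient difference behind LCDK. The toy draws are synthetic.

```python
import numpy as np


def posterior_means(draws, burn_in):
    """Posterior means from the V = T - U draws left after discarding U burn-in iterates."""
    return np.asarray(draws)[burn_in:].mean(axis=0)


def coefficient_difference(beta_mean):
    """W_j = |b_j| - |b_{j+p}| for an augmented vector ordered (originals, knockoffs)."""
    beta_mean = np.asarray(beta_mean)
    p = beta_mean.size // 2
    return np.abs(beta_mean[:p]) - np.abs(beta_mean[p:])


# Toy draws: T = 5000 iterates of a 2p = 4 augmented vector, U = 1000 burn-in.
draws = np.vstack([np.full((1000, 4), 100.0),                  # transient burn-in values
                   np.tile([2.0, 0.1, 0.3, 0.5], (4000, 1))])  # post-burn-in draws
W = coefficient_difference(posterior_means(draws, burn_in=1000))
print(W)
```

The resulting W can then be compared against a knockoff or knockoff+ threshold; the burn-in values here are deliberately extreme to show that they do not contaminate the posterior means.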

In the results section, the posterior means used for the proposed decision rules presented in Table 1 are computed from a Gibbs sampler with 5000 iterations and a burn-in of 1000 iterations.

Numerical simulation study

To gather insights into the performance of our proposed models, we perform numerical simulation studies. We first describe the settings of the data-generating procedure and then those of the proposed methods. As an overview, the following settings are used:

Number of observations n: 50, 100, 200

Number of parameters p: 50, 100, 200

Signal noise : 1, 2.5, 3.5

Decision rules:

Prior specifications for : Exponential Distribution Shaped Prior (EXP), Right-Skewed Prior (RSK), Normal-Distribution Shaped Prior (NOR)
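A minimal sketch of a data-generating procedure consistent with these settings is given below, in the style of Barber and Candès (2015): Gaussian design with unit-norm columns, k nonzero coefficients of magnitude equal to the signal amplitude with random signs, and N(0, 1) noise. The number of signals k, the independent design, and the function name are assumptions, since they are not specified here.

```python
import numpy as np


def simulate_data(n, p, k, amplitude, seed=0):
    """Sparse linear model: y = X beta + N(0, 1) noise, with k signals of size +/- amplitude."""
    rng = np.random.default_rng(seed)
    X = rng.standard_normal((n, p))
    X /= np.linalg.norm(X, axis=0)                 # normalise columns to unit length
    beta = np.zeros(p)
    support = rng.choice(p, size=k, replace=False)
    beta[support] = amplitude * rng.choice([-1.0, 1.0], size=k)
    y = X @ beta + rng.standard_normal(n)
    return X, y, beta


X, y, beta = simulate_data(n=100, p=50, k=10, amplitude=3.5)
```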

To ensure that we cover the settings on number of observations n and number of parameters p, we consider nine combinations. The choice of the number of observations and parameters intentionally reflects the original knockoffs literature. Barber and Candès (2015) discussed two cases ( and ). In this paper, we simulate on and a subset of , which is . We also explore a high-dimensional case not explored in the original 2015 study: .

The choice of amplitude $A = 3.5$ reflects the maximal noise level at which it is possible, but not trivial, to distinguish signal from noise (Barber and Candès 2015). They chose this maximal signal amplitude because it is approximately the expected value of $\max_{1 \leq j \leq p} |X_j^\top z|$, where $z \sim N(0, I_n)$.
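The quantity $E[\max_j |X_j^\top z|]$ can be estimated by Monte Carlo. When each column has unit norm, every $X_j^\top z$ is exactly $N(0, 1)$, so this is the expected maximum of p (dependent) standard normal magnitudes, which grows slowly with p. The sketch below assumes an i.i.d. Gaussian design with normalized columns; the function name and replicate count are illustrative.

```python
import numpy as np


def expected_max_abs_correlation(n, p, n_rep=100, seed=0):
    """Monte Carlo estimate of E[max_j |X_j' z|] for unit-norm Gaussian columns, z ~ N(0, I_n)."""
    rng = np.random.default_rng(seed)
    maxima = np.empty(n_rep)
    for rep in range(n_rep):
        X = rng.standard_normal((n, p))
        X /= np.linalg.norm(X, axis=0)     # normalise columns to unit length
        z = rng.standard_normal(n)
        maxima[rep] = np.max(np.abs(X.T @ z))
    return maxima.mean()


for p in (50, 100, 200, 1000):
    print(p, round(expected_max_abs_correlation(n=300, p=p), 2))
```

Comparing the printed values across p gives a sense of how far the largest purely null correlation reaches for the dimensions considered in this study.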

Results and discussions

Numerical simulations

As discussed in Sect. 3.3, we consider combinations of (1) the number of observations n, (2) the number of parameters p, (3) the signal noise, (4) the decision rules, and (5) the three proposed prior specifications. Thus, there are a total of 324 scenarios for the proposed methods, resulting from 9 (n, p) combinations, 3 priors, 4 decision rules, and 3 values of the signal noise.
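The scenario count follows from a full factorial grid, which can be enumerated directly. The labels T1 to T4 for the four decision rules are hypothetical placeholders.

```python
from itertools import product

sample_sizes = (50, 100, 200)                # n
dimensions = (50, 100, 200)                  # p
signal_noise = (1.0, 2.5, 3.5)
priors = ("EXP", "RSK", "NOR")
decision_rules = ("T1", "T2", "T3", "T4")    # hypothetical labels for the four rules

scenarios = list(product(sample_sizes, dimensions, signal_noise, priors, decision_rules))
print(len(scenarios))  # 324 = 9 (n, p) pairs x 3 x 3 x 4
```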

The False Discovery Rate (FDR) and True Positive Rate (TPR) of the proposed methods are then compared with those of existing non-Bayesian (frequentist) methods, namely (1) the Benjamini–Hochberg procedure (BHq in Figure 1) and (2) Lasso regression. Lasso regression was applied within the Knockoffs framework using both the original knockoff threshold and the modified (knockoff+) threshold; the resulting statistics are referred to as LCDK and LCDK+, respectively. FDR and TPR are averaged over 1000 trials.
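The per-trial quantities behind these averages can be sketched as follows: each trial yields a false discovery proportion $V/\max(R, 1)$ and a true positive proportion, and averaging them over trials estimates FDR and TPR. The helper names and toy selections are hypothetical.

```python
import numpy as np


def fdp_tpr(selected, true_support):
    """False discovery proportion and true positive proportion for one trial."""
    selected, true_support = set(selected), set(true_support)
    false_pos = len(selected - true_support)
    true_pos = len(selected & true_support)
    fdp = false_pos / max(len(selected), 1)      # FDP = V / max(R, 1)
    tpr = true_pos / max(len(true_support), 1)
    return fdp, tpr


def average_over_trials(per_trial):
    """Estimate (FDR, TPR) by averaging per-trial (FDP, TPR) pairs."""
    arr = np.asarray(list(per_trial), dtype=float)
    return arr[:, 0].mean(), arr[:, 1].mean()


trial_1 = fdp_tpr(selected=[1, 2, 3, 7], true_support=[1, 2, 3, 4, 5])   # (0.25, 0.6)
trial_2 = fdp_tpr(selected=[1, 2, 3, 4, 5], true_support=[1, 2, 3, 4, 5])  # (0.0, 1.0)
print(average_over_trials([trial_1, trial_2]))
```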

In this study, we compare the BHq, LCDK, and LCDK+ methods with each of the method-prior combinations at a nominal FDR target level of $q = 0.10$.

The summary tables in the Annex (Tables 2, 3, and 4) display the FDR and TPR of the ‘best’ decision rule for each prior setting, number of observations n, number of parameters p, and signal noise. The ‘best’ decision rule for each row (method-prior combination) in each table is the one with the highest TPR while keeping the FDR under the 10% target level.
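This selection criterion can be written as a small constrained maximization. The sketch below treats "under the target" as FDR at or below 10% (an assumption about the boundary case); the rule labels and numbers are hypothetical.

```python
def best_rule(results, target_fdr=0.10):
    """Rule with the highest TPR among rules whose FDR does not exceed target_fdr.

    results maps a rule label to an (FDR, TPR) pair; returns None if no rule qualifies.
    """
    admissible = {rule: v for rule, v in results.items() if v[0] <= target_fdr}
    if not admissible:
        return None
    return max(admissible, key=lambda rule: admissible[rule][1])


# Hypothetical averages for four decision rules in one setting.
example = {"T1": (0.05, 0.80), "T2": (0.12, 0.95), "T3": (0.08, 0.90), "T4": (0.02, 0.60)}
print(best_rule(example))  # T3: T2 has the highest TPR but exceeds the FDR target
```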

Table 2.

Comparison of results for proposed methods when $n > p$

| Signal noise | Prior | FDR (best rule) | TPR (best rule) | ΔTPR, best non-Bayesian method | ΔFDR |
|---|---|---|---|---|---|
| n = 100, p = 50 | | | | | |
| 1 | EXP | 0.01 (0.073) | 0.019 (0.137) | −0.981, BHq | −0.077 |
| | RSK | 0.009 (0.069) | 0.017 (0.129) | −0.983, BHq | −0.079 |
| | NOR | 0.008 (0.065) | 0.014 (0.116) | −0.986, BHq | −0.08 |
| 2.5 | EXP | 0 (0) | 0.939 (0.116) | −0.061, BHq | −0.088 |
| | RSK | 0 (0) | 0.939 (0.115) | −0.061, BHq | −0.088 |
| | NOR | 0 (0) | 0.939 (0.116) | −0.061, BHq | −0.088 |
| 3.5 | EXP | 0 (0) | 0.939 (0.12) | −0.061, BHq | −0.088 |
| | RSK | 0 (0) | 0.938 (0.12) | −0.062, BHq | −0.088 |
| | NOR | 0 (0) | 0.938 (0.121) | −0.062, BHq | −0.088 |
| n = 200, p = 50 | | | | | |
| 1 | EXP | 0.013 (0.083) | 0.024 (0.153) | −0.976, BHq | −0.075 |
| | RSK | 0.013 (0.084) | 0.024 (0.152) | −0.976, BHq | −0.074 |
| | NOR | 0.013 (0.083) | 0.024 (0.153) | −0.976, BHq | −0.074 |
| 2.5 | EXP | 0 (0) | 0.996 (0.029) | −0.004, BHq | −0.087 |
| | RSK | 0 (0) | 0.996 (0.029) | −0.004, BHq | −0.087 |
| | NOR | 0 (0) | 0.995 (0.031) | −0.005, BHq | −0.087 |
| 3.5 | EXP | 0 (0) | 0.997 (0.027) | −0.003, BHq | −0.087 |
| | RSK | 0 (0) | 0.997 (0.026) | −0.003, BHq | −0.087 |
| | NOR | 0 (0) | 0.997 (0.026) | −0.003, BHq | −0.087 |
| n = 200, p = 100 | | | | | |
| 1 | EXP | 0.045 (0.083) | 0.588 (0.489) | −0.412, BHq | −0.048 |
| | RSK | 0.046 (0.084) | 0.593 (0.487) | −0.407, BHq | −0.047 |
| | NOR | 0.045 (0.084) | 0.585 (0.49) | −0.415, BHq | −0.048 |
| 2.5 | EXP | 0 (0) | 0.946 (0.081) | −0.054, BHq | −0.092 |
| | RSK | 0 (0) | 0.946 (0.081) | −0.054, BHq | −0.092 |
| | NOR | 0 (0) | 0.946 (0.081) | −0.054, BHq | −0.092 |
| 3.5 | EXP | 0 (0) | 0.947 (0.081) | −0.053, BHq | −0.092 |
| | RSK | 0 (0) | 0.947 (0.081) | −0.053, BHq | −0.092 |
| | NOR | 0 (0) | 0.946 (0.082) | −0.054, BHq | −0.092 |

Table 3.

Comparison of results for proposed methods when $n = p$

| Signal noise | Prior | FDR (best rule) | TPR (best rule) | ΔTPR, best non-Bayesian method | ΔFDR |
|---|---|---|---|---|---|
| n = 50, p = 50 | | | | | |
| 1 | EXP | 0.014 (0.089) | 0.025 (0.156) | 0.005, LCDK+ | 0.003 |
| | RSK | 0.016 (0.093) | 0.028 (0.165) | 0.008, LCDK+ | 0.005 |
| | NOR | 0.014 (0.087) | 0.024 (0.152) | 0.004, LCDK+ | 0.003 |
| 2.5 | EXP | 0 (0) | 0.999 (0.015) | 0.977, LCDK+ | −0.012 |
| | RSK | 0 (0) | 0.999 (0.015) | 0.977, LCDK+ | −0.012 |
| | NOR | 0 (0) | 0.99 (0.05) | 0.968, LCDK+ | −0.012 |
| 3.5 | EXP | 0 (0.009) | 1 (0) | 0.979, LCDK+ | −0.011 |
| | RSK | 0 (0.009) | 1 (0) | 0.979, LCDK+ | −0.011 |
| | NOR | 0 (0.005) | 1 (0) | 0.979, LCDK+ | −0.011 |
| n = 100, p = 100 | | | | | |
| 1 | EXP | 0.081 (0.103) | 0.959 (0.196) | −0.035, LCDK+ | 0.005 |
| | RSK | 0.08 (0.103) | 0.96 (0.194) | −0.034, LCDK+ | 0.005 |
| | NOR | 0.081 (0.104) | 0.958 (0.199) | −0.036, LCDK+ | 0.005 |
| 2.5 | EXP | 0 (0) | 1 (0) | 0, LCDK+ | −0.076 |
| | RSK | 0 (0) | 1 (0) | 0, LCDK+ | −0.076 |
| | NOR | 0 (0) | 1 (0) | 0, LCDK+ | −0.076 |
| 3.5 | EXP | 0 (0) | 1 (0) | 0, LCDK+ | −0.076 |
| | RSK | 0 (0) | 1 (0) | 0, LCDK+ | −0.076 |
| | NOR | 0 (0) | 1 (0) | 0, LCDK+ | −0.076 |
| n = 200, p = 200 | | | | | |
| 1 | EXP | 0.084 (0.076) | 1 (0) | 0, LCDK+ | 0.001 |
| | RSK | 0.084 (0.076) | 1 (0) | 0, LCDK+ | 0.001 |
| | NOR | 0.085 (0.078) | 1 (0) | 0, LCDK+ | 0.002 |
| 2.5 | EXP | 0 (0) | 1 (0) | 0, LCDK+ | −0.082 |
| | RSK | 0 (0) | 1 (0) | 0, LCDK+ | −0.082 |
| | NOR | 0 (0) | 1 (0) | 0, LCDK+ | −0.082 |
| 3.5 | EXP | 0 (0) | 1 (0) | 0, LCDK+ | −0.082 |
| | RSK | 0 (0) | 1 (0) | 0, LCDK+ | −0.082 |
| | NOR | 0 (0) | 1 (0) | 0, LCDK+ | −0.082 |

Table 4.

Comparison of results for proposed methods when $n < p$

| Signal noise | Prior | FDR (best rule) | TPR (best rule) | ΔTPR, best non-Bayesian method | ΔFDR |
|---|---|---|---|---|---|
| n = 50, p = 100 | | | | | |
| 1 | EXP | 0.035 (0.105) | 0.1 (0.268) | −0.179, LCDK+ | −0.015 |
| | RSK | 0.035 (0.105) | 0.1 (0.269) | −0.179, LCDK+ | −0.015 |
| | NOR | 0.034 (0.104) | 0.096 (0.264) | −0.183, LCDK+ | −0.016 |
| 2.5 | EXP | 0.03 (0.077) | 0.817 (0.228) | −0.037, LCDK+ | −0.05 |
| | RSK | 0.029 (0.072) | 0.816 (0.228) | −0.037, LCDK+ | −0.051 |
| | NOR | 0.021 (0.067) | 0.761 (0.245) | −0.093, LCDK+ | −0.059 |
| 3.5 | EXP | 0.064 (0.094) | 0.936 (0.166) | 0.042, LCDK+ | −0.016 |
| | RSK | 0.063 (0.094) | 0.936 (0.167) | 0.042, LCDK+ | −0.018 |
| | NOR | 0.049 (0.086) | 0.928 (0.175) | 0.035, LCDK+ | −0.031 |
| n = 50, p = 200 | | | | | |
| 1 | EXP | 0.015 (0.084) | 0.013 (0.068) | 0.008, LCDK+ | 0.009 |
| | RSK | 0.015 (0.082) | 0.012 (0.067) | 0.008, LCDK+ | 0.008 |
| | NOR | 0.016 (0.086) | 0.013 (0.068) | 0.009, LCDK+ | 0.01 |
| 2.5 | EXP | 0.017 (0.084) | 0.016 (0.075) | 0.006, LCDK+ | 0.009 |
| | RSK | 0.017 (0.081) | 0.016 (0.075) | 0.006, LCDK+ | 0.008 |
| | NOR | 0.016 (0.082) | 0.016 (0.076) | 0.006, LCDK+ | 0.008 |
| 3.5 | EXP | 0.018 (0.087) | 0.017 (0.079) | 0.006, LCDK+ | 0.008 |
| | RSK | 0.018 (0.087) | 0.017 (0.08) | 0.006, LCDK+ | 0.008 |
| | NOR | 0.017 (0.085) | 0.017 (0.081) | 0.007, LCDK+ | 0.007 |
| n = 100, p = 200 | | | | | |
| 1 | EXP | 0.077 (0.101) | 0.455 (0.343) | −0.338, LCDK+ | −0.005 |
| | RSK | 0.077 (0.1) | 0.456 (0.344) | −0.337, LCDK+ | −0.005 |
| | NOR | 0.077 (0.1) | 0.447 (0.343) | −0.345, LCDK+ | −0.005 |
| 2.5 | EXP | 0.019 (0.043) | 0.953 (0.116) | −0.026, LCDK+ | −0.068 |
| | RSK | 0.018 (0.043) | 0.952 (0.116) | −0.026, LCDK+ | −0.068 |
| | NOR | 0.014 (0.037) | 0.942 (0.125) | −0.036, LCDK+ | −0.072 |
| 3.5 | EXP | 0.037 (0.060) | 0.982 (0.094) | 0, LCDK+ | −0.039 |
| | RSK | 0.036 (0.059) | 0.982 (0.093) | 0, LCDK+ | −0.040 |
| | NOR | 0.031 (0.058) | 0.982 (0.095) | 0, LCDK+ | −0.054 |

To illustrate, Table 2 summarizes the results for $n > p$; its first row shows the results for signal noise 1, $n = 100$, and $p = 50$, using an exponential-distribution-shaped (EXP) prior. The ‘best’ decision rule, that is, the decision rule with the highest TPR while maintaining FDR control, attains an FDR of 0.01 and a TPR of 0.019.

$\Delta\mathrm{FDR}$ and $\Delta\mathrm{TPR}$ represent the differences between the FDR and TPR, respectively, of the ‘best’ decision rule for that proposed Bayesian setting and the FDR and TPR of the ‘best’ non-Bayesian method among BHq, LCDK, and LCDK+. For example, for the first setting (first row) in Table 2, the best frequentist method is BHq, with TPR 1 and FDR 0.087. Since the ‘best’ proposed decision rule has TPR 0.019 and FDR 0.01, it improves on BHq’s FDR by 0.077 ($\Delta\mathrm{FDR} = 0.01 - 0.087 = -0.077$) but lags behind in TPR by 0.981 ($\Delta\mathrm{TPR} = 0.019 - 1 = -0.981$).
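The differences reduce to simple subtraction, proposed minus best non-Bayesian; the sketch below reproduces the first-row example of Table 2. The function name is hypothetical.

```python
def deltas(proposed, frequentist):
    """(Delta FDR, Delta TPR): proposed (FDR, TPR) minus best non-Bayesian (FDR, TPR)."""
    (fdr_prop, tpr_prop), (fdr_freq, tpr_freq) = proposed, frequentist
    return fdr_prop - fdr_freq, tpr_prop - tpr_freq


# First row of Table 2: proposed (FDR 0.01, TPR 0.019) vs. BHq (FDR 0.087, TPR 1).
d_fdr, d_tpr = deltas((0.01, 0.019), (0.087, 1.0))
print(round(d_fdr, 3), round(d_tpr, 3))  # -0.077 -0.981
```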

For these results, a negative $\Delta\mathrm{FDR}$ means that our proposed method for that setting is superior to the ‘best’ frequentist method in terms of minimizing FDR. Conversely, a positive $\Delta\mathrm{TPR}$ means that our proposed method is better than the ‘best’ frequentist method at selecting signals. Thus, ideally, $\Delta\mathrm{FDR}$ is negative and $\Delta\mathrm{TPR}$ is positive.

For brevity, only the tables for signal noise 1, 2.5, and 3.5 are featured in this section. These three values represent the settings in which nulls and signals are heavily mixed, moderately mixed, and well separated, respectively.

Table 2 summarizes the simulation results for the first case, $n > p$. We are able to detect signals consistently when the signal noise is 2.5 or 3.5: TPR is at most about 6% lower than that of BHq, while FDR improves by more than 7%. For the case where signals are heavily mixed (signal noise 1), the method had difficulty detecting signals in the low-parameter case $p = 50$, whereas for $n = 200$, $p = 100$, it detected a respectable 59% of signals. For this case, results for all three prior specifications were quite similar.

Table 3 summarizes the simulation results for the second case, $n = p$. We are able to detect signals consistently when the signal noise is 2.5 or 3.5. For $n = p = 100$ and $n = p = 200$, TPR is similar to that of Lasso with the modified knockoff threshold (LCDK+), and for $n = p = 50$ it is markedly higher; in all of these settings FDR improves by 1 to 8%. As in the previous case, when signals are heavily mixed (signal noise 1), the method had difficulty detecting signals in the low-parameter case $n = p = 50$. When $n = p = 100$ or $n = p = 200$, it detected a respectable 96 to 100% of signals. For this case, results for all three prior specifications were quite consistent.

Table 4 summarizes the simulation results for the final case, $n < p$. For the highest-dimensional case ($n = 50$, $p = 200$), neither Lasso nor the proposed method was able to detect signals successfully. For the other two subcases ($n = 50$, $p = 100$ and $n = 100$, $p = 200$), our proposed method detected 76 to 98% of signals when the signal noise is 2.5 or 3.5. The EXP and RSK prior specifications were superior to the NOR prior in both subcases. When the signal noise is 2.5, our proposed methods were 3 to 9% inferior to Lasso in terms of TPR but reduced FDR by 5 to 7%. For the well-separated signal case (signal noise 3.5), our proposed method was either tied with Lasso or up to 4% superior in terms of TPR, while reducing FDR by 2 to 5%.

Results on HIV-1 data

To apply the proposed procedures and test whether FDR control and sufficient TPR are achieved, we use a publicly available data set: the Human Immunodeficiency Virus Type 1 (HIV-1) drug resistance database (Rhee et al. 2005). We limit the analysis to drug resistance measurements and genotype information related to the non-nucleoside reverse transcriptase inhibitor (NNRTI) drug class, which includes three generic drugs: Delavirdine (DLV), Efavirenz (EFV), and Nevirapine (NVP).

To validate the results, we compare the selected markers with the existing treatment-selected mutation (TSM) panels from Rhee et al. (2005). Although this is a previously conducted and vetted study, no ground truth or oracle that determines the true nulls and non-nulls is available. Nevertheless, this previous study provides a good approximation for assessing the effectiveness of our method. We aim to assess replicability, that is, how many of the markers identified by our methods also appear in the TSM panel.

For each prior specification, we compare each of the proposed test statistics with BHq and the Lasso Coefficient Difference (using both the original and modified thresholds), as in the numerical study in the previous section. Instead of FDR and TPR, we assess the results based on the number of selections that appear in the TSM lists, representing true positives, and the number of selections that do not appear in the TSM lists, representing false discoveries. The figures and tables show the number of selections averaged over 1000 trials.
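The two counts used for validation amount to a set intersection with the TSM panel, averaged over trials. The sketch below uses hypothetical position labels, not actual TSM entries from Rhee et al. (2005), and hypothetical helper names.

```python
import numpy as np


def tsm_agreement(selected, tsm_panel):
    """Return (# selections in the TSM panel, # selections outside it) for one trial."""
    selected, tsm_panel = set(selected), set(tsm_panel)
    confirmed = len(selected & tsm_panel)
    return confirmed, len(selected) - confirmed


def average_agreement(selections_per_trial, tsm_panel):
    """Average both counts over trials (the study uses 1000 trials)."""
    counts = np.array([tsm_agreement(s, tsm_panel) for s in selections_per_trial], dtype=float)
    return counts.mean(axis=0)


# Hypothetical position labels, for illustration only.
panel = {"P100", "P103", "P181", "P190"}
trials = [["P100", "P103", "P50"], ["P100", "P181", "P190", "P7", "P8"]]
print(average_agreement(trials, panel))
```

Because no oracle is available, the "outside the panel" count is only a proxy for false discoveries: a selection absent from the TSM list may still be a genuine but previously unreported marker.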

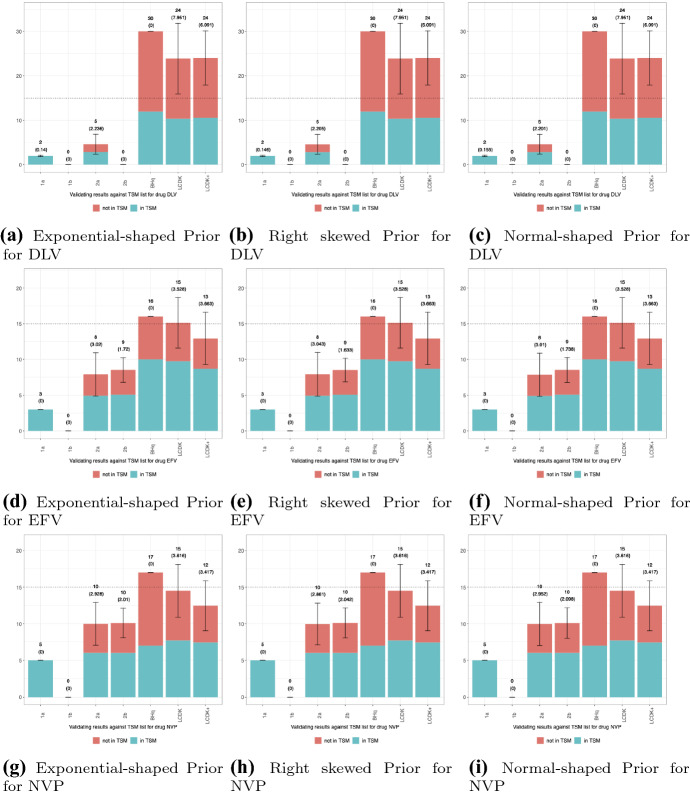

Figure 1 shows the agreement between the TSM lists and our proposed method for each combination of the 3 priors and 3 generic drugs. In each chart, the decision rules (Table 1) are on the x-axis and the number of selected markers is on the y-axis. The blue bars represent markers confirmed by the TSM lists (true positives), while the red bars represent those not in the TSM lists (false discoveries).

Fig. 1.

Results for HIV Data

Figure 1 shows that the non-Bayesian methods, BHq and Lasso, select many more markers that do not agree with the TSM list (in red). Our proposed methods more conservatively select markers previously confirmed by the TSM lists (true positives, in blue), while reducing potential false discoveries (in red). The results are especially notable for Nevirapine (NVP): a similar number of markers was selected as with the frequentist methods, while fewer markers outside the TSM lists were chosen. Thus, for Nevirapine, our proposed method and test statistics were able to replicate the selections of Rhee et al. (2005) more accurately while minimizing the selection of possible false discoveries. In practice, our method is more suitable for researchers who aim to ensure that the selected genetic markers for drug resistance include minimal false discoveries. This is important since, of the three drugs, Nevirapine is typically the cheapest and most accessible.

While the number of TSM-validated selections varies by drug and test statistic, overall, the proposed methods show promise in selecting positions that correspond to real effects, as verified by the TSM list. Researchers are always looking for ways to minimize false discoveries, given the costs of follow-up experiments for each genetic marker. We have demonstrated that our proposed methods can help researchers achieve this while maintaining competitive TPR.

Conclusions and recommendations

Motivated by the growing HIV epidemic in the Philippines, we proposed methods that show potential for helping genomic researchers find significant genetic markers while minimizing the number of false discoveries in HIV data. Our numerical studies show that the proposed methods not only had TPR competitive with BHq and Lasso, but also had lower FDR in most of the cases discussed. This contributes to computational statistics by demonstrating that unifying the Bayesian Lasso with Model-free Knockoffs can achieve high TPR while reducing FDR in low- and equi-dimensional cases.

We also demonstrate this on the HIV-1 data set, where our methods selected many of the genetic markers identified by Rhee et al. (2005) while limiting selections outside their lists. Although this work focused on a small genomic dataset, owing to the number of replicates required and the heavy computational demands of the Bayesian methods, we believe it demonstrates the method's potential for larger datasets of similar design and structure. A small reduction in FDR may seem insignificant at the scale of this study, but in larger future studies, a 1–2% FDR reduction could mean hundreds of hours and millions of dollars saved on manpower. For example, in a 20,000-SNP dataset with 2000 selections, a reduction of one percentage point in FDR means 20 fewer positions that require time and resources for further experimentation and research.
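The arithmetic in the SNP example follows from reading FDR as the expected proportion of false discoveries among the selected positions, reading "1%" as one percentage point, and holding the number of selections fixed. A minimal sketch under those assumptions (the function name is hypothetical); note that the total number of SNPs (20,000) does not enter the calculation, only the number of selections does:

```python
def fewer_false_discoveries(n_selections, fdr_reduction):
    """Hypothetical helper: expected drop in the number of false discoveries
    when the FDR falls by `fdr_reduction` (a proportion, e.g. 0.01 for one
    percentage point) and the number of selections stays the same."""
    return n_selections * fdr_reduction


# 2000 selections, FDR lowered by one percentage point
saved = fewer_false_discoveries(2000, 0.01)
print(saved)
```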

These findings aim to contribute not only to more accurate and cost-effective HIV drug resistance research in the Philippines but, more importantly, to saving lives by helping patients receive proper treatment and avoid the unnecessary costs, risks, and burdens of taking the wrong drug. Given that we have shown this method's potential for identifying genetic markers associated with drug resistance, it may one day be used to study drug resistance in other fast-mutating viruses, such as SARS-CoV-2, the virus that causes COVID-19.

Acknowledgements

The authors would like to thank the anonymous referees for their feedback, the UP Diliman School of Statistics and the thesis committee for their comments on the initial version, and the Philippines’ Department of Science and Technology - Advanced Science and Technology Institute (DOST-ASTI)’s Computing and Archiving Research Environment (COARE) for their remote computing facility.

Annex

Declaration

Conflict of interest

The authors declare that they have no conflict of interest.

Footnotes

¹ In certain subfigures, some test statistics have values of 0 with a standard deviation of 0. These are cases where a large share of the 1000 trials selected no markers because the knockoff threshold was t = ∞.
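An infinite knockoff threshold arises when no finite cutoff keeps the estimated false discovery proportion at or below the target level. The sketch below implements the knockoff (offset 0) and knockoff+ (offset 1) thresholds of Barber and Candès (2015) for a vector of feature statistics W. It is an illustration only: the W values are hypothetical, the function names are our own, and this section does not state which offset or target level q the study used.

```python
import math


def knockoff_threshold(W, q, offset=1):
    """Smallest t among the nonzero |W_j| such that
    (offset + #{j: W_j <= -t}) / max(1, #{j: W_j >= t}) <= q.
    offset=1 gives knockoff+, offset=0 the plain knockoff threshold.
    Returns math.inf when no candidate t qualifies."""
    candidates = sorted({abs(w) for w in W if w != 0})
    for t in candidates:  # ascending, so the first hit is the minimum
        n_neg = sum(1 for w in W if w <= -t)
        n_pos = sum(1 for w in W if w >= t)
        fdp_hat = (offset + n_neg) / max(1, n_pos)
        if fdp_hat <= q:
            return t
    return math.inf


def knockoff_select(W, q, offset=1):
    """Indices j with W_j >= T; empty when T is infinite."""
    T = knockoff_threshold(W, q, offset)
    return [j for j, w in enumerate(W) if w >= T]


# Hypothetical statistics for 5 markers
W = [3.0, 2.5, -0.5, 2.0, 0.1]
print(knockoff_threshold(W, 0.2), knockoff_select(W, 0.2))
print(knockoff_threshold(W, 0.4), knockoff_select(W, 0.4))
```

With the knockoff+ offset, the estimated proportion can never fall below 1 divided by the number of positive statistics at or above t. When only a few markers have large positive statistics and q is small, no finite threshold qualifies and nothing is selected, as in the first call above.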

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Contributor Information

Jurel K. Yap, Email: yapjurel@gmail.com

Iris Ivy M. Gauran, Email: irisivy.gauran@kaust.edu.sa

References

- Barber RF, Candès EJ. Controlling the false discovery rate via knockoffs. Ann Stat. 2015. doi: 10.1214/15-aos1337.

- Benjamini Y, Hochberg Y. Controlling the false discovery rate: a practical and powerful approach to multiple testing. J R Stat Soc Ser B (Methodol). 1995;57(1):289–300. doi: 10.1111/j.2517-6161.1995.tb02031.x.

- Bijak J, Bryant J. Bayesian demography 250 years after Bayes. Popul Stud. 2016;70(1):1–19. doi: 10.1080/00324728.2015.1122826.

- Candès E, Fan Y, Janson L, Lv J. Panning for gold: ‘model-X’ knockoffs for high dimensional controlled variable selection. J R Stat Soc Ser B (Stat Methodol). 2018;80(3):551–577. doi: 10.1111/rssb.12265.

- Dudoit S, Shaffer JP, Block JC. Multiple hypothesis testing in microarray experiments. Stat Sci. 2003;18(1):71–103. doi: 10.1214/ss/1056397487.

- Efron B. Size, power and false discovery rates. Ann Stat. 2007. doi: 10.1214/009053606000001460.

- Efron B. Simultaneous inference: when should hypothesis testing problems be combined? Ann Appl Stat. 2008. doi: 10.1214/07-aoas141.

- Efron B. Large-scale inference: empirical Bayes methods for estimation, testing, and prediction. Cambridge: Cambridge University Press; 2012.

- Efron B, Tibshirani R, Storey J, Tusher V. Empirical Bayes analysis of a microarray experiment. J Am Stat Assoc. 2001;96(456):1151–1160. doi: 10.1198/016214501753382129.

- Gimenez JR, Ghorbani A, Zou J. Knockoffs for the mass: new feature importance statistics with false discovery guarantees. arXiv preprint arXiv:1807.06214. 2018.

- Guan Y, Stephens M. Bayesian variable selection regression for genome-wide association studies and other large-scale problems. Ann Appl Stat. 2011;5(3):1780–1815. doi: 10.1214/11-aoas455.

- Jaymalin M. Drug-resistant HIV on the rise. The Philippine Star. 2018. https://www.philstar.com/headlines/2018/01/31/1783140/drug-resistant-hiv-rise. Accessed 12 Mar 2020.

- Korthauer K, Kimes PK, Duvallet C, Reyes A, Subramanian A, Teng M, Shukla C, Alm EJ, Hicks SC. A practical guide to methods controlling false discoveries in computational biology. Genome Biol. 2019;20(1):1–21. doi: 10.1186/s13059-019-1716-1.

- Kuritzkes DR. Drug resistance in HIV-1. Curr Opin Virol. 2011;1(6):582–589. doi: 10.1016/j.coviro.2011.10.020.

- Macan JG. DOST-PCHRD supports development of a more affordable, accessible HIV drug resistance diagnostic tool. Philippine Council for Health Research and Development Website. 2019. http://pchrd.dost.gov.ph/index.php/news/6453-dost-pchrd-supports-development-of-a-more-affordable-accessible-hiv-drug-resistance-diagnostic-tool-2. Accessed 14 May 2020.

- McKusick VA, Ruddle FH. A new discipline, a new name, a new journal. Genomics. 1987;1(1):1–2. doi: 10.1016/0888-7543(87)90098-x.

- Metzner KJ. HIV whole-genome sequencing now: answering still-open questions. J Clin Microbiol. 2016;54(4):834–835. doi: 10.1128/jcm.03265-15.

- Nasir IA, Emeribe AU, Ojeamiren I, Adekola HA. Human immunodeficiency virus resistance testing technologies and their applicability in resource-limited settings of Africa. Infect Dis Res Treat. 2017;10:1178633717749597. doi: 10.1177/1178633717749597.

- National Institutes of Health. Drug resistance. Understanding HIV/AIDS. U.S. Department of Health and Human Services. 2020. https://aidsinfo.nih.gov/understanding-hiv-aids/fact-sheets/21/56/drug-resistance. Accessed 15 Apr 2020.

- O’Hara RB, Sillanpää MJ. A review of Bayesian variable selection methods: what, how and which. Bayesian Anal. 2009. doi: 10.1214/09-ba403.

- Park T, Casella G. The Bayesian lasso. J Am Stat Assoc. 2008;103(482):681–686. doi: 10.1198/016214508000000337.

- Rhee SY, Fessel WJ, Zolopa AR, Hurley L, Liu T, Taylor J, Nguyen DP, Slome S, Klein D, Horberg M, Flamm J, Follansbee S, Schapiro JM, Shafer RW. HIV-1 protease and reverse-transcriptase mutations: correlations with antiretroviral therapy in subtype B isolates and implications for drug-resistance surveillance. J Infect Dis. 2005;192(3):456–465. doi: 10.1086/431601.

- Robert C, Casella G. A short history of Markov chain Monte Carlo: subjective recollections from incomplete data. Stat Sci. 2011;26(1):102–115. doi: 10.1214/10-sts351.

- Sindhu TN, Feroze N, Aslam M. A class of improved informative priors for Bayesian analysis of two-component mixture of failure time distributions from doubly censored data. J Stat Manag Syst. 2017;20(5):871–900. doi: 10.1080/09720510.2015.1121597.

- Sindhu TN, Hussain Z, Aslam M. On the Bayesian analysis of censored mixture of two Topp-Leone distribution. Sri Lankan J Appl Stat. 2019;19(1):13. doi: 10.4038/sljastats.v19i1.7993.

- Tibshirani R. Regression shrinkage and selection via the lasso. J R Stat Soc Ser B (Methodol). 1996;58(1):267–288. doi: 10.1111/j.2517-6161.1996.tb02080.x.

- Westfall P, Young SS. Resampling-based multiple testing: examples and methods for p-value adjustment. New York: Wiley; 1993.

- World Health Organization. Joint WHO and UNAIDS questions and answers to HIV strain and drug resistance in the Philippines. World Health Organization Website. 2018. https://www.who.int/philippines/news/feature-stories/detail/joint-who-and-unaids-questions-and-answers-to-hiv-strain-and-drug-resistance-in-the-philippines. Accessed 8 Jun 2020.