Abstract

Coronavirus disease (COVID-19) has severely harmed healthcare systems and economies throughout the world. COVID-19 shares symptoms with other chest disorders such as lung cancer (LC), pneumothorax, tuberculosis (TB), and pneumonia, which can mislead clinicians trying to detect the coronavirus infection. This motivates us to design a model that classifies multiple chest infections. Chest x-ray imaging is the most widely used diagnostic procedure in medical practice and is the primary diagnostic tool for all of these chest infections. To save human lives, clinicians and researchers are working tirelessly to establish a precise and reliable method for diagnosing COVID-19 at an early stage. However, the clinical presentation of COVID-19 is highly idiosyncratic and varied. In this work, a multi-class classification method based on a deep learning (DL) model is developed and tested to automatically classify COVID-19, LC, pneumothorax, TB, and pneumonia from chest x-ray images. COVID-19 and the other chest disorders are diagnosed using a convolutional neural network (CNN) model called CDC Net, which incorporates the residual network idea and dilated convolution. We trained and evaluated this model on publicly available benchmark data. To the best of our knowledge, this is the first time a single deep learning model has been used to diagnose five different chest ailments. We compared the proposed model with three pre-trained CNN-based models, namely Vgg-19, ResNet-50, and Inception v3, in terms of classification accuracy, recall, precision, and F1-score. CDC Net attained an AUC of 0.9953 in identifying the various chest diseases (with an accuracy of 99.39%, a recall of 98.13%, and a precision of 99.42%).
Moreover, the pre-trained CNN-based models Vgg-19, ResNet-50, and Inception v3 achieved accuracies of 95.61%, 96.15%, and 95.16%, respectively, in classifying multiple chest diseases. Using chest x-rays, the proposed model was found to be highly accurate in diagnosing chest diseases. On our test dataset, the proposed model performs significantly better than the competing methods. Statistical analyses using McNemar’s and ANOVA tests also showed the robustness of the proposed model.

Keywords: COVID-19, Pneumonia, Deep learning, Chest x-rays, Coronavirus

Introduction

Several patients with pneumonia of unknown cause were reported in Wuhan, China, in early December 2019 [42, 60]. In the early stages of this pneumonia, severe acute respiratory syndrome (SARS) was observed in some patients. In addition, a small number of patients rapidly developed acute respiratory distress (ARD), and other concerning complications were also noted [41]. The “Chinese Center for Disease Control and Prevention” (CCDC) identified a novel coronavirus (nCoV) on January 7, 2020, from a throat swab sample taken from a patient in Wuhan; it was later named 2019-nCoV by the “World Health Organization” (WHO) [100]. As of December 31, 2021, a total of 284,992,606 coronavirus cases, 5,440,570 deaths, and 252,735,264 recoveries had been reported around the world [101]. In this challenging situation, frontline experts initially used the real-time reverse transcription-polymerase chain reaction (RT-PCR) test for the detection of COVID-19 [91]. Reverse transcription converts the viral RNA in the patient’s sample into complementary DNA, which PCR then amplifies before analysis. The test can therefore detect the coronavirus, whose genome consists only of RNA [17]. Owing to high demand, results obtained with PCR kits were often delayed. Moreover, the outcomes produced by PCR kits are not fully reliable because they include false-negative (FN) results [8].

Globally, about 7% of the population is infected by pneumonia each year, and the disease can be fatal [75]. Pneumonia is considered a lethal disease that can have severe consequences within a short time, because fluid flows into the lungs, an effect comparable to drowning. Pneumonia is an inflammatory reaction in the lung sacs, known as alveoli, caused by bacteria and other microbes [48]. As the microorganisms multiply in the lung, white blood cells act against the bacteria and fungi and produce inflammation in the sacs. The lung sacs thus fill with infected fluid, which causes difficulty breathing, cough, and fever. If this dangerous infection is not treated with medication at an early stage, it may cause the death of the patient [6, 56].

Patients infected with COVID-19 show symptoms of fever, cough, loss of taste or smell, sore throat, chest pain, and shortness of breath [41]. These symptoms also commonly occur in patients with pneumonia, pneumothorax, LC, and TB, which makes it difficult for doctors to detect COVID-19. Health experts and researchers are working tirelessly to find an effective solution for diagnosing COVID-19, and they have chosen x-ray image analysis for the identification of coronavirus [44]. Today, chest radiography (chest x-ray) is one of the most economical and conventional clinical diagnostic methods [78]. Even in impoverished countries, modern digital radiography machines are affordable [53]. Hence, health experts use it widely in medical practice for the detection and diagnosis of serious diseases such as TB, pulmonary nodules, pneumonia, and early-stage LC [104]. Chest x-rays contain an enormous quantity of information about a patient’s health. However, accurately identifying COVID-19 from a chest radiograph remains a demanding task for health experts. Overlapping tissue structures in chest x-rays greatly increase the complexity of a correct analysis. Detection of COVID-19 is therefore challenging for the human diagnostician when the contrast between the lesion and the adjacent tissue is very low, or when the lesion partially overlaps the ribs. Even for an experienced health expert, it is sometimes difficult to distinguish COVID-19, pneumothorax, pneumonia, LC, and TB on chest x-rays. The automated diagnosis of these diseases from chest radiographs through artificial intelligence (AI), particularly deep learning models, might resolve this dilemma [52]. Reports of deep learning and transfer learning approaches exceeding humans in diagnostic evaluation have generated considerable excitement. 
In AI, both methods, i.e., deep learning and transfer learning, provide a framework for exploiting previously acquired knowledge to address new but related tasks more rapidly and effectively [59]. A major strength of transfer learning is the ability to fine-tune pre-trained models, which makes it a dominant tool for classifying diseases from medical images.

Deep learning models have revolutionized disease classification and opened a new door for medical professionals [35, 36, 40, 85, 103]. The diagnosis of chest infections [43] and cancer cells [15], brain and breast tumor segmentation and identification [67], and gene analysis [7, 73, 79, 80] have all improved dramatically as a result of combining medical systems with CNNs. For this study, we developed the Chest Disease Classifier Network (CDC_Net), a novel multi-class classification model based on a CNN that combines the residual network idea with dilated convolution. CDC_Net is applied to distinguish COVID-19-infected chest x-rays from those showing pneumothorax, pneumonia, LC, and TB. The objective of the study is to accurately detect COVID-19 and other chest-related diseases, i.e., pneumothorax, pneumonia, LC, and TB, from chest x-rays and to assist doctors in identifying the irregular or abnormal patterns caused by these diseases. To the best of our knowledge, this is the first study that presents a single CNN model for the detection of a collection of chest diseases using x-ray images. We believe that our work also relieves doctors of the burden of employing separate approaches for the detection of each chest condition. In addition, the CDC_Net model is compared with three well-known pre-trained classifiers, namely Vgg-19 [36, 85], ResNet-50 [98], and Inception v3 [40], in terms of accuracy (ACC), sensitivity (SEN), specificity (SPEC), F1-score, and area under the receiver operating characteristic curve (AUC).

In summary, the main contributions of this study are stated as follows:

We design a novel end-to-end CNN model that combines the residual network idea with dilated convolution (CDC_Net) for the classification of COVID-19, pneumothorax, pneumonia, LC, and TB from chest x-rays. Furthermore, an in-depth comparison is made with state-of-the-art classifiers as well as three baseline classifiers, namely Vgg-19, ResNet-50, and Inception v3.

The CDC_Net has been trained and tested on a wide range of chest radiography datasets for the classification of COVID-19, pneumothorax, pneumonia, TB, and LC chest x-rays. The COVID-19 and normal x-ray images were obtained from Radiopaedia.org [28], the SIRM database [19], TCIA [27], Mendeley [2], Chest Radiographs of COVID-19 infected [62], NIH chest X-ray images [70], a GitHub source [18], and a Kaggle repository [68]. About 20,000 chest x-rays and CT scans were gathered from [84] to compile the lung cancer dataset. The pneumothorax dataset consists of the 12,000 x-rays in the Kaggle repository [72]. The pneumonia x-ray dataset was provided by the Radiological Society of North America (RSNA) [50]; it contains a total of 5216 x-ray images (3867 pneumonia and 1349 normal). For TB, around 4200 chest x-rays, including 3500 normal images and 700 TB-infected x-rays, were gathered from [95].

The CDC_Net efficiently addresses the problems of low resolution and partial overlap in the inflamed regions of chest x-rays. We also demonstrate that chest radiographs reveal distinct patterns of chest disease infection during the convolution process and provide significant information that helps to enhance classification accuracy.

The CDC_Net showed significant results in terms of accuracy (99.39%), recall (98.13%), precision (99.42%), and F1-score (98.26%).

Using chest x-rays, we developed a new framework for detecting COVID-19-infected patients.

This manuscript is structured as follows. The state of the art is discussed in section 2. Section 3 presents the materials and methods. Section 4 consists of extensive experimental results and their discussion. The conclusion and future work of this study are described in section 5.

State-of-the-art

One of the most essential tasks in deep learning is the classification of disorders of the respiratory system using various medical imaging modalities, such as MRI, radiography, CT scans, and sonography. X-rays have been used in numerous studies to detect COVID-19, which saves time and effort for medical professionals [26, 31, 33]. Identifying COVID-19 at an early stage of the disease remains a challenging area of current research. This section reviews some of the most important and relevant papers on AI approaches for the identification of COVID-19, pneumonia, pneumothorax, LC, and TB. Table 1 compares current research on COVID-19 with research on other chest disorders.

Table 1.

Comparative study of literature related to COVID-19 detection and other chest-related diseases

| Ref | Datasets | Objective | Models | Results |
|---|---|---|---|---|
| [1] | COVID-19 and SARS images [16] | To identify COVID-19 using chest x-rays. | ResNet | Accuracy = 95.12% |
| [58] | COVID-19 images [16], Normal and Pneumonia images [50] | To classify the COVID-19 x-rays from the pneumonia-infected x-rays. | GoogleNet | Accuracy = 80.56% |
| [74] | COVID-19 images [16], Normal and Pneumonia images [51] | Using x-rays to automatically diagnose COVID-19 and pneumonia. | Xception + ResNet50V2 | Accuracy = 91.40% |
| [71] | COVID-19 images [16], Normal and Pneumonia images [46] | To diagnose pneumonia and coronavirus infected images. | Patch based CNN | Accuracy = 88.90% |
| [105] | COVID-19 images [16], Normal images [97] | Classification of COVID-19 positive and healthy images. | 18-layer residual CNN | Accuracy = 72.31% |
| [4] | Normal and Pneumonia images [50] | Classification of COVID-19, pneumonia, and healthy images. | MobileNet-V2 | Accuracy = 96.78% |
| [94] | COVID-19 images [16], Normal and Pneumonia images [50, 97] | To detect pneumonia, COVID-19, and normal images. | Inception V3 | Accuracy = 76.0% |
| [83] | Normal and Pneumonia images [50] | To classify COVID-19, pneumonia, and normal images using chest x-rays. | ResNet-50 + SVM | Accuracy = 95.33% |
| [32] | Normal and Pneumonia images [97] | To detect pneumonia (normal, bacterial, and viral) cases from chest X-rays. | CNN | Accuracy = 95.72% |
| [25] | Normal and Pneumonia images [50, 97] | To detect and evaluate pneumonia (bacterial, viral, COVID-19, and normal). | CNN | Accuracy = 94.84% |

The authors of the present work recently developed the BDCNet model (based on CNN) for the classification of COVID-19-infected chest radiographs [64]. That study examined three types of lung illness, COVID-19, PN, and LC, using one deep learning model. The model was compared against ResNet-50 [14], Vgg-16, Vgg-19 [29], and Inception v3 in terms of performance measures. With an accuracy greater than 99.10%, BDCNet was found to be superior to the competing approaches. Using a patch-based technique, Oh et al. [71] created a CNN model for small datasets. The final decision was obtained by majority voting over the patch-level classifications. A total of 15,043 x-ray images were used in the study, comprising 6012 images of pneumonia, 180 images of COVID-19 infection, and 8851 images of healthy subjects. The model attained an accuracy of 88.9%, a sensitivity of 84.9%, an F1-score of 84.4%, a precision of 83.4%, and a specificity of 96.4%. Zhang et al. [105] built an 18-layer residual CNN model for chest radiographs, organized around three components. In the first phase, a CNN model identified the most prominent features, which were then used for classification. In the last stage, an anomaly module was used to produce the model scores. A total of 1531 x-ray images were used in their investigation, of which 100 were positive for COVID-19 and the remaining images showed signs of pneumonia. For the detection of COVID-19, they achieved a recall of 96% and a precision of 70.65%.

Apostolopoulos et al. [4] employed MobileNet-V2 and a CNN model on two distinct datasets. The first dataset contains 1427 images, of which 224 are COVID-19 positive, 700 show bacterial infections, and the remainder are normal. The second dataset contains a similar number of COVID-19, pneumonia, and normal images. Compared with the CNN, the MobileNet-V2 model achieved a higher accuracy of 96.78%, with a recall of 98.6% and a specificity of 96%. Tsiknakis et al. [94] proposed a method for the automatic detection of COVID-19 based on the pre-trained Inception V3 model. It was evaluated on 572 images of bacterial and viral infections, 122 COVID-19 images, and 150 images of healthy individuals, and achieved an accuracy of 76%. In the study [83], 381 chest x-ray images were fed into nine different pre-trained models. The features extracted by these models were classified with an SVM to detect COVID-19. Features obtained from the ResNet-50 model gave the highest accuracy and F1-score, 95.33% and 95.3%, respectively.

For the detection of coronavirus in x-ray images, Saha et al. [82] developed a new model called EMCNet. EMCNet uses a simple CNN structure to extract features from chest x-rays. An ensemble of machine learning (ML) classifiers then categorizes the COVID-19-infected images from the CNN features. EMCNet attained a classification accuracy of 98.91%. For the classification of COVID-19 images, the authors of [61] created a CNN-based model called CovXNet. The model uses depth-wise convolution to extract features for automatic detection, and it includes gradient-based discriminative localization and a slack method for COVID-19 identification. X-ray images of pneumonia and healthy people were used to develop this model. The model achieved a classification accuracy of 97.4% for normal versus COVID-19 cases and 90.2% when other cases, such as viral and bacterial pneumonia, were included. For the classification of COVID-19-infected images, Horry et al. [39] used four well-known transfer learning classifiers. A total of 60,798 images were used to test the models, comprising 115 COVID-19-positive x-rays, 60,361 normal images, and 322 pneumonia images. Among the four transfer learning models, Vgg-19 correctly classified 81.0% of the COVID-19-positive cases.

Kermany et al. [50] evaluated a pre-trained model, Inception v3, for diagnosing pneumonia from pediatric chest radiographs. The model achieved a diagnostic accuracy of 92.8%, with a recall and a true negative rate (specificity) of 93.2% and 90.1%, respectively. Wang et al. [97] studied localization approaches to identify and locate pneumonia infection in chest X-rays. Their method reached an area under the curve (AUC) of 63.3% for the classification of pneumonia. Rajpurkar et al. [76] applied a 121-layer CNN and achieved an AUC of 0.768 in diagnosing pneumonia from X-rays. They named their model “CheXNet” and evaluated and validated it on the publicly available “ChestX-ray14” image dataset [69], which has 112,120 frontal chest radiographs. Guan et al. [30] evaluated the performance of a two-branch CNN for the classification of chest disease. Their model achieves an AUC of 0.776 on the open-access “ChestX-ray14” dataset for detecting pneumonia. The diagnosis of diseases from radiographic images was studied in [66], and segmentation of different body organs in chest X-ray images was performed in [38].

Wang et al. [99] observed the patterns of pneumonia related to the deadly coronavirus disease (COVID-19), a severe acute respiratory infection in humans that emerged in Wuhan, China. Data on patients with COVID-19 are scarce, and controlling the spread of the disease requires large-scale screening. Pathogenic laboratory testing was a good option during the pandemic, but the testing process was lengthy and produced false-negative (FN) results [55]. Consequently, the disease was screened with deep learning methods applied to different medical imaging modalities such as CT and MRI. The initial screening achieved an AUC of 89.5% with a specificity of 0.88 and a recall of 0.87. Ayan et al. [5] described COVID-19 as an infection that begins with flu-like symptoms and fever and later develops into pneumonia. In their article, they applied deep learning approaches to patients’ chest X-rays with the aim of improving precision and confirming the diagnosis of coronavirus or pneumonia.

Stephen et al. [88] applied a CNN model to classify pneumonia patients from a database of chest X-ray images. The CNN was trained from scratch to extract the substantial and dominant features from the chest x-rays and diagnose pneumonia. The model handled a large pneumonia imaging dataset and attained significant results, whereas other approaches rely solely on pre-trained or handcrafted methods to accomplish notable classification performance. Wang et al. [98] used a deep regression framework for the automatic screening of pneumonia and trained the model with the available information and multi-channel images to simulate the clinical monitoring process. First, they extracted visual features from multi-modal images to improve the ability to screen for pneumonia. Second, the structure of chest scans was analyzed using an RCNN, which automatically extracts multiple image features from multi-channel image slices. The proposed model increased diagnostic accuracy by 2.3% and sensitivity by 3.1% relative to the baseline model, i.e., RCNN (ResNet).

Janizek et al. [49] defined pneumonia as an infection of the respiratory system caused by fungi, bacteria, and viruses. This kind of infection fills the air sacs of the human lung with fluid. Pediatric pneumonia is commonly diagnosed from chest x-rays of the patient. Today, it is possible to develop an automatic system that detects pneumonia and supports treatment of the disease. Researchers have widely used CNN methods to detect pediatric pneumonia and to assist medical experts in early treatment. CNN variants based on pre-trained architectures such as LeNet, VGGNet, ResNet, and GoogleNet have achieved high accuracy in diagnosing pneumonia from medical images. Dansana et al. [22] evaluated the pre-trained convolutional networks VGG-19 and Inception-v2, together with a decision tree (DT), on COVID-19-infected chest X-rays and computed tomography (CT) scans. They reported that VGG-19 achieved a diagnostic accuracy of 91% and outperformed the other approaches in classifying the radiographs and CT scans of COVID-19 patients. Chohan et al. [12] proposed a novel deep learning framework to help health experts detect pneumonia. They extracted dominant features from chest X-rays with different pre-trained neural networks and analyzed their classification accuracy. They then ensembled the output layers of all the pre-trained models and achieved the best diagnostic accuracy. Waheed et al. [96] generated synthetic chest radiographs (CXR) by developing an “Auxiliary Classifier Generative Adversarial Network” (ACGAN) based model named COVIDGAN. Their model improved the accuracy of a CNN in the detection of pediatric pneumonia and is considered a more efficient and helpful model for radiologists.

Materials and methods

This section presents the results of an experiment that was carried out to determine the classification accuracy of CDC_Net as well as three baseline transfer learning models, namely Vgg-19, ResNet-50, and Inception-V3. The human respiratory system is susceptible to a wide variety of diseases, some of which include pneumonia, LC, TB, and most recently, COVID-19 [25, 32]. X-rays are required for the diagnosis of disorders that affect the chest tract [90]. In this study, we developed an automated CDC_Net model for the detection of chest tract diseases. This system was trained and tested on a total of twelve different databases. The collected x-ray datasets have a fixed image size of 299 × 299 pixels. 10-fold cross-validation (CV) was used to divide the database x-rays into three categories: training, validation, and testing. Images of normal, COVID-19, pneumothorax, pneumonia, LC, and TB were used to train the CDC_Net model. With a batch size of 64, the experiment was run for up to 500 epochs. The CDC_Net achieved the required and appropriate training and validation accuracy after completing all epochs. The multiclassification confusion matrix was used to evaluate and compare the classification performance of the CDC_Net model with three other transfer learning classifiers. The proposed structure of the present study is shown in Fig. 1.
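The evaluation step described above can be illustrated with a minimal sketch that builds a multi-class confusion matrix and derives accuracy, precision, recall, and F1-score from it. The function names are hypothetical, and macro averaging over classes is an assumption, since the averaging scheme is not stated in this section.

```python
from typing import Dict, List


def confusion_matrix(y_true: List[int], y_pred: List[int], n_classes: int) -> List[List[int]]:
    """Rows index the true class, columns index the predicted class."""
    cm = [[0] * n_classes for _ in range(n_classes)]
    for t, p in zip(y_true, y_pred):
        cm[t][p] += 1
    return cm


def macro_metrics(cm: List[List[int]]) -> Dict[str, float]:
    """Accuracy plus macro-averaged precision, recall, and F1 (assumed averaging)."""
    n = len(cm)
    total = sum(sum(row) for row in cm)
    correct = sum(cm[i][i] for i in range(n))
    precisions, recalls, f1s = [], [], []
    for i in range(n):
        tp = cm[i][i]
        fp = sum(cm[r][i] for r in range(n)) - tp  # predicted i, true class differs
        fn = sum(cm[i]) - tp                       # true i, predicted class differs
        prec = tp / (tp + fp) if tp + fp else 0.0
        rec = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * prec * rec / (prec + rec) if prec + rec else 0.0
        precisions.append(prec)
        recalls.append(rec)
        f1s.append(f1)
    return {
        "accuracy": correct / total,
        "precision": sum(precisions) / n,
        "recall": sum(recalls) / n,
        "f1": sum(f1s) / n,
    }


if __name__ == "__main__":
    # Toy labels for three classes; real use would pass the six chest classes.
    cm = confusion_matrix([0, 0, 1, 1, 2, 2], [0, 1, 1, 1, 2, 0], n_classes=3)
    print(cm)
    print(macro_metrics(cm))
```

For the six classes used in this study, `n_classes` would be 6 and the labels would come from the held-out test images.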

Fig. 1.

The proposed framework used in this study for diagnosing multiple chest infections

Dataset descriptions

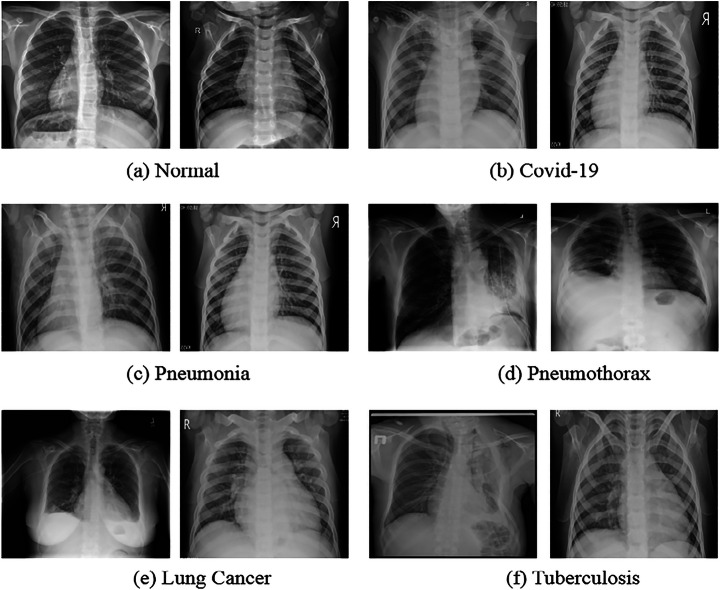

The CDC_Net was trained and tested using twelve different chest disease databases acquired from various sources. First, we retrieved 660 COVID-19 chest x-rays from a GitHub repository established by Cohen et al. [16]. This archive contains x-ray images gathered from different hospitals as well as public sources. Although complete metadata was not supplied, the average age of the COVID-19-positive patients was approximately 50 to 55 years. Next, 1711 COVID-19-positive chest x-rays were obtained from the SIRM database [19], the TCIA [27], radiopaedia.org [28], Mendeley [2], Chest Radiographs of COVID-19 infected [62], and a GitHub source [18]. Thus, a total of 2371 COVID-19-positive chest x-rays were employed in this investigation. Chest x-rays of normal subjects were acquired from two repositories, the NIH chest X-ray images [70] and the Kaggle repository [68]. The LC dataset was taken from [84] and includes roughly 20,000 chest x-rays and CT scans; the CT scans of patients with LC were not considered in this investigation. The pneumothorax dataset was taken from the Kaggle repository [72] and consists of 12,000 x-rays. The pneumonia x-ray dataset was obtained from the RSNA [50] and includes a total of 5216 x-ray images, of which 3867 are pneumonia-related and 1349 are normal. The TB x-ray dataset was obtained from [95] and contains approximately 4200 chest x-rays, of which 3500 are normal and 700 are TB infected. Figure 2 shows example images of the normal, COVID-19, pneumothorax, pneumonia, LC, and TB classes.

Fig. 2.

Sample images of multi-chest diseases such as COVID-19, pneumonia, pneumothorax, LC, and TB

The k-fold cross-validation technique is used in applied ML to measure a model’s ability to predict data that was not used in its training [77]. In this study, 10-fold cross-validation is used for the CDC_Net model: in each fold, 90% of the data was used for training and 10% was used to test the classifiers. Finally, we averaged the metrics over the 10 folds. The overall statistics for the division of all datasets are presented in Table 2.
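As an illustration of the 10-fold procedure, the following sketch splits sample indices into ten folds, holds out one fold (about 10% of the data) for testing in each round, and averages a score over the folds. The helper names and the `evaluate` callback are hypothetical, and the split is a plain shuffled split rather than a class-stratified one, which is an assumption.

```python
import random
from typing import Callable, Iterator, List, Tuple


def k_fold_indices(n_samples: int, k: int = 10, seed: int = 0) -> Iterator[Tuple[List[int], List[int]]]:
    """Yield (train_indices, test_indices) for each of the k folds."""
    if k < 2 or k > n_samples:
        raise ValueError("k must be between 2 and n_samples")
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    # Spread the remainder so that fold sizes differ by at most one sample.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test_idx = indices[start:start + size]
        train_idx = indices[:start] + indices[start + size:]
        yield train_idx, test_idx
        start += size


def cross_validate(
    n_samples: int,
    evaluate: Callable[[List[int], List[int]], float],
    k: int = 10,
    seed: int = 0,
) -> float:
    """Average the score returned by `evaluate(train_idx, test_idx)` over k folds."""
    scores = [evaluate(tr, te) for tr, te in k_fold_indices(n_samples, k, seed)]
    return sum(scores) / len(scores)


if __name__ == "__main__":
    # Placeholder evaluation: reports the held-out fraction instead of a real metric.
    print(cross_validate(1000, lambda tr, te: len(te) / 1000.0))
```

In practice, `evaluate` would train the classifier on the training indices and return a metric such as accuracy on the held-out fold.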

Table 2.

Splitting of the dataset for training, testing, and validation

| Data Splitting | COVID-19 | Pneumothorax | Pneumonia | Lung Cancer | Tuberculosis | Normal | Total |
|---|---|---|---|---|---|---|---|
| Training | 1670 | 8400 | 2707 | 3500 | 2940 | 2065 | 21,282 |
| Validation | 467 | 2400 | 773 | 1000 | 840 | 589 | 6069 |
| Testing | 234 | 1200 | 387 | 500 | 420 | 295 | 3036 |
| Total | 2371 | 12,000 | 3867 | 5000 | 4200 | 2949 | 30,387 |

Data preprocessing, augmentation, and normalization

The dimensions of the chest x-ray images varied from 1350 to 2800 pixels in width and from 690 to 1340 pixels in height. For consistency, we resized the images to a fixed resolution of 299 × 299 pixels. Table 2 shows that there are comparatively fewer training and validation images of COVID-19 than of the other chest diseases. We therefore applied the Synthetic Minority Oversampling Technique (SMOTE) [11] to the COVID-19, pneumonia, LC, TB, and normal chest x-rays to increase the number of images at training time, which helps to address overfitting [57]. SMOTE generates synthetic data using a k-nearest neighbor technique: it selects a random sample from a minority class, i.e., COVID-19, pneumonia, LC, TB, or normal chest x-rays, determines that sample’s k nearest neighbors within the class, and creates a new sample by interpolating between the selected sample and one of those neighbors. Several data normalization techniques [87] are available. In this study, pixel normalization was used to prepare the data for training the CDC_Net. After these steps, the datasets are ready to be fed into the CDC_Net model for training.
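The two preprocessing ideas in this paragraph, pixel normalization and SMOTE oversampling, can be sketched as follows. This is a simplified illustration and not the authors’ pipeline: images are assumed to be flattened into vectors of 8-bit intensities, normalization is assumed to mean scaling to [0, 1], and the function names are hypothetical.

```python
import random
from typing import List, Sequence

Vector = List[float]


def normalize_pixels(pixels: Sequence[int]) -> Vector:
    """Scale 8-bit pixel intensities to the range [0, 1] (assumed normalization)."""
    return [p / 255.0 for p in pixels]


def squared_distance(a: Vector, b: Vector) -> float:
    return sum((x - y) ** 2 for x, y in zip(a, b))


def smote(minority: List[Vector], n_new: int, k: int = 5, seed: int = 0) -> List[Vector]:
    """Create n_new synthetic samples by interpolating between minority-class neighbors."""
    if len(minority) < 2:
        raise ValueError("SMOTE needs at least two minority samples")
    rng = random.Random(seed)
    k = min(k, len(minority) - 1)
    synthetic = []
    for _ in range(n_new):
        i = rng.randrange(len(minority))
        base = minority[i]
        # Indices of the k nearest neighbors of the selected sample within the class.
        neighbours = sorted(
            (j for j in range(len(minority)) if j != i),
            key=lambda j: squared_distance(base, minority[j]),
        )[:k]
        neighbour = minority[rng.choice(neighbours)]
        gap = rng.random()  # position along the segment from base to neighbour
        synthetic.append([b + gap * (n - b) for b, n in zip(base, neighbour)])
    return synthetic


if __name__ == "__main__":
    # Tiny 2-pixel "images" stand in for flattened 299 x 299 radiographs.
    images = [normalize_pixels(img) for img in ([0, 255], [51, 204], [102, 153], [26, 230])]
    for sample in smote(images, n_new=3, k=2):
        print(sample)
```

Each synthetic sample lies on the line segment between a real minority sample and one of its nearest neighbors, so the new data stay inside the region already occupied by that class.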

Transfer learning image classifiers

This section describes the pre-trained classifiers used for the clinical task of classifying COVID-19 and other chest disorders. The pre-trained Deep Convolutional Neural Network (DCNN) architectures were trained on the ImageNet (ILSVRC) database [98], which contains thousands of diverse objects for training and evaluating image classification algorithms. VGG-16 and VGG-19 [49] have been widely used as general open-source architectures for chest disease diagnosis and other research applications [51, 63, 88]. The final three layers of the VGG-16 architecture were replaced with sigmoid functions, allowing the model to diagnose disease. VGG-19 is deeper than VGG-16, and its training parameters are far more numerous and more expensive to train. To achieve strong convergence, He et al. [37] devised the Residual Neural Network (ResNet) model, which uses skip connections to jump over some layers of the network. ResNet-50 is the 50-layer variant of ResNet. ResNet’s architecture is similar to that of VGGNet, although it is approximately eight (8) times deeper [92]. Medical image classification is also frequently performed with the CNN-based Inception model. These models were chosen because they have been thoroughly tested [81, 89], reviewed, and recognized as state-of-the-art classifiers for medical image classification tasks [85]. The models were initialized with weights pre-trained on the extensive ImageNet database, except for the final fully connected layers, whose weights were randomly initialized. Starting from the ImageNet weights, we unfroze the last layer so that its weights could be updated during training.

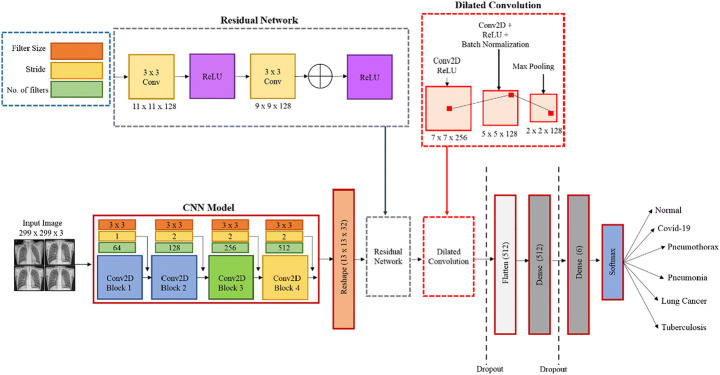

Proposed CDC_Net model

To evaluate the architecture of the proposed model, it is necessary to understand the chest x-ray patterns of COVID-19, pneumothorax, pneumonia, LC, and TB infection. Texture, shape, and the spatial relationships between pixels form distinctive patterns in the various disorders. Researchers typically use local texture features to identify the infected area of a radiograph because these diseases alter the texture of the lung. Although texture is a simple visual notion, many studies do not define the texture of COVID-19 and other chest disorders [86, 89]. In a grayscale radiograph, texture is expressed by the distribution of each pixel’s value relative to the values of its neighboring pixels [54]. Texture can be viewed here as a set of repeating local patterns present across all the x-rays. In a CNN, the kernel parameters act as the feature extractors. Each neuron in the CNN architecture is connected to a local region of the preceding layer in order to extract local information. By fine-tuning the convolutional layers of the CNN, we can obtain better results in finding effective texture patterns. Texture alone, however, lacks some basic features of the radiograph, so selecting only texture does not capture all of the image’s information. In addition, increasing the depth of the model reduces the resolution of the feature map. Figure 3 depicts the design of our proposed model, which incorporates residual networks and dilated convolution in line with the considerations stated above. In statistics, a residual is the difference between the expected and the actual value. Following this idea of deep residual learning, our CDC_Net model removes the identical parts of the radiographs and learns from the small remaining differences. Large-resolution images require both more computation per layer and significantly more memory [57]. 
For this reason, the model’s input has been limited to radiographs with a resolution of 299 × 299 pixels, which are passed through the convolutional layers. Filters of size 3 × 3 are used in the convolutional layers, with ReLU as the non-linear activation function, and kernel sizes no larger than 5 × 5 and 7 × 7 are used. We believe that keeping the receptive field of each neuron small relative to the input radiograph helps in capturing the local texture characteristics associated with the intended outputs.

Fig. 3.

Proposed CDC_Net model used for classifying multiple chest infections

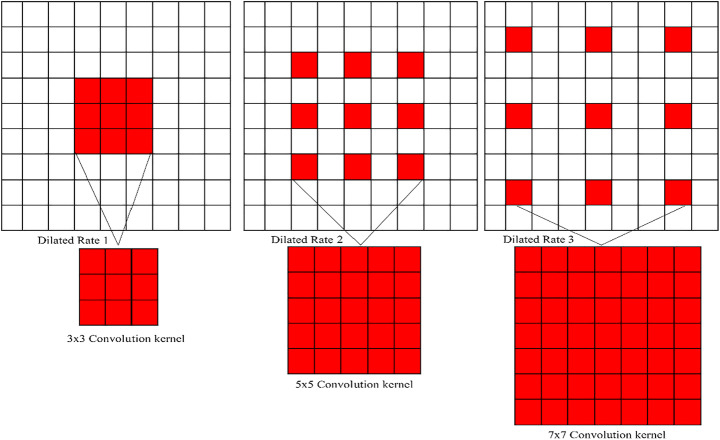
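The residual idea used in the model can be illustrated with a minimal sketch of a skip connection, in which a block outputs its input plus a learned correction. This is not the authors’ implementation: the function names and the `small_update` transform are hypothetical stand-ins for the stacked convolutional layers, and plain Python lists replace image tensors.

```python
def relu(values):
    """Element-wise ReLU on a list of floats."""
    return [max(0.0, x) for x in values]


def residual_block(x, transform):
    """Return relu(x + transform(x)); the skip connection adds the input back."""
    fx = transform(x)
    if len(fx) != len(x):
        raise ValueError("skip connection needs matching shapes")
    return relu([a + b for a, b in zip(x, fx)])


def small_update(values):
    # Hypothetical transform standing in for the stacked conv layers.
    return [0.1 * x for x in values]


out = residual_block([1.0, -2.0, 3.0], small_update)
print(out)
```

Because the block only has to learn the correction `transform(x)`, a transform that outputs zeros reduces the block to the identity followed by ReLU, which is one reason skip connections ease the training of deeper networks.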

In the present study, we use dilated convolutional layers so that the resolution of the original chest x-rays is preserved, which reduces the chance of resolution loss. The principle of dilated convolution is illustrated in Fig. 4. Dilated convolution enlarges the receptive field without increasing the number of kernel parameters, which helps keep the feature map resolution at a reasonable level. Batch normalization layers are added after the convolution layer so that the input to the non-linear activation function falls in the sensitive region of that function. As a result, the vanishing-gradient problem caused by increasing the number of training steps becomes less of a concern. The final convolutional layer is connected to a global average pooling layer that computes the average value of the feature map. The feature map of the final convolutional layer is then fed to two fully connected networks (FCN). The first dense layer has 512 hidden nodes, while the second has six nodes that classify the x-ray into one of six classes: COVID-19, LC, TB, normal, pneumothorax, and pneumonia. To avoid overfitting, the model’s learning rate is set to 0.5. Activation functions allow the model to learn complex tasks. The convolutional layers are activated with the ReLU function. In the FCN, whose dense layer uses ReLU, the sigmoid function is used in the final layer to classify diseases. In classification, the accuracy and reliability of a model can be assured only when a large amount of training and test data with similar feature patterns is available, which means that the model must be retrained each time the image data is modified. Even in clinical applications, previously labeled disease samples may be erroneous or may differ in distribution from fresh test data.
Due to patient privacy concerns, it is difficult to obtain a large medical image dataset. Researchers are turning to transfer learning (pre-trained) models to address this issue. The choice of loss function and of the backpropagation-based optimization method are the most important aspects of CNN training. In this work, the cross-entropy loss function (CELF) was minimized using the Adam optimizer [87], which updates the CNN weights during training. Unlike stochastic gradient descent (SGD), this optimizer adapts the update for each parameter individually by estimating the first and second moments of the gradient. The value of the CELF measures the difference between the actual and the predicted results, and its magnitude indicates how close their probability distributions are. The total number of trainable parameters (TPs) is 4,966,118, while the total number of non-trainable parameters (NTPs) is 161. TPs are the parameters that are updated during training to reach their optimal values, whereas NTPs are not updated during training and are therefore not optimized for the classification task. Table 3 provides a layer-by-layer description of the CDC_Net model.
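To make the training objective concrete, the following sketch shows the cross-entropy loss for one sample and a single Adam update for one scalar weight. It is an illustration only: the hyperparameter defaults (`lr`, `beta1`, `beta2`, `eps`) are the commonly used Adam defaults and are assumptions, not values reported for CDC_Net.

```python
import math


def cross_entropy(probs, target_index, eps=1e-12):
    """Cross-entropy loss for one sample given predicted class probabilities."""
    return -math.log(max(probs[target_index], eps))


def adam_step(param, grad, m, v, t, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update for a scalar parameter; t is the 1-based step count."""
    m = beta1 * m + (1 - beta1) * grad          # first-moment (mean) estimate
    v = beta2 * v + (1 - beta2) * grad * grad   # second-moment estimate
    m_hat = m / (1 - beta1 ** t)                # bias correction
    v_hat = v / (1 - beta2 ** t)
    param = param - lr * m_hat / (math.sqrt(v_hat) + eps)
    return param, m, v


loss = cross_entropy([0.5, 0.5], target_index=0)
weight, m, v = adam_step(param=1.0, grad=2.0, m=0.0, v=0.0, t=1)
print(loss, weight)
```

On the first step the bias-corrected ratio is close to the sign of the gradient, so the weight moves by roughly `lr` regardless of the gradient’s scale; this per-parameter normalization is what distinguishes Adam from plain SGD.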

Fig. 4.

Dilated convolution with different dilation values (from left to right): a 3 × 3 convolutional kernel with a dilation value of 1, which is equivalent to normal convolution; a 3 × 3 convolutional kernel with a dilation value of 2, which has the receptive field of a 5 × 5 convolution; and a dilation value of 4, which has a receptive field of 7 × 7
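The effect of dilation can be sketched in one dimension. For a single layer, a kernel of size k with dilation d spans k + (k − 1)(d − 1) input positions, so a 3-tap kernel covers 3 positions at dilation 1 and 5 positions at dilation 2 while keeping the same three weights. The helper names below are hypothetical and the example uses plain Python lists, not the authors’ Keras layers.

```python
def effective_kernel(kernel, dilation):
    """Input span covered by one dilated kernel along one axis."""
    return kernel + (kernel - 1) * (dilation - 1)


def dilated_conv1d(signal, weights, dilation=1):
    """Unpadded 1-D dilated convolution (cross-correlation form)."""
    span = effective_kernel(len(weights), dilation)
    output = []
    for start in range(len(signal) - span + 1):
        taps = (w * signal[start + i * dilation] for i, w in enumerate(weights))
        output.append(sum(taps))
    return output


signal = [1, 2, 3, 4, 5, 6, 7]
print(effective_kernel(3, 1), effective_kernel(3, 2))
print(dilated_conv1d(signal, [1, 1, 1], dilation=1))
print(dilated_conv1d(signal, [1, 1, 1], dilation=2))
```

With dilation 2 each output mixes every other input sample, so the kernel sees a wider context with no additional parameters.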

Table 3.

CDC_Net Model Summary

| Layer No. | Layer (type) | Output shape | Parameters |
|---|---|---|---|
| 0 | chest_input_1 (InputImage) | (299, 299, 3) | 0 |
| 1 | block1_conv1 (Conv2D) | (297, 297, 64) | 1,882 |
| 2 | block1_conv1 (Conv2D) | (295, 295, 64) | 37,828 |
| 3 | block1_pool1 (MaxPooling2D) | (147, 147, 64) | 0 |
| 4 | dropout_1 (Dropout) | (147, 147, 64) | 0 |
| 5 | block2_conv2 (Conv2D) | (145, 145, 128) | 74,956 |
| 6 | block2_conv2 (Conv2D) | (143, 143, 128) | 158,584 |
| 7 | block2_pool2 (MaxPooling2D) | (71, 71, 128) | 0 |
| 8 | dropout_2 (Dropout) | (71, 71, 128) | 0 |
| 9 | block3_conv3 (Conv2D) | (69, 69, 256) | 306,278 |
| 10 | block3_conv3 (Conv2D) | (67, 67, 256) | 600,080 |
| 11 | block3_pool3 (MaxPooling2D) | (33, 33, 256) | 0 |
| 12 | dropout_3 (Dropout) | (33, 33, 256) | 0 |
| 13 | block4_conv4 (Conv2D) | (31, 31, 512) | 1,191,160 |
| 14 | block4_conv4 (Conv2D) | (29, 29, 512) | 2,359,809 |
| 15 | block4_conv4 (Conv2D) | (27, 27, 512) | 2,469,809 |
| 16 | block4_pool4 (MaxPooling2D) | (13, 13, 512) | 0 |
| 17 | dropout_4 (Dropout) | (13, 13, 512) | 0 |
| 18 | reshape_layer (ReshapeLayer) | (13, 13, 32) | 9,349 |
| 19 | residual_layer1 (Conv2D) | (11, 11, 128) | 9,349 |
| 20 | residual_layer2 (Conv2D) | (9, 9, 128) | 9,549 |
| 21 | dilated_conv1 (Conv2D) | (7, 7, 256) | 18,496 |
| 22 | dilated_conv2 (Conv2D) | (5, 5, 128) | 20,596 |
| 23 | dilated_conv2 (BatchNormalization) | (5, 5, 128) | 22,846 |
| 24 | dilated_pool5 (MaxPooling2D) | (2, 2, 128) | 0 |
| 25 | dropout_5 (Dropout) | (2, 2, 128) | 0 |
| 26 | flatten_1 (Flatten) | 64 | 0 |
| 27 | dense_1 (Dense) | 512 | 34,390 |
| 28 | dropout_6 (Dropout) | 512 | 0 |
| 29 | dense_2 (Dense) | 6 | 1,127 |
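The spatial sizes listed for the first four blocks of Table 3 can be traced with a short calculation. The sketch assumes unpadded 3 × 3 convolutions with stride 1 and 2 × 2 max pooling with stride 2; these settings are not stated explicitly in the text and are inferred here from the tabulated shapes.

```python
def conv_valid(size, kernel=3):
    """Output size of an unpadded, stride-1 convolution along one axis."""
    return size - kernel + 1


def pool(size, window=2):
    """Output size of non-overlapping pooling (stride equal to the window)."""
    return size // window


def trace(size, convs_per_block):
    """Spatial size after every conv and pooling layer, block by block."""
    sizes = []
    for n_convs in convs_per_block:
        for _ in range(n_convs):
            size = conv_valid(size)
            sizes.append(size)
        size = pool(size)
        sizes.append(size)
    return sizes


# Blocks 1-3 have two conv layers each and block 4 has three, as in Table 3.
sizes = trace(299, [2, 2, 2, 3])
print(sizes)
```

Each unpadded 3 × 3 convolution trims two pixels per axis and each pooling layer halves the size, which takes the 299 × 299 input down to 13 × 13 after the fourth block.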

Performance evaluation

In the present study, we addressed a multi-class chest disease classification problem with six classes: normal, COVID-19, pneumothorax, pneumonia, LC, and TB. A confusion matrix was created to compare the performance of the CDC_Net, Vgg-19, ResNet-50, and Inception-v3 classifiers. Accuracy (ACC), sensitivity (SEN, also called recall), specificity (SPEC), f1-score, and AUC were used to evaluate the proposed model’s classification performance on independent testing data. ACC, SEN, SPEC, and f1-score are given by Eqs. (1), (2), (3), and (4), respectively, where TP, TN, FP, and FN denote the numbers of true positives, true negatives, false positives, and false negatives:

$$\mathrm{ACC} = \frac{TP + TN}{TP + TN + FP + FN} \tag{1}$$

$$\mathrm{SEN} = \frac{TP}{TP + FN} \tag{2}$$

$$\mathrm{SPEC} = \frac{TN}{TN + FP} \tag{3}$$

$$\mathrm{F1\text{-}score} = \frac{2\,TP}{2\,TP + FP + FN} \tag{4}$$
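For a multi-class problem, these metrics are usually computed per class in a one-vs-rest fashion from the confusion matrix. The sketch below uses the standard definitions of ACC, SEN, SPEC, and f1-score; the 3 × 3 matrix is a toy example with made-up counts, not data from this study.

```python
def one_vs_rest_counts(cm, k):
    """TP, TN, FP, FN for class k; rows of cm are actual, columns predicted."""
    tp = cm[k][k]
    fn = sum(cm[k]) - tp
    fp = sum(row[k] for row in cm) - tp
    tn = sum(sum(row) for row in cm) - tp - fn - fp
    return tp, tn, fp, fn


def metrics(tp, tn, fp, fn):
    """Standard accuracy, sensitivity, specificity, and F1 definitions."""
    return {
        "ACC": (tp + tn) / (tp + tn + fp + fn),
        "SEN": tp / (tp + fn),
        "SPEC": tn / (tn + fp),
        "F1": 2 * tp / (2 * tp + fp + fn),
    }


# Toy confusion matrix (hypothetical counts for three classes).
toy_cm = [
    [8, 2, 0],
    [1, 7, 2],
    [0, 1, 9],
]
class0 = metrics(*one_vs_rest_counts(toy_cm, 0))
print(class0)
```

Averaging the per-class values (for example, a macro average) gives a single figure per metric; which averaging scheme underlies the reported results is not specified here.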

Experimental results and discussions

The CDC_Net model uses chest x-rays to diagnose chest disorders such as COVID-19, pneumothorax, pneumonia, LC, and TB. Grid search over the hyperparameters (number of epochs, batch size, and learning rate) was used to fine-tune the model’s performance. The CDC_Net model was trained with a batch size of 64 for up to 500 epochs. The stochastic gradient descent (SGD) optimizer was used, and the starting learning rate of CDC_Net and all three transfer learning classifiers was set to 0.05. When training made no progress for 20 epochs, we lowered the learning rate by a factor of 0.1. This was done to prevent CDC_Net and the other three models, i.e., Vgg-19, ResNet-50, and Inception-v3, from overfitting [3]. The ACC, SEN, SPEC, f1-score, confusion matrix, and AUC of CDC_Net were examined for each class label.
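The learning-rate rule described above can be sketched as a simple reduce-on-plateau schedule. The sketch makes two assumptions that the text does not state: progress is judged by the validation loss, and lowering the rate means multiplying it by 0.1. The function name `lr_schedule` is hypothetical.

```python
def lr_schedule(val_losses, initial_lr=0.05, factor=0.1, patience=20):
    """Learning rate per epoch, reduced after `patience` epochs without improvement."""
    lr = initial_lr
    best = float("inf")
    stale = 0  # epochs since the last improvement
    history = []
    for loss in val_losses:
        if loss < best:
            best = loss
            stale = 0
        else:
            stale += 1
            if stale >= patience:
                lr *= factor
                stale = 0
        history.append(lr)
    return history


# Short demonstration with a smaller patience so the drop is visible.
history = lr_schedule([1.0, 0.9, 0.9, 0.9], patience=2)
print(history)
```

In Keras, the same behaviour is typically obtained with a reduce-on-plateau callback rather than hand-written logic.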

Experimental process

The three transfer learning classifiers and the CDC_Net model were implemented using Keras. The parts of the methodology that are not directly related to convolutional networks were programmed in Python. The experiments were carried out on a Linux operating system with an 11 GB NVIDIA GPU and 32 GB of RAM.

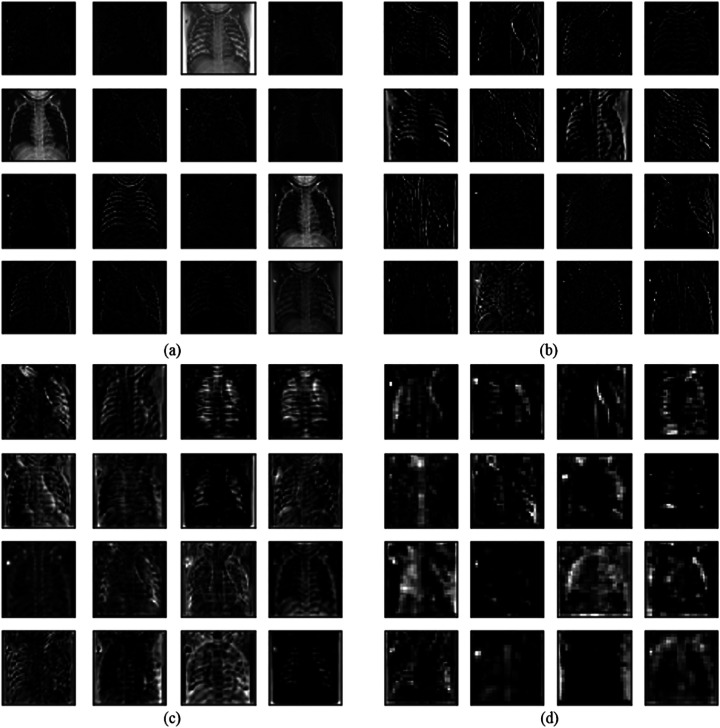

Visualization of test outputs

Here, we visualize the outputs of the CDC_Net model to make the classification process clearer. Figure 5 shows part of the feature-map output of the first convolutional layer. It is observed that the convolutional layer detects the edges of the chest radiographs and captures the basic edge patterns.

Fig. 5.

Partial visualization of the feature maps of the proposed method. (a) Feature maps extracted from the initial block, (b) feature maps extracted from the second block, (c) added layer of the first bottleneck module, (d) last added layer

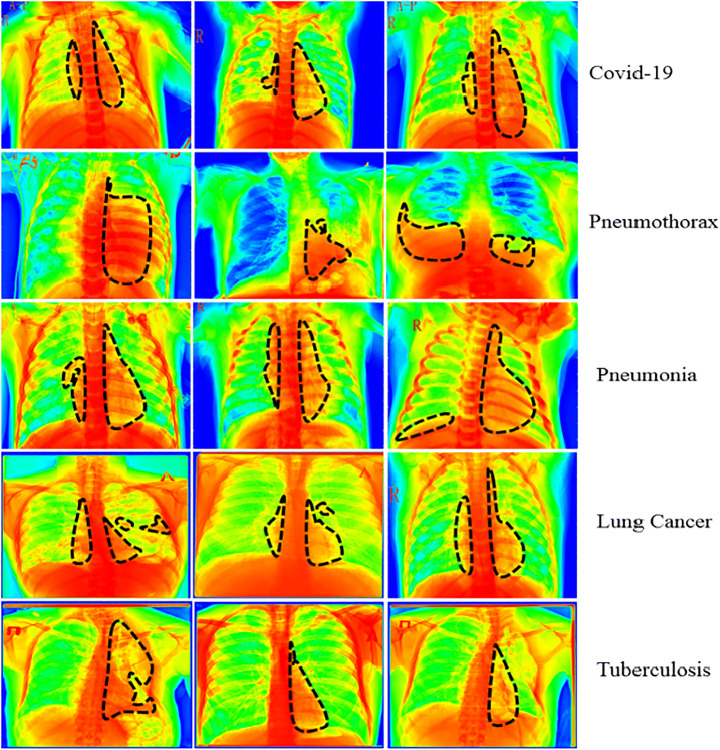

In addition, we used the Grad-CAM [63] heatmap approach to visualize the results generated by the CDC_Net model. The heatmap highlights the regions of the chest x-ray on which the model focuses. The heatmap produced by the proposed model is shown in Fig. 6, where the affected section of the lungs is marked by the black dotted line.

Fig. 6.

Heatmap of all five chest diseases
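The core Grad-CAM computation can be sketched without a deep learning framework. Each feature map of the chosen convolutional layer is weighted by the spatial average of the class-score gradient for that map, the weighted maps are summed, and a ReLU keeps only positive evidence. The sketch assumes that the activations and gradients have already been extracted from the network; the final scaling to [0, 1] is a common display convention and an assumption here.

```python
def grad_cam(activations, gradients):
    """Grad-CAM heatmap from K feature maps and their gradients (nested H x W lists)."""
    if not activations or len(activations) != len(gradients):
        raise ValueError("need one gradient map per feature map")
    height, width = len(activations[0]), len(activations[0][0])
    heat = [[0.0] * width for _ in range(height)]
    for fmap, gmap in zip(activations, gradients):
        cells = [g for row in gmap for g in row]
        weight = sum(cells) / len(cells)  # global-average-pooled gradient
        for i in range(height):
            for j in range(width):
                heat[i][j] += weight * fmap[i][j]
    heat = [[max(0.0, h) for h in row] for row in heat]  # ReLU
    peak = max(max(row) for row in heat)
    if peak > 0:
        heat = [[h / peak for h in row] for row in heat]  # scale to [0, 1]
    return heat


# Toy input: two 2 x 2 feature maps with constant gradients (hypothetical values).
acts = [[[1, 0], [0, 1]], [[0, 2], [2, 0]]]
grads = [[[1, 1], [1, 1]], [[-1, -1], [-1, -1]]]
heatmap = grad_cam(acts, grads)
print(heatmap)
```

In practice the low-resolution heatmap is upsampled to the input size and overlaid on the radiograph.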

Results analysis

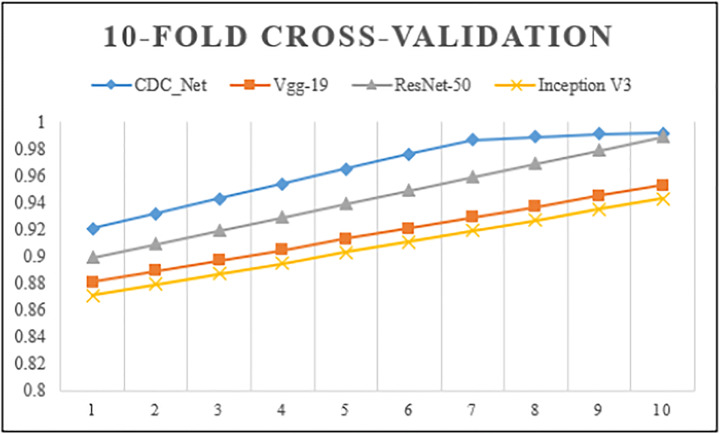

A 10-fold cross-validation (CV) technique was used to evaluate CDC_Net and the three baseline models (Vgg-19, ResNet-50, and Inception-v3) on the complete dataset. The CNN model was run for up to 500 epochs. The maximum training accuracy was 99.01%, while the validation accuracy was 98.93%. These values show that the CDC_Net model trained well and could correctly distinguish the chest diseases from normal cases. The training loss was 0.0051 and the validation loss was 0.065. As shown in Fig. 7, the classifiers CDC_Net, Vgg-19, ResNet-50, and Inception-v3 were trained and validated with the 10-fold CV approach.

Fig. 7.

10-fold cross-validation performance analysis of the CDC_Net; Vgg-19, ResNet-50, and Inception-v3

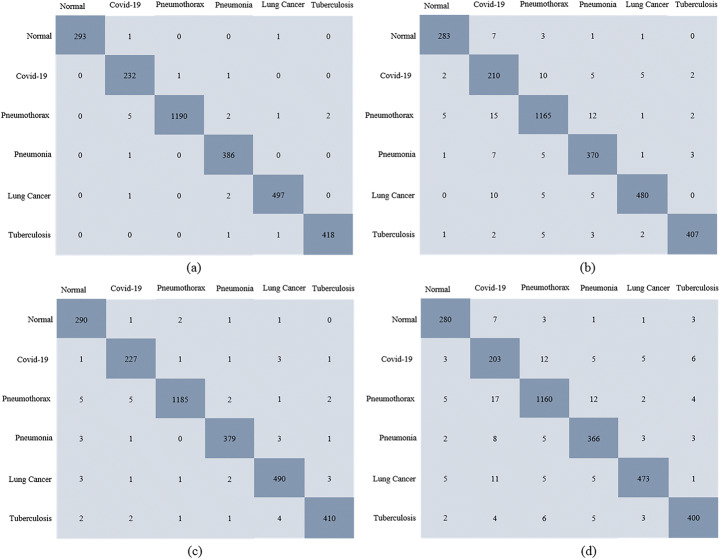
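A 10-fold split can be sketched as follows. The sample indices are shuffled once and divided into ten folds; each fold serves as the validation set exactly once while the remaining nine form the training set. The shuffling seed and the absence of class stratification are assumptions of this sketch, not details reported for the study.

```python
import random


def k_fold_indices(n_samples, k=10, seed=0):
    """Yield (train_indices, val_indices) for each of the k folds."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    folds = [indices[i::k] for i in range(k)]  # near-equal fold sizes
    for i in range(k):
        val = folds[i]
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield train, val


splits = list(k_fold_indices(25, k=10))
for fold_no, (train, val) in enumerate(splits, start=1):
    print(fold_no, len(train), len(val))
```

Reported cross-validation figures are then typically the mean (and spread) of the per-fold metrics.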

Several metrics were used to evaluate the models’ ability to accurately diagnose COVID-19 and other chest disorders. Figure 8 shows the confusion matrices for the proposed CDC_Net model and the three transfer learning classifiers. The independent test set contained 295 normal, 234 COVID-19, 1200 pneumothorax, 387 pneumonia, 500 LC, and 420 TB x-ray images. In each confusion matrix, actual classes are placed along the rows and predicted classes along the columns. For CDC_Net, the model correctly detected 232 of the 234 COVID-19 x-rays and misclassified 2 x-ray images as pneumothorax and pneumonia. For pneumothorax, the CDC_Net model correctly detected 1190 images and misclassified 10 images as COVID-19, pneumonia, LC, and TB. In addition, this model correctly classified 386 pneumonia images, 497 LC images, and 418 TB images. For Vgg-19 (where pre-trained weights were used to train the model), the model detected 210 of the 234 COVID-19 images and misclassified 24 cases as normal, pneumothorax, pneumonia, LC, and TB. Vgg-19 also correctly classified 1165 pneumothorax images, 370 pneumonia images, 480 LC images, and 407 TB images. ResNet-50 correctly predicted 227 COVID-19 cases and misclassified 7 cases as normal, pneumothorax, pneumonia, LC, and TB. This model also correctly classified 1185 pneumothorax images, 379 pneumonia images, 490 LC images, and 410 TB images. For Inception-v3, the model detected 203 of the 234 COVID-19 images and misclassified 31 cases as normal, pneumothorax, pneumonia, LC, and TB. Among the 295 normal cases, it detected 280 and misclassified 15 as COVID-19, pneumothorax, pneumonia, LC, and TB. Furthermore, 1160 pneumothorax, 366 pneumonia, 473 LC, and 400 TB cases were correctly classified by Inception-v3.

Fig. 8.

Confusion matrix (a) CDC_Net; (b) Vgg-19, (c) ResNet-50, and (d) Inception-v3
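As a worked example, the per-class sensitivity of CDC_Net can be derived directly from the counts quoted in the text (correctly classified images divided by the number of test images in that class). The normal class is left out because the text does not state how many normal images CDC_Net classified correctly.

```python
# Test-set sizes and CDC_Net correct predictions as quoted in the text.
test_counts = {"COVID-19": 234, "pneumothorax": 1200, "pneumonia": 387, "LC": 500, "TB": 420}
cdc_net_correct = {"COVID-19": 232, "pneumothorax": 1190, "pneumonia": 386, "LC": 497, "TB": 418}

# Sensitivity (recall) per class = correct predictions / actual cases.
sensitivity = {name: cdc_net_correct[name] / total for name, total in test_counts.items()}

for name, value in sensitivity.items():
    print(f"{name}: {100 * value:.2f}%")
```

These are per-class values for the disease classes only, so they need not coincide with the aggregate recall reported for the model.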

Table 4 displays the classifiers’ ACC, SEN, precision, and f1-score for all chest classes. As shown in this table, CDC_Net achieved 99.42% precision, 98.13% recall, 99.39% accuracy, and 98.26% f1-score for the automatic diagnosis of COVID-19 and other chest diseases. For the Vgg-19 model, the accuracy was 95.61%, the recall was 95.42%, the precision was 96.41%, and the f1-score was 95.47%. For the ResNet-50 model, the accuracy was 96.15%, the recall was 96.19%, the precision was 96.91%, and the f1-score was 96.18%. Finally, Inception-v3 achieved 95.16% accuracy, 95.01% recall, 95.15% precision, and 95.19% f1-score.

Table 4.

Performance comparison of the proposed model with pre-trained models

| Sr. no. | Model | ACC (%) | SEN (%) | Precision (%) | F1-score (%) |
|---|---|---|---|---|---|
| 1 | Vgg-19 | 95.61 | 95.42 | 96.41 | 95.47 |
| 2 | ResNet-50 | 96.15 | 96.19 | 96.91 | 96.18 |
| 3 | Inception v3 | 95.16 | 95.01 | 95.15 | 95.19 |
| 4 | CDC_Net | 99.39 | 98.13 | 99.42 | 98.26 |

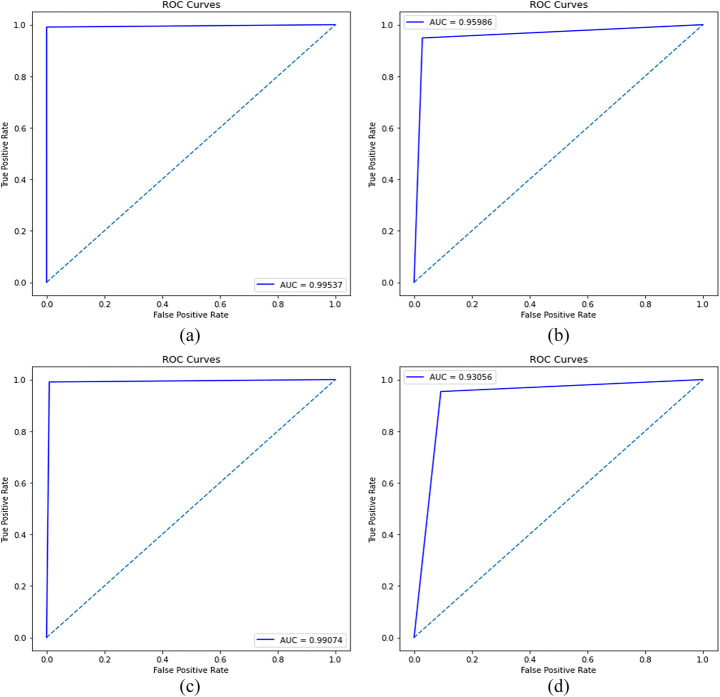

A comparison with the three transfer learning classifiers (Inception-v3, Vgg-19, and ResNet-50) shows that the CDC_Net model outperformed them all. The transfer learning classifiers are deep networks, and the spatial resolution of the feature map at their final Conv layer is significantly reduced, which lowers their classification accuracy. In addition, the filter sizes of these classifiers are not well suited to this problem, and crucial properties such as the neurons’ receptive fields relative to the large input were not taken into account. The CDC_Net model addresses the problems of low resolution and overlap in COVID-19 x-rays. By using a suitable filter size, this approach reduces the impact of structured noise, improves classification performance, and converges faster. A model is considered more suitable and effective the higher its area under the ROC curve, AU(ROC). The ROC curve is computed from the true positive (TP) and false positive (FP) rates. Figure 9 shows the AU(ROC) of CDC_Net and the other three transfer learning classifiers. The CDC_Net model achieved an AU(ROC) of 0.9953. For Vgg-19, ResNet-50, and Inception-v3, the AU(ROC) values were 0.9598, 0.9907, and 0.9305, respectively. These results show that the CDC_Net model outperforms the other three transfer learning classifiers. We believe that the CDC_Net model would help medical experts detect COVID-19 positive cases from chest x-rays.

Fig. 9.

ROC curves of (a) CDC_Net, (b) Vgg-19, (c) Resnet, (d) Inception-v3
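The ROC curve and its area can be computed from predicted scores by sweeping the decision threshold, recording the false positive rate and true positive rate at each step, and integrating with the trapezoidal rule. The sketch below handles one binary (one-vs-rest) problem; how the per-class curves were combined into a single AU(ROC) figure is not specified in the text, and the labels and scores are made-up values.

```python
def roc_points(labels, scores):
    """(FPR, TPR) points obtained by lowering the threshold over the scores."""
    positives = sum(labels)
    negatives = len(labels) - positives
    if positives == 0 or negatives == 0:
        raise ValueError("need at least one positive and one negative label")
    order = sorted(range(len(scores)), key=lambda i: -scores[i])
    tp = fp = 0
    points = [(0.0, 0.0)]
    previous = None
    for i in order:
        if previous is not None and scores[i] != previous:
            points.append((fp / negatives, tp / positives))
        if labels[i] == 1:
            tp += 1
        else:
            fp += 1
        previous = scores[i]
    points.append((fp / negatives, tp / positives))
    return points


def auc(points):
    """Area under the curve by the trapezoidal rule."""
    return sum((x2 - x1) * (y1 + y2) / 2 for (x1, y1), (x2, y2) in zip(points, points[1:]))


labels = [1, 1, 0, 1, 0, 0]
scores = [0.9, 0.8, 0.7, 0.6, 0.4, 0.2]
area = auc(roc_points(labels, scores))
print(round(area, 4))
```

The area equals the probability that a randomly chosen positive case receives a higher score than a randomly chosen negative one, which is why values close to 1 indicate good separation.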

Statistical analysis

McNemar’s statistical test [23] and the analysis of variance (ANOVA) test [20] were used to evaluate the CDC_Net model’s viability by comparing it to the base classifiers, whose probability scores were used to decide the construction of the proposed model. Table 5 shows the results of McNemar’s and ANOVA tests on the multi-chest disease x-ray dataset used in this study. In both tests, the p value must be below the significance level of 0.05 to reject the null hypothesis. Table 5 shows that the p values are less than 0.05 in all cases, so the null hypothesis was rejected by both statistical tests. This indicates that the CDC_Net model incorporates additional information from the base classifiers and that its predictions are superior, so that this model is statistically distinct from the other contributing models.

Table 5.

Results of McNemar’s test and ANOVA test of the CDC_Net model

| Statistical analysis | p value |
|---|---|
| McNemar’s test | 0.0230 |
| ANOVA test | 0.0021 |
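McNemar’s test compares two classifiers on the same test set using only the cases on which they disagree. The sketch below uses the continuity-corrected chi-square form with one degree of freedom; the disagreement counts are hypothetical, since the underlying counts for Table 5 are not given in the text.

```python
import math


def mcnemar(b, c):
    """Continuity-corrected McNemar test.

    b: cases classifier A got right and classifier B got wrong
    c: cases classifier A got wrong and classifier B got right
    Returns (chi-square statistic, p value).
    """
    if b + c == 0:
        return 0.0, 1.0  # the classifiers never disagree
    statistic = (abs(b - c) - 1) ** 2 / (b + c)
    # Survival function of the chi-square distribution with 1 degree of freedom.
    p_value = math.erfc(math.sqrt(statistic / 2))
    return statistic, p_value


# Hypothetical disagreement counts between two models.
statistic, p_value = mcnemar(b=25, c=10)
print(round(statistic, 2), round(p_value, 4))
```

A p value below 0.05 leads to rejecting the null hypothesis that the two classifiers have the same error rate.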

Discussions

Chest x-rays are typically performed to diagnose multiple chest conditions at once. They produce a detailed view of a specific region, which makes it possible to diagnose chest problems as well as internal infections from the images. Compared with the more modern technology of RT-PCR, classifying patients with COVID-19, pneumothorax, pneumonia, LC, and TB infection using chest x-rays is quick, reliable, and efficient. However, the number of people who test positive for COVID-19 is increasing daily; therefore, an automatic detection approach was required to detect this virus at an earlier stage. Chest x-rays can be analyzed with artificial intelligence (AI), which makes it possible to diagnose cases of COVID-19 and other chest disorders automatically. We therefore built a CNN-based model named CDC_Net that accurately detects x-ray images of COVID-19, pneumothorax, pneumonia, LC, and TB. This model can assist medical professionals in starting treatment for COVID-19 infected persons at an earlier stage. The experimental work described above demonstrates that the CDC_Net model is effectively trained on COVID-19, pneumothorax, pneumonia, LC, and TB infections appearing in the lungs and that it correctly classifies these cases from x-rays. CDC_Net achieved an accuracy of 99.39% in detecting chest-related diseases from x-ray images, which is higher than the classification performance of the three CNN-based pre-trained classifiers. The CDC_Net, Vgg-19, ResNet-50, and Inception-v3 networks were trained on datasets with a fixed image resolution of 299 × 299 × 3. In addition, the cross-entropy loss was used to train the CDC_Net model.
Table 4 compares the classification performance of the CDC_Net model and the other three pre-trained classifiers evaluated in this work in terms of accuracy, precision, recall, and f1-score. The CDC_Net model achieved the best accuracy (99.39%), precision (99.42%), f1-score (98.26%), recall (98.13%), and AUC (0.9953). With pre-trained weights, the classification performance of the other three models was slightly lower. The AUC score for ResNet-50 was 0.9907, which was higher than the AUC scores of Vgg-19 and Inception-v3, which were 0.9598 and 0.9305, respectively. Among all methods, Inception-v3 yields the lowest scores, with an AUC of 0.9305, an accuracy of 95.16%, a recall of 95.01%, an f1-score of 95.19%, and a precision of 95.15%. Pre-trained CNN models did not provide a benefit on binary classification problems. Instead, these models are better suited to recognizing several disease types [3] or to more complex problems, such as segmentation. Additionally, most researchers [3, 9, 13, 20, 23, 24, 34, 47, 65, 86, 89, 102] believe that these classifiers do not work well on a given binary classification task as the number of CNN layers increases.

The classification accuracy of the CDC_Net model is compared with that of state-of-the-art classifiers. The comparison of experimental outcomes with recent state-of-the-art methods shows that the CDC_Net model for detecting COVID-19, pneumothorax, pneumonia, LC, and TB-infected x-rays makes a considerable contribution to assisting clinical experts. Jain et al. [47] proposed an Xception model and achieved a classification accuracy of 97.97% in identifying COVID-19 infected patients. Saha et al. [82] designed a novel CNN model, ensembled with different ML classifiers, for classifying COVID-19 radiograph images and achieved an accuracy of 98.91%. Apostolopoulos et al. [4] developed a MobileNet-V2 model for classifying COVID-19, pneumonia (both viral and bacterial), and normal images; their model achieved an accuracy of 96.78%. Tsiknakis et al. [94] proposed a CNN-based Inception-V3 model for classifying multi-chest diseases and achieved an accuracy of 76.0%. The CNN model presented by Zhang et al. [105] achieved an accuracy of 72.77% on multi-chest diseases using chest radiographs. Xiao et al. [102] constructed a CNN model called “dense connection network with parallel attention module” (PAM-DenseNet) for the identification of COVID-19 in lung images. This model achieved a recall of 95.74%, a specificity of 96.77%, an accuracy of 94.29%, and a precision of 93.75%. Chowdhury et al. [13] developed a CNN-based EfficientNet model known as ECOVNet to detect COVID-19, pneumonia, and normal patients from chest x-rays. The ECOVNet model has a detection accuracy of 96.07%. The authors of the study [65] designed an automated system to classify multi-chest diseases using EfficientNet and achieved an average accuracy of 96.70%. For the diagnosis of COVID-19, Canayaz [9] proposed a novel hybrid technique based on CBAM and EfficientNet.
Their model correctly predicts 99% of COVID-19 infected images. Using MobileNet, Trivedi and Gupta [93] developed a lightweight deep learning algorithm that automatically diagnoses pediatric pneumonia from chest x-rays with an accuracy of 97%.

Table 4 shows that CDC_Net is more capable of identifying abnormalities and extracting the dominant and discriminative patterns in x-ray imaging samples, with the highest accuracy of 99.39%. Table 4 also reports the results of the three CNN-based pre-trained classifiers, and we explain why these prior approaches show lower classification performance by examining the nature of chest x-rays showing COVID-19, pneumonia, pneumothorax, TB, and LC. First, because the CNN-based pre-trained classifiers are deep networks, the spatial resolution of the feature maps at their final convolutional (Conv) layers is greatly reduced, which limits their classification performance. Second, these pre-trained classifiers have an unsuitable filter size: the region of the input connected to each neuron is so large that important local elements are ignored. These issues can be resolved using the CDC_Net model. To diagnose several chest ailments from x-ray images, we developed an end-to-end CNN-based model that combines the residual network idea with dilated convolution. The CDC_Net model addresses the problem of low resolution and overlapping in the inflamed regions of chest x-rays. This model greatly reduces the detrimental impact of structured noise and further enhances classification performance while accelerating convergence. The CDC_Net model also uses a filter size of 3 × 3. COVID-19, pneumothorax, LC, pneumonia, and TB were all successfully classified from x-rays using the CDC_Net approach, and the results suggest that this method can provide substantial assistance to medical professionals.

Conclusion

In this study, the proposed CDC_Net model efficiently classifies COVID-19, pneumothorax, pneumonia, LC, and TB using chest x-rays. To demonstrate the viability of our approach, we compared the classification accuracy of the CDC_Net model with that of three transfer learning models, i.e., Vgg-19, ResNet-50, and Inception-v3. The proposed model distinguished COVID-19 from other chest-related disorders with an accuracy of 99.39%. These findings indicate that the CDC_Net model may be able to provide automated viewing and detection of chest ailments by monitoring lung opacity in chest x-rays with high accuracy and repeatability. These results are particularly relevant to medical professionals who rely mainly on subjective medical imaging-based diagnostic characteristics. In the near future, they have the potential to change the diagnostic process that radiologic technologists use to identify these diseases from chest x-rays. In future work, additional diagnostic values for COVID-19 will be compared with standard morphological features by the deep learning architecture, and CNN-based algorithms will be evaluated on other chest-related cases. Integrating AI into radiology systems could provide valuable advice at the point of care and improve the quality, usability, and cost of COVID-19 diagnosis from chest x-rays worldwide.

Funding

No funding was received.

Data availability

The authors declare that all data supporting the findings of this study are available within the article.

Declarations

Conflict of interest

The authors declare no conflict of interest.


References

- 1.Abbas A, Abdelsamea MM, Gaber MM. Classification of COVID-19 in chest X-ray images using DeTraC deep convolutional neural network. Appl Intell. 2020;51:854–864. doi: 10.1007/s10489-020-01829-7. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Alqudah AM, Qazan S (2020) Augmented COVID-19 X-ray images dataset, 4. 10.17632/2FXZ4PX6D8.4

- 3.Alqudah AM, Qazan S, Masad IS. Artificial intelligence framework for efficient detection and classification of pneumonia using chest radiography images. J Med Biol Eng. 2021;41:599–609. [Google Scholar]

- 4.Apostolopoulos ID, Mpesiana TA. COVID-19: automatic detection from X-ray images utilizing transfer learning with convolutional neural networks. Phys Eng Sci Med. 2020;43(2):635–640. doi: 10.1007/s13246-020-00865-4. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Ayan E, Ünver HM (2019) Diagnosis of pneumonia from chest x-ray images using deep learning. In: 2019 scientific meeting on Electrical-Electronics & Biomedical Engineering and computer science (EBBT). IEEE. pp 1-ll

- 6.Aydogdu M, Ozyilmaz E, Aksoy H, Gursel G, Numan E. Mortality prediction in community- acquired pneumonia requiring mechanical ventilation; values of pneumonia and intensive care unit severity scores. TuberkToraks. 2010;58(1):25–34. [PubMed] [Google Scholar]

- 7.Berahmand K, Nasiri E, Li Y. Spectral clustering on protein-protein interaction networks via constructing affinity matrix using attributed graph embedding. Comput Biol Med. 2021;138:104933. doi: 10.1016/j.compbiomed.2021.104933. [DOI] [PubMed] [Google Scholar]

- 8.Bezier C, Anthoine G, Charki A. Reliability of real-time RT-PCR tests to detect SARS-Cov-2: a literature review. Int J Metrol Qual Eng. 2020;11:13. doi: 10.1051/ijmqe/2020014. [DOI] [Google Scholar]

- 9.Canayaz M. C+ EffxNet: a novel hybrid approach for COVID-19 diagnosis on CT images based on CBAM and EfficientNet. Chaos, Solitons Fractals. 2021;151:111310. doi: 10.1016/j.chaos.2021.111310. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.Candemir S, Jaeger S, Palaniappan K, Musco JP, Singh RK, Xue Z, Karargyris A, Antani S, Thoma G, McDonald CJ. Lung segmentation in chest radiographs using anatomical atlases with nonrigid registration. IEEE Trans Med Imag. 2014;33(2):577–590. doi: 10.1109/TMI.2013.2290491. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Chawla NV, Bowyer KW, Hall LO, Kegelmeyer WP. SMOTE: synthetic minority over-sampling technique. J Artif Intell Res. 2002;16:321–357. doi: 10.1613/jair.953. [DOI] [Google Scholar]

- 12.Chouhan V, Singh SK, Khamparia A, Gupta D, Tiwari P, Moreira C, De Albuquerque VHC. A novel transfer learning based approach for pneumonia detection in chest X-ray images. Appl Sci. 2020;10(2):559. doi: 10.3390/app10020559. [DOI] [Google Scholar]

- 13.Chowdhury NK, Kabir MA, Rahman MM, Rezoana N. ECOVNet: a highly effective ensemble based deep learning model for detecting COVID-19. PeerJ Comput Sci. 2021;7:e551. doi: 10.7717/peerj-cs.551. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14.Cifci MA. Deep learning model for diagnosis of corona virus disease from CT images. Int J Sci Eng Res. 2020;11(4):273–278. [Google Scholar]

- 15.Cireşan DC, Giusti A, Gambardella LM, Schmidhuber J. Mitosis detection in breast cancer histology images with deep neural networks. Lect Notes Comput Sci. 2013;8150 LNCS(PART 2):411–418. doi: 10.1007/978-3-642-40763-5_51. [DOI] [PubMed] [Google Scholar]

- 16.Cohen JP, Morrison P, Dao L, Roth K, Duong TQ, Ghassemi M (2020) COVID-19 image data collection: prospective predictions are the future [Online]. Available: http://arxiv.org/abs/2006.11988

- 17.Corman VM, et al. Detection of 2019 novel coronavirus (2019-nCoV) by real-time RT-PCR. Euro Surveill. 2020;25(3):2000045. doi: 10.2807/1560-7917.ES.2020.25.3.2000045. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18.COVID 19 chest X-ray [Online] (n.d.) Available: https://github.com/agchung

- 19.COVID-19 DATABASE | SIRM [Online] (n.d.) Available: https://www.sirm.org/en/category/articles/COVID-19-database/

- 20.Cuevas A, Febrero M, Fraiman R. An anova test for functional data. Comput Stat Data Anal. 2004;47:111–122. doi: 10.1016/j.csda.2003.10.021. [DOI] [Google Scholar]

- 21.Dadario AM (2020) COVID-19 X rays | Kaggle. https://www.kaggle.com/andrewmvd/convid19-x-rays.

- 22.Dansana D, Kumar R, Bhattacharjee A, Hemanth DJ, Gupta D, Khanna A, Castillo O (2020) Early diagnosis of COVID-19-affected patients based on X-ray and computed tomography images using deep learning algorithm. Soft Comput:1–9. 10.1007/s00500-020-05275-y [DOI] [PMC free article] [PubMed] [Retracted]

- 23.Dietterich T. Approximate statistical tests for comparing supervised classification learning algorithms. Neural Comput. 1998;10:1895–1923. doi: 10.1162/089976698300017197. [DOI] [PubMed] [Google Scholar]

- 24.Dunnmon JA, Yi D, Langlotz CP, Ré C, Rubin DL, Lungren MP. Assessment of convolutional neural networks for automated classification of chest radiographs. Radiology. 2019;290:537–544. doi: 10.1148/radiol.2018181422. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 25.El Asnaoui K. Design ensemble deep learning model for pneumonia disease classifcation. Int J Multimed Inf Retr. 2021;10:55–68. doi: 10.1007/s13735-021-00204-7. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 26.Esteva A, Kuprel B, Novoa RA, Ko J, Swetter SM, Blau HM, Thrun S. Dermatologist-level classification of skin cancer with deep neural networks. Nature. 2017;542(7639):115–118. doi: 10.1038/nature21056. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 27.Frederick Nat Lab (2018) The cancer imaging archive (TCIA). The Cancer Imaging Archive, p 1 [Online]. Available: https://www.cancerimagingarchive.net

- 28.Gaillard F (2014) Radiopaedia.org, the wiki-based collaborative Radiology resource. Radiopaedia.org; [Online]. Available: http://radiopaedia.org/

- 29.Grewal M, Srivastava MM, Kumar P, Varadarajan S. 2018 IEEE 15th international symposium on biomedical imaging (ISBI 2018) IEEE; 2018. Radnet: radiologist level accuracy using deep learning for hemorrhage detection in ct scans; pp. 281–284. [Google Scholar]

- 30.Guan Q, Huang Y, Zhong Z, Zheng Z, Zheng L, Yang Y (2018) Diagnose like a radiologist: attention guided convolutional neural network for thorax disease classification. arXiv:1801.09927. Retrieved from https://arxiv.org/abs/1801.09927v1

- 31.Gulshan V, Peng L, Coram M, Stumpe MC, Wu D, Narayanaswamy A, Venugopalan S, Widner K, Madams T, Cuadros J, Kim R, Raman R, Nelson PC, Mega JL, Webster DR. Development and validation of a deep learning algorithm for detection of diabetic retinopathy in retinal fundus photographs. JAMA. 2016;316(22):2402–2410. doi: 10.1001/jama.2016.17216. [DOI] [PubMed] [Google Scholar]

- 32.Hammoudi K, Benhabiles H, Melkemi M, Dornaika F, Arganda-Carreras I, Collard D, Scherpereel A. Deep learning on chest X-ray images to detect and evaluate pneumonia cases at the era of COVID19. J Med Syst. 2021;45:75–75. doi: 10.1007/s10916-021-01745-4. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 33.Hannun AY, Rajpurkar P, Haghpanahi M, Tison GH, Bourn C, Turakhia MP, Ng AY. Cardiologist-level arrhythmia detection and classification in ambulatory electrocardiograms using a deep neural network. Nat Med. 2019;25(1):65–69. doi: 10.1038/s41591-018-0268-3. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 34.Hassantabar S, Ahmadi M, Sharifi A. Diagnosis and detection of infected tissue of COVID-19 patients based on lung x-ray image using convolutional neural network approaches. Chaos, Solitons Fractals. 2020;140:110170. doi: 10.1016/j.chaos.2020.110170. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 35.He K, Zhang X, Ren S, Sun J. Deep residual learning for image recognition. In: Proceedings of the IEEE conference on computer vision and pattern recognition. 2016. pp. 770–778. [Google Scholar]

- 36.He K, Zhang X, Ren S, Sun J (2016) Deep residual learning for image recognition. In: Proceedings of the IEEE conference on computer vision and pattern recognition. IEEE, pp 770–778

- 37.He K, Zhang X, Ren S, Sun J (2016) Deep residual learning for image recognition. In: 2016 IEEE conference on computer vision and pattern recognition. IEEE, pp 770–778

- 38.Hermann S (2014) Evaluation of scan-line optimization for 3D medical image registration. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 3073–3080

- 39.Horry M et al (2020) X-ray image based COVID-19 detection using pre-trained deep learning models. 10.31224/osf.io/wx89s

- 40.Huang G, Liu Z, Van Der Maaten L, Weinberger KQ. Densely connected convolutional networks. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. IEEE; 2017. pp. 4700–4708. [Google Scholar]

- 41.Huang C, Wang Y, Li X, Ren L, Zhao J, Hu Y, Zhang L, Fan G, Xu J, Gu X, Cheng Z. Clinical features of patients infected with 2019 novel coronavirus in Wuhan, China. Lancet. 2020;395:497–506. doi: 10.1016/S0140-6736(20)30183-5. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 42.Hui DS, Azhar EI, Madani TA, et al. The continuing 2019-nCoV epidemic threat of novel coronaviruses to global health: the latest 2019 novel coronavirus outbreak in Wuhan, China. Int J Infect Dis. 2020;91:264–266. doi: 10.1016/j.ijid.2020.01.009. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 43.Hwang EJ, et al. Development and validation of a deep learning-based automated detection algorithm for major thoracic diseases on chest radiographs. JAMA Netw Open. 2019;2(3):e191095. doi: 10.1001/jamanetworkopen.2019.1095. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 44.Islam MM, Karray F, Alhajj R, Zeng J. A review on deep learning techniques for the diagnosis of novel coronavirus (COVID-19) IEEE Access. 2021;9:30551–30572. doi: 10.1109/ACCESS.2021.3058537. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 45.Jaeger S, Karargyris A, Candemir S, Folio L, Siegelman J, Callaghan F, Xue Z, Palaniappan K, Singh RK, Antani S, Thoma G, Wang Y-X, Lu P-X, McDonald CJ. Automatic tuberculosis screening using chest radiographs. IEEE Trans Med Imag. 2014;33(2):233–245. doi: 10.1109/TMI.2013.2284099. [DOI] [PubMed] [Google Scholar]

- 46.Jaeger S, Candemir S, Antani S, Wáng Y-XJ, Lu P-X, Thoma G. Two public chest X-ray datasets for computer-aided screening of pulmonary diseases. Quant Imag Med Surg. 2014;4(6):475–477. doi: 10.3978/j.issn.2223-4292.2014.11.20. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 47.Jain R, Gupta M, Taneja S, Hemanth DJ. Deep learning-based detection and analysis of COVID-19 on chest X-ray images. Appl Intell. 2021;51(3):1690–1700. doi: 10.1007/s10489-020-01902-1. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 48.Jaiswal AK, Tiwari P, Kumar S, Gupta D, Khanna A, Rodrigues JJ. Identifying pneumonia in chest X-rays: a deep learning approach. Measurement. 2019;145:511–518. doi: 10.1016/j.measurement.2019.05.076. [DOI] [Google Scholar]

- 49.Janizek JD, Erion G, DeGrave AJ, Lee SI. An adversarial approach for the robust classification of pneumonia from chest radiographs. In: Proceedings of the ACM conference on health, inference, and learning. 2020. pp. 69–79. [Google Scholar]

- 50.Kermany DS, Goldbaum M, Cai W, Valentim C, Liang H, Baxter SL et al (2018) Identifying medical diagnoses and treatable diseases by image-based deep learning. Cell 172(5):1122–1131. 10.1016/j.cell.2018.02.010 [DOI] [PubMed]

- 51.Kermany D, Zhang K, Goldbaum M. Labeled optical coherence tomography (OCT) and chest X-ray images for classification. Mendeley Data. 2018;2(2):651. [Google Scholar]

- 52.King BF Jr. Artificial intelligence and radiology: what will the future hold? J Am Coll Radiol. 2018;15:501–503. doi: 10.1016/j.jacr.2017.11.017. [DOI] [PubMed] [Google Scholar]

- 53.Komal A, Malik H. Transfer learning method with deep residual network for COVID-19 diagnosis using chest radiographs images. In: Proceedings of international conference on information technology and applications. Singapore: Springer; 2022. pp. 145–159. [Google Scholar]

- 54.Liang G, Zheng L. A transfer learning method with deep residual network for pediatric pneumonia diagnosis. Comput Methods Prog Biomed. 2020;187:104964. doi: 10.1016/j.cmpb.2019.06.023. [DOI] [PubMed] [Google Scholar]

- 55.Litjens G, Kooi T, Bejnordi BE, Setio AAA, Ciompi F, Ghafoorian M, van der Laak J, van Ginneken B, Sanchez CI. A survey on deep learning in medical image analysis. Med Image Anal. 2017;42:60–88. doi: 10.1016/j.media.2017.07.005. [DOI] [PubMed] [Google Scholar]

- 56.Liu J, Liu F, Liu Y, Wang HW, Feng ZC. Lung ultrasonography for the diagnosis of severe neonatal pneumonia. Chest. 2014;146(2):383–388. doi: 10.1378/chest.13-2852. [DOI] [PubMed] [Google Scholar]

- 57.Liu W, Wang Z, Liu X, Zeng N, Liu Y, Alsaadi FE. A survey of deep neural network architectures and their applications. Neurocomputing. 2017;234:11–26. doi: 10.1016/j.neucom.2016.12.038. [DOI] [Google Scholar]

- 58.Loey M, Smarandache F, Khalifa NEM. Within the lack of chest COVID-19 X-ray dataset: a novel detection model based on GAN and deep transfer learning. Symmetry. 2020;12(4):651. doi: 10.3390/SYM12040651. [DOI] [Google Scholar]

- 59.Lu J, Behbood V, Hao P, Zuo H, Xue S, Zhang G. Transfer learning using computational intelligence: a survey. Knowl-Based Syst. 2015;80:14–23. doi: 10.1016/j.knosys.2015.01.010. [DOI] [Google Scholar]

- 60.Lu H, Stratton CW, Tang YW. Outbreak of pneumonia of unknown etiology in Wuhan China: the mystery and the miracle. J Med Virol. 2020;92:401–402. doi: 10.1002/jmv.25678. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 61.Mahmud T, Rahman MA, Fattah SA. CovXNet: a multi-dilation convolutional neural network for automatic COVID-19 and other pneumonia detection from chest X-ray images with transferable multi-receptive feature optimization. Comput Biol Med. 2020;122:103869. doi: 10.1016/j.compbiomed.2020.103869. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 62.Malik H, Anees T, Naeem A (2020) Chest Radiographs of COVID-19 infected. Mendeley Data V1. 10.17632/67dmnmx33v.1

- 63.Malik H, Farooq MS, Khelifi A, Abid A, Qureshi JN, Hussain M. A comparison of transfer learning performance versus health experts in disease diagnosis from medical imaging. IEEE Access. 2020;8:139367–139386. doi: 10.1109/ACCESS.2020.3004766. [DOI] [Google Scholar]

- 64.Malik H, Anees T, Mui-zzud-din (2022) BDCNet: multi-classification convolutional neural network model for classification of COVID-19, pneumonia, and lung cancer from chest radiographs. Multimedia Systems 28:815–829. 10.1007/s00530-021-00878-3 [DOI] [PMC free article] [PubMed]

- 65.Marques G, Agarwal D, de la Torre DI. Automated medical diagnosis of COVID-19 through EfficientNet convolutional neural network. Appl Soft Comput. 2020;96:106691. doi: 10.1016/j.asoc.2020.106691. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 66.Melendez J, van Ginneken B, Maduskar P, Philipsen RH, Reither K, Breuninger M, Sánchez CI. A novel multiple-instance learning-based approach to computer-aided detection of tuberculosis on chest x-rays. IEEE Trans Med Imaging. 2014;34(1):179–192. doi: 10.1109/TMI.2014.2350539. [DOI] [PubMed] [Google Scholar]

- 67.Mohsen H, El-Dahshan E-SA, El-Horbaty E-SM, Salem A-BM. Classification using deep learning neural networks for brain tumors. Futur Comput Informatics J. 2018;3(1):68–71. doi: 10.1016/j.fcij.2017.12.001. [DOI] [Google Scholar]

- 68.Mooney P (2018) Chest X-ray images (pneumonia) | Kaggle. Kaggle.com. https://www.kaggle.com/paultimothymooney/chest-xray-pneumonia; https://data.mendeley.com/datasets/rscbjbr9sj/2. Accessed 30 Aug 2021

- 69.National Institutes of Health Chest X-Ray Dataset (2019) Retrieved January 29, 2020 from https://nihcc.app.box.com/v/ChestXray-NIHCC/folder/36938765345

- 70.NIH chest X-rays (2018) Kaggle; [Online]. Available: https://www.kaggle.com/nih-chest-xrays/data

- 71.Oh Y, Park S, Ye JC. Deep learning COVID-19 features on CXR using limited training data sets. IEEE Trans Med Imag. 2020;39(8):2688–2700. doi: 10.1109/TMI.2020.2993291. [DOI] [PubMed] [Google Scholar]

- 72.Pneumothorax Database (2021) Kaggle; [Online]. Available: https://www.kaggle.com/vbookshelf/pneumothorax-chest-xray-images-and-masks

- 73.Quang D, Xie X. DanQ: a hybrid convolutional and recurrent deep neural network for quantifying the function of DNA sequences. Nucleic Acids Res. 2016;44(11):e107. doi: 10.1093/nar/gkw226. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 74.Rahimzadeh M, Attar A. A modified deep convolutional neural network for detecting COVID-19 and pneumonia from chest X-ray images based on the concatenation of Xception and ResNet50V2. Informatics Med Unlocked. 2020;19:100360. doi: 10.1016/j.imu.2020.100360. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 75.Rajaraman S, Candemir S, Kim I, Thoma G, Antani S. Visualization and interpretation of convolutional neural network predictions in detecting pneumonia in pediatric chest radiographs. Appl Sci. 2018;8(10):1715. doi: 10.3390/app8101715. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 76.Rajpurkar P, Irvin J, Zhu K, Yang B, Mehta H, Duan T et al (2017) CheXNet: radiologist-level pneumonia detection on chest x-rays with deep learning. arXiv:1711.05225. Retrieved from https://arxiv.org/abs/1711.05225

- 77.Refaeilzadeh P, Tang L, Liu H. Cross-validation. Encyclopedia Database Syst. 2009;5:532–538. doi: 10.1007/978-0-387-39940-9_565. [DOI] [Google Scholar]

- 78.Resnick S, Inaba K, Karamanos E, Skiada D, Dollahite JA, Okoye O, Demetriades D. Clinical relevance of the routine daily chest X-ray in the surgical intensive care unit. Am J Surg. 2017;214(1):19–23. doi: 10.1016/j.amjsurg.2016.09.059. [DOI] [PubMed] [Google Scholar]

- 79.Rostami M, Berahmand K, Nasiri E, Forouzandeh S. Review of swarm intelligence-based feature selection methods. Eng Appl Artif Intell. 2021;100:104210. doi: 10.1016/j.engappai.2021.104210. [DOI] [Google Scholar]

- 80.Rostami M, Forouzandeh S, Berahmand K, Soltani M, Shahsavari M, Oussalah M. Gene selection for microarray data classification via multi-objective graph theoretic-based method. Artif Intell Med. 2022;123:102228. doi: 10.1016/j.artmed.2021.102228. [DOI] [PubMed] [Google Scholar]

- 81.Russakovsky O, Deng J, Su H, Krause J, Satheesh S, Ma S, Huang Z, Karpathy A, Khosla A, Bernstein M, Berg AC, Fei-Fei L. ImageNet large scale visual recognition challenge. Int J Comput Vis. 2015;115:211–252. doi: 10.1007/s11263-015-0816-y. [DOI] [Google Scholar]

- 82.Saha P, Sadi MS, Islam MM. EMCNet: automated COVID-19 diagnosis from X-ray images using convolutional neural network and ensemble of machine learning classifiers. Inform Med Unlocked. 2021;22:100505. doi: 10.1016/j.imu.2020.100505. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 83.Sethy PK, Behera SK, Ratha PK, Biswas P. Detection of coronavirus disease (COVID-19) based on deep features and support vector machine. Int J Math Eng Manag Sci. 2020;5(4):643–651. doi: 10.33889/IJMEMS.2020.5.4.052. [DOI] [Google Scholar]

- 84.Shiraishi J, Katsuragawa S, Ikezoe J, Matsumoto T, Kobayashi T, Komatsu KI, Matsui M, Fujita H, Kodera Y, Doi K. Development of a digital image database for chest radiographs with and without a lung nodule: receiver operating characteristic analysis of radiologists' detection of pulmonary nodules. Am J Roentgenol. 2000;174(1):71–74. doi: 10.2214/ajr.174.1.1740071. [DOI] [PubMed] [Google Scholar]

- 85.Simonyan K, Zisserman A (2015) Very deep convolutional networks for large-scale image recognition. In: International Conference on Learning Representations (ICLR)

- 86.Singh D, Kumar V, Vaishali, et al. Classification of COVID-19 patients from chest CT images using multi-objective differential evolution–based convolutional neural networks. Eur J Clin Microbiol Infect Dis. 2020;39:1379–1389. doi: 10.1007/s10096-020-03901-z. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 87.Srivastava N, Hinton G, Krizhevsky A, Sutskever I, Salakhutdinov R. Dropout: a simple way to prevent neural networks from overfitting. J Mach Learn Res. 2014;15(1):1929–1958. [Google Scholar]

- 88.Stephen O, Sain M, Maduh UJ, Jeong DU. An efficient deep learning approach to pneumonia classification in healthcare. J Healthc Eng. 2019;2019:1–7. doi: 10.1155/2019/4180949. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 89.Szegedy C, Liu W, Jia Y et al (2015) Going deeper with convolutions. In: 2015 IEEE conference on computer vision and pattern recognition (CVPR)

- 90.Szegedy C, Ioffe S, Vanhoucke V, Alemi A (2017) Inception-v4, inception-resnet and the impact of residual connections on learning. In: Proceedings of the AAAI conference on artificial intelligence (Vol. 31, no. 1)

- 91.Tahamtan A, Ardebili A. Real-time RT-PCR in COVID-19 detection: issues affecting the results. Expert Rev Mol Diagn. 2020;20(5):453–454. doi: 10.1080/14737159.2020.1757437. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 92.Too EC, Yujian L, Njuki S, Yingchun L. A comparative study of fine-tuning deep learning models for plant disease identification. Comput Electron Agric. 2019;161:272–279. doi: 10.1016/j.compag.2018.03.032. [DOI] [Google Scholar]

- 93.Trivedi M, Gupta A. A lightweight deep learning architecture for the automatic detection of pneumonia using chest X-ray images. Multimed Tools Appl. 2021;81:5515–5536. doi: 10.1007/s11042-021-11807-x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 94.Tsiknakis N, Trivizakis E, Vassalou E, Papadakis G, Spandidos D, Tsatsakis A, Sánchez-García J, López-González R, Papanikolaou N, Karantanas A, Marias K. Interpretable artificial intelligence framework for COVID-19 screening on chest X-rays. Exp Ther Med. 2020;20:727–735. doi: 10.3892/etm.2020.8797. [DOI] [PMC free article] [PubMed] [Google Scholar]