Abstract

Glaucoma is an asymptomatic condition that damages the optic nerve of the human eye. It is frequently caused by abnormally high pressure in the eye and can lead to permanent blindness. Detecting glaucoma at an early stage offers the possibility of curing the disease, but accurate diagnosis is a challenging task. Therefore, this paper proposes a novel glaucoma detection system that diagnoses glaucoma by exploiting the prescribed characteristics. The main aim of this paper is to diagnose glaucoma affecting the optic nerve of a human eye. The proposed glaucoma detection system uses four phases, namely data preprocessing (enhancement), segmentation, feature extraction, and classification. A novel classifier named fractional gravitational search-based hybrid deep neural network (FGSA-HDNN) is developed to effectively separate glaucoma-infected images from normal images. Finally, an experimental analysis of the proposed approach and various other techniques is performed, and the accuracy achieved in diagnosing glaucoma is 98.75%.

Keywords: Glaucoma, Optical coherence tomography, Segmentation, Blood vessels, Optic disc, Feature extraction, Classification, Deep neural network, FGSA

Introduction

In modern healthcare, medical imaging has become a very important tool. Since it holds various visual documents and reports of patients and refines data for ailments like diabetic retinopathy, glaucoma, and macular degeneration, it has also become riskier [1]. Glaucoma is a neurodegenerative disease that causes optic nerve damage, which results in visual loss. Glaucoma is an asymptomatic as well as a chronic disease, and vision loss can be avoided by early diagnosis. Across social conditions and populations, glaucoma is considered the world's largest cause of blindness after cataracts. Cataract blindness is reversible via surgery, while glaucoma leads to permanent blindness [2].

Glaucoma is among the world's most prevalent causes of blindness. It is predicted that by 2020, about 80 million individuals will be affected by glaucoma. Symptoms do not appear in the early phase, and the disease damages the optic nerve, progressively causing irreversible loss of vision [3]. The major cause of glaucoma is a rise in intraocular pressure (IOP) inside the eye. This increased pressure often leads to an improper balance of fluid in the eye, and hence it injures the optic nerve and also affects eye movement. Further, in the greater part of glaucoma cases, no symptoms or pain appear initially, and so glaucoma is referred to as the “silent thief of sight” [4].

The early phase of glaucoma is asymptomatic; about 50% of patients are reported to be unaware of the disease. Without proper diagnosis and therapy, glaucoma progression gradually leads to irreparable vision loss. For appropriate management of the disease, diagnosing and treating glaucoma at early phases are necessary [5]. Glaucoma occurs mainly in people over 40 years old, but can also occur in young adults or even children. The incidence of glaucoma is elevated with age over 40 years, a family history of glaucoma, impaired vision, diabetes, and eye injuries. The signs of glaucoma are vision loss, eye redness, nausea or vomiting, a hazy-looking eye (particularly in children), and narrowing of the field of vision. The study was carried out with reference to fundus images collected from a fundus camera [6].

Usually, several test procedures, such as tonometry, gonioscopy, and optical coherence tomography (OCT), can be carried out when glaucoma is suspected. Tonometry is an intraocular pressure monitoring process. Contactless air-puff tonometers use a fast air pulse (an air jet) to calculate IOP [7]. Gonioscopy verifies whether the angle is open or closed, and OCT or fundus images are used to verify the visibility of the optic nerve and retina. Glaucoma can be diagnosed with the help of the retinal fundus image, which is used to evaluate the retinal nerve fiber layer (RNFL) thickness. Moreover, fundus imaging is a non-invasive approach widely employed by ophthalmologists, as it can easily be used to take images of healthy as well as non-healthy retinas [8].

Several research works have examined the correlation between the visual field test and structural estimates produced by optical coherence tomography (OCT) scanners [9–14]. Of these estimates, RNFL thickness has been used to find a linear relationship with vision loss at an advanced stage of the disease. Moreover, ocular disease has previously been detected and diagnosed with the help of 2D color fundus images combined with deep learning techniques. This includes retinal vessel segmentation, optic disc and optic cup segmentation, glaucoma recognition, and image registration [15]. For glaucoma detection, retinal fundus images are employed to evaluate the thickness of the retinal nerve fiber layer (RNFL) [16]. Several studies have focused on automated detection of glaucoma from color fundus images, but estimation of the cup-to-disc ratio (CDR) is the most complex part [17, 18].

To ease the estimation of CDR, this paper proposes a novel glaucoma detection system that diagnoses glaucoma by exploiting the prescribed characteristics, together with a novel classifier for effective classification of glaucoma-infected images from normal images. The significant contributions of the paper are as follows:

The input fundus images are enhanced before subjecting to the process of the segmentation phase.

The segmentation is performed efficiently to extract the optic disc image and blood vessels from the input image.

Evolutionary based DNN classifier is proposed combining fractional gravitation search optimization (FGSO) algorithm and deep neural network for classifying the glaucoma image from the extracted features.

The remaining paper is organized as follows: “Review of Related Works” section discusses the literature works of the various algorithms used for glaucoma detection. “Proposed Framework” section depicts the details about the proposed methodology to detect glaucoma incorporated with the classifier. The experimental results and the comparative analysis are discussed in “Results and Discussions” section. The conclusion of the paper is stated in “Conclusion” section.

Review of Related Works

Numerous studies have been presented by many researchers, and they are summarized in Table 1. Rahouma et al. [19] used the gray-level run-length matrix (GLRLM) and the gray-level co-occurrence matrix (GLCM) to extract glaucoma features. The database used was RIM-ONE (a retinal image database), which consists of 614 images, of which 340 are normal and 274 are glaucoma-infected. The database was obtained from three Spanish hospitals. In preprocessing, the RGB image was converted to grayscale and cropped to focus on the region of interest (ROI). The ANN classifier with back-propagation achieved the highest accuracy of 99%. Local phase quantization (LPQ) with principal component analysis (PCA) was proposed by Chan et al. [20] for combining the feature vectors. Optical coherence tomography angiogram (OCTA) images were used for detecting glaucoma. This method assists clinicians in recognizing glaucoma at an early stage. The OCTA images are of four types: oculus sinister (OS) macular, OS disc, oculus dexter (OD) macular, and OD disc. In the preprocessing stage, each image was resized to 300 × 300 pixels and converted to grayscale, and contrast limited adaptive histogram equalization (CLAHE) was employed for contrast enhancement. The drawback of this approach was the tedious feature extraction process, which becomes clinically significant because more images are used.

Table 1.

Review of related works for glaucoma detection

| References | Method | Dataset | Preprocessing | Classifier | Evaluation results |
|---|---|---|---|---|---|
| Rahouma et al. [19] | GLCM and GLRLM | RIM-ONE [21]: 614 images (340 normal and 274 glaucomatous) | ROI extraction and color conversion | ANN with backpropagation technique | Accuracy 99%, sensitivity 100%, and specificity 100% |
| Chan et al. [20] | LPQ with PCA | OCTA images with OS disc, OS macular, OD disc, and OD macular | Image resizing, CLAHE for contrast enhancement | AdaBoost classifier | Accuracy 94.3%, precision 98.2%, sensitivity 94.4%, specificity 94% |
| Li et al. [22] | Automated glaucoma detection | LAG database | ROI extraction and resizing the image | AG-CNN | Accuracy 96.2%, sensitivity 95.4%, specificity 96.7%, F1 score 0.954, AUC 0.983 |
| Kirar et al. [23] | ICs and DWT | RIM-ONE [21]: 505 images | CLAHE for contrast improvement | LS-SVM | Accuracy 84.95%, sensitivity 86%, specificity 83.85% |
| Agrawal et al. [24] | QB-VMD | RIM-ONE [21] of MIAG database | CLAHE for contrast improvement, median filter for noise removal | LS-SVM | Accuracy 86.13%, specificity 84.80%, sensitivity 87.43% |
| Dey and Bandyopadhyay [25] | PCA | 100 digital fundus images | 2D Gaussian filter for noise removal and AHE for contrast improvement | SVM with RBF kernel | Accuracy 96%, sensitivity 100%, specificity 92% |
| Abdel-Hamid [26] | Wavelet-based glaucoma detection algorithm | Glaucoma DB and HRF image database | Unsharp masking and CLAHE for enhancing the contrast | kNN | Glaucoma DB: sensitivity 87.9%, specificity 90.9%, accuracy 89.4%, AUC 92.2%; HRF: sensitivity 93.3%, specificity 100%, accuracy 96.7%, AUC 94.7% |
| Sharma et al. [27] | Automatic glaucoma diagnosis | Drishti [28], HRF, and REFUGE database | ROI extraction and blood vessel removal | Deep CNN | Sensitivity 96%, specificity 84%, PPV 85.72%, accuracy 90%, F1 score 91.45% |
| Martins et al. [4] | CAD pipeline diagnosis method | Origa [29], Drishti [28], RIM-ONE r1 [30], r2 [31], r3 [31], iChallenge [32], and RIGA [33] | Gaussian blur or median blur, CLAHE for image enhancement | CNN | IOU 91%, accuracy 87%, sensitivity 85%, and AUC 93% |
| Al-Akhras et al. [34] | Soft computing techniques | 106 retina images | Gaussian filter, histogram equalization, blood removal, ROI detection | SVM and genetically optimized ANN | SVM: specificity 100%, accuracy 87%; ANN: specificity 91.4%, accuracy 98% |

Li et al. [22] proposed automatic glaucoma detection for fundus images to increase reliability and accuracy. The large-scale attention-based glaucoma (LAG) dataset, obtained from the Beijing Tongren Hospital and the Chinese Glaucoma Study Alliance (CGSA), consists of 11,760 fundus images. The RGB channels of the images are mapped to binary glaucoma labels to remove the high redundancy in the fundus image. The automated glaucoma CNN (AG-CNN) was used to classify normal and glaucoma-infected images. Kirar et al. [23] suggested glaucoma detection for fundus images using image channels (ICs) and the discrete wavelet transform (DWT). Images were separated into red channel, blue channel, green channel, and grayscale images, and CLAHE was applied to the ICs to remove unwanted lighting effects and improve image visibility. The least-squares support vector machine (LS-SVM) was employed to classify glaucoma-infected and non-infected images. The RIM-ONE database used consists of 505 images, of which 250 were glaucoma-infected and 255 were normal. The main drawback of this approach was the difficulty of detecting multiclass glaucoma.

A new approach for computerized glaucoma recognition using quasi-bivariate variational mode decomposition (QB-VMD) was proposed by Agrawal et al. [24]. The method was evaluated on 505 images from the RIM-ONE database of MIAG, of which 255 were healthy and 250 were glaucoma-infected. A median filter for noise removal and CLAHE for contrast enhancement were used in the preprocessing stage. An LS-SVM classifier was employed to categorize the infected and healthy images. The accuracy of the classifier was 85.94% and 86.13% for threefold and tenfold cross-validation, respectively. Multistage glaucoma detection on a large database was not possible using this method. A method for examining digital fundus images to distinguish glaucoma images from normal images was proposed by Dey and Bandyopadhyay [25]. PCA was employed for feature extraction, and an SVM was utilized for classifying the images. The database contains 100 digital eye fundus images. In preprocessing, a two-dimensional Gaussian filter was used for noise reduction, and adaptive histogram equalization was employed for contrast enhancement.

Glaucoma detection with a wavelet-based method for real-time screening systems was proposed by Abdel-Hamid [26]. A combination of statistical and wavelet textural features computed from the optic disc region was used to separate the images into healthy and glaucoma images. Two public databases were used: Glaucoma DB and HRF. In the preprocessing stage, red channel illumination was normalized, and unsharp masking and CLAHE were applied to maximize the contrast. A k-nearest neighbor (kNN) classifier was employed to classify normal and infected images, and the accuracy obtained on HRF was 96.7%. Sharma et al. [27] proposed automatic glaucoma diagnosis for digital fundus images. Drishti [28] (31 normal and 70 glaucomatous images), HRF (15 healthy and 15 infected images), and REFUGE [35] (80 glaucomatous and 360 normal images) were used for classification. A deep CNN was employed to classify the retinal fundus images. The method faced an over-fitting problem, so a larger data collection is to be employed in the future to improve robustness.

A computer-aided diagnosis (CAD) pipeline was suggested by Martins et al. [4] to diagnose glaucoma from fundus images, and it runs offline on a smartphone. For preprocessing, Gaussian, average, or median blur was used for blurring the image, and CLAHE was used for image enhancement. Several databases, namely Origa (168 glaucoma and 482 non-glaucoma images), Drishti-GS (70 glaucoma and 31 non-glaucoma images), iChallenge (80 glaucoma and 720 non-glaucoma images), RIM-ONE r1 (40 glaucoma and 18 non-glaucoma images), r2 (200 glaucoma and 255 non-glaucoma images), r3 (148 glaucoma and 170 non-glaucoma images), and RIGA (749 images), were utilized for classifying glaucomatous and normal retinal images. A CNN was used as the classifier for the healthy and glaucomatous fundus images. Al-Akhras et al. [34] proposed enhanced methods to separate disease-infected features. A Gaussian filter, histogram equalization, noise reduction, and ROI detection were the techniques used for preprocessing. SVM and ANN were the classifiers employed for glaucoma-infected image classification. Automatic diagnosis allows regular checks to be made so that glaucoma infection can be detected in patients.

Proposed Framework

The proposed methodology for detecting glaucoma is depicted in Fig. 1. Here, a deep neural network is employed as an efficient technique to distinguish infected and normal images. The four modules of the proposed method are preprocessing, segmentation, feature extraction, and classification.

Fig. 1.

Proposed framework for glaucoma detection

Image Preprocessing Phase

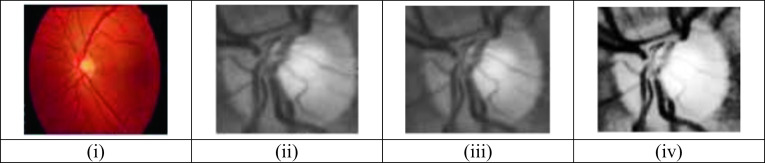

Preprocessing is considered one of the most important phases in image processing; it ensures that all the images are normalized with respect to each other before the actual examination. It is used to improve the images by suppressing unwanted features such as speckles, blind spots, noise [36], low contrast, and irrelevant variations, and it enhances the aspects that are important for further processing. It includes image resizing, channel extraction, noise removal, and image enhancement. The preprocessed image is shown in Fig. 2.

Fig. 2.

Preprocessing output: (i) input image, (ii) green channel-extracted image, (iii) median-filtered image, and (iv) CLAHE image

Image Resizing

The input fundus and OCT images are obtained from the database and resized to 300 × 300 pixels, which gives all the images the same resolution, dimensions, and scale for better analysis.

Image Extraction

The resized images are used to extract the green channel, red channel, blue channel, and grayscale images [23]. The fundus image is an RGB color image, as shown in Fig. 2. A pseudo-color representation is utilized to distinguish the information channels more accurately. Several operations cannot be performed directly on the full color image because it contains many colors; hence, only one channel is extracted to optimize the result. The green channel is chosen because the human eye is most responsive to it and it carries the most information for processing, which helps differentiate healthy and glaucoma-infected images. Hence, CLAHE is used to enhance the green channel image.

Median Filter

Errors in image processing occur because of diverse kinds of noise in the input images, such as Gaussian, salt-and-pepper, gamma, exponential, uniform, Rayleigh, and Erlang noise. Hence, median filters are employed to remove this noise from the input image for improved and more precise results.
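
As an illustration of the noise-removal step, the following is a minimal pure-Python sketch of a median filter on a grayscale image stored as a list of rows. The function name `median_filter`, the 3 × 3 window, and the border handling (using only neighbours inside the image) are assumptions made for this example; the paper does not state its filter settings.

```python
from statistics import median

def median_filter(image, size=3):
    """Apply a size x size median filter to a 2D list of gray levels.

    Border pixels use only the neighbours that fall inside the image
    (an assumption; other border policies are equally valid).
    """
    height, width = len(image), len(image[0])
    radius = size // 2
    filtered = [[0] * width for _ in range(height)]
    for r in range(height):
        for c in range(width):
            window = [
                image[rr][cc]
                for rr in range(max(0, r - radius), min(height, r + radius + 1))
                for cc in range(max(0, c - radius), min(width, c + radius + 1))
            ]
            filtered[r][c] = median(window)
    return filtered

# A flat patch with one salt-noise pixel in the middle.
noisy = [
    [10, 10, 10, 10, 10],
    [10, 10, 10, 10, 10],
    [10, 10, 255, 10, 10],
    [10, 10, 10, 10, 10],
    [10, 10, 10, 10, 10],
]
cleaned = median_filter(noisy)
```

Because the median ignores isolated extreme values, the single bright outlier is replaced by the surrounding gray level, which is why this filter suits salt-and-pepper noise in particular.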

Contrast Limited Adaptive Histogram Equalization

The CLAHE technique is applied to all the information channels to enhance image quality and visibility and to remove unwanted lighting effects. The green channel images are improved using the CLAHE scheme [20]. The input image quality can be affected by noise and artifacts that result in low contrast. CLAHE improves the image contrast and enhances the differences among pixel intensities within a local neighborhood. The speed of identifying disease from the input OCTA and fundus images depends on the image quality. Histogram equalization remaps the intensities of an information channel according to their probability distribution and stretches the distribution to improve the contrast and the visual effect. The equalization constructs a transformation function that produces an output image with an approximately uniform histogram. CLAHE specifically targets the image entropy and obtains better equalization in the maximum-entropy sense. It removes a common problem of adaptive histogram equalization, namely the over-amplification of near-uniform regions of the image, whose histograms are highly concentrated. CLAHE lets the user set a predefined clip value that limits the contrast amplification, which avoids over-amplifying the near-uniform regions inside the image.
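
The clip-limit idea can be sketched on a single tile. The code below is a simplified illustration, not a full CLAHE implementation: it clips the tile histogram, redistributes the excess uniformly, and equalizes with the resulting cumulative distribution. Complete CLAHE additionally splits the image into tiles and interpolates between neighbouring tile mappings. The function name `clipped_equalize` and the parameter values are assumptions for this example.

```python
def clipped_equalize(tile, clip_limit, levels=256):
    """Equalize one tile using a clipped histogram (the core CLAHE step)."""
    hist = [0] * levels
    for row in tile:
        for p in row:
            hist[p] += 1
    # Clip every bin at clip_limit and spread the excess over all bins.
    excess = sum(max(0, h - clip_limit) for h in hist)
    hist = [min(h, clip_limit) + excess / levels for h in hist]
    # The cumulative distribution of the clipped histogram is the mapping.
    total = sum(hist)
    mapping, running = [], 0.0
    for h in hist:
        running += h
        mapping.append(round((levels - 1) * running / total))
    return [[mapping[p] for p in row] for row in tile]

# Low-contrast tile with only two adjacent gray levels (8-level toy image).
tile = [[3, 3, 4, 4], [3, 3, 4, 4]]
enhanced = clipped_equalize(tile, clip_limit=2, levels=8)
```

A smaller `clip_limit` flattens the mapping and limits amplification, while a very large value reduces the procedure to ordinary histogram equalization of the tile.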

Image Segmentation

Segmentation follows preprocessing. It is the procedure of separating the image into several segments based on the characteristics of the image pixels. As glaucoma concerns the retinal optic nerve, the ROI for glaucoma detection focuses on the optic disc and blood vessels. The preprocessed images are given as input to the segmentation stage, which splits the image into two segments, namely the blood vessels and the optic disc. The segmented image is numerically expressed as

| 1 |

In Eq. (1), one factor denotes the blood vessel segment and the other denotes the optic disc segment of the image I. The segmentation of the image I is described as follows:

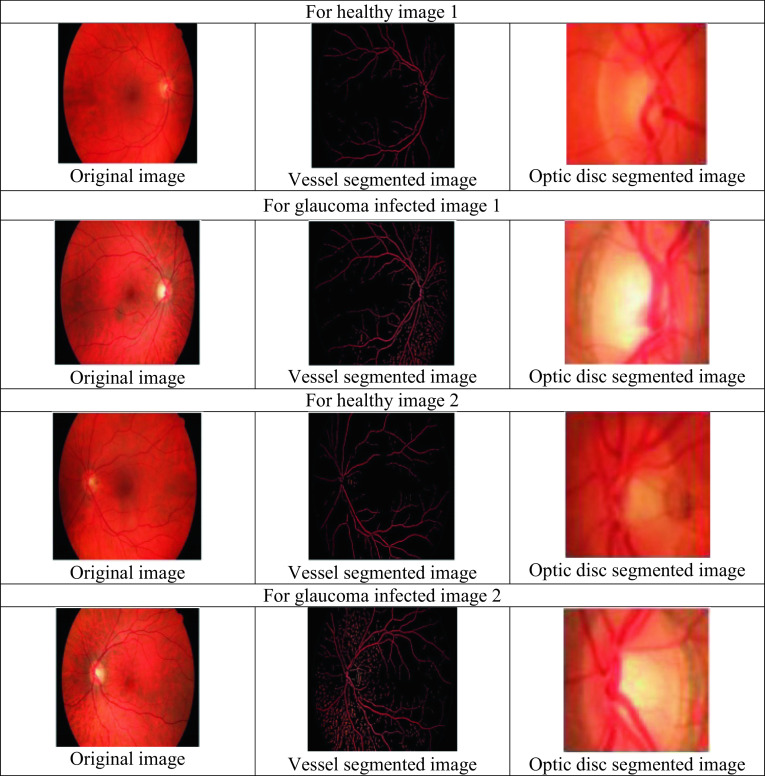

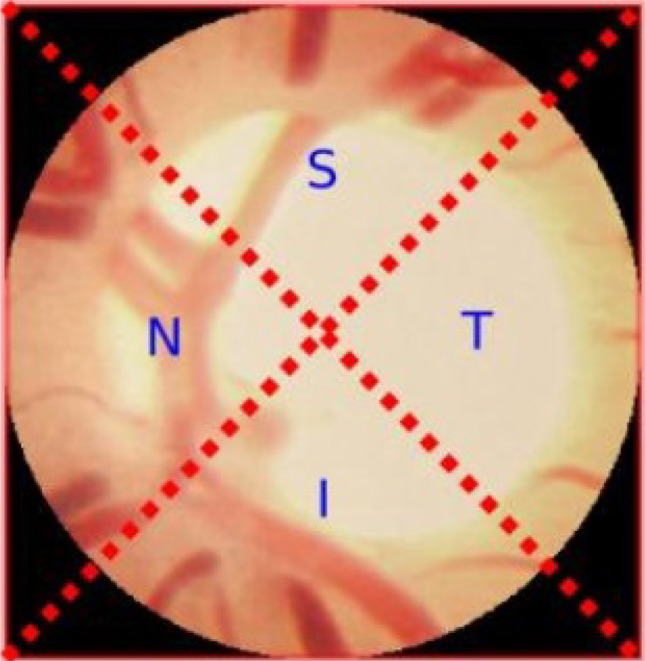

Segmentation Phase by Blood Vessels by a Thresholding Method

In the eye, the blood vessels carry oxygenated blood. However, a sudden rise in the intraocular pressure (IOP) can alter the eye's blood vessels. Blood vessel segmentation is performed on the preprocessed image, which enhances the detection rate. It is one of the most important steps because the resulting attributes are used for glaucoma identification. During segmentation, the green channel of the fundus image is used. To segment the blood vessels, the optic disc is first removed and a morphological opening is then applied. The CLAHE output is then subtracted to separate the vessels and obtain the final image. The noise is removed from the image, and the image is converted to black and white. From this, new threshold values are evaluated for separating the blood vessels [37]. Pixels below the threshold are set to 0, and pixels above the threshold are set to 1. The blood vessel segmentation comprises four regions, namely inferior (I), superior (S), nasal (N), and temporal (T), as shown in Fig. 3 [8]. The nasal region is the region in which a glaucoma-infected person has more blood vessels than a healthy person.
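
The final binarization step can be sketched as follows. This is a minimal illustration that assumes a single global threshold equal to the mean gray level; the paper derives its threshold values differently [37], and the names `mean_threshold` and `binarize` are hypothetical.

```python
def mean_threshold(image):
    """A simple global threshold: the mean gray level (an assumption)."""
    pixels = [p for row in image for p in row]
    return sum(pixels) / len(pixels)

def binarize(image, threshold):
    """Set pixels above the threshold to 1 and all other pixels to 0."""
    return [[1 if p > threshold else 0 for p in row] for row in image]

# Toy vessel-enhanced patch: a bright vertical vessel on a dark background.
patch = [
    [12, 200, 15],
    [10, 220, 14],
    [11, 210, 13],
]
vessel_mask = binarize(patch, mean_threshold(patch))
```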

Fig. 3.

ISNT quadrants of blood vessel segmentation

Segmentation Phase by Optic Disc

An adaptive threshold-based scheme is employed to segment the optic disc, and glaucoma detection is performed using the optic disc and cup. Because the retina in a fundus image has a predominantly orange background, disc segmentation is usually performed on the red channel. In the initial stage of disc segmentation, the red channel provides better results for obtaining the preliminary threshold for disc localization within the retina. In some cases, the green channel performs better than the red channel [38]. The threshold is established from the histogram equalization explained in the previous section and is applied to the green channel. The resulting image is a binary image, which is passed to morphological closing. Morphological dilation is required because the optic nerve head (ONH) is covered by blood vessels, and morphological closing is performed to remove the gaps. For the closing process, the size of the structuring element is set equal to the width of the main blood vessel on the selected optic nerve head. The main blood vessel is located at the gap in the optic disc boundary, whose largest extent corresponds to the main blood vessel width [39]. The preprocessed green channel then yields only the ONH region.

The average number of pixels regarded as belonging to the disc is evaluated according to the following expression [40].

| 2 |

In Eq. (2), d denotes the diameter of the retina converted into pixels. This average pixel amount is used to evaluate the threshold for the segmentation. If the number of pixels between two grayscale tones is greater than this amount, the larger tone is chosen as the threshold. Three conditions are used to evaluate the segmentation: the ratio of height to block width, which should match the elliptical shape of the disc; the number of pixels within the blob, which must stay below a prescribed bound; and the location of the block. The segmentation procedure is repeated iteratively, re-evaluating the threshold, until the three conditions are satisfied. The steps of optic disc segmentation are as follows. First, the image is partitioned into blocks. The bright pixels, that is, the white pixels in the specified region, are then counted for every block. Finally, the optic disc is delineated by fitting a circle around the center point, which yields the optic disc segmented image.

Feature Extraction Phase

After the segmentation phase, features are extracted from the segmented images. These features yield improved results for glaucoma detection. The cup-to-disc ratio (CDR), neuro-retinal rim (NRR), Haralick features, retinal nerve fiber layer (RNFL), and others are the features used for classification.

Cup-to-Disc Ratio

The cup-to-disc ratio is a factor used effectively in identifying glaucoma. A sudden increase in intraocular pressure causes disc cupping. For a normal disc, the CDR value is less than 0.3, and the CDR value is larger than 0.3 for a glaucoma-infected image.

| 3 |
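
The decision rule described for the CDR can be sketched as below. The sketch assumes the ratio is taken between cup and disc diameters measured in pixels (area-based definitions are also common), and the function names are hypothetical; only the 0.3 cutoff comes from the text.

```python
def cup_to_disc_ratio(cup_diameter, disc_diameter):
    """Ratio of cup diameter to disc diameter (diameter-based CDR, assumed)."""
    if disc_diameter <= 0:
        raise ValueError("disc diameter must be positive")
    return cup_diameter / disc_diameter

def exceeds_cdr_cutoff(cdr, cutoff=0.3):
    """True when the CDR is above the 0.3 cutoff quoted in the text."""
    return cdr > cutoff

normal_cdr = cup_to_disc_ratio(30, 120)    # small cup relative to the disc
enlarged_cdr = cup_to_disc_ratio(60, 120)  # enlarged cup
```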

Neuro-Retinal Rim

It is defined as the region between the optic cup edge and the optic disc edge. In temporal regions, the proportion of the area is absorbed by NRR, and the NRR is engaged in the inferior as well as the superior regions because the nasal regions become thicker than all the other regions.

Retinal Nerve Fiber Layer

It is formed by the expansion of the optic nerve fibers and is the thickest layer near the optic disc. In a normal eye, the RNFL is most frequently observed in the inferior temporal region, followed by the superior temporal, inferior nasal, and temporal nasal regions.

ISNT Features

This feature is the ratio of the blood vessel area in the inferior and superior regions to the blood vessel area in the temporal and nasal regions of the segmented optic disc image.

| 4 |

In Eq. (4), the four terms represent the temporal, nasal, superior, and inferior regions of the segmented optic disc image.
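
A minimal sketch of this feature, assuming Eq. (4) is the summed vessel area of the inferior and superior regions divided by the summed vessel area of the nasal and temporal regions, as the description suggests. The function name and the example areas (in pixels) are hypothetical.

```python
def isnt_ratio(inferior, superior, nasal, temporal):
    """Vessel area in (I + S) divided by vessel area in (N + T)."""
    denominator = nasal + temporal
    if denominator <= 0:
        raise ValueError("nasal + temporal vessel area must be positive")
    return (inferior + superior) / denominator

# Example vessel areas counted in each quadrant of a segmented disc image.
ratio = isnt_ratio(inferior=40, superior=35, nasal=15, temporal=10)
```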

Mean

The mean is used to determine the average number of white pixels. The mean of the input fundus image is given by the following equation.

| 5 |

where the summation index represents the pixel position in the image and the normalizing term denotes the total number of pixels in the image.

Variance

Variance captures the image contrast information, and it depends on the mean value. It is represented as follows:

| 6 |

In Eq. (6), V indicates the variance, M indicates the total number of intensity levels in the image, and the remaining term is the histogram probability of each intensity level.
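
The mean and the histogram-based variance can be sketched as follows, assuming the usual definitions: the mean is the probability-weighted average gray level, and the variance is the probability-weighted squared deviation from that mean. The function names and the toy image are assumptions for illustration.

```python
def histogram_probabilities(image, levels=256):
    """Fraction of pixels at each gray level (normalized histogram)."""
    pixels = [p for row in image for p in row]
    counts = [0] * levels
    for p in pixels:
        counts[p] += 1
    return [count / len(pixels) for count in counts]

def mean_and_variance(image, levels=256):
    """Mean gray level and variance computed from the histogram."""
    probs = histogram_probabilities(image, levels)
    mean = sum(level * p for level, p in enumerate(probs))
    variance = sum((level - mean) ** 2 * p for level, p in enumerate(probs))
    return mean, variance

mean_value, variance_value = mean_and_variance([[0, 2], [2, 4]], levels=5)
```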

Textural Features

Textural features are used extensively in medical image analysis because they provide useful information about the characteristics of a particular object or ROI inside the image. The GLCM is the most frequently employed scheme for evaluating texture features. It describes the texture of an image by recording how often particular pixel pairs occur in the image; it therefore captures information about the pixel arrangement in that image. Various textural features are computed for the optic disc, such as energy, contrast, homogeneity, entropy, correlation, dissimilarity, cluster prominence, cluster shade, information correlation measure, and difference variance [41]. Difference variance and contrast are used to measure the contrast of the image, whereas the cluster features imitate human perception. Moreover, the energy, homogeneity, correlation, entropy, and information correlation measures are employed to characterize the homogeneity of the image. The GLCM is denoted g(x, y), where x and y are the row and column indices of the matrix. The textural features are evaluated as per the equations below.

| 7 |

| 8 |

| 9 |

| 10 |

| 11 |

| 12 |

| 13 |

| 14 |

| 15 |

where the four statistics are the means and the standard deviations of the rows and columns of g(x, y), and the marginal terms are the xth and yth entries of the marginal probability matrices of g(x, y), with

| 16 |

| 17 |

| 18 |
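
To make the GLCM-based features concrete, the following sketch builds a normalized co-occurrence matrix for one pixel offset and computes four of the listed features using their common textbook definitions. These definitions (for example, homogeneity with a squared difference in the denominator) and all function names are assumptions and may differ in detail from the equations used in the paper.

```python
import math

def glcm(image, levels, dx=1, dy=0):
    """Normalized gray-level co-occurrence matrix g(x, y) for offset (dx, dy)."""
    counts = [[0] * levels for _ in range(levels)]
    height, width = len(image), len(image[0])
    for r in range(height):
        for c in range(width):
            rr, cc = r + dy, c + dx
            if 0 <= rr < height and 0 <= cc < width:
                counts[image[r][c]][image[rr][cc]] += 1
    total = sum(map(sum, counts))
    return [[value / total for value in row] for row in counts]

def texture_features(g):
    """Energy, contrast, homogeneity, and entropy of a normalized GLCM."""
    n = len(g)
    cells = [(x, y, g[x][y]) for x in range(n) for y in range(n)]
    return {
        "energy": sum(p * p for _, _, p in cells),
        "contrast": sum((x - y) ** 2 * p for x, y, p in cells),
        "homogeneity": sum(p / (1 + (x - y) ** 2) for x, y, p in cells),
        "entropy": -sum(p * math.log2(p) for _, _, p in cells if p > 0),
    }

# Toy 3-level image; horizontally adjacent pixel pairs are counted.
toy = [[0, 0, 1], [0, 0, 1], [2, 2, 2]]
features = texture_features(glcm(toy, levels=3))
```

In practice several offsets (for example 0°, 45°, 90°, and 135°) are often averaged to reduce the dependence on direction; the single horizontal offset here keeps the example short.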

Statistical Features

Several statistical features are extracted, such as energy, mean, skewness, variance, and kurtosis. The skewness and mean describe the overall intensity of the identified optic disc area, the kurtosis and variance describe the illumination variation, and the energy describes the information present in the optic disc region [26, 42].

Consider a random variable that takes the diagonal values of the central slice of the bispectrum, the probability of occurrence of each value, and the number of distinct values that the random variable takes. With these, the statistical features are represented as

| 19 |

The value of the mean provides the average gray level of every area, and it is represented as

| 20 |

The variance measures the gray level fluctuations about the mean, and it is described as

| 21 |

Skewness measures the asymmetry of the distribution about the sample mean. A negative value indicates that the data are spread more toward the left than the right, and a positive value indicates the opposite. It is evaluated as

| 22 |

Kurtosis is utilized to calculate the distribution tailedness relative to the normal distribution, and it is described as follows:

| 23 |
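
The four moment-based features can be sketched for a discrete distribution as follows. The sketch assumes the standard standardized-moment definitions of skewness and kurtosis (kurtosis of a normal distribution equals 3 under this convention); the function name and the example distribution are hypothetical.

```python
def distribution_moments(values, probs):
    """Mean, variance, skewness, and kurtosis of a discrete distribution."""
    mean = sum(v * p for v, p in zip(values, probs))
    variance = sum((v - mean) ** 2 * p for v, p in zip(values, probs))
    if variance == 0:
        raise ValueError("skewness and kurtosis are undefined for zero variance")
    std = variance ** 0.5
    skewness = sum((v - mean) ** 3 * p for v, p in zip(values, probs)) / std ** 3
    kurtosis = sum((v - mean) ** 4 * p for v, p in zip(values, probs)) / std ** 4
    return mean, variance, skewness, kurtosis

# Symmetric example distribution, so the skewness is zero.
mean_v, var_v, skew_v, kurt_v = distribution_moments([0, 1, 2], [0.25, 0.5, 0.25])
```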

Image Classification

After the feature extraction process, the selected features are fed as input to the classification phase [43]. Classification has two stages, namely a training stage and a testing stage. The training stage uses 80% of the images, and the remaining 20% are used for the testing stage. Here, a hybridized deep neural network is used for classifying the images, and the weight values of the deep neural network are selected using the FGSO [44]. A deep neural network is a kind of artificial neural network that comprises several hidden layers as well as an output layer. Furthermore, learning includes a pre-training stage using the deep belief network (DBN) and a fine-tuning stage. The weight values are optimized by FGSO in the fine-tuning process.
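
The 80/20 partition can be sketched as below. The shuffle with a fixed seed is an assumption made for reproducibility; the paper does not state how the images are assigned to the two sets, and the function name is hypothetical.

```python
import random

def split_dataset(samples, train_fraction=0.8, seed=0):
    """Shuffle the samples and split them into training and testing sets."""
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)
    cut = int(round(train_fraction * len(shuffled)))
    return shuffled[:cut], shuffled[cut:]

# 100 image identifiers stand in for the feature vectors.
train_set, test_set = split_dataset(range(100))
```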

Deep Belief Networks

The feed-forward neural network is the main element of the deep belief network, and the network consists of several hidden layers. The input layer forms the visible units, and N represents the total number of hidden layers together with the output layer. The weights between the units of layer i − 1 and layer i are denoted wi, and the biases of layer i are denoted bi.

Pre-training stage

Parameter initialization is one of the major problems in deep neural network training, because random initialization tends to lead to poor local minima. To address this training issue, Hinton [45] employed the restricted Boltzmann machine (RBM). An RBM is a recurrent neural network that comprises two layers whose weighted links connect binary units. The first layer of the RBM receives the inputs as visible units, represented as b, and the remaining layer consists of hidden units, represented as c. After the RBM is trained, the hidden units act as feature detectors and provide a compact representation of the input vector. The energy function is given by the equation below.

| 24 |

Here, the bias vectors of the visible and hidden layers are represented as x and y, and the weight is represented as w. The conditional distributions are represented as follows:

| 25 |

| 26 |

The logistic function takes values in the interval (0, 1).

Initially, the training process is unsupervised and disregards the class label. The RBM learns a representation of the input vector and reconstructs the same input data from it; its aim is to model the distribution through the states of the hidden units. This process is repeated, and the parameters are updated as follows:

| 27 |

| 28 |

| 29 |
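
For orientation, the standard textbook forms of the RBM energy, conditional distributions, and contrastive-divergence updates are given below in the notation introduced above (visible units b, hidden units c, bias vectors x and y, weights w). This is a sketch under stated assumptions: the learning rate ε and the angle-bracket averages over the data and over the reconstruction are introduced here for illustration, and the expressions may differ in detail from Eqs. (24) to (29).

```latex
\begin{align*}
E(b, c) &= -x^{\top} b - y^{\top} c - b^{\top} w\, c \\
P(c_j = 1 \mid b) &= \sigma\Big(y_j + \sum_i w_{ij}\, b_i\Big), \qquad
P(b_i = 1 \mid c) = \sigma\Big(x_i + \sum_j w_{ij}\, c_j\Big) \\
\sigma(z) &= \frac{1}{1 + e^{-z}} \\
\Delta w_{ij} &= \varepsilon \big(\langle b_i c_j \rangle_{\text{data}} - \langle b_i c_j \rangle_{\text{recon}}\big) \\
\Delta x_i &= \varepsilon \big(\langle b_i \rangle_{\text{data}} - \langle b_i \rangle_{\text{recon}}\big), \qquad
\Delta y_j = \varepsilon \big(\langle c_j \rangle_{\text{data}} - \langle c_j \rangle_{\text{recon}}\big)
\end{align*}
```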

DNN pre-training schemes

- First, the visible units b are initialized with a training vector.

- Next, the visible units are updated in parallel as per Eq. (24).

- Based on the reconstruction obtained with the same equation used in step 2, the hidden units are updated again in parallel.

- Finally, the weights are updated.
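
The steps above resemble one step of contrastive divergence (CD-1). The sketch below follows the standard CD-1 order (clamp the visible units, compute and sample the hidden units, reconstruct the visible units, recompute the hidden units, update the parameters), which is an assumption and may not match the paper's procedure exactly. The learning rate, the sampling with a seeded generator, and all function names are hypothetical.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def hidden_probs(b, w, y):
    """P(c_j = 1 | b) for every hidden unit j."""
    return [sigmoid(y[j] + sum(w[i][j] * b[i] for i in range(len(b))))
            for j in range(len(y))]

def visible_probs(c, w, x):
    """P(b_i = 1 | c) for every visible unit i."""
    return [sigmoid(x[i] + sum(w[i][j] * c[j] for j in range(len(c))))
            for i in range(len(x))]

def cd1_step(b0, w, x, y, rate=0.1, rng=None):
    """One CD-1 update of weights w and biases x, y (modified in place)."""
    rng = rng or random.Random(0)
    h0 = hidden_probs(b0, w, y)                     # hidden units given the data
    c0 = [1 if rng.random() < p else 0 for p in h0] # sampled binary hidden states
    b1 = visible_probs(c0, w, x)                    # reconstruction of the input
    h1 = hidden_probs(b1, w, y)                     # hidden units given the reconstruction
    for i in range(len(x)):
        for j in range(len(y)):
            w[i][j] += rate * (b0[i] * h0[j] - b1[i] * h1[j])
    for i in range(len(x)):
        x[i] += rate * (b0[i] - b1[i])
    for j in range(len(y)):
        y[j] += rate * (h0[j] - h1[j])
    return w, x, y

# Tiny RBM: 3 visible units, 2 hidden units, all parameters start at zero.
weights = [[0.0, 0.0] for _ in range(3)]
visible_bias, hidden_bias = [0.0] * 3, [0.0] * 2
cd1_step([1, 0, 1], weights, visible_bias, hidden_bias)
```

After one step, the weights attached to active visible units increase and those attached to inactive visible units decrease, which moves the reconstruction toward the training vector.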

Once an RBM has been trained, another RBM is stacked on top of it to build a multilayer model. The units of the previously trained RBM layers, with their current weights and biases, provide the initial vector for the new RBM. The input of the new RBM thus comes from the previously trained layers, and this process continues until the desired termination criterion is satisfied. Here, the network has 253 input units, three hidden layers, and one output layer. The fine-tuning stage then starts from the weights obtained for the deep neural network.

Fine-Tuning Process

In the fine-tuning stage, the FGSO algorithm adjusts the weight values so that the error is minimized. Initially, the weight values are initialized randomly. The solution representation is a significant design choice in any optimization algorithm. Next, the fitness of every solution is computed. The classification accuracy is regarded as the fitness function, and its value is obtained from Eq. (31). The fitness values and the FGSO solutions are then updated according to the update procedure given in the steps that follow. An output layer placed on top of the deep neural network classifies the images. The training dataset Td is used to optimize the weights: first, the features are provided to the DNN, and then the weights are supplied. The database Td is then tested to classify the images using the best weight (w). In general, the reduced features are provided to the DNN while the weights are modified randomly, and the proposed approach employs the FGSO algorithm to select the optimal weights. The procedure is described below.
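Since the fitness is described as classification accuracy, one way to score a candidate weight vector is sketched below. The exact form of Eq. (31) is not reproduced here; `threshold_predict` is a hypothetical stand-in for the DNN forward pass, and all names are illustrative.

```python
def threshold_predict(weights, features):
    """Hypothetical stand-in classifier: 1 (glaucoma) if the weighted sum is positive, else 0."""
    score = sum(w * f for w, f in zip(weights, features))
    return 1 if score > 0 else 0


def accuracy_fitness(weights, samples, labels, predict=threshold_predict):
    """Fraction of training samples that the given weight vector classifies correctly."""
    if not labels:
        raise ValueError("at least one labelled sample is required")
    correct = sum(1 for s, t in zip(samples, labels) if predict(weights, s) == t)
    return correct / len(labels)
```

An optimizer that maximizes `accuracy_fitness` over the weight vector is then equivalent to one that minimizes the training error rate.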


Fractional gravitational search optimization algorithm

The FGSO algorithm integrates the GSA [46] with fractional calculus theory [47]. This search algorithm is based mainly on the law of gravity. The convergence problem of the GSA is avoided by adapting fractional calculus theory to enlarge the search space. The proposed fractional gravitational search algorithm is described in the following steps.

In the FGSO algorithm, the data entities are regarded as agents, and their masses are used to measure their performance. The location of an agent represents a solution to the problem. The steps of the FGSO are as follows.

Step 1: Initialization stage. Initially, the agents and their locations are generated randomly. The location of the qth object, for J objects, is described as follows:

(30)

Step 2: Fitness evaluation stage. The fitness of every agent in the search space is evaluated using Eq. (31). The fitness for the locations of two agents is described in this stage. First, the distance of each individual object from the center point is computed, and then the optimal center point is determined. The fitness function of the FGSO algorithm is formulated as follows:

(31)

Step 3: Inertial mass computation. The higher the inertial mass of an agent, the stronger its attraction. The optimal solution is obtained from the agent with the higher mass. The inertial mass of an agent is computed using the following equation:

(32)

The value used in Eq. (32) is described as

(33)

The best and the worst fitness values are given as

(34) (35) (36)

Step 4: Gravitational force stage. The gravitational force between entity q and another entity is computed by Eq. (37):

(37)

The terms of Eq. (37) are a small constant, the active (dynamic) gravitational mass associated with one entity, the passive (reactive) gravitational mass associated with the other agent, and the gravitational constant at the given time.

Step 5: Velocity and acceleration computation. The velocity of an agent is computed by adding its acceleration to a fraction of its present velocity, and it is formulated as

(38)

Eq. (38) includes the agent acceleration at time t. The acceleration depends on the inertial mass, and a larger mass corresponds to a better solution. The acceleration is computed using the following equation:

(39)

Step 6: Fractional location update. The location of an agent at the next iteration is calculated by adapting fractional theory within the GSA algorithm. The agent location is updated using Eq. (40). If the fitness value of the agent at the next iteration is identical to that of the earlier iterations, the fractional calculus takes over the update.

(40)

The fractional calculus is then applied with an order that is a constant, normalized to a real number between 0 and 1.

(41)

The left-hand side of Eq. (41) is rearranged as

(42)

Equation (43) represents the updated fractional agent location.

Step 7: End stage. The location update process is repeated until the best solution is achieved.
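The seven steps can be sketched as follows. This is a hedged illustration, not the authors' implementation: the mass, force, and velocity rules follow the standard GSA of Rashedi et al. [46], the fractional location update uses a truncated Grünwald-Letnikov expansion in the spirit of [47], and the update is applied at every iteration rather than only when the fitness stagnates. All parameter names and defaults (`alpha`, `g0`, `decay`, `eps`, `bounds`) are assumptions.

```python
import math
import random


def gl_coefficients(alpha, order):
    """Grunwald-Letnikov coefficients c_1..c_order for a derivative of order alpha."""
    coeffs, c = [], 1.0
    for k in range(1, order + 1):
        c *= (k - 1 - alpha) / k
        coeffs.append(c)
    return coeffs


def fgso_maximize(fitness, dim, n_agents=10, iters=50, alpha=0.5,
                  g0=1.0, decay=5.0, eps=1e-9, bounds=(-1.0, 1.0), seed=0):
    """Return the best location evaluated and its fitness (higher is better)."""
    rng = random.Random(seed)
    lo, hi = bounds
    coeffs = gl_coefficients(alpha, 4)
    # Step 1: random initial locations, zero velocities.
    pos = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(n_agents)]
    vel = [[0.0] * dim for _ in range(n_agents)]
    past = [[p[:]] for p in pos]  # per agent: recent locations, newest first
    best_pos, best_fit = None, -math.inf
    for t in range(iters):
        # Step 2: evaluate fitness and remember the best location seen so far.
        fit = [fitness(p) for p in pos]
        for p, f in zip(pos, fit):
            if f > best_fit:
                best_pos, best_fit = p[:], f
        # Step 3: inertial masses, normalised so that they sum to one.
        worst, best = min(fit), max(fit)
        raw = [(f - worst) / (best - worst) if best > worst else 1.0 for f in fit]
        total = sum(raw)
        mass = [m / total for m in raw]
        g = g0 * math.exp(-decay * t / iters)  # gravitational constant decays over time
        new_pos = []
        for q in range(n_agents):
            # Step 4: randomly weighted pull of the other agents; the agent's own
            # mass cancels when the total force is divided by its inertial mass.
            acc = [0.0] * dim
            for j in range(n_agents):
                if j == q:
                    continue
                dist = math.sqrt(sum((a - b) ** 2 for a, b in zip(pos[q], pos[j])))
                pull = rng.random() * g * mass[j] / (dist + eps)
                for d in range(dim):
                    acc[d] += pull * (pos[j][d] - pos[q][d])
            # Step 5: velocity = random fraction of the current velocity + acceleration.
            vel[q] = [rng.random() * v + a for v, a in zip(vel[q], acc)]
            # Step 6: fractional location update from the stored past locations.
            nxt = []
            for d in range(dim):
                memory = sum(c * prev[d] for c, prev in zip(coeffs, past[q]))
                nxt.append(min(hi, max(lo, vel[q][d] - memory)))
            new_pos.append(nxt)
        for q in range(n_agents):
            past[q] = ([new_pos[q][:]] + past[q])[:len(coeffs)]
        pos = new_pos
    # Step 7: stop after a fixed number of iterations.
    return best_pos, best_fit
```

With `alpha = 1.0` the coefficients reduce to `[-1, 0, 0, 0]`, so the location update becomes the classical GSA rule (new location = current location + velocity); values of `alpha` below 1 bring in a memory of earlier locations.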


Results and Discussions

This section describes early glaucoma detection using retinal blood vessel segmentation, implemented in MATLAB 2014 on a system with an i5 processor and 4 GB of RAM. The analysis computes factors such as pressure drop, stress, blood velocity, and strain for the arteries as well as the vascular networks. Additionally, the proposed approach for classifying fundus and OCT images is evaluated using accuracy, specificity, positive predictive value, and sensitivity.

Database

Fundus images are extensively employed for the primary assessment of ophthalmic abnormalities. OCT is an imaging method that enables the quantitative examination of the retinal layers [49]. OCT images are used to examine morphological alterations in the retinal layers and thus offer a detailed picture of ocular disease. The database consists of glaucoma and control fundus images as well as OCT images. It was obtained from 27 subjects scanned on a TOPCON 3D OCT system. It contains eye data for 24 subjects and one-eye data for 3 subjects. In total, the data comprise 50 fundus and OCT images, 22 from control subjects and 28 from glaucoma-infected patients.

Performance Measures

Specificity, sensitivity, and accuracy are the metrics used to measure how successfully the classifiers separate normal images from glaucoma-affected images. To define these metrics, the following quantities are required.

True positive (TP): the number of glaucoma images that are correctly classified.

True negative (TN): the number of normal images that are correctly classified.

False positive (FP): the number of normal images that are wrongly classified as glaucoma-infected.

False negative (FN): the number of glaucoma images that are wrongly classified as normal.

- Specificity: the probability of a true negative result, which indicates how many non-vessel elements are identified correctly.

$$\text{Specificity} = \frac{TN}{TN + FP} \tag{44}$$

- Sensitivity: the probability of a true positive result, which describes how much of the infected image region is identified correctly.

$$\text{Sensitivity} = \frac{TP}{TP + FN} \tag{45}$$

- Accuracy: the proportion of images that are correctly categorized.

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \tag{46}$$

Here TP, TN, FP, and FN denote the numbers of true positives, true negatives, false positives, and false negatives.

(47) (48) (49) (50)
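The measures above can be computed directly from the four confusion-matrix counts. The sketch below uses the standard definitions; the positive and negative predictive values are included because they are reported later in Table 2, and the example counts are hypothetical, not results from this study.

```python
def classification_metrics(tp, tn, fp, fn):
    """Confusion-matrix metrics as fractions in [0, 1].

    Raises ZeroDivisionError if a denominator is zero (for example, no positive cases).
    """
    return {
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
        "accuracy": (tp + tn) / (tp + tn + fp + fn),
        "ppv": tp / (tp + fp),
        "npv": tn / (tn + fn),
    }


# Hypothetical counts for illustration only (28 positive and 22 negative cases).
example = classification_metrics(tp=27, tn=21, fp=1, fn=1)
```

Multiplying each value by 100 gives the percentages used in the tables of this section.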

Experimental Evaluations

This section discusses the glaucoma detection results. The proposed FGSA-HDNN approach extracts several features from the outputs of the pre-processing, optic disc segmentation, and blood vessel segmentation stages. The output images obtained from the proposed glaucoma recognition system, including those for a healthy image, are presented. It is observed that the scheme distinguishes between normal and glaucoma-affected images. The differences in blood vessel segmentation and optic disc segmentation between normal and glaucoma-affected images are shown in Fig. 4.

Fig. 4.

Experimental results for the glaucoma detection

The proposed optic disc segmentation method was applied to the TOPCON 3D OCT dataset images, and the segmentation results were compared with the corresponding images provided by dedicated ophthalmologists. The measures achieved by the optic disc segmentation for the image sets are given in Table 2. The performance of the proposed approach is computed by evaluating the segmentation results against the ground truth images.

Table 2.

Results of optic disc segmentation

| Image | Accuracy | Sensitivity | Specificity | PPV | NPV | FPR | NPR |
|---|---|---|---|---|---|---|---|
| 1 | 98.74 | 72.54 | 98.47 | 84.12 | 99.27 | 0.29 | 21.57 |
| 2 | 98.98 | 83.67 | 99.36 | 88.46 | 99.48 | 0.48 | 22.86 |
| 3 | 99.42 | 47.62 | 98.86 | 90.32 | 98.35 | 0.19 | 32.48 |
| 4 | 99.78 | 68.48 | 99.12 | 84.84 | 96.31 | 0.16 | 49.62 |
| 5 | 99.56 | 72.62 | 99.48 | 88.48 | 99.29 | 0.75 | 38.14 |
| 6 | 99.38 | 82.84 | 99.74 | 94.27 | 99.17 | 0.58 | 28.67 |
| 7 | 99.24 | 64.82 | 99.08 | 96.48 | 99.83 | 0.32 | 18.32 |
| 8 | 99.74 | 74.21 | 99.84 | 92.84 | 99.08 | 0.41 | 16.85 |
| Average | 99.4 | 70.85 | 99.24 | 89.98 | 98.85 | 0.3975 | 28.56 |
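The Average row of Table 2 can be checked by taking the mean of each column over the eight images. The sketch below copies the per-image values from the table; the dictionary layout and function name are illustrative.

```python
# Per-image values copied from Table 2 (all in percent).
TABLE_2 = {
    "Accuracy":    [98.74, 98.98, 99.42, 99.78, 99.56, 99.38, 99.24, 99.74],
    "Sensitivity": [72.54, 83.67, 47.62, 68.48, 72.62, 82.84, 64.82, 74.21],
    "Specificity": [98.47, 99.36, 98.86, 99.12, 99.48, 99.74, 99.08, 99.84],
    "PPV":         [84.12, 88.46, 90.32, 84.84, 88.48, 94.27, 96.48, 92.84],
    "NPV":         [99.27, 99.48, 98.35, 96.31, 99.29, 99.17, 99.83, 99.08],
    "FPR":         [0.29, 0.48, 0.19, 0.16, 0.75, 0.58, 0.32, 0.41],
    "NPR":         [21.57, 22.86, 32.48, 49.62, 38.14, 28.67, 18.32, 16.85],
}


def column_means(table):
    """Arithmetic mean of every column."""
    return {name: sum(values) / len(values) for name, values in table.items()}


means = column_means(TABLE_2)
```

The column means agree with the Average row; for example, the mean of the Accuracy column is 99.355, which corresponds to the 99.4 shown in the table at one decimal place.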

On average, the proposed approach for optic disc segmentation gives 99.4% accuracy, 70.85% sensitivity, and 99.24% specificity (Table 2). Table 3 compares the performance of the proposed optic disc segmentation with the methods of Prakash and Selvathi [50], Almazroa et al. [33], Yu et al. [5], and Thakur and Juneja [51]. The proposed optic disc segmentation method obtains an accuracy of 99.4%, whereas the traditional methods obtain average accuracies of 99.3% (Prakash and Selvathi [50]), 83.9% (Almazroa et al. [33]), 94.8% (Yu et al. [5]), and 95.34% (Thakur and Juneja [51]). The proposed approach performs better because it identifies and segments the inner and outer boundaries of the optic disc region more precisely than the other traditional methods for optic disc segmentation.

Table 3.

Comparative analysis for the optic disc segmentation results

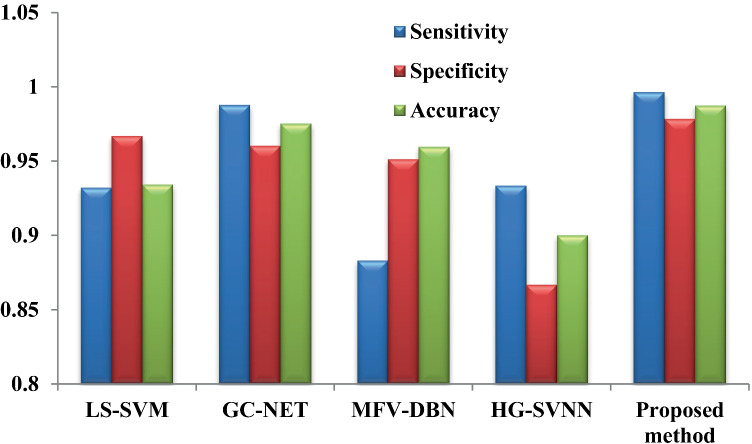

The specificity, sensitivity, and accuracy of various state-of-the-art methods are compared with those of the proposed hybrid deep neural network with fractional gravitational search algorithm (FGSA-HDNN) for glaucoma detection. The comparison between the proposed method and the other methods is given in Table 4.

Table 4.

Comparison of the evaluation metrics for various classifiers

| Method | Sensitivity | Specificity | Accuracy |
|---|---|---|---|
| LS-SVM | 0.932 | 0.9667 | 0.9340 |
| GC-NET | 0.9878 | 0.9602 | 0.9751 |
| MFV-DBN | 0.883 | 0.9512 | 0.9595 |
| HG-SVNN | 0.9333 | 0.8667 | 0.9 |
| FGSA-HDNN | 0.9964 | 0.9784 | 0.9875 |

The sensitivity, specificity, and accuracy of the proposed FGSA-HDNN are compared with those of other state-of-the-art methods, namely LS-SVM [7], GC-NET [52], MFV-DBN [53], and HG-SVNN [54]. The comparison of these metrics is illustrated in Fig. 5.

Fig. 5.

Comparative analysis of metrics with other state-of-the-art methods

Conclusion

The severity of vision loss due to glaucoma is reduced when the disease is detected at an early stage. In this paper, the glaucoma detection scheme is based on a proposed novel classifier that detects glaucoma images more accurately. The optic disc and the blood vessels in the input fundus images are segmented, and the segmentation output is used for feature extraction and then for classification. The proposed classifier considers statistical, textural, and vessel-extracted features; hence, the classifier performance is enhanced. The image classification relies on the proposed FGSO-based HDNN classifier. In the experimental analysis, the proposed method is compared with a few existing methods, namely LS-SVM, GC-NET, MFV-DBN, and HG-SVNN. The results show that the proposed method obtains improved performance, with values of 99.64%, 97.84%, and 98.75% for sensitivity, specificity, and accuracy, respectively. In the future, more hybrid features such as color and neuro-retinal rim shape and size will be considered for detecting suspected glaucoma. In addition, the scalability of the proposed approach will be extended to handle large datasets.

Data Availability

Data sharing does not apply to this article as no new data were created or analyzed in this study.

Declarations

Human and Animal Rights

This article does not contain any studies with human or animal subjects performed by any of the authors.

Informed Consent

Informed consent was obtained from all individual participants included in the study.

Conflict of Interest

The authors declare competing interests.

Footnotes

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1. Shoba SG, Therese AB. (2020 Sep 1) Detection of glaucoma disease in fundus images based on morphological operation and finite element method. Biomedical Signal Processing and Control, 62: 101986.

- 2. Bisneto TR, de Carvalho Filho AO, Magalhães DM. (2020 May 1) Generative adversarial network and texture features applied to automatic glaucoma detection. Applied Soft Computing, 90: 106165.

- 3. Pruthi J, Khanna K, Arora S. (2020 Jul 1) Optic Cup segmentation from retinal fundus images using Glowworm Swarm Optimization for glaucoma detection. Biomedical Signal Processing and Control, 60: 102004.

- 4. Martins J, Cardoso JS, Soares F. (2020 Aug 1) Offline computer-aided diagnosis for Glaucoma detection using fundus images targeted at mobile devices. Computer Methods and Programs in Biomedicine, 192: 105341.

- 5. Yu S, Xiao D, Frost S, Kanagasingam Y. Robust optic disc and cup segmentation with deep learning for glaucoma detection. Computerized Medical Imaging and Graphics. 2019;74:61–71. doi: 10.1016/j.compmedimag.2019.02.005.

- 6. Kumar BN, Chauhan RP, Dahiya N. (2016 Jan 23) Detection of Glaucoma using image processing techniques: A review. In 2016 International Conference on Microelectronics, Computing and Communications (MicroCom), (pp. 1–6). IEEE.

- 7. Parashar D, Agrawal DK. (2020 Jun 12) Automated Classification of Glaucoma Stages Using Flexible Analytic Wavelet Transform From Retinal Fundus Images. IEEE Sensors Journal.

- 8. Carrillo J, Bautista L, Villamizar J, Rueda J, Sanchez M. (2019 Apr 24) Glaucoma detection using fundus images of the eye. In 2019 XXII Symposium on Image, Signal Processing and Artificial Vision (STSIVA), (pp. 1–4). IEEE.

- 9. Gowthul Alam MM, Baulkani S. Local and global characteristics-based kernel hybridization to increase optimal support vector machine performance for stock market prediction. Knowl Inf Syst. 2019;60(2):971–1000. doi: 10.1007/s10115-018-1263-1.

- 10. Gowthul Alam MM, Baulkani S. Geometric structure information based multi-objective function to increase fuzzy clustering performance with artificial and real-life data. Soft Comput. 2019;23(4):1079–1098. doi: 10.1007/s00500-018-3124-y.

- 11. Sundararaj V, Muthukumar S, Kumar RS. An optimal cluster formation based energy efficient dynamic scheduling hybrid MAC protocol for heavy traffic load in wireless sensor networks. Computers & Security. 2018;77:277–288. doi: 10.1016/j.cose.2018.04.009.

- 12. Sundararaj V. An efficient threshold prediction scheme for wavelet based ECG signal noise reduction using variable step size firefly algorithm. International Journal of Intelligent Engineering and Systems. 2016;9(3):117–126. doi: 10.22266/ijies2016.0930.12.

- 13. Sundararaj V. Optimised denoising scheme via opposition-based self-adaptive learning PSO algorithm for wavelet-based ECG signal noise reduction. International Journal of Biomedical Engineering and Technology. 2019;31(4):325. doi: 10.1504/IJBET.2019.103242.

- 14. Sundararaj V, Anoop V, Dixit P, Arjaria A, Chourasia U, Bhambri P, Rejeesh MR, Sundararaj Regu. CCGPA-MPPT: Cauchy preferential crossover-based global pollination algorithm for MPPT in photovoltaic system. Progress in Photovoltaics: Research and Applications. 2020;28(11):1128–1145. doi: 10.1002/pip.3315.

- 15. George YM, Antony B, Ishikawa H, Wollstein G, Schuman J, Garnavi R. (2020 Jun 9) Attention-guided 3D-CNN Framework for Glaucoma Detection and Structural-Functional Association using Volumetric Images. IEEE Journal of Biomedical and Health Informatics.

- 16. Bautista L, Villamizar J, Calderón G, Rueda JC, Castillo J. (2019 Oct 16) Mimetic Finite Difference Methods for Restoration of Fundus Images for Automatic Glaucoma Detection. In ECCOMAS Thematic Conference on Computational Vision and Medical Image Processing, (pp. 104–113). Springer, Cham.

- 17. Almazroa A, Sun W, Alodhayb S, Raahemifar K, Lakshminarayanan V. Optic disc segmentation for glaucoma screening system using fundus images. Clinical Ophthalmology (Auckland, NZ), 2017; pp. 11.

- 18. Lakshmanaprabu SK, Mohanty SN, Sheeba R, Krishnamoorthy S, Uthayakumar J, Shankar K. (2019) Online clinical decision support system using optimal deep neural networks. Applied Soft Computing, 81: 105487. doi: 10.1016/j.asoc.2019.105487.

- 19. Rahouma KH, Mohamed MM, Hameed NS. (2019 Sep) Glaucoma Detection and Classification Based on Image Processing and Artificial Neural Networks. Egyptian Computer Science Journal, 43(3).

- 20. Chan YM, Ng EY, Jahmunah V, Koh JE, Lih OS, Leon LY, Acharya UR. (2019 Dec 1) Automated detection of glaucoma using optical coherence tomography angiogram images. Computers in Biology and Medicine, 115: 103483.

- 21. Rim-One-Medical Image Analysis Group. Available at http://medimrg.webs.ull.es/, accessed 20 April 2017.

- 22. Li L, Xu M, Liu H, Li Y, Wang X, Jiang L, Wang Z, Fan X, Wang N. A Large-Scale Database and a CNN Model for Attention-Based Glaucoma Detection. IEEE Transactions on Medical Imaging. 2019;39(2):413–424. doi: 10.1109/TMI.2019.2927226.

- 23. Kirar BS, Agrawal DK, Kirar S. (2020 Jul 28) Glaucoma Detection Using Image Channels and Discrete Wavelet Transform. IETE Journal of Research, (pp. 1–8).

- 24. Agrawal DK, Kirar BS, Pachori RB. Automated glaucoma detection using quasi-bivariate variational mode decomposition from fundus images. IET Image Processing. 2019;13(13):2401–2408. doi: 10.1049/iet-ipr.2019.0036.

- 25. Dey A, Bandyopadhyay SK. (2016) Automated glaucoma detection using support vector machine classification method. Journal of Advances in Medicine and Medical Research, (pp. 1–2).

- 26. Abdel-Hamid L. Glaucoma detection from retinal images using statistical and textural wavelet features. Journal of Digital Imaging. 2020;33(1):151–158. doi: 10.1007/s10278-019-00189-0.

- 27. Sharma A, Agrawal M, Roy SD, Gupta V. (2020) Automatic Glaucoma Diagnosis in Digital Fundus Images Using Deep CNNs. In Advances in Computational Intelligence Techniques, (pp. 37–52), Springer, Singapore.

- 28. Sivaswamy J, Krishnadas SR, Joshi GD, Jain M, Tabish AUS. (2014) Drishti-GS: retinal image dataset for optic nerve head (ONH) segmentation. In: 2014 IEEE 11th international symposium on biomedical imaging (ISBI). Beijing, pp 53–56.

- 29. Z. Zhang, F.S. Yin, J. Liu, W.K. Wong, N.M. Tan, B.H. Lee, J. Cheng, T.Y. Wong. (2010) ORIGA-light: an online retinal fundus image database for glaucoma analysis and research, in: 2010 Annual International Conference of the IEEE Engineering in Medicine and Biology Society, EMBC, 10: 3065–3068.

- 30. F. Fumero, S. Alayon, J.L. Sanchez, J. Sigut, M. Gonzalez-Hernandez. (2011) RIMONE: an open retinal image database for optic nerve evaluation, IEEE Symp. Comput.-Based Med. Syst., (pp. 1–6).

- 31. Fumero F, Sigut J, Alayon S, González-Hernández M, González de la Rosa M. Interactive tool and database for optic disc and cup segmentation of stereo and monocular retinal fundus images. In Short Papers Proceedings - WSCG 2015. Pilsen, Czech Republic; 2015. pp. 91–97.

- 32. ichallenge. http://ichallenge.baidu.com/ (accessed on 07/12/2019).

- 33. Almazroa A, Sun W, Alodhayb S, Raahemifar K, Lakshminarayanan V. Optic disc segmentation for glaucoma screening system using fundus images. Clinical Ophthalmology. 2017;11:2017–2029. doi: 10.2147/OPTH.S140061.

- 34. Al-Akhras M, Alawairdhi M, Habib M. (2019 Oct 24) Using soft computing techniques to diagnose Glaucoma disease. Journal of Infection and Public Health.

- 35. Xu Y. (2019) Chinese Academy of Sciences, China, https://refuge.grand-challenge.org/

- 36. Rejeesh MR, Thejaswini P. MOTF: Multi-objective Optimal Trilateral Filtering based partial moving frame algorithm for image denoising. Multimedia Tools Appl. 2020;79:28411–28430. doi: 10.1007/s11042-020-09234-5.

- 37. Deepika E, Maheswari S. Earlier glaucoma detection using blood vessel segmentation and classification. In 2018 2nd International Conference on Inventive Systems and Control (ICISC) 2018 Jan 19 (pp. 484–490). IEEE.

- 38. Rehman ZU, Naqvi SS, Khan TM, Arsalan M, Khan MA, Khalil MA. Multi-parametric optic disc segmentation using superpixel based feature classification. Expert Systems with Applications. 2019;120:461–473. doi: 10.1016/j.eswa.2018.12.008.

- 39. Issac A, Sarathi MP, Dutta MK. An adaptive threshold based image processing technique for improved glaucoma detection and classification. Computer Methods and Programs in Biomedicine. 2015;122(2):229–244. doi: 10.1016/j.cmpb.2015.08.002.

- 40. Dhumane C, Patil SB. Automated glaucoma detection using cup to disc ratio. International Journal of Innovative Research in Science, Engineering and Technology. 2015;4(7):5209–5216.

- 41. Haralick RM, Shanmugam K. Textural features for image classification. IEEE Trans Syst Man Cybern. 1973;SMC-3(6):610–621. doi: 10.1109/TSMC.1973.4309314.

- 42. Acharya UR, Ng EY, Eugene LW, Noronha KP, Min LC, Nayak KP, Bhandary SV. Decision support system for the glaucoma using Gabor transformation. Biomedical Signal Processing and Control. 2015;15:18–26. doi: 10.1016/j.bspc.2014.09.004.

- 43. Shirley CP, Mohan NR, Chitra B. (2020 Jun 17) Gravitational search-based optimal deep neural network for occluded face recognition system in videos. Multidimensional Systems and Signal Processing, (pp. 1–27).

- 44. Rejeesh MR. Interest point based face recognition using adaptive neuro fuzzy inference system. Multimedia Tools Appl. 2019;78:22691–22710. doi: 10.1007/s11042-019-7577-5.

- 45. Hinton G. (2010) A practical guide to training restricted Boltzmann machines. University of Toronto, UTML TR 2010–003.

- 46. Rashedi E, Nezamabadi-pour H, Saryazdi S. GSA: A gravitational search algorithm. Information Sciences. 2009;179:2232–2248. doi: 10.1016/j.ins.2009.03.004.

- 47. Solteiro Pires EJ, Tenreiro Machado JA, de Moura Oliveira PB, Boaventura Cunha J, Mendes L. Particle swarm optimization with fractional-order velocity. Nonlinear Dynamics. 2010;61:295–301. doi: 10.1007/s11071-009-9649-y.

- 48. Devidas Pergad N, Hamde ST. Fractional gravitational search-radial basis neural network for bone marrow white blood cell classification. The Imaging Science Journal. 2018;66(2):106–124. doi: 10.1080/13682199.2017.1383677.

- 49. Pazos M, Dyrda AA, Biarnés M, Gómez A, Martín C, Mora C, Fatti G, Antón A. Diagnostic accuracy of Spectralis SD OCT automated macular layers segmentation to discriminate normal from early glaucomatous eyes. Ophthalmology. 2017;124(8):1218–1228. doi: 10.1016/j.ophtha.2017.03.044.

- 50. Prakash NB, Selvathi D. An Efficient Detection System for Screening Glaucoma in Retinal Images. Biomedical and Pharmacology Journal. 2017;10(1):459–465. doi: 10.13005/bpj/1130.

- 51. Thakur N, Juneja M. Optic disc and optic cup segmentation from retinal images using hybrid approach. Expert Systems with Applications. 2019;127:308–322. doi: 10.1016/j.eswa.2019.03.009.

- 52. Juneja M, Thakur N, Thakur S, Uniyal A, Wani A, Jindal P. GC-NET for classification of glaucoma in the retinal fundus image. Machine Vision and Applications. 2020;31(5):1–8.

- 53. Ajesh F, Ravi R, Rajakumar G. (2020 Feb 24) Early diagnosis of glaucoma using multi-feature analysis and DBN based classification. Journal of Ambient Intelligence and Humanized Computing, (pp. 1–0).

- 54. Kanse SS, Yadav DM. HG-SVNN: Harmonic genetic-based support vector neural network classifier for the glaucoma detection. Journal of Mechanics in Medicine and Biology. 2020;20(01):1950065. doi: 10.1142/S0219519419500659.
