Abstract

Introduction

Capsule endoscopy has revolutionized the management of patients with obscure gastrointestinal bleeding. Nevertheless, reading capsule endoscopy images is time-consuming and prone to overlooking significant lesions, thus limiting its diagnostic yield. We aimed to create a deep learning algorithm for automatic detection of blood and hematic residues in the enteric lumen in capsule endoscopy exams.

Methods

A convolutional neural network was developed based on a total pool of 22,095 capsule endoscopy images (13,510 images containing luminal blood and 8,585 of normal mucosa or other findings). A training dataset comprising 80% of the total pool of images was defined. The performance of the network was compared to a consensus classification provided by 2 specialists in capsule endoscopy. Subsequently, we evaluated the performance of the network using an independent validation dataset (20% of total image pool), calculating its sensitivity, specificity, accuracy, and precision.

Results

Our convolutional neural network detected blood and hematic residues in the small bowel lumen with an accuracy and precision of 98.5 and 98.7%, respectively. The sensitivity and specificity were 98.6 and 98.9%, respectively. The analysis of the testing dataset was completed in 24 s (approximately 184 frames/s).

Discussion/Conclusion

We have developed an artificial intelligence tool capable of effectively detecting luminal blood. The development of these tools may enhance the diagnostic accuracy of capsule endoscopy when evaluating patients presenting with obscure small bowel bleeding.

Keywords: Capsule endoscopy, Artificial intelligence, Convolutional neural networks, Small bowel, Gastrointestinal bleeding

Resumo

Introduction:

Capsule endoscopy has revolutionized the approach to patients with obscure gastrointestinal bleeding. However, reading capsule endoscopy images is time-consuming and susceptible to missing significant lesions, which limits its diagnostic efficacy. This study aimed to create a deep learning algorithm for automatic detection of blood and hematic residues in the enteric lumen using capsule endoscopy images.

Methods:

A convolutional neural network was developed based on a set of 22,095 capsule endoscopy images (13,510 images containing blood and 8,585 of normal mucosa or other findings). A training set comprising 80% of all images was constructed. The performance of the network was compared with the consensus classification of two specialists in capsule endoscopy. Subsequently, the performance of the network was evaluated using the remaining 20% of the images. Its sensitivity, specificity, accuracy, and precision were calculated.

Results:

The algorithm detected blood and hematic residues in the small bowel lumen with an accuracy and precision of 98.5% and 98.7%, respectively. The sensitivity and specificity were 98.6% and 98.9%, respectively. The analysis of the set used to test the network was completed in 24 seconds (approximately 184 frames/s).

Discussion/Conclusion:

An artificial intelligence tool capable of effectively detecting luminal blood was developed. The development of such tools may increase the diagnostic accuracy of capsule endoscopy when evaluating patients presenting with obscure small bowel bleeding.

Keywords: Capsule endoscopy, Artificial intelligence, Small bowel, Gastrointestinal bleeding

Introduction

Capsule endoscopy (CE) has revolutionized the approach to patients with suspected small bowel disease, providing noninvasive inspection of the entire small bowel, thus overcoming shortcomings of conventional endoscopy and the invasiveness of deep enteroscopy techniques. The clinical value of CE has been demonstrated for a wide array of diseases, including the evaluation of patients with suspected small bowel hemorrhage, diagnosis and evaluation of Crohn's disease activity, and detection of protruding small intestine lesions [1, 2, 3, 4].

Obscure gastrointestinal bleeding (OGIB), either overt or occult, accounts for 5% of all cases of gastrointestinal bleeding. The source of bleeding is located in the small intestine in most cases, and OGIB is currently the most frequent indication for CE [5]. Nevertheless, the diagnostic yield of CE for detection of the bleeding source remains suboptimal [2].

Evaluation of CE exams can be a burdensome task. Each CE video comprises a mean of 20,000 still frames, and reading it requires 30–120 min [6]. Moreover, significant lesions may be restricted to a very small number of frames, which increases the risk of overlooking clinically important lesions.

The large pool of images produced by medical imaging exams and the continuous growth of computational power have boosted the development and application of artificial intelligence (AI) tools for automatic image analysis. Convolutional neural networks (CNNs) are a subtype of AI based on deep learning algorithms. This neural architecture is inspired by the human visual cortex, and it is designed for enhanced image analysis and pattern detection [7]. This type of deep learning algorithm has delivered promising results in diverse fields of medicine [8, 9, 10]. Endoscopic imaging, and particularly CE, is one of the branches where the development of CNN-based tools for automatic detection of lesions is expected to have a significant impact [11]. Future clinical application of these technological advances may translate into an increase in diagnostic accuracy of CE for the detection of several lesions. Moreover, these tools may contribute to optimizing the reading process, including its time cost, thus lessening the burden on gastroenterologists and overcoming one of its main drawbacks.

With this project, we aimed to create a CNN-based system for automatic detection of blood or hematic traces in the small bowel lumen. This project was divided into 3 distinct phases: first, acquisition of CE frames containing normal mucosa or other findings and others with luminal blood or hematic traces; second, development of a CNN and optimization of its neural architecture; third, validation of the model for automatic identification of blood or hematic traces in CE images.

Materials and Methods

Patients and Preparation of Image Sets

All subjects who underwent CE between 2015 and 2020 at a single tertiary center (São João University Hospital, Porto, Portugal) were approached for enrolment in this retrospective study (n = 1,229). A total of 1,483 CE exams were performed. Data retrieved from these examinations were used for development, training, and validation of a CNN-based model for automated detection of blood or hematic residues within the small bowel in CE images. The full-length CE video of each participant was reviewed (total number of frames: 23,317,209), and 22,095 frames were extracted. These frames were labeled by 2 gastroenterologists (M.M.S. and H.C.) with experience in CE (>1,000 CE exams). The inclusion and final labelling of each frame depended on a double-validation method, requiring consensus between both researchers for the final decision.

Capsule Endoscopy Protocol

All procedures were conducted using the PillCam™ SB3 system (Medtronic, Minneapolis, MN, USA). The images were reviewed using PillCam™ Software version 9 (Medtronic). Image processing was performed to remove possible patient-identifying information (name, operating number, date of procedure). Each extracted frame was stored and assigned a consecutive number.

Each patient received bowel preparation following previously published guidelines by the European Society of Gastrointestinal Endoscopy [12]. In summary, patients were advised to follow a clear liquid diet on the day preceding capsule ingestion and to fast on the night before the examination. A bowel preparation consisting of 2 L of polyethylene glycol solution was used prior to capsule ingestion. Simethicone was used as an anti-foaming agent. Prokinetic therapy (10 mg domperidone) was used if the capsule remained in the stomach 1 h after ingestion, as determined by image review on the data recorder worn by the patient. No eating was allowed for 4 h after ingestion of the capsule.

Convolutional Neural Network Development

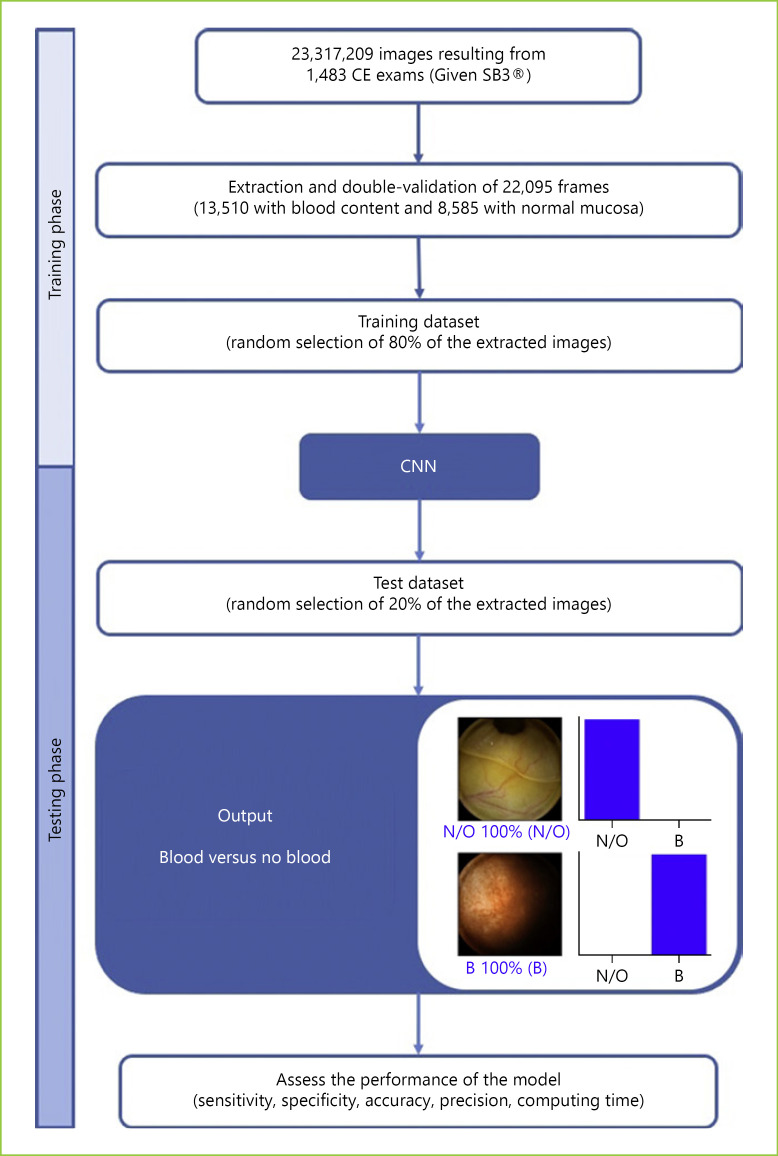

From the collected pool of images (n = 22,095), 13,510 images contained hematic content (active bleeding or free clots) and 8,585 had normal mucosa or nonhemorrhagic findings (protruding lesions, vascular lesions, lymphangiectasia, and ulcers and erosions). A training dataset was constructed by selecting 80% of all extracted frames (n = 17,676). The remaining 20% were used for the construction of a validation dataset (n = 4,419). The validation dataset was used for assessing the performance of the CNN. A flowchart summarizing the study design is presented in Figure 1.

Fig. 1.

Study flow chart for the training and validation phases. N/O, normal/other findings; B, blood or hematic residues.
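The arithmetic behind the 80/20 partition can be checked with a short sketch. It assumes the split was applied separately to each class (a stratified split); the text does not state this explicitly, but the class counts reported for the validation set (2,702 and 1,717) are consistent with it. The function name `stratified_split_sizes` is hypothetical.

```python
# Class counts reported in the text.
N_BLOOD = 13_510
N_NORMAL_OTHER = 8_585
TRAIN_PERCENT = 80  # 80% training, 20% validation


def stratified_split_sizes(class_counts, train_percent):
    """Return {label: (n_train, n_validation)}, applying the split per class.

    Assumption: the split is stratified by class; integer arithmetic is
    used so that the counts are exact.
    """
    sizes = {}
    for label, count in class_counts.items():
        n_train = count * train_percent // 100
        sizes[label] = (n_train, count - n_train)
    return sizes


sizes = stratified_split_sizes(
    {"blood": N_BLOOD, "normal_other": N_NORMAL_OTHER}, TRAIN_PERCENT
)
n_train = sum(train for train, _ in sizes.values())
n_validation = sum(val for _, val in sizes.values())

print(sizes)
print("training frames:", n_train, "validation frames:", n_validation)
```

Under this assumption the totals reproduce the dataset sizes given above (17,676 training and 4,419 validation frames).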

To create the CNN, we used the Xception model with its weights trained on ImageNet. To transfer this learning to our data, we kept the convolutional layers of the model. We removed the last fully connected layers and attached new fully connected layers based on the number of classes used to classify our endoscopic images. We used 2 blocks, each having a fully connected layer followed by a Dropout layer with a drop rate of 0.3. Following these 2 blocks, we added a Dense layer with a size defined by the number of categories to classify. The learning rate of 0.0001, batch size of 32, and number of epochs of 100 were set by trial and error. We used TensorFlow 2.3 and Keras libraries to prepare the data and run the model. The analyses were performed with a computer equipped with an Intel® Xeon® Gold 6130 processor (Intel, Santa Clara, CA, USA) and an NVIDIA Quadro® RTX™ 4000 graphic processing unit (NVIDIA Corporation, Santa Clara, CA, USA).
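The architecture described above might be sketched in Keras roughly as follows. This is an illustration under stated assumptions, not the authors' code: the input size, the widths of the two fully connected layers, the ReLU and softmax activations, the global average pooling, the Adam optimizer, and the loss function are not given in the text and are chosen here only to make the sketch complete. Whether the convolutional layers were frozen or fine-tuned is also not stated; the sketch leaves them trainable.

```python
# Hyperparameters stated in the text.
LEARNING_RATE = 1e-4
BATCH_SIZE = 32
EPOCHS = 100
DROPOUT_RATE = 0.3
NUM_CLASSES = 2  # blood/hematic residues vs. normal/other findings

# Assumptions (not given in the text).
INPUT_SHAPE = (299, 299, 3)  # Xception's default input size
DENSE_UNITS = (256, 128)     # hypothetical widths of the two dense layers


def build_model(weights="imagenet"):
    """Xception backbone, two Dense+Dropout blocks, and a classification head."""
    # Imported here so the constants above can be inspected without TensorFlow.
    import tensorflow as tf

    # Keep the convolutional layers, drop the original fully connected top.
    backbone = tf.keras.applications.Xception(
        include_top=False,
        weights=weights,
        input_shape=INPUT_SHAPE,
        pooling="avg",  # assumption: global average pooling before the head
    )
    x = backbone.output
    for units in DENSE_UNITS:
        x = tf.keras.layers.Dense(units, activation="relu")(x)
        x = tf.keras.layers.Dropout(DROPOUT_RATE)(x)
    # Final Dense layer sized by the number of categories (softmax assumed).
    outputs = tf.keras.layers.Dense(NUM_CLASSES, activation="softmax")(x)

    model = tf.keras.Model(inputs=backbone.input, outputs=outputs)
    model.compile(
        optimizer=tf.keras.optimizers.Adam(learning_rate=LEARNING_RATE),  # assumed optimizer
        loss="categorical_crossentropy",  # assumed loss for one-hot labels
        metrics=["accuracy"],
    )
    return model
```

Training would then call `model.fit(...)` with batches of `BATCH_SIZE` images for `EPOCHS` epochs; the data pipeline is omitted because the text does not describe it.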

Performance Measures and Statistical Analysis

The primary performance measures included sensitivity, specificity, precision, and accuracy in differentiating between images containing blood and those with normal findings or other pathologic findings. Moreover, we used receiver operating characteristic (ROC) curves analysis and area under the ROC curves to measure the performance of our model. The network's classification was compared to the diagnosis provided by specialists' analysis, the latter being considered the gold standard. In addition to the evaluation of diagnostic performance, the computational speed of the network was determined by calculating the time required by the CNN to provide output for all images.

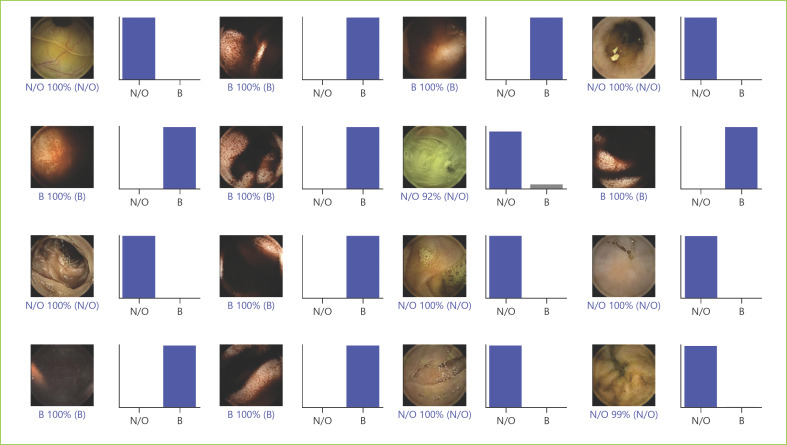

For each image, the model calculated the probability of hematic content within the enteric lumen. A higher probability score translated into a greater confidence in the CNN prediction. The category with the highest probability score was output as the CNN's predicted classification (Fig. 2). Sensitivities, specificities, and precisions are presented as means ± SD. ROC curves were graphically represented and AUROC calculated as means and 95% CIs, assuming normal distribution of these variables. Statistical analysis was performed using scikit-learn version 0.22.2 [13].

Fig. 2.

Output obtained from the application of the convolutional neural network. The bars represent the probability estimated by the network. The finding with the highest probability was outputted as the predicted classification. A blue bar represents a correct prediction. Red bars represent an incorrect prediction. N/O, normal/other findings; B, blood or hematic residues.
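To make the two steps above concrete (choosing the category with the highest probability, and summarizing discrimination with the AUROC), the following dependency-free sketch may help. The study itself used scikit-learn; the pairwise formulation below is a swapped-in equivalent for illustration. The function names and the toy scores are invented and are not study data.

```python
def predicted_class(probabilities):
    """Return the label with the highest probability score."""
    return max(probabilities, key=probabilities.get)


def auroc(labels, scores):
    """Area under the ROC curve via pairwise comparison (ties count 0.5).

    labels: 1 for blood/hematic residues, 0 for normal/other findings.
    scores: predicted probability of the positive class.
    """
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    if not positives or not negatives:
        raise ValueError("AUROC needs at least one positive and one negative")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(positives) * len(negatives))


# Toy values, invented for illustration only.
toy_labels = [1, 1, 1, 0, 0, 0]
toy_scores = [0.95, 0.80, 0.40, 0.60, 0.10, 0.05]

print(predicted_class({"B": 0.97, "N/O": 0.03}))
print(auroc(toy_labels, toy_scores))
```

The pairwise loop is quadratic in the number of frames, which is acceptable for a few thousand images; a rank-based implementation such as the one in scikit-learn scales better.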

Results

Construction of the Network

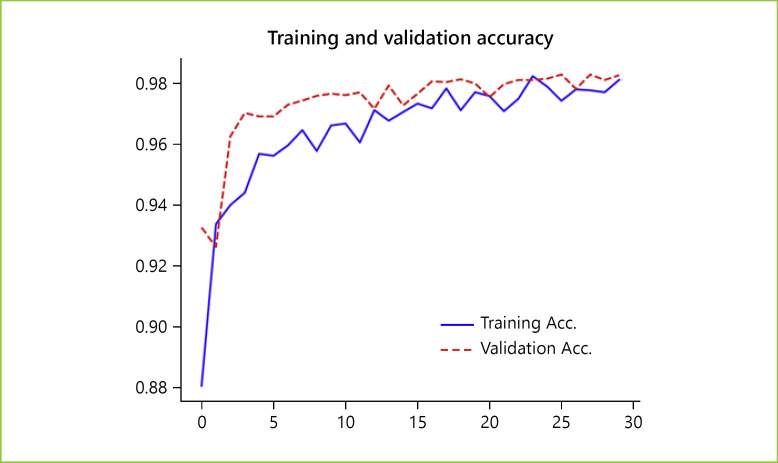

The performance of the CNN was evaluated using a validation dataset containing a total of 4,419 frames (20% of the total of extracted images), 2,702 labeled as containing blood in the enteric lumen, and 1,717 with normal mucosa or other distinct pathological findings. The CNN evaluated each image and predicted a classification (normal mucosa/other pathologic findings or luminal blood/hematic residues) which was compared with the labelling provided by the pair of specialists. The network demonstrated its learning ability, with increasing accuracy as data was being repeatedly inputted into the multi-layer CNN (Fig. 3).

Fig. 3.

Evolution of the accuracy of the convolutional neural network during training and validation phases, as the training and validation datasets were repeatedly inputted in the neural network.

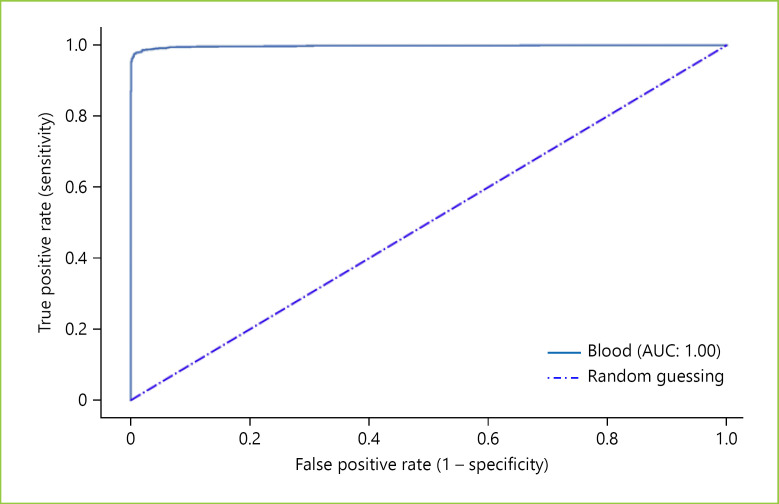

Global Performance of the Network

The distribution of results is displayed in Table 1. The calculated sensitivity, specificity, precision, and accuracy were 98.6% (95% CI 97.6–99.7), 98.9% (95% CI 97.6–99.7), 98.7% (95% CI 97.7–99.6), and 98.5% (95% CI 98.6–98.6), respectively. The AUROC for detection of hematic content in the small bowel was 1.0 (Fig. 4).

Table 1.

Confusion matrix of the automatic detection versus the expert classification

| | Expert: blood/hematic residues | Expert: normal/other findings |
|---|---|---|
| CNN: blood/hematic residues | 2,638 | 12 |
| CNN: normal/other findings | 64 | 1,705 |

Sensitivity and specificity are expressed as mean (95% CI). CNN, convolutional neural network.
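For readers who wish to see how the four performance measures relate to a confusion matrix, the sketch below applies the standard definitions to the counts in Table 1. The function name `diagnostic_metrics` is hypothetical. Point estimates recomputed from these pooled counts may differ from the figures reported in the text, which the Methods describe as means.

```python
# Counts from Table 1 (CNN prediction versus expert label).
TP = 2_638  # CNN blood, expert blood
FP = 12     # CNN blood, expert normal/other
FN = 64     # CNN normal/other, expert blood
TN = 1_705  # CNN normal/other, expert normal/other


def diagnostic_metrics(tp, fp, fn, tn):
    """Standard definitions of the four measures used in the study."""
    return {
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
        "precision": tp / (tp + fp),
        "accuracy": (tp + tn) / (tp + fp + fn + tn),
    }


metrics = diagnostic_metrics(TP, FP, FN, TN)
for name, value in metrics.items():
    print(f"{name}: {value:.1%}")
```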

Fig. 4.

Receiver operating characteristic (ROC) curve of the convolutional neural network for detecting the presence of blood in the enteric lumen. AUC, area under the ROC curve.

Computational Performance of the Network

The CNN completed the reading of the validation dataset in 24 s, an approximate rate of 184 images per second. At this rate, review of a full-length CE video containing 20,000 frames would require an estimated 109 s.
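The throughput figures follow from simple arithmetic, sketched here with the frame count of the validation dataset (4,419) taken from the Results; the variable names are illustrative.

```python
VALIDATION_FRAMES = 4_419   # frames read by the CNN
READING_TIME_S = 24         # reported total reading time
FULL_VIDEO_FRAMES = 20_000  # mean frame count of a full-length CE video

frames_per_second = VALIDATION_FRAMES / READING_TIME_S
rounded_rate = round(frames_per_second)
full_video_seconds = FULL_VIDEO_FRAMES / rounded_rate

print(f"rate: about {rounded_rate} frames/s")
print(f"full-length video: about {full_video_seconds:.0f} s")
```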

Discussion/Conclusion

CE is a minimally invasive diagnostic method for the study of suspected small bowel disease. It assumes a pivotal role in the investigation of patients presenting with OGIB, either overt or occult [2]. In this setting, CE has been shown to have a diagnostic yield comparable to that of the more invasive double-balloon enteroscopy, and it has demonstrated a favorable cost-effectiveness profile for the initial management of patients with OGIB [2, 14, 15]. However, reading CE exams is a monotonous and time-consuming task for gastroenterologists, which increases the risk of failing to diagnose significant lesions. The exponential growth of AI technologies makes it possible to develop efficient tools that can assist the gastroenterologist in reading these exams, thus overcoming these drawbacks.

In this study, we have developed a deep learning tool capable of automatically detecting luminal blood or hematic residues in the small bowel using a large pool of CE images. Our CNN demonstrated high sensitivity, specificity, and accuracy for the detection of these findings. Our algorithm also demonstrated a high image processing performance, with an approximate reading rate of 184 images per second. This performance is superior to that reported in most studies exploring the application of CNN algorithms for automatic detection of lesions in CE images [6, 16, 17, 18, 19]. Although this is a proof-of-concept study, we believe that these results are promising and lay the foundations for the development and introduction of these tools for AI-assisted reading of CE exams. Subsequent studies in real-life clinical settings, preferably of prospective and multicenter design, are required to assess whether AI-assisted CE image reading translates into enhanced time and diagnostic efficiency compared to conventional reading.

OGIB is the most common indication for CE [20]. Several studies have assessed the performance of different AI architectures for the automatic detection of blood in CE images. Pan et al. [21] developed the first CNN for detection of GI bleeding, based on a large number of images (3,172 hemorrhage images and 11,458 images of normal mucosa). Their model had a sensitivity and specificity of 93 and 96%, respectively. Moreover, deep learning CNN models have been shown to identify lesions with substantial hemorrhagic risk, such as angiectasia, with high sensitivity and specificity [6, 18].

Recently, Aoki et al. [16] shared their experience with a model designed for the identification of enteric blood content. Our network presented performance metrics comparable to those reported in their work. Nevertheless, our system had a higher sensitivity (98.6 vs. 96.6%). Further studies replicating our results are needed to assess if this gain in sensitivity translates into fewer missed bleeding lesions. Moreover, our algorithm demonstrated a higher image processing performance (184 vs. 41 images per second). The clinical relevance of this increased image processing capacity is yet to be determined. Subsequent cost-effectiveness studies should address this question.

We developed a highly sensitive, specific, and accurate model for the detection of luminal blood in CE images. Our results are superior to those of most other studies on the application of AI techniques to the detection of GI hemorrhage (Table 2). Although these results are promising, their interpretation must acknowledge the proof-of-concept design of this study and the possibility that applying this tool in a real clinical scenario may lead to different results.

Table 2.

Summary of studies using AI methods to aid CE detection of hematic content

| Reference | Year | Dataset | AI type | Results |
|---|---|---|---|---|
| Lau et al. [22] | 2007 | 577 abnormal images | NA | Sensitivity: 88.3% |
| Giritharan et al. [23] | 2008 | 400 GI bleeding frames | SVM | Sensitivity: >80% |
| Li et al. [24] | 2009 | 10 patients (200 bleeding frames) | MLP | Sensitivity: >90%; Specificity: >90%; Accuracy: >90% |
| Pan et al. [21] | 2009 | 150 full CE videos | CNN | Sensitivity: 93%; Specificity: 96% |
| Fu et al. [25] | 2014 | 20 different CE videos | SVM | Sensitivity: 99%; Specificity: 94%; Accuracy: 95% |
| Ghosh et al. [26] | 2014 | 30 CE videos | SVM | Sensitivity: 93%; Specificity: 94.9% |
| Hassan et al. [27] | 2015 | 1,720 testing frames | SVM | Sensitivity: >98.9%; Specificity: >98.9% |
| Pogorelov et al. [28] | 2019 | 700 testing frames | SVM | Sensitivity: 97.6%; Specificity: 95.9%; Accuracy: 97.6% |
| Aoki et al. [16] | 2020 | 208 GIB frames | CNN | Sensitivity: 96.6%; Specificity: 99.9%; Accuracy: 99.9% |

CNN, convolutional neural network; MLP, multilayer perceptron; SVM, support vector machine; NA, not applicable.

There are several limitations to our study. First, our study focused on patients evaluated at a single center and was conducted in a retrospective manner. Thus, these promising results must be confirmed by robust prospective multicenter studies before application to clinical practice. Second, analyses in this proof-of-concept study were image-based instead of patient-based. Third, we applied this model to selected still frames and, therefore, these results may not be valid for full-length CE videos. Fourth, all CE exams during this period were performed using the PillCam™ SB3 system. Therefore, results may not be completely generalizable to other CE systems. Fifth, although our network demonstrated high image processing speed, we did not assess whether CNN-assisted image review reduces the reading time compared to conventional reading.

In summary, it is expected that the implementation of AI algorithms in daily clinical practice will introduce a landmark in the evolution of modern medicine. The development of these tools has the potential to revolutionize medical imaging. Endoscopy, and particularly CE, is a fertile ground for such innovations. The development and validation of highly accurate AI tools for CE, such as that described in this study, constitute the basic pillar for the creation of software capable of assisting the clinician in reading these exams.

Statement of Ethics

This study was approved by the Ethics Committee of São João University Hospital/Faculty of Medicine of the University of Porto (No. CE 407/2020) and respects the original and subsequent revisions of the Declaration of Helsinki. This study is of noninterventional nature; therefore, there was no interference with the conventional clinical management of each included patient. Any information deemed to potentially identify the subjects was omitted, and each patient was assigned a random number in order to guarantee effective data anonymization for researchers involved in the development of the CNN. A team with Data Protection Officer certification (Maastricht University) confirmed the nontraceability of data and conformity with the general data protection regulation.

Conflict of Interest Statement

The authors have no conflicts of interest to declare.

Funding Sources

The authors have no sources of funding or grants of any form to disclose.

Author Contributions

M.M.S., T.R., J.A., J.P.S.F., H.C., P.A., M.P.L.P., R.N.J., and G.M.: study design; M.M.S., H.C., and P.A.: review of CE videos; M.M.S., T.R., J.A., and J.P.S.F.: construction and development of the CNN; J.P.S.F.: statistical analysis; M.M.S., T.R., and J.A.: drafting of the manuscript; T.R. and J.A.: bibliographic review.

References

- 1. Triester SL, Leighton JA, Leontiadis GI, Fleischer DE, Hara AK, Heigh RI, et al. A meta-analysis of the yield of capsule endoscopy compared to other diagnostic modalities in patients with obscure gastrointestinal bleeding. Am J Gastroenterol. 2005 Nov;100(11):2407–18. doi: 10.1111/j.1572-0241.2005.00274.x.

- 2. Teshima CW, Kuipers EJ, van Zanten SV, Mensink PB. Double balloon enteroscopy and capsule endoscopy for obscure gastrointestinal bleeding: an updated meta-analysis. J Gastroenterol Hepatol. 2011 May;26(5):796–801. doi: 10.1111/j.1440-1746.2010.06530.x.

- 3. Le Berre C, Trang-Poisson C, Bourreille A. Small bowel capsule endoscopy and treat-to-target in Crohn's disease: A systematic review. World J Gastroenterol. 2019 Aug;25(31):4534–54. doi: 10.3748/wjg.v25.i31.4534.

- 4. Cheung DY, Lee IS, Chang DK, Kim JO, Cheon JH, Jang BI, et al.; Korean Gut Images Study Group. Capsule endoscopy in small bowel tumors: a multicenter Korean study. J Gastroenterol Hepatol. 2010 Jun;25(6):1079–86. doi: 10.1111/j.1440-1746.2010.06292.x.

- 5. Pennazio M, Spada C, Eliakim R, Keuchel M, May A, Mulder CJ, et al. Small-bowel capsule endoscopy and device-assisted enteroscopy for diagnosis and treatment of small-bowel disorders: European Society of Gastrointestinal Endoscopy (ESGE) Clinical Guideline. Endoscopy. 2015 Apr;47(4):352–76. doi: 10.1055/s-0034-1391855.

- 6. Leenhardt R, Vasseur P, Li C, Saurin JC, Rahmi G, Cholet F, et al.; CAD-CAP Database Working Group. A neural network algorithm for detection of GI angiectasia during small-bowel capsule endoscopy. Gastrointest Endosc. 2019 Jan;89(1):189–94. doi: 10.1016/j.gie.2018.06.036.

- 7. Matsugu M, Mori K, Mitari Y, Kaneda Y. Subject independent facial expression recognition with robust face detection using a convolutional neural network. Neural Netw. 2003 Jun-Jul;16(5-6):555–9. doi: 10.1016/S0893-6080(03)00115-1.

- 8. Gargeya R, Leng T. Automated Identification of Diabetic Retinopathy Using Deep Learning. Ophthalmology. 2017 Jul;124(7):962–9. doi: 10.1016/j.ophtha.2017.02.008.

- 9. Esteva A, Kuprel B, Novoa RA, Ko J, Swetter SM, Blau HM, et al. Dermatologist-level classification of skin cancer with deep neural networks. Nature. 2017 Feb;542(7639):115–8. doi: 10.1038/nature21056.

- 10. Yasaka K, Akai H, Abe O, Kiryu S. Deep Learning with Convolutional Neural Network for Differentiation of Liver Masses at Dynamic Contrast-enhanced CT: A Preliminary Study. Radiology. 2018 Mar;286(3):887–96. doi: 10.1148/radiol.2017170706.

- 11. Soffer S, Klang E, Shimon O, Nachmias N, Eliakim R, Ben-Horin S, et al. Deep learning for wireless capsule endoscopy: a systematic review and meta-analysis. Gastrointest Endosc. 2020 Oct;92(4):831–839.e8. doi: 10.1016/j.gie.2020.04.039.

- 12. Rondonotti E, Spada C, Adler S, May A, Despott EJ, Koulaouzidis A, et al. Small-bowel capsule endoscopy and device-assisted enteroscopy for diagnosis and treatment of small-bowel disorders: European Society of Gastrointestinal Endoscopy (ESGE) Technical Review. Endoscopy. 2018 Apr;50(4):423–46. doi: 10.1055/a-0576-0566.

- 13. Pedregosa F, Varoquaux G, Gramfort A, Michel V, Thirion B, Grisel O, et al. Scikit-learn: Machine Learning in Python. J Mach Learn Res. 2011;12:2825–30.

- 14. Marmo R, Rotondano G, Rondonotti E, de Franchis R, D'Incà R, Vettorato MG, et al.; Club Italiano Capsula Endoscopica (CICE). Capsule enteroscopy vs. other diagnostic procedures in diagnosing obscure gastrointestinal bleeding: a cost-effectiveness study. Eur J Gastroenterol Hepatol. 2007 Jul;19(7):535–42. doi: 10.1097/MEG.0b013e32812144dd.

- 15. Otani K, Watanabe T, Shimada S, Hosomi S, Nagami Y, Tanaka F, et al. Clinical Utility of Capsule Endoscopy and Double-Balloon Enteroscopy in the Management of Obscure Gastrointestinal Bleeding. Digestion. 2018;97(1):52–8. doi: 10.1159/000484218.

- 16. Aoki T, Yamada A, Kato Y, Saito H, Tsuboi A, Nakada A, et al. Automatic detection of blood content in capsule endoscopy images based on a deep convolutional neural network. J Gastroenterol Hepatol. 2020 Jul;35(7):1196–200. doi: 10.1111/jgh.14941.

- 17. Saito H, Aoki T, Aoyama K, Kato Y, Tsuboi A, Yamada A, et al. Automatic detection and classification of protruding lesions in wireless capsule endoscopy images based on a deep convolutional neural network. Gastrointest Endosc. 2020 Jul;92(1):144–151.e1. doi: 10.1016/j.gie.2020.01.054.

- 18. Tsuboi A, Oka S, Aoyama K, Saito H, Aoki T, Yamada A, et al. Artificial intelligence using a convolutional neural network for automatic detection of small-bowel angioectasia in capsule endoscopy images. Dig Endosc. 2020 Mar;32(3):382–90. doi: 10.1111/den.13507.

- 19. Aoki T, Yamada A, Aoyama K, Saito H, Tsuboi A, Nakada A, et al. Automatic detection of erosions and ulcerations in wireless capsule endoscopy images based on a deep convolutional neural network. Gastrointest Endosc. 2019 Feb;89(2):357–363.e2. doi: 10.1016/j.gie.2018.10.027.

- 20. Liao Z, Gao R, Xu C, Li ZS. Indications and detection, completion, and retention rates of small-bowel capsule endoscopy: a systematic review. Gastrointest Endosc. 2010 Feb;71(2):280–6. doi: 10.1016/j.gie.2009.09.031.

- 21. Pan G, Yan G, Song X, Qiu X. BP neural network classification for bleeding detection in wireless capsule endoscopy. J Med Eng Technol. 2009;33(7):575–81. doi: 10.1080/03091900903111974.

- 22. Lau PY, Correia PL. Detection of bleeding patterns in WCE video using multiple features. Annu Int Conf IEEE Eng Med Biol Soc. 2007;2007:5601–4. doi: 10.1109/IEMBS.2007.4353616.

- 23. Giritharan B, Yuan X, Liu J, Buckles B, Oh J, Tang SJ. Bleeding detection from capsule endoscopy videos. Annu Int Conf IEEE Eng Med Biol Soc. 2008;2008:4780–3. doi: 10.1109/IEMBS.2008.4650282.

- 24. Li B, Meng MQ. Computer-aided detection of bleeding regions for capsule endoscopy images. IEEE Trans Biomed Eng. 2009 Apr;56(4):1032–9. doi: 10.1109/TBME.2008.2010526.

- 25. Fu Y, Zhang W, Mandal M, Meng MQ. Computer-aided bleeding detection in WCE video. IEEE J Biomed Health Inform. 2014 Mar;18(2):636–42. doi: 10.1109/JBHI.2013.2257819.

- 26. Ghosh T, Fattah SA, Shahnaz C, Wahid KA. An automatic bleeding detection scheme in wireless capsule endoscopy based on histogram of an RGB-indexed image. Annu Int Conf IEEE Eng Med Biol Soc. 2014;2014:4683–6. doi: 10.1109/EMBC.2014.6944669.

- 27. Hassan AR, Haque MA. Computer-aided gastrointestinal hemorrhage detection in wireless capsule endoscopy videos. Comput Methods Programs Biomed. 2015 Dec;122(3):341–53. doi: 10.1016/j.cmpb.2015.09.005.

- 28. Pogorelov K, Suman S, Azmadi Hussin F, Saeed Malik A, Ostroukhova O, Riegler M, et al. Bleeding detection in wireless capsule endoscopy videos - Color versus texture features. J Appl Clin Med Phys. 2019 Aug;20(8):141–54. doi: 10.1002/acm2.12662.