Abstract

Skin is the primary protective layer of the internal organs of the body. Due to increasing pollution and multiple other factors, various types of skin diseases are growing globally. Because skin lesions vary in shape and come in many types, their classification is a challenging task. Motivated by the growing prevalence of skin disease in society, a lightweight and efficient model is proposed for the highly accurate classification of skin lesions. Kernels of different sizes are used in different layers to obtain the best results while keeping the number of trainable parameters very small. Further, the ReLU and LeakyReLU activation functions are each used deliberately in different parts of the proposed model. The model accurately classified all of the classes of the HAM10000 dataset and achieved an overall accuracy of 97.85%, which is better than that of several heavier state-of-the-art models. The work is also compared with popular state-of-the-art and recent existing models.

Keywords: deep learning, skin diseases, biomedical image, artificial intelligence

1. Introduction

The largest organ in the human body is the skin, which comprises several layers and structures (the epidermis, the dermis, the subcutaneous tissues, the blood vessels, the lymphatic vessels, the nerves, and the muscles). The barrier function of the skin can be strengthened by using fluids that stop the breakdown of lipids in the epidermis. Skin diseases can be caused by fungi growing on the skin, by bacteria invisible to the naked eye, by allergic responses, by bacteria that change the texture of the skin, or by pigmentation [1]. On rare occasions, chronic skin conditions can develop into cancerous tissue. Skin disorders must be treated as soon as they appear to keep them from spreading and progressing [2]. Imaging-based methods for determining the consequences of various skin diseases are therefore in high demand. Because it may take months before a patient showing the signs of a skin illness is diagnosed, treatment can be difficult. Previous computer-aided dermatological classification works have generalized poorly because of a lack of data and a concentration on routine tasks such as dermoscopy, the examination of the skin surface with a microscope. Computer-aided diagnosis can be used to diagnose skin illnesses and to guide treatment based on the symptoms of patients [3]. Supervised procedures can identify skin illnesses accurately while reducing the cost of diagnosis, and the progression of abnormal growth can be monitored using a grey-level co-occurrence matrix. An accurate diagnosis is critical for more effective treatment and lower pharmaceutical costs.
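
As an illustration of the grey-level co-occurrence matrix mentioned above, the following minimal NumPy sketch counts how often each pair of grey levels occurs at a fixed pixel offset and derives two common texture descriptors from it. It assumes an image already quantized to a few integer grey levels and a non-negative offset; the function names are hypothetical, and this is not the procedure of any cited work.

```python
import numpy as np


def glcm(image: np.ndarray, levels: int, dx: int = 1, dy: int = 0) -> np.ndarray:
    """Normalized grey-level co-occurrence matrix for the offset (dy, dx).

    `image` must contain integers in [0, levels); only non-negative
    offsets are supported in this sketch.
    """
    if dx < 0 or dy < 0:
        raise ValueError("this sketch supports non-negative offsets only")
    h, w = image.shape
    ref = image[: h - dy, : w - dx]  # reference pixels
    nbr = image[dy:, dx:]            # neighbours at offset (dy, dx)
    counts = np.zeros((levels, levels), dtype=np.float64)
    np.add.at(counts, (ref.ravel(), nbr.ravel()), 1.0)  # count each (i, j) pair
    return counts / counts.sum()


def contrast(p: np.ndarray) -> float:
    """Weights each co-occurrence by the squared grey-level difference."""
    i, j = np.indices(p.shape)
    return float(np.sum((i - j) ** 2 * p))


def homogeneity(p: np.ndarray) -> float:
    """Large when co-occurring grey levels are similar."""
    i, j = np.indices(p.shape)
    return float(np.sum(p / (1.0 + np.abs(i - j))))


img = np.array([[0, 0, 1],
                [0, 1, 2],
                [2, 2, 2]])
p = glcm(img, levels=3)  # horizontal neighbours (dx=1, dy=0)
print(contrast(p), homogeneity(p))
```

Smooth regions concentrate the matrix on its diagonal (high homogeneity, low contrast), while irregular texture spreads it away from the diagonal.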

There is a large disparity between the number of people who have skin illnesses and the number of those trained to treat them, and resources such as dermatologists, equipment, drugs, and researchers are limited. According to the World Health Organization, people living in rural areas suffer the most from this lack of resources. Because of the massive imbalance between the number of skin patients and the available expertise, automated expert systems for classifying early skin lesions are necessary; in resource-constrained locations, such classification algorithms can aid in the early detection of skin lesions [4,5]. Computer vision algorithms have been proposed in the literature as comprehensive solutions for early skin lesion diagnosis and the complexity described above [6]. Decision trees (DTs), support vector machines (SVMs), and artificial neural networks (ANNs) are just a few of the many approaches available for classifying data [7,8], and a comprehensive evaluation of various strategies is provided in [9]. However, many machine learning approaches rely on low-noise, high-contrast photos, a condition that skin cancer data do not meet. Color, texture, and structural traits play a role in skin classification. Because skin lesions show a significant degree of inter-class homogeneity and intra-class heterogeneity, classification with weak feature sets may lead to incorrect findings [10]. Since skin cancer data are not normally distributed, the usual parametric methods cannot be applied to them. These approaches are also ineffective because each lesion has a unique pattern. Deep learning approaches to skin classification can help dermatologists diagnose lesions accurately, and the role of deep learning in medical applications has been explored in depth in several studies [11,12].

Basal cell carcinoma, squamous cell carcinoma, and melanoma (a cancer of the melanocytes) are the most common subtypes of skin cancer [13]. Basal cell carcinoma, the most prevalent kind, progresses slowly and rarely metastasizes to other areas of the body; because it often recurs, it must be removed thoroughly. Squamous cell carcinoma is a different type of skin cancer that can spread to other parts of the body and goes deeper into the skin than basal cell carcinoma. Melanocytes, the cells that make the skin dark or tan, produce melanin when the skin is exposed to sunlight. Melanoma, which often appears as a cancerous mole, arises when these cells grow uncontrollably. Melanocyte-based malignancies can be life-threatening because they can invade surrounding tissues and spread. The International Skin Imaging Collaboration (ISIC) dataset is one of the datasets used most frequently for this purpose [14]. According to the ISIC 2016–2020 data, the most frequent types of skin lesions fall into four categories: nevus (NV), seborrheic keratosis (SK), benign (BEN), and melanoma (MEL). NV can appear on the trunk, arms, and legs in varying hues of pink, brown, and tan. SK is a non-malignant lesion that can appear waxy brown, black, or tan. BEN is a non-malignant lesion that neither penetrates nearby tissues nor spreads to other parts of the body; a lesion is said to be BEN if it possesses both NV and SK components. MEL, the last and most important category, is a large brown mole with dark speckles that can bleed or change color over time. It is a quite aggressive cancer that spreads quickly throughout the body.
There are several subtypes of MEL, including acral lentiginous, nodular, and superficial spreading melanoma. The primary objective of this research is to differentiate between MEL and BEN lesions. Our major contributions are as follows: (i) An improved convolutional neural network is proposed that uses kernels of different sizes and two activation functions. Fewer kernels are used in the first three layers of the network than in the last two layers, which results in efficient use of kernels. (ii) The ReLU activation function is used in the first three layers of the network, whereas LeakyReLU is used in the last two layers to improve the performance of skin lesion classification. (iii) Class-wise balancing of the data has been performed to avoid biased training. (iv) The model achieves high accuracy with fewer parameters and less computational time than other state-of-the-art models and existing works.

2. Background of Study

Skin cancer is prevalent all over the world and causes a large number of deaths each year [15]. To preserve lives, early identification of this aggressive disease is critical. Clinical professionals apply the ABCDE criteria [16] and then several histopathology tests. Standard processes such as preprocessing, feature extraction, segmentation, and classification can be automated using artificial intelligence-based algorithms. Several classification algorithms relied heavily on handcrafted feature sets, which generalize poorly to dermoscopic skin images [17,18]. Because lesions are highly similar in color, shape, and size, such features provide inadequate information for distinguishing them [19,20].

In order to extract features, the ABCD scoring method was applied to the data, and lesion classification was completed by combining existing approaches. In [21], melanoma was classified according to the thickness of the lesion: first, lesions were classified as thin or thick, and second, as thin, medium, or thick. Logistic regression and artificial neural networks were proposed for classification. In [22], a median filter was applied separately to each of the RGB channels, and a deformable model was used to segment the lesions. In [23], a segmentation approach was developed on the basis of the Chan–Vese model.
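
The ABCD scoring method is commonly summarized as the total dermoscopy score (TDS) of Stolz et al., a weighted sum of asymmetry (A, 0 to 2), border (B, 0 to 8), color (C, 1 to 6), and differential structures (D, 1 to 5). The sketch below uses the usual weights and cut-offs (below 4.75 benign, 4.75 to 5.45 suspicious, above 5.45 highly suggestive of melanoma); the function names are hypothetical, and the cited works may have used a different variant of the rule.

```python
# Weights and sub-score ranges of the ABCD rule of dermoscopy (Stolz et al.).
WEIGHTS = {"A": 1.3, "B": 0.1, "C": 0.5, "D": 0.5}
RANGES = {"A": (0, 2), "B": (0, 8), "C": (1, 6), "D": (1, 5)}


def total_dermoscopy_score(a: int, b: int, c: int, d: int) -> float:
    """Weighted sum of the four ABCD sub-scores, after range checks."""
    scores = {"A": a, "B": b, "C": c, "D": d}
    for name, value in scores.items():
        low, high = RANGES[name]
        if not low <= value <= high:
            raise ValueError(f"{name}={value} outside [{low}, {high}]")
    return sum(WEIGHTS[name] * value for name, value in scores.items())


def interpret_tds(tds: float) -> str:
    """Map a TDS value onto the conventional three bands."""
    if tds < 4.75:
        return "benign"
    if tds <= 5.45:
        return "suspicious"
    return "highly suggestive of melanoma"


tds = total_dermoscopy_score(a=2, b=4, c=3, d=2)
print(round(tds, 2), interpret_tds(tds))
```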

These features were then classified using a support vector machine (SVM). In [24], the authors used the paraconsistent logic (PL) method to classify melanoma (MEL) and basal cell carcinoma (BCC); they were able to determine the strength of the evidence, the pattern of formation, and the diagnostic contrast. BCC and MEL were distinguished using spectra with values of 30, 96, and 19, respectively. In [25], the binary mask of the regions of interest (ROIs) was extracted using Delaunay triangulation. By removing the granular layer boundary, the authors of [26] were able to identify only two lesions in the histological images. Alam et al. [27] presented an SVM-based method to automate the detection of eczema: the acquired image was segmented, texture-based features were selected for more accurate predictions, and an SVM was finally used to evaluate the advancement of eczema, as also reported by I. Immagulate [28]. However, the SVM modeling technique is inappropriate for noisy image data [29]. An SVM also requires finding suitable feature-dependent parameter values, and its performance is poor when each feature vector has more parameters than there are training samples.

Artificial neural networks (ANNs) and convolutional neural networks (CNNs) are the methods employed most frequently to detect and diagnose abnormalities in radiological imaging data [30,31]. CNN-based diagnosis of skin diseases has produced good results [32]. However, CNN models are not scale or rotation invariant, which makes images taken with a smartphone or digital camera difficult to work with. Both neural network approaches require enormous amounts of training data to achieve high performance, which in turn necessitates substantial computational effort [33]. Neural-network models are also abstract, which makes them difficult to modify to suit specific requirements. Additionally, the number of trainable parameters in an ANN grows sharply as image resolution increases, which necessitates massive training efforts to achieve accurate results, and ANN training suffers from vanishing and exploding gradients. Finally, CNNs do not always interpret the magnitude and size of an object correctly [34,35].
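
The growth of ANN parameters with image resolution noted above can be made concrete with a short calculation. The sketch below compares a fully connected layer of 64 neurons applied to a flattened RGB image with a convolutional layer of 15 kernels of size 3 × 3; the layer widths are illustrative choices, not values taken from the cited studies.

```python
def dense_params(height: int, width: int, channels: int, units: int) -> int:
    """Fully connected layer on a flattened image: one weight per input per unit, plus biases."""
    return height * width * channels * units + units


def conv_params(kernel: int, channels: int, filters: int) -> int:
    """Convolutional layer: the weight count depends on kernel size, not on image size."""
    return kernel * kernel * channels * filters + filters


for side in (28, 224):
    print(side, dense_params(side, side, 3, 64), conv_params(3, 3, 15))
```

At 28 × 28 × 3 the dense layer already has 150,592 parameters and at 224 × 224 × 3 it has 9,633,856, whereas the convolutional layer keeps 420 parameters regardless of resolution.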

J. Zhang et al. [35] proposed a CNN for skin classification in which a deep convolutional neural network (DCNN) was used to investigate the network’s inherent ability to pay attention to itself. Using attention maps at lower layers, each attention residual learning (ARL) block develops residual learning mechanisms that help it better categorize input data. On the basis of the ISIC 2017–19 datasets, Iqbal et al. [36] developed a DCNN model for classifying multi-class skin lesions. Their model first transmits feature information from the top to the bottom of the network and employs 68 convolutional layers organized into interconnected blocks. A similar approach was used by Jinnai and colleagues [37], who classified melanoma with a faster region-based CNN (FRCNN) using 5846 clinical photos rather than dermoscopic images. To prepare the training dataset, they manually drew borders around lesion locations. The FRCNN achieved a higher accuracy than ten board-certified dermatologists and ten dermatology trainees. Barata et al. [38] investigated improving the accuracy of an ensemble CNN model: the outputs of four separate classification layers, from GoogLeNet, AlexNet, VGG, and ResNet, were fused to create an ensemble model for three-class classification. Jordan Yap et al. [39] proposed improving classification accuracy by taking the patient’s metadata into account. Dermoscopic and macroscopic images were both fed into a ResNet50 network and then classified together. This multimodal classification outperformed the simple macroscopy-based model, with an AUC of 0.866. Gessert et al. [40] used the ISIC 2019 dataset to develop an ensemble model that incorporated EfficientNet, SENet, and ResNeXt WSL. They used a cropping approach to handle images of different resolutions across the different models.
In addition, a loss-balancing technique was created to deal with unbalanced datasets. On the HAM10000 dataset, Srinivasu et al. [41] classified lesions using a DCNN built from MobileNetV2 and long short-term memory (LSTM). MobileNetV2 is a CNN model that offers several advantages over existing CNN models, including a lower computational cost, a smaller network size, and compatibility with mobile devices. In the LSTM network, the MobileNetV2 features were each given a timestamp as they were stored. Combining MobileNetV2 with LSTM yielded an accuracy of up to 85.34%.

Histogram equalization is a straightforward and efficient method for enhancing images. However, because equalization can dramatically alter the brightness of an image in certain circumstances, it has not been used in video systems. To address this, a histogram equalization method known as equal area dualistic sub-image histogram equalization was proposed in [42].
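
The dualistic sub-image idea described above can be sketched in a few lines of NumPy. This is an illustrative reimplementation, not code from [42]: it assumes an 8-bit grayscale image, splits it at the median grey level so that both sub-images cover the same area, and equalizes each sub-image with its own cumulative histogram into its own half of the output range. The function name is hypothetical.

```python
import numpy as np


def dsihe(image: np.ndarray) -> np.ndarray:
    """Dualistic sub-image histogram equalization of an 8-bit grayscale image.

    The image is split at its median grey level x_m; pixels <= x_m are
    equalized into [0, x_m] and pixels > x_m into [x_m + 1, 255].
    """
    img = np.asarray(image, dtype=np.int64)
    xm = int(np.median(img))  # equal-area split: about half the pixels on each side
    out = np.empty_like(img)

    lower = img <= xm
    upper = ~lower

    if lower.any():
        hist = np.bincount(img[lower], minlength=xm + 1)
        cdf = np.cumsum(hist) / hist.sum()
        out[lower] = np.round(xm * cdf[img[lower]]).astype(np.int64)

    if upper.any():
        lo, hi = xm + 1, 255
        hist = np.bincount(img[upper] - lo, minlength=hi - lo + 1)
        cdf = np.cumsum(hist) / hist.sum()
        out[upper] = np.round(lo + (hi - lo) * cdf[img[upper] - lo]).astype(np.int64)

    return out.astype(np.uint8)


sample = np.array([[10, 10, 20, 20],
                   [100, 100, 200, 200]], dtype=np.uint8)
print(dsihe(sample))
```

Because pixels at or below the median stay in [0, x_m] and the rest stay above it, the mean brightness tends to change less than with global histogram equalization, which is the property that motivates the method.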

Huang et al. [43] used deep learning techniques to create a lightweight skin cancer classification model that might help improve medical care. They examined the clinical images and medical records of patients who had received a histological diagnosis of basal cell carcinoma, squamous cell carcinoma, melanoma, seborrheic keratosis, or melanocytic nevus in the Department of Dermatology at Kaohsiung Chang Gung Memorial Hospital (KCGMH) between 2006 and 2017. To develop the classification model, they used deep learning models to differentiate between malignant and benign skin tumors in the KCGMH and HAM10000 datasets, using both binary and multi-class classification. The deep learning model achieved an accuracy of 89.5% for binary classification (benign vs. malignant) on the KCGMH dataset, whereas its accuracy on the HAM10000 dataset was 85.8%.

Thurnhofer-Hemsi et al. [44] introduced a deep learning model based on MobileNetV2 and long short-term memory (LSTM) to identify skin cancer. Experiments were conducted on the HAM10000 dataset, a sizable collection of dermatoscopic images, with data augmentation techniques used to boost results. The findings of [45] indicate that the DenseNet201 network is well suited to the task at hand, as it achieved high classification accuracies and F-measures while reducing the number of false negatives.

Ioannis Kousis et al. [46] presented a convolutional neural network (CNN) for detecting skin cancer; using the HAM10000 dataset, they trained and evaluated 11 different CNN architectures for identifying seven distinct types of skin lesions. In order to combat the imbalance issue and the great similarity between images of some skin lesions, they employed data augmentation (during training), transfer learning, and fine-tuning. According to their results, the DenseNet169 transfer model outperformed the other 10 CNN architecture variants.

3. Materials and Methods

3.1. Dataset

The standard skin lesion dataset HAM10000 was used for the experiments. It contains 10,015 skin lesion images from diverse populations, distributed across seven major classes, as shown in Table 1. A sample image of each class is shown in Figure 1.

Table 1.

Distribution of skin lesion images in seven major classes and total images in the dataset.

| Class | Number of images |
|---|---|
| Melanocytic nevi (nv) | 6705 |
| Melanoma (mel) | 1113 |
| Benign keratosis-like lesions (bkl) | 1099 |
| Basal cell carcinoma (bcc) | 514 |
| Actinic keratoses (akiec) | 327 |
| Vascular lesions (vasc) | 142 |
| Dermatofibroma (df) | 115 |
| Total | 10,015 |

Figure 1.

Sample images of each class of the dataset.

3.2. Data Balancing and Augmentation

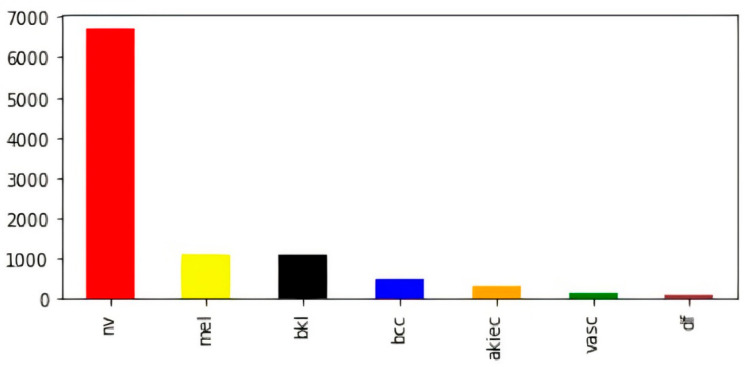

Because the images in the dataset vary in size, all images were resized to 28 × 28 × 3. In addition, we found a significant disparity in the number of images in the various classes. For example, the melanocytic nevi (nv) class has many more samples than the rest of the classes, whereas the vascular lesions (vasc) and dermatofibroma (df) classes have few samples. A bar chart of the class-wise sample distribution of the original dataset is shown in Figure 2. To train a deep-learning-based model efficiently, a reasonable amount of balanced data is required; imbalanced data may bias the training towards the classes with comparatively many samples. Therefore, to avoid biased training of the model, the data are balanced by artificially generating the required samples [47,48,49].
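
The exact sample-generation method is not specified above. As one possibility, the following sketch assumes simple random oversampling, in which images of smaller classes are duplicated at random until each class reaches a target count; the function name is hypothetical.

```python
from typing import Optional

import numpy as np


def random_oversample(x: np.ndarray, y: np.ndarray, target: Optional[int] = None, seed: int = 0):
    """Top up every class to `target` samples by resampling it with replacement.

    With target=None, every class is raised to the size of the largest class.
    Classes that already have `target` or more samples are left unchanged.
    """
    rng = np.random.default_rng(seed)
    classes, counts = np.unique(y, return_counts=True)
    if target is None:
        target = int(counts.max())
    picked = []
    for cls, n in zip(classes, counts):
        idx = np.flatnonzero(y == cls)
        if n < target:
            extra = rng.choice(idx, size=target - n, replace=True)
            idx = np.concatenate([idx, extra])
        picked.append(idx)
    order = rng.permutation(np.concatenate(picked))  # shuffle all classes together
    return x[order], y[order]


x = np.arange(10).reshape(10, 1)       # toy "images": each row holds its own index
y = np.array([0] * 7 + [1] * 2 + [2])  # imbalanced labels: 7 / 2 / 1
xb, yb = random_oversample(x, y)
print(np.bincount(yb))
```

If the resampling is applied after the train/test split and only to the training part, no test image can have a copy in the training data.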

Figure 2.

Class-wise count of images in original dataset (before balancing).

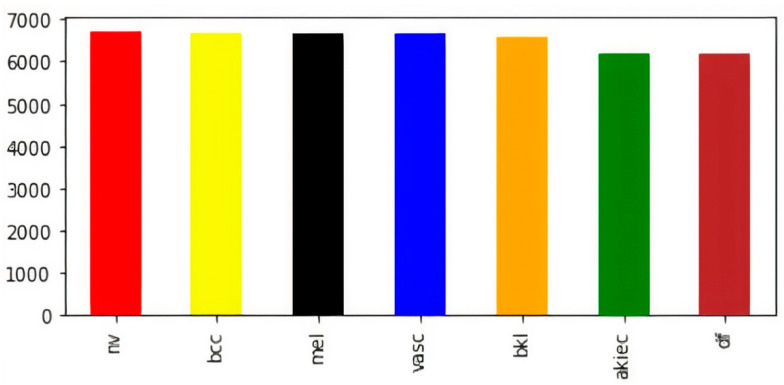

The class-wise number of images in the balanced dataset is shown in Figure 3. The dataset now has an almost equal number of images in every class of skin lesions, which helps keep the training of the model unbiased. For training, testing, and validation, the data are then split randomly, as shown in Table 2.

Figure 3.

Class-wise count of images after balancing.

Table 2.

Distribution of skin lesion images in the training, testing, and validation data.

| Classes | nv | mel | bkl | bcc | akiec | vasc | df | Total |
|---|---|---|---|---|---|---|---|---|
| # Training samples | 5323 | 5360 | 5289 | 5371 | 5004 | 5308 | 4949 | 36,604 |
| # Testing samples | 1382 | 1318 | 1305 | 1311 | 1209 | 1366 | 1261 | 9152 |
| # Validation samples | 1338 | 1391 | 1303 | 1314 | 1271 | 1295 | 1239 | 9151 |

Here, # denotes “Number of”.
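
A random three-way split like the one in Table 2 can be sketched as follows. The proportions (two-thirds for training and one-sixth each for testing and validation) are inferred from the counts in Table 2 rather than stated in the text, and the function name is hypothetical. With held-out sizes rounded up, it reproduces the 36,604, 9152, and 9151 images of Table 2 from the 54,907 balanced images.

```python
import math

import numpy as np


def random_split(n: int, test_frac: float = 1 / 6, val_frac: float = 1 / 6, seed: int = 0):
    """Shuffle indices 0..n-1 and cut them into train, test, and validation parts.

    Held-out sizes are rounded up; the remaining indices form the training part.
    """
    rng = np.random.default_rng(seed)
    perm = rng.permutation(n)
    n_hold = math.ceil(n * (test_frac + val_frac))
    n_test = math.ceil(n_hold * test_frac / (test_frac + val_frac))
    test, val, train = perm[:n_test], perm[n_test:n_hold], perm[n_hold:]
    return train, test, val


train, test, val = random_split(54_907)  # total number of balanced images in Table 2
print(len(train), len(test), len(val))
```

A purely random split, as here, lets the per-class counts vary slightly, which matches the uneven class columns of Table 2; a stratified split would instead fix the class proportions in every part.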

After splitting the dataset, augmentation is performed to improve the robustness of the trained model so that it performs adequately on unseen samples. The training samples are flipped horizontally and vertically, randomly rotated (−10 to +10), shifted horizontally and vertically (0.2), and zoomed in and out (20%), and empty areas in the samples are filled with the nearest pixels. The testing samples are not augmented, so that the performance of the presented model is estimated on actual data. Only small rotation angles are used because horizontal and vertical flips are already applied.
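
The augmentation settings listed above map naturally onto the keyword arguments of Keras’ `ImageDataGenerator`, which is available in TensorFlow 2.7. The mapping below is a hedged sketch: the rotation unit (degrees, as Keras uses) and the interpretation of 0.2 as a fraction of the image width and height are assumptions, and the helper name is hypothetical.

```python
# Hypothetical mapping of the training-time augmentation onto
# tf.keras.preprocessing.image.ImageDataGenerator keyword arguments.
AUG_CONFIG = {
    "horizontal_flip": True,
    "vertical_flip": True,
    "rotation_range": 10,       # random rotation in [-10, +10] degrees (unit assumed)
    "width_shift_range": 0.2,   # horizontal shift, as a fraction of image width
    "height_shift_range": 0.2,  # vertical shift, as a fraction of image height
    "zoom_range": 0.2,          # zoom factor drawn from [0.8, 1.2]
    "fill_mode": "nearest",     # fill empty areas with the nearest pixels
}


def make_train_generator():
    """Build the training-set generator; returns None if TensorFlow is missing."""
    try:
        from tensorflow.keras.preprocessing.image import ImageDataGenerator
    except ImportError:
        return None
    return ImageDataGenerator(**AUG_CONFIG)
```

Keeping the settings in one dictionary makes it easy to apply them to the training generator only, while the testing images are passed through unchanged.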

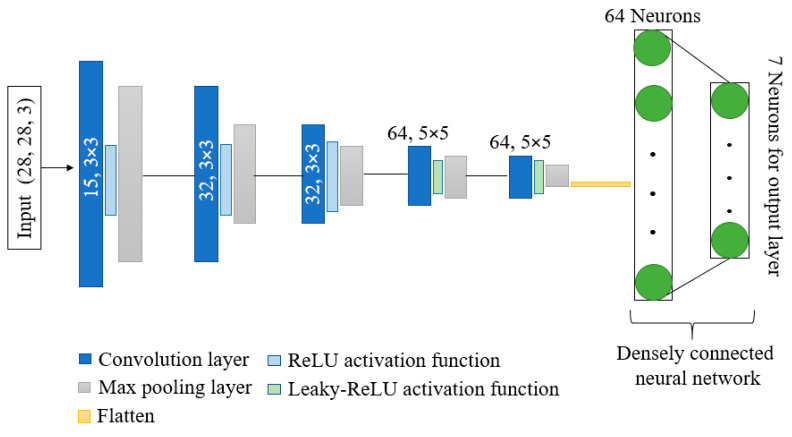

3.3. Architecture Overview

Figure 4 depicts the overall design of the proposed model, which has shown better performance with fewer parameters and less computation time than other state-of-the-art models. Kernels and activation functions play a vital role in the training of any convolution-based model: the performance and resource utilization of the model depend on the size and number of kernels and on the activation functions used in the network. Motivated by this, we propose a network with kernels of different sizes and two different activation functions. The network has five layers, each comprising a convolution operation, an activation function, and a pooling operation. The input of shape 28 × 28 × 3 is given to the first layer, which has 15 kernels, each of size 3 × 3. Few kernels are used in the first layer because its kernels only identify basic features; using fewer kernels reduces the number of parameters and the computation time while still capturing these basic features. The convolution uses “same” padding, so that the feature maps keep the spatial size of the input image. These feature maps are passed through the ReLU activation function to introduce non-linearity. Max pooling with a pool size of 2 × 2 is then applied to the feature maps; the pooling layer also uses “same” padding to avoid input dimension problems during the max pooling operation. Because the feature maps in the starting layers contain less complex features, the ReLU activation function is used there, and the chance of information loss due to the activation function is slight. In the second layer, the number of kernels is increased from 15 to 32 to extract more complex features than in layer 1, again with the ReLU activation function and max pooling with a pool size of 2 × 2.

Figure 4.

Architecture of proposed DKCNN model.

The configuration of layer 3 is the same as layer 2. In layers 4 and 5, we further increased the number of kernels from 32 to 64 with a size of 5 × 5. Further, the leakyReLU activation function is used instead of the ReLU activation function. In the last two layers, to obtain more complex features, we have increased the number of parameters by increasing the kernel size and number of kernels.

LeakyReLU is preferred in the last two layers because it maintains non-linearity in the feature maps while reducing the chance of losing salient features. The output of the last layer is flattened and provided as input to a densely connected neural network. The first dense layer has 64 neurons with the ReLU activation function, and the output layer has 7 neurons, one for each class in the dataset, with a softmax activation function.
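
The layer description above can be checked by counting parameters. The pure-Python sketch below assumes 3 × 3 kernels in layers 2 and 3 (the text gives the kernel size only for layers 1, 4, and 5), “same” padding throughout, and 2 × 2 pooling with stride 2. Under these assumptions the feature maps shrink from 28 × 28 to 1 × 1, and the total comes to 172,363 parameters, the figure reported in Section 4.2. The helper names are hypothetical.

```python
def conv_params(kernel: int, c_in: int, c_out: int) -> int:
    return kernel * kernel * c_in * c_out + c_out  # weights + one bias per kernel


def dense_params(n_in: int, n_out: int) -> int:
    return n_in * n_out + n_out


def pooled(size: int) -> int:
    return -(-size // 2)  # 2 x 2 max pooling, stride 2, "same" padding: ceil(size / 2)


# (kernel size, number of kernels) for convolutional layers 1-5.
# Layers 2-3 are assumed to use 3 x 3 kernels like layer 1.
CONV_LAYERS = [(3, 15), (3, 32), (3, 32), (5, 64), (5, 64)]


def summarize(side: int = 28, channels: int = 3, hidden: int = 64, classes: int = 7):
    total, c_in, shapes = 0, channels, []
    for kernel, c_out in CONV_LAYERS:
        total += conv_params(kernel, c_in, c_out)  # "same" convolution keeps the side
        side = pooled(side)
        shapes.append((side, side, c_out))
        c_in = c_out
    flat = side * side * c_in  # size of the flattened output of layer 5
    total += dense_params(flat, hidden) + dense_params(hidden, classes)
    return total, shapes


total, shapes = summarize()
print(total)
print(shapes)
```

Layers 4 and 5 account for 153,728 of these parameters, which is where the 5 × 5 kernels and the larger kernel count are used.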

4. Result and Discussion

This section describes the evaluation metrics and performance analysis, the experimental setup, and comparative studies with various state-of-the-art methods.

4.1. Evaluation Metrics and Performance Analysis

Precision, recall, F1-score, and accuracy are calculated to evaluate the performance of the trained model on the testing samples, which are skin lesion images without augmentation. The objective is to measure the performance of the model on unseen samples. The formulas for these metrics are given in Equations (1)–(4).

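$$\text{Precision} = \frac{TP}{TP + FP} \tag{1}$$

$$\text{Recall} = \frac{TP}{TP + FN} \tag{2}$$

$$\text{F1-score} = \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}} \tag{3}$$

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \tag{4}$$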

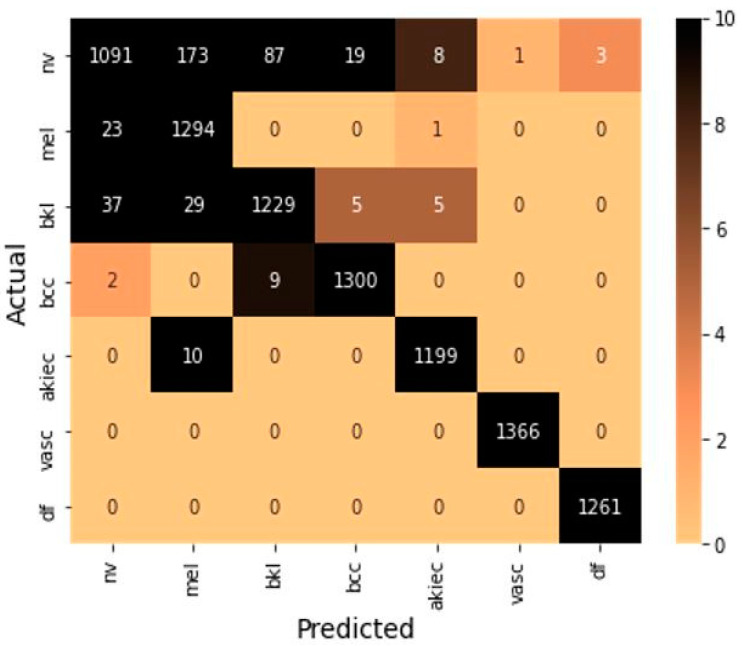

In these formulas, true positives (TP) and true negatives (TN) are the numbers of positive and negative samples identified correctly, whereas false positives (FP) are negative samples incorrectly identified as positive and false negatives (FN) are positive samples incorrectly identified as negative. The confusion matrix in Figure 5 summarizes the class-wise predictions of the model. In addition to giving the numbers of TP, TN, FP, and FN, it shades non-zero entries with a color density that increases with their value, to improve readability.
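
For a multi-class problem, these quantities can be computed per class from the confusion matrix by treating each class in turn as the positive class (one-vs-rest). A minimal NumPy sketch, assuming rows hold true classes and columns hold predictions; the function name is hypothetical.

```python
import numpy as np


def per_class_metrics(cm):
    """One-vs-rest precision, recall, F1-score, and accuracy from a confusion matrix.

    cm[i, j] counts samples of true class i predicted as class j.
    A class that is never predicted gives nan precision (with a NumPy warning).
    """
    cm = np.asarray(cm, dtype=np.float64)
    total = cm.sum()
    tp = np.diag(cm)
    fp = cm.sum(axis=0) - tp  # predicted as the class, but truly another class
    fn = cm.sum(axis=1) - tp  # truly the class, but predicted as another class
    tn = total - tp - fp - fn
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f1 = 2 * precision * recall / (precision + recall)
    accuracy = (tp + tn) / total  # accuracy formula applied per class
    overall = tp.sum() / total    # share of all samples classified correctly
    return precision, recall, f1, accuracy, overall


cm = [[5, 1],
      [2, 2]]
precision, recall, f1, accuracy, overall = per_class_metrics(cm)
print(precision, recall, overall)
```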

Figure 5.

Confusion matrix on testing samples.

The model is trained using the Adam optimizer for 20 epochs with a batch size of 64 and an initial learning rate of 0.001. Because the number and size of kernels are chosen efficiently, the model has very few trainable parameters. The model is summarized in Table 3. The ReLU activation function is used in the starting layers of the model, whereas LeakyReLU is used in the last two layers before flattening.

Table 3.

Broad summary of the model.

| Optimizer | Batch Size | # Epochs | Activation | Initial Learning Rate | # Parameters |
|---|---|---|---|---|---|
| Adam | 64 | 20 | ReLU & LeakyReLU | 0.001 | 172,363 |

Here, # denotes “Number of”.

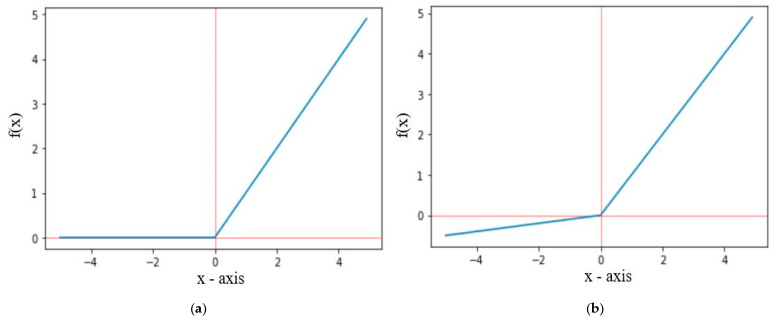

The ReLU activation function propagates only positive values received from the previous layer to the next hidden or output layer, whereas the LeakyReLU activation function also lets small negative values through. ReLU and LeakyReLU are plotted in Figure 6, and their functions f(x) are defined in Equations (5) and (6), respectively. For LeakyReLU, α = 0.01, so negative inputs are scaled by 0.01 instead of being set to 0.

$$f(x) = \max(0, x) \tag{5}$$

$$f(x) = \begin{cases} x, & x > 0 \\ \alpha x, & x \le 0 \end{cases} \tag{6}$$
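
ReLU and LeakyReLU translate directly into NumPy. The sketch below uses α = 0.01 as stated above; the function names are illustrative.

```python
import numpy as np


def relu(x):
    """ReLU: negative inputs become 0."""
    return np.maximum(0.0, x)


def leaky_relu(x, alpha=0.01):
    """LeakyReLU: negative inputs are scaled by alpha instead of zeroed."""
    return np.where(x > 0, x, alpha * x)


x = np.array([-2.0, 0.0, 3.0])
print(relu(x), leaky_relu(x))
```

In TensorFlow 2.x Keras, the `LeakyReLU` layer defaults to a slope of 0.3, so α = 0.01 has to be passed explicitly, for example as `tf.keras.layers.LeakyReLU(alpha=0.01)`.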

Figure 6.

Graph representation of (a) ReLU and (b) LeakyReLU.

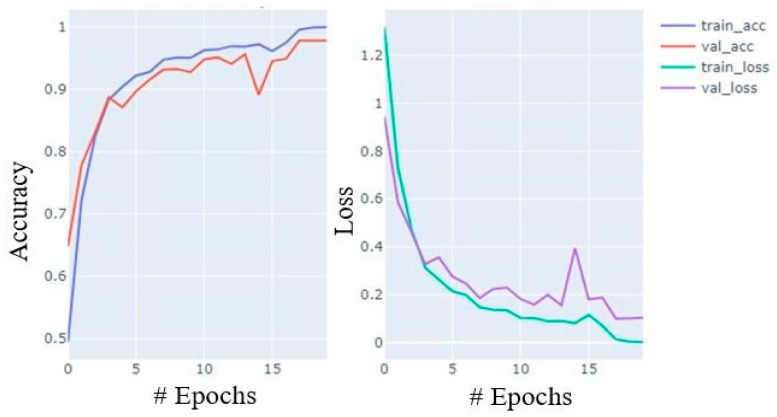

During training, the accuracy of the model increases rapidly until the 7th epoch, then at a moderate pace until the 15th, and more slowly from the 16th to the 20th. The loss decreases at a similar pace. The accuracy and loss curves for the training and validation data are shown in Figure 7.

Figure 7.

Accuracy and loss plot during training process with training and validation data.

The model has shown remarkable performance on the unseen testing samples. The class-wise accuracy (ACC), precision (PRE), recall (REC), and F1-score of the model are shown in Table 4; the overall accuracy is 97.858%. The performance of the model was further evaluated using k-fold cross-validation: the dataset is divided into k = 10 equal parts (folds), the model is trained on k − 1 folds (training set), and the remaining fold is used as the test set. This process is repeated k times so that each fold is used once as the test set. The accuracy, precision, recall, and F1-score obtained on each fold are shown in Table 5.
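
The 10-fold procedure described above can be sketched as an index generator: the shuffled indices are cut into k nearly equal folds, and each fold serves once as the test set while the rest form the training set. A minimal NumPy sketch; the function name and seeding are assumptions.

```python
import numpy as np


def kfold_indices(n: int, k: int = 10, seed: int = 0):
    """Yield (train_idx, test_idx) pairs for k-fold cross-validation."""
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(n), k)  # fold sizes differ by at most 1
    for i in range(k):
        train = np.concatenate([folds[j] for j in range(k) if j != i])
        yield train, folds[i]


splits = list(kfold_indices(25, k=10))
for train_idx, test_idx in splits[:2]:
    print(len(train_idx), len(test_idx))
```

Per-fold metrics are then averaged over the k runs; for example, the mean of the ten per-fold accuracies in Table 5 is 0.971.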

Table 4.

Class-wise accuracy, precision, recall, and f1-score obtained by the model.

| Label | ACC | PRE | REC | F1-Score |
|---|---|---|---|---|
| nv | 0.87 | 1.00 | 0.87 | 0.93 |
| mel | 0.98 | 0.94 | 1.00 | 0.97 |
| bkl | 0.99 | 0.94 | 0.99 | 0.97 |
| bcc | 1.00 | 0.99 | 1.00 | 0.99 |
| akiec | 1.00 | 1.00 | 1.00 | 1.00 |
| vasc | 1.00 | 1.00 | 1.00 | 1.00 |
| df | 1.00 | 1.00 | 1.00 | 1.00 |
| Average | 0.978 | 0.981 | 0.98 | 0.98 |

Table 5.

Accuracy, precision, recall, and F1-score for each fold of the 10-fold cross-validation.

| Folds | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |
|---|---|---|---|---|---|---|---|---|---|---|
| ACC | 0.94 | 0.91 | 0.94 | 0.97 | 0.98 | 0.99 | 1.00 | 0.98 | 1.00 | 1.00 |
| PRE | 0.95 | 0.97 | 0.94 | 0.98 | 0.98 | 0.98 | 1.00 | 0.96 | 0.98 | 0.98 |
| REC | 0.96 | 0.97 | 0.95 | 0.98 | 0.98 | 0.98 | 1.00 | 0.97 | 0.98 | 0.98 |
| F1-score | 0.95 | 0.97 | 0.94 | 0.98 | 0.98 | 0.99 | 1.00 | 0.96 | 0.98 | 0.98 |

4.2. Experimental Setup

The proposed model was implemented in Python 3.8.8 with the Keras API of the TensorFlow 2.7.0 library. The model was trained in a Jupyter notebook using a CPU and, separately, in a Kaggle notebook using a GPU. Owing to the efficient choice of the number and size of kernels, the proposed model was trained in much less computation time and has only 172,363 parameters.

The training times of the model with CPU and GPU are 375.17 and 82.48 s, respectively. We have used an Adam optimizer with a batch size of 64, 20 epochs, and an initial learning rate of 0.001, which was reduced to 0.0001 after the 11th epoch.
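
The learning-rate reduction described above can be written as a step schedule for Keras’ `LearningRateScheduler` callback, which passes a 0-based epoch index and the current rate. The sketch assumes that “after the 11th epoch” means epochs 12 to 20 (indices 11 to 19) use 0.0001; the function name is hypothetical.

```python
def step_lr(epoch: int, lr: float = 1e-3) -> float:
    """Learning rate for a 0-based epoch index: 1e-3 for 11 epochs, then 1e-4.

    The current rate `lr` is accepted for Keras compatibility but ignored.
    """
    return 1e-3 if epoch < 11 else 1e-4


# With TensorFlow installed (not needed for this sketch):
#   callback = tf.keras.callbacks.LearningRateScheduler(step_lr)
#   model.fit(x_train, y_train, epochs=20, batch_size=64, callbacks=[callback])
schedule = [step_lr(e) for e in range(20)]
print(schedule.count(1e-3), schedule.count(1e-4))
```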

4.3. Comparison with Some State-of-the-Art Methods and Other Existing Models

The number of parameters, precision, recall, and F1-score of the model are compared with those of existing models on a similar dataset in Table 6. The proposed model shows outstanding performance with very few trainable parameters and low computation time.

Table 6.

Comparison of proposed work with some standard models and other existing models.

5. Conclusions and Future Scope

Skin disease is a global challenge affecting a major proportion of the world’s population, and its many classes range from minor to highly dangerous. Skin cancer is increasing rapidly and becoming a significant health concern. Correct and timely treatment plays a pivotal role in reducing this threat to life; therefore, an accurate and fully automatic classification system is a major requirement of the medical field. Many machine learning and deep-learning-based methods have been proposed for this purpose, and our literature review revealed an opportunity for a better lightweight model with high accuracy. We therefore proposed a CNN-based model that uses kernels and activation functions efficiently. The proposed model achieved remarkable class-wise accuracy on all seven classes and an overall accuracy of 97.85% on the test dataset, with fewer parameters (172,363) than standard models. The proposed model can also be used for disease classification on datasets with more classes. There is still room for more accurate prediction of the benign keratosis-like lesion, melanoma, and melanocytic nevi classes.

Author Contributions

Conceptualization, T.H.H.A. and A.V.; methodology, T.H.H.A., A.V., M.H.A.-A., and D.K.; software, T.H.H.A., A.V. and M.H.A.-A.; validation, T.H.H.A. and M.H.A.-A.; formal analysis, T.H.H.A., M.H.A.-A., and D.K.; investigation, T.H.H.A., M.H.A.-A. and D.K.; resources, T.H.H.A.; data curation, T.H.H.A. and M.H.A.-A.; writing—original draft preparation, T.H.H.A., A.V., M.H.A.-A. and D.K.; writing—review and editing, T.H.H.A., A.V., M.H.A.-A. and D.K.; visualization, T.H.H.A. and M.H.A.-A.; supervision, T.H.H.A.; project administration, T.H.H.A. and M.H.A.-A.; funding acquisition, T.H.H.A. and M.H.A.-A. All authors have read and agreed to the published version of the manuscript.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are available here: https://www.kaggle.com/kmader/skin-cancer-mnist-ham10000 (accessed on 17 May 2022).

Conflicts of Interest

The authors declare no conflict of interest.

Funding Statement

This research and the APC were funded by the Deanship of Scientific Research at King Faisal University under grant No. NA000242.

Footnotes

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1.Almeida M.A.M., Santos I.A.X. Classification Models for Skin Tumor Detection Using Texture Analysis in Medical Images. J. Imaging. 2020;6:51. doi: 10.3390/jimaging6060051. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Ki V., Rotstein C. Bacterial skin and soft tissue infections in adults: A review of their epidemiology, pathogenesis, diagnosis, treatment and site of care. Can. J. Infect. Dis. Med. Microbiol. 2008;19:173–184. doi: 10.1155/2008/846453. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Cahan A., Cimino J. A Learning Health Care System Using Computer-Aided Diagnosis. J. Med. Internet Res. 2017;19:e6663. doi: 10.2196/jmir.6663. [DOI] [PMC free article] [PubMed] [Google Scholar]

4. World Health Organization. New Report Shows that 400 Million Do Not Have Access to Essential Health Services. World Health Organization; Geneva, Switzerland: 2015.

5. Most Common Skin Disorders. Available online: http://www.foxnews.com/story/2009/12/15/5-most-common-skin-disorders.html (accessed on 2 June 2022).

6. Thomsen K., Iversen L., Titlestad T.L., Winther O. Systematic review of machine learning for diagnosis and prognosis in dermatology. J. Dermatol. Treat. 2020;31:496–510. doi: 10.1080/09546634.2019.1682500.

7. Dhivyaa C., Sangeetha K., Balamurugan M., Amaran S., Vetriselvi T., Johnpaul P. Skin lesion classification using decision trees and random forest algorithms. J. Ambient. Intell. Humaniz. Comput. 2020:1–13. doi: 10.1007/s12652-020-02675-8.

8. Murugan A., Nair S.A.H., Kumar K.S. Detection of skin cancer using SVM, random forest and KNN classifiers. J. Med. Syst. 2019;43:269. doi: 10.1007/s10916-019-1400-8.

9. Hekler A., Utikal J.S., Enk A.H., Hauschild A., Weichenthal M., Maron R.C., Berking C., Haferkamp S., Klode J., Schadendorf D., et al. Superior skin cancer classification by the combination of human and artificial intelligence. Eur. J. Cancer. 2019;120:114–121. doi: 10.1016/j.ejca.2019.07.019.

10. Masood A., Ali Al-Jumaily A. Computer aided diagnostic support system for skin cancer: A review of techniques and algorithms. Int. J. Biomed. Imaging. 2013;2013:323268. doi: 10.1155/2013/323268.

11. Codella N., Cai J., Abedini M., Garnavi R., Halpern A., Smith J.R. International Workshop on Machine Learning in Medical Imaging. Springer; Berlin/Heidelberg, Germany: 2015. Deep learning, sparse coding, and SVM for melanoma recognition in dermoscopy images; pp. 118–126.

12. Haggenmüller S., Maron R.C., Hekler A., Utikal J.S., Barata C., Barnhill R.L., Beltraminelli H., Berking C., Betz-Stablein B., Blum A., et al. Skin cancer classification via convolutional neural networks: A systematic review of studies involving human experts. Eur. J. Cancer. 2021;156:202–216. doi: 10.1016/j.ejca.2021.06.049.

13. American Cancer Society. Skin Cancer. 2019. Available online: https://www.cancer.org/cancer/skin-cancer.html/ (accessed on 2 May 2022).

14. Sloan Kettering Cancer Center. The International Skin Imaging Collaboration. 2019. Available online: https://www.isic-archive.com/#!/topWithHeader/wideContentTop/main/ (accessed on 15 May 2022).

15. Fontanillas P., Alipanahi B., Furlotte N.A., Johnson M., Wilson C.H., Pitts S.J., Gentleman R., Auton A. Disease risk scores for skin cancers. Nat. Commun. 2021;12:160. doi: 10.1038/s41467-020-20246-5.

16. Rigel D.S., Friedman R.J., Kopf A.W., Polsky D. ABCDE—An evolving concept in the early detection of melanoma. Arch. Dermatol. 2005;141:1032–1034. doi: 10.1001/archderm.141.8.1032.

17. Anand V., Gupta S., Nayak S.R., Koundal D., Prakash D., Verma K.D. An automated deep learning models for classification of skin disease using Dermoscopy images: A comprehensive study. Multimed. Tools Appl. 2022:1–23. doi: 10.1007/s11042-021-11628-y.

18. Xie F., Fan H., Li Y., Jiang Z., Meng R., Bovik A. Melanoma classification on dermoscopy images using a neural network ensemble model. IEEE Trans. Med. Imaging. 2016;36:849–858. doi: 10.1109/TMI.2016.2633551.

19. Celebi M.E., Kingravi H.A., Uddin B., Iyatomi H., Aslandogan Y.A., Stoecker W.V., Moss R.H. A methodological approach to the classification of dermoscopy images. Comput. Med. Imaging Graph. 2007;31:362–373. doi: 10.1016/j.compmedimag.2007.01.003.

20. Kasmi R., Mokrani K. Classification of malignant melanoma and benign skin lesions: Implementation of automatic ABCD rule. IET Image Process. 2016;10:448–455. doi: 10.1049/iet-ipr.2015.0385.

21. Sáez A., Sánchez-Monedero J., Gutiérrez P.A., Hervás-Martínez C. Machine Learning Methods for Binary and Multiclass Classification of Melanoma Thickness From Dermoscopic Images. IEEE Trans. Med. Imaging. 2016;35:1036–1045. doi: 10.1109/TMI.2015.2506270.

22. Oliveira R.B., Marranghello N., Pereira A.S., Tavares J.M.R.S. A computational approach for detecting pigmented skin lesions in macroscopic images. Expert Syst. Appl. 2016;61:53–63. doi: 10.1016/j.eswa.2016.05.017.

23. Inácio D.F., Célio V.N., Vilanova G.D., Conceição M.M., Fábio G., Minoro A.J., Tavares P.M., Landulfo S. Paraconsistent analysis network applied in the treatment of Raman spectroscopy data to support medical diagnosis of skin cancer. Med. Biol. Eng. Comput. 2016;54:1453–1467. doi: 10.1007/s11517-016-1471-3.

24. Pennisi A., Bloisi D.D., Nardi D.A., Giampetruzzi R., Mondino C., Facchiano A. Skin lesion image segmentation using Delaunay Triangulation for melanoma detection. Comput. Med. Imaging Graph. 2016;52:89–103. doi: 10.1016/j.compmedimag.2016.05.002.

25. Noroozi N., Zakerolhosseini A. Differential diagnosis of squamous cell carcinoma in situ using skin histopathological images. Comput. Biol. Med. 2016;70:23–39. doi: 10.1016/j.compbiomed.2015.12.024.

26. Alam M., Munia T.T.K., Tavakolian K., Vasefi F., MacKinnon N., Fazel-Rezai R. Automatic detection and severity measurement of eczema using image processing; Proceedings of the 2016 38th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC); Orlando, FL, USA. 16–20 August 2016; pp. 1365–1368.

27. Immagulate I., Vijaya M.S. Categorization of Non-Melanoma Skin Lesion Diseases Using Support Vector Machine and Its Variants. Int. J. Med. Imaging. 2015;3:34–40.

28. Awad M., Khanna R. Support Vector Machines for Classification. Efficient Learning Machines. Apress; Berkeley, CA, USA: 2015. pp. 39–66.

29. Mehdy M., Ng P., Shair E.F., Saleh N., Gomes C. Artificial Neural Networks in Image Processing for Early Detection of Breast Cancer. Comput. Math. Methods Med. 2017;2017:2610628. doi: 10.1155/2017/2610628.

30. Rathod J., Waghmode V., Sodha A., Bhavathankar P. Diagnosis of skin diseases using Convolutional Neural Networks; Proceedings of the 2018 Second International Conference on Electronics, Communication and Aerospace Technology (ICECA); Coimbatore, India. 29–31 March 2018; pp. 1048–1051.

31. Harangi B. Skin lesion classification with ensembles of deep convolutional neural networks. J. Biomed. Inform. 2018;86:25–32. doi: 10.1016/j.jbi.2018.08.006.

32. Naranjo-Torres J., Mora M., Hernández-García R., Barrientos R.J., Fredes C., Valenzuela A. A Review of Convolutional Neural Network Applied to Fruit Image Processing. Appl. Sci. 2020;10:3443. doi: 10.3390/app10103443.

33. Noord N., Postma E. Learning scale-variant and scale-invariant features for deep image classification. Pattern Recognit. 2017;61:583–592. doi: 10.1016/j.patcog.2016.06.005.

34. Zhang J., Xie Y., Xia Y., Shen C. Attention residual learning for skin lesion classification. IEEE Trans. Med. Imaging. 2019;38:2092–2103. doi: 10.1109/TMI.2019.2893944.

35. Iqbal I., Younus M., Walayat K., Kakar M.U., Ma J. Automated multi-class classification of skin lesions through deep convolutional neural network with dermoscopic images. Comput. Med. Imaging Graph. 2021;88:101843. doi: 10.1016/j.compmedimag.2020.101843.

36. Jinnai S., Yamazaki N., Hirano Y., Sugawara Y., Ohe Y., Hamamoto R. The development of a skin cancer classification system for pigmented skin lesions using deep learning. Biomolecules. 2020;10:1123. doi: 10.3390/biom10081123.

37. Barata C., Celebi M.E., Marques J.S. A survey of feature extraction in dermoscopy image analysis of skin cancer. IEEE J. Biomed. Health Inform. 2018;23:1096–1109. doi: 10.1109/JBHI.2018.2845939.

38. Yap J., Yolland W., Tschandl P. Multimodal skin lesion classification using deep learning. Exp. Dermatol. 2018;27:1261–1267. doi: 10.1111/exd.13777.

39. Gessert N., Nielsen M., Shaikh M., Werner R., Schlaefer A. Skin lesion classification using ensembles of multi-resolution EfficientNets with meta data. MethodsX. 2020;7:100864. doi: 10.1016/j.mex.2020.100864.

40. Hossen M.N., Panneerselvam V., Koundal D., Ahmed K., Bui F.M., Ibrahim S.M. Federated machine learning for detection of skin diseases and enhancement of internet of medical things (IoMT) security. IEEE J. Biomed. Health Inform. 2022. doi: 10.1109/JBHI.2022.3149288.

41. Mukherjee S., Adhikari A., Roy M. Recent Trends in Signal and Image Processing. Springer; Berlin/Heidelberg, Germany: 2019. Malignant melanoma classification using cross-platform dataset with deep learning CNN architecture; pp. 31–41.

42. Wang Y., Chen Q., Zhang B. Image enhancement based on equal area dualistic sub-image histogram equalization method. IEEE Trans. Consum. Electron. 1999;45:68–75. doi: 10.1109/30.754419.

43. Huang H., Hsu B.W., Lee C., Tseng V.S. Development of a light-weight deep learning model for cloud applications and remote diagnosis of skin cancers. J. Dermatol. 2020;48:310–316. doi: 10.1111/1346-8138.15683.

44. Thurnhofer-Hemsi K., Domínguez E. A Convolutional Neural Network Framework for Accurate Skin Cancer Detection. Neural Process. Lett. 2020;53:3073–3093. doi: 10.1007/s11063-020-10364-y.

45. Srinivasu P.N., SivaSai J.G., Ijaz M.F., Bhoi A.K., Kim W., Kang J.J. Classification of Skin Disease Using Deep Learning Neural Networks with MobileNet V2 and LSTM. Sensors. 2021;21:2852. doi: 10.3390/s21082852.

46. Kousis I., Perikos I., Hatzilygeroudis I., Virvou M. Deep Learning Methods for Accurate Skin Cancer Recognition and Mobile Application. Electronics. 2022;11:1294. doi: 10.3390/electronics11091294.

47. Wells A., Patel S., Lee J.B., Motaparthi K. Artificial intelligence in dermatopathology: Diagnosis, education, and research. J. Cutan. Pathol. 2021;48:1061–1068. doi: 10.1111/cup.13954.

48. Cazzato G., Colagrande A., Cimmino A., Arezzo F., Loizzi V., Caporusso C., Marangio M., Foti C., Romita P., Lospalluti L., et al. Artificial Intelligence in Dermatopathology: New Insights Perspectives. Dermatopathology. 2021;8:418–425. doi: 10.3390/dermatopathology8030044.

49. Young A.T., Xiong M., Pfau J., Keiser M.J., Wei M.L. Artificial Intelligence in Dermatology: A Primer. J. Investig. Dermatol. 2020;140:1504–1512. doi: 10.1016/j.jid.2020.02.026.
