Abstract

We perform a thorough analysis of RNA velocity methods, with a view towards understanding the suitability of the various assumptions underlying popular implementations. In addition to providing a self-contained exposition of the underlying mathematics, we undertake simulations and perform controlled experiments on biological datasets to assess workflow sensitivity to parameter choices and underlying biology. Finally, we argue for a more rigorous approach to RNA velocity, and present a framework for Markovian analysis that points to directions for improvement and mitigation of current problems.

Author summary

Single-cell sequencing data are snapshots of biological processes, making it challenging to infer dynamic relationships between cell types. RNA velocity attempts to bypass this challenge by treating the unspliced RNA content as a proxy for spliced RNA content in the near future, and using this “extrapolation” to build directional relationships. However, the method, as implemented in several software packages, is not yet reliable enough to be actionable, in part due to the large number of arbitrary, user-set hyperparameters, as well as fundamental incompatibilities between the biophysics of transcription in the living cell and the models used throughout the velocity workflows. In this study, we review these issues, and use existing results from the fields of stochastic modeling and fluorescence transcriptomics to develop an alternative theoretical framework. We show that our framework can facilitate the development and inference of physically consistent models for sequencing data, as well as the unification of single-cell analyses to self-consistently treat variation due to cell type dynamics and identities, the stochasticity inherent to single-molecule processes, and the uncertainty introduced by sequencing experiments.

Introduction

Background

The method of RNA velocity [1] aims to infer directed differentiation trajectories from snapshot single-cell transcriptomic data. Although we cannot observe the transcription rate, we can count molecules of spliced and unspliced mRNA. The unspliced mRNA content is a leading indicator of spliced mRNA, meaning that it is a predictor of the spliced mRNA content in the cell’s near future. This causal relationship can be usefully exploited to identify directions of differentiation pathways without prior information about cell type relationships: “depletion” of nascent RNA suggests the gene is downregulated, whereas “accumulation” suggests it is upregulated. This qualitative premise has profound implications for the analysis of single-cell RNA sequencing (scRNA-seq) data. The experimentally observed transcriptome is a snapshot of a biological process. By carefully combining snapshot data with a causal model, it is for the first time possible to reconstruct the dynamics and direction of this process without prior knowledge or dedicated experiments.

The bioinformatics field has recognized this potential, widely adopting the method and generating numerous variations on the theme. The roots of the theoretical approach date to 2011 [2], but the two most popular implementations for scRNA-seq were released in 2017–2018: velocyto by La Manno et al. [1], which introduced the method, and scVelo by Bergen et al. [3], which extended it to fit a more sophisticated dynamical model. Aside from these packages, numerous auxiliary methods have been developed, including protaccel [4] for incorporating newly available protein data, MultiVelo [5] and Chromatin Velocity [6] for incorporating chromatin accessibility, VeTra [7], CellPath [8], Cytopath [9], CellRank [10], and Revelio [11] for investigating coarse-grained global trends, scRegulosity [12] for identifying local trends, Velo-Predictor [13] for incorporating machine learning, dyngen [14] and VeloSim [15] for simulation, and VeloViz [16] and evo-velocity [17] for constructing velocity-inspired visualizations. This profusion of computational extensions has been accompanied by a much smaller volume of analytical work, including discussions of potential extensions and pitfalls [18–21], as well as theoretical studies based on optimal transport [22, 23] and stochastic differential equations [24]. However, at their core, these auxiliary methods are built on top of the theory and code base from velocyto or scVelo.

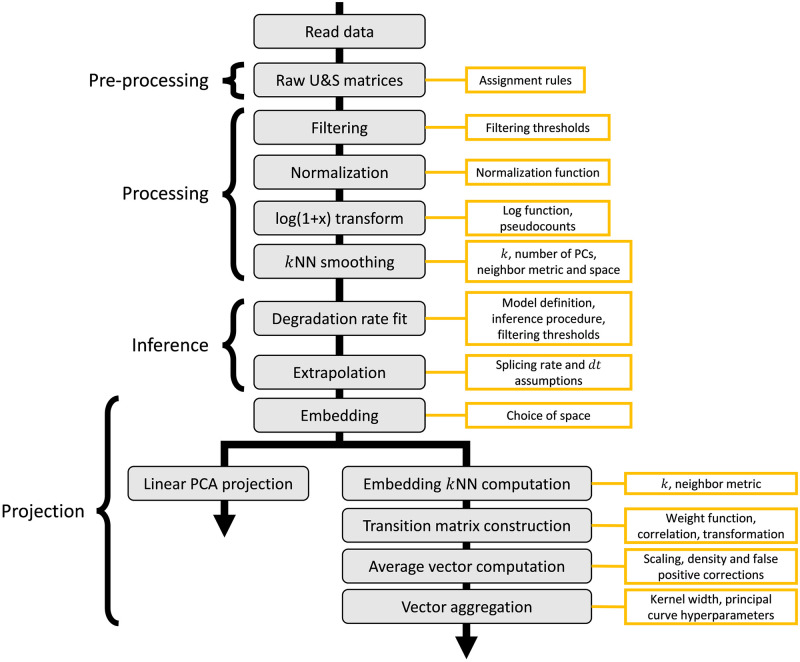

These two most popular software implementations emphasize usability and integration with standard visualization methods. The typical user-facing workflows, with internal logic abstracted away, are shown in Fig 1: a set of reads is converted to cell × gene matrices derived from spliced and unspliced mRNA molecule measurements, the matrices are processed to generate phase plots describing a dynamical transcription process, and finally the transcriptional dynamics are fit, extrapolated, and displayed in a low-dimensional embedding.

Fig 1. A summary of the user-facing steps of a typical RNA velocity workflow.

Initial processing of sequencing reads produces spliced and unspliced counts for every cell, across all genes. Inference procedures, implemented in velocyto and scVelo, fit a model of transcription, and predict cell-level velocities. The final embedding of cells and smoothed velocities is displayed in the top two principal component dimensions. Visualizations adapted from [25, 26]; dataset from [1]. The DNA and RNA illustrations are derived from the DNA Twemoji by Twitter, Inc., used under CC-BY 4.0.

Despite the popularity of RNA velocity [13, 27] and increasingly sophisticated attempts to combine it with more traditional methods for trajectory inference [8, 10], there has been little comprehensive investigation of the modeling assumptions that underlie the seemingly simple workflow, with the sole dedicated critique to date largely focusing on the embedding process [28]. This is an impediment to applying, interpreting, and refining the methods, as problems arise even in the simplest cases. Consider, for example, the result displayed in Fig 1, where the outputs of the two most popular RNA velocity programs applied to human embryonic forebrain data generated by La Manno et al. [1] (“forebrain data”) are qualitatively different. The inferred directions in the example should recapitulate a known differentiation trajectory from radial glia to mature neurons. However, scVelo, which “generalizes” velocyto, fails to identify, and even reverses, the trajectory, suggesting totally different causal relationships between cell types. This type of problematic result has been reported elsewhere (Figs 2–3 of [3], Fig 2 of [21], Fig 4B of [5], Fig 5A of [9], Fig 5 of [10], and Fig 3 of [29]).

Motivated by such discrepancies, we wondered whether either velocyto or scVelo is reliable for standard use in applications where ground truth may be unknown. An examination of their theoretical foundations, and those of related methods, revealed that they are largely informal. Even the term “RNA velocity” is not precisely defined, and is used for the following distinct concepts:

A generic method to infer trajectories and their direction using relative unspliced and spliced mRNA abundances by leveraging the causal relationship between the two RNA species, which is the interpretation in [24].

A set of tools implementing this method or parts of it, as in an “RNA velocity workflow implemented in kallisto|bustools,” which is the interpretation in [30].

A gene- and cell-specific quantity under a continuous model of transcription, as in “the RNA velocity of a cell is $ds/dt$”, which is the interpretation in [18, 27].

A gene- and cell-specific quantity under a probabilistic model of transcription, as in “the RNA velocity of a cell is $d\mathbb{E}[S_t]/dt$”, which is the interpretation in [4].

A gene-specific average quantity, as in “the total RNA velocity of a gene is ∑i(βui − γsi)”, which is the interpretation in [12, 27].

A cell-specific vector composed of gene-specific velocity components, as in “the vector RNA velocity of a cell is βjuij − γjsij”, which is the interpretation in [7, 9, 27].

The cell-specific linear or nonlinear embedding of a cell-specific vector in a low-dimensional space, which is the interpretation in [9].

A local property, such as curvature, of a theorized cell landscape computed either from an embedding or a set of velocities, which is the interpretation in [22, 29].

These discrepancies and, more broadly, the limitations of current theory, stem from historical differences between sub-fields, which have calcified over the past twenty years of single-cell biology. On the one hand, fluorescence transcriptomics methods, including single-molecule fluorescence in situ hybridization and live-cell MS2 tagging, which target small, well-defined systems with a narrow set of probes [31–33], have motivated the development of interpretable stochastic models of biological variation [34, 35]. On the other hand, “sequence census” methods [36], such as scRNA-seq, provide genome-wide quantification of RNA, but the associated challenges of exploratory, high-dimensional data analysis have not, for the most part, been addressed with mechanistic models. Instead, descriptive summaries, such as graph representations and low-dimensional embeddings, are the methods of choice [37]. Nevertheless, descriptive analyses, even if ad hoc, can still facilitate biological discovery: RNA velocity has been used to produce plausible trajectories [38–46], and our simulations show that it can recapitulate key information about differentiation trajectories in best-case scenarios (Fig A in S1 Text). These results highlight the potential of RNA velocity, and motivated us to review its assumptions, understand its current failure modes, and solidify its foundations.

Towards this end, we found it helpful to contrast the sub-fields of fluorescence transcriptomics and sequencing, which have analogous goals, albeit disparate origins that have led to analytical methods with distinct philosophies and mathematical foundations. The sub-fields have, at times, interacted. Fluorescence transcriptomics can now quantify thousands of genes at a time, and this scale of data is now occasionally presented using visual summaries popular for RNA sequencing data, such as principal component analysis (PCA) [47], Uniform Manifold Approximation and Projection (UMAP) [48], and t-distributed stochastic neighbor embedding (t-SNE) [49, 50]. Conversely, the commercial introduction of scRNA-seq protocols with unique molecular identifiers (UMIs) has spurred the adoption of theoretical results from fluorescence transcriptomics for sequence census analysis [51–55]. Sequencing studies frequently use count distribution models that arise from stochastic processes, such as the negative binomial distribution, albeit without explicit derivations or claims about the data-generating mechanism [51, 56, 57]. These connections highlight the promise of mechanistic gene expression models: in principle, parameters can be fit to sequencing data to produce a physically interpretable, genome-scale model of transcriptional regulation in living cells, and some steps have been taken in this direction over the past decade [52–55, 58, 59].

RNA velocity methods are products of the sequence census paradigm: they draw heavily on low-dimensional embeddings and graphs derived from the raw data. Their current limitations stem from viewing biology through the lens of signal processing, where noise is something to be eliminated or smoothed out. We posit that it is more appropriate to view the data through the lens of quantitative fluorescence transcriptomics, in which noise is a biophysical phenomenon in its own right. Through this lens, modeling that decomposes variation into single-molecule (intrinsic) and cell-to-cell (extrinsic) [60] components, in addition to technical noise [61], is key. Beyond this conceptual issue, we find that an assessment of the impact of hyper-parameterized, heuristic data pre-processing and visualization in current RNA velocity workflows is useful for developing more reliable analyses.

Goals and findings

Fully describing what RNA velocity does, why it may fail, and how it can be improved requires work on several fronts:

In the section “Workflow and implementations,” we describe an idealized “standard” RNA velocity workflow. We introduce the biophysical foundations presented in the original publication, outline the methodological choices implemented in the software packages, and enumerate the tunable hyperparameters left to the user.

In the section “Logic and methodology,” we probe the logic of the assumptions made in the workflow and describe potential failure points. This analysis revisits the outline through complementary critical lenses, adapted to the mechanistic and phenomenological steps. To characterize its biological coherence, we compare the concrete and implicit biophysical models to those standard in the field of fluorescence transcriptomics, and discuss the implications of assumptions that do not appear to be backed by a biophysical or mathematical argument. To characterize its stability, we test the quantitative effects of tuning hyperparameters and using different software implementations on real datasets.

Our findings on RNA velocity have implications for other scRNA-seq analyses. On one hand, the theory behind RNA velocity is not sufficiently robust. The models disagree with known biophysics: they do not recapitulate bursty production [62], and place needlessly restrictive constraints on regulatory trends. They are also internally inconsistent, as they do not preserve cell identities: genes are fit independently, so the same cells’ placement along putative trajectories differs between genes. Furthermore, the embedding processes are ad hoc and heavily rely on error cancellation, apparently discarding much of the data in the process. These problems are intrinsic, and derived methods inherit them.

Fortunately, better options, inspired by fluorescence transcriptomics models, are available. In order to develop a meaningful foundation for RNA velocity, we formalize its stochastic model and describe an inferential procedure that can be internally coherent and consistent with transcriptional biophysics. Furthermore, by examining the assumptions underpinning RNA velocity and reframing them in terms of stochastic dynamics, we find that the velocyto and scVelo procedures naturally emerge as approximations to our solutions. Our approach, presented in the section “Prospects and solutions,” provides an alternative to current trajectory inference methods: instead of using physically uninterpretable adjacency metrics and fitting a narrow set of topologies, it is relatively straightforward to solve many combinations of transient or stationary topologies and apply standard Bayesian methods to identify the best fit. Conceptually, instead of “denoising” data, our approach proposes fitting the molecule distributions and preserving the uncertainty inherent in noisy biological and experimental processes.

Workflow and implementations

We begin with a conceptual overview of an idealized RNA velocity workflow, with a description of implementation-specific choices. We focus on datasets with cell barcodes and UMIs, such as those generated by the 10x Genomics Chromium platform [63], as they provide the most natural comparison to discrete stochastic models later in the discussion (“Occupation measures provide a theoretical framework for scRNA-seq” under “Prospects and solutions”). We summarize the workflow in Fig 2, giving particular attention to the parameter choices required at each step. To clarify the information transfer in the process, we report the manipulations performed and the variables defined in a single run of the processing workflow in Fig B in S1 Text (as used to generate Fig 4 of [1]).

Fig 2. An RNA velocity workflow, beginning with read processing and ending with two-dimensional projection, and the parameters that must be specified by the user.

Pre-processing

RNA velocity analysis begins by processing raw sequencing data to distinguish spliced and unspliced molecules. This is a genomic alignment problem. For example, reads aligning to intronic references are assigned to unspliced molecules, whereas reads spanning exon-exon splice junctions are assigned to spliced molecules. Data from reads associated with a single UMI are combined to generate a label of “spliced,” “unspliced,” or “ambiguous” for each read. “Ambiguous” reads are omitted from downstream analysis, so the assignments are effectively binary.

Until recently, traditional alignment and UMI counting software, such as Cell Ranger from 10x Genomics, discarded intronic information [63]. The same was true of pseudoalignment methods, as they identify transcript classes consisting of annotated, and presumably terminal, isoforms [64]. The explicit quantification of transient intron-containing molecules appears to have been introduced in the velocyto command-line interface [1]. Since then, existing workflows have added functionality for unspliced transcript quantification [27]. In particular, alignment can be performed via STARsolo [65] and dropEst [66], whereas pseudoalignment can be performed via kallisto|bustools [30] or salmon [27]. Benchmarking has shown discrepancies between the outputs of these workflows [27, 30], apparently due to differences in filtering, thresholding, and counting ambiguous reads. However, there is currently little principled reason to prefer one program’s results to another, as quantification rules largely follow velocyto, and assume a two-species model is sufficient.

Count processing

The raw count data are processed to smooth out noise contributions that can skew the downstream analysis. This step is generally combined with the standard quality control techniques for scRNA-seq [37]. First, cells with extremely low expression are filtered out. Then, a subset of several thousand genes with the highest expression and variation are selected. The counts are normalized by the number of cell UMIs to counteract technical and cell size effects. At this point, the PCA projection is computed from log-transformed spliced RNA counts. Finally, the normalized counts are smoothed out by nearest-neighbor pooling. To accomplish this, the algorithm computes the k nearest cell neighbors in a PCA space for each cell, then replaces the abundance with the neighbors’ average. This step is crucial, as it produces the cyclic or near-cyclic “phase portraits” used in the inference procedure.

The implementation specifics vary even between the two most popular packages, the Python versions of velocyto and scVelo. For example, there appears to be no consensus on the appropriate k or neighborhood definition for imputation. The original publication reports k between 5 and 550, calculated using Euclidean distance in 5–19 top PC dimensions [1]. By default, scVelo uses k = 30 in the top 30 PC dimensions [3].
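The count processing steps above can be sketched in a few lines. This is a minimal illustration, not the velocyto or scVelo code: the function name `knn_smooth`, the rescaling to the median depth, and the use of `log1p` before PCA are assumptions made for the example; only the k = 30 and 30-PC defaults come from the text.

```python
import numpy as np

def knn_smooth(spliced, unspliced, k=30, n_pcs=30):
    """Normalize counts per cell, then average each cell over its k nearest neighbors.

    spliced, unspliced: (cells x genes) arrays of raw UMI counts.
    Hypothetical helper; the normalization target and log1p transform are assumptions.
    """
    spliced = np.asarray(spliced, dtype=float)
    unspliced = np.asarray(unspliced, dtype=float)

    def size_normalize(x):
        # Divide by the per-cell UMI total and rescale to the median depth.
        totals = x.sum(axis=1, keepdims=True)
        totals[totals == 0] = 1.0
        return x / totals * np.median(totals)

    s_norm, u_norm = size_normalize(spliced), size_normalize(unspliced)

    # PCA of log-transformed spliced counts via SVD of the centered matrix.
    logged = np.log1p(s_norm)
    centered = logged - logged.mean(axis=0)
    left, sing, _ = np.linalg.svd(centered, full_matrices=False)
    n_pcs = min(n_pcs, sing.size)
    pcs = left[:, :n_pcs] * sing[:n_pcs]

    # Euclidean k nearest neighbors in PC space (the cell itself is normally included).
    sq = (pcs ** 2).sum(axis=1)
    dist2 = sq[:, None] + sq[None, :] - 2.0 * pcs @ pcs.T
    k = min(k, pcs.shape[0])
    neighbors = np.argsort(dist2, axis=1)[:, :k]

    # Replace each cell's abundances with the neighborhood average.
    s_smooth = s_norm[neighbors].mean(axis=1)
    u_smooth = u_norm[neighbors].mean(axis=1)
    return s_smooth, u_smooth, neighbors

# Toy data: 200 cells, 50 genes, independent Poisson counts.
rng = np.random.default_rng(0)
S = rng.poisson(5.0, size=(200, 50))
U = rng.poisson(1.0, size=(200, 50))
S_smooth, U_smooth, nbrs = knn_smooth(S, U, k=30, n_pcs=10)
```

Even on this structureless toy input, pooling sharply reduces the per-gene variance, which illustrates how strongly the choice of k and neighborhood definition shapes the data passed to inference.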

Inference

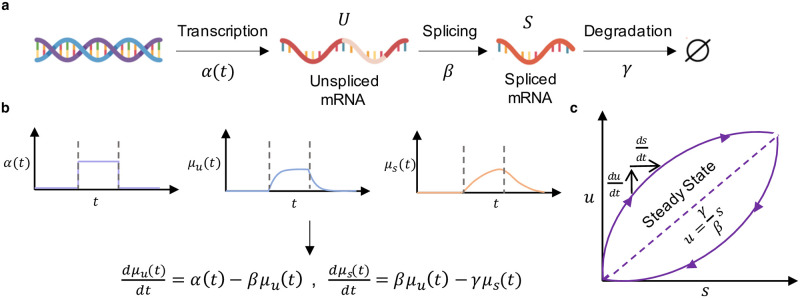

The normalized and smoothed count matrices are fit to a biophysical model of transcription. The model structure for a single gene is outlined in Fig 3a. α(t) is a transcription rate, which has pulse-like behavior over the course of the trajectory. The constant parameters are β, the splicing rate, and γ, the degradation rate. Driving by α(t) induces continuous trajectories μu(t) and μs(t), which informally represent instantaneous averages, μ, of the unspliced, u, and spliced, s, species, governed by the following ordinary differential equations (ODEs):

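$$\frac{d\mu_u}{dt} = \alpha(t) - \beta \mu_u, \qquad \frac{d\mu_s}{dt} = \beta \mu_u - \gamma \mu_s \tag{1}$$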

Fig 3.

a. The continuous model of transcription, splicing, and degradation used for RNA velocity analysis. b. Plots of α(t), μu(t), and μs(t) over time t and the corresponding governing equations for the system. Dashed lines indicate time of switching event. c. Outline of the common phase portrait representation, with both steady state and dynamical models denoted. Adapted from [1]. The DNA and RNA illustrations are derived from the DNA Twemoji by Twitter, Inc., used under CC-BY 4.0.

The qualitative behaviors of these functions are shown in Fig 3b. By fitting smoothed count data for a single gene, now interpreted as samples from a dynamical phase portrait governed by Eq 1 (Fig 3c), it is possible to estimate the ratio γ/β. Finally, with this ratio in hand, the velocity vi may be computed for each cell i:

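$$v_i = \frac{\Delta s_i}{\Delta t} = \beta u_i - \gamma s_i = \beta \left( u_i - \frac{\gamma}{\beta} s_i \right) \tag{2}$$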

where si and ui are cell-specific counts, Δt is an arbitrary small time increment, and Δsi is the change in spliced counts achieved over that increment.
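To make the model concrete, the following sketch integrates the continuous system of Eq 1 for a single pulse of transcription and evaluates the velocity of Eq 2 along the trajectory. The forward Euler scheme, the function names, and the parameter values are illustrative assumptions, not taken from either package.

```python
def simulate_pulse(alpha_on, beta, gamma, t_switch, t_end, dt=1e-3):
    """Forward Euler integration of Eq 1 with alpha(t) = alpha_on before t_switch and 0 after.

    Returns a list of (t, mu_u, mu_s) tuples, starting from the empty state.
    """
    u, s, t = 0.0, 0.0, 0.0
    trajectory = [(t, u, s)]
    n_steps = int(round(t_end / dt))
    for step in range(n_steps):
        alpha = alpha_on if t < t_switch else 0.0
        du = alpha - beta * u          # transcription minus splicing
        ds = beta * u - gamma * s      # splicing minus degradation
        u, s = u + du * dt, s + ds * dt
        t = (step + 1) * dt
        trajectory.append((t, u, s))
    return trajectory

def velocity(u, s, beta, gamma):
    """Velocity of the spliced species at state (u, s)."""
    return beta * u - gamma * s

# Assumed example values: induction for 20 time units, then repression.
traj = simulate_pulse(alpha_on=10.0, beta=1.0, gamma=0.5, t_switch=20.0, t_end=40.0)
_, u_early, s_early = traj[1000]    # t = 1, during induction
_, u_late, s_late = traj[22000]     # t = 22, shortly after the switch
v_early = velocity(u_early, s_early, 1.0, 0.5)
v_late = velocity(u_late, s_late, 1.0, 0.5)
```

With these values, the velocity is positive during induction and negative after the switch, and the state approaches (α/β, α/γ) = (10, 20) before repression begins.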

The popular packages differ on the appropriate way to fit the rate parameters. The velocyto procedure presupposes that the system reaches equilibria at the low- and high-expression states of α(t), and approximates them by the extreme quantiles of the phase plots. By computing the slope of a linear fit to these quantiles, it obtains the parameter γ/β (Fig 3c). On the other hand, scVelo relaxes the assumption of equilibrium and implements a “dynamical” model, which fits the solution of Eq 1 to the entire phase portrait to obtain γ and β separately. This methodological difference corresponds to conceptual differences in the interpretation of imputed data. In velocyto, imputation appears to be an ad hoc procedure for filtering technical effects, in line with the usual usage [67, 68]. On the other hand, in scVelo, the imputed data are called “moments” and treated as identical to the instantaneous averages μu(t) and μs(t) of the process. In addition, scVelo offers a “stochastic” model, which posits pooled second moments are equivalent to the instantaneous second moments (e.g., the sum of s² over neighbors is equal to the instantaneous second moment ⟨s²⟩(t)).
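The steady-state fit can be illustrated with a short sketch. It is a simplified stand-in for the velocyto procedure: the function name `fit_steady_state_ratio`, the rescaled-sum ranking used to pick extreme cells, and the regression through the origin are assumptions chosen for brevity.

```python
import numpy as np

def fit_steady_state_ratio(s, u, quantile=0.05):
    """Estimate gamma/beta as the slope of u against s over cells in the extreme quantiles.

    Hypothetical helper: cells are ranked by the sum of max-rescaled s and u,
    and a least-squares line through the origin is fit to both tails.
    """
    s = np.asarray(s, dtype=float)
    u = np.asarray(u, dtype=float)
    score = s / max(s.max(), 1e-12) + u / max(u.max(), 1e-12)
    low, high = np.quantile(score, [quantile, 1.0 - quantile])
    extreme = (score <= low) | (score >= high)
    return float(s[extreme] @ u[extreme] / (s[extreme] @ s[extreme]))

# Synthetic phase portrait with assumed rates: 100 "off" cells, 100 "on" cells,
# and 300 cells along the induction branch of Eq 1 started from the empty state.
rng = np.random.default_rng(1)
alpha, beta, gamma = 10.0, 1.0, 0.5
t = rng.uniform(0.0, 5.0, size=300)
coef = -alpha / (gamma - beta)
u_transient = alpha / beta * (1.0 - np.exp(-beta * t))
s_transient = alpha / gamma + coef * np.exp(-beta * t) - (alpha / gamma + coef) * np.exp(-gamma * t)
u_all = np.concatenate([np.zeros(100), np.full(100, alpha / beta), u_transient])
s_all = np.concatenate([np.zeros(100), np.full(100, alpha / gamma), s_transient])
u_all = np.clip(u_all + rng.normal(0.0, 0.2, size=u_all.size), 0.0, None)
s_all = np.clip(s_all + rng.normal(0.0, 0.2, size=s_all.size), 0.0, None)

ratio = fit_steady_state_ratio(s_all, u_all)
v_over_beta = u_all - ratio * s_all   # velocity in units of the unknown splicing rate
```

The fit recovers γ/β here only because the synthetic data contain cells at both equilibria; if the extreme quantiles are populated by transient cells, the slope no longer estimates γ/β.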

The genes are analyzed independently, generating a velocity vij for each cell i and gene j. As the velocyto procedure cannot separately fit βj and γj, its velocities have different units for different genes. On the other hand, the scVelo procedure does separately fit the rate parameters, albeit by assigning a latent time tij to each cell, distinct for each gene’s fit.

Embedding

Low-dimensional representations are generated using one of the conventional algorithms, such as PCA, t-SNE, or UMAP. These algorithms can be conceptualized as functions that map from a high-dimensional vector si to a low-dimensional vector E(si). The original publication offers two methods to convert a cell’s velocity vector vi to a low-dimensional representation [1].

If the embedding is deterministic (e.g., E is PCA on log-transformed counts), one can define a source point E(si), compute a destination point E(si + viΔt) = E(si + Δsi), and take the difference of these two low-dimensional vectors to obtain a local vector displacement:

$$\Delta E_i = E(s_i + \Delta s_i) - E(s_i) \tag{3}$$

This displacement is then interpreted as a scalar multiple of the cell-specific embedded velocity.

If the embedding is non-deterministic, one can apply an ad hoc nonlinear procedure. This procedure essentially computes an expected embedded vector by weighting the directions to k embedding neighbors; neighbors that align with Δsi are considered likely destinations for cell state transitions in the near future:

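$$\Delta E_i = \sum_{j \in \mathrm{kNN}(i)} w(s_j - s_i, \Delta s_i) \, \frac{E(s_j) - E(s_i)}{\lVert E(s_j) - E(s_i) \rVert} \tag{4}$$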

where w is a composition of the softmax operator (with a tunable kernel width parameter) with a measure of concordance between the arguments. Once an average direction is computed, it undergoes a set of corrections, e.g., to remove bias toward high-density regions in the embedded space. Finally, the cell-specific embedded vectors are aggregated to find the average direction over a region of the low-dimensional projection.
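Both embedding procedures can be sketched compactly. The code below is an illustration under stated assumptions rather than either package's implementation: the function names are hypothetical, cosine similarity stands in for the measure of concordance, the density and randomized-control corrections are omitted, and the toy data place cells along a single line in gene space.

```python
import numpy as np

def pca_embedding(S, n_components=2):
    """Return a function E that maps count vectors to PCA coordinates of log1p counts."""
    logged = np.log1p(S)
    mean = logged.mean(axis=0)
    _, _, vt = np.linalg.svd(logged - mean, full_matrices=False)
    loadings = vt[:n_components].T
    return lambda x: (np.log1p(x) - mean) @ loadings

def linear_displacement(E, S, dS):
    """Deterministic case: embedded destination minus embedded source."""
    destination = np.clip(S + dS, 0.0, None)  # extrapolated counts are kept non-negative
    return E(destination) - E(S)

def neighbor_displacement(S, dS, embedded, neighbors, kernel_width=0.1):
    """Nonlinear case: softmax-weighted average of unit directions to embedding neighbors."""
    out = np.zeros_like(embedded, dtype=float)
    for i, nbrs in enumerate(neighbors):
        nbrs = nbrs[nbrs != i]
        diffs = S[nbrs] - S[i]
        # Concordance (assumed here to be cosine similarity) between each
        # neighbor offset and the extrapolated change of cell i.
        norms = np.linalg.norm(diffs, axis=1) * np.linalg.norm(dS[i]) + 1e-12
        concordance = diffs @ dS[i] / norms
        weights = np.exp((concordance - concordance.max()) / kernel_width)
        weights /= weights.sum()
        directions = embedded[nbrs] - embedded[i]
        directions /= np.linalg.norm(directions, axis=1, keepdims=True) + 1e-12
        out[i] = weights @ directions
    return out

# Toy trajectory: 20 cells ordered along one direction, all moving forward.
t = np.arange(20, dtype=float)
direction = np.array([1.0, 2.0, 3.0])
S = 1.0 + t[:, None] * direction
dS = np.tile(0.5 * direction, (20, 1))
E = pca_embedding(S)
embedded = E(S)
neighbors = np.array([[max(i - 1, 0), min(i + 1, 19)] for i in range(20)])
lin = linear_displacement(E, S, dS)
nonlin = neighbor_displacement(S, dS, embedded, neighbors)
```

In this idealized case both procedures point from each cell toward its successor. The nonlinear variant depends on the neighbor set and kernel width, and the last cell, which has no forward neighbor, is assigned a backward arrow: the output can only point toward observed cells.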

The packages almost exclusively use the nonlinear embedding procedure. There is no consensus on the appropriate choice of embedding, number of neighbors, or measure of concordance. PCA, t-SNE, and UMAP have been used to generate low-dimensional visualizations [1, 3]. The original publication uses k between 5 and 300 and applies square-root or logarithmic transformations prior to computing the Pearson correlation between the velocity and neighbor directions [1]. In contrast, scVelo uses a recursive neighbor search by averaging over neighbors and neighbors of neighbors (with k = 30), and implements several variants of cosine similarity [3]. An optional step adjusts the embedded velocities by subtracting a randomized control; this correction is usually omitted in demonstrations of velocyto and implemented but apparently undocumented in scVelo.

As demonstrated in Fig 2, the linear PCA embedding is the simplest dimensionality reduction technique; it consists of a projection and requires fewer parameter choices than other methods. However, it is only consistently used in Revelio [11]. The velocyto package does not appear to have a native implementation of this procedure, although it is briefly demonstrated in the original article (Fig 2d and SN2 Figs 8–9 of [1]). On the other hand, scVelo does implement the PCA velocity projection, but disclaims the results of using it as unrepresentative of the high-dimensional dynamics.

Logic and methodology

To understand the implications of the choices implemented in various RNA velocity workflows, we examined the procedures from a biophysics perspective, with a view towards understanding the mechanistic and statistical meaning of methods implemented. In this section, we broadly discuss potential challenges, problematic assumptions, and contradictory results. In the following section, we draw on lessons learned and propose a modeling approach of our own.

Pre-processing

As outlined in “Pre-processing” under “Workflow and implementations,” several workflows are available for converting raw reads to molecule counts. These workflows largely follow the logic set out in the original implementation [1]; however, as pointed out by Soneson et al. [27], they produce different outputs from the same data. We reproduced their analysis on a broader selection of datasets (as reported in “Data availability”) in Fig C in S1 Text, according to the procedure outlined under “Pre-processing concordance.” The performance was broadly consistent with the previous benchmarking and the description under “Pre-processing” in the previous section: the methods agreed on the definition of “spliced” molecules, but different rules for the assignment of “unspliced” molecules led to discrepancies in counts. These discrepancies were particularly pronounced when comparing datasets one gene at a time, likely due to noise in tens of thousands of low-expressed genes (ρ by cell in Fig C in S1 Text; cf. lower triangle of Fig S10 in [27]).

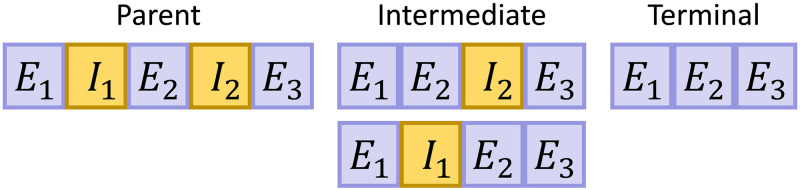

However, a simple comparison between the software outputs obscures a far more fundamental challenge: the binary classification of transcripts as either spliced or unspliced is necessarily incomplete. The average human transcript has 4–7 introns [69], and a combinatorial number of potential transient and terminal isoforms. The vast majority of genes are alternatively spliced [70–72].

We can consider the hypothetical example of a nascent transcript with the structure E1I1E2I2E3, where Ii are introns and Ei are exons, as shown in Fig 4. If we place all UMIs with intronic reads into the “unspliced” category, we conflate the parent and intermediate transcripts. On the other hand, if we place all UMIs with splice junctions into the “spliced” category, we conflate the intermediate and terminal transcripts. Adding more complexity, some isoforms may retain introns through alternative splicing mechanisms; for example, the intermediate transcripts may be exported, translated, and degraded alongside the terminal.

Fig 4. A two-intron mRNA species may not have well-defined “unspliced” and “spliced” forms.
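The ambiguity can be made explicit by enumerating the species in the two-intron example. The sketch below is purely illustrative: the function names are hypothetical, and it assumes that every subset of introns can be removed and that any remaining intron or exon-exon junction can produce a read.

```python
from itertools import combinations

def splicing_states(n_introns):
    """List every transcript obtainable by removing a subset of introns."""
    states = []
    for n_removed in range(n_introns + 1):
        for removed in combinations(range(1, n_introns + 1), n_removed):
            parts = []
            for i in range(1, n_introns + 2):
                parts.append(f"E{i}")
                if i <= n_introns and i not in removed:
                    parts.append(f"I{i}")
            states.append("".join(parts))
    return states

def read_evidence(transcript, n_introns):
    """Return (can yield intronic reads, can yield splice-junction reads)."""
    has_intron = "I" in transcript
    has_junction = any(f"E{i}E{i + 1}" in transcript for i in range(1, n_introns + 1))
    return has_intron, has_junction

states = splicing_states(2)
evidence = {state: read_evidence(state, 2) for state in states}
# states is ['E1I1E2I2E3', 'E1E2I2E3', 'E1I1E2E3', 'E1E2E3']
```

The two intermediates carry both kinds of evidence, so either binary rule merges them with a neighboring species. The number of states doubles with each additional intron.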

The binary model is not large enough to include the diversity of possible splicing dynamics, but approximately holds under fairly restrictive conditions: the predominance of a single terminal isoform, as well as the existence of a single rate-limiting step in the splicing process. Previous work reports that minor isoforms are non-negligible [70, 72], differential isoform expression is physiologically significant [72–74], and intron retention in particular is implicated in regulation and pathology [75–78]. Splicing rate data are more challenging to obtain, but targeted experiments [79], genome-wide imaging [80], and our preliminary mechanistic investigations [81] suggest that selection and removal of individual introns is stochastic, but the overall splicing process has rather complex kinetics, not reducible to a single step.

RNA velocity biophysics

We will first inspect the complexity obscured by the simple schema given in Fig 3a. The velocity manuscripts use several distinct models for the transcription rate α(t). Furthermore, the amounts of the unspliced and spliced molecular species (previously denoted informally by u and s) have incompatible interpretations. The following models make fundamentally different claims about the data-generating process and imply fundamentally different inference procedures.

1. α(t) is piecewise constant over a finite time horizon; u and s are discrete (SN2 pp. 2–3, Fig 1a-b of [1]).

2. α(t) is continuous and periodic; u and s are discrete (SN2 pp. 2–3, Fig 1e of [1]).

3. α, β, and γ all smoothly vary over a finite time horizon according to an undisclosed function, with α exhibiting pulse behavior; u and s are discrete (SN2 Fig 5 of [1]).

4. α, β, and γ all smoothly vary over a finite time horizon according to an arbitrary function; u and s are continuous (Fig 3 of [21]).

5. α(t) is piecewise constant over a finite time horizon; u and s are continuous (Fig 1 of [1], Methods of [3]).

6. α(t) is piecewise constant over a finite time horizon; u and s are continuous-valued but may contain discontinuities (Methods of [3]).

7. α is constant; u and s are continuous (Fig 1b and SN1 pp. 1–2 of [1]). This formulation yields the reaction rate equation, and cannot produce the bimodal phase plots of interest.

8. α is constant; u and s are discrete (SN1 pp. 2–3 of [1]). This is the stochastic extension [82] of the previous model, and cannot produce the bimodal phase plots of interest, as explicitly shown on page 3 of SN1 in [1].

These discrepancies make a comprehensive analysis challenging. Models 7–8 do not contain differentiation dynamics. Certain models are contrived; models 3–4 propose transcription rate variation without motivating the specific form, and model 6 introduces nonphysical discontinuities. Model 2 alludes to limit cycles in stochastic systems under periodic driving, an intriguing phenomenon in its own right [83, 84], but not otherwise explored in the scVelo and velocyto publications. For the rest of this report, we focus on the discrete formulation (model 1) and its continuous analog (model 5).

For the discrete formulation, the RNA velocity v should be interpreted as the time derivative of the expectation of a random variable St that tracks the number of spliced RNA, conditional on the current state (Section A in S1 Text):

$$v = \frac{d}{dt} \mathbb{E}[S_t \mid U_t = u, S_t = s] = \beta u - \gamma s \tag{5}$$

For the continuous formulation, it should be interpreted as the time derivative of the deterministic variable st that tracks the amount of spliced RNA, initialized at the current state:

$$v = \frac{ds_t}{dt} = \beta u - \gamma s, \qquad (u_t, s_t) = (u, s) \tag{6}$$

These formulations happen to be mathematically identical, which creates ambiguity. Nevertheless, both are legitimate, if narrow, statements about the near future of a process initialized at a state with u unspliced and s spliced molecules. The questions that arise immediately before, and immediately after, the velocity computation procedure, are (1) what generative model should be fit to obtain β and γ and (2) even with a v, how much use can one make of it?
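The agreement between the two formulations follows from the linearity of the reaction rates. As a brief sketch, assuming the standard chemical master equation for the discrete model with transcription rate α(t), splicing rate β, and degradation rate γ, where P(u, s, t) denotes the probability of observing u unspliced and s spliced molecules at time t:

```latex
\begin{aligned}
\frac{dP(u,s,t)}{dt} &= \alpha(t)\,[P(u-1,s,t) - P(u,s,t)] \\
&\quad + \beta\,[(u+1)\,P(u+1,s-1,t) - u\,P(u,s,t)] \\
&\quad + \gamma\,[(s+1)\,P(u,s+1,t) - s\,P(u,s,t)], \\
\frac{d\,\mathbb{E}[S_t]}{dt} &= \sum_{u,s} s\,\frac{dP(u,s,t)}{dt}
  = \beta\,\mathbb{E}[U_t] - \gamma\,\mathbb{E}[S_t].
\end{aligned}
```

If the distribution at the current time is a point mass at (u, s), the right-hand side reduces to βu − γs, which is also the instantaneous rate of change of the deterministic variable initialized at the same state. The identity is specific to first moments of a linear system; higher moments of the two formulations differ.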

Model definition

Continuous, deterministic models are fundamentally inappropriate in the low-copy number regime, which is predominant across the transcriptome [85–87]. Although continuous equations such as Eq 1 can represent the evolution of moments, they are insufficient for inference, as fitting average mRNA abundance amounts to invoking the central limit theorem for a very small, strictly positive quantity [88–92]. A comprehensive understanding of the stochastic noise model is necessary prior to making such approximations. Therefore, simulation methods that use a continuous model are immediately suspect [15]. We describe implementation-specific concerns in the subsections “Count processing” and “Inference” under “Logic and methodology.”

The motivation behind a pulse model of transcriptional regulation is obscure. Although dynamic processes certainly have transiently expressed genes [93–96], it is far from clear that this model applies across the transcriptome, to thousands of potentially irrelevant genes. Indeed, it is not even coherent with genes showcased in the original report (Fig 4d and Extended Data Fig 8b of [1]): only ELAVL4 appears to show a symmetric pulse of expression. Finally, even when this model does apply, the assumption of constant splicing and degradation rates across the entire lineage is a potentially severe source of error, with no simple way to diagnose it [21].

Most problematic is that even the discrete model is incoherent with known mammalian transcriptional dynamics. If we suppose induction and repression periods are relatively long, as for a stationary, terminal, or unregulated cell population, we arrive at genome-wide constitutive transcription, in which the rate of RNA production is constant. This contradicts numerous sources that suggest transcriptional activity varies with time even in stationary cells [62, 90, 97–103], and is effectively described by a telegraph model that stochastically switches between active and inactive states [104, 105].

Thus, we must impose basic consistency criteria. Using the models outlined under “RNA velocity biophysics” requires the assumption that stationary, homogeneous cell populations are Gaussian or Poisson-distributed. This assumption contradicts at least thirty years of evidence for widespread bursty transcription [98, 105]. We have obtained the answer to question (1) in the previous subsection: the model must be coherent with known biophysics, and provide a robust way to identify cases when its assumptions fail.
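To make the contrast concrete, the following minimal sketch compares count dispersion under constitutive and bursty transcription. It assumes the standard stationary solution of the telegraph model, in which counts are Poisson with a Beta-distributed rate. The rate names (`k_on`, `k_off`, `k_tx`, `deg`) and their values are hypothetical and chosen purely for illustration.

```python
import numpy as np

rng = np.random.default_rng(1)
n_cells = 50_000

# Hypothetical telegraph-model rates (illustrative values only)
k_on, k_off = 0.5, 2.0   # gene activation / inactivation rates
k_tx = 30.0              # transcription rate while the gene is active
deg = 1.0                # efflux (degradation) rate

# Stationary telegraph model (assumed form):
#   p ~ Beta(k_on/deg, k_off/deg),  x | p ~ Poisson(k_tx/deg * p)
p_active = rng.beta(k_on / deg, k_off / deg, size=n_cells)
bursty = rng.poisson(k_tx / deg * p_active)

# Constitutive reference with matched mean: x ~ Poisson(mean)
constitutive = rng.poisson(bursty.mean(), size=n_cells)

def fano(x):
    """Variance-to-mean ratio; equals 1 for a Poisson distribution."""
    return x.var() / x.mean()

fano_bursty = fano(bursty)
fano_constitutive = fano(constitutive)
print(f"Fano factor, bursty:       {fano_bursty:.2f}")
print(f"Fano factor, constitutive: {fano_constitutive:.2f}")
```

Under these assumptions, the bursty counts are strongly overdispersed relative to the Poisson reference with the same mean, which is the signature that a constitutive model cannot reproduce.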

Count processing

Some standard properties of constitutive systems appear to at least qualitatively motivate gene filtering. Only genes with spliced–unspliced Pearson correlation above 0.05 are used for fitting parameters (as on p. 4 of SN2 in [1]); if the correlation is below this threshold, the gene is removed from the procedure and presumed stationary. This is valid for the constitutive model, but inappropriate for broader model classes: for example, bursty transcription yields strictly positive correlations, making this statistic ineffective for identifying dynamics [81, 106].
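The filtering rule described above can be sketched as follows. This is a minimal illustration rather than the velocyto implementation: the function name is hypothetical, and the two simulated genes are assumptions. Gene 0 uses independent Poisson counts, as expected for a stationary constitutive system. Gene 1 uses a shared latent intensity as a simple stand-in for any mechanism that induces positive spliced–unspliced correlation; it is not a mechanistic bursty model.

```python
import numpy as np

def spliced_unspliced_correlation(S, U):
    """Per-gene Pearson correlation between spliced (S) and unspliced (U) counts.

    S, U: arrays of shape (n_cells, n_genes). Zero-variance genes get r = 0.
    """
    S = np.asarray(S, dtype=float)
    U = np.asarray(U, dtype=float)
    Sc = S - S.mean(axis=0)
    Uc = U - U.mean(axis=0)
    cov = (Sc * Uc).sum(axis=0)
    denom = np.sqrt((Sc ** 2).sum(axis=0) * (Uc ** 2).sum(axis=0))
    with np.errstate(divide="ignore", invalid="ignore"):
        r = np.where(denom > 0, cov / denom, 0.0)
    return r

rng = np.random.default_rng(0)
n_cells = 20_000

# Gene 0: independent Poisson counts (stationary constitutive assumption)
u0 = rng.poisson(3.0, size=n_cells)
s0 = rng.poisson(6.0, size=n_cells)

# Gene 1: shared latent intensity, a stand-in for positively correlated counts
lam = rng.gamma(shape=2.0, scale=2.0, size=n_cells)
u1 = rng.poisson(lam)
s1 = rng.poisson(lam)

U = np.column_stack([u0, u1])
S = np.column_stack([s0, s1])

r = spliced_unspliced_correlation(S, U)
keep = r > 0.05  # threshold quoted in the text
print("correlations:", np.round(r, 3))
print("genes kept:  ", keep)
```

In this toy setting the filter retains the correlated gene whether or not it is dynamic, which is the failure mode at issue: a positive correlation alone does not distinguish transient dynamics from stationary, non-constitutive transcription.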

Normalization relative to the cell’s molecular count is a standard feature of sequencing workflows [37, 107, 108], but reduces interpretability. Normalization converts absolute discrete count data to a proportion of the total cellular counts, ostensibly to account for the compositional nature of read data [109]. Several recent studies strongly discourage normalization of UMI-based counts [110, 111], although this perspective is not universal [112, 113]. It is clear that continuous-valued normalized data are incompatible with discrete mechanistic models. Moreover, the suitability of continuous models (such as Eq 1) is never explicitly justified, but merely assumed. Since normalization nonlinearly transforms the molecule distributions [68, 111] and introduces a coupling even between independent genes, the precise interpretation of single-gene ODE models is unclear.

Nearest-neighbor averaging is used to smooth the data after normalization. Though it effaces much of the stochastic noise to give an “averaged” trajectory, it introduces distortions of unknown magnitude. As discussed in the subsection “Inference” under “Workflow and implementations,” the imputation step does not have a consistent interpretation. The original report [1] defines it as “kNN pooling” in the manuscript and “imputation” in the documentation (and Fig 17 of SN2), placing the emphasis on denoising. On the other hand, scVelo interprets the local average as an estimate of the expectations μu(t), μs(t). Neither approach appears to be justified by previous studies or benchmarking on ground truth, and both are circular as the neighborhood is computed based on the observed counts. A probabilistic analysis in Section B in S1 Text formalizes more deep-seated issues with using model-agnostic point estimates to “correct” data. Although these claims may hold and simply require more theoretical work to prove, our simulations in “Count processing” under “Prospects and solutions” strongly suggest they are invalid even in the best-case scenario: the phase portraits are smoothed out, but fail to capture the underlying dynamics in a way coherent with those claims.

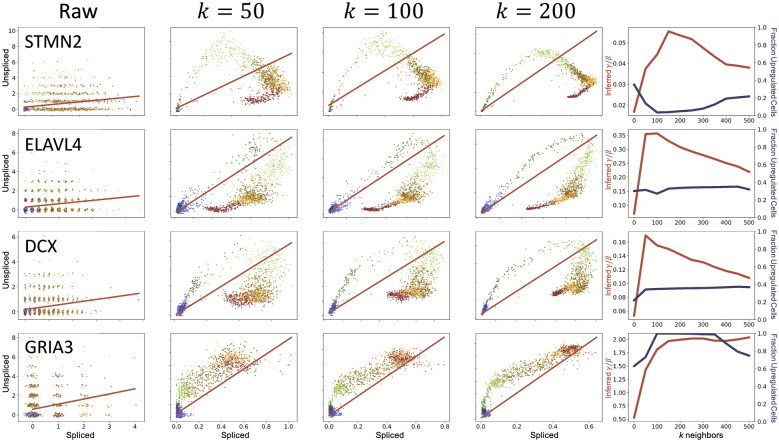

To illustrate these problems, we performed a simple test of self-consistency, illustrated in Fig 5. We reprocessed the forebrain dataset (Fig 4 of [1]) using the velocyto workflow, varying k, and investigated its effect on the appearance of the phase plots and the inferred parameters. As the neighborhood size was increased, the phase plot was distorted, with no apparent “optimal” choice of k.

Fig 5. Distortions in data and instabilities in the inferred γ/β values introduced by the imputation procedure on the forebrain data from [1].

Column 1: Raw data (points: spliced and unspliced counts with added jitter; color: cell type, as in Fig 1; line: best fit line u = γs/β + q, estimated from the entire dataset). Columns 2–4: Normalized and imputed data under various values of k (points: spliced and unspliced counts; color: cell type, as in Fig 1; line: best linear fit u = γs/β + q, estimated from extreme quantiles). Column 5: Inferred values of γ/β (red, left axis) and inferred fraction of upregulated cells, defined as $\frac{1}{N}\sum_i \mathbb{1}\left[u_i - (\gamma s_i/\beta + q) > 0\right]$ over N cells (blue, right axis).
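The kind of self-consistency test described above can be sketched on synthetic data. The following is a simplified illustration under stated assumptions, not the velocyto workflow: neighbors are computed in the two-dimensional (s, u) space of a single gene rather than in a PCA space over all genes, normalization is skipped, and the line is fit by ordinary least squares on cells in the extreme quantiles of s. The function names, the latent coordinate `t`, and the Poisson means are all hypothetical.

```python
import numpy as np

def knn_pool(X, k):
    """Average each cell with its nearest neighbors (Euclidean distance in X).

    The k + 1 closest points are used, which normally includes the cell itself.
    With k = 0 the data are returned unchanged.
    """
    X = np.asarray(X, dtype=float)
    if k == 0:
        return X.copy()
    sq = (X ** 2).sum(axis=1)
    d2 = sq[:, None] + sq[None, :] - 2.0 * X @ X.T
    idx = np.argsort(d2, axis=1)[:, :k + 1]
    return X[idx].mean(axis=1)

def extreme_quantile_fit(s, u, q=0.05):
    """Least-squares line u = slope * s + offset on the bottom/top q quantiles of s."""
    lo, hi = np.quantile(s, [q, 1.0 - q])
    keep = (s <= lo) | (s >= hi)
    slope, offset = np.polyfit(s[keep], u[keep], 1)
    return slope, offset

rng = np.random.default_rng(0)
n_cells = 600
t = rng.uniform(0.0, 1.0, size=n_cells)   # hypothetical latent coordinate
u = rng.poisson(1.0 + 4.0 * t)            # unspliced counts
s = rng.poisson(2.0 + 10.0 * t)           # spliced counts
X = np.column_stack([s, u]).astype(float)

slopes = {}
for k in (0, 10, 50, 200):
    Xk = knn_pool(X, k)
    slope, offset = extreme_quantile_fit(Xk[:, 0], Xk[:, 1])
    slopes[k] = float(slope)
    print(f"k = {k:3d}: slope = {slope:.3f}, offset = {offset:.3f}")
```

Printing the fitted slope for each neighborhood size gives a quick check of how strongly the estimate depends on k in a given dataset; the toy values here carry no biological meaning.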

Inference

Broadly speaking, velocyto-like moment estimates for γ/β are legitimate if the system has time to equilibrate (as outlined under “The ‘deterministic’ velocyto model as a special case”). However, moment-based estimation underperforms maximum likelihood estimation in general. The two approaches are in concordance under the highly restrictive assumptions of error normality and homoscedasticity. These assumptions are routinely violated in the low-copy number regime [88].

Regression on top and bottom quantiles inherits all of the issues of regression on the entire dataset, but compounds them by discarding a large fraction of data. Extremal quantile regression is otherwise a well-developed method [114–117], but it is generally applied to processes with nontrivial tail effects. For the quantile computation the filtering criterion is ad hoc, and not amenable to theoretical investigation. The order statistics of discrete distributions are notoriously challenging to compute [118–120], and even the simplest Poisson case exhibits complex trends [121]. In other words, the extrema themselves may be affected by noise, introducing more uncertainty into inference. Although the original article does perform some validation (SN2, Sec. 3 of [1]), it focuses on cell-specific velocities rather than parameter values, and only provides relative performance metrics rather than actual comparisons to simulated ground truth.

Even without testing the inference procedures against simulations, we can characterize their performance in terms of internal controls. As we demonstrate in Fig 5, the inferred γ/β values were unstable under varying k: the velocyto parameter inference procedure was highly sensitive to a user-defined neighborhood hyperparameter. On the other hand, using a simple ratio of the means (as in the first column and the k = 0 case in the fifth column of Fig 5) produced biases [1].

Interestingly, the fraction of cells predicted to be upregulated is qualitatively more stable, suggesting that the inference step is best understood as an ad hoc binary classifier, rather than a quantitative descriptor of system state. Given the stability of this classifier, as well as our preliminary discussion of similar results in the context of validating protaccel [4], we used this binary classifier as a benchmark in the subsections named “Embedding” under “Logic and methodology” and “Prospects and solutions.”

Regression of the piecewise deterministic “dynamical” model in scVelo asserts the imputed counts have normal noise with equal residuals for spliced and unspliced species, once again implausible in the low-copy number regime. More fundamentally, it fails to preserve gene-gene coherence. If a cell is predicted to lie at the beginning of a trajectory for one gene, this estimate does not inform fitting for any other gene. The “dynamical” model appears to address this discrepancy in a post hoc fashion. First, the algorithm identifies putative “root cells,” which are themselves computed from the velocity embedding. Then, the disparate gene-specific times are aggregated into one by computing a quantile near the median. This procedure presupposes that the velocity graph is self-consistent and physically meaningful, and that the point estimate of process time is sufficient, but does not mathematically prove these points or test them by simulation.

Embedding

After inference and evaluation of Δs for every cell and gene, the array is converted to an embedding-specific representation. In the single-cell sequencing field, low-dimensional projections are more than a visualization method: they are ubiquitous tools for inference and discovery. Transcriptomics workflows convert large data arrays to human-parsable visuals; these visuals are then used to explore gene expression and validate cell type relationships, under the assumption that they represent the underlying data well enough to draw conclusions. However, the embedding procedures involve several distortive steps, which should be recognized and questioned.

For such visuals, the goal is to recapitulate local and global cell-cell relationships. However, accurately representing desired properties such as pairwise relationships between many points is inherently difficult, requiring dimensions several orders of magnitude larger than two to faithfully represent the data [122]. Thus distortion of cell-cell relationships is naturally induced in two-dimensional embeddings, and grows with the number of cells M [122, 123]. Both linear (PCA) and nonlinear (t-SNE/UMAP) methods exhibit these distortions, and warp existing cell-cell relationships or suggest new ones not present in the underlying data [122, 124]. Tuning algorithm parameters can slightly improve some distortion metrics, though often at the expense of others [125]. Essentially, nonlinear embeddings utilize sensitive hyperparameters that can be tuned, but do not provide well-defined criteria for an “optimal” choice [124, 125]. Using visualizations for discovery thus risks confirmation bias.

The velocity algorithms present a particularly natural criterion for quantifying the embedding distortion. The nonlinear embedding procedure generates weights for vectors defined with reference to embedding neighbors. Therefore, we can reasonably investigate the effect of the embedding on the neighborhood definitions. In other words, if the velocity arrows quantify the probability of transitioning to a set of cells, what relationship does this set have to the set of neighbors in the pre-embedded data?

This relationship is conventionally [124] quantified by the Jaccard distance, defined by the following formula:

$$d_J(A, B) = 1 - \frac{|A \cap B|}{|A \cup B|}, \tag{7}$$

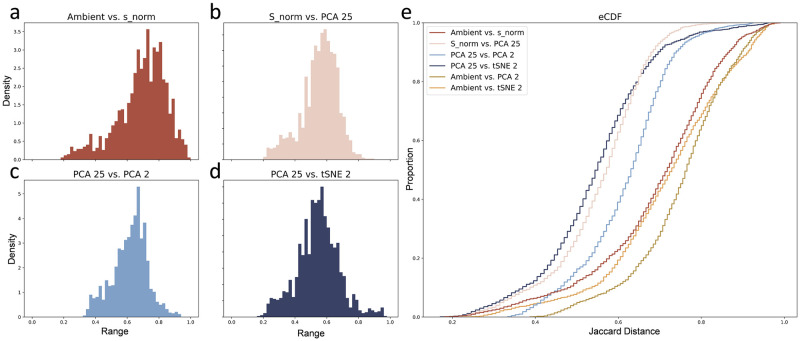

which reports the complement of the normalized overlap between the original sets of cell neighbors (A) and embedded cell sets (B). This dissimilarity metric ranges from 0 to 1, where 1 (or 100%) denotes completely non-overlapping sets. We applied standard steps of dimensionality reduction and smoothing to the forebrain dataset (Fig 4 of [1]) and computed their effect on the neighborhoods (taken to be k = 150 for consistency with the velocity embedding process). We report the Jaccard distance distributions in Fig 6, and observe the gradual degradation of neighborhoods. On average, moving from the ambient high-dimensional space to a two-dimensional representation induced a $d_J$ of 70–75%. Therefore, cell embedding substantially distorts precisely the local structure relevant to velocity embedding.

Fig 6. Normalization followed by two rounds of dimensionality reduction introduce distortions in the local neighborhoods.

a.– d. Histograms of Jaccard distances between intermediate embeddings. e. Empirical cumulative distribution functions of Jaccard distances between intermediate embeddings, as well as the overall distortion (Ambient vs. PCA 2 and Ambient vs. tSNE 2). The palette used is derived from dutchmasters by EdwinTh.
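The neighborhood comparison described above can be sketched as follows. This is a minimal illustration on random data, assuming Euclidean k-nearest neighbors and a two-dimensional PCA computed by singular value decomposition; the helper names, the toy data, and the choice k = 15 are assumptions and do not reproduce the analysis in Fig 6.

```python
import numpy as np

def knn_sets(X, k):
    """Indices of the k nearest neighbors of each row of X (self excluded)."""
    X = np.asarray(X, dtype=float)
    sq = (X ** 2).sum(axis=1)
    d2 = sq[:, None] + sq[None, :] - 2.0 * X @ X.T
    np.fill_diagonal(d2, np.inf)   # a cell is not its own neighbor
    return np.argsort(d2, axis=1)[:, :k]

def jaccard_distance(a, b):
    """1 - |A ∩ B| / |A ∪ B| for two collections of neighbor indices."""
    a, b = set(a), set(b)
    return 1.0 - len(a & b) / len(a | b)

rng = np.random.default_rng(0)
X = rng.normal(size=(300, 50))   # toy "ambient" data: 300 cells, 50 features

# Two-dimensional PCA via SVD of the centered data
Xc = X - X.mean(axis=0)
_, _, Vt = np.linalg.svd(Xc, full_matrices=False)
Y = Xc @ Vt[:2].T

k = 15
nn_ambient = knn_sets(X, k)
nn_embedded = knn_sets(Y, k)
dj = np.array([
    jaccard_distance(a.tolist(), b.tolist())
    for a, b in zip(nn_ambient, nn_embedded)
])
print(f"mean Jaccard distance, ambient vs. 2D PCA: {dj.mean():.2f}")
```

Because the toy data have no low-dimensional structure, the distances here will be close to 1; on real data the same comparison can be repeated between each pair of successive processing steps.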

The two-dimensional arrows in Fig 1 combine three sources of error: the intrinsic information loss of low-dimensional projections, the instabilities in upstream processing and inference, and any additional error incurred by the nonlinear procedure outlined in “Embedding” under “Workflow and implementations.” The softmax kernel-based procedure exhibits an inherent tension that merits closer inspection. On one hand, it is explicitly designed [1] to mitigate error incurred by cell- and gene-specific noise by performing several steps of pooling and smoothing. On the other hand, it is technically questionable: if we assume differentiation processes are largely governed by a small set of “marker genes,” pooling them with thousands of non-marker genes amounts to hoping that variation in an orthogonal data-generating process cancels out well enough to recapture latent dynamics. Certain processes may involve the modulation of large sets of genes, e.g., if expression overall increases over the transcriptome. However, the velocity workflows are intrinsically unable to identify such trends, as they use normalized data. As we demonstrate later, a model with no latent dynamics at all (Fig F in S1 Text) can generate apparent signal in the embedded space, illustrating the dangers of relying on error cancellation. When multiple data-generating processes are present, naïve aggregation risks obscuring rather than revealing signal.

Aside from this high-level inconsistency, other problems emerge upon investigating the embedding procedure more closely, even prior to performing any numerical controls. The nonlinear embedding approach introduced by La Manno et al. (Eq 4) is highly hyperparametrized, not motivated by any previous theory, has no physical interpretation, and does not appear to have been formally validated against linear or simulated ground truth. Just as with the cell embedding, the procedure is dependent on an arbitrary number of nearest neighbors and velocity transformation functions, with no clearly optimal choices. These hyperparameters can be tuned to correct for such instabilities, potentially resulting in overfitting to a pre-determined hypothesis. Since the procedure has no physical basis, potential false discoveries are challenging to diagnose. Furthermore, it reduces the limited biophysical interpretability of the result, particularly because the relationship between cell state graphs and the underlying physical process is obscure and subject to distortions (Section C in S1 Text). The velocity derivation is model-informed and, as discussed under “The ‘deterministic’ velocyto model as a special case” and “The ‘dynamical’ scVelo model as a special case” in the next section, can be informally viewed as an approximation under several strong assumptions about the process biophysics. The embedding, on the other hand, is ad hoc and can only degrade the information content.

A final theoretical point remains before we can begin quantitatively validating the embeddings: as suggested by Eq 2, and discussed under “Inference” in the previous section, the velocyto gene-specific $v_j$ have different units. Therefore, the aggregation in Eq 4 is questionable. The standard velocyto workflow assumes that the splicing rates are close enough to neglect differences, which appears to contradict other results reported in the same paper (Extended Data Fig 2f of [1]).

To bypass this limitation in a self-consistent way, we implemented a “Boolean” or binary measure of velocity, as motivated by validation in the original manuscript (Sec. 3 in SN2 of [1]), introduced in the context of validating protaccel [4], and implied by resampling β values from a uniform distribution in an investigation of latent landscapes (p. 3 in Supplementary Methods of [29]). Essentially, instead of computing transition probabilities based on the velocity values, we computed them based on signs, bypassing the unit inconsistency. The algorithm used to produce this embedding is described under “Velocity embedding.”
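The unit argument can be illustrated with a short sketch. It does not implement the embedding algorithm described under “Velocity embedding”; it only shows, under stated assumptions, why signs sidestep the unit inconsistency. The fitted slopes, the unobserved splicing rates `beta`, and the displacement vector `d` are all hypothetical values drawn at random.

```python
import numpy as np

rng = np.random.default_rng(0)
n_cells, n_genes = 5, 8

u = rng.poisson(4.0, size=(n_cells, n_genes)).astype(float)  # unspliced counts
s = rng.poisson(8.0, size=(n_cells, n_genes)).astype(float)  # spliced counts
gamma_over_beta = rng.uniform(0.2, 1.0, size=n_genes)  # hypothetical fitted slopes
beta = rng.uniform(0.1, 10.0, size=n_genes)            # hypothetical, unobserved

v_scaled = u - gamma_over_beta * s   # v_j / beta_j: all that is identifiable
v_true = beta * v_scaled             # v_j = beta_j * u_j - gamma_j * s_j

def boolean_velocity(v):
    """Keep only the direction of change for each gene: -1, 0, or +1."""
    return np.sign(v)

def cosine(a, b):
    """Cosine similarity between two vectors."""
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

d = rng.normal(size=n_genes)  # hypothetical displacement toward a neighbor cell

# Quantitative velocities: similarity depends on the unknown per-gene scale
cos_scaled = cosine(d, v_scaled[0])
cos_true = cosine(d, v_true[0])

# Boolean velocities: identical regardless of the per-gene scale
cos_bool_scaled = cosine(d, boolean_velocity(v_scaled[0]))
cos_bool_true = cosine(d, boolean_velocity(v_true[0]))

print(f"quantitative: {cos_scaled:.3f} (scaled) vs {cos_true:.3f} (true units)")
print(f"boolean:      {cos_bool_scaled:.3f} (scaled) vs {cos_bool_true:.3f} (true units)")
```

Since every splicing rate is positive, rescaling a gene's velocity never changes its sign, so any quantity computed from the Boolean velocities is unaffected by the unknown gene-specific units.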

The Boolean procedure offers a natural internal benchmark. If this approach largely recapitulates findings from the standard methods, the embedding process serves as an information bottleneck: the inference procedure performs as well as a binary classifier, and the complexities of the dynamics are effaced by embedding. We used this approach as a trivial baseline and compared it to the standard suite of variance-stabilizing transformations implemented in velocyto. In addition, we tested the effects of neighborhood sizes, in the vein of the stability analysis performed in the original manuscript (Sec. 11 in SN2 of [1]). In Fig D in S1 Text, we plot the distributions of angle deviations between a linear baseline, obtained by projecting the extrapolated cell state and computing E(si + Δsi) − E(si) using PCA, and the nonlinear velocity embedding. This control has not previously been investigated in any detail, but seems key to the claim that the nonlinear velocity embedding is meaningful: intuitively, we expect it to recapitulate the simplest baseline, at least on average. To avoid any confusion, we reiterate that the linear embedding is given by Eq 3, and not the identity nonlinear embedding implemented in velocyto (i.e., ϱ(x) = x in SN1, pp. 9–10 of [1]).

The angle deviations in arrow directions were all severely biased relative to the linear baseline. The different normalization methods were distortive to approximately the same degree. The performance of the Boolean embedding, which discards nearly all of the quantitative information, was nearly identical to the built-in methods, which suggests that the choice of normalization methods is a red herring: quantitative velocity magnitudes have little effect on the embedding quality. This is consistent with previous investigations (cf. Fig S52 in [4]). On the other hand, the neighborhood sizes did not appear to matter much, at least over the modest range explored here (in contrast to Sec. 11 in SN2 of [1]). Therefore, the directions reported in embeddings were unrepresentative of the actual velocity magnitudes in high-dimensional space, as well as severely distorted relative to the linear projection. These discrepancies are a potential cause for concern. Observing the qualitative similarity of Figs 2d and 2h in the original report [1], the reasonable performance of the linear extrapolation in t-SNE in its supplement (SN2 Fig 9a of [1]), as well as the cell cycle dynamics explored with the linear embedding in Revelio [11], a casual reading of the RNA velocity literature would suppose these embeddings to be largely interchangeable.

Finally, we visualized the aggregated velocity vectors in Fig 7 to assess the local and global structures. This visualization served as both an internal and an external control. The internal control demonstrated the local structure and the stability of the velocity-specific methods, i.e., the actual directions of the arrows on the grid. We compared the conventional nonlinear projection to the Boolean method, as well as the linear embedding. The external control concerned the global structure, which can be analyzed in light of known physiological relationships: radial glia differentiate through neuroblasts into neurons [1]. If this global relationship is not captured by the embedding, the inferred trajectories are a priori physically uninterpretable, in a way that is particularly challenging to diagnose.

Fig 7. Performance of cell and velocity embeddings on the forebrain data.

Top: PCA embedding with linear baseline and nonlinear aggregated velocity directions. Bottom: UMAP and t-SNE embeddings with nonlinear velocity projections. The palette used is derived from dutchmasters by EdwinTh.

In the PCA embedding, the global structure was retained and the arrows were fairly robust, even when the non-quantitative Boolean method was used. However, the various projection options suggested drastically different relationships between the cell types, with PCA presenting more continuous representations of cell relationships faithful to ground truth, and UMAP and t-SNE presenting more local images, with distinct and discrete clusters of cells. Clearly, if the relationship between progenitor and descendant is lost, the velocity workflow cannot infer it. The t-SNE and UMAP parameters can be adjusted by the user; however, adding a new set of tuning steps and optimizations provides an opportunity for confirmation bias to overrule the data.

Summary

The standard RNA velocity framework presupposes that the evolution of every gene’s transcriptional activity throughout a differentiation or cycling process can be described by a continuous model with a single upregulation event and a single downregulation event. It proceeds to normalize and smooth the data until the rough edges of single-molecule noise are filed off, and then fits the continuous model assuming Gaussian residuals.

In the process, the stochastic dynamics that predominate in the low-copy number regime, and that characterize nearly all of mammalian transcription, are lost and cannot be recovered. Although parameters can be fit, they are distorted to an unknown extent, due to a combination of data transformation, suboptimal inference, and unit incompatibilities. The gene-specific components of velocity are underspecified due to their direct dependence on the imputation neighborhood and splicing timescale. In scVelo, parameters are estimated under a highly restrictive model, yet applied to make broad claims about complex topologies. In velocyto, only the sign of the velocity is physically interpretable. It can still be used to calculate low-dimensional directions, and this binary velocity embedding is seemingly as good as any other, suggesting that other methods fail to fully utilize valuable information. However, the embedding process itself is not based on biophysics, and is not guaranteed to be stable or robust. Fortunately, the natural match between stochastic models and UMI-aided molecule counting offers hope for quantitative and interpretable RNA velocity.

Prospects and solutions

Is there no balm in Gilead? Given the foundational issues we have raised, how can the RNA velocity framework be reformulated to provide meaningful, biophysically interpretable insights? We propose that discrete Markov modeling can directly and naturally address the fundamental issues. In particular, transient and stationary physiological models can be defined and solved via the chemical master equation (CME), which describes the time evolution of a discrete stochastic process. Since the “noise” is the data of interest, in such an approach smoothing is not required. Rather, technical and extrinsic noise sources can be treated as stochastic processes in their own right, and explicit modeling of them can improve the understanding of batch and heterogeneity effects. Finally, within this framework, parameters can be inferred using standard and well-developed statistical machinery.

Pre-processing

The diversity of potential intermediate and terminal transcripts suggests that simplistic splicing models are inadequate for physiologically faithful descriptions of transcription dynamics. What is needed is a treatment of the types of transcripts listed in “Pre-processing” under “Logic and methodology” as distinct species. This approach immediately leads to several significant challenges, relating to quantification, biophysics, and identifiability.

Transient, low-abundance intermediate transcripts are substantially less characterized than coding isoforms. Some data are available from fluorescence transcriptomics with intron-targeted probes [47], but such imaging is impractical on a genome-wide scale. Unfortunately, the references and computational infrastructure necessary to identify intermediate transcripts do not yet exist.

Even if intermediate isoforms could be perfectly quantified, single-cell RNA-seq data do not generally contain enough information to identify the order of intron splicing. The problem of splicing network inference has been examined; however, experimental approaches [126, 127] are challenging to scale, whereas computational approaches [128] do not generally have enough information to resolve ambiguities.

Furthermore, even with complete annotations and a well-characterized splicing graph at hand, large-scale short-read sequencing cannot fully resolve transcripts. This limitation gives rise to a challenging inference problem. For example, if transcripts $A := E_1 I_1 E_2 I_2 E_3$ and $B := E_1 E_2 I_2 E_3$ are indistinguishable whenever only the 3′ end of each molecule is sequenced, it is necessary to fit parameters through the random variable $X_A + X_B$, i.e., from aggregated data. The functional form of this random variable’s distribution is not yet analytically tractable.

We have described a preliminary method that can partially bypass these problems [81]. Sequencing “long” reads, which at this time is possible with technologies such as Oxford Nanopore [72], or sequencing of “full-length” libraries produced with methods such as Smart-seq3 [129], enhances identifiability and facilitates the construction of new annotations based on presence or absence of intron sequences. Finally, even though additional data are required to specify entire splicing networks, sequencing data are sufficient to constrain parts of these networks; for example, if two transcripts differ by one intron, the longer one cannot possibly be generated from the shorter.

Defining more species leads to inferential challenges in downstream analysis. Even if sequencing data are available, their relationship to the biological counts is nontrivial: some intermediate transcripts may not be observable using certain technologies because they do not contain sequences necessary to initiate reverse priming, whereas others may be over-represented in the data because they contain many. In a preliminary investigation [130], which adopts the binary categories of “spliced” and “unspliced” defined in the original RNA velocity publication, we found that unspliced molecules originating from long genes are overrepresented in short-read sequencing datasets. This suggests that multiple priming occurs at intronic poly(A) sequences. To “regress out” this effect, a simple length-based proxy for the number of poly(A) stretches can be used, but a more granular description would require a sequence-based kinetic model for each intermediate transcript’s capture rate.

Occupation measures provide a theoretical framework for scRNA-seq

The data simulated in the exposition of RNA velocity [1] come from a particular set of what are called Markov chain occupation measures. As an illustration of what this means, we consider the simplest, univariate model of transcription, a classical birth-death process:

$$\varnothing \xrightarrow{\alpha} \mathcal{X} \xrightarrow{\beta} \varnothing, \tag{8}$$

where α is a constant transcription rate and β is a constant efflux rate. Depending on the system, β may have various biophysical interpretations, such as splicing, degradation, or export from the nucleus [81, 106].

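Formally, the exact solution to this system is given by the chemical master equation (CME), an infinite series of coupled ordinary differential equations that describe the flux of probability between microstates x, which specify the integer abundance of $\mathcal{X}$, defined on $\mathbb{N}_0$:

$$\frac{dP(x;t)}{dt} = \alpha\left[P(x-1;t) - P(x;t)\right] + \beta\left[(x+1)\,P(x+1;t) - x\,P(x;t)\right]. \tag{9}$$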

This equation encodes a full characterization of the system: transcription is zeroth-order, efflux is first-order, and the dynamics are memoryless, i.e., depend only on the state at t. We define the quantity y, namely the solution to the underlying reaction rate equation that governs the average of the copy number distribution:

$$y(t) = y_0\, e^{-\beta t} + \frac{\alpha}{\beta}\left(1 - e^{-\beta t}\right), \tag{10}$$

where $y_0$ is the average at time t = 0. To simplify the analysis, we assume that the initial condition is Poisson-distributed. Per classical results [82], the distribution of counts P(x;t) is described by a Poisson law for all t, and converges to Poisson(α/β) as t → ∞. Quantitatively, the time-dependent distribution is given by P(x;t) ∼ Poisson(y(t)):

$$P(x;t) = \frac{y(t)^x\, e^{-y(t)}}{x!}. \tag{11}$$
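The classical result can be checked numerically with a short stochastic simulation. The sketch below is a minimal Gillespie-type simulation of the birth-death process, assuming a Poisson initial condition with mean `y0` and the reaction rate solution y(t) = y0 e^(−βt) + (α/β)(1 − e^(−βt)). The function names and parameter values are hypothetical and chosen for illustration.

```python
import numpy as np

def y_mean(t, alpha, beta, y0):
    """Solution of dy/dt = alpha - beta * y with y(0) = y0."""
    return y0 * np.exp(-beta * t) + (alpha / beta) * (1.0 - np.exp(-beta * t))

def simulate_birth_death(x0, alpha, beta, t_end, rng):
    """Simulate one birth-death trajectory and return the count at t_end."""
    x, t = int(x0), 0.0
    while True:
        total = alpha + beta * x              # total event rate (alpha > 0)
        t += rng.exponential(1.0 / total)     # waiting time to the next event
        if t > t_end:
            return x
        if rng.random() < alpha / total:
            x += 1                            # transcription (zeroth-order)
        else:
            x -= 1                            # efflux (first-order)

rng = np.random.default_rng(0)
alpha, beta, y0, t_end = 10.0, 1.0, 2.0, 0.7   # illustrative values
n_cells = 4000

x0 = rng.poisson(y0, size=n_cells)             # Poisson initial condition
counts = np.array([simulate_birth_death(x, alpha, beta, t_end, rng) for x in x0])

expected = y_mean(t_end, alpha, beta, y0)
print(f"expected mean y(t): {expected:.3f}")
print(f"simulated mean:     {counts.mean():.3f}")
print(f"simulated Fano:     {counts.var() / counts.mean():.3f}")
```

If the counts remain Poisson-distributed, the simulated mean should agree with y(t) and the variance-to-mean ratio should stay close to 1, up to sampling error.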

However, this is not the correct model class for distributions observed in scRNA-seq datasets. To appreciate these subtleties, we delve into and interrogate assumptions that underpin the use of such distributions.

The sequencing process does not “know” anything about the transcriptional dynamics and their time t. This stands in contrast to transcriptomics performed in vitro, with a physically meaningful experiment start time. For example, in many standard protocols, a stimulus is applied to the cells at time t = 0, and populations of cells are chemically fixed and profiled at subsequent time points, potentially up to a nominal equilibrium state [2, 34, 88, 131]. However, if there is no experimentally imposed timescale, and we adopt the standard assumption that cell dynamics are mutually independent, the process time decouples from experiment time. Although cells are sampled simultaneously, their process times t are draws from a random variable that must be defined.

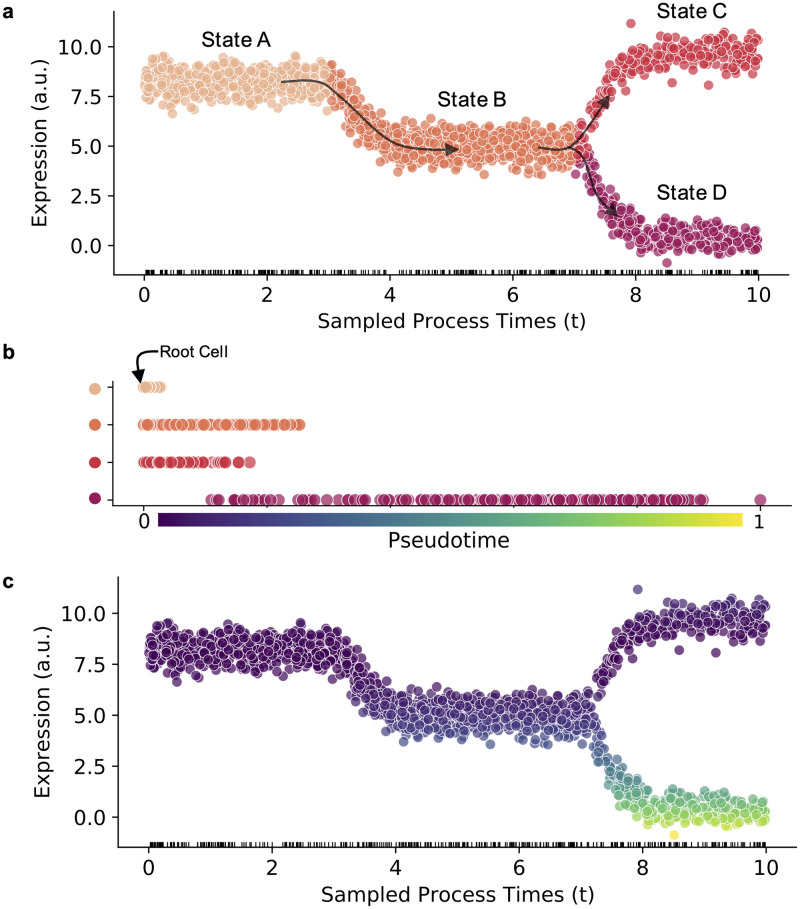

Formalizing this framework requires introducing the notion of occupation measures. Considering a single cell, we designate its process time t as a latent process time (Fig 8a), the mechanistic analogue to the phenomenological pseudotime. In brief, “pseudotime” conventionally denotes a one-dimensional coordinate along a putative cell trajectory, which parametrizes a principal curve in a space based on RNA counts [37] (Fig 8b and 8c). On the other hand, the process time is a real time coordinate, which governs the “clock” of the stochastic process. Broadly speaking, this is the physical quantity which the “latent time” discussed in the exposition of scVelo attempts to approximate. The difference between pseudotimes and process times is fundamental. The Markov chain process time is physically interpretable as the progress of a process that induces the observations in expression space. Conversely, the expression pseudotime is purely phenomenological (Fig 8c), and we are unaware of any trajectory inference methods that explicitly parameterize the underlying stochastic model using the CME; instead, all available implementations appear to use isotropic or continuous noise models [37, 108, 132–140]. As we emphasize in “Model definition” under “Logic and methodology,” these models are inappropriate for low-abundance molecular species.

Fig 8. Markov Chain process time versus expression pseudotime.

a. Simulated gene expression for 2000 cells over 4 states (states A, B, C, and D) with a bifurcation at C/D showing spliced counts of a single gene at the sampled process times. The abbreviation a.u. denotes arbitrary units. b. Ordering of all cells by expression pseudotime coordinate, calculated as the Euclidean distance between each cell and the root cell (cell at time t = 0) and scaled to between 0 and 1. c. The sampled cells colored by the calculated pseudotime value.

By construction, cell trajectories are observed at random process times t (Fig 8a). This requires introducing a sampling distribution f(t), which describes the probability of observing a cell at a particular underlying process time. Therefore, the probability of observing x molecules of $\mathcal{X}$ in the constitutive case takes the following form:

$$P(x) = \int P(x;t)\, df(t) = \int \frac{y(t)^x\, e^{-y(t)}}{x!}\, df(t), \tag{12}$$

i.e., the expectation of P(x;t) under the sampling law. P(x) is called the occupation measure of the process, and reports the probability that a trajectory is observed to be in state x, a slight generalization of the usual definition [141–143].

Next, we must encode the assumption that cell observations are desynchronized from the sequencing process and each other. This assumption leads us to a choice consistent with the previous reports [1, 21], namely $df = T^{-1}\,dt$, where [0, T] is the process time interval observable by the sequencing process. This constrains the probability of observing state x to be the actual fraction of time the system spends in that state. Then, we take T → ∞, yielding

$$P(x) = \lim_{T\to\infty} \frac{1}{T}\int_0^T P(x;t)\, dt = \lim_{t\to\infty} P(x;t) \tag{13}$$

which is a statement of the ergodic theorem [144]. Under mild conditions, this theorem guarantees that samples from unsynchronized trajectories converge to the same distribution as the far more tractable ensembles of synchronized trajectories.
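The convergence of the uniform occupation measure to the stationary law can be checked numerically. The following is a minimal sketch, assuming a constitutive birth-death process with a production rate `alpha` and degradation rate `gamma` (illustrative symbols and values), a cell that starts with zero molecules, and a Poisson transient distribution with mean (alpha/gamma)(1 − exp(−gamma t)). The time average is evaluated with a simple midpoint rule.

```python
import math

def poisson_pmf(x, mu):
    """Poisson probability of x counts at mean mu."""
    if mu == 0.0:
        return 1.0 if x == 0 else 0.0
    return math.exp(x * math.log(mu) - mu - math.lgamma(x + 1))

def transient_mean(t, alpha, gamma):
    """Mean copy number at time t, assuming zero molecules at t = 0."""
    return alpha / gamma * (1.0 - math.exp(-gamma * t))

def occupation_pmf(x, T, alpha, gamma, n=20000):
    """Midpoint-rule estimate of (1/T) * integral_0^T P(x; t) dt."""
    h = T / n
    total = sum(
        poisson_pmf(x, transient_mean((i + 0.5) * h, alpha, gamma))
        for i in range(n)
    )
    return total * h / T

alpha, gamma = 5.0, 1.0  # illustrative rates in arbitrary units
stationary = poisson_pmf(3, alpha / gamma)
short_window = occupation_pmf(3, T=2.0, alpha=alpha, gamma=gamma)
long_window = occupation_pmf(3, T=2000.0, alpha=alpha, gamma=gamma)
```

Under these assumptions, `long_window` should lie much closer to `stationary` than `short_window` does, since the transient occupies a vanishing fraction of a long observation interval.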

With this discussion, we have clarified that the application of stationary distributions $\lim_{t\to\infty} P(x;t)$ to describe ostensibly unsynchronized cells naturally emerges from assumptions about biophysics and the nature of the sampling process. However, these assumptions may be violated; for example, RNA velocity describes molecules sampled from a transient process. This distinction is key: limits such as $\lim_{t\to\infty} P(x;t)$ may not even exist, and we expect to capture only a portion of the trajectory. A rigorous probabilistic model must treat the occupation measure directly, which remains valid without those assumptions. Formally, this amounts to relaxing the assumption of desynchronization: the sequencing process is time-localized to a particular interval of the underlying biological process.

To stay consistent, we continue using the sampling law $df = T^{-1}\,dt$ on [0, T], but sampling laws with infinite support are valid so long as they decay rapidly enough to be integrable. As scRNA-seq data are atemporal, this time coordinate is unitless and cannot be assigned a scale without prior information, so T can be defined arbitrarily without loss of generality.

The occupation measure of the birth-death process takes the following form:

| (14) |

This integral can be solved exactly; however, this solution does not easily generalize to more complex systems. Instead, we can consider the probability-generating function (PGF), which also takes a remarkably simple form:

$$H(z) = \sum_{x} z^x P(x) = \int_0^T G(z;t)\, df(t) \tag{15}$$

By linearity, the generating function H(z) of the occupation measure is the expectation of the generating function G(z;t) of the original process with respect to the sampling measure f. From standard properties of the birth-death process, this yields:

| (16) |

where Ei is the exponential integral [145]. This is the solution to the system of interest. Although straightforward to evaluate, it does not appear to belong to any well-known parametric family.
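As an illustration of how an exponential integral arises here, the following derivation evaluates the generating function of the occupation measure under stated assumptions that may differ from the conventions used elsewhere in the text: a constitutive birth-death process with production rate $\alpha$ and degradation rate $\gamma$, zero molecules at $t = 0$, and the uniform sampling law on $[0, T]$. The symbols $c$ and $v$ are introduced only for this sketch.

```latex
\begin{align}
G(z;t) &= \exp\!\left[(z-1)\,\mu(t)\right], \qquad
\mu(t) = \frac{\alpha}{\gamma}\left(1 - e^{-\gamma t}\right), \\
H(z) &= \frac{1}{T}\int_0^T \exp\!\left[c\left(1 - e^{-\gamma t}\right)\right] dt,
\qquad c = \frac{\alpha}{\gamma}\,(z-1), \\
&= \frac{e^{c}}{\gamma T}\int_{c\,e^{-\gamma T}}^{c} \frac{e^{-v}}{v}\, dv
\qquad \left(v = c\,e^{-\gamma t},\; dt = -\frac{dv}{\gamma v}\right), \\
&= \frac{e^{c}}{\gamma T}\left[\mathrm{Ei}(-c) - \mathrm{Ei}\!\left(-c\,e^{-\gamma T}\right)\right],
\qquad \mathrm{Ei}(x) = \mathrm{p.v.}\!\int_{-\infty}^{x} \frac{e^{s}}{s}\, ds .
\end{align}
```

For $0 \le z < 1$ both arguments of $\mathrm{Ei}$ are positive. As $z \to 1$, the bracketed difference tends to $\gamma T$, so that $H(1) = 1$, as required of a probability-generating function.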

Modular extensions to broader classes of biological phenomena

Extending this approach to more complicated biological models requires positing a hypothesis about the dynamics of transcription and the mRNA life-cycle, formalizing it as a CME, then solving that CME. This is the “forward” problem of statistical inference, which is typically intractable. However, in the current subsection, we summarize the model components that can be assembled to produce solvable systems, and outline potential challenges.

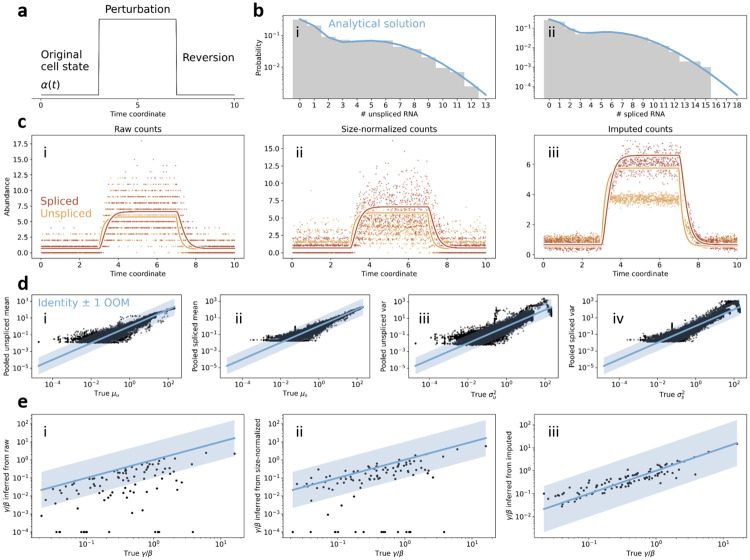

The canonical velocity model

To begin, we would like to fully recapitulate the model introduced and simulated in the original publication [1]. This model has the following structure:

| (17) |

where α(t) is a piecewise constant transcription rate. The instantaneous distribution of this process over copy number states (xu, xs) is well-known [81, 82]:

| (18) |

where U1 is the characteristic of the unspliced mRNA solution, whereas μu(t) and μs(t) are the instantaneous averages, which can be written down in closed form for arbitrary piecewise constant α(t). The generating function derivation presupposes that the system starts at steady state. By defining N genes whose RNA abundance evolves on [0, T], we can extend this univariate distribution to a multivariate occupation measure in 2N dimensions. This occupation measure is the putative source distribution for cells observed in experiment.
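The closed-form averages for a piecewise constant transcription rate can be sketched as follows. This is an illustration under assumptions: the splicing rate `beta` and degradation rate `gamma` are notation introduced here, they are taken to be constant with `beta != gamma`, and the system is assumed to start at the steady state of a hypothetical initial rate `alpha0`. Each segment is solved from the mean equations dμu/dt = α − β μu and dμs/dt = β μu − γ μs.

```python
import math

def propagate_means(mu_u0, mu_s0, alpha, beta, gamma, dt):
    """Advance (mu_u, mu_s) by dt under a constant alpha; requires beta != gamma."""
    a = mu_u0 - alpha / beta              # deviation of mu_u from its fixed point
    c1 = beta * a / (gamma - beta)        # coefficient of exp(-beta * t) in mu_s
    c2 = mu_s0 - alpha / gamma - c1       # coefficient of exp(-gamma * t) in mu_s
    mu_u = alpha / beta + a * math.exp(-beta * dt)
    mu_s = alpha / gamma + c1 * math.exp(-beta * dt) + c2 * math.exp(-gamma * dt)
    return mu_u, mu_s

def means_at(t, alpha0, segments, beta, gamma):
    """Means at time t for alpha(t) given as ordered (duration, alpha) segments.

    The system starts at the steady state of alpha0. Times beyond the last
    segment return the values at the end of that segment.
    """
    mu_u, mu_s = alpha0 / beta, alpha0 / gamma
    elapsed = 0.0
    for duration, alpha in segments:
        dt = min(duration, t - elapsed)
        if dt <= 0:
            break
        mu_u, mu_s = propagate_means(mu_u, mu_s, alpha, beta, gamma, dt)
        elapsed += dt
    return mu_u, mu_s

# Illustrative program: induction for 2 time units, then repression for 3.
beta, gamma = 2.0, 1.0
segments = [(2.0, 5.0), (3.0, 1.0)]
mu_u, mu_s = means_at(1.0, alpha0=1.0, segments=segments, beta=beta, gamma=gamma)
```

Chaining the segments in this way keeps the means continuous across step changes in α(t), which is what allows the occupation measure to be evaluated by quadrature over [0, T].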

Eq 18 recapitulates the system proposed in the original publication [1], and we use it to set up and solve transient biophysical systems throughout the rest of this report. Restricting analysis in this way allows us to speculate about how such models could be fit, while keeping the mathematics in closed form. However, the schema in Eq 17 omits important physiological phenomena. Although these phenomena can be modeled, very few of them afford closed-form solutions, and their range can be classified according to their complexity.

PGF-tractable models

The first class includes models that afford transient single-gene solutions in terms of the generating function, and can be combined with the definition of an occupation measure to produce a generative model for observed data. These models can be solved using quadrature, and extend the dynamics in simple, Markovian ways, although strategies for fitting them are not yet well-developed.

The solution in Eq 18 generalizes to an arbitrary number of species, merely by converting the stoichiometry and rate matrix to the appropriate U1(u; s). The solution for a generic splicing and degradation network, under constitutive transcription, stays Poisson, and makes up one of the few closed-form cases [81, 82].

Transcriptional bursting at a gene locus can be represented by slightly modifying Eq 18:

| (19) |

where M is the generating function of the burst distribution, which governs the number of unspliced mRNA molecules produced per transcriptional event. This distribution can be time-dependent and reflect the transient modulation of bursting dynamics. As in Eq 18, we omit the explicit representation of initial conditions, described in [81].

In principle, we need not assume α(t) in Eq 17 is piecewise constant. If α(t) is deterministic, the solution can be obtained by quadrature. More sophisticated regulatory dynamics, such as transcriptional rates governed by a continuous- or discrete-valued stochastic process [146, 147], can be treated analogously. If the process is continuous-valued, e.g., representing the concentration of a rapidly-binding regulator, it requires solving a single, potentially nonlinear ODE. If it is Markovian and discrete-valued, e.g., representing transitions between distinct promoter states, it requires solving a set of coupled ODEs.

Gene-gene coexpression, or the synchronization of transcriptional events induced by a common regulator, can be solved by setting M in Eq 19 to a multivariate generating function whose arguments are the characteristics of unspliced mRNA species [81].

The model is broad enough to describe cell type distinctions and cell fate stochasticity simply by defining a discrete mixture model over transcription rate trajectories. For example, if a cell can choose to enter cell fate A with probability wA or cell fate B with probability 1 − wA, the overall generating function takes the following form:

$$G = w_A\, G_A + (1 - w_A)\, G_B \tag{20}$$

where each branch’s generating function is induced by distinct driving functions, αA(t) ≠ αB(t). This formulation is equivalent to defining a finite mixture of branches, with a categorical distribution of parameters as the mixing law. As previously discussed [81], it can be extended to continuous mixtures, although such systems are not typically tractable.
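The two-branch mixture can be illustrated at a single process time. The sketch below assumes, for simplicity, that each branch is Poisson with a hypothetical mean (`mu_a`, `mu_b`), as in the constitutive case; the helper names are illustrative.

```python
import math

def poisson_pgf(z, mu):
    """PGF of a Poisson law with mean mu."""
    return math.exp(mu * (z - 1.0))

def mixture_pgf(z, w_a, mu_a, mu_b):
    """Weighted sum of the two branch PGFs with weights w_a and 1 - w_a."""
    return w_a * poisson_pgf(z, mu_a) + (1.0 - w_a) * poisson_pgf(z, mu_b)

def pgf_mean(pgf, h=1e-6):
    """Mean of a distribution from its PGF: central difference at z = 1."""
    return (pgf(1.0 + h) - pgf(1.0 - h)) / (2.0 * h)

w_a, mu_a, mu_b = 0.3, 2.0, 8.0  # hypothetical fate probability and branch means
mean_counts = pgf_mean(lambda z: mixture_pgf(z, w_a, mu_a, mu_b))
```

Because the mixture is linear in the branch generating functions, moments of the mixture are the correspondingly weighted branch moments; here the mean is w_a mu_a + (1 − w_a) mu_b.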

Certain models of technical noise are modular with respect to the biological processes. Specifically, if we suppose that sequencing is a random process that is independent and identically distributed for every molecule of a particular species i, with a distribution on the non-negative integers, the PGF of the observed UMIs is G with each argument $x_i$ replaced by $G_{t,i}(x_i)$, where $G_{t,i}(x_i)$ is the PGF of the sampling distribution for the species indexed by i. We have previously considered this model for Bernoulli and Poisson distributions, which presuppose that the cDNA library construction is sequestering and non-sequestering, respectively [130, 148].
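The composition rule can be checked on a one-species example. The following sketch assumes a Poisson biological distribution with a hypothetical mean `mu`, a Bernoulli capture probability `p`, and a Poisson capture rate `lam`; all names and values are illustrative.

```python
import math

def poisson_pgf(z, mu):
    """PGF of a Poisson law with mean mu."""
    return math.exp(mu * (z - 1.0))

def bernoulli_pgf(z, p):
    """PGF of per-molecule Bernoulli capture with probability p."""
    return 1.0 - p + p * z

def observed_pgf(z, biological_pgf, technical_pgf):
    """PGF of observed counts: biological PGF evaluated at the technical PGF."""
    return biological_pgf(technical_pgf(z))

mu, p, lam = 10.0, 0.2, 0.5  # hypothetical mean, capture probability, capture rate
z = 0.3

# Bernoulli capture of Poisson counts (thinning).
thinned = observed_pgf(z, lambda y: poisson_pgf(y, mu), lambda y: bernoulli_pgf(y, p))

# Poisson capture of Poisson counts (a compound Poisson law).
compound = observed_pgf(z, lambda y: poisson_pgf(y, mu), lambda y: poisson_pgf(y, lam))
```

In the Bernoulli case the composition reduces to the PGF of a Poisson law with mean p·mu, so `thinned` agrees with `poisson_pgf(z, p * mu)`. The Poisson case does not reduce to a Poisson law, although its mean is lam·mu.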

Finally, it is useful to consider the meaning behind the choice of f in Eq 12. Thus far, we have assumed f is a uniform law on [0, T]. This choice is underpinned by a particular conceptual model of cell dynamics relative to the sequencing process. Formalizing such a conceptual model requires introducing several fundamental principles from the field of chemical reaction engineering and interpreting them through the lens of living systems. A reactor is a vessel that contains a reacting system; in the current context, it is a living tissue which is isolated for sequencing.

Three idealized extremes of reactor configurations exist: the plug flow reactor (PFR), the continuous stirred tank reactor (CSTR), and the batch reactor (BR). The PFR has no internal mixing: the reaction stream enters in the influx and exits from the efflux after a deterministic amount of time. Therefore, the PFR has memory: if the total residence time is T and reactor length is L, and the fluid element is localized at position Lt/T within the PFR, it will exit after a delay of T − t. Conversely, the CSTR is fully mixed: the reaction stream enters the vessel and combines with its existing contents. Therefore, the CSTR is memoryless or Markovian: if the average residence time is T, a given fluid element within the reactor will exit after a delay distributed according to $\mathrm{Exp}(T^{-1})$, independent of time since its entrance [149]. The CSTR and PFR can operate at steady state, whereas the BR accumulates reaction products: the BR is charged at t = 0, and the reaction proceeds until a predetermined time T.

We can translate these basic configurations to the design of transcriptomics experiments, treating individual cells as fluid elements, and assuming the variation in transcriptional dynamics begins as the cells enter the reactor at t = 0. If the sampling process is independent from a PFR in space or a BR in time, we obtain the uniform sampling measure $df = T^{-1}\,dt$. If the process samples from a CSTR, we obtain the exponential sampling measure $df = T^{-1} e^{-t/T}\,dt$. If the process samples from the efflux of a PFR or the product of a BR, we obtain the Dirac sampling measure $df = \delta(T - t)\,dt$. The first two scenarios appear to be more appropriate for describing scRNA-seq experiments, which can sample across entire processes, whereas the final scenario appears to correspond to fluorescence transcriptomics experiments, which have a well-defined start time. Assuming there are no cyclostationary dynamics, all of the reactor and sampling configurations converge to the ergodic limit as T → ∞.
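The three sampling measures can be sketched as draws of process times. The function `sample_process_time` and the labels `"uniform"`, `"exponential"`, and `"dirac"` are hypothetical names used only for this illustration; the exponential case assumes a mean residence time T.

```python
import random

def sample_process_time(kind, T, rng):
    """Draw one process time under the named sampling measure."""
    if kind == "uniform":        # sampling within a PFR (space) or a BR (time)
        return rng.uniform(0.0, T)
    if kind == "exponential":    # sampling from a CSTR with mean residence time T
        return rng.expovariate(1.0 / T)
    if kind == "dirac":          # PFR efflux or BR product: every cell observed at T
        return T
    raise ValueError(f"unknown sampling measure: {kind}")

rng = random.Random(0)
T = 10.0
draws = {
    kind: [sample_process_time(kind, T, rng) for _ in range(20000)]
    for kind in ("uniform", "exponential", "dirac")
}
means = {kind: sum(ts) / len(ts) for kind, ts in draws.items()}
```

The sampled times can then be passed to any transient solution P(x;t) to generate observations, so that the choice of measure changes only the distribution over process times and not the underlying biological model.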

This conceptual model is highly simplified, but serves to motivate the need for the sampling measure formulation, as well as provide an intuition into the physical assumptions encoded by the choice of df. To summarize, if we wish to describe a transient process observed by sequencing, we need to begin with one of two assumptions. On one hand, the transient gene program may be triggered externally, but fully executed within the cell, allowing us to define “time-desynchronized” sampling from a PFR, CSTR, or BR. On the other hand, it may be controlled by external factors, allowing us to define “space-desynchronized” sampling from a PFR. However, considerable further theoretical and experimental work is necessary to understand when such assumptions are justified.

Simulation-tractable models

The second class includes models which can be cast into a Markovian form and simulated [150], or partially solved using matrix algorithms [151]. Although we may be able to write down a CME and its formal solution, numerical evaluation typically requires considerable computational expense, and the appropriate inference strategies are unclear.
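As a point of reference for what simulation involves, the following is a minimal sketch of the standard stochastic simulation (Gillespie) algorithm applied to a two-species unspliced/spliced system with constant rates. The rate names `alpha`, `beta`, and `gamma` and their values are assumptions for illustration, and `alpha` must be positive so that the total propensity never vanishes.

```python
import random

def gillespie_velocity(alpha, beta, gamma, t_end, rng, u=0, s=0):
    """Simulate transcription, splicing, and degradation until t_end.

    Returns the (unspliced, spliced) copy numbers at t_end. Requires alpha > 0.
    """
    t = 0.0
    while True:
        rates = (alpha, beta * u, gamma * s)   # reaction propensities
        total = sum(rates)
        t += rng.expovariate(total)            # waiting time to the next reaction
        if t > t_end:
            return u, s
        r = rng.random() * total               # choose which reaction fires
        if r < rates[0]:
            u += 1                             # transcription
        elif r < rates[0] + rates[1]:
            u -= 1                             # splicing converts unspliced
            s += 1                             # into spliced
        else:
            s -= 1                             # degradation of spliced mRNA

rng = random.Random(0)
cells = [gillespie_velocity(10.0, 2.0, 1.0, 10.0, rng) for _ in range(500)]
mean_u = sum(u for u, _ in cells) / len(cells)
mean_s = sum(s for _, s in cells) / len(cells)
```

With these illustrative rates, the sample means should approach alpha/beta and alpha/gamma for long simulations. Each realization requires hundreds of reaction events even for this small system, which indicates why simulation-based evaluation becomes expensive for larger models.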

We abstract away the mechanistic details of regulation. However, the variation in transcriptional parameters should be understood as the outcome of a regulatory process that is tightly controlled in time (to justify step changes in rates) and concentration (to justify deterministic parameter values). It is possible to explicitly represent regulatory networks or feedback, in line with dyngen [14] and standard systems biology studies [152]. Further, we assume all reactions are zeroth- or first-order, although it is possible to model the kinetics of enzyme binding [153]. Unfortunately, such systems are intractable in the current context, due to high dimensionality, mathematical challenges, lack of protein data, and complexity of regulatory networks on a genome-wide scale.

We described a method to model sequencing as a random process, if the fairly restrictive conditions of independence and identical distribution hold. The distributional assumption can be relaxed for a particular gene and molecular species [130, 148]. It is also possible to write down—but challenging to fit—a hierarchical model, such that each cell’s sequencing model parameters are random. However, it does not yet appear feasible to solve and fit models which impose coupling between cells and genes through the compositional nature of the sequencing process [109]. At the same time, generating realizations from such models is trivial, and amounts to sampling without replacement. Analogously, it is straightforward to set up a model with “cell size” variation, such that each cell separately regulates its average transcription level for the entire genome [154]. It is unclear how such models should be fit, although neural [155] and perturbative [154] approaches have seen use.
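Generating a realization from a compositional sequencing model can be sketched as a draw without replacement from the pooled molecules. The function `sequence_pool`, the toy count matrix, and the sequencing depth are hypothetical; the sketch assumes every molecule in the pool is equally likely to be captured.

```python
import random

def sequence_pool(counts_per_cell, depth, rng):
    """Draw `depth` molecules without replacement from the pooled library.

    counts_per_cell[c][g] is the true copy number of gene g in cell c.
    Returns observed counts with the same shape. Requires depth <= pool size.
    """
    pool = [
        (cell, gene)
        for cell, genes in enumerate(counts_per_cell)
        for gene, n in enumerate(genes)
        for _ in range(n)
    ]
    observed = [[0] * len(genes) for genes in counts_per_cell]
    for cell, gene in rng.sample(pool, depth):
        observed[cell][gene] += 1
    return observed

rng = random.Random(0)
counts = [[4, 0, 2], [1, 3, 0]]   # hypothetical true counts: 2 cells, 3 genes
observed = sequence_pool(counts, depth=5, rng=rng)
```

Because all cells and genes compete for a fixed number of reads, the observed counts are coupled across the whole matrix, which is the feature that makes such models easy to simulate but difficult to solve.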

We have restricted our discussion to regulatory modulation in terminally differentiated cells. In a variety of contexts, cell division, which involves molecule partitioning and transcriptional dosage compensation [93, 156], plays a significant role in differentiation, and it is inappropriate to omit its effects. Considerable literature exists on cell cycle models [93, 157, 158], but they are fairly challenging to solve, and the appropriate way to integrate them with occupation measures is unclear at this time.