Abstract

Multistep power consumption forecasting is one of the most decisive problems in smart grid electricity management, and it is vital for developing operational strategies for electricity management systems that serve commercial and residential users in smart cities. Accurate power management in an intelligent grid therefore requires an efficient electricity load forecasting model, which in turn yields financial benefits for customers. In this article, we develop a framework for short-term electricity load forecasting that comprises two phases: data cleaning, and a Residual Convolutional Neural Network (R-CNN) combined with a multilayered Long Short-Term Memory (ML-LSTM) architecture. In the first phase, data preprocessing strategies are applied to the raw data. In the second phase, a deep R-CNN architecture extracts essential features from the refined electricity consumption data. The output of the R-CNN layers is fed into the ML-LSTM network to learn sequence information, and fully connected layers then produce the forecast. The proposed model is evaluated on the residential IHEPC and commercial PJM datasets and substantially reduces the error rates compared to baseline models.

Keywords: electricity load forecasting, residual CNN, ML-LSTM, CNN-LSTM, electricity consumption

1. Introduction

Electricity load forecasting predicts future load based on single or multiple features or parameters. Features can be of multiple types, such as the current month, hour, weather situation, electricity costs, economic conditions, geographical circumstances, etc. [1]. The importance of electricity load forecasting is growing with the development and extension of the energy market, which supports hourly electricity trading. Accurate load forecasting [2] and leakage current prediction [3] enable profitable market interactions and help power firms guarantee electricity stability and decrease electricity wastage [4]. Electricity load prediction is handled through short-term electricity load forecasting [5], which is particularly significant due to smart grid development [6].

The United States Energy Information Administration stated that power consumption would increase by up to 28% between 2015 and 2040 [7], while the International Energy Agency stated that buildings and building construction account for approximately 36% of the world’s total energy consumption. Improving building energy efficiency is vital in the low carbon economy [8,9]. Accurate energy consumption forecasting is indispensable for the energy-saving design and renovation of buildings. Analyzing the discrepancy between forecast and measured energy consumption can also provide a foundation for building operation monitoring and a basis for energy peak regulation in large buildings [10,11]. In addition, some large-scale buildings are essential to securing the public resource supply in an area. Thus, accurate energy consumption forecasting can support the local energy allocation sectors.

Establishing an accurate consumption forecasting model depends on a few important points. First, it is crucial to precisely identify the parameters that have a strong effect on consumption and to add these indicators to the prediction model. Second, choosing an appropriate modeling procedure is also significant. The relationship between the input and output variables is so nonlinear that it is very challenging to express it mathematically. Model selection is therefore increasingly guided by empirical prediction performance rather than by theoretical principles. Finally, the methodology must be capable of producing effective forecasting results.

A review of the recent literature shows that scholars increasingly use machine learning models to forecast the short-term energy consumption of buildings. Additionally, most scholars are adopting hybrid models because they perform better than single models. However, energy consumption in buildings is affected by various factors and is extremely nonlinear. Thus, this area still deserves further study and exploration. This research work contributes the following points to the current literature.

The collected benchmark datasets contain many missing values and outliers, which occur due to faulty meters, weather conditions, and abnormal customer consumption. These abnormalities and redundancies in the datasets lead the forecasting network to ambiguous predictions. To resolve this problem, we apply data preprocessing strategies, including outlier removal via the three-sigma rule of thumb, missing value recovery via NaN interpolation, and data normalization using the MinMax scaler.

We present a deep R-CNN integrated with ML-LSTM for power forecasting using real power consumption data. The motivation behind R-CNN with ML-LSTM is to extract patterns and time-varied information from the input data for effective forecasting.

The proposed model achieves the lowest MAE, MSE, RMSE, and MAPE and the highest R2 compared to recent literature. On the hourly IHEPC dataset, the proposed model achieved 0.0325, 0.0144, 0.0011, 0.9841, and 1.024 for RMSE, MAE, MSE, R2, and MAPE, respectively, while these values are 0.0447, 0.0132, 0.002, 0.9759, and 2.457 on the daily IHEPC dataset. For the PJM dataset (AEP region), the proposed model achieved 0.0223, 0.0163, 0.0005, 0.9907, and 0.5504 for RMSE, MAE, MSE, R2, and MAPE, respectively. These error metrics indicate the advantage of the proposed model over state-of-the-art methods.

2. Literature Review

Short-term load forecasting is an active research area, and numerous studies have been conducted. These studies fall into four categories based on the learning algorithm: physical, persistence, artificial intelligence (AI), and statistical. Persistence models can predict the near-future behavior of time series such as electricity consumption but fail for predictions several hours ahead [12]. Therefore, persistence models are not decisive for electricity forecasting. Physical models are based on mathematical expressions that consider meteorological and historical data. N. Mohan et al. [13] present a dynamic empirical model for short-term electricity forecasting based on a physical model. These models are also unreliable for electricity forecasting because of the large memory and computational resources they require [12]. Compared to physical models, statistical models are less computationally expensive [14] and are typically based on autoregressive methods, e.g., GARCH [15] and ARIMA [16], and on linear regression methods. These models assume linear data, while electricity consumption prediction or load forecasting is a nonlinear and complex problem. As presented in [12], the GARCH model can capture uncertainty but has limited capability to capture the non-stationary and nonlinear characteristics of electricity consumption data. Several studies based on linear regression have also been reported. For instance, N. Fumo et al. proposed a linear and multiple regression model for electricity load forecasting [17]. Similarly, [18,19] developed multi-regression-based electricity load forecasting models. Statistical models cannot capture uncertainty patterns directly but rely on other techniques, as presented in [20], to reduce uncertainty. AI methods can learn from nonlinear, complex data and are divided into shallow and deep structure methods (DSM). Shallow methods such as SVM [21], ANN [22], wavelet neural networks [23], random forest [24], and ELM [25] perform poorly unless they are combined with feature mining. Improving the performance of these models therefore requires additional feature extraction and selection methods, which remains a challenging problem [20,26].

The AI methods are further categorized into machine learning and deep learning. Several machine learning studies have addressed electricity load forecasting. Chen et al. used SVR for power consumption forecasting using electricity consumption and temperature data [27]. Similarly, in [28], an SVR-based hybrid electricity forecasting model was developed, in which the SVR model was integrated with seasonal index adjustment and the fruit fly optimization algorithm for better performance. Zhong et al. [29] proposed a vector field-based SVR model for energy prediction that transforms the nonlinearity of the feature space into linearity to address the nonlinear relationship between the model and the input data. C. Li et al. conducted a study based on Random Forest Regression (RFR): features are first extracted in the frequency domain using the fast Fourier transform, and an RFR model is then used for simulation and prediction [30]. Similarly, several deep learning models have been developed for short-term electricity load forecasting. W. Kong et al. used an LSTM recurrent network for electricity forecasting [31]. Another study [32] proposed a bidirectional LSTM with an attention mechanism and a rolling update technique for electricity forecasting. A recurrent neural network (RNN) based on a pooling mechanism was developed in [20] to address overfitting.

Feature extraction methods include different regular patterns, spectral analysis, and noise. However, these methods reduce the accuracy for meter-level load [20] because of the low proportion of regular patterns in electricity data. DSM, also called deep neural networks (DNNs), address the challenges of shallow methods. DNN-based models rely on multi-layer processing and learn hierarchical features from input data. For sequence and pattern learning, LSTM [20,26] and CNN [33] are the most powerful architectures recently proposed. However, LSTM cannot capture the long-tailed dependencies in raw time series data [34].

Similarly, CNNs cannot learn the temporal features of power consumption. Therefore, hybrid models have been developed to forecast power consumption effectively. T. Y. Kim et al. combine CNN with LSTM for power consumption prediction [35], while another study [36] used CNN with bidirectional LSTM. Z. A. Khan et al. developed a CNN-LSTM-autoencoder-based model for short-term load forecasting [37]. Furthermore, a CNN with a GRU-based model is developed in [38]. These models address the problems of DNNs, but their prediction accuracy is not yet reliable enough for real-time implementation. Therefore, we developed a two-stage framework for short-term electricity load forecasting in this work. In the first stage, the raw electricity consumption data collected from a residential house are preprocessed to handle missing values, outliers, etc. The refined data are then fed to our newly proposed R-CNN with ML-LSTM architecture to address the concerns of DNNs and improve the forecasting performance for effective power management.

3. Proposed Method

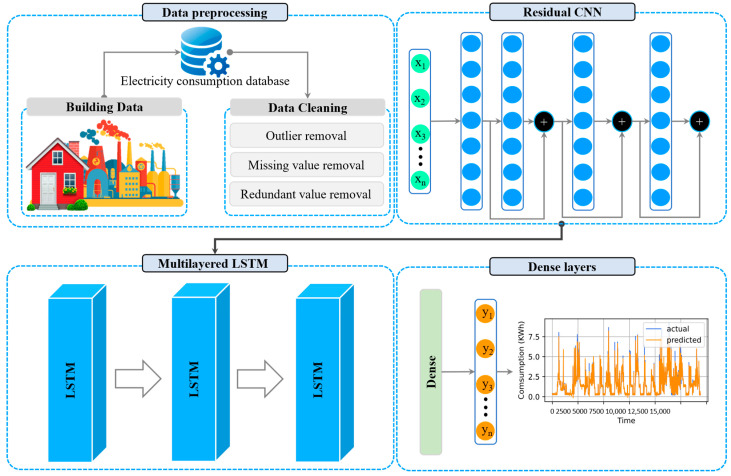

The overall architecture of R-CNN with ML-LSTM for short-term electricity load forecasting is shown in Figure 1. A two-stage framework is presented, which includes data preprocessing and the proposed R-CNN with ML-LSTM architecture. Data preprocessing includes filling missing values, removing outliers, and normalizing the data for efficient training. The second stage comprises the R-CNN with ML-LSTM architecture, where R-CNN is employed for pattern learning, while the ML-LSTM layers are incorporated to learn the sequential information of electricity consumption data. Each step of the proposed framework is further explained in the following sub-sections.

Figure 1.

Proposed framework for electricity consumption forecasting.

3.1. Data Preprocessing

Data generated by smart meter sensors contain outliers and missing values for several reasons, such as meter faults, weather conditions, unmanageable supply, storage issues, etc. [39], and must be preprocessed before training. Herein, we apply a dedicated preprocessing step. For evaluating the proposed method, we used the IHEPC dataset, which includes the above-mentioned erroneous values. In addition, the performance is evaluated over the PJM benchmark dataset. To remove the outlier values in the dataset, we used the three-sigma rule of thumb [40] according to Equation (1).

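$$\left| A_i - \operatorname{avg}(A) \right| > 3\,\operatorname{std}(A) \;\Longrightarrow\; A_i \text{ is an outlier} \tag{1}$$

where $A_i$ is an element of the series $A$ and represents the power consumption over one interval (minute, hour, day, etc.), $\operatorname{avg}(A)$ is the average of $A$, and $\operatorname{std}(A)$ is the standard deviation of $A$. Missing values are recovered by interpolation, as presented in Equation (2).

$$F(A_i) = \begin{cases} \dfrac{A_{i-1} + A_{i+1}}{2}, & \text{if } A_i \text{ is NaN and } A_{i-1}, A_{i+1} \text{ are not NaN} \\ A_i, & \text{otherwise} \end{cases} \tag{2}$$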

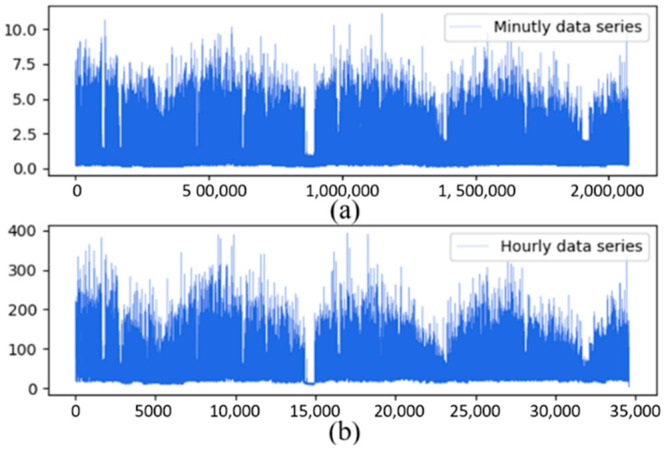

If a value is missing or null, we mark it as NaN. The IHEPC dataset was recorded at a one-minute resolution, while the PJM dataset was recorded at a one-hour resolution. For daily load forecasting, the IHEPC dataset is downsampled, i.e., resampled to a lower resolution (from minutes to hours). The IHEPC dataset includes 2,075,259 records, which are downsampled into 34,588 hourly records, as shown in Figure 2.

Figure 2.

Data down sampling where “(a)” shows actual minute data and “(b)” shows hourly down-sampled data.
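The cleaning steps described in this subsection (three-sigma outlier flagging, interpolation of missing values, and minute-to-hour downsampling) can be sketched in plain Python as follows. This is a minimal illustration and not the authors' implementation: the function names are hypothetical, flagged outliers are assumed to be treated as missing and then interpolated, gaps are assumed to be filled linearly, and downsampling is assumed to average each block.

```python
import math

def flag_outliers(values, k=3.0):
    """Mark values farther than k standard deviations from the mean as NaN."""
    valid = [v for v in values if not math.isnan(v)]
    mu = sum(valid) / len(valid)
    sigma = math.sqrt(sum((v - mu) ** 2 for v in valid) / len(valid))
    return [
        v if math.isnan(v) or abs(v - mu) <= k * sigma else math.nan
        for v in values
    ]

def interpolate_missing(values):
    """Fill each run of NaNs linearly between its nearest valid neighbours."""
    out = list(values)
    n = len(out)
    i = 0
    while i < n:
        if not math.isnan(out[i]):
            i += 1
            continue
        j = i
        while j < n and math.isnan(out[j]):
            j += 1                      # j is the first valid index after the gap
        left = out[i - 1] if i > 0 else None
        right = out[j] if j < n else None
        for idx in range(i, j):
            if left is not None and right is not None:
                frac = (idx - i + 1) / (j - i + 1)
                out[idx] = left + frac * (right - left)
            elif left is not None:
                out[idx] = left         # trailing gap: carry last value forward
            elif right is not None:
                out[idx] = right        # leading gap: carry first value backward
        i = j
    return out

def downsample(values, factor=60):
    """Average consecutive blocks of `factor` samples (60 minutes -> 1 hour)."""
    return [
        sum(values[s:s + factor]) / len(values[s:s + factor])
        for s in range(0, len(values), factor)
    ]

# Toy series: 20 readings with one spike and one missing value (assumed data).
minute_load = [1.0] * 10 + [100.0] + [1.0] * 9
minute_load[3] = math.nan
cleaned = interpolate_missing(flag_outliers(minute_load))
hourly = downsample(cleaned, factor=10)   # toy factor; the text uses 60
```

With a factor of 60, a series of 2,075,259 minute records yields 34,588 blocks (the last one partial), which agrees with the record count reported above.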

After data cleaning, we apply a data transformation to bring the cleaned data into a format more suitable for effective training. First, we use a power transformation to remove shifts and make the data distribution more Gaussian-like. Power transformations include Box–Cox [41] and Yeo–Johnson [42]. Box–Cox requires strictly positive values, while Yeo–Johnson supports both negative and positive values. In this work, we used the Box–Cox technique to remove a shift from the electricity data distribution. This work uses univariate electricity load forecasting datasets, so the Box–Cox transformation for a single parameter is shown in Equation (3).

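$$x_i^{(\lambda)} = \begin{cases} \dfrac{x_i^{\lambda} - 1}{\lambda}, & \lambda \neq 0 \\ \ln x_i, & \lambda = 0 \end{cases} \tag{3}$$

where $x_i$ is a positive consumption value and $\lambda$ is the transformation parameter.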

Finally, min–max normalization converts the data into a fixed range because deep learning networks are sensitive to the scale of the input data. Min–max normalization is shown in Equation (4).

$$x' = \frac{x - x_{\min}}{x_{\max} - x_{\min}} \tag{4}$$

where $x$ is the actual data, while $x_{\min}$ is the minimum and $x_{\max}$ is the maximum value in the dataset.
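The two transformations described above can be illustrated with a short sketch. The function names and the value of the Box–Cox parameter are assumptions made for this example; in practice the parameter is usually estimated from the data (for instance by maximum likelihood) rather than fixed by hand.

```python
import math

def box_cox(values, lam):
    """One-parameter Box-Cox transform; inputs must be strictly positive."""
    if any(v <= 0 for v in values):
        raise ValueError("Box-Cox is defined only for strictly positive values")
    if lam == 0:
        return [math.log(v) for v in values]
    return [(v ** lam - 1.0) / lam for v in values]

def min_max_scale(values):
    """Rescale values linearly so the minimum maps to 0 and the maximum to 1."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]    # constant series: avoid division by zero
    return [(v - lo) / (hi - lo) for v in values]

load = [1.0, 4.0, 9.0]                  # toy consumption values (assumed data)
transformed = box_cox(load, lam=0.5)    # lam=0.5 is a hypothetical choice
scaled = min_max_scale(transformed)
```

Because min–max scaling depends on the minimum and maximum, these statistics are normally computed on the training split and reused for the test split so that the forecasts can be mapped back to the original units.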

3.2. R-CNN with ML-LSTM

The proposed architecture integrates R-CNN with ML-LSTM for power load forecasting. R-CNN and ML-LSTM can store complex fluctuating trends and extract complicated features for electricity load forecasting. First, the R-CNN layers extract patterns, which are then passed to ML-LSTM as input for learning. CNN is a well-known deep learning architecture consisting of four types of layers: convolutional, pooling, fully connected, and regression [43,44]. The convolutional layers include multiple convolution filters, which perform a convolution between the filter weights and the region of the input volume they are connected to, generating a feature map [45,46]. The basic equation of the convolutional layer operation is shown in Equation (5).

$$a^{l}_{i,o} = f\left(\mathbf{w}^{l}_{o} \cdot \mathbf{x}^{l}_{i} + b^{l}_{o}\right) \tag{5}$$

where $\mathbf{w}^{l}_{o}$ and $b^{l}_{o}$ are the weights and the bias of the $o$th convolution filter in the $l$th layer, $\mathbf{x}^{l}_{i}$ is the input region at location $i$, and $f(\cdot)$ is the activation function. In the convolution operation, the weights are shared across the whole input region, which is known as weight sharing. Weight sharing significantly decreases the computation time and the number of training parameters. After convolution, the pooling operation is performed. The pooling layer reduces the feature map resolution to aggregate input features [47,48]. The output of the pooling layer is shown in Equation (6).

$$y^{l}_{i,o} = \operatorname{pool}\left(a^{l}_{m,o}\right), \quad \forall m \in R_{i} \tag{6}$$

where $R_{i}$ is the region around location $i$. CNN has three types of pooling layers: max, min, and average pooling. A general CNN comprises several convolutional and pooling layers. Before the regression layer, fully connected layers are typically placed, in which every neuron in one layer is connected to every neuron in the next layer. The main purpose of the fully connected layers is to map the learned feature distribution into a single space for high-level reasoning. The regression layer produces the final output of the CNN model.
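To make the convolution and pooling operations concrete for a one-dimensional load series, the following sketch applies a single filter with a ReLU activation and then max pooling. The kernel values, the choice of ReLU, and the function names are assumptions for illustration; the source does not specify them.

```python
def conv1d(x, kernel, bias=0.0):
    """Valid 1D convolution (cross-correlation) with one filter, then ReLU."""
    k = len(kernel)
    out = []
    for i in range(len(x) - k + 1):
        # The same kernel weights are reused at every location (weight sharing).
        z = sum(w * x[i + j] for j, w in enumerate(kernel)) + bias
        out.append(max(0.0, z))
    return out

def max_pool1d(a, size=2):
    """Non-overlapping max pooling; a trailing partial window is dropped."""
    return [max(a[i:i + size]) for i in range(0, len(a) - size + 1, size)]

x = [0.0, 1.0, 3.0, 2.0, 5.0, 4.0, 6.0]       # toy normalized load values
feature_map = conv1d(x, kernel=[-1.0, 0.0, 1.0])
pooled = max_pool1d(feature_map, size=2)
```

Here the three kernel weights are the only trainable weights of the filter regardless of the input length, which is the parameter saving that weight sharing provides.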

Due to their strong feature extraction ability, CNN architectures are extensively applied to image classification, video classification, time series, etc. In time-series forecasting, these models are used for traffic [49,50], renewables [51], election prediction [52], and power forecasting [53]. Recent studies of image classification show the strong performance of CNNs. However, as the network depth increases beyond a certain level, a degradation problem occurs in which the model performance saturates. Experiments show that this degradation is an optimization problem and is not caused by overfitting. To address the degradation concern, the R-CNN architecture has been developed [54]. A conventional CNN learns the desired mapping directly, i.e., a function $H(x)$ of the input $x$, whereas the R-CNN learns the residual function $F(x) = H(x) - x$, so that each residual block outputs $F(x) + x$.
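The residual idea can be sketched with a single-filter block on a one-dimensional sequence. This is an illustrative toy, not the layer configuration of the proposed model: the zero padding, the ReLU activation, and the function names are assumptions, and the kernel length is assumed to be odd.

```python
def conv1d_same(x, kernel, bias=0.0):
    """1D convolution with zero padding so the output length equals len(x)."""
    pad = len(kernel) // 2              # assumes an odd kernel length
    padded = [0.0] * pad + list(x) + [0.0] * pad
    return [
        sum(w * padded[i + j] for j, w in enumerate(kernel)) + bias
        for i in range(len(x))
    ]

def relu(values):
    return [max(0.0, v) for v in values]

def residual_block(x, kernel, bias=0.0):
    """Return F(x) + x, where F is a convolution followed by ReLU."""
    fx = relu(conv1d_same(x, kernel, bias))      # residual branch F(x)
    return [f + xi for f, xi in zip(fx, x)]      # skip connection adds x back

x = [1.0, 2.0, 3.0]
identity_out = residual_block(x, kernel=[0.0, 0.0, 0.0])   # F(x) = 0
doubled_out = residual_block(x, kernel=[0.0, 1.0, 0.0])    # F(x) = x
```

When the residual branch outputs zero, the block reduces to the identity mapping, so adding such a block cannot make the representation worse than its input. This is the intuition behind why residual connections ease the optimization of deeper networks.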

ResNet solves the degradation problem and achieves satisfactory results on image recognition data, but electricity consumption is sequential time series data, and a CNN architecture alone cannot learn the sequential features of power consumption data. Therefore, the R-CNN with ML-LSTM architecture is developed in this study for electricity load forecasting. The R-CNN layers extract spatial information from the electricity consumption data, and the extracted features are then fed to the ML-LSTM as input for temporal learning.

The output of R-CNN is then forwarded to the ML-LSTM architecture, which is responsible for storing time information. The ML-LSTM maintains long-term memory by merging its units to update the earlier hidden state, aiming to understand temporal relationships in the sequence. A mechanism of three gate units determines the state of each memory unit through multiplication operations. The gate units of the LSTM are the input gate, the forget gate, and the output gate. The memory cells are updated with an activation. The operation of each gate is shown in Equations (7)–(9), where $i_t$, $f_t$, and $o_t$ denote the outputs of the input, forget, and output gates, $\sigma$ is the activation function, $W$ represents the weights, $b$ is the bias, $x_t$ is the input at time step $t$, and $h_{t-1}$ is the previous hidden state.

$$i_t = \sigma\left(W_i \cdot [h_{t-1}, x_t] + b_i\right) \tag{7}$$

$$f_t = \sigma\left(W_f \cdot [h_{t-1}, x_t] + b_f\right) \tag{8}$$

$$o_t = \sigma\left(W_o \cdot [h_{t-1}, x_t] + b_o\right) \tag{9}$$
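A single LSTM time step can be sketched for one scalar unit as follows. The three gates follow the description above; the candidate state and the cell and hidden state updates use the standard LSTM formulation, which the text does not spell out and is therefore an assumption here, as are the function and parameter names.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def lstm_step(x_t, h_prev, c_prev, params):
    """One step of a single-unit LSTM; params maps a name to (w_x, w_h, b)."""
    def pre_activation(name):
        w_x, w_h, b = params[name]
        return w_x * x_t + w_h * h_prev + b

    i_t = sigmoid(pre_activation("i"))      # input gate
    f_t = sigmoid(pre_activation("f"))      # forget gate
    o_t = sigmoid(pre_activation("o"))      # output gate
    g_t = math.tanh(pre_activation("g"))    # candidate cell state (standard LSTM)
    c_t = f_t * c_prev + i_t * g_t          # keep part of old memory, add new
    h_t = o_t * math.tanh(c_t)              # expose part of the memory
    return h_t, c_t

# With all-zero parameters every gate outputs 0.5 and the candidate is 0.
zero_params = {name: (0.0, 0.0, 0.0) for name in ("i", "f", "o", "g")}
h_t, c_t = lstm_step(x_t=1.0, h_prev=0.0, c_prev=2.0, params=zero_params)
```

In a multilayered LSTM, the sequence of hidden states produced by one layer serves as the input sequence of the next layer.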

3.3. Architecture Design

The proposed R-CNN with ML-LSTM is built from three types of layers: R-CNN, ML-LSTM, and fully connected layers. The kernel size, number of filters, and strides of the R-CNN layers are adjustable according to the model’s performance. Depending on the input data, adjusting these parameters can change the learning speed and the performance considerably [55], and the effect can be confirmed by increasing or decreasing them. We used a different kernel size in each layer to minimize the loss of temporal information. The data pass through the residual convolutional layers, followed by the pooling layer, for pattern learning. The output is then fed to the ML-LSTM for sequence learning and forwarded to fully connected (FC) layers for the final forecast. Table 1 shows the layer types, kernel sizes, and parameter counts of the R-CNN with ML-LSTM network.

Table 1.

The internal architecture of R-CNN and ML-LSTM.

| Layer | Filters / units | Kernel size | Parameters |
|---|---|---|---|
| Input | - | - | - |
| Convolutional (conv_1) | 32 | 7 | 10,816 |
| conv_2 | 32 | 5 | 20,544 |
| Add [conv_1, conv_2] | - | - | - |
| conv_3 | 64 | 3 | 12,352 |
| Add [conv_2, conv_3] | - | - | - |
| conv_4 | 128 | 1 | 4,160 |
| Add [conv_3, conv_4] | - | - | - |
| LSTM | 100 | - | 66,000 |
| LSTM | 100 | - | 80,400 |
| LSTM | 100 | - | 80,400 |
| FC | 128 | - | 12,928 |
| FC | 60 | - | 7,740 |
| Total parameters | | | 295,340 |
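The LSTM and FC parameter counts in Table 1 can be cross-checked with the usual closed-form formulas for layers with bias terms. The sketch below is only an arithmetic check under that assumption; the helper names are hypothetical, and the input width of the first LSTM layer is inferred from the count rather than stated in the source.

```python
def lstm_params(input_dim, units):
    """Four gate blocks, each with input weights, recurrent weights, and bias."""
    return 4 * (units * (input_dim + units) + units)

def dense_params(input_dim, units):
    """Fully connected layer: one weight per input-output pair plus biases."""
    return input_dim * units + units

# Second and third LSTM layers: 100 units fed by 100 features.
stacked_lstm = lstm_params(input_dim=100, units=100)

# FC layers: 100 -> 128 and 128 -> 60.
fc_1 = dense_params(input_dim=100, units=128)
fc_2 = dense_params(input_dim=128, units=60)

# Sum of the per-layer counts listed in Table 1.
listed_counts = [10816, 20544, 12352, 4160, 66000, 80400, 80400, 12928, 7740]
total = sum(listed_counts)
```

Under the same formula, the 66,000 parameters of the first LSTM layer would correspond to an input of 64 features per time step. The convolutional counts depend on input channel widths that the table does not state, so they are not reproduced here.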

4. Results

The experimental setup, evaluation metrics, dataset, performance assessment over hourly data, and performance assessment over daily data of R-CNN with the ML-LSTM model are briefly discussed in the following section.

4.1. Experimental Setup

To validate the effectiveness of the proposed approach, comprehensive experiments are carried out on the IHEPC dataset. The R-CNN with ML-LSTM is trained on an Intel Core i7 CPU with 32 GB RAM and a GeForce GTX 2060 GPU running Windows 10. The implementation was performed in Python 3.5 using the Keras framework.

4.2. Evaluation Metrics

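The model performance is evaluated using the mean square error (MSE), mean absolute error (MAE), root mean square error (RMSE), coefficient of determination ($R^2$), and mean absolute percentage error (MAPE). MAE measures the closeness between actual and forecasted values, MSE calculates the mean squared error, RMSE is the square root of MSE, $R^2$ indicates the quality of the model fit and typically ranges from 0 to 1, where values closer to 1 indicate better prediction performance, and MAPE is the mean absolute error relative to the actual values, expressed as a percentage. The metrics are defined in Equations (10)–(14), where $y_i$ is the actual power consumption value, $\hat{y}_i$ is the forecasted value, $\bar{y}$ is the mean of the actual values, and $n$ is the number of samples.

$$\mathrm{MSE} = \frac{1}{n}\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2 \tag{10}$$

$$\mathrm{MAE} = \frac{1}{n}\sum_{i=1}^{n}\left|y_i - \hat{y}_i\right| \tag{11}$$

$$\mathrm{RMSE} = \sqrt{\frac{1}{n}\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2} \tag{12}$$

$$R^2 = 1 - \frac{\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2}{\sum_{i=1}^{n}\left(y_i - \bar{y}\right)^2} \tag{13}$$

$$\mathrm{MAPE} = \frac{100}{n}\sum_{i=1}^{n}\left|\frac{y_i - \hat{y}_i}{y_i}\right| \tag{14}$$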
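The five metrics can be computed directly from paired actual and forecasted values. The sketch below uses the standard definitions; the function names and the toy values are assumptions for illustration.

```python
import math

def mse(y, y_hat):
    return sum((a - f) ** 2 for a, f in zip(y, y_hat)) / len(y)

def mae(y, y_hat):
    return sum(abs(a - f) for a, f in zip(y, y_hat)) / len(y)

def rmse(y, y_hat):
    return math.sqrt(mse(y, y_hat))

def r2(y, y_hat):
    """Coefficient of determination: 1 - residual SS / total SS."""
    mean_y = sum(y) / len(y)
    ss_res = sum((a - f) ** 2 for a, f in zip(y, y_hat))
    ss_tot = sum((a - mean_y) ** 2 for a in y)
    return 1.0 - ss_res / ss_tot

def mape(y, y_hat):
    """Mean absolute percentage error; actual values must be non-zero."""
    return 100.0 / len(y) * sum(abs((a - f) / a) for a, f in zip(y, y_hat))

actual = [2.0, 4.0, 6.0, 8.0]       # toy values, not taken from the datasets
forecast = [2.5, 3.5, 6.5, 7.5]
scores = {
    "MSE": mse(actual, forecast),
    "MAE": mae(actual, forecast),
    "RMSE": rmse(actual, forecast),
    "R2": r2(actual, forecast),
    "MAPE": mape(actual, forecast),
}
```

Since RMSE is the square root of MSE, reported pairs can be cross-checked against each other. For example, an RMSE of 0.0325 squares to about 0.0011.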

4.3. Datasets

The R-CNN with ML-LSTM model is evaluated on the IHEPC dataset from the UCI repository and on the PJM datasets. The IHEPC dataset comprises nine attributes: date and time variables, active and reactive power, voltage, intensity, and three submetering variables. The IHEPC attributes and their units are described in Table 2. The IHEPC dataset was collected from a residential building in France between 2006 and 2010. PJM (Pennsylvania-New Jersey-Maryland) is a regional transmission organization that operates the eastern electricity grid of the US. PJM transmits electricity to several US regions, including Maryland, Michigan, Delaware, etc. The power consumption data are available on PJM’s official website and are recorded at a one-hour resolution in megawatts.

Table 2.

IHEPC dataset attributes and units.

| Attributes | Description | Units |
|---|---|---|
| Date and time | Range of datetime values | dd/mm/yyyy and hh:mm:ss |
| Global active power, global reactive power, and intensity | Minutely averaged global active power (1), global reactive power (2), and intensity (3) values | kilowatt (kW) for (1) and (2); ampere (A) for (3) |
| Voltage | Minutely averaged voltage values | volt (V) |

4.4. Comparative Analysis

The performance of R-CNN with the ML-LSTM model is compared to other models over residential electricity consumption IHEPC and regional electricity forecasting PJM datasets. The performance of the proposed model over these datasets and its comparison with other models are clarified in the subsequent sections.

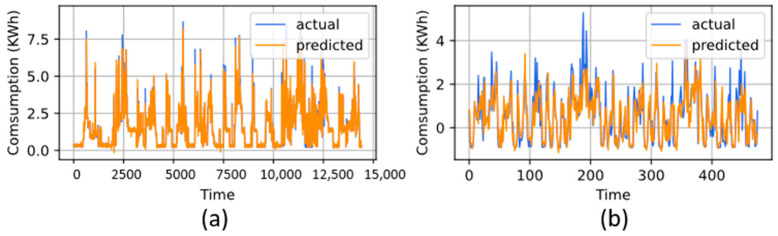

5. Evaluation of IHEPC Dataset

The proposed model is evaluated on the IHEPC dataset for hourly and daily load forecasting, where the R-CNN with ML-LSTM achieved satisfactory results. The performance on the test dataset for hourly and daily load forecasting is shown in Figure 3a,b. The comparison with other baseline methods for hourly load forecasting is presented in Table 3. For hourly load forecasting, the results are compared with linear regression [35], ANN [56], CNN [37], CNN-LSTM [35], CNN-BDLSTM [36], CNNLSTM-autoencoder [37], SE-AE [57], GRU [58], FCRBM [59], CNNESN [60], residual CNN stacked LSTM [61], CNN-BiGRU [62], CNN-GRU [63], STLF-Net [64], residual GRU [65], and Khan et al. [66]. Compared to these studies, R-CNN with ML-LSTM achieved lower error rates of 0.0325, 0.0144, 0.0011, 0.9841, and 1.024 for RMSE, MAE, MSE, R2, and MAPE, respectively.

Figure 3.

Visual results of R-CNN with ML-LSTM for IHEPC (a) hourly and (b) daily.

Table 3.

Performance comparison of R-CNN and ML-LSTM with state-of-the-art over hourly IHEPC dataset.

| Method | RMSE | MAE | MSE | R2 | MAPE |
|---|---|---|---|---|---|
| Linear regression [35] | 0.6570 | 0.5022 | 0.4247 | - | 83.74 |
| ANN [56] | 1.15 | 1.08 | - | - | - |
| CNN [37] | 0.67 | 0.47 | 0.37 | - | - |
| CNNLSTM [35] | 0.595 | 0.3317 | 0.3549 | - | 32.83 |
| CNN-BDLSTM [36] | 0.565 | 0.346 | 0.319 | - | 29.10 |
| CNNLSTM-autoencoder [37] | 0.47 | 0.31 | 0.19 | - | - |
| SE-AE [57] | - | 0.395 | 0.384 | - | - |
| GRU [58] | 0.41 | 0.19 | 0.17 | - | 34.48 |
| FCRBM [59] | 0.666 | - | - | −0.0925 | - |
| CNNESN [60] | 0.0472 | 0.0266 | 0.0022 | - | - |
| Residual CNN Stacked LSTM [61] | 0.058 | 0.003 | 0.038 | - | - |
| CNN-BiGRU [62] | 0.42 | 0.29 | 0.18 | - | - |
| CNN-GRU [63] | 0.47 | 0.33 | 0.22 | - | - |
| STLF-Net [64] | 0.4386 | 0.2674 | 0.1924 | - | 36.24 |
| Residual GRU [65] | 0.4186 | 0.2635 | 0.1753 | - | - |
| ESN-CNN [66] | 0.2153 | 0.1137 | 0.0463 | - | - |
| Proposed | 0.0325 | 0.0144 | 0.0011 | 0.9841 | 1.024 |

On the hourly data, the proposed model thus secured lower error rates than the baseline models. Similarly, the performance on the daily data is compared with regression [35], CNN [37], LSTM [35], CNN-LSTM [35], and FCRBM [59], where the detailed results of each study are given in Table 4. The R-CNN with ML-LSTM model also reduces the error rates on the daily dataset and achieved 0.0447, 0.0132, 0.002, 0.9759, and 2.457 for RMSE, MAE, MSE, R2, and MAPE, respectively, for daily load forecasting.

Table 4.

Performance comparison of R-CNN and ML-LSTM with state-of-the-art over daily IHEPC dataset.

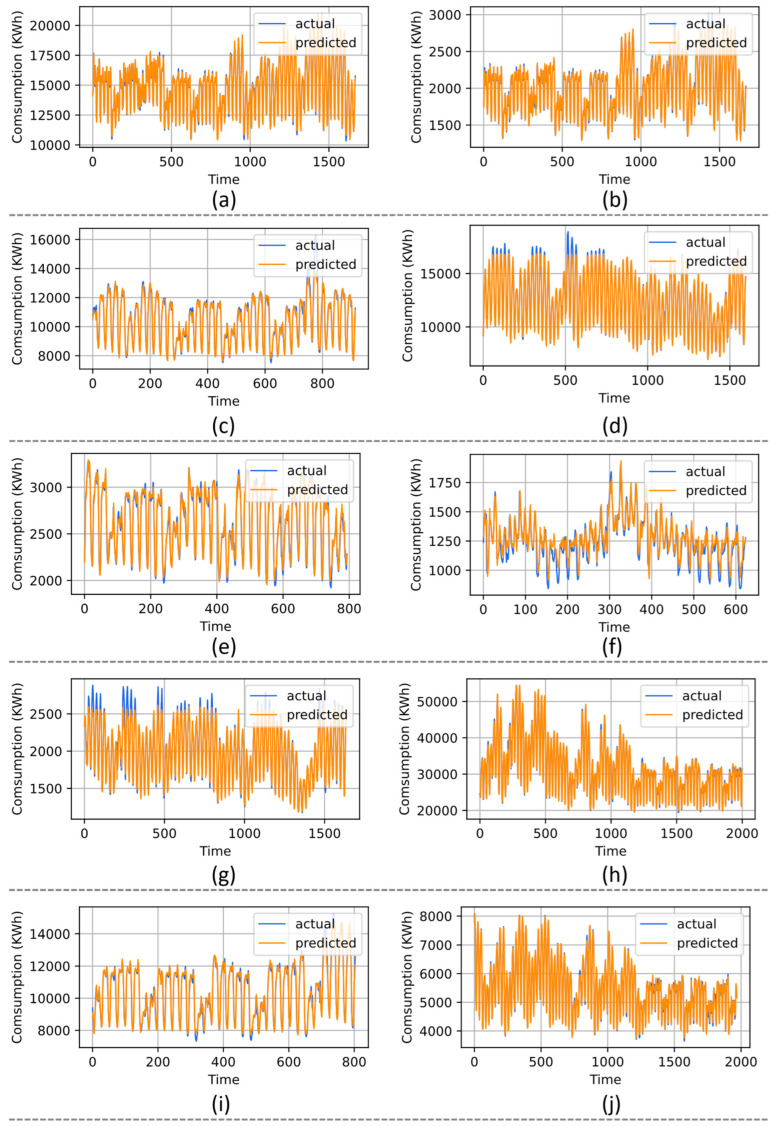

6. Evaluation of the PJM Dataset

The R-CNN with ML-LSTM is also evaluated over several PJM datasets for daily load forecasting. The PJM benchmark includes 14 electricity load forecasting datasets, and for the experiments, we chose the 10 datasets selected by [61]. The comparison for these 10 regional datasets is given in Table 5. The performance of R-CNN with ML-LSTM is compared with Mujeeb et al. [67], Gao et al. [68], Chou et al. [69], Khan et al. [61], and Han et al. [58]. The R-CNN with ML-LSTM secures lower error metrics for all datasets, and the prediction results for all datasets in the PJM region are shown in Figure 4.

Table 5.

Performance comparison of the proposed model with state-of-the-art forecasting methods over the PJM hourly dataset.

| Dataset | Method | RMSE | MAE | MSE | R2 | MAPE |
|---|---|---|---|---|---|---|
| AEP | Mujeeb et al. [67] | 0.386 | - | - | - | 1.08 |
| | Gao et al. [68] | 0.49 | - | - | - | 1.14 |
| | Han et al. [58] | 0.054 | - | - | - | - |
| | Khan et al. [61] | 0.031 | 0.001 | 0.027 | - | - |
| | Proposed | 0.0223 | 0.0163 | 0.0005 | 0.9907 | 0.5504 |
| DAYTON | Khan et al. [61] | 0.046 | 0.033 | 0.002 | - | - |
| | Proposed | 0.0206 | 0.0144 | 0.0004 | 0.9911 | 0.4982 |
| COMED | Khan et al. [61] | 0.044 | 0.030 | 0.002 | - | - |
| | Proposed | 0.0216 | 0.0131 | 0.0005 | 0.9906 | 0.5475 |
| DOM | Khan et al. [61] | 0.057 | 0.039 | 0.003 | - | - |
| | Proposed | 0.0212 | 0.0138 | 0.0005 | 0.9905 | 0.5987 |
| DEOK | Khan et al. [61] | 0.053 | 0.036 | 0.003 | - | - |
| | Proposed | 0.0174 | 0.0129 | 0.0003 | 0.9932 | 0.3974 |
| EKPC | Khan et al. [61] | 0.055 | 0.034 | 0.003 | - | - |
| | Proposed | 0.0274 | 0.0202 | 0.0008 | 0.9882 | 0.7965 |
| DUQ | Khan et al. [61] | 0.054 | 0.041 | 0.003 | - | - |
| | Proposed | 0.0430 | 0.0277 | 0.0009 | 0.9975 | 0.8234 |
| PJME | Khan et al. [61] | 0.043 | 0.031 | 0.002 | - | - |
| | Proposed | 0.0199 | 0.0128 | 0.0004 | 0.9913 | 0.4721 |
| NI | Khan et al. [61] | 0.050 | 0.033 | 0.002 | - | - |
| | Proposed | 0.0178 | 0.0129 | 0.0003 | 0.9930 | 0.3748 |
| PJMW | Khan et al. [61] | 0.038 | 0.027 | 0.001 | - | - |
| | Proposed | 0.0145 | 0.0102 | 0.0002 | 0.9949 | 0.2864 |

Figure 4.

Visual results of the proposed model over the PJM datasets: (a) AEP, (b) DAYTON, (c) COMED, (d) DOM, (e) DEOK, (f) EKPC, (g) DUQ, (h) PJME, (i) NI, and (j) PJMW.

7. Conclusions

A two-phase framework is proposed in this work for power load forecasting. Data cleaning is the first phase, in which data preprocessing strategies are applied to the raw data to prepare it for effective training. In the second phase, a deep R-CNN with ML-LSTM architecture is developed, where the R-CNN learns patterns from electricity data and its outputs are fed to the ML-LSTM layers. Electricity consumption is time series data that includes spatial and temporal features. In this work, the R-CNN layers extract spatial features, while the ML-LSTM architecture is incorporated for sequence learning. The proposed model was tested on residential and commercial benchmark datasets and achieved satisfactory results. The IHEPC data were used for residential power consumption forecasting, while the PJM dataset was used for commercial evaluation. The experiments cover daily and hourly power consumption forecasting and show substantially decreased error rates. In the future, the proposed model will be tested on medium-term and long-term electricity load forecasting. In addition, we will integrate environmental sensor data that help to predict future electricity consumption. Furthermore, we intend to investigate the performance of the R-CNN with ML-LSTM in other prediction domains such as fault prediction, renewable power generation prediction, and traffic flow prediction.

Acknowledgments

The authors extend their appreciation to the Deputyship for Research & Innovation, Ministry of Education, Saudi Arabia for funding this research work through the project number (QU-IF-04-02-28647). The authors also thank Qassim University for technical support.

Author Contributions

Formal analysis, S.H.; Funding acquisition, M.I.; Investigation, W.A.; Methodology, S.H.; Project administration, M.F.A.; Resources, S.A.; Software, M.I.; Supervision, S.A.; Validation, W.A.; Visualization, D.A.D.; Writing—original draft, M.F.A. and S.H.; Writing—review & editing, D.A.D., W.A., M.F.A., S.A. and M.I. All authors have read and agreed to the published version of the manuscript.

Conflicts of Interest

The authors declare no conflict of interest.

Funding Statement

This research work was funded by the Deputyship for Research & Innovation, Ministry of Education, Saudi Arabia through the project number (QU-IF-04-02-28647).

Footnotes

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1. Yar H., Imran A.S., Khan Z.A., Sajjad M., Kastrati Z. Towards smart home automation using IoT-enabled edge-computing paradigm. Sensors. 2021;21:4932. doi: 10.3390/s21144932.

- 2. Vrablecová P., Ezzeddine A.B., Rozinajová V., Šárik S., Sangaiah A.K. Smart grid load forecasting using online support vector regression. Comput. Electr. Eng. 2018;65:102–117. doi: 10.1016/j.compeleceng.2017.07.006.

- 3. Sopelsa Neto N.F., Stefenon S.F., Meyer L.H., Ovejero R.G., Leithardt V.R.Q. Fault Prediction Based on Leakage Current in Contaminated Insulators Using Enhanced Time Series Forecasting Models. Sensors. 2022;22:6121. doi: 10.3390/s22166121.

- 4. He W. Load forecasting via deep neural networks. Procedia Comput. Sci. 2017;122:308–314. doi: 10.1016/j.procs.2017.11.374.

- 5. Huang N., Lu G., Xu D. A permutation importance-based feature selection method for short-term electricity load forecasting using random forest. Energies. 2016;9:767. doi: 10.3390/en9100767.

- 6. Dang-Ha T.-H., Bianchi F.M., Olsson R. Local short term electricity load forecasting: Automatic approaches; Proceedings of the 2017 International Joint Conference on Neural Networks (IJCNN); Anchorage, AK, USA. 14–19 May 2017; pp. 4267–4274.

- 7. Sieminski A. International energy outlook. Energy Inf. Adm. (EIA) 2014;18:2.

- 8. Lam J.C., Wan K.K., Tsang C., Yang L. Building energy efficiency in different climates. Energy Convers. Manag. 2008;49:2354–2366. doi: 10.1016/j.enconman.2008.01.013.

- 9. Allouhi A., El Fouih Y., Kousksou T., Jamil A., Zeraouli Y., Mourad Y. Energy consumption and efficiency in buildings: Current status and future trends. J. Clean. Prod. 2015;109:118–130. doi: 10.1016/j.jclepro.2015.05.139.

- 10. Zhao L., Zhang J.-l., Liang R.-b. Development of an energy monitoring system for large public buildings. Energy Build. 2013;66:41–48. doi: 10.1016/j.enbuild.2013.07.007.

- 11. Ma X., Cui R., Sun Y., Peng C., Wu Z. Supervisory and Energy Management System of large public buildings; Proceedings of the 2010 IEEE International Conference on Mechatronics and Automation; Xi’an, China. 4–7 August 2010; pp. 928–933.

- 12. Khodayar M., Kaynak O., Khodayar M.E. Rough deep neural architecture for short-term wind speed forecasting. IEEE Trans. Ind. Inform. 2017;13:2770–2779. doi: 10.1109/TII.2017.2730846.

- 13. Mohan N., Soman K., Kumar S.S. A data-driven strategy for short-term electric load forecasting using dynamic mode decomposition model. Appl. Energy. 2018;232:229–244. doi: 10.1016/j.apenergy.2018.09.190.

- 14. Shi Z., Liang H., Dinavahi V. Direct interval forecast of uncertain wind power based on recurrent neural networks. IEEE Trans. Sustain. Energy. 2017;9:1177–1187. doi: 10.1109/TSTE.2017.2774195.

- 15. Bikcora C., Verheijen L., Weiland S. Density forecasting of daily electricity demand with ARMA-GARCH, CAViaR, and CARE econometric models. Sustain. Energy Grids Netw. 2018;13:148–156. doi: 10.1016/j.segan.2018.01.001.

- 16. Boroojeni K.G., Amini M.H., Bahrami S., Iyengar S., Sarwat A.I., Karabasoglu O. A novel multi-time-scale modeling for electric power demand forecasting: From short-term to medium-term horizon. Electr. Power Syst. Res. 2017;142:58–73. doi: 10.1016/j.epsr.2016.08.031.

- 17. Fumo N., Biswas M.R. Regression analysis for prediction of residential energy consumption. Renew. Sustain. Energy Rev. 2015;47:332–343. doi: 10.1016/j.rser.2015.03.035.

- 18. Vu D.H., Muttaqi K.M., Agalgaonkar A. A variance inflation factor and backward elimination based robust regression model for forecasting monthly electricity demand using climatic variables. Appl. Energy. 2015;140:385–394. doi: 10.1016/j.apenergy.2014.12.011.

- 19. Braun M., Altan H., Beck S. Using regression analysis to predict the future energy consumption of a supermarket in the UK. Appl. Energy. 2014;130:305–313. doi: 10.1016/j.apenergy.2014.05.062.

- 20. Shi H., Xu M., Li R. Deep learning for household load forecasting—A novel pooling deep RNN. IEEE Trans. Smart Grid. 2017;9:5271–5280. doi: 10.1109/TSG.2017.2686012.

- 21. Wang Y., Xia Q., Kang C. Secondary forecasting based on deviation analysis for short-term load forecasting. IEEE Trans. Power Syst. 2010;26:500–507. doi: 10.1109/TPWRS.2010.2052638.

- 22. Tsekouras G.J., Hatziargyriou N.D., Dialynas E.N. An optimized adaptive neural network for annual midterm energy forecasting. IEEE Trans. Power Syst. 2006;21:385–391. doi: 10.1109/TPWRS.2005.860926.

- 23. Chen Y., Luh P.B., Guan C., Zhao Y., Michel L.D., Coolbeth M.A., Friedland P.B., Rourke S.J. Short-term load forecasting: Similar day-based wavelet neural networks. IEEE Trans. Power Syst. 2009;25:322–330. doi: 10.1109/TPWRS.2009.2030426.

- 24. Lahouar A., Slama J.B.H. Day-ahead load forecast using random forest and expert input selection. Energy Convers. Manag. 2015;103:1040–1051. doi: 10.1016/j.enconman.2015.07.041.

- 25. Li S., Wang P., Goel L. A novel wavelet-based ensemble method for short-term load forecasting with hybrid neural networks and feature selection. IEEE Trans. Power Syst. 2015;31:1788–1798. doi: 10.1109/TPWRS.2015.2438322.

- 26. Kong W., Dong Z.Y., Hill D.J., Luo F., Xu Y. Short-term residential load forecasting based on resident behaviour learning. IEEE Trans. Power Syst. 2017;33:1087–1088. doi: 10.1109/TPWRS.2017.2688178.

- 27. Chen Y., Xu P., Chu Y., Li W., Wu Y., Ni L., Bao Y., Wang K. Short-term electrical load forecasting using the Support Vector Regression (SVR) model to calculate the demand response baseline for office buildings. Appl. Energy. 2017;195:659–670. doi: 10.1016/j.apenergy.2017.03.034.

- 28. Cao G., Wu L. Support vector regression with fruit fly optimization algorithm for seasonal electricity consumption forecasting. Energy. 2016;115:734–745. doi: 10.1016/j.energy.2016.09.065.

- 29. Zhong H., Wang J., Jia H., Mu Y., Lv S. Vector field-based support vector regression for building energy consumption prediction. Appl. Energy. 2019;242:403–414. doi: 10.1016/j.apenergy.2019.03.078.

- 30. Li C., Tao Y., Ao W., Yang S., Bai Y. Improving forecasting accuracy of daily enterprise electricity consumption using a random forest based on ensemble empirical mode decomposition. Energy. 2018;165:1220–1227. doi: 10.1016/j.energy.2018.10.113.

- 31. Kong W., Dong Z.Y., Jia Y., Hill D.J., Xu Y., Zhang Y. Short-term residential load forecasting based on LSTM recurrent neural network. IEEE Trans. Smart Grid. 2017;10:841–851. doi: 10.1109/TSG.2017.2753802.

- 32. Wang S., Wang X., Wang S., Wang D. Bi-directional long short-term memory method based on attention mechanism and rolling update for short-term load forecasting. Int. J. Electr. Power Energy Syst. 2019;109:470–479. doi: 10.1016/j.ijepes.2019.02.022.

- 33. Raza M.Q., Mithulananthan N., Li J., Lee K.Y. Multivariate ensemble forecast framework for demand prediction of anomalous days. IEEE Trans. Sustain. Energy. 2018;11:27–36. doi: 10.1109/TSTE.2018.2883393.

- 34. Alsanea M., Dukyil A.S., Riaz B., Alebeisat F., Islam M., Habib S. To Assist Oncologists: An Efficient Machine Learning-Based Approach for Anti-Cancer Peptides Classification. Sensors. 2022;22:4005. doi: 10.3390/s22114005.

- 35. Kim T.-Y., Cho S.-B. Predicting residential energy consumption using CNN-LSTM neural networks. Energy. 2019;182:72–81. doi: 10.1016/j.energy.2019.05.230.

- 36. Ullah F.U.M., Ullah A., Haq I.U., Rho S., Baik S.W. Short-Term Prediction of Residential Power Energy Consumption via CNN and Multilayer Bi-directional LSTM Networks. IEEE Access. 2019;8:123369–123380. doi: 10.1109/ACCESS.2019.2963045.

- 37. Khan Z.A., Hussain T., Ullah A., Rho S., Lee M., Baik S.W. Towards Efficient Electricity Forecasting in Residential and Commercial Buildings: A Novel Hybrid CNN with a LSTM-AE based Framework. Sensors. 2020;20:1399. doi: 10.3390/s20051399.

- 38. Afrasiabi M., Mohammadi M., Rastegar M., Stankovic L., Afrasiabi S., Khazaei M. Deep-based conditional probability density function forecasting of residential loads. IEEE Trans. Smart Grid. 2020;11:3646–3657. doi: 10.1109/TSG.2020.2972513.

- 39. Genes C., Esnaola I., Perlaza S.M., Ochoa L.F., Coca D. Recovering missing data via matrix completion in electricity distribution systems; Proceedings of the 2016 IEEE 17th International Workshop on Signal Processing Advances in Wireless Communications (SPAWC); Edinburgh, UK. 3–6 July 2016; pp. 1–6.

- 40. Habib S., Alsanea M., Aloraini M., Al-Rawashdeh H.S., Islam M., Khan S. An Efficient and Effective Deep Learning-Based Model for Real-Time Face Mask Detection. Sensors. 2022;22:2602. doi: 10.3390/s22072602.

- 41. Box G.E., Cox D.R. An analysis of transformations. J. R. Stat. Soc. Ser. B. 1964;26:211–243. doi: 10.1111/j.2517-6161.1964.tb00553.x.

- 42. Yeo I.K., Johnson R.A. A new family of power transformations to improve normality or symmetry. Biometrika. 2000;87:954–959. doi: 10.1093/biomet/87.4.954.

- 43. Zhao X., Wei H., Wang H., Zhu T., Zhang K. 3D-CNN-based feature extraction of ground-based cloud images for direct normal irradiance prediction. Sol. Energy. 2019;181:510–518. doi: 10.1016/j.solener.2019.01.096.

- 44. Yar H., Hussain T., Khan Z.A., Koundal D., Lee M.Y., Baik S.W. Vision sensor-based real-time fire detection in resource-constrained IoT environments. Comput. Intell. Neurosci. 2021;2021:5195508. doi: 10.1155/2021/5195508. [Retracted]

- 45. Wang F., Li K., Duić N., Mi Z., Hodge B.-M., Shafie-khah M., Catalão J.P. Association rule mining based quantitative analysis approach of household characteristics impacts on residential electricity consumption patterns. Energy Convers. Manag. 2018;171:839–854. doi: 10.1016/j.enconman.2018.06.017.

- 46. Ullah W., Hussain T., Khan Z.A., Haroon U., Baik S.W. Intelligent dual stream CNN and echo state network for anomaly detection. Knowl.-Based Syst. 2022;253:109456. doi: 10.1016/j.knosys.2022.109456.

- 47. Yar H., Hussain T., Khan Z.A., Lee M.Y., Baik S.W. Fire Detection via Effective Vision Transformers. J. Korean Inst. Next Gener. Comput. 2021;17:21–30.

- 48. Aladhadh S., Alsanea M., Aloraini M., Khan T., Habib S., Islam M. An Effective Skin Cancer Classification Mechanism via Medical Vision Transformer. Sensors. 2022;22:4008. doi: 10.3390/s22114008.

- 49. Ma X., Dai Z., He Z., Ma J., Wang Y., Wang Y. Learning traffic as images: A deep convolutional neural network for large-scale transportation network speed prediction. Sensors. 2017;17:818. doi: 10.3390/s17040818.

- 50. Khan Z.A., Ullah W., Ullah A., Rho S., Lee M.Y., Baik S.W. An Adaptive Filtering Technique for Segmentation of Tuberculosis in Microscopic Images. Association for Computing Machinery; New York, NY, USA: 2020. pp. 184–187.

- 51. Wang H., Yi H., Peng J., Wang G., Liu Y., Jiang H., Liu W. Deterministic and probabilistic forecasting of photovoltaic power based on deep convolutional neural network. Energy Convers. Manag. 2017;153:409–422. doi: 10.1016/j.enconman.2017.10.008.

- 52. Ali H., Farman H., Yar H., Khan Z., Habib S., Ammar A. Deep learning-based election results prediction using Twitter activity. Soft Comput. 2021;26:7535–7543. doi: 10.1007/s00500-021-06569-5.

- 53. Chen K., Chen K., Wang Q., He Z., Hu J., He J. Short-term load forecasting with deep residual networks. IEEE Trans. Smart Grid. 2018;10:3943–3952. doi: 10.1109/TSG.2018.2844307.

- 54. He K., Zhang X., Ren S., Sun J. Deep residual learning for image recognition; Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition; Las Vegas, NV, USA. 27–30 June 2016; pp. 770–778.

- 55. He K., Sun J. Convolutional neural networks at constrained time cost; Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition; Boston, MA, USA. 7–12 June 2015; pp. 5353–5360.

- 56. Rajabi R., Estebsari A. Deep Learning Based Forecasting of Individual Residential Loads Using Recurrence Plots; Proceedings of the 2019 IEEE Milan PowerTech; Milan, Italy. 23–27 June 2019; pp. 1–5.

- 57. Kim J.-Y., Cho S.-B. Electric energy consumption prediction by deep learning with state explainable autoencoder. Energies. 2019;12:739. doi: 10.3390/en12040739.

- 58. Han T., Muhammad K., Hussain T., Lloret J., Baik S.W. An efficient deep learning framework for intelligent energy management in IoT networks. IEEE Internet Things J. 2020;8:3170–3179. doi: 10.1109/JIOT.2020.3013306.

- 59. Mocanu E., Nguyen P.H., Gibescu M., Kling W.L. Deep learning for estimating building energy consumption. Sustain. Energy Grids Netw. 2016;6:91–99. doi: 10.1016/j.segan.2016.02.005.

- 60. Khan Z.A., Hussain T., Baik S.W. Boosting energy harvesting via deep learning-based renewable power generation prediction. J. King Saud Univ.-Sci. 2022;34:101815. doi: 10.1016/j.jksus.2021.101815.

- 61. Khan Z.A., Ullah A., Haq I.U., Hamdy M., Maurod G.M., Muhammad K., Hijji M., Baik S.W. Efficient Short-Term Electricity Load Forecasting for Effective Energy Management. Sustain. Energy Technol. Assess. 2022;53:102337. doi: 10.1016/j.seta.2022.102337.

- 62. Khan Z.A., Ullah A., Ullah W., Rho S., Lee M., Baik S.W. Electrical energy prediction in residential buildings for short-term horizons using hybrid deep learning strategy. Appl. Sci. 2020;10:8634. doi: 10.3390/app10238634.

- 63. Sajjad M., Khan Z.A., Ullah A., Hussain T., Ullah W., Lee M.Y., Baik S.W. A novel CNN-GRU-based hybrid approach for short-term residential load forecasting. IEEE Access. 2020;8:143759–143768. doi: 10.1109/ACCESS.2020.3009537.

- 64. Abdel-Basset M., Hawash H., Sallam K., Askar S.S., Abouhawwash M. STLF-Net: Two-stream deep network for short-term load forecasting in residential buildings. J. King Saud Univ.-Comput. Inf. Sci. 2022;34:4296–4311. doi: 10.1016/j.jksuci.2022.04.016.

- 65. Khan S.U., Haq I.U., Khan Z.A., Khan N., Lee M.Y., Baik S.W. Atrous Convolutions and Residual GRU Based Architecture for Matching Power Demand with Supply. Sensors. 2021;21:7191. doi: 10.3390/s21217191.

- 66. Khan Z.A., Hussain T., Haq I.U., Ullah F.U.M., Baik S.W. Towards efficient and effective renewable energy prediction via deep learning. Energy Rep. 2022;8:10230–10243. doi: 10.1016/j.egyr.2022.08.009.

- 67. Mujeeb S., Javaid N. ESAENARX and DE-RELM: Novel schemes for big data predictive analytics of electricity load and price. Sustain. Cities Soc. 2019;51:101642. doi: 10.1016/j.scs.2019.101642.

- 68. Gao W., Darvishan A., Toghani M., Mohammadi M., Abedinia O., Ghadimi N. Different states of multi-block based forecast engine for price and load prediction. Int. J. Electr. Power Energy Syst. 2019;104:423–435. doi: 10.1016/j.ijepes.2018.07.014.

- 69. Chou J.S., Truong D.N. Multistep energy consumption forecasting by metaheuristic optimization of time-series analysis and machine learning. Int. J. Energy Res. 2021;45:4581–4612. doi: 10.1002/er.6125.