Abstract

Purpose:

Cochlear implant (CI) recipients demonstrate variable speech recognition when listening with a CI-alone or electric-acoustic stimulation (EAS) device, which may be due in part to electric frequency-to-place mismatches created by the default mapping procedures. Performance may be improved if the filter frequencies are aligned with the cochlear place frequencies, an approach known as place-based mapping. Performance with default maps versus an experimental place-based map was compared for participants with normal hearing listening to CI-alone or EAS simulations, to estimate potential outcomes before initiating an investigation with CI recipients.

Method:

A noise vocoder simulated CI-alone and EAS devices, mapped with default or place-based procedures. The simulations were based on an actual 24-mm electrode array recipient, whose insertion angles for each electrode contact were used to estimate the respective cochlear place frequency. The default maps used the filter frequencies assigned by the clinical software. The filter frequencies for the place-based maps aligned with the cochlear place frequencies for individual contacts in the low- to mid-frequency cochlear region. For the EAS simulations, low-frequency acoustic information was filtered to simulate aided low-frequency audibility. Performance was evaluated for the AzBio sentences presented in a 10-talker masker at +5 dB signal-to-noise ratio (SNR), +10 dB SNR, and asymptote.

Results:

Performance was better with the place-based maps as compared with the default maps for both CI-alone and EAS simulations. For instance, median performance at +10 dB SNR for the CI-alone simulation was 57% correct for the place-based map and 20% for the default map. For the EAS simulation, those values were 59% and 37% correct. Adding acoustic low-frequency information resulted in a similar benefit for both maps.

Conclusions:

Reducing frequency-to-place mismatches, such as with the experimental place-based mapping procedure, produces a greater benefit in speech recognition than maximizing bandwidth for CI-alone and EAS simulations. Ongoing work is evaluating the initial and long-term performance benefits in CI-alone and EAS users.

Supplemental Material:

Cochlear implant (CI) recipients listening with a CI-alone or electric-acoustic stimulation (EAS) device vary widely in speech recognition performance (Adunka et al., 2013; Firszt et al., 2004; Gantz et al., 2016; Pillsbury et al., 2018). Variables consistently observed to influence speech recognition in adults include duration of deafness, insertion depth and scalar location of the electrode array, and duration of device use (Blamey et al., 1996, 2013; Buchman et al., 2014; Finley et al., 2008; Holden et al., 2013; O'Connell et al., 2016). Another potential source of individual differences in speech recognition is variability in the place of electric stimulation relative to the natural tonotopicity of the cochlea. Spectral shifts in the place of transduction, known as frequency-to-place mismatches, are created by the default mapping procedures for CI-alone and EAS devices. Those default mapping procedures do not account for known variability in angular insertion depth across and within electrode arrays, resulting in varying magnitudes of frequency-to-place mismatch, particularly for short (e.g., 24 mm) arrays (Canfarotta et al., 2020; Landsberger et al., 2015). Frequency-to-place mismatches negatively influence speech recognition by CI-alone users (Başkent & Shannon, 2005; Canfarotta et al., 2020; Fu & Shannon, 1999b; Fu et al., 2002; Mertens et al., 2021), as well as by participants with normal hearing listening to CI-alone simulations (Başkent & Shannon, 2003, 2007; Dorman et al., 1997; Faulkner et al., 2003; Fu & Shannon, 1999b; T. Li & Fu, 2010; Shannon et al., 1998) and EAS simulations (Dillon et al., 2021; Fu et al., 2017; Willis et al., 2020). Although some listeners can acclimate to mismatches after auditory training and/or long-term listening experience (Faulkner, 2006; Fu et al., 2002, 2005; Fu & Galvin III, 2003; T. Li & Fu, 2007; T. Li et al., 2009; Reiss et al., 2007, 2014; Rosen et al., 1999; Sagi et al., 2010; Smalt et al., 2013; Svirsky et al., 2004, 2015; Vermeire et al., 2015), initial performance may be improved if the electrode array position is incorporated into the mapping of CI-alone and EAS devices to minimize frequency-to-place mismatches. Improvements in initial performance may support consistent, long-term CI-alone or EAS device use and limit nonuse.

Default Mapping: Influence of Spectrally Shifted Information

A primary objective of the CI-alone and EAS default mapping procedures is to provide the listener with access to the full speech spectrum. The CI-alone default mapping procedure distributes the speech spectrum (e.g., 100–8500 Hz) logarithmically across the electric filters for the active channels, which roughly resembles the tonotopicity of the cochlea (basilar membrane: Greenwood, 1990; spiral ganglion: Stakhovskaya et al., 2007). The EAS default mapping procedure identifies the frequency at which unaided acoustic hearing thresholds exceed a criterion level (e.g., 65 dB HL; Vermeire et al., 2008) and assigns that frequency to the low-pass filter of electric stimulation. The remaining information is then distributed logarithmically across the electric filters for the active channels.
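As an idealized illustration of the logarithmic distribution described above, the sketch below spaces band edges geometrically between a lower and an upper frequency and takes each channel's center frequency as the geometric mean of its two edges. The helper name and the geometric-mean center are assumptions for illustration only. The clinical center frequencies listed in Table 1 are not equally spaced on a log scale (the ratio between adjacent CI-default centers falls from about 1.75 for Channels 1 and 2 to about 1.35 for Channels 11 and 12), so this sketch does not reproduce the manufacturer's allocation.

```python
import math


def log_spaced_filters(f_lo_hz, f_hi_hz, n_channels):
    """Hypothetical helper: equal log-width bands between f_lo_hz and f_hi_hz.

    Returns (edges, centers); edges has n_channels + 1 entries and each
    center is the geometric mean of its lower and upper edge.
    """
    ratio = (f_hi_hz / f_lo_hz) ** (1.0 / n_channels)  # constant edge-to-edge ratio
    edges = [f_lo_hz * ratio**k for k in range(n_channels + 1)]
    centers = [math.sqrt(lo * hi) for lo, hi in zip(edges[:-1], edges[1:])]
    return edges, centers


# A CI-alone style range vs. an EAS style range whose lower edge is raised
ci_edges, ci_centers = log_spaced_filters(100.0, 8500.0, 12)
eas_edges, eas_centers = log_spaced_filters(250.0, 8500.0, 12)
print([round(c) for c in ci_centers])
print([round(c) for c in eas_centers])
```

In this idealization, raising the lower edge from 100 to 250 Hz moves every center frequency upward, which matches the direction of the difference between the CI-default and EAS-default rows of Table 1.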

The magnitude of frequency-to-place mismatches varies widely for CI recipients listening with default maps (Canfarotta et al., 2020; Landsberger et al., 2015) and has been shown to influence early speech recognition for CI-alone users (Başkent & Shannon, 2005; Canfarotta et al., 2020; Fu & Shannon, 1999b; Fu et al., 2002; Mertens et al., 2021). For CI-alone users, recipients of short (e.g., 24 mm) arrays experience larger spectral shifts with default maps than recipients of long (e.g., 31.5 mm) arrays (Canfarotta et al., 2020). For example, Canfarotta et al. (2020) estimated the frequency-to-place mismatch at the 1500-Hz cochlear place frequency for a sample of CI-alone users with full insertions of 24-, 28-, or 31.5-mm lateral wall arrays. The mean mismatch by array length was 10 semitones (SD = 2) for the 24-mm array (n = 4), five semitones (SD = 3) for the 28-mm array (n = 32), and four semitones for the 31.5-mm array (n = 44). That is, CI-alone users with the 24-mm array had an average mismatch approaching one octave (12 semitones). For EAS users, the magnitude of the frequency-to-place mismatch depends not only on the angular insertion depth but also on the degree of residual hearing. For example, Canfarotta et al. (2020) also estimated mismatches for a sample of EAS users with full insertions of 24- and 28-mm lateral wall arrays. The mean mismatch by array length was nine semitones (SD = 6) for the 24-mm array (n = 7) and six semitones (SD = 3) for the 28-mm array (n = 16). These findings have clinical relevance because larger frequency-to-place mismatches have been associated with poorer word recognition in quiet and poorer sentence recognition in noise within the first 6 months of listening experience for CI-alone users (Canfarotta et al., 2020; Mertens et al., 2021). Some listeners demonstrate an ability to acclimate to spectrally shifted maps with auditory training and/or listening experience (Faulkner, 2006; Fu et al., 2002, 2005; Fu & Galvin III, 2003; T. Li & Fu, 2007; T. Li et al., 2009; Reiss et al., 2007, 2014; Rosen et al., 1999; Sagi et al., 2010; Smalt et al., 2013; Svirsky et al., 2004, 2015; Vermeire et al., 2015); however, acclimatization may take months to years and remains incomplete for some CI recipients (Reiss et al., 2014; Sagi et al., 2010; Svirsky et al., 2004, 2015; Tan et al., 2017).

Candidates for cochlear implantation who present with low-frequency thresholds ranging from normal levels to a moderate loss are often implanted with a short (e.g., 20–24 mm) lateral wall array, as postoperative hearing preservation is more likely with a short than with a long array (Suhling et al., 2016; Wanna et al., 2018). These patients are therefore at risk for large frequency-to-place mismatches, on the order of 10 semitones, when mapped using default procedures. CI recipients with hearing preservation are fit with EAS devices. Although EAS users benefit from access to low-frequency acoustic information (Dillon et al., 2015; Gantz et al., 2016; Gantz & Turner, 2003; Gifford et al., 2017; Helbig et al., 2011; Karsten et al., 2013; Lorens et al., 2008; Pillsbury et al., 2018), there are marked individual differences in speech recognition outcomes (Gantz et al., 2016; Pillsbury et al., 2018). Frequency-to-place mismatches may play a role in this variability (Dillon et al., 2021; Willis et al., 2020). CI recipients with postoperative severe-to-profound hearing loss are fit with CI-alone devices. Unfortunately, CI-alone performance is significantly poorer for recipients of shorter (e.g., 20–24 mm) arrays than for recipients of longer (e.g., 28 mm) arrays (Büchner et al., 2017). The number or spacing of electrode contacts could affect performance (see Friesen et al., 2001; Fu & Shannon, 1999a; Zhou, 2017), but the greater frequency-to-place mismatches that arise when default maps present the full speech spectrum on a short array could also contribute to this result.

Place-Based Mapping

An alternative to the default mapping procedure, referred to here as place-based mapping, aligns the electric filter frequencies with the cochlear place frequencies to eliminate frequency-to-place mismatches. One approach for evaluating the acute effects of place-based mapping is to compare the performance of participants with normal hearing listening to vocoder simulations of these maps. The analysis and synthesis bands of the vocoder are matched in frequency when simulating a place-based map, and they differ when simulating a map with frequency-to-place mismatches. Data obtained using these methods indicate better speech recognition with place-based maps than with default maps (with mismatches) for both CI-alone and EAS simulations (Başkent & Shannon, 2003, 2007; Dillon et al., 2021; Dorman et al., 1997; Faulkner et al., 2003; Fu et al., 2017; Fu & Shannon, 1999b; T. Li & Fu, 2010; Shannon et al., 1998; Willis et al., 2020). These results suggest that incorporating information about electrode array position into the mapping of CI-alone and EAS devices could improve speech recognition.

One caveat to this prediction is that the benefits of place-based maps may not be experienced by CI recipients of short arrays (e.g., 24 mm), because in certain situations place-based mapping does not respect the aforementioned principle of full spectral representation adhered to in default mapping. For CI-alone users, place-based mapping discards low-frequency information when the electrode array insertion does not reach the low-frequency regions of the cochlea. This could be detrimental for speech recognition, as the listener would not have access to low-frequency speech information. For instance, Faulkner et al. (2003) observed that listeners presented with CI-alone simulations had significantly poorer speech recognition with place-based maps for simulations of shallow insertion depths (e.g., ≤ 23 mm) than for deeper insertion depths, due to the loss of low-frequency information. For EAS users, place-based mapping can create a gap in frequency information between the acoustic and electric outputs. This would occur when the most apical electrode contact is positioned considerably basal to the cochlear region with aidable acoustic hearing. The presence of a spectral gap has been found to result in poorer speech recognition in EAS simulations (Dorman et al., 2005) and for EAS users (Karsten et al., 2013); however, other EAS simulation studies demonstrate that the detrimental effects of a spectral gap are reduced when the electric filter frequencies match the cochlear place frequencies (Dillon et al., 2021; Fu et al., 2017; Willis et al., 2020). An aim of this report was to compare speech recognition in noise for CI-alone and EAS simulations with default versus place-based maps modeled from a short-array (i.e., 24 mm) recipient. This simulation study was conducted to predict the initial outcomes of CI recipients with default or place-based maps.

Experimental Procedures and Conditions

The experimental place-based mapping procedure used in this simulation study differs from other place-based mapping procedures in that the electric filter frequencies were aligned in the low- to mid-frequency cochlear region, and the remaining high-frequency information was logarithmically distributed across electrode contacts in the high-frequency region. Current CI-alone and EAS devices encode acoustic information up to 8.5 kHz. One approach for place-based mapping for CI-alone devices deactivates electrode contacts at place frequencies > 8.5 kHz to align the full speech spectrum with the cochlear place frequencies (Jiam et al., 2019). In contrast, this experimental place-based mapping procedure kept all intracochlear electrode contacts active, aligned the frequency information that contributes the most to speech intelligibility (e.g., < 4 kHz; ANSI S3.5–1997), and spectrally shifted the remaining high-frequency information across the basal contacts. This procedure assumes that listeners can tolerate spectral shifts of high-frequency information when filter frequencies are aligned with the cochlear place frequency for the critical speech frequencies (e.g., 1–4 kHz; ANSI S3.5–1997). This hypothesis is supported by findings from Başkent and Shannon (2007), who demonstrated relatively good speech recognition in CI-alone simulations when the mid-frequency information was aligned, regardless of the spectral shift in other frequency regions. This experimental place-based mapping procedure also aligned the low-frequency information (e.g., < 1 kHz), with the rationale that providing better spectral resolution of low-frequency cues would improve performance in noise (Jin & Nelson, 2010; Qin & Oxenham, 2003).

Simulations in this study were based on the cochlear place frequencies of an actual 24-mm lateral wall electrode array recipient. Patients with shorter electrode arrays tend to have larger frequency-to-place mismatches with default maps than those with longer arrays (Canfarotta et al., 2020; Landsberger et al., 2015). Interest in the short (24 mm) array was based on the observation that default and place-based maps are more similar for recipients of longer (28 and 31 mm) arrays. The 24-mm array is also the shortest array used for hearing preservation cases at the study site. The modeled CI recipient had a shallower angular insertion depth (392°) than the average for 24-mm array recipients at our center (428°; Canfarotta et al., 2020). This case therefore represents a challenging scenario for both the default map (greatest magnitude of mismatch) and the experimental place-based map (low-frequency information discarded). The 12-channel vocoder simulations in this study mimicked two postoperative scenarios for an actual CI recipient: (a) preservation of functional hearing and fitting with an EAS device or (b) loss of functional hearing and fitting with a CI-alone device. The electric frequency information was derived from the clinical programming software for the default maps and from the experimental place-based mapping procedure described above for the place-based maps. There were five simulated conditions: (a) a CI-alone device with default filter frequencies (CI-default; 100–8500 Hz), (b) a CI-alone device with place-based filter frequencies (CI-place; 550–8500 Hz), (c) an EAS device with default filter frequencies (EAS-default; 250–8500 Hz), (d) an EAS device with place-based filter frequencies (EAS-place; 550–8500 Hz), and (e) a CI-alone device with EAS default filter frequencies (CI-defaultEAS; 250–8500 Hz) but no acoustic low-frequency information. The first four of these conditions represent maps that might be provided clinically, using either default or place-based mapping procedures. The primary aim for these four conditions was to compare performance between default and place-based maps, for both CI-alone and EAS configurations, using an extreme case that may be encountered clinically. The fifth condition (CI-defaultEAS; 250–8500 Hz) allowed for investigation of the influence of adding acoustic low-frequency information to spectrally shifted versus place-based maps.

Eliminating frequency-to-place mismatches using a place-based mapping procedure could have different effects for CI-alone and EAS conditions. The benefit of acoustic input in EAS may be greater with a place-based map than with a default map, either because both the electric and acoustic cues are transduced at the natural tonotopic place or because the electrically represented low-frequency information is limited when strictly aligning to cochlear place. This possibility is consistent with the results of Willis et al. (2020), who conducted EAS simulations and observed a larger improvement in speech recognition with the addition of acoustic low-frequency information for a place-based map than for a spectrally shifted map. Alternatively, acoustic information may provide low-frequency cues that aid in deciphering the spectrally shifted electric information. In this scenario, the benefit of access to low-frequency acoustic information might be larger for a default map with frequency-to-place mismatches than for a place-based map. The default mapping procedures for CI-alone and EAS devices result in different electric filter frequency assignments, which confounds assessment of the benefit of adding acoustic low-frequency information when comparing the EAS-default (i.e., 250–8500 Hz) and CI-default (i.e., 100–8500 Hz) conditions. The CI-defaultEAS condition allowed for a direct comparison of the influence of adding acoustic low-frequency information to a spectrally shifted map (EAS-default vs. CI-defaultEAS).

This report compared sentence recognition in multitalker babble for participants with normal hearing when listening to a CI-alone or EAS simulation with the default or place-based map. The hypotheses were that (a) better performance would be observed with the place-based maps than the default maps, due to the detrimental effects of frequency-to-place mismatches; (b) better performance would be observed with the EAS simulations than the CI-alone simulations, due to the beneficial contributions of acoustic low-frequency cues; and (c) a larger benefit of adding acoustic low-frequency information would be observed with place-based maps than default maps, as observed by Willis et al. (2020).

Method

Participants with normal hearing completed a sentence recognition in noise task while listening to a CI-alone or EAS simulation. The study procedures were approved by the institutional review board at the University of North Carolina at Chapel Hill, and listeners provided consent prior to participation. Listeners either received undergraduate course credit or were compensated ($15/hour) for their participation. Data from the EAS simulations were previously reported by Dillon et al. (2021).

Participants

Thirty-two young adults (25 women) between 18 and 25 years of age (M = 20 years, SD = 2 years) participated. None had prior experience listening to vocoded speech, and all passed a hearing screening prior to participation. Hearing sensitivity was assessed behaviorally in a sound booth with circumaural headphones (Sennheiser HDA 200). To qualify for inclusion, listeners had to detect pure tones for octave frequencies 0.125 to 16 kHz and for 20 kHz at ≤ 20 dB HL, which is considered within the range of normal hearing (Bess & Humes, 2003). All participants were native speakers of American English.

Stimuli

The CI-alone and EAS simulations were generated using a custom MATLAB script (MATLAB 2019a). There were five conditions: (a) a CI-alone device with default filter frequencies (CI-default; 100–8500 Hz), (b) a CI-alone device with place-based filter frequencies (CI-place; 550–8500 Hz), (c) an EAS device with default filter frequencies (EAS-default; 250–8500 Hz), (d) an EAS device with place-based filter frequencies (EAS-place; 550–8500 Hz), and (e) a CI-alone device using the EAS default filter frequencies (CI-defaultEAS; 250–8500 Hz) but no acoustic low-frequency information. The electric stimulation was simulated with a 12-channel noise vocoder that extracted the envelope of the speech stimulus within each analysis band and applied it to a noise-band carrier (details below), similar to original investigations using vocoded speech (see Shannon et al., 1995). The frequency content of the noise-band carrier controlled the place of transduction via natural tonotopicity of the normal-hearing cochlea.

Filter frequencies for the vocoder simulations were derived from analysis of the postoperative computed tomography (CT) scan of an actual CI recipient of the Flex24 electrode array (MED-EL GmbH). The Flex24 lateral wall array is 24 mm in length and features 12 stimulation channels, with Electrode 1 being the most apical contact. The CI recipient's postoperative CT confirmed a full insertion of the array. An image-guided algorithm determined the angular insertion depth and cochlear place frequency of each electrode contact (Noble et al., 2013; Zhao et al., 2019). Table 1 lists the angular insertion depth and cochlear place frequency for each electrode contact, provided by Vanderbilt University, as well as the center frequency of each channel in each condition. Frequency-to-place mismatch for each channel, calculated as the deviation in semitones between the channel center frequency and the cochlear place frequency of the corresponding contact, is listed in italics.

Table 1.

The angular insertion depth and cochlear place frequency for each channel and the center frequencies (CFs) for each simulated condition (CI-place, EAS-place, CI-default, EAS-default, and CI-defaultEAS).

| Condition | Measure | Ch. 1 | Ch. 2 | Ch. 3 | Ch. 4 | Ch. 5 | Ch. 6 | Ch. 7 | Ch. 8 | Ch. 9 | Ch. 10 | Ch. 11 | Ch. 12 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Electrode contact | Angular insertion depth (°) | 392 | 356 | 323 | 279 | 243 | 213 | 166 | 123 | 90 | 49 | 22 | 5 |
| Electrode contact | Cochlear place (Hz) | 697 | 811 | 1078 | 1434 | 2017 | 2582 | 3633 | 5017 | 6181 | 11133 | 14521 | 16584 |
| CI-place & EAS-place (550–8500 Hz) | CF (Hz) | 697 | 811 | 1078 | 1434 | 2017 | 2582 | 3633 | 5017 | 6500 | 7000 | 7500 | 8000 |
| CI-place & EAS-place (550–8500 Hz) | *Mismatch (st)* | *0* | *0* | *0* | *0* | *0* | *0* | *0* | *0* | *−1* | *8* | *11* | *13* |
| CI-default (100–8500 Hz) | CF (Hz) | 149 | 261 | 408 | 601 | 854 | 1191 | 1638 | 2233 | 3028 | 4090 | 5510 | 7412 |
| CI-default (100–8500 Hz) | *Mismatch (st)* | *27* | *20* | *17* | *15* | *15* | *13* | *14* | *14* | *12* | *17* | *17* | *14* |
| EAS-default & CI-defaultEAS (250–8500 Hz) | CF (Hz) | 293 | 393 | 527 | 707 | 948 | 1272 | 1707 | 2290 | 3072 | 4121 | 5529 | 7418 |
| EAS-default & CI-defaultEAS (250–8500 Hz) | *Mismatch (st)* | *15* | *13* | *12* | *12* | *13* | *12* | *13* | *14* | *12* | *17* | *17* | *14* |

Note. The angular insertion depth and cochlear place frequency values were provided by Vanderbilt University using postoperative imaging from an actual 24-mm lateral wall electrode array recipient. The CFs for the CI-default, EAS-default, and CI-defaultEAS conditions were obtained from the clinical programming software. Frequency-to-place mismatch, calculated as the deviation in semitones (st) between the default CFs and the cochlear place frequencies, is reported in italics. The CFs for the CI-place and EAS-place conditions were derived from the experimental place-based mapping procedure, where the electric filter frequencies of low- to mid-frequency channels were adjusted to match the CF with the cochlear place frequency. CI-place = cochlear implant–alone device with place-based filter frequencies; EAS-place = electric-acoustic stimulation device with place-based filter frequencies; CI-default = CI-alone device with default filter frequencies; EAS-default = EAS device with default filter frequencies; CI-defaultEAS = CI-alone device with EAS default filter frequencies.
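The italic mismatch rows of Table 1 can be recomputed from the listed frequencies. The sketch below assumes the mismatch is 12 · log2(place / CF), rounded to the nearest semitone; with that sign convention, a positive value means a channel's information is delivered at a cochlear place tuned to a higher frequency than the information itself. The names are illustrative. Under this assumed formula and rounding, each computed row matches the corresponding mismatch row in Table 1.

```python
import math

# Cochlear place frequencies of Electrodes 1 to 12 (Table 1), apical to basal.
PLACE_HZ = [697, 811, 1078, 1434, 2017, 2582, 3633, 5017, 6181, 11133, 14521, 16584]

# Channel center frequencies for each map (Table 1).
CF_HZ = {
    "CI-place / EAS-place": [697, 811, 1078, 1434, 2017, 2582, 3633, 5017, 6500, 7000, 7500, 8000],
    "CI-default": [149, 261, 408, 601, 854, 1191, 1638, 2233, 3028, 4090, 5510, 7412],
    "EAS-default / CI-defaultEAS": [293, 393, 527, 707, 948, 1272, 1707, 2290, 3072, 4121, 5529, 7418],
}


def mismatch_semitones(place_hz, cf_hz):
    """Assumed definition: 12 * log2(place / CF); positive means CF is below the place frequency."""
    return 12.0 * math.log2(place_hz / cf_hz)


def mismatch_row(cfs_hz):
    """Per-channel mismatch, rounded to whole semitones as in Table 1."""
    return [round(mismatch_semitones(p, c)) for p, c in zip(PLACE_HZ, cfs_hz)]


for label, cfs in CF_HZ.items():
    print(f"{label}: {mismatch_row(cfs)}")
```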

The center frequencies for each channel for the CI-default, EAS-default, and CI-defaultEAS conditions were derived from the clinical programming software (Maestro Version 7.0.3). For the CI-default condition, the simulated input frequency range was 100–8500 Hz. For the EAS-default and CI-defaultEAS conditions, the actual CI recipient's acoustic hearing thresholds determined the lowest filter frequency of the electric frequency range. The acoustic hearing thresholds for this CI recipient were 50 dB HL at 125 Hz, 65 dB HL at 250 Hz, 80 dB HL at 500 Hz, and 85 dB HL at 1000 Hz (see Table 2). With these thresholds, the EAS default mapping procedure resulted in a simulated electric input frequency range of 250–8500 Hz. The CI-alone and EAS default mapping procedures resulted in similar magnitudes of frequency-to-place mismatch for the mid- and high-frequency channels. For example, the frequency-to-place mismatch for Channel 5 was 15 semitones for the CI-default condition, compared with 13 semitones for the EAS-default and CI-defaultEAS conditions. Differences between conditions were larger for the low-frequency channels, with greater spectral shifts for the CI-default condition than for the EAS-default and CI-defaultEAS conditions. For example, the frequency-to-place mismatch for Channel 1 was 27 semitones for the CI-default condition, compared with 15 semitones for the EAS-default and CI-defaultEAS conditions (see Table 1).
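The threshold criterion that sets the lower edge of the electric range in the EAS default map can be sketched as a simple scan over the audiogram. This sketch reads "exceeds a criterion level" as "reaches or exceeds", which is the reading under which the recipient's 65-dB HL threshold at 250 Hz yields the 250-Hz lower edge reported above; that comparison, the 65-dB HL default, the ascending-frequency ordering, and the helper name are all assumptions rather than the clinical software's documented logic.

```python
AUDIOMETRIC_HZ = [125, 250, 500, 1000]
UNAIDED_DB_HL = [50, 65, 80, 85]  # modeled recipient, implanted ear (Table 2)


def eas_electric_low_cutoff(freqs_hz, thresholds_db_hl, criterion_db_hl=65.0):
    """Hypothetical helper: lowest frequency whose threshold reaches the criterion.

    Frequencies are assumed to be in ascending order. Returns None when no
    measured threshold reaches the criterion.
    """
    for f, t in zip(freqs_hz, thresholds_db_hl):
        if t >= criterion_db_hl:  # ">=" reading of "exceeds" (assumption)
            return f
    return None


print(eas_electric_low_cutoff(AUDIOMETRIC_HZ, UNAIDED_DB_HL))
```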

Table 2.

The unaided acoustic hearing thresholds in the implanted ear and aided sound-field thresholds with the default electric-acoustic stimulation acoustic settings from the modeled 24-mm lateral wall electrode array recipient.

| Threshold | 125 Hz | 250 Hz | 500 Hz | 1000 Hz |
|---|---|---|---|---|
| Unaided | 50 | 65 | 80 | 85 |
| Aided | 40 | 50 | 55 | 65 |

Note. The unaided and aided thresholds are reported in dB HL.

The center frequencies for the CI-place and EAS-place conditions were determined using the experimental place-based mapping procedure. This procedure aligned the center frequency of each channel with the cochlear place frequency of the corresponding electrode contact in the low- to mid-frequency region. For the modeled CI recipient, this resulted in alignment of the center frequencies of Channels 1–8 with the cochlear place frequencies of Electrodes 1–8. The remaining high-frequency information was distributed across Channels 9–12. For this simulation, the cutoff frequency between two adjacent channels was the geometric mean of the two bands' center frequencies. For the most apical (Electrode 1) and most basal (Electrode 12) contacts, the band was assumed to be symmetrical around the center frequency. The resultant simulated input frequency range for the CI-place and EAS-place conditions was 550–8500 Hz. Whereas low-frequency acoustic information below 550 Hz was available in the EAS-place condition, the CI-place condition excluded this information.
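The cutoff rule described above (geometric-mean boundaries between adjacent channels, symmetrical outer bands) can be sketched as follows. The sketch reads "symmetrical" as symmetrical on a log-frequency axis, which is an assumption, and the helper name is hypothetical. Under this reading the outer edges for the place-based center frequencies land near 646 Hz and 8262 Hz (near 642 Hz and 8254 Hz under an arithmetic reading), not at the 550 and 8500 Hz reported for the CI-place and EAS-place conditions, so the sketch illustrates the cutoff rule only and does not reproduce the stated input range.

```python
import math


def band_edges(cfs_hz):
    """Hypothetical helper: band edges from ascending center frequencies.

    Inner edges are geometric means of adjacent CFs. For the two outer bands,
    center / lower_edge == upper_edge / center (log-symmetric; assumption).
    """
    inner = [math.sqrt(a * b) for a, b in zip(cfs_hz[:-1], cfs_hz[1:])]
    lowest = cfs_hz[0] ** 2 / inner[0]
    highest = cfs_hz[-1] ** 2 / inner[-1]
    return [lowest] + inner + [highest]


PLACE_MAP_CFS_HZ = [697, 811, 1078, 1434, 2017, 2582, 3633, 5017, 6500, 7000, 7500, 8000]
edges = band_edges(PLACE_MAP_CFS_HZ)
print([round(e) for e in edges])
```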

A 12-channel noise-vocoded speech stimulus was generated using two finite impulse response (FIR) filterbanks. The number of taps controls the spectral resolution of each filter. For this study, the number of taps used to define each filter was selected such that spectral resolution was 20% of the bandwidth; tap arrays for each filter were constructed using the fir1 function (MATLAB 2019a) and symmetrically padded with zeros to ensure synchronous output across filters. The input filterbank, used to filter the speech stimulus, had center frequencies associated with one of the five conditions (i.e., CI-default, CI-defaultEAS, EAS-default, CI-place, or EAS-place). The output filterbank, used to generate the narrowband noise carriers, had the same structure but used center frequencies associated with the cochlear place of each electrode contact (see Table 1). The Hilbert envelope was extracted from each band of the input filterbank and low-pass filtered at 300 Hz with a fourth-order Butterworth filter, and the result was used to modulate the noise-band carrier from the associated channel of the output filterbank.
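A compact sketch of the two-filterbank noise vocoder described above, written in Python with SciPy rather than the study's MATLAB script; `scipy.signal.firwin` plays the role of fir1. The tap-count rule (fs / numtaps set to 20% of each band's bandwidth), the log-symmetric outer band edges, clipping the smoothed envelope at zero, the RMS normalization, and the fixed random seed are assumptions, not details taken from the study. Input and carrier filters are zero-padded to a common odd length so every channel shares the same group delay, mirroring the padding described above.

```python
import numpy as np
from scipy.signal import butter, firwin, hilbert, lfilter


def band_edges(cfs_hz):
    """Geometric-mean cutoffs between adjacent CFs; outer edges log-symmetric (assumed)."""
    cfs = np.asarray(cfs_hz, dtype=float)
    inner = np.sqrt(cfs[:-1] * cfs[1:])
    return np.concatenate(([cfs[0] ** 2 / inner[0]], inner, [cfs[-1] ** 2 / inner[-1]]))


def fir_bank(edges_hz, fs, resolution=0.2):
    """One FIR band-pass per band; numtaps chosen so fs / numtaps ~ resolution * bandwidth."""
    taps = []
    for lo, hi in zip(edges_hz[:-1], edges_hz[1:]):
        n = int(np.ceil(fs / (resolution * (hi - lo))))
        n += (n + 1) % 2  # force an odd length (type I linear-phase filter)
        taps.append(firwin(n, [lo, hi], pass_zero=False, fs=fs))
    return taps


def pad_to(h, length):
    """Zero-pad symmetrically so every filter has the same group delay."""
    extra = (length - len(h)) // 2
    return np.pad(h, (extra, extra))


def noise_vocode(x, fs, analysis_cfs_hz, carrier_cfs_hz, env_cutoff_hz=300.0, seed=0):
    """Analysis bands follow the simulated map; noise carriers follow cochlear place."""
    rng = np.random.default_rng(seed)
    analysis = fir_bank(band_edges(analysis_cfs_hz), fs)
    carriers = fir_bank(band_edges(carrier_cfs_hz), fs)
    longest = max(len(h) for h in analysis + carriers)
    b_env, a_env = butter(4, env_cutoff_hz, btype="low", fs=fs)  # 4th-order Butterworth
    out = np.zeros(len(x))
    for h_in, h_out in zip(analysis, carriers):
        band = lfilter(pad_to(h_in, longest), 1.0, x)
        env = lfilter(b_env, a_env, np.abs(hilbert(band)))  # smoothed Hilbert envelope
        env = np.maximum(env, 0.0)  # filter ringing can dip slightly below zero
        noise = lfilter(pad_to(h_out, longest), 1.0, rng.standard_normal(len(x)))
        out += env * noise
    out *= np.sqrt(np.mean(x**2) / np.mean(out**2))  # match input RMS (assumed choice)
    return out


# Usage with a noise token standing in for a recorded sentence.
fs = 44100
x = np.random.default_rng(1).standard_normal(fs // 10)
place = [697, 811, 1078, 1434, 2017, 2582, 3633, 5017, 6181, 11133, 14521, 16584]
default = [149, 261, 408, 601, 854, 1191, 1638, 2233, 3028, 4090, 5510, 7412]
y_default = noise_vocode(x, fs, default, place)  # analysis != carrier: shifted map
```

Passing the same frequency list as both the analysis and carrier argument would simulate the place-matched case for all 12 channels; in the study's CI-place map only Channels 1–8 are matched.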

The EAS simulations were previously described by Dillon et al. (2021). Briefly, the acoustic input for the EAS-default and EAS-place conditions was simulated by passing the speech through an FIR filter generated using the fir2 function (MATLAB 2019a). This filter shaped the output to match the aided sound-field thresholds obtained from the actual CI recipient (see Table 2), with linear interpolation between frequencies and an assumed listener threshold of 0 dB HL, the average threshold for young adults with normal hearing (Bess & Humes, 2003). The number of taps matched that of the filterbank used to generate the vocoded speech (described above), to ensure temporal coherence of speech cues across frequency regions. The actual CI recipient's aided sound-field thresholds with the default acoustic settings were 40 dB HL at 125 Hz, 50 dB HL at 250 Hz, 55 dB HL at 500 Hz, and 65 dB HL at 1000 Hz. For the EAS-default condition, the acoustic input extended up to the region of simulated electric stimulation. For the EAS-place condition, there was a spectral gap between the acoustic and simulated electric stimulation. Spread of excitation from the acoustic output was simulated because the EAS conditions used the actual CI recipient's aided sound-field thresholds to shape the acoustic output.
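The acoustic shaping filter can be sketched with `scipy.signal.firwin2`, the SciPy counterpart of MATLAB's fir2 (frequency sampling with a window). The attenuation at each frequency is taken as the recipient's aided threshold relative to a 0-dB HL listener. How the study handled frequencies between and beyond the audiometric points is not fully specified, so the choices here are assumptions: interpolation that is linear in dB per octave, the 125-Hz value held below 125 Hz, the 500 to 1000 Hz slope extended above 1000 Hz, a hypothetical 100-dB attenuation cap, and a placeholder tap count of 2047 (the study matched the vocoder filterbank's tap count).

```python
import numpy as np
from scipy.signal import firwin2

# Aided sound-field thresholds of the modeled recipient (Table 2), in dB HL.
AIDED_HZ = np.array([125.0, 250.0, 500.0, 1000.0])
AIDED_DB_HL = np.array([40.0, 50.0, 55.0, 65.0])


def aided_attenuation_db(f_hz, floor_db=100.0):
    """Attenuation (dB) re: a listener with 0-dB HL thresholds at each frequency.

    Assumptions: linear in dB vs. octaves between audiometric frequencies,
    125-Hz value held below 125 Hz, 500 to 1000 Hz slope extended above
    1000 Hz, and a hypothetical cap of floor_db.
    """
    f = np.maximum(np.atleast_1d(np.asarray(f_hz, dtype=float)), AIDED_HZ[0])
    octs = np.log2(f / AIDED_HZ[0])
    grid = np.log2(AIDED_HZ / AIDED_HZ[0])
    att = np.interp(octs, grid, AIDED_DB_HL)
    slope = (AIDED_DB_HL[-1] - AIDED_DB_HL[-2]) / (grid[-1] - grid[-2])  # dB per octave
    above = octs > grid[-1]
    att[above] = AIDED_DB_HL[-1] + slope * (octs[above] - grid[-1])
    return np.minimum(att, floor_db)


def acoustic_filter(fs, numtaps=2047, n_grid=1025):
    """Linear-phase FIR whose magnitude follows the negated attenuation curve."""
    freq = np.linspace(0.0, fs / 2.0, n_grid)
    gain = 10.0 ** (-aided_attenuation_db(freq) / 20.0)  # dB to linear amplitude
    return firwin2(numtaps, freq, gain, fs=fs)


h_acoustic = acoustic_filter(44100)
```

Applying `h_acoustic` to the speech (for example with `np.convolve`) and summing the result with the vocoder output would give the EAS stimulus; keeping the tap count equal to the vocoder filters keeps the group delays aligned.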

Procedure

Each of the five conditions included data from 10 participants. Of the 32 participants in this study, 18 provided data for two randomly selected conditions and 14 provided data for one condition, yielding 18 × 2 + 14 = 50 condition data sets (10 per condition). Four of the participants who provided data for one condition did not complete a second condition due to time limitations. The remaining 10 participants who completed only a single condition provided data in the CI-defaultEAS condition; data collection for this condition began after the other conditions were completed.

Stimuli were the 10 lists (20 sentences per list) from the AzBio sentence test (Spahr et al., 2012) that have been determined to be equivalent in intelligibility for CI recipients (Schafer et al., 2012). The masker was a 10-talker babble. No sentence list was repeated across conditions for an individual participant. Participants were tested in a quiet room, and data collection in each condition did not exceed 1 hr.

The experiment was controlled using a MATLAB script that ran on a laptop, with the output routed through a sound card (M-AUDIO M-Track 2x2) to the headphones (HD 280 Pro, Sennheiser). The 10-talker masker was presented at 60 dB SPL, and the level of the target sentences varied across trials. The task followed the repeated-stimulus, ascending signal-to-noise ratio (SNR) method described by Buss et al. (2015), which characterizes performance across a range of SNRs with a limited corpus. Briefly, a sentence was presented at a challenging SNR of −5 dB, and the participant was asked to repeat what they heard. Each keyword was scored as correct or incorrect. The SNR was increased in 2-dB steps, using the same target sentence and the same sample of the babble masker, until the participant correctly recognized all keywords or the maximum of +19 dB SNR was reached, whichever occurred first. Feedback was not provided. When a track terminated before reaching the maximum SNR because all keywords were recognized, correct responses were assumed at the higher SNRs. A full list of 20 sentences was presented in each condition, and the order of sentences within a list was randomized for each participant.
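The ascending track for a single sentence can be sketched as follows. Only the start SNR, step size, ceiling, masker level, stopping rule, and the assume-correct rule come from the text; the listener is a hypothetical callback, and the function and constant names are illustrative.

```python
SNR_START_DB = -5
SNR_STEP_DB = 2
SNR_MAX_DB = 19
MASKER_DB_SPL = 60


def run_sentence_track(n_keywords, respond):
    """Repeated-stimulus, ascending-SNR track for one sentence (sketch).

    respond(snr_db, target_db_spl) -> keywords correct; a stand-in for the listener.
    Returns (scores, presented): scores maps every SNR on the grid to keywords
    correct; presented lists the (snr_db, target_db_spl) pairs actually played.
    """
    grid = list(range(SNR_START_DB, SNR_MAX_DB + 1, SNR_STEP_DB))
    scores, presented = {}, []
    for i, snr in enumerate(grid):
        target_db_spl = MASKER_DB_SPL + snr  # masker fixed; target level varies
        presented.append((snr, target_db_spl))
        scores[snr] = respond(snr, target_db_spl)
        if scores[snr] == n_keywords:
            # Track ends early; credit all keywords at the remaining, higher SNRs.
            for later in grid[i + 1:]:
                scores[later] = n_keywords
            break
    return scores, presented


# Hypothetical listener: 1 of 4 keywords until +3 dB SNR, then all 4.
scores, presented = run_sentence_track(4, lambda snr, level: 4 if snr >= 3 else 1)
print(presented)
```

Summing keywords correct at each SNR across the 20 sentences of a list, and dividing by the number of keywords, gives the per-SNR proportions that are fitted in the next step.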

Data Analysis

Proportion of keywords correct at each SNR was fitted with a three-parameter (i.e., mean, slope, and asymptote) logit function for data from each participant and condition. Estimates of performance for individual participants at specific SNRs and at their asymptote were based on these fits. A logit transformation was applied to the resulting estimates of proportion correct prior to statistical analysis to normalize the variance (Oleson et al., 2019); prior to applying this transformation, values were restricted to the range of 0.001–0.999. Performance between conditions was compared at the levels used clinically to assess the performance of CI-alone and EAS users (i.e., at +5 and +10 dB SNR, and at asymptote).
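A sketch of the per-listener fit and the bounded logit transform. The parameterization p(SNR) = asymptote / (1 + exp(−slope · (SNR − mean))), the starting values, and the bounds are assumptions because the text does not give them, and SciPy's `curve_fit` stands in for whatever fitting routine the study used. The synthetic, noiseless data are for illustration only.

```python
import numpy as np
from scipy.optimize import curve_fit


def logit_fn(snr_db, mean_db, slope, asymptote):
    """Assumed three-parameter form: asymptote / (1 + exp(-slope * (snr - mean)))."""
    return asymptote / (1.0 + np.exp(-slope * (snr_db - mean_db)))


def fit_listener(snrs_db, prop_correct):
    """Fit (mean, slope, asymptote) to one listener's proportion-correct data."""
    snrs_db = np.asarray(snrs_db, dtype=float)
    prop_correct = np.asarray(prop_correct, dtype=float)
    p0 = (np.median(snrs_db), 0.5, min(prop_correct.max(), 0.99))  # hypothetical start
    bounds = ([-np.inf, 0.0, 0.0], [np.inf, np.inf, 1.0])  # slope >= 0, asymptote in [0, 1]
    params, _ = curve_fit(logit_fn, snrs_db, prop_correct, p0=p0, bounds=bounds)
    return params


def clipped_logit(p, lo=0.001, hi=0.999):
    """Logit transform after restricting proportions to [lo, hi]."""
    p = np.clip(np.asarray(p, dtype=float), lo, hi)
    return np.log(p / (1.0 - p))


# Noiseless synthetic listener on the -5 to +19 dB SNR grid (illustration only).
snrs = np.arange(-5, 20, 2)
observed = logit_fn(snrs, 3.0, 0.5, 0.8)
mean_db, slope, asym = fit_listener(snrs, observed)
estimates = np.array([logit_fn(5.0, mean_db, slope, asym),   # +5 dB SNR
                      logit_fn(10.0, mean_db, slope, asym),  # +10 dB SNR
                      asym])                                  # asymptote
print(clipped_logit(estimates))
```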

Linear mixed-effects models assessed the main effects of simulated device (i.e., CI-alone and EAS), mapping procedure (i.e., default and place-based), and clinically relevant level (i.e., +5 dB SNR, +10 dB SNR, and asymptote), as well as the associated two-way and three-way interactions. Each model included a random intercept for each listener, and the Akaike information criterion (AIC) was used to guide selection of the variance and covariance structures. After the analyses guided by the theoretical questions of interest, we expanded the models to include sex as a main effect; there was no significant main effect of sex in either model (p ≥ .530). Models were implemented using R statistical software (R Core Team, 2020). Significance was defined as p < .05.
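One way to write the first model in equation form, using our own notation rather than the authors', is shown below. Here i indexes listeners, d the simulated device, m the mapping procedure, and ℓ the clinically relevant level; p̂ is the fitted proportion correct after clipping to 0.001–0.999. The residual term ε is left general because the variance and covariance structures were selected with AIC and are not specified in this section.

$$
\operatorname{logit}\left(\hat{p}_{idm\ell}\right) = \mu + \alpha_d + \beta_m + \gamma_\ell + (\alpha\beta)_{dm} + (\alpha\gamma)_{d\ell} + (\beta\gamma)_{m\ell} + (\alpha\beta\gamma)_{dm\ell} + u_i + \varepsilon_{idm\ell}, \qquad u_i \sim \mathcal{N}\left(0, \sigma_u^2\right)
$$

Under this sketch, the reported F tests correspond to groups of terms: α, β, and γ for the main effects of device, mapping procedure, and level; the paired terms for the two-way interactions; and (αβγ) for the three-way interaction.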

Results

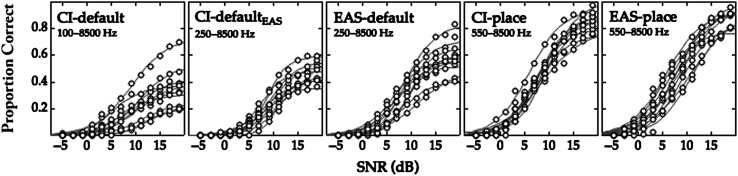

Figure 1 plots the proportion of keywords correctly recognized at each SNR for individual participants who provided data for the CI-default, CI-defaultEAS, EAS-default, CI-place, and EAS-place conditions. Logit fits were associated with r² ≥ .97 for all participants and conditions, indicating good fits. Individual differences in performance were observed across conditions and SNRs. Generally, performance was poorest in the CI-default condition (the simulated map with the largest spectral shift), better in the CI-defaultEAS and EAS-default conditions, and best in the CI-place and EAS-place conditions.

Figure 1.

Proportion of words correctly recognized at each SNR for the listeners who provided data for the CI-default, CI-defaultEAS, EAS-default, CI-place, and EAS-place conditions, with 10 listeners in each condition. The simulated input electric filter ranges are indicated for each condition. Points indicate proportion correct for individual listeners, and lines indicate fits to those data. SNR = signal-to-noise ratio; CI-default = cochlear implant–alone device with default filter frequencies; CI-defaultEAS = CI-alone device with EAS default filter frequencies; EAS-default = EAS device with default filter frequencies; CI-place = CI-alone device with place-based filter frequencies; EAS-place = electric-acoustic stimulation device with place-based filter frequencies.

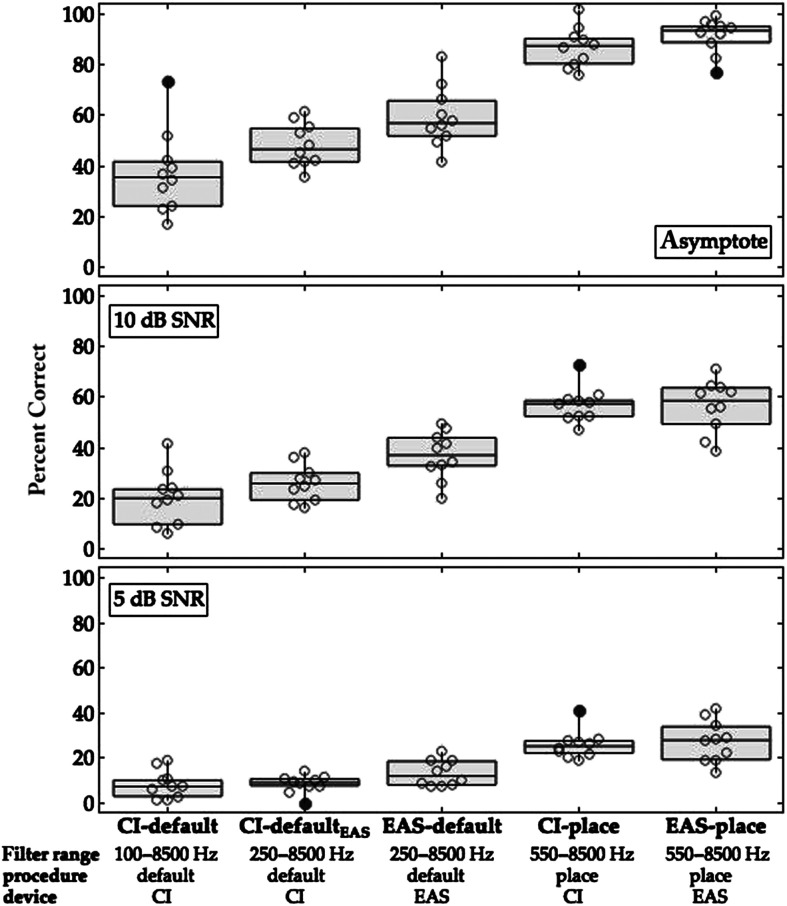

Figure 2 plots the percent correct performance at the clinically relevant levels (i.e., at +5 and +10 dB SNR, and at asymptote) for the CI-default, CI-defaultEAS, EAS-default, CI-place, and EAS-place conditions. Table 3 lists the minimum, 25th percentile, median, 75th percentile, and maximum values at the three clinically relevant levels for each condition.

Figure 2.

Percent correct on the masked sentence recognition task at 5 and 10 dB SNR and at asymptote for the CI-default, CI-defaultEAS, EAS-default, CI-place, and EAS-place conditions. Horizontal lines indicate median scores, with boxes spanning the 25th and 75th percentiles, and vertical lines indicate the 10th and 90th percentiles. Points indicate individual performance, with filled points indicating outliers. The electric filter range, mapping procedure, and simulation device are listed under each condition for reference. SNR = signal-to-noise ratio; CI-default = cochlear implant–alone device with default filter frequencies; CI-defaultEAS = CI-alone device with EAS default filter frequencies; EAS-default = EAS device with default filter frequencies; CI-place = CI-alone device with place-based filter frequencies; EAS-place = electric-acoustic stimulation device with place-based filter frequencies.

Table 3.

The minimum, 25th percentile, median, 75th percentile, and maximum values observed for each simulated condition (CI-default, CI-defaultEAS, EAS-default, CI-place, and EAS-place) at the three clinically relevant levels (5 and 10 dB SNR, and asymptote).

| Simulated condition | Level | Minimum | 25th percentile | Mdn | 75th percentile | Maximum |
|---|---|---|---|---|---|---|
| CI-default | 5 dB SNR | 1.3 | 3.6 | 7.4 | 10.5 | 18.9 |
| | 10 dB SNR | 5.9 | 11.6 | 20.1 | 23.8 | 41.6 |
| | Asymptote | 17.0 | 25.8 | 35.6 | 41.3 | 73.3 |
| CI-defaultEAS | 5 dB SNR | 0.1 | 7.5 | 9.2 | 10.9 | 13.9 |
| | 10 dB SNR | 16.1 | 20.1 | 25.9 | 29.7 | 38.0 |
| | Asymptote | 35.5 | 41.6 | 46.6 | 54.6 | 61.6 |
| EAS-default | 5 dB SNR | 7.4 | 8.1 | 12.1 | 18.3 | 22.8 |
| | 10 dB SNR | 19.9 | 32.7 | 37.1 | 43.5 | 49.5 |
| | Asymptote | 41.5 | 52.5 | 57.0 | 64.7 | 83.2 |
| CI-place | 5 dB SNR | 18.8 | 22.1 | 25.3 | 27.8 | 41.0 |
| | 10 dB SNR | 46.8 | 52.2 | 57.4 | 58.8 | 72.9 |
| | Asymptote | 75.7 | 80.9 | 87.5 | 90.5 | 100.0 |
| EAS-place | 5 dB SNR | 13.6 | 19.9 | 28.0 | 32.9 | 41.8 |
| | 10 dB SNR | 38.5 | 50.7 | 58.6 | 63.6 | 70.9 |
| | Asymptote | 77.1 | 89.5 | 93.6 | 95.4 | 99.2 |

Note. Results are reported in percent correct. SNR = signal-to-noise ratio; CI-default = cochlear implant–alone device with default filter frequencies; CI-defaultEAS = CI-alone device with EAS default filter frequencies; EAS-default = EAS device with default filter frequencies; CI-place = CI-alone device with place-based filter frequencies; EAS-place = electric-acoustic stimulation device with place-based filter frequencies.
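The median differences quoted in the text can be recomputed directly from Table 3. The sketch below is descriptive only: the medians come partly from different listener groups, they are on the percent-correct scale, and the inferential tests were run on logit-transformed fitted estimates in the mixed models, so these differences are not model estimates. The dictionary and helper names are illustrative.

```python
# Median percent correct from Table 3: (+5 dB SNR, +10 dB SNR, asymptote).
LEVELS = ("+5 dB SNR", "+10 dB SNR", "asymptote")
MEDIANS = {
    "CI-default": (7.4, 20.1, 35.6),
    "CI-defaultEAS": (9.2, 25.9, 46.6),
    "EAS-default": (12.1, 37.1, 57.0),
    "CI-place": (25.3, 57.4, 87.5),
    "EAS-place": (28.0, 58.6, 93.6),
}


def median_difference(better: str, worse: str) -> dict:
    """Difference between two conditions' medians, in percentage points."""
    return {
        level: round(b - w, 1)
        for level, b, w in zip(LEVELS, MEDIANS[better], MEDIANS[worse])
    }


place_benefit_ci = median_difference("CI-place", "CI-default")
place_benefit_eas = median_difference("EAS-place", "EAS-default")
print("CI-alone:", place_benefit_ci)
print("EAS:", place_benefit_eas)
```

At +10 dB SNR this gives 37.3 percentage points for the CI-alone simulations and 21.5 for the EAS simulations, a descriptive pattern in line with the nonsignificant trend toward a device × mapping interaction in the first analysis.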

The first analysis compared performance between the default and place-based maps that would be used clinically in the two scenarios for the 24-mm array recipient: (a) preservation of functional hearing and fit with EAS, or (b) loss of functional hearing and fit with a CI-alone device. Thus, the first model included data from the CI-default, EAS-default, CI-place, and EAS-place conditions. There was a significant main effect of level, F(2, 87) = 81.53, p < .001, indicating significant differences in performance across the three clinically relevant levels. There was a significant main effect of mapping procedure, F(1, 87) = 44.29, p < .001, with significantly better performance for place-based maps than for default maps. There was also a significant main effect of device, F(1, 87) = 11.91, p < .001, with better performance for the EAS than the CI-alone simulations. There was a significant interaction between level and mapping procedure, F(2, 87) = 4.30, p = .017, indicating that the beneficial effect of the place-based map was larger at asymptote than at +5 or +10 dB SNR. There was a nonsignificant trend toward an interaction between device and mapping procedure, F(1, 87) = 2.91, p = .092, which may reflect a larger benefit of place-based mapping for the CI-alone simulations than for the EAS simulations. No interaction was detected between level and device, F(2, 87) = 0.95, p = .391, and the three-way interaction between level, device, and mapping procedure was nonsignificant, F(2, 87) = 1.24, p = .293. Coefficients are listed in Supplemental Material S1. Taken together, these data support the hypotheses of better performance with place-based maps than with default maps and better performance with EAS simulations than with CI-alone simulations.

The second analysis assessed the benefit of adding acoustic low-frequency information to spectrally shifted versus place-based maps. This benefit cannot be evaluated by comparing the CI-default and EAS-default conditions, because the electric filter frequencies differ between these two maps. To isolate the benefit of access to low-frequency acoustic information, the second model used data from the CI-alone simulation with the EAS default filter frequencies but no acoustic low-frequency information (CI-defaultEAS). Thus, the second model included data from the CI-defaultEAS (250–8500 Hz), EAS-default (250–8500 Hz), CI-place (550–8500 Hz), and EAS-place (550–8500 Hz) conditions. The ability to model subject variance was limited because listeners in the CI-defaultEAS condition did not provide data for any other condition. The pattern of results was similar to that of the first model, including significant main effects of level, F(2, 107) = 64.15, p < .001; device, F(1, 107) = 4.00, p = .048; and mapping procedure, F(1, 107) = 31.97, p < .001. There was a significant interaction between level and mapping procedure, F(2, 107) = 5.21, p = .007, reflecting larger beneficial effects of the place-based map at the more favorable levels (i.e., +10 dB SNR and asymptote). No interactions were detected between level and device, F(2, 107) = 0.02, p = .985; device and mapping procedure, F(1, 107) = 0.58, p = .450; or level, device, and mapping procedure, F(2, 107) = 0.28, p = .759. Coefficients are listed in Supplemental Material S2. These results suggest that the benefit of acoustic low-frequency information provided by EAS was similar for default and place-based maps.

Discussion

This investigation compared sentence recognition in noise for participants with normal hearing listening to CI-alone or EAS simulations with an experimental place-based map or a default map. The experiment simulated two postoperative scenarios that would be encountered clinically for a recipient of a 24-mm lateral wall electrode array who presented preoperatively with low-frequency acoustic hearing: (a) preservation of low-frequency acoustic hearing and fit with an EAS device, and (b) loss of low-frequency acoustic hearing and fit with a CI-alone device. The CI-alone and EAS default mapping procedures created electric frequency-to-place mismatches of different magnitudes, whereas the experimental place-based map eliminated mismatches in the low- to mid-frequency region. Participants performed better with the place-based maps than with the default maps for both the CI-alone and EAS simulations. For instance, median performance at +10 dB SNR with the default maps was 20% (CI-default) and 37% (EAS-default), compared with 57% (CI-place) and 59% (EAS-place) with the place-based maps (see Table 3). Recall that the CI recipient modeled in these simulations had a shallower than average angular insertion depth, resulting in larger than average deviations from place frequency with the default maps and a larger gap between acoustic and electric information in the EAS-place condition. These data suggest that the experimental place-based mapping procedure may support better speech recognition for CI recipients of a 24-mm lateral wall electrode array listening with a CI-alone or EAS device.

This experimental place-based mapping procedure differs from previous place-based approaches in that the filter frequencies are aligned with the cochlear place frequencies in the low- to mid-frequency region, and the remaining high-frequency information is distributed across the basal contacts. Better performance has consistently been observed for participants with normal hearing listening to CI simulations with aligned versus spectrally shifted information (Başkent & Shannon, 2003, 2007; Dorman et al., 1997; Faulkner et al., 2003; Fu & Shannon, 1999b; T. Li & Fu, 2010; Shannon et al., 1998). The benefit of place-based mapping observed here suggests that listeners can tolerate spectral shifts of high-frequency information, but it is unclear whether additional benefit would be observed if place-based mapping were extended to the high-frequency region. This could be achieved by deactivating basal contacts that reside in cochlear regions above 8.5 kHz. Jiam et al. (2019) observed an improvement in pitch scaling for experienced CI users listening with a map with aligned information across the full input range (i.e., 70–8500 Hz) as compared with the default map. Differences were not observed on measures of speech recognition in quiet and noise; however, participants in that study had been listening with default maps and were tested acutely after the place-based map was fitted. Another approach would be to extend the upper limit of the acoustic speech cues above 8.5 kHz to allow for place-based mapping of higher frequency information. Speech information in the 8–20 kHz region has recently been shown to contribute significantly to speech recognition in noise for listeners with normal hearing (Monson et al., 2019; Zadeh et al., 2019). Current clinically available CI-alone and EAS devices do not allow for an extension of this input frequency range, and work is needed to evaluate whether this approach would benefit CI recipients.

Another feature of the experimental place-based mapping procedure to keep in mind is that it discards information below the cochlear place frequency of the most apical contact. This ensures that low- to mid-frequency cues are delivered to the appropriate cochlear place; however, it can result in the loss of substantial low-frequency information for CI-alone users and create a gap in frequency information for EAS users. Simulations in this study modeled the cochlear place frequencies and postoperative scenarios for a recipient of a 24-mm array with a shallow angular insertion depth (392°) to simulate a challenging scenario for the shortest lateral wall electrode array used at our center. Sentence recognition was significantly better with the place-based map than with the default map for both the CI-alone and EAS simulations. Taken together, these data suggest that aligning low- and mid-frequency information with the experimental place-based mapping procedure supports better speech recognition for CI-alone and EAS simulations of a 24-mm array, although performance still needs to be investigated for CI recipients with arrays at even shallower insertion depths.
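The general idea of aligning the apical channels and distributing the rest can be illustrated with a small band-edge calculation. This is a hypothetical sketch, not the clinical software or the authors' procedure: the place frequencies are invented for illustration (they are not the modeled recipient's values), edges between aligned contacts are placed at the geometric mean of neighboring place frequencies, and the basal channels split the remaining range up to 8500 Hz in equal logarithmic steps. All of these design choices are assumptions.

```python
import math
from typing import List


def place_based_band_edges(
    place_hz: List[float], n_aligned: int, f_max: float = 8500.0
) -> List[float]:
    """Hypothetical place-based band edges (Hz), most apical channel first.

    The n_aligned most apical channels get bands that contain their contact's
    place frequency; the remaining basal channels share the range up to f_max
    in equal log steps, independent of their own place frequencies.
    """
    n = len(place_hz)
    if not 1 <= n_aligned < n:
        raise ValueError("need at least one aligned and one basal contact")
    if any(hi <= lo for lo, hi in zip(place_hz, place_hz[1:])):
        raise ValueError("place frequencies must increase from apex to base")

    # Lowest edge sits half a log step below the most apical place frequency;
    # input below it is discarded rather than shifted onto apical contacts.
    edges = [place_hz[0] / math.sqrt(place_hz[1] / place_hz[0])]
    # Edges between neighbouring aligned contacts (and at the boundary with the
    # first basal contact) are the geometric means of their place frequencies.
    for i in range(n_aligned):
        edges.append(math.sqrt(place_hz[i] * place_hz[i + 1]))

    boundary = edges[-1]
    if f_max <= boundary:
        raise ValueError("f_max must lie above the aligned region")
    n_basal = n - n_aligned
    ratio = (f_max / boundary) ** (1.0 / n_basal)
    edges.extend(boundary * ratio ** k for k in range(1, n_basal + 1))
    edges[-1] = f_max  # remove floating-point drift at the top edge
    return edges


# Invented place frequencies for a 12-contact array (not the modeled recipient).
HYPOTHETICAL_PLACE_HZ = [600, 760, 950, 1200, 1500, 1900,
                         2400, 3000, 3800, 4800, 6000, 7500]
edges = place_based_band_edges(HYPOTHETICAL_PLACE_HZ, n_aligned=6)
for ch, (lo, hi) in enumerate(zip(edges, edges[1:]), start=1):
    print(f"channel {ch:2d}: {lo:7.1f}-{hi:7.1f} Hz")
```

With these invented values the lowest edge falls near 533 Hz, so input below that frequency is not assigned to any electric channel, which is the trade-off between correct place of stimulation and low-frequency coverage.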

For the CI-alone simulations (i.e., CI-default and CI-place), the benefit of place-based mapping is particularly compelling given that the place-based map did not provide low-frequency speech information below 550 Hz. For example, median speech recognition at +10 dB SNR was 20% for the CI-default condition and 57% for the CI-place condition. This result suggests that listeners tolerate reduced access to low-frequency speech information when mid-frequency information is aligned with the corresponding cochlear frequency region. Consistent with this interpretation, Fu and Shannon (1999b) reported minimal changes in speech recognition for CI-alone simulations as the lowest filter cutoff frequency was increased up to 960 Hz; however, this pattern of results was observed only when the analysis and carrier bands were matched. Poorer speech recognition was observed with the loss of low-frequency information when the analysis and carrier bands were shifted to simulate a frequency-to-place mismatch. A consideration when interpreting these data is that the most apical electrode contact was at 392°. Discarding low-frequency speech information with a place-based map may be detrimental for CI recipients of electrode arrays at shallower insertion depths (e.g., 19 mm along the basilar membrane; Başkent & Shannon, 2005; Faulkner et al., 2003). Also, these data were collected with acute listening experience. Both participants with normal hearing listening to CI simulations and CI recipients demonstrate an ability to acclimate to spectrally shifted maps with auditory training and/or prolonged device use (Faulkner, 2006; Fu et al., 2002, 2005; Fu & Galvin, 2003; T. Li & Fu, 2007; T. Li et al., 2009; Reiss et al., 2007, 2014; Rosen et al., 1999; Sagi et al., 2010; Smalt et al., 2013; Svirsky et al., 2004, 2015; Vermeire et al., 2015). Investigations of recipients of short electrode arrays listening with CI-alone devices are needed to determine the minimum angular insertion depth for which a place-based mapping procedure is optimal, and the extent to which users acclimate to mismatches.

For the EAS simulations (i.e., EAS-default and EAS-place), place-based mapping conferred a benefit despite the presence of a spectral gap between the simulated acoustic and electric stimulation, as reported previously (Dillon et al., 2021). For example, median speech recognition at +10 dB SNR was 37% for the EAS-default condition and 59% for the EAS-place condition. This finding challenges our current understanding of optimal EAS mapping procedures, since maps that create spectral gaps have previously been shown to be detrimental to speech recognition compared with default maps (Gifford et al., 2017; Karsten et al., 2013). Listeners may be able to tolerate spectral gaps in speech information with EAS when the electric filter frequencies are aligned with cochlear place, precluding the need to acclimate to spectrally shifted electric information in combination with acoustic information. These findings corroborate those of Fu et al. (2017), who reported significantly improved vowel recognition when frequency-to-place mismatches were minimized in EAS simulations. One thing to keep in mind when evaluating these results is that the spectral gap was relatively small and fell in a frequency region that is not critical for speech recognition for listeners with normal hearing (250–550 Hz; ANSI S3.5–1997, 1997). Willis et al. (2020) created a spectral gap between 600 and 1200 Hz for an EAS simulation with a place-based map and also observed better performance with the place-based map than with a spectrally shifted map. Listeners presented with EAS simulations demonstrate reductions in speech recognition when the size of the frequency gap is increased from 500 to 3200 Hz (Dorman et al., 2005). The benefits of place-based mapping may be outweighed by the loss of speech information for larger gaps or for gaps that fall within the frequency regions most critical for speech recognition for listeners with normal hearing (i.e., 1–4 kHz; ANSI S3.5–1997, 1997).

An unexpected result from this investigation was better performance in the CI-place condition than in the EAS-default condition. This finding was unexpected because the combination of low-frequency acoustic information and mid- to high-frequency electric stimulation has been shown to provide better speech recognition than electric stimulation alone for CI recipients with low-frequency hearing preservation (Dillon et al., 2015; Gantz et al., 2016; Gantz & Turner, 2003; Gifford et al., 2017; Helbig et al., 2011; Karsten et al., 2013; Lorens et al., 2008; Pillsbury et al., 2018). Investigations of listeners with normal hearing and CI recipients demonstrate that the benefit of adding acoustic low-frequency information to electric stimulation is due to better resolution of low-frequency cues, including the fundamental frequency and lower harmonics (N. Li & Loizou, 2008; Qin & Oxenham, 2006; Rader et al., 2015; Sheffield & Gifford, 2014; Verschuur et al., 2013; Zhang et al., 2010). A consideration when comparing these findings with previous EAS simulation studies is that acoustic stimulation was modeled here using a low-pass filter that shaped the stimulus based on the aided sound field thresholds of an actual EAS user; in contrast, previous EAS simulation studies have tended to use a wider bandwidth of unshaped speech (see Dorman et al., 2005; Qin & Oxenham, 2006; Rader et al., 2015). Thus, audibility was poorer than it would have been with unshaped speech. This method also does not capture the suprathreshold distortion associated with hearing loss, nor does it incorporate the compressive function of the acoustic component. In addition, the modeled EAS user was programmed with the default acoustic settings; better performance may have been observed if the acoustic settings had been modified to fit a prescriptive method (Dillon et al., 2014). Previous investigations of EAS users have reported fitting the acoustic component using prescriptive methods (Gantz et al., 2016; Karsten et al., 2013). These differences in acoustic fitting methods may account for the differences between the pattern of performance in this report and those of previous EAS user samples. Taken together, the observed differences in sentence recognition in noise between the EAS-default and CI-place conditions in this study may be due to the quality of the acoustic low-frequency output in the EAS-default condition and the presence or absence of frequency-to-place mismatches.

A similar benefit of EAS was observed for the spectrally shifted map (CI-defaultEAS vs. EAS-default) and the place-based map (CI-place vs. EAS-place). These findings contradict the pattern of results reported by Willis et al. (2020), who observed a larger benefit of adding acoustic low-frequency information to a place-based map than to a spectrally shifted map. The discrepancy may be due to differences in the lower cutoff of the simulated electric input filter for the two place-based maps (i.e., 550 Hz in this data set and 1200 Hz in Willis et al.). Larger benefits of adding acoustic low-frequency information may be observed with place-based maps that provide little or no low-frequency electric information. Further investigation is needed regarding the influence of acoustic hearing with place-based maps versus default maps with varying magnitudes of frequency-to-place mismatch.

These data suggest that a performance benefit may be observed for CI-alone and EAS users listening with maps created with the experimental place-based mapping procedure; however, there are several limitations worth considering. These experiments relied on CI simulations presented to participants with normal hearing, and performance with vocoded speech may not reflect the performance of actual CI recipients (Bhargava et al., 2016; Chen & Loizou, 2011; Rader et al., 2015). Another consideration is that the simulations modeled the postoperative scenarios for a single 24-mm lateral wall array recipient. The performance differences between the default and place-based maps may be minimal for recipients of longer lateral wall arrays, which tend to provide a closer alignment with tonotopic place under the default mapping procedure, or for recipients with different amounts of acoustic low-frequency audibility. Also, the experimental place-based mapping procedure aligned the electric filter frequencies only up to the mid-frequency cochlear region. Some data suggest that CI-alone users vary in the specific frequency information needed for speech recognition, with some demonstrating elevated band importance in the low-frequency region rather than the mid-frequency region (Bosen & Chatterjee, 2016). Better performance may be observed with place-based maps that account for these individual differences. Finally, participants did not complete all conditions in the present protocol, which limited within-participant comparisons and necessitated comparisons of performance across participants. There are large individual differences in performance among listeners with normal hearing who have limited exposure to vocoded speech (Erb et al., 2012); thus, variation in individual abilities may have influenced the observed pattern of results.

Another consideration is that the simulations did not account for the broad channel interaction that may be experienced by CI-alone and EAS device users, which could reduce the effectiveness of a place-based map. Channel interactions result in the activation of the same neural population by two or more channels (Shannon, 1983) and are associated with poorer speech recognition (Friesen et al., 2001; Fu & Shannon, 1999a; Zhou & Pfingst, 2012). For EAS users, broad current spread may also result in masking of the acoustic low-frequency information by the electric stimulation, known as electric-on-acoustic masking (Imsiecke, Büchner, et al., 2020; Imsiecke, Krüger, et al., 2020; Kipping et al., 2020; Krüger et al., 2017; Lin et al., 2011; Stronks et al., 2010, 2012), reducing the benefit of adding the acoustic information. Conversely, the acoustic stimulation may mask the information provided by the electric stimulation, known as acoustic-on-electric masking (Stronks et al., 2010, 2012). Future investigations are needed to optimize place-based mapping, particularly with respect to limiting channel interactions and determining the frequency regions over which frequency-to-place mismatch should be strictly controlled.

Another limitation of this experiment is that performance was assessed with acute listening experience. Previous work has demonstrated that some listeners acclimate to spectrally shifted information with auditory training and/or prolonged listening experience (Faulkner, 2006; Fu et al., 2002, 2005; Fu & Galvin, 2003; T. Li & Fu, 2007; T. Li et al., 2009; Reiss et al., 2007, 2014; Rosen et al., 1999; Sagi et al., 2010; Smalt et al., 2013; Svirsky et al., 2004, 2015; Vermeire et al., 2015). For instance, Rosen et al. (1999) assessed speech recognition with CI-alone simulations that either incorporated a spectral shift of 6.5 mm or matched the analysis and carrier filter frequencies. Speech recognition with the spectrally shifted simulation improved with auditory training, although it did not reach the level of performance with the matched simulation. In this report, providing listening experience with the CI-alone or EAS simulations before testing sentence recognition might have reduced the differences in performance across conditions. Similarly, CI recipients listening with default maps may improve over time (Sagi et al., 2010; Svirsky et al., 2004, 2015), reducing or eliminating the benefit associated with place-based maps. This study was conducted to assess initial outcomes with maps created with the experimental place-based mapping procedure as compared with the default clinical mapping procedure prior to initiating an investigation with CI recipients.
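To see what a 6.5-mm spectral shift means in frequency terms, the sketch below uses the Greenwood (1990) frequency-position function with commonly cited human parameters (A = 165.4, a = 2.1, k = 0.88) and an assumed 35-mm cochlear length. This is a swapped-in illustration; it is not claimed that Rosen et al. (1999) or the present study used exactly these values, and the function names are hypothetical.

```python
import math

# Greenwood (1990) human parameters, with place expressed as a proportion of
# cochlear length from the apex. The 35-mm length is an assumption.
A, a, k = 165.4, 2.1, 0.88
COCHLEA_MM = 35.0


def place_to_hz(mm_from_apex: float) -> float:
    """Characteristic frequency (Hz) at a distance from the apex (mm)."""
    return A * (10 ** (a * mm_from_apex / COCHLEA_MM) - k)


def hz_to_place(freq_hz: float) -> float:
    """Inverse of place_to_hz: distance from the apex (mm) for a frequency."""
    return COCHLEA_MM * math.log10(freq_hz / A + k) / a


def shifted_frequency(freq_hz: float, shift_mm: float) -> float:
    """Frequency at the place reached by moving shift_mm toward the base."""
    return place_to_hz(hz_to_place(freq_hz) + shift_mm)


for f in (500.0, 1000.0, 2000.0):
    print(f, round(shifted_frequency(f, 6.5)))
```

Under these assumptions, a 6.5-mm basal shift moves a 1000-Hz band to a place tuned to roughly 2.7 kHz; because the function is close to exponential, the size of the shift in hertz grows with the starting frequency.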

These findings suggest that implementing the experimental place-based mapping procedure in the fitting of CI-alone and EAS devices may provide better early performance than default maps with spectrally shifted information. A randomized, double-blinded, longitudinal study with CI recipients is currently under way to investigate whether the performance benefit associated with place-based maps is observed in CI-alone and EAS device users, and to document the time course of acclimatization and patterns of performance with spectrally shifted maps. The study will evaluate whether the benefits of place-based mapping observed in this report extend to CI recipients, whose performance may also be influenced by the pathophysiology of hearing loss and the broad channel interaction associated with electric stimulation.

Conclusions

Participants with normal hearing demonstrated better sentence recognition in noise with experimental place-based maps as compared with default maps for both CI-alone and EAS simulations. Similar improvement in performance with the addition of low-frequency acoustic information was observed with the spectrally shifted map and the place-based map. There is still much to be learned about individual differences in the ability to accommodate degraded auditory input, including a shift in frequency-to-place mapping.

Supplementary Material

Acknowledgments

Stacey Kane, Kathryn Sobon, Meredith Braza, Kira Griffith, Haley Murdock, and Alec Johnson assisted with subject recruitment and data collection. Jack Noble and Benoit Dawant from Vanderbilt reviewed the CT imaging and provided the angular insertion depth and cochlear place frequency for individual electrode contacts for the modeled CI recipient (R01DC014037, R01DC008408, and R01DC014462).

M.T.D. and E.B. are supported by a research grant provided to the university from MED-EL Corporation. B.P.O. is a consultant for Advanced Bionics Corporation, MED-EL Corporation, and Johnson and Johnson. This work was funded in part by a clinical research grant provided by the Department of Otolaryngology/Head & Neck Surgery at the University of North Carolina at Chapel Hill and by the National Institute on Deafness and Other Communication Disorders under Award Numbers R21DC018389 and T32DC005460. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health. The script for the vocoder is available by e-mailing Margaret Dillon (mdillon@med.unc.edu).


References

- Adunka, O. F. , Dillon, M. T. , Adunka, M. C. , King, E. R. , Pillsbury, H. C. , & Buchman, C. A. (2013). Hearing preservation and speech perception outcomes with electric-acoustic stimulation after 12 months of listening experience. Laryngoscope, 123(10), 2509–2515. https://doi.org/10.1002/lary.23741 [DOI] [PubMed] [Google Scholar]

- ANSI S3.5-1997. (1997). American National Standard Methods for Calculation of the Speech Intelligibility Index.

- Başkent, D. , & Shannon, R. V. (2003). Speech recognition under conditions of frequency-place compression and expansion. The Journal of the Acoustical Society of America, 113(4), 2064–2076. https://doi.org/10.1121/1.1558357 [DOI] [PubMed] [Google Scholar]

- Başkent, D. , & Shannon, R. V. (2005). Interactions between cochlear implant electrode insertion depth and frequency-place mapping. The Journal of the Acoustical Society of America, 117(3), 1405–1416. https://doi.org/10.1121/1.1856273 [DOI] [PubMed] [Google Scholar]

- Başkent, D. , & Shannon, R. V. (2007). Combined effects of frequency compression-expansion and shift on speech recognition. Ear and Hearing, 28(3), 277–289. https://doi.org/10.1097/AUD.0b013e318050d398 [DOI] [PubMed] [Google Scholar]

- Bess, F. H. , & Humes, L. (2003). Audiology: The fundamentals (3rd ed.). Lippincott Williams and Wilkins. [Google Scholar]

- Bhargava, P. , Gaudrain, E. , & Başkent, D. (2016). The intelligibility of interrupted speech: Cochlear implant users and normal hearing listeners. Journal of the Association for Research in Otolaryngology, 17(5), 475–491. https://doi.org/10.1007/s10162-016-0565-9 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Blamey, P. , Arndt, P. , Brimacombe, J. , Staller, S. , Bergeron, F. , Facer, G. , Larky, J. , Lindstrom, B. , Nedzelski, J. , Peterson, A. , & Shipp, D. (1996). Factors affecting auditory performance of postlinguistically deaf adults using cochlear implants. Audiology and Neuro-Otology, 1(5), 293–306. https://doi.org/10.1159/000259212 [DOI] [PubMed] [Google Scholar]

- Blamey, P. , Artieres, F. , Başkent, D. , Bergeron, F. , Beynon, A. , Burke, E. , Dillier, N. , Dowell, R. , Fraysse, B. , Gallégo, S. , Govaerts, P. J. , & Lazard, D. S. (2013). Factors affecting auditory performance of postlinguistically deaf adults using cochlear implants: An update with 2251 patients. Audiology and Neurotology, 18(1), 36–47. https://doi.org/10.1159/000343189 [DOI] [PubMed] [Google Scholar]

- Bosen, A. K. , & Chatterjee, M. (2016). Band importance functions of listeners with cochlear implants using clinical maps. The Journal of the Acoustical Society of America, 140(5), 3718–3727. https://doi.org/10.1121/1.4967298 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Buchman, C. A. , Dillon, M. T. , King, E. R. , Adunka, M. C. , Adunka, O. F. , & Pillsbury, H. C. (2014). Influence of cochlear implant insertion depth on performance: A prospective randomized trial. Otology and Neurotology, 35(10), 1773–1779. https://doi.org/10.1097/MAO.0000000000000541 [DOI] [PubMed] [Google Scholar]

- Büchner, A., Illg, A., Majdani, O., & Lenarz, T. (2017). Investigation of the effect of cochlear implant electrode length on speech comprehension in quiet and noise compared with the results with users of electro-acoustic-stimulation, a retrospective analysis. PLOS ONE, 12(5), Article e0174900. https://doi.org/10.1371/journal.pone.0174900

- Buss, E., Calandruccio, L., & Hall, J. W. (2015). Masked sentence recognition assessed at ascending target-to-masker ratios: Modest effects of repeating stimuli. Ear and Hearing, 36(2), e14–e22. https://doi.org/10.1097/AUD.0000000000000113

- Canfarotta, M. W., Dillon, M. T., Buss, E., Pillsbury, H. C., Brown, K. D., & O'Connell, B. P. (2020). Frequency-to-place mismatch: Characterizing variability and the influence on speech perception outcomes in cochlear implant recipients. Ear and Hearing, 41(5), 1349–1361. https://doi.org/10.1097/AUD.0000000000000864

- Chen, F., & Loizou, P. C. (2011). Predicting the intelligibility of vocoded speech. Ear and Hearing, 32(3), 331–338. https://doi.org/10.1097/AUD.0b013e3181ff3515

- Dillon, M. T., Buss, E., Adunka, O. F., Buchman, C. A., & Pillsbury, H. C. (2015). Influence of test condition on speech perception with electric-acoustic stimulation. American Journal of Audiology, 24(4), 520–528. https://doi.org/10.1044/2015_AJA-15-0022

- Dillon, M. T., Buss, E., Pillsbury, H. C., Adunka, O. F., Buchman, C. A., & Adunka, M. C. (2014). Effects of hearing aid settings for electric-acoustic stimulation. Journal of the American Academy of Audiology, 25(2), 133–140. https://doi.org/10.3766/jaaa.25.2.2

- Dillon, M. T., Canfarotta, M. W., Buss, E., Hopfinger, J., & O'Connell, B. P. (2021). Effectiveness of place-based mapping in electric-acoustic stimulation devices. Otology & Neurotology, 42(1), 197–202. https://doi.org/10.1097/MAO.0000000000002965

- Dorman, M. F., Loizou, P. C., & Rainey, D. (1997). Simulating the effect of cochlear-implant electrode insertion depth on speech understanding. The Journal of the Acoustical Society of America, 102(5), 2993–2996. https://doi.org/10.1121/1.420354

- Dorman, M. F., Spahr, A. J., Loizou, P. C., Dana, C. J., & Schmidt, J. S. (2005). Acoustic simulations of combined electric and acoustic hearing (EAS). Ear and Hearing, 26(4), 371–380. https://doi.org/10.1097/00003446-200508000-00001

- Erb, J., Henry, M. J., Eisner, F., & Obleser, J. (2012). Auditory skills and brain morphology predict individual differences in adaptation to degraded speech. Neuropsychologia, 50(9), 2154–2164. https://doi.org/10.1016/j.neuropsychologia.2012.05.013

- Faulkner, A. (2006). Adaptation to distorted frequency-to-place maps: Implications of simulations in normal listeners for cochlear implants and electroacoustic stimulation. Audiology and Neurotology, 11(1), 21–26. https://doi.org/10.1159/000095610

- Faulkner, A., Rosen, S., & Stanton, D. (2003). Simulations of tonotopically mapped speech processors for cochlear implant electrodes varying in insertion depth. The Journal of the Acoustical Society of America, 113(2), 1073–1080. https://doi.org/10.1121/1.1536928

- Finley, C. C., Holden, T. A., Holden, L. K., Whiting, B. R., Chole, R. A., Neely, G. J., Hullar, T. E., & Skinner, M. W. (2008). Role of electrode placement as a contributor to variability in cochlear implant outcomes. Otology & Neurotology, 29(7), 920–928. https://doi.org/10.1097/MAO.0b013e318184f492

- Firszt, J. B., Holden, L. K., Skinner, M. W., Tobey, E. A., Peterson, A., Gaggl, W., Runge-Samuelson, C. L., & Wackym, P. A. (2004). Recognition of speech presented at soft to loud levels by adult cochlear implant recipients of three cochlear implant systems. Ear and Hearing, 25(4), 375–387. https://doi.org/10.1097/01.AUD.0000134552.22205.EE

- Friesen, L. M., Shannon, R. V., Baskent, D., & Wang, X. (2001). Speech recognition in noise as a function of the number of spectral channels: Comparison of acoustic hearing and cochlear implants. The Journal of the Acoustical Society of America, 110(2), 1150–1163. https://doi.org/10.1121/1.1381538

- Fu, Q.-J., Chinchilla, S., Nogaki, G., & Galvin, J. J. (2005). Voice gender identification by cochlear implant users: The role of spectral and temporal resolution. The Journal of the Acoustical Society of America, 118(3), 1711–1718. https://doi.org/10.1121/1.1985024

- Fu, Q.-J., & Galvin, J. J., III. (2003). The effects of short-term training for spectrally mismatched noise-band speech. The Journal of the Acoustical Society of America, 113(2), 1065–1072. https://doi.org/10.1121/1.1537708

- Fu, Q.-J., Galvin, J. J., & Wang, X. (2017). Integration of acoustic and electric hearing is better in the same ear than across ears. Scientific Reports, 7(1), 12500. https://doi.org/10.1038/s41598-017-12298-3

- Fu, Q.-J., & Shannon, R. V. (1999a). Effects of electrode location and spacing on phoneme recognition with the Nucleus-22 cochlear implant. Ear and Hearing, 20(4), 321–331. https://doi.org/10.1097/00003446-199908000-00005

- Fu, Q.-J., & Shannon, R. V. (1999b). Recognition of spectrally degraded and frequency-shifted vowels in acoustic and electric hearing. The Journal of the Acoustical Society of America, 105(3), 1889–1900. https://doi.org/10.1121/1.426725

- Fu, Q.-J., Shannon, R. V., & Galvin, J. J. (2002). Perceptual learning following changes in the frequency-to-electrode assignment with the Nucleus-22 cochlear implant. The Journal of the Acoustical Society of America, 112(4), 1664–1674. https://doi.org/10.1121/1.1502901

- Gantz, B. J., Dunn, C., Oleson, J., Hansen, M., Parkinson, A., & Turner, C. (2016). Multicenter clinical trial of the Nucleus Hybrid S8 cochlear implant: Final outcomes. Laryngoscope, 126(4), 962–973. https://doi.org/10.1002/lary.25572

- Gantz, B. J., & Turner, C. W. (2003). Combining acoustic and electrical hearing. Laryngoscope, 113(10), 1726–1730. https://doi.org/10.1097/00005537-200310000-00012

- Gifford, R. H., Davis, T. J., Sunderhaus, L. W., Menapace, C., Buck, B., Crosson, J., O'Neill, L., Beiter, A., & Segel, P. (2017). Combined electric and acoustic stimulation with hearing preservation: Effect of cochlear implant low-frequency cutoff on speech understanding and perceived listening difficulty. Ear and Hearing, 38(5), 539–553. https://doi.org/10.1097/AUD.0000000000000418

- Greenwood, D. D. (1990). A cochlear frequency-position function for several species—29 years later. The Journal of the Acoustical Society of America, 87(6), 2592–2605. https://doi.org/10.1121/1.399052

- Helbig, S., Van De Heyning, P., Kiefer, J., Baumann, U., Kleine-Punte, A., Brockmeier, H., Anderson, I., & Gstoettner, W. (2011). Combined electric acoustic stimulation with the PULSARCI100 implant system using the FLEXEAS electrode array. Acta Oto-Laryngologica, 131(6), 585–595. https://doi.org/10.3109/00016489.2010.544327

- Holden, L. K., Finley, C. C., Firszt, J. B., Holden, T. A., Brenner, C., Potts, L. G., Gotter, B. D., Vanderhoof, S. S., Mispagel, K., Heydebrand, G., & Skinner, M. W. (2013). Factors affecting open-set word recognition in adults with cochlear implants. Ear and Hearing, 34(3), 342–360. https://doi.org/10.1097/AUD.0b013e3182741aa7

- Imsiecke, M., Büchner, A., Lenarz, T., & Nogueira, W. (2020). Psychoacoustic and electrophysiological electric-acoustic interaction effects in cochlear implant users with ipsilateral residual hearing. Hearing Research, 386, 107873. https://doi.org/10.1016/j.heares.2019.107873

- Imsiecke, M., Krüger, B., Büchner, A., Lenarz, T., & Nogueira, W. (2020). Interaction between electric and acoustic stimulation influences speech perception in ipsilateral EAS users. Ear and Hearing, 41(4), 868–882. https://doi.org/10.1097/AUD.0000000000000807

- Jiam, N. T., Gilbert, M., Cooke, D., Jiradejvong, P., Barrett, K., Caldwell, M., & Limb, C. J. (2019). Association between flat-panel computed tomographic imaging-guided place-pitch mapping and speech and pitch perception in cochlear implant users. JAMA Otolaryngology—Head and Neck Surgery, 145(2), 109–116. https://doi.org/10.1001/jamaoto.2018.3096

- Jin, S.-H., & Nelson, P. B. (2010). Interrupted speech perception: The effects of hearing sensitivity and frequency resolution. The Journal of the Acoustical Society of America, 128(2), 881–889. https://doi.org/10.1121/1.3458851

- Karsten, S. A., Turner, C. W., Brown, C. J., Jeon, E. K., Abbas, P. J., & Gantz, B. J. (2013). Optimizing the combination of acoustic and electric hearing in the implanted ear. Ear and Hearing, 34(2), 142–150. https://doi.org/10.1097/AUD.0b013e318269ce87

- Kipping, D., Krüger, B., & Nogueira, W. (2020). The role of electroneural versus electrophonic stimulation on psychoacoustic electric-acoustic masking in cochlear implant users with residual hearing. Hearing Research, 395, 108036. https://doi.org/10.1016/j.heares.2020.108036

- Krüger, B., Büchner, A., & Nogueira, W. (2017). Simultaneous masking between electric and acoustic stimulation in cochlear implant users with residual low-frequency hearing. Hearing Research, 353, 185–196. https://doi.org/10.1016/j.heares.2017.06.014

- Landsberger, D. M., Svrakic, M., Roland, J. T., & Svirsky, M. (2015). The relationship between insertion angles, default frequency allocations, and spiral ganglion place pitch in cochlear implants. Ear and Hearing, 36(5), e207–e213. https://doi.org/10.1097/AUD.0000000000000163

- Li, N., & Loizou, P. (2008). A glimpsing account for the benefit of simulated combined acoustic and electric hearing. The Journal of the Acoustical Society of America, 123(4), 2287–2294. https://doi.org/10.1121/1.2839013

- Li, T., & Fu, Q.-J. (2007). Perceptual adaptation to spectrally shifted vowels: Training with nonlexical labels. Journal of the Association for Research in Otolaryngology, 8(1), 32–41. https://doi.org/10.1007/s10162-006-0059-2

- Li, T., & Fu, Q.-J. (2010). Effects of spectral shifting on speech perception in noise. Hearing Research, 270(1–2), 81–88. https://doi.org/10.1016/j.heares.2010.09.005

- Li, T., Galvin, J. J., III, & Fu, Q.-J. (2009). Interactions between unsupervised learning and the degree of spectral mismatch on short-term perceptual adaptation to spectrally-shifted speech. Ear and Hearing, 30(2), 238–249. https://doi.org/10.1097/AUD.0b013e31819769ac

- Lin, P., Turner, C. W., Gantz, B. J., Djalilian, H. R., & Zeng, F.-G. (2011). Ipsilateral masking between acoustic and electric stimulations. The Journal of the Acoustical Society of America, 130(2), 858–865. https://doi.org/10.1121/1.3605294

- Lorens, A., Polak, M., Piotrowska, A., & Skarzynski, H. (2008). Outcomes of treatment of partial deafness with cochlear implantation: A DUET study. Laryngoscope, 118(2), 288–294. https://doi.org/10.1097/MLG.0b013e3181598887

- Mertens, G., Van De Heyning, P., Vanderveken, O., Topsakal, V., & Van Rompaey, V. (2021). The smaller the frequency-to-place mismatch the better the hearing outcomes in cochlear implant recipients. European Archives of Oto-Rhino-Laryngology. https://doi.org/10.1007/s00405-021-06899-y

- Monson, B. B., Rock, J., Schulz, A., Hoffman, E., & Buss, E. (2019). Ecological cocktail party listening reveals the utility of extended high-frequency hearing. Hearing Research, 381, 107773. https://doi.org/10.1016/j.heares.2019.107773

- Noble, J. H., Labadie, R. F., Gifford, R. H., & Dawant, B. M. (2013). Image-guidance enables new methods for customizing cochlear implant stimulation strategies. IEEE Transactions on Neural Systems and Rehabilitation Engineering, 21(5), 820–829. https://doi.org/10.1109/TNSRE.2013.2253333

- O'Connell, B. P., Cakir, A., Hunter, J. B., Francis, D. O., Noble, J. H., Labadie, R. F., Zuniga, G., Dawant, B. M., Rivas, A., & Wanna, G. B. (2016). Electrode location and angular insertion depth are predictors of audiologic outcomes in cochlear implantation. Otology & Neurotology, 37(8), 1016–1023. https://doi.org/10.1097/MAO.0000000000001125

- Oleson, J. J., Brown, G. D., & McCreery, R. (2019). The evolution of statistical methods in speech, language, and hearing sciences. Journal of Speech, Language, and Hearing Research, 62(3), 498–506. https://doi.org/10.1044/2018_JSLHR-H-ASTM-18-0378