Abstract

Background

Individuals who use wheelchairs and scooters rarely undergo fall risk screening. Mobile health technology is a possible avenue to provide fall risk assessment. The promise of this approach is dependent upon its usability.

Objective

We aimed to determine the usability of a fall risk mobile health app and identify key technology development insights for aging adults who use wheeled devices.

Methods

Two rounds of usability testing (with 5 participants in each round) were performed using an iterative design-evaluation process. Participants used the custom-designed fall risk app, Steady-Wheels. To quantify fall risk, the app led participants through 12 demographic questions and 3 progressively more challenging seated balance tasks. Once completed, participants shared insights on the app’s usability through semistructured interviews and completion of the System Usability Scale. Testing sessions were recorded and transcribed. Codes were identified within the transcriptions to create themes. Average System Usability Scale scores were calculated for each round.

Results

The first round of testing yielded 2 main themes: ease of use and flexibility of design. System Usability Scale scores ranged from 72.5 to 97.5 with a mean score of 84.5 (SD 11.4). After modifications were made, the second round of testing yielded 2 new themes: app layout and clarity of instruction. System Usability Scale scores improved in the second iteration and ranged from 87.5 to 97.5 with a mean score of 91.9 (SD 4.3).

Conclusions

The mobile health app, Steady-Wheels, has excellent usability and the potential to provide adult wheeled device users with an easy-to-use, remote fall risk assessment tool. Characteristics that promoted usability were guided navigation, large text and radio buttons, clear and brief instructions accompanied by representative illustrations, and simple error recovery. Intuitive fall risk reporting was achieved through the presentation of a single number located on a color-coordinated continuum that delineated low, medium, and high risk.

Keywords: usability testing, mobile health, wheeled device user, fall risk, telehealth, mHealth, mobile device, smartphone, health applications, older adults, elderly population, device usability

Introduction

Over 3 million individuals in the United States require the use of a wheelchair for mobility [1], and wheelchair use is expected to increase [2,3]. Although wheeled device use has numerous benefits [4], it presents several unique risks, such as falls. Roughly 75% of wheelchair users fall at least once a year [5-8]. Approximately 50% of reported falls cause injuries [7], ranging from minor (eg, abrasions) to serious (eg, fractures) [6]. Falls can also induce fear of falling [9] and activity curtailment [7], which are associated with isolation and decreased independence and quality of life [10].

Falls are detrimental to wheeled device users’ health and well-being, making fall risk screening a necessary part of overall health care. Although the US Centers for Disease Control and Prevention recommends annual fall risk screening for older adults, current screening recommendations are designed for ambulatory adults [11]. Moreover, fall risk screening is rarely performed in clinical practice, and there are numerous barriers to the implementation of effective fall prevention programs for wheelchair users. As a result, most individuals who rely on wheeled mobility do not undergo routine fall risk screening. Additionally, the COVID-19 pandemic and its related restrictions necessitate remote monitoring of health. This highlights the need for novel remote fall risk technology specific to this population.

Because access to fall risk screening is limited, researchers are exploring innovative approaches to deliver comprehensive and objective fall risk assessment to wheeled device users. One possible method leverages the capabilities of smartphone technology by developing an at-home fall risk health app [12-16]. This approach has been examined in ambulatory adults with a range of physical function [17,18]. Building on this potential, it has been demonstrated that a smartphone-based approach is a valid and reliable method to distinguish wheeled device users with and without impaired seated postural control [19]. Collectively, these findings provide the rationale for the development of an objective mobile health app that can provide wheeled device users with at-home fall risk assessment.

Although there is a strong rationale for the development of this type of health app, ensuring that such a tool is easy to use and provides intuitive fall risk score reporting is a necessary precursor to its future use in health behavior interventions [20]. Consequently, the purpose of the current study is to determine the usability of a fall risk mobile health app, Steady-Wheels, and identify key technology development insights for aging adults who use wheeled devices. This health app is an adaptation of a pre-existing fall risk app for older adults [18]. Based on prior investigations, we hypothesized that this health app would have a high level of usability.

Methods

Underlying Design Considerations

When designing the first iteration of the health app, we considered our target users’ (individuals aging with a physical disability) characteristics (Table 1). To ensure a high degree of usability, age-related changes and limitations due to disease or injury were taken into consideration, particularly as they related to cognitive overload, dexterity, and sensory function. To reduce cognitive overload, the app was designed to provide written instructions immediately preceding a task. Only one set of instructions was presented per slide, and large graphics depicting the task were also provided. This layout streamlined the app and reduced the need for working memory of the participants. In total, there were 14 slides, taking approximately 10 minutes to complete. Decrements in dexterity are commonly seen in those who have neurological complications [21-23] and age-associated arthritis [24]. To account for this within the app, selection options and buttons were made large, and typed responses were avoided. Sensory-related changes were accommodated by the use of black text written in a 14-point font on a white background [25], and auditory processing deficits were accommodated by the use of leading audio cues with simultaneous vibrations.

Table 1.

Participant demographic information.

| Characteristics | First iteration | Second iteration |
| --- | --- | --- |
| Age (years), mean (SD) | 59.0 (12.2) | 58.0 (13.1) |
| Sex, n (%) | | |
| Male | 3 (60) | 2 (40) |
| Female | 2 (40) | 3 (60) |
| Smartphone usage, n (%) | 5 (100) | 5 (100) |
| Time using mobility device (years), mean (SD) | 25 (20.3) | 25 (27.4) |
| Primary mobility device, n (%) | | |
| Power chair | 4 (80) | 2 (40) |
| Manual chair | 1 (20) | 0 |
| Scooter | 0 | 3 (60) |
| Reason for wheeled mobility, n (%) | | |
| Multiple sclerosis | 2 (40) | 4 (80) |
| Paraplegia/quadriplegia | 2 (40) | 1 (20) |
| Stroke | 1 (20) | 0 |
| History of falls (≥1 falls/year), n (%) | 1 (20) | 2 (40) |
| Self-reported fear of falling, n (%) | 5 (100) | 5 (100) |
| Level of education, n (%) | | |
| High school graduate/General Educational Development Test Credential | 0 | 1 (20) |
| Some or in-progress college/associate degree | 2 (40) | 0 |
| Bachelor’s degree | 0 | 1 (20) |
| Master’s degree | 3 (60) | 2 (40) |
| Doctoral degree | 0 | 1 (20) |

Components of the Steady-Wheels App

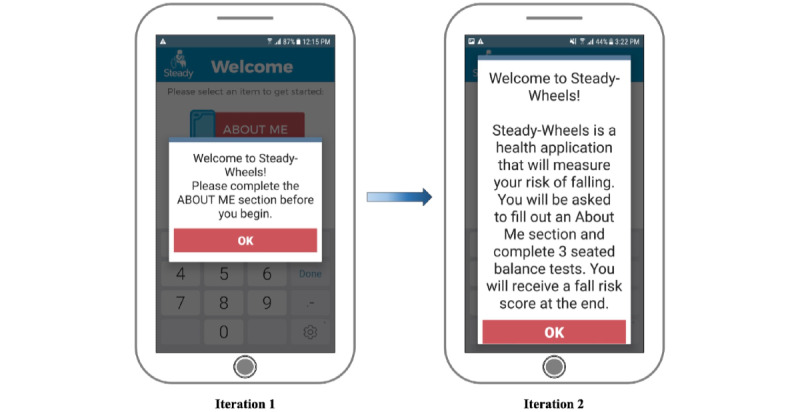

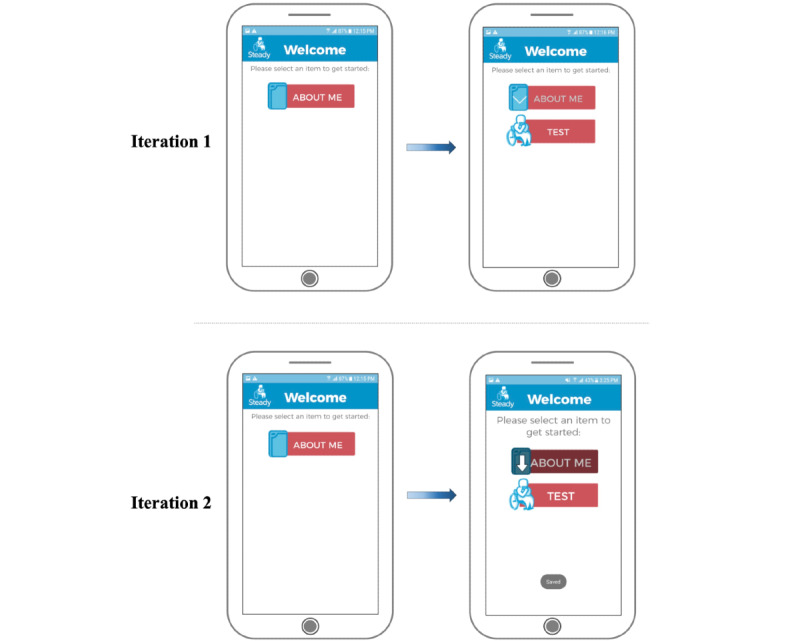

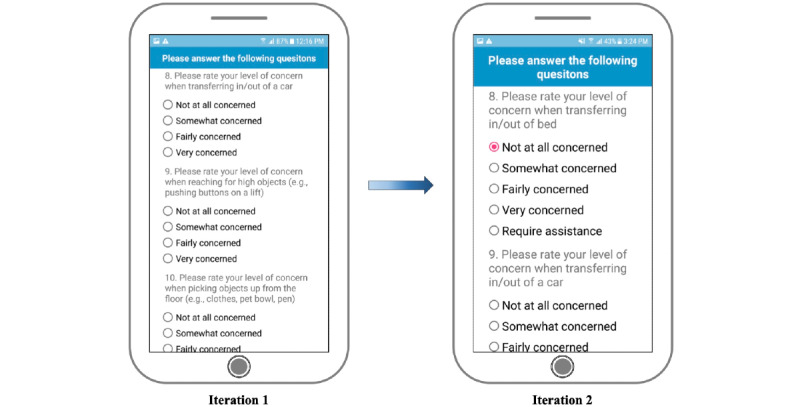

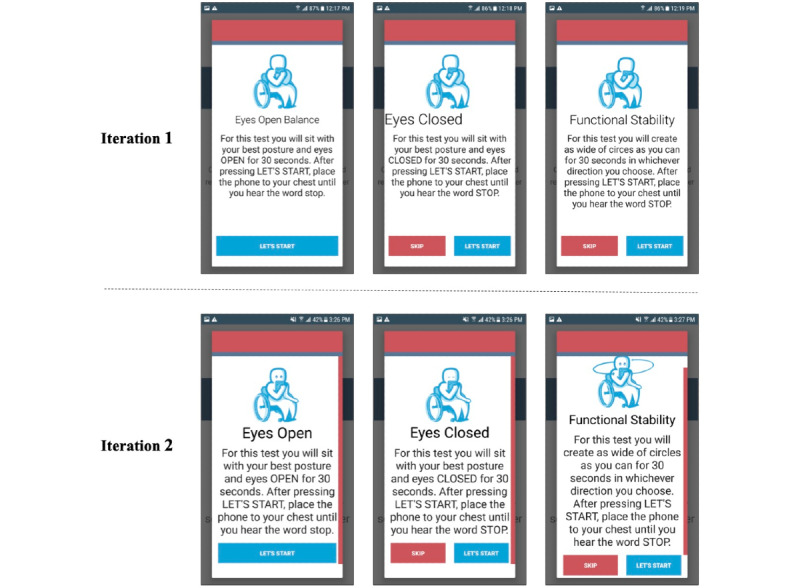

The fall risk app, Steady-Wheels, was developed in Android Studio 3.1.2. Upon opening the app, users are presented with a welcome screen that outlines the purpose of the app and provides an overview of the process (Figure 1). Steady-Wheels has two main components: a patient-reported outcome section and a performance test section (Figure 2). The patient-reported outcome component asks the participant to complete a 13-item health history questionnaire (including age, sex, number of falls in the last year, and activities that provoke concerns about falling [9]) (Figure 3). The performance component leads participants through a progressive series of seated postural control tasks (Figure 4). Before testing, participants were provided with written safety instructions. Participants were instructed to engage their wheel locks, and power wheelchair and scooter users were instructed to turn off their devices. All participants were asked to have a handrail or wall nearby in case they lost their balance. To complete the testing, the device guided participants through the completion of three 30-second seated balance tasks in a standardized order that increasingly challenged the participant’s base of support: an eyes-open balance task, an eyes-closed balance task, and a functional stability boundary task (Figure 4). These tests were chosen because they can provide insight into postural control [26] and have been linked to fall risk [27]. Written instructions on how to properly complete the balance tasks were provided before the start of each task. After the participant self-selected the “Let’s Start” option, an audio and vibratory countdown began from 5, leading to the word “start,” which cued the start of the test. The completion of the test was auditorily cued with the word “stop.” Participants were asked to hold the smartphone against the middle of their chest with their dominant hand for the duration of each test. 
Upon completion of each balance task, users reported if they were able to complete the task by selecting one of the following: “I completed the test,” “I was unable to complete the test,” and “I did NOT attempt to complete the test.” If participants were dissatisfied with their attempt at the task, they could select “I’d like to retry” and make another attempt.

Figure 1.

The text size was increased and the content was modified from iteration 1 to iteration 2 to allow for greater ease of use.

Figure 2.

Onscreen instructions were enhanced from iteration 1 to iteration 2.

Figure 3.

Changes made from iteration 1 to iteration 2 within the “About Me” section included larger text, larger radio buttons, and more choice response options.

Figure 4.

Modifications were made to the onscreen balance task instructions from iteration 1 to iteration 2.

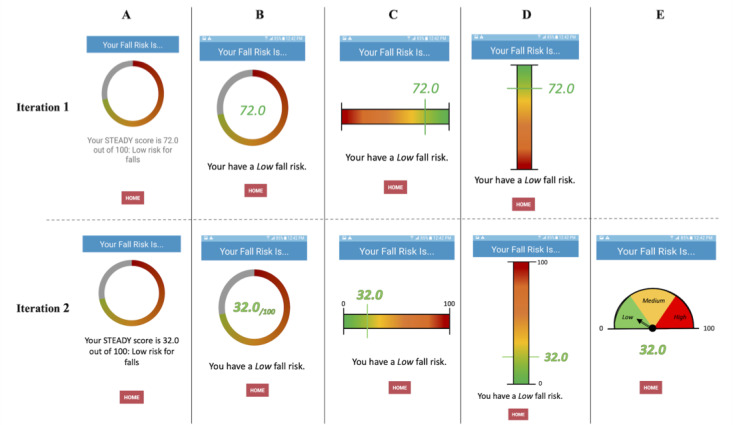

A future goal of this work is to utilize the participants’ demographic and movement data to generate a personalized fall risk score. To better understand users’ preferences for receiving their fall risk score, they were asked to rate different result screen options and provide insight on what made some illustrations better than others (Figure 5).

Figure 5.

Different options for the result screen (A-E), with modifications made from iteration 1 to iteration 2.

Ethics Approval

The Institutional Review Board of the University of Illinois at Urbana-Champaign approved all procedures (20192), and all participants provided informed consent before engaging in research activities. All research procedures were performed in accordance with the ethical standards of the responsible committee on human experimentation (institutional and national) and with the Helsinki Declaration of 1975.

Participant Characteristics

To be eligible, individuals were required to be ≥18 years old, utilize a wheeled mobility device for their main form of mobility, be able to sit unsupported for 30 seconds, have manual dexterity sufficient to use a smartphone, have hearing and vision that were normal or corrected to normal, and be able to read and speak English. In light of the COVID-19 pandemic, having access to video conferencing software (eg, Zoom, FaceTime, or Skype) was an inclusion criterion for the second round of testing.

This study included 2 rounds of testing, each with 5 different older adult wheelchair users (10 participants in total; mean age 58.5 years, SD 12.6 years; 5 male, 5 female), who were recruited from the community through existing participant pools, sharing of research flyers, and word of mouth (Table 1). The first round of testing was completed in person between November 2020 and February 2021, while the second round was performed remotely between April and May 2021. During sessions, participants used the app to completion, identified barriers to usability, and gave their rationale for their preferred results options. Feedback from the first round of 5 participants was used to modify the app. Following modification, the second round of volunteers participated in usability testing. This iterative design process is ideal for identifying use challenges, and having a sample size of 5 individuals per round of testing has been shown to be sufficient for identifying usability problems [28,29]. On average, the first round of testing in iterative design identifies 85% of usability problems, and the second round identifies an additional 13% [30]. This approach has been successful in the development of various health apps [31-33], including 2 recent fall risk apps for older adults [18] and patients with multiple sclerosis [34].
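The 85% and 13% figures are consistent with a commonly cited problem-discovery model in which each participant independently uncovers a fixed share of the usability problems. The sketch below is illustrative only: the per-participant detection rate of 0.31 is an assumption (it is not stated in this article), the function name is hypothetical, and the model treats the second round as 5 further participants testing the same design, which simplifies an iterative process where the app changes between rounds.

```python
def proportion_found(n_participants: int, detection_rate: float = 0.31) -> float:
    """Expected share of usability problems found by n independent participants.

    Uses 1 - (1 - rate) ** n. The default rate of 0.31 is an assumed,
    commonly cited average and is not taken from this study.
    """
    if n_participants < 0:
        raise ValueError("n_participants must be non-negative")
    if not 0.0 <= detection_rate <= 1.0:
        raise ValueError("detection_rate must be between 0 and 1")
    return 1.0 - (1.0 - detection_rate) ** n_participants


# Round 1: 5 participants. Rounds 1 and 2 combined: 10 participants.
first_round = proportion_found(5)
both_rounds = proportion_found(10)
second_round_gain = both_rounds - first_round

print(f"Found after round 1: {first_round:.1%}")
print(f"Additional share found in round 2: {second_round_gain:.1%}")
```

Under these assumptions, the first round accounts for roughly 84% of problems and the second for roughly 13% more, in line with the averages cited above.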

Experimental Session

After providing informed consent, each participant was given a smartphone (Samsung Galaxy S6, Samsung) that had the Steady-Wheels app installed. The participants were read an instructional prompt (Multimedia Appendix 1) asking them to speak their thought processes aloud while they independently used the app [35]. After the questions were answered, the researchers began visually and auditorily recording the participants’ interactions with the app and wrote field notes. After they completed using the app, the participants completed a semistructured interview in which they were asked to expand upon their likes and dislikes about the app’s layout and features (eg, graphics and wording) and to provide any suggestions for future iterations of the app. During this time, the participants also ranked the fall risk score results options from most to least favorite (Figure 5).

For the most part, these procedures remained constant for the second round of usability testing. The only difference was that the research supplies were delivered to the participants’ residences and the experimental session was completed over video conferencing software.

Along with feedback from the participant interviews, a smartphone usage questionnaire and the System Usability Scale (SUS) [36] were used to understand the participants’ experiences using smartphone and health apps and to quantify the usability of Steady-Wheels, respectively. While the questionnaire had a total of 6 choice and written response questions, the SUS consists of 10 questions with 5 response options [36,37], ranging from “strongly agree” (5 points) to “strongly disagree” (1 point). After calculation, results from the SUS range from zero (lowest usability) to 100 (highest usability); technology in general has an average score of 60 [37].
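The 0 to 100 range follows from the standard SUS scoring procedure: odd-numbered (positively worded) items contribute the response minus 1, even-numbered (negatively worded) items contribute 5 minus the response, and the sum is multiplied by 2.5. The sketch below illustrates that calculation along with the per-round mean and SD; the function name and the example responses are hypothetical and are not study data.

```python
from statistics import mean, stdev


def sus_score(responses):
    """Return the 0-100 SUS score for one participant.

    `responses` holds the 10 item responses in questionnaire order,
    each coded 1 (strongly disagree) to 5 (strongly agree).
    """
    if len(responses) != 10:
        raise ValueError("the SUS has exactly 10 items")
    if any(r not in range(1, 6) for r in responses):
        raise ValueError("each response must be an integer from 1 to 5")

    total = 0
    for item_number, response in enumerate(responses, start=1):
        if item_number % 2 == 1:
            total += response - 1   # odd items are positively worded
        else:
            total += 5 - response   # even items are negatively worded
    return total * 2.5


# Hypothetical responses for illustration only (not participant data).
hypothetical_round = [
    [4, 1, 5, 1, 4, 2, 5, 1, 4, 2],
    [5, 1, 5, 1, 5, 1, 5, 1, 5, 1],
    [3, 3, 3, 3, 3, 3, 3, 3, 3, 3],
]

scores = [sus_score(r) for r in hypothetical_round]
print("Scores:", scores)
print(f"Mean {mean(scores):.1f} (SD {stdev(scores):.1f})")
```

Because each item contributes 0 to 4 points, individual scores always fall on multiples of 2.5, which is why reported ranges take values such as 72.5 or 97.5.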

Qualitative Analysis

A thematic analysis approach was used to conduct the qualitative analysis [38]. Video recordings from the think-aloud activity and interviews were transcribed verbatim. The text was then independently reviewed and assigned codes (eg, instructions, testing duration, and graphics) based on its content using the software MAXQDA (version 12.3.3; Verbi GMBH). Once codes were reviewed and discussed by 2 authors (MF and KH), they were grouped into themes based on the commonality of the data. The same 2 authors (MF and KH) then deliberated on the main themes to ensure they reflected participant insights as accurately as possible. Both authors had prior experience conducting qualitative analyses.

Results

Participant demographic information is provided in Table 1. Table 2 presents participant responses as the mean response score (with SD) to each question of the SUS for the first and second iterations.

Table 2.

Participant responses to each System Usability Scale question for the first and second iterations.

| System Usability Scale question | Prompt | First iteration, mean score (SD) | Second iteration, mean score (SD) |
| --- | --- | --- | --- |
| 1 | I think that I would like to use this app frequently. | 2.6 (1.7) | 3.0 (1.6) |
| 2 | I found the app unnecessarily complex. | 1.0 (0) | 1.0 (0) |
| 3 | I thought the app was easy to use. | 4.8 (0.4) | 4.8 (0.5) |
| 4 | I think that I would need the support of a technical person to be able to use this app. | 1.3 (1.3) | 1.0 (0) |
| 5 | I found the various functions in this app were well-integrated. | 4.2 (0.8) | 4.5 (0.6) |
| 6 | I thought there was too much inconsistency in this app. | 1.4 (0.5) | 1.0 (0) |
| 7 | I would imagine that most people would learn to use this app very quickly. | 4.8 (0.4) | 4.8 (0.5) |
| 8 | I found the app very cumbersome to use. | 1.2 (0.4) | 1.0 (0) |
| 9 | I felt very confident using the app. | 4.4 (0.5) | 5.0 (0) |
| 10 | I needed to learn a lot of things before I could get going with this app. | 2.0 (1.4) | 1.3 (0.5) |

Iteration 1

The first round of usability testing yielded 2 themes: ease of use and flexibility of design. Representative participant quotes concerning these themes are reported throughout the following sections. The quotes are accompanied by participant characteristics (ie, sex and age). SUS scores ranged from 72.5 to 97.5 and averaged 84.5 (SD 11.4), indicating “excellent” usability [39].

Ease of Use

Some participants found the app easy to use, saying it was “...very, very straightforward, very easy. I don’t happen to have much to say because it’s pretty straightforward” (male, 47 years old). Others had difficulty determining the sequence in which to complete the separate modules, stating, “There's only one item here. It says about me. Is that what I’m supposed to touch?” (male, 72 years old); this module can be seen in Figure 2. Following the completion of the “About Me” section, another participant said, “Now do I do the test?” (female, 43 years old). Although most participants were able to navigate the app, their thought processes indicated unnecessary cognitive load regarding the app layout: “Okay. Now we're ready to do the test portion I assume since I filled out the about me, so I’ll go ahead and do that” (male, 47 years old). Such insights may help to explain the large variance in participant responses to SUS question 10, which asks “I needed to learn a lot of things before I could get going with this app” (Table 2). In response to this feedback, the welcome screen was edited to provide a more thorough description of what the app was going to ask of the participant and the order in which it would be completed (Figure 1). The transition from the “About Me” section to the “Test” section was made more evident by shading the completed “About Me” option and providing a larger arrow pointing to the “Test” option (Figure 2).

Further participant feedback indicated that the app could be improved by having larger text and multiple-choice buttons, particularly within the “About Me” section. One participant said, “The layout? I guess I would say that some of it is a little bit small in terms of text and radio buttons. Since you're really focusing on your design you could blow it up a little...there's plenty of real estate to play with, so you might as well. Especially given the demographics of the people that will be using it—easier to make it more accessible” (male, 47 years old). Figure 1, Figure 3, and Figure 4 illustrate the changes made to text and radio button size. This increased font size led to the introduction of a vertical slide bar on slides that no longer fit on a single screen (Figure 4). Seated balance task titles were bolded and centered to draw attention (Figure 4).

The purpose of the app’s graphics was to help users further understand the instructional text. Based on participant feedback, it became apparent that the graphics used within the first iteration could be further refined. One participant stated, “It'd be easier if it [the graphics] demonstrated exactly what it was saying” (male, 47 years old). To better depict the nature of the tasks, open and closed eyes were added, an arm was moved to the side of the icon’s body to illustrate that only one hand was needed to hold the phone to the chest, and circular arrows were positioned around the icon completing the functional stability boundary task to represent the movement pattern of the task (Figure 4).

Flexibility of Design

Steady-Wheels aims to be applicable to all wheeled device users, but many participants showed difficulty answering the demographic questions accurately, due to the limited choice response options. Participants said, “Level of concern when reaching for higher objects? Well, I would normally ask for help” (male, 72 years old) and “Please rate your level of concern when pushing a wheelchair on uneven surfaces. Well, I don't push my wheelchair anymore” (male, 72 years old). The limited choice response options may have led participants to feel as if the app was not tailored to them, leading to the large variance in participant responses to SUS question 1, which asks, “I think that I would like to use this app frequently” (Table 2). To be more inclusive and comprehensive, more choice response options, such as “require assistance” and “I use a powered device” (Figure 3) were added, in addition to another demographic question asking, “What mobility device do you most commonly use?” with response options of “power wheelchair” or “manual wheelchair.”

App features that support individual preferences and allow for easy recovery from errors are known to increase the usability of a system. One feature that participants enjoyed was being able to swipe right to left to progress through the slides and left to right to retrieve prior slides. One participant said “Swiping works. That's useful. In addition to the buttons [eg, “next,” “back,” and “skip”], swiping left or right seems to work fine” (male, 47 years old). In addition to this, participants had the flexibility to change multiple-choice responses, retrieve prior slides, and reassess balance tasks if they were not pleased with their performance. Another participant said, “Whoops, can I go back? I missed something. It asked me a question” (male, 72 years old). For this participant, having the ability to retrieve prior slides and add or adjust their responses to questions was necessary for the accurate completion of the app. This flexibility of use also helped to counterbalance the difficulties associated with small radio buttons, which we have already discussed.

Primary modifications to the app were to increase the size of text and radio buttons for multiple-choice responses (Figure 1, Figure 3, and Figure 4), improve the on-screen directions (Figure 2 and Figure 4), and add response options to the demographic questions (Figure 3).

Preferred Results Screens

During the first iteration, participants strongly favored the result screen that showed a horizontal scale, numbered from 0 to 100 (Figure 5 C). Positive attributes of this option were the color scheme, the large fall risk number, the brief description of fall risk level, and the horizontal layout. Some criticisms included the lack of upper and lower bounds on the scale (eg, “72 out of what?” [male, 72 years old]) and the lack of clear low, medium, and high cut-off locations on the sliding scale. The participants also felt that a lower fall risk should be represented by a lower number. This feedback informed the development of new results screen options for the second iteration of testing.

Iteration 2

The second round of usability testing yielded 2 themes: app layout and clarity of instruction. SUS scores ranged from 87.5 to 97.5 and averaged 91.9 (SD 4.3), indicating “best imaginable” usability [39].

App Layout

In general, participants were very pleased with the layout of the app during the second iteration of testing. Participants stated, “I think overall, it's good. I think it's clear” (female, 52 years old) and “I think it was straightforward...it was rather clear, concise, and pretty compact” (male, 42 years old). These improvements may help explain the minimal variance in participant responses to SUS question 10: “I needed to learn a lot of things before I could get going with this app” (Table 2). Although there were no clear modifications that needed to be made to the layout, one participant provided some insight into the app’s instructions by stating, “It was all very user friendly, self-explanatory, if you take the time to read it” (male, 53 years old). This statement suggests that the app had high-quality instructions, but perhaps too many of them. Further synthesis of the instructions or the inclusion of visual aids may help alleviate this in future iterations.

Clarity of Instructions

The only instructions that received criticism were the ones for the functional stability boundary test. Despite changes to the visual representation (Figure 4), most participants struggled to understand how to complete the test. One participant said, “Well, I don't know what to do with this one. It says, ‘create as wide of circles.’ I don't know if that's with my wheelchair, in which case, I’d have to turn it back on. And if I do, I can't hold the phone to my chest.” (female, 71 years old). For clarity, the instructional text will be altered to read “For this test, you will create as wide of circles with your trunk as you can...”

Preferred Results Screens

During the second iteration, participants strongly favored the result screen option that showed a dial (Figure 5 E), stating that it was a “real obvious one,” and complimenting its representation of low, medium, and high risk. Many related it to their preexisting understanding of a speedometer.

During both iterations, participants enjoyed the simplicity of receiving a single score, stating, “I think having a clear and concise one or two number metric is great. That's perfect” (male, 42 years old). However, most had lingering questions, such as “How do I use this number?” (male, 72 years old) and “What does it tell me?” after the app was complete. “Maybe give some more information about how the score is actually generated, and maybe give some feedback...maybe having a pop-up recommendation screen at the end for some suggestions with exercises or something like that, might be utilitarian” (male, 42 years old). Further changes to the app are needed to investigate how much information is appropriate and informative for users.

Discussion

Principal Results

Understanding the usability of a smartphone app provides insight into the quality and overall satisfaction of the user’s experience. An improved experience could lead to greater use of health apps and increased adherence to suggested interventions [40]. Consequently, the purpose of the current study was to determine the usability of a fall risk mobile health app, Steady-Wheels, and identify key insights into technology development for aging adults who use wheeled devices. Initial design considerations were based on age-related changes and physical limitations associated with disability, including motor, sensation, and cognitive impairments. A mixed-method, iterative design and testing process yielded high SUS scores; the app was rated as having “excellent” and “best imaginable” levels of usability. The main themes for each iteration were informed by participant feedback, with the first round of testing yielding 2 main themes (ease of use and flexibility of design) and the second round of testing yielding 2 different main themes (app layout and clarity of instruction). These themes helped identify insights into app development that could promote usability for aging adults who use wheeled devices.

Overall, participants found that the app was straightforward, easy to use, supportive of individual preferences, and allowed for easy recovery from errors. They appreciated the simple, objective fall risk score. App development and modifications came from participant feedback and insights from previously developed apps [18,34,40-42] and an understanding of usability heuristics for interface design, such as the visibility of the system, use of recognition rather than recall, aesthetics, minimalist design, error prevention, and a match between the system and the real world [43].

The visibility of the system and the timeliness and adequacy of feedback and information to users informed the modifications made to the welcome screen and the overall “step-by-step” style of the app. This approach allowed for the recognition of a recently described task rather than recall of prior instruction. While this promoted the ease of use of Steady-Wheels, the primary complaints about health apps made by users are often related to their aesthetics, especially poor or difficult to interpret color coding, graphics, and fonts [42]. Providing a simple color scheme and font with the inclusion of only essential graphics aided this and created minimal distractions within the app. This approach placed a reduced cognitive load on users and helped to reduce the occurrence of errors.

The primary goal of the results scores at the end of testing was to intuitively convey fall risk results to diverse users. Individuals learn and retain content better from visual information (eg, cartoons and graphics) [44] and can interpret its meaning much more easily if the design is recognizable [41] or matches real-world experiences and expectations [43]. Providing users with a results option that mimicked their preexisting knowledge of speedometers followed these concepts, was well received, and promoted curiosity in the users about what could be done to lower their fall risk. Collectively, these design features led to the development of an app with high perceived ease of use, which is associated with greater adoption of technology [45].

Along with the personal adoption of technology, it is also important to gain insights into the likelihood of users recommending this technology to other individuals. The SUS has a strong relationship with the Net Promoter Score, which has become a common metric to understand customer loyalty [46]. Consumers will likely promote a product if it achieves a SUS score of 81 or greater [39]. In the current study, both iterations of testing yielded a SUS score above this threshold. This is particularly noteworthy as technology in general has an average SUS score of 60 [37]. Overall, these findings indicate that Steady-Wheels may not only be adopted on a personal level, but will likely also be recommended to others at an equal or greater rate than other forms of technology.

Lessons Learned

Although this is the first app designed to measure fall risk in aging adults who use wheeled devices, our initial design was informed by the needs of users and learned experiences from prior attempts to develop fall risk screening apps for older adults [18] and for people with multiple sclerosis [34], both of which received high usability scores from their respective users. Throughout the iterative testing of Steady-Wheels, we identified key insights that could further inform the development of mobile health apps for older users of wheeled devices. Depending on their physical ability, some individuals are reliant on the use of a single hand for all activities of daily living. Future development of remote assessment should account for this in the test selection and the test’s method of completion. By failing to consider this, researchers may increase the task’s safety risk, complexity, and error rates. Many participants had difficulties with dexterity, which made large selection buttons and swiping options key to the app’s ease of use. Also critical to ease of use was the guided (step-by-step) navigation of the app with clear and brief instructions accompanied by representative illustrations along the way. These features helped to reduce the risk of errors, but simple error recovery (eg, allowing for retests or access to previous slides to adjust choice responses) should still be available when mistakes are made during testing. Lastly, intuitive fall risk reporting was achieved through the presentation of a single number located on a color-coordinated continuum for low, medium, and high fall risk. While participants found the simple reporting of their fall risk score to be useful, they were eager to learn ways to reduce their fall risk. Providing follow-up preventative information may increase the app’s usefulness and encourage further engagement with the app and shared content [40]. Personalized messaging is an easy and effective strategy for altering patient behavior [47] and could be a feasible way to share such information.

Due to the COVID-19 pandemic, the second iteration of this study was completed remotely. This successful experience highlighted the potential feasibility of the home use of the app. Participants received a smartphone that they may not have been previously exposed to, but were able to turn it on, locate the previously installed app, and follow the instructions to completion. The validity and reliability of this novel measurement tool will still need to be established by comparison with common clinical tests [48,49].

Limitations

The current investigation has three primary limitations: (1) baseline interviews with target users were not conducted to inform the app’s initial iteration, (2) all participants in the second round of usability testing were required to have access to videoconferencing software (eg, Zoom, Skype, or FaceTime), and (3) 9 of the 10 participants had received some form of higher education.

While the current app considered our target users’ characteristics and abilities and the lessons learned during the development of previous fall risk apps, baseline interviews were not performed. Taking a more traditional user-centered approach would likely have highlighted additional thoughts, wants, and needs concerning technology and the app’s design. Identifying these key insights early on would have been a way to better serve the target users and help ensure that the researchers’ time and resources were being used most efficiently.

Although the second round of usability testing helped to provide insights into the app’s feasibility in a home setting, exclusively enrolling individuals who already used videoconferencing software may have created a biased, “technology-friendly” sample. Unfortunately, this was the only possible method of testing during the COVID-19 pandemic. Moving forward, researchers should aim to prioritize in-person data collection sessions when possible.

Despite efforts to recruit through a variety of methods and locations, most participants had received some form of higher education. Higher education may provide individuals with more experience engaging with technology and a better understanding of it, and our participants may have been more likely to understand the fall risk scores as presented. This, too, may have contributed to bias. Future researchers should consider accounting for this effect by enrolling roughly equal proportions of individuals with different educational backgrounds.

Conclusions

Previous literature has demonstrated that falls are both common and detrimental for individuals who use wheeled devices. The development of an objective, remote fall risk assessment tool could allow for accessible fall risk screening. Smartphone technology is a promising way to provide users with this information. Overall, aging adults who use wheeled devices found the mobile health app easy to use, with a high level of usability attributable to characteristics such as guided navigation of the app, large text and radio buttons, clear and brief instructions that were accompanied by representative illustrations, and simple error recovery. Intuitive fall risk reporting was achieved through the presentation of a single number on a continuum of colors indicating low, medium, and high risk. Future apps developed for fall risk reporting for this population should consider leveraging the insights identified here to maximize usability.

Acknowledgments

This work was supported by the National Institute on Disability, Independent Living, and Rehabilitation Research (grant 90REGE0006-01-00). MF was funded by the MS Run the US Scholarship.

Abbreviations

- SUS: System Usability Scale

Instructional Prompt.

Footnotes

Authors' Contributions: MF contributed to participant recruitment, data collection, data and statistical analyses, and writing the manuscript. JF contributed to app development and writing the manuscript. KH contributed to data and statistical analyses and writing the manuscript. LR contributed to funding acquisition, experimental design, and writing the manuscript. JS contributed to funding acquisition, experimental design, and writing the manuscript.

Conflicts of Interest: JS declares ownership in Sosnoff Technologies, LLC. Other authors declare no conflicts of interest.

References

- 1. U.S. Wheelchair User Statistics. Pants Up Easy. [2020-09-17]. https://www.pantsupeasy.com/u-s-wheelchair-user-statistics/

- 2. Karmarkar AM, Dicianno BE, Cooper R, Collins DM, Matthews JT, Koontz A, Teodorski EE, Cooper RA. Demographic profile of older adults using wheeled mobility devices. J Aging Res. 2011;2011:560358. doi: 10.4061/2011/560358.

- 3. Colby SL, Ortman JM. Projections of the Size and Composition of the U.S. Population: 2014 to 2060. United States Census Bureau. 2015 Mar. [2020-09-17]. https://www.census.gov/content/dam/Census/library/publications/2015/demo/p25-1143.pdf

- 4. Edwards K, McCluskey A. A survey of adult power wheelchair and scooter users. Disabil Rehabil Assist Technol. 2010;5(6):411–9. doi: 10.3109/17483101003793412.

- 5. Berg K, Hines M, Allen S. Wheelchair users at home: few home modifications and many injurious falls. Am J Public Health. 2002 Jan;92(1):48. doi: 10.2105/ajph.92.1.48.

- 6. Kirby RL, Ackroyd-Stolarz SA, Brown MG, Kirkland SA, MacLeod DA. Wheelchair-related accidents caused by tips and falls among noninstitutionalized users of manually propelled wheelchairs in Nova Scotia. Am J Phys Med Rehabil. 1994;73(5):319–30. doi: 10.1097/00002060-199409000-00004.

- 7. Rice L, Kalron A, Berkowitz S, Backus D, Sosnoff J. Fall prevalence in people with multiple sclerosis who use wheelchairs and scooters. Medicine (Baltimore). 2017 Sep;96(35):e7860. doi: 10.1097/MD.0000000000007860.

- 8. Rice LA, Ousley C, Sosnoff JJ. A systematic review of risk factors associated with accidental falls, outcome measures and interventions to manage fall risk in non-ambulatory adults. Disabil Rehabil. 2015;37(19):1697–705. doi: 10.3109/09638288.2014.976718.

- 9. Boswell-Ruys CL, Harvey LA, Delbaere K, Lord SR. A Falls Concern Scale for people with spinal cord injury (SCI-FCS). Spinal Cord. 2010 Sep;48(9):704–9. doi: 10.1038/sc.2010.1.

- 10. Tinetti ME, Williams CS. The effect of falls and fall injuries on functioning in community-dwelling older persons. J Gerontol A Biol Sci Med Sci. 1998 Mar;53(2):M112–9. doi: 10.1093/gerona/53a.2.m112.

- 11. Sarmiento K, Lee R. STEADI: CDC's approach to make older adult fall prevention part of every primary care practice. J Safety Res. 2017 Dec;63:105–109. doi: 10.1016/j.jsr.2017.08.003. https://europepmc.org/abstract/MED/29203005

- 12. Roeing KL, Hsieh KL, Sosnoff JJ. A systematic review of balance and fall risk assessments with mobile phone technology. Arch Gerontol Geriatr. 2017 Nov;73:222–226. doi: 10.1016/j.archger.2017.08.002.

- 13. Reyes A, Qin P, Brown CA. A standardized review of smartphone applications to promote balance for older adults. Disabil Rehabil. 2018 Mar;40(6):690–696. doi: 10.1080/09638288.2016.1250124.

- 14. Mellone S, Tacconi C, Schwickert L, Klenk J, Becker C, Chiari L. Smartphone-based solutions for fall detection and prevention: the FARSEEING approach. Z Gerontol Geriatr. 2012 Dec;45(8):722–7. doi: 10.1007/s00391-012-0404-5.

- 15. Rasche P, Mertens A, Bröhl C, Theis S, Seinsch T, Wille M, Pape H, Knobe M. The "Aachen fall prevention App" - a Smartphone application app for the self-assessment of elderly patients at risk for ground level falls. Patient Saf Surg. 2017;11:14. doi: 10.1186/s13037-017-0130-4. https://pssjournal.biomedcentral.com/articles/10.1186/s13037-017-0130-4

- 16. Wetsman N. Apple's new health features bring new focus to elder care technology. The Verge. [2021-07-06]. https://www.theverge.com/2021/6/10/22527707/apple-health-data-eldery-falls-walking-privacy

- 17. Hsieh KL, Roach KL, Wajda DA, Sosnoff JJ. Smartphone technology can measure postural stability and discriminate fall risk in older adults. Gait Posture. 2019 Jan;67:160–165. doi: 10.1016/j.gaitpost.2018.10.005.

- 18. Hsieh KL, Fanning JT, Rogers WA, Wood TA, Sosnoff JJ. A Fall Risk mHealth App for Older Adults: Development and Usability Study. JMIR Aging. 2018 Nov 20;1(2):e11569. doi: 10.2196/11569. https://aging.jmir.org/2018/2/e11569/

- 19. Frechette ML, Abou L, Rice LA, Sosnoff JJ. The Validity, Reliability, and Sensitivity of a Smartphone-Based Seated Postural Control Assessment in Wheelchair Users: A Pilot Study. Front Sports Act Living. 2020;2:540930. doi: 10.3389/fspor.2020.540930.

- 20. Mohr DC, Schueller SM, Montague E, Burns MN, Rashidi P. The behavioral intervention technology model: an integrated conceptual and technological framework for eHealth and mHealth interventions. J Med Internet Res. 2014 Jun 05;16(6):e146. doi: 10.2196/jmir.3077. https://www.jmir.org/2014/6/e146/

- 21. Feys P, Lamers I, Francis G, Benedict R, Phillips G, LaRocca N, Hudson LD, Rudick R, Multiple Sclerosis Outcome Assessments Consortium. The Nine-Hole Peg Test as a manual dexterity performance measure for multiple sclerosis. Mult Scler. 2017 Apr;23(5):711–720. doi: 10.1177/1352458517690824. https://journals.sagepub.com/doi/10.1177/1352458517690824?url_ver=Z39.88-2003&rfr_id=ori:rid:crossref.org&rfr_dat=cr_pub%3dpubmed

- 22. Uzochukwu JC, Stegemöller EL. Repetitive Finger Movement and Dexterity Tasks in People With Parkinson's Disease. Am J Occup Ther. 2019;73(3):7303205090p1–7303205090p8. doi: 10.5014/ajot.2019.028738.

- 23. Johansson GM, Häger CK. A modified standardized nine hole peg test for valid and reliable kinematic assessment of dexterity post-stroke. J Neuroeng Rehabil. 2019 Jan 14;16(1):8. doi: 10.1186/s12984-019-0479-y. https://jneuroengrehab.biomedcentral.com/articles/10.1186/s12984-019-0479-y

- 24. Erol AM, Ceceli E, Uysal Ramadan S, Borman P. Effect of rheumatoid arthritis on strength, dexterity, coordination and functional status of the hand: the relationship with magnetic resonance imaging findings. Acta Reumatol Port. 2016;41(4):328–337. http://actareumatologica.pt/article_download.php?id=1210

- 25. Bernard M, Liao C, Mills M. The effects of font type and size on the legibility and reading time of online text by older adults. CHI '01 Extended Abstracts on Human Factors in Computing Systems; CHI01: Human Factors in Computing Systems; Mar 31-Apr 5, 2001; Seattle, WA. 2001. pp. 175–176.

- 26. Shin S, Sosnoff JJ. Spinal cord injury and time to instability in seated posture. Arch Phys Med Rehabil. 2013 Aug;94(8):1615–20. doi: 10.1016/j.apmr.2013.02.008.

- 27. Sung J, Ousley CM, Shen S, Isaacs ZJK, Sosnoff JJ, Rice LA. Reliability and validity of the function in sitting test in nonambulatory individuals with multiple sclerosis. Int J Rehabil Res. 2016 Dec;39(4):308–312. doi: 10.1097/MRR.0000000000000188.

- 28. How Many Test Users in a Usability Study? Nielsen Norman Group. [2020-09-18]. https://www.nngroup.com/articles/how-many-test-users/

- 29. Macefield R. How To Specify the Participant Group Size for Usability Studies: A Practitioner's Guide. J Usability Stud. 2009;5(1):34–45. https://uxpajournal.org/wp-content/uploads/sites/7/pdf/JUS_Macefield_Nov2009.pdf

- 30. Why You Only Need to Test with 5 Users. Nielsen Norman Group. [2022-04-22]. https://www.nngroup.com/articles/why-you-only-need-to-test-with-5-users/

- 31. Wu Y, Liu H, Kelleher A, Pearlman J, Cooper RA. Evaluating the usability of a smartphone virtual seating coach application for powered wheelchair users. Med Eng Phys. 2016 Jun;38(6):569–75. doi: 10.1016/j.medengphy.2016.03.001.

- 32. Zou P, Stinson J, Parry M, Dennis C, Yang Y, Lu Z. A Smartphone App (mDASHNa-CC) to Support Healthy Diet and Hypertension Control for Chinese Canadian Seniors: Protocol for Design, Usability and Feasibility Testing. JMIR Res Protoc. 2020 Apr 02;9(4):e15545. doi: 10.2196/15545. https://www.researchprotocols.org/2020/4/e15545/

- 33. Stinson JN, Jibb LA, Nguyen C, Nathan PC, Maloney AM, Dupuis LL, Gerstle JT, Alman B, Hopyan S, Strahlendorf C, Portwine C, Johnston DL, Orr M. Development and testing of a multidimensional iPhone pain assessment application for adolescents with cancer. J Med Internet Res. 2013 Mar 08;15(3):e51. doi: 10.2196/jmir.2350. https://www.jmir.org/2013/3/e51/

- 34. Hsieh K, Fanning J, Frechette M, Sosnoff J. Usability of a Fall Risk mHealth App for People With Multiple Sclerosis: Mixed Methods Study. JMIR Hum Factors. 2021 Mar 22;8(1):e25604. doi: 10.2196/25604. https://humanfactors.jmir.org/2021/1/e25604/

- 35. Fonteyn ME, Kuipers B, Grobe SJ. A Description of Think Aloud Method and Protocol Analysis. Qual Health Res. 2016 Jul 01;3(4):430–441. doi: 10.1177/104973239300300403.

- 36. Brooke J. SUS - A quick and dirty usability scale. Redhatch Consulting Ltd. [2020-09-21]. https://hell.meiert.org/core/pdf/sus.pdf

- 37. Measuring Usability with the System Usability Scale (SUS). MeasuringU. [2020-09-21]. https://measuringu.com/sus/

- 38. Creswell JW. Qualitative inquiry and research design: Choosing among five traditions. Thousand Oaks, CA: Sage Publications; 1998. p. xv, 403.

- 39. 5 Ways to Interpret a SUS Score. MeasuringU. [2020-11-30]. https://measuringu.com/interpret-sus-score/

- 40. Georgsson M, Staggers N. An evaluation of patients' experienced usability of a diabetes mHealth system using a multi-method approach. J Biomed Inform. 2016 Feb;59:115–29. doi: 10.1016/j.jbi.2015.11.008. https://linkinghub.elsevier.com/retrieve/pii/S1532-0464(15)00276-2

- 41. Kirwan M, Duncan MJ, Vandelanotte C, Mummery WK. Design, development, and formative evaluation of a smartphone application for recording and monitoring physical activity levels: the 10,000 Steps "iStepLog". Health Educ Behav. 2013 Apr;40(2):140–51. doi: 10.1177/1090198112449460.

- 42. Schnall R, Rojas M, Bakken S, Brown W, Carballo-Dieguez A, Carry M, Gelaude D, Mosley JP, Travers J. A user-centered model for designing consumer mobile health (mHealth) applications (apps). J Biomed Inform. 2016 Apr;60:243–51. doi: 10.1016/j.jbi.2016.02.002. https://linkinghub.elsevier.com/retrieve/pii/S1532-0464(16)00024-1

- 43. 10 Heuristics for User Interface Design Internet. Nielsen Norman Group. [2021-10-23]. https://www.nngroup.com/articles/ten-usability-heuristics/

- 44. Burmark L. Visual Literacy: Learn To See, See To Learn. Arlington, VA: Association for Supervision & Curriculum Development; 2002.

- 45. Davis FD. Perceived Usefulness, Perceived Ease of Use, and User Acceptance of Information Technology. MIS Quarterly. 1989 Sep;13(3):319–340. doi: 10.2307/249008.

- 46. Predicting Net Promoter Scores from System Usability Scale Scores. MeasuringU. [2020-11-30]. https://measuringu.com/nps-sus/

- 47. Fried TR, Redding CA, Robbins ML, Paiva AL, O'Leary JR, Iannone L. Development of Personalized Health Messages to Promote Engagement in Advance Care Planning. J Am Geriatr Soc. 2016 Feb;64(2):359–64. doi: 10.1111/jgs.13934. https://europepmc.org/abstract/MED/26804791

- 48. Gorman SL, Radtka S, Melnick ME, Abrams GM, Byl NN. Development and validation of the Function In Sitting Test in adults with acute stroke. J Neurol Phys Ther. 2010 Sep;34(3):150–60. doi: 10.1097/NPT.0b013e3181f0065f.

- 49. Abou L, Sung J, Sosnoff JJ, Rice LA. Reliability and validity of the function in sitting test among non-ambulatory individuals with spinal cord injury. J Spinal Cord Med. 2020 Nov;43(6):846–853. doi: 10.1080/10790268.2019.1605749. https://europepmc.org/abstract/MED/30998421
