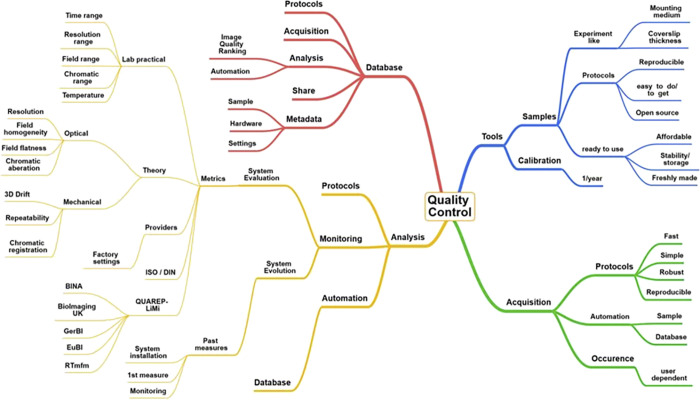

Faklaris et al. present tools, fast and robust acquisition protocols, and automated analysis methods to assess quality control metrics for light fluorescence microscopy. The authors collected data from 10 light microscopy core facilities and propose guidelines to ensure quantifiable and reproducible results among laboratories.

Abstract

Although there is a need to demonstrate reproducibility in light microscopy acquisitions, the lack of standardized guidelines monitoring microscope health status over time has so far impaired the widespread use of quality control (QC) measurements. As scientists from 10 imaging core facilities who encounter various types of projects, we provide affordable hardware and open source software tools, rigorous protocols, and define reference values to assess QC metrics for the most common fluorescence light microscopy modalities. Seven protocols specify metrics on the microscope resolution, field illumination flatness, chromatic aberrations, illumination power stability, stage drift, positioning repeatability, and spatial-temporal noise of camera sensors. We designed the MetroloJ_QC ImageJ/Fiji Java plugin to incorporate the metrics and automate analysis. Measurements allow us to propose an extensive characterization of the QC procedures that can be used by any seasoned microscope user, from research biologists with a specialized interest in fluorescence light microscopy through to core facility staff, to ensure reproducible and quantifiable microscopy results.

Introduction

Quality control (QC) is often neglected in light microscopy because it is considered complex, costly, and time-consuming (Nelson et al., 2021). It is, however, essential in research to ensure quantifiable results and reproducibility among laboratories (Baker, 2016; Nature Methods, 2018; Deagle et al., 2017; Heddleston et al., 2021). Biologists who aim to publish top-quality light microscopy images need to understand not only the fundamentals of microscopy but also the limitations, variations, and deviations of microscope performance.

In the past, different groups have developed measurement protocols and published methods for a variety of wide-field and confocal microscope performance aspects (Kedziora et al., 2011; Murray et al., 2007; Petrak and Waters, 2014; Zucker et al., 2007; Zucker and Price, 1999). Most of these QC studies were recently reviewed (Jonkman et al., 2020; Jost and Waters, 2019; Montero Llopis et al., 2021). Some studies were carried out on an international scale, involving a larger community within the Association of Biomolecular Resource Facilities (ABRF; Cole et al., 2013; Stack et al., 2011). A few of these studies proposed automated analysis workflows and limit values. The ISO 21073:2019 norm for confocal microscopy was recently published (ISO, 2019; Nelson et al., 2020), providing a fixed minimal set of tests to be performed. This norm is descriptive: it shows no experimental values and proposes no limit values, but it is the first step toward standardization of QC in microscopy.

To address the lack of commonly accepted QC guidelines, we collected experimental data from 10 light microscopy core facilities and developed tools, fast and robust acquisition protocols, and automated analysis methods that make the monitoring of light microscope performance easily accessible to a broad scientific community.

We checked 117 objectives, 49 light sources, 25 stages, and 32 cameras, and additionally automated many of the acquisition and analysis procedures. Most of the major microscope manufacturers were represented, with the majority coming from Carl Zeiss Microscopy (50%), followed by Leica Microsystems (20%), Nikon (14%), Olympus/Evident (9%), Andor–Oxford Instruments (7%), and Till Photonics (2%). The facilities involved are part of the French microscopy technological network RTmfm. This study results from collaborative work over the past 10 yr of the QC working group (GT3M; https://rtmfm.cnrs.fr/en/gt/gt-3m/) of this network.

We first employed a qualitative approach followed by specific QC metric calculations. The paper is divided into six chapters that define guidelines to characterize (1) lateral and axial resolution, (2) field illumination, (3) coregistration, (4) illumination power stability, (5) stage drift and positioning repeatability, and (6) temporal and spatial noise sources of camera sensors (Fig. 1). Each chapter begins with an introduction that serves as the chapter background in which key questions are presented. The main conclusions are given in the discussion part of every chapter, with examples emphasizing the influence of QC in biological imaging.

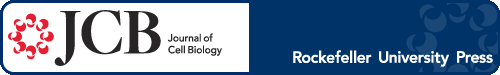

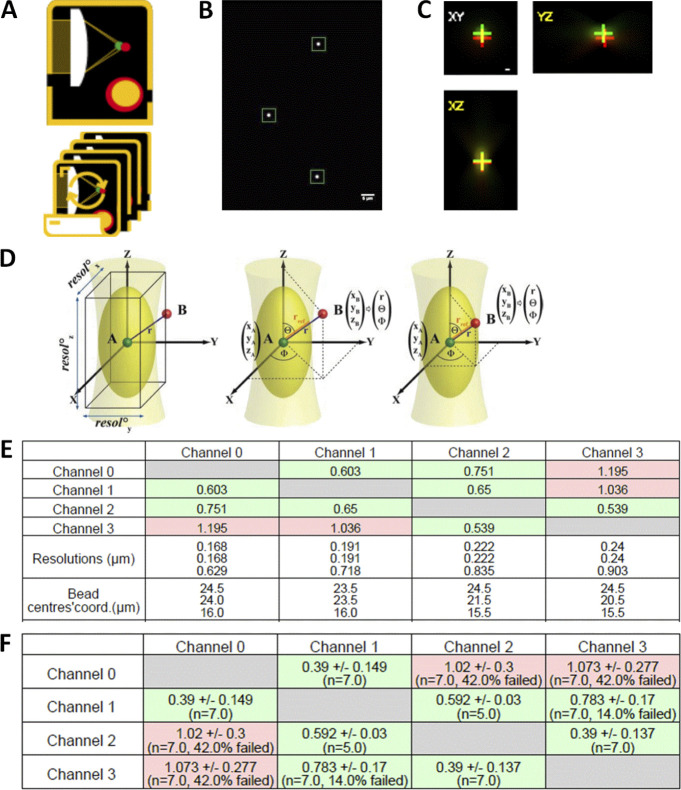

Figure 1.

Schematic overview of the seven proposed QC guideline fields, together with the corresponding MetroloJ_QC tool icons. (a) The PSF is a simple way to characterize the resolving power in the x, y, and z dimensions. (b) Field illumination affects intensity quantification over the whole field of view of the system, tile-scan reconstructions, and inhomogeneous sample bleaching. (c) Coregistration characterizes the chromatic mismatch in x, y, and z between images of the same fluorescent emitter acquired in different color channels. (d) Illumination power stability at different time scales determines the reproducibility and quantification of the experiment. (e) Imaging quality depends on the stability of the sample in x, y, and z, characterized by drift monitoring and stage positioning repeatability. (f) The noise and offset of the camera sensor influence quantification, especially of weak signals.

These six chapters will be of interest to all biologists who want to acquire meaningful and reliable images on a well-tuned microscope. More precisely, the metrics and tools that we propose will be particularly useful for (1) microscopy users with access to core facilities, (2) core facility staff in charge of the routine operation of the microscopes, and (3) biologists who use their microscope irregularly or seasonally. A deeper knowledge of microscopy theory will help in reading the full article. Recent reviews describe good-practice guides that are very useful to biologists interested in the fundamentals of fluorescence microscopy (Cuny et al., 2022; Swift and Colarusso, 2022).

We developed the MetroloJ_QC software tool (an ImageJ/Fiji Java plugin), which fully automates QC data analysis and builds on the existing MetroloJ plugin tools (Cordelières and Matthews, 2010; Fig. 2). Processing was automated to minimize user actions. We also implemented multichannel image processing so that users no longer need to split channels and configure the plugin separately for each color channel. The plugin incorporates the metrics defined in this study and tools for camera characterization and drift monitoring, and it generates reports that quickly identify parameters within or outside tolerances.

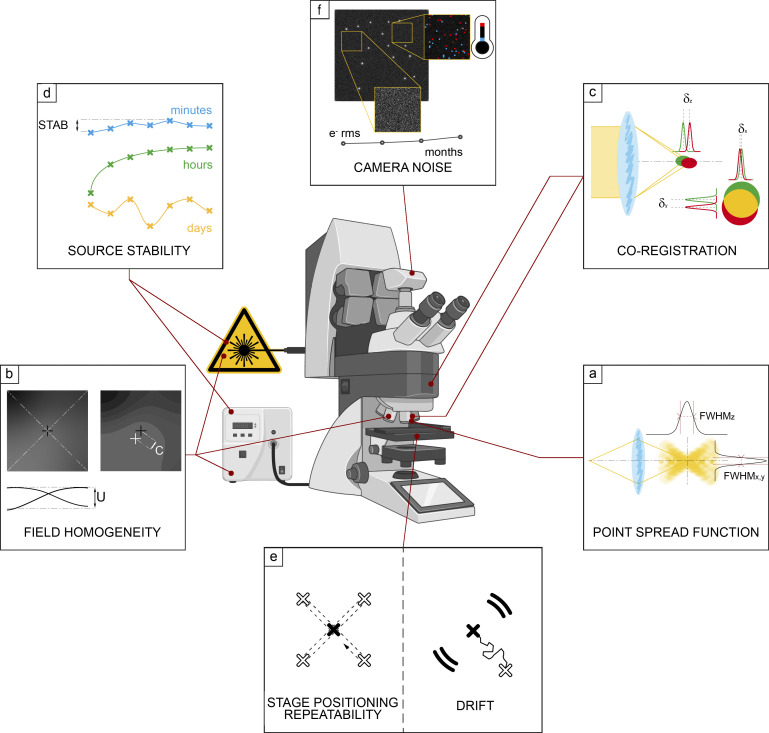

Figure 2.

Brief description of the MetroloJ_QC ImageJ/Fiji plugin. The plugin analyzes image data to characterize the optical components, the detector, and the stage properties of a light microscope. The active window gives access to three types of tools: (a) single image set; (b) batch mode for automation; (c) plugin options, which from left to right toggle the main ImageJ window, close the bar, customize the bar and remove some of the above-mentioned tools, and open the manual for the current version.

We highlighted the importance of QC measurements during new microscope setup installations and their crucial role in building up a requisite vocabulary to interact with the microscope and device manufacturers and service technicians. We also recommended acquiring these metrics before and after any scheduled revision of a system to compare QC data.

We establish QC guidelines, propose tolerance values, and recommend how often microscopes should be subject to QC testing, parameters that provide valuable indicators of the setup health state and experimental reproducibility (see Table 1 for a summary and troubleshooting).

Table 1.

Summary of metrics and troubleshooting

| Monitoring points (measuring frequency) | Metrics | Limit values | Possible reasons for out-of-limit values: proposed solutions |
|---|---|---|---|
| Point spread function (once per mo) | FWHM XY exp/theory | <1.5 | Dirty or damaged objective: meticulous cleaning of the front lens. Damaged objective: close examination of the front lens (scratches, lens detachment); send for repair. Pinhole and collimator lens misalignment (LSCM): check the alignment. Optical aberrations: check the manufacturer's correction specifications (APO, PlanAPO…); remove DIC or additional lenses from the light path; clean optical elements in the light path; adjust the correction collar for NA, temperature, or coverslip thickness. Sample: adjust stage and sample flatness; choose #1.5 (0.17 mm thick) coverslips; image beads near the coverslip; use the appropriate mounting or immersion medium at the right temperature; make sure there are no bubbles in the immersion oil. Vibrations and drift: use an anti-vibration optical table with properly regulated air pressure; acquire a short time-lapse of a single bead. Galvanometric scanners (LSCM): calibrate bidirectional mode; check in unidirectional mode. Excitation light alignment and polarization: check source coalignment and inspect the light guide (polarization, bends). |
| | FWHM Z exp/theory | <1.5 | |
| | LAR | Case-dependent (illumination source) | |
| Field illumination (twice per yr) | Uniformity (U) | >50% | Damaged objective: close examination of the front lens (scratches, lens detachment); send for repair. Large camera sensor chip (WF, SDCM): crop the central field of view of the sensor. Incorrect position of the field diaphragm (WF): center the field diaphragm. Source and system alignment: adjust the collector lens or liquid light guide position; check for bending of the optical fibers; coalign the light sources; align the pinhole; check the beam size at the back focal plane of the objective. UV misalignment: check the manufacturers' correction specifications of the optical path components. |
| | Centering (C) | >20% | |
| Coregistration (twice per yr) | Ratio $r_{\mathrm{exp}}/r_{\mathrm{ref}}$ | 1 | Chromatic aberrations: check the manufacturer's correction specifications of the lens (APO, PlanAPO…). Damaged objective: close examination of the front lens (scratches, lens detachment); send for repair. Source and system alignment: check dichroic mirror and filter positions and the alignment of additional lenses and light sources. Microlens disk (SDCM): check the manufacturer's correction specifications of the optical path components. |
| Illumination power stability (once per yr) | Stability $\mathrm{STAB}_{\mathrm{power}}$ | >97% | Laser dying: thorough check by the manufacturer. Electrical instabilities: AC-powered light source; check the power grid stability of the room. Environmental variations (thermal, airflow, humidity…): general verification of environmental and vibration conditions. Fiber polarization: check the bends of the optical fibers and the alignment of the laser into the fiber. Defective optical components (AOTF, AOM, fiber): thorough check by the manufacturer. |
| | SD of normalized intensity | <0.2 | |
| Stage drift (once per yr) | $V_a$: mean velocity after stabilization | [0–50] nm/min | Environmental variations (thermal, airflow, humidity…): general verification of environmental conditions; protect the stage from thermal shifts (cover, temperature-controlled chamber, or incubator); let the stage stabilize before starting experiments. Stage control components not working properly: check that the joystick is at the "no move" position; check the controller status. Mechanical instability: tighten the mechanical elements; thorough check by the manufacturer. |
| | $V_b$: mean velocity before stabilization | [0–100] nm/min | |
| | $\tau_{\mathrm{stab}}$ | <120 min | |
| Stage positioning repeatability (once per yr) | $\sigma$ of positioning deviation | Stage specifications | Environmental variations (thermal, airflow, humidity…): general verification of environmental conditions. Mechanical instability: clean away pieces of broken coverslip; tighten mechanical elements; thorough check by the manufacturer. Objective touches the sample insert at positions far from the center: change the insert or limit movements to central positions. Not enough oil on the objective: add enough oil beneath the whole sample before starting an experiment. Stage drift: let the stage stabilize before starting a multiposition experiment. |
| Camera read noise (once per yr) | VAR | >90% | Electronics issue; detector temperature; detector aging; cosmic rays: thorough check by the manufacturer. |
| | $\mathrm{STAB}_{\mathrm{noise}}$ | >97% | |

Summary of the basic metrics, the recommended measuring frequency, the experimental limit values, the possible reasons associated with their exceeding values, and troubleshooting advice.

Based on many of the experiences and results presented in this work, the initiative “Quality Assessment and Reproducibility for Instruments & Images in Light Microscopy” (QUAREP-LiMi) was started in April 2020 as an international joint effort with many stakeholders, including industry, to further improve QC in the major light microscopy techniques (Boehm et al., 2021; Nelson et al., 2021).

Lateral and axial resolution of the microscope

Background

One of the main missions of fluorescence microscopy is to visualize the spatial organization of cellular structures, mainly using fluorescent proteins and fluorescent dyes coupled to antibodies. The resolution of a microscope, i.e., its ability to distinguish two close objects, is of primary importance and is limited by diffraction. Images of single-point emitters (e.g., single fluorescent molecules in a sample) do not appear as infinitely small points on the detector of a conventional microscope. The emitted photons are scattered through their interaction with lenses, filters, and other optical components of the imaging system and with the sample itself, so the resulting image is blurred. The limited aperture of the microscope objective produces a spatial profile with a central spot and a series of concentric rings, known as the Airy diffraction pattern; this image of a point source is referred to as the point spread function (PSF; Juškaitis, 2006; Keller, 1995; Nasse et al., 2007; Zucker, 2014).

Ernst Abbe described this blur effect and defined the resolution limit as the minimal distance at which two closely spaced Airy disk patterns (from subdiffraction points) can still be distinguished (Abbe, 1882). In biology, molecules a few nanometers in size are typically organized in aggregates smaller than Abbe's limit, which is roughly 200 nm for a high numerical aperture (NA) objective. For QC measurements, it is convenient to work with a single point emitter and to measure and characterize its PSF (Inoué, 2006), because this gives a robust QC characterization of the objectives and samples for this kind of measurement are relatively easy to prepare.
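To put the "roughly 200 nm" figure in numbers, Abbe's lateral limit is commonly written as d = λ/(2 NA). The short Python sketch below evaluates it; the 520 nm emission wavelength and the NA values are illustrative assumptions, not measurements from this study.

```python
def abbe_limit_nm(wavelength_nm: float, na: float) -> float:
    """Abbe lateral resolution limit d = wavelength / (2 * NA), in nm."""
    if na <= 0:
        raise ValueError("NA must be positive")
    return wavelength_nm / (2.0 * na)


if __name__ == "__main__":
    # Illustrative values: GFP-like emission at 520 nm, dry to oil-immersion NAs.
    for na in (0.7, 1.0, 1.4):
        print(f"NA {na}: d = {abbe_limit_nm(520.0, na):.0f} nm")
```

With NA 1.4 this gives about 186 nm, consistent with the order of magnitude quoted above.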

Evaluating and monitoring the PSF of a microscope over time is the first key step in determining its performance stability, and it has been well studied in the past (Cole et al., 2011; Goodwin, 2013; Klemm et al., 2019; Zucker, 2014) using dedicated software tools (Hng and Dormann, 2013; Theer et al., 2014). The size, shape, and symmetry of the PSF, compared with the theoretical ideal resolution, characterize the entire optical setup, including the objective, and affect image quality and subsequent quantitative analysis, especially for advanced microscopy techniques. Here, we defined two metrics: the ratio of measured to theoretical PSF width and the lateral asymmetry ratio (LAR). We automated the PSF analysis of subresolution fluorescent beads with a tool that calculates both metrics directly, and we propose experimental tolerance values.

Materials and methods

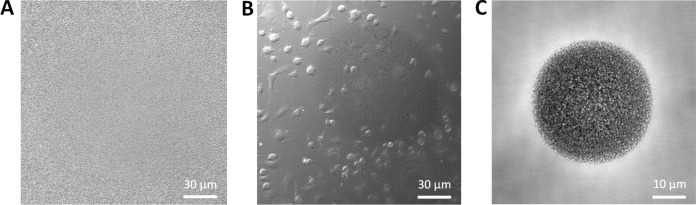

Sample

We used subresolution fluorescent beads for measuring the PSF of high NA objectives (Hiraoka et al., 1990). On the same slide, we also included larger beads (1 and 4 μm diameter) for easy detection of the focus plane and coregistration and repositioning measurements. The aim was to have one simple, low-cost slide containing different-sized beads that can serve for multiple types of measurements.

We used 175 nm diameter blue and green microspheres for the PSF measurements in the DAPI and GFP channels, respectively (PS-Speck beads, #7220; Thermo Fisher Scientific; 3 × 10⁹ beads/ml). Beads were vortexed to avoid aggregates and diluted in distilled water to a density of 10⁶ beads/ml. A 50 μl aliquot of the diluted solution was dried overnight at room temperature in the dark on an ethanol-cleaned, high-performance #1.5, 18 mm square coverslip (Carl Zeiss Microscopy GmbH). The target bead density on the slide was around 10 beads per 100 × 100 μm field. The coverslips were mounted on slides with 10 μl of ProLong Gold antifade mounting medium (#P36930; Thermo Fisher Scientific; refractive index [RI] 1.46). Because the beads were directly juxtaposed to the coverslip, any spherical aberration or distortion induced by a potential RI mismatch between the mounting medium and the lens immersion medium is minimized (Hell et al., 1993). Other configurations may be used to control the depth of the beads within the mounting medium and to monitor the effect of RI mismatch on image quality (reproducing the more realistic in-depth conditions of a typical biological sample). The slides were left for 3 d at room temperature in the dark to let the mounting medium fully cure and were then sealed with Picodent dental silicone (Picodent twinsil; picodent, Dental Produktions und Vertriebs GmbH). Ten bead slides were prepared in the same way and distributed to the microscopy facilities participating in this study.
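The dilution arithmetic for this protocol can be checked with a few lines of Python. The stock and target densities and the 50 µl aliquot come from the text; the 3 ml batch volume in the example is a hypothetical choice.

```python
STOCK_BEADS_PER_ML = 3e9    # PS-Speck stock density quoted in the text
TARGET_BEADS_PER_ML = 1e6   # working density quoted in the text
ALIQUOT_UL = 50.0           # volume dried on each coverslip


def dilution_factor(stock: float, target: float) -> float:
    """Fold dilution needed to go from the stock to the target density."""
    return stock / target


def stock_volume_ul(final_volume_ul: float, stock: float, target: float) -> float:
    """Stock volume to bring to final_volume_ul with distilled water (C1 V1 = C2 V2)."""
    return final_volume_ul * target / stock


def beads_per_aliquot(density_per_ml: float, volume_ul: float) -> float:
    """Number of beads deposited by one aliquot."""
    return density_per_ml * volume_ul / 1000.0


if __name__ == "__main__":
    print(f"dilution: {dilution_factor(STOCK_BEADS_PER_ML, TARGET_BEADS_PER_ML):.0f}x")
    # Hypothetical batch: 3 ml of diluted suspension.
    print(f"stock per 3 ml: {stock_volume_ul(3000.0, STOCK_BEADS_PER_ML, TARGET_BEADS_PER_ML):.1f} ul")
    print(f"beads per aliquot: {beads_per_aliquot(TARGET_BEADS_PER_ML, ALIQUOT_UL):.0f}")
```

A 3000-fold dilution means only about 1 µl of stock per 3 ml, so a two-step serial dilution may be easier to pipette accurately.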

To compare the effects of the bead size on PSF measurements, we also mounted green 100 nm beads (FluoSpheres, #F8803; Thermo Fisher Scientific) using the same preparation method as described above.

Acquisition protocol

Before any measurements, dry and immersion objective lenses must be cleaned carefully. We tested immersion objectives and some 20× dry objectives with NA > 0.7. For the blue channel, the beads were imaged on wide-field microscopes with the settings used for DAPI imaging (typically a 350/50 nm bandpass excitation filter, a 400 nm long-pass dichroic beamsplitter, and a 460/50 nm emission filter). On laser scanning confocal microscopes (LSCM), the beads were excited with a 405 nm laser line, and fluorescence was collected between 430 and 480 nm. For the green channel, the beads were imaged on wide-field microscopes with setups similar to those used for GFP imaging (typically 470/40 and 525/50 nm bandpass excitation and emission filters combined with a 495 nm long-pass beamsplitter); on LSCM, the beads were excited with a 488 nm laser, and fluorescence was collected between 500 and 550 nm.

The spatial sampling rate is crucial for accurate PSF measurements. At a minimum, the Shannon–Nyquist criterion should be fulfilled so that the PSF image is not degraded by insufficient spatial sampling. This criterion requires the pixel size to be at most one-half of the resolution of the optical system (Pawley, 2006a; Scriven et al., 2008; Shannon, 1949).

In our study, most of the collected images were slightly oversampled to provide a more precise fit. In some cases, the choice of an adapted pixel size was constrained, which affected the analysis. This was the case for lateral (xy) sampling with 20× dry objectives (NA > 0.7) coupled to cameras in classical wide-field setups lacking any additional magnification relay lens. Whereas the typical theoretical lateral resolution is around 350 nm for the GFP channel, the smallest physical camera pixel size in a wide-field setup is typically around 6.5 μm, corresponding to a pixel size of 325 nm in the image with a 20× objective. For the same reason, fulfilling the Shannon–Nyquist criterion with EMCCD (electron-multiplying charge-coupled device) cameras, which have a physical pixel size of 13–16 μm, was difficult in some configurations. For axial (z) sampling, the Shannon–Nyquist criterion was also applied, giving, for instance, step sizes of 0.15 and 0.2 µm for the highest NA objectives (1.4) for confocal and wide-field microscopy, respectively (Webb and Dorey, 1995).
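The sampling problem described above follows from dividing the physical camera pixel by the total magnification. The sketch below applies the Nyquist condition (pixel ≤ resolution/2) to the 20× example in the text; the function names are illustrative, and 350 nm is the typical resolution value quoted above.

```python
def image_pixel_size_nm(camera_pixel_um: float, total_magnification: float) -> float:
    """Camera pixel size projected into the sample plane, in nm."""
    return camera_pixel_um * 1000.0 / total_magnification


def meets_nyquist(pixel_nm: float, resolution_nm: float) -> bool:
    """Nyquist: the pixel must be at most half the optical resolution."""
    return pixel_nm <= resolution_nm / 2.0


def min_magnification_for_nyquist(camera_pixel_um: float, resolution_nm: float) -> float:
    """Smallest total magnification that brings the pixel down to resolution / 2."""
    return camera_pixel_um * 1000.0 / (resolution_nm / 2.0)


if __name__ == "__main__":
    resolution = 350.0  # typical lateral resolution, GFP channel, 20x dry lens (from the text)
    for camera_um in (6.5, 13.0, 16.0):  # sCMOS and EMCCD pixel sizes mentioned in the text
        px = image_pixel_size_nm(camera_um, 20.0)
        need = min_magnification_for_nyquist(camera_um, resolution)
        print(f"{camera_um} um at 20x -> {px:.0f} nm; Nyquist met: "
              f"{meets_nyquist(px, resolution)}; needs >= {need:.0f}x")
```

For a 6.5 µm pixel, the required total magnification comes out at roughly 37×, which is why a 20× objective without a relay lens undersamples.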

For LSCM imaging, the pinhole was set to 1 Airy unit (A.U.), the setting at which the pinhole diameter matches that of the central Airy disk of the diffraction pattern, to assess the standard performance of a confocal microscope; 1 A.U. is a good compromise between signal intensity and resolution (Cox and Sheppard, 2004). In the case of a degraded PSF (e.g., not straight or not symmetric along the z axis), the pinhole was opened wide to record all of the aberrations. Pinhole alignment is necessary before or after the tests. Moreover, because noise affects the PSF measurement, a high signal-to-noise ratio is essential for the fit and for a precise calculation of the full width at half maximum (FWHM), i.e., the width of a line shape at half of its maximum amplitude. For a Gaussian line shape (which we assume for the PSF profile), the FWHM is about 2.355 SDs. We found that single-color beads provide a higher signal than multicolor ones and should preferably be used. A tutorial video summarizing this protocol and the parameters that influence PSF acquisition was produced by the GT3M working group of the RTmfm French microscopy network (https://youtu.be/ll4X_e8_mo8).
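Two conversions recur in this protocol: the Gaussian FWHM–SD relation (the exact factor is 2√(2 ln 2) ≈ 2.355) and the Airy unit, whose diameter in the sample plane is 1.22 λ/NA. The sketch below encodes both; the `magnification` argument for converting to the pinhole plane is an assumption, because that magnification depends on the scan-head optics of each instrument.

```python
import math

# Exact Gaussian conversion factor: FWHM = 2 * sqrt(2 ln 2) * sigma (about 2.355)
FWHM_PER_SIGMA = 2.0 * math.sqrt(2.0 * math.log(2.0))


def fwhm_from_sigma(sigma: float) -> float:
    return FWHM_PER_SIGMA * sigma


def sigma_from_fwhm(fwhm: float) -> float:
    return fwhm / FWHM_PER_SIGMA


def airy_unit_um(wavelength_nm: float, na: float, magnification: float = 1.0) -> float:
    """Diameter of the central Airy disk, 1 A.U. = 1.22 * wavelength / NA, in um.

    magnification = 1 gives the value in the sample plane; the magnification to the
    pinhole plane is instrument-specific (assumption: take it from the manufacturer).
    """
    return 1.22 * wavelength_nm / na * magnification / 1000.0


if __name__ == "__main__":
    print(f"FWHM/sigma = {FWHM_PER_SIGMA:.4f}")
    print(f"1 A.U. at 520 nm, NA 1.4: {airy_unit_um(520.0, 1.4):.3f} um (sample plane)")
```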

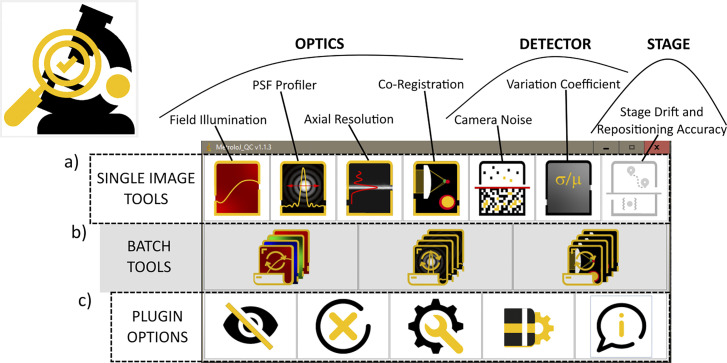

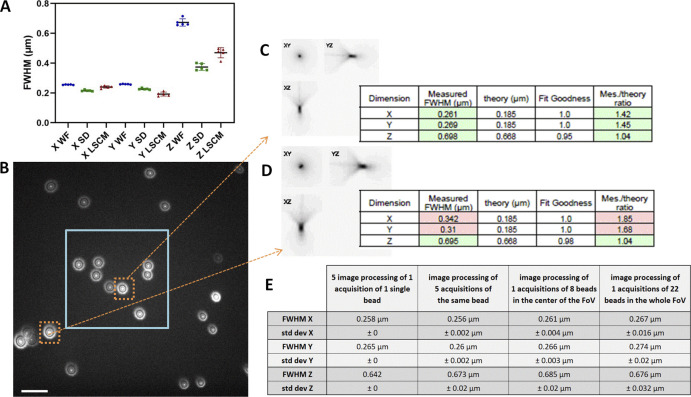

We first investigated whether the proposed procedure was robust by examining three sources of variability (Fig. S1): (1) image processing: five repetitions of the processing of one z-stack of a single bead with our plugin gave strictly identical results; (2) image acquisition: for five acquisitions (five different time points) of the same bead, we studied the variability of the measured FWHM and found, for the wide-field (WF) case, x = 0.256 ± 0.002 µm, y = 0.26 ± 0.002 µm, and z = 0.673 ± 0.02 µm; and (3) the field of view containing several beads (Fig. S1 B): for the beads in the central area of the image (the area defined in our protocol as containing the beads taken into account for the FWHM calculations), we found x = 0.261 ± 0.004 µm, y = 0.266 ± 0.003 µm, and z = 0.685 ± 0.02 µm, whereas for the entire field of view we found x = 0.267 ± 0.016 µm, y = 0.274 ± 0.02 µm, and z = 0.676 ± 0.032 µm. From these results, we concluded that image processing is repeatable and robust. Repeated acquisitions varied by <1% in xy and <3% in z. Several beads from the central part of the field of view varied by <2% in xy and <3% in z, and over the whole field of view the variability increased to 8% in xy and 5% in z. Thus, a small variability exists among acquisitions, and it is higher along the z axis. When the whole field of view was taken into account, spatial aberrations, which are strongly linked to the objective type and quality, caused higher variability.
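The percentages quoted above can be read as coefficients of variation (SD/mean). The sketch below recomputes them from the reported means and SDs, assuming that is how they were derived; the data dictionary is copied from the text.

```python
# (mean, SD) in micrometres, copied from the repeatability results in the text
RESULTS = {
    "acquisition": {"x": (0.256, 0.002), "y": (0.260, 0.002), "z": (0.673, 0.020)},
    "central FOV": {"x": (0.261, 0.004), "y": (0.266, 0.003), "z": (0.685, 0.020)},
    "whole FOV": {"x": (0.267, 0.016), "y": (0.274, 0.020), "z": (0.676, 0.032)},
}


def cv_percent(mean: float, sd: float) -> float:
    """Coefficient of variation (SD / mean) expressed in percent."""
    return 100.0 * sd / mean


if __name__ == "__main__":
    for condition, axes in RESULTS.items():
        cvs = ", ".join(f"{axis}: {cv_percent(m, s):.1f}%" for axis, (m, s) in axes.items())
        print(f"{condition:12s} {cvs}")
```

The recomputed values land near the rounded percentages in the text; small differences, such as about 7% versus 8% for y over the whole FOV, may reflect rounding of the reported SDs.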

Figure S1.

PSF variability with image processing, acquisition repeatability, and FOV. (A) PSF repeatability for wide-field (WF), SDCM, and LSCM along the x, y, and z axes. PSFs were acquired five consecutive times. Error bars represent SD. (B) One z plane of a 175 nm bead sample, slightly out of focus to reveal the symmetry of the PSF pattern. Beads near the corners of the FOV show significant asymmetry. Image taken on a WF-TIRF microscope (TiE, Nikon) in zero-angle mode with the 488 nm laser, a 100×/1.49 objective, and an additional 1.5× lens to satisfy the Nyquist criterion (final pixel size, 73 nm), with a Prime95B Photometrics camera (FOV 87.6 × 87.6 μm). Scale bar, 2 μm. Blue square: the central area of the FOV taken into account for the PSF calculations for this objective. (C and D) PSF profiles along xy, yz, and xz as extracted by the MetroloJ_QC plugin (square root of intensity) for the beads in the dashed orange squares in B. Although the FWHM along the z axis is similar, the xy FWHM differs significantly and is higher for beads far from the center of the image. (E) Calculated FWHM with SD for the three repeatability studies (processing, acquisition, FOV).

Important considerations when acquiring and analyzing PSF images are the signal-to-background ratio (SBR) and the signal-to-noise ratio (SNR). The SBR was calculated directly by the MetroloJ_QC plugin from the segmented bead mean intensity (signal) and the mean value of a 1-μm-thick background annulus around the segmented bead (background). The SNR was calculated as the square root of the maximum pixel intensity normalized to a 12-bit dynamic range, bearing in mind that a pixel's intensity value is only proportional to the number of photons (Stelzer, 1998). We observed that when the SBR or SNR is low, the precision of the FWHM calculation is low (Fig. S2, A and B). Paying attention to the background is therefore highly recommended, as shown by Stelzer (1998).
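The SBR definition above can be sketched in NumPy. This is not the MetroloJ_QC code: the bead is represented by a disk of known radius instead of the plugin's segmentation, the example works on a single 2D plane, and the 12-bit normalization of the SNR (rescaling the maximum by 4095/(2^bits − 1)) is our reading of the text, labeled as an assumption. The helper names are hypothetical.

```python
import numpy as np


def disk_and_annulus_masks(shape, center, bead_radius_px, annulus_px):
    """Boolean masks for a disk (the bead) and the annulus of width annulus_px around it."""
    yy, xx = np.indices(shape)
    r = np.hypot(yy - center[0], xx - center[1])
    bead = r <= bead_radius_px
    annulus = (r > bead_radius_px) & (r <= bead_radius_px + annulus_px)
    return bead, annulus


def signal_to_background(image, center, bead_radius_px, pixel_size_um, annulus_um=1.0):
    """SBR = mean intensity inside the bead / mean intensity in a 1-um-wide annulus."""
    bead, annulus = disk_and_annulus_masks(image.shape, center, bead_radius_px,
                                           annulus_um / pixel_size_um)
    return float(image[bead].mean() / image[annulus].mean())


def snr_12bit(image, bit_depth):
    """Assumed reading of the text: sqrt of the maximum rescaled to a 0-4095 range."""
    max_12bit = image.max() * 4095.0 / (2 ** bit_depth - 1)
    return float(np.sqrt(max_12bit))


if __name__ == "__main__":
    img = np.full((64, 64), 100.0)            # flat background of 100 counts
    bead, _ = disk_and_annulus_masks(img.shape, (32, 32), 3, 0)
    img[bead] = 500.0                          # idealized uniform bead, 3 px radius
    print(signal_to_background(img, (32, 32), 3, pixel_size_um=0.1))
    print(snr_12bit(img, bit_depth=16))
```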

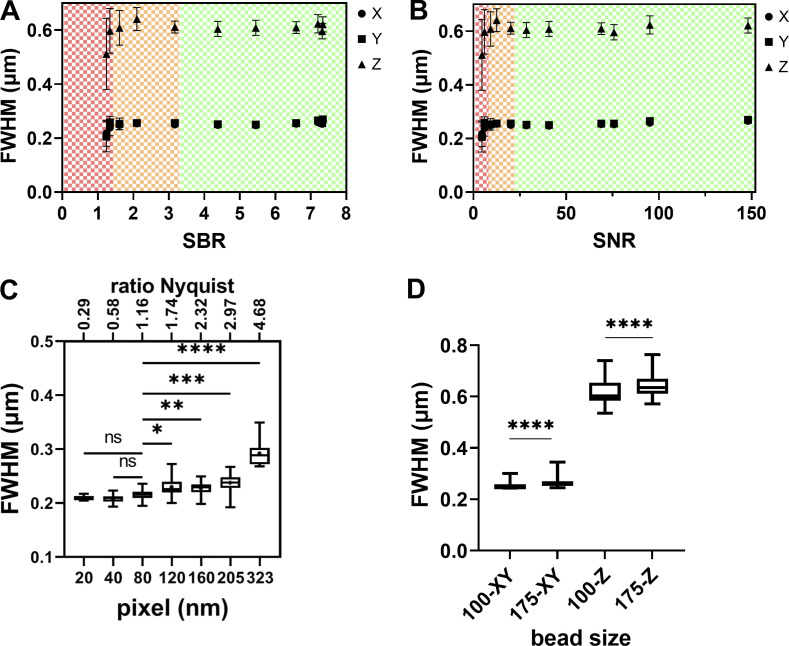

Figure S2.

PSF variability with SBR, SNR, pixel size for LSCM, and bead size. (A and B) Effect of SBR and SNR (12-bit dynamic range) on the precision of FWHM estimation for a wide-field microscope. The same three FOVs of 29 one-color 175-nm beads were acquired with different exposures and excitation intensities on an upright Carl Zeiss Microscopy WF microscope (GFP channel, 63×/1.4 objective). SBR is estimated from the segmented bead mean intensity (signal) and the mean value of a 1-μm-thick background annulus around the segmented bead (background), as calculated by the MetroloJ_QC plugin. As SBR increases, the FWHM estimation accuracy improves; for very low SBR, the FWHM values decrease. We distinguish three tolerance zones: the green zone presents the lowest errors and the best fit (R² equal to 1), the orange zone presents a higher error and a worse fit (0.93 < R² < 0.99), and the red zone presents both high error and a bad fit (R² < 0.9). (C) Dependence of the lateral FWHM on the sampling rate on an LSCM (LSM880, Carl Zeiss Microscopy GmbH) with the pinhole at 1 A.U. The normality of the distributions for each condition was first confirmed with the Shapiro–Wilk test. Statistical significance was determined using Dunnett's multiple comparison test, with the 80 nm setting as the control. ns, not significant; *, P < 0.05; **, P < 0.005; ***, P < 0.0005; ****, P < 0.0001. The number of data points per condition ranged from 8 to 22. (D) PSF dependence on microsphere size for WF and LSCM microscopes (WF as in A and B, LSCM as in C). WF-100 and WF-175 denote WF PSFs for 100 and 175 nm beads, respectively. The Shapiro–Wilk test showed non-normal distributions (P < 0.0001). Statistical significance was determined with a Kolmogorov–Smirnov test for each pair (100 vs. 175 nm beads) and each condition; the P value for each pair was <0.0001. There were 87 and 156 data points for the 100 and 175 nm beads, respectively. All measurements in C and D were carried out with a 63×/1.4 lens in the GFP channel (525 nm emission).

Another important consideration for PSF evaluation is the sampling density (i.e., pixel size). Images must be sampled at least twice as finely as the resolution (Shannon–Nyquist criterion) in the xy and z dimensions to obtain the most accurate Gaussian fit of the PSF; we consider that the PSF can be adequately fitted by a Gaussian function (Zhang et al., 2007). To determine the adequate sampling rate experimentally, high-SBR PSF acquisitions (SBR > 3 as measured by the MetroloJ_QC plugin) were performed with different voxel sizes. We recorded the images on a point-scanning confocal setup, because its pixel size can be easily adjusted; in a wide-field setup, the physical camera pixel size is fixed and the options (camera binning or a magnification relay lens) are limited. The Shannon–Nyquist criterion was considered met whenever the pixel size was equal to or lower than λex/(8 NA) (Sheppard, 1986; Wilson and Tan, 1993). With a 1.4 NA lens and an excitation wavelength of 488 nm, the criterion value is 43 nm. This applies to a nearly closed pinhole (about 0.25 A.U.). For a 1 A.U. pinhole, the Nyquist criterion allows a 1.6× larger pixel size (https://svi.nl/NyquistRate; Piston, 1998). With a pinhole of 1 A.U., we observed that the pixel size affects the lateral FWHM values when it exceeds a threshold of 80 nm, i.e., 1.16× the Nyquist criterion value (Fig. S2 C). This effect was statistically significant (P < 0.05) in Dunnett's multiple comparison test with the 80 nm pixel size as the control.
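The confocal sampling numbers quoted above can be reproduced as follows. The 1.6 factor for a 1 A.U. pinhole is taken from the text rather than derived here, and the function name is illustrative.

```python
NYQUIST_1AU_FACTOR = 1.6  # relaxation for a 1 A.U. pinhole, as quoted in the text


def confocal_nyquist_nm(excitation_nm: float, na: float) -> float:
    """Lateral Nyquist pixel size, excitation / (8 * NA), for a nearly closed pinhole."""
    return excitation_nm / (8.0 * na)


if __name__ == "__main__":
    closed = confocal_nyquist_nm(488.0, 1.4)
    one_au = NYQUIST_1AU_FACTOR * closed
    print(f"closed pinhole: {closed:.1f} nm; 1 A.U.: {one_au:.1f} nm")
    print(f"80 nm threshold = {80.0 / one_au:.2f} x the 1 A.U. value")
```

Without intermediate rounding, the 1 A.U. value is about 69.7 nm and the 80 nm threshold corresponds to roughly 1.15×; the 1.16× in the text follows from rounding the criterion to 43 nm first.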

The fourth consideration for accurate PSF measurements is the choice of fluorescent microspheres, whose size and brightness can alter the FWHM calculation. The brightest beads should be used, which is why we recommend single-labeled beads, as described earlier. Ideally, the bead diameter should be below the resolution limit of the objective. Fig. S2 D shows measurements carried out with a WF setup using a 1.4 NA objective. FWHM values obtained with 100 and 175 nm diameter beads were significantly different (P < 0.0001, Kolmogorov–Smirnov test, for each size pair in both the lateral and the axial direction). We conclude that 100 nm beads are recommended for the most accurate PSF measurements. However, brighter beads are needed for PSF monitoring over time; they are also more convenient when a wide magnification range of objectives is examined with the same slide. With 100 nm microspheres, which are dimmer than the 175 nm beads, detecting the beads with low-magnification/NA lenses and obtaining a correct FWHM estimate may prove quite challenging. For these reasons, we recommend single-color 175-nm-diameter beads for reliable PSF monitoring. A mix of different bead colors may be used to speed up image acquisition and to measure the FWHM at different wavelengths in a single z-stack.

Metrics

We calculated the experimental PSF values using the MetroloJ_QC plugin in Fiji (see Fig. S3 for the workflow). The plugin calculates the FWHM along the x, y, and z axes of all beads in the field of view (FOV), applying a Gaussian fit along each of the three axes. Before analyzing the images with the plugin, we visually inspected the acquisitions. Some images had to be cropped to remove saturated beads; alternatively, the MetroloJ_QC plugin can automatically discard saturated beads. Only beads close to the center of the FOV (30% of the full chip of an sCMOS sensor, zoom of 6 for confocal) were analyzed to avoid aberrations (Hell and Stelzer, 1995). The experimental data in Fig. S1 confirm that aberrations and the deviation from the theoretical FWHM increase toward the corners of the FOV, especially for high NA objectives. At least five beads were analyzed per objective.

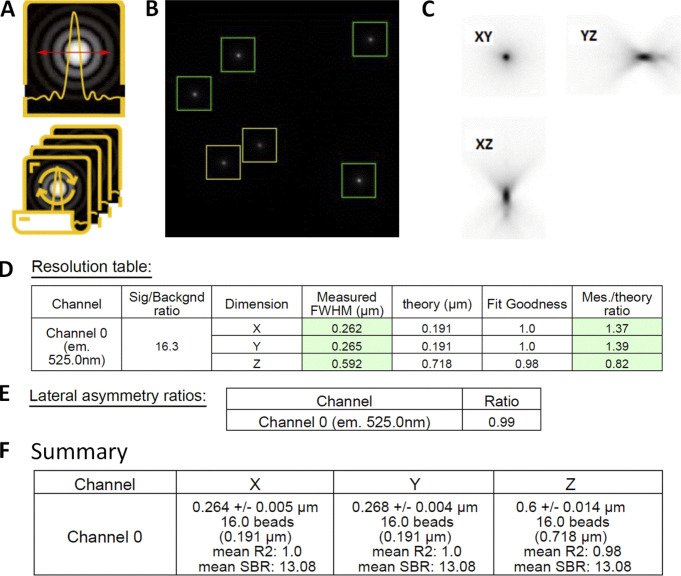

Figure S3.

PSF analysis workflow with MetroloJ_QC. (A) PSF or PSF batch icon. (B) A “beadOverlay” image is generated using the user-declared parameters (ROI size, prominence value). Beads taken into account for the FWHM calculation are shown in green squares; yellow squares indicate beads that are too close and are therefore excluded. (C) Square root PSF image of one bead, which helps visualize the PSF and detect aberrations. (D) Resolution table: the measured FWHM along x, y, and z of the bead in C. Theoretical values are provided, along with the calculated fit goodness (R²) and the ratio of measured to theoretical FWHM. Values within specifications are highlighted in green; values outside specifications are highlighted in red. (E) xy asymmetry is monitored by the LAR. (F) Summary table for the multibead acquisition presenting the mean FWHM with SD for the three axes, the number of beads taken into account, the mean R², and the mean SBR.

The experimental FWHM was compared with the expected theoretical FWHM.

(1) Wide-field:

(2) Scanning confocal (pinhole ≥1 A.U., NA > 0.5):

(3) Spinning disk confocal:

where λem is the emission wavelength, λex is the excitation wavelength, and n is the refractive index of the immersion liquid. These resolution formulas give the FWHM of the PSF, not the distance from the maximum to the first minimum of the PSF intensity profile (Amos et al., 2012; Wilhelm, 2011). For spinning disk confocal microscopy (SDCM), we consider that the pinhole size is smaller than 1 A.U. (which is the case for circular pinholes of about 50 μm; Toomre and Pawley, 2006).
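Computing a theoretical value and the measured/theoretical ratio is straightforward. The Python sketch below covers only the wide-field case and assumes the commonly used approximations FWHM_lateral = 0.51 λem/NA and FWHM_axial = 0.88 λem/(n − √(n² − NA²)); these are our assumption and may differ in detail from the expressions used by MetroloJ_QC. The ratio is compared with the 1.5 tolerance proposed in the Results. Function names and example values (520 nm emission, 1.4 NA, n = 1.518) are illustrative.

```python
import math


def theoretical_fwhm_widefield(em_wavelength_nm, na, n):
    """Return (lateral, axial) theoretical FWHM in nm for wide-field imaging,
    using the assumed approximations
        lateral = 0.51 * lambda_em / NA
        axial   = 0.88 * lambda_em / (n - sqrt(n^2 - NA^2))."""
    if not 0 < na < n:
        raise ValueError("NA must be positive and smaller than n")
    lateral = 0.51 * em_wavelength_nm / na
    axial = 0.88 * em_wavelength_nm / (n - math.sqrt(n ** 2 - na ** 2))
    return lateral, axial


def fwhm_ratio(measured_nm, theoretical_nm):
    """Ratio of measured to theoretical FWHM (1.0 = ideal)."""
    return measured_nm / theoretical_nm


def within_tolerance(ratio, tolerance=1.5):
    """True if the ratio is at or below the tolerance value."""
    return ratio <= tolerance


lateral, axial = theoretical_fwhm_widefield(520, 1.4, 1.518)
print(round(lateral, 1), round(axial, 1))          # 189.4 491.4
print(within_tolerance(fwhm_ratio(250, lateral)))  # True (ratio about 1.32)
```

Keeping the ratio and the tolerance check separate makes it easy to apply a different tolerance per axis if needed.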

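The LAR is also calculated and is defined as

$$\mathrm{LAR} = \frac{\min(\mathrm{FWHM}_x, \mathrm{FWHM}_y)}{\max(\mathrm{FWHM}_x, \mathrm{FWHM}_y)}$$

where FWHM_x and FWHM_y are the experimental lateral FWHM values of an individual bead. A value of 1 corresponds to a laterally symmetric PSF.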
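For a per-bead check, the LAR can be computed directly from the two lateral FWHM values, following the definition given in the Results (minimum over maximum of the x and y FWHM). A minimal Python sketch; the function names (which assume LAR stands for lateral asymmetry ratio) and the example values are hypothetical.

```python
def lateral_asymmetry_ratio(fwhm_x, fwhm_y):
    """min/max of the lateral FWHM values of one bead; 1.0 = symmetric PSF."""
    if fwhm_x <= 0 or fwhm_y <= 0:
        raise ValueError("FWHM values must be positive")
    return min(fwhm_x, fwhm_y) / max(fwhm_x, fwhm_y)


def mean_lar(beads):
    """Mean LAR over a list of (fwhm_x, fwhm_y) pairs."""
    ratios = [lateral_asymmetry_ratio(fx, fy) for fx, fy in beads]
    return sum(ratios) / len(ratios)


# Two hypothetical beads (FWHM in nm): one elongated along y, one symmetric.
print(lateral_asymmetry_ratio(200, 250))   # 0.8
print(mean_lar([(200, 250), (220, 220)]))  # about 0.9
```

Because the ratio is min/max, the LAR always lies in (0, 1] and does not depend on which lateral axis is elongated.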

Analysis

The MetroloJ_QC plugin works as follows. First, the xy coordinates of the beads are identified using a find maxima algorithm on a maximum intensity projection of the stack. Beads too close either to the image edge or to another bead are discarded from the analysis (the user can modify this distance in the plugin). Next, the FWHM is measured: the maximum intensity pixel within the entire 3D dataset is located, and an intensity profile along a straight line through this pixel is extracted in each dimension. Finally, these bell-shaped profiles are fitted to a Gaussian function using the built-in ImageJ curve fitting algorithm to determine the FWHM. Once the FWHM has been measured on several beads or datasets, mean values and SDs are calculated for all three dimensions. A batch mode enables the automation of the analysis. If the algorithm detects spots that do not originate from beads, they can be removed computationally using the goodness of fit (R²) of each FWHM measurement; we recommend keeping only fits with R² > 0.95. Further inspection of each PSF, using the computed bead signal-to-background ratio, helps remove aberrant values. Additional parameters are also measured, such as lateral symmetry or whether the acquisition meets the Shannon–Nyquist criterion.
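The FWHM step for a single line profile can be sketched in Python with NumPy. This is not the plugin's code: MetroloJ_QC relies on ImageJ's iterative Gaussian fit, whereas this sketch swaps in a simpler, non-iterative log-parabola (Caruana-style) fit of the background-subtracted profile. The background estimate (profile minimum) and the 20% inclusion cutoff are our assumptions. R² is computed against the full profile so that the 0.95 threshold recommended above can be applied.

```python
import numpy as np


def gaussian(x, offset, amplitude, center, sigma):
    """Offset plus Gaussian (assumed model for a bead intensity profile)."""
    return offset + amplitude * np.exp(-((x - center) ** 2) / (2.0 * sigma ** 2))


def fit_fwhm(positions, profile, min_fraction=0.2):
    """Estimate FWHM and R^2 of a 1D intensity profile.

    After subtracting a background estimate, ln(I) of a Gaussian is a
    parabola in x, so a quadratic polynomial fit gives sigma and center.
    """
    x = np.asarray(positions, dtype=float)
    y = np.asarray(profile, dtype=float)
    offset = y.min()                               # crude background estimate
    signal = y - offset
    keep = signal > min_fraction * signal.max()    # ignore noisy tails
    if keep.sum() < 3:
        raise ValueError("too few points above background to fit")
    a, b, c = np.polyfit(x[keep], np.log(signal[keep]), 2)
    if a >= 0:
        raise ValueError("profile is not peaked")
    sigma = np.sqrt(-1.0 / (2.0 * a))
    center = -b / (2.0 * a)
    amplitude = np.exp(c - b ** 2 / (4.0 * a))
    fitted = gaussian(x, offset, amplitude, center, sigma)
    r2 = 1.0 - np.sum((y - fitted) ** 2) / np.sum((y - y.mean()) ** 2)
    fwhm = 2.0 * np.sqrt(2.0 * np.log(2.0)) * sigma  # about 2.3548 * sigma
    return float(fwhm), float(r2)


# Synthetic profile: sigma = 100 nm, 10 nm sampling, background of 10 counts.
x = np.arange(-1000, 1001, 10)
profile = gaussian(x, 10.0, 1000.0, 0.0, 100.0)
fwhm, r2 = fit_fwhm(x, profile)
keep_bead = r2 > 0.95              # R^2 threshold recommended in the text
print(round(fwhm, 1), keep_bead)   # 235.5 True
```

On noisy real data an iterative least-squares fit (as in ImageJ) is more robust; the log-parabola version is shown only because it makes the sigma-to-FWHM relation explicit.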

Biological imaging

To illustrate the influence of the PSF on biological imaging, we imaged an LLC-PK1 (pig kidney) cell stained for nuclei (DAPI), pericentrin (polyclonal primary antibody ab4448 detected with the Alexa Fluor 488 secondary antibody A11034; Invitrogen), and γ-tubulin (monoclonal primary antibody, clone GTU-88; Sigma-Aldrich, detected with the Alexa Fluor 568 secondary antibody A11031; Invitrogen). The microscope was an SDCM (Dragonfly, Andor–Oxford Instruments), and images were taken with a Plan-Apo 100×/1.45 Nikon objective and an EMCCD iXon888 camera (Andor–Oxford Instruments).

Statistical analysis

The figures and statistical analysis were prepared using GraphPad Prism software. For the LAR metric, significance was tested across the WF, SDCM, and LSCM modalities. First, normality was tested using the Shapiro–Wilk test, which gave P values of <0.0001, 0.104, and 0.0813 for the WF, SDCM, and LSCM modalities, respectively, indicating that the WF distribution was not normal. Therefore, we used a Kruskal–Wallis test, which determines whether the median values of the modalities differ. The test gave an H value of 42.7 (n = 141 values) and a P value <0.0001 (****), indicating a significant difference in the LAR metric among the three modalities.
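The analysis here was done in GraphPad Prism; the pure-Python sketch below only shows what the Kruskal–Wallis H statistic computes (rank all values jointly, compare the groups' rank sums, apply the standard tie correction). In practice, `scipy.stats.kruskal(*samples)` returns the same statistic with a P value. For three groups, H is approximately χ²-distributed with 2 degrees of freedom, whose tail probability is exp(−H/2); for the reported H = 42.7 this gives about 5 × 10⁻¹⁰, consistent with P < 0.0001. Function names are ours.

```python
import math
from collections import Counter


def average_ranks(values):
    """1-based ranks; tied values receive the mean of the ranks they span."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2.0 + 1.0
        i = j + 1
    return ranks


def kruskal_wallis_h(*groups):
    """Kruskal-Wallis H statistic with the standard correction for ties."""
    pooled = [v for g in groups for v in g]
    n = len(pooled)
    ranks = average_ranks(pooled)
    total, start = 0.0, 0
    for g in groups:
        rank_sum = sum(ranks[start:start + len(g)])
        total += rank_sum ** 2 / len(g)
        start += len(g)
    h = 12.0 / (n * (n + 1)) * total - 3.0 * (n + 1)
    ties = sum(t ** 3 - t for t in Counter(pooled).values())
    if ties == n ** 3 - n:
        raise ValueError("all values are identical")
    return h / (1.0 - ties / (n ** 3 - n))


def p_value_three_groups(h):
    """Chi-square tail probability for 2 degrees of freedom: exp(-H/2)."""
    return math.exp(-h / 2.0)


print(kruskal_wallis_h([1, 2, 3], [4, 5, 6], [7, 8, 9]))  # about 7.2
print(p_value_three_groups(42.7) < 1e-4)                  # True
```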

Results

In total, we collected data from 117 objectives from WF, SDCM, and LSCM microscopy modalities. The technique, the setup, and the objective quality determine the PSF shape (Fig. 3 A). The ratios of experimental to theoretical lateral and axial PSF FWHM were better for the GFP channel than for the DAPI channel (Fig. 3 B). For the LSCM modality, the mean measured lateral FWHM stayed close to the theoretical value with remarkably low dispersion (Fig. 3 C), whereas the axial values were widely dispersed. Many cases of PSF elongation along the z axis are due to spherical aberrations, especially when the PSF is acquired with water immersion objectives (black-capped arrow in Fig. 3 B) or with low magnification and NA (25×/0.8) air objectives (black arrows in Fig. 3 B), as Cole et al. (2011) have shown experimentally and Hell et al. (1993) theoretically. The WF and SDCM FWHM measurements show similar behavior: relative to the theoretical values, the measured lateral FWHM values deviate more than the axial ones. Of note, because the sampling criterion was met in z, the observed axial FWHM ratios are closer to the theoretical value. For instance, a WF case far from the theoretical value, shown with a blue arrow followed by “iii” in Fig. 3 B (corresponding to the “iii” PSF profile of Fig. 3 A), concerns a 40×/1.3 Plan-Apo objective lens and an acquisition with a pixel size of 183 nm. We measured a ratio of 2.33 for xy and 1.53 for z. Coma aberrations or astigmatism can also be present, although their influence on the measured xy FWHM remains low. A typical PSF showing coma aberration is indicated by the blue arrow followed by “ii” in Fig. 3 B (“ii” PSF in Fig. 3 A), with an xy ratio of 1.65 and a z ratio of 1.31. Note that we avoided measuring PSFs with objectives in wavelength ranges for which they are not designed to be corrected. This is the case, in particular, for Plan Fluor or NeoFluar objectives in the DAPI channel. For instance, a 40×/1.3 NeoFluar objective gave ratios of 3.3 and 2.1 for xy and z, respectively. These values are not shown in Fig. 3 B, as we considered that these objectives are not designed to give acceptable performance in these wavelength ranges.

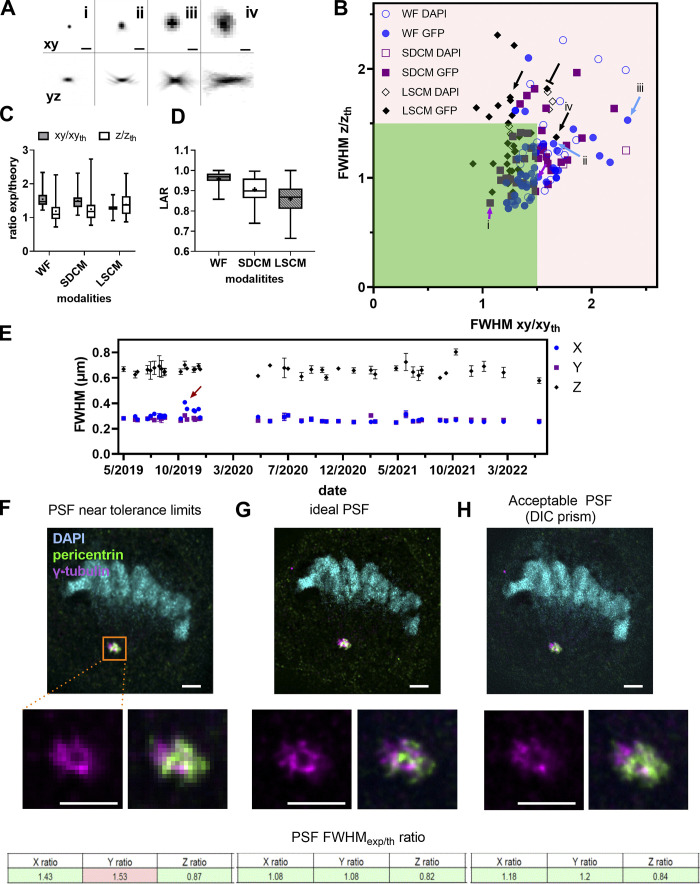

Figure 3.

PSF distribution and influence on image quality of biological structures. (A) Square root PSF along xy and yz of four cases (i–iv) shown in B. Scale bar is 1 μm. (B) Ratio of experimental to theoretical lateral and axial PSF FWHM values for WF, SDCM, and LSCM techniques for the DAPI and GFP channels. The red arrows show PSFs at the limit values, the blue arrows show PSFs of medium quality, the black-capped arrow shows a 40× water immersion objective with a PSF elongated along the z axis, and the black arrows show PSFs from dry 20× objectives. (C) Statistical analysis of B with median (horizontal line), mean (dot), SD (box), and min/max values (whiskers). (D) Box plots summarizing the distribution of the LARs for the PSFs shown in B. Statistical significance was determined using the Kruskal–Wallis test (P < 0.0001 [****]). For C and D, the numbers of independent data points were 61, 43, and 37 for WF, SDCM, and LSCM, respectively. (E) Stability of the PSF over 32 mo for an upright WF microscope. The red arrow shows when the PSF is significantly different along the x axis. The gap in the dates of January 2020 corresponds to the COVID-19 lockdown, when no experiments could be carried out. Error bars represent the SD. At least five PSFs were analyzed per date. (F–H) The degradation of image quality on a biological sample depends on PSF quality. The biological sample is a dividing cell (anaphase). The cell nucleus is labeled with DAPI (cyan), the centrosomal protein pericentrin with Alexa Fluor 488 (green), and γ-tubulin with Alexa Fluor 568 (magenta). The images were acquired with an SDCM and a Plan-Apo 100×/1.45 objective. For each PSF case, we show the three-color acquisition of the cell, a zoom of the centrosome region for one color and for a two-color overlay, and the PSF summary results of the mean FWHMexp/th for the three axes. Scale bar is 2 μm.
(F) Imaging with a PSF FWHM ratio along the y axis that is out of the tolerance values due to undersampling (large pixel size). (G) Imaging with an ideal PSF (additional lenses provide a 3× magnification to meet the Nyquist criterion). (H) Imaging with an acceptable PSF: the added DIC prism introduces coma aberrations.

From the experimental results shown in Fig. 3 B, we propose to set the tolerance value at a ratio of 1.5 between measured and theoretical FWHM. Overall, 66% of the lateral and 82% of the axial FWHM measurements fall inside the dark green square delimited by this 1.5 ratio.

We studied the symmetry of the PSF by calculating the mean values of the LAR, defined as the ratio between the minimum and maximum experimental x and y FWHM of individual beads (Fig. 3 D). The mean LAR was lower for LSCM than for the other two techniques (LSCM: 0.85, SDCM: 0.92, WF: 0.96), and this difference was statistically significant (P < 0.0001).

We also measured the stability of objective performance by analyzing PSFs acquired over 3 yr on the same microscope, with the same objective and sample, and by the same operator (Fig. 3 E). Variations of lateral and axial FWHM were small (<5%). In October 2019 (Fig. 3 E, red arrow), 5 d after a scheduled maintenance visit by the manufacturer, measurements showed a 10% increase in the x-axis FWHM, while no significant change was observed for the y-axis FWHM. We recovered the symmetry after removing and reinstalling the camera, taking special care to avoid any tilt between the camera, the camera adaptor, and the microscope body (measurements from December 2019 onward).
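Long-term monitoring of this kind amounts to comparing each new measurement with a baseline. The Python sketch below flags an axis when its FWHM departs from the baseline mean by more than a chosen percentage. The 5% threshold is a hypothetical choice inspired by the <5% variations reported above, and the function names and numbers are illustrative, not data from this study.

```python
def percent_change(baseline_values, current_value):
    """Signed change (%) of a value relative to the mean of a baseline series."""
    baseline = sum(baseline_values) / len(baseline_values)
    return 100.0 * (current_value - baseline) / baseline


def flag_axes(baseline, current, threshold_percent=5.0):
    """Per-axis flag: True if |change| exceeds the (hypothetical) threshold."""
    return {axis: abs(percent_change(baseline[axis], current[axis])) > threshold_percent
            for axis in current}


# Hypothetical FWHM history (nm) for one objective and a new measurement.
baseline = {"x": [200, 202, 198], "y": [205, 205, 205], "z": [600, 590, 610]}
current = {"x": 220, "y": 207, "z": 612}
print(flag_axes(baseline, current))  # {'x': True, 'y': False, 'z': False}
```

Flagging each axis separately matters here: a tilt such as the one described above shows up on one lateral axis only, and would be diluted in an xy average.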

Finally, we showed that the effect of a degraded PSF on biological imaging depends on the size of the studied structure relative to the resolution. To this end, we imaged the centrosome and cellular DNA in a cell undergoing mitosis (Fig. 3, F–H). For larger structures, such as the nuclei in this example, PSF quality is less critical. For near-diffraction-limited imaging and colocalization analysis, as for the centrosomal proteins γ-tubulin and pericentrin, PSF quality is of great importance, as shown in the zoomed images of the centrosome (Fig. 3, F–H). In this case, undersampling (Fig. 3 F) or a DIC prism in the light path (Fig. 3 H) degraded both the PSF and the biological image, highlighting the importance of optimal PSF settings for studying diffraction-limited objects.

Discussion

Results showed high variability in the PSF measurements, due to the wide range of quality among the objectives used. Variability is also introduced by users, whose ability to follow a strict procedure may vary. Besides objective-induced aberrations such as coma, astigmatism, or distortion (Sanderson, 2019; Thorsen et al., 2018), special care should be taken to follow the same acquisition protocols, use the same slides, and avoid errors such as leaving a DIC prism in the optical path, not cleaning the objectives before the measurements, using a non-flat sample or stage insert, or not properly setting the objective correction collar when necessary. Variability was also associated with suboptimal sampling (e.g., unavoidable for lateral values in WF or SDCM with low magnification objectives and large-pixel cameras) and with blue-emitting dyes. Most conventional microscopes and commercially available optics are designed for optimal performance at visible wavelengths (Bliton and Lechleiter, 1995), and correcting aberrations in the UV or near-UV range is challenging. Spherical aberrations (due to refractive index mismatch or incorrect adjustment of the lens correction collar) and pinhole misalignment are possible sources of the high variability of LSCM axial FWHM values.

We also showed that while asymmetry was negligible in WF and SDCM, the average asymmetry ratio for LSCM was 0.86. This is expected, as with high NA objectives (NA > 1.3) the FWHM is smaller in the direction perpendicular to the linear polarization of the excitation laser (Li et al., 2015; Micu et al., 2017; Otaki et al., 2000).

We proposed experimentally defined limit values. Objective resolution performance can be considered within limits if the lateral and axial measured/theoretical ratios are kept below 1.5 (dark green zone of Fig. 3 B). Although such limit values may seem high, these tolerance values rely on theoretical formulas that assume ideal but unattainable conditions, such as shot-noise-free confocal imaging or subresolution point sources, whereas in reality we used 175 nm beads. A ratio slightly above the tolerance value defined here does not necessarily mean that the objective must be returned to the manufacturer for a check. The microscope user can continue monitoring the PSF quality of the objective, keeping in mind that imaging structures with sizes close to the optical resolution of the microscope should be avoided.

For regular QC monitoring of the microscope, we highly recommend measuring the PSF once per month, if the human resources of the facility or research team allow it. Our data showed high variability of the measurements over time for objectives with NA > 1, and improper use of the objective or, more generally, misalignment of the whole microscope system can result in a measured PSF far from the expected theoretical one. For demanding experiments, such as super-resolution microscopy, the PSF should be measured more frequently, even before each experiment. A PSF measured just before acquisition can also be used for image deconvolution with experimental PSFs (McNally et al., 1999).

When the experimentally defined tolerance value is not met, the microscope user should use all available tools for troubleshooting (see Table 1 for a summary and proposed actions). For instance, on an LSCM with an adjustable pinhole, one must first make sure that the pinhole is well aligned before performing any confocal PSF measurement. Whenever performance diverges significantly from theoretical values, we recommend measuring with a fully opened pinhole or checking the objective on a WF microscope. These comparisons help identify precisely the origin of the issue (i.e., whether it is associated with the objective or with another component). For SDCM, the pinhole size is usually fixed, which increases variability, especially with objectives of lower magnification than 60× or 100×. Alternatively, the method described by Zucker et al. (2007), which uses a metal-coated mirror and detects the reflected laser light, can be used to check pinhole alignment and measure the axial resolution.

To sum up, the impact of a low-quality PSF on biological imaging depends on the structure to be imaged. For cellular objects near the resolution limit, as in Fig. 3, F–H, it can produce aberrant or artifactual structures and affect colocalization studies. For high- or super-resolution techniques (e.g., Airyscan, STED, SIM), the quality of the PSF should be optimal. For larger structures (e.g., the nuclei in Fig. 3 F), PSF quality is less crucial and imaging can still be satisfactory.

Field illumination

Background

Quantitative optical imaging requires uniform field illumination. For example, if the center of the field of view is much brighter than the corners, two cells with identical levels of GFP expression will appear with different intensities. Ideally, all pixels in the image of a uniform sample should have the same gray level across the field of view, within the intrinsic noise of the detector (Murray et al., 2007). In practice, light source uniformity, alignment, and optical aberrations (from the objective and additional optics in the light path) can affect the homogeneity of the field illumination.

Regarding light source uniformity, WF microscopes use bulb-type sources such as mercury, metal-halide, or xenon arc lamps. These contain a central bright source of illumination that is inherently non-uniform and often requires a light diffuser to homogenize the emitted light (Ibrahim et al., 2020). More recently, LED sources, with their long lifetimes and excellent stability, offer much improved field flatness thanks to their coupling to the microscope through a liquid light guide (Aswani et al., 2012). For SDCM and LSCM, the light sources are coherent lasers whose intensity distribution is described by a Gaussian function. The Gaussian profile is naturally inhomogeneous, with a central intensity maximum. Lasers are coupled to the microscope with optical fibers, which can introduce another source of non-uniformity. Relay optics and spatial filtering can be used to homogenize the Gaussian beam profile (Gratton and vandeVen, 1995). Imperfectly calibrated scanners can also affect both the laser illumination uniformity and the detection uniformity of LSCMs (Stelzer, 2006). Furthermore, the positioning of the dichroic mirror and filters in the filter cubes (for WF) or in the emission filter wheel affects the observed field illumination for each channel (Stelzer, 2006).

Accurate image intensity quantification over the entire field of view requires characterizing the field illumination pattern and correcting for heterogeneities if necessary. This is essential for image stitching, ratiometric imaging (Fura2, FRET), and segmentation applications. When non-uniform illumination occurs in experiments involving tile scans (acquisition of adjacent XY planes), it leads to vignetting and unwanted repetitive patterns in the reconstructed image (Murray, 2013). A reference image of the non-uniformity is then a prerequisite for correcting this shading effect. In live-cell experiments, localized phototoxicity may be observed, and inhomogeneous bleaching can affect fixed samples as well. Previous studies investigated field illumination measurements by defining theoretical metrics and measurement protocols (Bray and Carpenter, 2018; Brown et al., 2015; Model and Burkhardt, 2001; Stack et al., 2011; Zucker and Price, 1999). Here, we propose centering and uniformity metrics based on simple tools, and we define limit values.

Materials and methods

Sample

For the field illumination flatness assessment, fluorescent plastic slides can be used (blue, green, orange, and red; Chroma Technology Group #92001 or Thorlabs #FSK5). Their drawback is the low signal obtained in the far-red channel (>650 nm emission). For a complete field illumination characterization, measurements should be performed for all four main channels (DAPI through Cy5). For quick, regular characterization, field illumination measurements in the DAPI and GFP channels can be enough. A glass coverslip can be sealed onto the plastic slide with immersion oil to protect it from scratches (Zucker, 2006b). An alternative to the plastic slide is a thin layer of a fluorescent dye (Zucker, 2014), which gives a more precise illumination pattern and can be more convenient for shading correction of tile scan acquisitions (Model and Burkhardt, 2001). We compared the plastic slides with a self-made homogeneous thin fluorescent specimen, consisting of a highly concentrated (1 mM) dye solution (rhodamine 6G; Sigma-Aldrich) between a glass slide and a coverslip. We tested both and found the plastic slides convenient for the defined metrics (Fig. S4), although for SDCM, pinhole crosstalk is clearly visible with plastic slides and may alter the uniformity measurement (Toomre and Pawley, 2006). We also observed that the centering measurement is independent of the sample type.

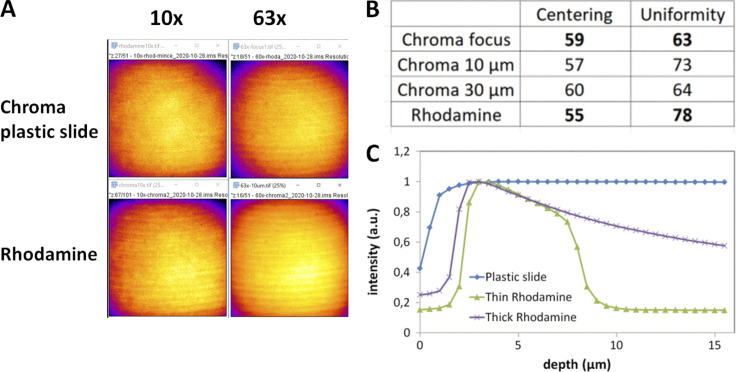

Figure S4.

Field illumination using either a green Chroma plastic slide or a glass slide/coverslip configuration with a rhodamine layer on a Dragonfly spinning disk microscope (Andor–Oxford Instruments). (A) Field illumination images at 488 nm excitation with 10× and 63× objectives, using the plastic and rhodamine slides, detected with an EMCCD iXon888 camera. The artifacts visible in these images result from a lack of synchronization between Nipkow disk rotation and camera acquisition: the image projected on the sensor chip integrates multiple individual scans of the Nipkow disk, and a short exposure time can produce these artifacts, as explained by Chong et al. (2004). (B) Centering and uniformity metrics for A. The acquisition was set at the plastic/glass or rhodamine/coverslip interface, or 10/30 μm deep within the plastic/rhodamine layer. (C) Effect of focal plane depth on emission intensity using either a plastic slide or thin/thick layers of fluorescent rhodamine dye (PLAN APO 63×/1.4 objective, ex. 488 nm).

Acquisition protocol

For WF and SDCM microscopy, the full camera chip was used. For confocal microscopy, we used the manufacturer-recommended 1× zoom to obtain a uniform field of view, as suggested in the ISO 21073:2019 standard (ISO, 2019). We placed the slide on the stage and focused on the surface, then measured the field illumination slightly below the surface (around 5–10 μm deep) to avoid recording scratches or dust (Fig. S5 B). The acquisition parameters were set to make full use of the detector dynamic range while avoiding saturation. Only one frame is required. An additional step, averaging several images, can be used to calibrate flat-field correction and create a shading correction image, which is necessary for tile scan imaging (Model, 2014; Young, 2000). Because we focused on characterizing the field illumination, a single image was sufficient.

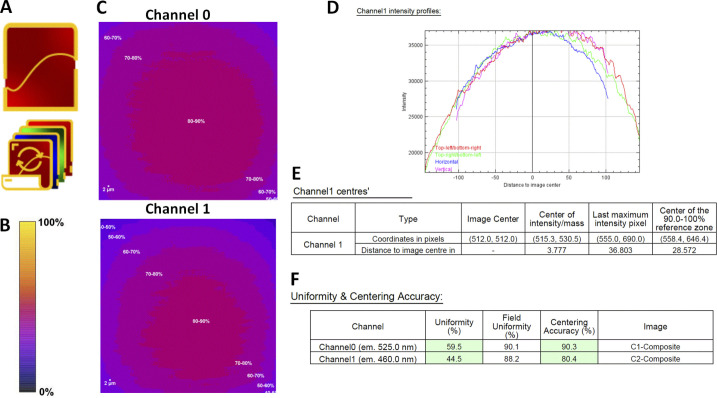

Figure S5.

Field illumination workflow with MetroloJ_QC. (A) Field illumination single and batch icons. (B) Intensity scale bar. (C) Normalized intensity profiles of channels 0 and 1. (D) Intensity profiles along horizontal, vertical, and diagonal lines through the geometrical center of the image. (E) The locations of the image center (geometrical center), the center of intensity (center of mass of the current channel), the maximum intensity pixel of the current channel, and the reference zone are provided, along with their distances to the geometrical image center. (F) Uniformity and centering metrics for both channels of C. Centering accuracy is computed using the 90–100% zone as reference rather than the position of the maximum intensity pixel. Values within specifications are highlighted in green, and values outside them in red. Specifications can be modified by the user.

Metrics

We defined the following two metrics to evaluate the field illumination:

(1) The uniformity of the illumination in the observed FOV, U (%), is expressed as follows:

$$U = \frac{\mathrm{intensity}_{\min}}{\mathrm{intensity}_{\max}} \times 100$$

where intensity_max and intensity_min are the maximum and minimum intensities acquired in the FOV, respectively. The size of the FOV influences the value of this metric. A ratio of 1 (100%) represents the ideal case.

(2) The centering of the maximal intensity zone of the image, C (%), is expressed as follows:

$$C = \left(1 - \frac{2\sqrt{(X_{\max} - X_{\mathrm{center}})^2 + (Y_{\max} - Y_{\mathrm{center}})^2}}{\sqrt{x^2 + y^2}}\right) \times 100$$

where X_max and Y_max are the coordinates of the maximum intensity zone, X_center and Y_center are the coordinates of the center of the FOV, and x and y are the width and height of the image, respectively. Because the distance is normalized by the half-diagonal of the image (the distance from the geometrical center to a corner), the size of the FOV does not influence the metric value. A value of 1 (100%) is the ideal case.

The uniformity and centering metrics are included in the ISO 21073:2019 standard (ISO, 2019).
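Both metrics can be computed in a few lines. The NumPy sketch below implements U as 100 × min/max and C as 100 × (1 − distance to the image center / half-diagonal), and checks the tolerance values proposed in the Results (U > 50%, C > 20%). It uses the maximum intensity pixel as the reference position for simplicity (MetroloJ_QC can use a reference zone instead), and it assumes a pixel-index convention in which the center lies at ((width − 1)/2, (height − 1)/2). Function names and the synthetic image are hypothetical.

```python
import numpy as np


def uniformity_percent(image):
    """U (%) = 100 * min / max intensity over the whole FOV."""
    img = np.asarray(image, dtype=float)
    return 100.0 * img.min() / img.max()


def centering_percent(x_ref, y_ref, width, height):
    """C (%) = 100 * (1 - distance to image centre / half-diagonal).

    Pixel indices run from 0 to width-1 and height-1, so the geometrical
    centre is taken as ((width-1)/2, (height-1)/2) (assumed convention).
    """
    xc, yc = (width - 1) / 2.0, (height - 1) / 2.0
    distance = np.hypot(x_ref - xc, y_ref - yc)
    return float(100.0 * (1.0 - distance / np.hypot(xc, yc)))


def within_field_tolerance(u, c, u_min=50.0, c_min=20.0):
    """Tolerance proposed in the text: U > 50% and C > 20%."""
    return u > u_min and c > c_min


# Synthetic 101 x 101 illumination: Gaussian peak at the centre plus background.
yy, xx = np.indices((101, 101))
image = 100 + 900 * np.exp(-((xx - 50) ** 2 + (yy - 50) ** 2) / (2 * 40.0 ** 2))
row, col = np.unravel_index(np.argmax(image), image.shape)
u = uniformity_percent(image)
c = centering_percent(col, row, 101, 101)
print(round(u, 1), round(c, 1))       # 28.9 100.0
print(within_field_tolerance(u, c))   # False: U is below 50%
```

The example illustrates why the two metrics are complementary: a perfectly centered illumination (C = 100%) can still fail the uniformity criterion.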

Analysis

The MetroloJ_QC plugin was used; the analysis workflow is shown in Fig. S5. The algorithm first applies a Gaussian blur filter (sigma = 2) to the homogeneity image to suppress isolated saturated pixels (e.g., hot pixels) and smooth local imperfections (Bankhead, 2016). The plugin then locates the minimum and maximum intensities, as well as the center of intensity. As with the MetroloJ plugin, a normalized intensity image is calculated. Using this normalized image, the location of the maximum intensity is determined from a reference zone: either the 100% intensity zone (i.e., the geometrical center of all pixels with a normalized intensity of 1) or another zone, such as 90–100% (i.e., the geometrical center of all pixels with a normalized intensity between 0.9 and 1).

The user is prompted to choose the number of categories (bins) into which the normalized image intensities are divided. This value is used to compute the reference zone and to generate the iso-intensity map; for example, a value of 10 generates iso-intensity steps of 10%. A threshold is then applied to locate all pixels at the maximum (100%) intensity or within the last bin. The geometrical center of this reference zone is then located. The distances of these points (center of intensity, maximum intensity, center of the thresholded zone) to the geometrical center of the image are computed, and the field illumination metrics are calculated.

The user can choose whether or not to discard saturated images. When saturation occurs in a few isolated pixels, this noise may be removed by the Gaussian blur (sigma = 2). Note that saturation is computed after the Gaussian blur step. Hence, if the blur removes aberrant isolated saturated pixels from a channel, the image is no longer considered saturated, as no saturated pixels remain. For “clusters” of saturated pixels, the Gaussian blur is not strong enough to eliminate the saturated pixels at the cluster center, so the channel is still recognized as saturated and is skipped if the discard saturated samples option is selected. A batch mode enables automation of the analysis.
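The steps described above (blur, saturation test after blurring, normalization, reference zone taken from the last bin) can be sketched with NumPy alone. This is not MetroloJ_QC's implementation: the kernel radius (3σ), edge replication at the borders, rounding back to integer gray levels, the 16-bit saturation value of 65,535, and all function names are our assumptions. The example reproduces the behavior described in the text: a single hot pixel is smoothed below saturation, whereas the center of a 20 × 20 saturated cluster remains saturated.

```python
import numpy as np


def gaussian_blur(image, sigma=2.0):
    """Separable Gaussian blur; kernel radius 3*sigma, edge-replicated borders."""
    radius = int(np.ceil(3 * sigma))
    t = np.arange(-radius, radius + 1, dtype=float)
    kernel = np.exp(-t ** 2 / (2 * sigma ** 2))
    kernel /= kernel.sum()
    padded = np.pad(np.asarray(image, dtype=float), radius, mode="edge")
    rows = np.apply_along_axis(np.convolve, 1, padded, kernel, mode="valid")
    return np.apply_along_axis(np.convolve, 0, rows, kernel, mode="valid")


def analyse_field(image, saturation_value=65535, n_bins=10):
    """Blur, test saturation, normalise, and locate the reference zone.

    Returns None for a saturated channel (as with a 'discard saturated'
    option); otherwise a dict of (x, y) positions and U (%).
    """
    blurred = np.rint(gaussian_blur(image))     # back to integer gray levels
    if np.any(blurred >= saturation_value):     # saturation tested AFTER blur
        return None
    if blurred.max() <= 0:
        raise ValueError("image contains no signal")
    normalized = blurred / blurred.max()
    yy, xx = np.indices(normalized.shape)
    zone = normalized >= 1.0 - 1.0 / n_bins     # last bin, e.g. 0.9-1.0
    weights = blurred / blurred.sum()
    row, col = np.unravel_index(np.argmax(blurred), blurred.shape)
    return {
        "reference_zone_center": (float(xx[zone].mean()), float(yy[zone].mean())),
        "center_of_intensity": (float((xx * weights).sum()), float((yy * weights).sum())),
        "max_pixel": (int(col), int(row)),
        "uniformity_percent": float(100.0 * blurred.min() / blurred.max()),
    }


yy, xx = np.indices((64, 64))
field = 100 + 1000 * np.exp(-((xx - 40) ** 2 + (yy - 30) ** 2) / (2 * 15.0 ** 2))
result = analyse_field(field)
print(result["reference_zone_center"])   # close to (40.0, 30.0)

hot = np.full((64, 64), 1000.0)
hot[10, 10] = 65535                       # one isolated hot pixel
cluster = np.full((64, 64), 1000.0)
cluster[22:42, 22:42] = 65535             # a 20 x 20 saturated cluster
print(analyse_field(hot) is None, analyse_field(cluster) is None)  # False True
```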

Biological imaging

To illustrate the influence of field illumination on biological imaging, we imaged MCF-7 cells with nuclear staining (Hoechst 33342; Invitrogen) and actin staining (phalloidin Alexa Fluor 488, A12379; Invitrogen). The microscope was an SDCM (Dragonfly, Andor–Oxford Instruments), and images were taken with a Plan-Apo 60×/1.4 NA Nikon objective and an EMCCD iXon888 camera (Andor–Oxford Instruments).

Results

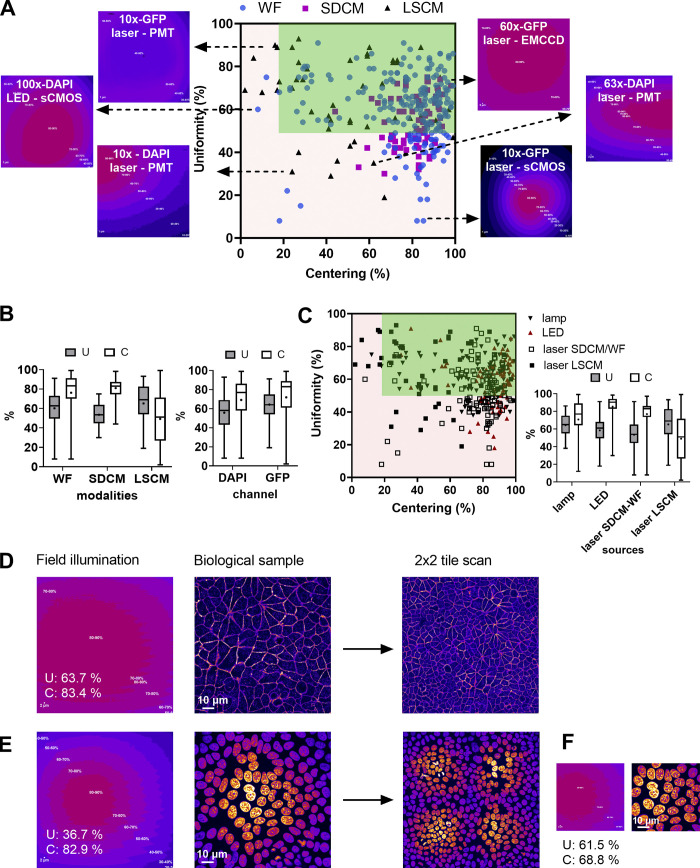

We studied the distribution of uniformity (U) and centering (C) for the three main microscopy modalities by performing measurements on 130 objectives covering a wide range of magnifications (5×–100×), mounted on 35 microscopes (Fig. 4 A). The mean U and C values were 61% and 76% for WF and 53% and 82% for SDCM, respectively, with a higher dispersion of both metrics for WF (Fig. 4 B). Of note, 60% of the SDCM microscopes were CSU-X1 models and 40% CSU-W1 models; the former show better U–C values owing to their smaller FOV. For LSCM, the mean U and C values of all measurements were 65% and 51%, with a higher dispersion than for the other two modalities (Fig. 4 B). We also studied the influence of wavelength on the U–C values. For the DAPI channel, uniformity showed a higher dispersion and a lower mean value than for the GFP channel (56% for DAPI and 68% for GFP). Results for centering were similar, with a slightly higher mean value for the GFP channel (68% for DAPI and 73% for GFP). Fig. 4 A shows two typical DAPI channel field illumination patterns with low U values (acquisitions with 10× and 63× objectives on an LSCM).

Figure 4.

Distribution of uniformity and centering field illumination flatness metrics and their influence on biological imaging. (A) Uniformity (U) and centering (C) distributions classified by microscopy modality, with representative field illumination patterns. (B) Statistical analysis of A, according to modality and excitation channel. The mean value is shown as a cross, the SD as a box, and the whiskers correspond to the min and max. (C) U–C distribution classified by excitation source type, with the corresponding statistical analysis. (D–F) Influence of field illumination flatness on the image quality of biological structures. The objective is a Plan-Apo 60×/1.4 (Nikon) on a spinning disk microscope (Dragonfly, Andor). (D) Field illumination pattern and U–C values extracted by MetroloJ_QC for a plastic slide, the corresponding image of a DAPI-stained nuclei sample, and a 2 × 2 tile scan. (E) Same configuration as D for the GFP channel and Alexa Fluor 488 actin labeling. (F) The 50% central area of D with the calculated U–C metrics.

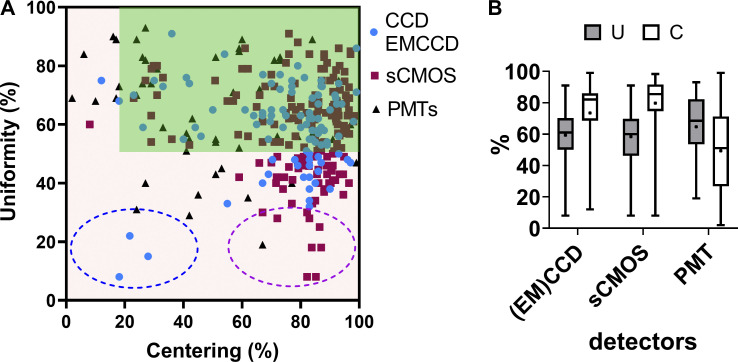

We also studied the distribution of the U–C metrics according to detector type. When using cameras, the sensor chip size influenced the observed U values (Fig. S6, and example of Fig. 4 A for a 10× objective, GFP channel). Field illumination images acquired with large-FOV sCMOS cameras (e.g., 13.31 × 13.31 mm) showed lower U values, as most microscope optics were originally designed for the smaller imaging areas of CCD (e.g., 8.77 × 6.60 mm) or EMCCD (e.g., 8.19 × 8.19 mm) sensors.

Figure S6.

Distribution of uniformity (U) and centering (C) field illumination flatness metrics classified by detector type. (A) U–C distribution. The tolerance area is in dark green. Representative examples are shown. The blue dashed circle contains three values measured with an EMCCD camera. The magenta dashed circle highlights a subpopulation of low U/high C cases, associated only with sCMOS camera images. (B) Box plots summarizing all the U–C distributions of A. Whiskers show the min/max values, the dot in the box shows the mean value, and the box shows the SD. sCMOS cameras showed a higher dispersion than CCD and EMCCD sensor chips. Indeed, low U/high C combinations were mostly associated with larger sensor sizes (magenta circle in A). The only low U and low C combinations (blue circle in A) were associated with misaligned TIRF microscopes, on which laser alignment had to be set manually before each experiment. The reference is the GFP channel, although many of the measurements were performed for both the DAPI and GFP channels or for all four basic channels.

We also showed that the source type influences the field illumination (Fig. 4 C). Arc lamp–based light sources result in highly variable U–C values, while LED sources result in less dispersed U–C values and notably excellent centering (mean value of 87%). Laser sources show a high dispersion of C values for LSCM, with a mean value of 53%, while for SDCM and WF techniques the U–C dispersion is smaller and the mean C value significantly higher (78%). This can be explained by the difference in detector type (Fig. S6).

From the above measurements, we defined the experimental tolerance values as U > 50% and C > 20% (Table 1). In total, 27% of our measurements were outside the limits for both metrics. For biological applications, low U–C values can result in inaccurate quantification. Fig. 4, D and E shows the field illumination of a 60×/1.4 objective on an SDCM microscope using a plastic slide and a labeled biological sample (nuclei with DAPI and actin with Alexa Fluor 488 for the GFP channel). For the DAPI channel, uniformity is clearly lower and falls below the tolerance value (U = 36.7% < 50%). Besides a markedly dimmer signal in the corners of the FOV, the low U value degrades the quality of tile scans when they are used. When only the central region of the FOV was acquired, the field illumination was significantly improved (U: 61.5% and C: 68.8%, Fig. 4 F).

Discussion

For techniques using a camera for detection (WF and SDCM), our results demonstrate that the sensor chip size influences the U metric. Whereas smaller chips were used in the past, most manufacturers have recently introduced objectives corrected over larger fields, taking into account the whole internal light path of the microscope. Hence, when illumination homogeneity is degraded with a large camera sensor chip, besides choosing better-corrected objectives, it may be advisable on a WF system to adjust the collector lens or the position of the liquid light guide. In other cases, the image can be cropped to a central area of the sensor chip, or digital shading correction can be used. The latter should be used with caution because it strongly modifies the acquired intensity values.

The excitation wavelength mostly affects uniformity. In most LSCM designs, the 405 nm laser line passes through a different fiber/light path than the “visible” laser lines, resulting in a different alignment between the DAPI and visible channels, which can explain the U differences (Pawley, 2006b). In LSCM, pinhole alignment can drastically influence uniformity. This adjustment is not always accessible to the user, so discussing it with the service technician and the manufacturer is necessary to ensure a properly aligned microscope.

Results also showed that lamp sources yield the least favorable U–C values, most often because of a less accurate autoalignment procedure. Laser sources are more accurately aligned (high C values) and present a better Gaussian profile, even though for SDCM and WF their coupling to a large-area sensor chip often results in low U values.

We propose measuring field illumination twice per year for each objective and for all channels detected by the microscope. In addition, field illumination should be checked after an arc lamp change, a laser replacement, or any other manipulation affecting the coupling of the light source to the microscope or the scan heads.
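The proposed schedule can be encoded as a simple rule for a facility logbook. In this sketch, “twice per year” is approximated as an interval of 182 days, and the event labels in `TRIGGER_EVENTS` are hypothetical names for the interventions listed above; neither comes from MetroloJ_QC.

```python
from datetime import date, timedelta

# "Twice per year" approximated as 182 days (assumption).
QC_INTERVAL = timedelta(days=182)

# Hypothetical labels for interventions that require a new check.
TRIGGER_EVENTS = {
    "arc_lamp_change",
    "laser_replacement",
    "light_source_coupling",
    "scan_head_intervention",
}


def field_illumination_check_due(last_check, today, events):
    """Return True if a new field-illumination measurement is due.

    `events` is a list of (date, label) tuples from the microscope logbook.
    """
    if today - last_check >= QC_INTERVAL:
        return True
    # Any triggering intervention after the last check requires a re-check.
    return any(
        label in TRIGGER_EVENTS and last_check < when <= today
        for when, label in events
    )


log = [(date(2024, 2, 1), "laser_replacement")]
print(field_illumination_check_due(date(2024, 1, 1), date(2024, 3, 1), log))
```

The check is repeated per objective and per channel, so in practice the rule would be evaluated once for each objective/channel entry of the logbook.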

For biological imaging, we defined the experimental limit values of U > 50% and C > 20%. When these values are not met, the microscope user should use all available tools for troubleshooting (Table 1). An after-sales service visit is not necessarily required, but special attention should be paid when images are used for quantitative analysis, such as colocalization studies, ratio imaging, deconvolution, and segmentation. In these cases, the field illumination should be carefully characterized. Values far below the limits given above do not allow reliable quantitative analysis. Depending on the application, low values can have serious consequences (e.g., quantifying the intensity of objects located across the entire FOV) or manageable ones (segmentation, tracking). In particular, when the tile scan option is used to image large samples (e.g., tissue slices), inhomogeneous illumination can deteriorate the resulting image (Fig. 4 E). Shading correction can be applied to the tiled image, but the reference shading image should be acquired with an appropriate uniformly fluorescent sample (for best results, a slide containing a high concentration of a dye such as fluorescein).
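Digital shading correction is commonly implemented as a flat-field correction. The sketch below uses the generic formula corrected = (raw - dark) / (flat - dark) × mean(flat - dark), where `flat` is an image of a uniformly fluorescent reference slide and `dark` is an acquisition without illumination. This is a standard technique, not necessarily the correction implemented by a given acquisition software, and the function name is hypothetical. It also shows why such a correction strongly modifies intensities: every pixel is rescaled by its local gain.

```python
def shading_correct(raw, flat, dark):
    """Flat-field correction of a 2D image given as nested lists.

    corrected = (raw - dark) / (flat - dark) * mean(flat - dark)
    `flat`: image of a uniformly fluorescent slide; `dark`: no-light frame.
    """
    rows, cols = len(raw), len(raw[0])
    gain = [[flat[i][j] - dark[i][j] for j in range(cols)] for i in range(rows)]
    if any(g <= 0 for row in gain for g in row):
        raise ValueError("flat must be brighter than dark at every pixel")
    mean_gain = sum(sum(row) for row in gain) / (rows * cols)
    return [
        [(raw[i][j] - dark[i][j]) / gain[i][j] * mean_gain for j in range(cols)]
        for i in range(rows)
    ]


dark = [[10, 10], [10, 10]]
flat = [[60, 110], [110, 60]]   # reference slide, dimmer at two pixels
raw = [[35, 60], [60, 35]]      # uniform sample seen through the same shading
print(shading_correct(raw, flat, dark))
```

Rescaling by the mean gain keeps the corrected image in the same intensity range as the raw data; the reference slide quality directly limits the accuracy of the result.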

Co-registration in xy and z

Background

When specimens are labeled with fluorophores of different colors, an inexperienced microscope user expects an image in which the positions of the different markers are absolute and not influenced by chromatic effects or other distortions (barrel, pincushion, dome, and 3D rotation). Under ideal conditions, imaging multicolor microspheres can yield excellent colocalization in x, y, and z. However, a microscope image can suffer from insufficiently corrected objectives that induce a chromatic shift. In the z direction, this aberration results from the wavelength dependence of the position of the objective's focal point (Nasse et al., 2007). Depending on the objective corrections, this shift may not be uniform across the whole FOV. A chromatic shift may also be introduced by misalignment of the excitation sources (if several lines are used in the confocal scanning scheme), optical elements insufficiently corrected for chromatic or other aberrations, misaligned filter cubes (filters or dichroic mirrors not correctly positioned in the cube), the use of multiwavelength dichroic mirrors that are not zero-pixel-shift, stage drift, misalignment of the pinhole or collimator lens, or misaligned camera(s) (Comeau et al., 2006; Zucker, 2006a).

The chromatic shift may influence quantitative imaging, such as protein colocalization studies. It is thus important to characterize the chromatic shift and, when needed, to correct it. In this study, we propose a protocol to evaluate coregistration for an objective in its central field, where the best chromatic correction is expected. We do not seek to calibrate chromatic differences and correct them over the entire FOV, although such a calibration is often useful because coregistration is not linear across the FOV (Kozubek and Matula, 2000). A spatially resolved analysis of coregistration might help to identify regions of interest within the FOV and correct the shifts (e.g., for colocalization studies, ratiometric imaging, or FRET imaging). To evaluate coregistration in the xy and z directions, we used multilabeled microspheres (Goodwin, 2013; Mascalchi and Cordelières, 2019; Stack et al., 2011) and defined a coregistration ratio as our metric.

Materials and methods

Sample

For the coregistration measurements, a custom-made bead slide containing four-color beads (i.e., beads incorporating dyes of several colors) with diameters of 1 and 4 μm is used. We diluted the 1 μm bead solution (#T7282, TetraSpeck; Thermo Fisher Scientific) in distilled water to a density of 10⁵ particles/ml. The 4 μm beads (#T7283, TetraSpeck; Thermo Fisher Scientific) were diluted further, to a density of 10⁴ particles/ml.
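The dilution step follows C1·V1 = C2·V2. A minimal sketch is given below; the stock density in the usage example (10⁸ particles/ml) is a hypothetical value, and the actual particle density should be taken from the manufacturer's documentation.

```python
def dilution_volumes(stock_per_ml, target_per_ml, final_volume_ml):
    """Return (stock volume, diluent volume) in ml using C1*V1 = C2*V2."""
    if not 0 < target_per_ml <= stock_per_ml:
        raise ValueError("target density must be positive and <= stock density")
    stock_volume = target_per_ml * final_volume_ml / stock_per_ml
    return stock_volume, final_volume_ml - stock_volume


# Hypothetical stock density; check the manufacturer's value for your vial.
stock, water = dilution_volumes(stock_per_ml=1e8, target_per_ml=1e5, final_volume_ml=1.0)
print(f"{stock * 1000:.1f} ul stock + {water * 1000:.1f} ul distilled water")
```

The same function gives the volumes for the 4 μm beads by setting `target_per_ml=1e4` with the corresponding stock density.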

Acquisition protocol

3D stacks of multicolor fluorescent beads in two or more channels were collected over a z range of at least 10 μm. For LSCM systems, a zoom factor higher than 3 was used to increase acquisition speed. The Shannon–Nyquist criterion was met whenever achievable, and saturation was avoided. At least five beads were acquired, from at least two different FOVs. The beads should be very close to the center of the image (within no more than one-fourth of the total FOV), since objectives are usually best corrected for chromatic shifts in their central field.
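To choose a pixel size and z step that satisfy the Shannon–Nyquist criterion, one can start from the theoretical resolution. This sketch assumes the Rayleigh lateral resolution 0.61λ/NA, the common widefield axial approximation 2λn/NA², and two samples per resolution element. Confocal systems and stricter sampling factors (e.g., 2.3) give smaller values, so the function name and its defaults are illustrative only.

```python
def nyquist_limits(wavelength_nm, na, n_immersion, samples_per_resolution=2.0):
    """Return (max pixel size, max z step) in um for a widefield-type estimate.

    Assumes lateral resolution 0.61*lambda/NA (Rayleigh) and axial resolution
    2*lambda*n/NA**2, each sampled `samples_per_resolution` times.
    """
    wavelength_um = wavelength_nm / 1000.0
    lateral = 0.61 * wavelength_um / na
    axial = 2.0 * wavelength_um * n_immersion / na ** 2
    return lateral / samples_per_resolution, axial / samples_per_resolution


pixel, step = nyquist_limits(wavelength_nm=520, na=1.4, n_immersion=1.518)
print(f"pixel <= {pixel:.3f} um, z step <= {step:.3f} um")
```

The z step obtained this way also sets the minimum number of slices needed to cover the 10 μm stack.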

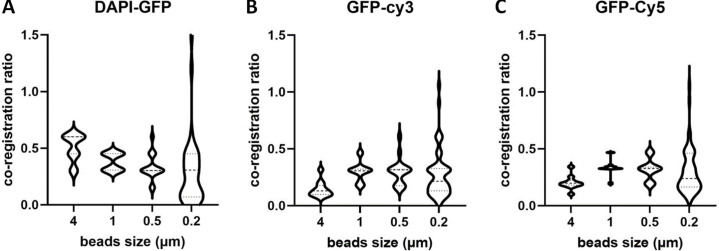

While subresolution beads are mandatory for resolution assessment, coregistration can be assessed with beads ranging from subresolution sizes (0.1–0.2 μm) up to a few micrometers. We first studied the influence of bead size on the coregistration results. We observed that 1 μm beads are ideal for coregistration studies, whereas coregistration results based on 0.2 μm beads show a significantly higher dispersion (Fig. S7). We attribute this difference to the difficulty of achieving a high SBR with 0.2 μm beads, a high SBR being necessary to estimate their center coordinates accurately.

Figure S7.

Influence of the bead size on the coregistration accuracy calculated by MetroloJ_QC. (A–C) Co-registration ratio distributions for 4, 1, 0.5, and 0.2 μm multicolor beads. In all cases, more than five beads per condition from two FOVs were examined, with a 100×/1.4 objective on an upright WF microscope (AxioImager Z2, Carl Zeiss Microscopy GmbH). The 0.2 μm beads present a high dispersion, with a coefficient of variation higher than 65% for all channel combinations. The 1 μm beads show the smallest dispersion in all cases and are thus the best suited for this kind of measurement.
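The dispersion reported in Fig. S7 can be quantified with the coefficient of variation (CV = SD/mean). A minimal sketch follows, assuming the sample standard deviation is used (the text does not specify the estimator); the ratios in the usage example are hypothetical.

```python
from statistics import mean, stdev


def coefficient_of_variation(values):
    """Coefficient of variation in percent, using the sample SD."""
    if len(values) < 2:
        raise ValueError("at least two values are needed")
    m = mean(values)
    if m == 0:
        raise ValueError("the mean must be non-zero")
    return 100.0 * stdev(values) / m


# Hypothetical coregistration ratios for one channel pair.
ratios = [0.42, 0.55, 0.48, 0.61, 0.50]
print(f"CV = {coefficient_of_variation(ratios):.1f}%")
```

Computing the CV per bead size and channel pair allows a direct comparison with the 65% value quoted above.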

Metrics

Our metric here was the rexp/rref ratio. The acquisition consisted of 3D stacks with two or more emission channels, and the MetroloJ_QC plugin automates the MetroloJ coregistration algorithm. Beads were identified, and saturated beads or beads too close to the image border or to another bead were discarded. The center of mass of each bead was then calculated for each channel, and the distances between the centers were taken as rexp. For each channel-to-channel distance rexp, a reference distance rref was calculated as previously described (Cordelières and Bolte, 2008; Fig. S8 D). When rexp > rref, two points A and B are considered not colocalized. The plugin calculated the ratio rexp/rref for each color pair; in our case, a ratio higher than 1 indicates unacceptable coregistration. The shortest wavelength of the color pair was used to calculate the theoretical resolution, as it gives the most stringent resolution value. When analyzing the usual four-channel images, six combinations (4 × 3/2 channel pairs) need to be considered.
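The metric can be sketched as follows, under stated assumptions: bead centers are intensity-weighted centers of mass in calibrated units (μm), and rref is taken as the distance from A to the surface of an ellipsoid centered on A, whose semi-axes are the lateral and axial resolutions, measured along the A→B direction. This is our reading of the scheme in Fig. S8 D, not the MetroloJ_QC source; the function names and resolution values are hypothetical, and the resolution should be computed for the shortest wavelength of the pair.

```python
import math


def center_of_mass(voxels):
    """Intensity-weighted center of (x, y, z, intensity) voxels, in um."""
    total = sum(v[3] for v in voxels)
    if total <= 0:
        raise ValueError("total intensity must be positive")
    return tuple(sum(v[k] * v[3] for v in voxels) / total for k in range(3))


def reference_distance(a, b, res_xy, res_z):
    """Distance from A to the resolution ellipsoid surface along A->B.

    The ellipsoid is centered on A with semi-axes (res_xy, res_xy, res_z).
    """
    d = [b[k] - a[k] for k in range(3)]
    r = math.sqrt(sum(c * c for c in d))
    if r == 0:
        raise ValueError("A and B coincide; the direction is undefined")
    ux, uy, uz = (c / r for c in d)
    return 1.0 / math.sqrt((ux ** 2 + uy ** 2) / res_xy ** 2 + uz ** 2 / res_z ** 2)


def coregistration_ratio(a, b, res_xy, res_z):
    """rexp / rref; values above 1 indicate unacceptable coregistration."""
    r_exp = math.dist(a, b)
    if r_exp == 0:
        return 0.0  # perfect overlap of the two centers
    return r_exp / reference_distance(a, b, res_xy, res_z)


# Hypothetical voxel data (x, y, z in um; intensity) and resolution values.
blue = center_of_mass([(1.00, 1.00, 2.00, 10.0), (1.04, 1.00, 2.00, 10.0)])
green = (1.08, 1.01, 2.05)
print(round(coregistration_ratio(blue, green, res_xy=0.21, res_z=0.62), 2))
```

Because the axial resolution is coarser than the lateral one, the same center-to-center distance gives a lower ratio when the shift is along z than when it is in the xy plane.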

Figure S8.

Co-registration workflow with MetroloJ_QC. (A) Co-registration single and batch icons. (B) A “beadOverlay” image is generated when more than one bead is in the FOV, taking into account the user-declared parameters (bead size, ROI size, bead position in the z stack). (C) For each color/channel combination, profile view images composed of three maximum intensity projections (xy, xz, and yz side views) are generated. Crosses indicate the respective positions of the green channel (the first channel declared in the stack order) and the red channel. This is done for all channel combinations. (D) Calculation of the reference distance rref. Left: Centers of objects (A and B) are drawn as red and green spheres, respectively. The PSF is schematized in light yellow, while the first Airy volume appears in dark yellow. The width, height, and depth of the former define the resolution along the three axes. Middle: A and B are not colocalized as r > rref. Right: A and B are colocalized as r ≤ rref. Illustration from Cordelières and Bolte, ImageJ User and Developer Conference Proceedings, 2008, Luxembourg. (E) A ratio table is generated for each bead, indicating the measured coregistration ratios of all channel combinations, the theoretical resolution for each channel, and the bead position coordinates. (F) Ratio values within specifications are highlighted in green, and those outside specifications in red. When more than one bead is in the FOV, a ratio table gives the mean ratio values with their SDs. The number of beads taken into account for each channel is also given.

Analysis

MetroloJ_QC automatically generated analyses for all possible channel combinations, measured the pixel shifts, the (calibrated/uncalibrated) intercenter distances, and compared them to their respective reference distance rref. A ratio of the measured intercenter distance to the reference distance was also calculated. Images with more than one bead can be analyzed, and a batch mode enabled the analysis of multiple datasets. Fig. S8 and the plugin manual provide a more detailed description of our coregistration workflow.
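The all-combinations analysis and the per-pair summary can be sketched as below. Centers are given in pixels and converted to calibrated distances with the voxel size. For simplicity, a single precomputed reference distance per channel pair is assumed (`r_ref`, a hypothetical input), although rref depends on the direction of the shift when lateral and axial resolutions differ. Following the text, a ratio of at most 1 is treated as within specifications; the function names are hypothetical.

```python
from itertools import combinations
from statistics import mean, stdev


def calibrated_distance(center_a, center_b, voxel_size):
    """Euclidean distance between two pixel-space centers, in physical units."""
    shift = [b - a for a, b in zip(center_a, center_b)]  # pixel shift
    return sum((s * v) ** 2 for s, v in zip(shift, voxel_size)) ** 0.5


def summarize_coregistration(beads, voxel_size, r_ref):
    """Per-channel-pair summary over beads.

    beads: list of dicts {channel: (x, y, z) center in pixels}, same channels.
    r_ref: dict {(ch_a, ch_b): reference distance} (hypothetical, precomputed).
    """
    channels = list(beads[0])
    summary = {}
    for ch_a, ch_b in combinations(channels, 2):
        ratios = [
            calibrated_distance(b[ch_a], b[ch_b], voxel_size) / r_ref[(ch_a, ch_b)]
            for b in beads
        ]
        avg = mean(ratios)
        summary[(ch_a, ch_b)] = {
            "mean": avg,
            "sd": stdev(ratios) if len(ratios) > 1 else 0.0,
            "n_beads": len(ratios),
            "within_spec": avg <= 1.0,  # a ratio above 1 is not accepted
        }
    return summary


beads = [
    {"B": (10, 10, 5), "G": (11, 10, 5), "R": (10, 10, 5)},
    {"B": (30, 22, 6), "G": (31, 22, 6), "R": (30, 22, 6)},
]
voxel = (0.1, 0.1, 0.3)  # um per pixel in x, y, z (hypothetical)
ref = {("B", "G"): 0.2, ("B", "R"): 0.2, ("G", "R"): 0.22}
for pair, stats in summarize_coregistration(beads, voxel, ref).items():
    print(pair, round(stats["mean"], 2), stats["within_spec"])
```

With four channels, `combinations` yields the six pairs mentioned above.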

Biological imaging

Monocyte-derived dendritic cells (MoDCs) were stained for actin (phalloidin-Alexa Fluor 405, A30104; Invitrogen) and beta3 integrin (primary/secondary antibody conjugated to Cy3). Acquisitions were carried out with an upright WF microscope (AxioImager Z2; Carl Zeiss Microscopy GmbH), a Plan-Apo 63×/1.4 objective, and an sCMOS Zyla 4.2 camera (Andor–Oxford Instruments).

Results

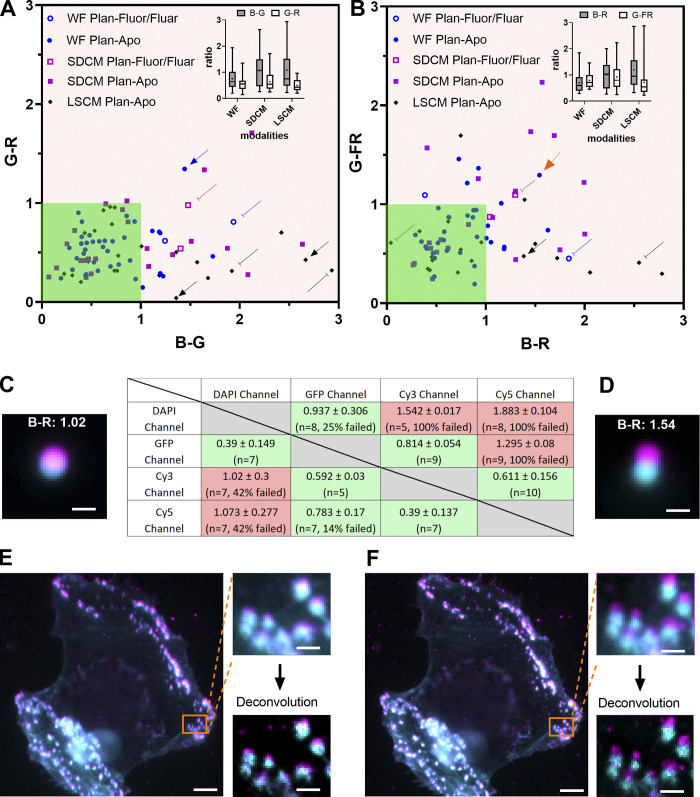

We collected data from setups covering all three microscopy techniques and 92 different objectives.

We observed that >73% of the calculated coregistration ratios show nearly perfect coregistration (i.e., ratio <1) in the blue-green (B-G) and green-red (G-R) channel combinations (Fig. 5 A). Our tolerance range lies below unity (green zone of Fig. 5, A and B). Looking at individual combinations, 96% of the measurements were below 1 for G-R, 75% for G-FR (green-far red), 60% for B-R, and only 61% for B-G. A comparison of the three microscopy techniques shows that this trend holds for all setups (insets of Fig. 5, A and B). However, SDCM is more often associated with insufficient chromatic correction, with 60% of the measured ratios below 1, compared with 70% and 83% for LSCM and WF, respectively.

Figure 5.

Co-registration distribution and influence on biological imaging. (A and B) Co-registration ratio of a blue-green channel combination compared with the green-red (A) and green-far red (B) channel combinations, classified by microscopy technique and objective type (Plan-Apo or Plan-Fluor/Fluar). The arrows highlight some extreme cases. The dark green area highlights acceptable coregistration ratio values. Insets show box plots of the ratio distribution. The numbers of data points were 39/20, 24/23, and 28/23 for WF, SDCM, and LSCM in A and B, respectively. (C and D) Co-registration ratio table results for 1 μm beads for two different Plan-Apo 63×/1.4 objectives on the same WF microscope; 7 and 10 beads were used, respectively. The DAPI channel (cyan) and the Texas Red channel (magenta) are shown on the bead images, together with the calculated B-R ratio. The configuration in D is indicated by an orange arrow in B. Scale bar, 1 μm. (E and F) Influence of coregistration on biological imaging using the same objectives and setup as in C and D. The cell is labeled in two colors, for actin (Alexa Fluor 405, cyan) and beta3 integrin (Cy3, magenta), two structures that are expected to colocalize. The insets are zoomed WF and deconvolved images of the areas highlighted in orange. Scale bars, 5 μm (cell image) and 1 μm (zoom).

A closer look at outlier values reveals interesting features. As expected, objectives less corrected for chromatic aberrations between the blue and the other channels showed higher coregistration ratios than the Plan-Apo series. This is the case for the Zeiss 40×/1.3 Plan-Neofluar objective of a WF setup and the Nikon Plan-Fluor objective of an SDCM setup, represented, respectively, by the hollow blue circle indicated by a blue-capped line (ratios B-G: 1.94, G-R: 0.81) and the hollow red square indicated by a red-capped line (ratios B-G: 1.48, G-R: 0.98) (Fig. 5 A).

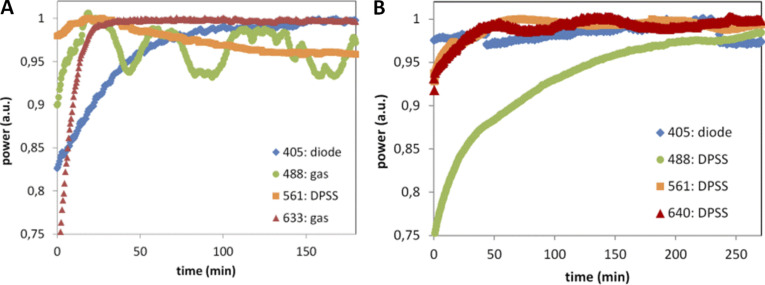

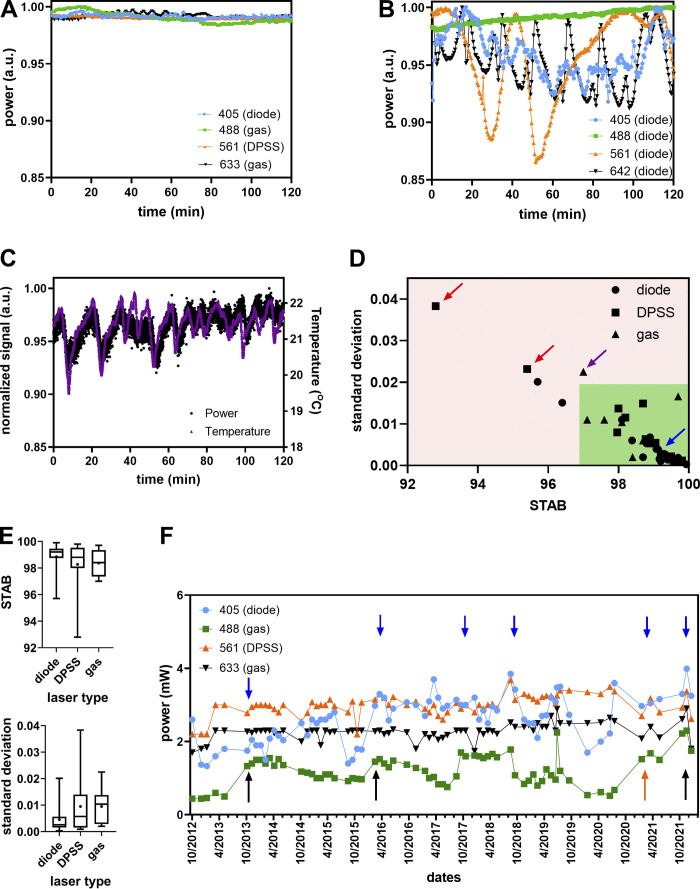

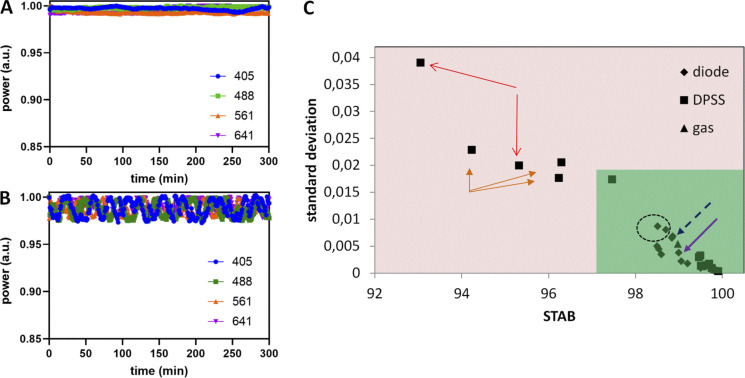

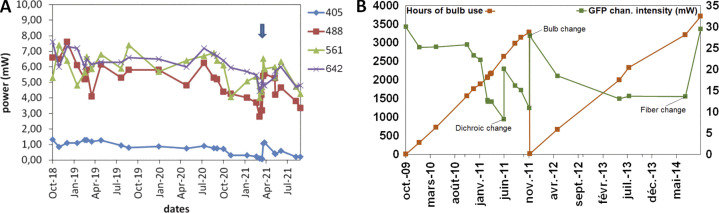

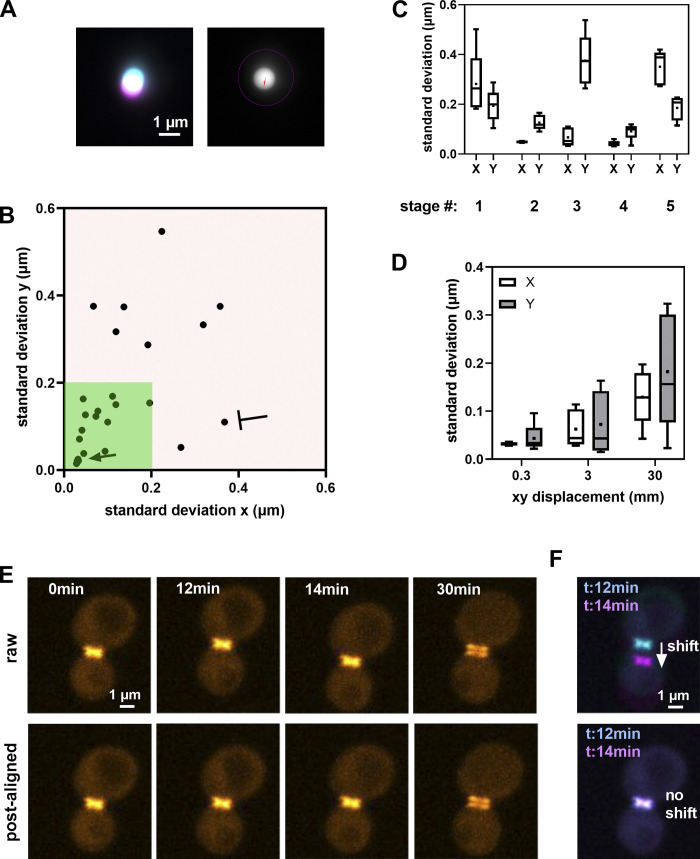

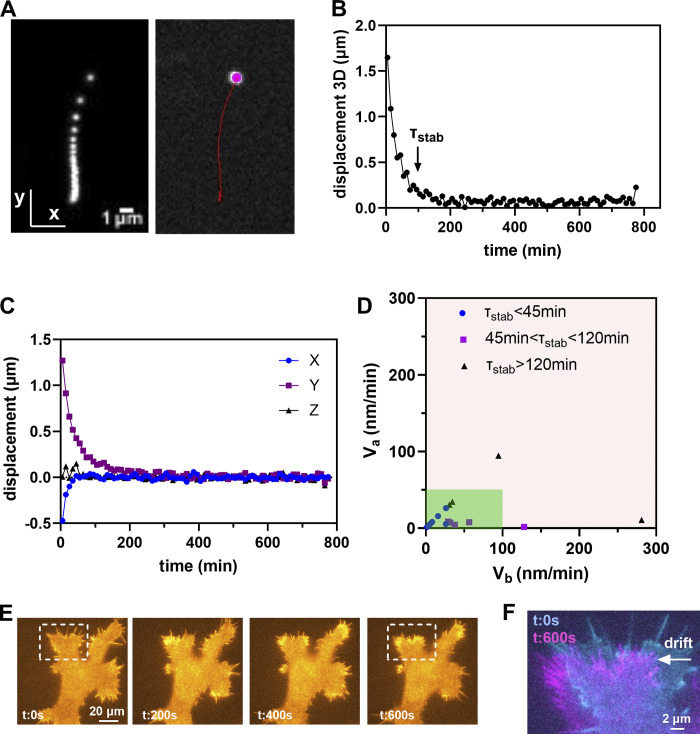

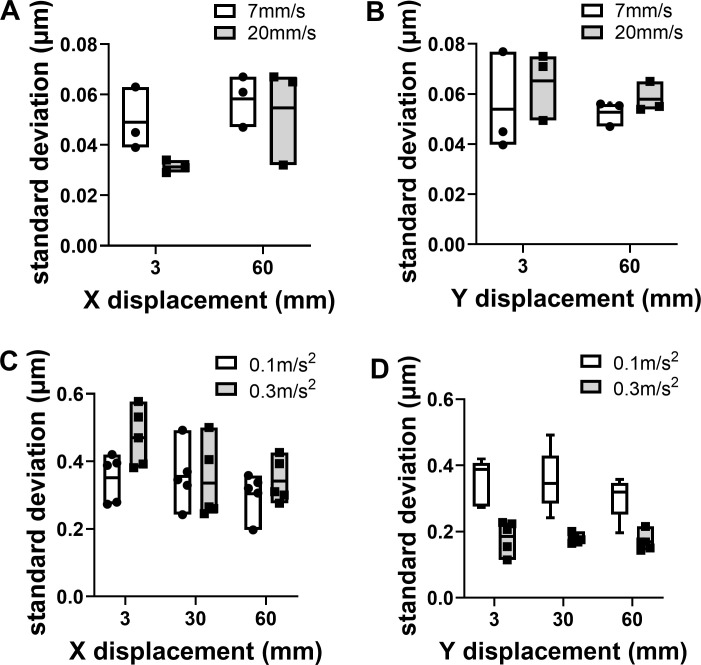

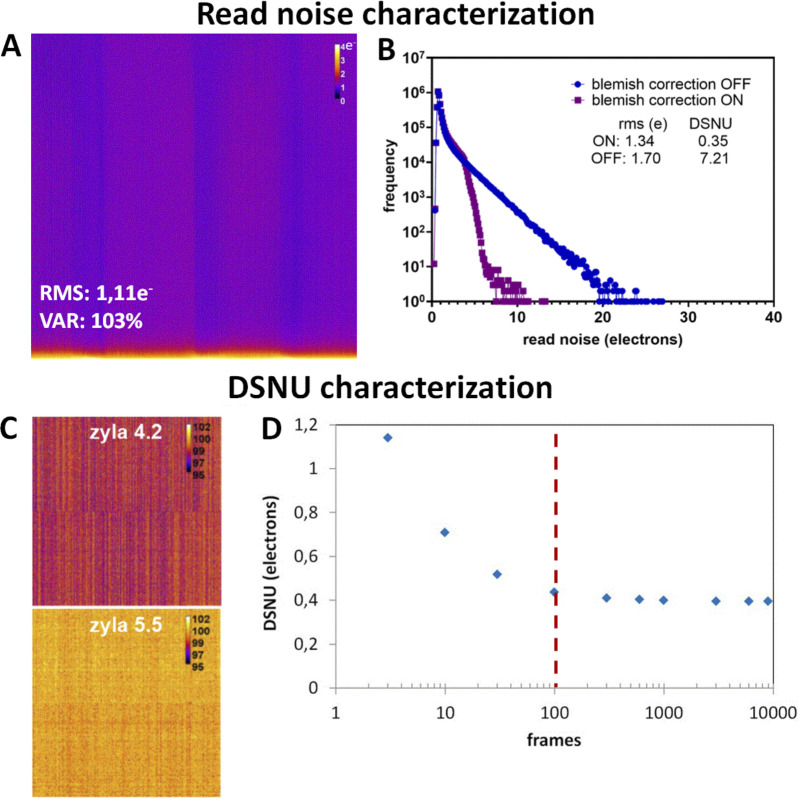

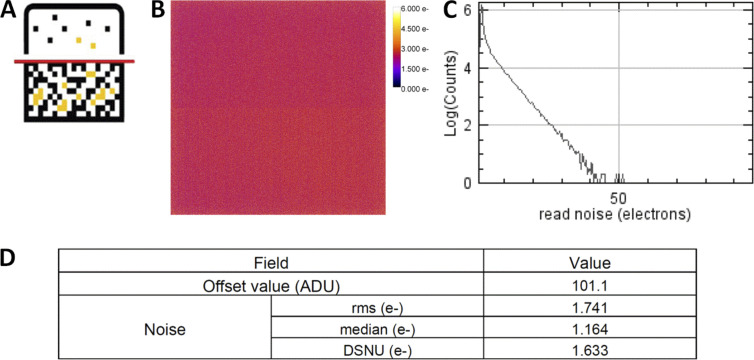

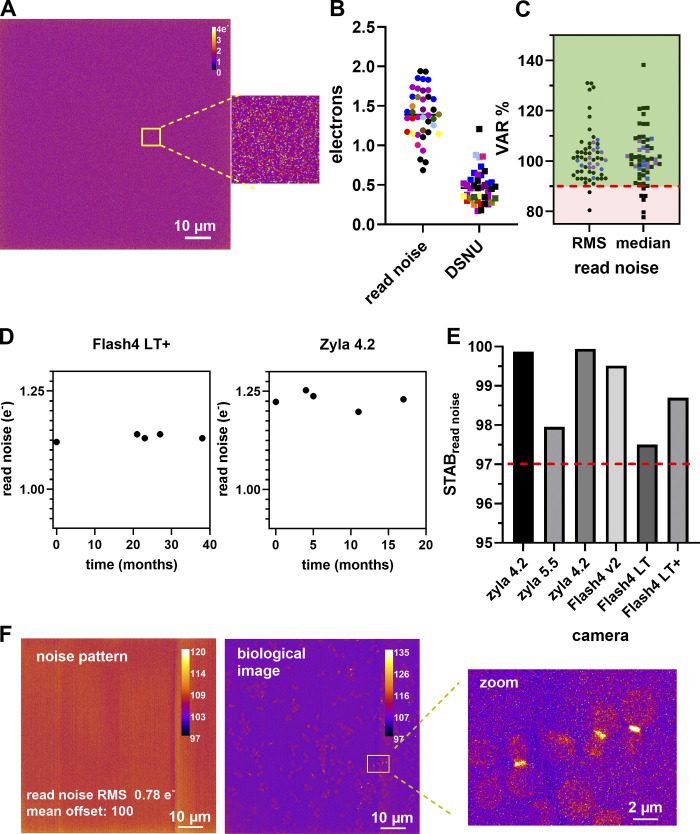

Interestingly, we also showed that coregistration shifts can arise from issues unrelated to the objective. The data point indicated by the blue arrow (ratios B-G: 1.44, G-R: 1.34) was obtained with a 63×/1.4 Plan-Apo Zeiss objective on a WF system. After testing the same objective on a different microscope and testing different equivalent filter cubes on that setup, we found that one source of error was the alignment of the filter in the cube. An equivalent cube gave ratios of B-G: 0.19 and G-R: 0.23, confirming that the issue came from the misalignment of the cube components (dichroic mirror).