Abstract

Spiculations/lobulations, sharp/curved spikes on the surface of lung nodules, are good predictors of lung cancer malignancy and hence, are routinely assessed and reported by radiologists as part of the standardized Lung-RADS clinical scoring criteria. Given the 3D geometry of the nodule and 2D slice-by-slice assessment by radiologists, manual spiculation/lobulation annotation is a tedious task and thus no public datasets exist to date for probing the importance of these clinically-reported features in the SOTA malignancy prediction algorithms. As part of this paper, we release a large-scale Clinically-Interpretable Radiomics Dataset, CIRDataset, containing 956 radiologist QA/QC’ed spiculation/lobulation annotations on segmented lung nodules from two public datasets, LIDC-IDRI (N=883) and LUNGx (N=73). We also present an end-to-end deep learning model based on multi-class Voxel2Mesh extension to segment nodules (while preserving spikes), classify spikes (sharp/spiculation and curved/lobulation), and perform malignancy prediction. Previous methods have performed malignancy prediction for LIDC and LUNGx datasets but without robust attribution to any clinically reported/actionable features (due to known hyperparameter sensitivity issues with general attribution schemes). With the release of this comprehensively-annotated CIRDataset and end-to-end deep learning baseline, we hope that malignancy prediction methods can validate their explanations, benchmark against our baseline, and provide clinically-actionable insights. Dataset, code, pretrained models, and docker containers are available at https://github.com/nadeemlab/CIR.

Keywords: Lung Nodule, Spiculation, Malignancy Prediction

1. Introduction

In the United States, lung cancer is the leading cause of cancer death [14]. Recently, radiomics and deep learning studies have been proposed for a variety of clinical applications, including malignancy prediction for nodules found in lung cancer screening [5,8,9,11]. The likelihood of malignancy is influenced by the radiographic edge characteristics of a pulmonary nodule, particularly spiculation. Benign nodule borders are usually well-defined and smooth, whereas malignant nodule borders are frequently blurry and irregular. The American College of Radiology (ACR) created the Lung Imaging Reporting and Data System (Lung-RADS) to standardize lung cancer screening on CT images based on size, appearance type, and calcification [7]. Spiculation has been proposed as an additional image finding that raises the suspicion of malignancy and allows for more precise prediction. Spiculation (also known as the sunburst or corona radiata sign) is caused by interlobular septal thickening, fibrosis caused by pulmonary artery obstruction, or lymphatic channels packed with tumor cells. It has a positive predictive value for malignancy of up to 90%. Another feature significantly linked to malignancy is lobulation, which is associated with varied or uneven growth rates [15].

Spiculation/lobulation quantification has previously been studied [8,10,13] but not in an end-to-end deep learning malignancy prediction context. Similarly, previous methods [17] have performed malignancy prediction alone but without robust attribution to clinically-reported/actionable features (due to known hyperparameter sensitivity issues and variability in general attribution/explanation schemes [3,4]). To probe the importance of spiculation/lobulation in the context of malignancy prediction and bypass reliance on sensitive/variable saliency maps, first we release a large-scale Clinically-Interpretable Radiomics Dataset, CIRDataset, containing 956 QA/QC’ed spiculation/lobulation annotations on segmented lung nodules for two public datasets, LIDC-IDRI (with visual radiologist malignancy RM scores for the entire cohort and pathology-proven malignancy PM labels for a subset) and LUNGx (with pathology-proven size-matched benign/malignant nodules to remove the effect of size on malignancy prediction). Second, we present a multi-class Voxel2Mesh [16] extension to provide a good baseline for end-to-end deep learning lung nodule segmentation (while preserving spikes), spikes classification (lobulation/spiculation), and malignancy prediction; Voxel2Mesh [16] is the only published method to our knowledge that preserves spikes during segmentation and hence its use as our base model. With the release of this comprehensively-annotated dataset and end-to-end deep learning baseline, we hope that malignancy prediction methods can validate their explanations, benchmark against our baseline, and provide clinically-actionable insights. Dataset, code, pretrained models, and docker containers are available at https://github.com/nadeemlab/CIR.

2. CIRDataset

Rather than relying on traditional radiomics features that are difficult to reproduce and standardize across same/different patient cohorts [12], this study focuses on standardized/reproducible Lung-RADS clinically-reported and interpretable features (spiculation/lobulation, sharp/curved spikes on the surface of the nodule). Given the 3D geometry of the nodule and 2D slice-by-slice assessment by radiologists, manual spiculation/lobulation annotation is a tedious task and thus no public datasets exist to date for probing the importance of these clinically-reported features in the SOTA malignancy prediction algorithms.

We release a large-scale dataset with high-quality lung nodule segmentation masks and spiculation/lobulation annotations for the LIDC (N=883) and LUNGx (N=73) datasets. The spiculation/lobulation annotations were computed automatically, using the negative area distortion metric from spherical parameterization [8] on meshes generated from the nodule segmentation masks, and then QA/QC’ed by an expert. Specifically, (1) the nodule segmentation masks were rescaled to an isotropic voxel size using the CT image’s finest spacing to preserve detail, (2) an isosurface was extracted from the rescaled segmentation masks to construct a 3D mesh model, (3) spherical parameterization was applied to extract the area distortion map of the nodule (computed from the log ratio of the input and the spherically mapped triangular mesh faces), and (4) spikes were detected on the mesh surface (negative area distortion) and classified into spiculation, lobulation, and other. Because the area distortion map and spike classification map were generated on a mesh model, they had to be voxelized before deep learning model training. The voxelized area distortion map was divided into two masks: the nodule base (ε > 0) and the spikes (ε ≤ 0). Then, in the spikes mask, the spiculation and lobulation classes were voxelized from the vertex classification map, while the other class was ignored and treated as nodule base.
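Steps (3) and (4) can be illustrated with a minimal sketch. It is not the released pipeline: the function names are hypothetical, the sign convention (log of mapped area over input area, after normalizing both meshes to unit total area) is an assumption chosen so that faces compressed on the sphere get ε < 0, and the split is done per face here rather than on the voxelized map.

```python
import math

def triangle_area(a, b, c):
    """Area of a 3D triangle: half the norm of the cross product of two edges."""
    ux, uy, uz = (b[i] - a[i] for i in range(3))
    vx, vy, vz = (c[i] - a[i] for i in range(3))
    cx = uy * vz - uz * vy
    cy = uz * vx - ux * vz
    cz = ux * vy - uy * vx
    return 0.5 * math.sqrt(cx * cx + cy * cy + cz * cz)

def area_distortion(input_faces, sphere_faces):
    """Per-face log area ratio between the spherical map and the input mesh.

    Assumed convention (not stated in the text): eps = log(mapped / input),
    with both meshes normalized to unit total area.
    """
    in_areas = [triangle_area(*f) for f in input_faces]
    sp_areas = [triangle_area(*f) for f in sphere_faces]
    in_total, sp_total = sum(in_areas), sum(sp_areas)
    return [math.log((s / sp_total) / (a / in_total))
            for a, s in zip(in_areas, sp_areas)]

def split_base_and_spikes(eps):
    """Indices of nodule-base faces (eps > 0) and spike faces (eps <= 0)."""
    base = [k for k, e in enumerate(eps) if e > 0]
    spikes = [k for k, e in enumerate(eps) if e <= 0]
    return base, spikes

# Toy example: the second input face is large but maps to the same
# area as the first on the sphere, so it is compressed (eps < 0).
input_faces = [((0, 0, 0), (1, 0, 0), (0, 1, 0)),
               ((0, 0, 0), (2, 0, 0), (0, 2, 0))]
sphere_faces = [((0, 0, 0), (1, 0, 0), (0, 1, 0)),
                ((0, 0, 0), (1, 0, 0), (0, 1, 0))]
eps = area_distortion(input_faces, sphere_faces)
base, spikes = split_base_and_spikes(eps)
```

In this toy case the compressed face lands in the spikes list, mirroring the ε ≤ 0 mask described above.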

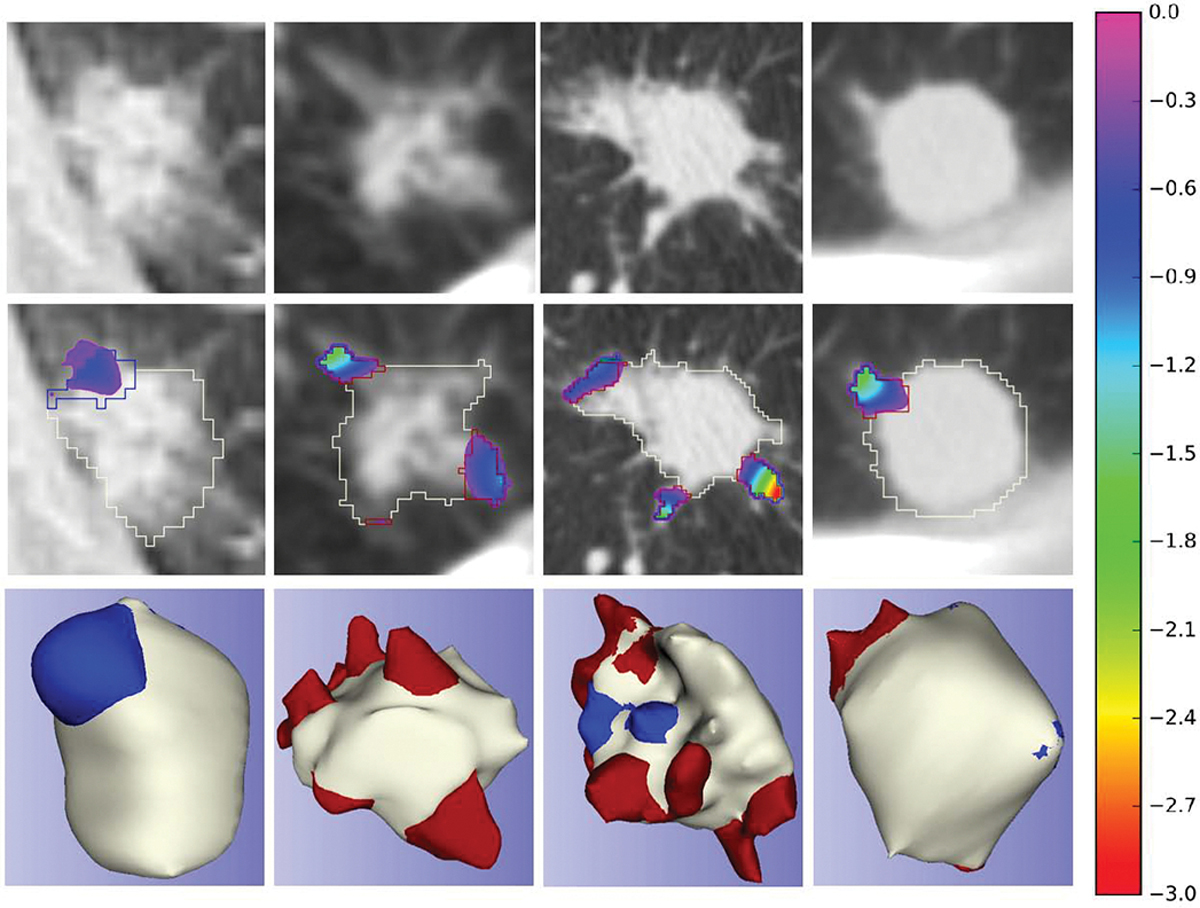

Following [8], we applied semi-automated segmentation to the largest nodules in each LIDC-IDRI patient scan [1,2] for more reproducible spiculation quantification, and calculated a consensus segmentation using STAPLE to combine the multiple radiologist contours. LUNGx provides only the nodule’s location, not a segmentation mask, so we applied the same semi-automated segmentation method used for LIDC to obtain the segmentation masks. All of these segmentation masks are released in CIRDataset. The complete pipeline for generating annotations from scratch on LIDC/LUNGx or private datasets can also be found on our CIR GitHub, along with preprocessed data for the different stages. Samples of the dataset, including area distortion maps (computed with our spherical parameterization method), are shown in Figure 1.

Fig. 1.

Nodule spiculation quantification dataset samples; the first row - input CT image; the second row - superimposed area distortion map [8] and contours of each classification on the input CT image; the third row - 3D mesh model with vertex classifications; red: spiculations, blue: lobulations, white: nodule

3. Method

Several deep learning voxel/pixel segmentation algorithms have been proposed in the past, but most of these algorithms tend to smooth out the high-frequency spikes that constitute spiculation and lobulation features (Voxel2Mesh [16] is the only exception to date that preserves these spikes). On a random LIDC training/validation split, the Jaccard index for nodule segmentation via UNet, FPN, and Voxel2Mesh was 0.775/0.537, 0.685/0.592, and 0.778/0.609, respectively, and for peak segmentation it was 0.450/0.203, 0.332/0.236, and 0.493/0.476, respectively.
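For reference, the Jaccard index used in this comparison is the intersection over union of the predicted and ground-truth masks. The sketch below assumes flattened binary masks and uses a hypothetical function name; it is not taken from the released code.

```python
def jaccard_index(pred, truth):
    """Intersection over union of two binary masks given as flat 0/1 sequences."""
    if len(pred) != len(truth):
        raise ValueError("masks must have the same number of voxels")
    intersection = sum(1 for p, t in zip(pred, truth) if p and t)
    union = sum(1 for p, t in zip(pred, truth) if p or t)
    # Two empty masks are treated as a perfect match (a convention, assumed here).
    return intersection / union if union else 1.0

# One voxel shared, three voxels covered by either mask.
score = jaccard_index([1, 1, 0, 0], [1, 0, 1, 0])
```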

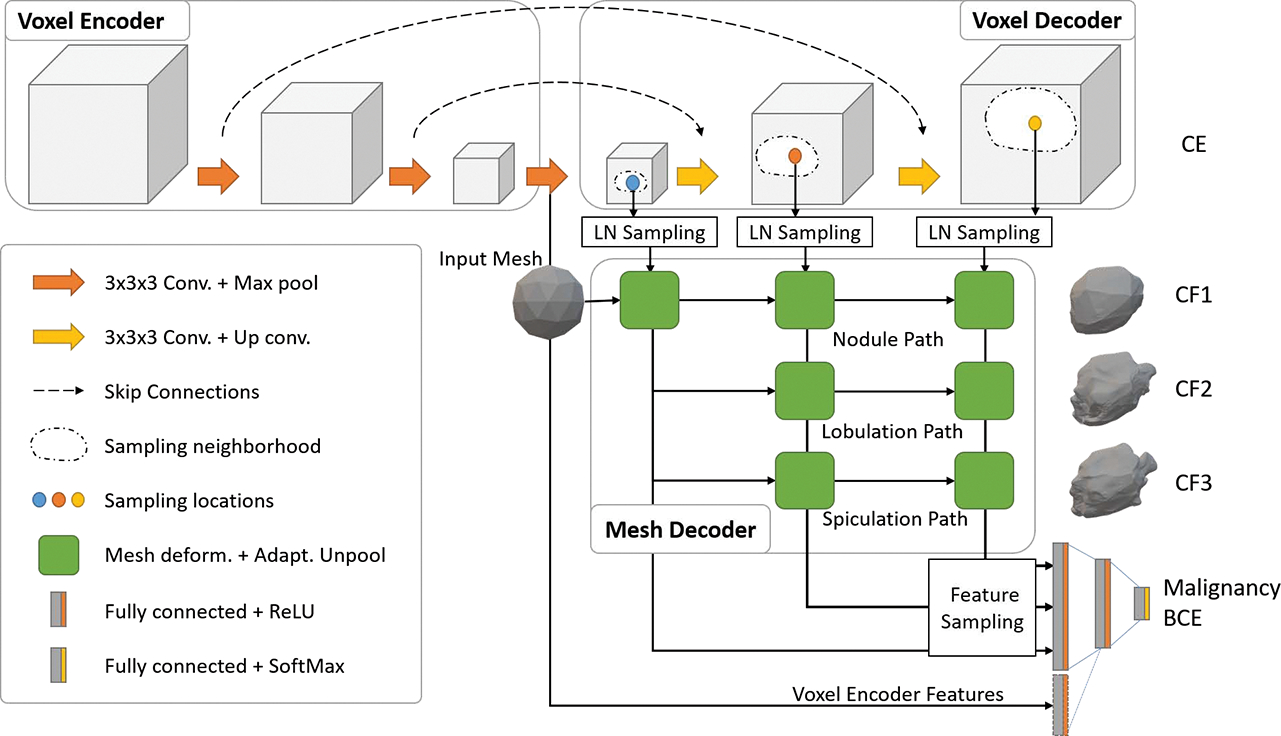

Using Voxel2Mesh as our base model, we present a multi-class Voxel2Mesh extension that takes a 3D CT volume as input and returns a segmented 3D nodule surface mesh (preserving spikes), vertex-level spiculation/lobulation classification, and a binary benign/malignant prediction. Implementation details, code, and trained models can be found on our CIR GitHub.

3.1. Multi-class Mesh Decoder

Voxel2Mesh [16] can generate a mesh model and a voxel segmentation of the target object simultaneously. However, Voxel2Mesh only allows multi-class segmentation of different, disjoint objects. This paper presents a multi-class mesh decoder that enables multi-class segmentation within a single object. The multi-class decoder segments a baseline model first, then deforms it to include spiculation and lobulation spikes. Traditional voxel segmentation and mesh decoders were unable to capture nodule surface spikes because they attempted to provide a smooth and tight surface of the target object. To capture spikes and classify them into lobulations and spiculations, we added extra deformation modules to the mesh decoder. The mesh decoder deforms the input sphere mesh to segment the nodule, with the deformation controlled by the chamfer distance between the vertices on the mesh and the ground truth nodule vertices, together with regularization (Laplacian, edge, and normal consistency). After the mesh decoder generates a nodule surface at each level, the model deforms the nodule surface to capture lobulations and spiculations. The chamfer distance loss (chamfer_loss) between the ground truth lobulation and spiculation vertices and the deformed mesh is used to assess the extra deformations. To capture spikes, we reduced the regularization for the extra deformation to allow free deformation. For lobulation and spiculation, we classified each vertex based on the distance between the same vertex on the nodule surface and on the deformed surface. The mean cross entropy loss (ce_loss) between the final mesh vertices and the ground truth vertices is used to evaluate the vertex classification. Deep shape features were extracted during the multi-class mesh decoding for spiculation quantification and subsequently used to predict malignancy.
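The two geometric quantities in this section can be sketched as follows. This is an illustration under stated assumptions, not the released implementation: the chamfer distance is written in one common symmetric form (mean squared nearest-neighbor distance in both directions), which may differ from the exact variant and weighting used in Voxel2Mesh, and the function names are hypothetical. How the per-vertex displacement is turned into a lobulation or spiculation label is not specified in the text, so the sketch stops at the displacement.

```python
import math

def _squared_distance(p, q):
    """Squared Euclidean distance between two points of equal dimension."""
    return sum((pi - qi) ** 2 for pi, qi in zip(p, q))

def chamfer_distance(points_a, points_b):
    """Symmetric chamfer distance between two point sets (assumed form).

    Each point is matched to its nearest neighbor in the other set, and
    the mean squared distances of both directions are added.
    """
    a_to_b = sum(min(_squared_distance(p, q) for q in points_b)
                 for p in points_a) / len(points_a)
    b_to_a = sum(min(_squared_distance(q, p) for p in points_a)
                 for q in points_b) / len(points_b)
    return a_to_b + b_to_a

def vertex_displacements(base_vertices, deformed_vertices):
    """Distance each vertex moved between the nodule surface and the deformed surface.

    Both lists must be in the same vertex order, since the comparison is
    between the same vertex before and after the extra deformation.
    """
    return [math.sqrt(_squared_distance(p, q))
            for p, q in zip(base_vertices, deformed_vertices)]

# Toy example: the second vertex is pulled outward by one unit.
base = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
deformed = [(0.0, 0.0, 0.0), (1.0, 0.0, 1.0)]
loss = chamfer_distance(base, deformed)
moved = vertex_displacements(base, deformed)
```

The brute-force nearest-neighbor search is quadratic in the number of vertices, which is fine for a sketch but would normally be replaced by a batched GPU implementation during training.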

3.2. Malignancy prediction

LIDC provides pathology-proven malignancy labels (PM, strong labels) for only a small subset of the data (LIDC-PM, N=72), whereas LUNGx provides them for the entire dataset (N=73). LIDC also provides weakly labeled radiological malignancy scores (RM) for the entire dataset (N=883). The strongly labeled cases are too few to train a deep learning model, whereas the RM cases are sufficient to train a data-intensive deep learning model. We therefore used LIDC-RM to train and validate the model. Because the RM score is graded on a five-point scale, we binarized it with the threshold RM>3 (moderately suspicious to highly suspicious) to match the PM binary classification.
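The RM>3 binarization rule can be written down directly. The sketch below uses a hypothetical function name and assumes that scores lie on the five-point scale from 1 to 5; whether non-integer (for example, averaged) scores occur in practice is not stated in the text, so the function simply accepts any value in that range.

```python
def binarize_rm(score):
    """Map a five-point radiological malignancy score to a binary label.

    Returns 1 (malignant) when the score is above 3, otherwise 0 (benign).
    """
    if not 1 <= score <= 5:
        raise ValueError("RM score must be between 1 and 5")
    return 1 if score > 3 else 0

# A score of exactly 3 stays on the benign side of the threshold.
labels = [binarize_rm(s) for s in (1, 2, 3, 4, 5)]
```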

Mesh Feature Classifier

We extracted a fixed-size feature vector for malignancy classification by sampling 1000 vertices from each mesh model based on their order. The earlier vertices come straight from the input mesh, while the later vertices are added by unpooling from previous layers. Less important vertices are removed by the learned neighborhood sampling. Using 32 features for each vertex, the mesh decoder deforms the input mesh to capture the nodule, lobulations, and spiculations, respectively. A total of 96 (32 × 3) features are extracted for each vertex. The feature vector is classified as malignant or benign using Softmax classification with two fully connected layers, and the results are evaluated using binary cross entropy loss (bce_loss). The model was trained end-to-end using a total loss that combines the loss terms introduced above (chamfer_loss, ce_loss, the regularization terms, and bce_loss) with the default Voxel2Mesh [16] weights.

Hybrid (voxel+mesh) Feature Classifier

The features from the last UNet encoder layer (256 × 4 × 4 × 4 = 16384) were flattened, concatenated with the mesh features, and fed into the last three fully connected layers to predict malignancy, as shown in Table 1. This leads to a total of 112384 (16384 + 96000) input features to the classifier, which otherwise remains the same as before. The motivation behind this hybrid feature classifier was to test whether low-level voxel-based deep features from the encoder, used in addition to the higher-level shape features extracted from the mesh decoder, help malignancy prediction.

Table 1.

Malignancy prediction model using mesh features only and using mesh and encoder features

| Network | Layer | Input | Output | Activation |
|---|---|---|---|---|
| Mesh Only | FC Layer1 | 96000 | 512 | ReLU |
| Mesh Only | FC Layer2 | 512 | 128 | ReLU |
| Mesh Only | FC Layer3 | 128 | 2 | Softmax |
| Mesh+Encoder | FC Layer1 | 112384 | 512 | ReLU |
| Mesh+Encoder | FC Layer2 | 512 | 128 | ReLU |
| Mesh+Encoder | FC Layer3 | 128 | 2 | Softmax |
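The input sizes in Table 1 follow from the feature counts given in the text. The short sketch below only reproduces that arithmetic and the layer shapes; the constant and function names are hypothetical, and it does not build or train a network.

```python
NUM_SAMPLED_VERTICES = 1000      # vertices sampled from each mesh
FEATURES_PER_DEFORMATION = 32    # features per vertex per deformation
NUM_DEFORMATIONS = 3             # nodule, lobulation, spiculation
ENCODER_SHAPE = (256, 4, 4, 4)   # last UNet encoder layer (channels, D, H, W)

def mesh_feature_size():
    """Length of the flattened mesh feature vector."""
    return NUM_SAMPLED_VERTICES * FEATURES_PER_DEFORMATION * NUM_DEFORMATIONS

def encoder_feature_size():
    """Length of the flattened last-encoder-layer feature vector."""
    size = 1
    for dim in ENCODER_SHAPE:
        size *= dim
    return size

def classifier_layer_shapes(input_size):
    """(input, output) sizes of the three fully connected layers in Table 1."""
    return [(input_size, 512), (512, 128), (128, 2)]

mesh_only = classifier_layer_shapes(mesh_feature_size())
hybrid = classifier_layer_shapes(mesh_feature_size() + encoder_feature_size())
```

Only the first layer differs between the two classifiers; the remaining layers are identical, as stated in the text.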

4. Results and Discussion

All implementations were created using PyTorch. After separating the 72 strongly labeled cases (LIDC-PM) for testing, we divided the remaining LIDC dataset into train and validation subsets and trained on NVIDIA HPC clusters (4 × RTX A6000 (48GB), 2 × AMD EPYC 7763 CPUs (256 threads), and 768GB RAM) for a maximum of 200 epochs. We saved the best model during training based on the Jaccard Index on the validation set. Once fully trained, we tested both trained networks on the LIDC-PM (N = 72) and LUNGx (N = 73) held-out test sets. To estimate the mesh classification (nodule, spiculation, and lobulation) performance, we computed the Jaccard Index and the Chamfer Weighted Symmetric index, and to measure the malignancy classification performance, we computed standard metrics including Area Under ROC Curve (AUC), Accuracy, Sensitivity, Specificity, and F1 score.

Table 2 reports the mesh classification results for the two models. On the LIDC-PM test set, the mesh-only model produces a better Jaccard Index for nodule (0.561 vs 0.558), spiculation (0.553 vs 0.541), and lobulation classification (0.510 vs 0.507). The opposite trend is observed in the Chamfer distance metric. On the external LUNGx test dataset (N=73), the hybrid voxel classifier model does better in terms of the Chamfer distance metric for all three classes. The results for malignancy prediction are reported in Table 3. On the LIDC-PM test dataset, the hybrid features network produces an AUC of 0.813 with an accuracy of 79.17%. The mesh-only features model, on the other hand, does slightly worse in terms of AUC (0.790) and produces a lower accuracy (70.83%). On the external LUNGx test dataset, the hybrid features network does better in terms of the AUC and sensitivity metrics. This is likely because the hybrid features model uses voxel-level deep features in classification and no LUNGx data are used in training. There may also be differences in CT scanning protocols and/or scanner properties that are never seen during training.
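As a worked check of how the threshold-based metrics relate to each other, the sketch below computes accuracy, sensitivity, specificity, and F1 from confusion-matrix counts. The counts in the example (29 true positives, 12 false negatives, 28 true negatives, 3 false positives) are not stated in the paper; they are back-calculated here from the percentages reported for the Mesh+Encoder model on LIDC-PM (N=72) in Table 3 and should be read as an inference. The function name is hypothetical.

```python
def classification_metrics(tp, fn, tn, fp):
    """Standard binary metrics from confusion-matrix counts.

    Assumes at least one positive case, one negative case, and one
    positive prediction, so that no denominator is zero.
    """
    sensitivity = tp / (tp + fn)            # recall on malignant cases
    specificity = tn / (tn + fp)            # recall on benign cases
    accuracy = (tp + tn) / (tp + fn + tn + fp)
    precision = tp / (tp + fp)
    f1 = 2 * precision * sensitivity / (precision + sensitivity)
    return {
        "accuracy": accuracy,
        "sensitivity": sensitivity,
        "specificity": specificity,
        "f1": f1,
    }

# Counts inferred from the reported percentages (41 malignant, 31 benign).
metrics = classification_metrics(tp=29, fn=12, tn=28, fp=3)
percentages = {name: round(100 * value, 2) for name, value in metrics.items()}
```

With these inferred counts the four values round to 79.17, 70.73, 90.32, and 79.45, matching the corresponding row of Table 3. AUC is not covered here because it depends on the full ranking of predicted scores rather than on a single threshold.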

Table 2.

Nodule (Class0), spiculation (Class1), and lobulation (Class2) peak classification metrics

| Dataset split | Network | Chamfer Weighted Symmetric↓ (Class0) | Chamfer Weighted Symmetric↓ (Class1) | Chamfer Weighted Symmetric↓ (Class2) | Jaccard Index↑ (Class0) | Jaccard Index↑ (Class1) | Jaccard Index↑ (Class2) |
|---|---|---|---|---|---|---|---|
| Training | Mesh Only | 0.009 | 0.010 | 0.013 | 0.507 | 0.493 | 0.430 |
| Training | Mesh+Encoder | 0.008 | 0.009 | 0.011 | 0.488 | 0.456 | 0.410 |
| Validation | Mesh Only | 0.010 | 0.011 | 0.014 | 0.526 | 0.502 | 0.451 |
| Validation | Mesh+Encoder | 0.014 | 0.015 | 0.018 | 0.488 | 0.472 | 0.433 |
| Testing LIDC-PM (N=72) | Mesh Only | 0.011 | 0.011 | 0.014 | 0.561 | 0.553 | 0.510 |
| Testing LIDC-PM (N=72) | Mesh+Encoder | 0.009 | 0.010 | 0.012 | 0.558 | 0.541 | 0.507 |
| Testing LUNGx (N=73) | Mesh Only | 0.029 | 0.028 | 0.030 | 0.502 | 0.537 | 0.545 |
| Testing LUNGx (N=73) | Mesh+Encoder | 0.017 | 0.017 | 0.019 | 0.506 | 0.523 | 0.525 |

Table 3.

Malignancy prediction metrics.

| Dataset split | Network | AUC | Accuracy (%) | Sensitivity (%) | Specificity (%) | F1 (%) |
|---|---|---|---|---|---|---|
| Training | Mesh Only | 0.885 | 80.25 | 54.84 | 93.04 | 65.03 |
| Training | Mesh+Encoder | 0.899 | 80.71 | 55.76 | 93.27 | 65.94 |
| Validation | Mesh Only | 0.881 | 80.37 | 53.06 | 92.11 | 61.90 |
| Validation | Mesh+Encoder | 0.808 | 75.46 | 42.86 | 89.47 | 51.22 |
| Testing LIDC-PM (N=72) | Mesh Only | 0.790 | 70.83 | 56.10 | 90.32 | 68.66 |
| Testing LIDC-PM (N=72) | Mesh+Encoder | 0.813 | 79.17 | 70.73 | 90.32 | 79.45 |
| Testing LUNGx (N=73) | Mesh Only | 0.733 | 68.49 | 80.56 | 56.76 | 71.60 |
| Testing LUNGx (N=73) | Mesh+Encoder | 0.743 | 65.75 | 86.11 | 45.95 | 71.26 |

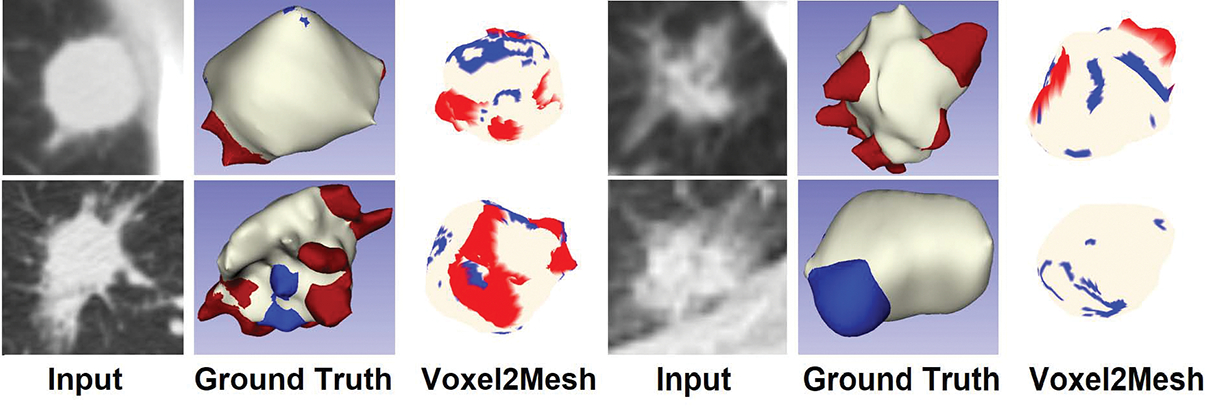

Figure 3 shows segmentation results using the proposed method, as well as ground truth mesh models with spiculation and lobulation classifications for comparison. The proposed method segmented nodules accurately while also detecting spiculations and lobulations at the vertex level. The vertex-level classification, however, detects only pockets of spiculations and lobulations rather than a contiguous whole. In the future, we will use mesh segmentation to solve this problem by exploiting the features of classified vertices and the relationship between neighboring vertices in the mesh model.

Fig. 3.

Results of nodule segmentation and vertex classification; the first column - input CT image; the second column - 3D mesh model with vertex classifications (ground truth); the third column - 3D mesh model with vertex classifications (predictions); red: spiculations, blue: lobulations, white: nodule

Previous works have performed malignancy prediction on the LIDC and LUNGx datasets but, again, without any robust attribution to clinically-reported features. For reference, NoduleX [6] reported results only on the LIDC RM cohort, not the PM subset. When we ran the NoduleX pre-trained model (http://bioinformatics.astate.edu/NoduleX) on the LIDC PM subset, the AUC, accuracy, sensitivity, and specificity were 0.68, 0.68, 0.78, and 0.55, respectively, versus 0.73, 0.68, 0.81, and 0.57 for ours. On LUNGx, the AUC for NoduleX was 0.67 versus 0.73 for ours. MV-KBC [17] (implementation not available) reported the best malignancy prediction numbers, with 0.77 AUC on LUNGx and 0.88 on LIDC RM (not PM).

In this work, we have focused on lung nodule spiculation/lobulation quantification via the Lung-RADS scoring criteria. In the future, we will extend our framework to breast nodule spiculation/lobulation quantification and malignancy prediction via BI-RADS scoring criteria (which has similar features). We will also extend our framework for advanced lung/breast cancer recurrence and outcomes prediction via spiculation/lobulation quantification.

Fig. 2.

Depiction of the end-to-end deep learning architecture based on the multi-class Voxel2Mesh extension. The standard UNet-based voxel encoder/decoder (top) extracts features from the input CT volumes, while the mesh decoder deforms an initial spherical mesh into increasingly finer resolution meshes matching the target shape. The mesh deformation utilizes feature vectors sampled from the voxel decoder through the Learned Neighborhood (LN) Sampling technique and also performs adaptive unpooling with increased vertex counts in high-curvature areas. We extend the architecture by introducing extra mesh decoder layers for spiculation and lobulation classification. We also sample vertices (shape features) from the final mesh unpooling layer as input to the fully connected malignancy prediction network. We optionally add deep voxel features from the last voxel encoder layer to the malignancy prediction network.

Acknowledgements:

This project was supported by the MSK Cancer Center Support Grant/Core Grant (P30 CA008748) and by the Sidney Kimmel Cancer Center Support Grant (P30 CA056036).

References

1. Armato SG, McLennan G, Bidaut L, McNitt-Gray MF, Meyer CR, et al.: The lung image database consortium (LIDC) and image database resource initiative (IDRI): A completed reference database of lung nodules on CT scans. Medical Physics 38(2), 915–931 (2011). 10.1118/1.3528204
2. Armato SG, McLennan G, Bidaut L, McNitt-Gray MF, Meyer CR, et al.: Data from LIDC-IDRI. The Cancer Imaging Archive (2015). 10.7937/K9/TCIA.2015.LO9QL9SX
3. Arun N, Gaw N, Singh P, Chang K, Aggarwal M, Chen B, Hoebel K, Gupta S, Patel J, Gidwani M, et al.: Assessing the trustworthiness of saliency maps for localizing abnormalities in medical imaging. Radiology: Artificial Intelligence 3(6) (2021)
4. Bansal N, Agarwal C, Nguyen A: SAM: The sensitivity of attribution methods to hyperparameters. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. pp. 8673–8683 (2020)
5. Buty M, Xu Z, Gao M, Bagci U, Wu A, Mollura DJ: Characterization of lung nodule malignancy using hybrid shape and appearance features. In: International Conference on Medical Image Computing and Computer-Assisted Intervention. pp. 662–670. Springer (2016)
6. Causey JL, Zhang J, Ma S, Jiang B, Qualls JA, Politte DG, Prior F, Zhang S, Huang X: Highly accurate model for prediction of lung nodule malignancy with CT scans. Scientific Reports 8(1), 1–12 (2018)
7. Chelala L, Hossain R, Kazerooni EA, Christensen JD, Dyer DS, White CS: Lung-RADS version 1.1: Challenges and a look ahead, from the AJR special series on radiology reporting and data systems. American Journal of Roentgenology 216(6), 1411–1422 (2021). 10.2214/AJR.20.24807, PMID: 33470834
8. Choi W, Nadeem S, Alam SR, Deasy JO, Tannenbaum A, Lu W: Reproducible and interpretable spiculation quantification for lung cancer screening. Computer Methods and Programs in Biomedicine 200, 105839 (2021). 10.1016/j.cmpb.2020.105839, https://www.sciencedirect.com/science/article/pii/S0169260720316722
9. Choi W, Oh JH, Riyahi S, Liu CJ, Jiang F, Chen W, White C, Rimner A, Mechalakos JG, Deasy JO, Lu W: Radiomics analysis of pulmonary nodules in low-dose CT for early detection of lung cancer. Medical Physics (2018). 10.1002/mp.12820
10. Dhara AK, Mukhopadhyay S, Saha P, Garg M, Khandelwal N: Differential geometry-based techniques for characterization of boundary roughness of pulmonary nodules in CT images. International Journal of Computer Assisted Radiology and Surgery 11(3), 337–349 (2016)
11. Hawkins S, Wang H, Liu Y, Garcia A, Stringfield O, Krewer H, Li Q, Cherezov D, Gatenby RA, Balagurunathan Y, et al.: Predicting malignant nodules from screening CT scans. Journal of Thoracic Oncology 11(12), 2120–2128 (2016)
12. Meyer M, Ronald J, Vernuccio F, Nelson RC, Ramirez-Giraldo JC, Solomon J, Patel BN, Samei E, Marin D: Reproducibility of CT radiomic features within the same patient: influence of radiation dose and CT reconstruction settings. Radiology 293(3), 583–591 (2019)
13. Niehaus R, Raicu DS, Furst J, Armato S: Toward understanding the size dependence of shape features for predicting spiculation in lung nodules for computer-aided diagnosis. Journal of Digital Imaging 28(6), 704–717 (2015)
14. Siegel RL, Miller KD, Jemal A: Cancer statistics, 2019. CA: A Cancer Journal for Clinicians 69(1), 7–34 (2019). 10.3322/caac.21551
15. Snoeckx A, Reyntiens P, Desbuquoit D, Spinhoven MJ, Schil PEYV, van Meerbeeck JP, Parizel PM: Evaluation of the solitary pulmonary nodule: size matters, but do not ignore the power of morphology. Insights into Imaging 9, 73–86 (2017)
16. Wickramasinghe U, Remelli E, Knott G, Fua P: Voxel2Mesh: 3D mesh model generation from volumetric data. In: International Conference on Medical Image Computing and Computer-Assisted Intervention. pp. 299–308. Springer (2020)
17. Xie Y, Xia Y, Zhang J, Song Y, Feng D, Fulham M, Cai W: Knowledge-based collaborative deep learning for benign-malignant lung nodule classification on chest CT. IEEE Transactions on Medical Imaging 38(4), 991–1004 (2018)