Abstract

In recent years, well-test research has seen several efforts to automate reservoir model identification and characterization using computer-assisted models. Since reservoir model identification is a classification problem while characterization is a regression task, accomplishing both simultaneously is challenging. This work combines genetic algorithm optimization and artificial neural networks to identify and characterize homogeneous reservoir systems from well-testing data automatically. A total of eight prediction models, including two classifiers and six regressors, have been trained. Simulated well-test pressure derivatives with varying noise percentages comprise the training samples. Feature selection and hyperparameter tuning have been performed using the genetic algorithm to enhance the prediction accuracy. The models were validated using nine simulated and one real-field test case. The optimized classifier identifies all the reservoir models with a classification accuracy higher than 79%. In addition, the statistical analysis confirms that the optimized regressors perform the reservoir characterization with mean relative errors lower than 4.5%. The minimized manual interference reduces human bias, and the models have significant noise tolerance for practical applications.

Subject terms: Engineering, Fossil fuels

Introduction

Underground fossil resources, including gas1, gas condensate2, oil3, and coal4, are essential to satisfy energy demand in domestic, transportation, and industrial applications. These energy resources must be managed efficiently so that the maximum fluid can be extracted5. Accurate information about these highly heterogeneous systems is a prerequisite for reservoir management. Core analysis6, well-logging7, seismic8, and well-testing9 are the main available techniques for gathering information about hydrocarbon reservoirs for efficient management. Since well testing is a dynamic operation and helps estimate the average properties of a hydrocarbon reservoir over the drainage area, it has become popular in this regard. During the well-testing operation, the pressure response corresponding to a temporary change in flow rate is recorded over time. Well-test analysis is an inverse solution approach to investigate reservoir characteristics10. It can be achieved by developing analytical or intelligent models of the reservoir which produce output responses similar to those of the existing reservoir systems11.

The well-test data interpretation methods have always been of keen interest to researchers worldwide. The type-curve analysis of the pressure derivatives has been amongst the most popular interpretation techniques12,13. The predictive modeling techniques have evolved to be prominent solutions to the well-test analysis problems14–16.

The artificial neural network (ANN) model was first demonstrated for identifying reservoir models using pressure derivative data17. Kharrat and Razavi trained an ANN using normalized pressure derivative data to recognize homogeneous and dual-porosity reservoir models18. Almaraghi and El-Banbi developed an MLP with a back-propagation algorithm for reservoir model identification19. Ahmadi et al. combined time-series shapelets and machine learning methods (probabilistic neural network, random forest, and support vector machines) to detect reservoir models from pressure transient signals20. The confusion matrix was applied in that study to monitor the classification accuracy of the suggested strategy. All of these studies focused only on reservoir model identification and made no effort to estimate the associated parameters.

On the other hand, some researchers have utilized traditional machine learning methods only to characterize the reservoir parameters. The Horner plot data of build-up tests from conventional and dual-porosity reservoirs was input into an ANN model to predict the initial reservoir pressure, skin, and permeability21. Alajmi and Ertekin attempted to estimate naturally fractured reservoir parameters using an ANN with coefficients of interpolating polynomials of pressure data and measured the model performance using mean relative error22. Adibifard et al. utilized synthetic pressure transient signals of naturally fractured reservoirs to estimate permeability, wellbore storage coefficient, skin factor, interporosity flow coefficient, and storativity ratio23. These studies estimate only some key reservoir parameters and do not address model identification.

The derivative curve characteristics, including the radial flow regime and the hump, were used to distinguish between infinite homogeneous and dual-porosity reservoirs, alongside estimating their parameters using a three-layered ANN24. Since this method uses the characteristic shapes of derivative curves, it needs user knowledge to distinguish reservoir models.

The recent advances in computational models and deep neural networks have provided promising results in different research fields25–30, including well-testing analysis31–35. Convolutional neural networks have many parameters due to vectorizing image input, which results in increasing the computational cost and training time. Standard recurrent neural networks are unsuitable for establishing long-term dependencies across the sequence datasets. The simple architecture of ANN makes it an attractive tool for well-test analysis. Further, gradient descent36–38 and evolutionary39–42 optimization algorithms aid in improving the efficiency of the model.

However, the published well-testing research has not so far reported evolutionary optimization, such as the genetic algorithm (GA), integrated with deep structured prediction models for automatic analysis of noisy pressure transient test data from petroleum reservoirs. In addition, performing reservoir model identification and characterization simultaneously is difficult enough that little research has covered it. This paper investigates GA-optimized ANN (GA-ANN) prediction models to classify homogeneous petroleum reservoirs and simultaneously estimate the associated reservoir parameters. Homogeneous reservoir models with infinite-acting (HO-IA), no-flow (HO-NF), and pressure-supported (HO-PS) boundary conditions have been considered, and 50 × 10³ pressure derivative (∆p′) signals were obtained for each boundary condition. Cumulatively, 150 × 10³ labeled pressure derivative signals and their corresponding reservoir parameter, $\ln(C_D e^{2S})$, have been used. Classifiers and regressors comprising fully connected layers have been developed to perform the predictions. Further, a binary-coded GA has been implemented to tune the hyperparameters and select the best features from the input dataset. The GA-ANN models were then used to make predictions on the test-case data. Comparative performance validation of the ANN and GA-ANN models using ten well-test data sets shows the superiority of the GA-ANN models in reservoir classification and characterization.

Methods

This section describes the data collection and the framework of the prediction models.

Data collection

The pressure signals for HO-IA, HO-NF, and HO-PS reservoirs have been simulated using the mathematical models available in the literature34,43. Stehfest’s algorithm has been used for numerical inversion of the Laplace transformation44. Gaussian noise of 0–2% was added during the data simulation. Table 1 lists the noise levels present in the data samples used for model training, and the ranges of the characteristic parameters are given in Table 2. The time (t), pressure change (∆p), and the corresponding parameter values $\ln(C_D e^{2S})$ were simulated for each reservoir outer boundary condition using an algorithm coded in Python. Each test has 100 pressure data points corresponding to 100 timesteps.
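For readers unfamiliar with the inversion step, the following is a minimal sketch of the Stehfest algorithm in its commonly quoted form. It is not the simulation code used in this work; the function names and the default of 12 terms are assumptions made for illustration, and the check uses a textbook transform pair rather than a reservoir model.

```python
import math


def stehfest_coefficients(n_terms: int = 12) -> list[float]:
    """Return the Stehfest weights V_1..V_N (N must be even)."""
    if n_terms % 2 != 0:
        raise ValueError("n_terms must be even")
    half = n_terms // 2
    weights = []
    for i in range(1, n_terms + 1):
        total = 0.0
        for k in range((i + 1) // 2, min(i, half) + 1):
            numerator = k ** half * math.factorial(2 * k)
            denominator = (
                math.factorial(half - k)
                * math.factorial(k)
                * math.factorial(k - 1)
                * math.factorial(i - k)
                * math.factorial(2 * k - i)
            )
            total += numerator / denominator
        weights.append((-1) ** (i + half) * total)
    return weights


def stehfest_invert(laplace_fn, t: float, n_terms: int = 12) -> float:
    """Approximate f(t) for t > 0 from its Laplace transform F(s)."""
    ln2_over_t = math.log(2.0) / t
    weights = stehfest_coefficients(n_terms)
    return ln2_over_t * sum(
        v * laplace_fn(i * ln2_over_t) for i, v in enumerate(weights, start=1)
    )


# Sanity check on a known pair: F(s) = 1 / (s + 1)  <->  f(t) = exp(-t)
approx = stehfest_invert(lambda s: 1.0 / (s + 1.0), t=1.0)
print(approx, math.exp(-1.0))
```

In a well-test setting, `laplace_fn` would be the Laplace-space pressure solution of the chosen reservoir model, evaluated once per time step.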

Table 1.

Details of noise and number of samples used for classifiers and regressors.

| Reservoir type | Samples with 0% noise (× 10³) | Samples with 1% noise (× 10³) | Samples with 2% noise (× 10³) | Label for classifiers | Total samples for regressors (× 10³) | Total samples for classifiers (× 10³) |
|---|---|---|---|---|---|---|
| HO-IA | 20 | 20 | 10 | 0 | 50 | 150 |
| HO-NF | 20 | 20 | 10 | 1 | 50 | |
| HO-PS | 20 | 20 | 10 | 2 | 50 | |

Table 2.

Range of characteristics considered for data simulation.

| Range | $C_D$ | $S$ | $r_D$ |
|---|---|---|---|
| Minimum | 6.60 × 10² | −1 | 2271 |
| Maximum | 2.70 × 10⁵ | 40 | 3211 |

The ∆p′ of the simulated data samples have been obtained using:

$$\Delta p' = \frac{d(\Delta p)}{d(\ln t)} = t\,\frac{d(\Delta p)}{dt} \qquad (1)$$
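As an illustration of this preprocessing step, the sketch below computes a numerical pressure derivative with respect to ln t, which is the conventional definition in well-test analysis, and shows one way of adding Gaussian noise. Both helper names are hypothetical. The noise model (standard deviation proportional to each sample's magnitude) is an assumption, since the text does not spell out how the noise percentage is defined.

```python
import numpy as np


def add_gaussian_noise(signal, noise_pct, rng=None):
    """Perturb each sample with zero-mean Gaussian noise.

    Assumption: the standard deviation is noise_pct percent of the
    magnitude of each sample; the source does not define this precisely.
    """
    rng = np.random.default_rng() if rng is None else rng
    signal = np.asarray(signal, dtype=float)
    sigma = (noise_pct / 100.0) * np.abs(signal)
    return signal + rng.normal(0.0, 1.0, size=signal.shape) * sigma


def log_derivative(t, delta_p):
    """Numerical d(delta_p)/d(ln t) on a possibly non-uniform time grid."""
    t = np.asarray(t, dtype=float)
    delta_p = np.asarray(delta_p, dtype=float)
    return np.gradient(delta_p, np.log(t))


# A signal of the form a*ln(t) + b has a flat logarithmic derivative equal to a
t = np.logspace(-2, 2, 100)            # 100 time steps, as in each simulated test
delta_p = 0.5 * np.log(t) + 3.0
deriv = log_derivative(t, delta_p)
noisy = add_gaussian_noise(delta_p, noise_pct=1.0, rng=np.random.default_rng(0))
```

Differentiating noisy data amplifies the noise, so practical workflows often smooth the derivative; no smoothing is applied in this sketch.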

The gathered ∆p′ data have been used for training the classifiers and regressors. The classifiers were trained with 150 × 10³ labeled samples, as indicated in Table 1. Each regressor has been trained with 50 × 10³ samples and their corresponding $\ln(C_D e^{2S})$ values. The synthetic data set has been partitioned into 80% training, 10% validation, and 10% test sets for both classifiers and regressors. The data used during GA optimization of the ANN models are presented in Table 3; for GA optimization, these data were split into 90% training and 10% validation.
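The 80/10/10 partition can be sketched as a shuffled index split. The helper name, the fixed seed, and the use of a plain random shuffle (rather than, say, a split stratified by noise level) are assumptions for illustration only.

```python
import numpy as np


def split_indices(n_samples, train_frac=0.8, val_frac=0.1, seed=0):
    """Shuffle sample indices and cut them into train/validation/test parts."""
    rng = np.random.default_rng(seed)
    order = rng.permutation(n_samples)
    n_train = int(round(train_frac * n_samples))
    n_val = int(round(val_frac * n_samples))
    train = order[:n_train]
    val = order[n_train:n_train + n_val]
    test = order[n_train + n_val:]          # whatever remains is the test set
    return train, val, test


# Classifier data set size taken from Table 1 (150 x 10^3 samples)
train_idx, val_idx, test_idx = split_indices(150_000)
print(len(train_idx), len(val_idx), len(test_idx))
```

Passing `train_frac=0.9, val_frac=0.1` gives the two-way split described for the GA optimization stage, with an empty test part.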

Table 3.

Details of noise and number of samples for GA optimization of the models.

| Reservoir type | Samples with 0% noise (× 10³) | Samples with 1% noise (× 10³) | Samples with 2% noise (× 10³) | Label for classifiers | Total samples for regressors (× 10³) | Total samples for classifiers (× 10³) |
|---|---|---|---|---|---|---|
| HO-IA | 5 | 5 | 5 | 0 | 15 | 45 |
| HO-NF | 5 | 5 | 5 | 1 | 15 | |
| HO-PS | 5 | 5 | 5 | 2 | 15 | |

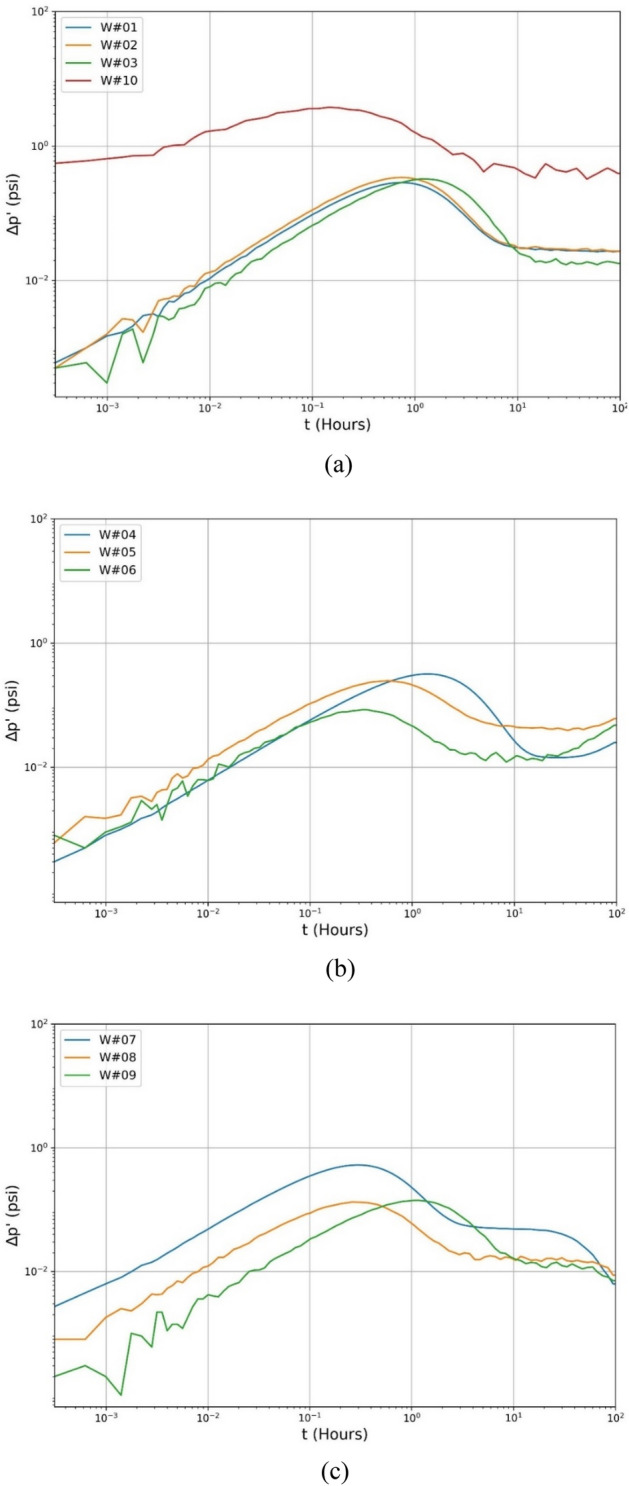

The optimized GA-ANN models have been trained with the data described in Table 1. Ten test cases, comprising nine simulated data sets and one real-field data set45, have been considered to evaluate each ANN and GA-ANN model. The logarithmic plots of these cases are reproduced in Fig. 1a–c. W#01, W#04, and W#07 are smooth data, W#02, W#05, and W#08 have 1% noise, and W#03, W#06, and W#09 have 2% noise. The noise level in the real-field data from W#10 is unknown.

Figure 1.

Illustration of ∆p′ curves for the (a) HO-IA, (b) HO-NF, and (c) HO-PS reservoirs.

Model framework

Artificial neural network

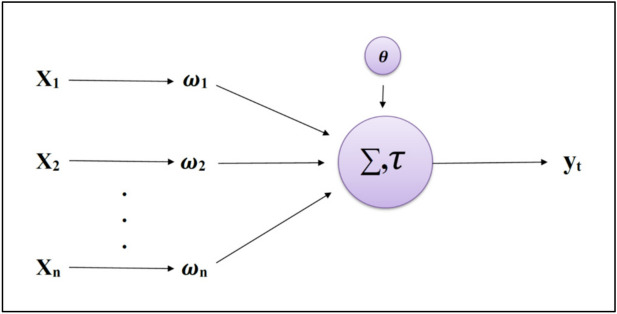

ANNs are biologically inspired computer programs that learn by discovering relationships and patterns in data. The ANN architecture comprises individual units (neurons) joined by weighted connections and arranged in layers. The connection weights are adjusted during training until the prediction error is minimized. As the complexity of the model grows, the number of layers increases, which is why the network is known as a multi-layer perceptron. The simplest form of ANN has one input, one hidden, and one output layer. The input layer takes the signals and transmits them to the hidden layer. The hidden layer processes the input according to its activation function, and finally, the output layer provides the prediction. Figure 2 illustrates the perceptron. The computation performed at each neuron in the layers of the ANN is:

$$y_t = \tau\left(\sum_{i=1}^{N} \omega_{ti} X_i + \theta_t\right) \qquad (2)$$

where $\omega_{ti}$ is the $i$th synapse (weight) of the $t$th neuron, $\theta_t$ is the bias of the $t$th neuron, $X_i$ is the $i$th input of the $t$th neuron, $N$ is the number of inputs, $\tau$ is the activation function, and $y_t$ is the output.
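The neuron computation defined by these symbols (a weighted sum of the inputs plus a bias, passed through the activation function) can be written in a few lines. This is a minimal sketch with made-up numbers; the function names are illustrative and not taken from the authors' code.

```python
import numpy as np


def neuron_output(inputs, weights, bias, activation):
    """y_t = activation(sum_i w_ti * x_i + theta_t) for a single neuron."""
    z = np.dot(weights, inputs) + bias
    return activation(z)


def relu(z):
    """Rectified linear unit, used here as an example activation."""
    return np.maximum(0.0, z)


x = np.array([1.0, 2.0, 3.0])        # N = 3 inputs
w = np.array([0.5, -0.25, 0.25])     # one weight per input
theta = 0.1                          # bias of the neuron
y = neuron_output(x, w, theta, relu)
print(y)
```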

Figure 2.

Illustration of the perceptron.

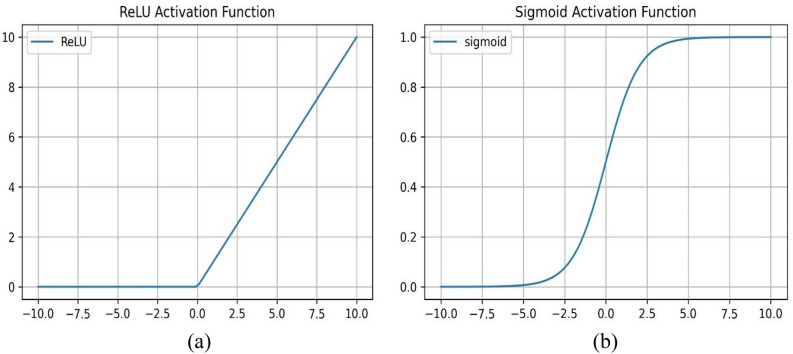

Four ANN structures (a classifier and three regressors) were first trained for 100 iterations. The six-layered classifier model has rectified linear unit (ReLU) activated hidden layers, and the output layer has sigmoid activation (σ). Three-layered regressors, each with a ReLU activation function in the hidden layer and a linear activation function in the output layer, have been trained to estimate $\ln(C_D e^{2S})$ for the HO-IA, HO-NF, and HO-PS reservoirs. The activation functions determine how strongly each neuron responds to its input. ReLU maps its input Z to max(0, Z), so its output lies in [0, ∞), whereas σ maps Z into the interval (0, 1), as displayed schematically in Fig. 3a and b.
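To make the regressor structure concrete, the sketch below runs a forward pass through a network with one ReLU hidden layer and a linear output, and defines the two activations mentioned above. The hidden-layer width of 16, the random weights, and the dummy inputs are hypothetical; the trained networks and their sizes are not reproduced here.

```python
import numpy as np


def relu(z):
    """max(0, z): zero for negative inputs, identity otherwise."""
    return np.maximum(0.0, z)


def sigmoid(z):
    """Logistic function, with outputs strictly between 0 and 1."""
    return 1.0 / (1.0 + np.exp(-z))


def regressor_forward(x, w_hidden, b_hidden, w_out, b_out):
    """Forward pass: input -> ReLU hidden layer -> linear output."""
    hidden = relu(x @ w_hidden + b_hidden)
    return hidden @ w_out + b_out


rng = np.random.default_rng(0)
n_features, n_hidden = 100, 16    # 100 derivative points; 16 is a hypothetical width
w_hidden = rng.normal(scale=0.1, size=(n_features, n_hidden))
b_hidden = np.zeros(n_hidden)
w_out = rng.normal(scale=0.1, size=(n_hidden, 1))
b_out = np.zeros(1)

batch = rng.normal(size=(4, n_features))   # four dummy input curves
prediction = regressor_forward(batch, w_hidden, b_hidden, w_out, b_out)
print(prediction.shape)
```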

Figure 3.

Schematic representation of (a) ReLU and (b) σ activations.

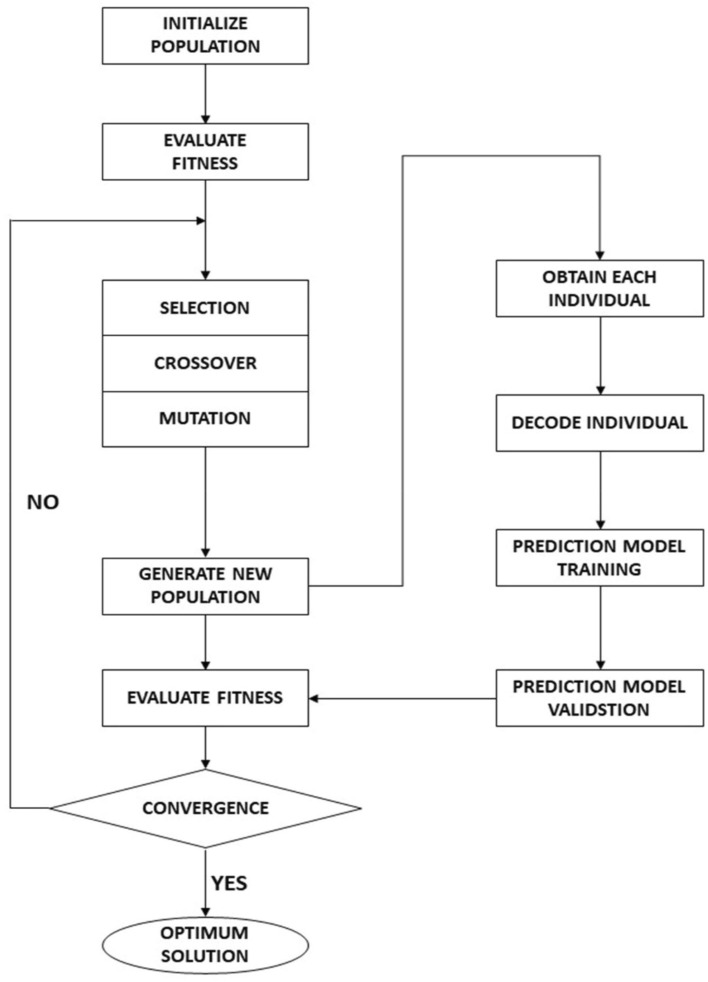

Genetic algorithm

GA is a meta-heuristic structured evolutionary optimization algorithm46 that combines survival of the fittest and a simplified version of the genetic inheritance process to optimize the network weights. Figure 4 represents the typical procedure performed by GA.

Figure 4.

Typical procedure performed by GA.

GA performs random sampling to generate the individual chromosomes of the initial population. The fitness function evaluates the estimation performance of the individuals using machine learning algorithms. The population evolves using genetic operators:

- Selection: The best chromosomes are extracted from the population to generate the mating pool. Available methods to select the chromosomes include roulette wheel selection, rank-based fitness assignment, tournament selection, and elitism. This model uses tournament selection to choose the parent chromosomes for the next operator.

- Crossover: The chromosomes of the parent individuals are swapped to generate offspring using the one-point crossover. The crossover operator ensures that better genetic traits are inherited by future generations.

- Mutation: This operator randomly alters the genes of the selected individual with a probability equal to the mutation rate. It preserves genetic diversity and encourages GA propagation toward global extremes.
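The three operators can be sketched for binary chromosomes stored as Python lists of 0s and 1s. This is an illustrative implementation, not the authors' code: the tournament size `k` and the per-gene interpretation of the mutation rate are assumptions, and higher fitness is taken to be better.

```python
import random


def tournament_selection(population, fitnesses, k=3, rng=random):
    """Return a copy of the fittest of k randomly drawn individuals."""
    contenders = rng.sample(range(len(population)), k)
    winner = max(contenders, key=lambda idx: fitnesses[idx])
    return population[winner][:]


def one_point_crossover(parent_a, parent_b, rng=random):
    """Swap the tails of two equal-length chromosomes at a random cut point."""
    cut = rng.randint(1, len(parent_a) - 1)
    child_a = parent_a[:cut] + parent_b[cut:]
    child_b = parent_b[:cut] + parent_a[cut:]
    return child_a, child_b


def bit_flip_mutation(chromosome, rate, rng=random):
    """Flip each bit independently with probability `rate` (assumed per gene)."""
    return [1 - gene if rng.random() < rate else gene for gene in chromosome]


rng = random.Random(42)
parents = ([0] * 8, [1] * 8)
children = one_point_crossover(*parents, rng=rng)
mutated = bit_flip_mutation(children[0], rate=0.2, rng=rng)
print(children, mutated)
```

For an error metric such as MRE, where lower is better, the fitness passed to `tournament_selection` would be negated or inverted.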

The binary-coded GA optimization has been implemented for feature selection and hyperparameter tuning. The number of neurons in the first three hidden layers of the classifier and regressors has been tuned using the GA. Genetic operators, including tournament selection, one-point crossover, and mutation, have been used, with crossover and mutation probabilities of 0.6 and 0.2, respectively. The GA was run for 500 generations for each ANN classifier and regressor model and was allowed to converge to the best individuals, which represent the optimal solution with the best fitness value. The selected features and hyperparameters from GA optimization have been used to train the optimized GA-ANN models. A total of two classifiers and six regressors have been developed, as indicated in Table 4.
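One way to picture a binary-coded search space is to decode a chromosome into a feature mask or into neuron counts. The encoding below (one bit per input feature, and a fixed number of bits per hidden layer read as an unsigned integer) is a hypothetical scheme chosen for illustration; the chromosome layout actually used in this work is not described in the text.

```python
def decode_feature_mask(chromosome):
    """Indices of the input features whose gene is switched on."""
    return [idx for idx, gene in enumerate(chromosome) if gene == 1]


def decode_neuron_counts(chromosome, bits_per_layer=8, n_layers=3):
    """Read one unsigned integer per hidden layer from a binary chromosome.

    Hypothetical encoding: each layer gets `bits_per_layer` bits, most
    significant bit first, and a decoded 0 is bumped to 1 neuron.
    """
    counts = []
    for layer in range(n_layers):
        start = layer * bits_per_layer
        bits = chromosome[start:start + bits_per_layer]
        value = int("".join(str(bit) for bit in bits), 2)
        counts.append(max(1, value))
    return counts


mask = decode_feature_mask([1, 0, 1, 1, 0])
layers = decode_neuron_counts(
    [0, 0, 0, 0, 0, 1, 0, 0,      # layer 1 -> 4 neurons
     0, 0, 1, 0, 0, 0, 0, 0,      # layer 2 -> 32 neurons
     0, 0, 0, 0, 0, 0, 0, 0]      # layer 3 -> 0, bumped to 1
)
print(mask, layers)
```

The fitness of each decoded individual would then be obtained by training an ANN with those features or layer sizes and scoring it on the validation split.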

Table 4.

Details of trained classifier and regressor models.

| GA optimization | Reservoir type | Regressor | Classifier |
|---|---|---|---|
| No | HO-IA | R01 | C01 |
| No | HO-NF | R02 | C01 |
| No | HO-PS | R03 | C01 |
| Yes | HO-IA | R04 | C02 |
| Yes | HO-NF | R05 | C02 |
| Yes | HO-PS | R06 | C02 |

Results and discussion

This section covers the ANN and GA-ANN model training results and the outcomes of feature selection and hyperparameter tuning using GA. The trained models are then validated using the test cases, and their performances are compared.

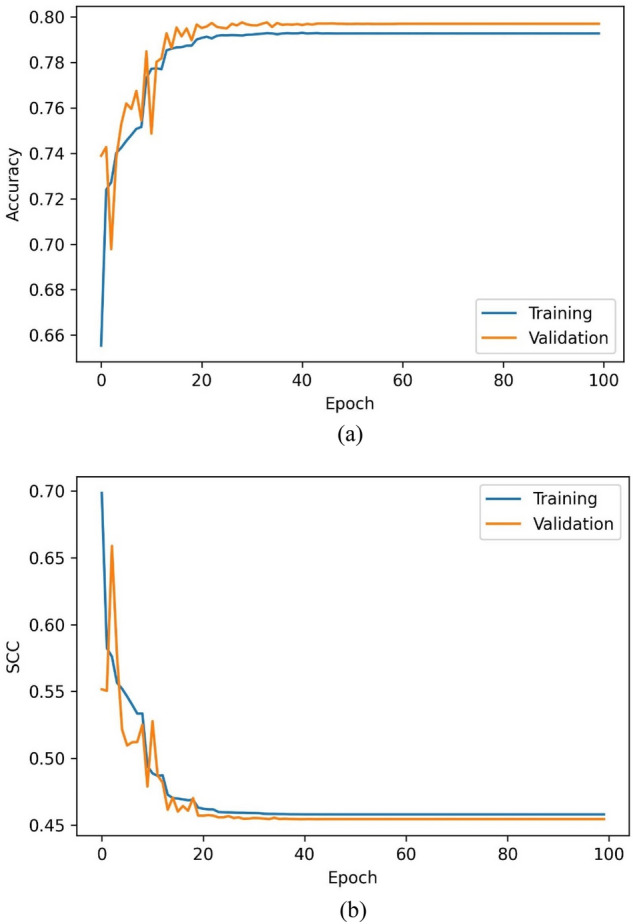

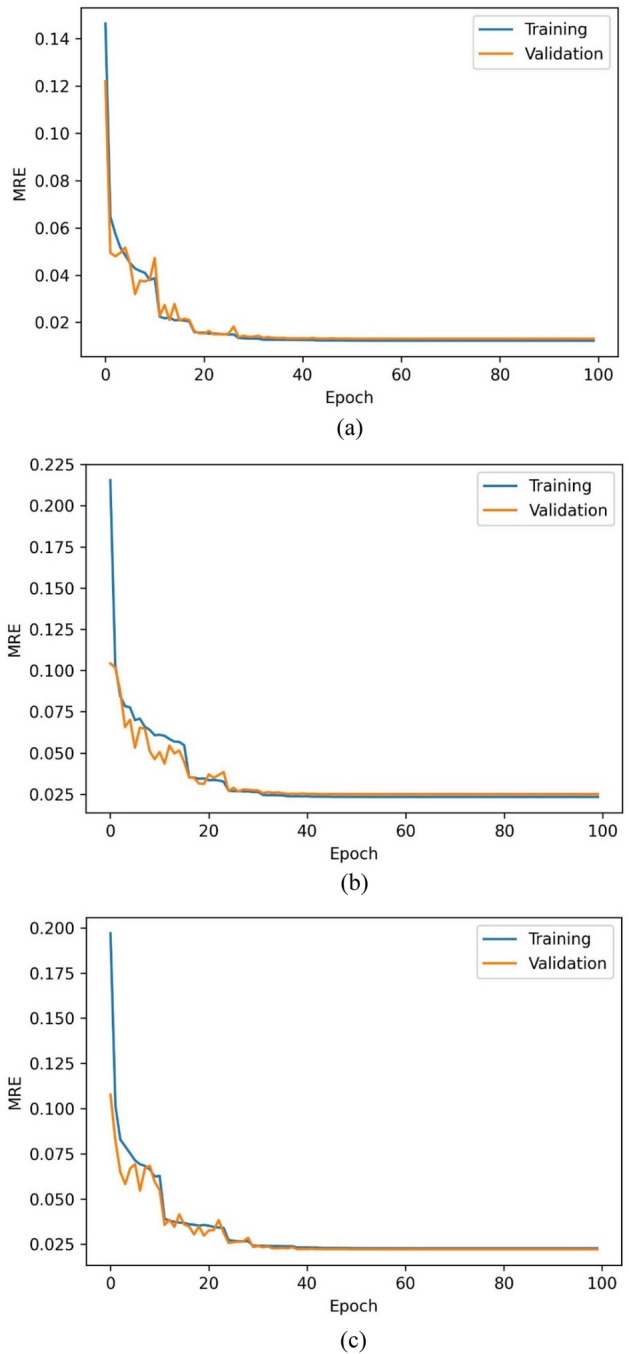

Model training performance

ANN models without optimization

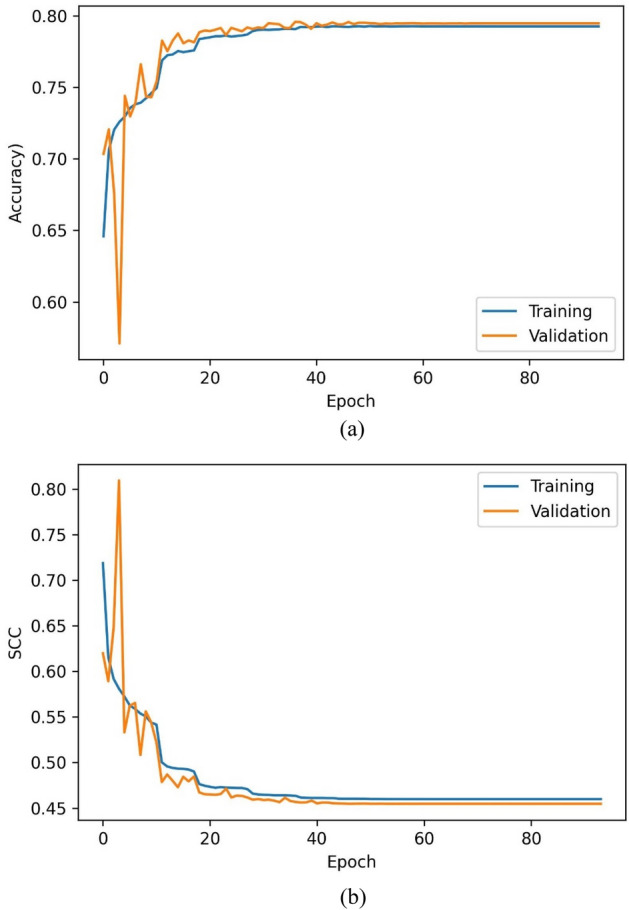

The training accuracy and SCC of the C01 model over the training and validation sets are shown in Fig. 5a and b. The training, validation, and testing sets achieved classification accuracies of 79.23%, 79.47%, and 79.19%, respectively. The mean relative error (MRE), defined in Eq. (3), was monitored while training the regressor models. Figure 6a–c shows the training and validation MREs for the HO-IA, HO-NF, and HO-PS reservoirs. In Fig. 5, the SCC decreased gradually, while the accuracy increased for about forty iterations and then remained nearly constant, with only slight differences between the training and validation scores. Figure 6 shows that the MRE attained low values for both training and validation. These statistics suggest that the models have been trained without notable underfitting or overfitting.

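$$\mathrm{MRE}\,(\%) = \frac{100}{N}\sum_{i=1}^{N}\left|\frac{y_i^{\mathrm{target}} - y_i^{\mathrm{pred}}}{y_i^{\mathrm{target}}}\right| \qquad (3)$$

where $N$ is the total number of data samples, and $y_i^{\mathrm{target}}$ and $y_i^{\mathrm{pred}}$ are the $i$th target and predicted values of the model, respectively.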
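A mean relative error of this kind can be computed as follows. The sketch assumes the usual definition, the mean of absolute errors relative to the target magnitudes expressed in percent, which matches the percentage units reported for MRE in Table 6; the function name is illustrative.

```python
import numpy as np


def mean_relative_error(targets, predictions):
    """MRE in percent: 100/N * sum(|target - prediction| / |target|)."""
    targets = np.asarray(targets, dtype=float)
    predictions = np.asarray(predictions, dtype=float)
    return 100.0 * np.mean(np.abs(targets - predictions) / np.abs(targets))


# Two samples with 10% and 5% relative error
mre = mean_relative_error([10.0, 20.0], [11.0, 19.0])
print(mre)
```

Because each error is divided by the target, the metric is undefined for targets equal to zero.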

Figure 5.

Training (a) accuracy and (b) SCC of ANN classifier.

Figure 6.

MRE of ANN regressor models for (a) HO-IA, (b) HO-NF, and (c) HO-PS.

Genetic algorithm optimization of ANN models

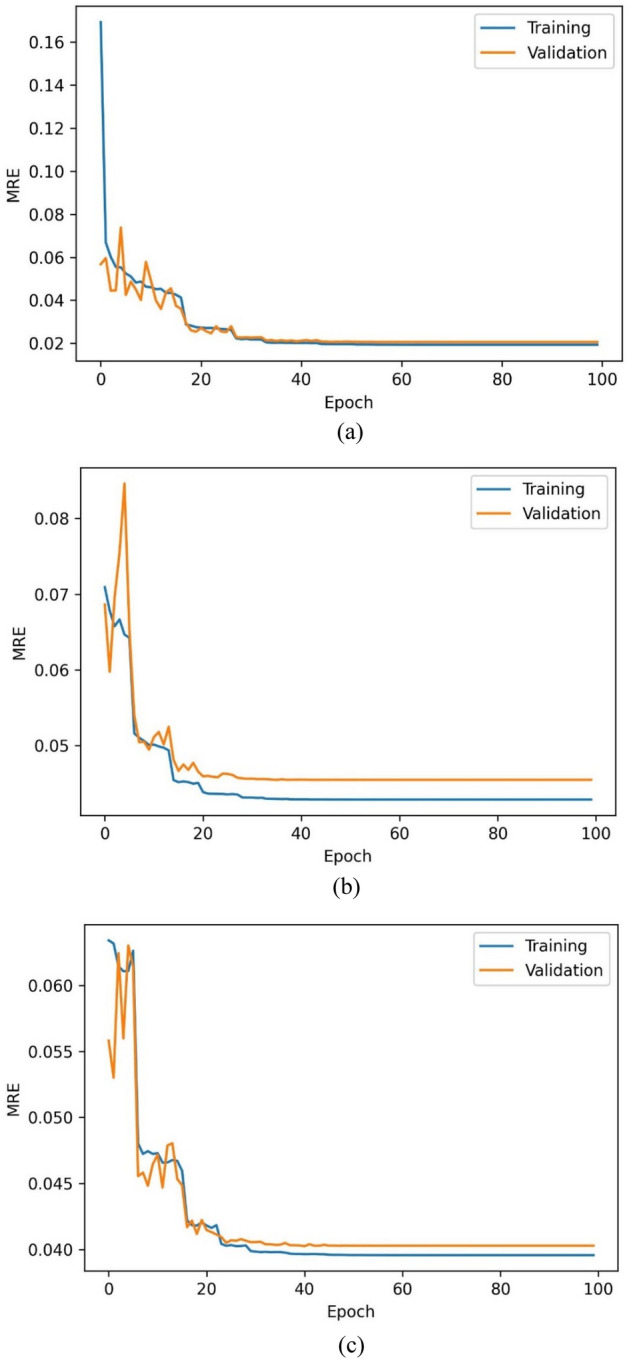

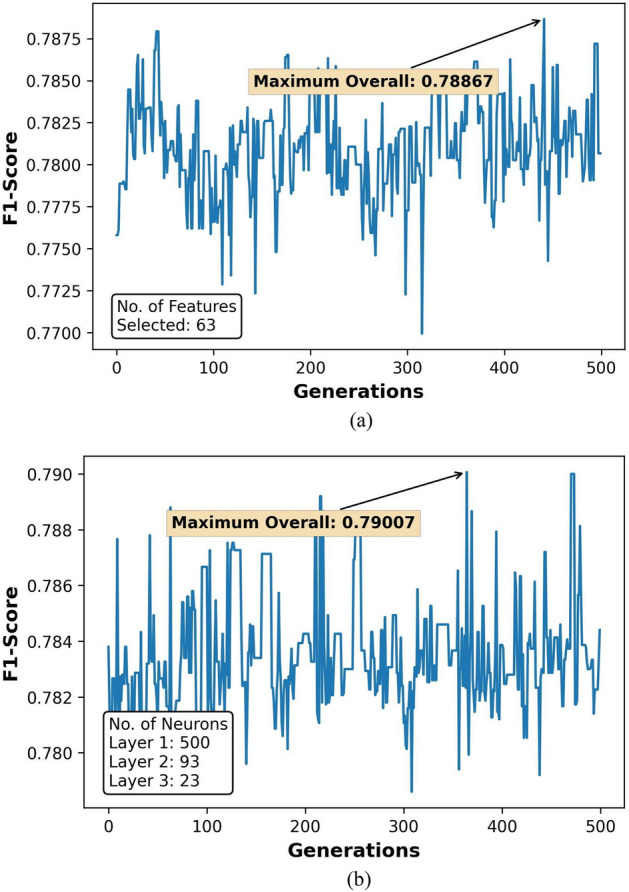

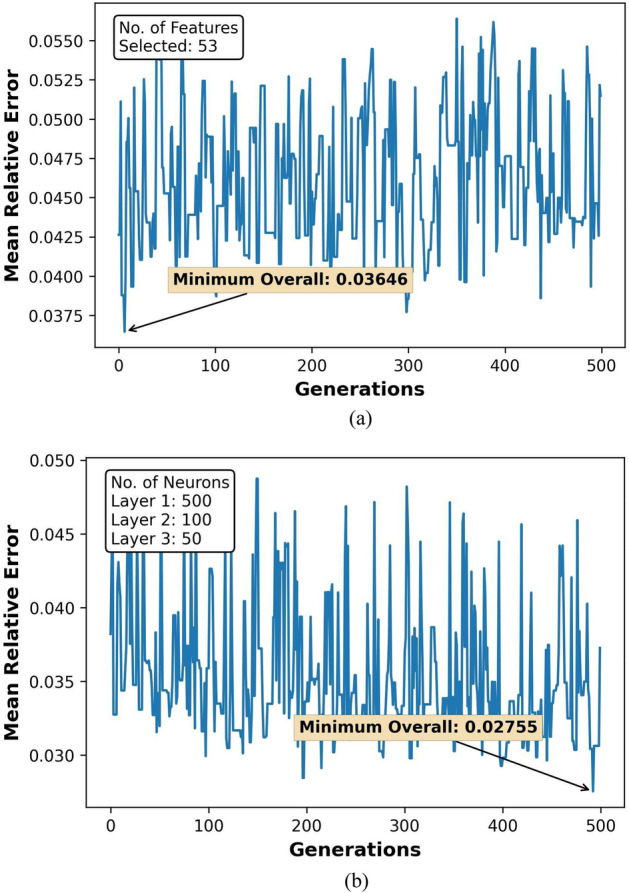

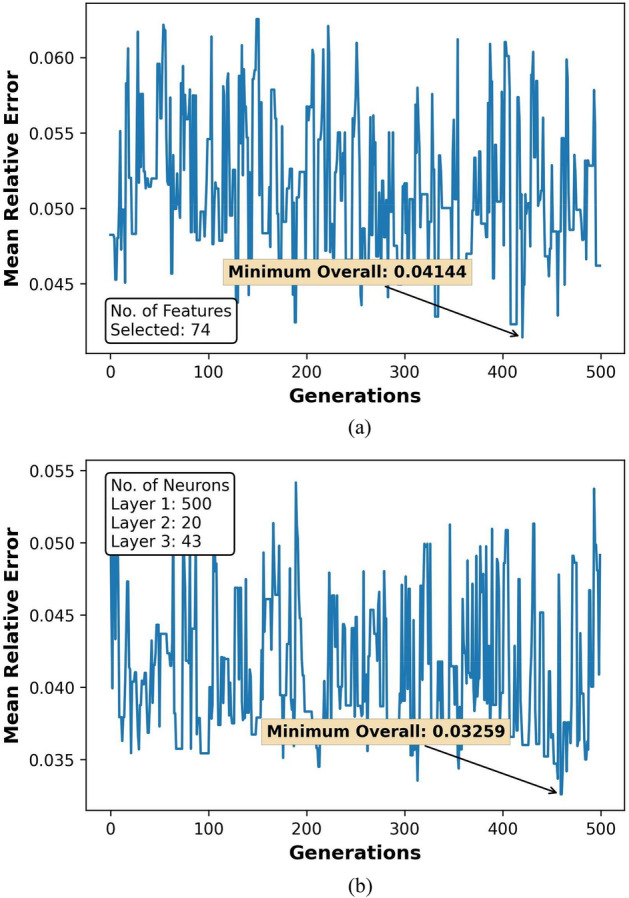

The GA optimization results for feature selection and hyperparameter tuning over 500 generations are represented in Figs. 7a,b, 8a,b, 9a,b, and 10a,b. Figures 8, 9 and 10 also show the count of selected features and the optimal neuron counts for the ANNs of each model, along with the best metric scores.

Figure 7.

F1-Score for classifier ANN model during (a) feature selection and (b) hyperparameter tuning.

Figure 8.

MRE for regressor ANN model during (a) feature selection and (b) hyperparameter tuning for HO-IA reservoir.

Figure 9.

MRE for regressor ANN model during (a) feature selection and (b) hyperparameter tuning for HO-NF reservoir.

Figure 10.

MRE for regressor ANN model during (a) feature selection and (b) hyperparameter tuning for HO-PS reservoir.

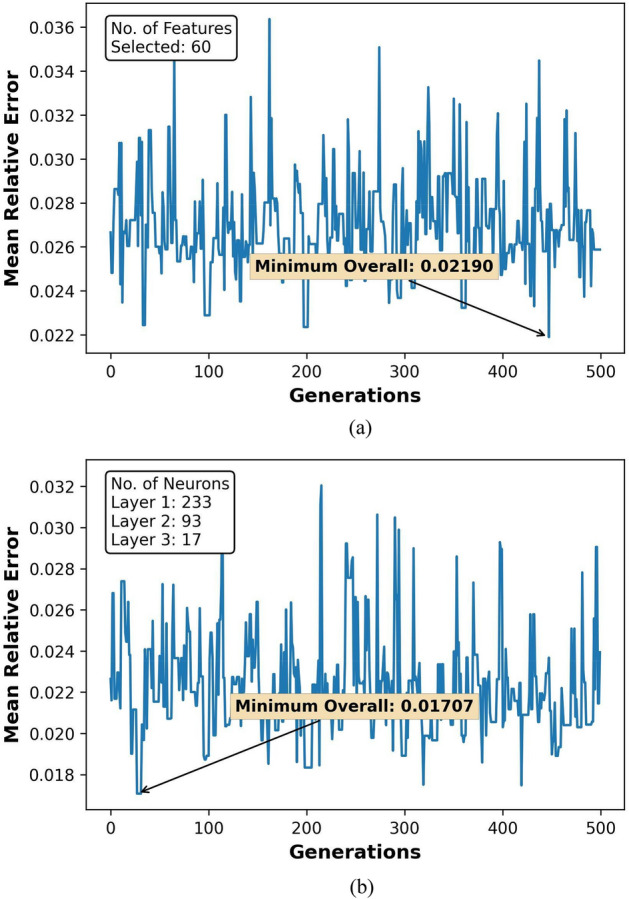

Optimized GA-ANN models

The GA-ANN classifier and regressors have been trained with the selected features and optimized hyperparameters. Figures 11a,b and 12a–c show the training performance of the GA-ANN classifier and regressors over 100 iterations. Tables 5 and 6 list the metric scores obtained by the trained classifiers and regressors. C02 achieved marginally higher accuracy than C01 (79.38% versus 79.19% on the testing set). The MREs of all the GA-ANN regressors decreased compared to those of the ANN regressors, indicating improved regression performance.

Figure 11.

Training (a) accuracy and (b) SCC of the C02 model.

Figure 12.

Training MRE of (a) R04, (b) R05, and (c) R06 models.

Table 5.

Accuracy and SCC values attained for the C01 and C02 models.

| Model | Training accuracy (%) | Validation accuracy (%) | Testing accuracy (%) | Training SCC | Validation SCC | Testing SCC |
|---|---|---|---|---|---|---|
| C01 | 79.23 | 79.47 | 79.19 | 0.4598 | 0.4546 | 0.4571 |
| C02 | 79.48 | 79.66 | 79.38 | 0.4570 | 0.4545 | 0.4562 |

Table 6.

MRE (%) attained for the regression models.

| Model | Training MRE (%) | Validation MRE (%) | Testing MRE (%) |
|---|---|---|---|
| R01 | 1.93 | 2.05 | 2.09 |
| R04 | 1.26 | 1.33 | 1.47 |
| R02 | 4.29 | 4.54 | 4.47 |
| R05 | 2.32 | 2.48 | 2.48 |
| R03 | 3.95 | 4.02 | 4.07 |
| R06 | 2.24 | 2.22 | 2.30 |

Performance validation of optimized models

The test cases discussed in Section “Data collection” have been used to assess the performance of the trained models. The trained GA-ANN classifier predicted the reservoir model correctly for all the test cases, indicating excellent classification performance. The test case data were then input into the regressors to estimate the associated characteristic parameter, $\ln(C_D e^{2S})$. The predictions of the ANN and GA-ANN models are listed in Table 7. Judging by the absolute errors computed from Table 7, the GA-ANN regressors reduced the error in six of the ten test cases (W#01, W#04, W#06, W#07, W#09, and W#10) and matched the ANN error in one (W#08), with the largest improvement on the real-field case W#10.

Table 7.

Target and predicted values of the test cases using ANN and GA-ANN regressors.

| Test case | Target | ANN prediction | GA-ANN prediction |
|---|---|---|---|
| W#01 | 11.42 | 11.37 | 11.38 |
| W#02 | 13.13 | 13.23 | 13.27 |
| W#03 | 20.75 | 20.74 | 20.30 |
| W#04 | 26.94 | 28.02 | 27.09 |
| W#05 | 6.18 | 6.21 | 6.13 |
| W#06 | 6.34 | 6.45 | 6.34 |
| W#07 | 12.32 | 12.41 | 12.28 |
| W#08 | 9.02 | 8.96 | 9.08 |
| W#09 | 13.76 | 12.60 | 12.99 |
| W#10 | 8.60 | 11.30 | 9.99 |
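The per-case comparison in Table 7 can be checked with a short script. The values are copied from the table; the helper names are illustrative, and errors are rounded to two decimals (the precision reported in the table) so that floating-point noise does not decide a tie.

```python
# (target, ANN prediction, GA-ANN prediction) copied from Table 7
TABLE_7 = {
    "W#01": (11.42, 11.37, 11.38),
    "W#02": (13.13, 13.23, 13.27),
    "W#03": (20.75, 20.74, 20.30),
    "W#04": (26.94, 28.02, 27.09),
    "W#05": (6.18, 6.21, 6.13),
    "W#06": (6.34, 6.45, 6.34),
    "W#07": (12.32, 12.41, 12.28),
    "W#08": (9.02, 8.96, 9.08),
    "W#09": (13.76, 12.60, 12.99),
    "W#10": (8.60, 11.30, 9.99),
}


def absolute_errors(target, ann, ga_ann):
    """Absolute errors rounded to the two decimals reported in the table."""
    return round(abs(target - ann), 2), round(abs(target - ga_ann), 2)


def compare_cases(table):
    """Label each test case by which regressor is closer to the target."""
    verdicts = {}
    for case, (target, ann, ga_ann) in table.items():
        err_ann, err_ga = absolute_errors(target, ann, ga_ann)
        if err_ga < err_ann:
            verdicts[case] = "GA-ANN"
        elif err_ga > err_ann:
            verdicts[case] = "ANN"
        else:
            verdicts[case] = "tie"
    return verdicts


verdicts = compare_cases(TABLE_7)
for case, verdict in verdicts.items():
    print(case, verdict)
```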

Conclusions

This paper investigated the predictors' feature selection and hyperparameter tuning using GA optimization for pressure transient analysis of homogeneous reservoirs. The training results indicate that the optimized GA-ANN models yielded lower errors than the manually tuned ANN models. The optimized classification model achieved over 79% training accuracy in classifying the HO-IA, HO-NF, and HO-PS reservoirs. The GA optimization improved the regression models’ performance, and the optimized regressors predicted the characteristic parameter, $\ln(C_D e^{2S})$, with minimized errors. The performance of the predictive models has been validated using ten test cases. The optimized classification model accurately identified the reservoir models for all the test cases, and the regression models estimated the associated reservoir characteristics with minimized prediction errors.

Additionally, the models have adequate noise tolerance and provide computational efficiency. The proposed predictor is a robust tool for identifying and characterizing the homogeneous reservoirs from noisy real-field data with minimized human intervention. However, there is scope for improving the accuracy of the classifier model. We intend to investigate the performance of the deep structured models for the well-test analysis of heterogeneous reservoirs such as dual-porosity and composite reservoirs. Additionally, the performance comparison with other evolutionary algorithms, such as particle swarm optimization and Firefly algorithm, shall be conducted in future work.

Author contributions

The first four authors (R.K.P., S.A., G.N., and A.K.) recorded/analyzed the results, prepared figures, and wrote the main manuscript text. The last author (B.V.) checked the results and reviewed the final manuscript.

Data availability

The datasets used or analyzed during the current study are available from the corresponding author upon reasonable request.

Competing interests

The authors declare no competing interests.

Footnotes

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1. Zhang L, Li J, Xue J, Zhang C, Fang X. Experimental studies on the changing characteristics of the gas flow capacity on bituminous coal in CO2-ECBM and N2-ECBM. Fuel. 2021;291:120115.

- 2. Wood JM, Cesar J, Ardakani OH, Rudra A, Sanei H. Geochemical evidence for the internal migration of gas condensate in a major unconventional tight petroleum system. Sci. Rep. 2022;12:1–15. doi: 10.1038/s41598-022-11963-6.

- 3. Qu M, et al. Mechanism study of spontaneous imbibition with lower-phase nano-emulsion in tight reservoirs. J. Pet. Sci. Eng. 2022;211:110220.

- 4. Zhang L, Huang M, Xue J, Li M, Li J. Repetitive mining stress and pore pressure effects on permeability and pore pressure sensitivity of bituminous coal. Nat. Resour. Res. 2021;30:4457–4476.

- 5. Castiñeira, D., Darabi, H., Zhai, X. & Benhallam, W. Smart reservoir management in the oil and gas industry. In Smart Manufacturing, 107–141 (Elsevier, 2020).

- 6. Goral J, et al. Confinement effect on porosity and permeability of shales. Sci. Rep. 2020;10:1–11. doi: 10.1038/s41598-019-56885-y.

- 7. Dong J, et al. Research on recognition of gas saturation in sandstone reservoir based on capture mode. Appl. Radiat. Isot. 2021;178:109939. doi: 10.1016/j.apradiso.2021.109939.

- 8. Bonini M. Seismic loading of fault-controlled fluid seepage systems by great subduction earthquakes. Sci. Rep. 2019;9:1–12. doi: 10.1038/s41598-019-47686-4.

- 9. Amar MN, Zeraibi N, Redouane K. Bottom hole pressure estimation using hybridization neural networks and grey wolves optimization. Petroleum. 2018;4:419–429.

- 10. Kumar Pandey R, Kumar A, Mandal A, Vaferi B. Employing deep learning neural networks for characterizing dual-porosity reservoirs based on pressure transient tests. J. Energy Resour. Technol. 2022;144(11):113002.

- 11. Nait Amar M, Zeraibi N. A combined support vector regression with firefly algorithm for prediction of bottom hole pressure. SN Appl. Sci. 2020;2:1–12.

- 12. Bourdet D, Ayoub JA, Pirard YM. Use of pressure derivative in well test interpretation. SPE Form. Eval. 1989;4:293–302.

- 13. Gringarten AC. Interpretation of tests in fissured and multilayered reservoirs with double-porosity behavior: Theory and practice. J. Pet. Technol. 1984;36:549–564.

- 14. Hanga KM, Kovalchuk Y. Machine learning and multi-agent systems in oil and gas industry applications: A survey. Comput. Sci. Rev. 2019;34:100191.

- 15. Pandey RK, Dahiya AK, Mandal A. Identifying applications of machine learning and data analytics based approaches for optimization of upstream petroleum operations. Energy Technol. 2021;9:2000749.

- 16. Sircar A, Yadav K, Rayavarapu K, Bist N, Oza H. Application of machine learning and artificial intelligence in oil and gas industry. Pet. Res. 2021;6:379–391.

- 17. Al-Kaabi A-AU, Lee WJ. Using artificial neural nets to identify the well-test interpretation model. SPE Form. Eval. 1993;8:233–240.

- 18. Kharrat R. Determination of reservoir model from well test data, using an artificial neural network. Sci. Iran. 2008;15:487–493.

- 19. AlMaraghi, A. M. & El-Banbi, A. H. Automatic reservoir model identification using artificial neural networks in pressure transient analysis. In SPE North Africa Technical Conference and Exhibition (OnePetro, 2015).

- 20. Ahmadi R, Aminshahidy B, Shahrabi J. Well-testing model identification using time-series shapelets. J. Pet. Sci. Eng. 2017;149:292–305.

- 21. Jeirani Z, Mohebbi A. Estimating the initial pressure, permeability and skin factor of oil reservoirs using artificial neural networks. J. Pet. Sci. Eng. 2006;50:11–20.

- 22. Alajmi, M. N. & Ertekin, T. The development of an artificial neural network as a pressure transient analysis tool for applications in double-porosity reservoirs. In Asia Pacific Oil and Gas Conference and Exhibition (Society of Petroleum Engineers, 2007).

- 23. Adibifard M, Tabatabaei-Nejad SAR, Khodapanah E. Artificial neural network (ANN) to estimate reservoir parameters in naturally fractured reservoirs using well test data. J. Pet. Sci. Eng. 2014;122:585–594.

- 24. Deng, Y., Chen, Q. & Wang, J. The artificial neural network method of well-test interpretation model identification and parameter estimation. In International Oil and Gas Conference and Exhibition in China (OnePetro, 2000).

- 25. Liu K, et al. DeepBAN: A temporal convolution-based communication framework for dynamic WBANs. IEEE Trans. Commun. 2021;69:6675–6690.

- 26. Li S, et al. Deep residual correction network for partial domain adaptation. IEEE Trans. Pattern Anal. Mach. Intell. 2020;43:2329–2344. doi: 10.1109/TPAMI.2020.2964173.

- 27. Ribli D, Horváth A, Unger Z, Pollner P, Csabai I. Detecting and classifying lesions in mammograms with deep learning. Sci. Rep. 2018;8:1–7. doi: 10.1038/s41598-018-22437-z.

- 28. Soffer S, et al. Deep learning for pulmonary embolism detection on computed tomography pulmonary angiogram: A systematic review and meta-analysis. Sci. Rep. 2021;11:1–8. doi: 10.1038/s41598-021-95249-3.

- 29. Zhang, Y. et al. Learning from a complementary-label source domain: theory and algorithms. IEEE Trans. Neural Netw. Learn. Syst. (2021).

- 30. Zheng W, Liu X, Yin L. Research on image classification method based on improved multi-scale relational network. PeerJ Comput. Sci. 2021;7:e613. doi: 10.7717/peerj-cs.613.

- 31. Chu H, et al. An automatic classification method of well testing plot based on convolutional neural network (CNN). Energies. 2019;12:2846.

- 32. Daolun LI, Xuliang LIU, Wenshu ZHA, Jinghai Y, Detang LU. Automatic well test interpretation based on convolutional neural network for a radial composite reservoir. Pet. Explor. Dev. 2020;47:623–631.

- 33. Pandey RK, Dahiya AK, Pandey AK, Mandal A. Optimized deep learning model assisted pressure transient analysis for automatic reservoir characterization. Pet. Sci. Technol. 2022;40:659–677.

- 34. Pandey RK, Kumar A, Mandal A. A robust deep structured prediction model for petroleum reservoir characterization using pressure transient test data. Pet. Res. 2022;7:204–219.

- 35. Wang S, Chen S. Insights to fracture stimulation design in unconventional reservoirs based on machine learning modeling. J. Pet. Sci. Eng. 2019;174:682–695.

- 36. Çolak AB. Experimental analysis with specific heat of water-based zirconium oxide nanofluid on the effect of training algorithm on predictive performance of artificial neural network. Heat Transf. Res. 2021;52:67–93.

- 37. Çolak AB. Comparative investigation of the usability of different machine learning algorithms in the analysis of battery thermal performances of electric vehicles. Int. J. Energy Res. 2022;5:1–10.

- 38. Alatas B, Bingol H. Comparative assessment of light-based intelligent search and optimization algorithms. Light Eng. 2020;28:51–59.

- 39. Akyol S, Alatas B. Plant intelligence based metaheuristic optimization algorithms. Artif. Intell. Rev. 2017;47:417–462.

- 40. Alatas B, Bingol H. A physics based novel approach for travelling tournament problem: Optics inspired optimization. Inf. Technol. Control. 2019;48:373–388.

- 41. Dong X, Wang S, Sun R, Zhao S. Design of artificial neural networks using a genetic algorithm to predict saturates of vacuum gas oil. Pet. Sci. 2010;7:118–122.

- 42. Bingol H, Alatas B. Chaos based optics inspired optimization algorithms as global solution search approach. Chaos Solitons Fract. 2020;141:110434.

- 43. Anraku T, Horne RN. Discrimination between reservoir models in well-test analysis. SPE Form. Eval. 1995;10:114–121.

- 44. Stehfest H. Algorithm 368: Numerical inversion of Laplace transforms [D5]. Commun. ACM. 1970;13:47–49.

- 45. Horne R. Modern Well Test Analysis: A Computer-Aided Approach. Petroway Inc; 1995.

- 46. Zbigniew M. Genetic algorithms + data structures = evolution programs. Comput. Stat. 1996;1:372–373.
