Introduction

There has been a rapid increase in the number of artificial intelligence (AI) systems approved for clinical use by the U.S. Food and Drug Administration (FDA) over the past 20 years (Fig 1) and a concomitant increase in the use of these AI systems in medicine. The field of AI raises more questions than answers at this point, one of which is, “Who owns or will own the data used to develop an AI system?” Data, even in health care, are a commodity, and the answer to this question will help guide the answer to the subsequent question, “Who will get paid for AI?” The aim of this article is to discuss data, data creation, and the potential financial stakeholders for the development of AI in medicine and to open the discussion about data ownership and patient consent in the era of AI in medicine.

Figure 1:

Number of U.S. Food and Drug Administration (FDA)–approved artificial intelligence (AI) systems, 1997–2020. Data from the publicly available FDA web page on approved artificial intelligence and machine learning devices at https://www.fda.gov/medical-devices/software-medical-device-samd/artificial-intelligence-and-machine-learning-aiml-enabled-medical-devices. Accessed January 2022.

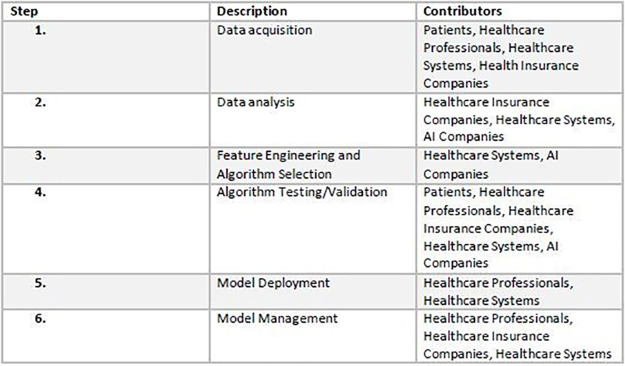

The life cycle of an AI system follows a predictable pattern, as follows: (a) idea conception, (b) data collection, (c) data analysis, (d) feature engineering and algorithm selection for model building, (e) tuning and validation, (f) testing, (g) model deployment, and (h) model management (Fig 2). Creating an off-the-shelf AI system in medicine requires the following: (a) patients to provide data, (b) health care professionals to render diagnoses to be used as the reference standard, (c) a health care system to curate and store data, (d) health insurance companies and patients to reimburse patient health care costs, and (e) an entity—an AI company, insurance company, health care system, or other third party—to hire programmers, procure data, and fund, develop, and commercialize the AI system.

Figure 2:

Artificial intelligence (AI) system life cycle.

Data

We define data as the input processed during the development of an AI system. Data can be numerical, textual, or visual and qualitative or quantitative. The accuracy and performance of any AI system is strongly dependent on the quality and quantity of curated, annotated data used to train and validate the algorithm (1). Data are thus integral for the development of AI systems.

We consider decision support systems in the electronic health record (EHR) as a subset of AI systems because these systems utilize data (either in-house data or previously published data) to create algorithms that are used in clinical practice. These algorithms may be simple linear or logistic regression statistical models or may use supervised or unsupervised machine learning models. These decision support systems follow the AI system life cycle (Fig 2). Although there may be no reimbursement for the use of these systems, there may be increased profits derived from using these decision support systems due to decreased expenditure by the health care system or health insurance company.

Data Creation

Patients

Health care AI systems cannot be created without patients, whether the data are their medical images, pathologic slides, ophthalmologic examinations, electrocardiograms, or other data. Patient data are considered to be very different from internet browsing data and metadata because of patient-physician confidentiality as defined specifically in the American Medical Association (AMA) 1957 Code of Medical Ethics (2). The Health Insurance Portability and Accountability Act (HIPAA) Privacy Rule protects “protected health information” (PHI) held or transmitted by a covered entity or its business associates (3–5). The Privacy Rule provides national standards and guidelines to protect patients’ PHI and provides the conditions that govern the disclosure of PHI with and without patient authorization but does not govern the length of time PHI is retained (5). According to current regulations, patient data in a HIPAA limited data set can be shared, without patient consent, with health care professionals, health care systems, and public and private health care insurance companies (5–7). A key point to note is that current regulations allow use of data; they do not convey data ownership. The European Union’s General Data Protection Regulation (GDPR) recognizes health care data as a special category of data, and the European Union has more recently put forward rules on fair access to and use of data (Data Act) (5,8).

Health Care Professionals

Health care AI systems are not self-learning. Health care professionals first must attend to a patient, diagnose a disease or condition, request tests or imaging, interpret those tests, communicate findings to the patient, and enter data into a system where they can be accessed later. Therefore, during regular clinical care, health care professionals create an asset (data) that has value. Beyond routine care, health care professionals also actively create the annotations or diagnoses used to train and validate AI systems, labeling which patient data correspond to which disease, pathologic condition, or outcome of interest. This work draws on years of training, knowledge, and expertise and can be quite time-consuming for experts in their respective fields.

Potential Financial Stakeholders for the Development of AI in Medicine

Patients

It could be argued that any data created from a patient’s body—whether it be cells, images, demographic data, or any patient outcome—are the property of that patient (9–11). If those data are used to create a lucrative AI system, then it can be argued that these data have value. It can be argued further that the patients to whom these data belong should receive some compensation for use of their data in the development of these lucrative AI systems. A cautionary tale from not-too-distant history is that of Henrietta Lacks and the HeLa cells derived from her tumor (12,13). The HeLa cell line, which has proven instrumental in the field of cancer research, was created using her cervical cancer cells without her permission or even her knowledge. Neither she nor her family benefited from the creation of this first immortalized cell line. Will the same be said of those patients whose data serve to train AI systems? In the United States, patients often pay for their health care in the form of insurance copayments, which may give them an additional claim to ownership of their health care data.

Health Care Professionals

Health care professionals create data, which have value because the AI system is able to find a pattern that recapitulates the classification or actions of the health care professional. Essentially, the health care professionals’ years of experience and training are captured into an algorithm that can run without the need for sleep, breaks, health care plans, pension plans, or retirement accounts. Health care professionals also may have large student loans that require repayment. It can be argued that the health care professionals who created these data should receive some compensation for use of their data in the development of these AI systems.

In the future, health care professionals likely will work in tandem with AI algorithms. Initially, this cooperation may prove to be lucrative for physicians, as the patients’ insurance may be billed both for the study as per usual and for utilizing AI. Currently, the resource-based relative value scale is used to determine billing for a study and comprises two components, the professional component and the technical component (14). The professional component is further divided into physician work, practice expense, and malpractice expense (14). Currently, it is unclear whether AI systems will be considered part of the professional component, the technical component, or part of both. This situation will have implications for private practices, which vary in financial architecture and may be dependent on the professional component only or on both components. We suspect that each AI system would have to be considered individually and assessed as to how it contributes to each component, as AI systems vary in their function. Additionally, the overall relative value of each AI-assisted study may increase based on the criteria used by the AMA Relative Value Scale Update Committee. Increased efficiency can mean an increase in the amount of work done by each health care professional and increased work relative value units (RVUs) (15). However, an increased number of RVUs per physician with the use of AI may alter the landscape of supply and demand within certain medical specialties, such as radiology (16).
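The open question of where AI fits in this billing structure can be made concrete with a small sketch. It models a study as a professional component (physician work, practice expense, and malpractice expense) plus a technical component, as described above, and then attributes a hypothetical AI-related value to one or both components. All class names, the `attribute_ai` function, the numeric values, and the choice to book the professional share under physician work are illustrative assumptions, not actual RVU figures or billing rules.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class ProfessionalComponent:
    physician_work: float
    practice_expense: float
    malpractice_expense: float

    def total(self) -> float:
        return self.physician_work + self.practice_expense + self.malpractice_expense


@dataclass(frozen=True)
class StudyBilling:
    professional: ProfessionalComponent
    technical: float

    def total(self) -> float:
        return self.professional.total() + self.technical


def attribute_ai(study: StudyBilling, ai_value: float, professional_share: float) -> StudyBilling:
    """Split a hypothetical AI value between the two components.

    professional_share is the fraction (0 to 1) assigned to the professional
    component; the remainder goes to the technical component.
    Assumption: the professional share is booked under physician work.
    """
    if not 0.0 <= professional_share <= 1.0:
        raise ValueError("professional_share must be between 0 and 1")
    to_professional = ai_value * professional_share
    to_technical = ai_value - to_professional
    new_professional = replace(
        study.professional,
        physician_work=study.professional.physician_work + to_professional,
    )
    return StudyBilling(new_professional, study.technical + to_technical)


# Hypothetical values in arbitrary units, for illustration only.
base = StudyBilling(ProfessionalComponent(1.0, 0.5, 0.25), technical=2.0)
split_evenly = attribute_ai(base, ai_value=0.5, professional_share=0.5)
technical_only = attribute_ai(base, ai_value=0.5, professional_share=0.0)
```

In this sketch the total value of the study rises by the same amount in every scenario; only the split between components changes. That split is what matters for a practice that depends on the professional component alone.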

Health Care Systems

Health care systems invest in infrastructure such as laboratories, data storage facilities, and EHR hardware and software. Patient-related data are stored in the EHR and used for clinical care and for medicolegal reasons. Health care systems also must bear other costs, such as penalties associated with any data breach. Because health care systems house and curate the data for AI systems, they also have a claim to data ownership. Health care systems also may develop AI systems and decision support systems in-house, and therefore may have financial claims to these systems.

Health Insurance Companies

Health insurance companies (HICs) store patient data and health care professional data to meet their business needs. HICs have ownership claims to the data because they invest indirectly in the infrastructure needed to create data used by health care AI systems. HICs also may create, develop, and maintain AI systems, and therefore may have financial claims to these systems. As data have become a new commodity, the HICs’ claim to patients’ private health information may prove to be lucrative.

Currently, large databases being utilized for AI research have patients whose health care has been paid for by Medicaid and Medicare. These large databases are the result of millions of dollars of payments by U.S. taxpayers. The argument can be made that data and products derived from data arising from these databases should be made available to the U.S. public for free, as they were financed by U.S. taxpayers. This notion is not novel as, at this time, research studies funded by the National Institutes of Health are made available online to the public for free on PubMed (www.pubmed.gov).

AI Companies

The companies that create health care AI systems expend capital to obtain data, pay developers, conduct marketing, and maintain their software and hardware. For these reasons, AI companies also have ownership claims on AI systems. AI companies usually have software license agreements that state that the licensor (AI company) owns the AI system.

AI Developers

The individual AI developers contribute heavily to developing an algorithm that, in many cases, can run for long periods of time to generate income for the software company through sales to health care systems. Software developers may be compensated with different stock options, depending on the stage of development of the AI company, or with a cash salary. In the latter case, software developers may not continue to benefit directly from the assets that they have developed. It seems the software companies stand to financially benefit most from the revolution that AI promises to bring. How this potential revenue would be distributed throughout these software companies to software developers and shareholders is a separate question entirely, though one imagines the shareholders of these companies would certainly stand to gain.

Data Ownership and Patient Consent in the Era of AI in Medicine

Patients

Health care systems, in response to the Henrietta Lacks case, have adopted broad, all-inclusive blanket consent forms that essentially ensure that patients waive their rights to anything derived from them. Disclosures and release statements saturate consent forms throughout modern medicine, but how often does the average patient read and understand these consent documents in their entirety? In some cases, consent to receive clinical care in a health care system is paired with the abdication of the patient’s rights to their data.

Consent should be informed, specific, and communicated through specific action. As part of any consent procedure, the risks, benefits, and alternatives of sharing their data should be discussed with the patient. However, the patient’s data will not be used to benefit that patient. A patient’s data may be used to create an algorithm that will only benefit future patients. For example, AI-assisted imaging systems may indeed be beneficial for patients by providing improved sensitivity and diagnostic accuracy (17–19), but these benefits extend only to future patients, not to the patients whose data are being used to train the algorithms. At the time of consent, even the health care professional obtaining consent has no idea of all the potential ramifications of the patient giving up their rights to their data. Also, patients are often not given the opportunity to refuse the use of their data for research purposes. Patient data utilized for research studies with the goal of commercialization may require a separate consent document explaining to the patients that signing the consent renounces their potential future financial claims.

Article 9 of the GDPR states that explicit consent should be used when dealing with sensitive data, including PHI. All details—what information is being collected and by whom, and what research is being performed—should be shared with the patient, and the patient should state, “I consent,” to the research. Unambiguous consent is used for ordinary, nonsensitive personal data. Here, consent is implied after a patient performs an affirmative action, such as a patient registering his or her email or phone number into a database.
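The distinction between the two consent standards can be written as a simple decision rule. The sketch below is only an illustration of the paragraph above, not a statement of the legal test: the names `ConsentStandard`, `required_standard`, and `consent_is_sufficient` are hypothetical, and treating an explicit statement as also satisfying the unambiguous standard is an assumption.

```python
from enum import Enum


class ConsentStandard(Enum):
    EXPLICIT = "explicit"        # sensitive data, including PHI
    UNAMBIGUOUS = "unambiguous"  # ordinary, nonsensitive personal data


def required_standard(is_sensitive: bool) -> ConsentStandard:
    """Return the consent standard described in the text for a data category."""
    return ConsentStandard.EXPLICIT if is_sensitive else ConsentStandard.UNAMBIGUOUS


def consent_is_sufficient(is_sensitive: bool, stated_i_consent: bool,
                          affirmative_action: bool) -> bool:
    """Check whether the recorded patient behavior meets the required standard."""
    if required_standard(is_sensitive) is ConsentStandard.EXPLICIT:
        # Explicit consent: the patient must state "I consent."
        return stated_i_consent
    # Assumption: an explicit statement also counts as an affirmative action.
    return stated_i_consent or affirmative_action
```

Under this rule, registering an email address would suffice for nonsensitive data but not for PHI, for which only a stated "I consent" would count.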

Some have proposed dynamic consent where patients should not only be notified when their data are used in research but also informed of the implications of the patient’s data being used in this research (20,21). However, such a scheme is extremely difficult because it requires continuous communication between patients and the individuals using their data. Dynamic consent would also not be possible for deceased patients. Meta consent is based on the idea that patients are given the opportunity to make choices on the basis of their preferences for how and when to provide consent (22–24). The advantages and disadvantages of meta consents are still being debated (24). Another challenging issue is that a patient may revoke his or her consent at any time. This may be covered under Article 17 of the U.K. GDPR, “right to erasure” (25), but it is unclear whether similar provisions are available in the United States.
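A minimal sketch can show what per-study consent with revocation might look like on the data side. It assumes a hypothetical in-memory `ConsentRegistry` keyed by patient identifier and a hypothetical `usable_rows` filter; none of these names come from the cited proposals. The sketch only filters records before they are used and does not address data that have already been used to train a model.

```python
from dataclasses import dataclass, field


@dataclass
class ConsentRecord:
    approved_studies: set = field(default_factory=set)
    revoked: bool = False


class ConsentRegistry:
    """Tracks which studies each patient has approved (hypothetical design)."""

    def __init__(self) -> None:
        self._records: dict = {}

    def grant(self, patient_id: str, study: str) -> None:
        record = self._records.setdefault(patient_id, ConsentRecord())
        record.revoked = False
        record.approved_studies.add(study)

    def revoke(self, patient_id: str) -> None:
        # Revocation withdraws every earlier approval for this patient.
        record = self._records.setdefault(patient_id, ConsentRecord())
        record.revoked = True
        record.approved_studies.clear()

    def may_use(self, patient_id: str, study: str) -> bool:
        record = self._records.get(patient_id)
        return (record is not None and not record.revoked
                and study in record.approved_studies)


def usable_rows(registry: ConsentRegistry, rows: list, study: str) -> list:
    """Keep only rows whose patient currently consents to the given study."""
    return [row for row in rows if registry.may_use(row["patient_id"], study)]
```

A patient with no record in the registry, such as one who was never asked, is excluded by default in this design. That default makes the communication burden noted above visible: every new study requires a new grant from each patient.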

More recently, there have been several startups in which patients are able to sell their data through centralized marketplaces (26,27). As an example, Equideum Health has recently launched Decentralized Equideum Exchange in partnership with Nokia. This platform allows patients to monetize their PHI and uses a specialized public/private hybrid blockchain and decentralized AI networks that support tokenization of datasets (26). The patient data are anonymized in a way that meets HIPAA regulations governing patient privacy rights and preserves privacy for health data sharing. Further research is required to evaluate the ethics surrounding patient consent in this setting. Local ethics review boards have a critical role in evaluating the ethics surrounding patient consent and should ensure that patients genuinely understand what they are contributing their data to and the scope of any research that will be performed using their data. A recent lawsuit in the United Kingdom involves a patient plaintiff who was very concerned that his data were shared with Google’s AI firm DeepMind by the Royal Free London National Health Service Foundation Trust (28).

Health Care Professionals

It is unclear if or how health care professionals give consent for their involvement in the creation of reference standard data or ground truth annotations for AI systems. These health care professionals’ data may be used to create an algorithm that will benefit future patients and future health care professionals, though they themselves may not directly benefit in some cases.

Health Care Systems

Health care systems may benefit through the sale of presumably anonymized patient data to AI companies. However, such actions raise the question of who owns the data within a health care system for extramural sharing of patient data. Are the data owned by the health care system, the department within the health care system that generated the data, the chief informatics officer, or the treating physician? Furthermore, consider a scenario where oncologists at an institution consult with an AI company and share the imaging data from their patients with that AI company. Are the data owned by the oncologists, the health care system, or the radiology department that stores the imaging data? Are the data also co-owned by others, such as the patient whose data are being shared? Further work is required to clearly understand the intramural ownership of data within health care systems. Further research is also required to understand good data sharing practices (29–31).

Conclusion

There are outstanding questions regarding reimbursement for the use of AI in health care. Given that data are now a commodity, the question of data ownership in this context is of paramount importance. There is no consensus on who owns medical data, or for how long. There are multiple stakeholders and multiple individuals who are essential when creating an AI system. Dissecting the individual contribution of each stakeholder and each individual to the development of an AI system is difficult and, in some cases, intractable. The scientific community urgently needs to discuss data ownership as it pertains to medical AI and how the use of medical AI will be reimbursed.

Footnotes

C.R. and R.S. contributed equally to this work.

Authors declared no funding for this work.

Disclosures of conflicts of interest: C.R. No relevant relationships. R.S. Deputy editor of Radiology: Artificial Intelligence.

References

- 1. Sessions V, Valtorta M. The Effects of Data Quality on Machine Learning Algorithms. Proceedings of the 11th International Conference on Information Quality, 2006; 485–498.

- 2. Riddick FA Jr. The code of medical ethics of the American Medical Association. Ochsner J 2003;5(2):6–10.

- 3. Kulynych J, Korn D. The new HIPAA (Health Insurance Portability and Accountability Act of 1996) Medical Privacy Rule: help or hindrance for clinical research? Circulation 2003;108(8):912–914.

- 4. Nosowsky R, Giordano TJ. The Health Insurance Portability and Accountability Act of 1996 (HIPAA) privacy rule: implications for clinical research. Annu Rev Med 2006;57(1):575–590.

- 5. Chiruvella V, Guddati AK. Ethical issues in patient data ownership. Interact J Med Res 2021;10(2):e22269.

- 6. eCFR: 45 CFR 164.316 -- Policies and procedures and documentation requirements. Code of Federal Regulations. https://www.ecfr.gov/current/title-45/subtitle-A/subchapter-C/part-164/subpart-C/section-164.316. Accessed May 15, 2022.

- 7. eCFR: 45 CFR Part 164 -- Security and Privacy. Code of Federal Regulations. https://www.ecfr.gov/current/title-45/subtitle-A/subchapter-C/part-164. Accessed May 15, 2022.

- 8. General Data Protection Regulation (GDPR) Web site. https://gdpr.eu/. Accessed May 15, 2022.

- 9. Telenti A, Jiang X. Treating medical data as a durable asset. Nat Genet 2020;52(10):1005–1010. [Published correction appears in Nat Genet 2020;52(12):1433.]

- 10. Balthazar P, Harri P, Prater A, Safdar NM. Protecting your patients’ interests in the era of big data, artificial intelligence, and predictive analytics. J Am Coll Radiol 2018;15(3 Pt B):580–586.

- 11. Mittelstadt BD, Floridi L. The ethics of big data: current and foreseeable issues in biomedical contexts. Sci Eng Ethics 2016;22(2):303–341.

- 12. Lacks H. Henrietta Lacks: science must right a historical wrong. Nature 2020;585(7823):7.

- 13. Wolinetz CD, Collins FS. Recognition of research participants’ need for autonomy: remembering the legacy of Henrietta Lacks. JAMA 2020;324(11):1027–1028.

- 14. Lam DL, Medverd JR. How radiologists get paid: resource-based relative value scale and the revenue cycle. AJR Am J Roentgenol 2013;201(5):947–958. [Published correction appears in AJR Am J Roentgenol 2014;202(3):699.]

- 15. Ahuja AS. The impact of artificial intelligence in medicine on the future role of the physician. PeerJ 2019;7:e7702.

- 16. Mazurowski MA. Artificial intelligence may cause a significant disruption to the radiology workforce. J Am Coll Radiol 2019;16(8):1077–1082.

- 17. Topol EJ. High-performance medicine: the convergence of human and artificial intelligence. Nat Med 2019;25(1):44–56.

- 18. Lee D, Yoon SN. Application of artificial intelligence-based technologies in the healthcare industry: opportunities and challenges. Int J Environ Res Public Health 2021;18(1):271.

- 19. Beam AL, Kohane IS. Translating artificial intelligence into clinical care. JAMA 2016;316(22):2368–2369.

- 20. Prictor M, Lewis MA, Newson AJ, et al. Dynamic consent: an evaluation and reporting framework. J Empir Res Hum Res Ethics 2020;15(3):175–186.

- 21. Kaye J, Whitley EA, Lund D, Morrison M, Teare H, Melham K. Dynamic consent: a patient interface for twenty-first century research networks. Eur J Hum Genet 2015;23(2):141–146.

- 22. Ploug T, Holm S. Meta consent - a flexible solution to the problem of secondary use of health data. Bioethics 2016;30(9):721–732.

- 23. Cumyn A, Barton A, Dault R, Safa N, Cloutier AM, Ethier JF. Meta-consent for the secondary use of health data within a learning health system: a qualitative study of the public’s perspective. BMC Med Ethics 2021;22(1):81.

- 24. Ploug T, Holm S. Meta consent: a flexible and autonomous way of obtaining informed consent for secondary research. BMJ 2015;350:h2146.

- 25. Art. 17 GDPR - Right to erasure (‘right to be forgotten’). General Data Protection Regulation (GDPR). https://gdpr.eu/article-17-right-to-be-forgotten/. Accessed May 15, 2022.

- 26. Equideum Health Web site. https://equideum.health/. Accessed May 15, 2022.

- 27. Hu-manity.co Web site. https://hu-manity.co/. Accessed May 15, 2022.

- 28. DeepMind faces legal action over NHS data use. BBC News. https://www.bbc.co.uk/news/technology-58761324. Published October 1, 2021. Accessed May 15, 2022.

- 29. Kalkman S, Mostert M, Gerlinger C, van Delden JJM, van Thiel GJMW. Responsible data sharing in international health research: a systematic review of principles and norms. BMC Med Ethics 2019;20(1):21.

- 30. Morley J, Machado CCV, Burr C, et al. The ethics of AI in health care: A mapping review. Soc Sci Med 2020;260:113172.

- 31. Cath C, Wachter S, Mittelstadt B, Taddeo M, Floridi L. Artificial Intelligence and the ‘Good Society’: the US, EU, and UK approach. Sci Eng Ethics 2018;24(2):505–528.