Abstract

Machine learning is a powerful tool for creating computational models relating brain function to behavior, and its use is becoming widespread in neuroscience. However, these models are complex and often hard to interpret, making it difficult to evaluate their neuroscientific validity and contribution to understanding the brain. For neuroimaging-based machine-learning models to be interpretable, they should (i) be comprehensible to humans, (ii) provide useful information about what mental or behavioral constructs are represented in particular brain pathways or regions, and (iii) demonstrate that they are based on relevant neurobiological signal, not artifacts or confounds. In this protocol, we introduce a unified framework that consists of model-, feature- and biology-level assessments to provide complementary results that support the understanding of how and why a model works. Although the framework can be applied to different types of models and data, this protocol provides practical tools and examples of selected analysis methods for a functional MRI dataset and multivariate pattern-based predictive models. A user of the protocol should be familiar with basic programming in MATLAB or Python. This protocol will help build more interpretable neuroimaging-based machine-learning models, contributing to the cumulative understanding of brain mechanisms and brain health. Although the analyses provided here constitute a limited set of tests and take a few hours to days to complete, depending on the size of data and available computational resources, we envision the process of annotating and interpreting models as an open-ended process, involving collaborative efforts across multiple studies and laboratories.

Introduction

Machine learning (ML) and predictive modeling1,2—which encompasses many use cases of ML to predict individual observations—have provided the ability to develop models of the brain systems underlying clinical, performance and other outcomes, and to quantitatively evaluate the performance of those models to validate or falsify them as biomarkers. Because of these characteristics, ML has rapidly increased in popularity in both basic and translational research2–5 and forms the core of several now-common approaches, including brain decoding6–10, multivariate pattern analysis11, information-based mapping12 and pattern-based biomarker development2,13–16. By enabling the investigation of brain information that is simultaneously (i) finer-grained and more precise than traditional brain mapping and (ii) distributed across multiple brain regions and voxels, the use of ML in neuroimaging experiments has provided new answers to many enduring research questions11,17–19.

However, this rise in popularity is accompanied by concerns about the ‘blackbox-ness’ of ML models20,21. For basic neuroscientists, it is unclear how ML models will advance our neuroscientific knowledge if the models rely on hidden or complex patterns that are uninterpretable to researchers. For users in applied settings, it is unclear whether, and under what conditions, complex ML models will be trustworthy enough to contribute to the life-altering decisions made every day in medical and legal settings20. Without knowing why and how a model works, it is difficult to know when the model will fail, to which individuals or subgroups it applies and how it can advance our understanding of the neurobiological mechanisms underlying clinical and behavioral performance. In addition, some models are neurobiologically plausible and capture important aspects of brain function, whereas others capitalize on confounds such as head movement22. These models do not contribute equally to our understanding of the brain. Therefore, there is a pressing need for methods to help interpret and explain the model decisions23–26 and provide neuroscientific validation for neuroimaging ML models2.

Methods for interpreting predictive models in neuroimaging studies must address several key issues. First, neuroscience has a long-standing interest in understanding localized functions of individual brain areas or connections, whereas ML often focuses on developing integrative brain models (e.g., using patterns of whole-brain activity) that are highly complex and difficult to understand. Second, there is a tension between the goals of achieving high predictive accuracy versus providing mechanistic insights into underlying neural or disease processes27–30. Ideally models would achieve both goals, but these often do not go hand in hand. Biologically plausible models, such as biophysical generative models31–33 or biologically plausible neural network models34, use biological constraints (e.g., imaging data or findings in literature) and are built upon neurobiological principles. Predictive performance is usually less of a concern; rather, the goal is to capture and manifest human-like behaviors35. On the other hand, models that focus solely on predictive performance may achieve high accuracy but are often not human readable and reveal little about the underlying neural mechanisms involved36. Although neuroscientific explanation and predictive accuracy are distinct goals, they are not in opposition, and models developed for prediction can provide biological insights at several levels of abstraction. For example, deep neural networks trained for accurate image classification share common properties with the human visual system37 and are being used to understand the types of information represented in discrete brain regions38. Predictive models can also be inspired by neuroscientific findings, as deep neural networks for image recognition have been39,40. Third, ML, as well as traditional statistical methods, can be sensitive to variables that are correlated with, but not causally related to, outcomes of interest, and thus can be sensitive to systematic noise and confounds in data (e.g., head motion, eye movement, physiological noise). Models that use confounding variables to predict are not only uninterpretable but also behave unpredictably in new samples.

Therefore, for neuroimaging-based ML models to be interpretable to neuroscientists and users in applied settings, the models should (i) be readable and understandable to humans, (ii) provide useful information about what mental or behavioral constructs are represented in particular brain pathways or regions, and (iii) demonstrate that they are based on relevant neurobiological signals, not confounds. These goals require prioritizing model simplicity and sparsity over a complete description of brain function. The most interpretable models are not necessarily the most ‘correct’ ones—the brain and human behaviors are intrinsically complex and high dimensional, creating an unavoidable trade-off between biological precision and interpretability. However, as George Box famously wrote41, ‘All models are wrong, but some are useful’. On the other hand, this trade-off must be managed carefully. More complex models may better reflect the structure of the underlying biological mechanisms; therefore, prioritizing interpretability may come at a cost in biological realism, undercutting our understanding of how the brain works. As Albert Einstein said, ‘Everything should be made as simple as possible, but no simpler’.

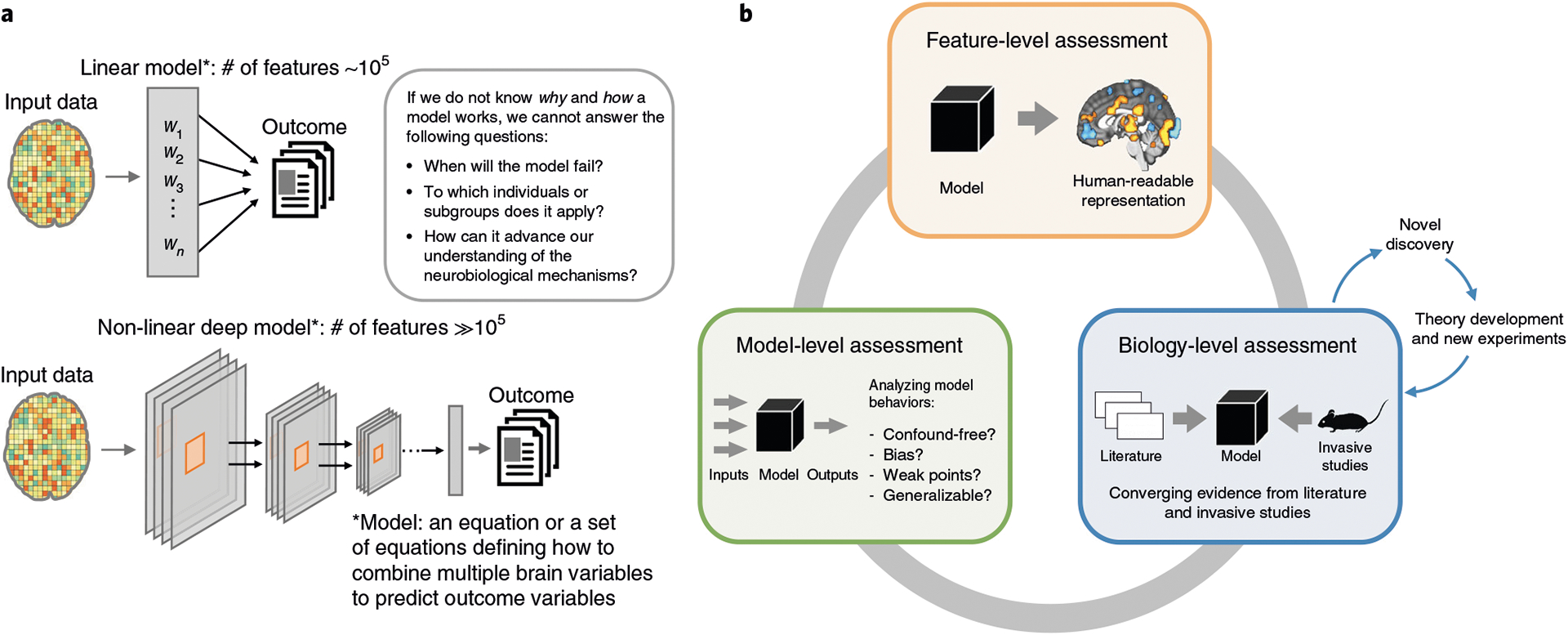

Whatever the chosen level of complexity of a model, tools for interpreting it can increase its usefulness by showing that the model can provide a useful approximation to more complex biological mechanisms. However, the nature of neuroimaging data makes interpretation of the models challenging (Fig. 1a). Neuroimaging produces high-dimensional data with a low signal-to-noise ratio and strong correlations between features. Moreover, the number of observations in neuroimaging studies (the sample size n, often in the tens or hundreds) is small in general compared to the number of features (p = ~105 in the case of whole-brain functional magnetic resonance imaging (fMRI) activation pattern-based models). Models built with p ≫ n data are susceptible to overfitting and often do not generalize well. Numerous studies have been focused on decreasing dimensionality or solving the p ≫ n problem as a way of enhancing interpretability. For example, regularizing model parameters by imposing sparsity has often been considered as one of the key strategies for enhancing model interpretability, and thus many different regularization methods have been developed42–46. However, these statistical methods do not provide a unified framework that can be used with a heterogeneous set of methods and algorithms to evaluate and improve interpretability of neuroimaging-based ML models. In addition, the ML algorithms do not, in themselves, provide any constraints related to neuroscientific interpretation and validity. Therefore, interpreting a neuroimaging ML model is a complex problem that is not solvable at the algorithmic level; it requires a multi-level framework and a multi-study approach.

Fig. 1 |. Model complexity in neuroimaging and the model interpretation framework.

a, Neuroimaging-based ML models are usually built upon a large number of features (e.g., ~105 in the case of whole-brain fMRI), which, along with considering potential confounds and correlations between features, makes even linear models complex. In the case of nonlinear models, the situation is more complicated, as it is not clear what a model uses as features. To trust models and find them useful in basic neuroscience and clinical settings, researchers need to know why and how a model works. b, The model interpretation framework consists of three levels of assessment. In the model-level assessment, the model is evaluated as a whole, and the characteristics of the model are derived mainly from observations of the input–output relationship. The assessment includes tests of specificity, sensitivity and generalizability, analyses of model’s representations and decisions and analyses of noise contribution. The feature-level assessment aims to identify features significant for a prediction within a model. The feature significance can be evaluated based on the feature’s impact on predictions or the feature’s stability across multiple samples of the training data. The explanation obtained by this level of assessment should enhance human readability of the model. The biology-level assessment aims to prove the neuroscientific plausibility of the model with evidence from previous literature and other studies using different methodology (e.g., invasive studies). In case a model suggests a novel finding that cannot be verified by the current state of the art, the model can serve as a basis for theory development, which should subsequently be corroborated by studies employing other (e.g., invasive) experimental methods.

In this protocol, we first propose a unified framework for interpreting ML models in neuroimaging based on model-level, feature-level and neurobiology-level assessments. Then, we provide a workflow that illustrates how this framework can be employed to predictive models, along with practical examples of analyses for each level of assessment with a sample fMRI dataset (available for download at https://github.com/cocoanlab/interpret_ml_neuroimaging). Although these methods can in principle be used for any type of model and data (e.g., predicting individual differences in personality or clinical symptoms based on structural neuroimaging data or functional connectivity patterns; predicting trial-by-trial responses within individuals), our example code focuses on classification models based on whole-brain, task-related fMRI activity patterns combining multiple participants’ data. Nevertheless, the analyses can be easily adapted to regression-based problems (e.g., predicting ratings of task stimuli) and can be extended to models built on other feature types, such as structural data or functional connectivity data.

Overview of the framework

In this section, we first establish a broader context for our proposed framework. Based on this framework, we provide a protocol that includes some selected analysis methods from each assessment level. As shown in Fig. 1b, the proposed framework consists of three levels of assessment: model-, feature- and biology-level assessments. Table 1 provides descriptions and example methods for subcategories of each level of assessment.

Table 1 |.

Descriptions and example methods for different levels of assessments

| Category | Description | Example methods |

|---|---|---|

| Model-level assessment | Models are characterized by… | |

| Sensitivity and specificity | Examining the response patterns of predictive models to different inputs and experimental conditions | Positive and negative controls13,47,118; testing models across a range of different conditions18; construct validity84; tuning curves of brain patterns86 |

| Generalizability | Testing models on multiple datasets from different samples, contexts and populations | Research consortia and multisite collaborations119 |

| Behavioral analysis | Analyzing the patterns of model decisions and behaviors over many instances and examples (or over time for adaptive models) | Methods analogous to psychological tests50; analyses at given time points52; error analysis120 |

| Representational analysis | Analyzing model representations using examples or representational distance | Deep dream71; deep k-nearest neighbors121; representational similarity analysis56 |

| Analysis of confounds | Examining whether any confounding factors contribute to the model (e.g., head movement, physiological confounds or other nuisance variables) | Comparison of the model of the signal of interest with a model of the nuisance variables122 |

| Feature-level assessment | Significant features are identified by… | |

| Stability | Measuring the stability of the selected features and predictive weights over multiple tests (e.g., cross-validation or resampling) | Stability analysis61,123; bootstrap test13,124; surviving count on random subspaces125; pattern reproducibility126 |

| Importance | Measuring the impact of features on a prediction | rFe63,127,128; variable importance in projection61; sensitivity analysis66; feature importance ranking measure129; measure of feature importance130; leave-one-covariate-out131; LRP64,69; local model-agnostic explanations (LIME)67; deep learning feature importance132; Shapley additive explanations (SHAP)133; regularization42–44; virtual lesion analysis47; in silico node deletion134; weight-activation product135 |

| Visualization | Visualizing feature-level properties | Class model visualization136; saliency map70; weight visualization18 |

| Biology-level assessment | Neurobiological basis is established by… | |

| Literature | Relating predictive models to previous findings from literature across different tasks, modalities and species (e.g., meta-analysis) | Meta-analysis18,77; large-scale resting-state brain networks83,84 |

| Invasive studies | Using more invasive methods, such as molecular, physiological and intervention-based approaches | Gene overexpression and drug injection88; transcranial magnetic stimulation90; postmortem assay91; optogenetic fMRI89 |

Model-level assessment

Model-level assessment treats and evaluates a model as a whole and characterizes the model based on its response patterns in different testing contexts and conditions. This includes, for example, various measures of model performance. Sensitivity and specificity concern whether a model shows a positive response when there is true signal (e.g., an outcome of interest has occurred) and negative response when there is no true signal. Generalizability concerns whether a model performs accurately on data collected in different contexts or with different procedures—e.g., data from out-of-sample individuals not used in model training or data from different laboratories, scanners, populations and experimental paradigms2 (for more detailed definitions of these terms, please see ref. 1). These types of measurement properties should be rigorously evaluated to understand what the model really measures and how it performs in different test contexts2,13,47,48. More broadly, these analyses can be seen as behavioral analyses of a model—investigating patterns of model behaviors (e.g., model decisions and responses) over multiple instances and examples49. This is similar to the study of human behavior using psychological tests. For example, a previous study examined a model’s ‘implicit biases’ using behavioral experiments and measures designed for ML models50. In another study, researchers developed a new ML model that can learn other ML models’ internal states (e.g., machine theory of mind51). For adaptive models, one can examine changes in model behaviors and learning over time, similar to the study of human developmental psychology52.

In addition, representational similarity analyses can be used to examine models’ internal representations and their relationships with different models and different brain regions53–55. Representational similarity analyses examine the similarity among a set of experimental conditions or stimuli on two multivariate measures—for example, vector representations across units in an artificial neural network or multi-voxel patterns of fMRI activity. As an example, a previous study examined representational similarities and differences between multiple computational models, including a deep neural network, and activity patterns in the inferior temporal cortex56.

Finally, one of the most important assessments at the model level is to examine potential contributions of noise and nuisance variables to a model and its predictions. Many different confounding factors such as physiological and motion-related noise are pervasive in neuroimaging data and present challenging issues that need careful attention57–60. These confounds can creep into training data and be utilized by predictive models to enhance their performance. The problem is that, if a model relies on information from the confounding variables, the model cannot be robust across contexts, because it will fail in samples without the same confounds or when methodological improvements (e.g., better noise-removal techniques) mitigate them. More importantly, those models that rely on nuisance variables will teach us nothing about the neurobiology of target outcomes. Therefore, researchers should provide evidence that their models are not influenced by confounds and nuisance variables to the degree possible. One way to do this is to test and show whether model predictions, features or outcomes are independent from nuisance variables. For example, one can test whether an ML model based on nuisance variables, such as in-scanner motion parameters, can predict either (i) responses/predictions made by a model of interest or (ii) the outcomes of interest13,18. If the nuisance model cannot predict these, model performance is unlikely to be driven by those nuisance variables.

This protocol includes multiple model-level assessment steps, including evaluation of model performance and generalizability (Steps 2 and 3 and Steps 8–10), potential influences of confounds (Steps 4–6) and a representational similarity analysis on multiple predictive models based on their performance (Steps 12–15).

Feature-level assessment

Feature-level assessment includes methods that evaluate the significance of individual features, such as voxels, regions or connections, that are used in prediction. The methods can be broadly categorized as (i) methods for evaluating feature stability, (ii) methods for evaluating feature importance, and (iii) methods for visualization.

Methods for assessing stability of features measure how stable each feature’s contribution (or predictive weight) is over multiple models trained on held-out datasets using resampling methods or cross-validation13,61. For example, in bootstrap tests, data are randomly resampled with replacement, and a model is trained on the resampled data62. This procedure is repeated multiple times (e.g., 10,000 iterations), and the stability of predictive weights can then be evaluated using the z and P values based on the mean and standard deviation of the sampling distribution of predictive weights. After correction for multiple comparisons, the features with predictive weights significantly different from zero based on the P values can be selected and visualized in standard brain space.

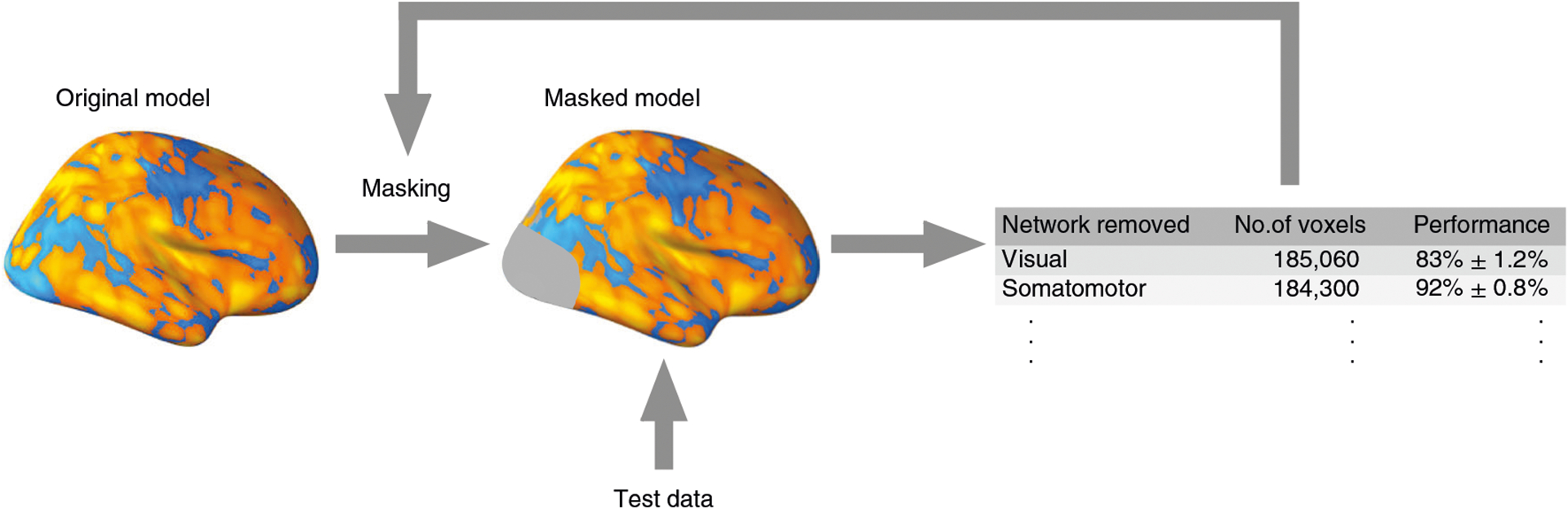

Methods for assessing feature importance focus on the impact of a feature on a prediction. These include methods that directly use the magnitude of predictive coefficients (e.g., recursive feature elimination (RFE)63), methods using feature-wise decomposition of the prediction (e.g., layer-wise relevance propagation (LRP)64 and Shapley values65) and methods using perturbation or ‘lesions’ (omission) of features47,66–68, among many others (see Table 1). For example, in RFE, the importance of a feature is estimated by the absolute value of its corresponding predictive weight, and less important features (i.e., with low predictive weights) are eliminated recursively. The ‘virtual lesion’ analysis47 has also been used to assess the feature importance. In the ‘virtual lesion’ analysis, a researcher first defines meaningful groups of features (e.g., brain parcellations or functional networks), removes each group of features from a model at each iteration and tests the predictive performance of the reduced model. A large decrease in the model performance indicates that the virtually lesioned features are necessary for the model to perform well. In LRP, the prediction score of a nonlinear classifier (e.g., neural network) is decomposed and recursively propagated back to the input feature level so that the contribution of each feature to the final prediction can be quantitatively identified and visualized64,69. These methods cannot fully explain complex models, because isolated features are often insufficient to predict either outcomes or full model performance, but they can help explain what is driving a model’s predictions.

Visualization methods provide ways to make a model human readable and thus enhance its interpretability. In case of linear models, visualizing important features is straightforward because significant predictive weights can be directly displayed on a feature space (e.g., a brain map). For nonlinear models, visualizing feature-level interpretation is not simple, but it is possible to visualize importance or stability scores calculated at the feature level on a feature space (using, e.g., a heat map64 or saliency map70). Another visualization technique for artificial neural networks is to examine what individual units or layers in a network represent by adjusting input patterns to maximize the activation of a target unit or layer (e.g., DeepDream71). Table 2 provides more details on a few selected feature-level assessment methods.

Table 2 |.

Selected methods of feature-level assessment

| Method | Applicability | Purpose of the method | Description | References |

|---|---|---|---|---|

| Stability analysis | Linear models | Feature selection based on feature stability | A model is repeatedly trained on resampled data using a sparse method. In each iteration, only a subset of features is selected by the algorithm. Features selected with a frequency above a threshold are considered stable. | Refs. 61, 123,125 |

| Bootstrap tests | Linear models | Identification of significant features within the training dataset based on feature stability | Training data are repeatedly resampled with replacement. Each sample serves as training data for a new model. Features with stable weights across the models are identified as stable and significant. | Refs. 13,124 |

| RFE | Linear models | Feature selection based on feature importance | A model is iteratively trained, and features corresponding to its lowest weights are eliminated from the training dataset. The process is repeated until the desired number of features is reached. | Ref. 128 |

| Sensitivity analysis | Model agnostic | Identification of significant features within a given data point based on feature importance | The input features are disrupted (e.g., by additive noise). The resulting error in the output is measured. Features that caused the largest error after being disrupted are considered important for the prediction. | Ref. 66 |

| LRP | Neural networks | Same as above | A prediction score is decomposed backward through the layers of the model until it reaches the input when a relevance score is assigned to each input feature. | Ref. 64 |

| LIME | Model agnostic | Same as above | A prediction is explained by an interpretable model fitted to sampled instances around the instance being explained. | Ref. 67 |

| SHAP | Model agnostic | Unified measure of feature importance | SHAP is a framework that provides the incremental impact of each feature on model decisions using Shapley values. This framework unifies some other model explanation methods (e.g., LIME and LRP). | Ref. 133 |

In this protocol, we propose four options for feature-level assessment (Step 7 of the protocol): bootstrap tests, RFE and ‘virtual lesion’ analysis for linear models and LRP for explaining nonlinear models. We visualize the significant features (or feature relevance scores in the case of LRP) on a standardized brain space.

Biology-level assessment

Biology-level assessment aims to provide additional validation for a model based on its neurobiological plausibility. Plausibility is based on converging evidence from other types of neuroscientific data, including previous studies, additional datasets or other techniques, particularly those that provide more direct measures of brain function or direct manipulation of brain circuits (e.g., intracranial recordings, optogenetics). Such validation is important for at least two reasons. First, it helps to elucidate what types of mental and behavioral representations are being captured in a predictive model. Second, it provides a bridge between ML models and neuroscience, helping neuroimaging-based ML models contribute to understanding mental processes and behaviors.

However, there are inherent challenges in identifying the neurobiological mechanisms underlying neuroimaging-based ML models and validating them against other techniques and datasets. Most ML algorithms have no intrinsic constraints related to neuroscientific plausibility. In addition, ML models are usually developed to maximize the model’s performance while being agnostic about its neurobiological meaning and validity. It may not be possible to provide definitive answers for biology-level assessment in many cases. Rather, the assessment should be regarded as an open-ended investigation that requires long-term sharing and testing the properties of established models. This is a multi-study, multi-technique and multidisciplinary process.

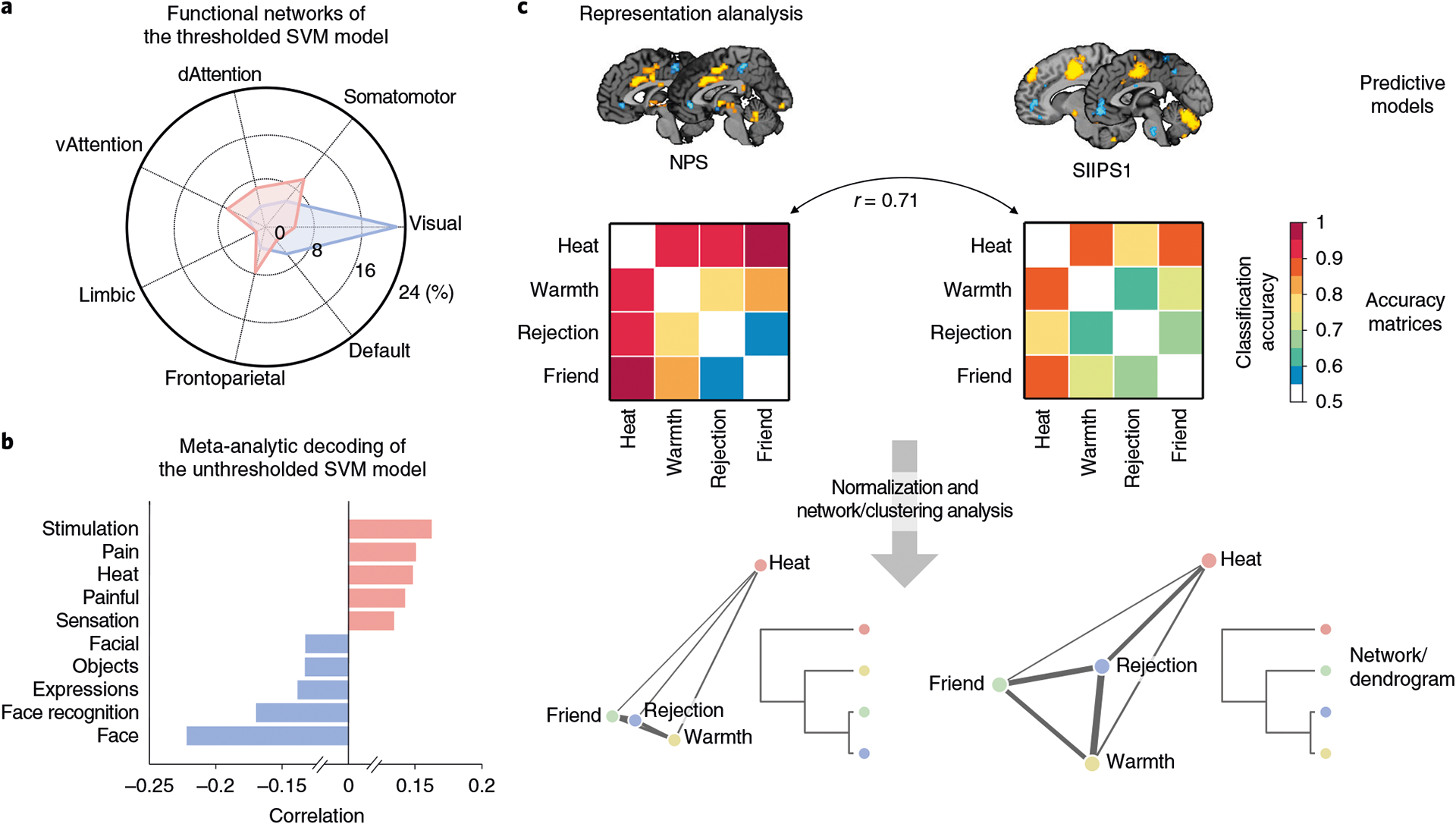

One way to examine neurobiological plausibility and validity of an ML model is to evaluate results from feature- and model-level assessments in the light of neuroscience literature across various modalities and species. For example, Woo et al.18 developed an fMRI-based ML model for predicting pain and examined the local pattern topography of predictive weights for some key brain regions in the model, including basal ganglia and amygdala, and found that their local patterns of predictive weights were largely consistent with previous findings in rodents72–74 and non-human primates75,] as well as in human literature76–78. In addition, one can examine what an ML model may represent (‘decode’ a model) using a meta-analytic approach77,79—for example, term-based decoding with automated meta-analysis tools (e.g., neurosynth.org80) and map-based decoding using an open neuroimaging database (e.g., openneuro.org81 or neurovault.org82). Another possibility is to examine the current ML model in relation to previously established large-scale resting-state brain networks48,83–85 or existing multivariate pattern-based neuroimaging markers86. The protocol below specifies two options for biology-level assessment: the analysis of the model in terms of its overlap with large-scale resting-state networks defined by Yeo et al.87 and meta-analysis–based decoding using Neurosynth80 (Step 11).

Other types of biological validation are beyond the scope of the current protocol but are important, particularly, searching for converging evidence from invasive studies that employ molecular, physiological and intervention-based approaches in animal88,89 or human studies90,91. Some methodologies may not be practical for widespread use as predictive models because they are more invasive, are testable only in special populations or cannot be tested in humans at all; however, they can provide valuable converging evidence, increasing our understanding of what a model measures. For example, Hultman et al.88 recently developed electrical neuroimaging biomarkers of vulnerability to depression using local field potentials in mice. They then assessed their models using multiple biological methods, including gene overexpression (molecular) and drug injection (physiological and intervention-based methods), and showed that their models responded to multiple ways of inducing vulnerability to depression. For humans, researchers cannot easily use invasive methods, but non-invasive interventions, such as transcranial magnetic stimulation90, and some more invasive methods, such as electrocorticography or post-mortem evaluation91, can also be used in some cases.

Although converging evidence from existing studies and theories can help validate a model, even the models that are not corroborated by existing neurobiological knowledge can also play an important role by promoting new discovery and theory building in neuroscience. For example, neuroimaging-based ML models for pain could reveal new substrates for pain perception in regions not previously understood as ‘pain-processing’ regions, leading to new discoveries of potential brain targets for further research and intervention92. Thus, the biology-level assessment does not need to be limited to currently available theories. Rather, researchers should be open to building new hypotheses and theories inspired by ML models, which can be subsequently tested with invasive methods or other modalities.

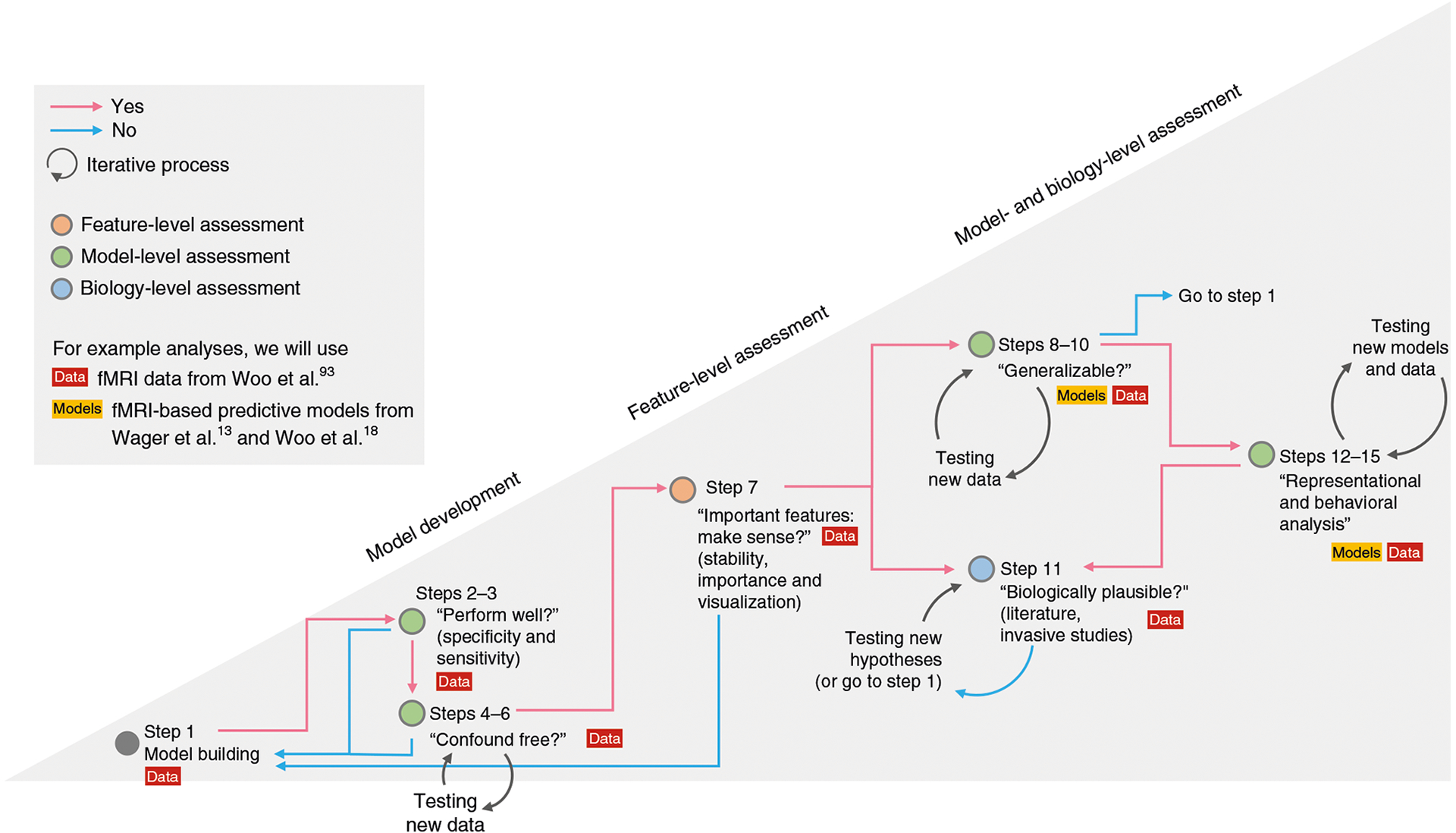

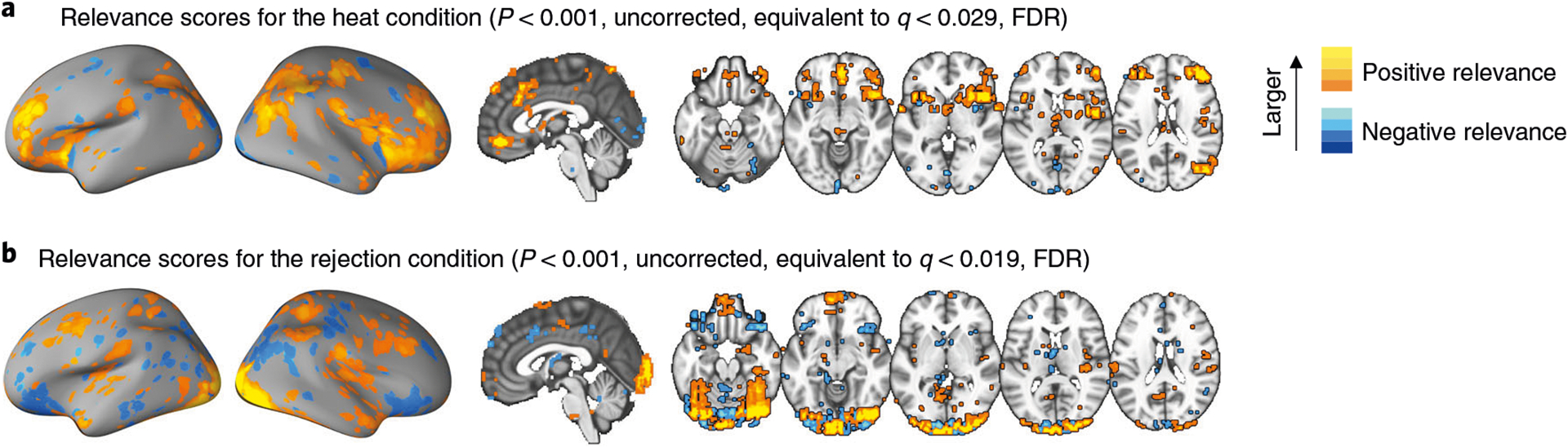

Development of the protocol

The proposed framework and analyses have been developed and discussed in multiple previous publications from our research group2,13,18,19,47,48,93, in which we have developed fMRI-based ML models for several different target outcomes. These previous studies used different methods and approaches to validate and interpret the models. Here, we aim to unify these various approaches into one framework and implement a workflow that can guide model validation and interpretation (Fig. 2). To practically demonstrate the use of the workflow, we apply its methods to a published fMRI dataset93. In the fMRI experiment, participants (N = 59) completed a somatic pain task and a social rejection task. In the somatic pain task, participants experienced painful heat or non-painful warmth, whereas in the social rejection task, participants viewed photographs of their ex-partners or friends. We used these data to build and interpret classification models. Although this protocol provides examples of only a few selected analyses and covers only non-invasive methods, other validation methods and steps should be employed as available. In addition, although the previous studies from our research group have generally used linear models, this framework can be applied to any type of ML model, including deep learning models.

Fig. 2 |. A proposed workflow for the procedure.

In this protocol, we present an example workflow that implements the unified model interpretation framework. The first step is the model building (Step 1). This is a prerequisite step that stands outside the interpretation framework. However, we include a brief description of this step to emphasize its importance. This protocol includes examples of building linear support vector machines (option A) and a convolutional neural network model (option B) using an example dataset from our previous publication93. Next, we evaluate the basic properties of a model, such as its predictive power (Steps 2 and 3) and contributions of confounds (Steps 4–6). In case either of the two steps shows insufficient quality of the model, one should return to Step 1 to review the data quality and to revise the model. Note that obtaining a definitive answer to the question of Steps 4–6 (i.e., whether the model is confound free) is challenging, and therefore Steps 4–6 should be an open-ended investigation. If the results from Steps 2 and 3 and Steps 4–6 are good enough to move forward, the next step (Step 7) is the feature-level assessment. This protocol provides analysis examples of four options of identifying significant features: bootstrap tests, RFE, ‘virtual lesion’ analysis and LRP. If the identified significant features provide sensible results, one can continue to Steps 8–10 and Step 11. Otherwise (e.g., all the significant features are located within the ventricles), one should revisit the model building. Generalizability testing (Steps 8–10) and biology-level assessment (Step 11) can be performed in an arbitrary order. In Steps 8–10, a model is tested for its generalizability to unseen data from new individuals, different laboratories, scanners and contexts. Testing generalizability requires new test data, which can take a long time to collect. Therefore, one can first examine the model’s biological validity (Step 11) and then test its generalizability, or vice versa. Both generalizability testing and biology-level assessment require open-ended test processes and should support each other; more generalizable models are likely to be more biologically plausible. For Step 11, we provide examples of two options: examining the relationship of the model with the large-scale resting-state functional networks (option A) and term-based meta-analytic decoding using Neurosynth80 (option B). In practice, this step should also include exhaustive literature reviews and support of invasive studies. The step can also be performed multiple times in case the model suggests novel theories that should be evaluated. The final step of this workflow is the representational analysis (Steps 12–15), which can provide a better understanding of the model’s decision principles by examining the patterns of model behaviors over multiple instances and examples. This step often requires other models with which to be compared, and for this reason, we include this as the last step of the workflow. However, if other models are already available, this step can be done earlier. The results from Steps 12–15 could provide converging evidence for Step 11. Since interpreting an ML neuroimaging model is, in fact, an open-ended process, this workflow should be regarded as the bare minimum, and more analyses other than the ones proposed here can help the model interpretation.

Comparison with other methods

Many previous approaches to improving interpretability have focused on model sparsity or constraining models to include a small number of variables. Various regularization techniques have been used for this purpose. Least-absolute-shrinkage-and-selection-operator (LASSO)42 and ElasticNet43 regression, for example, impose non-structured sparsity, without constraints on how variables are grouped when considering their inclusion. GraphNet44 and hierarchical region-network sparsity45 are examples of methods that impose structured sparsity, incorporating prior knowledge of brain anatomical specialization into the model-selection process. These structured methods result in grouped voxels in few clusters and promise easier interpretation than non-structured sparse models. However, imposing sparsity may not always be relevant to establishing neurobiologically valid brain models: sparse solutions may not provide the whole picture of complex interactions among many different players involved in a complex biological system.

Other studies have considered additional objective functions beyond predictive accuracy. Model stability, or reproducibility of model parameters across samples, is important for interpretability: models with unstable parameters have no consistent biological features to interpret. For example, Rasmussen et al.94 showed that there is a trade-off between prediction accuracy and the spatial reproducibility of a model, and concluded that regularization parameters should be selected considering both model reproducibility and interpretability. Baldassarre et al.95 also investigated the effects of several regularization methods on model stability and suggested that model stability can be enhanced by adding reproducibility as a model selection criterion.

Another important approach for enhancing model interpretability is dimensionality reduction. Principal component analysis or independent component analysis has often been used, and they can be combined with methods imposing sparsity96,97. However, at present, principal component analysis and independent component analysis are also used to extract characteristic features from single-modality or multimodal neuroimaging data98–100.

Most of these studies, however, focused only on one or a small number of aspects of model interpretation that can partially improve the interpretability. We aim to provide a unified framework for assessing model interpretability in multiple ways, along with concrete example analyses.

Limitations

This protocol aims to provide concrete analysis examples of the minimum set of components for the different levels of model interpretation. However, interpreting ML models in neuroimaging is intrinsically an open-ended process, and therefore the protocol provided here cannot cover all possible methods. In addition to the presented methods, users of the protocol may want to, for example, support the biological interpretation of their models by thorough literature review or conducting additional experiments focusing on the underlying neurobiological mechanisms of the models using invasive animal and human studies.

In this protocol, we sometimes make choices on algorithms and parameters based on previous research, though some of the decisions could have a direct impact on the model performance and interpretation. We recommend that researchers do not blindly use our choices as defaults. The algorithms we use (e.g., support vector machines (SVMs)) are not the only or even the best for many applications. The validation and process we implement could be used with many choices of algorithms (including both regression and classification algorithms), many outcomes (e.g., decoding stimulus conditions, predicting within-person behavior or predicting between-person clinical characteristics) and multiple types of data (e.g., structural images, functional task-related images, functional connectivity or arterial spin labeling/positron emission tomography/magnetic resonance spectroscopy images). However, for all of these choices, additional data- and outcome-type specific validation procedures are likely to be useful. Therefore, this protocol is a useful starting point but should not be taken as a complete description of the validation steps for all algorithms, data types and outcome types. Researchers should make deliberate choices on algorithms and parameters to answer their research questions. In addition, although our model interpretation framework can be applied to many types of models and data (e.g., fMRI connectivity, structural MRI and other imaging modalities), we do not provide example code for all possible applications.

This protocol provides analysis examples for feature-level assessment for a nonlinear model as well as a linear model. For a nonlinear model, we used LRP64, but only one previous neuroimaging study has used this method69. Although the method for the nonlinear model yielded similar results to the methods for the linear model in our analyses, the method for the nonlinear model presented here should be considered as an example and investigated further in future studies. In addition, other components of the model-level assessment (e.g., noise analysis and representational analysis) have not been tested with nonlinear models.

Finally, this protocol includes only two simple methods for biology-level assessment. However, in practice, biology-level validation should involve experiments using multiple modalities and approaches and collaborative efforts among multiple laboratories to search for converging evidence. We emphasized the importance of these approaches above, but such methods cannot be fully summarized in one protocol.

Overall, this protocol should serve as a sample practical implementation of the framework. There can be multiple equally valid analysis options that can achieve the same level of model interpretation. We encourage investigators to use the analysis methods and workflow proposed here but also to use different methods and a workflow that suits their research goals and experimental contexts.

Overview of the procedure

In this protocol, we provide a workflow that can guide a practical implementation of the framework (Fig. 2). To achieve most of the components of the workflow, we use the CANlab interactive fMRI analysis tools (Box 1), which are publicly available MATLAB-based analysis tools (see Materials for details on availability). The list of functions from the CANlab tools used in the protocol can be found in Table 3.

Box 1 |. CANlab interactive fMRI analysis tools.

Neuroimaging analyses are widely performed in well-established pipelines with optimized procedures. However, to discover new and better ways of neuroimaging data analysis, and to avoid spurious results, there is also a need for flexibility to allow users to be creative and explore the data and analysis methods. The CANlab neuroimaging analysis tools were designed with this goal in mind. The tools provide a high-level language for interacting with fMRI data. Users can apply simple commands to perform various analyses and to explore the distribution of data and consequences of different analysis choices. As a result, analysis scripts can be short, transparent and easy to read, write and interpret.

The CANlab imaging analysis tools enable interactive neuroimaging data analysis using objects with simple methods operating in MATLAB. There are eight main object classes suitable for different types of analyses. To perform analyses in this protocol, we use fmri_data and statistic_image objects, which both represent subclasses of the image_vector data class. A representative object of the image_vector data class contains basic information about loaded images, such as the data values in the .dat field, indicators of removed data in .removed_voxels and .removed_images fields, details about the volume information of the image in the .volInfo field or a record of past manipulations with the object in the .history field. Its subclasses, such as fmri_data and statistic_image, then inherit these basic properties. There are also various methods that can be performed on image_vector objects or other objects from its all subclasses. For example, one can save memory using the remove_empty method, which removes all empty voxels and images from the object but keeps track of the removal in .removed_voxels and .removed_images fields of the object. There is also a reverse method, replace_empty, which replaces the missing data values with zeros. One can also resample the image to match the space of another image using the resample_space method or mask the data with a mask image using the apply_mask method.

The fmri_data subclass is one of the main objects for the fMRI data analysis. It stores neuroimaging data in 2D space, enabling simple manipulations with the data. Properties of the fmri_data object include the properties inherited from the image_vector data class and additional information about the data, such as outcomes in the .Y field and covariates in the .covariates field. There are various useful methods that can be operated on the fmri_data objects, some of which are used in this protocol. For example, one can easily visualize raw data and examine data quality using the plot method, run one-sample t-test using ttest, conduct a regression analysis using regress, threshold the images using threshold, write image data as NIfTI or Analyze files using the write method and build a predictive model using the predict method. To see the full list of available methods, one can type methods(fmri_data) in the MATLAB Command Window.

The statistic_image subclass is another important object for the fMRI data analysis. It stores statistical test outputs, such as beta or t-values, P values, standard error, degree of freedom, sample size and significance. In the current protocol, the statistic_image object stores the output of the bootstrap tests performed with the predict function. It can be easily thresholded with desired thresholding methods, such as FDR, using the threshold method, and significant voxels can be visualized using the orthviews method. More detailed tutorials for the CANlab fMRI analysis tools are available at https://canlab.github.io/.

Table 3 |.

Key functions in the CanlabCore MATLAB toolbox used in the protocol

| Function | Description | Used in… |

|---|---|---|

| filenames | Lists file names that match a specific pattern in a directory | Step 1A; Step 8 |

| fmri_data | Loads images into the object or creates an empty fmri_data object | Step 1A; Step 5; Step 7C; Step 8; Step 11A |

| apply_mask | Masks an image with a defined mask or calculates pattern expression values | Step 1A; Step 9 |

| predict | Trains and evaluates predictive models and performs cross-validation and bootstrap tests | Step 1A; Step 2; Step 6; Step 7A |

| roc_plot | Calculates accuracy, sensitivity and specificity and visualizes the ROC curve | Step 3; Step 7C; Step 10; Step 12 |

| threshold | Applies a statistically valid threshold to the images with statistical test results | Step 7A |

| orthviews | Displays the image data stored in CANlab tools | Step 7A; Step 7B |

| write | Writes data stored in the fmri_data or statistic_image objects into a NIfTI (.nii) or Analyze (.img) file | Step 7A; Step 7B |

| svm_rfe | Performs recursive feature elimination with support vector machines | Step 7B |

| canlab_pattern_similarity | Calculates similarity between each column in a data matrix and a vector of pattern weights | Step 11A |

Step 1 of the workflow is model building. It is a prerequisite step, which is not included in the model interpretation framework, but successful and correct implementation of this step defines the success of the following methods of model interpretation. A crucial point in Step 1 is to divide data into a training set and a test set for cross-validation performed in Steps 2 and 3 (for more detailed information, see Step 1A). Steps 2–15 can then be divided into three parts: model development (Steps 2–6), feature-level assessment (Step 7) and model- and biology-level assessment (Steps 8–15).

In the model development stage, Steps 2 and 3 and Steps 4–6 evaluate the intrinsic quality of the new model in terms of its predictive power and a potential contribution of confounds. More specifically, Steps 2 and 3 evaluate the model’s accuracy, sensitivity and specificity. In these steps, it is critical to obtain unbiased estimates of model performance using cross-validation (though cross-validation is prone to bias in some cases101) and, ideally, held-out test data tested on only a single, final model. In this protocol, we provide examples of leave-one-subject-out (LOSO) and 8-fold cross-validation. If the model shows good performance, one can go to the next step. Steps 4–6 aim to ensure that a model is independent of potential confounds. However, obtaining a definitive answer to this question is challenging (e.g., potential confounds may be unmeasured), and therefore this should be an open-ended investigation. Although the order of these analysis steps is flexible, Steps 2–6 should logically precede other analyses as they validate the quality of the model.

Step 7 includes methods for the feature-level assessment of the model. We propose several options to identify important features, and these options can be selected depending on the types of models (e.g., linear or nonlinear) or desired properties (e.g., stability or importance). In this protocol, we describe (i) bootstrap tests as an example of feature stability evaluation in linear models, which were used in previous studies13,93; (ii) RFE as an example of evaluation of feature importance in linear models commonly used in neuroimaging63; and (iii) a ‘virtual lesion’ analysis in which features are groups of voxels defining regions or networks of interest47. We also describe (iv) LRP64 as an example of feature importance evaluation in nonlinear models. There are numerous other ways to identify significant features in models, and thus we encourage investigators to use other methods that suit their goals. For a list of possible options, see Table 1. When visualizing important features, researchers need to examine whether the identified important features make sense based on a priori domain knowledge. For example, important features should not be located outside of the brain, and if a condition involves a visual process, some of the important features should be located in the visual cortex.

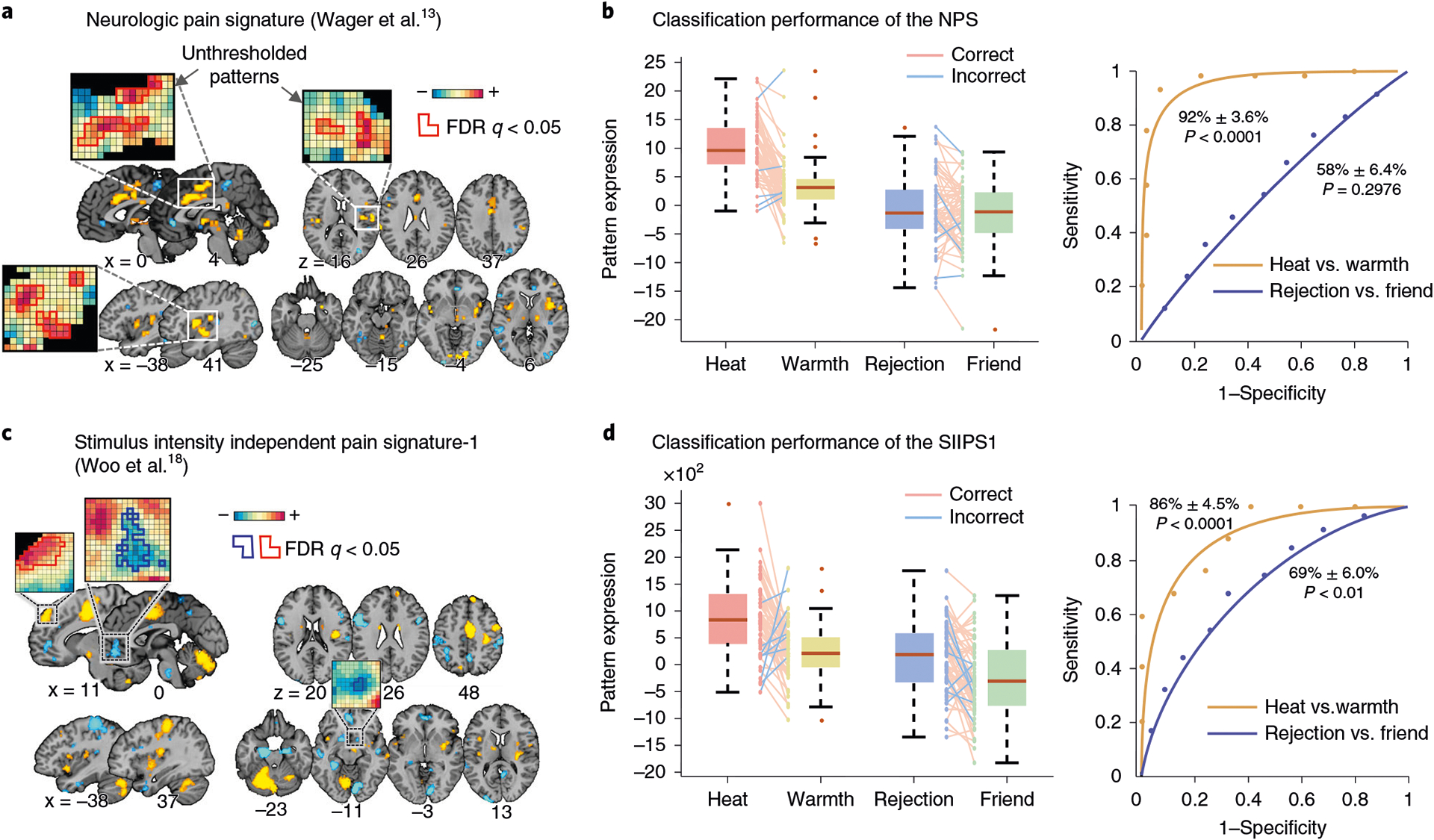

Following the feature-level assessment of the model, investigators should examine whether the new model can generalize across individuals and populations, different scanners and test contexts (Steps 8–10) and whether the model is biologically plausible (Step 11). The order of these two analyses is not important, but both analyses are critical in evaluating how robust and useful the model is for both an applied use and neuroscience. These steps should also be an open-ended process; for Steps 8–10, the generalizability tests can start with testing the model on a few independent datasets locally collected, but the tests should be scaled up to new data from broader contexts, such as data from different laboratories, populations, scanners and task conditions, with increasing levels of evidence. For Step 11, investigators need to keep seeking converging evidence from related literature and invasive studies with different experimental modalities and multiple species to understand the model’s neurobiological meaning. In the current protocol, for Steps 8–10, we provide an example of testing generalizability of two previously developed predictive models for pain, the Neurologic Pain Signature (NPS)13 and Stimulus Intensity Independent Pain Signature-1 (SIIPS1)18, on example fMRI data from a previous publication93 (for more details of the models and dataset, see Materials). For Step 11, we provide two basic analyses: first, term-based decoding based on a large-scale meta-analysis database, Neurosynth80, and second, comparisons of the model to large-scale networks identified by Yeo et al.87.

Representational and behavioral analyses can further our understanding of the model (Steps 12–15). For example, one can better understand the model’s decision making by examining the patterns of model behaviors (e.g., decisions and responses) over multiple instances and examples. Investigators can also analyze model representations by directly comparing weight vectors or measuring representational distances among different models. In this protocol, we provide an example of the representational analysis using two a priori predictive models applied to the sample dataset (see Materials for details about the predictive models and sample dataset).

Level of expertise needed to implement the protocol

Creating one’s own codes to perform the analyses described below is a demanding task in terms of programming abilities and knowledge of statistics and ML. However, we provide CanlabCore tools (https://github.com/canlab/CanlabCore), a MATLAB-based interactive analysis tool for fMRI data. With the CanlabCore tools, one can readily run most of the analyses described. To successfully use the CanlabCore tools, users should be familiar with the MATLAB programming environment, and they should be able to implement simple codes using predefined functions and different variable types (e.g., objects, structures and cell arrays). To implement the nonlinear model and LRP analysis, users should be familiar with Python and some deep learning libraries in Python, such as Tensorflow and Keras.

Materials

Equipment

Software

A computer with MATLAB and a web browser to access Neurosynth at http://neurosynth.org (or one can also use the Neurosynth Python toolbox available at https://github.com/neurosynth)

-

For linear models: The CanlabCore toolbox is available at https://github.com/canlab/CanlabCore ▲CRITICAL For full functionality, it is necessary to install the following dependencies: (i) MATLAB Statistics and Machine Learning toolbox, (ii) MATLAB Signal Processing toolbox, (iii) Statistical Parametric Mapping (SPM) toolbox available at https://www.fil.ion.ucl.ac.uk/spm/, and (iv) some external toolboxes (in the directory named ‘/External’), including the Spider toolbox (for SVMs), contained in the CanlabCore ▲CRITICAL Ensure that all the toolboxes are added with subfolders to the MATLAB path.

Note that our protocol could be readily adapted to other software platforms, particularly open-source alternative programming languages such as Python. Although we do not provide sample code in this protocol, the COSAN Lab (led by Luke Chang) has developed a Python package based on the CANlab tools, called NLTools, available at https://github.com/cosanlab/nltools. This package relies on Nilearn (http://nilearn.github.io) and scikit-learn (https://scikit-learn.org), providing an alternative, open-source format for implementing this protocol

For deep learning models: Python 3.6.5 or higher available at https://www.python.org/downloads/; NumPy 1.14.5 (Python library for scientific computing) available at https://github.com/numpy/numpy; Keras 2.2.4 (Python library for Deep Learning) available at https://keras.io/ and TensorFlow 1.8.0 available at http://www.tensorflow.org/install/; Matplotlib 3.0.2 (Python library for plotting) available at https://matplotlib.org/users/installing.html; scikit-learn 0.20.3 (Python library for ML) available at https://scikit-learn.org/stable/install.html; pandas 0.25.1 (Python library for data analysis) available at https://pandas.pydata.org/pandas-docs/stable/install.html; Scipy 1.3.0 (Python library for mathematics, science and engineering); Nilearn 0.5.2 (Python library for ML on neuroimaging data) available at http://nilearn.github.io/introduction.html#installation; Nipype 1.2.0 (Python library for an interface to neuroimaging analysis pipelines) available at https://pypi.org/project/nipype/; iNNvestigate102 1.0.8.3 (Keras explanation toolbox) available at https://github.com/albermax/innvestigate; other dependencies: Compute Unified Device Architecture (CUDA) and CUDA Deep Neural Network (CuDNN) library with an appropriate graphical processing unit (GPU)

Input data

Statistical parametric maps (used in all steps): The level of input data can be varied. For example, one can use first-level contrast or beta maps for event regressors, single-trial beta series, or repetition time (TR)-level images. The amount of data needed for model training depends on whether a researcher aims to build a model to predict between-person individual differences or within-person behaviors or stimulus conditions. In the case of between-individual prediction, N > 100 participants is usually required, but N > 300 is recommended1. For the prediction of within-person effects using group-level data, data from >20 participants are usually used, but >50 participants and 1–2 h of scanning for each person are recommended103. The recommended amount of data can also be varied depending on the types of data or algorithms (e.g., refs. 104,105). Note that these recommendations are heuristic only, as a full discussion of power and model reproducibility is beyond the scope of this review. In addition, it is extremely beneficial to have an independent hold-out dataset for prospective model validation and generalizability testing ▲CRITICAL The CanlabCore tools can read images in NIfTI format (.nii) or Analyze format (.img and .hdr). For deep learning models, we used data with 4D matrices (x, y, z, t) for each subject, created by the Nibabel library. The CanlabCore toolbox provides an easy way to create 4D matrices (see reconstruct_image.m) ▲CRITICAL If you are using single-trial level data, some trial estimates could be strongly affected by acquisition artifacts or sudden motion. For this reason, we recommend excluding images from trials with high variance inflation factors (VIFs). In previous studies, we usually removed trials with VIFs that exceeded 2.518,106. VIFs can be calculated with getvif.m in the CanlabCore toolbox ▲CRITICAL If you are using TR-level data, ensure that the data are properly filtered (e.g., high-pass filtering) and denoised (e.g., confound regression or signal decomposition methods)60. For in-depth quality checks for image data, please refer to ref. 60 or use the MRI Quality Control (MRIQC) tool107 to perform an automated data quality check. In the case of individual- or group-level preprocessed data (e.g., contrast maps), one can use the qc_metrics_second_level.m function from the CanlabCore toolbox. The function assesses, for example, signals from white matter and ventricles and their effect sizes or scale inhomogeneities across subjects in gray matter and ventricles. To use this tool, data should be spatially normalized to the Montreal Neurological Institute (MNI) template ! CAUTION A study collecting neuroimaging and behavioral data must be approved by an appropriate institutional ethical review committee, and all subjects must provide informed consent to the acquisition and use of the data in the case of a local study. In the case of certain public datasets, a data-sharing agreement must be approved. In the example dataset used in this protocol, all participants provided written informed consent in accordance with a protocol approved by Columbia University’s Institutional Review Board.

Nuisance data (used in Steps 4–6): Here, we used time series data for six head-motion parameters (x, y, z, roll, pitch and yaw)

A priori pattern-based predictive models (used in Steps 8–10 and 12–15): To run the example analyses provided here, one also needs two a priori pattern-based predictive models, the NPS and the SIIPS1. The NPS is available upon request with a data use agreement. The SIIPS1 can be downloaded from the CANlab Neuroimaging_Pattern_Masks repository (https://github.com/canlab/Neuroimaging_Pattern_Masks)

Functional Atlas data (used in Steps 7 and 11): We used seven functional networks from Yeo et al.87 (available at https://github.com/ThomasYeoLab/CBIG/tree/master/stable_projects/brain_parcellation)

Example dataset

We used an fMRI dataset (N = 59) from a previous publication93 as an example dataset for demonstration

In an fMRI experiment, all participants completed two tasks: a somatic pain task, in which participants experienced painful heat or non-painful warmth, and a social rejection task, in which participants viewed pictures of their ex-partners or friends

In this protocol, trial-level data are used for Steps 1–7 and Step 11, and participant-level contrast images are used for Steps 8–10 and 12–15. For the trial-level data, we use data from the painful heat trials (heat condition, eight trials per participant) and the ex-partner trials (rejection condition, eight trials per participant). For the contrast images, we use data from all four conditions (59 images per condition)

- The example data and codes can be downloaded from https://github.com/cocoanlab/interpret_ml_neuroimaging. Its directory structure can be found below, and our example codes use this data structure

/data /derivatives /trial_images/ /sub_01 heat_trial01.nii heat_trial02.nii … rejection_trial08.nii /sub_02 … /sub_59 /contrast_images heat_sub_01.nii heat_sub_02.nii … friend_sub_59.nii /masks /scripts

Procedure

Build a model ● Timing 20 min to a few hours

-

1This step builds fMRI-based ML models that are predictive of a target outcome. This is a prerequisite step to the workflow of the model interpretation framework. Although details of this step are not the main focus of the current protocol, we briefly describe the procedure for the model building to provide information about the two types of models used in this protocol. Option A describes the training of a widely used linear algorithm, SVMs. We chose SVMs because it is one of the most popular ML algorithms in current neuroimaging literature—for example, from the survey we conducted in ref. 2, 46.4% of the 481 ML models in neuroimaging studies used SVMs, which were followed by discriminant analysis and logistic regression with 12.7% and 7.5% prevalence, respectively. The steps in option A describe how to build an SVM model using the CanlabCore tools (Box 1). Option B is a procedure to build a nonlinear model. As an example of nonlinear models, we chose a Convolutional Neural Network (CNN), a successful deep learning model for a variety of applications on prediction. The steps in option B describe how to build a CNN model using Keras108.

Option Module 1A Linear model (SVMs) 1B Nonlinear model (CNN) - Training SVMS

-

Prepare a data matrix. You can use the following lines to import the fMRI data from image data with a gray matter mask.

basedir = ‘/The/base/directory’; % base directory gray_matter_mask = which(‘gray_matter_mask.img’); heat_imgs = filenames(fullfile(basedir, ‘data’, ‘derivatives’, … ‘trial_images’, ‘sub*’, ‘heat_*.nii’)); % read image file names for the heat condition rejection_imgs = filenames(fullfile(basedir, ‘data’, … ‘derivatives’, ‘trial_images’, ‘sub*’, ‘rejection_*.nii’)); % file names for the rejection condition data = fmri_data([heat_imgs; rejection_imgs], gray_matter_mask);The variable data.dat contains the activation data in a flat (2-D) and space-efficient # voxels × # observations matrix.? TROUBLESHOOTING - (Optional) Apply a mask. One can apply a mask to include only selected brain features before training a model. For example, the following lines of code apply the mask that was used in Woo et al.93:

mask = fullfile(basedir, ‘masks’, ‘neurosynth_mask_Woo2014.nii’); data = apply_mask(data, mask);

- Prepare an outcome variable and add it into dat.Y. For classification tasks, the outcome vector is defined using a categorical variable (e.g., binary values for different conditions), and for regression, the outcome vector is a continuous variable (e.g., trial-by-trial ratings). The following code defines the outcome vector for the SVM binary classifier in our example analysis. Coding observations with [1, −1] values is compatible with the Spider package and should be used:

data.Y = [ones(numel(heat_imgs),1); … −ones(numel(rejection_imgs),1)]; % heat:1, rejection:−1

-

Define training and test sets for cross-validation. To obtain an unbiased estimate of model performance, one should internally validate the model using cross-validation. A crucial step in cross-validation is to choose a suitable strategy of splitting the data into training and test sets. One can define a certain percentage of data as the test set (k-fold cross-validation) (e.g., 10% for 10-fold cross-validation) or use one subject’s data as test data in each fold (LOSO cross-validation). Because with increasing k, bias of the predictive accuracy decreases and variance increases, one needs to find a suitable solution for the trade-off, taking into account the size of the available dataset. LOSO cross-validation can be used in small datasets, whereas in large datasets, 5-fold or 10-fold cross-validation can be more efficient1. Care should be taken that no dependencies between training and test data have been introduced (see below).The optional input argument, ‘nfolds’, of the predict function can specify which types of cross-validation will be used. With a scalar value input k, it will run k-fold cross-validation. The optional input can also be a vector for using customized cross-validation folds (e.g., LOSO, leave-two-trials-out). If the optional input equals one, the function will not run cross-validation. If no optional input is given, it will run fivefold cross-validation stratified on the outcome variable as a default. For example, we can use LOSO cross-validation in our example dataset. That is, we reserve one subject’s data as test data and use the remaining 58 subjects’ data as the training set. The input argument ‘nfolds’ can then be defined as follows.

n_folds = [repmat(1:59, 8,1) repmat(1:59, 8,1)]; % Each subject % has 8 trial image data for each condition n_folds = n_folds(:); % flatten the matrix

▲CRITICAL STEP It is crucial to keep the training and test sets truly independent. Performing some analyses, such as denoising, feature selection or scaling, across training and test sets can create dependence between the training and test datasets, resulting in a bias in the estimate of the prediction performance1,2. Dependence between the training and test datasets can also occur if participants are related, as is typical in twin studies and occurs in some other studies, such as the Human Connectome Project109, ABCD110 and UK Biobank111 studies. In addition, other common situations can introduce some dependence, such as nesting participants within a scanning day, variation in scan timing relative to academic deadlines and other seasonal variables, or subsets tested by the same experimenter in a multi-experimenter study. These issues do not categorically invalidate cross-validation, but they make it more important to test generalizability in independent test cohorts—and ultimately in cohorts that are explicitly dissimilar in population and procedures to the training sample.▲CRITICAL STEP If k-fold cross-validation is used with the regression approach, we recommend using stratified cross-validation—stratifying holdout test sets for each fold based on the level of the outcome. To see how the CanlabCore tools implement stratified cross-validation, see stratified_holdout_set.m. -

Fit an SVM model to the training data. We use the predict function, which is a method of the fmri_data object in the CanlabCore tools (see Box 2). This function allows us to easily run many different ML algorithms on fMRI data with cross-validation. The following lines of code will fit an SVM classifier to the data and test the cross-validated error rate of the classifier. We explain the details of cross-validation in Step 2.

[cverr, stats_loso] = predict(data, ‘algorithm_name’, …’cv_svm’, ‘nfolds’, n_folds, ‘error_type’, ‘mcr’);

The output variable stats provides many kinds of information, including model weights, predicted y values based on cross-validation, and performance values (for more details, see Box 2).Note that one can use nested cross-validation to find the optimal hyper-parameters of the model. In nested cross-validation, an additional, nested cross-validation loop is performed on the training set to tune the hyper-parameters, while the outer cross-validation loop is used to estimate the model performance1,101. In our example, for simplicity, we use the default setting of the function for the SVM algorithm, where C = 1.If multiple models with a number of different parameters are tested on a single training set, a correction for multiple comparisons (e.g. False Discovery Rate (FDR) correction) must be conducted. Note that the corrections for multiple comparisons are designed to protect against false-positive feature identification but not against the inflated accuracy that comes from testing multiple models outside of cross-validation loops. Therefore, a more important step is to test the final model on additional validation or independent test datasets.In the following steps, we apply the analysis methods to the linear SVM model, a classifier model. Nonetheless, the predict function can also build regression-based models for predicting continuous outcomes. For example, the code below runs principal component regression (PCR):[cverr, stats_loso] = predict(data, ‘algorithm_name’, …’cv_pcr’, ‘nfolds’, n_folds, ‘error_type’, ‘mse’);

? TROUBLESHOOTING

-

- Training a CNN

- Prepare the data matrix. We used the pandas DataFrame (to create a data template) and Nilearn (to load data from NIfTI files). These are implemented in ‘Part 1: Initializing Data Matrix’ and ‘Part 2: Loading Data Function’ of our example Jupyter Notebook file, cnn_lrp.ipynb, which is available at https://github.com/cocoanlab/interpret_ml_neuroimaging/blob/master/scripts/cnn_lrp.ipynb.

- Define a CNN model. With Keras, one can define a CNN model using the following code, which defines four convolution layers and two fully connected layers, followed by a softmax output layer. One can also define the loss function with the Adam optimizer112 for the model training (‘Part 3: CNN Model’ of cnn_lrp.ipynb).

def make_custom_model_cnn_2D(): model = Sequential() model.add(Conv2D(8, (3,3), kernel_initializer=‘he_normal’, padding=‘same’, input_shape=fmri_shape)) model.add(Activation(‘relu’)) model.add(MaxPooling2D(pool_size=(2,2))) model.add(Conv2D(16, (3,3), kernel_initializer=‘he_normal’, padding=‘same’)) model.add(Activation(‘relu’)) model.add(MaxPooling2D(pool_size=(2,2))) model.add(Conv2D(32, (3,3), kernel_initializer=‘he_normal’, padding=‘same’)) model.add(Activation(‘relu’)) model.add(MaxPooling2D(pool_size=(2,2))) model.add(Conv2D(64, (3,3), kernel_initializer=‘he_normal’, padding=‘same’)) model.add(Activation(‘relu’)) model.add(MaxPooling2D(pool_size=(2,2))) model.add(Flatten()) model.add(Dense(128, kernel_initializer=‘he_normal’)) model.add(Activation(‘relu’)) model.add(Dense(2, kernel_initializer=‘he_normal’)) model.add(Activation(‘linear’)) model.add(Activation(‘softmax’)) adam = Adam(lr=0.001, beta_1=0.9, beta_2=0.999, epsilon=1e-08, decay=0.0) model.compile(loss=‘categorical_crossentropy’, optimizer=adam, metrics=[‘accuracy’]) return model - Split the data into training and test sets. As described above in the case of the linear SVM model, define the amount of data used as training and testing data for cross-validation. We used scikit-learn to create the cross-validation testing framework, which is implemented in ‘Part 4: Model Training example’ and ‘Part 5: Leave-One-Subject-Out Cross-validation’ of cnn_lrp.ipynb.

- Fit a CNN model on the training data. A CNN model can be trained with a variant of a mini-batch stochastic gradient descent method, such as Adam112, which is realized by the fit function in the Keras package. In the example code, we trained the model with 32 mini-batch size for every iteration. When running the train_model function we defined here, we evaluate the training loss (error) every epoch with the evaluate function in Keras. The following code also defines the number of epochs for training the CNN model (the second section of ‘Part 3. CNN Model’ of cnn_lrp.ipynb).

import tensorflow as tf config = tf.ConfigProto() config.gpu_options.allow_growth = True session = tf.Session(config=config) def train_model(train_X, train_y, test_X, test_y): # Optional: when you do not have enough GPUs, add the following line: # with tf.device(‘/cpu:0’): tr_data = {} tr_data[‘X_data’] = train_X tr_data[‘y_data’] = train_y te_data = {} te_data[‘X_data’] = test_X te_data[‘y_data’] = test_y tr_data[‘y_data’] = keras.utils.to_categorical(tr_data[‘y_data’], 2) te_data[‘y_data’] = keras.utils.to_categorical(te_data[‘y_data’], 2) # Initialize and compile the model model = make_custom_model_cnn_2D() model.compile(loss=“categorical_crossentropy”, optimizer=Adam(), metrics=[“accuracy”]) history = model.fit(tr_data[‘X_data’], tr_data[‘y_data’], batch_size=mini_batch_size, epochs=20, verbose=1) score = model.evaluate(te_data[‘X_data’], te_data[‘y_data’], verbose=0) return model,score - Test the model on test data. Once the training of CNN is done, we can test the model on a separate test dataset as in the following code, which returns the prediction accuracy on the test set.

score = model.evaluate(te_data[‘X_data’], te_data[‘y_data’], verbose=0)

Box 2 |. The predict function in the CanlabCore toolbox.

The predict function is a versatile tool for running many different ML algorithms with cross-validation. It operates on the fmri_data object, which stores the data matrix used for prediction in .dat (features×observations) and the corresponding outcome vector in .Y (observations×1). Users can specify the prediction algorithm, including multiple regression, LASSO regression, PCR, LASSO-PCR, SVMs and support vector regression.

In addition, the function includes an option to perform cross-validation. Users can define the number of cross-validation folds (i.e., k-fold cross-validation; the predict function stratifies cross-validation folds based on the outcome) or custom cross-validation fold, such as leave-one-out cross-validation. The function also evaluates performance with either misclassification rate or mean square error. There is also an option to automatically perform the bootstrap tests. Users can enter a desired number of bootstrap samples to use, and they can also save the bootstrapped weights if needed.

The output of the function provides many kinds of information, including predictive model weights, predicted outcomes based on cross-validation and performance values, such as prediction error or correlation between actual and predicted outcomes. For example, the .weight_object field in the output contains an fmri_data object (or a statistic_image object if the bootstrap tests are performed) that contains the weights of the trained model in the .dat field. Another important field of the output is the .other_output_cv field. It stores the weights of all models trained during the cross-validation in the first column, the corresponding predicted values in the second column, the intercept in the third column and additional information about the algorithm in the fourth column. If the bootstrap tests are performed, the results of the analysis are stored in the .WTS field, which contains the mean, P and z values and standard errors, as well as the bootstrapped weights if the ‘savebootweights’ option was used. You can find more information and some use cases in the help document of the predict function by typing help fmri_data.predict in the command window.

Assess the cross-validated performance ● Timing 5–20 min

▲CRITICAL This step evaluates the new model’s predictive performance in terms of accuracy, sensitivity and specificity. It is critical to obtain unbiased estimates of model performance using cross-validation or held-out test data. Below, we provide an analysis example of LOSO and eightfold cross-validation. Note that we made an arbitrary choice of k = 8 cross-validation folds for convenience, as cross-validation is typically largely insensitive to the choice of k, but researchers should consider other choices and/or cross-validation strategies. For further discussion on this topic, please see Step 1A(iv) and refs. 1,101.

-

2

Iteratively fit models using a training set, test the models on each fold’s holdout set and aggregate predicted outcome values across folds. In Step 1, we divided the data into the training and test sets. As an example, we split the data to perform LOSO cross-validation. Therefore, we reserve one participant’s data as a test set and fit a model (an SVM in our example) to the remaining 58 participants’ data. Subsequently, we use the model to classify the left-out data and save the distance from the hyperplane for each data point. We repeat this process 59 times. This process is automated in the predict function.

In addition, the following code shows how to run eightfold stratified cross-validation.[~, stats_8fold] = predict(data, ‘algorithm_name’, ‘cv_svm’, … ‘nfolds’, 8, ‘error_type’, ‘mcr’);

▲CRITICAL STEP Hyper-parameters of the models should be kept the same for all iterations of cross-validation, but a nested cross-validation, a common method to choose an optimal hyper-parameter, can use different hyper-parameters for each fold.

-

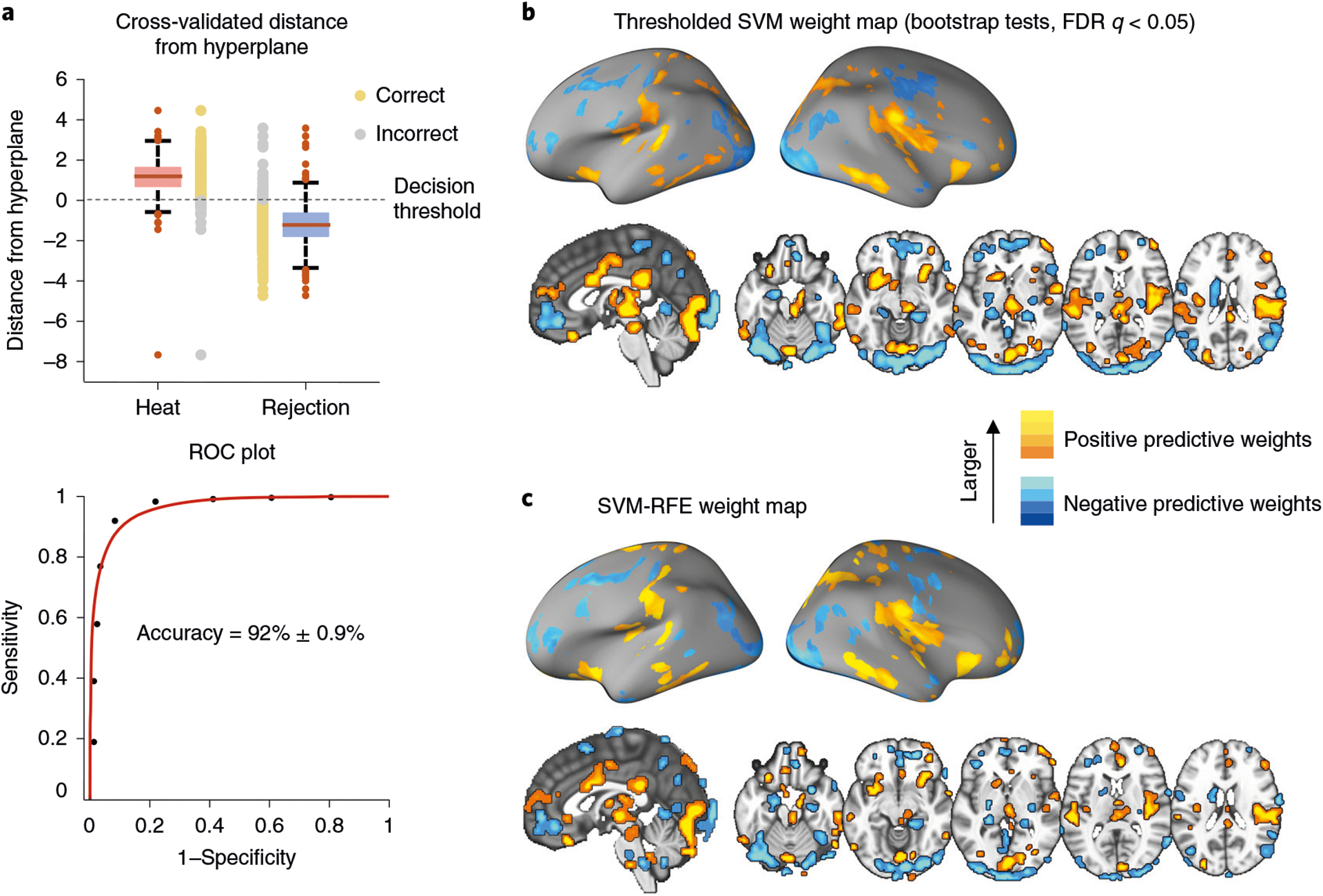

3Calculate the predictive accuracy, sensitivity and specificity by comparing the model prediction and the actual outcome. In the context of classification, the accuracy can be defined as 1 minus the misclassification rate, which is the ratio of the number of incorrectly classified data points and total number of data points. Classification decision can be made with a single threshold value θ (in SVMs, typically θ = 0 if the bias term is included in the model). One can also use a two-alternative forced-choice (2AFC) test if each data point has its counterpart (e.g., which of two conditions was the heat condition?). Sensitivity is defined as the ratio of correctly classified positives to the number of positive data points, and specificity as the ratio of correctly classified negatives to the number of negative data points. Figure 3a illustrates results of the accuracy estimation of the cross-validated classification. The roc_plot function from the CanlabCore tools provides all these performance metrics:

% LOSO cross-validation ROC_loso = roc_plot(stats_loso.dist_from_hyperplane_xval, … data.Y == 1, ‘threshold’, 0); % 8-fold cross-validation ROC_8fold = roc_plot(stats_8fold.dist_from_hyperplane_xval, … data.Y == 1, ‘threshold’, 0);