Abstract

Traditional research focuses on efficacy or effectiveness of interventions but lacks evaluation of strategies needed for equitable uptake, scalable implementation, and sustainable evidence-based practice transformation. The purpose of this introductory review is to describe key implementation science (IS) concepts as they apply to medication management and pharmacy practice, and to provide guidance on literature review with an IS lens. There are five key ingredients of IS, including: (1) evidence-based intervention; (2) implementation strategies; (3) IS theory, model, or framework; (4) IS outcomes and measures; and (5) stakeholder engagement, which is key to a successful implementation. These key ingredients apply across the three stages of IS research: (1) pre-implementation; (2) implementation; and (3) sustainment. A case example using a combination of IS models, PRISM (Practical, Robust Implementation and Sustainability model) and RE-AIM (Reach, Effectiveness, Adoption, Implementation, Maintenance), is included to describe how an IS study is designed and conducted. This case is a cluster randomized trial comparing two clinical decision support tools to improve guideline-concordant prescribing for patients with heart failure and reduced ejection fraction. The review also includes information on the Standards for Reporting Implementation Studies (StaRI), which is used for literature review and reporting of IS studies, as well as IS-related learning resources.

Keywords: implementation science, translational research, biomedical research, pharmacy

Traditional biomedical and health services research addresses questions related to efficacy (i.e., testing under optimal conditions focused on internal validity) or effectiveness (i.e., testing under real-world conditions with a focus on external validity) of interventions being studied. In these studies, less focus is placed on understanding factors and strategies needed for the equitable uptake, implementation, and sustained use of the intervention as it relates to practice change and evidence-based practice transformation. Even more broadly generalizable effectiveness trials, such as pragmatic clinical trials, provide little guarantee of public health impact.1,2 Additionally, it takes an average of 17 to 20 years for 14% of evidence-based innovations to be integrated into routine clinical practice.1,3,4 Implementation science (IS) is intended to address these gaps and accelerate change.5 These gaps also exist within pharmacy. Accordingly, some have called for the use of IS to transform pharmacy practice and curricula.6–9 There are numerous opportunities to accelerate the implementation of evidence-based interventions in pharmacy.
Potential targets for the application of IS models and methods to advance pharmacy practice and care delivery include iterative design and evaluation of different models for pharmacist-led chronic disease management in outpatient clinics and transitions of care services, de-prescribing of inappropriate medications in older adults, antibiotic stewardship, applying clinically relevant pharmacogenomic recommendations that promote health equity, or improving population health using value-based reimbursement metrics.10–12 A recent review of factors influencing the implementation of innovations in community pharmacies identified important barriers to nationwide implementation, including pharmacy staff engagement with the innovation, resources needed for successful operationalization of the innovation, and external engagement (e.g., the perceptions of patients and other health care professionals and their relationship with the community pharmacy) with implemented innovations.13

IS is defined as “the scientific study of methods to promote the systematic uptake of research findings and other evidence-based practices into routine practice, and, hence, to improve the quality and effectiveness of health services”.5,14–16 Research projects using IS frameworks incorporate health care perspectives from multiple stakeholders across multiple levels including the patient, provider, organization, and policy. The goals of IS are to optimize the equitable uptake and scalable sustainment of innovative interventions into routine settings by identifying contextual barriers and facilitators, and by developing and applying strategies to overcome these barriers and enhance facilitators.17–23

IS studies are different from efficacy, effectiveness, quality improvement, program evaluation, and dissemination studies. Efficacy and effectiveness studies evaluate the efficacy and effectiveness of interventions, whereas IS usually seeks to evaluate implementation outcomes of increasing uptake and sustainability of interventions with established efficacy or effectiveness.17,24 IS often employs effectiveness-implementation hybrid study designs in which implementation and effectiveness outcomes of an intervention are evaluated simultaneously.24 Quality improvement usually starts with a specific problem identified from multiple stakeholders in a specific practice to find strategies to improve that specific problem, whereas IS typically begins with an under-utilized evidence-based practice to address quality gaps at multiple stakeholder levels.1,17 Additionally, IS findings lead to generalizable knowledge that can be widely applied beyond the specific practice site under study.1,17 Program evaluation may overlap with IS but is not the same.25 Program evaluation is the science of improvement with a focus on changes in outcomes, while IS integrates interventions into a variety of settings, with a focus on methods and measures and the impact of implementation strategies.25 IS may overlap with dissemination science, but is different in that dissemination science uses communication and education strategies to spread information.15,17,26 For example, the pharmaceutical industry effectively employs communications and marketing strategies such as direct-to-consumer advertising to disseminate drug information, but it seldom studies the implementation impact of practice change or sustainability from drug products.27,28

Translational research investigates “the scientific and operational principles underlying each step of the translational process.”29 Within the five-phase National Institutes of Health roadmap for the translational research continuum, spanning from basic research (T0) through population health research (T4), IS fits within the third (T3) and the fourth (T4) translational steps.5,30,31 The third step (T3) is translation to practice and identifies strategies that move evidence-based interventions or clinical guidelines into practice, and the fourth step (T4) is translation to community and involves stakeholders to evaluate implementation strategies and sustainability of interventions. IS bridges the “evidence-practice gap,” that is, the gap between scientific discoveries or evidence and their translation into clinical practice.5 Most IS research in health care is conducted in disciplines other than pharmacy. The purpose of this introductory paper is to describe key IS concepts as they apply to medication management and pharmacy practice, and to provide guidance on literature review with an IS lens.

IMPLEMENTATION SCIENCE CONCEPTS

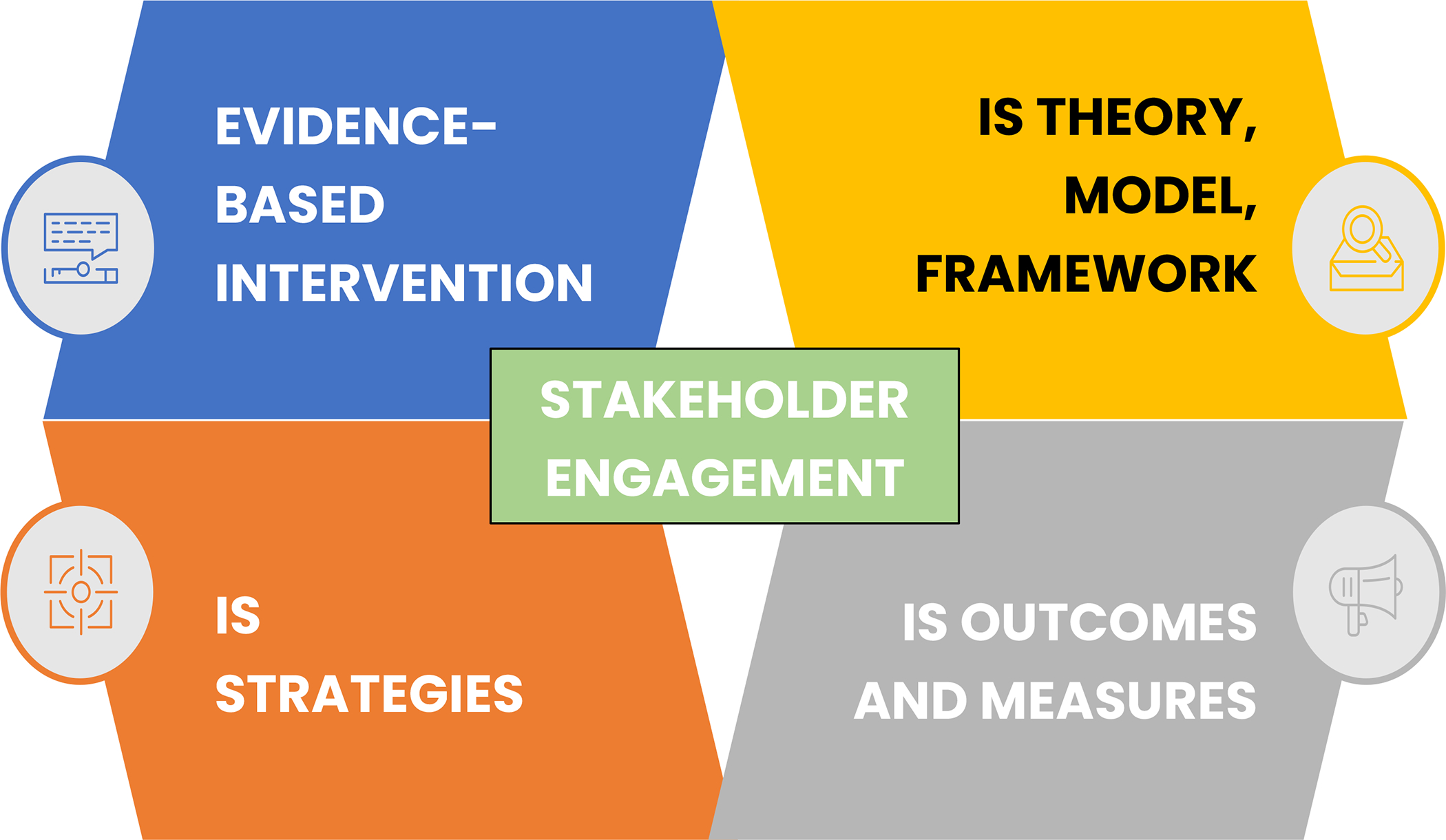

The key ingredients (or core elements) of IS studies include: (1) evidence-based intervention; (2) implementation strategies; (3) IS theory, model, or framework (TMF); (4) IS outcomes and measures; and (5) stakeholder engagement, which is integrated within and informs all of the other components (Figure 1). Stakeholder engagement is at the core of IS, and no IS work can be successfully completed without the engagement of diverse stakeholders. These key ingredients apply across the three stages of IS research, which include: (1) pre-implementation planning or study design; (2) implementation or study conduct; and (3) sustainment or study evaluation. To guide our review of IS, we propose a visual that has been successfully used in prior training and introductory presentations and includes the five key ingredients of IS. The idea of the “3 stages” of IS has been published in multiple works, but most prominently through the Veterans Administration Quality Enhancement Research Initiative (VA QUERI) Implementation Roadmap.32,33

Figure 1.

Key ingredients/core elements of implementation science (IS) studies.

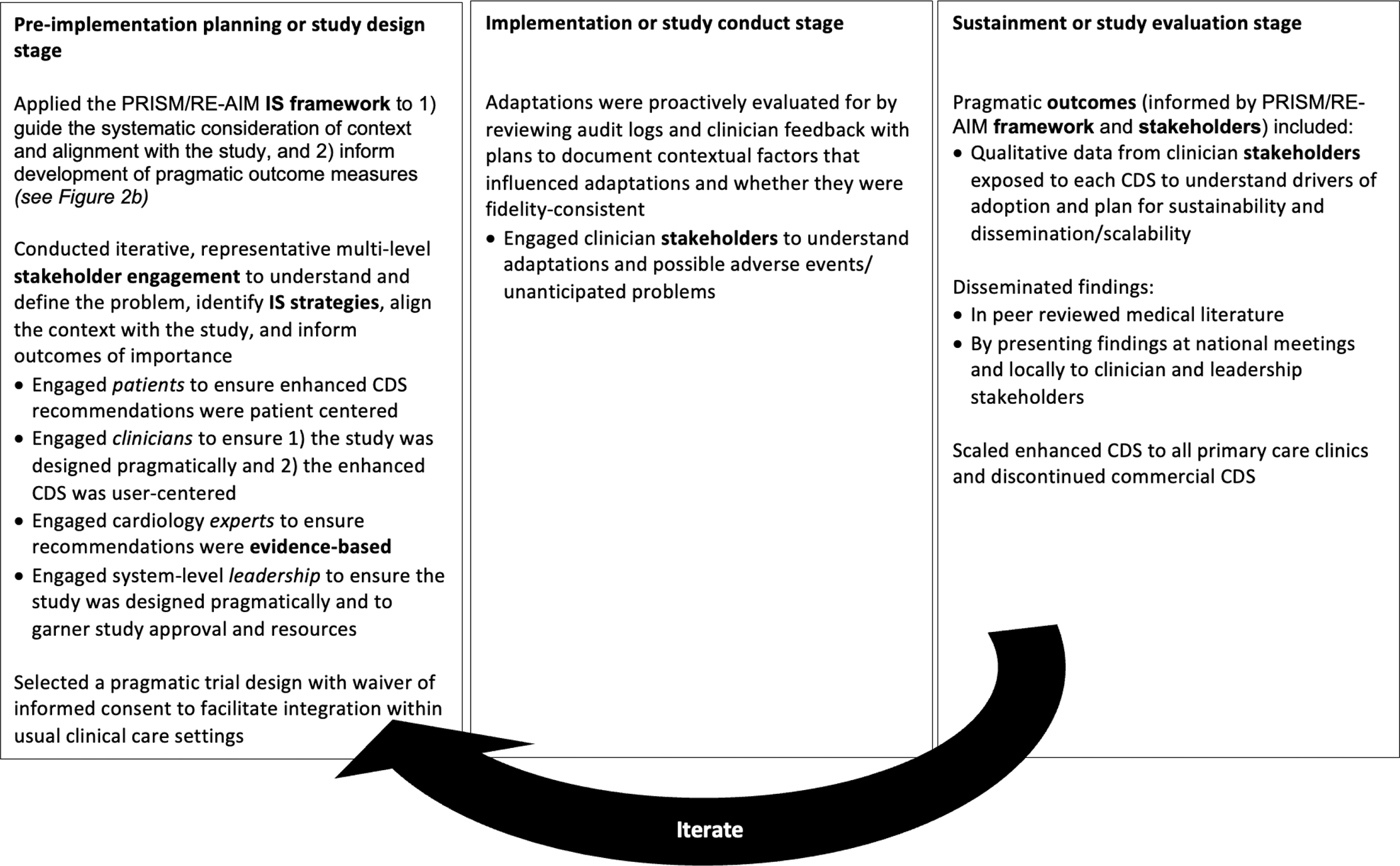

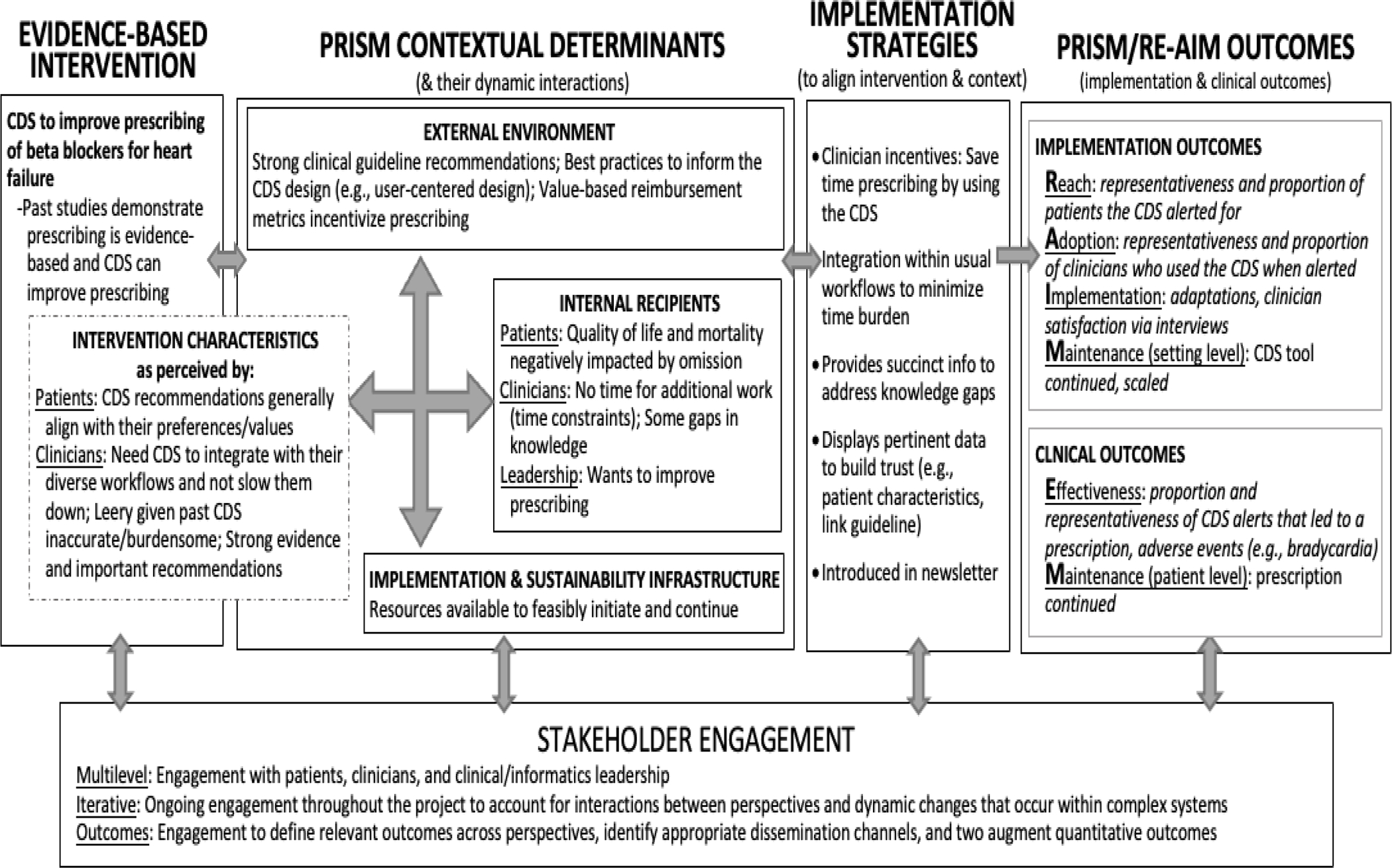

This section explores each of the key ingredients and provides guidance on including these across the three stages of IS research. Further, to illustrate how these ingredients are applied at each stage of research (Figures 2 and 3), we will refer to a case example describing the implementation of a clinical decision support (CDS) tool to improve guideline-concordant prescribing for patients with heart failure and reduced ejection fraction (HFrEF). Instead of including multiple examples on a broad scope, we selected one well-developed example with details to guide the reader through the process of designing an IS study. This study, conducted by a co-author (KT), aimed to compare the effectiveness of two different CDS tools to improve guideline-concordant prescribing of beta-blocker medications for patients with HFrEF: a commercial CDS versus an enhanced CDS.34,35 Both CDSs alerted primary care clinicians within the electronic health record (EHR) during an office visit and recommended initiation of a beta-blocker for patients with HFrEF. However, the enhanced CDS was customized (user-centered) to be contextually relevant for the local health setting, whereas the commercially available (not user-centered) CDS was provided by the EHR vendor and created for the average health system. As depicted in Figure 2, IS was used to inform the overarching study design (pre-implementation stage), monitor and evaluate adaptations (implementation stage), and develop (pre-implementation stage) and evaluate (sustainment stage) outcome measures. IS was also used to inform the user-centered and contextually relevant design of the enhanced CDS tool (implementation stage), but not the design of the commercially available CDS tool.
Figure 3 is adapted from Smith and colleagues’ Implementation Research Logic Model and illustrates how the PRISM (Practical, Robust Implementation and Sustainability model) / RE-AIM (Reach, Effectiveness, Adoption, Implementation, Maintenance) IS framework was applied to this study.36

Figure 2.

Stages of implementation science (IS). Example illustrating how IS and the five key ingredients (bolded) were applied to a cluster randomized trial comparing two clinical decision support (CDS) tools to improve guideline-concordant prescribing for heart failure.

Figure 3.

Description of how the PRISM/RE-AIM IS framework was used to inform the design of the enhanced CDS intervention and outcomes evaluated. CDS = clinical decision support; IS = implementation science; PRISM= Practical, Robust Implementation and Sustainability model; RE-AIM = Reach, Effectiveness, Adoption, Implementation, Maintenance.

Evidence-Based Intervention

Evidence-based interventions can be broadly defined in the context of IS research and can include what Brown and colleagues referred to as the 7Ps: programs, practices, principles, procedures, products, pills, and policies.25,37 Deciding on what is ready for broader dissemination and implementation (i.e., when there is enough evidence to justify practice change) can differ depending on the standards of a given field or industry.38,39 The type and strength/amount of evidence expected for a new medication before broad dissemination will be very different from the evidence required for the implementation of a behavioral program to address smoking cessation. Because there is not one standard to determine the readiness of a program for broader implementation, it is important to discuss the type of evidence that exists and why the researcher or practitioner believes that the available evidence justifies spread.

In the heart failure example, the evidence-based intervention is a CDS tool that makes recommendations to improve adherence to guidelines (Figures 2 and 3). In this study, the CDS tool was designated as the ‘intervention’ based on the research question, whereas another research question may have appropriately designated the CDS tool as an IS strategy. The potential for ambiguity between the intervention and strategy reinforces the importance of clearly making these designations in research. The guideline recommendations are supported by numerous, high-quality studies and are highly regarded by the clinical community as important to HFrEF management,40,41 and CDS tools are required by federal regulations and are considered to be an evidence-based method for supporting decision-making;42,43 thus, the CDS tools are ready for spread.

Implementation Strategies

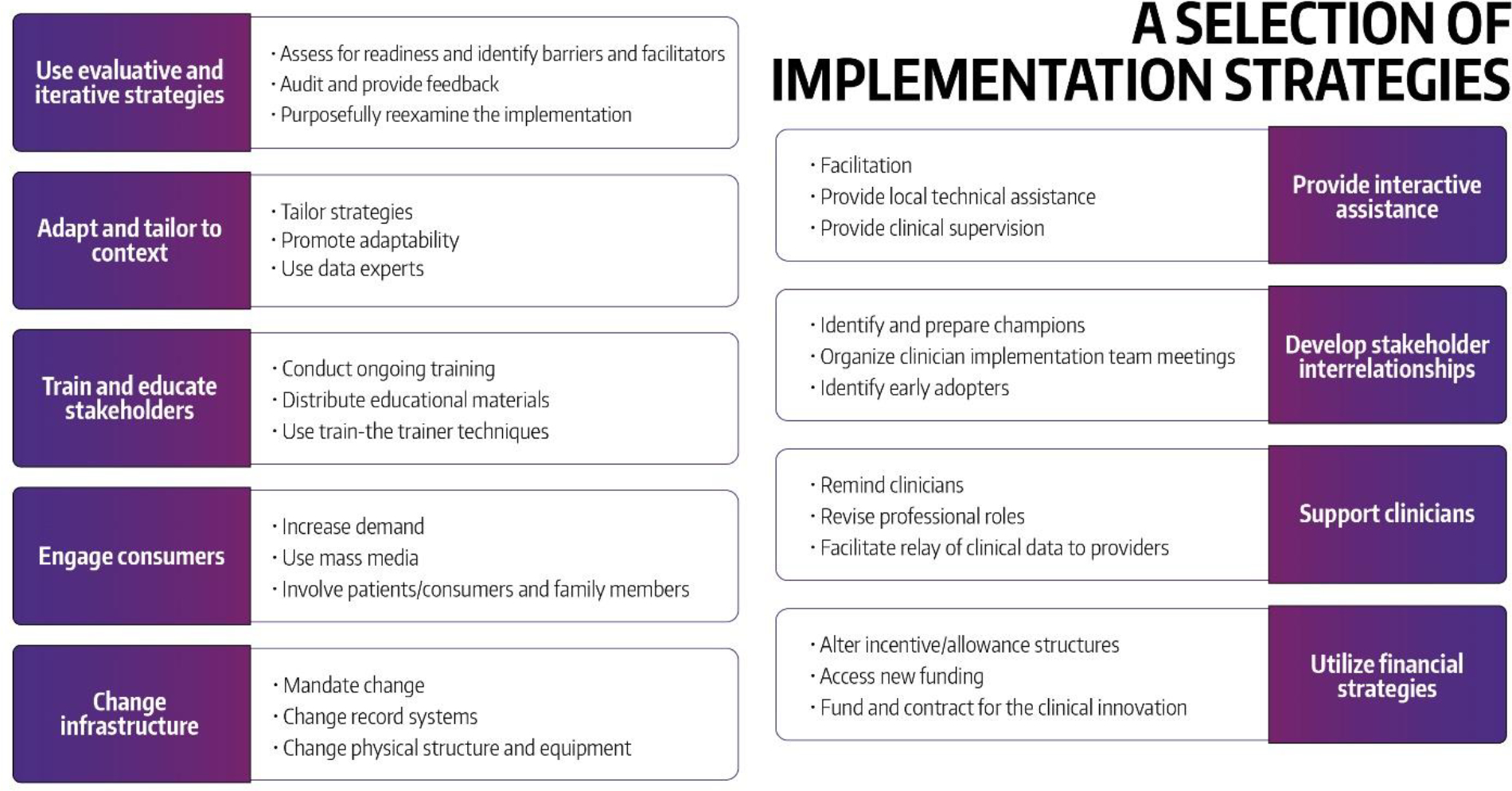

The ingredient that most distinguishes IS from more traditional efficacy and effectiveness research is the focus on implementation strategies. Implementation strategies are processes or activities that are used to support the equitable uptake, implementation, and sustained use of an intervention. They can be classified as discrete (individual activity) or multicomponent implementation strategies; they can act at different levels, such as the patient or student (sometimes also called supportive interventions), clinician or professor (e.g., pharmacist, physician, faculty), setting (e.g., pharmacy, clinic, classroom), or leadership (e.g., network manager, clinic director, dean).44,45 To facilitate the distinction between interventions and strategies, Curran and colleagues described the intervention as the ‘what’ or ‘the thing’ that is being implemented, and the implementation strategy as ‘how’ you will implement ‘the thing.’46 Powell and colleagues developed a taxonomy for implementation strategies, the Expert Recommendations for Implementing Change (ERIC). The ERIC taxonomy provides a listing of 73 distinct strategies organized around nine key domains,44,45 as described in Figure 4.47

Figure 4.

Expert recommendations for implementing change (ERIC): nine domains and examples of implementation strategies (used with permission from the University of Washington Implementation Science Resource Hub).47

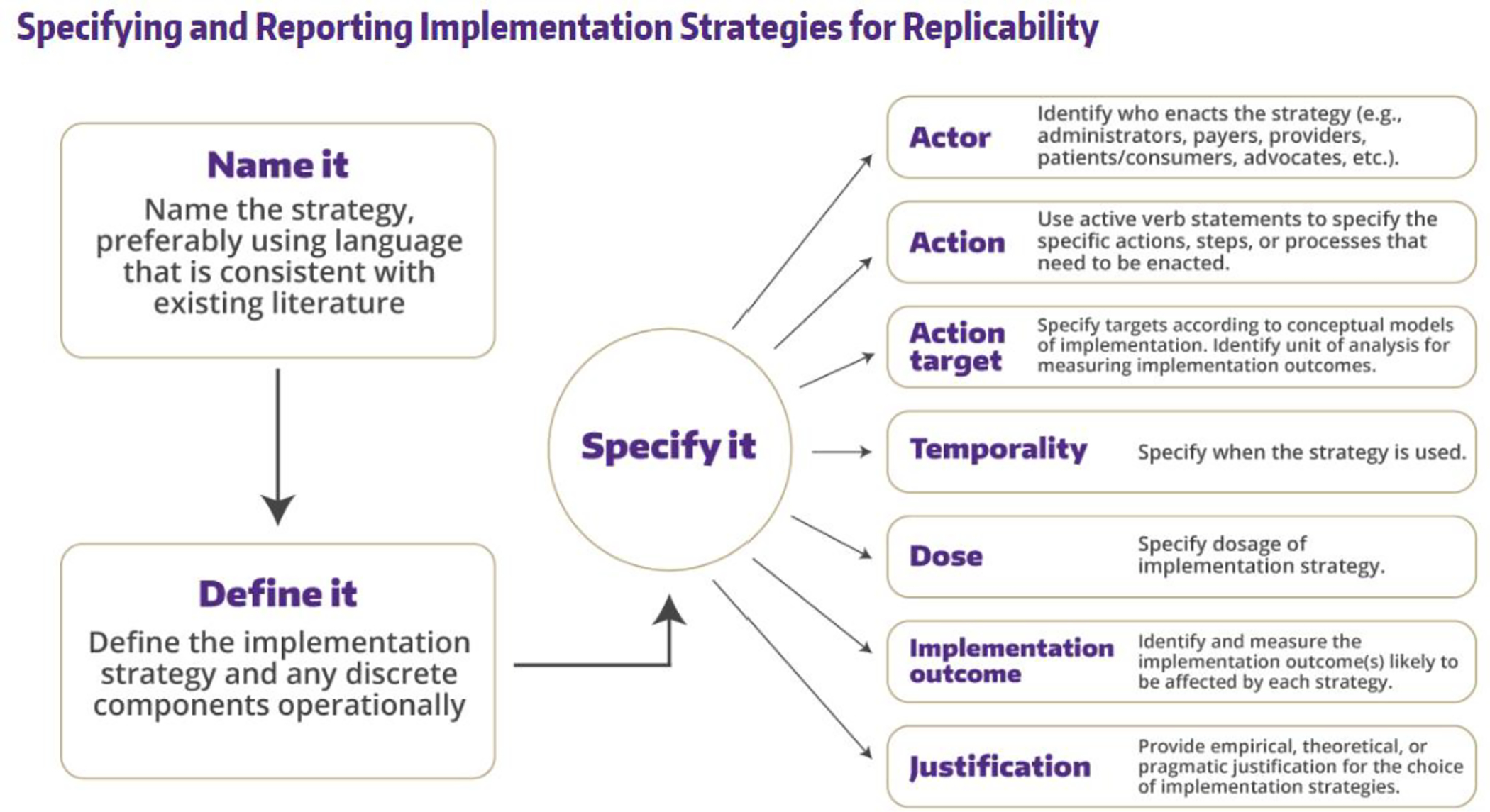

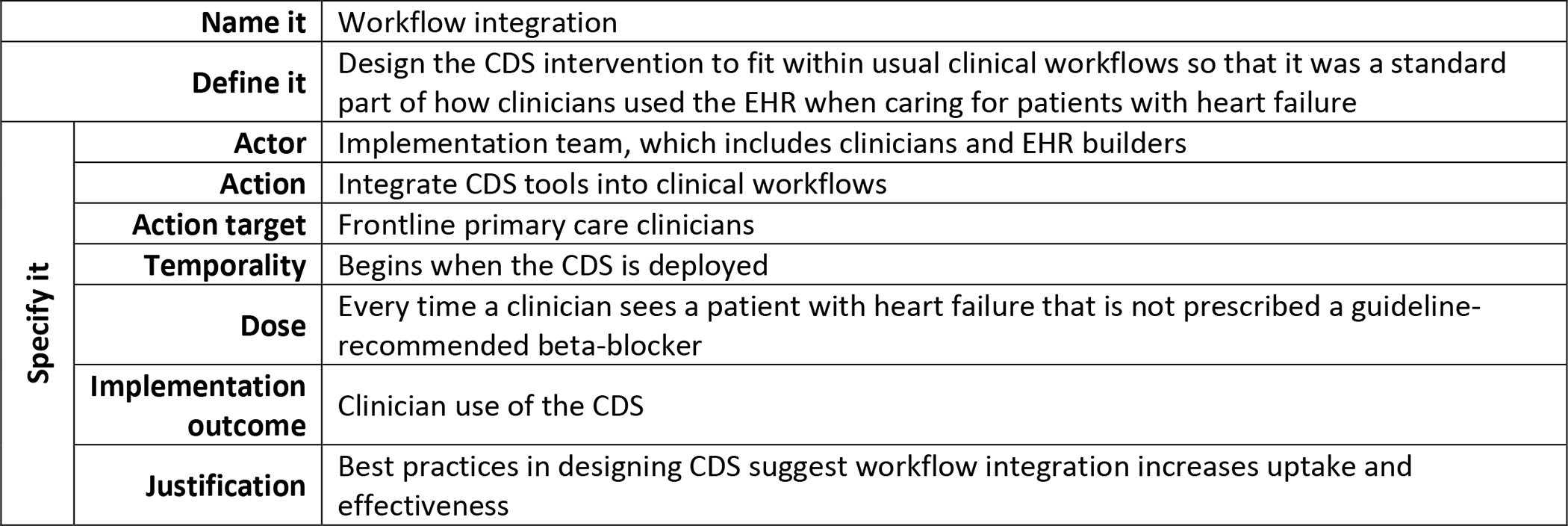

Multiple publications and resources have been developed to support the selection of implementation strategies.48–52 Briefly, implementation strategies can be linked to the anticipated or previously identified contextual determinants (barriers, facilitators) of the implementation of the intervention. It is important to sufficiently specify what the implementation strategy entails, including what it is called, what activities are involved and why those were selected, when, by whom, and to whom it is delivered, and the target outcomes it is intended to change. Figure 5 provides guidance from Proctor and colleagues on specifying implementation strategies,47,53 and Figure 6 depicts how this guidance was applied to workflow integration, an IS strategy that was used in the heart failure case.

Figure 5.

Recommendations for specifying implementation strategies (used with permission from the University of Washington Implementation Science Resource Hub).47,53

Figure 6.

Applying the recommendations for specifying implementation strategies. Description of how the workflow integration IS strategy used in the heart failure example was specified.

The IS TMF (see more details about this ‘ingredient’ in the next section) can be helpful in identifying implementation strategies, as shown by the ERIC-Consolidated Framework for Implementation Research (CFIR) matching tool, which links specific CFIR constructs with relevant strategies from the ERIC compilation.52

In the heart failure example, implementation strategies were designed to address contextual determinants. For example, incentive strategies were used to address clinician time constraints, such that clinicians saved time by using the CDS intervention. Figure 3 illustrates how implementation strategies can be identified.

IS Theory, Model, and Framework (TMF)

Most studies in IS are guided by specialized theories, models, and frameworks (TMFs).54–56 The purpose of IS TMFs is to provide a general structure for IS studies in which IS-relevant and connected constructs can inform the: formulation of research questions; identification of key contextual determinants (barriers, facilitators) and related strategies; selection of study design, measurement plan (e.g., specific implementation and outcome measures), and analytic plan; and interpretation and presentation of findings. Nilsen provides a classification of TMFs as ones that can guide the implementation of evidence-based interventions into practice (i.e., process models), models that help us better understand and explain influencers of implementation outcomes (i.e., determinant frameworks, classic theories, and implementation theories), and models that guide evaluation (i.e., evaluation frameworks).57 A TMF can fulfill several of these purposes, and its use can evolve over time; for example, the RE-AIM framework can be used as a planning, implementation, and evaluation TMF58 and CFIR 2.0 can be used as a determinant and evaluation framework.59

For full benefit, IS TMFs should be used consistently and iteratively throughout the lifetime of a project, from early conceptualization through implementation to sharing of findings. TMFs should also be used with a health equity lens to promote equity and representativeness of outcomes.18,20,23 There are a large number of IS TMFs available to choose from to guide planning, implementation, and evaluation activities for IS studies.55,60,61 The most recent review by Strifler and colleagues identified 150 IS TMFs.62 The most commonly used TMFs include the Diffusion of Innovations theory,63 the CFIR,13,64 the RE-AIM framework58 and its contextually expanded version, the Practical, Robust Implementation and Sustainability model (PRISM),65 the Exploration, Preparation, Implementation, Sustainment model (EPIS),66 and the Promoting Action on Research Implementation in Health Services (PARiHS) framework.67 An extensive listing of IS TMFs and guidance on how to plan, select, combine, adapt, use, and measure IS TMFs has been created by co-author Rabin and colleagues in the form of a publicly available interactive webtool, the Dissemination and Implementation Models in Health webtool (http://www.dissemination-implementation.org). In addition, T-CaST (an implementation Theory Comparison and Selection Tool) can be used to compare different IS TMFs.68

Because a large number of IS TMFs exist, it is unlikely that the creation of new TMFs is warranted. We encourage researchers and practitioners to identify a TMF that has been used in their situation or with the population they are concerned with. In some cases, the combination of multiple TMFs can provide a more comprehensive reflection of the research or practice need. Often the selection is guided by the availability of expertise for a given TMF. Because the use of a TMF requires some familiarity with how to operationalize it, it is best to work with a colleague who has prior experience and expertise in using the given TMF. Many of the models we listed earlier have similar or comparable constructs and can often be used interchangeably. As a general rule, a desirable IS TMF will include multiple contextual levels (e.g., student, teacher, dean), link contextual domains with implementation and effectiveness outcomes, and have measures linked to its constructs. For those newer to IS, it can be helpful to have examples of the use of the given TMF in their own area of interest.

The heart failure case example illustrates how PRISM/RE-AIM can be used to inform a pharmacy-relevant study (Figures 2 and 3). PRISM is a good fit for this case example as it includes multilevel contextual domains (Intervention characteristics as perceived by patients and clinicians; Internal recipients of patients, clinicians, and leaders; Implementation and sustainability infrastructure; and External environment) that interact dynamically and directly connect with a set of implementation outcomes (Reach, Adoption, Implementation, and Maintenance at the organizational level) and clinical outcomes (Effectiveness, Maintenance at the individual level) as described in Figure 3. To learn more about PRISM/RE-AIM, a set of tools is freely available at re-aim.org. The next section will provide more specifics about IS outcomes and measures.

IS Outcomes and Measures

Along with implementation strategies, another unique aspect of IS studies is the inclusion of pragmatic implementation outcomes along with more traditional clinical outcomes that consider key issues such as representativeness. In fact, often the primary focus of IS studies is on implementation outcomes because the effectiveness of the intervention has already been established. In the previous section, we listed a set of implementation outcomes included in the RE-AIM framework (i.e., Reach, Adoption, Implementation, and organizational Maintenance). Specific definitions from a more academic and pragmatic perspective are provided by Estabrooks and Glasgow and through the numerous resources of the RE-AIM.org webtool.69

Another commonly utilized guide for identifying outcomes of IS studies is the Implementation Outcomes Framework (IOF).70 The IOF distinguishes implementation outcomes, service outcomes, and client outcomes. The key implementation outcomes in IOF include acceptability, adoption, appropriateness, cost, feasibility, fidelity, penetration, and sustainability. The service outcomes are organized by relevant standards of care as defined by the organization or setting; examples include efficiency, safety, effectiveness, equity, and patient-centeredness.70 Finally, client outcomes align more closely with outcomes from traditional efficacy and effectiveness studies and can include measures of various clinical outcomes as well as satisfaction and quality of life. As the reader may note, the implementation outcomes defined by the RE-AIM framework and the IOF are similar. A recent publication by Reilly and colleagues provides definitions and a crosswalk between the two frameworks.71 There are multiple publications providing guidance on key measurement considerations for IS.72–76 In this paper, we highlight only one and encourage the reader to explore additional guidance from other publications. For successful and meaningful evaluation of IS studies, it is critical to develop outcome measures from multiple stakeholder perspectives (e.g., what matters to leaders versus clinicians versus patients) that consider representativeness and to collect a combination of quantitative and qualitative data.77–80 Finally, there are resources and repositories that can support identification of IS measures. These include the SIRC Instrument repository,81,82 the Grid-enabled Measures IS workspace,83,84 and the IS policy measures database by Washington University in St. Louis.85

The heart failure case example relies on the RE-AIM outcomes and provides an overview of how the individual constructs of RE-AIM are operationalized (Figures 2 and 3). To assess reach, data were collected from the EHR, and reach was calculated as the number of patients who were reached by the CDS intervention divided by all patients with a HFrEF diagnosis. In this situation, the ideal denominator for reach would be those eligible for a beta-blocker medication, but due to technical constraints of data availability, we used a less precise denominator. Similarly, adoption was evaluated using EHR data and defined as the proportion of clinicians who saw a CDS alert and used it. For the comprehensive exploration of implementation success, a combination of EHR data and information gained from interviews with clinicians and leaders was used.
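
The reach and adoption calculations described above can be written out as a minimal sketch. The symbols are illustrative shorthand rather than notation from the study: N_reached is the number of patients reached by the CDS intervention, N_HFrEF is the number of patients with a HFrEF diagnosis, C_used is the number of clinicians who saw a CDS alert and used it, and C_alerted is the number of clinicians who saw a CDS alert. The choice of C_alerted as the adoption denominator is an assumed reading of the definition given here; the study may have used a different denominator. The `\text` command assumes the amsmath package.

```latex
\[
\text{Reach} = \frac{N_{\text{reached}}}{N_{\text{HFrEF}}},
\qquad
\text{Adoption} = \frac{C_{\text{used}}}{C_{\text{alerted}}}
\]
```

If the patients eligible for a beta-blocker are a subset of those with a HFrEF diagnosis, then using the broader denominator can only lower the calculated reach relative to the ideal denominator, which is one way to interpret the caveat about the less precise denominator.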

Stakeholder Engagement

While all four prior ingredients are important for IS research, the meaningful engagement of diverse stakeholders throughout the planning, implementation, and sustainment of the intervention can serve as one of the most important predictors of success.86–88 When the diverse stakeholders are identified and included as partners throughout the entire IS research process, the likelihood of the equitable uptake, implementation, and sustained use of an intervention will be substantially increased. All IS studies should identify key stakeholders and devote strategies and resources to engaging them in the research process. Stakeholder engagement is considered on a spectrum from minimal engagement (inform) to complete partnership and co-creation of products (empowerment).89,90 While not all research projects are resourced appropriately to include all stakeholders as equal partners, there are always ways to improve engagement practices87 and increase representation in order to improve representative and equitable implementation.18,20 Regardless of the extent of stakeholder engagement, IS cannot be successfully implemented without stakeholder engagement.

The heart failure example describes how diverse patients, clinicians, and leadership were engaged at each stage of the study, which included focus groups and interviews (Figures 2 and 3).

REPORTING ON IMPLEMENTATION SCIENCE STUDIES

Similar to how the Consolidated Standards of Reporting Trials (CONSORT) was developed to address concerns of inconsistent reporting of randomized controlled trials,91 the Standards for Reporting Implementation Studies (StaRI) was developed to provide transparent and accurate reporting of IS studies.92,93 StaRI was developed under the guidance of the EQUATOR Network (www.equator-network.org). The StaRI Checklist comprises 27 items, with 3 overarching components: (1) logic pathway of an explicit hypothesis for both IS strategy and intervention; (2) balance between fidelity (“the degree of adherence to the described IS strategy and intervention”) and adaptation (“the degree to which users modify the strategy and intervention during implementation to suit the local needs”); and (3) context of successful implementation in the planned, facilitated process. As suggested by the first overarching component, the intervention and the implementation strategy both need to be considered when reviewing IS studies. As discussed in an earlier section of this paper, and as in most IS studies, the primary focus is on the implementation strategy, while also considering the evidence about the impact of the intervention on the priority population. StaRI was designed to be agnostic to study design; it applies to a wide range of designs, including randomized controlled trials, case studies, cohorts, time series, pre-post, and mixed methods, and should be used in combination with the design-specific checklists.

In the heart failure example, the logic pathway used was the PRISM/RE-AIM framework and the hypothesis was that a user-centered and contextually-relevant CDS tool would lead to greater reach, adoption, and effectiveness of prescribing evidence-based medications for HFrEF; fidelity and adaptation were balanced by preemptively defining core components or functions of the CDS tool (e.g., interruptive CDS tool for primary care clinicians within the EHR workflow, CDS recommendations were evidence-based) and planning for proactive evaluation and documentation of any unanticipated adaptations based on their alignment with the core components/function with a description of contextual factors; and the context of the local setting and population (leadership structure, clinicians, patients) was described with enough detail that others could evaluate the relevance of the findings to their situation.

RESOURCES

In this introductory review, we have described key IS concepts using an example applicable to medication management and provided guidance on reviewing the literature with an IS lens. We look forward to seeing more IS studies in the field of pharmacy practice, medication management, and health professional education. For those interested in finding resources or training related to IS, additional information is available from the National Cancer Institute’s Training Institute for Dissemination and Implementation Research in Cancer (TIDIRC) program (https://cancercontrol.cancer.gov/is/training-education/training-in-cancer/TIDIRC-open-access), AcademyHealth (https://academyhealth.org/evidence/methods/translation-dissemination-implementation), the National Institutes of Health (NIH) National Center for Advancing Translational Sciences (https://ncats.nih.gov/), or the Agency for Healthcare Research and Quality (AHRQ) (https://www.ahrq.gov/ncepcr/tools/transform-qi/index.html).

Table 1.

Key Considerations When Reporting on or Reading an Implementation Science (IS) Study

- Compliance with the StaRI Standards (guidance of the EQUATOR Network)92,93
- Three overarching components: (1) logic pathway; (2) balance between fidelity and adaptation; and (3) context
- Five key ingredients: (1) evidence-based intervention; (2) IS strategy (primary focus); (3) TMF; (4) IS outcomes and measures; and (5) stakeholder engagement
- Combine other standards as needed (e.g., CONSORT, STROBE)91

Note: StaRI – the Standards for Reporting Implementation Studies; EQUATOR – Enhancing the QUAlity and Transparency Of health Research; TMF – Theory, Model, and Framework; CONSORT – Consolidated Standards of Reporting Trials; STROBE – Strengthening the Reporting of Observational Studies in Epidemiology.

Acknowledgements:

Katy Trinkley is supported by the National Institutes of Health, National Heart Lung and Blood Institute K12 Training grant (NIH/NHLBI K12HL137862). Borsika Rabin is supported by the UC San Diego ACTRI Dissemination and Implementation Science Center and the Altman Clinical and Translational Research Institute at UC San Diego, funded by the National Institutes of Health, National Center for Research Resource (NIH UL1TR001442).

Footnotes

Conflict of interest statement: The authors declare no conflicts of interest.

Contributor Information

Grace M. Kuo, Texas Tech University Health Sciences Center and Professor Emerita at University of California San Diego; Address: 1300 S. Coulter Street, Suite 104, Amarillo, TX 79106.

Katy E. Trinkley, University of Colorado Skaggs Schools of Medicine and Pharmacy and Pharmaceutical Sciences at the Anschutz Medical Campus; Aurora, Colorado.

Borsika Rabin, Herbert Wertheim School of Public Health and Human Longevity Science and Co-Director of the UC San Diego ACTRI Dissemination and Implementation Science Center at University of California San Diego; La Jolla, California.

References:

- 1.Bauer MS, Damschroder L, Hagedorn H, Smith J, Kilbourne AM. An introduction to implementation science for the non-specialist. BMC Psychol. 2015;3(1):32. doi: 10.1186/s40359-015-0089-9 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Glasgow RE, Lichtenstein E, Marcus AC. Why don’t we see more translation of health promotion research to practice? Rethinking the efficacy-to-effectiveness transition. Am J Public Health. 2003;93(8):1261–1267. doi: 10.2105/AJPH.93.8.1261 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Balas E, Boren S. Managing clinical knowledge for health care improvement. Yearb Med. 2000;1(1):65–70. [PubMed] [Google Scholar]

- 4.Khan S, Chambers D, Neta G. Revisiting time to translation: implementation of evidence-based practices (EBPs) in cancer control. Cancer Causes and Control. 2021;32(3):221–230. doi: 10.1007/s10552-020-01376-z [DOI] [PubMed] [Google Scholar]

- 5.Rankin NM, Butow PN, Hack TF, et al. An implementation science primer for psycho-oncology: translating robust evidence into practice. Journal of Psychosocial Oncology Research & Practice. 2019;1(3):e14. doi: 10.1097/OR9.0000000000000014 [DOI] [Google Scholar]

- 6. Garza KB, Abebe E, Bacci JL, et al. Building Implementation Science Capacity in Academic Pharmacy: Report of the 2020–2021 AACP Research and Graduate Affairs Committee. Am J Pharm Educ. 2021;85(10):1159–1166. doi: 10.5688/AJPE8718

- 7. Kuo G, Bacci JL, Chui MA, et al. Implementation Science to Advance Practice and Curricular Transformation: Report of the 2019–2020 AACP Research and Graduate Affairs Committee. Am J Pharm Educ. 2020;84(10):1440–1445. doi: 10.5688/AJPE848204

- 8. Curran GM, Shoemaker SJ. Advancing pharmacy practice through implementation science. Res Social Adm Pharm. 2017;13(5):889–891. doi: 10.1016/J.SAPHARM.2017.05.018

- 9. Seaton TL. Dissemination and implementation sciences in pharmacy: A call to action for professional organizations. Res Social Adm Pharm. 2017;13(5):902–904. doi: 10.1016/J.SAPHARM.2017.05.021

- 10. Roberts MC, Mensah GA, Khoury MJ. Leveraging Implementation Science to Address Health Disparities in Genomic Medicine: Examples from the Field. Ethn Dis. 2019;29(Suppl 1):187–192. doi: 10.18865/ED.29.S1.187

- 11. Lieu E, Mercadante AR, Schwartzman E, Law AV. Transitions of care at an ambulatory care clinic: An implementation science approach. Res Social Adm Pharm. Published online June 2021. doi: 10.1016/J.SAPHARM.2021.06.022

- 12. Livorsi DJ, Drainoni ML, Reisinger HS, et al. Leveraging implementation science to advance antibiotic stewardship practice and research. Infect Control Hosp Epidemiol. 2022;43(2):139–146. doi: 10.1017/ICE.2021.480

- 13. Weir NM, Newham R, Dunlop E, Bennie M. Factors influencing national implementation of innovations within community pharmacy: a systematic review applying the Consolidated Framework for Implementation Research. Implement Sci. 2019;14(1). doi: 10.1186/S13012-019-0867-5

- 14. Eccles MP, Mittman BS. Welcome to implementation science. Implementation Science. 2006;1(1):1–3. doi: 10.1186/1748-5908-1-1

- 15. Holtrop JS, Rabin BA, Glasgow RE. Dissemination and Implementation Science in Primary Care Research and Practice: Contributions and Opportunities. J Am Board Fam Med. 2018;31(3):466–478. doi: 10.3122/JABFM.2018.03.170259

- 16. Neta G, Brownson RC, Chambers DA. Opportunities for Epidemiologists in Implementation Science: A Primer. Am J Epidemiol. 2018;187(5):899–910. doi: 10.1093/AJE/KWX323

- 17. Bauer MS, Kirchner JA. Implementation science: What is it and why should I care? Psychiatry Res. 2020;283. doi: 10.1016/J.PSYCHRES.2019.04.025

- 18. Shelton RC, Chambers DA, Glasgow RE. An extension of RE-AIM to enhance sustainability: Addressing dynamic context and promoting health equity over time. Frontiers in Public Health. 2020;8. doi: 10.3389/fpubh.2020.00134

- 19. Shelton RC, Adsul P, Oh A. Recommendations for addressing structural racism in implementation science: A call to the field. Ethnicity and Disease. 2021;31(Suppl 1):357–364. doi: 10.18865/ed.31.S1.357

- 20. Kwan BM, Brownson RC, Glasgow RE, Morrato EH, Luke DA. Designing for dissemination and sustainability to promote equitable impacts on health. Annu Rev Public Health. 2022;43(1). doi: 10.1146/ANNUREV-PUBLHEALTH-052220-112457

- 21. Mazzucca S, Arredondo EM, Hoelscher DM, et al. Expanding implementation research to prevent chronic diseases in community settings. Annu Rev Public Health. 2021;42:135–158. doi: 10.1146/ANNUREV-PUBLHEALTH-090419-102547

- 22. Yousefi Nooraie R, Kwan BM, Cohn E, et al. Advancing health equity through CTSA programs: Opportunities for interaction between health equity, dissemination and implementation, and translational science. Journal of Clinical and Translational Science. 2020;4(3):168–175. doi: 10.1017/cts.2020.10

- 23. Brownson RC, Kumanyika SK, Kreuter MW, Haire-Joshu D. Implementation science should give higher priority to health equity. Implement Sci. 2021;16(1). doi: 10.1186/S13012-021-01097-0

- 24. Landes SJ, McBain SA, Curran GM. An introduction to effectiveness-implementation hybrid designs. Psychiatry Res. 2019;280. doi: 10.1016/J.PSYCHRES.2019.112513

- 25. Guerin RJ, Harden SM, Rabin BA, et al. Dissemination and Implementation Science Approaches for Occupational Safety and Health Research: Implications for Advancing Total Worker Health. Int J Environ Res Public Health. 2021;18(21). doi: 10.3390/IJERPH182111050

- 26. Dearing J, Kee K, Peng T. Historical roots of dissemination and implementation science. In: Brownson R, Colditz G, Proctor E, eds. Dissemination and Implementation Research in Health: Translating Science to Practice. 2nd ed. Oxford University Press; 2017:47–61.

- 27. Huh J, DeLorme DE, Reid LN. Media credibility and informativeness of direct-to-consumer prescription drug advertising. Health Mark Q. 2004;21(3):27–61. doi: 10.1300/J026V21N03_03

- 28. Deshpande A, Menon A, Perri M, Zinkhan G. Direct-to-consumer advertising and its utility in health care decision making: a consumer perspective. J Health Commun. 2004;9(6):499–513. doi: 10.1080/10810730490523197

- 29. Issues in Translation | National Center for Advancing Translational Sciences. Accessed March 17, 2022. https://ncats.nih.gov/translation/issues

- 30. Fort DG, Herr TM, Shaw PL, Gutzman KE, Starren JB. Mapping the evolving definitions of translational research. Journal of Clinical and Translational Science. 2017;1(1):60. doi: 10.1017/CTS.2016.10

- 31. Lobb R, Colditz GA. Implementation science and its application to population health. Annu Rev Public Health. 2013;34:235–251. doi: 10.1146/ANNUREV-PUBLHEALTH-031912-114444

- 32. Braganza MZ, Kilbourne AM. The Quality Enhancement Research Initiative (QUERI) Impact Framework: Measuring the Real-world Impact of Implementation Science. J Gen Intern Med. 2021;36(2):396–403. doi: 10.1007/S11606-020-06143-Z

- 33. Goodrich D, Miake-Lye I, Braganza M, Wawrin N, Kilbourne A. The QUERI Roadmap for Implementation and Quality Improvement. Published online 2020. Accessed June 9, 2022. https://www.ncbi.nlm.nih.gov/books/NBK566223/

- 34. Trinkley KE, Kahn MG, Bennett TD, et al. Integrating the Practical Robust Implementation and Sustainability Model With Best Practices in Clinical Decision Support Design: Implementation Science Approach. J Med Internet Res. 2020;22(10). doi: 10.2196/19676

- 35. Trinkley KE, Kroehl ME, Kahn MG, et al. Applying Clinical Decision Support Design Best Practices With the Practical Robust Implementation and Sustainability Model Versus Reliance on Commercially Available Clinical Decision Support Tools: Randomized Controlled Trial. JMIR Med Inform. 2021;9(3). doi: 10.2196/24359

- 36. Smith JD, Li DH, Rafferty MR. The implementation research logic model: a method for planning, executing, reporting, and synthesizing implementation projects. Implement Sci. 2020;15(1). doi: 10.1186/S13012-020-01041-8

- 37. Brown CH, Curran G, Palinkas LA, et al. An Overview of Research and Evaluation Designs for Dissemination and Implementation. Annu Rev Public Health. 2017;38:1–22. doi: 10.1146/ANNUREV-PUBLHEALTH-031816-044215

- 38. Brownson RC, Fielding JE, Maylahn CM. Evidence-based public health: a fundamental concept for public health practice. Annu Rev Public Health. 2009;30:175–201. doi: 10.1146/ANNUREV.PUBLHEALTH.031308.100134

- 39. Brownson RC, Chriqui JF, Stamatakis KA. Understanding evidence-based public health policy. Am J Public Health. 2009;99(9):1576–1583. doi: 10.2105/AJPH.2008.156224

- 40. Yancy CW, Jessup M, Bozkurt B, et al. 2017 ACC/AHA/HFSA Focused Update of the 2013 ACCF/AHA Guideline for the Management of Heart Failure. J Am Coll Cardiol. 2017;70(6):776–803. doi: 10.1016/j.jacc.2017.04.025

- 41. Yancy CW, Jessup M, Bozkurt B, et al. 2013 ACCF/AHA guideline for the management of heart failure: executive summary: a report of the American College of Cardiology Foundation/American Heart Association Task Force on practice guidelines. Circulation. 2013;128(16):1810–1852. doi: 10.1161/CIR.0b013e31829e8807

- 42. Sutton RT, Pincock D, Baumgart DC, Sadowski DC, Fedorak RN, Kroeker KI. An overview of clinical decision support systems: benefits, risks, and strategies for success. npj Digital Medicine. 2020;3(1). doi: 10.1038/s41746-020-0221-y

- 43. Blumenthal D, Tavenner M. The “meaningful use” regulation for electronic health records. New England Journal of Medicine. 2010;363(1):1–3.

- 44. Waltz TJ, Powell BJ, Matthieu MM, et al. Use of concept mapping to characterize relationships among implementation strategies and assess their feasibility and importance: results from the Expert Recommendations for Implementing Change (ERIC) study. Implement Sci. 2015;10(1). doi: 10.1186/S13012-015-0295-0

- 45. Powell BJ, Waltz TJ, Chinman MJ, et al. A refined compilation of implementation strategies: results from the Expert Recommendations for Implementing Change (ERIC) project. Implement Sci. 2015;10(1). doi: 10.1186/S13012-015-0209-1

- 46. Curran GM. Implementation science made too simple: a teaching tool. Implement Sci Commun. 2020;1(1). doi: 10.1186/S43058-020-00001-Z

- 47. University of Washington Implementation Science. Implementation Strategies. https://impsciuw.org/implementation-science/research/implementation-strategies/

- 48. Dickson KS, Holt TC, Arredondo E. Applying Implementation Mapping to an Expanded Care Coordination Program at a Federally Qualified Health Center. Frontiers in Public Health. Published online 2022. doi: 10.3389/FPUBH.2022.844898

- 49. Davis JL, Ayakaka I, Ggita JM, et al. Theory-informed design of a tailored strategy for implementing household TB contact investigation in Uganda. Frontiers in Public Health. Published online 2022. doi: 10.3389/FPUBH.2022.837211

- 50. Schultes MT, Albers B, Caci L, Nyantakyi E, Clack L. A modified implementation mapping methodology for evaluating and learning from existing implementation. Frontiers in Public Health. Published online 2022. doi: 10.3389/FPUBH.2022.836552

- 51. Kang E, Foster ER. Use of Implementation Mapping with Community-Based Participatory Research: Development of Implementation Strategies of a New Goal Setting and Goal Management Intervention System. Frontiers in Public Health. Published online 2022. doi: 10.3389/FPUBH.2022.834473

- 52. Waltz TJ, Powell BJ, Fernández ME, Abadie B, Damschroder LJ. Choosing implementation strategies to address contextual barriers: diversity in recommendations and future directions. Implement Sci. 2019;14(1). doi: 10.1186/S13012-019-0892-4

- 53. Proctor EK, Powell BJ, McMillen JC. Implementation strategies: Recommendations for specifying and reporting. Implementation Science. 2013;8(1). doi: 10.1186/1748-5908-8-139

- 54. Tabak RG, Khoong EC, Chambers DA, Brownson RC. Bridging research and practice: models for dissemination and implementation research. Am J Prev Med. 2012;43(3):337–350. doi: 10.1016/j.amepre.2012.05.024

- 55. Moullin JC, Dickson KS, Stadnick NA, et al. Ten recommendations for using implementation frameworks in research and practice. Implement Sci Commun. 2020;1(1). doi: 10.1186/S43058-020-00023-7

- 56. Rabin B, Brownson R. Developing terminology for dissemination and implementation research. In: Brownson R, Colditz G, Proctor EK, eds. Dissemination and Implementation Research in Health. 2nd ed. Oxford University Press; 2018:19–45.

- 57. Nilsen P. Making sense of implementation theories, models and frameworks. Implementation Science. Published online 2015. doi: 10.1186/s13012-015-0242-0

- 58. Glasgow RE, Harden SM, Gaglio B, et al. RE-AIM planning and evaluation framework: Adapting to new science and practice with a 20-year review. Frontiers in Public Health. 2019;7(MAR). doi: 10.3389/fpubh.2019.00064

- 59. Damschroder LJ, Reardon CM, Opra Widerquist MA, Lowery J. Conceptualizing outcomes for use with the Consolidated Framework for Implementation Research (CFIR): the CFIR Outcomes Addendum. Implementation Science. 2022;17(1):1–10. doi: 10.1186/S13012-021-01181-5

- 60. Tabak RG, Khoong EC, Chambers DA, Brownson RC. Bridging Research and Practice. American Journal of Preventive Medicine. 2012;43(3):337–350. doi: 10.1016/j.amepre.2012.05.024

- 61. Dissemination and implementation models in health research and practice. https://dissemination-implementation.org/

- 62. Strifler L, Cardoso R, McGowan J, et al. Scoping review identifies significant number of knowledge translation theories, models, and frameworks with limited use. Journal of Clinical Epidemiology. 2018;100. doi: 10.1016/j.jclinepi.2018.04.008

- 63. Rogers EM. Diffusion of Innovations Theory. 5th ed. New York: Free Press; 2003. doi: 10.1111/j.1467-9523.1970.tb00071.x

- 64. Damschroder LJ, Aron DC, Keith RE, Kirsh SR, Alexander JA, Lowery JC. Fostering implementation of health services research findings into practice: a consolidated framework for advancing implementation science. Implementation Science. 2009;4(1):50. doi: 10.1186/1748-5908-4-50

- 65. Feldstein AC, Glasgow RE. A practical, robust implementation and sustainability model (PRISM) for integrating research findings into practice. Joint Commission Journal on Quality and Patient Safety. 2008;34(4):228–243. doi: 10.1016/S1553-7250(08)34030-6

- 66. Moullin JC, Dickson KS, Stadnick NA, Rabin B, Aarons GA. Systematic review of the Exploration, Preparation, Implementation, Sustainment (EPIS) framework. Implementation Science. 2019;14(1). doi: 10.1186/s13012-018-0842-6

- 67. Helfrich CD, Damschroder LJ, Hagedorn HJ, et al. A critical synthesis of literature on the promoting action on research implementation in health services (PARIHS) framework. Implementation Science. 2010;5(1). doi: 10.1186/1748-5908-5-82

- 68. Birken SA, Rohweder CL, Powell BJ, et al. T-CaST: An implementation theory comparison and selection tool. Implementation Science. 2018;13(1):1–10. doi: 10.1186/S13012-018-0836-4

- 69. Glasgow RE, Estabrooks PE. Pragmatic Applications of RE-AIM for Health Care Initiatives in Community and Clinical Settings. Prev Chronic Dis. 2018;15(1). doi: 10.5888/PCD15.170271

- 70. Proctor E, Silmere H, Raghavan R, et al. Outcomes for implementation research: Conceptual distinctions, measurement challenges, and research agenda. Administration and Policy in Mental Health and Mental Health Services Research. 2011;38(2):65–76. doi: 10.1007/s10488-010-0319-7

- 71. Reilly KL, Kennedy S, Porter G, Estabrooks P. Comparing, Contrasting, and Integrating Dissemination and Implementation Outcomes Included in the RE-AIM and Implementation Outcomes Frameworks. Front Public Health. 2020;8. doi: 10.3389/FPUBH.2020.00430

- 72. Allen P, Pilar M, Walsh-Bailey C, et al. Quantitative measures of health policy implementation determinants and outcomes: a systematic review. Implement Sci. 2020;15(1). doi: 10.1186/S13012-020-01007-W

- 73. Powell BJ, Stanick CF, Halko HM, et al. Toward criteria for pragmatic measurement in implementation research and practice: a stakeholder-driven approach using concept mapping. Implement Sci. 2017;12(1). doi: 10.1186/S13012-017-0649-X

- 74. Stanick CF, Halko HM, Nolen EA, et al. Pragmatic measures for implementation research: development of the Psychometric and Pragmatic Evidence Rating Scale (PAPERS). Transl Behav Med. 2021;11(1):11–20. doi: 10.1093/TBM/IBZ164

- 75. Glasgow RE. What Does It Mean to Be Pragmatic? Pragmatic Methods, Measures, and Models to Facilitate Research Translation. Health Education and Behavior. 2013;40(3):257–265. doi: 10.1177/1090198113486805

- 76. Glasgow RE, Riley WT. Pragmatic measures: what they are and why we need them. Am J Prev Med. 2013;45(2):237–243. doi: 10.1016/J.AMEPRE.2013.03.010

- 77. Holtrop JS, Rabin BA, Glasgow RE. Qualitative approaches to use of the RE-AIM framework: rationale and methods. BMC Health Services Research. 2018;18(1):177. doi: 10.1186/s12913-018-2938-8

- 78. Dopp AR, Mundey P, Beasley LO, Silovsky JF, Eisenberg D. Mixed-method approaches to strengthen economic evaluations in implementation research. Implement Sci. 2019;14(1). doi: 10.1186/S13012-018-0850-6

- 79. Gertner AK, Franklin J, Roth I, et al. A scoping review of the use of ethnographic approaches in implementation research and recommendations for reporting. Implement Res Pract. 2021;2:263348952199274. doi: 10.1177/2633489521992743

- 80. Qualitative Methods in Implementation Science, National Cancer Institute Division of Cancer Control & Population Sciences | Implementation Science News. Accessed March 25, 2022. https://news.consortiumforis.org/2019/10/09/qualitative-methods-in-implementation-science-national-cancer-institute-division-of-cancer-control-population-sciences/

- 81. Instrument Repository – SIRC. Accessed March 17, 2022. https://societyforimplementationresearchcollaboration.org/measures-collection/

- 82. Lewis CC, Stanick CF, Martinez RG, et al. The society for implementation research collaboration instrument review project: A methodology to promote rigorous evaluation. Implementation Science. 2015;10(1):1–18. doi: 10.1186/S13012-014-0193-X

- 83. Rabin BA, Purcell P, Naveed S, et al. Advancing the application, quality and harmonization of implementation science measures. Implement Sci. 2012;7(1). doi: 10.1186/1748-5908-7-119

- 84. GEM. Accessed March 17, 2022. https://www.gem-measures.org/Login.aspx

- 85. Home :: Health Policy Measures. Accessed March 17, 2022. https://www.health-policy-measures.org/

- 86. Pinto RM, Ethan Park S, Miles R, Ong PN. Community engagement in dissemination and implementation models: A narrative review. Implement Res Pract. 2021;2:263348952098530. doi: 10.1177/2633489520985305

- 87. Stadnick NA, Cain KL, Watson P, et al. Engaging Underserved Communities in COVID-19 Health Equity Implementation Research: An Analysis of Community Engagement Resource Needs and Costs. Frontiers in Health Services. Published online 2022. doi: 10.3389/FRHS.2022.850427

- 88. Stadnick NA, Cain KL, Oswald W, et al. Co-creating a Theory of Change to advance COVID-19 testing and vaccine uptake in underserved communities. Health Serv Res. Published online March 4, 2022. doi: 10.1111/1475-6773.13910

- 89. Bombard Y, Baker GR, Orlando E, et al. Engaging patients to improve quality of care: a systematic review. Implement Sci. 2018;13(1). doi: 10.1186/S13012-018-0784-Z

- 90. International Association for Public Participation. Public Participation Spectrum. Published 2021. Accessed March 26, 2022. https://www.iap2.org/page/pillars

- 91. Moher D, Schulz KF, Altman D. The CONSORT Statement: revised recommendations for improving the quality of reports of parallel-group randomized trials 2001. Explore (NY). Published online 2005. doi: 10.1016/j.explore.2004.11.001

- 92. Standards for Reporting Implementation Studies (StaRI) Statement | The EQUATOR Network. Accessed March 17, 2022. https://www.equator-network.org/reporting-guidelines/stari-statement/

- 93. Pinnock H, Barwick M, Carpenter CR, et al. Standards for Reporting Implementation Studies (StaRI): Explanation and elaboration document. BMJ Open. 2017;7(4). doi: 10.1136/bmjopen-2016-013318