Abstract

Objective

Drawing on the heuristic–systematic model (HSM) and the health belief model (HBM), this study investigates how personalization and source expertise in a health chatbot’s responses influence users’ health belief factors (i.e. perceived benefits, self-efficacy and privacy concerns) and their usage intention.

Methods

A 2 (personalization vs. non-personalization) × 2 (source expertise vs. non-source expertise) online between-subjects experiment was conducted. Participants were recruited in China between April and May 2021, and data from 260 valid responses were analyzed.

Results

Source expertise moderated the effects of personalization on health belief factors. Perceived benefits and self-efficacy mediated the relationship between personalization and usage intention when the source expertise cue was present. Privacy concerns, however, were affected neither by personalization nor by source expertise, and they did not significantly affect usage intention toward the health chatbot.

Discussion

This study indicates that, in the health chatbot context, source expertise as a heuristic cue may be a necessary condition for the effects of the systematic cue (i.e. personalization), which is consistent with the HSM’s arguments. By introducing the HBM into a chatbot experiment, this study aims to provide new insights into the acceptance of AI-based healthcare consulting services.

Keywords: Health chatbot, personalization, source expertise, heuristic–systematic model (HSM), health belief model (HBM)

Introduction

Health chatbots are being harnessed to achieve various goals, from enhancing the user experience and assisting healthcare professionals to improving healthcare processes and unlocking potential benefits, thereby demonstrating the power of conversational AI. 1 Many health chatbots are emerging in the global market, such as Ping An Good Doctor (PAGD) from China, Woebot from the USA and Babylon from the UK. 2 Demand for virtual health services grew substantially during the COVID-19 pandemic in particular. For example, PAGD experienced phenomenal growth at the peak of the pandemic, with more than 1.27 billion consultations in 2021. 3 With advances in artificial intelligence (AI) and natural language processing (NLP), some health chatbots can combine medical databases with users’ personal information to generate health advice automatically. In the UK, chatbots are helping clinicians provide patient-centered medical care while reducing differences across the nation and aiding patients in self-management. 4 In China, many companies are working to optimize the interactions between users and health chatbots and to attract more users. 5

Improving usage intention is crucial for health chatbots to realize their beneficial outcomes. One of the major approaches to enhancing usage intention is to provide users with more tailored and professional health-related information.6,7 Since Awad and Krishnan 8 proposed the personalization-privacy paradox, discussion of how personalized information elicits more positive perceptions online has grown.9,10 In the healthcare context especially, a health diagnosis is characteristically tailored to users according to their demographic information, symptoms or lifestyle habits. 11 Hence, how to balance users’ privacy concerns against the provision of personalized health services has become an issue of widespread concern among practitioners. In addition, previous studies have shown that the source of information is one of the bases of users’ judgments regarding the trustworthiness and quality of chatbots.4,12 Among source characteristics, source expertise has been shown to affect the perceived credibility of messages when users process online health-related information. 13 However, how source expertise affects users’ health-related perceptions in the context of health chatbots still warrants in-depth analysis.

From a theoretical standpoint, the HSM has provided an explanatory framework for online and offline health communication campaigns.14,15 Examining users’ heuristic and systematic processing helps explain their health information decision-making and belief changes in the context of health chatbots. When users’ cognitive motivation is relatively high, they may process heuristic and systematic cues simultaneously.16,17 Given that users tend to use health chatbots for specific needs, we conducted an online experiment with a health chatbot to explore the interaction effect of source expertise and personalization cues on users’ beliefs and behavioral intention. In addition, previous literature has found the HBM to be relevant and effective in predicting users’ health-related perceptions and attitudes toward e-health interventions.18,19 We therefore also examined the mediating role of three health belief factors (i.e. perceived benefits, self-efficacy and privacy concerns) in the relationship between the two information-processing cues and usage intention.

Literature review

Health chatbot for consulting

Trained on massive amounts of healthcare data, chatbots that provide medical information have shown potential both in academia and in practice. 20 Health chatbots have been widely applied to support users’ short-term or long-term health goals, such as assisting in automatic triage, performing cancer diagnosis, providing consultations and supporting self-management. 21 With the popularity of embedded platforms (such as Facebook Messenger and Telegram) and health applications, 22 chatbots that provide health consultations help to reduce medical costs, improve access to health knowledge and narrow the health and well-being gap.4,23 Chatbots also have clear advantages, such as being anonymous, non-judgmental and convenient.4,24 Taking the “Left-hand Doctor” app in China as an example, when a user enters a simple keyword about a disease, the interface displays related knowledge and medical suggestions, mimicking a real general practitioner consultation. Users expect to obtain tailored and professional information from health chatbots, which makes personalization and professionalism indispensable features of this technology.25,26 Chatbots can give personalized responses based on the user's profile by storing and retrieving user information in databases. 21 AI algorithms or collaborations with prestigious health specialists and professional teams further support the expertise of health chatbots.12,27

Previous research has identified several antecedents of usage intention toward health chatbots, and many of these studies focused on users’ perceptions of the chatbot system's performance. For example, Nadarzynski et al. 4 noted that convenience and anonymity may drive user acceptance of health chatbots, whereas concerns about accuracy and security may impede it. Zhu et al. 28 found that the different kinds of value that users perceive (i.e. functional, emotional, epistemic and conditional) can enhance their experience and continuance intention toward healthcare chatbots. Liu et al.'s 29 study revealed that factors drawn from the technology acceptance model (i.e. perceived usefulness, perceived ease of use and perceived enjoyment) are significant antecedents of continuance intention toward healthcare chatbots. Similarly, Huang and Chueh 27 found that perceived ease of use and perceived information quality can increase satisfaction and behavioral intention to use. Although these studies offer valuable insights, how users’ health beliefs during use affect their intention to use healthcare chatbots appears to have been neglected. As people use health chatbots primarily to address health concerns and then decide whether to continue using the technology, it is necessary to consider theories such as the HBM, which emphasizes individuals’ health beliefs, when examining users’ adoption of this technology.

Personalization and source expertise in online applications

Personalization and source expertise cues are commonly employed in health chatbots to provide users with better services. As for personalization, after gathering users’ personal information either overtly or covertly, information systems can provide tailored content to specific individuals, 30 which greatly increases its personal relevance to the user.31,32 Although messages may be personalized in various ways, the common conceptual ground in operationalizing personalization is the “match” between the message and the user's characteristics, needs and interests.33,34 Previous studies have provided evidence of personalization's influence in various contexts. In marketing research, personalization has been found to lead to more positive attitudes and purchase intentions. 35 In the human–computer interaction (HCI) area, scholars have demonstrated that personalized content can produce favorable perceptions and a greater intention to revisit the interface. 32 Overall, past studies have found that a personalized message can elicit more favorable outcomes than a non-personalized message, such as being more enjoyable, more memorable and more persuasive. 36

In the health domain, personalization can also exert positive effects on individuals’ judgments and behavioral intentions. Patients perceive personalized messages to be of higher quality, which further increases their intention to follow treatment procedures, 37 thereby enhancing the effects of health promotion interventions. 38 Within the context of health chatbots, a few studies have identified personalization as an important feature for improving user satisfaction, engagement and dialogue quality.28,39,40 However, very few studies have used experiments to directly examine the effects of personalized chatbot messages on users’ health-related outcomes. Meanwhile, some extant research lacks a theoretical framework underpinning the personalization of health chatbots. 41

Source expertise refers to the extent to which individuals perceive a source to have sufficient competence and knowledge to provide convincing arguments. 42 When communicators display expertise cues, such as claiming professional competence, recipients’ perceived credibility of the source increases accordingly, 43 which in turn influences their decision-making and behavioral intentions.44,45 In mobile services and technological systems, expertise cues are often included in the interface to improve users’ evaluations.43,46 In health contexts, source expertise has been found to be closely related to information judgments, as users care greatly about the source from which they receive health-related messages. 47 Health information from an authoritative and professional source is perceived as more credible and persuasive than information from an ordinary sender.48,49 In the web-based environment, source expertise can significantly increase the perceived quality of health information, subsequently eliciting more trust from users. 50 However, few studies have explored source expertise in the health chatbot context. Moreover, previous studies have focused largely on the influence of source expertise on perceptions and judgments of the messages per se (e.g. perceived quality and credibility), leaving individuals’ health beliefs relatively neglected.

Although previous research provides insights into the effects of each cue separately, to the best of our knowledge, no prior research has investigated people's perceptions under the simultaneous influence of both cues. In addition, how people's health beliefs may change when a chatbot presents personalization and source expertise remains underexplored. Considering that the two cues may co-occur in the messages of a health chatbot, the HSM can provide a solid framework for understanding the intertwined influences of different message cues from an information-processing perspective.

The heuristic–systematic processing of health information

An AI-powered health chatbot can interact with users automatically to provide medical advice based on their symptoms. Users, in turn, must evaluate the suggestions the chatbot provides. The HSM offers a perspective that may explain how users process and receive health advice from a chatbot. The HSM is a dual-process model that posits two distinct processing approaches when individuals assess information. 51 Heuristic processing is a faster, more intuitive route that requires comparatively little cognitive effort. 16 In contrast, systematic processing is a slower, more analytical route that requires deep thinking and reasoning. 52 In healthcare, systematic and heuristic processing have been shown to jointly influence individuals’ medical decision-making. 15 Given that users might judge the value of different cues at the same time when using a health chatbot, we expect that including both processing paths will help us better understand users’ decisions and intentions regarding this technology.

When issues are highly relevant to individuals, 51 people are more likely to engage in systematic information processing, which can be triggered by cues such as personalization. In mHealth environments, personalized recommendations that meet users’ precise needs have been shown to positively influence their usage intentions.7,28 Since health diagnoses rely on personalized information, this study proposes that users process health information systematically when they are exposed to a personalization cue. Source expertise, on the other hand, is considered a typical heuristic cue. 51 Source expertise is particularly important for health-related issues, as it is widely recognized as a fundamental element of medical professionalism, which underpins public trust in the knowledge and skills of healthcare practitioners. 53 Hence, this research also explores how source expertise, as a heuristic cue, influences users’ health beliefs and usage intention.

The HSM specifies that the two types of information processing can not only co-exist but also interact. 17 In particular, the bias hypothesis of the HSM emphasizes the influence of heuristic processing on systematic processing. Chaiken et al. 54 propose that a message may first be evaluated on the basis of heuristic cues; if the message is considered significant, the recipient may then evaluate its content systematically. Systematic processing is therefore not entirely objective and can be biased by expectations formed during the initial heuristic evaluation. As heuristic processing is the default strategy in human information processing, 16 our research assumes that the heuristic processing triggered by source expertise may play a crucial role when users perceive personalized messages from a health chatbot, which in turn influences their usage intention.

The interaction of the two information processing paths also helps explain subsequent changes in people's attitudes. Previous studies have adopted usage intention as a key indicator of people's attitudes toward technology and have treated it as a downstream outcome of various manipulations. 55 In this study, usage intention toward health chatbots refers to the intention to use online conversational agents in healthcare. As a judgmental decision, usage intention is determined by both systematic and heuristic factors. 56 Some previous health-related studies have shown that personalization, as a systematic cue, has a significant positive influence on usage intention.38,57 Nonetheless, users may not rely on a single cue for decision-making. As discussed above, the source expertise cue is especially important in health-related settings. 6 In other words, the positive effect of personalization cues on usage intention may be stronger when source expertise is present, since users may experience a more direct and stronger sense of trust. Thus, we propose:

H1: Personalization (vs. non-personalization) will lead to greater usage intention when the health chatbot provides source expertise information compared to when it does not.
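H1 is an interaction hypothesis: the effect of personalization should be larger when the source expertise cue is present. The Python sketch below makes that comparison explicit from the four cell means of the 2 × 2 design. The class and function names are our own illustration, and the numbers in the usage lines are placeholders, not data from this study. H2 to H4 have the same form, with perceived benefits, self-efficacy or privacy concerns as the outcome (for H4, the predicted contrast is negative).

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class CellMeans:
    """Mean outcome (e.g. usage intention) in each cell of the 2 x 2 design."""
    pers_expert: float        # personalization x source expertise
    pers_no_expert: float     # personalization x no source expertise
    nonpers_expert: float     # non-personalization x source expertise
    nonpers_no_expert: float  # non-personalization x no source expertise


def simple_effects(m: CellMeans) -> dict[str, float]:
    """Effect of personalization at each level of the source expertise factor."""
    return {
        "with_expertise": m.pers_expert - m.nonpers_expert,
        "without_expertise": m.pers_no_expert - m.nonpers_no_expert,
    }


def interaction_contrast(m: CellMeans) -> float:
    """Difference between the two simple effects; H1 predicts a positive value."""
    effects = simple_effects(m)
    return effects["with_expertise"] - effects["without_expertise"]


# Placeholder means for illustration only (not data from this study).
example = CellMeans(pers_expert=5.5, pers_no_expert=4.0,
                    nonpers_expert=4.5, nonpers_no_expert=4.0)
print(simple_effects(example))        # {'with_expertise': 1.0, 'without_expertise': 0.0}
print(interaction_contrast(example))  # 1.0
```

The sketch only computes point estimates; whether such a contrast differs reliably from zero would be tested through the interaction term of a two-way ANOVA or regression.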

Health belief model

In addition to usage intention, the form of individual information processing also affects people's beliefs and perceptions. 58 In health decision-making, the HBM is widely applied to investigate people's health-related behaviors. The HBM was initially developed to explain and predict people's behavioral reactions to illness and provided an influential paradigm for improving people's health. 59 As practical health interventions evolved, the HBM developed into a comprehensive theoretical framework with six constructs to explain individuals’ general health-related behaviors: perceived susceptibility, perceived severity, perceived benefits, perceived barriers, cues to action and self-efficacy. 60 The HBM has also been used to explain health behaviors in the context of online information acquisition; in recent years, in particular, it has been applied to investigate the adoption of health-related applications during COVID-19 and people's online health information seeking.18,61 The HBM suits health chatbot scenarios, as users’ actions in this context involve both information processing and subsequent health-related behaviors. 62 Thus, we expect the HBM to have considerable explanatory power in our study.

Previous studies have often selected certain HBM constructs based on the characteristics of their research contexts.14,63 In this study, perceived benefits and privacy concerns were included to explore people's health-related Internet engagement. Notably, most health chatbots inevitably collect basic and even sensitive patient information, such as allergies, medication history and contact details, to improve efficiency and personalization. However, users are often not well informed about how chatbots protect their privacy and data security, which may raise privacy concerns. Such worries about data being stolen, mishandled or improperly sold to third parties may further impede users’ subsequent behavioral intentions. 64 Past studies have conceptualized privacy concerns as a perceived barrier in healthcare contexts such as using smart healthcare services for psychiatric disorders 65 and adopting a COVID-19 tracing app. 18 Consistent with current practice and previous studies, we treat privacy concerns as the perceived barrier within the HBM framework in the health chatbot context. In addition, as self-efficacy has been considered a significant factor closely linked to the two processing paths,66,67 it was selected from the HBM to capture an individual's confidence in his or her ability to take action. 68

Utilizing effective heuristic or systematic cues may help users make health-related decisions. The form of individual information processing has been shown to affect people's beliefs and perceptions. 58 When personalization cues trigger systematic processing, users may focus mainly on the content of the information relevant to them and may thus perceive the diagnostic information as beneficial or helpful. Meanwhile, since source expertise contributes to perceived information quality 50 by enhancing users’ perceived credibility, the relationship between personalization and perceived benefits may be strengthened by source expertise. Hence, we propose:

H2: Personalization (vs. non-personalization) will lead to greater perceived benefits when the health chatbot provides source expertise information compared to when it does not.

In this study, self-efficacy is defined as an individual's belief in his or her capability to gain information about, diagnose and cope with diseases by using the health chatbot. Previous research has shown that self-efficacy is associated with dual-mode information processing. 66 For instance, Gaston et al. 69 showed that the health self-efficacy of pregnant women can be effectively improved through central-route information processing. A past study of chatbots for childhood vaccination also showed that users who regarded information from chatbots as useful had higher vaccination self-efficacy. 70 Given the features of personalized messages and professional sources, personalization and source expertise may help enhance individuals’ confidence in coping with diseases. Thus, we propose:

H3: Personalization (vs. non-personalization) will lead to greater self-efficacy when the health chatbot provides source expertise information compared to when it does not.

As for privacy concerns, Awad and Krishnan 8 proposed the personalization-privacy paradox, which argues that there is an ongoing tension between a company's need to personalize the consumer experience and consumers’ concerns over privacy. On the one hand, the higher the level of personalization, the greater the likelihood of satisfying consumers’ needs. 71 On the other hand, tailored content may lead users to perceive that the company has manipulative purposes, thereby giving rise to privacy concerns. 72 Since source expertise can enhance perceived trust, 50 which helps to decrease privacy concerns, 73 source expertise may moderate the effect of personalization on privacy concerns. Therefore, we propose:

H4: Personalization (vs. non-personalization) will elicit fewer privacy concerns when the health chatbot provides source expertise information compared to when it does not.

HBM constructs have been applied to predict health-related Internet usage.74,75 Among them, perceived benefit is an important antecedent of usage intention toward chatbots. 27 Moreover, self-efficacy, which is one's confidence in gaining positive outcomes through computer-mediated actions, has been found to positively affect usage intention in previous research. 76 As for the relationship between perceived barriers and usage intention, Dhagarra et al. 64 showed that privacy concerns weaken usage intention in the setting of health-related information technology. Combined with the expected effects of personalization on perceived benefits, self-efficacy and privacy concerns noted above, we hypothesize that the HBM constructs may mediate the relationship between personalization and usage intention. Hence, we propose:

H5: (a) Perceived benefits, (b) self-efficacy and (c) privacy concerns mediate the effects of personalization on usage intention.
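Read together, H2 to H5 describe a first-stage moderated mediation. As a sketch under our own assumptions (linear regression models, personalization X and source expertise W dummy-coded 0/1, usage intention Y, and M standing for one mediator at a time: perceived benefits, self-efficacy or privacy concerns), it could be written as follows; the notation is ours, not the study's.

```latex
\begin{aligned}
M &= a_0 + a_1 X + a_2 W + a_3 XW + e_M, \\
Y &= c_0 + c' X + b\,M + e_Y, \\
\theta(W) &= (a_1 + a_3 W)\,b .
\end{aligned}
```

Here a_3 is the personalization × source expertise interaction addressed by H2 to H4, b is the mediator-to-usage-intention path in H5, and θ(0) and θ(1) are the conditional indirect effects of personalization on usage intention without and with the source expertise cue.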

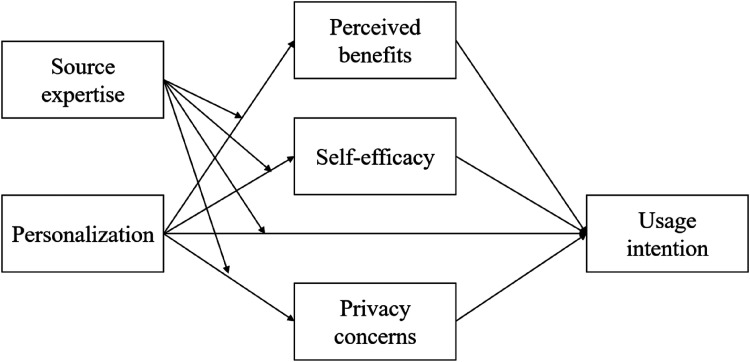

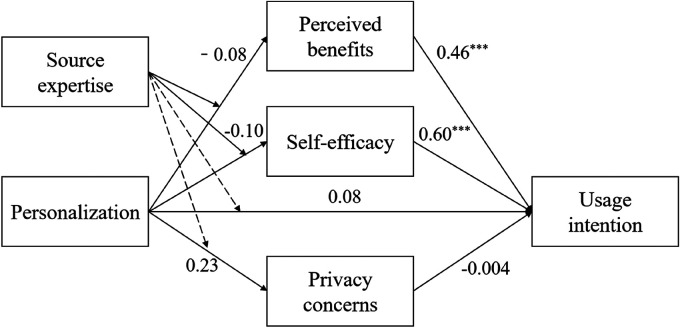

To summarize, the research model is shown in Figure 1.

Figure 1.

The research model in this study.

Method

Experiment design

This study examines how source expertise and personalization jointly affect HBM-related factors and usage intention. A 2 (personalization vs. non-personalization) × 2 (source expertise vs. non-source expertise) between-subjects experiment was designed, and all online participants were randomly assigned to one of the four conditions. Participants first interacted with a dummy chatbot designed by the chatbot solution provider Sanuker (https://sanuker.com/), a fully functional chatbot design platform that enables developers to extensively customize conversations, deployment channels and user interfaces. Its chatbot builder, Stella, provides a code-free unified interface and methods for embedding chatbots in a variety of instant messaging or research platforms. An online research company, Acadeta (Jishuyun), assisted with the randomized experiment design.
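The text does not describe how the platform randomized participants. The sketch below assumes simple randomization, in which each participant independently receives one of the four cells with equal probability; the function name and participant IDs are hypothetical. Block randomization would be the alternative if equal cell sizes were required.

```python
import random
from collections import Counter
from itertools import product

# The four cells of the 2 x 2 design: (personalization factor, source expertise factor).
CONDITIONS = list(product(("personalization", "non-personalization"),
                          ("source expertise", "non-source expertise")))


def assign(participant_ids, seed=None):
    """Independently draw one of the four conditions for each participant ID."""
    rng = random.Random(seed)  # a seeded generator makes the assignment reproducible
    return {pid: rng.choice(CONDITIONS) for pid in participant_ids}


# Illustrative run with 100 hypothetical participant IDs.
assignment = assign([f"P{i:03d}" for i in range(1, 101)], seed=2021)
print(Counter(assignment.values()))  # cell sizes vary around 25 under simple randomization
```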

Stimulus material

Modeled on major health chatbots in China (e.g. the Left-hand Doctor and Ping An Good Doctor), we designed a chatbot for this study that took both our experimental purposes and real health chatbot products into account. Participants could talk directly with this health consulting assistant, called “Xiaokang.” Deployed as a standalone web chat, “Xiaokang” was hosted on an independent website that did not require participants to register an account. To provide an immersive interaction, the “Xiaokang” interface included a green chatbot logo, a dialogue frame and chat bubbles, similar to existing commercial products. By assigning a distinct identifier to each participant, “Xiaokang” could identify, store, label and read users’ messages in an automatically generated database, so that personalization could be implemented by inserting the user's information into specific replies. To exclude confounding factors, we adopted a linear, tree-structured conversation flow to ensure a fixed conversation sequence in “Xiaokang,” and an error-handling mechanism was configured for input validation. The “Xiaokang” web chat frame was then embedded into the online survey using HTML fragments. Participants were directed to the survey interface only after interacting with “Xiaokang” according to the instructions provided before the experiment. Before the experiment started, the developers also tested “Xiaokang” and debugged it using the backend logs to ensure fluent and accurate dialogue.
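The fixed-sequence flow, per-participant storage and input validation described above can be sketched as follows. This is a hypothetical illustration, not Stella's API: the node slots, prompts and answer options are our own, and an in-memory dictionary stands in for the automatically generated database keyed by participant identifier.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass(frozen=True)
class Node:
    slot: str                      # key under which the answer is stored
    prompt: str                    # the chatbot's question
    options: tuple[str, ...] = ()  # allowed answers; empty tuple = free text


# Hypothetical linear flow: every participant sees the nodes in the same order.
FLOW = (
    Node("gender", "What is your gender?", ("Male", "Female")),
    Node("age", "How old are you?"),
    Node("sedentary", "Do you have a sedentary lifestyle?", ("Yes", "No")),
    Node("spicy_food", "Do you like to eat spicy food?", ("Yes", "No")),
    Node("anorectal_history", "Do you have a history of anorectal problems?", ("Yes", "No")),
)
DONE = "Thank you. Your answers have been recorded."


@dataclass
class Session:
    participant_id: str
    step: int = 0
    answers: dict[str, str] = field(default_factory=dict)

    def prompt(self) -> str:
        return FLOW[self.step].prompt if self.step < len(FLOW) else DONE

    def reply(self, text: str) -> str:
        """Validate one user turn, store it, and return the bot's next message."""
        if self.step >= len(FLOW):
            return DONE
        node = FLOW[self.step]
        if node.options and text not in node.options:
            # Error handling: stay on the same node and re-ask.
            return f"Please choose one of: {', '.join(node.options)}. {node.prompt}"
        self.answers[node.slot] = text
        self.step += 1
        return self.prompt()


# In-memory stand-in for the per-participant database, keyed by identifier.
SESSIONS: dict[str, Session] = {}


def get_session(participant_id: str) -> Session:
    if participant_id not in SESSIONS:
        SESSIONS[participant_id] = Session(participant_id)
    return SESSIONS[participant_id]
```

Because every node has exactly one successor, the "tree" degenerates to a single path, which is what guarantees that all participants see the same conversation sequence.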

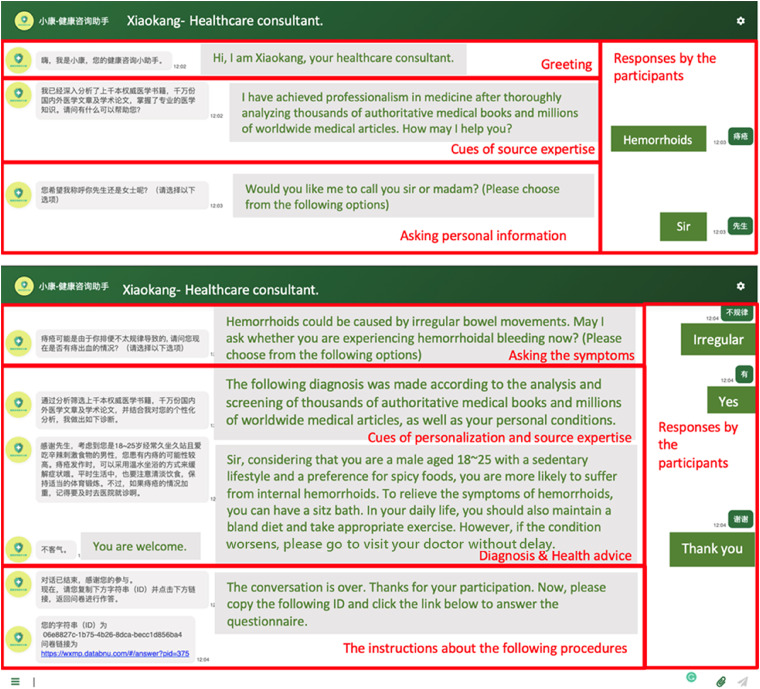

According to Sina News, 77 the incidence of hemorrhoids in China is about 51.1%. As hemorrhoids are generally regarded as one of the most common anorectal disorders, we chose them as the subject of the conversation. In addition, consistent with prior research,12,78 participants were asked to strictly follow standardized, preset instructions by entering the keywords from the chat scripts. The experimental path was also enforced with keyword recognition, whereby the chatbot matched the corresponding replies to participants’ input. Examples of the chatbot interface in the personalization × source expertise condition are provided in Figure 2.
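Keyword recognition of the kind described above can be sketched as ordered keyword rules with a fallback. The sketch assumes case-insensitive substring matching in which the first matching rule wins; the keywords and reply texts are hypothetical and are not the study's actual scripts.

```python
# Ordered (keyword, reply) rules; the first keyword found in the input wins.
# Both keywords and replies are hypothetical examples.
RULES = [
    ("hemorrhoids", "I see. To give you suitable advice, I will ask a few questions. "
                    "What is your gender?"),
    ("thank you", "You're welcome. Please click the link to continue to the survey."),
]

FALLBACK = "Sorry, I didn't understand. Please follow the instructions and try again."


def match_reply(user_input: str) -> str:
    """Return the reply for the first rule whose keyword occurs in the input."""
    text = user_input.casefold()  # case-insensitive comparison
    for keyword, reply in RULES:
        if keyword in text:
            return reply
    return FALLBACK


print(match_reply("I have hemorrhoids."))
```

Routing unmatched input to a fallback prompt, rather than to a free-form answer, keeps every participant on the same scripted path.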

Figure 2.

Chatbot interface examples.

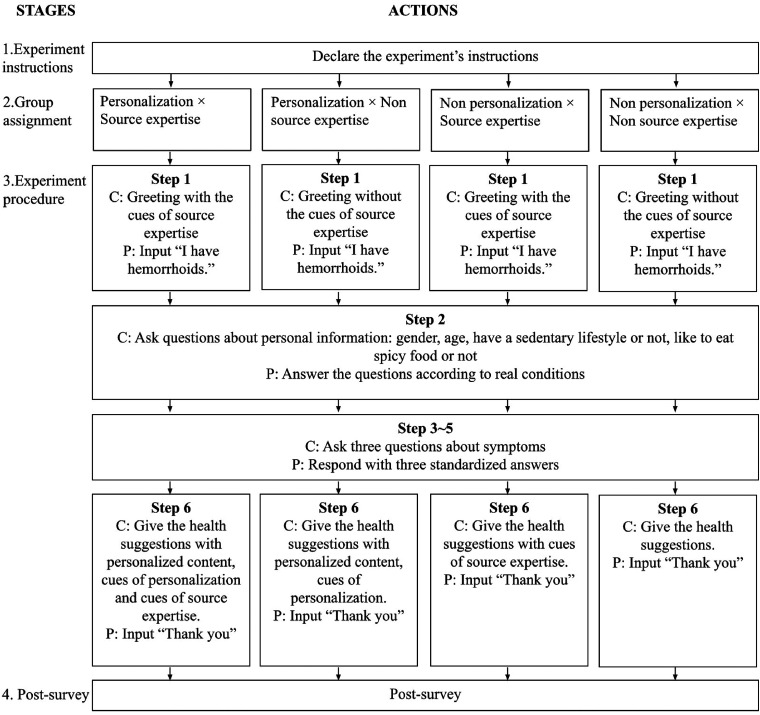

Procedure

Participants were first asked to read standardized instructions for the conversation. They were told to assume that they had hemorrhoid-related symptoms and were seeking health suggestions from a health chatbot. Participants were asked to follow the steps below strictly and in order; except for Step 2, in which they entered their real information, every step had to be answered exactly as instructed. The experimental steps were as follows.

Step 1: Tell the chatbot “I have hemorrhoids.”

Step 2: Answer the chatbot's questions about personal information: gender, age, whether they have a sedentary lifestyle and whether they like to eat spicy food. This step requires participants to input their authentic personal information.

Step 3: When the chatbot asks “Do you have a history of anorectal problems?”, select the option “No.”

Step 4: When the chatbot asks “Do you have regular bowel movements?”, select the option “Not very regular.”

Step 5: When the chatbot asks “Are you experiencing hemorrhoidal bleeding?”, select the option “Yes, a little bleeding.”

Step 6: After reading the health suggestion given by the chatbot, input “Thank you” to end the dialogue.

After reading the instructions and the experimental steps, participants clicked a link and were randomly assigned to one of four conditions in which the chatbot gave suggestions with personalization × source expertise, personalization only, source expertise only, or non-personalization × non-source expertise. At the end of the conversation, the chatbot offered a link to a post-experiment survey. The flowchart of the experiment is displayed in Figure 3.

Figure 3.

Flowchart of the experiment.

In stage 3, C: chatbot; P: participants.

Manipulation materials

Stimuli were manipulated through explicit textual cues without additional visual cues. Based on actual health chatbots in China and a previous study, 64 we manipulated source expertise by emphasizing the authority and reliability of the source in the message content, and the source information was emphasized twice in the conversation flow. First, at the beginning of the conversation, the chatbot stated: “I have achieved professionalism in medicine after thoroughly analyzing thousands of authoritative medical books and millions of worldwide medical articles. How may I help you?” Second, before giving diagnostic suggestions, the chatbot stated: “The following diagnosis was made according to the analysis and screening of thousands of authoritative medical books and millions of worldwide medical articles…” In the non-source expertise condition, the sentences on source expertise were omitted.

Personalization refers to the practice whereby systems use information about a particular user (e.g. demographics and preferences) to offer that user personalized services. 79 Several approaches have been used to design online personalized content, such as using customers’ usage history for product recommendations 35 and customers’ personal information for advertising recommendations. 80 Considering that health suggestions need to be tailored to the user's physical condition and lifestyle, in the personalization condition we embedded several items of personal information in the final diagnostic suggestions: gender, age, an eating habit and a lifestyle habit. For instance, in the personalization scenario, the chatbot replied: “Sir, considering that you are a male aged 18∼25 with a sedentary lifestyle and a preference for spicy foods…” In the non-personalization scenario, the user's personal information was not repeated or emphasized in the final health advice. All scripts for manipulating personalization and source expertise are displayed in Table 1.

Table 1.

Scripts for manipulating the personalization and source expertise.

| | Personalization × Source Expertise | Personalization × No Source Expertise | No Personalization × Source Expertise | No Personalization × No Source Expertise |
|---|---|---|---|---|
| Step 1 | Hi, I am Xiaokang, your healthcare consultant. I have achieved professionalism in medicine after thoroughly analyzing thousands of authoritative medical books and millions of worldwide medical articles… a | Hi, I am Xiaokang, your healthcare consultant. | Hi, I am Xiaokang, your healthcare consultant. I have achieved professionalism in medicine after thoroughly analyzing thousands of authoritative medical books and millions of worldwide medical articles… | Hi, I am Xiaokang, your healthcare consultant. |
| Step 2 | Asking about gender and other information b | Asking about gender and other information | Asking about gender and other information | Asking about gender and other information |
| Step 6 | The following diagnosis was made according to the analysis and screening of thousands of authoritative medical books and millions of worldwide medical articles as well as your personal conditions… | The following diagnosis was made according to your personal conditions… | The following diagnosis was made according to the analysis and screening of thousands of authoritative medical books and millions of worldwide medical articles | (NULL) |
| Step 6 | Sir/Madam, considering that you are a male/female aged … with a sedentary lifestyle/(NULL) and a preference for spicy food/(NULL)… | Sir/Madam, considering that you are a male/female aged … with a sedentary lifestyle/(NULL) and a preference for spicy food/(NULL)… | (NULL) | (NULL) |

Phrases that are underlined are the stimulus material for manipulating source expertise.

Phrases in italics are the stimulus material for manipulating personalization.

Pre-test

A pre-test was conducted to verify that the chatbot functioned properly and that the stimulus materials worked as intended. Through snowball sampling, we obtained 91 valid responses. Independent-samples t-tests showed that the manipulations were successful for both source expertise, t(89) = 2.189, p < 0.05, and personalization, t(89) = 2.510, p < 0.05. The script content and the questionnaire wording were then adjusted according to participants’ feedback from the pre-test.

Data collection

To determine the sample size, we conducted a power analysis using G*Power. 81 We estimated an effect size f of 0.23 based on previous experimental studies of personalization and source expertise. 34 The analysis indicated that 248 participants were needed to achieve 95% power at an alpha level of 5%.
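The required sample size can be approximated by hand. For an effect with one numerator degree of freedom (each main effect and the interaction in a 2 × 2 design), G*Power's noncentrality parameter is λ = f²·N, and the target power is reached roughly when √λ equals z(1 − α/2) + z(power). The following minimal sketch uses only the Python standard library and this normal approximation, which is our assumption for illustration; G*Power uses the exact noncentral F distribution, which is presumably why the reported figure of 248 is slightly larger.

```python
from math import ceil
from statistics import NormalDist


def approx_total_n(f: float, alpha: float = 0.05, power: float = 0.95) -> int:
    """Normal approximation to the total N for a 1-df F test with Cohen's f.

    Solves sqrt(f**2 * N) = z_(1 - alpha/2) + z_power for N and rounds up.
    """
    z = NormalDist()  # standard normal
    delta = z.inv_cdf(1 - alpha / 2) + z.inv_cdf(power)
    return ceil((delta / f) ** 2)


print(approx_total_n(0.23))  # 246
```

The approximation lands within a few participants of the G*Power result, which is a useful sanity check when planning a replication with a different effect size.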

Participants in the online experiment were recruited between April and May 2021 through two channels. First, we posted a recruitment advertisement describing the experiment's purpose and procedures on a WeChat official account named “marketing research group.” Second, we used a professional survey company, Acadeta (Jishuyun), to recruit participants. Each participant was paid 5 RMB. To prevent multiple submissions, the chatbot and questionnaire websites were configured to block participants with duplicate IP addresses from re-entering the interaction interface and the questionnaire. To ensure response quality, three attention check questions were included in the questionnaire. The first required participants to select the disease with which the dialogue started. The second asked participants to recall whether the chatbot mentioned any information source and, if so, what the source was. The third asked whether the chatbot repeated their personal characteristics at the end of the interaction.

Formal participants

A total of 452 individuals participated in this study. Before the formal analysis, 79 participants were excluded as invalid because they did not follow the experimental instructions for interacting with the chatbot, 92 were excluded for incorrect responses to the three attention checks, and 21 were removed because they completed the questionnaire far faster than a reasonable duration would allow. After data cleaning, 260 valid observations remained. Of these participants, 176 (67.7%) were female and 84 (32.3%) were male. The 18–25 age group was the largest (53.8%), followed by the 26–34 group (37.3%); 8.5% of participants were aged 35–49 and 0.4% were aged 50–64. Regarding education, 68.5% held a bachelor's degree (master's degree: 22.3%; college degree: 5.8%; high school diploma or below: 3.4%). In addition, 50.8% of participants had previously used a health chatbot.
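The participant flow can be checked with simple arithmetic, assuming (as the wording suggests) that the three exclusion criteria were applied sequentially so that no participant is counted twice. The cell sizes below are taken from Table 2; the dictionary keys are illustrative labels.

```python
# Participant flow and composition as reported in the text and Table 2.
recruited = 452
excluded = {
    "did not follow the interaction instructions": 79,
    "failed the attention checks": 92,
    "finished the questionnaire too quickly": 21,
}
valid = recruited - sum(excluded.values())

# Cell sizes from Table 2 (SE = source expertise, P = personalization).
cells = {"SE+P": 65, "SE+noP": 66, "noSE+P": 57, "noSE+noP": 72}


def pct(count: int, total: int) -> float:
    """Percentage rounded to one decimal place, as reported in the text."""
    return round(100 * count / total, 1)


print(valid, sum(cells.values()))  # 260 260
print(pct(176, valid), pct(84, valid))  # 67.7 32.3
print(cells["SE+P"] + cells["SE+noP"], cells["SE+P"] + cells["noSE+P"])  # 131 122
```

The last line recovers the group sizes used in the manipulation checks (N = 131 with source expertise and N = 122 with personalization).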

Measures

Variables for manipulation checks. To check the manipulation of the personalization cue, the perceived personalization toward a chatbot was measured using two items: “I believe that this chatbot's suggestion and diagnosis are personalized to meet my needs” and “I believe that this chatbot's suggestion and diagnosis meet my needs” 82 (Cronbach's α = 0.74). As for the manipulation check of the source expertise, the perceived source expertise in the context of the online chatbot was measured by using the following two items: “I believe this chatbot's suggestion and diagnosis are knowledgeable” and “I believe the suggestion and diagnosis of the chatbot reflect expertise”83,84 (Cronbach's α = 0.81). Responses for both manipulation check variables were scored on a 5-point Likert scale ranging from 1 (strongly disagree) to 5 (strongly agree).

Mediating variables. All items for the mediating variables were assessed on a 5-point Likert scale ranging from 1 (strongly disagree) to 5 (strongly agree). Perceived benefits was measured with five items. Three were adapted from Forslund 85 and Johnson and Grayson 83: “I can obtain better knowledge about curing hemorrhoids,” “This chatbot offers a credible analysis,” and “This chatbot's careful work will not complicate things.” Two were developed by the authors: “I can better know how to improve my hemorrhoid symptoms” and “It helps answer my questions about hemorrhoids” (Cronbach's α = 0.81).

Self-efficacy was measured via four items adapted from Carballo et al. 86 and Walrave et al. 18 The items were “I will be able to conduct the diagnosis myself by using this chatbot,” “I will adjust my habits based on the advice given by this chatbot,” “I fully understand how to use the chatbot,” and “By using this chatbot, I am confident that I can cure the hemorrhoids” (Cronbach's α = 0.71).

The privacy concern scale was adapted from Xu et al. 87 by using three items. Participants were asked to evaluate the degree of their agreement with the items: “It bothers me when this chatbot asks me for this much personal information,” “I worry that others could access my personal information from this chatbot,” and “I am concerned about providing personal information to this chatbot, because it could be used in a way I did not foresee” (Cronbach's α = 0.90).

Dependent variable. Usage intention was assessed by three items adapted from Pai and Huang 88 on a scale ranging from 1 (strongly disagree) to 5 (strongly agree). These items included “Given a chance, I intend to adopt this conversational service,” “I am willing to use this conversational service in the future if it is necessary,” and “I plan to use this conversational service in the future if it is necessary” (Cronbach's α = 0.90).
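Each Cronbach's α above follows the standard formula α = k/(k − 1) · (1 − Σσ²ᵢ / σ²ₜ), where k is the number of items, σ²ᵢ is the variance of item i and σ²ₜ is the variance of the summed scale. The following minimal sketch uses a small hypothetical response matrix, not the study's data; it uses sample variances, and the choice of denominator cancels in the ratio as long as it is applied consistently.

```python
from statistics import variance
from typing import List


def cronbach_alpha(responses: List[List[float]]) -> float:
    """Cronbach's alpha for a respondents x items matrix."""
    k = len(responses[0])  # number of items
    item_vars = [variance([row[j] for row in responses]) for j in range(k)]
    total_var = variance([sum(row) for row in responses])  # variance of scale sums
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


# Hypothetical 5-point Likert responses: 4 respondents x 3 items.
demo = [[4, 5, 4], [3, 3, 4], [2, 3, 2], [5, 4, 5]]
print(round(cronbach_alpha(demo), 3))  # 0.875
```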

Results

Manipulation check

Source expertise. An independent-samples t-test was used to compare the mean values of the source expertise group (N = 131) and the non-source expertise group (N = 129). Participants in the source expertise group (M = 3.985, SD = 0.720) perceived greater expertise than those in the non-source expertise group (M = 3.771, SD = 0.778), t(256) = 2.294, p < 0.05. Hence, source expertise was manipulated successfully.

Personalization. We also used an independent-samples t-test to compare the personalization group (N = 122) with the non-personalization group (N = 138). Respondents assigned to the personalization condition (M = 4.045, SD = 0.616) perceived the message to be more tailored than those assigned to the non-personalization condition (M = 3.833, SD = 0.781), t(258) = 2.404, p < 0.05. Therefore, personalization was manipulated successfully.
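The manipulation checks can be re-derived from the reported means, SDs and group sizes. The sketch below computes both Student's pooled-variance t and Welch's t; both are shown because the reported degrees of freedom differ between the two checks (256 vs. 258) and the text does not state which variant was used. Recomputing from the rounded summary statistics gives a pooled t of about 2.30 for source expertise and about 2.41 for personalization, close to the reported 2.294 and 2.404; the Welch-Satterthwaite df for the source expertise check is about 255.8.

```python
from math import sqrt


def pooled_t(m1, s1, n1, m2, s2, n2):
    """Student's independent-samples t with pooled variance; returns (t, df)."""
    df = n1 + n2 - 2
    sp2 = ((n1 - 1) * s1 ** 2 + (n2 - 1) * s2 ** 2) / df  # pooled variance
    return (m1 - m2) / sqrt(sp2 * (1 / n1 + 1 / n2)), df


def welch_t(m1, s1, n1, m2, s2, n2):
    """Welch's t and Welch-Satterthwaite df for unequal variances."""
    v1, v2 = s1 ** 2 / n1, s2 ** 2 / n2
    df = (v1 + v2) ** 2 / (v1 ** 2 / (n1 - 1) + v2 ** 2 / (n2 - 1))
    return (m1 - m2) / sqrt(v1 + v2), df


# (M, SD, N) of the treatment group followed by the comparison group.
expertise = (3.985, 0.720, 131, 3.771, 0.778, 129)
personalization = (4.045, 0.616, 122, 3.833, 0.781, 138)
print(pooled_t(*expertise), welch_t(*expertise))
print(pooled_t(*personalization), welch_t(*personalization))
```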

Hypothesis testing

Usage intention. For H1, the ANOVA revealed no significant main effects of personalization (F[1, 256] = 0.648, p = 0.422) or source expertise (F[1, 256] = 0.327, p = 0.568) on usage intention. The interaction between personalization and source expertise was also not significant (F[1, 256] = 1.067, p = 0.303). Descriptive statistics for the four conditions are shown in Table 2.

Table 2.

Means and standard deviations (in parentheses) for the variables based on conditions.

| Variable | Source expertise, personalization (n = 65) | Source expertise, no personalization (n = 66) | No source expertise, personalization (n = 57) | No source expertise, no personalization (n = 72) |
|---|---|---|---|---|
| Usage intention | 4.185 (0.799) | 4.000 (0.869) | 4.023 (0.791) | 4.046 (0.766) |
| Perceived benefits | 4.012 (0.633) | 3.709 (0.622) | 3.737 (0.620) | 3.814 (0.660) |
| Self-efficacy | 4.085 (0.648) | 3.814 (0.621) | 3.798 (0.622) | 3.903 (0.617) |
| Privacy concerns | 3.318 (1.231) | 3.551 (1.186) | 3.643 (1.263) | 3.412 (1.157) |

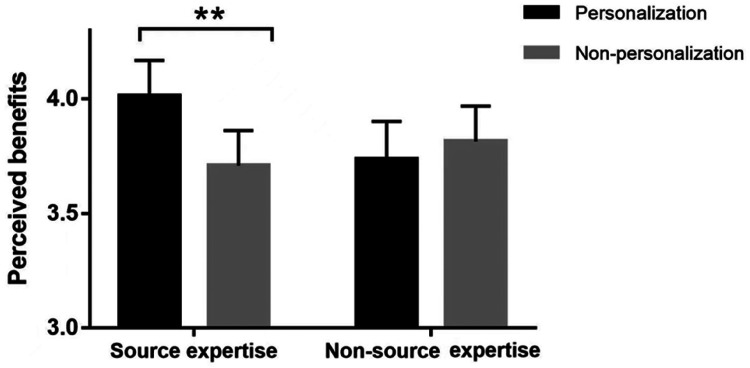

Perceived benefits. For H2, the ANOVA showed that neither the main effect of personalization (F[1, 256] = 2.047, p = 0.154) nor that of source expertise (F[1, 256] = 1.165, p = 0.281) on perceived benefits was significant. However, the interaction between personalization and source expertise was significant (F[1, 256] = 5.786, p = 0.017). Simple comparisons showed that in the source expertise group, participants exposed to personalized information reported higher perceived benefits than those who were not (F[1, 256] = 7.466, p = 0.007). Personalization did not significantly influence perceived benefits when source expertise was absent (F[1, 256] = 0.468, p = 0.494) (see Figure 4 and Table 2).

Figure 4.

Means for perceived benefits according to conditions.

**p < 0.01.

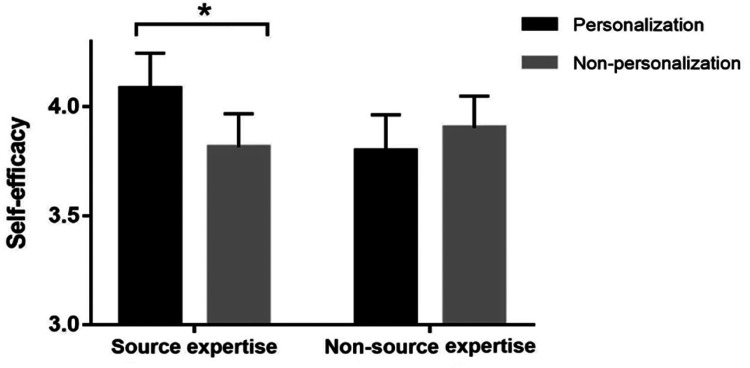

Self-efficacy. For H3, the ANOVA revealed no significant main effects of personalization (F[1, 256] = 1.126, p = 0.290) or source expertise (F[1, 256] = 1.608, p = 0.206) on self-efficacy. Nevertheless, the personalization × source expertise interaction had a significant effect on participants’ self-efficacy (F[1, 256] = 5.762, p = 0.017). Simple comparisons revealed that, in the source expertise group, participants who received personalized information reported higher self-efficacy than those who did not (F[1, 256] = 6.079, p = 0.014). Personalization did not significantly affect self-efficacy in the non-source expertise group (F[1, 256] = 0.884, p = 0.348) (see Figure 5 and Table 2).

Figure 5.

Means for self-efficacy according to conditions.

*p < 0.05.

Privacy concerns. For H4, the ANOVA showed that neither the main effect of personalization (F[1, 256] = 0.000, p = 0.997) nor that of source expertise (F[1, 256] = 0.387, p = 0.534) was significant. Moreover, there was no significant interaction between personalization and source expertise on privacy concerns (F[1, 256] = 2.384, p = 0.124) (see Table 2).
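The interaction and simple-comparison F tests for H2 can be approximately recovered from the cell statistics in Table 2. In a 2 × 2 design each of these tests is a single-df contrast on the cell means, F = (Σcᵢ·Mᵢ)² / (MSE · Σcᵢ²/nᵢ), where the MSE pools the within-cell variances. The sketch below assumes that the simple comparisons use the full model's error term (as the reported F[1, 256] suggests); the helper names are hypothetical.

```python
from math import fsum


def pooled_mse(sds, ns):
    """Within-cell error variance of the full 2 x 2 model, df = N - 4."""
    ss = fsum((n - 1) * s ** 2 for s, n in zip(sds, ns))
    return ss / (sum(ns) - len(ns))


def contrast_f(weights, means, ns, mse):
    """F(1, N - 4) for the contrast sum(c_i * mean_i), using the model MSE."""
    est = fsum(c * m for c, m in zip(weights, means))
    return est ** 2 / (mse * fsum(c ** 2 / n for c, n in zip(weights, ns)))


# Cell order: [SE & P, SE & no P, no SE & P, no SE & no P], from Table 2.
ns = [65, 66, 57, 72]
benefit_means = [4.012, 3.709, 3.737, 3.814]
benefit_sds = [0.633, 0.622, 0.620, 0.660]
mse = pooled_mse(benefit_sds, ns)

interaction = contrast_f([1, -1, -1, 1], benefit_means, ns, mse)
simple_se = contrast_f([1, -1, 0, 0], benefit_means, ns, mse)
simple_no_se = contrast_f([0, 0, 1, -1], benefit_means, ns, mse)
print(round(interaction, 2), round(simple_se, 2), round(simple_no_se, 2))  # 5.78 7.45 0.47
```

These values match the reported 5.786, 7.466 and 0.468 up to the rounding of the means and SDs in Table 2; passing the self-efficacy cells instead gives values close to the reported H3 statistics.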

Mediation analysis

To test H5, we conducted a moderated mediation analysis using Model 8 of the PROCESS macro for SPSS. 89

Figure 6 and Table 3 present the conditional direct and indirect effects contingent on source expertise. Perceived benefits and self-efficacy mediated the relationship between personalization and usage intention in the source expertise condition. Specifically, the indirect effect of personalization on usage intention via perceived benefits was significant when participants were exposed to source expertise (indirect effect = 0.138, 95% BootCI [0.038, 0.263]) but not when source expertise was absent (indirect effect = −0.035, 95% BootCI [−0.144, 0.069]). Likewise, the indirect effect via self-efficacy was significant when source expertise was shown (indirect effect = 0.161, 95% BootCI [0.029, 0.313]) and not significant when it was not shown (indirect effect = −0.062, 95% BootCI [−0.205, 0.068]). However, privacy concerns did not mediate the relationship between personalization and usage intention in either source expertise condition. The direct effect of personalization on usage intention was not significant in either condition, suggesting that perceived benefits and self-efficacy fully mediated the influence of personalization on usage intention when source expertise was shown.

Figure 6.

Statistical significance of paths in a moderated mediation model.

Table 3.

Results of conditional effects for moderated mediation (n = 260).

Conditional direct effect of personalization on usage intention

| Source expertise | Effect | SE | t | p |
|---|---|---|---|---|
| 0ᵃ | 0.076 | 0.092 | 0.825 | 0.410 |
| 1 | −0.116 | 0.091 | −1.265 | 0.207 |

Conditional indirect effects of personalization on usage intention

| Mediator | Source expertise | Effect | SE | LLCIᵇ | ULCI |
|---|---|---|---|---|---|
| Perceived benefits | 0 | −0.035 | 0.053 | −0.144 | 0.069 |
| Perceived benefits | 1 | 0.138 | 0.057 | 0.038 | 0.263 |
| Self-efficacy | 0 | −0.062 | 0.068 | −0.205 | 0.068 |
| Self-efficacy | 1 | 0.161 | 0.072 | 0.029 | 0.313 |
| Privacy concerns | 0 | −0.001 | 0.009 | −0.022 | 0.018 |
| Privacy concerns | 1 | 0.001 | 0.009 | −0.019 | 0.021 |

ᵃ 0: non-source expertise condition; 1: source expertise condition.

ᵇ LL: lower limit; CI: confidence interval; UL: upper limit. Bootstrapped with 5000 resamples.
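Model 8 lets source expertise (W) moderate both the path from personalization (X) to a mediator (M) and the direct path to usage intention (Y). The conditional indirect effect is θ(w) = (a₁ + a₃w)·b, and its difference across the two conditions, a₃·b, is the index of moderated mediation (for perceived benefits in Table 3, 0.138 − (−0.035) = 0.173). The sketch below is a simplified, single-mediator re-implementation of that logic in plain Python with a percentile bootstrap. It is not the PROCESS macro itself (the study's analysis involved three mediators and 5000 resamples), and the demo data are hypothetical, constructed so that the true effects are known: θ(0) = 0.1 and θ(1) = 0.25.

```python
import random
from typing import Dict, List, Sequence, Tuple

Row = Tuple[int, int, float, float]  # (x, w, m, y): personalization, expertise, mediator, outcome


def solve(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve a @ beta = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(a)
    aug = [list(row) + [bi] for row, bi in zip(a, b)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[piv] = aug[piv], aug[col]
        for r in range(n):
            if r != col:
                factor = aug[r][col] / aug[col][col]
                for c in range(col, n + 1):
                    aug[r][c] -= factor * aug[col][c]
    return [aug[i][n] / aug[i][i] for i in range(n)]


def ols(design: List[List[float]], y: Sequence[float]) -> List[float]:
    """OLS coefficients via the normal equations (X'X) beta = X'y."""
    k = len(design[0])
    xtx = [[sum(r[i] * r[j] for r in design) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * yi for r, yi in zip(design, y)) for i in range(k)]
    return solve(xtx, xty)


def conditional_indirect(data: Sequence[Row]) -> Dict[int, float]:
    """theta(w) = (a1 + a3 * w) * b for w in {0, 1}."""
    # Mediator model: M = a0 + a1*X + a2*W + a3*X*W
    a = ols([[1, x, w, x * w] for x, w, _, _ in data], [row[2] for row in data])
    # Outcome model (Model 8 form): Y = c0 + c1*X + c2*W + c3*X*W + b*M
    coef = ols([[1, x, w, x * w, m] for x, w, m, _ in data], [row[3] for row in data])
    b = coef[4]
    return {w: (a[1] + a[3] * w) * b for w in (0, 1)}


def percentile_ci(data: Sequence[Row], w: int, n_boot: int = 1000,
                  level: float = 0.95, seed: int = 2021) -> Tuple[float, float]:
    """Percentile bootstrap CI for theta(w), resampling participants with replacement."""
    rng = random.Random(seed)
    draws = sorted(
        conditional_indirect([rng.choice(data) for _ in data])[w]
        for _ in range(n_boot)
    )
    lo = draws[int(round((1 - level) / 2 * n_boot))]
    hi = draws[int(round((1 + level) / 2 * n_boot)) - 1]
    return lo, hi


def demo_data(per_cell: int = 20) -> List[Row]:
    """Hypothetical noise-free data: a1 = 0.2, a3 = 0.3, b = 0.5 by construction."""
    rows = []
    for x in (0, 1):
        for w in (0, 1):
            for i in range(per_cell):
                e = 0.5 if i % 2 else -0.5  # averages to zero within every cell
                m = 3 + 0.2 * x + 0.1 * w + 0.3 * x * w + e
                rows.append((x, w, m, 1 + 0.5 * m))
    return rows


if __name__ == "__main__":
    sample = demo_data()
    print(conditional_indirect(sample))  # approximately {0: 0.1, 1: 0.25}
    print(percentile_ci(sample, w=1, n_boot=500))
```

As in Table 3, an indirect effect is judged significant when its bootstrap interval excludes zero; the normal-equation solver is adequate for five well-conditioned coefficients but is not meant as a general-purpose regression routine.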

Discussion and implications

This study investigated how the personalization and source expertise of the information from a health chatbot influence individuals’ health-related outcomes, privacy concerns and usage intention in relation to the health chatbot. The findings of this research merit a detailed discussion.

We found that personalization increased perceived benefits and self-efficacy only when the health chatbot provided the source expertise cue. Previous research has shown that personalization can increase perceived benefits90,91 and self-efficacy 92 in various contexts. However, our study found that when users interact with a health chatbot, this effect depends on a precondition: source expertise. One possible explanation is that users do not yet fully trust the information provided by health chatbots. For individuals seeking health-related information on the Internet, assessing the credibility and trustworthiness of sources is highly important 93 because health information and suggestions may have significant effects on individuals. 94 As an Internet-based health service, the health chatbot is still at a nascent stage, and many users are uncertain about its trustworthiness and quality. 4 Concern about incorrect diagnoses is a salient issue for health chatbots. 95 When users doubt the quality and authenticity of the chatbot's messages, the information may have minimal effects on their health beliefs. Source expertise, however, can help users form perceptions of credibility and trustworthiness, 96 which helps them form favorable evaluations of the information 17 and reduces their uncertainty about the message. Only when individuals believe a message is credible and trustworthy will the information further influence their health beliefs.

This finding contributes to the previous literature on the effects of both personalization and source expertise. Findings on the influence of personalization have been contradictory. 80 On the one hand, scholars have provided evidence of the favorable effects of personalization28,97; on the other hand, a few studies found that personalized messages may not lead to significant changes.98,99 Our result offers a potential explanation for these inconsistent findings. Previous studies 98 often examined personalization as the sole research focus. By demonstrating that source expertise moderates the effects of personalization, this study shows that in some cases the effects of personalization are contingent on other important message cues. Future studies should therefore consider the boundary conditions relevant to their research context when exploring the influences of personalization.

Regarding source expertise, we found no main effects of the expertise cue in our experiment, unlike previous studies.46,50 This may be because the dependent variables in the current study are users’ health beliefs rather than the perceptions of message quality often examined in previous studies. The interaction effect in our findings sheds light on how source expertise contributes to health-related outcomes. Specifically, in the health chatbot context, although source expertise per se did not alter users’ health beliefs, users seemed to process personalized information and change their beliefs based partly on their perceptions of the source's expertise. Such a crossover effect indicates that source expertise serves as a necessary anchor that users rely on to accept persuasive health messages.43,50 As one of the first studies to explore source expertise in health chatbots, our research contributes to the stream of research on its influence in the health domain.

From the theoretical perspective of information processing, our study provides evidence of an interaction between a systematic cue and a heuristic cue, which deepens our understanding of the HSM. In our research, message personalization is a systematic cue, whereas source expertise is a heuristic cue. According to the “bias hypothesis,” heuristic processing can influence systematic processing by creating expectancies about the message, especially when individuals are highly motivated to process information and the message itself is not sufficiently clear or convincing. 54 As discussed above, users have specific motivations when using a health chatbot, and the uncertainty surrounding health information from Internet services makes them less assured of the credibility of the health messages the chatbot provides. Thus, as the bias hypothesis proposes, an interdependent interaction emerged whereby heuristic processing of source expertise shaped the effect of systematic processing of personalization on people's attitudes and beliefs. This finding adds to the discussion about the role that heuristic cues play in individuals’ information processing. Previous research has shown that in biased processing, the systematic cue is not always the determining factor. 17 In fact, according to the least effort principle, heuristic cues are particularly important because individuals tend to rely on them for a convenient and quick assessment of information. 16 Our study provides evidence that a heuristic cue may be a necessary condition for the effect of a systematic cue, which supports the related arguments of the HSM.

From a practical point of view, we recommend that health chatbot developers include source expertise as a design feature. For example, developers could incorporate visual cues that evoke source expertise into the system design. Service providers could select a credible source for the chatbot's database, for instance by collaborating with prestigious medical institutions, or recruit a team of medical experts to provide authoritative advice on health information and diagnosis. The chatbot could then disclose this collaboration at the beginning of the conversation to present a well-founded expertise cue. In addition, to further help users recognize the chatbot's expertise, the service provider could notify users that the chatbot's database and analytic systems are regularly updated. These measures would help establish a sense of credibility and trust closer to what patients usually experience when visiting a hospital and further improve users’ acceptance of the health chatbot.

Meanwhile, we also found that personalization did not directly influence the intention to use the health chatbot; perceived benefits and self-efficacy fully mediated the relationship between personalization and usage intention. The reason may be that people have specific and concrete health-related demands and motivations when deciding whether to continue using a health chatbot. In our study, the chatbot was designed to give users instantaneous diagnoses and suggestions about hemorrhoids, making it a goal-oriented chatbot with clear and specific functions. Such chatbots are designed to provide users with the specific information they need in short conversations. 100 The core requirement for participants in our study was to acquire useful health-related information, specifically diagnoses and related treatments. Under these circumstances, users will continue to use the chatbot only when they feel that the conversation brings benefits and improves their self-efficacy. Grounded in the HBM, our research is one of the first experimental studies to investigate how personalization influences health outcomes in a chatbot context. It extends previous research28,40 on personalization in the health context by explicitly demonstrating its effects on individuals’ health beliefs. Moreover, unlike previous HCI literature 34 showing that personalization can directly increase behavioral intention, our findings of full mediation by health belief factors reveal users’ distinct psychological mechanisms in the health chatbot context.

The factors from the HBM are useful for understanding the mechanisms through which usage intention toward the health chatbot increases. Specifically, perceived benefits and self-efficacy are important health beliefs that directly increase users’ intention to use this service. These results are in accordance with previous studies that used HBM constructs to explore health-related intentions and behaviors.18,19 Our study shows that the HBM is a helpful tool for explaining why users are willing to use health chatbots, suggesting that further research on health-related AI services and products could draw on the HBM to investigate users’ perceptions and actions. For instance, by emphasizing the potential health-related advantages the service would bring, chatbot providers may be more likely to retain active users, since health-related benefits are users’ core demands when they use health chatbots.

Finally, somewhat unexpectedly, we found that personalization had no effect on privacy concerns, and privacy concerns did not influence the intention to use the health chatbot. While it is widely recognized that personalization is closely related to privacy risks and concerns, 101 our results indicate that privacy concerns are not an important factor for usage intention in the health chatbot context. One explanation may be that the benefits of personalization overshadow privacy concerns in the case of health chatbots. As discussed earlier, users have specific motivations when using health chatbots. In addition, privacy-related perceptions concerning the personalization–privacy paradox may be context-dependent. 81 Previous studies indicated that personalization may be related to privacy concerns in contexts such as social media websites 101 and online shopping. 102 Because our research context is a health chatbot providing diagnoses and therapeutic suggestions, we manipulated personalization based on participants’ physical condition and lifestyle. Compared with those contexts, personalization may be more acceptable in the health context because it genuinely helps individuals obtain health information that suits them better. When users focus mainly on health-related benefits during their interaction with the chatbot, they may overlook the possibility that their private information might be collected and tracked. This point is consistent with previous research indicating that Chinese people are willing to sacrifice privacy for potential benefits. Another explanation is that health chatbots are still a new service; in the nascent stages of a new technology, privacy concerns are not a salient issue for users. 103

Although users in our research setting did not appear to care much about privacy, this does not mean that chatbot platforms can neglect it. Given the popularity of and easy access to AI devices in daily life, device designers should emphasize the proactive approach to privacy protection known as “privacy by design,” 104 in which privacy protection is considered from the initial design of a product or service. For example, the chatbot's privacy policy should give users a clear statement of the purpose of data collection. In addition, users could be allowed to type keywords such as “forget/delete my personal information” to exercise their right to be forgotten. Such measures can alleviate users’ privacy concerns from the outset.

Limitations and future research

This study has some limitations. Our experimental design relied on participants imagining a health situation, regardless of whether they actually had the disease. Although requiring participants to imagine symptoms and follow set experimental procedures helps researchers avoid uncontrollable effects in online experiments,12,78 it inevitably reduces ecological validity. In addition, the results are based on data collected from Chinese users. In the context of health chatbots, the Chinese participants exhibited little concern about disclosing private information, and such results may not replicate in other countries or regions. Therefore, future studies could replicate and extend the current experimental design with different stimulus materials, contexts and participant backgrounds to establish more robust external validity. Furthermore, given that our experiment involved 260 valid responses, we recommend that future studies of chatbots recruit larger samples to improve the generalizability of the results.

The final limitation is that we conducted a one-time simulation test based on the HBM. Long-term intervention experiments are needed to enrich research in this field. Future research could use health chatbots as an intervention in an experimental group and compare users’ health beliefs with those of a control group after a few months. Such longitudinal studies would allow the effectiveness of the intervention to be examined over the long term.

Acknowledgements

The authors would like to thank the anonymous reviewers for their constructive comments.

Footnotes

Contributorship: YL, WY and BH contributed to the research idea and wrote the original manuscript. ZL designed the experimental chatbot and participated in writing the section of literature review. YLL participated in writing the section of literature review.

Declaration of conflicting interests: The author(s) declared no potential conflicts of interest with respect to the research, authorship, and/or publication of this article.

Funding: The author(s) disclosed receipt of the following financial support for the research, authorship, and/or publication of this article: This work was supported by the General Research Fund, University Grant Council, Hong Kong (Grant No: 9043252) and City University of Hong Kong (Project No. 9380125).

Ethical approval: The study was approved by the Human Subjects Ethics Sub-Committee of City University of Hong Kong (Application No.: H002620).

Guarantor: YL.

ORCID iD: Bo Hu https://orcid.org/0000-0001-9020-3423

References

- 1.Senseforth.ai. Medical Chatbots – use cases, examples and case studies of conversational AI in medicine and health, https://www.senseforth.ai/conversational-ai/medical-chatbots/ (2022, accessed 27 June 2022).

- 2.Dealroom.co. Consumer healthcare services list, https://app.dealroom.co/lists/14276 (2022, accessed 27 June 2022).

- 3.AiTechPark. Ping An Good Doctor aiming to be a pillar of China’s healthcare sector. AI-TechPark, https://ai-techpark.com/ping-an-good-doctor-aiming-to-be-a-pillar-of-chinas-healthcare-sector/ (2022, accessed 27 June 2022).

- 4.Nadarzynski T, Miles O, Cowie A, et al. Acceptability of artificial intelligence (AI)-led chatbot services in healthcare: a mixed-methods study. Digit Health 2019; 5: 1–12. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.People.cn. The AI Doctor is coming, http://finance.people.com.cn/n1/2019/0322/c1004-30989298.html (2019, accessed 27 June 2022).

- 6.Ayeh JK. Travellers’ acceptance of consumer-generated media: an integrated model of technology acceptance and source credibility theories. Comput Hum Behav 2015; 48: 173–180. [Google Scholar]

- 7.Guo X, Zhang X, Sun Y. The privacy–personalization paradox in mHealth services acceptance of different age groups. Electron Commer Res Appl 2016; 16: 55–65. [Google Scholar]

- 8.Awad NF, Krishnan MS. The personalization privacy paradox: an empirical evaluation of information transparency and the willingness to be profiled online for personalization. MIS Q 2006; 30: 13–28. [Google Scholar]

- 9.Zhang X, Guo X, Guo F, et al. Nonlinearities in personalization-privacy paradox in mHealth adoption: the mediating role of perceived usefulness and attitude. Technol Health Care 2014; 22: 515–529. [DOI] [PubMed] [Google Scholar]

- 10.Lee CH, Cranage DA. Personalisation–privacy paradox: the effects of personalisation and privacy assurance on customer responses to travel Web sites. Tour Manag 2011; 32: 987–994. [Google Scholar]

- 11.Law J, Martin E. Concise medical dictionary. Oxford, UK: Oxford University Press, 2020. [Google Scholar]

- 12.Ischen C, Araujo T, van Noort G, et al. “I am here to assist you today”: the role of entity, interactivity and experiential perceptions in chatbot persuasion. J Broadcast Electron Media 2020; 64: 615–639. [Google Scholar]

- 13.Eastin MS. Credibility assessments of online health information: the effects of source expertise and knowledge of content. J Comput-Mediat Commun 2001; 6: JCMC643. [Google Scholar]

- 14.Shang L, Zhou J, Zuo M. Understanding older adults’ intention to share health information on social media: the role of health belief and information processing. Internet Res 2020; 31: 100–122. [Google Scholar]

- 15.Suzanne K, Steginga SO. The application of the heuristic-systematic processing model to treatment decision making about prostate cancer. Med Decis Making 2004; 24: 573–583. [DOI] [PubMed] [Google Scholar]

- 16.Bohner G, Moskowitz GB, Chaiken S. The interplay of heuristic and systematic processing of social information. Eur Rev Soc Psychol 1995; 6: 33–68.

- 17.Chaiken S, Maheswaran D. Heuristic processing can bias systematic processing: effects of source credibility, argument ambiguity, and task importance on attitude judgment. J Pers Soc Psychol 1994; 66: 460–473.

- 18.Walrave M, Waeterloos C, Ponnet K. Adoption of a contact tracing app for containing COVID-19: a health belief model approach. JMIR Public Health Surveill 2020; 6: e20572.

- 19.Willis E. Applying the health belief model to medication adherence: the role of online health communities and peer reviews. J Health Commun 2018; 23: 743–750.

- 20.Song X, Xiong T. A survey of published literature on conversational artificial intelligence. In: 2021 7th International conference on information management (ICIM), London, UK, 27–29 March 2021, pp.113–117.

- 21.Dingler T, Kwasnicka D, Wei J, et al. The use and promise of conversational agents in digital health. Yearb Med Inform 2021; 30: 191–199.

- 22.Pereira J, Díaz Ó. Using health chatbots for behavior change: a mapping study. J Med Syst 2019; 43: 1–13.

- 23.Athota L, Shukla VK, Pandey N, et al. Chatbot for healthcare system using artificial intelligence. In: 2020 8th International conference on reliability, infocom technologies and optimization (trends and future directions) (ICRITO), Noida, India, 4–5 June 2020, pp.619–622.

- 24.Crutzen R, Peters G-JY, Portugal SD, et al. An artificially intelligent chat agent that answers adolescents’ questions related to sex, drugs, and alcohol: an exploratory study. J Adolesc Health 2011; 48: 514–519.

- 25.Corneliussen SEL. Factors affecting intention to use chatbots for health information. Master’s Thesis. University of Oslo, Oslo, 2020.

- 26.Liu K, Tao D. The roles of trust, personalization, loss of privacy, and anthropomorphism in public acceptance of smart healthcare services. Comput Hum Behav 2022; 127: 107026.

- 27.Huang D-H, Chueh H-E. Chatbot usage intention analysis: veterinary consultation. J Innov Knowl 2021; 6: 135–144.

- 28.Zhu Y, Wang R, Pu C. “I am chatbot, your virtual mental health adviser.” What drives citizens’ satisfaction and continuance intention toward mental health chatbots during the COVID-19 pandemic? An empirical study in China. Digit Health 2022; 8: 1–15.

- 29.Liu X, He X, Wang M, et al. What influences patients’ continuance intention to use AI-powered service robots at hospitals? The role of individual characteristics. Technol Soc 2022; 70: 101996.

- 30.Ho SY, Tam KY. An empirical examination of the effects of web personalization at different stages of decision making. Int J Human-Computer Interact 2005; 19: 95–112.

- 31.Blom J. Personalization: a taxonomy. In: CHI ’00 extended abstracts on human factors in computing systems, 1–6 April 2000. New York, NY: Association for Computing Machinery, pp.313–314.

- 32.Kim NY, Sundar SS. Personal relevance versus contextual relevance: the role of relevant ads in personalized websites. J Media Psychol Theor Methods Appl 2012; 24: 89–101.

- 33.Alpert SR, Karat J, Karat C-M, et al. User attitudes regarding a user-adaptive ecommerce web site. User Model User-Adapt Interact 2003; 13: 373–396.

- 34.Kim J, Gambino A. Do we trust the crowd or information system? Effects of personalization and bandwagon cues on users’ attitudes and behavioral intentions toward a restaurant recommendation website. Comput Hum Behav 2016; 65: 369–379.

- 35.Rhee CE, Choi J. Effects of personalization and social role in voice shopping: an experimental study on product recommendation by a conversational voice agent. Comput Hum Behav 2020; 109: 106359.

- 36.Noar SM, Harrington NG, Aldrich RS. The role of message tailoring in the development of persuasive health communication messages. Ann Int Commun Assoc 2009; 33: 73–133.

- 37.Kreuter MW, Strecher VJ. Do tailored behavior change messages enhance the effectiveness of health risk appraisal? Results from a randomized trial. Health Educ Res 1996; 11: 97–105.

- 38.Head KJ, Noar SM, Iannarino NT, et al. Efficacy of text messaging-based interventions for health promotion: a meta-analysis. Soc Sci Med 2013; 97: 41–48.

- 39.Fitzpatrick KK, Darcy A, Vierhile M. Delivering cognitive behavior therapy to young adults with symptoms of depression and anxiety using a fully automated conversational agent (woebot): a randomized controlled trial. JMIR Ment Health 2017; 4: e19.

- 40.Sillice MA, Morokoff PJ, Ferszt G, et al. Using relational agents to promote exercise and sun protection: assessment of participants’ experiences with two interventions. J Med Internet Res 2018; 20: e48.

- 41.Kocaballi AB, Berkovsky S, Quiroz JC, et al. The personalization of conversational agents in health care: systematic review. J Med Internet Res 2019; 21: e15360.

- 42.Pornpitakpan C. The persuasiveness of source credibility: a critical review of five decades’ evidence. J Appl Soc Psychol 2004; 34: 243–281.

- 43.Walther JB, Jang J, Hanna Edwards AA. Evaluating health advice in a Web 2.0 environment: the impact of multiple user-generated factors on HIV advice perceptions. Health Commun 2018; 33: 57–67.

- 44.Wang Z, Walther JB, Pingree S, et al. Health information, credibility, homophily, and influence via the Internet: web sites versus discussion groups. Health Commun 2008; 23: 358–368.

- 45.Van Der Heide B, Schumaker EM. Computer-mediated persuasion and compliance: social influence on the Internet and beyond. In: Amichai-Hamburger Y (ed) The social net: understanding our online behavior. 2nd ed. New York, NY: Oxford University Press, 2013, pp.79–98.

- 46.Liew TW, Tan S-M, Tee J, et al. The effects of designing conversational commerce chatbots with expertise cues. In: 2021 14th International conference on human system interaction (HSI), Gdansk, Poland, 8–10 July 2021, pp.1–6.

- 47.Avery EJ. The role of source and the factors audiences rely on in evaluating credibility of health information. Public Relat Rev 2010; 36: 81–83.

- 48.Dong Z. How to persuade adolescents to use nutrition labels: effects of health consciousness, argument quality, and source credibility. Asian J Commun 2015; 25: 84–101.

- 49.Borah P, Xiao X. The importance of ‘likes’: the interplay of message framing, source, and social endorsement on credibility perceptions of health information on Facebook. J Health Commun 2018; 23: 399–411.

- 50.Yi MY, Yoon JJ, Davis JM, et al. Untangling the antecedents of initial trust in Web-based health information: the roles of argument quality, source expertise, and user perceptions of information quality and risk. Decis Support Syst 2013; 55: 284–295.

- 51.Chaiken S. Heuristic versus systematic information processing and the use of source versus message cues in persuasion. J Pers Soc Psychol 1980; 39: 752–766.

- 52.Chen S, Chaiken S. The heuristic-systematic model in its broader context. In: Chaiken S, Trope Y. (eds) Dual-process theories in social psychology. New York, NY: Guilford Press, 1999, pp.73–96.

- 53.Shapiro J, Nixon LL, Wear SE, et al. Medical professionalism: what the study of literature can contribute to the conversation. Philos Ethics Humanit Med 2015; 10: Article 10.

- 54.Chaiken S, Liberman A, Eagly AH. Heuristic and systematic information processing within and beyond the persuasion context. In: Uleman JS, Bargh JA. (eds) Unintended thought. New York, NY: Guilford Press, 1989, pp.212–252.

- 55.Laumer S, Maier C, Gubler FT. Chatbot acceptance in healthcare: explaining user adoption of conversational agents for disease diagnosis. In: Proceedings of the 27th European Conference on Information Systems (ECIS), Stockholm and Uppsala, Sweden, 8–14 June 2019, pp.1–19.

- 56.Zhang KZ, Zhao SJ, Cheung CM, et al. Examining the influence of online reviews on consumers’ decision-making: a heuristic–systematic model. Decis Support Syst 2014; 67: 78–89.

- 57.Lustria MLA, Noar SM, Cortese J, et al. A meta-analysis of web-delivered tailored health behavior change interventions. J Health Commun 2013; 18: 1039–1069.

- 58.Griffin RJ, Neuwirth K, Giese J, et al. Linking the heuristic-systematic model and depth of processing. Commun Res 2002; 29: 705–732.

- 59.Rosenstock IM. Historical origins of the health belief model. Health Educ Monogr 1974; 2: 328–335.

- 60.Rosenstock IM, Strecher VJ, Becker MH. Social learning theory and the health belief model. Health Educ Q 1988; 15: 175–183.

- 61.Alharbi NS, AlGhanmi AS, Fahlevi M. Adoption of health mobile apps during the COVID-19 lockdown: a health belief model approach. Int J Environ Res Public Health 2022; 19: 4179.

- 62.Mou J, Shin D-H, Cohen J. Health beliefs and the valence framework in health information seeking behaviors. Inf Technol People 2016; 29: 876–900.

- 63.Zhang X, Baker K, Pember S, et al. Persuading me to eat healthy: a content analysis of YouTube public service announcements grounded in the health belief model. South Commun J 2017; 82: 38–51.

- 64.Dhagarra D, Goswami M, Kumar G. Impact of trust and privacy concerns on technology acceptance in healthcare: an Indian perspective. Int J Med Inf 2020; 141: 104164.

- 65.Vaidyam AN, Wisniewski H, Halamka JD, et al. Chatbots and conversational agents in mental health: a review of the psychiatric landscape. Can J Psychiatry 2019; 64: 456–464.

- 66.Trumbo CW. Heuristic–systematic information processing and risk judgment. Risk Anal 1999; 19: 391–400.

- 67.Choi D-H, Yoo W, Noh G-Y, et al. The impact of social media on risk perceptions during the MERS outbreak in South Korea. Comput Hum Behav 2017; 72: 422–431.

- 68.Champion VL, Skinner CS. The health belief model. In: Glanz K, Rimer BK, Viswanath K (eds) Health behavior and health education: theory, research, and practice. 4th ed. San Francisco, CA: Jossey-Bass, 2008, pp.45–65.

- 69.Gaston A, Cramp A, Prapavessis H. Enhancing self-efficacy and exercise readiness in pregnant women. Psychol Sport Exerc 2012; 13: 550–557.

- 70.Hong Y-J, Piao M, Kim J, et al. Development and evaluation of a child vaccination chatbot real-time consultation messenger service during the COVID-19 pandemic. Appl Sci 2021; 11: 12142.

- 71.Ho SY, Bodoff D. The effects of Web personalization on user attitude and behavior. MIS Q 2014; 38: 497–A10.

- 72.Aguirre E, Roggeveen AL, Grewal D, et al. The personalization-privacy paradox: implications for new media. J Consum Mark 2016; 33: 98–110.

- 73.Kim DJ. Self-perception-based versus transference-based trust determinants in computer-mediated transactions: a cross-cultural comparison study. J Manag Inf Syst 2008; 24: 13–45.

- 74.Ahadzadeh AS, Sharif SP, Ong FS, et al. Integrating health belief model and technology acceptance model: an investigation of health-related internet use. J Med Internet Res 2015; 17: e45.

- 75.Xu L, Li P, Hou X, et al. Middle-aged and elderly users’ continuous usage intention of health maintenance-oriented WeChat official accounts: empirical study based on a hybrid model in China. BMC Med Inform Decis Mak 2021; 21: 257.

- 76.Umphrey LR. Message defensiveness, efficacy, and health–related behavioral intentions. Commun Res Rep 2004; 21: 329–337.

- 77.Sina News. Can hemorrhoids become cancerous? https://tech.sina.cn/2020-05-28/detail-iircuyvi5503124.d.html?cre=wappage&mod=r&loc=5&r=9&rfunc=47&tj=none&cref=cj&wm=3236 (2020, accessed 27 June 2022).

- 78.Liu B, Sundar SS. Should machines express sympathy and empathy? Experiments with a health advice chatbot. Cyberpsychol Behav Soc Netw 2018; 21: 625–636.

- 79.Serino CM, Furner CP, Smatt C. Making it personal: how personalization affects trust over time. In: Proceedings of the 38th annual Hawaii international conference on system sciences, Hawaii, USA, 3–6 January 2005, pp.170a.

- 80.Li C, Liu J. A name alone is not enough: a reexamination of web-based personalization effect. Comput Hum Behav 2017; 72: 132–139.

- 81.Faul F, Erdfelder E, Lang A-G, et al. G*Power 3: a flexible statistical power analysis program for the social, behavioral, and biomedical sciences. Behav Res Methods 2007; 39: 175–191.

- 82.Tam KY, Ho SY. Understanding the impact of web personalization on user information processing and decision outcomes. MIS Q 2006; 30: 865–890.

- 83.Johnson D, Grayson K. Cognitive and affective trust in service relationships. J Bus Res 2005; 58: 500–507.

- 84.Nordheim CB, Følstad A, Bjørkli CA. An initial model of trust in chatbots for customer service – findings from a questionnaire study. Interact Comput 2019; 31: 317–335.

- 85.Forslund H. Measuring information quality in the order fulfilment process. Int J Qual Reliab Manag 2007; 24: 515–524.

- 86.Carballo NJ, Alessi CA, Martin JL, et al. Perceived effectiveness, self-efficacy, and social support for oral appliance therapy among older veterans with obstructive sleep apnea. Clin Ther 2016; 38: 2407–2415.

- 87.Xu H, Dinev T, Smith J, et al. Information privacy concerns: linking individual perceptions with institutional privacy assurances. J Assoc Inf Syst 2011; 12: 798–824.

- 88.Pai F-Y, Huang K-I. Applying the technology acceptance model to the introduction of healthcare information systems. Technol Forecast Soc Change 2011; 78: 650–660.