Abstract

Reservoir computing is a computational framework of recurrent neural networks and is gaining attention because of its drastically simplified training process. However, no methodology has yet been established for constructing an optimal reservoir for a given task. Meanwhile, the “small-world” network is known to represent real-world networks such as biological systems and social communities. This network lies between completely regular and totally disordered networks, and it is characterized by highly clustered nodes with a short path length. This study aims to provide a guiding principle for the systematic synthesis of desired reservoirs by taking advantage of the controllable parameters of the small-world network. We validate the methodology using two different types of benchmark tests: a classification task and a prediction task.

Subject terms: Mathematics and computing, Optics and photonics

Introduction

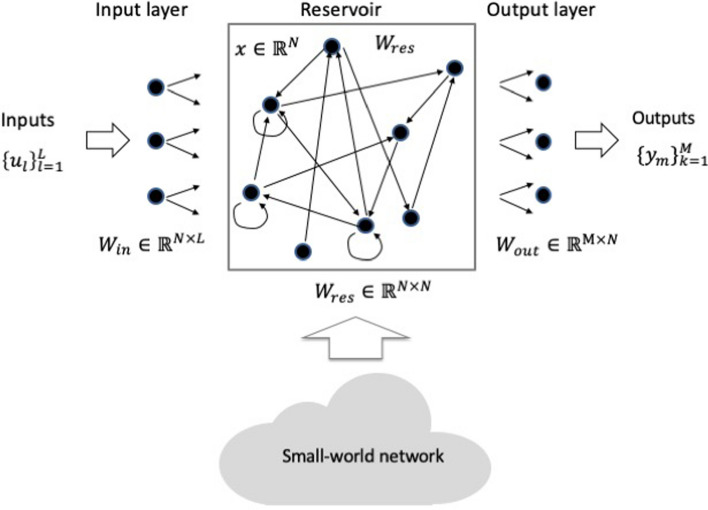

Reservoir computing (RC) is a unified computational framework1,2 derived from two independently proposed recurrent neural network (RNN) models, echo state networks (ESNs)3,4 and liquid state machines (LSMs)5,6. It is a special class of RNN models consisting of three layers: an input layer, an output layer, and a reservoir between them (Fig. 1). The primary difference between RC and deep learning or multi-layer neural networks is that only the connections between the reservoir and the output layer are trainable, and the training requires much less data than deep learning. Owing to the memory capability that stems from its recurrent nature, RC has been used for the prediction and recognition of temporal and sequential data such as spoken words5, time series signals4,6, and wireless and optical channel equalization7,8. In addition, RC can be used for handwritten digit recognition by transforming the images into temporal signals9.

Figure 1.

Reservoir computing architecture. Input weight matrix Win is a fixed N × L matrix, where N is the number of nodes in the reservoir and L is the dimension of the input at each time step. Reservoir weight matrix Wres is a fixed N × N matrix, which is typically sparse, with nonzero elements having either a symmetrical uniform, discrete bi-valued, or normal distribution centered around zero13. Output weight matrix Wout is a learned matrix with N columns and as many rows as there are classes in the output data.

Several studies have examined various reservoir network topologies, such as sparsely random networks1,2 and the swirl10 and waterfall11 topologies. However, for every given task, one has to seek an optimum condition empirically, and these topologies are not sufficiently flexible because they have few adjustable parameters. Hence, a variety of networks have been tested for the reservoir. A conventional random reservoir is treated like a “black box”, which only allows one to specify the density or sparseness of the reservoir weight matrix. The swirl and waterfall topologies are fixed, and there is no variable except for the size, that is, the number of nodes. Therefore, a guiding principle for finding an optimal reservoir for a given task is required. These factors have motivated us to use the “small-world” network12 for the reservoir.

The reservoir state vector and the output vector at time t are given by13

| 1 |

| 2 |

where the parameters are the leaking rate, the activation function of each node, and the input gain. When the leaking rate is equal to zero, the states are totally governed by the previous states, whereas when it is equal to one, the next state of the reservoir depends only on the current state and the external input. The output weights are uniquely determined by employing the regularized least squares method as13

| 3 |

where the remaining quantities are the training input vector, the regularization parameter, and the N × N identity matrix. Because the training is simple, the computation cost is low.
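The state update and the readout training can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the author's code: it assumes the standard leaky-integrator form of Ref. 13, x(t+1) = (1 − α) x(t) + α f(g W_in u(t+1) + W_res x(t)) with f = tanh, and a ridge-regression readout W_out = Y Xᵀ (X Xᵀ + β I)⁻¹. The names `alpha`, `gain`, `beta`, the function names, the time indexing, and all sizes are hypothetical choices for illustration.

```python
import numpy as np

def update_state(x, u, W_in, W_res, alpha, gain):
    """One leaky-integrator step (assumed form, after Ref. 13)."""
    return (1.0 - alpha) * x + alpha * np.tanh(gain * (W_in @ u) + W_res @ x)

def run_reservoir(U, W_in, W_res, alpha, gain):
    """Drive the reservoir with inputs U (T x L); return states as an N x T matrix."""
    N = W_res.shape[0]
    x = np.zeros(N)
    X = np.empty((N, U.shape[0]))
    for t, u in enumerate(U):
        x = update_state(x, u, W_in, W_res, alpha, gain)
        X[:, t] = x
    return X

def train_readout(X, Y, beta):
    """Regularized least squares: W_out = Y X^T (X X^T + beta*I)^-1, I being N x N."""
    N = X.shape[0]
    # Solve (X X^T + beta I) W_out^T = X Y^T rather than forming the inverse.
    return np.linalg.solve(X @ X.T + beta * np.eye(N), X @ Y.T).T

rng = np.random.default_rng(0)
N, L, M, T = 50, 3, 2, 200                 # illustrative sizes only
W_in = rng.uniform(-1, 1, (N, L))          # fixed input weights
W_res = rng.uniform(-1, 1, (N, N)) * (rng.random((N, N)) < 0.1)  # fixed, sparse
U = rng.standard_normal((T, L))            # placeholder inputs
Y = rng.standard_normal((M, T))            # placeholder targets
X = run_reservoir(U, W_in, W_res, alpha=0.3, gain=0.5)
W_out = train_readout(X, Y, beta=1e-2)
print(W_out.shape)                         # (M, N)
```

With `alpha=0.0` the update returns the previous state unchanged, and with `alpha=1.0` it returns only the nonlinear term, which mirrors the two limiting cases of the leaking rate described in the text.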

Various hardware implementations of RC based on electronic and photonic components have been reported. Such hardware could serve as an accelerator at the front end of digital computers14, optimized to perform a specific function faster and with less power consumption than a general-purpose processor. The electronic RC implementations include analog circuits15 and VLSIs16, while photonic RC hardware exploits parallelism and high-speed operation with potentially low power consumption10,11,17–24. However, a stumbling block is the absence of large-scale nonlinear devices that can act as the activation function of a reservoir node. To address this issue, two types of photonic RC architecture have been proposed: the delay-loop reservoir and the spatial reservoir. The delay-loop RC simplifies a complicated network by using a single nonlinear node in a loop-back configuration with time-delayed feedback. The virtual nodes are distributed along the delay line, and the data injection is realized using time multiplexing17,18. In the delay-loop reservoir, an electro-optic modulator19,20, a semiconductor optical amplifier11,21, or a laser diode22,23 can be used as the nonlinear node. With the electro-optic modulator, the nonlinearity arises in the optical output as a function of the applied input voltage, whereas with the semiconductor optical amplifier and the laser diode, both subject to optical feedback, it arises in the optical output as a function of the optical input. The spatial implementation of RC, in contrast, is essentially a spatially distributed network23. This model uses two key components: a spatial light modulator, which consists of more than a few tens of thousands of pixels that act as the reservoir nodes, and a digital micro-mirror device, which realizes the reconfigurable output weights.
Recently, an alternative approach to building larger reservoirs by combining several blocks of small reservoirs has been proposed, and the model demonstrated enhanced computational capability24. As photonic integrated circuit (PIC) technology is making rapid progress25,26, PIC-based RC hardware is expected to be developed in the near future. This study will also serve as a design guideline for PIC-based RC.

With these factors in mind, we explore a guiding principle for optimizing the reservoir by shedding light on the 1998 article on the small-world network12. With the aim of achieving better RC performance, we refer to several preceding works relevant to the small-world network27–29. In Ref.27, a reservoir model called the scale-free highly-clustered echo state network (SHESN), which has characteristics of both small-world and scale-free30 networks, was proposed. SHESN features a spatially hierarchical and distributed topology in which the intradomain connections are much denser than the interdomain ones. In each domain, small-world characteristics such as a short path length and high clustering are preserved, while the power-law degree distribution of the scale-free network is embedded. It was numerically shown that the time-series prediction capability for the Mackey–Glass (MG) dynamic system is enhanced compared with a conventional reservoir having random connections. Reference28 analyzes three types of network, namely the scale-free network, the small-world network, and their mixture, and demonstrates enhanced capability in the prediction of two types of time series signals generated by the NARMAX model. Reference29 investigates the path-length characteristics of three types of reservoir network, namely the small-world network, the scale-free network, and the conventional random one, in order to narrow down the search space of the reservoir parameters for predicting chaotic signals. In our present work, the reservoir model is based solely on the small-world network. We examine the dependence of the computing capability on parameters such as the degree of nodes k and the rewiring probability p, which has not been studied in detail in the preceding works, Refs.27–29.
We also show that there is a sweet spot of the small-world network that gives the optimum RC performance for two typical neural network tasks: classification and regression. For the classification task, we chose the classification of human activities, which has not been tested in the preceding articles, while for the regression task, we conducted the prediction of MG chaotic signals, as in Refs.27,29.

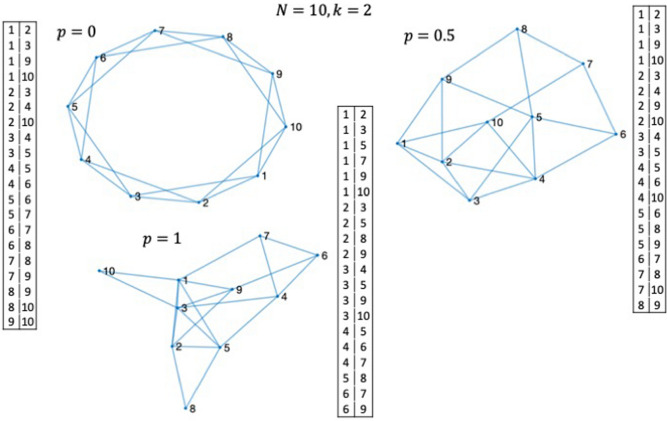

The term small-world network is derived by analogy with the small-world phenomenon31. It has been shown that the small-world network can characterize social and natural phenomena in the real world well, including human behavior in social life, power grid networks, and biological neural networks. We recall a statement from the article12 that is relevant to the ongoing pandemic: “infectious diseases are predicted to spread much more easily and quickly in a small world; the alarming and less obvious point is how few short cuts are needed to make the world small”. The small-world network is based upon the Watts–Strogatz graph, a simple network model in which the amount of disorder is tuned arbitrarily by rewiring the links between the nodes. The small-world network lies between a regular network (p = 0) and a completely disordered one (p = 1), where a small fraction of the links between the nodes are rewired to introduce disorder. Here, p is the probability of rewiring the links at random. For illustration, three examples of a 10-node (N = 10) network with the node degree k = 2, which indicates connections with 2k = 4 neighboring nodes, are shown in Fig. 2: the regularly connected (p = 0), the modestly disordered (0 < p < 1), and the totally disordered (p = 1). It is possible to exploit this high flexibility and build up a desired reservoir from scratch. The weight matrix of the reservoir can be generated from the table of link connections shown alongside the graphs (Fig. 2) (see “Methods” section). The links may be either all bidirectional or only partially bidirectional; in this study, it is assumed that all the links are bidirectional, thus resulting in a symmetric matrix Wres.
Another network model is the Erdős–Rényi model32. The primary difference between this model and the Watts–Strogatz graph is the number of parameters: the former contains one parameter, the node degree, while the latter has two, the node degree and the probability of rewiring.

Figure 2.

Architectures of 10-node (N = 10) networks with the node degree k = 2: regularly connected (p = 0) at the top left, modestly disordered (0 < p < 1) at the top right, and totally disordered (p = 1) at the bottom. Each node is connected with four (= 2k) neighboring nodes. Each table lists the pairs of connected nodes for the corresponding p. For example, node #1 is connected with nodes #2, 3, 9, and 10 for the case with p = 0.

Results

In the Watts–Strogatz graph, the clustering coefficient of node i for a given node degree is defined as33

| 4 |

and the total clustering coefficient of the N-node network is expressed by

| 5 |

The density of the weight matrix of the small-world network-based reservoir is calculated by

| 6 |
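The quantities in Eqs. (4)–(6) can be illustrated with a short Python sketch. It assumes the standard Watts–Strogatz local clustering coefficient, C_i = 2E_i / (k_i(k_i − 1)), where E_i is the number of links among the k_i neighbors of node i, the network average of C_i as the total coefficient, and a matrix density defined as the fraction of nonzero entries in the N × N weight matrix. These forms and all function names are assumptions made for illustration.

```python
def ring_lattice(n, half_degree):
    """Adjacency sets of a ring lattice: each node links to
    `half_degree` nearest neighbors on either side."""
    adj = {i: set() for i in range(n)}
    for i in range(n):
        for d in range(1, half_degree + 1):
            j = (i + d) % n
            adj[i].add(j)
            adj[j].add(i)
    return adj

def local_clustering(adj, i):
    """C_i = 2*E_i / (k_i*(k_i - 1)) for node i (assumed definition)."""
    nbrs = sorted(adj[i])
    k_i = len(nbrs)
    if k_i < 2:
        return 0.0
    # Count links between every pair of neighbors of node i.
    e_i = sum(1 for a in range(k_i) for b in range(a + 1, k_i)
              if nbrs[b] in adj[nbrs[a]])
    return 2.0 * e_i / (k_i * (k_i - 1))

def mean_clustering(adj):
    """Average of the local clustering coefficients over all nodes."""
    return sum(local_clustering(adj, i) for i in adj) / len(adj)

def matrix_density(adj):
    """Fraction of nonzero entries in the symmetric N x N weight matrix."""
    n = len(adj)
    return sum(len(nbrs) for nbrs in adj.values()) / (n * n)

adj = ring_lattice(10, 2)        # regular 10-node lattice, 4 links per node
print(mean_clustering(adj))      # 0.5
print(matrix_density(adj))       # 0.4
```

Under the same assumptions, `matrix_density(ring_lattice(1000, 4))` evaluates to 0.008, the density quoted for the 1000-node reservoir; rewiring moves links without changing their number, so the density does not depend on the rewiring probability.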

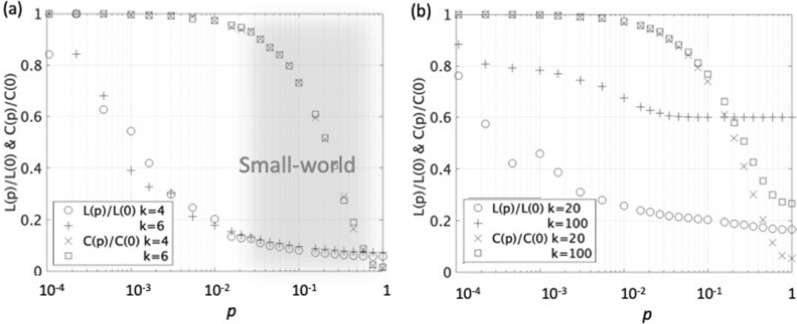

The characteristic path length is calculated as the mean of the shortest-path distances between all pairs of nodes. The clustering coefficient and the characteristic path length as a function of the probability of rewiring p for the 1000-node case (N = 1000) and several node degrees k, including k = 6, are shown in Fig. 3a. As p increases, the clustering coefficient decreases rapidly once p exceeds a certain value, and the average path length also decreases monotonically. The small-world network is indicated by the shaded area of p = 0.01–0.7, which is characterized by high clustering with a relatively short path length. As shown in Supple-Fig. 1, the 1000-node network contains several clustering hubs, each connected with up to 14–16 nodes, which are indicated in orange and yellow on the color bar. When the degree is greater than 20, the small-world characteristic of high clustering with a relatively short path length is almost lost (Fig. 3b). Based on this observation, a degree of up to around 20 preserves the small-world characteristic.
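As a small illustration of the characteristic path length, the following Python sketch computes the mean hop count over all pairs of nodes with a breadth-first search. The function name and the toy six-node ring are hypothetical, and the sketch averages over reachable pairs only, which is an assumption for graphs that may be disconnected.

```python
from collections import deque

def characteristic_path_length(adj):
    """Mean shortest-path distance (in hops) over all ordered pairs
    of distinct, mutually reachable nodes."""
    total, pairs = 0, 0
    for src in adj:
        dist = {src: 0}
        queue = deque([src])
        while queue:                      # breadth-first search from src
            u = queue.popleft()
            for v in adj[u]:
                if v not in dist:
                    dist[v] = dist[u] + 1
                    queue.append(v)
        total += sum(dist.values())
        pairs += len(dist) - 1            # exclude the source itself
    return total / pairs

# Toy example: ring of 6 nodes, each linked to its two nearest neighbors.
ring = {i: {(i - 1) % 6, (i + 1) % 6} for i in range(6)}
print(characteristic_path_length(ring))   # 1.8
```

In the ring, every node sees distances 1, 1, 2, 2, and 3 to the other five nodes, giving a mean of 9/5 = 1.8 hops; adding a few shortcut links lowers this value, which is the effect the rewiring probability controls.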

Figure 3.

(a) Clustering coefficient and average path length (hop count) vs. probability of rewiring p for the 1000-node case (N = 1000) and several node degrees k, including k = 6. The small-world range of roughly p = 0.01–0.7 is indicated by the shaded area. (b) Clustering coefficients and average path lengths vs. probability of rewiring p for node degrees k including k = 100.

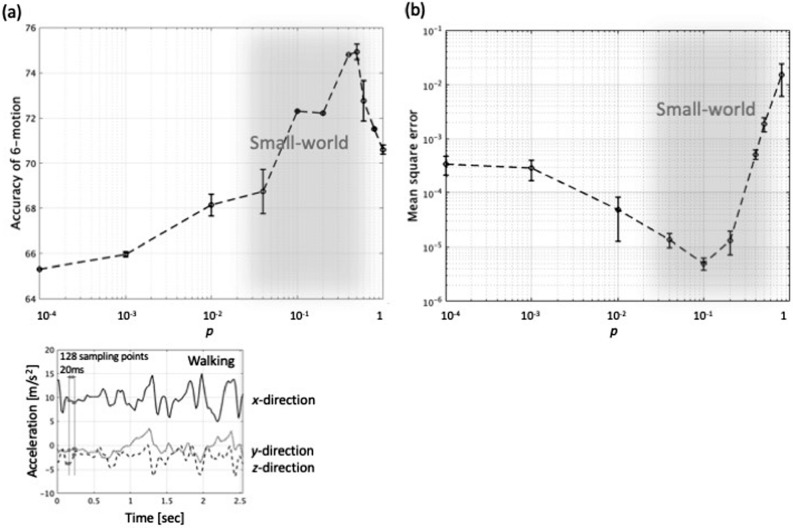

Hereafter, we introduce the small-world network to RC and synthesize the reservoir accordingly. We investigate how the small-world network acts as the reservoir by comparing it with a conventional sparsely random weight matrix Wres, and we evaluate the RC performance by processing temporal and serial data. The recognition of images such as handwritten digits and letters is beyond the scope of this study because it requires dedicated preprocessing with image deformation techniques such as reframing and resizing9. We implement two benchmark tests: classification of human activity34 and time series prediction of the Mackey–Glass (MG) chaotic signal35. We first study the dependence of both the human activity classification and the MG chaotic signal prediction on the probability of rewiring p, shown in Fig. 4a,b, respectively. The human activity includes six motions: walking, walking upstairs, walking downstairs, sitting, standing, and lying down (Fig. 5a). The motions along the x-, y-, and z-axes are captured by the accelerometer of a smartphone, as shown at the bottom of Fig. 4a34. A 1000-node reservoir is generated for a fixed degree k. The impact of the degree on the performance will be discussed later (Fig. 7). In all the benchmark tests, the reservoir network is generated ten times, and each of the ten test runs is conducted using a different reservoir. The classification accuracy of the human 6-activity improves from 65.3% as p increases and peaks at 74.9% (Fig. 4a). For the mean square error (MSE) of the MG chaotic signal prediction (Fig. 4b), a 1000-node reservoir is likewise generated with a fixed degree k. The prediction accuracy is represented by the MSE, which attains a minimum as a function of p. From Fig. 4, it is observed that the sweet spot of optimum performance in these tests is situated in the small-world range, the shaded area of p = 0.01–0.7. The hyperbolic tangent is used for the activation function in Eq. (1) throughout this study, and parameters such as the leaking rate and the input gain in Eq. (1) and the regularization parameter in Eq. (3) are tuned for the optimum performance. For the case with the node count N = 1000, typical values of the leaking rate, the input gain, and the regularization parameter are chosen separately for the human motion classification and for the MG signal prediction. The results for the 2000-node reservoir are discussed later in the manuscript.

Figure 4.

(a) Classification accuracy of human 6-activity vs. probability of rewiring p for a 1000-node reservoir with a fixed degree k. The accuracy peaks at 74.9%. As an example, the temporal acceleration waveforms of walking along the x-, y-, and z-axes are shown at the bottom. (b) Prediction accuracy, represented by the mean square error (MSE), of the MG chaotic signal vs. p for a 1000-node reservoir with a fixed degree k. The MSE attains its minimum within the small-world range.

Figure 5.

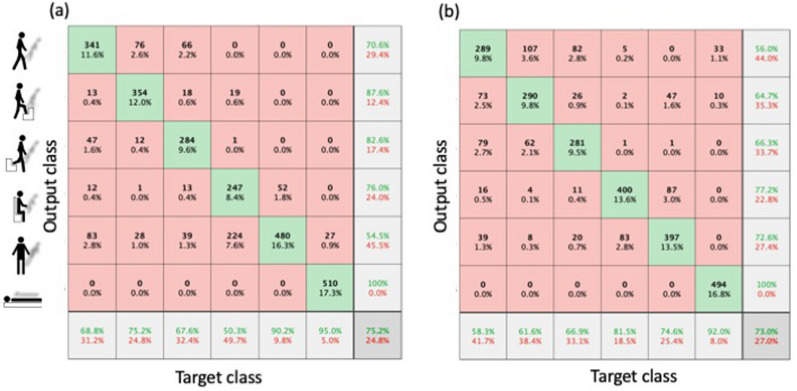

Confusion matrices of human 6-activity classification are compared for the 1000-node reservoir. (a) Reservoir weight matrix Wres of the small-world network. Accuracy (in green) is 75.2%. (b) Conventional sparsely random matrix with a density of 0.008. Accuracy is 73.0%.

Figure 7.

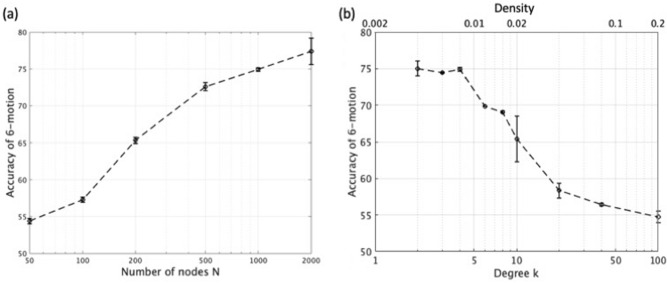

Performance of human activity classification using the reservoir weight matrix Wres generated from the Watts–Strogatz graph. (a) Classification accuracy versus the number of nodes N. A fixed node degree k and a probability of rewiring p within the small-world range are assumed. (b) Classification accuracy against the degree of node k. A 1000-node small-world network is assumed. The horizontal axis at the top is the density of the matrix calculated by Eq. (6).

We now present the results of the two benchmark tests in detail. First, in the human activity classification, the temporal waveforms captured along the x-, y-, and z-axes for 2.56 s were sampled into 128 samples at intervals of 20 ms, as shown at the bottom of Fig. 4a. The training and testing data sets include 7352 and 2947 records, respectively (see “Methods” section). The accuracy of the human activity classification in the confusion matrix is maximized at 75.2% (Fig. 5a), which is slightly better than that of the conventional random weight matrix (73.0%) with the same density of 0.008 as the 1000-node small-world network, calculated from Eq. (6) (Fig. 5b).

In the benchmark test of the MG temporal chaotic signal, a 10,000-timestep-long signal is used. The first 2000 data points are used for the training, and the output weight matrix Wout is determined by Eq. (3) (see “Methods” section). Then, the next 2000 data points are used for the prediction. The reservoir consists of 1000 nodes. The waveform plots compare the RC results using the small-world network as the reservoir with those of the sparsely random weight matrix (Fig. 6a,b). The results are summarized in Table 1, including the minimum and maximum mean square errors (MSEs) along with the mean values and the standard deviations over the 10 runs. The best MSE of the 1000-node small-world reservoir (Fig. 6a) is slightly larger than that of the random weight matrix with a density of 0.008, which is equal to the density of the small-world reservoir (Fig. 6b). It can be confirmed that the optimum performance is obtained in the small-world range, as in the classification of human activity. It should be noted that the standard deviation amounts to 28.5% of the MSE value. The impact of the length of the training data is examined using 1000- and 3000-timestep-long training data, and a comparison of the resulting prediction accuracies confirms that the 2000-timestep-long training data are sufficient.

Figure 6.

2000-timestep-long waveforms of the predicted and target MG chaotic time series for the 1000-node reservoir. (a) Result for the reservoir weight matrix Wres of the 1000-node small-world network. (b) Conventional sparsely random matrix with a density of 0.008. The mean square error (MSE) is reported for each panel. Results of the two benchmark tests are summarized in Table 1.

Table 1.

Summary of the two benchmark tests using the small-world reservoir and the sparsely random reservoir for the 1000-node and 2000-node cases.

| N | Density | Small-world network | Random weight matrix |
|---|---|---|---|
| Max/min accuracy, mean, standard dev. (%) | | | |
| 1000 | 0.008 | 75.2/74.2, 74.9, 0.25 | 73.0/71.6, 72.8, 0.58 |
| 2000 | 0.004 | 79.2/75.3, 77.4, 1.80 | 75.9/73.2, 75.4, 0.85 |
| Min/max MSE, mean, standard dev. | | | |
| 1000 | 0.008 | | |
| 2000 | 0.004 | | |

N: number of nodes, k: degree of node, p: probability of rewiring.

The results of the two benchmark tests for the 2000-node case are also summarized in Table 1. The confusion matrix of the human 6-activity classification and the waveforms of the predicted MG chaotic time series are shown in Supple-Figs. 2 and 3, respectively. The performance is considerably improved compared to the results for N = 1000. The mean classification accuracy increases to 77.4% for the small-world reservoir, which outperforms the sparsely random weight matrix, whose accuracy is 75.4%. The density of the random weight matrix is 0.004, which is equal to the density of the small-world reservoir. In the chaotic signal prediction, the mean MSE of the small-world reservoir is also better than that of the random weight matrix.

Finally, we investigate the RC performance against the parameters of the small-world network, namely the number of nodes N and the degree of node k. We focus on the small-world range p = 0.01–0.7, which is indicated by the shaded area in Fig. 3a, and hence assume a value of p within this range. First, the dependence of the classification accuracy of the human 6-activity on N is investigated (Fig. 7a). The classification accuracy improves monotonically as the network scales up: the mean value is 54.4% for the smallest network tested, and it continues to increase to 77.4% at N = 2000. Next, we observe the impact of the node degree k on the classification accuracy (Fig. 7b). The accuracy is maximized at a relatively small degree, and it degrades monotonically as the degree is increased.

Discussion

We have conducted two benchmark tests: the classification of human activity and the time series prediction of the Mackey–Glass chaotic system. It has been demonstrated that the optimum performance is obtained from the reservoir in the small-world range bounded by p = 0.01–0.7 with a relatively small node degree k. Based on this observation, a guiding principle has been presented for systematically synthesizing an RC reservoir by exploiting the small-world nature of high clustering with a short characteristic path length. We expect that this study will draw attention to the versatile capability of the small-world network in neural network research and help in understanding RC architectures in depth.

Methods

Generation of weight matrix of reservoir

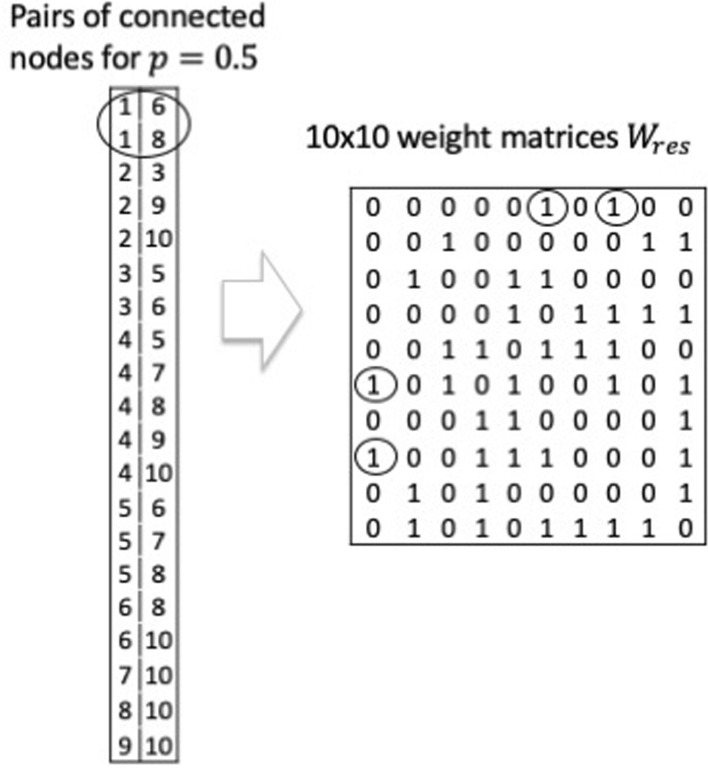

The method of generating the weight matrix Wres of the reservoir for a 10-node (N = 10) network with node degree k is illustrated in Fig. 8. The table on the left-hand side lists the pairs of connected nodes for a given p. From this table, the weight matrix of the reservoir for bidirectional connections can be generated, as shown on the right-hand side. Throughout the simulation, the nonzero elements of Wres take a binary value, and it is assumed that all the connections are bidirectional, resulting in a symmetric weight matrix. However, the nonzero elements could take an arbitrary positive value, and an asymmetric matrix may be another option.
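The conversion from a table of node pairs to a binary symmetric weight matrix can be sketched in Python as follows. The function name is hypothetical, and the example pairs are only the four links of node 1 in the regular 10-node lattice of Fig. 2 (neighbors 2, 3, 9, and 10), not the full table of Fig. 8.

```python
def weight_matrix_from_pairs(n, pairs):
    """Build an n x n binary, symmetric weight matrix from 1-based node pairs."""
    W = [[0] * n for _ in range(n)]
    for a, b in pairs:
        W[a - 1][b - 1] = 1
        W[b - 1][a - 1] = 1   # bidirectional link gives a symmetric matrix
    return W

# Links of node 1 in the regular lattice (p = 0): nodes 2, 3, 9, and 10.
pairs = [(1, 2), (1, 3), (1, 9), (1, 10)]
W = weight_matrix_from_pairs(10, pairs)
print(W[0])   # [0, 1, 1, 0, 0, 0, 0, 0, 1, 1]
```

Dropping the second assignment inside the loop would give a directed, asymmetric matrix, and replacing the value 1 with another positive number would give non-binary weights, the two alternatives mentioned in the text.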

Figure 8.

Method for generating the weight matrix Wres of the reservoir for 10-node (N = 10) networks with node degree k. The table of pairs of connected nodes is on the left-hand side, and the weight matrix Wres is on the right-hand side. For instance, the connections of node 1 listed in the table of node pairs are reflected in the weight matrix Wres, as marked by circles.

Generation algorithm of Watts–Strogatz graph12

The Watts–Strogatz graph is created in two basic steps:

Create a ring lattice with N nodes of mean degree 2k (top left of Fig. 2). Each node is connected to its 2k nearest neighboring nodes.

For each edge (link) in the graph, rewire the target node with probability p. The rewired edge cannot be a duplicate or a self-loop. This results in a partially disordered (0 < p < 1) or totally disordered (p = 1) topology (top right and bottom of Fig. 2, respectively).
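The two steps above can be sketched in plain Python as follows. This is an illustrative re-implementation under stated assumptions rather than the referenced Matlab code: the function name, the `seed` argument, and the choice of drawing the new target uniformly from all admissible nodes are assumptions, and the sketch presumes N > 2k.

```python
import random

def watts_strogatz(n, k, p, seed=None):
    """Adjacency sets of a Watts-Strogatz graph with n nodes,
    mean degree 2*k, and rewiring probability p."""
    rng = random.Random(seed)
    adj = {i: set() for i in range(n)}
    # Step 1: ring lattice, each node linked to k neighbors on either side.
    for i in range(n):
        for d in range(1, k + 1):
            j = (i + d) % n
            adj[i].add(j)
            adj[j].add(i)
    # Step 2: rewire the target of each lattice edge with probability p.
    for i in range(n):
        for d in range(1, k + 1):
            j = (i + d) % n
            if j not in adj[i] or rng.random() >= p:
                continue
            # Admissible targets: no self-loop and no duplicate edge.
            candidates = [c for c in range(n) if c != i and c not in adj[i]]
            if not candidates:
                continue
            new = rng.choice(candidates)
            adj[i].discard(j)
            adj[j].discard(i)
            adj[i].add(new)
            adj[new].add(i)
    return adj

regular = watts_strogatz(10, 2, 0.0)
print(sorted(regular[0]))   # [1, 2, 8, 9]
```

With 0-based indices, the printed neighbors of node 0 correspond to node #1 being linked with nodes #2, 3, 9, and 10 in the regular case of Fig. 2. Because each rewiring removes one link and adds one, the total number of links, and therefore the density of the weight matrix, is the same for every p.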

The basic Matlab code is available at: https://jp.mathworks.com/help/matlab/math/build-watts-strogatz-small-world-graph-model.html?lang=en. The clustering coefficient is calculated using Eqs. (4) and (5) by following the algorithm33. The corresponding Matlab code is available at https://github.com/mdhumphries/SmallWorldNess.

Classification of human activity34

The data set of the human activity is available at http://archive.ics.uci.edu/ml/machine-learning-databases/00240/UCI HAR Dataset.zip.

It includes six motions: walking, walking upstairs, walking downstairs, sitting, standing, and lying down. The temporal waveforms captured along the x-, y-, and z-axes for 2.56 s were sampled into 128 samples at intervals of 20 ms. The training and testing data sets contain 7352 and 2947 records, respectively. For the training and classification of the human activity in RC (Fig. 1), the dimensions of the weight matrices Win, Wres, and Wout of Eqs. (1), (2) and (3) follow from the input dimension L, the number of nodes N, and the number of classes, where N is specified as, for instance, 1000.

Time series prediction of Mackey–Glass (MG) chaotic signal35

The time series data and the basic Matlab code are available at:

A minimalistic sparse Echo State Networks demo with Mackey–Glass (delay 17) data in "plain" Matlab/Octave from https://mantas.info/code/simple_esn (c) 2012–2020 Mantas Lukosevicius.

Distributed under MIT license https://opensource.org/licenses/MIT.

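For the training and prediction of the MG chaotic time series, the dimensions of the weight matrices Win, Wres, and Wout of Eqs. (1), (2) and (3) follow from the input dimension L, the number of nodes N, and the output dimension, where N is specified as, for instance, 1000.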
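For readers without the data file from the referenced demo, a Mackey–Glass series with delay 17 can be approximated with the Python sketch below. It is a stand-in under stated assumptions, not the data used in this study: it integrates dx/dt = βx(t − τ)/(1 + x(t − τ)ⁿ) − γx(t) with a simple Euler scheme, and the values β = 0.2, γ = 0.1, n = 10, the step size, and the constant initial history are commonly used choices assumed here. The split into 2000 training and 2000 prediction samples follows the text.

```python
def mackey_glass(n_samples, tau=17.0, beta=0.2, gamma=0.1, n=10, dt=0.1, x0=1.2):
    """Euler integration of the Mackey-Glass delay equation,
    sampled once per unit time step (assumed parameter values)."""
    delay = int(round(tau / dt))          # delay expressed in Euler steps
    per_sample = int(round(1.0 / dt))     # Euler steps per output sample
    hist = [x0] * (delay + 1)             # constant initial history
    out = []
    for step in range(n_samples * per_sample):
        x, x_tau = hist[-1], hist[-1 - delay]
        hist.append(x + dt * (beta * x_tau / (1.0 + x_tau ** n) - gamma * x))
        if (step + 1) % per_sample == 0:
            out.append(hist[-1])
    return out

def mse(pred, target):
    """Mean square error between two equally long sequences."""
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(target)

series = mackey_glass(4000)
train, test = series[:2000], series[2000:4000]   # training / prediction split
print(len(train), len(test))                     # 2000 2000
```

The `mse` helper is the figure of merit used for the prediction benchmark; a trained reservoir would supply `pred`, and `test` would serve as the target.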

Acknowledgements

We would like to thank H. Furukawa and S. Shimizu of the National Institute of Information and Communications Technology, T. Hara and H. Toyoda of Hamamatsu Photonics Central Laboratory, and K. Ishii of the Graduate School for the Creation of New Photonic Industries for invaluable discussions. We would also like to thank the anonymous reviewers for their constructive comments and advice.

Author contributions

The sole author is responsible for 100% of the contribution.

Data availability

The raw data sets used in the simulations are available (see “Methods”).

Code availability

The Matlab-based codes used for simulations are partly available (see “Methods”).

Competing interests

The author declares no competing interests.

Footnotes

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

The online version contains supplementary material available at 10.1038/s41598-022-21235-y.

References

- 1. Verstraeten D, Schrauwen B, Stroobandt D, Van Campenhout J. Isolated word recognition with the liquid state machine: A case study. Inf. Process. Lett. 2005;95:521–528. doi: 10.1016/j.ipl.2005.05.019.

- 2. Lukoševičius M, Jaeger H. Reservoir computing approaches to recurrent neural network training. Comput. Sci. Rev. 2009;3:127–149. doi: 10.1016/j.cosrev.2009.03.005.

- 3. Jaeger H. The ‘echo state’ approach to analyzing and training recurrent neural networks. Technical Report GMD Report 148, German National Research Center for Information Technology (2001).

- 4. Jaeger H, Haas H. Harnessing nonlinearity: Predicting chaotic systems and saving energy in wireless communication. Science. 2004;304:78–80. doi: 10.1126/science.1091277.

- 5. Maass W, Natschläger T, Markram H. Real-time computing without stable states: A new framework for neural computation based on perturbations. Neural Comput. 2002;14:2531–2560. doi: 10.1162/089976602760407955.

- 6. Maass W. Computability in Context: Computation and Logic in the Real World. World Scientific; 2011. Liquid state machines: Motivation, theory, and applications; pp. 275–296.

- 7. Antonik P, Duport F, Hermans M, Smerieri A, Haelterman M, Massar S. Online training of an opto-electronic reservoir computer applied to real-time channel equalization. IEEE Trans. Neural Netw. Learn. Syst. 2017;28:2686–2698. doi: 10.1109/TNNLS.2016.2598655.

- 8. Argyris A, Bueno J, Fischer I. Photonic machine learning implementation for signal recovery in optical communications. Sci. Rep. 2018;8:8487. doi: 10.1038/s41598-018-26927-y.

- 9. Schaetti, N., Salomon, M., & Couturier, R. Echo State Networks-based Reservoir Computing for MNIST Handwritten Digits Recognition. In 2016 IEEE Intl Conference on Computational Science and Engineering (CSE) and IEEE Intl Conference on Embedded and Ubiquitous Computing (EUC) and 15th Intl Symposium on Distributed Computing and Applications for Business Engineering (DCABES) 17043857 Paris, France, Aug. 2016.

- 10. Vandoorne K, Mechet P, Van Vaerenbergh T, Fiers M, Morthier G, Verstraeten D, Schrauwen B, Dambre J, Bienstman P. Experimental demonstration of reservoir computing on a silicon photonics chip. Nat. Commun. 2014;5:3541. doi: 10.1038/ncomms4541.

- 11. Vandoorne K, Dambre J, Verstraeten D, Schrauwen B, Bienstman P. Parallel reservoir computing using optical amplifiers. IEEE Trans. Neural Netw. 2011;22:1469–1481. doi: 10.1109/TNN.2011.2161771.

- 12. Watts DJ, Strogatz SH. Collective dynamics of ‘small-world’ networks. Nature. 1998;393:440–442. doi: 10.1038/30918.

- 13. Lukoševičius M. A practical guide to applying echo state networks. Neural Netw. Tricks Trade. 2012;20:659–686. doi: 10.1007/978-3-642-35289-8_36.

- 14. Kitayama K, Notomi M, Naruse M, Inoue K, Kawakami S, Uchida A. Novel frontier of photonics for data processing: photonic accelerator. APL Photon. 2019;4:090901. doi: 10.1063/1.5108912.

- 15. Soriano MC, Brunner D, Escalona-Morán CR, Fischer I. Minimal approach to neuro-inspired information processing. Front. Comput. Neurosci. 2015;9:1–11. doi: 10.3389/fncom.2015.00068.

- 16. Polepalli, A., Soures, N., & Kudithipudi, D. Digital neuromorphic design of a liquid state machine for real-time processing. In IEEE International Conference on Rebooting Computing (ICRC) 1–8 (2016).

- 17. Appeltant L, Soriano MC, Van der Sande G, Danckaert J, Massar S, Dambre J, Schrauwen B, Mirasso CR, Fischer I. Information processing using a single dynamical node as complex system. Nat. Commun. 2011;2:468. doi: 10.1038/ncomms1476.

- 18. Brunner D, Soriano MC, Mirasso CR, Fischer I. Parallel photonic information processing at gigabyte per second data rates using transient states. Nat. Commun. 2013;4:1364. doi: 10.1038/ncomms2368.

- 19. Larger L, Soriano MC, Brunner D, Appeltant L, Gutierrez JM, Pesquera L, Mirasso CR, Fischer I. Photonic information processing beyond Turing: An optoelectronic implementation of reservoir computing. Opt. Express. 2012;20:3241–3249. doi: 10.1364/OE.20.003241.

- 20. Paquot Y, Duport F, Smerieri A, Dambre J, Schrauwen B, Haelterman M, Massar S. Optoelectronic reservoir computing. Sci. Rep. 2012;2:287. doi: 10.1038/srep00287.

- 21. Duport F, Schneider B, Smerieri A, Haelterman M, Massar S. All-optical reservoir computing. Opt. Express. 2012;20:22783–22795. doi: 10.1364/OE.20.022783.

- 22. Nakayama J, Kanno K, Uchida A. Laser dynamical reservoir computing with consistency: An approach of a chaos mask signal. Opt. Express. 2016;24(8):8679–8692. doi: 10.1364/OE.24.008679.

- 23. Bueno J, Maktoobi S, Froehly L, Fischer I, Jacquot M, Larger L, Brunner D. Reinforcement learning in a large-scale photonic recurrent neural network. Optica. 2018;5:756–760. doi: 10.1364/OPTICA.5.000756.

- 24. Freiberger M, Sackesyn S, Ma C, Katumbe A, Bienstman P, Dambre J. Improving time series recognition and prediction with networks and ensembles of passive photonic reservoirs. IEEE J. Sel. Top. Quantum Electron. 2020;26:1–11. doi: 10.1109/JSTQE.2019.2929699.

- 25. Bowers JE, et al. Recent advances in silicon photonic integrated circuits. Proc. SPIE. 2017;9774:977402–977402.

- 26. Nozaki K, Matsuo S, Fujii T, Takeda K, Shinya A, Kuramochi E, Notomi M. Femtofarad optoelectronic integration demonstrating energy-saving signal conversion and nonlinear functions. Nat. Photon. 2019;13:454–459. doi: 10.1038/s41566-019-0397-3.

- 27. Deng Z, Zhang Y. Collective behavior of a small-world recurrent neural system with scale-free distribution. IEEE Trans. Neural Netw. 2007;18(5):1364–1375. doi: 10.1109/TNN.2007.894082.

- 28. Cui H, Liu X, Li L. The architecture of dynamic reservoir in the echo state network. Chaos. 2012;22:033127. doi: 10.1063/1.4746765.

- 29. Carroll TL. Path length statistics in reservoir computers. Chaos. 2020;30:083130. doi: 10.1063/5.0014643.

- 30. Barabási AL, Albert R. Emergence of scaling in random networks. Science. 1999;286:509–512. doi: 10.1126/science.286.5439.509.

- 31. Milgram S. The small world problem. Psychol. Today. 1967;2:60–67.

- 32. de Oliveira Jr L, Stelzer F, Zhao L. Clustered and deep echo state networks for signal noise reduction. Mach. Learn. 2022. doi: 10.1007/s10994-022-06135-6.

- 33. Humphries MD, Gurney K. Network ‘Small-World-Ness’: A Quantitative method for determining canonical network equivalence. PLoS One. 2008;3:e0002051. doi: 10.1371/journal.pone.0002051.

- 34. Anguita, D., Ghio, A., Oneto, L., Parra, X. & Reyes-Ortiz, J. L. Human activity recognition on smartphones using a multiclass hardware-friendly support vector machine. In International Workshop of Ambient Assisted Living (IWAAL2012) (Vitoria-Gasteiz, Spain, December 2012).

- 35. Mackey MC, Glass L. Oscillation and chaos in physiological control systems. Science. 1977;197:287–289. doi: 10.1126/science.267326.
