Abstract

Detection of changes in facial emotions is crucial for communication and for the rapid processing of threats in the environment. This function develops throughout childhood via modulations of the earliest brain responses, such as the P100 and the N170 recorded using electroencephalography. Automatic brain signatures can be measured through expression‐related visual mismatch negativity (vMMN), which reflects the processing of unattended changes. While a growing body of research has investigated vMMN processing in adults, few studies have been conducted in children. Here, a previously validated controlled paradigm was used to disentangle specific responses to emotional deviants (angry face) from responses to neutral deviants. Latencies and amplitudes of P100 and N170 both decrease with age, confirming that sensory and face‐specific activity is not yet mature in school‐aged children. Automatic change detection‐related activity is present in children, with a vMMN pattern in response to both emotional and neutral deviant stimuli similar to that previously observed in adults. However, vMMN processing is delayed in children compared to adults and no emotion‐specific response is yet observed, suggesting immature automatic detection of salient emotional cues. To our knowledge, this is the first study investigating expression‐related vMMN in school‐aged children, and further investigations are needed to confirm these results.

Keywords: anger, change detection, emotion, face, school‐aged children, vMMN

1. INTRODUCTION

Faces are salient dynamic stimuli conveying rapid information about the environment and about others’ mental states and intentions. Emotional expressions are particularly crucial to inform and communicate about possible threats (Anderson et al., 2003). Thus, an emotional facilitation effect has often been reported, showing that even when attention is required by a concurrent task, emotional information is prioritized and automatically processed (Carretié, 2014; Herba et al., 2006; Hinojosa et al., 2015; Ikeda et al., 2013). Investigating preattentional processing of emotional changes in children allows a better understanding of how these automatic mechanisms develop (Herba et al., 2006; Morales et al., 2016; Ridderinkhof & van der Stelt, 2000; Wetzel & Schroger, 2014).

To investigate early emotional processing, event‐related potentials (ERPs) are well suited: they offer excellent temporal resolution and are easily recorded even in young populations with and without clinical conditions (Astikainen et al., 2008; Clery et al., 2012; Czigler & Pato, 2009; Kovarski et al., 2021; Qian et al., 2014). Experimental studies have investigated face processing by analyzing neural responses to all basic emotions in children, adolescents, and adults (see Batty & Taylor, 2003, 2006; Taylor et al., 2004), showing that facial expressions modulate early visual components such as the P100 and the N170 in both children and adults (Batty & Taylor, 2003; Brenner et al., 2014; Hinojosa et al., 2015; Olivares et al., 2015). A decrease in latency and amplitude with age has often been reported for these early responses, with children not yet showing a mature modulation by emotional content (Batty & Taylor, 2006; Hileman et al., 2011).

Extensively investigated and described in the auditory modality (Bartha‐Doering et al., 2015; Näätänen & Winkler, 1999), the mismatch negativity (MMN) component has also been observed in the visual modality (vMMN; see Czigler, 2014; Kimura et al., 2011). The MMN is obtained by subtracting the response to a standard stimulus from the response to a deviant stimulus, as typically presented in oddball paradigms. An increasing number of vMMN studies have investigated automatic change detection of socially relevant stimuli (Czigler, 2014), with particular attention to the emotion‐related vMMN (see Kovarski et al., 2017). The emotional vMMN has been observed in a wide time window (70–500 ms) and has been interpreted either as successive independent stages of processing (Astikainen & Hietanen, 2009; Li et al., 2012; Stefanics et al., 2012) or as a unique preattentional mechanism (Gayle et al., 2012; Kimura et al., 2012; Vogel et al., 2015).

Both positive and negative emotions have been found to elicit a vMMN response. Depending on the valence of the deviant emotion, different peak latencies and scalp distributions have been associated with the emotional vMMN (Astikainen et al., 2013; Kimura et al., 2012). Consistent with previous ERP findings (Batty & Taylor, 2003), a latency advantage has been found for happy compared with negative deviants (Astikainen & Hietanen, 2009; Zhao & Li, 2006). Conversely, a shorter vMMN latency has also been reported for fearful than for happy faces (Kimura et al., 2012; Stefanics et al., 2012). In line with this negativity bias, a greater vMMN amplitude has been reported for sadness (Gayle et al., 2012; Zhao & Li, 2006), fear (Stefanics et al., 2012), and anger (Kreegipuu et al., 2013). However, other studies reported no difference in vMMN amplitude between positive and negative emotions (Astikainen et al., 2013; Astikainen & Hietanen, 2009), suggesting that the vMMN responses measured in previous studies might partly depend on the specific deviant emotion. Despite such discrepancies, a common emotional vMMN peak has frequently been reported in the 260–350 ms latency range over occipital or parieto‐occipital electrodes (Astikainen & Hietanen, 2009; Gayle et al., 2012; Kimura et al., 2012; Susac et al., 2010, 2004; Vogel et al., 2015; Zhao & Li, 2006), and sometimes in earlier time windows (Kovarski et al., 2017; Li et al., 2012; Liu et al., 2015; Stefanics et al., 2012).

Three studies have compared emotional deviancy to neutral deviancy in adults (Gayle et al., 2012; Kovarski et al., 2017; Vogel et al., 2015), while most studies investigated emotional vMMN without comparing responses to emotional deviants to those to neutral deviants (for discussion, see Kovarski et al., 2017). Sad faces were found to elicit a greater vMMN than happy and neutral deviants (Gayle et al., 2012), and a latency advantage and/or a greater amplitude for the emotional deviancy compared to the neutral deviancy has also been described, as well as a late sustained emotional response to angry or fearful deviants, showing both the emotional specificity of vMMN and a partial overlap with neutral vMMN (Kovarski et al., 2017; Vogel et al., 2015).

Few studies have investigated visual mismatch responses (vMMR) from a developmental perspective by measuring this component in children and/or adolescents. While some studies reported developmental changes in the vMMN (Clery et al., 2012), no difference between adults and children was found in a color vMMN study (Horimoto et al., 2002). vMMN has been used to investigate early discrimination in newborns (Tanaka et al., 2001), in children with and without intellectual disability (Horimoto et al., 2002), and in children with autism (Clery et al., 2013), showing that vMMR can be detected very early in both typical and atypical development. Clery et al. (2012) measured vMMN in 8–14‐year‐old children and in adults with an oddball visual task in which moving circles changed shape, becoming ellipses (deviant stimuli). While adults presented a parieto‐occipital negativity peaking at 210 ms, the response in children consisted of three successive negative peaks in the 150–330 ms time window. Additionally, a positive vMMR at 450 ms, interpreted as reflecting a late involvement of the dorsal pathway, was observed only in children, and in an even earlier time window in children with autism (Clery et al., 2013). In a similar vein, Cleary et al. (2013) presented gratings of two different spatial frequencies as standards and deviants, and found a common vMMN response to the deviant stimulus peaking at 150 ms in adults and children, but a second peak at 230 ms in children only. A positive centro‐frontal activity was also observed in both groups, possibly reflecting the recruitment of more cognitive mechanisms involved in reorienting attentional resources. It has often been shown that early visual components continue to develop through adolescence and reach maturity only in adulthood (Batty & Taylor, 2006). Accordingly, automatic detection processing has probably not reached full maturity before the age of 16 (Tomio et al., 2012).

In accordance with previous developmental vMMN studies and evidence on early emotional processing in children and adults, we hypothesized that, although emotional change detection would elicit a specific response (i.e., different from neutral change detection), such processing would not yet be mature. However, to date, no expression‐related vMMN study has been performed in young children, and the way children process emotion‐related changes remains unexplored. Thus, the aim of the present exploratory study was to investigate the developmental aspects of automatic change detection of emotional expression by comparing emotional deviancy detection to neutral deviancy detection. Moreover, to obtain a “genuine” vMMN controlling for neural adaptation, an oddball sequence and an equiprobable sequence were presented while participants performed a concurrent visual task.

2. MATERIAL AND METHODS

2.1. Participants

Forty‐one participants completed the EEG task. After the data of five children were rejected because of incomplete recording or poor EEG quality, a sample of 18 children (mean age ± standard deviation [range]: 9.4 ± 1.4 [7–12] years; eight females) and a sample of 18 adults (25.7 ± 6.9 [18–42] years; five females) were included in the analyses. None of the participants reported any developmental difficulties in language or sensorimotor acquisition, and no central nervous system disease, infectious or metabolic disease, epilepsy, or auditory or visual deficit was reported for any participant. Recruitment was carried out through an email list and flyers at the University of Tours and among Tours Hospital employees. Written informed consent for the experiment was obtained from all participants (from parents in the case of children), and assent was also obtained from all children. The protocol was approved by the local Ethics Committee of the University Hospital of Tours according to the ethical principles of the Declaration of Helsinki.

2.2. Stimuli and procedures

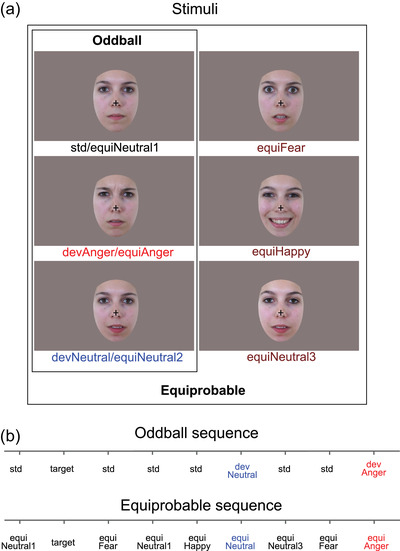

The procedure and stimuli used in the present study were the same as previously reported (Kovarski et al., 2021, 2017). Six photographs of the same actress (Figure 1a) were presented in two sequences: an oddball sequence and an equiprobable sequence (Figure 1b). The stimuli had previously been validated behaviorally for emotional significance and arousal in an independent group of participants (for details, see Kovarski et al., 2017).

FIGURE 1.

Stimuli and example of oddball and equiprobable sequences. (a) Illustration of the stimuli used in the oddball sequence (N = 3) and in the equiprobable sequence (N = 6, including the three from the oddball sequence). (b) Example of the oddball and equiprobable sequences. Targets consisted of a standard face stimulus without the central cross (participants had to detect the disappearance of the cross).

In the oddball sequence, a neutral expression was presented as the standard stimulus (std; probability of occurrence p = .80). Photographs expressing anger or with a different neutral expression were presented as the emotional deviant (devAnger, p = .10) and the neutral deviant (devNeutral, p = .10), respectively.
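The stated probabilities can be illustrated with a short Python sketch that draws a hypothetical oddball sequence. It assumes independent sampling on each trial; the randomization constraints actually used (for example, a minimum number of standards between deviants) are not described, so none are imposed, and the function name `make_oddball_sequence` is illustrative only.

```python
import random

# Probabilities of occurrence stated in the text for the oddball sequence.
ODDBALL_PROBS = {"std": 0.80, "devAnger": 0.10, "devNeutral": 0.10}

def make_oddball_sequence(n_trials, probs=ODDBALL_PROBS, seed=None):
    """Hypothetical oddball sequence: each trial is sampled independently.

    No ordering constraints are applied, because the article does not
    describe the ones used in the experiment.
    """
    rng = random.Random(seed)
    labels = list(probs)
    weights = [probs[k] for k in labels]
    return rng.choices(labels, weights=weights, k=n_trials)

# 1575 stimuli, as in the oddball sequence described in the procedure
seq = make_oddball_sequence(1575, seed=1)
counts = {k: seq.count(k) for k in ODDBALL_PROBS}
proportions = {k: c / len(seq) for k, c in counts.items()}
print(proportions)  # close to .80 / .10 / .10, varying with the seed
```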

In the equiprobable sequence, all stimuli have the same probability of occurrence. This sequence was presented to control for the neural habituation caused by repetition of the standard stimulus in oddball sequences (Kimura et al., 2009; Li et al., 2012; Schroger & Wolff, 1996). Six stimuli were presented in the equiprobable sequence: the three stimuli of the oddball sequence and three other facial expressions. Altogether, the equiprobable sequence included an angry face (the same stimulus as the angry deviant: equiAnger), fearful and happy faces (equiFear and equiHappy, respectively), and three neutral faces (equiNeutral1: same as the standard stimulus; equiNeutral2: same as the neutral deviant; and equiNeutral3), presented pseudorandomly (p = .16 each) while avoiding immediate repetition (see Figure 1b). Responses to the equiFear, equiHappy, and equiNeutral3 stimuli were not further analyzed, as these stimuli were added only to complete the design of the equiprobable sequence.
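A pseudorandom equiprobable sequence without immediate repetition can be sketched as below. This is one simple scheme, assumed for illustration: each trial is drawn uniformly from the five labels that differ from the previous one, which by symmetry keeps a long‐run probability of 1/6 (≈ .167, reported as .16) for each of the six stimuli. The exact procedure used in the study is not described.

```python
import random

# The six stimuli of the equiprobable sequence, named as in the text.
EQUI_LABELS = ["equiAnger", "equiFear", "equiHappy",
               "equiNeutral1", "equiNeutral2", "equiNeutral3"]

def make_equiprobable_sequence(n_trials, labels=EQUI_LABELS, seed=None):
    """Hypothetical pseudorandom sequence with no immediate repetition.

    Each new trial is drawn uniformly from the labels that differ from the
    previous trial, so every label keeps a long-run probability of 1/6.
    """
    rng = random.Random(seed)
    seq = [rng.choice(labels)]
    while len(seq) < n_trials:
        seq.append(rng.choice([lab for lab in labels if lab != seq[-1]]))
    return seq

# 924 stimuli, as in the equiprobable sequence described in the procedure
seq = make_equiprobable_sequence(924, seed=2)
no_repeats = all(a != b for a, b in zip(seq, seq[1:]))
counts = {lab: seq.count(lab) for lab in EQUI_LABELS}
```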

The controlled vMMN was computed by subtracting the response evoked by a stimulus presented in the equiprobable sequence from the response evoked by the same stimulus presented as a deviant in the oddball sequence. Because the same physical stimulus is compared, this procedure excludes effects of low‐level features and reveals genuine automatic change‐detection responses (Jacobsen & Schroger, 2001).

Participants were asked to focus on a concurrent visual task: target stimuli were faces in which the black fixation cross, otherwise present on the nose, disappeared. Participants were instructed to look at the fixation cross and to press a button as quickly as possible when the cross disappeared. Targets (cross disappearance) occurred only on neutral standard stimuli in the oddball sequence, but could occur on any stimulus in the equiprobable sequence (p = .05). Participants sat comfortably in an armchair 120 cm from the screen. Stimuli were presented in the central visual field (visual angle: width = 5.7°, height = 8.1°) using Presentation® software, for 150 ms with a 550 ms interstimulus interval. The oddball sequence comprised 1575 stimuli and the equiprobable sequence 924 stimuli. Total recording time was about 30 min. All participants were monitored with a camera during the recording session to ensure compliance with the task.
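The display and timing parameters can be checked with simple arithmetic. The sketch below derives the on‐screen stimulus size from the reported visual angles and viewing distance (size = 2 · d · tan(angle / 2)) and the stimulation time from the number of stimuli. It assumes that the 550 ms interstimulus interval runs from stimulus offset to the next onset (a 700 ms onset‐to‐onset interval) and ignores any pauses between sequences, which are not described.

```python
import math

VIEW_DISTANCE_CM = 120.0
# Assumption: the 550 ms interstimulus interval is measured offset-to-onset,
# so successive onsets are 150 + 550 = 700 ms apart.
SOA_S = 0.150 + 0.550

def size_from_visual_angle(angle_deg, distance_cm=VIEW_DISTANCE_CM):
    """Physical extent subtending angle_deg at distance_cm: 2 * d * tan(angle / 2)."""
    return 2 * distance_cm * math.tan(math.radians(angle_deg) / 2)

width_cm = size_from_visual_angle(5.7)    # about 11.9 cm
height_cm = size_from_visual_angle(8.1)   # about 17.0 cm
n_stimuli = 1575 + 924                    # oddball + equiprobable sequences
duration_min = n_stimuli * SOA_S / 60     # about 29.2 min of stimulation
```

Under these assumptions, the stimulation time is consistent with the total recording time of about 30 min.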

2.3. EEG recording

EEG data were recorded using a 64‐channel ActiveTwo system (BioSemi®, The Netherlands). Two electrodes were applied on the left and right outer canthi of the eyes and one below the left eye to record the electrooculographic activity. An additional electrode was placed on the tip of the nose for offline referencing. During recording, impedances were kept below 10 kΩ. EEG signal was recorded with a sampling rate of 512 Hz and filtered at 0–104 Hz.

2.4. Preprocessing

A 0.3‐Hz digital high‐pass filter was applied to the EEG signal. Ocular artifacts were removed using independent component analysis (ICA) as implemented in EEGLAB. Blink artifacts were captured in components that were selectively removed via inverse ICA transformation. Thirty‐two components were examined for artifacts, and one or two components were removed in each participant. Muscular and other recording artifacts were discarded manually. The continuously recorded EEG was segmented into epochs time‐locked to stimulus onset, over a 700 ms analysis period from 100 ms prestimulus to 600 ms poststimulus. ERPs were baseline corrected and digitally low‐pass filtered with a cutoff frequency of 30 Hz.
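The filtering, epoching, and baseline steps can be sketched in Python with NumPy and SciPy on synthetic data. This is not the pipeline actually used (the ICA step performed in EEGLAB is omitted). Zero‐phase Butterworth filters and their orders are assumptions, since only the cutoff frequencies are reported. At 512 Hz, the −100 to 600 ms window spans 358 samples, 51 of them prestimulus.

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt

FS = 512  # sampling rate in Hz, as reported

def highpass(data, cutoff=0.3, fs=FS, order=2):
    """Zero-phase Butterworth high-pass along the time axis (order assumed)."""
    sos = butter(order, cutoff, btype="highpass", fs=fs, output="sos")
    return sosfiltfilt(sos, data, axis=-1)

def lowpass(data, cutoff=30.0, fs=FS, order=4):
    """Zero-phase Butterworth low-pass along the time axis (order assumed)."""
    sos = butter(order, cutoff, btype="lowpass", fs=fs, output="sos")
    return sosfiltfilt(sos, data, axis=-1)

def epoch(data, onsets, tmin=-0.1, tmax=0.6, fs=FS):
    """Cut (channels x samples) data into (trials x channels x samples) epochs
    from tmin to tmax around each onset, then subtract the prestimulus mean."""
    start, stop = int(round(tmin * fs)), int(round(tmax * fs))  # -51, 307
    epochs = np.stack([data[:, o + start:o + stop] for o in onsets])
    n_base = -start  # number of prestimulus samples
    return epochs - epochs[:, :, :n_base].mean(axis=-1, keepdims=True)

# Synthetic demo: 8 channels of noise, one "stimulus" every 700 ms
rng = np.random.default_rng(0)
raw = rng.standard_normal((8, 60 * FS))
clean = highpass(raw)
onsets = np.arange(FS, raw.shape[1] - FS, int(0.7 * FS))
epochs = epoch(clean, onsets)
erp = lowpass(epochs.mean(axis=0))  # average across trials, then 30 Hz low-pass
```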

The first three trials of a sequence, as well as trials immediately following deviant or target stimuli, were excluded from averaging. Each ERP was computed by averaging all trials of each stimulus type (see Figure 2) from the oddball sequence (std, devAnger, devNeutral) and from the equiprobable sequence (equiAnger, equiNeutral2). For each stimulus of interest (deviant stimuli in the oddball sequence, and the same stimuli presented in the equiprobable sequence), the mean (± SD) number of artifact‐free trials was, for the Children group: 109 ± 13 (devAnger), 107 ± 14 (devNeutral), 105 ± 16 (equiAnger), and 101 ± 16 (equiNeutral2); and for the Adults group: 127 ± 13 (devAnger), 128 ± 16 (devNeutral), 120 ± 21 (equiAnger), and 118 ± 21 (equiNeutral2). t‐tests performed for each stimulus of interest showed that the groups differed significantly in the number of trials averaged (all p < .016); however, inspection of individual ERPs confirmed that all participants correctly processed the visual stimuli. This was also supported by the developmental effects found for the P1 and N170 components (see Section 3).
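The exclusion rule for averaging can be expressed as a small Python function. The trial labels and the function name are hypothetical, the rule is applied here to an oddball‐like label list, and artifact rejection is not modeled.

```python
def trials_to_average(labels, exclude_after=("devAnger", "devNeutral", "target"),
                      n_skip=3):
    """Indices of trials kept for averaging (hypothetical label scheme).

    Rule from the text: drop the first three trials of a sequence and any
    trial that immediately follows a deviant or a target stimulus.
    """
    keep = []
    for i in range(n_skip, len(labels)):
        if labels[i - 1] in exclude_after:
            continue  # previous trial was a deviant or a target
        keep.append(i)
    return keep

labels = ["std", "std", "std", "std", "devAnger",
          "std", "std", "target", "std", "devNeutral"]
kept = trials_to_average(labels)
print(kept)  # [3, 4, 6, 7, 9]
```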

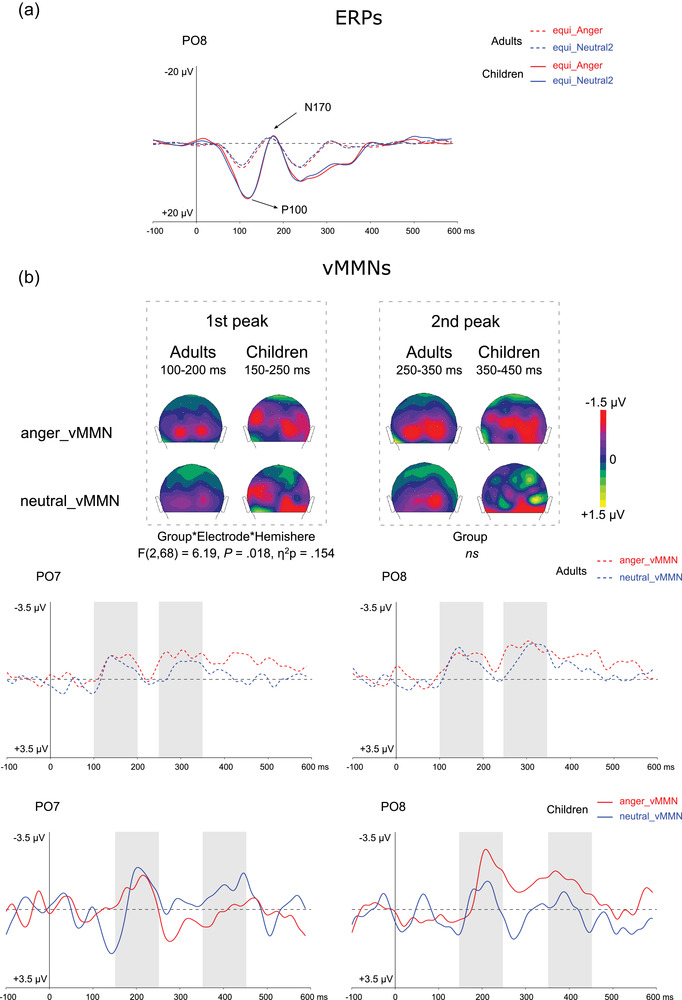

FIGURE 2.

(a) Grand average ERPs at PO8 elicited by emotional (anger, red lines) and neutral (blue lines) stimuli presented in the equiprobable sequence for adults (dotted lines) and for children (solid lines). P1 and N170 components are indicated by arrows. (b) Top: two‐dimensional scalp topographies (back view) showing mean activity in selected time windows for each group and condition. Bottom: grand average vMMN at PO7 and PO8 elicited by emotional (angry, red lines) and neutral (blue lines) deviants for adults (dotted lines) and for children (solid lines). Gray rectangles represent the time windows in which peak selection was applied for the first and second peak measurements.

Responses were analyzed and compared with the ELAN software (Aguera et al., 2011). vMMNs were calculated as the ERPs to devAnger and devNeutral stimuli minus the responses elicited by the same emotional (equiAnger) and neutral (equiNeutral2) stimuli, respectively, presented in the equiprobable sequence (i.e., anger vMMN = devAnger − equiAnger; neutral vMMN = devNeutral − equiNeutral2) (Kimura et al., 2009; Kovarski et al., 2017). Grand‐average difference waveforms were examined in each group to identify the deflections of interest. Group comparisons were performed both on specific brain activities related to emotional and neutral deviants and over the entire scalp.
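The two subtractions defined above can be sketched with NumPy arrays. This is a minimal illustration, not the ELAN implementation; it assumes that per‐subject ERPs are stored as arrays shaped (subjects, channels, samples) in a dictionary keyed by the condition names used in the text.

```python
import numpy as np

def compute_vmmns(erps):
    """Controlled vMMNs: deviant minus the same stimulus in the equiprobable sequence.

    erps maps condition names to arrays shaped (subjects, channels, samples).
    """
    return {
        "anger": erps["devAnger"] - erps["equiAnger"],
        "neutral": erps["devNeutral"] - erps["equiNeutral2"],
    }

def grand_average(wave):
    """Average a (subjects, channels, samples) array across subjects."""
    return wave.mean(axis=0)

# Toy data: 18 subjects, 64 channels, 358 samples (-100 to 600 ms at 512 Hz)
shape = (18, 64, 358)
erps = {name: np.zeros(shape)
        for name in ("devAnger", "equiAnger", "devNeutral", "equiNeutral2")}
erps["devAnger"] -= 1.0  # toy deviant made uniformly 1 µV more negative than its control
vmmns = compute_vmmns(erps)
ga = {cond: grand_average(w) for cond, w in vmmns.items()}
```

Subtracting the physically identical equiprobable stimulus, rather than the standard, is what keeps low‐level stimulus differences out of the difference wave.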

2.5. Statistical analyses

2.5.1. Behavioral analysis

Accuracy and false alarms (FAs) in the target detection task were analyzed, and the sensitivity index dʹ = z(correct response rate) − z(FA rate) was computed to evaluate participants’ attention to the task.
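The sensitivity index can be computed with SciPy's inverse normal cumulative distribution function (`norm.ppf`) as z. The count‐based helper applies a log‐linear correction (adding 0.5 to counts and 1 to totals). This correction is an assumption: it avoids infinite z‐scores when a participant has 100% hits or no false alarms, and the article does not state which correction, if any, was used.

```python
import numpy as np
from scipy.stats import norm

def d_prime(hit_rate, fa_rate):
    """Sensitivity index: z(hit rate) - z(false-alarm rate)."""
    return norm.ppf(hit_rate) - norm.ppf(fa_rate)

def d_prime_from_counts(hits, n_targets, false_alarms, n_nontargets):
    """d' from raw counts with a log-linear correction (assumed, not from the
    article) that keeps both rates strictly between 0 and 1."""
    hit_rate = (hits + 0.5) / (n_targets + 1)
    fa_rate = (false_alarms + 0.5) / (n_nontargets + 1)
    return d_prime(hit_rate, fa_rate)

print(round(d_prime(0.90, 0.10), 2))  # 2.56
```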

2.5.2. Event‐related potentials

P100 and N170 peak latency and amplitude to angry and neutral stimuli presented in the equiprobable sequence were analyzed (see Figure 2a).

P100 peak latency and amplitude were measured in all participants as the most positive peak in the 70–160 ms time window. A repeated‐measures ANOVA was performed with Group (children, adults) as a between‐subject factor and Emotion (anger, neutral) and Hemisphere (O1, O2) as within‐subject factors.

A similar analysis was carried out for the N170 component over the P5, P6, PO7, and PO8 electrodes by measuring the latency and amplitude of the most negative peak in the 120–220 ms time window. Electrode was also added as a factor, because group‐averaged scalp topographies suggested a different localization of this component in the two groups. Significant interactions were further investigated with post hoc comparisons, reporting Bonferroni‐corrected p‐values.
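Peak extraction in a fixed search window can be sketched with NumPy. The windows and polarities come from the text. The waveform is a synthetic toy ERP sampled at 512 Hz, and the helper name `peak_in_window` is hypothetical. In practice the function would be applied per participant, condition, and electrode before the ANOVA, which is not shown.

```python
import numpy as np

# Search windows (ms) and polarity for each component, from the text.
WINDOWS = {"P100": ((70, 160), +1), "N170": ((120, 220), -1)}

def peak_in_window(times_ms, signal, window_ms, polarity):
    """Latency and amplitude of the most positive (polarity=+1) or most
    negative (polarity=-1) sample of signal inside window_ms."""
    lo, hi = window_ms
    mask = (times_ms >= lo) & (times_ms <= hi)
    t, x = times_ms[mask], signal[mask]
    i = np.argmax(polarity * x)
    return t[i], x[i]

# Toy waveform sampled at 512 Hz: positive bump at 110 ms, negative at 170 ms
times = np.arange(-100, 600, 1000 / 512)
erp = 5 * np.exp(-((times - 110) / 15) ** 2) - 6 * np.exp(-((times - 170) / 20) ** 2)
results = {name: peak_in_window(times, erp, win, pol)
           for name, (win, pol) in WINDOWS.items()}
```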

2.5.3. Visual mismatch negativity

For both groups (children and adults) and conditions (anger and neutral), vMMN amplitude was tested at each time point and electrode to verify that deviancy elicited significant activity in the parieto‐occipital region. Permutation tests in the 50–600 ms time window compared each deviant stimulus with the same stimulus presented in the equiprobable sequence (e.g., devAnger vs. equiAnger). A correction for multiple comparisons was applied (Guthrie & Buchwald, 1991).
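A point‐wise permutation test followed by a consecutive‐time‐point criterion can be sketched as below. This is a swapped‐in illustration, not the ELAN procedure: it assumes a sign‐flip permutation test on per‐subject difference waves at one electrode. The run‐length filter follows the spirit of Guthrie and Buchwald (1991), whose minimum run length depends on epoch length and autocorrelation. Here `min_run` is a placeholder value, not one reported in the article.

```python
import numpy as np

def sign_flip_pvalues(diff, n_perm=2000, seed=0):
    """Two-sided point-wise permutation test of mean(diff) against zero.

    diff: (subjects, samples) deviant-minus-control waveforms at one electrode.
    Each permutation flips the sign of whole subject waveforms at random.
    """
    rng = np.random.default_rng(seed)
    observed = np.abs(diff.mean(axis=0))
    exceed = np.zeros(diff.shape[1])
    for _ in range(n_perm):
        signs = rng.choice([-1.0, 1.0], size=(diff.shape[0], 1))
        exceed += np.abs((signs * diff).mean(axis=0)) >= observed
    return (exceed + 1) / (n_perm + 1)

def keep_consecutive(pvals, alpha=0.05, min_run=10):
    """Retain significant samples only inside runs of >= min_run consecutive
    significant samples (min_run is an assumed placeholder value)."""
    sig = pvals < alpha
    keep = np.zeros_like(sig)
    start = None
    for i, s in enumerate(np.append(sig, False)):  # trailing False closes last run
        if s and start is None:
            start = i
        elif not s and start is not None:
            if i - start >= min_run:
                keep[start:i] = True
            start = None
    return keep

# Synthetic demo: 18 subjects, 100 samples, a true negativity at samples 40-59
rng = np.random.default_rng(1)
diff = rng.standard_normal((18, 100))
diff[:, 40:60] -= 3.0
mask = keep_consecutive(sign_flip_pvalues(diff))
```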

Posterior vMMN peak amplitude was then measured specifically over occipital, parieto‐occipital, and parietal electrodes: O1, O2, PO7, PO8, P7, and P8 (see Kovarski et al., 2021). The time course of the responses was visually inspected and, as in previous studies, two deflections were identified. Grand‐average responses in both groups and conditions suggested a similar response pattern in adults and children, but with children’s responses delayed in time (by about 50 ms for the first deflection and about 100 ms for the second, according to the grand averages). Thus, to compare groups, peak amplitudes were measured in different time windows in each group. The first deflection was measured as the most negative peak in the 100–200 ms time window in adults and in the 150–250 ms time window in children. The second deflection was measured as the most negative peak in the 250–350 ms time window in adults and in the 350–450 ms time window in children. A repeated‐measures ANOVA was performed for each vMMN peak amplitude with Group (children, adults) as a between‐subject factor and Emotion (anger, neutral), Electrode (occipital, parieto‐occipital, parietal), and Hemisphere (left, right) as within‐subject factors. Significant interactions were further explored with post hoc comparisons, reporting Bonferroni‐corrected p‐values.
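The group‐specific search windows can be written as a lookup table. The sketch takes the most negative amplitude of a vMMN difference wave in each window. The toy waveform and the function name are hypothetical, and the resulting amplitudes would feed the ANOVA, which is not shown.

```python
import numpy as np

# Group-specific search windows (ms) for the two vMMN deflections, from the text.
VMMN_WINDOWS = {
    "adults":   {"peak1": (100, 200), "peak2": (250, 350)},
    "children": {"peak1": (150, 250), "peak2": (350, 450)},
}

def vmmn_peaks(times_ms, wave, group):
    """Most negative amplitude of a vMMN difference wave in each window of group."""
    peaks = {}
    for name, (lo, hi) in VMMN_WINDOWS[group].items():
        in_window = (times_ms >= lo) & (times_ms <= hi)
        peaks[name] = wave[in_window].min()
    return peaks

# Toy child vMMN on a 1 ms grid: negativities at 200 ms and 400 ms
times = np.arange(-100, 600)
child_wave = (-2.0 * np.exp(-((times - 200) / 20) ** 2)
              - 1.5 * np.exp(-((times - 400) / 30) ** 2))
child_peaks = vmmn_peaks(times, child_wave, "children")
```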

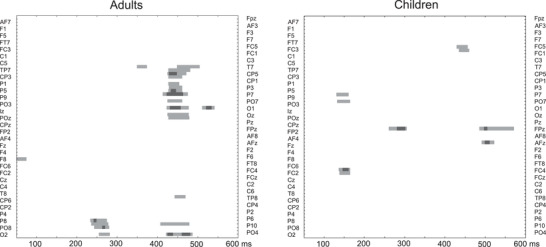

In addition to peak analyses, permutation tests in the 50–600 ms time window compared the two vMMNs (anger vMMN vs. neutral vMMN) within each group (children and adults) (Figure 3). A correction for multiple comparisons was applied (Guthrie & Buchwald, 1991). Groups were not compared with this method, because the latency shift between groups could yield effects driven by latency rather than by amplitude.

FIGURE 3.

Permutation test analyses showing statistical significance over the entire scalp (64 electrodes, left electrodes on the top and right electrodes on the bottom) in the 50–600 ms latency range comparing vMMN conditions (anger vs. neutral) in adults (left) and in children (right). Statistical significance is represented by light gray and gray colors (p < .05 and p < .01, respectively). For better readability, electrode labels are presented alternately on the left and right sides.

3. RESULTS

3.1. Behavioral analysis

Analysis of dʹ revealed a Group difference, with children presenting smaller values than adults (children: dʹ = 3.07 ± 1; adults: dʹ = 4.53 ± 0.8; t(34) = 4.84, p < .001). It is not surprising that children had more difficulty completing the task; the two youngest children obtained the poorest scores (dʹ < 2). Nevertheless, visual inspection of their obligatory P1 and N170 responses confirmed that all participants showed the expected face processing, indicating that the stimuli were properly seen and processed.
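As a consistency check, the reported t statistic can be recovered from the group summaries with SciPy's `ttest_ind_from_stats`. The sketch assumes a pooled‐variance (Student) independent‐samples t‐test, which matches the reported 34 degrees of freedom. Because the means and SDs are rounded, the reconstruction is approximate.

```python
from scipy.stats import ttest_ind_from_stats

# Reported d' summaries (mean ± SD, n = 18 per group)
res = ttest_ind_from_stats(mean1=4.53, std1=0.8, nobs1=18,   # adults
                           mean2=3.07, std2=1.0, nobs2=18,   # children
                           equal_var=True)
print(round(res.statistic, 2))  # 4.84
```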

3.2. Event‐related potentials

The results revealed a significant Group effect for P100 latency, with children presenting a delayed P100 compared to adults (F(1,34) = 9.21, p = .005, ηp² = .213; mean across conditions over O2: children, 124 ± 9 ms; adults, 110 ± 11 ms), but no other effects on latency (Emotion, Hemisphere, or interactions; p > .30). Children presented a greater P100 amplitude than adults (F(1,34) = 66.24, p < .001, ηp² = .661). No other significant effect on P100 amplitude was found, although a trend was observed for the Group × Emotion interaction (p = .08), possibly reflecting a greater response to emotional than to neutral stimuli in adults (see Kovarski et al., 2017).

Analysis of N170 latency revealed a significant Emotion × Hemisphere × Group interaction (F(1,34) = 5.17, p = .029, ηp² = .132), but Bonferroni‐corrected post hoc analyses revealed no significant differences. Groups differed in N170 amplitude (F(1,34) = 4.79, p = .035, ηp² = .124), and a significant Group × Electrode interaction was found (F(1,34) = 5.18, p = .029, ηp² = .132), due to children presenting a greater (more negative) N170 amplitude than adults over the parietal electrodes only (P5 and P6; p = .04).
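The reported effect sizes can be cross‐checked against the F statistics with the standard identity ηp² = F·df_effect / (F·df_effect + df_error). The sketch below recomputes them for the effects with df = (1, 34). The third decimal may differ slightly from the reported value because the F values themselves are rounded.

```python
def partial_eta_squared(f, df_effect, df_error):
    """Partial eta squared from an F ratio: F*df1 / (F*df1 + df2)."""
    return f * df_effect / (f * df_effect + df_error)

# (F, reported partial eta squared) for effects reported with df = (1, 34)
REPORTED = {
    "P100 latency: Group": (9.21, 0.213),
    "P100 amplitude: Group": (66.24, 0.661),
    "N170 latency: Emotion x Hemisphere x Group": (5.17, 0.132),
    "N170 amplitude: Group": (4.79, 0.124),
    "N170 amplitude: Group x Electrode": (5.18, 0.132),
}

for effect, (f, eta_reported) in REPORTED.items():
    recomputed = partial_eta_squared(f, 1, 34)
    print(f"{effect}: recomputed {recomputed:.3f}, reported {eta_reported}")
```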

3.3. Visual mismatch negativity

vMMN responses were partly similar in children and adults, showing two negative deflections, with the first peak being more clearly identifiable (see Figure 2).

In the posterior region, the analysis of the first negative peak (measured in a group‐specific time window) revealed an Electrode effect (F(2,68) = 3.27, p = .044, ηp² = .088), due to parieto‐occipital electrodes presenting a more negative amplitude than parietal ones (p = .046). A significant Electrode × Hemisphere interaction was found (F(2,68) = 6.68, p = .014, ηp² = .164), further qualified by a three‐way Electrode × Hemisphere × Group interaction (F(2,68) = 6.19, p = .018, ηp² = .154). After Bonferroni correction, post hoc comparisons did not reach significance, although the grand averages suggest larger hemispheric differences between emotional and non‐emotional vMMN in children than in adults (see Kovarski et al., 2017). No other effects or interactions were significant.

The analysis of the second peak revealed only a significant Electrode effect (F(2,68) = 6.48, p = .003, ηp² = .160), due to both occipital and parieto‐occipital electrodes presenting a more negative vMMN than parietal electrodes (p = .003 and p = .032, respectively). No Group effect was found.

Permutation analyses in each group are displayed in Figure 3. On the left, the analysis in adults confirms the emotional effects previously reported (Kovarski et al., 2021, 2017), in particular the late sustained activity in response to emotional deviancy over the occipital and parieto‐occipital regions. This is not surprising, as some of the adults included in the present study were also included in these previous studies. On the right, in contrast to adults, the analysis shows no significant clusters of emotion‐specific activity in children, who did not present significant late sustained activity in response to emotional deviancy.

4. DISCUSSION

In the present study, we investigated the early processing of emotional face changes in school‐aged children compared to adults. In addition to visual and face‐specific ERPs (i.e., P100 and N170 components), we investigated whether a specific brain response to emotional change was present in children, similar to that previously observed in adults (see Astikainen & Hietanen, 2009; Gayle et al., 2012; Kovarski et al., 2017). Brain responses elicited by neutral change were compared to those elicited by emotional change (i.e., angry expression). Accordingly, an oddball sequence included both neutral and emotional deviant stimuli, and an additional equiprobable sequence was presented, allowing genuine vMMN responses to be measured with reduced contributions of low‐level differences and neural adaptation. To our knowledge, this is the first study on the development of the emotional facial expression vMMN, investigating the interplay between change detection and emotion processing in children.

The analyses of sensory responses revealed increased amplitude and delayed latency of the P100 in children compared to adults, as shown by previous research using simple nonsocial or face stimuli (Batty & Taylor, 2006; Cleary et al., 2013), confirming that sensory and face‐specific processing, as well as the underlying brain activity, are not yet mature in school‐aged children (Passarotti et al., 2003). No clear group difference in N170 latency was found (post hoc tests of the significant interaction did not reach significance). This could be due to great variability within the children group, but also to the specific age range tested here (9.4 ± 1.4 years). Accordingly, Batty and Taylor (2006) showed that N170 latency decreased with age but reached its shortest values by 10–11 years of age (similar to those recorded in adolescents), suggesting that, compared with the P1, the decrease in N170 latency occurs earlier in development (see Taylor et al., 2004).

N170 amplitude was more negative in children than in adults, especially over the parietal region, in line with previous developmental findings. Additionally, the absence of an emotional effect on the N170 component in both school‐aged children and adults is in line with previous findings (Batty & Taylor, 2006; Kovarski et al., 2017; Taylor et al., 2004; Usler et al., 2020).

Previous studies in adults using the same stimuli and design revealed specific responses for emotional change detection, but also a similar pattern elicited by emotional and neutral deviancy, suggesting both common underlying activity but greater emotional saliency (Kovarski et al., 2021, 2017). Two negative deflections were observed over the occipital and parieto‐occipital region; however, only the emotional deviant face elicited a sustained response, suggesting an emotion‐specific brain activity.

vMMN analyses of the present study also show that automatic change detection is not mature in children, confirming previous vMMN studies using other stimuli (Cleary et al., 2013; Clery et al., 2012). While Clery et al. (2012) reported three successive deflections, another study using simple spatial‐frequency stimuli suggested that individual differences or the type of stimuli (i.e., moving circles) could partly explain this pattern (Cleary et al., 2013). Moreover, Cleary et al. (2013) found a more negative response in adults than in children, but a similar latency. Here, latencies were not compared between groups: because peaks occurred later in children (by ∼50 and ∼100 ms for the first and second peaks, respectively), different time windows were applied for peak extraction, and a latency comparison would have been biased. Discrepancies between the present and previous studies could be due to the type of stimuli and protocol used. In our study, the stimuli were more complex and carried social and emotional information. Moreover, we controlled for low‐level features, as the vMMN was measured by subtracting the response to a stimulus presented in the equiprobable sequence from the response to the same stimulus presented as a deviant in the oddball sequence, revealing preattentive mechanisms rather than responses due to different visual features.

Here, some similarities between the responses recorded in adults and in children suggest that the brain processes underlying facial deviancy detection are already established in children, although emotional specificity has not yet developed. It is important to acknowledge that the lack of emotion‐specific statistical differences may be partly due to the great variability in the children group, which is itself informative about the development of emotional face change detection (Lee et al., 2013). Moreover, crucial functions related to emotional processing, such as emotional regulation, continue to develop throughout childhood (Zeman et al., 2006), consistent with the idea that the underlying brain activity is not yet fully established.

As in adults, visual inspection of the responses revealed that neutral and emotional vMMNs had similar time courses and shapes in children, especially over the right hemisphere (see Figure 2). However, back‐view topographies suggest a less clear occipital response than that observed in adults. Peak analyses revealed neither group effects nor emotion‐specific responses in children; accordingly, permutation tests performed in each group separately indicate that emotion‐specific change detection is not yet mature in children (see Figure 3). The underlying neural mechanisms may not be fully developed in children and may also be less specialized (see Lee et al., 2013), especially within the ventral occipitotemporal cortex (Passarotti et al., 2003) and the fusiform gyrus, which reaches adult‐like activation at 16 years of age (Golarai et al., 2010).

It is important to keep in mind that the time course of the sustained effect observed in adults in response to emotional deviance corresponds to the time window of the second peak observed in children, making it challenging to compare groups for this late response, which might reflect a different mechanism. Nevertheless, grand‐average responses as well as permutation tests suggest that late sustained emotional activity is not yet present in school‐aged children.

The double peak pattern observed in this study has previously been reported in a vMMN study in children using simple nonsocial stimuli (Cleary et al., 2013), but also in the auditory domain in response to emotional sounds (Charpentier et al., 2018).

Crucially, the first vMMN peak in children is observed after both the P100 and N170 components, whereas in adults it occurs between these two visual and face‐specific ERP components. In children, the first change detection response recorded in the posterior region (i.e., the first vMMN peak) may require the face to be fully processed (as reflected by the N170), whereas in adults only P100 processing precedes the first vMMN peak (see Kovarski et al., 2017). These developmental findings suggest a progressive independence between sensory responses and mismatch‐related brain activity, although further research should confirm this hypothesis (for a debate, see Astikainen et al., 2013, 2008).

This study has some limitations. Previous research has shown that age effects may be greater for happy than for angry faces (Wu et al., 2016). Here, only one emotional deviant stimulus was used (i.e., an angry expression); further studies using other emotional deviants are therefore needed to determine whether these results generalize to other emotions. vMMN responses were heterogeneous, especially in the youngest group; further research should account for individual differences in both ERP components and vMMN.

Overall, this study confirms that vMMN is a useful tool for investigating automatic change detection, even in the developing brain. Comparing healthy control groups with clinical groups could also help determine whether specific behavioral deficits are predicted by particular vMMN characteristics, as suggested by previous clinical research on emotion‐related vMMN in depression, autism, schizophrenia, and panic disorder (Chang et al., 2010; Csukly et al., 2013; Kovarski et al., 2021; Tang et al., 2013).

5. CONCLUSIONS

To our knowledge, the present study is the first to investigate vMMN to emotional faces in children. The results reveal that although the shape of the change detection signature is already present in school‐aged children, it is not yet mature. This is supported by the permutation tests performed in each group: the emotion‐specific responses found in adults did not reach significance in children, which could reflect either a lack of emotional effect or the marked interindividual heterogeneity. These results provide additional evidence on emotional processing in children but need to be replicated. Future studies could relate these findings to complex social skills and behaviors such as emotion regulation.

CONFLICT OF INTEREST

The authors declare no conflict of interest.

ACKNOWLEDGMENTS

We thank Céline Courtin for help with the recordings and Sylvie Roux, Annabelle Merchie, and Rémy Magné for technical support. We thank all the participants who took part in the study. This work was supported by a French National Research Agency grant (ANR‐12‐JSH2 0001‐01‐AUTATTEN).

Kovarski, K., Charpentier, J., Houy‐Durand, E., Batty, M., & Gomot, M. (2022). Emotional expression visual mismatch negativity in children. Developmental Psychobiology, 64, e22326. 10.1002/dev.22326

DATA AVAILABILITY STATEMENT

Data supporting the findings of this study are available from the corresponding author upon request.

REFERENCES

- Aguera, P. E., Jerbi, K., Caclin, A., & Bertrand, O. (2011). ELAN: A software package for analysis and visualization of MEG, EEG, and LFP signals. Computational Intelligence and Neuroscience, 2011, 158970. 10.1155/2011/158970

- Anderson, A. K., Christoff, K., Panitz, D., De Rosa, E., & Gabrieli, J. D. (2003). Neural correlates of the automatic processing of threat facial signals. Journal of Neuroscience, 23(13), 5627–5633.

- Astikainen, P., Cong, F., Ristaniemi, T., & Hietanen, J. K. (2013). Event‐related potentials to unattended changes in facial expressions: Detection of regularity violations or encoding of emotions? Frontiers in Human Neuroscience, 7, 557. 10.3389/fnhum.2013.00557

- Astikainen, P., & Hietanen, J. K. (2009). Event‐related potentials to task‐irrelevant changes in facial expressions. Behavioral and Brain Functions, 5, 30. 10.1186/1744-9081-5-30

- Astikainen, P., Lillstrang, E., & Ruusuvirta, T. (2008). Visual mismatch negativity for changes in orientation—A sensory memory‐dependent response. European Journal of Neuroscience, 28(11), 2319–2324. 10.1111/j.1460-9568.2008.06510.x

- Bartha‐Doering, L., Deuster, D., Giordano, V., am Zehnhoff‐Dinnesen, A., & Dobel, C. (2015). A systematic review of the mismatch negativity as an index for auditory sensory memory: From basic research to clinical and developmental perspectives. Psychophysiology, 52(9), 1115–1130. 10.1111/psyp.12459

- Batty, M., & Taylor, M. J. (2003). Early processing of the six basic facial emotional expressions. Brain Research: Cognitive Brain Research, 17(3), 613–620.

- Batty, M., & Taylor, M. J. (2006). The development of emotional face processing during childhood. Developmental Science, 9(2), 207–220. 10.1111/j.1467-7687.2006.00480.x

- Brenner, C. A., Rumak, S. P., Burns, A. M., & Kieffaber, P. D. (2014). The role of encoding and attention in facial emotion memory: An EEG investigation. International Journal of Psychophysiology, 93(3), 398–410. 10.1016/j.ijpsycho.2014.06.006

- Carretié, L. (2014). Exogenous (automatic) attention to emotional stimuli: A review. Cognitive, Affective & Behavioral Neuroscience, 14(4), 1228–1258. 10.3758/s13415-014-0270-2

- Chang, Y., Xu, J., Shi, N., Zhang, B., & Zhao, L. (2010). Dysfunction of processing task‐irrelevant emotional faces in major depressive disorder patients revealed by expression‐related visual MMN. Neuroscience Letters, 472(1), 33–37. 10.1016/j.neulet.2010.01.050

- Charpentier, J., Kovarski, K., Roux, S., Houy‐Durand, E., Saby, A., Bonnet‐Brilhault, F., Latinus, M., & Gomot, M. (2018). Brain mechanisms involved in angry prosody change detection in school‐age children and adults, revealed by electrophysiology. Cognitive, Affective & Behavioral Neuroscience, 18(4), 748–763. 10.3758/s13415-018-0602-8

- Cleary, K. M., Donkers, F. C., Evans, A. M., & Belger, A. (2013). Investigating developmental changes in sensory processing: Visual mismatch response in healthy children. Frontiers in Human Neuroscience, 7, 922. 10.3389/fnhum.2013.00922

- Clery, H., Bonnet‐Brilhault, F., Lenoir, P., Barthelemy, C., Bruneau, N., & Gomot, M. (2013). Atypical visual change processing in children with autism: An electrophysiological study. Psychophysiology, 50(3), 240–252. 10.1111/psyp.12006

- Clery, H., Roux, S., Besle, J., Giard, M. H., Bruneau, N., & Gomot, M. (2012). Electrophysiological correlates of automatic visual change detection in school‐age children. Neuropsychologia, 50(5), 979–987. 10.1016/j.neuropsychologia.2012.01.035

- Csukly, G., Stefanics, G., Komlosi, S., Czigler, I., & Czobor, P. (2013). Emotion‐related visual mismatch responses in schizophrenia: Impairments and correlations with emotion recognition. PLoS ONE, 8(10), e75444. 10.1371/journal.pone.0075444

- Czigler, I. (2014). Visual mismatch negativity and categorization. Brain Topography, 27(4), 590–598. 10.1007/s10548-013-0316-8

- Czigler, I., & Pato, L. (2009). Unnoticed regularity violation elicits change‐related brain activity. Biological Psychology, 80(3), 339–347. 10.1016/j.biopsycho.2008.12.001

- Gayle, L. C., Gal, D. E., & Kieffaber, P. D. (2012). Measuring affective reactivity in individuals with autism spectrum personality traits using the visual mismatch negativity event‐related brain potential. Frontiers in Human Neuroscience, 6, 334. 10.3389/fnhum.2012.00334

- Golarai, G., Liberman, A., Yoon, J. M., & Grill‐Spector, K. (2010). Differential development of the ventral visual cortex extends through adolescence. Frontiers in Human Neuroscience, 3, 80. 10.3389/neuro.09.080.2009

- Guthrie, D., & Buchwald, J. S. (1991). Significance testing of difference potentials. Psychophysiology, 28(2), 240–244.

- Herba, C. M., Landau, S., Russell, T., Ecker, C., & Phillips, M. L. (2006). The development of emotion‐processing in children: Effects of age, emotion, and intensity. Journal of Child Psychology and Psychiatry and Allied Disciplines, 47(11), 1098–1106. 10.1111/j.1469-7610.2006.01652.x

- Hileman, C. M., Henderson, H., Mundy, P., Newell, L., & Jaime, M. (2011). Developmental and individual differences on the P1 and N170 ERP components in children with and without autism. Developmental Neuropsychology, 36(2), 214–236. 10.1080/87565641.2010.549870

- Hinojosa, J. A., Mercado, F., & Carretié, L. (2015). N170 sensitivity to facial expression: A meta‐analysis. Neuroscience and Biobehavioral Reviews, 55, 498–509. 10.1016/j.neubiorev.2015.06.002

- Horimoto, R., Inagaki, M., Yano, T., Sata, Y., & Kaga, M. (2002). Mismatch negativity of the color modality during a selective attention task to auditory stimuli in children with mental retardation. Brain and Development, 24(7), 703–709.

- Ikeda, K., Sugiura, A., & Hasegawa, T. (2013). Fearful faces grab attention in the absence of late affective cortical responses. Psychophysiology, 50(1), 60–69. 10.1111/j.1469-8986.2012.01478.x

- Jacobsen, T., & Schroger, E. (2001). Is there pre‐attentive memory‐based comparison of pitch? Psychophysiology, 38(4), 723–727.

- Kimura, M., Katayama, J., Ohira, H., & Schroger, E. (2009). Visual mismatch negativity: New evidence from the equiprobable paradigm. Psychophysiology, 46(2), 402–409. 10.1111/j.1469-8986.2008.00767.x

- Kimura, M., Kondo, H., Ohira, H., & Schroger, E. (2012). Unintentional temporal context‐based prediction of emotional faces: An electrophysiological study. Cerebral Cortex, 22(8), 1774–1785. 10.1093/cercor/bhr244

- Kimura, M., Schroger, E., & Czigler, I. (2011). Visual mismatch negativity and its importance in visual cognitive sciences. NeuroReport, 22(14), 669–673. 10.1097/WNR.0b013e32834973ba

- Kovarski, K., Charpentier, J., Roux, S., Batty, M., Houy‐Durand, E., & Gomot, M. (2021). Emotional visual mismatch negativity: A joint investigation of social and non‐social dimensions in adults with autism. Translational Psychiatry, 11(1), 10. 10.1038/s41398-020-01133-5

- Kovarski, K., Latinus, M., Charpentier, J., Clery, H., Roux, S., Houy‐Durand, E., Saby, A., Bonnet‐Brilhault, F., Batty, M., & Gomot, M. (2017). Facial expression related vMMN: Disentangling emotional from neutral change detection. Frontiers in Human Neuroscience, 11, 18. 10.3389/fnhum.2017.00018

- Kreegipuu, K., Kuldkepp, N., Sibolt, O., Toom, M., Allik, J., & Näätänen, R. (2013). vMMN for schematic faces: Automatic detection of change in emotional expression. Frontiers in Human Neuroscience, 7, 714. 10.3389/fnhum.2013.00714

- Lee, K., Quinn, P. C., Pascalis, O., & Slater, A. (2013). Development of face‐processing ability in childhood. In P. D. Zelazo (Ed.), The Oxford handbook of developmental psychology: Body and mind (Vol. 1, pp. 338–370). Oxford University Press.

- Li, X., Lu, Y., Sun, G., Gao, L., & Zhao, L. (2012). Visual mismatch negativity elicited by facial expressions: New evidence from the equiprobable paradigm. Behavioral and Brain Functions, 8, 7. 10.1186/1744-9081-8-7

- Liu, T., Xiao, T., Li, X., & Shi, J. (2015). Fluid intelligence and automatic neural processes in facial expression perception: An event‐related potential study. PLoS ONE, 10(9), e0138199. 10.1371/journal.pone.0138199

- Morales, S., Fu, X., & Perez‐Edgar, K. E. (2016). A developmental neuroscience perspective on affect‐biased attention. Developmental Cognitive Neuroscience, 21, 26–41. 10.1016/j.dcn.2016.08.001

- Näätänen, R., & Winkler, I. (1999). The concept of auditory stimulus representation in cognitive neuroscience. Psychological Bulletin, 125(6), 826–859.

- Olivares, E. I., Iglesias, J., Saavedra, C., Trujillo‐Barreto, N. J., & Valdes‐Sosa, M. (2015). Brain signals of face processing as revealed by event‐related potentials. Behavioural Neurology, 2015, 514361. 10.1155/2015/514361

- Passarotti, A. M., Paul, B. M., Bussiere, J. R., Buxton, R. B., Wong, E. C., & Stiles, J. (2003). The development of face and location processing: An fMRI study. Developmental Science, 6(1), 100–117. 10.1111/1467-7687.00259

- Qian, X., Liu, Y., Xiao, B., Gao, L., Li, S., Dang, L., Si, C., & Zhao, L. (2014). The visual mismatch negativity (vMMN): Toward the optimal paradigm. International Journal of Psychophysiology, 93(3), 311–315. 10.1016/j.ijpsycho.2014.06.004

- Ridderinkhof, K. R., & van der Stelt, O. (2000). Attention and selection in the growing child: Views derived from developmental psychophysiology. Biological Psychology, 54(1‐3), 55–106.

- Schroger, E., & Wolff, C. (1996). Mismatch response of the human brain to changes in sound location. NeuroReport, 7(18), 3005–3008.

- Stefanics, G., Csukly, G., Komlosi, S., Czobor, P., & Czigler, I. (2012). Processing of unattended facial emotions: A visual mismatch negativity study. Neuroimage, 59(3), 3042–3049. 10.1016/j.neuroimage.2011.10.041

- Susac, A., Ilmoniemi, R. J., Pihko, E., Ranken, D., & Supek, S. (2010). Early cortical responses are sensitive to changes in face stimuli. Brain Research, 1346, 155–164. 10.1016/j.brainres.2010.05.049

- Susac, A., Ilmoniemi, R. J., Pihko, E., & Supek, S. (2004). Neurodynamic studies on emotional and inverted faces in an oddball paradigm. Brain Topography, 16(4), 265–268.

- Tanaka, M., Okubo, O., Fuchigami, T., & Harada, K. (2001). A study of mismatch negativity in newborns. Pediatrics International, 43(3), 281–286.

- Tang, D., Xu, J., Chang, Y., Zheng, Y., Shi, N., Pang, X., & Zhang, B. (2013). Visual mismatch negativity in the detection of facial emotions in patients with panic disorder. NeuroReport, 24(5), 207–211. 10.1097/WNR.0b013e32835eb63a

- Taylor, M. J., Batty, M., & Itier, R. J. (2004). The faces of development: A review of early face processing over childhood. Journal of Cognitive Neuroscience, 16(8), 1426–1442. 10.1162/0898929042304732

- Tomio, N., Fuchigami, T., Fujita, Y., Okubo, O., & Mugishima, H. (2012). Developmental changes of visual mismatch negativity. Neurophysiology, 44(2), 138–143.

- Usler, E., Foti, D., & Weber, C. (2020). Emotional reactivity and regulation in 5‐ to 8‐year‐old children: An ERP study of own‐age face processing. International Journal of Psychophysiology, 156, 60–68. 10.1016/j.ijpsycho.2020.07.004

- Vogel, B. O., Shen, C., & Neuhaus, A. H. (2015). Emotional context facilitates cortical prediction error responses. Human Brain Mapping, 36(9), 3641–3652. 10.1002/hbm.22868

- Wetzel, N., & Schroger, E. (2014). On the development of auditory distraction: A review. PsyCh Journal, 3(1), 72–91. 10.1002/pchj.49

- Wu, M., Kujawa, A., Lu, L. H., Fitzgerald, D. A., Klumpp, H., Fitzgerald, K. D., Monk, C. S., & Phan, K. L. (2016). Age‐related changes in amygdala–frontal connectivity during emotional face processing from childhood into young adulthood. Human Brain Mapping, 37(5), 1684–1695. 10.1002/hbm.23129

- Zeman, J., Cassano, M., Perry‐Parrish, C., & Stegall, S. (2006). Emotion regulation in children and adolescents. Journal of Developmental and Behavioral Pediatrics, 27(2), 155–168. 10.1097/00004703-200604000-00014

- Zhao, L., & Li, J. (2006). Visual mismatch negativity elicited by facial expressions under non‐attentional condition. Neuroscience Letters, 410(2), 126–131. 10.1016/j.neulet.2006.09.081
