Abstract

The overall prognosis of oral cavity squamous cell carcinoma (OCSCC) remains poor, as more than half of patients with oral cavity cancer are detected at later stages. It is generally accepted that the differential diagnosis of OCSCC is difficult and requires expertise and experience. Diagnosis from biopsy tissue is a complex process that is slow, costly, and prone to human error. To overcome these problems, a computer-aided diagnosis (CAD) approach is proposed in this work. A dataset comprising two categories, normal epithelium of the oral cavity (NEOR) and squamous cell carcinoma of the oral cavity (OSCC), was used. Features were extracted from this dataset using four deep learning (DL) models (VGG16, AlexNet, ResNet50, and Inception V3) to realize artificial intelligence of medical things (AIoMT). Binary Particle Swarm Optimization (BPSO) was used to select the best features. The effects of Reinhard stain normalization on performance were also investigated. After the best features were extracted and selected, they were classified using XGBoost. The best classification accuracy of 96.3% was obtained when using Inception V3 with BPSO. This approach contributes to improving the diagnostic efficiency for OCSCC patients using histopathological images while reducing diagnostic costs.

1. Introduction

Oral squamous cell carcinoma (OSCC) is a diverse collection of cancers that arise from the mucosal lining of the oral cavity [1, 2], accounting for more than 90% of all oral cancers [3]. It is a subtype of head and neck squamous cell carcinoma (HNSCC), which is the world's seventh most frequent cancer [4]. The World Health Organization estimates that 657,000 new cases are diagnosed annually, with over 330,000 fatalities globally. OSCC incidence rates were found to be markedly higher in South Asian countries. India has the largest number of cases (one-third of all cases), whereas in Pakistan it is the first and second most common cancer in males and females, respectively [5]. Drinking alcohol, smoking cigarettes, poor oral hygiene, human papillomavirus (HPV) exposure, genetic background, lifestyle, ethnicity, and geographical region are all risk factors.

Detecting OSCC at an early stage is essential for successful therapy, increased chances of survival, and low mortality and morbidity rates [6]. With a 50% average cure rate, OSCC has a poor prognosis [7, 8]. Microscopy-based histopathological analysis of tissue samples is considered the standard method for diagnosing OSCC [9, 10]. This diagnostic pathology methodology depends on histopathologists' interpretation, which is typically slow and error-prone, limiting its clinical utility [11]. As a result, it is critical to provide effective diagnostic tools to aid pathologists in the assessment and diagnosis of OSCC.

Recently, a growing number of studies have applied artificial intelligence (AI) to improve medical diagnostics. The increasing use of diagnostic imaging has allowed researchers to examine AI applications in medical image analysis. Deep learning (DL) [12], in particular, has shown outstanding success in solving a variety of medical image processing challenges [13], specifically in the diagnostics of pathological images [14, 15]. Computer-aided diagnosis (CAD) systems based on DL have been proposed and established on a large scale for a range of cancer types, such as breast cancer, prostate cancer, and lung cancer [16–18]. Nevertheless, the literature shows that DL has seldom been used to diagnose OSCC from pathological images. To recognize keratin pearls in oral histopathology images, Das et al. employed a Convolutional Neural Network (CNN) and Random Forest. For keratin area segmentation, the CNN model achieved 98.05 percent accuracy, whereas the Random Forest model detected keratin pearls with 96.88 percent accuracy [19]. Das et al. used DL to divide oral biopsy images into different classes according to Broder's histological grading system. A CNN was also proposed, which achieved 97.5 percent accuracy [20].

Folmsbee et al. used a CNN to classify oral cancer tissue into seven types (stroma, tumor, lymphocytes, mucosa, keratin pearls, adipose, and blood) using Active Learning (AL) and Random Learning (RL). The accuracy of AL was found to be 3.26 percent higher than that of RL [21]. Furthermore, Martino et al. applied multiple DL architectures, such as U-Net, SegNet, U-Net with a VGG16 encoder, and U-Net with a ResNet50 encoder, to segment oral lesion whole slide images (WSI) into three groups (carcinoma, noncarcinoma, and nontissue). A deeper network, such as U-Net upgraded with ResNet50 as the encoder, was shown to be more accurate than the original U-Net [22]. Recently, Amin et al. fine-tuned VGG16, Inception V3, and ResNet50 individually and then used them in concatenation as a feature extractor to perform binary classification on oral pathology images [23].

In this research, the classification of oral histopathological images into normal and OSCC classes is improved. The improvement is achieved by using transfer learning to extract features from pretrained CNN models (VGG16, AlexNet, ResNet50, and Inception V3). Then, features were selected using the Binary Particle Swarm Optimization (BPSO) approach. Finally, three different classifiers, XGBoost, RF, and ANN, were used to determine the detection performance on OSCC and normal oral histopathology images.

2. Research Contributions

The proposed research investigates the following:

(a) Oral histopathological images are fed to four different DL models to extract the desired features.

(b) The features obtained in (a) are investigated by applying metaheuristic feature selection methods.

(c) The metaheuristic feature selection method is explored to enhance the performance of the classifiers.

(d) The most suitable "hybrid" system, that is, the one that provides the highest accuracy at the decision-making stage, is identified by comparing the best-performing proposed models for detecting OSCC.

(e) The effect of stain normalization on image classification is evaluated.

Based on (a) to (e), the development of AIoMT platforms [24–26] is regarded as the main goal of this research in the medical and health field.

The rest of the paper is organized as follows. The literature review in Section 1 covers previous successful approaches. The materials and methods used in this study are discussed in Section 3. The experimental setup is given in Section 4. The results of the study are described in Section 5, and Section 6 concludes the paper.

3. Materials and Methods

3.1. Materials

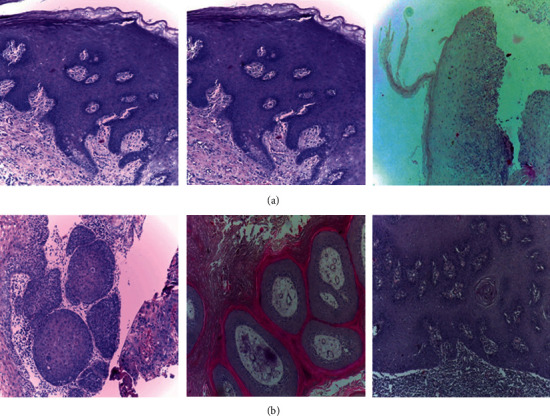

The histopathological image dataset was obtained from an online repository [27]; medical experts prepared and cataloged data from 230 individuals. The images were collected from two different locations in India: Dr. B. Borooah Cancer Research Institute and Ayursundra Healthcare Pvt. Ltd, Guwahati. Images are divided into two categories: normal epithelium of the oral cavity (NEOR) and OSCC. A Leica ICC50 HD microscope camera was used to acquire the images. Table 1 shows the histopathological image details in terms of class, resolution, and quantity. Figure 1 shows a selection of histopathological images and the related categories.

Table 1.

Image categories with the related source.

| Resolution | Class | Number of images |
|---|---|---|
| 100× magnification | NEOR | 89 |
| 100× magnification | OSCC | 439 |
| 400× magnification | NEOR | 201 |
| 400× magnification | OSCC | 495 |
| Total images | NEOR and OSCC | 1224 |

Figure 1.

Different classes of histopathological images with (a) NEOR and (b) OSCC.

3.2. Proposed Model

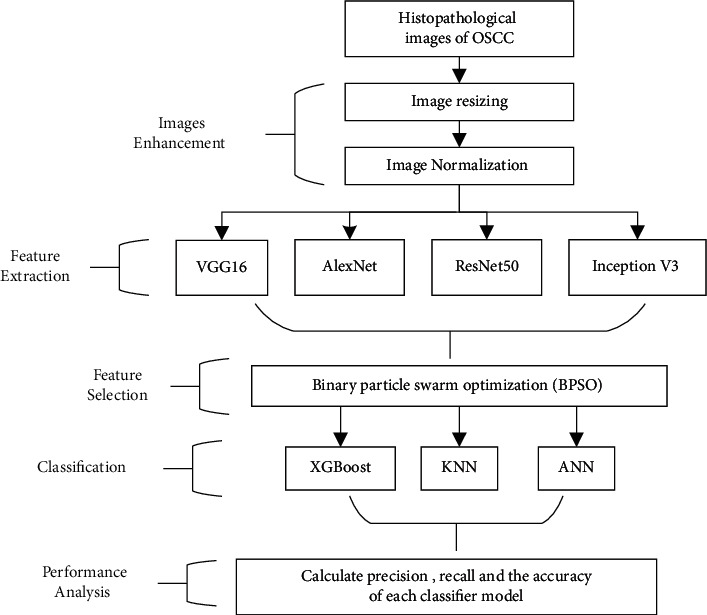

Figure 2 depicts the proposed model. The input histopathology images were first preprocessed. Four DL models were then used to extract features from the normalized histopathology images, with the transfer learning approach applied during the feature extraction procedure. To find the best features, Binary Particle Swarm Optimization was employed as a feature selection method. Finally, three machine learning methods were used to classify the abstract features produced by the DL models [28].

Figure 2.

The proposed model for realizing AIoMT in medical systems.

3.2.1. Image Enhancement

Some preprocessing is essential to improve the classification process. The operations performed in the preprocessing step are explained in the following.

(1) Image Resizing. As shown in Table 2, the size of the histopathology images was adjusted depending on the size of the input of each DL architecture. Losing local features in histopathological images when resized is offset by the preservation of global features.

Table 2.

The input size for each DL architecture [29].

| Model | Input size (pixels) |
|---|---|
| VGG16 | 224 × 224 |
| AlexNet | 227 × 227 |
| ResNet50 | 224 × 224 |
| Inception V3 | 299 × 299 |

(2) Image Normalization. The analysis of histopathology images faces many challenges, such as producing models that are robust to the variations arising from different labs and imaging systems [30], which are caused by raw materials, the color response of slide scanners, staining protocols, and manufacturing techniques [31]. The histopathology dataset was stain-normalized using the Reinhard method [32, 33]. The purpose of the Reinhard stain normalization approach is to bring the appearance of these stains into line [34]. This method allows a target image to be shifted in the color domain to more closely match the template image, and it is applied to each histopathology image pixel as shown in equations (1) to (3) [35, 36].

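- Step 1: Convert both the source image X and the target image Y from RGB space to lαβ space.

- Step 2: Apply the following transformation in lαβ space:

$$l_2 = \mu_g(l_1) + \left(l - \mu_g(l)\right)\frac{\sigma_g(l_1)}{\sigma_g(l)} \tag{1}$$

$$a_2 = \mu_g(a_1) + \left(a - \mu_g(a)\right)\frac{\sigma_g(a_1)}{\sigma_g(a)} \tag{2}$$

$$b_2 = \mu_g(b_1) + \left(b - \mu_g(b)\right)\frac{\sigma_g(b_1)}{\sigma_g(b)} \tag{3}$$

where l2, a2, and b2 are the intensity variables of the processed image in lαβ space; l1, a1, and b1 are the intensity variables of the target image in lαβ space; and l, a, and b are the intensity variables of the source image. μg indicates the global mean of the image and σg represents the global standard deviation of the image.

- Step 3: Convert the processed image Z back from lαβ space to RGB space.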

The intensity variation of the original image is preserved using this procedure. As a result, its structure is kept, while the contrast is altered to match that of the target.
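As an illustration of Step 2 only, the following minimal sketch matches the per-channel mean and standard deviation of a source array to those of a target array. It assumes both arrays are already in lαβ space (Steps 1 and 3 are omitted), and the function name `match_channel_statistics` and the random test arrays are hypothetical, not taken from the study's code.

```python
import numpy as np

def match_channel_statistics(source, target):
    """Shift and scale each channel of `source` so that its global mean and
    standard deviation match those of `target` (Step 2 of the procedure).
    Both inputs are assumed to be (H, W, 3) arrays already in l-alpha-beta space."""
    source = np.asarray(source, dtype=float)
    target = np.asarray(target, dtype=float)
    src_mean = source.mean(axis=(0, 1))
    src_std = source.std(axis=(0, 1))
    tgt_mean = target.mean(axis=(0, 1))
    tgt_std = target.std(axis=(0, 1))
    # Guard against division by zero for perfectly flat channels.
    safe_std = np.where(src_std == 0, 1.0, src_std)
    return tgt_mean + (source - src_mean) * (tgt_std / safe_std)

# Synthetic stand-ins for two images (hypothetical values, for illustration only).
rng = np.random.default_rng(0)
source_lab = rng.normal(loc=[60.0, 5.0, -3.0], scale=[10.0, 2.0, 4.0], size=(32, 32, 3))
target_lab = rng.normal(loc=[70.0, 1.0, 2.0], scale=[5.0, 3.0, 1.0], size=(32, 32, 3))
processed = match_channel_statistics(source_lab, target_lab)
```

After the transform, each channel of `processed` has the global mean and standard deviation of the target, while the relative pixel-to-pixel variation of the source is kept.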

3.2.2. Features Extraction Using Deep Learning Models

In this work, four DL models were used for feature extraction, namely, VGG16, AlexNet, Inception V3, and ResNet50.

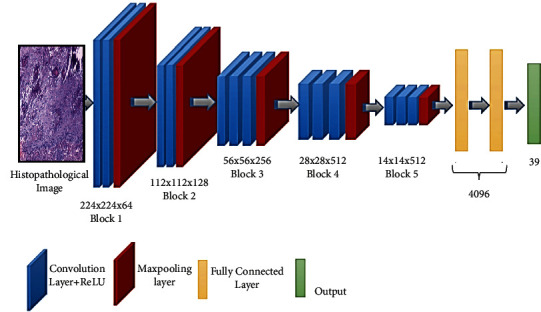

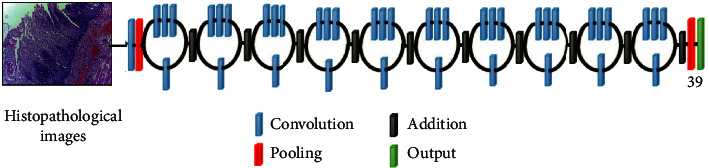

(1) VGG16. The VGG16 architecture [37] follows an arrangement of convolution and max-pooling layers (Figure 3). At the end, there are three fully connected (FC) layers: the first and the second are activated by ReLU, while the third is activated by Softmax. This design has 16 layers and 138 million parameters; the input layer receives images of 224 × 224 pixels.

Figure 3.

Schematic illustration of VGG16 architecture.

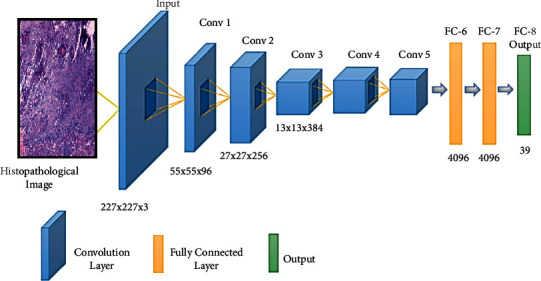

(2) AlexNet. The AlexNet [38] model is an 8-layer CNN architecture with 61 million parameters. The AlexNet architecture (Figure 4) has five convolutional layers followed by three fully connected layers. The input layer requires images with a resolution of 227 × 227 pixels, and the ReLU activation function is applied in the convolutional and fully connected layers. Moreover, the FC-8 layer is linked to the Softmax layer via 39 neurons.

Figure 4.

Schematic illustration of AlexNet architecture.

(3) ResNet50. The ResNet50 architecture [39] addresses the difficulty that stacks of nonlinear layers have in learning identity mappings, as well as the resulting degradation problem. Simply put, ResNet50 is a network made up of stacked residual units, which serve as its building blocks (Figure 5). These units are built from convolution and pooling layers. The input histopathology images have a resolution of 224 × 224 pixels, and the design includes 3 × 3 filters.

Figure 5.

Schematic illustration of ResNet50 architecture.

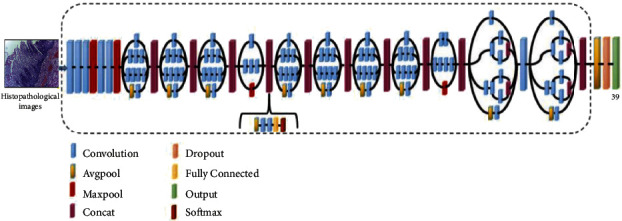

(4) Inception V3. Convolutions, average pooling, max pooling, dropout, and fully connected layers are among the symmetrical and asymmetrical building blocks used in the model (Figure 6). The Inception V3 architecture [40] comprises 42 layers, with the Softmax function in the last layer, and its input layer receives images of 299 × 299 pixels.

Figure 6.

Inception V3 architecture schematic.

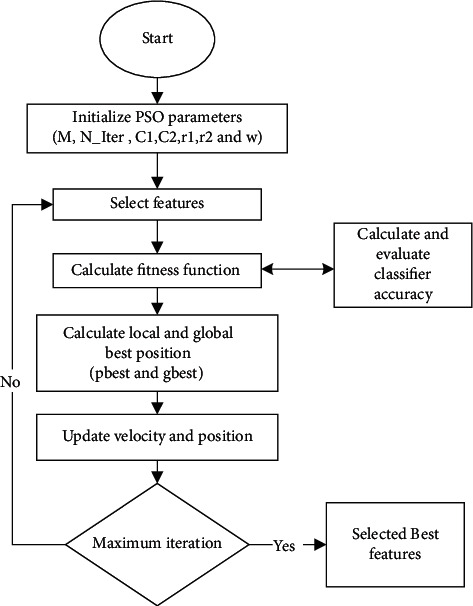

3.2.3. Binary Particle Swarm Optimization for Feature Selection

Feature selection is critical in data processing [41–43]. Longer training times and overfitting are challenges caused by the vast amount of data to be handled (Deif et al. [12, 44]). Unnecessary features should be removed from the data to avoid such problems. BPSO, the binary form of PSO [45, 46], is used for feature selection [7]. The BPSO algorithm is summarized in Figure 7 and described below:

- Step 1: Equation (4) is used to update the velocity of each particle in the swarm.

Each swarm particle represents a potential solution. One possible solution is a 0/1 matrix of size 1 × (number of features), with each column value produced at random. Selecting the columns of the feature matrix whose value is 1 yields a feasible solution (a particle). The fitness value of each particle in the swarm was calculated using the kNN classifier error rate, so a better fitness value is a lower one. In the first iteration, the particle with the best objective function value in the swarm is taken as gbest.

- Step 3: In subsequent iterations, the personal best (pbest) of each particle is replaced by the particle's new position whenever that position has a better objective function value (equation (7)).

$$pbest_i^{t+1} = \begin{cases} x_i^{t+1}, & \text{if } F\left(x_i^{t+1}\right) \text{ is better than } F\left(pbest_i^{t}\right) \\ pbest_i^{t}, & \text{otherwise} \end{cases} \tag{7}$$

Figure 7.

BPSO approach for feature selection.

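If pbest's objective function value is better than gbest's, pbest is assigned to gbest (equation (8)).

$$gbest^{t+1} = \begin{cases} pbest_i^{t+1}, & \text{if } F\left(pbest_i^{t+1}\right) \text{ is better than } F\left(gbest^{t}\right) \\ gbest^{t}, & \text{otherwise} \end{cases} \tag{8}$$

where x is the solution, pbest denotes the personal best, gbest denotes the global best, F(·) denotes the fitness function, and t denotes the iteration number.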

This process is repeated for T iterations, and the algorithm returns the best global solution as output [7].
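The loop described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the study's implementation: the velocity update uses the standard PSO form with inertia weight `w` and acceleration constants `c1` and `c2`, the velocity is mapped to a bit probability with a sigmoid transfer function (the usual BPSO choice), and `toy_error` is a hypothetical stand-in for the kNN error rate. All parameter values are illustrative.

```python
import math
import random

def bpso(fitness, n_features, n_particles=10, n_iterations=30,
         w=0.7, c1=1.5, c2=1.5, seed=0):
    """Minimise `fitness` over 0/1 feature masks of length `n_features`."""
    rng = random.Random(seed)
    # Each particle is a random 0/1 mask: 1 keeps a feature, 0 drops it.
    positions = [[rng.randint(0, 1) for _ in range(n_features)]
                 for _ in range(n_particles)]
    velocities = [[0.0] * n_features for _ in range(n_particles)]
    pbest = [p[:] for p in positions]
    pbest_fit = [fitness(p) for p in positions]
    best = min(range(n_particles), key=lambda i: pbest_fit[i])
    gbest, gbest_fit = pbest[best][:], pbest_fit[best]

    for _ in range(n_iterations):
        for i in range(n_particles):
            for d in range(n_features):
                r1, r2 = rng.random(), rng.random()
                # Standard PSO velocity update (assumed form).
                velocities[i][d] = (w * velocities[i][d]
                                    + c1 * r1 * (pbest[i][d] - positions[i][d])
                                    + c2 * r2 * (gbest[d] - positions[i][d]))
                # Sigmoid transfer: velocity -> probability that the bit is 1.
                prob = 1.0 / (1.0 + math.exp(-velocities[i][d]))
                positions[i][d] = 1 if rng.random() < prob else 0
            fit = fitness(positions[i])
            if fit < pbest_fit[i]:            # personal best update
                pbest[i], pbest_fit[i] = positions[i][:], fit
                if fit < gbest_fit:           # global best update
                    gbest, gbest_fit = positions[i][:], fit
    return gbest, gbest_fit

# Hypothetical fitness: fraction of bits that disagree with a hidden "relevant" mask.
RELEVANT = [1, 0, 1, 1, 0, 0, 1, 0]

def toy_error(mask):
    return sum(m != r for m, r in zip(mask, RELEVANT)) / len(RELEVANT)

best_mask, best_error = bpso(toy_error, n_features=len(RELEVANT))
```

In a real pipeline the fitness callable would train and score a kNN classifier on the columns selected by the mask; the toy function is used here only so the sketch runs without a dataset.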

3.2.4. Classification Stage

Extreme gradient boosting (XGBoost) was used as the classification model for distinguishing between NEOR and OSCC histopathological images. The results of XGBoost are compared to those of classic machine learning techniques such as artificial neural networks (ANN) and Random Forest (RF) [47].

3.2.5. Assessment of the Suggested Methodology's Classification Performance

The suggested methodology is evaluated by calculating the sensitivity, precision, and accuracy of the classifiers. The following formulas are used to calculate these terms:

$$\text{Sensitivity} = \frac{TP}{TP + FN}, \qquad \text{Precision} = \frac{TP}{TP + FP}, \qquad \text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \tag{9}$$

TP (True Positive) and TN (True Negative) are the numbers of correctly classified histopathological images in the OSCC and normal classes, respectively. FN (False Negative) and FP (False Positive) are the numbers of misclassified histopathological images in the OSCC and normal classes, respectively.

4. Experimental Setup and Setting

All layers of the deep models were set as trainable during the training phase. Softmax was used as the activation function of the final CNN layer, and MSE was used as the loss function. Early stopping was employed, with the patience set to 5 and the minimum loss change set to 0.0001. The SGD optimizer was used in all deep models with a learning rate of 0.001. The mini-batch size is 32.
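The early stopping rule can be illustrated with a short, framework-independent sketch. It is a simplified version of the usual patience rule, assuming that a loss only counts as an improvement when it falls by more than the minimum change; the function name `early_stopping_epoch` and the loss values are hypothetical.

```python
def early_stopping_epoch(losses, patience=5, min_delta=0.0001):
    """Return the index of the epoch at which training would stop,
    or None if the patience is never exhausted."""
    best = float("inf")
    wait = 0
    for epoch, loss in enumerate(losses):
        if best - loss > min_delta:
            # The loss improved by more than min_delta: reset the counter.
            best = loss
            wait = 0
        else:
            wait += 1
            if wait >= patience:
                return epoch
    return None

# Hypothetical loss curve that plateaus after the third epoch.
plateau_losses = [0.5, 0.4, 0.3, 0.3, 0.3, 0.3, 0.3, 0.3, 0.3]
stop_epoch = early_stopping_epoch(plateau_losses)
```

With the values from the text (patience 5, minimum change 0.0001), training on this curve stops after five consecutive epochs without a sufficient improvement.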

Histopathological images were divided into two groups in the classification stage: 80 percent for training the classifier models and 20 percent for testing, with 10-fold cross-validation. All experiments were run on Google Colab [48] with GPU support. The whole code was written in Python 3.10.1 [49] using Keras version 2.7.0 [50].
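A minimal sketch of an 80/20 split on the dataset's class counts (290 NEOR and 934 OSCC images, from Table 1) is shown below. The text does not say whether the split was stratified by class, so the stratification here is an assumption, and `stratified_split` is a hypothetical helper rather than the authors' code.

```python
import random

def stratified_split(labels, test_fraction=0.2, seed=0):
    """Split sample indices into train/test sets, keeping roughly the same
    class proportions in both (stratification is an assumption here)."""
    rng = random.Random(seed)
    by_class = {}
    for idx, label in enumerate(labels):
        by_class.setdefault(label, []).append(idx)
    train, test = [], []
    for indices in by_class.values():
        rng.shuffle(indices)
        n_test = round(len(indices) * test_fraction)
        test.extend(indices[:n_test])
        train.extend(indices[n_test:])
    return sorted(train), sorted(test)

# Class counts taken from Table 1: 89 + 201 NEOR and 439 + 495 OSCC images.
labels = ["NEOR"] * 290 + ["OSCC"] * 934
train_idx, test_idx = stratified_split(labels)
```

The 10-fold cross-validation mentioned in the text would then be run on the training portion only; that step is not shown.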

The algorithms implemented in this research are BPSO, XGBoost, ANN, and RF. Because these algorithms differ in their hyperparameters, the settings used are shown in [51].

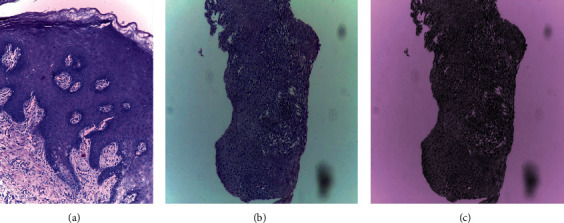

5. Results

In the first step, to better understand the impact of stain normalization on classification performance, all histopathology images in the dataset were stain-normalized using the Reinhard technique. The Reinhard stain normalization approach used in this study is illustrated in Figure 8. In this figure, (a) represents the original histopathological image used for the Reinhard technique, (b) represents the target image to be transferred (the technique aims to normalize the colors in the target images to those of the original), and (c) represents the result of using the Reinhard technique.

Figure 8.

Samples of original and normalized histopathological images: (a) original image; (b) target image; and (c) Reinhard normalized.

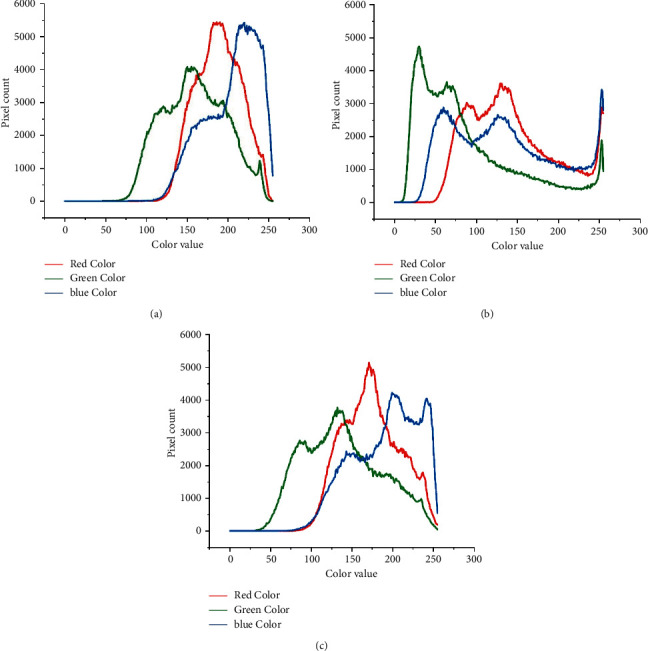

In addition, we employed the probability density function (PDF) to investigate the effect of the Reinhard normalization method on histopathological images by comparing the postnormalization image PDF with the prenormalization image PDF. Figure 9(a) is the original PDF. The resultant density functions of the pixels in 3-channel RGB show that the target image's distribution is substantially skewed to the left (Figure 9(b)). Figure 9(c) shows that, after normalization, the probability distribution of the three channels resembles that of the original image.

Figure 9.

Samples of the probability density function (PDF) for (a) original histopathological image, (b) target histopathological image, and (c) Reinhard normalized.

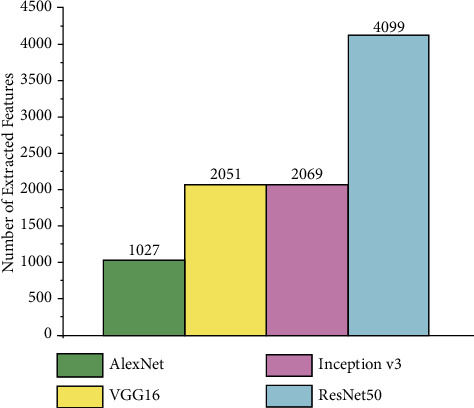

Then, four deep models were used to extract features from the images: VGG16, AlexNet, ResNet50, and Inception V3. Figure 10 shows the total number of features obtained from each deep model. It can be seen in Figure 10 that the smallest number of features was extracted by the AlexNet model and the largest number by the ResNet50 model. Meanwhile, VGG16 and Inception V3 extracted the same number of features.

Figure 10.

The features obtained in the study and all execution times.

Table 3.

Performance comparison of the XGBoost classifier with other traditional machine learning algorithms.

| Classifier name | Overall accuracy (%) | Sensitivity (%) | Precision (%) |
|---|---|---|---|
| XGBoost | 96.3 | 98.9 | 96.3 |
| RF | 93.1 | 97.8 | 93.3 |
| ANN | 94.1 | 97.8 | 94.7 |

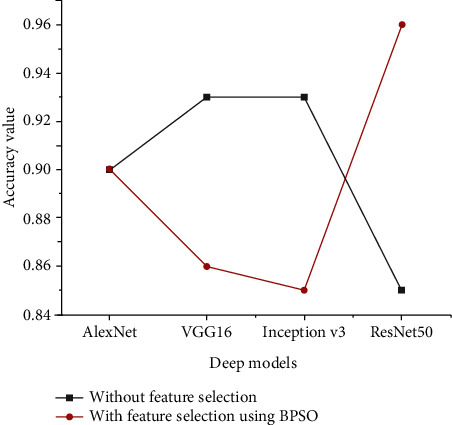

Two experiments were carried out to see if the proposed feature selection strategy could increase the classification model's accuracy. In the first experiment, to train the classifier, all features were used without applying the feature selection technique. The second experiment was performed by employing the proposed feature selection method, that is, BPSO, to eliminate the undesired redundant and unrelated features by looking for the best features used to train the classifier. The performance values obtained for the XGBoost classifier using all extracted features and selected features are given in Figure 11.

Figure 11.

Accuracy performance for the XGBoost classifier using all extracted features and selected features.

The experiments show that applying the feature selection technique to features extracted using VGG16 and Inception V3 reduced the classification performance of the XGBoost model, whereas this was not the case for features derived using AlexNet. Meanwhile, the XGBoost model delivered outstanding results when feature selection was applied to features extracted using ResNet50.

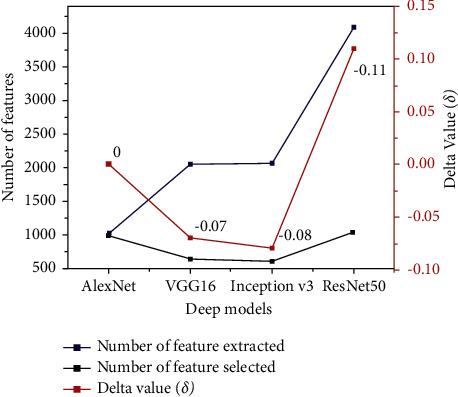

To interpret the performance of XGBoost with the feature selection technique, Figure 12 illustrates the relation between the number of features extracted from each deep model, the number of features selected using BPSO, and the Delta value (δ), which indicates the difference between the XGBoost classification accuracies before and after applying BPSO.

Figure 12.

Comparison between the number of extracted features from all deep models, number of selected features, and Delta value (δ).

The largest number of extracted features belongs to the ResNet50 model, while VGG16 and Inception V3 each yield about half as many features as ResNet50. The smallest number of features was obtained from AlexNet.

It is concluded that there is a relationship between the number of features extracted and the feature selection approach: a larger pool of features gives BPSO more scope to select the best features than a smaller pool does. Therefore, we interpret that the accuracy of XGBoost increased (δ = 11%) when applying BPSO to features extracted from ResNet50 because BPSO selects the best features from a larger pool (4099) than the features extracted from VGG16 (2051) and Inception V3 (2069), while the XGBoost accuracy did not change (δ = 0) for the AlexNet features.

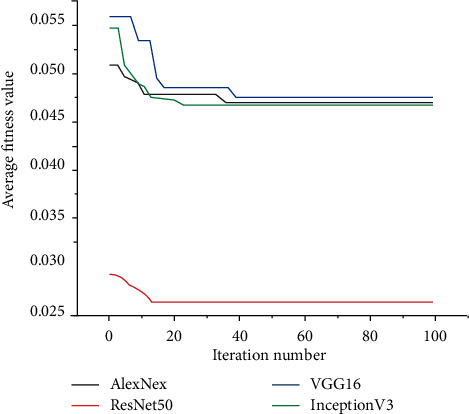

Figure 13 compares the convergence curves of the BPSO algorithm at the feature selection stage. It is important to note that the fitness is the average of 20 runs. The greater the performance, the lower the values of the best fitness, worst fitness, and mean fitness. It is observed that the features obtained from VGG16 and Inception V3 models achieved the lowest fitness value for BPSO, while the best performance is given by the feature group obtained by using ResNet50.

Figure 13.

Fitness values of BPSO for different extracted features.

Because the XGBoost classifier's histopathological image classification results with each feature extraction method are the average accuracy obtained from 20 independent runs, a two-sample t-test with a 95 percent confidence level was used to check whether the classification performance of the proposed ResNet50 with BPSO was significantly better (p value < 0.05) than that of the other methods on histopathological images.

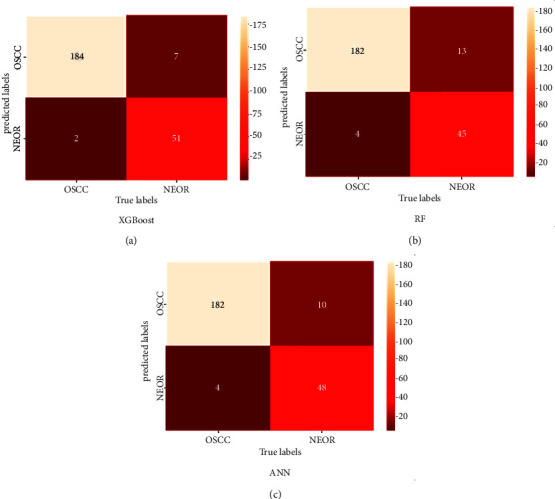

To assess the XGBoost classifier's classification performance, its outcomes are compared to those of two more traditional machine learning techniques, artificial neural network (ANN) and Random Forest (RF). Based on the previous results, the classifier models were trained using features taken from the ResNet50 model and selected using BPSO. A confusion matrix for all classifiers is shown in Figure 14.

Figure 14.

Confusion matrix of classification results for all classifiers.

It is clear from the confusion matrix that the highest TP count was obtained by the XGBoost classifier, where 184 of 186 histopathological images with OSCC were detected correctly, while two were diagnosed as NEOR. On the other hand, the XGBoost classifier misclassified 7 normal (NEOR) histopathological images as OSCC, which is the lowest number of FP among the classifiers.

According to the results shown in the confusion matrix, overall accuracy, sensitivity, and precision were calculated, as shown in Table 3. The XGBoost model delivered outstanding results compared to the other classifiers. It has consistently high accuracy, sensitivity, and precision (96.3%, 98.9%, and 96.3% respectively) across all models. This shows that this model was the most successful in learning and extracting essential features from the training data.
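As a worked check of these figures, the sketch below recomputes the three metrics for XGBoost from the confusion-matrix counts quoted above (TP = 184, FN = 2, FP = 7). The TN count is not stated in the text; the value 52 used here is an assumption chosen to be consistent with the reported accuracy, and `classification_metrics` is a hypothetical helper.

```python
def classification_metrics(tp, tn, fp, fn):
    """Return (sensitivity, precision, accuracy) as fractions."""
    sensitivity = tp / (tp + fn)
    precision = tp / (tp + fp)
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    return sensitivity, precision, accuracy

# TP, FN, and FP come from the text; TN = 52 is an assumed value, not a reported one.
sensitivity, precision, accuracy = classification_metrics(tp=184, tn=52, fp=7, fn=2)
xgboost_percentages = [round(100 * value, 1) for value in (sensitivity, precision, accuracy)]
```

Sensitivity and precision depend only on the counts given in the text; only the accuracy relies on the assumed TN value.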

Finally, the proposed work investigates whether stain normalization can improve the accuracy of the classification models. All models were also trained on nonnormalized histopathological images, and the classification performance is shown in Table 4. The accuracy of XGBoost and RF was lower without the Reinhard approach, while the accuracy of ANN was essentially unchanged (94.2% without versus 94.1% with normalization). These results indicate that Reinhard stain normalization can improve classifier performance and produce satisfactory results.

Table 4.

Accuracy performance for the classifier with non-stain-normalized image and stain-normalized image

| Classifier name | Without stain normalization (%) | With stain normalization (%) |
|---|---|---|
| XGBoost | 95.1 | 96.3 |
| RF | 91.3 | 93.1 |
| ANN | 94.2 | 94.1 |
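The per-classifier effect of stain normalization can be read off Table 4 with a short calculation. The snippet below is only an illustrative sketch that subtracts the two accuracy columns; the variable names are hypothetical.

```python
# (without stain normalization, with stain normalization), in percent, from Table 4.
accuracy = {
    "XGBoost": (95.1, 96.3),
    "RF": (91.3, 93.1),
    "ANN": (94.2, 94.1),
}

# Change in accuracy, in percentage points, attributable to Reinhard normalization.
gain = {name: round(with_norm - without, 1)
        for name, (without, with_norm) in accuracy.items()}
```

The resulting differences are 1.2 points for XGBoost, 1.8 points for RF, and -0.1 points for ANN.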

For further evaluation of the proposed approach, it was compared with several previous research works shown in Table 5, which shows that the proposed method achieves the highest accuracy of 96.3% to classify different classes of histopathological images.

Table 5.

Comparison of previous research works with the proposed method.

| Authors | Algorithm architecture | The objective of the study | No. of images | Accuracy |
|---|---|---|---|---|
| Uthoff et al. [52] | CNN | Early detection of precancerous and cancerous lesions | 170 | 86.88% |
| Jeyaraj et al. [53] | CNN | Develop an automated computer-aided oral cancer-detecting system | 100 | 91.4% |
| Jubair et al. [54] | CNN | CNN using EfficientNet-B0 transfer model for binary classification of oral lesions into benign and malignant | 716 | 85.0% |
| Sunny et al. [55] | ANN | Early detection of oral potentially malignant/malignant lesion | 82 | 86% |
| Rathod et al. [56] | CNN | Classify different stages of oral cancer | Not mentioned | 90.68% |
| Alabi et al. [57] | ANNs | Predicting risk of recurrence of oral tongue squamous cell carcinoma (OTSCC) | 311 | 81% |
| Alhazmi et al. [58] | ANNs | Predicting risk of developing oral cancer | 73 | 78.95% |
| Chu et al. [59] | CNN | Evaluate the ability of supervised machine learning models to predict disease outcome | 467 | 70.59% |
| Karadaghy et al. [60] | DT | Develop a prediction DT model using machine learning for 5-year overall survival among patients with OCSCC | 33,065 | 71% |
| Proposed method | CNN and BPSO | The features were extracted with Inception V3 and selected with BPSO to improve the classification performance of patients with OCSCC | 1224 | 96.3% |

6. Conclusion

In this study, traditional classification algorithms were applied to features extracted from four CNN models (VGG16, AlexNet, ResNet50, and Inception V3), with the best features selected using the BPSO algorithm. The features extracted with Inception V3 and selected with BPSO improved the classification performance and contributed positively to the results. In addition, the effects of stain normalization were investigated by comparison with nonnormalized histological images. The results showed that the XGBoost model performed better when the Reinhard technique was used. This approach has the potential to become a valuable and rapid diagnostic tool that could help reduce the number of deaths caused each year by delayed or incorrect diagnoses.

Acknowledgments

The authors thank Ibrar Amin et al. for their research entitled “Histopathological Image Analysis for Oral Squamous Cell Carcinoma Classification Using Concatenated DL Models” (accessible through https://doi.org/10.1101/2021.05.06.21256741), which was insightful for them. As is common in academic publishing, some papers are extracted from or inspired by graduate theses; the authors note that this is also the case for this paper, and they thank their universities as well.

Data Availability

The data used to support the findings of the study can be obtained from the first author (e-mail: mohand.deif@eng.mti.edu.eg) upon request.

Conflicts of Interest

The authors declare that there are no conflicts of interest.

References

- 1.Du M., Nair R., Jamieson L., Liu Z., Bi P. Incidence trends of lip, oral cavity, and pharyngeal cancers: global burden of disease 1990–2017. Journal of Dental Research . 2020;99(2):143–151. doi: 10.1177/0022034519894963. [DOI] [PubMed] [Google Scholar]

- 2.Li L., Yin Y., Nan F., Ma Z. Circ_LPAR3 promotes the progression of oral squamous cell carcinoma (OSCC) Biochemical and Biophysical Research Communications . 2022;589:215–222. doi: 10.1016/j.bbrc.2021.12.012. [DOI] [PubMed] [Google Scholar]

- 3.Warnakulasuriya S., Greenspan J. S. Textbook of Oral Cancer . Berlin, Germany: Springer; 2020. Epidemiology of oral and oropharyngeal cancers. [Google Scholar]

- 4.Perdomo S., Martin Roa G., Brennan P., Forman D., Sierra M. S. Head and neck cancer burden and preventive measures in central and South America. Cancer Epidemiology . 2016;44:S43–S52. doi: 10.1016/j.canep.2016.03.012. [DOI] [PubMed] [Google Scholar]

- 5.Anwar N., Pervez S., Chundriger Q., Awan S., Moatter T., Ali T. S. Oral cancer: clinicopathological features and associated risk factors in a high-risk population presenting to a major tertiary care center in Pakistan. PloS One . 2020;15(8) doi: 10.1371/journal.pone.0236359.e0236359 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Chakraborty D., Natarajan C., Mukherjee A. Advances in oral cancer detection. Advances in Clinical Chemistry . 2019;91:181–200. doi: 10.1016/bs.acc.2019.03.006. [DOI] [PubMed] [Google Scholar]

- 7.Eckert A. W., Kappler M., GroBe I., Wickenhauser C., Seliger B. Current understanding of the HIF-1-Dependent metabolism in oral squamous cell carcinoma. International Journal of Molecular Sciences . 2020;21(17) doi: 10.3390/ijms21176083. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Ghosh A., Chaudhuri D., Adhikary S., Das A. K. Deep reinforced neural network model for cyto-spectroscopic analysis of epigenetic markers for automated oral cancer risk prediction. Chemometrics and Intelligent Laboratory Systems . 2022;224 doi: 10.1016/j.chemolab.2022.104548.104548 [DOI] [Google Scholar]

- 9.Deif M. A., Hammam R. E. Skin lesions classification based on deep learning approach. Journal of Clinical Engineering . 2020;45(3):155–161. doi: 10.1097/jce.0000000000000405. [DOI] [Google Scholar]

- 10.Kong J., Sertel O., Shimada H., Boyer K., Saltz J., Gurcan M. Computer-aided evaluation of neuroblastoma on whole-slide histology images: classifying grade of neuroblastic differentiation. Pattern Recognition . 2009;42(6):1080–1092. doi: 10.1016/j.patcog.2008.10.035. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Santana M. F., Ferreira L. C. L. Diagnostic errors in surgical pathology. Jornal Brasileiro de Patologia e Medicina Laboratorial . 2017;53:124–129. doi: 10.5935/1676-2444.20170021. [DOI] [Google Scholar]

- 12.Deif M. A., Hammam R. E., Solyman A. A. A. Gradient boosting machine based on PSO for prediction of leukemia after a breast cancer diagnosis. International Journal on Advanced Science, Engineering and Information Technology . 2021;11(2):508–515. doi: 10.18517/ijaseit.11.2.12955. [DOI] [Google Scholar]

- 13.Altaf F., Islam S. M. S., Akhtar N., Janjua N. K. Going deep in medical image analysis: concepts, methods, challenges, and future directions. IEEE Access . 2019;7 doi: 10.1109/access.2019.2929365.99540 [DOI] [Google Scholar]

- 14.Deif M. A., Solyman A. A. A., Alsharif M. H., Uthansakul P. Automated triage system for intensive care admissions during the COVID-19 pandemic using hybrid XGBoost-AHP approach. Sensors . 2021;21(19) doi: 10.3390/s21196379. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.Echle A., Rindtorff N. T., Brinker T. J., Luedde T., Pearson A. T., Kather J. N. Deep learning in cancer pathology: a new generation of clinical biomarkers. British Journal of Cancer . 2021;124(4):686–696. doi: 10.1038/s41416-020-01122-x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16.Duggento A., Conti A., Mauriello A., Guerrisi M., Toschi N. Deep computational pathology in breast cancer. Seminars in Cancer Biology . 2021;72:226–237. doi: 10.1016/j.semcancer.2020.08.006. [DOI] [PubMed] [Google Scholar]

- 17.Wang S., Yang D. M., Rong R., et al. Artificial intelligence in lung cancer pathology image analysis. Cancers . 2019;11(11):p. 1673. doi: 10.3390/cancers11111673. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18.Goldenberg S. L., Nir G., Salcudean S. E. A new era: artificial intelligence and machine learning in prostate cancer. Nature Reviews Urology. 2019;16(7):391–403. doi: 10.1038/s41585-019-0193-3.

- 19.Das D. K., Bose S., Maiti A. K., Mitra B., Mukherjee G., Dutta P. K. Automatic identification of clinically relevant regions from oral tissue histological images for oral squamous cell carcinoma diagnosis. Tissue and Cell. 2018;53:111–119. doi: 10.1016/j.tice.2018.06.004.

- 20.Das N., Hussain E., Mahanta L. B. Automated classification of cells into multiple classes in epithelial tissue of oral squamous cell carcinoma using transfer learning and convolutional neural network. Neural Networks. 2020;128:47–60. doi: 10.1016/j.neunet.2020.05.003.

- 21.Folmsbee J., Liu X., Brandwein-Weber M., Scott D. Active deep learning: improved training efficiency of convolutional neural networks for tissue classification in oral cavity cancer. Proceedings of the IEEE 15th International Symposium on Biomedical Imaging (ISBI 2018); April 2018; Washington, DC, USA. pp. 770–773.

- 22.Martino F., Bloisi D. D., Pennisi A., et al. Deep learning-based pixel-wise lesion segmentation on oral squamous cell carcinoma images. Applied Sciences. 2020;10(22). doi: 10.3390/app10228285.

- 23.Amin I., Zamir H., Khan F. F. Histopathological image analysis for oral squamous cell carcinoma classification using concatenated deep learning models. medRxiv; 2021. http://medrxiv.org/content/early/2021/05/14/2021.05.06.21256741.abstract .

- 24.Abbasi M., Tahouri R., Rafiee M. Enhancing the performance of the aggregated bit-vector algorithm in network packet classification using GPU. PeerJ Computer Science. 2019;5. doi: 10.7717/peerj-cs.185.

- 25.Abbasi M., Vesaghati Fazel S., Rafiee M. MBitCuts: optimal bit-level cutting in geometric space packet classification. The Journal of Supercomputing. 2020;76(4):3105–3128. doi: 10.1007/s11227-019-03090-3.

- 26.Abbasi M., Shokrollahi A. Enhancing the performance of decision tree-based packet classification algorithms using CPU cluster. Cluster Computing. 2020;23(4):3203–3219. doi: 10.1007/s10586-020-03081-7.

- 27.Rahman T. Y. A histopathological image repository of normal epithelium of oral cavity and oral squamous cell carcinoma. 2019. https://onlinelibrary.wiley.com/doi/abs/10.1111/jmi.12611 .

- 28.Deif M. A. Deep learning algorithms and soft optimization methods codes. 2022. https://github.com/BioScince/Oral-squamous-cell-carcinoma-.git .

- 29.Deif M. A., Solyman A. A. A., Hammam R. E. ARIMA model estimation based on genetic algorithm for COVID-19 mortality rates. International Journal of Information Technology & Decision Making. 2021;20(6):1775–1798. doi: 10.1142/s0219622021500528.

- 30.Deif M. A., Hammam R. E., Solyman A. A. A., Band S. S. A deep bidirectional recurrent neural network for identification of SARS-CoV-2 from viral genome sequences. Mathematical Biosciences and Engineering (AIMS Press). 2021;18(6).

- 31.Munien C., Viriri S. Classification of hematoxylin and eosin-stained breast cancer histology microscopy images using transfer learning with EfficientNets. Computational Intelligence and Neuroscience. 2021;2021:17. doi: 10.1155/2021/5580914. Article ID 5580914.

- 32.Magee D., Treanor D., Crellin D., Shires M. Colour normalisation in digital histopathology images. Proceedings of the Optical Tissue Image Analysis in Microscopy, Histopathology and Endoscopy (MICCAI Workshop); 2009; pp. 100–111.

- 33.Reinhard E., Ashikhmin M., Gooch B., Shirley P. Color transfer between images. IEEE Computer Graphics and Applications. 2001;21(4):34–41. doi: 10.1109/38.946629.

- 34.Janowczyk A., Basavanhally A., Madabhushi A. Stain normalization using sparse autoencoders (StaNoSA): application to digital pathology. Computerized Medical Imaging and Graphics. 2017;57:50–61. doi: 10.1016/j.compmedimag.2016.05.003.

- 35.Tan M., Le Q. EfficientNet: rethinking model scaling for convolutional neural networks. International Conference on Machine Learning. 2019:6105–6114.

- 36.Macenko M., Niethammer M., Marron J. S., Borland D. A method for normalizing histology slides for quantitative analysis. Proceedings of the IEEE International Symposium on Biomedical Imaging: From Nano to Macro; June 2009; Boston, MA, USA.

- 37.Simonyan K., Zisserman A. Very deep convolutional networks for large-scale image recognition. 2014. https://arxiv.org/abs/1409.1556 .

- 38.Krizhevsky A., Sutskever I., Hinton G. E. ImageNet classification with deep convolutional neural networks. Advances in Neural Information Processing Systems. 2012;25:1097–1105.

- 39.He K., Zhang X., Ren S., Sun J. Deep residual learning for image recognition. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition; June 2016; Las Vegas, NV, USA. pp. 770–778.

- 40.Szegedy C., Vanhoucke V., Ioffe S. Rethinking the inception architecture for computer vision. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition; June 2016; Las Vegas, NV, USA. pp. 2818–2826.

- 41.Canayaz M. MH-COVIDNet: diagnosis of COVID-19 using deep neural networks and meta-heuristic-based feature selection on X-ray images. Biomedical Signal Processing and Control. 2021;64:102257. doi: 10.1016/j.bspc.2020.102257.

- 42.Narin A. Accurate detection of COVID-19 using deep features based on X-ray images and feature selection methods. Computers in Biology and Medicine. 2021;137:104771. doi: 10.1016/j.compbiomed.2021.104771.

- 43.Deif M. A., Attar H., Amer A., Issa H., Khosravi M. R., Solyman A. A. A. A new feature selection method based on hybrid approach for colorectal cancer histology classification. Wireless Communications and Mobile Computing. 2022;2022:14. doi: 10.1155/2022/7614264. Article ID 7614264.

- 44.Deif M. A., Solyman A. A. A., Alsharif M. H., Jung S., Hwang E. A hybrid multi-objective optimizer-based SVM model for enhancing numerical weather prediction: a study for the Seoul metropolitan area. Sustainability. 2021;14(1). doi: 10.3390/su14010296.

- 45.Kumari K., Singh J. P., Dwivedi Y. K., Rana N. P. Multi-modal aggression identification using convolutional neural network and binary particle swarm optimization. Future Generation Computer Systems. 2021;118:187–197. doi: 10.1016/j.future.2021.01.014.

- 46.Hammam R. E., Attar H., Amer A., Issa H. Prediction of wear rates of UHMWPE bearing in hip joint prosthesis with support vector model and grey wolf optimization. Application of Neural Network in Mobile Edge Computing. 2022;2022:16. doi: 10.1155/2022/6548800. Article ID 6548800.

- 47.Deif M., Hammam R., Ahmed S. Adaptive neuro-fuzzy inference system (ANFIS) for rapid diagnosis of COVID-19 cases based on routine blood tests. International Journal of Intelligent Engineering and Systems. 2021;14.

- 48.Alves F. R. V., Machado Vieira R. P. The Newton fractal’s Leonardo sequence study with the Google Colab. International Electronic Journal of Mathematics Education. 2019;15(2):em0575. doi: 10.29333/iejme/6440.

- 49.Van Rossum G. Python programming language. Proceedings of the USENIX Annual Technical Conference; 2007.

- 50.Gulli A., Pal S. Deep Learning with Keras. Birmingham, United Kingdom: Packt Publishing Ltd; 2017.

- 51.Khanagar S. B., Naik S., Al Kheraif A. A., et al. Application and performance of artificial intelligence technology in oral cancer diagnosis and prediction of prognosis: a systematic review. Diagnostics. 2021;11(6). doi: 10.3390/diagnostics11061004.

- 52.Uthoff R. D., Song B., Sunny S., et al. Point-of-care, smartphone-based, dual-modality, dual-view, oral cancer screening device with neural network classification for low-resource communities. PLoS One. 2018;13(12):e0207493. doi: 10.1371/journal.pone.0207493.

- 53.Jeyaraj P. R., Samuel Nadar E. R., Edward R. S. N. Computer-assisted medical image classification for early diagnosis of oral cancer employing deep learning algorithm. Journal of Cancer Research and Clinical Oncology. 2019;145(4):829–837. doi: 10.1007/s00432-018-02834-7.

- 54.Jubair F., Al‐karadsheh O., Malamos D., Al Mahdi S., Saad Y., Hassona Y. A novel lightweight deep convolutional neural network for early detection of oral cancer. Oral Diseases. 2022;28(4):1123–1130. doi: 10.1111/odi.13825.

- 55.Sunny S., Baby A., James B. L., et al. A smart tele-cytology point-of-care platform for oral cancer screening. PLoS One. 2019;14(11):e0224885. doi: 10.1371/journal.pone.0224885.

- 56.Rathod J., Sherkay S., Bondre H., Sonewane R. Oral cancer detection and level classification through machine learning. Int. J. Adv. Res. Comput. Commun. Eng. 2020;9:177–182.

- 57.Alabi R. O., Elmusrati M., Sawazaki‐Calone I., et al. Comparison of supervised machine learning classification techniques in prediction of locoregional recurrences in early oral tongue cancer. International Journal of Medical Informatics. 2020;136:104068. doi: 10.1016/j.ijmedinf.2019.104068.

- 58.Alhazmi A., Alhazmi Y., Makrami A., et al. Application of artificial intelligence and machine learning for prediction of oral cancer risk. Journal of Oral Pathology & Medicine. 2021;50(5):444–450. doi: 10.1111/jop.13157.

- 59.Chu C. S., Lee N. P., Adeoye J., Thomson P., Choi S. Machine learning and treatment outcome prediction for oral cancer. Journal of Oral Pathology & Medicine. 2020;49(10):977–985. doi: 10.1111/jop.13089.

- 60.Karadaghy O. A., Shew M., New J., Bur A. M. Development and assessment of a machine learning model to help predict survival among patients with oral squamous cell carcinoma. JAMA Otolaryngology–Head & Neck Surgery. 2019;145(12):1115–1120. doi: 10.1001/jamaoto.2019.0981.

Data Availability Statement

The data used to support the findings of the study can be obtained from the first author (e-mail: mohand.deif@eng.mti.edu.eg) upon request.