Abstract

Developments in artificial intelligence (AI) have led to an explosion of studies exploring its application to cardiovascular medicine. Because the diagnosis and management of valvular heart disease require considerable training and expertise, this is one area where AI could be particularly impactful, as AI can be applied to the multitude of data generated by clinical assessment, imaging and biochemical testing during patient care. In valvular heart disease, the focus of AI has been on echocardiographic assessment and on phenotyping patient populations to identify high-risk groups. AI can assist image acquisition, identify views for review, and segment valve and cardiac structures for automated analysis. Using image recognition algorithms, aortic and mitral valve disease states have been detected directly from the images themselves. Measurements obtained during echocardiographic valvular assessment have been integrated with other clinical data to identify novel aortic valve disease subgroups and to describe new predictors of aortic valve disease progression. In the future, AI could integrate echocardiographic parameters with other clinical data for precision management of patients with valvular heart disease.

Keywords: echocardiography, heart valve diseases

Introduction

The global incidence of valvular heart disease (VHD) has increased by 45% in the last 30 years, with an annual incidence of 401 new cases per 100 000 people.1 This increase is due to an expanding ageing population and the associated rise in age-related VHD.1 Echocardiography is the most common imaging modality used to identify patients with VHD, as it is non-invasive, portable, widely available and cost-effective, and provides real-time assessment of cardiac structure and function.2 Currently, over seven million echocardiograms are performed annually in North America.3 4 Despite this, there is evidence that VHD remains underdiagnosed in many patients.5 Merely increasing the number of echocardiograms performed to screen the millions of people at risk of developing VHD is not feasible within current clinical practice paradigms and budgetary limits.6 Even the advent of handheld/point-of-care ultrasound machines may not address this need, as acquiring and interpreting diagnostic-quality images for VHD requires training and expertise.7–9 Moreover, busy clinicians must integrate multimodal imaging with clinical and biochemical patient data for decision-making.

Developments in the field of artificial intelligence (AI) hold great promise for transforming how patients with VHD are assessed and managed, as AI can emulate the complex, multimodal decision-making required (figure 1). AI is already changing how echocardiographic images are acquired, processed and quantified. AI methods can also be applied to the wealth of information contained in the images, measurements and clinical data that is not currently considered during assessment. In this review, we discuss the emerging work of AI in VHD assessment. First, we summarise AI concepts related to medical imaging and the contemporary implementation of AI in echocardiographic valvular image assessment. We then examine the AI methods used for phenotyping VHD and assess the studies in this area. Finally, we discuss future directions for AI in echocardiography and valvular assessment.

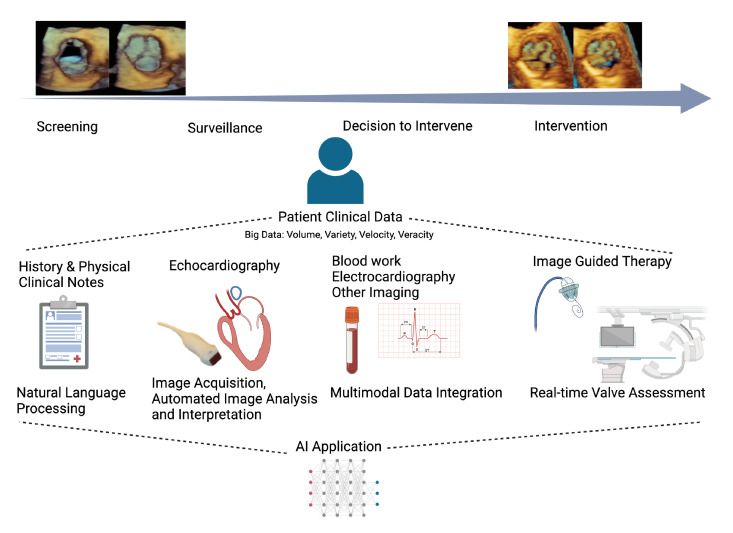

Figure 1.

Pathway of a patient with valvular heart disease and areas of care where AI can improve assessment and management. The top left and right images are three-dimensional TTE images of the aortic valve in short axis during systole and diastole representing progression from a normal to a diseased state. Below are the stages of care (screening, surveillance, decision to intervene, intervention). AI can be applied to any type of patient data (eg, clinical notes, echo images) obtained at any of these stages. In turn, the collective set of data can be used by AI to improve management at various care stages. AI, artificial intelligence; TTE, transthoracic echocardiogram.

AI in cardiac imaging

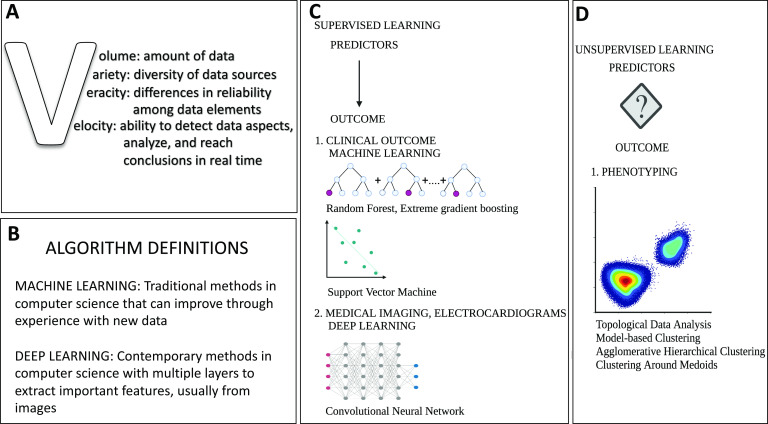

AI encompasses methods used to identify patterns of association between predictors and outcomes. Its power comes from its ability to find these associations in large amounts of data and, with no prior knowledge of the associations, to model non-linear relationships between a wide variety of predictors and an outcome of interest. These large amounts of data, termed ‘big data’, are characterised by the 4Vs: volume, variety, velocity and veracity (figure 2).10 Patient data collected today can be considered ‘big data’, and AI is potent in its ability to integrate multiple data types and generate predictions using clinical, imaging, electrophysiological and genomic information. With improved access to computing power, and therefore the capacity to process large amounts of data, AI can perform complex decision-making in a fraction of the time needed by humans.11

Figure 2.

(A) Characteristics of big data. (B) Common AI definitions. (C) Common model architectures used in AI depend on the purpose of modelling. With supervised learning, predictors are mapped to a known outcome. When the outcomes of interest are clinical, machine learning methods such as random forest and support vector machine are used. When the outcome of interest is imaging-based, then deep learning methods such as convolutional neural networks are used. (D) With unsupervised learning, the predictors are visualised on a plot to find natural clustering of the data. A typical use in valve disease studies has been in phenotyping to identify higher risk phenotypes. Methods used with unsupervised learning include topological data analysis, model-based clustering, agglomerative hierarchical clustering and clustering around medoids. AI, artificial intelligence.

Depending on the type of problem, different AI algorithms can be applied to clinical and imaging data (figure 2). However, the most widely implemented model has been the convolutional neural network (CNN), owing to its success in medical imaging. CNN architecture is loosely modelled on the visual cortex of the brain and works by identifying the image features that are crucial for recognising an image. By applying different filters, or sieves, to an image, image features can be extracted and correlated with the outcome of interest. This form of modelling can be extremely accurate but requires significant computational power and many images to train a model to build associations.12
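To make the filter idea concrete, the sketch below slides a small 3×3 filter over a toy image and keeps the positive responses, which is the basic operation inside a single CNN layer. It is a minimal pure-Python illustration, not the architecture of any model cited in this review; the toy image, the hand-written vertical-edge filter and the function names `conv2d_valid` and `relu` are hypothetical. In a real CNN the filter weights are learnt from labelled images rather than written by hand, and many filters are stacked in successive layers.

```python
from typing import List

Image = List[List[float]]


def conv2d_valid(image: Image, kernel: Image) -> Image:
    """Slide `kernel` over `image` (no padding, stride 1) and return the feature map.

    As in most deep learning libraries, this is technically cross-correlation:
    the kernel is not flipped before it is applied.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    feature_map = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            # Weighted sum of the image patch currently under the kernel.
            total = 0.0
            for i in range(kh):
                for j in range(kw):
                    total += image[r + i][c + j] * kernel[i][j]
            row.append(total)
        feature_map.append(row)
    return feature_map


def relu(feature_map: Image) -> Image:
    """Keep positive responses only (the usual CNN non-linearity)."""
    return [[max(0.0, v) for v in row] for row in feature_map]


# Hypothetical 5x5 image: dark blood pool (0) on the left, bright tissue (1) on the right.
toy_image = [[0, 0, 1, 1, 1] for _ in range(5)]

# Hand-written vertical-edge filter; a trained CNN would learn such weights from data.
vertical_edge = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]

edges = relu(conv2d_valid(toy_image, vertical_edge))
```

Here the filter responds only where dark pixels meet bright ones, giving a feature map whose rows are each `[3.0, 3.0, 0.0]`; a CNN combines many such maps to build progressively more abstract features.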

AI is currently encountered in automated ECG interpretation, cardiac CT and MRI chamber measurements, and most recently two-dimensional (2D) echocardiography strain analysis and Doppler tracing.13 Given the dominant role of echocardiography in VHD, this review will focus on this modality. Echocardiograms are ideal for AI applications, as each echocardiographic study contains several acquisition modes, multiple views and numerous frames, generating a large amount of data of which only a fraction is clinically appreciated. Applying AI to these big data can improve echocardiographic valve imaging assessment and enable identification of novel disease markers through phenotyping.

AI to improve echocardiographic image valve assessment

The application of AI to echocardiographic images in patients with VHD falls into four main categories: (1) image acquisition, (2) view recognition, (3) image segmentation and (4) disease state identification.

Image acquisition

In patients with VHD, the echocardiographic study is focused on acquiring images that allow the diagnosis of valve disease severity and the impact on related cardiac structures. Thus, in addition to the cardiac chambers, acquired images should allow clear visualisation of the valve leaflets/cusps, the jet origin and extent in regurgitant lesions, the source of the flow acceleration for stenotic lesions, and the complete continuous wave (CW) Doppler signal of the maximal flows. Acquisition of such images requires training, especially when regurgitant jets are eccentric or wall-hugging or gradients are highest in non-traditional off-axis planes. Some laboratories have addressed specific quality issues such as the acquisition of maximal aortic stenosis (AS) gradients by implementing ‘buddy’ systems.14 However, this is time- and labour-intensive.

AI has the potential to improve valve assessments through the development of programs that guide image acquisition. Currently, the focus of such AI-assisted image acquisition has been on basic non-colour images such as the parasternal or apical views.15 16 One such AI algorithm was assessed by comparing the quality of images acquired by novice nurses scanning patients with AI guidance against those acquired by expert sonographers.15 The percentages of evaluable images of the aortic, mitral and tricuspid valves obtained by the novice users were 91.7%, 96.3% and 83.3%, respectively. Future iterations of these early-stage programs could be extended to patients with mitral or tricuspid regurgitation or used to guide Doppler interrogation in AS.

View identification

Similar to its current use in identifying left ventricular (LV) views, AI could improve valve assessment by identifying images containing valve data, allowing them to be read in ‘stacks’, enabling automated measurements, and even aiding interpretation using current guideline criteria.9 This could offer significant time savings and potentially improve report quality by increasing agreement between readers on severity assessment, which can be as low as 61% for mitral regurgitation (MR) severity.17 The first step for such AI programs would be to identify the views that include valve information.18 While many papers have been published on standard view identification, few have addressed specific valve anatomy or Doppler signals. One publication described using AI to identify and track mitral and tricuspid valve leaflets in the apical four-chamber view to detect the presence of pathology.19 This program detected mitral valve leaflets with an accuracy of 98% and tricuspid valve leaflets with an accuracy of 90%. Studies have also reported an overall success rate of 94% in identifying Doppler data.20 A separate AI program identified CW Doppler signal images more accurately (98%) than pulsed-wave (PW) images (83%), because a PW signal can look similar to a faint CW signal.21
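Per-view accuracies such as those quoted above are typically computed by comparing each image's predicted label with its expert label, class by class. The sketch below assumes simple string labels ('CW', 'PW') and hypothetical classifier output for eight images; the function names are illustrative. Tallying which wrong labels each class receives is how a tendency to call faint PW signals CW would become visible.

```python
from collections import Counter, defaultdict
from typing import Dict, Sequence


def per_class_accuracy(true_views: Sequence[str], predicted_views: Sequence[str]) -> Dict[str, float]:
    """Fraction of images of each true class that were labelled correctly."""
    if len(true_views) != len(predicted_views):
        raise ValueError("label lists must have the same length")
    totals = Counter(true_views)
    correct = Counter(t for t, p in zip(true_views, predicted_views) if t == p)
    return {view: correct[view] / n for view, n in totals.items()}


def confusions(true_views: Sequence[str], predicted_views: Sequence[str]) -> Dict[str, Counter]:
    """For each true class, count the wrong labels it received."""
    table: Dict[str, Counter] = defaultdict(Counter)
    for t, p in zip(true_views, predicted_views):
        if t != p:
            table[t][p] += 1
    return dict(table)


# Hypothetical expert labels and classifier output for eight Doppler images.
expert = ["CW", "CW", "CW", "CW", "PW", "PW", "PW", "PW"]
model = ["CW", "CW", "CW", "CW", "PW", "PW", "CW", "PW"]

accuracy_by_view = per_class_accuracy(expert, model)  # {'CW': 1.0, 'PW': 0.75}
errors = confusions(expert, model)                    # {'PW': Counter({'CW': 1})}
```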

Image segmentation

AI-driven automated image analysis to provide measurements would greatly increase the use of quantitative assessment and improve accuracy and reproducibility. This can be achieved through image segmentation, which refers to recognising a specific structure in the image, identifying its boundaries and performing measurements. Image segmentation can be applied to 2D and three-dimensional (3D) echocardiographic chamber images to automate size and function measurements. Most of this work has been performed using labelled images, although some studies have developed programs that do not require manual image delineation.22–24 Segmentation can also be performed on the valve annulus, leaflets/cusps, regurgitant jets and Doppler spectral profiles.

Valve annulus and leaflet

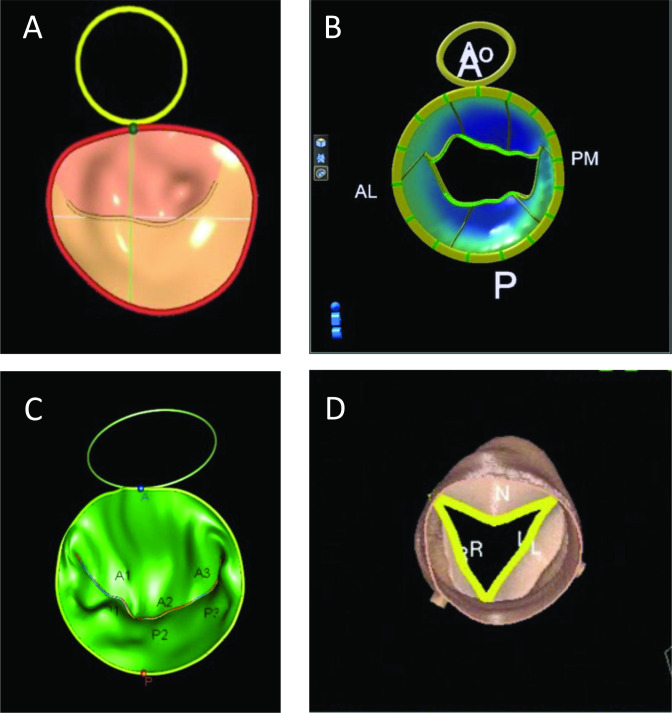

Commercial and non-commercial programs have been developed that use AI methods to provide automated valve measurements from 3D aortic, mitral and tricuspid echocardiographic images (table 1). It must be noted that early programs in this area were based on computational methods, which apply mathematical rules for automation, rather than AI methods such as CNNs. Due to the proprietary nature of commercial software packages, details on the included AI algorithms are not available, although it is likely that current iterations include some form of AI analytics (online supplemental table 1).25–27 Overall, these commercial packages have a few limitations. Some are technically semiautomated processes that require expert initialisation and others can only be applied to images generated from echocardiographic machines produced by the same vendor (figure 3).27

Table 1.

Summary of AI applications by valve

| Valve | Pathology | Image acquisition | View identification | Image segmentation | Disease state identification | Phenotyping |
|---|---|---|---|---|---|---|
| Aortic | Stenosis | x | x | x | x | |
| Aortic | Regurgitation | x | x | x | | |
| Mitral | Stenosis | x | x | x | x | |
| Mitral | Regurgitation | x | x | x | x | |
| Pulmonary | Stenosis | No current literature. | | | | |
| Pulmonary | Regurgitation | No current literature. | | | | |
| Tricuspid | Stenosis | x | x | x | | |
| Tricuspid | Regurgitation | x | x | x | | |

AI, artificial intelligence.

Figure 3.

Example images of commercial valve analysis software. Mitral valve models from (A) GE, (B) Philips and (C) TomTec. (D) An aortic valve model from Siemens. A, anterior; AL, anterolateral; Ao, aorta; L, left coronary cusp; N, non-coronary cusp; P, posterior; PM, posteromedial; R, right coronary cusp.


Non-commercial programs have also been developed to aid valve annular and leaflet segmentation (table 2). These programs focus on CNN development using mitral valve images. Their Dice coefficients (a measure of overlap between the automated and reference segmentations, ranging from 0 for no overlap to 1 for perfect agreement) for mitral valve segmentation were modest to good, ranging from 0.48 to 0.79.28 Relative errors of the automated measurements were low, at 6.1%±4.5% for annular perimeter and 11.94%±10% for annular area.29 The strength of these algorithms is their performance on low-quality images, while their limitations arise from overestimation of mitral valve borders and false structure identification caused by image artefact.
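The Dice coefficient compares an automated mask A with a reference mask B as 2|A ∩ B| / (|A| + |B|). The sketch below computes it for two small hypothetical binary masks in which the automated border overestimates the leaflet by one pixel and misses another, mirroring the failure mode described above. The function name and masks are illustrative, and returning 1.0 for two empty masks is a convention we assume rather than a universal rule.

```python
from typing import Sequence

Mask = Sequence[Sequence[int]]


def dice_coefficient(predicted: Mask, reference: Mask) -> float:
    """Dice = 2|A ∩ B| / (|A| + |B|) for binary masks A (automated) and B (reference)."""
    if len(predicted) != len(reference) or any(
        len(p) != len(r) for p, r in zip(predicted, reference)
    ):
        raise ValueError("masks must have the same shape")
    overlap = pred_area = ref_area = 0
    for pred_row, ref_row in zip(predicted, reference):
        for p, r in zip(pred_row, ref_row):
            pred_area += 1 if p else 0
            ref_area += 1 if r else 0
            overlap += 1 if (p and r) else 0
    if pred_area + ref_area == 0:
        return 1.0  # assumed convention: two empty masks agree perfectly
    return 2 * overlap / (pred_area + ref_area)


# Hypothetical 3x4 masks of a leaflet: 1 = leaflet pixel, 0 = background.
reference_mask = [
    [0, 1, 1, 0],
    [0, 1, 1, 0],
    [0, 0, 0, 0],
]
# The automated mask overestimates the border by one pixel and misses one.
automated_mask = [
    [0, 1, 1, 1],
    [0, 1, 0, 0],
    [0, 0, 0, 0],
]

score = dice_coefficient(automated_mask, reference_mask)  # 2*3 / (4+4) = 0.75
```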

Table 2.

Non-commercial AI-driven algorithms for valvular detection in echocardiography

| Authors (year) | Data | Training data population | Outcome of interest | Algorithm used | Findings |
|---|---|---|---|---|---|
| Vafaeezadeh et al (2021)35 | 2044 TTE studies: 1597 had normal valves and 447 had prosthetic valves. | Patients with normal mitral valves and mitral valve prostheses (both mechanical and biological). | Identification of prosthetic mitral valves from echo images. | 13 pretrained models with CNN architecture, fine-tuned via transfer learning. | All models performed with excellent accuracy (>98%); EfficientNetB3 had the best AUC (99%) for the A4C view and EfficientNetB4 had the best AUC (99%) for the PLAX view. However, these models were computationally more expensive for a small gain in AUC, so the authors concluded that EfficientNetB2 was the best model for this task. |
| Corinzia et al (2020)50 | Training: 39 2D TTE. Test: 46 2D echos from the EchoNet-Dynamic public echo data set. | Patients undergoing MitraClip; all patients had moderate to severe or severe MR. | Fully automated delineation of the mitral valve annulus and both MV leaflets. | NN-MitralSeg, an unsupervised MV segmentation algorithm based on neural collaborative filtering. | This model outperformed state-of-the-art unsupervised and supervised methods (NeuMF MF Dice coefficient of 0.482, with benchmark performance of 0.447), with best performance on low-quality videos or videos with sparse annotation. |
| Andreassen et al (2020)29 | 111 multiframe recordings from 3D TEE echocardiograms. | 4D echocardiographic images of the mitral valve. | Fully automated method for mitral annulus segmentation on 3D echocardiography. | CNN, specifically a U-Net architecture. | With no manual input, this method gave results comparable with those of methods requiring manual input (relative error of 6.1%±4.5% for perimeter measurements and 11.94%±10% for area measurements). |
| Costa et al (2019)28 | Training: 21 2D TTE echo videos in PLAX, 22 videos in A4C. Test: 6 videos in PLAX and A4C. | PLAX and A4C views from echos. | Automatic segmentation of mitral valve leaflets. | CNN, specifically a U-Net architecture. | This model is the first of its kind to perform segmentation of valve leaflets. For the AMVL, the median Dice coefficient was 0.742 in PLAX and 0.795 in A4C. For the PMVL, the median Dice coefficient was 0.60 in PLAX and 0.69 in A4C. Cardiologists then scored the segmentation quality on a scale from 0 to 2, with a pooled score of 0.781, suggesting reasonable segmentation quality. |

A4C, apical 4-chamber view; AI, artificial intelligence; AMVL, anterior mitral valve leaflet; AUC, area under the receiver operating characteristic curve; CNN, convolutional neural network; 2D, two-dimensional; 3D, three-dimensional; 4D, four-dimensional; MR, mitral regurgitation; MV, mitral valve; PLAX, parasternal long-axis view; PMVL, posterior mitral valve leaflet; TEE, transoesophageal echocardiogram; TTE, transthoracic echocardiogram.

Doppler

Only one study has applied AI segmentation to colour Doppler images. Zhang et al 22 studied 1132 patients with expert reader-defined MR, ranging from mild to severe, to train an algorithm that quantifies MR from 2D colour Doppler images. On an external validation data set of 295 patients, classification accuracy was 0.90, 0.89 and 0.91 for mild, moderate and severe MR, respectively. Similarly, little has been published on AI automation of CW and PW measurements. In one publication, AI-automated CW and PW measurements of peak velocity, mean gradient and velocity time integral correlated excellently with those of a board-certified echocardiographer, with all correlation coefficients greater than 0.9.30 Commercial software has also been developed to perform semiautomated 3D proximal isovelocity surface area (PISA) measurements with good accuracy and reproducibility, partly because multiple measurements can be made, although it is unclear whether AI is used in the modelling.31
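Once a Doppler envelope has been traced, which is the step an AI program would automate, peak velocity, mean gradient and velocity time integral follow from standard formulas, and the conventional 2D PISA method gives the effective regurgitant orifice area (EROA). The sketch below assumes the trace is already available as velocities sampled at a fixed interval; the function names and all input values are hypothetical. It uses the hemispheric PISA assumption, whereas 3D PISA methods aim to measure the isovelocity surface directly.

```python
import math
from typing import Dict, List


def doppler_measurements(velocities_m_s: List[float], dt_s: float) -> Dict[str, float]:
    """Standard measurements from a traced CW Doppler envelope.

    velocities_m_s: envelope velocities (m/s) sampled every dt_s seconds across
    one ejection period, eg, an aortic stenosis jet. The trace itself is assumed
    to be given (hypothetical input); this function only does the arithmetic.
    """
    if len(velocities_m_s) < 2 or dt_s <= 0:
        raise ValueError("need at least two samples and a positive sampling interval")
    speeds = [abs(v) for v in velocities_m_s]
    peak = max(speeds)
    # Velocity time integral by the trapezoidal rule (m), reported in cm.
    vti_m = sum((a + b) / 2 * dt_s for a, b in zip(speeds, speeds[1:]))
    # Simplified Bernoulli equation: gradient (mmHg) = 4 * v^2, with v in m/s.
    # The mean gradient averages the instantaneous gradients; it is not 4 * (mean v)^2.
    gradients = [4 * v * v for v in speeds]
    return {
        "peak_velocity_m_s": peak,
        "peak_gradient_mmHg": 4 * peak * peak,
        "mean_gradient_mmHg": sum(gradients) / len(gradients),
        "vti_cm": vti_m * 100,
    }


def pisa_eroa_cm2(radius_cm: float, aliasing_velocity_cm_s: float, peak_velocity_cm_s: float) -> float:
    """EROA with the hemispheric PISA assumption: EROA = 2 * pi * r^2 * Va / Vmax."""
    return 2 * math.pi * radius_cm ** 2 * aliasing_velocity_cm_s / peak_velocity_cm_s


# Hypothetical, coarsely sampled AS envelope (every 50 ms) and hypothetical MR PISA inputs.
as_jet = doppler_measurements([0.0, 2.0, 4.0, 2.0, 0.0], dt_s=0.05)
eroa = pisa_eroa_cm2(radius_cm=1.0, aliasing_velocity_cm_s=40.0, peak_velocity_cm_s=500.0)
```

The coarse five-sample trace is only for readability; real envelopes are sampled far more densely, which makes both the trapezoidal VTI and the averaged gradient closer to the true time integrals.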

Disease state identification

Deep learning approaches are powerful because they can automatically encode features from data, including features beyond human perception.32 For disease state identification, echo images do not need to pass through the traditional AI workflow of view identification and segmentation, as disease states can be linked directly to the echocardiographic images. Using a cohort of 139 patients with no, mild, moderate and severe MR, Moghaddasi and Nourian33 developed an algorithm that automatically grades MR severity with accuracies of 99.52%, 99.38%, 99.31% and 99.59% for normal, mild, moderate and severe MR, respectively. Similarly, AI programs can automatically identify rheumatic heart disease involving the aortic and/or mitral valves with 72.77% accuracy.34 These algorithms can also recognise prosthetic mitral valves, as demonstrated by Vafaeezadeh et al,35 who developed and tested 13 different CNN algorithms, all of which had excellent area under the receiver operating characteristic curve (AUC) values of at least 98%.
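The AUC values quoted here summarise how well a model's output scores separate two classes. One standard way to compute the AUC is as the probability that a randomly chosen positive case scores higher than a randomly chosen negative case, with ties counting one half (the Mann–Whitney formulation). The sketch below uses hypothetical scores for five images, with 1 marking a prosthetic valve; it is illustrative and not the evaluation code of any cited study.

```python
from itertools import product
from typing import Sequence


def auc(scores: Sequence[float], labels: Sequence[int]) -> float:
    """Area under the ROC curve as P(score of a positive > score of a negative).

    Ties count one half. This pairwise version is O(n_pos * n_neg), which is
    fine for illustration but slow for large data sets.
    """
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative case")
    wins = 0.0
    for p, n in product(pos, neg):
        if p > n:
            wins += 1.0
        elif p == n:
            wins += 0.5
    return wins / (len(pos) * len(neg))


# Hypothetical classifier scores; label 1 = prosthetic mitral valve, 0 = native valve.
image_scores = [0.9, 0.8, 0.35, 0.6, 0.2]
image_labels = [1, 1, 1, 0, 0]

example_auc = auc(image_scores, image_labels)  # 5 of 6 positive-negative pairs ranked correctly
```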

AI VHD phenotyping

During a routine echocardiogram, a large volume of potentially diagnostic data is generated, and this volume increases further with 3D imaging and speckle tracking strain analysis. The totality of available data can be difficult for the busy cardiologist to parse and interpret, and is likely underutilised.36 It is unknown how many ‘hidden’ variables exist within an echocardiogram, and AI can help discover their value.7 This is especially relevant in VHD, where assessment currently focuses predominantly on valve haemodynamics, even though the cardiac changes that occur in response to VHD could also inform severity assessment. Using AI for phenotyping allows identification of novel disease groups and novel predictors of these groups. There have been considerable efforts in phenotyping VHD, as practitioners increasingly recognise the heterogeneity within current classification groupings. Phenotyping can help identify high-risk subgroups that may require more timely intervention.

Methodological considerations for phenotyping in studies in valve disease

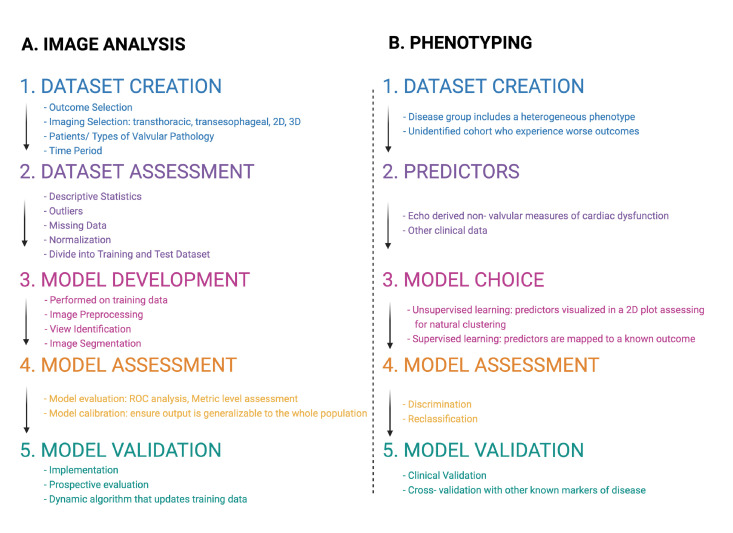

Five methodological components are helpful when evaluating phenotyping studies (figure 4). First, in determining the inclusion criteria, the disease group should present a heterogeneous phenotype with a subgroup that experiences worse outcomes. Attention should be paid to inherent biases, such as those related to sex or race, that can affect the population included in a data set.37 Second, predictors should be derived from various data sources, as using AI to amalgamate data from echocardiograms, other imaging, ECG and clinical data can improve identification of high-risk groups through greater data granularity. Third, for algorithm choice, unsupervised learning can be used to derive clusters that can be compared with one another to identify high-risk groups and novel predictors of these groups. Fourth, performance metrics should quantify the improvement in classification over existing approaches,38 for example with integrated discrimination improvement and net reclassification improvement. Fifth, model validation is important to ensure that the model performs on examples not used in training and generalises to its task. This step matters because training data can be skewed and contribute to bias in modelling. Validation can take many forms and should be tailored to the purpose of the modelling.
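The AS phenotyping studies in this review report integrated discrimination improvement (IDI) and net reclassification improvement (NRI) when comparing a new risk model with a conventional one. The sketch below shows one common way to compute both from each model's predicted probabilities. It uses the category-free (continuous) form of NRI; we do not know whether the cited studies used this form or a category-based version, and all probabilities below are hypothetical.

```python
from typing import Sequence, Tuple


def _mean(values: Sequence[float]) -> float:
    return sum(values) / len(values)


def idi_and_nri(
    p_old: Sequence[float], p_new: Sequence[float], events: Sequence[int]
) -> Tuple[float, float]:
    """Return (IDI, category-free NRI) for a new risk model versus an old one.

    p_old / p_new: predicted event probabilities from each model for the same patients.
    events: 1 if the patient had the outcome (eg, AVR or death), else 0.
    """
    ev = [i for i, e in enumerate(events) if e == 1]
    non = [i for i, e in enumerate(events) if e == 0]
    if not ev or not non:
        raise ValueError("need both events and non-events")

    # IDI: gain in mean predicted risk among events minus gain among non-events.
    idi = (_mean([p_new[i] for i in ev]) - _mean([p_old[i] for i in ev])) - (
        _mean([p_new[i] for i in non]) - _mean([p_old[i] for i in non])
    )

    def net_up(indices: Sequence[int]) -> float:
        """Proportion moved up minus proportion moved down (unchanged counts as neither)."""
        up = sum(1 for i in indices if p_new[i] > p_old[i])
        down = sum(1 for i in indices if p_new[i] < p_old[i])
        return (up - down) / len(indices)

    # Events should move up and non-events should move down.
    nri = net_up(ev) - net_up(non)
    return idi, nri


# Hypothetical predictions for four patients, two of whom had the outcome.
outcome = [1, 1, 0, 0]
old_model = [0.6, 0.4, 0.3, 0.2]
new_model = [0.8, 0.5, 0.2, 0.3]

idi, nri = idi_and_nri(old_model, new_model, outcome)  # IDI about 0.15, NRI 1.0
```

Both metrics are relative: they describe how much the new model improves on the old one in the same patients, not how good either model is on its own.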

Figure 4.

(A) Deep learning workflow in automated image analysis. (B) A stepwise approach to assessing machine learning phenotyping studies, from the study population and data/predictor selection to algorithm choice and assessment metrics. 2D, two-dimensional; 3D, three-dimensional; ROC, receiver operating characteristic.

AI phenotypic studies in aortic valve disease

VHD phenotyping using machine learning (ML) is an emerging field, with only three studies published, all in AS (table 3). One further study in table 3, not discussed in detail, identified aneurysmal proximal aorta phenotypes in 656 patients with bicuspid aortic valve (AV) disease using CT.39 The three AS papers, discussed in further detail below, all investigated heterogeneity in patients with AS to identify high-risk subgroups.

Table 3.

Phenotyping studies using echocardiographic-derived parameters

| Authors (year) | Patients (n) | Training data | Outcome of interest | Inclusion criteria | Algorithm used | Findings | Validation |
|---|---|---|---|---|---|---|---|
| Wojnarski et al (2017)39 | 656 patients. | | | | | | |
| Kwak et al (2020)42 | Training data: 398 patients. Validation data: 262 patients. | | | | | | |
| Sengupta et al (2021)41 | 1052 patients with CT, MRI and echos from three centres, prospective cohort. | | | | | | |
| Casaclang-Verzosa et al (2019)40 | Training data: 346 patients with mild to severe AS. Validation data: 155 mice at 3, 6, 9 and 12 months of age. | | | | | | |

AS, aortic stenosis; AUC, area under the receiver operating characteristic curve; AV, aortic valve; AVA, aortic valve area; AVR, aortic valve replacement; BMI, body mass index; BNP, brain natriuretic peptide; EF, ejection fraction; LV, left ventricular; LVEF, left ventricular ejection fraction; ML, machine learning.

Casaclang-Verzosa et al 40 used unsupervised ML to create a patient–patient similarity network describing the progression from mild to severe AS in 346 patients, using 79 clinical and echocardiographic variables. A Reeb graph, in which distances between patients define their similarities, was created using topological data analysis. Two subtypes of patients with moderate AS were visualised: one group was more often male, with lower ejection fraction and more coronary artery disease, while the other had lower peak AV velocities and mean gradients but higher LV mass indexes and left atrial volumes. At follow-up after aortic valve replacement (AVR), the patients' loci in the Reeb graph regressed from the severe to the mild position. The model was then validated in a murine model of AS, with findings similar to the human Reeb graph. This analysis identified a subset of patients with moderate AS who experience aggressive deterioration of LV function. This superior stratification supports managing the disease according to changes in LV and AV function along a continuum.
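Topological data analysis is often implemented with the Mapper algorithm, which builds a graph that approximates the Reeb graph of the data: patients are ordered by a chosen ‘lens’ variable, the lens range is covered by overlapping intervals, patients within each interval are clustered, and clusters that share patients are linked. The sketch below is a simplified, hypothetical Mapper in pure Python; we do not know the lens, cover or clustering settings used by the cited authors, and the six ‘patients’, their two variables and all parameter values are invented for illustration.

```python
import math
from itertools import combinations
from typing import List, Sequence, Set, Tuple

Point = Sequence[float]


def _single_linkage(indices: List[int], points: Sequence[Point], eps: float) -> List[Set[int]]:
    """Split `indices` into groups of points chained together by distances <= eps."""
    clusters: List[Set[int]] = []
    unvisited = set(indices)
    while unvisited:
        seed = unvisited.pop()
        cluster, frontier = {seed}, [seed]
        while frontier:
            i = frontier.pop()
            near = {j for j in unvisited if math.dist(points[i], points[j]) <= eps}
            unvisited -= near
            cluster |= near
            frontier.extend(near)
        clusters.append(cluster)
    return clusters


def mapper_graph(
    points: Sequence[Point],
    lens: Sequence[float],
    n_intervals: int,
    overlap: float,
    eps: float,
) -> Tuple[List[Set[int]], Set[Tuple[int, int]]]:
    """Simplified Mapper: cover the lens range with overlapping intervals,
    cluster the patients inside each interval, and link clusters that share patients."""
    lo, hi = min(lens), max(lens)
    width = (hi - lo) / n_intervals or 1.0  # guard against a constant lens
    nodes: List[Set[int]] = []
    for k in range(n_intervals):
        start = lo + k * width - overlap * width
        end = lo + (k + 1) * width + overlap * width
        members = [i for i, v in enumerate(lens) if start <= v <= end]
        nodes.extend(_single_linkage(members, points, eps))
    edges = {(a, b) for a, b in combinations(range(len(nodes)), 2) if nodes[a] & nodes[b]}
    return nodes, edges


# Hypothetical patients described by two standardised echo variables; the lens is the first one.
patients = [(0, 0), (1, 0), (2, 1), (3, 2), (2, -1), (3, -2)]
lens_values = [p[0] for p in patients]
nodes, edges = mapper_graph(patients, lens_values, n_intervals=3, overlap=0.5, eps=1.5)
```

With these settings the graph has four nodes, {0, 1}, {1, 2, 4}, {2, 3} and {4, 5}, joined in a Y shape: a shared trunk that splits into two branches. Branching of this kind is how such graphs can reveal subgroups that share a severity grade but follow different trajectories.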

In Sengupta et al,41 the investigators sought to identify a high-risk group among a cohort of 1052 patients with mild or moderate AS and a discordant AS group (the traditional low-flow, low-gradient group). Topological data analysis based on echocardiographic parameters derived a high-risk phenotype with higher AV calcium scores, more late gadolinium enhancement, higher brain natriuretic peptide and troponin levels, a greater incidence of AVR, and more deaths before and after AVR. These relationships held when the data set was restricted to discordant AS. Model validation included developing a supervised ML model with an AUC of 0.988, which had better discrimination (integrated discrimination improvement of 0.07) and reclassification (net reclassification improvement of 0.17) for the outcome of AVR at 5 years than conventional grading of valve severity. This paper showed that, using echocardiographic measurements and ML, risk stratification in discordant AS can be improved without the need for additional tests.

Kwak et al 42 applied model-based clustering to 398 patients with newly diagnosed moderate or severe AS, using 11 demographic, laboratory and echocardiographic parameters, to identify a high-risk subgroup that may not benefit from valve intervention. They found three patient clusters that differed by age, LV remodelling and symptoms. These clusters had different mortality risks, with one group experiencing higher all-cause mortality and another higher cardiac mortality. Adding the cluster variable to a model predicting 3-year all-cause mortality improved discrimination (integrated discrimination improvement 0.029) and reclassification (net reclassification improvement 0.294). Important findings from this paper include the value of integrating non-echocardiographic measurements and non-traditional measures of disease severity when risk-stratifying patients with AS. The paper suggests that patients at high risk of non-cardiac death could warrant a different therapeutic strategy.
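Model-based clustering treats the data as a mixture of probability distributions and assigns each patient to the component most likely to have generated them. The sketch below fits a Gaussian mixture by expectation-maximisation in pure Python, but only for a single variable and a fixed number of clusters. This is a deliberate simplification: model-based clustering of several parameters, as in the study above, works on many variables at once and typically compares models with different numbers of clusters using a criterion such as the Bayesian information criterion. The function names and data are hypothetical.

```python
import math
from typing import List, Sequence, Tuple


def _normal_pdf(x: float, mean: float, var: float) -> float:
    return math.exp(-((x - mean) ** 2) / (2 * var)) / math.sqrt(2 * math.pi * var)


def fit_gmm_1d(
    data: Sequence[float], k: int, n_iter: int = 100, min_var: float = 1e-6
) -> Tuple[List[float], List[float], List[float], List[int]]:
    """Fit a k-component univariate Gaussian mixture by expectation-maximisation.

    Returns (means, variances, weights, hard cluster labels).
    """
    n = len(data)
    lo, hi = min(data), max(data)
    # Deterministic start: means spread evenly across the data range.
    means = [lo + (hi - lo) * (j + 0.5) / k for j in range(k)]
    centre = sum(data) / n
    variances = [max(sum((x - centre) ** 2 for x in data) / n, min_var)] * k
    weights = [1.0 / k] * k
    resp: List[List[float]] = []
    for _ in range(n_iter):
        # E-step: responsibility of each component for each observation.
        resp = []
        for x in data:
            dens = [w * _normal_pdf(x, m, v) for w, m, v in zip(weights, means, variances)]
            total = sum(dens) or 1e-300  # avoid division by zero if all densities underflow
            resp.append([d / total for d in dens])
        # M-step: re-estimate each component from its weighted observations.
        for j in range(k):
            nj = sum(r[j] for r in resp)
            if nj < 1e-12:
                continue  # component has no support; keep its previous parameters
            means[j] = sum(r[j] * x for r, x in zip(resp, data)) / nj
            variances[j] = max(
                sum(r[j] * (x - means[j]) ** 2 for r, x in zip(resp, data)) / nj, min_var
            )
            weights[j] = nj / n
    # Hard labels from the final E-step: the most responsible component.
    labels = [max(range(k), key=lambda j: r[j]) for r in resp]
    return means, variances, weights, labels


# Hypothetical single standardised variable for ten patients, forming two groups.
values = [1.0, 1.2, 0.8, 1.1, 0.9, 5.0, 5.2, 4.8, 5.1, 4.9]
means, variances, weights, labels = fit_gmm_1d(values, k=2)
```

Unlike k-means, each patient receives a probability of belonging to each cluster, and the hard labels are derived from those probabilities; this soft assignment is one reason model-based clustering is favoured for heterogeneous clinical populations.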

Limitations

Although there are many avenues for AI to improve echocardiographic VHD assessment, there are some limitations to this approach (table 4). AI is sensitive to data quality, and valvular data can be challenging because the structures are mobile and the images are prone to noise and artefact. Thus, training data must include a wide variety of images of varying quality to develop implementable AI solutions. AI models can be highly complex, rendering them a ‘black box’ that is uninterpretable to the user. Tools such as saliency maps, which show which parts of an image drive the classification, can help the user understand how the algorithm functions.43 Widespread AI implementation has also been limited by questions related to patient privacy and consent, algorithmic bias that could cause diagnostic/management errors, algorithm scalability, data security and an agreed-upon implementation strategy.44 45
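Gradient-based saliency maps require access to a network's internals, but a closely related, model-agnostic approach called occlusion sensitivity conveys the same idea: blank out one image region at a time and record how much the model's output falls. The sketch below is a minimal pure-Python version; `toy_valve_score` is a hypothetical stand-in for a trained classifier that only looks at one corner of the image, and the frame, patch size and fill value are all assumptions for illustration.

```python
from typing import Callable, List

Image = List[List[float]]


def occlusion_map(
    image: Image, score_fn: Callable[[Image], float], patch: int = 2, fill: float = 0.0
) -> Image:
    """Occlusion sensitivity: blank out each patch in turn and record how much the
    model's score falls. Larger values mark regions the prediction depends on."""
    h, w = len(image), len(image[0])
    baseline = score_fn(image)
    saliency = [[0.0] * w for _ in range(h)]
    for top in range(0, h, patch):
        for left in range(0, w, patch):
            rows = range(top, min(top + patch, h))
            cols = range(left, min(left + patch, w))
            occluded = [row[:] for row in image]
            for r in rows:
                for c in cols:
                    occluded[r][c] = fill
            drop = baseline - score_fn(occluded)
            for r in rows:
                for c in cols:
                    saliency[r][c] = drop
    return saliency


def toy_valve_score(img: Image) -> float:
    """Hypothetical stand-in for a trained classifier: it only looks at the
    top-right 2x2 region, where we pretend the valve sits."""
    return sum(img[r][c] for r in (0, 1) for c in (2, 3)) / 4


frame = [[1.0] * 4 for _ in range(4)]
heatmap = occlusion_map(frame, toy_valve_score)
```

The resulting map is 1.0 over the top-right 2×2 block and 0.0 elsewhere, correctly flagging the only region the toy model uses. With a real network, a map that lit up over artefact rather than the valve would be a warning sign that the model has learnt the wrong features.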

Table 4.

Limitations in echo imaging that make artificial intelligence implementation in valve disease more challenging

| Challenge | Description |
|---|---|
| Image quality | Speckle noise and artefact. |
| Frame rate | Low or irregular frame rates, causing valve leaflet motion to appear fast and irregular between frames. |
| Echogenicity | Lack of features to discriminate heart valves from adjacent myocardium, which have similar intensity and texture. |

Summary and future directions

The application of AI to echocardiographic valvular assessment is growing and will become essential given clinical time constraints and the increasing volume of patient data. Echo automation using AI can reduce structural and economic barriers to VHD care, democratising access to disease screening, point-of-care valvular evaluation and potentially referral for intervention.34 46 47 For example, conditions such as rheumatic heart disease, which is underdiagnosed among marginalised populations, could benefit from automated disease detection that helps connect patients with healthcare services.48 Additionally, platforms such as federated cloud computing can allow automated image acquisition in low-access areas, with real-time image interpretation/consultation occurring elsewhere in a private and trustworthy manner.49 AI applications in phenotyping could also be used in other circumstances where valvular assessment on echo is challenging, such as identifying low-flow, low-gradient AS or quantifying disease in mixed valve disease. Overall, AI can create efficiencies in the use of echo in healthcare that allow enhanced valve disease identification, diagnosis and management, giving more patients access to timely, accurate and goal-directed treatment.

Footnotes

Funding: The authors have not declared a specific grant for this research from any funding agency in the public, commercial or not-for-profit sectors.

Competing interests: None declared.

Provenance and peer review: Not commissioned; externally peer reviewed.

Supplemental material: This content has been supplied by the author(s). It has not been vetted by BMJ Publishing Group Limited (BMJ) and may not have been peer-reviewed. Any opinions or recommendations discussed are solely those of the author(s) and are not endorsed by BMJ. BMJ disclaims all liability and responsibility arising from any reliance placed on the content. Where the content includes any translated material, BMJ does not warrant the accuracy and reliability of the translations (including but not limited to local regulations, clinical guidelines, terminology, drug names and drug dosages), and is not responsible for any error and/or omissions arising from translation and adaptation or otherwise.

Ethics statements

Patient consent for publication

Not required.

Ethics approval

This study does not involve human participants.

References

- 1. Chen J, Li W, Xiang M. Burden of valvular heart disease, 1990-2017: results from the global burden of disease study 2017. J Glob Health 2020;10:020404. 10.7189/jogh.10.020404 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2. Donal E, Muraru D, Badano L. Artificial intelligence and the promise of uplifting echocardiography. Heart 2021;107:523–4. 10.1136/heartjnl-2020-318718 [DOI] [PubMed] [Google Scholar]

- 3. Virnig BA SN, O'Donnell B. Trends in the use of echocardiography, 2007 to 2011: Data Points #20. Data Points Publication Series [Internet]. Rockville (MD): Agency for Healthcare Research and Quality (US), 2011. .. Available: https://www.ncbi.nlm.nih.gov/books/NBK208663/ [Accessed 13 May 2014].

- 4. Blecker S, Bhatia RS, You JJ, et al. Temporal trends in the utilization of echocardiography in Ontario, 2001 to 2009. JACC Cardiovasc Imaging 2013;6:515–22. 10.1016/j.jcmg.2012.10.026 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5. Thoenes M, Bramlage P, Zamorano P, et al. Patient screening for early detection of aortic stenosis (AS)-review of current practice and future perspectives. J Thorac Dis 2018;10:5584–94. 10.21037/jtd.2018.09.02 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6. Papolos A, Narula J, Bavishi C, et al. U.S. Hospital use of echocardiography: insights from the nationwide inpatient sample. J Am Coll Cardiol 2016;67:502–11. 10.1016/j.jacc.2015.10.090 [DOI] [PubMed] [Google Scholar]

- 7. Zoghbi WA, Adams D, Bonow RO, et al. Recommendations for noninvasive evaluation of native valvular regurgitation: a report from the American Society of Echocardiography developed in collaboration with the Society for Cardiovascular Magnetic Resonance. J Am Soc Echocardiogr 2017;30:303–71. 10.1016/j.echo.2017.01.007

- 8. Baumgartner H, Hung J, Bermejo J, et al. Recommendations on the echocardiographic assessment of aortic valve stenosis: a focused update from the European Association of Cardiovascular Imaging and the American Society of Echocardiography. J Am Soc Echocardiogr 2017;30:372–92. 10.1016/j.echo.2017.02.009

- 9. Otto CM, Nishimura RA, Bonow RO, et al. 2020 ACC/AHA guideline for the management of patients with valvular heart disease: a report of the American College of Cardiology/American Heart Association Joint Committee on Clinical Practice Guidelines. Circulation 2021;143:e72–227. 10.1161/CIR.0000000000000923

- 10. Omar AMS, Krittanawong C, Narula S, et al. Echocardiographic Data in Artificial Intelligence Research: Primer on Concepts of Big Data and Latent States. JACC Cardiovasc Imaging 2020;13:170–2. 10.1016/j.jcmg.2019.07.017

- 11. Zhou J, Du M, Chang S, et al. Artificial intelligence in echocardiography: detection, functional evaluation, and disease diagnosis. Cardiovasc Ultrasound 2021;19:29. 10.1186/s12947-021-00261-2

- 12. Nabi W, Bansal A, Xu B. Applications of artificial intelligence and machine learning approaches in echocardiography. Echocardiography 2021;38:982–92. 10.1111/echo.15048

- 13. Long Q, Ye X, Zhao Q. Artificial intelligence and automation in valvular heart diseases. Cardiol J 2020;27:404–20. 10.5603/CJ.a2020.0087

- 14. Samad Z, Minter S, Armour A, et al. Implementing a continuous quality improvement program in a high-volume clinical echocardiography laboratory: improving care for patients with aortic stenosis. Circ Cardiovasc Imaging 2016;9. 10.1161/CIRCIMAGING.115.003708

- 15. Narang A, Bae R, Hong H, et al. Utility of a Deep-Learning algorithm to guide novices to acquire Echocardiograms for limited diagnostic use. JAMA Cardiol 2021;6:624–32. 10.1001/jamacardio.2021.0185

- 16. Abdi AH, et al. Correction to "Automatic Quality Assessment of Echocardiograms Using Convolutional Neural Networks: Feasibility on the Apical Four-Chamber View". IEEE Trans Med Imaging 2017;36.

- 17. Uretsky S, Gillam L, Lang R, et al. Discordance between echocardiography and MRI in the assessment of mitral regurgitation severity: a prospective multicenter trial. J Am Coll Cardiol 2015;65:1078–88. 10.1016/j.jacc.2014.12.047

- 18. Nascimento BR, Meirelles ALS, Meira W, et al. Computer deep learning for automatic identification of echocardiographic views applied for rheumatic heart disease screening: data from the ATMOSPHERE-PROVAR study. J Am Coll Cardiol 2019;73:1611. 10.1016/S0735-1097(19)32217-X

- 19. Chandra V, Sarkar PG, Singh V. Mitral valve leaflet tracking in echocardiography using custom Yolo3. Procedia Comput Sci 2020;171:820–8. 10.1016/j.procs.2020.04.089

- 20. Lang RM, Addetia K, Miyoshi T, et al. Use of machine learning to improve echocardiographic image interpretation workflow: a disruptive paradigm change? J Am Soc Echocardiogr 2021;34:443–5. 10.1016/j.echo.2020.11.017

- 21. Madani A, Arnaout R, Mofrad M, et al. Fast and accurate view classification of echocardiograms using deep learning. NPJ Digit Med 2018;1. 10.1038/s41746-017-0013-1 [Epub ahead of print: 21 Mar 2018].

- 22. Zhang J, Gajjala S, Agrawal P, et al. Fully automated echocardiogram interpretation in clinical practice. Circulation 2018;138:1623–35. 10.1161/CIRCULATIONAHA.118.034338

- 23. Salte IM, Østvik A, Smistad E, et al. Artificial Intelligence for Automatic Measurement of Left Ventricular Strain in Echocardiography. JACC Cardiovasc Imaging 2021;14:1918–28. 10.1016/j.jcmg.2021.04.018

- 24. Asch FM, Poilvert N, Abraham T, et al. Automated echocardiographic quantification of left ventricular ejection fraction without volume measurements using a machine learning algorithm mimicking a human expert. Circ Cardiovasc Imaging 2019;12:e009303. 10.1161/CIRCIMAGING.119.009303

- 25. Thalappillil R, Datta P, Datta S, et al. Artificial intelligence for the measurement of the aortic valve annulus. J Cardiothorac Vasc Anesth 2020;34:65–71. 10.1053/j.jvca.2019.06.017

- 26. Jeganathan J, Knio Z, Amador Y, et al. Artificial intelligence in mitral valve analysis. Ann Card Anaesth 2017;20:129–34. 10.4103/aca.ACA_243_16

- 27. Fatima H, Mahmood F, Sehgal S, et al. Artificial intelligence for dynamic echocardiographic tricuspid valve analysis: a new tool in echocardiography. J Cardiothorac Vasc Anesth 2020;34:2703–6. 10.1053/j.jvca.2020.04.056

- 28. Costa E MN, Sultan MS, et al. Mitral valve leaflets segmentation in echocardiography using convolutional neural networks. IEEE Port Meet Bioeng ENBENG 2019 Proc 2019:1–4.

- 29. Andreassen BS, Veronesi F, Gerard O, et al. Mitral annulus segmentation using deep learning in 3-D transesophageal echocardiography. IEEE J Biomed Health Inform 2020;24:994–1003. 10.1109/JBHI.2019.2959430

- 30. Gosling AF, Thalappillil R, Ortoleva J, et al. Automated spectral Doppler profile tracing. J Cardiothorac Vasc Anesth 2020;34:72–6. 10.1053/j.jvca.2019.06.018

- 31. Nolan MT, Thavendiranathan P. Automated quantification in echocardiography. JACC Cardiovasc Imaging 2019;12:1073–92. 10.1016/j.jcmg.2018.11.038

- 32. Kusunose K, Haga A, Abe T, et al. Utilization of artificial intelligence in echocardiography. Circ J 2019;83:1623–9. 10.1253/circj.CJ-19-0420

- 33. Moghaddasi H, Nourian S. Automatic assessment of mitral regurgitation severity based on extensive textural features on 2D echocardiography videos. Comput Biol Med 2016;73:47–55. 10.1016/j.compbiomed.2016.03.026

- 34. Martins JFBS, Nascimento ER, Nascimento BR, et al. Towards automatic diagnosis of rheumatic heart disease on echocardiographic exams through video-based deep learning. J Am Med Inform Assoc 2021;28:1834–42. 10.1093/jamia/ocab061

- 35. Vafaeezadeh M, Behnam H, Hosseinsabet A, et al. A deep learning approach for the automatic recognition of prosthetic mitral valve in echocardiographic images. Comput Biol Med 2021;133:104388. 10.1016/j.compbiomed.2021.104388

- 36. Yoon YE, Kim S, Chang HJ. Artificial intelligence and echocardiography. J Cardiovasc Imaging 2021;29:193–204. 10.4250/jcvi.2021.0039

- 37. Obermeyer Z, Powers B, Vogeli C, et al. Dissecting racial bias in an algorithm used to manage the health of populations. Science 2019;366:447–53. 10.1126/science.aax2342

- 38. Cook NR. Quantifying the added value of new biomarkers: how and how not. Diagn Progn Res 2018;2:14. 10.1186/s41512-018-0037-2

- 39. Wojnarski CM, Roselli EE, Idrees JJ, et al. Machine-learning phenotypic classification of bicuspid aortopathy. J Thorac Cardiovasc Surg 2018;155:461–9. 10.1016/j.jtcvs.2017.08.123

- 40. Casaclang-Verzosa G, Shrestha S, Khalil MJ, et al. Network Tomography for Understanding Phenotypic Presentations in Aortic Stenosis. JACC Cardiovasc Imaging 2019;12:236–48. 10.1016/j.jcmg.2018.11.025

- 41. Sengupta PP, Shrestha S, Kagiyama N, et al. A Machine-Learning Framework to Identify Distinct Phenotypes of Aortic Stenosis Severity. JACC Cardiovasc Imaging 2021;14:1707–20. 10.1016/j.jcmg.2021.03.020

- 42. Kwak S, Lee Y, Ko T, et al. Unsupervised cluster analysis of patients with aortic stenosis reveals distinct population with different phenotypes and outcomes. Circ Cardiovasc Imaging 2020;13:e009707. 10.1161/CIRCIMAGING.119.009707

- 43. Ghassemi M, Oakden-Rayner L, Beam AL. The false hope of current approaches to explainable artificial intelligence in health care. Lancet Digit Health 2021;3:e745–50. 10.1016/S2589-7500(21)00208-9

- 44. Shaw J, Rudzicz F, Jamieson T, et al. Artificial intelligence and the implementation challenge. J Med Internet Res 2019;21:e13659. 10.2196/13659

- 45. Li RC, Asch SM, Shah NH. Developing a delivery science for artificial intelligence in healthcare. NPJ Digit Med 2020;3:107. 10.1038/s41746-020-00318-y

- 46. Asch FM, Mor-Avi V, Rubenson D, et al. Deep Learning-Based automated echocardiographic quantification of left ventricular ejection fraction: a point-of-care solution. Circ Cardiovasc Imaging 2021;14:e012293. 10.1161/CIRCIMAGING.120.012293

- 47. Stewart JE, Goudie A, Mukherjee A, et al. Artificial intelligence-enhanced echocardiography in the emergency department. Emerg Med Australas 2021;33:1117–20. 10.1111/1742-6723.13847

- 48. Lamprea-Montealegre JA, Oyetunji S, Bagur R, et al. Valvular heart disease in relation to race and ethnicity: JACC focus seminar 4/9. J Am Coll Cardiol 2021;78:2493–504. 10.1016/j.jacc.2021.04.109

- 49. Blanquer IA-B, Castro Angel, Garcia-Teodoro Fabio. Antonio, Medical Imaging Processing Architecture on ATMOSPHERE Federated Platform. In: IWFCC special session on Federation in cloud and container infrastructure, 2019: 589–94.

- 50. Corinzia L, Laumer F, Candreva A, et al. Neural collaborative filtering for unsupervised mitral valve segmentation in echocardiography. Artif Intell Med 2020;110:101975. 10.1016/j.artmed.2020.101975

Supplementary Materials

heartjnl-2021-319725supp001.pdf (27KB, pdf)