Abstract

Background:

In neurophysiological data, latency refers to a global shift of spikes from one spike train to the next, either caused by response onset fluctuations or by finite propagation speed. Such systematic shifts in spike timing lead to a spurious decrease in synchrony which needs to be corrected.

New Method:

We propose a new algorithm for multivariate latency correction suitable for sparse data for which the relevant information is not primarily in the rate but in the timing of each individual spike. The algorithm is designed to correct systematic delays while leaving all other kinds of noisy disturbances untouched. It consists of two steps: spike matching and distance minimization between the matched spikes using simulated annealing.

Results:

We show its effectiveness on simulated and real data: cortical propagation patterns recorded via calcium imaging from mice before and after stroke. Using simulations of these data we also establish criteria that can be evaluated beforehand in order to anticipate whether our algorithm is likely to yield a considerable improvement for a given dataset.

Comparison with Existing Method(s):

Existing methods of latency correction rely on adjusting peaks in rate profiles, an approach that is not feasible for spike trains with low firing rates in which the timing of individual spikes contains essential information.

Conclusions:

For any given dataset the criterion for applicability of the algorithm can be evaluated quickly and, in case of a positive outcome, the latency correction can be applied easily since the source code of the algorithm is publicly available.

Keywords: Spike train analysis, Latency, Latency correction, SPIKE-synchronization, SPIKE-order, Synfire Indicator, Simulated annealing, Mice, Stroke, Rehabilitation

Highlights

• Latency refers to a global shift of spikes from one spike train to the next.

• It leads to a spurious decrease in synchrony which first needs to be corrected.

• In this article we present a new algorithm for multivariate latency correction.

• We validate the algorithm on simulated data with known ground truth.

• We show its effectiveness on real data, cortical propagation patterns in mice.

1. Introduction

Measuring the degree of synchrony within a set of spike trains is a common task in two major scenarios.

In the first scenario spike trains are recorded in successive time windows from only one neuron. In order to allow for a meaningful alignment of these time windows there has to be a common temporal reference point which is typically some kind of external trigger event such as the onset of a stimulus. If the stimulus is always the same, the issue under consideration is the reliability of individual neurons (Mainen and Sejnowski, 1995, Tiesinga et al., 2008), while different stimuli are used to find the features of the response that provide the optimal discrimination within the context of neuronal coding (Victor, 2005, Quian Quiroga and Panzeri, 2013).

In the second scenario spike trains are recorded simultaneously from a population of neurons (Gerstein and Kirkland, 2001, Brown et al., 2004). In this scenario spikes emitted at the same time are truly ‘synchronous’ (Greek: ‘occurring at the same time’). Typical applications for such data are the multi-channel recordings of various neuronal circuits of the brain (Tiesinga et al., 2008, Shlens et al., 2008) and the analysis of spiking activity propagation in neuronal networks (Buzsaki and Draguhn, 2004, Kumar et al., 2010). The real data example used in this study also belongs to this scenario: global activation patterns recorded via wide-field calcium imaging in the cortex of mice before and after stroke (Allegra Mascaro et al., 2019, Cecchini et al., 2021).

Generally speaking, latency is the temporal delay of some physical change in the system being observed. In neuroscience latencies have biological reasons and carry valuable information in themselves, which is typically analyzed in a first step using measures of directionality (Kreuz et al., 2017, Cecchini et al., 2021). Once all the information contained in the latencies has been extracted, it is also worth investigating the synchrony of the underlying dynamics. In this context of synchrony estimation, latency in the data is not a primary source of information but rather a hindrance that first has to be removed in order to find the true value of synchrony.

Latency and its correction are relevant in both of the scenarios introduced above. In the first ‘successive single neuron recordings’ scenario latency translates into the time lag between the stimulus and the response. Due to various sources of noise (Lee et al., 2020, Uzuntarla et al., 2012) this lag may vary from trial to trial (Lee et al., 2016). These variations in onset latency can then lead to a “spurious” underestimation of synchrony (Ermentrout et al., 2008, Zirkle and Rubchinsky, 2021). In order to account for this, a multivariate latency correction has to be performed in which the various trials are realigned before the “true” synchrony is calculated.

In the second ‘simultaneous population recordings’ scenario dealt with here, latency becomes important whenever there is a spatial propagation of activity from one location to another (Kreuz et al., 2017, Cecchini et al., 2021). The question of interest when analyzing synchrony becomes whether and how much the activity changes during the course of the propagation. Again, in order to answer this, the recordings from different locations have to be compared only after the latency caused by the finite propagation speed has been accounted for.

Methods of latency correction proposed in the literature mostly deal with the first scenario and use rate-based estimates of latency. Typically they rely on dynamic rate profiles for each trial that are obtained by convolving the individual spike trains with either a static or a dynamic kernel (Nawrot et al., 2003, Schneider and Nikolić, 2006). The resulting peaks are then realigned, e.g. by maximizing the total pairwise correlation (Nawrot et al., 2003). This is done under the underlying assumptions that the rate is the most important feature of the response, that the number and density of spikes is high enough to estimate it reliably and, crucially, that the timing of the individual spikes can be neglected. These assumptions hold for a wide variety of real data (Walter and Khodakhah, 2009, Enoka and Duchateau, 2017) but there are also many datasets in which this is not the case (van Rullen and Thorpe, 2001, Harvey et al., 2013, Fukushima et al., 2015) and for which so far no reliable and feasible method of latency correction has been proposed.

Therefore, here we would like to address the complementary problem of latency correction in data in which there are not that many spikes and where the relevant information is not primarily in the rate but in the timing of each individual spike. In doing so, we follow two specific objectives: First we would like to propose a latency correction algorithm that works not only with rather clean simulated data but functions also with experimental data that typically contain disturbances such as unreliability (missing spikes), jitter (noisy spike shifts), and background noise (extra spikes). The algorithm consists of two parts, matching pairs of spikes over all the spike trains followed by an optimization procedure (simulated annealing) that minimizes the distances between the matched spikes. The second objective is to define the limits for which the algorithm works well, e.g. to specify whether there are any conditions the dataset needs to fulfill in order to be a good candidate for the application of this method.

The remainder of the article is organized as follows:

In Section 2 we describe the wide-field calcium imaging datasets that we use to illustrate the effectiveness of the algorithm at work. In the Methods (Section 3) we first describe the two basic steps of our latency correction algorithm, matching pairs of spikes and minimizing their average distance via simulated annealing (Section 3.1). Then we define the two quantities SPIKE-Synchronization and Synfire Indicator (based on the measure SPIKE-order) from which we will later derive a well-defined criterion for the improvability of a given dataset (Section 3.2). The Results (Section 4) consist of three subsections detailing applications of the new approach to artificially generated datasets (Section 4.1), to neurophysiological datasets (Section 4.2) and to simulations of these experimental data (Section 4.3). Conclusions are drawn in Section 5.

2. Data

Here we provide a short overview of the experimental paradigm, the basic recording setup and the data processing that was performed in order to arrive at the rasterplots that were then analyzed in Section 4.3. More details can be found in Allegra Mascaro et al. (2019) and Cecchini et al. (2021).

The datasets consist of cortical activity obtained by 12 × 21 pixel wide-field calcium imaging in mice before and after the induction of a focal stroke via a photothrombotic lesion in the primary motor cortex. The purpose of the recordings was to investigate changing propagation patterns during motor recovery from the functional deficits caused by the stroke. This motor recovery was aided by the M-platform (Spalletti et al., 2014, Spalletti et al., 2017), a robotic system that performs passively actuated contralesional forelimb extension on a slide to trigger active retraction movements that were subsequently rewarded (up to 15 cycles per recording session).

In this article we analyze a total of recordings (mean duration s, range to s) from mice which were divided into three groups according to their rehabilitation paradigm: Control, Robot and Combined. The healthy Controls (3 mice) had no stroke induced but underwent four weeks of motor training. The Robot group (5 mice) performed the same physical rehabilitation for four weeks starting five days after the stroke induction. The Combined group (6 mice) underwent motor training together with a transient pharmacological inactivation of the contralesional hemisphere.

Each recording session resulted in continuous calcium traces from between and pixels (mean number ) that were then transformed via a straightforward detection of upwards threshold crossings into the spike trains that together form the rasterplots that are analyzed here. These rasterplots display the global activity propagation events in the cortex that typically correspond to attempted or completed forelimb pull events.
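The detection of upwards threshold crossings mentioned above can be illustrated with a minimal sketch. The function name `upward_crossings`, the toy trace, and the threshold value are hypothetical; the actual preprocessing (including the choice of threshold and any filtering) is described in the cited references and is not reproduced here.

```python
def upward_crossings(trace, threshold, dt=1.0):
    """Return the times at which `trace` crosses `threshold` from below.

    `trace` is a list of equally spaced samples and `dt` the sampling
    interval; both are illustrative assumptions.
    """
    times = []
    for k in range(1, len(trace)):
        # Upward crossing: previous sample below, current sample at or above.
        if trace[k - 1] < threshold <= trace[k]:
            times.append(k * dt)
    return times


# Toy calcium trace with two excursions above a threshold of 1.0
toy_trace = [0.0, 0.4, 1.2, 1.5, 0.6, 0.2, 1.1, 0.9]
spike_train = upward_crossings(toy_trace, threshold=1.0)
```

Each excursion above the threshold contributes exactly one spike, at the first sample that reaches the threshold, so sustained activity does not generate a burst of spikes.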

The data that we obtained had already undergone some filtering of background noise. Before applying our multivariate latency correction we sort spike trains from leader to follower by means of the SPIKE-order approach (Kreuz et al., 2017, Cecchini et al., 2021).

All experimental procedures were performed in accordance with directive 2010/63/EU on the protection of animals used for scientific purposes and approved by the Italian Minister of Health, authorization n.183/2016-PR.

3. Methods

In this Section we describe our approach to multivariate latency correction. The starting point is a set of $N$ spike trains, each composed of a sequence of spike times $t_i^{(n)}$ ($i = 1, \ldots, M_n$) recorded over a certain period of time (with $M_n$ denoting the number of spikes in spike train $n = 1, \ldots, N$).

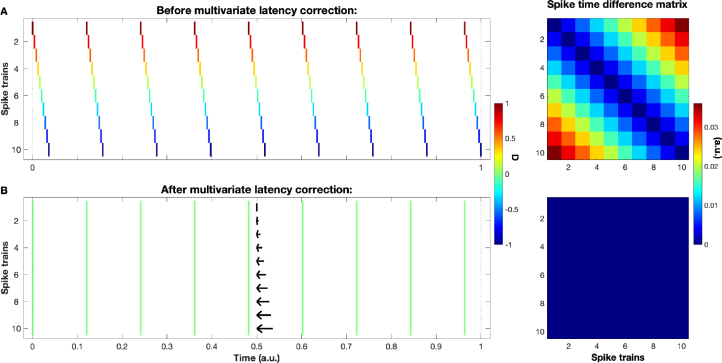

Since a delay correction for a set of spike trains without any delays is obviously not very reasonable we assume that the set contains spike trains exhibiting a systematic delay between them (later we will define a criterion that tells us if and to what extent this is actually the case). In our preferred ‘simultaneous population recordings’ scenario this corresponds to a consistent propagation of activity from leading to following spike trains. The simplest example, a perfect synfire chain, is shown in the rasterplot of Fig. 1A. Choosing an arbitrary spike train as reference (here we will always use the first), the task is to find for each of the other spike trains the delay correction that maximizes the similarity (or minimizes the dissimilarity) between all the spike trains. An example of such a solution, in this simple case perfect identity, is shown in Fig. 1B.

Fig. 1.

Illustration of a set of artificially created spike trains exhibiting a consistent propagation activity, in this case a perfect regularly spaced synfire chain. Subplot A is before and subplot B is after the multivariate latency correction. The arrows in B indicate for each spike train the shift performed during the latency correction. Spike colors code the asymmetric SPIKE-order (see Section 3.2) on a scale from (red, first leader) to (blue, last follower). On the right we show the spike time difference matrices. For this simple example the corrected spike trains are identical and accordingly the spike time difference matrix turns from its perfectly ordered ascension away from the diagonal to zero everywhere.

Of course in real data any propagation pattern will typically be contaminated by unreliability, jitter, and background noise. And our task is complicated by two more problems: First, since time is continuous the number of possible solutions to this optimization problem is infinite. Second, in the approaches based on the rate coding assumption (Nawrot et al., 2003, Schneider and Nikolić, 2006) the individual spike trains are transformed into rate functions with one well-defined maximum each and these maxima can then easily be aligned, but for the kind of sparse data under consideration here calculating rate functions and identifying maxima is so difficult that such a reductive approach is not feasible.

Instead, our algorithm looks at the temporal relationship between individual spikes and uses an iterative heuristic approach (simulated annealing) to search within the vast space of possible solutions for the optimal one. Since for reasons of computational cost and feasibility we cannot calculate in each iteration the overall synchrony among all the spike trains, we use a definition of synchrony based on the measure SPIKE-synchronization that is straightforward to calculate and very easy and efficient to update.

3.1. Spike matching and simulated annealing

Searching for systematic delays in a spike train set requires a way to determine which spikes should be compared against each other. Under the assumption of sparse data with rather well-defined global events we employ the adaptive coincidence criterion originally introduced for the bivariate measure event synchronization (Quian Quiroga et al., 2002) and then also used for both the symmetric SPIKE-Synchronization (Kreuz et al., 2015) and the asymmetric SPIKE-order (Kreuz et al., 2017).

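This coincidence detection is scale- and parameter-free since the maximum time lag $\tau_{ij}^{(n,m)}$ up to which two spikes $t_i^{(n)}$ and $t_j^{(m)}$ of spike trains $n$ and $m$ are considered to be synchronous is adapted to the local firing rates according to

$$\tau_{ij}^{(n,m)} = \min\{t_{i+1}^{(n)} - t_i^{(n)},\; t_i^{(n)} - t_{i-1}^{(n)},\; t_{j+1}^{(m)} - t_j^{(m)},\; t_j^{(m)} - t_{j-1}^{(m)}\}/2. \tag{1}$$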

For some applications it might be appropriate here to also introduce a maximum coincidence window (Kreuz et al., 2017) as a parameter, thereby combining the time-scale independent coincidence detection with a time-scale dependent upper limit. This way additional knowledge about the data (such as typical signal propagation speed) can be taken into account in order to guarantee that two coincident spikes are really part of the same meaningful event.

Following the derivation of SPIKE-synchronization (Kreuz et al., 2015), we then apply the coincidence criterion by defining for each spike $i$ of any spike train $n$ and for each other spike train $m$ a coincidence indicator

$$C_i^{(n,m)} = \begin{cases} 1 & \text{if } \min_j \left( |t_i^{(n)} - t_j^{(m)}| \right) < \tau_{ij}^{(n,m)} \\ 0 & \text{otherwise} \end{cases} \tag{2}$$

(with $\tau_{ij}^{(n,m)}$ the coincidence window of Eq. (1)) which is either one or zero depending on whether this spike is part of a coincidence with a spike of spike train $m$ or not. This results in an unambiguous spike matching since any spike can at most be coincident with one spike (the nearest one) in the other spike train.
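As an illustration of the adaptive coincidence criterion and the resulting spike matching, here is a minimal sketch in plain Python. The function names are hypothetical, and the treatment of the first and last spike of a train (only the existing neighbouring interspike intervals are used) is an assumption of this sketch, not something specified in the text.

```python
def coincidence_window(a, i, b, j):
    """Half of the smallest interspike interval adjacent to a[i] and b[j]."""
    isis = []
    if i > 0:
        isis.append(a[i] - a[i - 1])
    if i < len(a) - 1:
        isis.append(a[i + 1] - a[i])
    if j > 0:
        isis.append(b[j] - b[j - 1])
    if j < len(b) - 1:
        isis.append(b[j + 1] - b[j])
    # Assumption: without any neighbouring spike the window is unbounded.
    return min(isis) / 2 if isis else float("inf")


def match_spikes(a, b):
    """For each spike of the sorted train `a`, return the index of its
    matched spike in the sorted train `b`, or None if there is no match."""
    matches = []
    for i, t in enumerate(a):
        if not b:
            matches.append(None)
            continue
        # Only the nearest spike in the other train can be a partner.
        j = min(range(len(b)), key=lambda k: abs(t - b[k]))
        tau = coincidence_window(a, i, b, j)
        matches.append(j if abs(t - b[j]) < tau else None)
    return matches


matches = match_spikes([1.0, 5.0, 9.0], [1.2, 5.3, 20.0])  # -> [0, 1, None]
```

In this toy example the third spike of the first train stays unmatched because its nearest neighbour in the second train is 3.7 time units away, while the local window is only 2.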

Subsequently, for each spike $i$ of every spike train $n$ a normalized coincidence counter

$$C_i^{(n)} = \frac{1}{N-1} \sum_{m \neq n} C_i^{(n,m)} \tag{3}$$

is obtained by averaging over all $N-1$ bivariate coincidence indicators $C_i^{(n,m)}$ involving the spike train $n$.

In order to obtain a single multivariate SPIKE-Synchronization profile we pool the coincidence counters of all the spikes of every spike train:

$$\{C(t_k)\} = \bigcup_n \{C_{i(k)}^{(n(k))}\} \tag{4}$$

where we map the spike train indices $n$ and the spike indices $i$ into a global spike index $k$ denoted by the mapping $i(k)$ and $n(k)$.

With $M$ denoting the total number of spikes in the pooled spike train, the average of this profile

$$S_C = \frac{1}{M} \sum_{k=1}^{M} C(t_k) \tag{5}$$

yields SPIKE-Synchronization, the overall fraction of coincidences. It reaches one if and only if each spike in every spike train has one matching spike in all the other spike trains (or if there are no spikes at all), and it attains the value zero if and only if there are no coincidences in any of the spike trains.
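The chain from bivariate coincidence indicators via normalized coincidence counters to the pooled average can be sketched as follows. This is a plain-Python illustration under the same edge-handling assumption as before (only existing neighbouring intervals enter the window); all function names are hypothetical and it assumes at least two spike trains.

```python
def _window(a, i, b, j):
    """Half of the smallest interspike interval adjacent to a[i] and b[j]."""
    isis = [a[k + 1] - a[k] for k in (i - 1, i) if 0 <= k < len(a) - 1]
    isis += [b[k + 1] - b[k] for k in (j - 1, j) if 0 <= k < len(b) - 1]
    return min(isis) / 2 if isis else float("inf")


def _is_coincident(a, i, b):
    """Bivariate coincidence indicator of spike a[i] with respect to train b."""
    if not b:
        return 0
    j = min(range(len(b)), key=lambda k: abs(a[i] - b[k]))
    return 1 if abs(a[i] - b[j]) < _window(a, i, b, j) else 0


def spike_synchronization(trains):
    """Average of the pooled, normalized coincidence counters."""
    n = len(trains)
    profile = []
    for p, a in enumerate(trains):
        for i in range(len(a)):
            hits = sum(_is_coincident(a, i, b)
                       for q, b in enumerate(trains) if q != p)
            profile.append(hits / (n - 1))  # normalized coincidence counter
    # Convention from the text: the value is one if there are no spikes at all.
    return sum(profile) / len(profile) if profile else 1.0


synchronous = [[1.0, 5.0, 9.0], [1.1, 5.1, 9.1], [1.2, 5.2, 9.2]]
interleaved = [[1.0, 5.0], [3.0, 7.0]]
```

For the `synchronous` example every spike has a partner in both other trains, so the function returns one; in the `interleaved` example every nearest neighbour lies exactly at the edge of the window and no coincidence is counted.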

For SPIKE-synchronization the only information used is binary: match or no match. Here, for the purpose of latency correction, we go one crucial step further and calculate for each pair of matched spikes the difference between the respective spike times. To do so, for each matched spike $i$ in spike train $n$ (all spikes for which $C_i^{(n,m)} = 1$, cf. Eq. (2)) we first identify the matching spike in spike train $m$ as

$$j' = \arg\min_j \left( |t_i^{(n)} - t_j^{(m)}| \right) \tag{6}$$

and then calculate their distance as

$$\delta_i^{(n,m)} = |t_i^{(n)} - t_{j'}^{(m)}|. \tag{7}$$

Finally, we obtain the average spike time differences for this spike train pair

$$\delta^{(n,m)} = \frac{\sum_{i:\, C_i^{(n,m)} = 1} \delta_i^{(n,m)}}{\sum_i C_i^{(n,m)}} \tag{8}$$

which serves as our best estimate of the latency between these two spike trains. Repeating this procedure for all pairs of spike trains we obtain the symmetric spike time difference matrix $\delta^{(n,m)}$. The aim of our multivariate latency correction algorithm is to minimize the mean value of this matrix (or, since the matrix is symmetric, the mean value of the upper right triangular part of the matrix, i.e., all values $\delta^{(n,m)}$ for which $n < m$).
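The construction of the spike time difference matrix can be sketched in a few lines of plain Python. The sketch assumes that the distance between matched spikes is the absolute time difference (consistent with the matrix being symmetric), that pairs of spike trains without any matched spikes are marked as NaN, and that edge spikes use only their existing neighbouring intervals; the function names are hypothetical.

```python
def nearest_match(a, i, b):
    """Index of the spike in b matched to a[i] under the adaptive window, else None."""
    if not b:
        return None
    j = min(range(len(b)), key=lambda k: abs(a[i] - b[k]))
    isis = [a[k + 1] - a[k] for k in (i - 1, i) if 0 <= k < len(a) - 1]
    isis += [b[k + 1] - b[k] for k in (j - 1, j) if 0 <= k < len(b) - 1]
    tau = min(isis) / 2 if isis else float("inf")
    return j if abs(a[i] - b[j]) < tau else None


def spike_time_difference_matrix(trains):
    """Mean absolute time difference of matched spikes for every pair of trains."""
    n = len(trains)
    matrix = [[0.0] * n for _ in range(n)]  # the diagonal stays zero
    for p in range(n):
        for q in range(n):
            if p == q:
                continue
            diffs = []
            for i in range(len(trains[p])):
                j = nearest_match(trains[p], i, trains[q])
                if j is not None:
                    diffs.append(abs(trains[p][i] - trains[q][j]))
            # Assumption: no matched spikes means no latency estimate (NaN).
            matrix[p][q] = sum(diffs) / len(diffs) if diffs else float("nan")
    return matrix


# Perfect synfire chain: three global events, delay of 0.5 between neighbours
chain = [[1.0, 11.0, 21.0], [1.5, 11.5, 21.5], [2.0, 12.0, 22.0]]
stdm = spike_time_difference_matrix(chain)
```

For this chain the entries grow with the distance from the diagonal (0.5 for neighbouring trains, 1.0 for the outermost pair), which is the ordered pattern described for the perfect synfire chain.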

In Fig. 1 on the right we show the spike time difference matrix for a perfect synfire chain, before and after the latency correction. In the initial synfire chain, the further apart two spike trains the larger their spike time differences. Accordingly, the values in the spike time difference matrix increase with the distance from the diagonal (which by definition is always zero). In this case the latency correction is very straightforward: a simple shift correction using either the values from the first row (with the first spike train as reference) or the values from the first upper diagonal (the difference between neighboring spike trains) does the trick. In this particular example the shift not only sets the matrix elements that were used in the calculation to zero but also all the other elements of the matrix, as can be seen on the lower right of Fig. 1. Whenever this is the case, the problem is solved and we stop immediately.

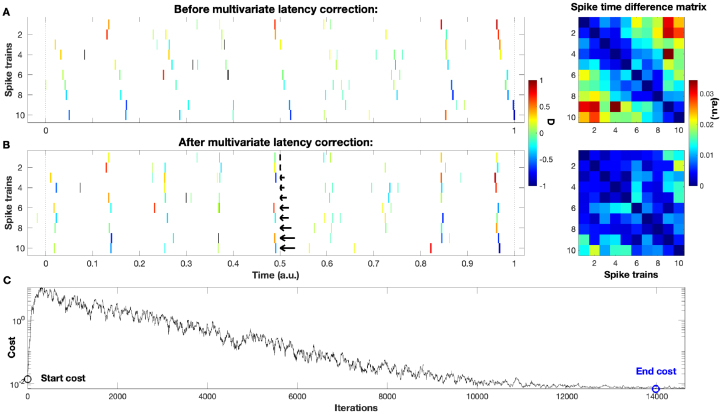

However, datasets in real life are not as clean and we encounter ‘disturbances’ such as incomplete global events, jitter, and background noise. Such a more realistic example is shown in Fig. 2. Going further, datasets can contain global events of different durations and different intervals between subsequent spikes (non-monotonic propagation), until in the end we arrive at spike train sets without any clear propagation structure. For the perfect synfire chain of Fig. 1 it is enough to consider only the entries of the first row (or of the first upper diagonal) of the matrix and ignore the others, but under more realistic conditions the solution obtained this way becomes suboptimal and a more general and sophisticated approach is needed.

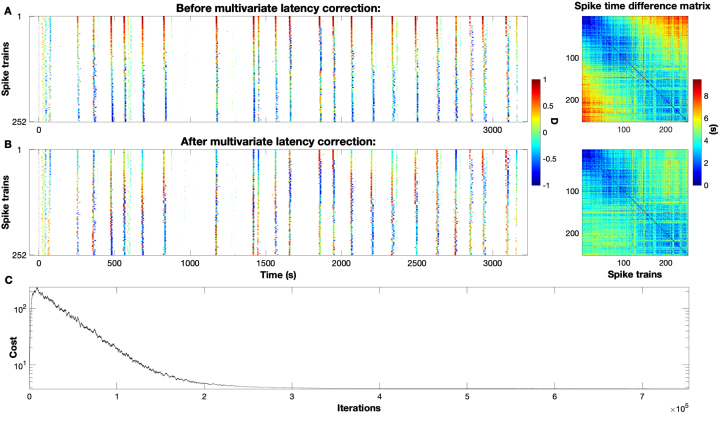

Fig. 2.

Similar to Fig. 1 but this time we show a more realistic dataset superimposed with some unreliability, jitter, and background noise. Clearly the latency corrected spike trains in rasterplot B exhibit a much larger degree of synchrony than the ones in rasterplot A. Notice that all three of the aforementioned sources of noise are still present after the correction. On the right, the values of the spike time difference matrix decrease considerably. In subplot C we also display the cost function over the course of the simulated annealing. It consists of the usual two parts, a short initial increase from the start cost (marked by a black circle) and a decrease that slowly converges towards the end cost (defined as the minimum value of the cost function and here marked by a blue circle).

For this we propose simulated annealing (Dowsland and Thompson, 2012), a heuristic approach that uses an iterative directed random walk to find the optimum (here minimum) of a cost function within the vast search space.

Starting from the initial cost value before the latency correction (start cost), all iterations which decrease the cost function are accepted while the likelihood of accepting iterations which increase the cost function is getting lower and lower according to a slow cooling scheme which ensures a certain degree of convergence. However, since this probability remains always positive, simulated annealing, in contrast to a steepest descent (or ‘greedy’) algorithm, has the ability to recover from local (but non-global) minima. Iterations last until the cost no longer changes or until a predefined end temperature is reached. As end cost after the latency correction we use the minimum cost obtained over the course of the simulated annealing.

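In multivariate latency correction the search space is composed of all possible shifts of the spike trains relative to each other and in principle this space is infinitely large. The cost function to be minimized is the average value of the upper right triangular part of the spike time difference matrix (which thus takes into account all elements of the matrix):

$$c = \frac{2}{N(N-1)} \sum_{n<m} \delta^{(n,m)} \tag{9}$$

where $\delta^{(n,m)}$ is the average spike time difference between spike trains $n$ and $m$ (Eq. (8)) and $N$ is the number of spike trains.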

Within each iteration a randomly selected spike train is shifted by a randomly selected time interval. As a special trick, to facilitate convergence the shift values are drawn from a Gaussian distribution that gets narrower the closer we get to the optimal solution: at each iteration its standard deviation is set to the current cost value. The typical course of the cost function during the simulated annealing is shown for the more realistic example of Fig. 2.
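The optimization loop described above can be sketched as follows. This is a simplified illustration, not the published implementation: the matched spike pairs are assumed to be fixed and given as signed time differences, the acceptance rule is the standard Metropolis criterion, and the geometric cooling factor, the start temperature, the iteration count and all names are hypothetical choices.

```python
import math
import random


def cost(pairs, shifts):
    """Mean over train pairs of the mean absolute difference of matched spikes.

    `pairs[(n, m)]` holds the signed differences t^(n) - t^(m) of the matched
    spikes; a corrected spike time is the original time plus the train's shift.
    """
    per_pair = []
    for (n, m), diffs in pairs.items():
        moved = [abs(d + shifts[n] - shifts[m]) for d in diffs]
        per_pair.append(sum(moved) / len(moved))
    return sum(per_pair) / len(per_pair)


def anneal_shifts(pairs, n_trains, iterations=20000, cooling=0.999, seed=0):
    rng = random.Random(seed)
    shifts = [0.0] * n_trains              # train 0 is the fixed reference
    current = start = cost(pairs, shifts)
    best, best_shifts = current, shifts[:]
    temperature = start                    # hypothetical start temperature
    for _ in range(iterations):
        if current == 0.0:                 # perfect solution, nothing left to do
            break
        k = rng.randrange(1, n_trains)     # never move the reference train
        trial = shifts[:]
        trial[k] += rng.gauss(0.0, current)    # step width follows current cost
        new = cost(pairs, trial)
        delta = new - current
        # Always accept improvements; accept deteriorations with a probability
        # that shrinks as the temperature is lowered.
        if delta <= 0 or rng.random() < math.exp(-delta / temperature):
            shifts, current = trial, new
            if current < best:
                best, best_shifts = current, shifts[:]
        temperature *= cooling
    return start, best, best_shifts


# Toy example: jittered three-train chain, keyed by train pair (n < m)
pairs = {(0, 1): [-0.5, -0.4, -0.6], (0, 2): [-1.0, -1.1], (1, 2): [-0.5, -0.6, -0.4]}
start_cost, end_cost, shifts = anneal_shifts(pairs, n_trains=3)
```

The end cost returned is the minimum encountered during the run, in line with the definition of the end cost given above, so it can never exceed the start cost.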

3.2. SPIKE-order and the synfire indicator

In this Section we introduce the concepts and the quantities needed to later define a quantitative criterion for the suitability of datasets for our multivariate latency correction algorithm.

SPIKE-Synchronization is invariant to which of the two spikes within a coincidence pair is leading and which is following. To take the temporal order of the spikes into account we developed the SPIKE-Order approach (Kreuz et al., 2017) which allows us to sort the spike trains from leader to follower and to evaluate the consistency of the preferred order via the Synfire Indicator.

Following Kreuz et al. (2017), we first define the bivariate anti-symmetric SPIKE-Order indicator

$$D_i^{(n,m)} = C_i^{(n,m)} \cdot \mathrm{sign}\left(t_{j'}^{(m)} - t_i^{(n)}\right) \tag{10}$$

which assigns to each matched spike either a $+1$ or a $-1$ depending on whether the respective spike is leading or following the coincident spike $j'$ in the other spike train (cf. Eq. (6)).

SPIKE-Order distinguishes leading and following spikes, and is thus used to colorcode the individual spikes on a leader-to-follower scale (see, e.g., the rasterplots in Fig. 1). It can also be employed to sort the spike trains by means of the cumulative and anti-symmetric SPIKE-Order matrix

$$D^{(n,m)} = \sum_i D_i^{(n,m)} \tag{11}$$

which quantifies the temporal relationship between spike trains $n$ and $m$. If $D^{(n,m)} > 0$, spike train $n$ is leading $m$, while $D^{(n,m)} < 0$ means $m$ is the leading spike train. For a spike train order in line with the synfire property (i.e., exhibiting consistent repetitions of the same global propagation pattern), we thus expect $D^{(n,m)} > 0$ for $n < m$. Therefore, the overall SPIKE-Order can be constructed as

$$D_< = \sum_{n<m} D^{(n,m)} \tag{12}$$

i.e. the sum over the upper right triangular part of the matrix $D^{(n,m)}$.

Finally, normalizing by the total number of possible coincidences yields the Synfire Indicator:

$$F = \frac{2 D_<}{(N-1)\, M} \tag{13}$$

This measure quantifies to what degree coinciding spike pairs with correct order prevail over coinciding spike pairs with incorrect order, or, in other words, to what extent the spike trains in their current order resemble a consistent synfire pattern. Accordingly, maximizing the Synfire Indicator as a function of the spike train order finds the sorting of the spike trains from leader to follower such that the sorted set comes as close as possible to a perfect synfire pattern:

$$F_s = \max_{\varphi} F(\varphi) \tag{14}$$

Whereas the Synfire Indicator $F$ for any spike train order $\varphi$ is normalized between $-1$ and $1$, the optimized Synfire Indicator $F_s$ can only attain values between $0$ and $1$. But from Eq. (10) it follows that, since the order is only evaluated among those spikes that match, the actual upper bound for any given dataset is the value of SPIKE-synchronization (Eq. (5)). A perfect synfire pattern results in $F_s = 1$, while sufficiently long Poisson spike trains without any synfire structure yield $F_s \approx 0$.
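To make the Synfire Indicator and its dependence on the spike train order concrete, here is a small plain-Python sketch. The normalization by $(N-1)M/2$ is this sketch's reading of "the total number of possible coincidences", the exhaustive search over all orders is a stand-in for the simulated annealing used in the article (only feasible for a handful of spike trains), and all function names are hypothetical.

```python
from itertools import permutations


def nearest_match(a, i, b):
    """Index of the spike in b matched to a[i] under the adaptive window, else None."""
    if not b:
        return None
    j = min(range(len(b)), key=lambda k: abs(a[i] - b[k]))
    isis = [a[k + 1] - a[k] for k in (i - 1, i) if 0 <= k < len(a) - 1]
    isis += [b[k + 1] - b[k] for k in (j - 1, j) if 0 <= k < len(b) - 1]
    tau = min(isis) / 2 if isis else float("inf")
    return j if abs(a[i] - b[j]) < tau else None


def synfire_indicator(trains):
    """Normalized sum of the SPIKE-Order values over all pairs p < q.

    Assumes at least two spike trains and at least one spike overall.
    """
    n = len(trains)
    total_spikes = sum(len(t) for t in trains)
    order_sum = 0
    for p in range(n):
        for q in range(p + 1, n):
            for i, t in enumerate(trains[p]):
                j = nearest_match(trains[p], i, trains[q])
                if j is not None and trains[q][j] != t:
                    # +1 if the spike of the earlier-listed train leads, -1 otherwise
                    order_sum += 1 if trains[q][j] > t else -1
    return 2 * order_sum / ((n - 1) * total_spikes)


def best_order(trains):
    """Brute-force search for the order that maximizes the Synfire Indicator."""
    return max(permutations(range(len(trains))),
               key=lambda order: synfire_indicator([trains[k] for k in order]))


chain = [[1.0, 11.0, 21.0], [1.5, 11.5, 21.5], [2.0, 12.0, 22.0]]
shuffled = [chain[2], chain[0], chain[1]]
order = best_order(shuffled)
```

The perfect chain in its natural order reaches the maximum value of one, the reversed chain the minimum of minus one, and sorting the shuffled version recovers the leader-to-follower order.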

In this article, for both the simulated and the real datasets before the multivariate latency correction we first sort the spike trains from leader to follower. The optimization procedure that we use to find the best spike train order is again based on simulated annealing (details can be found in Kreuz et al., 2017). While this sorting is not necessary for the correction itself, it renders the resulting rasterplots more intuitive and easier to read. For simplicity we refer to the Synfire Indicator of the sorted spike trains as $F$.

As we will show in Section 4.3, the Synfire Indicator serves as main criterion for the suitability of our algorithm that can be evaluated for a given dataset before the multivariate latency correction is actually applied. On the other hand, once we have performed the correction we would also like to quantify how successful it actually has been. As measure of its performance we use the relative cost improvement (in percent) defined as

$$\Delta c = 100 \cdot \frac{c_{\mathrm{start}} - c_{\mathrm{end}}}{c_{\mathrm{start}}} \tag{15}$$

the normalized change in cost (i.e. the mean spike time difference, see Eq. (9)) between before ($c_{\mathrm{start}}$) and after ($c_{\mathrm{end}}$) the correction. For comparison purposes we also define the shift cost as the cost that is obtained after shifting the spike trains according to the delays in the first row of the spike time difference matrix (without performing simulated annealing and while ignoring all other entries of that matrix). As we have seen in Fig. 1, for a perfect synfire chain this value is zero.
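As a quick arithmetic illustration of the relative cost improvement, the following sketch evaluates it for the cost values 4.21 and 3.74 that are reported for the experimental example in Section 4.2. The function name is hypothetical.

```python
def relative_cost_improvement(start_cost, end_cost):
    """Normalized decrease in cost between before and after the correction, in percent."""
    return 100.0 * (start_cost - end_cost) / start_cost


# Cost values reported for the experimental example of Section 4.2
improvement = relative_cost_improvement(4.21, 3.74)
```

Rounded to the nearest integer this gives the 11% quoted for that dataset, and a correction that brings the cost down to zero (as for a perfect synfire chain) yields 100%.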

4. Results

First, in Section 4.1 we investigate the performance of our new method for multivariate latency correction in a controlled setting using simulated data that cover the whole range from a perfect synfire chain to pure Poisson spike trains. Then we apply the algorithm to neurophysiological datasets, cortical propagation patterns recorded via wide-field calcium imaging from mice before and after stroke (Section 4.2). Finally, in Section 4.3 we perform simulations of these experimental data which allow us to extend the parameter range and derive a criterion which determines whether a given dataset is a suitable candidate for our algorithm.

4.1. Simulated data

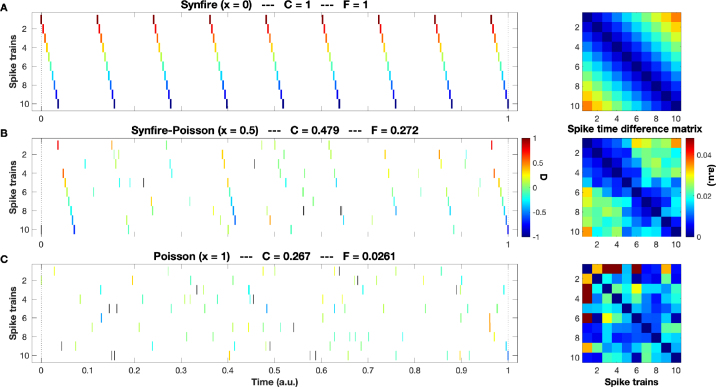

Before applying our method to the experimental datasets described in Section 2 we test it on controlled data with known ground truth. To this aim, we introduce a mixing parameter , that is used to interpolate between the two extremes of perfect synfire chain () and pure Poisson spike trains (). This mixing parameter is increased from to in steps of 0.05 and for every one of these values we generate spike trains. For each of these spike trains we select a fraction of spikes from a perfect synfire chain (with 9 spikes) and a fraction from a pure Poisson spike train (with an expectation value of spikes). This way each spike train becomes a superposition of a synfire chain and a Poisson spike train with the relative contribution determined by the mixing parameter. In Fig. 3 we show the two extremes and in between the halfway case. For each example we also report the values of the SPIKE-synchronization (cf. Section 3.1) and the Synfire Indicator (Section 3.2).
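One way to generate such mixed spike trains is sketched below. The reading that a fraction of one minus the mixing value is drawn from the synfire spikes and a fraction equal to the mixing value from the Poisson spikes is an assumption of this sketch, as are the rounding of spike counts, the parameter names, and all numerical values in the example (number of trains, delay, Poisson expectation value, duration).

```python
import random


def mixed_spike_trains(mix, n_trains, event_times, delay, poisson_mean, duration, seed=0):
    """Superimpose part of a perfect synfire chain with part of a Poisson train.

    mix = 0 gives the pure synfire chain, mix = 1 pure Poisson spike trains.
    `poisson_mean` is the expected number of Poisson spikes and must be positive.
    """
    rng = random.Random(seed)
    rate = poisson_mean / duration
    trains = []
    for n in range(n_trains):
        # Synfire part: every global event arrives n * delay later in train n.
        synfire = [t + n * delay for t in event_times]
        kept = rng.sample(synfire, round((1 - mix) * len(synfire)))
        # Poisson part: homogeneous process built from exponential intervals.
        poisson, t = [], rng.expovariate(rate)
        while t < duration:
            poisson.append(t)
            t += rng.expovariate(rate)
        noise = rng.sample(poisson, round(mix * len(poisson)))
        trains.append(sorted(kept + noise))
    return trains


events = [10.0 * k for k in range(1, 10)]   # nine global events
clean = mixed_spike_trains(0.0, 4, events, 0.5, 9.0, 100.0)
noisy = mixed_spike_trains(1.0, 4, events, 0.5, 9.0, 100.0)
```

With a mixing value of zero the function returns the unperturbed synfire chain, which provides the known ground truth against which a latency correction can be checked.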

Fig. 3.

Simulated data with varying mixing parameter : From a perfect synfire chain (subplot A, ) via the halfway case (subplot B, ) to a pure Poisson process (subplot C, ). For each example, we state the values of the SPIKE-synchronization and the Synfire Indicator . Note how the spike time difference matrices get more and more irregular. While the trend underlying the perfect regularity from A (monotonous increase as one moves away from the diagonal) is still perceptible in B, in C there is no apparent order anymore.

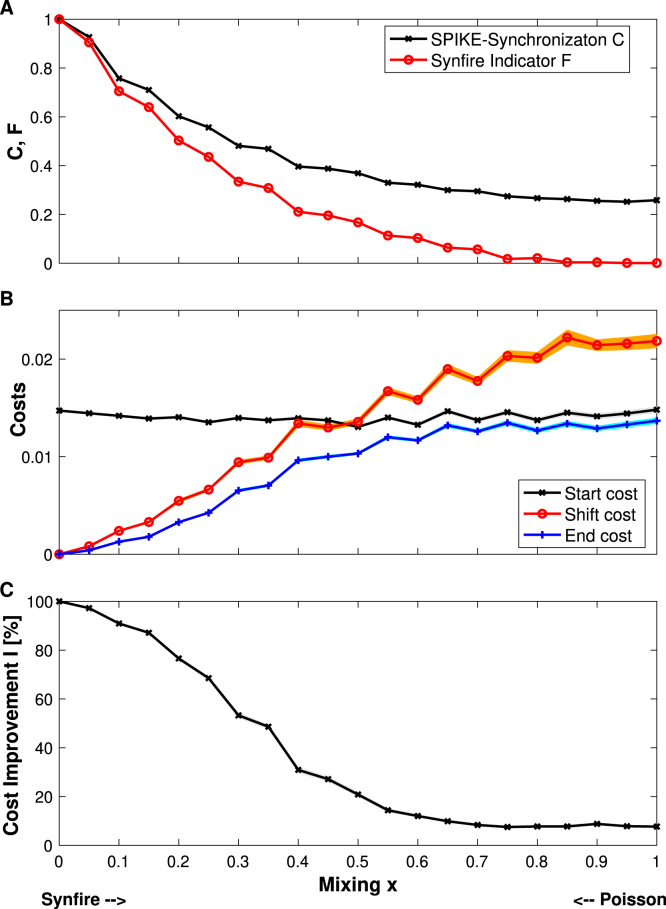

Fig. 4A reports and in dependence of the mixing parameter. Both values decrease with increasing mixing parameter. For the perfect synfire chain () they start with their maximum value of one, whereas for pure Poisson spike trains () they reach their lowest value which due to remaining random coincidences and persistent random order, respectively, gets quite close to but does not reach zero. As already mentioned in Section 3.2, by definition SPIKE-synchronization is an upper limit for the Synfire Indicator .

Fig. 4.

Simulated data: SPIKE-Synchronization and Synfire Indicator (subplot A), start, shift and end costs (subplot B), as well as relative cost improvement in percent (subplot C) versus the mixing parameter . Shaded areas indicate the standard error of the mean (which in some cases is hardly visible). In A both SPIKE-Synchronization and the Synfire Indicator decrease rather monotonically with . At the same time, acts as upper bound for . In B around a mixing value of the end cost is not that much lower than the start cost anymore, and accordingly in C the cost improvement starts to level off towards a rather low value below 10%. All graphs show averages over 100 independent realizations for each mixing value. While the main synfire chain always remains the same (like the one shown in Fig. 3A), the stochastic parts vary for each realization: different synfire spikes are omitted and different Poisson spikes are added.

In Fig. 4B we display the behavior of the three relevant cost values. The start cost hovers around some intermediate value and seems to be quite independent of . In the case of a perfect synfire chain () the end cost is actually the shift cost since we refrain from running the simulated annealing because we have already reached the optimum value of zero. However, for all positive values of the mixing parameter the end cost obtained via simulated annealing outperforms the shift cost, as a tendency the more so the higher . This shows that while the shift cost is very fast to calculate, in general it gives only suboptimal results. Roughly starting from the halfway point () it is actually even worse than the start cost.

The end cost also consistently improves on the start cost and this is quantified more directly in Fig. 4C where we show the relative cost improvement (Eq. (15)) in dependence of the mixing parameter. The improvement is highest for the synfire chain where the correction yields the perfect result and then it slowly drops off until somewhere between and it reaches a plateau where the improvement is still positive but rather low. This cutoff range of the mixing parameter corresponds to SPIKE-Synchronization values of and Synfire Indicator values of (see Fig. 4A).

4.2. Experimental data

After validating our algorithm on controlled data with known ground truth, we now show its effectiveness in a real life application to neurophysiological datasets. For this we choose spatiotemporal propagation activity in the cortex of mice observed with in vivo calcium imaging before and after the induction of a stroke (Allegra Mascaro et al., 2019, Cecchini et al., 2021) (see Section 2).

In our previous work (Cecchini et al., 2021) we have analyzed these datasets and applied three novel indicators (angle, duration and smoothness) based on asymmetric measures of directionality to the observed global activation patterns in order to track damage and functional recovery during various rehabilitation paradigms. Here we would like to follow a complementary approach and look at the similarity of the activity during the course of the propagation. The aim is to investigate whether this new type of analysis can help us to distinguish the three different groups of mice, Control, Robot and Combined. But first we have to correct for the systematic latency caused by the finite propagation speed.

Fig. 5A shows a typical dataset (this one from a healthy control mouse) with a complete set of spike trains. This particular rasterplot exhibits about rather complete global events plus a few incomplete events and a limited amount of background noise. Since spike trains are already sorted, the global events mostly observe the rainbow pattern from red (leading spikes) to blue (following spikes). Typically, the matched spikes from the very first and the very last spike trains are furthest apart and, accordingly, the highest values in the spike time difference matrix are found in the corners away from the diagonal. On the other hand, the data are quite far from a perfect synfire chain. Apart from the incompleteness of some of the global events and the background noise there is also quite a bit of variability in the order within the events. In fact, because of this the value of SPIKE-synchronization for this example is while the Synfire Indicator is only , both clearly below their maximum value of .

Fig. 5.

Example of latency correction in an experimental dataset: The spikes in the rasterplot are the upwards threshold crossings of the calcium traces recorded in vivo during motor training from a healthy control mouse (mouse , day ). The plot follows the structure from Fig. 2. The order in different global events varies but typically there is a rather high level of consistency as can be seen by the rainbow-like color patterns of the events that mostly go from red (leading spikes) to blue (following spikes). On this dataset (SPIKE-synchronization , Synfire Indicator ) the effect of the latency correction can be seen most clearly in the outer corners of the spike time difference matrix, the intervals between the first leaders and the last followers get reduced considerably. Overall, the resulting cost improvement is .

After we run our multivariate latency correction, it is in particular the distances between the first leaders and the last followers (the matrix elements furthest away from the diagonal) that are greatly reduced (Fig. 5B). The overall cost value falls from 4.21 to 3.74, a drop that corresponds to a relative cost improvement of 11%. It takes slightly more than 750,000 iterations to reach this improvement (Fig. 5C).
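As a quick check of the numbers quoted above, the following minimal sketch recomputes the relative cost improvement from the start and end cost. It assumes that Eq. (15) has the form (start cost minus end cost) divided by start cost, an assumption that is consistent with the reported values; the function name is hypothetical.

```python
def relative_cost_improvement(start_cost: float, end_cost: float) -> float:
    """Assumed form of Eq. (15): fraction of the start cost removed by the correction."""
    if start_cost <= 0:
        raise ValueError("start cost must be positive")
    return (start_cost - end_cost) / start_cost


# Values reported for the example dataset of Fig. 5
improvement = relative_cost_improvement(4.21, 3.74)
print(f"{improvement:.1%}")  # roughly 11 %, in line with the value quoted in the text
```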

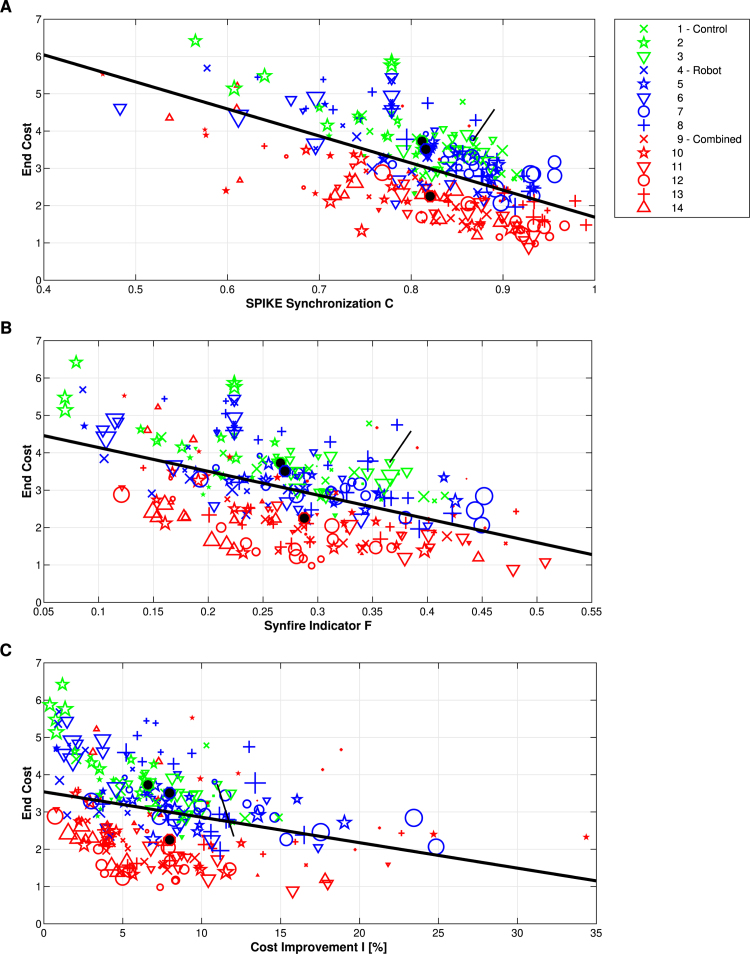

Next, we look at the statistics over all datasets. In Figs. 6A and 6B we plot the end cost (i.e. the average value of the spike time difference matrix after the multivariate latency correction) versus SPIKE-Synchronization and the Synfire Indicator , respectively. Comparing the different groups, the end cost is lowest (similarity is highest) for the Combined group followed by the Robot and the Control group, but there is quite some overlap between the different distributions. For all three groups separately, and combined, there is a tendency that the cost is lower for higher values of both SPIKE-Synchronization and the Synfire Indicator (overall, the least square fits show linear correlations of for and for ).

Fig. 6.

Statistics for experimental data: End cost versus SPIKE-Synchronization (subplot A), the Synfire Indicator (subplot B) and the relative cost improvement (subplot C) for all datasets. Colors indicate groups, symbols distinguish mice, and the larger the marker, the later the day of the recording. For each group we also added the center of mass indicated by a larger marker with a black center. The thick black lines represent a linear fit for all datasets together, independent of the group. The short black lines indicate the values for the example dataset shown in Fig. 5. The end cost is anticorrelated with both SPIKE-Synchronization and the Synfire Indicator as well as with the relative cost improvement.

Fig. 6C marks the transition from the description of the data to the characterization of the performance of our algorithm. The end cost quantifies the corrected similarity of the datasets and is thus the main value that we are interested in from a data point of view. On the other hand, the relative cost improvement characterizes the relative effect of the method in correcting the latency for a given set of data. Fig. 6C relates these two quantities and indicates that they are slightly anticorrelated with a correlation coefficient of . This anticorrelation can be expected from the definition of the relative cost improvement in Eq. (15) where the end cost enters as a subtrahend. The fact that the absolute correlation value is not higher is due to the influence of the start cost which then determines the relative change.

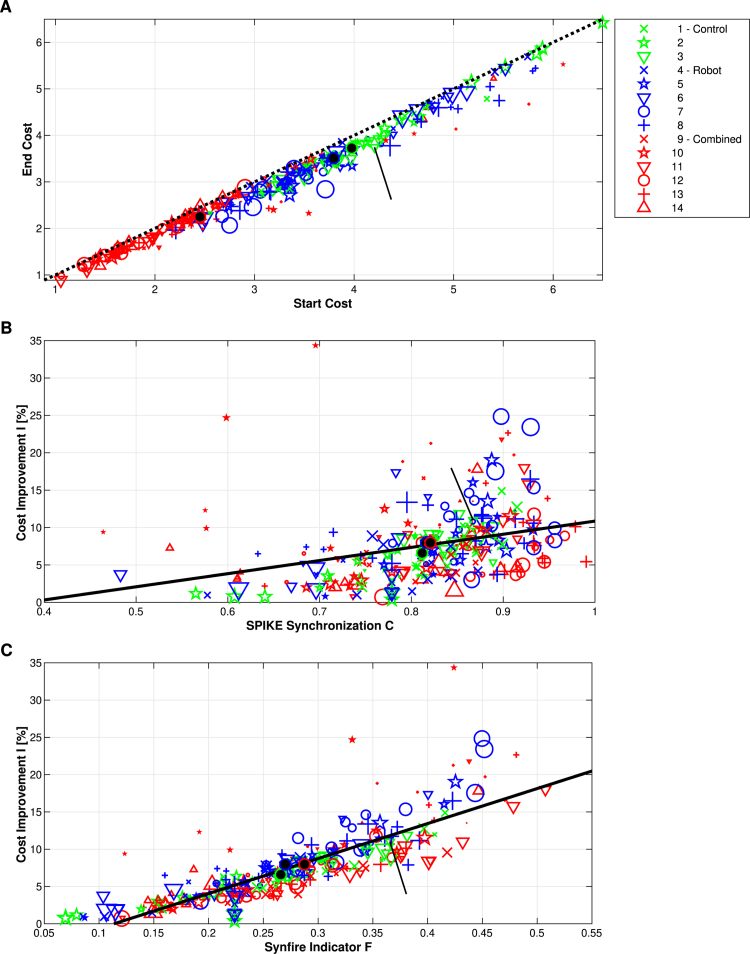

In order to analyze the performance of the algorithm in more detail, in Fig. 7A we compare the cost after the latency correction versus the cost before the latency correction, again for all datasets. For a correction that would just result in a percentual offset, all values would lie on a diagonal parallel to the main diagonal. As can be seen from the many off-diagonal values and the occasional larger outlier this is clearly not the case. We also find that the points being furthest away from the diagonal are concentrated in an intermediate range suggesting that the algorithm works best in cases with intermediate synchrony.

Fig. 7.

Performance of algorithm on experimental data: End cost versus start cost (subplot A) as well as relative cost improvement versus SPIKE-Synchronization (subplot B) and versus the Synfire Indicator (subplot C) for all datasets. Layout as in Fig. 6. In A the diagonal (corresponding to unchanged costs) is marked by a dashed black line. The effect of the latency correction on the costs is most pronounced in the middle range. The relative cost improvement is much less correlated with SPIKE-Synchronization (B) than with the Synfire Indicator (C).

Figs. 7B and 7C display the relative cost improvement in dependence of SPIKE-Synchronization and the Synfire Indicator, respectively. Here it is much more difficult to separate the three different groups than in Fig. 6 which demonstrates that the algorithm performs for all three groups equally well.

When we look at the dependence of the relative cost improvement on SPIKE-synchronization we find a rather modest linear correlation of . However, the most pronounced linear correlation over all the datasets is obtained for the Synfire Indicator: the larger the Synfire Indicator, the bigger the relative cost improvement, and here we obtain an astonishing . Thus, the primary influence on the performance of the algorithm is the Synfire Indicator, while the role of SPIKE-synchronization merits further investigation.

4.3. Simulations of experimental data

As a last step, we simulate the experimental data analyzed in the previous Section 4.2. With this we have two major objectives in mind: (i) to extend the parameter range covered by the experimental data and (ii) to control the simulations such that we can isolate the influence of the two most important characterizing quantities SPIKE-synchronization and Synfire Indicator in a more systematic way, something which cannot be done with the random and arbitrary distributions of the real data.

As we have seen in Fig. 5, a typical dataset consists of a number of rather complete global events (with a more or less consistent order), a few quite incomplete events and some noisy background spikes. We simulate all of these in a very controlled manner by setting the following parameters: the number of spike trains , the number of global events , the average relative completeness of these events and the relative amount of background spikes (in units of the number of spikes in the events if all of these events were complete). Note that both and are related to the mixing parameter from Section 4.1 ( and ) but here these two variables can be chosen independently and we are able to investigate parameter values beyond the ones covered in Section 4.1 (for example ) or beyond the values found in our experimental data of Section 4.2. Our last parameter, the shuffle , controls the consistency of the spike order within the global events. It denotes the relative fraction of spikes in each event that are shuffled. For nothing changes and a synfire chain remains a synfire chain, for a randomly selected half of the spikes of each event are shuffled, while for the shuffle is performed among all the spike trains present in each event and any initial consistency in order is completely destroyed.
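The parameterization described above can be sketched as a small generator. This is only an illustration under stated assumptions, not the simulation code used for the figures: the function name, the argument names, the `latency` and `event_interval` defaults, the Bernoulli thinning used to realize the average completeness, and the uniform placement of background spikes are all hypothetical choices.

```python
import numpy as np


def simulate_dataset(n_trains, n_events, completeness, background, shuffle,
                     latency=0.01, event_interval=1.0, seed=0):
    """Return a list of sorted spike-time arrays, one per spike train."""
    rng = np.random.default_rng(seed)
    trains = [[] for _ in range(n_trains)]
    for event in range(n_events):
        onset = (event + 0.5) * event_interval
        # Synfire template: train k fires k * latency after the event onset
        times = onset + latency * np.arange(n_trains)
        # Completeness: each train takes part in the event with this probability
        present = np.flatnonzero(rng.random(n_trains) < completeness)
        # Shuffle: permute the spike times among a random fraction of the present trains
        n_shuffled = int(round(shuffle * present.size))
        if n_shuffled > 1:
            chosen = rng.choice(present, size=n_shuffled, replace=False)
            times[chosen] = times[rng.permutation(chosen)]
        for train in present:
            trains[train].append(times[train])
    # Background spikes, in units of the spike count of fully complete events
    n_background = int(round(background * n_trains * n_events))
    duration = n_events * event_interval
    for train, t in zip(rng.integers(0, n_trains, n_background),
                        rng.uniform(0.0, duration, n_background)):
        trains[train].append(t)
    return [np.sort(np.asarray(tr, dtype=float)) for tr in trains]


# Perfect synfire chain: complete events, no background, no shuffle
chain = simulate_dataset(n_trains=5, n_events=3, completeness=1.0,
                         background=0.0, shuffle=0.0)
# Fully shuffled events plus as many background spikes as event spikes
noisy = simulate_dataset(n_trains=5, n_events=3, completeness=1.0,
                         background=1.0, shuffle=1.0)
```

With complete events and no shuffle, every event keeps the leader-to-follower order of the template, while a shuffle of one redistributes the same spike times among all trains present in the event.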

With these parameters we can look more directly at the influence of SPIKE-synchronization (see Section 3.1) and the Synfire Indicator (Section 3.2) on the cost improvement and we do so in a way that the results on the one quantity are not disturbed or mediated by the other. The problem we have to overcome is that, while both the average completeness of events and the relative amount of background spikes are parameters that can be controlled easily, neither nor can be set directly. Fortunately, we can control the SPIKE-synchronization indirectly via and (starting from a perfect synfire chain both decreasing and increasing lead to smaller ). For simplicity, we here set to zero (no background noise), which maximizes the range of and values that we can cover. But we did confirm (results not shown) that for higher values of (including values larger than one) the results remain the same, the only difference is that for larger we are less able to reach the higher ranges of and .

So our two controlling parameters are the event completeness and the shuffle and on the left hand side of Fig. 8 we display three 3D-plots that show the dependence on these parameters of the Synfire Indicator , SPIKE-synchronization , and the cost improvement , respectively (again all plots are averages over realizations). First, we note that the event completeness has an effect on both the Synfire Indicator and SPIKE-synchronization (the influence on is mediated via its upper limit ). The shuffle controls only the Synfire Indicator, whereas, as expected, SPIKE-synchronization is invariant to . So a maximum value of leads immediately to a maximum of , while is maximal if and only if events are both complete and consistently ordered (no shuffle). The same holds true for the cost improvement but while the decreases for and are (seemingly) linear, for the dependency on both and is non-linear.

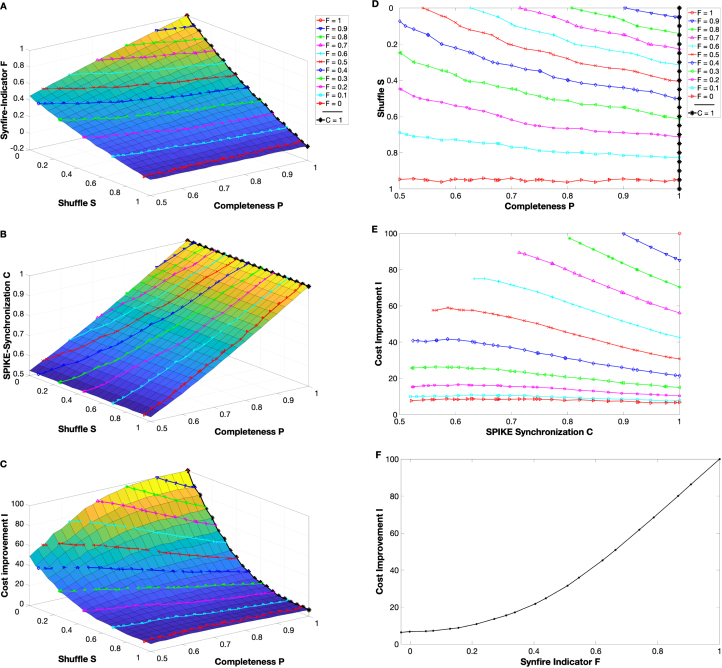

Fig. 8.

Simulations of experimental data: 3D-plots show the dependence of the Synfire Indicator (subplot A), SPIKE-Synchronization (subplot B) and the Cost improvement (subplot C) on the event completeness and the shuffle parameter . Colored lines in A depict the crossings of the Synfire Indicator plane with horizontal planes corresponding to constant -values, while in B and C they mark the values of and , respectively, obtained for these parameter combinations of and . In all three plots a thick black line marks the values obtained for SPIKE-synchronization equal to one. Subplot D depicts the values of event completeness and shuffle that yield the constant values of in subplot A and the maximum value of in subplot B. Finally, we show the cost improvement versus SPIKE-synchronization for different values of (subplot E) and versus the Synfire Indicator for (subplot F). High values of the SPIKE-synchronization and, to an even larger extent, the Synfire Indicator are crucial for an impactful latency correction.

Next, we use these 3D-plots to isolate the dependence of the cost improvement on SPIKE-synchronization and on the Synfire indicator. For we avoid mediation through by keeping constant. Thus we first identify in Fig. 8A the crossing of the ‘Synfire Indicator vs. event completeness and shuffle’ plane with different horizontal planes corresponding to constant -values from to (in steps of 0.1). The projections onto the event completeness - shuffle - plane are shown in Fig. 8D and with these parameter combinations we can look up the corresponding values of SPIKE-synchronization in Fig. 8B and of the cost improvement in Fig. 8C. The resulting versus curves are shown in Fig. 8E. We find that for low values of the cost improvement is very small and there is hardly any dependence on SPIKE-synchronization. In this case the datasets are so noisy that not much can be gained by applying the latency correction algorithm. On the other hand, for high values of the Synfire Indicator the cost improvement increases considerably and so does the dependence on SPIKE-synchronization. It turns out that for constant the cost improvement actually decreases with , a result which stands markedly in contrast to what we found in Section 4.2. There increased with which, as we learn here, was largely mediated by . In fact, once is eliminated as an influencing factor, the dependency reverses. This new result can be explained as follows: For larger event completeness (and thus larger ) more shuffle is needed to keep constant (see Fig. 8D). The resulting datasets are more noisy and as before this noise keeps the cost improvement down. Reducing the Synfire Indicator via shuffle has a stronger effect on than reducing via the event completeness.

At last we turn our attention to the dependence on the Synfire Indicator . This analysis is more straightforward, we can just use the event completeness to fix (according to Fig. 8B) and then vary the shuffle parameter to cover the whole range of . We restrict ourselves to values of (thus SPIKE synchronization ) and look up the values of in Fig. 8A and of in Fig. 8C. The result is displayed in Fig. 8F which shows a very pronounced increase of the cost improvement with the Synfire Indicator. Lower values of (corresponding to parallel -planes in Figs. 8A-C) yield similar results, just with a restricted -range which also means that higher values of are no longer reached. The main result is that in all cases a high Synfire Indicator is essential for the proper functioning of the algorithm.

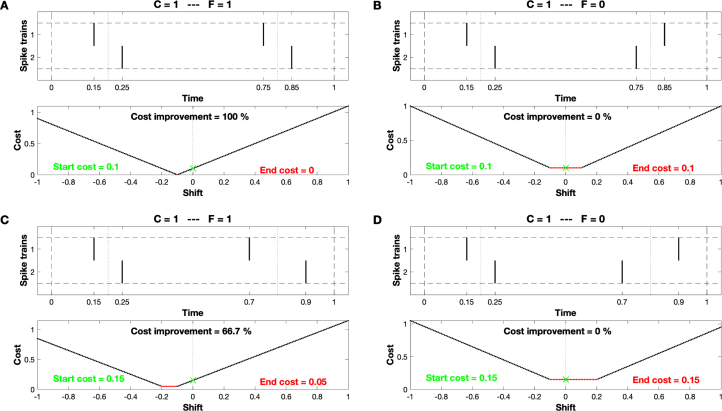

In our final figure we illustrate this importance of with minimal examples consisting of just two spike trains with two spikes each. As a representative of the noisy disturbances that are maintained by the latency correction, we also include the effect of different event lengths in our consideration. The upper plots of Fig. 9 show four possible arrangements of the four spikes. All of these arrangements consist of two perfectly matched spike pairs ( 1); the differences lie in the spike order and in the propagation velocity. The order in the two spike pairs is either consistent (, left subplots A and C) or inconsistent (, subplots B and D on the right), while the velocity is either constant (same interval within the two spike pairs, top subplots A and B) or varies (the spikes of the second pair are further apart, subplots C and D at the bottom).

Fig. 9.

Four arrangements of two spike trains with just two spikes each. In all four cases the upper plot shows two pairs of matching spikes (). Subplot A: Perfect synfire chain (). Subplot B: The two pairs exhibit opposite orders (). Subplot C: Consistent order, but variation in propagation velocity leading to different distances between the matched spikes. Subplot D: Opposite order and different propagation velocities. In the lower plots we show the cost value versus a potential ‘shift’ of the second spike train with respect to the first. The start cost is the value at shift zero (marked by a green x), while the optimized end cost is the minimum value of this ‘cost function’ (marked in red). While the value of the Synfire Indicator has a decisive influence on the success of the latency correction, the change in propagation velocity results in an offset in cost that persists and can thus be measured even after the latency correction.

When we look at the four cost functions in the lower plots of Fig. 9 we see the main difference between the two kinds of effects. Comparing left and right subplots we find that the inconsistency in order, which is reflected in the value of the Synfire Indicator, results in a collapse of the cost improvement . There is no systematic latency to correct and so the end cost equals the start cost. On the other hand, the different velocities in the two bottom arrangements lead to an offset in cost (in this case 0.05, compare A with C and B with D) that is not affected by the latency correction; rather, it is present before and after.

Taken together, these different arrangements illustrate the essential difference between the systematic delays that we correct with our algorithm and other non-systematic disturbances which are exactly the kind of deviations from synchrony that we wish to quantify once the correction has been achieved.
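The behavior of the four minimal arrangements can be reproduced with a few lines of code. The sketch below assumes that the cost for two spike trains is the mean absolute time difference of the matched spike pairs, and it uses illustrative spike intervals (0.1 and 0.2) that are not taken from Fig. 9 but were chosen so that the velocity change produces an offset of 0.05. The function names and the sign convention of the shift are hypothetical.

```python
import numpy as np


def pair_cost(diffs, shift):
    """Mean absolute difference of matched spikes after moving train 2 earlier by `shift`."""
    return float(np.mean(np.abs(np.asarray(diffs, dtype=float) - shift)))


def start_and_end_cost(diffs, shifts):
    """Cost at shift zero and minimum cost over all candidate shifts."""
    start = pair_cost(diffs, 0.0)
    end = min(pair_cost(diffs, s) for s in shifts)
    return start, end


# Signed time differences (train 2 minus train 1) of the two matched spike pairs
arrangements = {
    "A": (0.1, 0.1),    # consistent order, constant velocity
    "B": (0.1, -0.1),   # opposite order, constant velocity
    "C": (0.1, 0.2),    # consistent order, varying velocity
    "D": (0.1, -0.2),   # opposite order, varying velocity
}
shifts = np.linspace(-0.5, 0.5, 1001)
results = {name: start_and_end_cost(d, shifts) for name, d in arrangements.items()}
for name, (start, end) in results.items():
    print(f"{name}: start cost {start:.2f}, end cost {end:.2f}")
```

Under these assumptions only the consistently ordered arrangements gain from a shift, and the end cost of the arrangements with varying velocity stays 0.05 above that of their constant-velocity counterparts.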

5. Conclusions

In the quantification of synchrony, latency is a systematic disturbance that first needs to be eliminated. While there have been some rate-based approaches for data with sufficiently large firing rates (Nawrot et al., 2003, Schneider and Nikolić, 2006), the problem of latency in sparse neuronal spike trains where the timing of individual spikes matters remained elusive. In the present study we address this issue and propose a latency correction algorithm that corrects any systematic delays but maintains all other kinds of noisy disturbances in the data. It consists of two basic steps, spike matching and minimization of the distances between the matched spikes using simulated annealing.

The algorithm receives as input a set of spike trains (as typically shown in a rasterplot) and delivers three main outputs: the end cost, the shifts performed in order to get there, and the relative cost improvement. The end cost (the minimal cost over the course of the simulated annealing) quantifies how well the spike trains in the dataset under investigation can be aligned. The shifts (marked by arrows in Fig. 1, Fig. 2) provide information about the latencies that were present initially. Finally, the relative cost improvement is a measure of the effect the algorithm has had in correcting the latency for this specific dataset.
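To make the three outputs concrete, here is a deliberately simplified sketch of the minimization step. It is not the published implementation: it assumes that the spike matching has already been done and that every event is complete (one spike per train per event), it uses the mean absolute pairwise difference of matched spikes as cost, and the cooling schedule, step size and all names are hypothetical choices.

```python
import numpy as np


def cost(event_times, shifts):
    """Mean absolute pairwise time difference of matched spikes after shifting each train."""
    x = event_times - shifts                      # shape: (n_events, n_trains)
    i, j = np.triu_indices(x.shape[1], k=1)       # all pairs of spike trains
    return float(np.mean(np.abs(x[:, i] - x[:, j])))


def anneal_latencies(event_times, n_iter=5000, t_start=0.05, cooling=0.999,
                     step=0.05, seed=0):
    """Return (end cost, shifts, relative cost improvement) via simulated annealing."""
    rng = np.random.default_rng(seed)
    n_trains = event_times.shape[1]
    shifts = np.zeros(n_trains)
    start = current = cost(event_times, shifts)
    best, best_shifts = current, shifts.copy()
    temperature = t_start
    for _ in range(n_iter):
        # Propose a random change of the shift of one randomly chosen spike train
        candidate = shifts.copy()
        candidate[rng.integers(n_trains)] += rng.normal(0.0, step)
        c = cost(event_times, candidate)
        # Always accept improvements; accept deteriorations with Boltzmann probability
        if c < current or rng.random() < np.exp((current - c) / temperature):
            shifts, current = candidate, c
            if current < best:
                best, best_shifts = current, shifts.copy()
        temperature *= cooling
    improvement = (start - best) / start if start > 0 else 0.0
    return best, best_shifts, improvement


# Perfect synfire chain: 3 events, 4 trains, constant latency of 0.1 between neighbors
onsets = np.array([1.0, 2.0, 3.0])
chain = onsets[:, None] + 0.1 * np.arange(4)[None, :]
end_cost, shifts, improvement = anneal_latencies(chain)
```

The end cost is tracked as the minimum over the whole run, mirroring the description above, so it can never exceed the start cost.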

We validate the algorithm on controlled data simulated from scratch (Section 4.1), show its effectiveness in an experimental real life setting (global propagation patterns in the cortex of mice recorded via wide-field calcium imaging before and after stroke induction, Section 4.2) and use simulations of these experimental data (Section 4.3) to identify the best conditions for its applicability to experimental datasets. In the first part we show that for mixings of a perfect synfire chain with Poisson spike trains decreasing the SPIKE-synchronization and the Synfire Indicator makes the cost improvement level off. On the real data we observe that the cost improvement is strongly positively correlated with the Synfire Indicator (and with SPIKE-synchronization as well, but much less). Finally, in the more systematic simulations of these real data we find again a pronounced increase of the cost improvement with the Synfire Indicator. On the other hand, for constant Synfire Indicators the cost improvement actually slightly decreases with SPIKE-synchronization (because more shuffle is needed to keep the Synfire Indicator constant and this noise limits the cost improvement).

Overall, we have accumulated evidence that the algorithm functions best for sparse data with well defined global events (as manifested by high values of ) and a consistent order within these events (corresponding to elevated values of ). But in particular in Section 4.3 we have seen that these two quantities are not equally important. Clearly the one fundamental criterion for a meaningful application of our latency correction algorithm is a high value of the Synfire Indicator . SPIKE-synchronization is not decisive in itself, but it is still relevant as a mediator: Without a reasonably high SPIKE-Synchronization there are not enough coincident spike pairs to estimate the latency within the spike trains. In addition, as we have mentioned repeatedly, SPIKE-Synchronization acts as an upper limit to the Synfire Indicator. Thus, a large value of SPIKE-Synchronization is a necessary condition, but as the minimal examples on the right hand side of Fig. 9 demonstrate, it is not a sufficient condition. If the spikes do not exhibit a high degree of consistency in order (a large ), the algorithm does not have enough systematic latency to work with. On the other hand, a high value of the Synfire Indicator guarantees that SPIKE-Synchronization is large as well.

Nevertheless, it is important to stress that the actual value of the cost improvement also depends crucially on cost offset effects caused by noisy disturbances. What the algorithm does is correct constant systematic delays, any other disturbances are left unaffected. Thus the example for which the algorithm works best is a perfect synfire chain (as shown in Fig. 3A both and attain their maximum value of one). This is the only dataset that exhibits constant systematic delays but not any of the other potential sources of noise such as unreliability (missing spikes in the global events), jitter (noisy spike shifts), or background noise (extra spikes). These, together with other disturbances like different durations of global events (variation in propagation velocity) or changing intervals between subsequent spikes within the global events (non-monotonic propagation), make the correction more difficult and accordingly decrease the cost improvement. However, these are also exactly the deviations from synchrony in the dataset that we would like to quantify in the first place. We can easily do so by means of the cost offset that still remains even after the correction of the systematic delays has been performed. For an illustration of this point compare subplots A and C in Fig. 9. These two examples both have maximum SPIKE-synchronization and Synfire Indicator, but the change in propagation speed in subplot C causes a cost offset that persists after the correction. For the algorithm this means a reduction of the cost improvement, but for our synchrony analysis it is just an indication of the noisiness in the dataset (and this is independent of whether a latency correction has been performed or not).

At the other extreme there are clearly some datasets where the algorithm does not work, in the sense that no significant improvement can be achieved. The most obvious cases are datasets where there is no significant systematic latency at all, which means there is actually nothing to correct. One example is when the dataset is already perfectly synchronous. In this case SPIKE-synchronization is one and the Synfire Indicator is zero. In most other such cases the data are rather disordered with very low values for both SPIKE-synchronization and the Synfire Indicator. A prominent example, Poisson spike trains, is shown in Fig. 3C.

Another feature in the data that would create problems for the algorithm is global events that overlap and are thus difficult to disentangle. More specifically, whenever the interval between two successive events is less than twice the propagation time within an event, according to the coincidence criterion of Eq. (1) there will be matchings between spikes from different events. Such mismatches would be indicated by a decrease in the value of SPIKE-synchronization . Fortunately for us, many repetitive propagation phenomena in neuroscience as well as in other fields (the algorithm is universal and could be applied to any type of discrete data) exhibit ratios of characteristic time scales that fulfill the coincidence criterion. For example, the duration of an epileptic seizure is usually much shorter than the interval between two successive seizures. Similarly, in meteorological data (an example outside of neuroscience) the time it takes a storm front to cross a specific region is typically much smaller than the time to the next storm.
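The time-scale condition stated above can be checked before running the correction. The following sketch assumes that the interval between events is measured between successive event onsets and that a single propagation time characterizes all events; both the function name and these simplifications are hypothetical, and the check does not replace the coincidence criterion of Eq. (1) itself.

```python
import numpy as np


def events_are_separable(event_onsets, propagation_time):
    """True if every interval between successive events is at least twice the propagation time."""
    onsets = np.sort(np.asarray(event_onsets, dtype=float))
    if onsets.size < 2:
        return True  # a single event cannot overlap with another one
    return bool(np.min(np.diff(onsets)) >= 2.0 * propagation_time)


well_separated = events_are_separable([0.0, 1.0, 2.0], propagation_time=0.3)
overlapping = events_are_separable([0.0, 0.5, 2.0], propagation_time=0.3)
print(well_separated, overlapping)
```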

A final rather general caveat that can be relevant under certain circumstances is that the latency we are referring to in this study is the latency from the point of view of the experimenter. However, sometimes (for example in case the dataset under consideration is derived from a neural network) it might be worth considering that every node in the network will have a different perspective of the same network activity which will depend on the array of propagation delays from each neuron to this “observing” neuron.

We can identify three different areas of future directions. First, for the algorithm itself we envisage a way to overcome some of the limitations mentioned above, in particular the entanglement of overlapping successive events. The basic idea is to focus on the non-overlapping parts (the neighboring spike trains) and to restrict the definition of the cost function to the corresponding diagonals closest to the main diagonal, thus disregarding the matrix elements disturbed by the overlap. After this modified latency correction has been carried out, the correct matching of spikes can be performed, thereby disentangling the overlapping events. This avenue has some complications as well as wider implications and will therefore be pursued in a forthcoming study.

Second, concerning the underlying aim of estimating synchrony, instead of using just the cost function itself (the average of the spike time difference matrix) as a measure of spike train synchrony, one could evaluate before but in particular after the correction more sophisticated and comprehensive measures of spike train similarity such as the time-scale independent ISI- (Kreuz et al., 2007) or SPIKE-distances (Kreuz et al., 2013) or the time-scale dependent Victor-Purpura (Victor and Purpura, 1996) or van Rossum (van Rossum, 2001) distances.

Finally, the most important point regards the experimental calcium data in mice before and after stroke that we analyzed. Together with our previous findings on the lower duration and increased smoothness (Cecchini et al., 2021), the results of Fig. 6 suggest that the propagation of cortical activation shows a faster, more coherent and linear pattern in the Combined group. We will follow up on these results and evaluate the potential of both the end cost value (Fig. 6) and the cost improvement (Fig. 7) to serve as biomarkers that are able to uncover neural correlates not only of motor deficits caused by stroke but also of functional recovery during the various rehabilitation paradigms. Such insights could pave the way towards more targeted post-stroke therapies.

The algorithm will be readily applicable for everyone since it will be implemented in three freely available software packages called SPIKY¹ (Matlab graphical user interface (Kreuz et al., 2015)), PySpike² (Python library (Mulansky and Kreuz, 2016)) and cSPIKE³ (Matlab command line library with MEX-files). All of these software packages already contain the three symmetric measures of spike train synchrony, ISI-distance (Kreuz et al., 2007, Kreuz et al., 2009), SPIKE-distance (Kreuz et al., 2011, Kreuz et al., 2013), SPIKE-synchronization (Kreuz et al., 2015) (see Satuvuori et al., 2017 for generalized versions), the directional SPIKE-order (Kreuz et al., 2017) as well as source codes designed to find within a larger neuronal population the most discriminative subpopulation (Satuvuori et al., 2018).

CRediT authorship contribution statement

Thomas Kreuz: Conceptualization, Methodology, Software, Validation, Formal analysis, Investigation, Resources, Writing – original draft, Writing – review & editing, Visualization, Supervision. Federico Senocrate: Methodology, Software, Validation, Formal analysis, Writing – original draft, Writing – review & editing, Visualization. Gloria Cecchini: Formal analysis, Resources, Writing – review & editing. Curzio Checcucci: Software, Formal analysis, Writing – review & editing. Anna Letizia Allegra Mascaro: Investigation, Writing – review & editing. Emilia Conti: Investigation, Data curation, Writing – review & editing. Alessandro Scaglione: Investigation, Data curation, Writing – review & editing. Francesco Saverio Pavone: Resources, Project Administration, Funding acquisition.

Declaration of Competing Interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Acknowledgments

We thank Arturo Mariani for useful discussions and a careful reading of the manuscript.

This project has received funding from the H2020 EXCELLENT SCIENCE - European Research Council (ERC) under grant agreement ID n. 692943 BrainBIT and from the European Union’s Horizon 2020 Research and Innovation Programme under Grant Agreement No. 785907 (HBP SGA2) [Grant recipient: F.S.P.]. This research was supported by the EBRAINS research infrastructure, funded from the European Union’s Horizon 2020 Framework Programme for Research and Innovation under the Specific Grant Agreement No. 945539 (Human Brain Project SGA3) [Grant recipient: F.S.P.].

Footnotes

1. http://www.thomaskreuz.org/source-codes/SPIKY

2. http://mariomulansky.github.io/PySpike

3. http://www.thomaskreuz.org/source-codes/cSPIKE

Data availability

All data generated or analyzed during this study have been deposited in the free-to-use multidisciplinary open Mendeley Data Repository https://data.mendeley.com/datasets/gpsjkbp6h4/1.

References

- Allegra Mascaro A.L., Conti E., Lai S., Di Giovanna A.P., Spalletti C., Alia C., Panarese A., Scaglione A., Sacconi L., Micera S., et al. Combined rehabilitation promotes the recovery of structural and functional features of healthy neuronal networks after stroke. Cell Rep. 2019;28(13):3474–3485. doi: 10.1016/j.celrep.2019.08.062. [DOI] [PubMed] [Google Scholar]

- Brown E.N., Kass R.E., Mitra P.P. Multiple neural spike train data analysis: state-of-the-art and future challenges. Nature Neurosci. 2004;7:456. doi: 10.1038/nn1228. [DOI] [PubMed] [Google Scholar]

- Buzsaki G., Draghun A. Neuronal oscillations in cortical networks. Science. 2004;304:1926. doi: 10.1126/science.1099745. [DOI] [PubMed] [Google Scholar]

- Cecchini G., Scaglione A., Allegra Mascaro A.L., Checcucci C., Conti E., Adam I., Fanelli D., Livi R., Pavone F.S., Kreuz T. Cortical propagation tracks functional recovery after stroke. PLoS Comput. Biol. 2021;17(5) doi: 10.1371/journal.pcbi.1008963. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Dowsland K.A., Thompson J.M. Handbook of Natural Computing. Springer; 2012. Simulated annealing; pp. 1623–1655. [Google Scholar]

- Enoka R.M., Duchateau J. Rate coding and the control of muscle force. Cold Spring Harb. Perspect. Med. 2017;7(10):a029702. doi: 10.1101/cshperspect.a029702. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Ermentrout G.B., Galán R.F., Urban N.N. Reliability, synchrony and noise. Trends Neurosci. 2008;31(8):428–434. doi: 10.1016/j.tins.2008.06.002. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Fukushima M., Rauske P.L., Margoliash D. Temporal and rate code analysis of responses to low-frequency components in the bird’s own song by song system neurons. J. Comp. Physiol. A. 2015;201(12):1103–1114. doi: 10.1007/s00359-015-1037-0. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Gerstein G.L., Kirkland K.L. Neural assemblies: technical issues, analysis and modeling. Neural Netw. 2001;14:589. doi: 10.1016/s0893-6080(01)00042-9. [DOI] [PubMed] [Google Scholar]

- Harvey M.A., Saal H.P., Dammann III J.F., Bensmaia S.J. Multiplexing stimulus information through rate and temporal codes in primate somatosensory cortex. PLoS Biol. 2013;11(5) doi: 10.1371/journal.pbio.1001558. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kreuz T., Chicharro D., Andrzejak R.G., Haas J.S., Abarbanel H.D.I. Measuring multiple spike train synchrony. J. Neurosci. Methods. 2009;183:287. doi: 10.1016/j.jneumeth.2009.06.039. [DOI] [PubMed] [Google Scholar]

- Kreuz T., Chicharro D., Greschner M., Andrzejak R.G. Time-resolved and time-scale adaptive measures of spike train synchrony. J. Neurosci. Methods. 2011;195:92. doi: 10.1016/j.jneumeth.2010.11.020. [DOI] [PubMed] [Google Scholar]

- Kreuz T., Chicharro D., Houghton C., Andrzejak R.G., Mormann F. Monitoring spike train synchrony. J. Neurophysiol. 2013;109:1457. doi: 10.1152/jn.00873.2012.

- Kreuz T., Haas J.S., Morelli A., Abarbanel H.D.I., Politi A. Measuring spike train synchrony. J. Neurosci. Methods. 2007;165:151. doi: 10.1016/j.jneumeth.2007.05.031.

- Kreuz T., Mulansky M., Bozanic N. SPIKY: A graphical user interface for monitoring spike train synchrony. J. Neurophysiol. 2015;113:3432. doi: 10.1152/jn.00848.2014.

- Kreuz T., Satuvuori E., Pofahl M., Mulansky M. Leaders and followers: Quantifying consistency in spatio-temporal propagation patterns. New J. Phys. 2017;19

- Kumar A., Rotter S., Aertsen A. Spiking activity propagation in neuronal networks: reconciling different perspectives on neural coding. Nat. Rev. Neurosci. 2010;11:615–627. doi: 10.1038/nrn2886.

- Lee J., Darlington T.R., Lisberger S.G. The neural basis for response latency in a sensory-motor behavior. Cerebral Cortex. 2020;30(5):3055–3073. doi: 10.1093/cercor/bhz294.

- Lee J., Joshua M., Medina J.F., Lisberger S.G. Signal, noise, and variation in neural and sensory-motor latency. Neuron. 2016;90(1):165–176. doi: 10.1016/j.neuron.2016.02.012.

- Mainen Z., Sejnowski T.J. Reliability of spike timing in neocortical neurons. Science. 1995;268:1503. doi: 10.1126/science.7770778.

- Mulansky M., Kreuz T. PySpike - A Python library for analyzing spike train synchrony. SoftwareX. 2016;5:183.

- Nawrot M.P., Aertsen A., Rotter S. Elimination of response latency variability in neuronal spike trains. Biol. Cybern. 2003;88:321–334. doi: 10.1007/s00422-002-0391-5.

- Quian Quiroga R., Kreuz T., Grassberger P. Event synchronization: a simple and fast method to measure synchronicity and time delay patterns. Phys. Rev. E. 2002;66 doi: 10.1103/PhysRevE.66.041904.

- Quian Quiroga R., Panzeri S. CRC Taylor and Francis; Boca Raton, FL, USA: 2013. Principles of Neural Coding.

- Satuvuori E., Mulansky M., Bozanic N., Malvestio I., Zeldenrust F., Lenk K., Kreuz T. Measures of spike train synchrony for data with multiple time scales. J. Neurosci. Methods. 2017;287:25–38. doi: 10.1016/j.jneumeth.2017.05.028.

- Satuvuori E., Mulansky M., Daffertshofer A., Kreuz T. Using spike train distances to identify the most discriminative neuronal subpopulation. J. Neurosci. Methods. 2018;308:354. doi: 10.1016/j.jneumeth.2018.09.008.

- Schneider G., Nikolić D. Detection and assessment of near-zero delays in neuronal spiking activity. J. Neurosci. Methods. 2006;152(1–2):97–106. doi: 10.1016/j.jneumeth.2005.08.014.

- Shlens J., Rieke F., Chichilnisky E.L. Synchronized firing in the retina. Curr. Opin. Neurobiol. 2008;18:396. doi: 10.1016/j.conb.2008.09.010.

- Spalletti C., Alia C., Lai S., Panarese A., Conti S., Micera S., Caleo M. Combining robotic training and inactivation of the healthy hemisphere restores pre-stroke motor patterns in mice. eLife. 2017;6 doi: 10.7554/eLife.28662.

- Spalletti C., Lai S., Mainardi M., Panarese A., Ghionzoli A., Alia C., Gianfranceschi L., Chisari C., Micera S., Caleo M. A robotic system for quantitative assessment and poststroke training of forelimb retraction in mice. Neurorehabil. Neural Repair. 2014;28(2):188–196. doi: 10.1177/1545968313506520.

- Tiesinga P.H.E., Fellous J.M., Sejnowski T.J. Regulation of spike timing in visual cortical circuits. Nat. Rev. Neurosci. 2008;9:97. doi: 10.1038/nrn2315.

- Uzuntarla M., Ozer M., Guo D. Controlling the first-spike latency response of a single neuron via unreliable synaptic transmission. Eur. Phys. J. B. 2012;85(8):1–8.

- van Rossum M.C.W. A novel spike distance. Neural Comput. 2001;13:751. doi: 10.1162/089976601300014321.

- van Rullen R., Thorpe S.J. Rate coding versus temporal order coding: what the retinal ganglion cells tell the visual cortex. Neural Comput. 2001;13(6):1255–1283. doi: 10.1162/08997660152002852.

- Victor J. Spike train metrics. Curr. Opin. Neurobiol. 2005;15:585. doi: 10.1016/j.conb.2005.08.002.

- Victor J.D., Purpura K.P. Nature and precision of temporal coding in visual cortex: A metric-space analysis. J. Neurophysiol. 1996;76:1310. doi: 10.1152/jn.1996.76.2.1310.

- Walter J.T., Khodakhah K. The advantages of linear information processing for cerebellar computation. Proc. Natl. Acad. Sci. 2009;106(11):4471–4476. doi: 10.1073/pnas.0812348106.

- Zirkle J., Rubchinsky L.L. Noise effect on the temporal patterns of neural synchrony. Neural Netw. 2021;141:30–39. doi: 10.1016/j.neunet.2021.03.032.

Associated Data

Data Availability Statement

All data generated or analyzed during this study have been deposited in the open, free-to-use, multidisciplinary Mendeley Data repository: https://data.mendeley.com/datasets/gpsjkbp6h4/1.