Abstract

Objective

To close gaps between research and clinical practice, tools are needed for efficient pragmatic trial recruitment and patient-reported outcome collection. The objective was to assess feasibility and process measures for patient-reported outcome collection in a randomized trial comparing electronic health record (EHR) patient portal questionnaires to telephone interview among adults with epilepsy and anxiety or depression symptoms.

Materials and Methods

Recruitment for the randomized trial began at an epilepsy clinic visit, with EHR-embedded validated anxiety and depression instruments, followed by automated EHR-based research screening consent and eligibility assessment. Fully eligible individuals later completed telephone consent, enrollment, and randomization. Participants were randomized 1:1 to EHR portal versus telephone outcome assessment, and patient-reported and process outcomes were collected at 3 and 6 months. The primary outcome was 6-month retention in the EHR arm (feasibility target: ≥11 of 15 participants retained).

Results

Participants (N = 30) were 60% women, 77% White/non-Hispanic, with mean age 42.5 years. Among 15 individuals randomized to EHR portal, 10 (67%, CI 41.7%–84.8%) met the 6-month retention endpoint, versus 100% (CI 79.6%–100%) in the telephone group (P = 0.04). EHR outcome collection at 6 months required 11.8 min less research staff time per participant than telephone (5.9, CI 3.3–7.7 vs 17.7, CI 14.1–20.2). Subsequent telephone contact after unsuccessful EHR attempts enabled near complete data collection and still saved staff time.

Discussion

In this randomized study, EHR portal outcome assessment did not meet the retention feasibility target, but the EHR method saved research staff time compared with telephone.

Conclusion

While EHR portal outcome assessment alone was not feasible, a hybrid EHR/telephone method was feasible and saved staff time.

Keywords: psychiatric comorbidity, pragmatic trial, electronic health record, learning health system, seizures

BACKGROUND AND SIGNIFICANCE

Though traditional randomized trials contribute substantially to advances in medical treatment, these advances typically require 2 decades to reach the clinic, study populations differ significantly from those treated in routine practice settings, and some evidence-based therapies are never implemented in routine care.1–5 At the same time, there is increasing emphasis on patient-centered care and use of patient-reported outcomes to ensure advances in treatment result in meaningful outcomes for patients.6,7 Pragmatic trials and research involving patient-reported outcome measures (PROMs) in real-world care settings have potential to close gaps between traditional research trials and patient-centered care delivery, by enrolling more representative study samples and studying more realistic treatment conditions.8

To advance pragmatic trials and other research in routine care settings (including learning health system research), methods are needed for recruiting study participants from more representative clinical populations and for rigorous outcome collection, including patient-reported outcome measures. Methods for recruitment and follow-up using electronic health record (EHR) interfaces at the point of care, with tools incorporated seamlessly in routine care processes,9 have potential to facilitate pragmatic research in medical care settings and subspecialty settings such as neurology or epilepsy clinics, while reducing participant burden.

EHR-based enrollment and remote outcome assessment via telephone and/or EHR obviate the need for patients to attend in-person research visits, which has the potential to improve both access and adherence. This is particularly valuable in epilepsy, a condition characterized by recurrent seizures, where restricted driving privileges are a major barrier to travel, and both research follow-up and epilepsy care involve elements appropriate for remote follow-up.10 Indeed, studies demonstrated no significant difference in seizures, hospitalizations, or emergency room visits with remote care, along with high satisfaction and improved follow-up.11–13 Moreover, close to 80% of people with epilepsy live in low- and middle-income communities without nearby specialized epilepsy centers, limiting access to epilepsy research.14 Successful use of remote follow-up may expand research access for individuals in these underserved communities. Thus, it is important to study remote patient-reported outcome collection among people with epilepsy, as this may have important implications for research serving people with epilepsy far beyond the COVID-19 pandemic.

Anxiety and depression are highly prevalent in epilepsy and major contributors to poor quality of life.15,16 The importance of patient-reported outcome measures in epilepsy is exemplified by the 2017 Epilepsy Quality Measurement Set, with measures for screening anxiety and depression at each visit, assessing quality of life, and evaluating quality of life outcomes.17 Thus, epilepsy patients with anxiety or depression symptoms are an advantageous group in which to develop and assess novel EHR-based approaches for pragmatic research using patient-reported outcome measures. Our prior work demonstrated that patients with anxiety or depression were interested in participating in pragmatic research for anxiety and depression,9 yet one potential additional in-person visit was a major reason eligible individuals declined enrollment in a treatment study.18 Considering these data and the transportation barriers faced by many with epilepsy, use of pragmatic, remote outcome assessment methods may be particularly advantageous for research in this condition.

OBJECTIVE

This initial analysis of a pragmatic randomized pilot trial of PROM collection among adults with epilepsy and high or borderline anxiety and depression symptoms has the following objectives: (1) To assess feasibility of EHR patient portal-based outcome collection (retention at 6 months, primary outcome), and (2) To compare process measures and retention by EHR-based patient portal outcome method versus telephone interview control condition at 3 and 6 months (secondary outcomes).

MATERIALS AND METHODS

Brief design overview, setting, inclusions/exclusions

This was a pilot, parallel group randomized trial of 6-month patient-reported outcome collection by electronic health record (EHR) patient portal versus telephone with 1:1 allocation. Participants were recruited from a tertiary adult epilepsy clinic with 6 epileptologists and 1 epilepsy-specialized physician assistant in the Southeastern United States. Inclusion criteria were: (1) age ≥18 years; (2) high or borderline anxiety or depression symptoms based on electronic responses to validated anxiety and depression instruments (Generalized Anxiety Disorder-7, GAD-7,19,20 score ≥8 and/or Neurological Disorders Depression Inventory-Epilepsy, NDDI-E,21,22 score ≥14); and (3) diagnosis of epilepsy based on EEG findings or epilepsy specialist EHR-documented clinical impression. Individuals were excluded if they indicated potential passive suicidal ideation during clinic-based depression screening, via response of “sometimes” or “always or often” to question 4 of the NDDI-E (“I’d be better off dead”).23 A pop-up notification to the epilepsy clinician occurred in the clinic encounter for these responses and documentation tools were provided to clinicians as a guide for evaluating and managing suicidality. Individuals unable to independently complete anxiety and depression screeners in clinic were implicitly excluded.

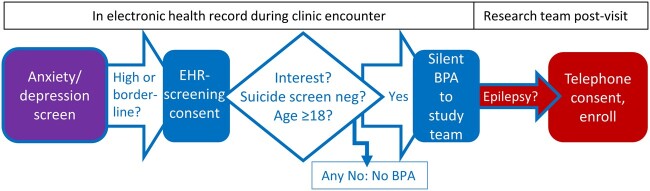

EHR-based, care-embedded recruitment

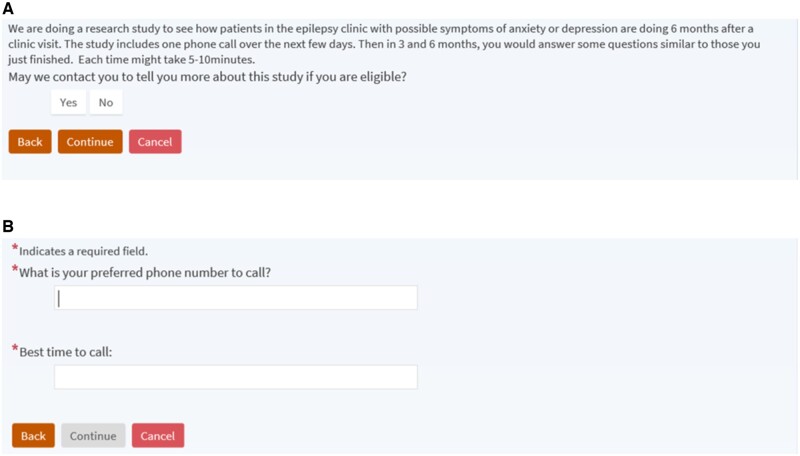

Study recruitment utilized an EHR-embedded screening and initial trial recruitment process depicted in Figure 1. To enhance clinical care in accordance with epilepsy quality measures,17 anxiety, depression, and quality of life measurement was implemented in the epilepsy center practice using electronic tools adapted from a multicenter network.24,25 Patients completed the anxiety and depression instruments and quality of life measure (Quality of Life in Epilepsy-10, QOLIE-10)26 as EHR-based questionnaires in the secure patient portal, typically on the clinic computer following rooming by clinical staff. Staff launched the built-in questionnaires by clicking a link in the EHR, allowing completion while patients waited to see the clinician. A few patients noticed the questionnaires in the patient portal prior to clinic arrival on the visit date and completed them on their own device. Institutional Review Board-approved electronic tools for preliminary trial screening and consent were built into the Epic EHR. Individuals whose scores on the GAD-7 or NDDI-E were in the eligible range for the randomized study (≥8 or ≥14, respectively) immediately received brief screening consent information and questionnaire following the anxiety or depression screener (Figure 2). The screening consent provided brief information on study goals and activities (Figure 2A) and prompted interested individuals to enter contact preferences (Figure 2B). In collaboration with 2 staff EHR analysts (vendor EHR system Epic Systems Corporation, Verona WI) having research tool and ambulatory system expertise, rules were built into the EHR for a notification message (Epic system silent Best Practice Advisory) to a study team inbasket pool for each interested and potentially eligible individual (Figure 1). These messages included contact information from the screening consent (Figure 2B), age, NDDI-E score, GAD-7 score, and NDDI-E passive suicidal ideation response. 
The final inclusion criterion (epilepsy diagnosis) was assessed by study staff via manual EHR review before telephone contact for enrollment (Figure 1). Results of anxiety, depression, and quality of life measures were available in the EHR for providers during epilepsy clinic visits, and otherwise participants received usual epilepsy care.
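The automated portion of eligibility assessment described above can be illustrated with a minimal sketch. It is not the Epic rule logic used in the study; the class name `ScreenResult`, the function `notify_study_team`, and the field names are hypothetical, and the inclusion of the age check in the automated step is an assumption. Only the thresholds (GAD-7 ≥8, NDDI-E ≥14) and the excluded NDDI-E item 4 responses come from the text.

```python
from dataclasses import dataclass

# Thresholds and exclusion responses as stated in the inclusion/exclusion criteria.
GAD7_MIN = 8
NDDIE_MIN = 14
EXCLUDED_ITEM4_RESPONSES = {"sometimes", "always or often"}


@dataclass
class ScreenResult:
    """Hypothetical container for one patient's screening data."""
    age: int
    gad7: int
    nddie: int
    nddie_item4: str   # response to NDDI-E question 4
    consent_yes: bool  # answered Yes to the electronic screening consent


def notify_study_team(s: ScreenResult) -> bool:
    """Return True when a study-team notification would be generated."""
    if not s.consent_yes or s.age < 18:
        return False
    # Passive suicidal ideation responses lead to automatic exclusion.
    if s.nddie_item4.strip().lower() in EXCLUDED_ITEM4_RESPONSES:
        return False
    # Either instrument in the borderline-or-high range qualifies.
    return s.gad7 >= GAD7_MIN or s.nddie >= NDDIE_MIN
```

In this sketch, the epilepsy diagnosis criterion is deliberately absent, because the text states it was assessed manually by study staff after the notification.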

Figure 1.

Recruitment process and role of the electronic health record (EHR). EHR-based anxiety and depression screening for clinical care (purple, far left) was followed by EHR-embedded research screening consent and automated eligibility assessment, then study team notification message in the EHR (Epic system silent Best Practice Advisory, BPA; EHR activities blue, left and middle portion of figure). Subsequently, study team tasks (red, far right) included manual epilepsy diagnosis assessment in the EHR and telephone enrollment.

Figure 2.

Electronic health record-based screening consent. (A) Screening consent wording and button selections. (B) Additional questions that appear after selecting the Yes response and clicking Continue.

Standard protocol approvals, registrations, and consents

The institutional review board approved the EHR-based screening consent followed by telephone consent, based on minimal risk study classification. In addition to study coordinator documentation of phone consent, enrolled participants received a study information sheet by standard US Postal Service mail, containing the information reviewed during telephone consent. The study is registered at clinicaltrials.gov: NCT03879525.

Detailed study design, randomization, and follow-up

Following enrollment via telephone consent, a brief telephone interview was conducted to collect demographics and baseline clinical history. Other baseline variables were collected from the EHR. For participants who did not already have an EHR patient portal account, research staff activated a patient portal account for them prior to randomization. Participants were then randomized in REDCap (Research Electronic Data Capture)27 to either EHR patient portal outcome collection or telephone outcome collection. The randomization table was developed by the study statistician and uploaded to REDCap by the programmer, thus concealing study arm allocation from study coordinators and the Principal Investigator (PI) until participants were fully enrolled and the coordinator activated the REDCap randomization button. Allocation was 1:1 for EHR versus telephone, using blocked randomization stratified by patient portal enrollment status at baseline, with variable block sizes. Because the study team conducted and supervised outcome assessment procedures in this pilot study, it was not possible to blind the study team to EHR versus telephone allocation. However, identical encounter types were created within the EHR and outcome instrument results were present in the EHR regardless of study arm, to blind treating epilepsy specialists to the randomized allocation. Study procedures involved study staff viewing individual patient-reported outcome results during data collection, but the PI did not access these values until study end.
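A stratified, permuted-block allocation table of the kind described above could be generated along the following lines. This is a sketch under stated assumptions, not the study statistician's program: the block sizes (2 and 4), the seeds, the stratum labels, and the function names are all hypothetical, since the text specifies only 1:1 allocation, stratification by portal enrollment status, and variable block sizes.

```python
import random


def blocked_allocation(n, block_sizes=(2, 4), arms=("EHR", "Telephone"), seed=0):
    """Build a 1:1 permuted-block sequence of length n with variable block sizes.

    block_sizes and seed are illustrative assumptions, not study parameters.
    """
    rng = random.Random(seed)
    sequence = []
    while len(sequence) < n:
        size = rng.choice(block_sizes)             # pick this block's size
        block = list(arms) * (size // len(arms))   # equal arms within the block
        rng.shuffle(block)                         # random order inside the block
        sequence.extend(block)
    return sequence[:n]


def build_randomization_table(n_per_stratum, seed=2019):
    """One independent sequence per stratum (portal account status at baseline)."""
    strata = ("portal_active", "portal_inactive")  # hypothetical labels
    return {
        stratum: blocked_allocation(n_per_stratum, seed=seed + i)
        for i, stratum in enumerate(strata)
    }
```

Because every block contains equal numbers of each arm, the running imbalance within a stratum never exceeds half the largest block size, and varying the block size makes the next assignment harder to predict.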

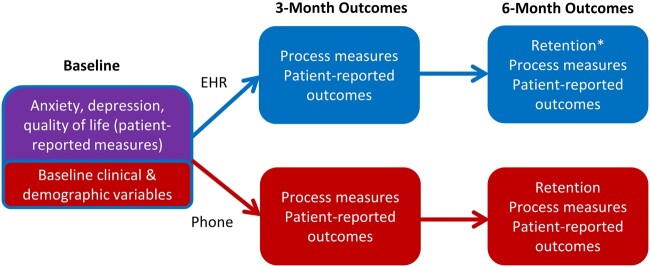

Those enrolled in the EHR patient portal arm received an instructional handout via mail on how to access patient portal-based patient reported outcome measures. Follow-up patient-reported outcome collection and reminders were conducted at 3 and 6 months using these procedures: (1) Ten days prior to scheduled outcome, paper outcome measures were mailed to telephone participants and electronic outcome measures were sent to EHR participants in the EHR portal; (2) Two reminder communications (telephone calls vs patient portal messages) were provided to participants during the 10 days prior to outcome due date; and (3) Up to 5 postdue reminder calls or EHR portal reminder messages were attempted following outcome due date. Reminders were provided every 2–3 days following target outcome date. Prior to 6-month outcome collection, 2 additional follow-up procedures were added via a protocol amendment. First, 10 days prior to EHR outcome assessment due date, an enhanced tip sheet on how to access EHR patient portal questionnaires from patient portal message notification emails was mailed to EHR arm participants. Second, if a participant failed to meet the primary retention outcome via the randomized method (defined as failure to complete outcome assessment within 1 week of the final postdue reminder), up to 3 attempts for outcome collection were then made by the alternative, nonrandomized method. Participants received a $15 incentive for completing the baseline interview and for each completed outcome assessment. Figure 3 outlines measure collection method and timing for baseline variables and each outcome concept.
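The contact schedule above can be laid out as dates relative to an outcome due date. The sketch below is illustrative only: the text gives the 10-day lead time, 2 predue reminders, up to 5 postdue reminders every 2–3 days, and a retention window ending 1 week after the final postdue reminder, but the exact predue reminder days (7 and 3 days before) and the alternating 2- and 3-day gaps are assumptions, as is the function name.

```python
from datetime import date, timedelta


def follow_up_schedule(due: date) -> dict:
    """Return contact dates for one outcome time point (3 or 6 months)."""
    send = due - timedelta(days=10)  # measures mailed or sent via portal
    # Two reminders during the 10 days before the due date (assumed days).
    pre_due = [due - timedelta(days=7), due - timedelta(days=3)]
    # Up to 5 postdue reminders every 2-3 days (assumed alternation).
    post_due, current = [], due
    for gap in (2, 3, 2, 3, 2):
        current += timedelta(days=gap)
        post_due.append(current)
    # Retention by randomized method requires completion within 1 week
    # of the final postdue reminder.
    window_closes = post_due[-1] + timedelta(days=7)
    return {
        "send": send,
        "pre_due_reminders": pre_due,
        "post_due_reminders": post_due,
        "retention_window_closes": window_closes,
    }
```

Under the 6-month protocol amendment, the up to 3 attempts by the alternative method would begin only after `retention_window_closes`.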

Figure 3.

Study design schema with outcome assessment. Baseline data collection and outcome measure concepts were collected from the clinical care visit that prompted study enrollment (purple, top of far left), via research telephone call (red, lower figure) and via the electronic health record (EHR, blue, upper figure). Individuals who did not meet criteria for retention at 6 months via outcome collection by randomized method would then have 3 additional contact attempts by the alternative, nonrandomized method. *The primary outcome was retention at 6 months in the EHR outcome collection arm.

Baseline clinical and demographic variables

Sociodemographic variables were collected and coded using National Institute of Neurological Disorders and Stroke Common Data Elements when possible. Education, marital status, and employment were collected by interview. Age, sex, race, and ethnicity were obtained from the EHR; variables were collected from the EHR when feasible to reduce participant burden. Epilepsy type was collected from the EHR and classified according to 2017 International League Against Epilepsy criteria.28 Seizure freedom (for at least 6 months before baseline GAD-7 and NDDI-E) was also collected from the EHR. Baseline anxiety (GAD-7),20 depression (NDDI-E),22 and quality of life scores (QOLIE-10)26 were collected from the clinic visit that prompted trial enrollment; these patient-reported outcome measures were also collected at each follow-up. This initial report focuses on retention and process outcomes from the randomized trial.

Feasibility assessment, process variables, sample size

The primary feasibility outcome was retention in the EHR arm, with retention defined as complete patient-reported outcome collection occurring via randomized modality within 1 week of the 5th postdue date randomized modality reminder (as described above). The study sample size of N = 15 per arm was planned around the primary feasibility hypothesis that 6-month retention would be greater than 60% in the EHR arm. Based on a Bayesian simulation of 10,000 trials with sample size N = 15 in the EHR arm, a noninformative uniform prior on the probability of adherence, and a target of an 80% credible bound (at least 80% posterior probability that true retention is at least 60%), we determined that if 11 or more of the EHR-arm participants were retained, we would be 83% confident that the true retention probability is greater than 60%. We selected 60% retention as the target based on retention in efficacy trials, incorporating additional retention data from pragmatic trials, and based on the study team’s consensus that retention below 60% would not be acceptable for a future trial.29–32 Other process and feasibility variables were collected at 3 and 6 months (Figure 3). The outcomes included retention in both arms, total study team time required for outcome collection, total study team time for 6-month outcome collection and data entry, timing of outcome collection relative to due date, whether EHR-arm participants read the study EHR portal messages, and number of total contact attempts/reminders.
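The 83% figure can be checked without simulation, under the assumption that the analysis is equivalent to a conjugate Beta-binomial model: with a uniform Beta(1, 1) prior and k of n retained, the posterior is Beta(k + 1, n − k + 1). The sketch below swaps the authors' simulation for this closed form and uses the standard identity between the Beta and binomial distributions for integer parameters; the function name is hypothetical.

```python
from math import comb


def posterior_prob_above(retained, n, threshold):
    """P(true retention > threshold | data) under a Uniform(0, 1) prior.

    Closed-form stand-in for the simulation described in the text.
    """
    a = retained + 1        # posterior is Beta(a, b)
    b = n - retained + 1
    m = a + b - 1
    # For integer a, b: P(Beta(a, b) > x) = P(Binomial(a + b - 1, x) <= a - 1)
    return sum(
        comb(m, j) * threshold**j * (1 - threshold) ** (m - j)
        for j in range(a)
    )


# Smallest number retained out of 15 giving at least 80% posterior probability
min_retained = min(
    k for k in range(16) if posterior_prob_above(k, 15, 0.60) >= 0.80
)
print(round(posterior_prob_above(11, 15, 0.60), 2), min_retained)  # 0.83 11
```

With 10 of 15 retained, the same calculation gives a posterior probability of about 0.67, below the 80% target, which is why 11 is the smallest count that satisfies it.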

Statistical analysis

Analyses were conducted using SAS 9.4. Descriptive and summary statistics including proportions, mean, median and interquartile range were calculated for the total sample and each group. Retention rates were calculated, together with 95% confidence intervals, based on inverting the score test for a binomial proportion. Confidence intervals for means were calculated on log transformed values and back transformed. Retention, process, and health utilization outcomes were compared between groups using Wilcoxon rank-sum test or Fisher’s exact test, as appropriate. Age and anxiety and depression scores were also compared across different branch points in the trial screening/enrollment process.
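Inverting the score test for a binomial proportion yields the interval commonly called the Wilson score interval. The sketch below is a plain Python re-implementation for illustration (the study used SAS 9.4); the function name is hypothetical, and the results are clamped to [0, 1] to avoid floating-point overshoot.

```python
from math import sqrt
from statistics import NormalDist


def wilson_interval(successes, n, conf=0.95):
    """Score-test (Wilson) confidence interval for a binomial proportion."""
    z = NormalDist().inv_cdf(1 - (1 - conf) / 2)  # about 1.96 for 95%
    p_hat = successes / n
    denom = 1 + z**2 / n
    center = (p_hat + z**2 / (2 * n)) / denom
    half = z * sqrt(p_hat * (1 - p_hat) / n + z**2 / (4 * n**2)) / denom
    return max(0.0, center - half), min(1.0, center + half)


lo, hi = wilson_interval(10, 15)
print(f"{lo:.1%} to {hi:.1%}")  # 41.7% to 84.8%
```

Applied to 10 of 15 and 15 of 15 retained, this formula gives 41.7%–84.8% and 79.6%–100%, matching the intervals reported for the EHR and telephone arms.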

RESULTS

Recruitment

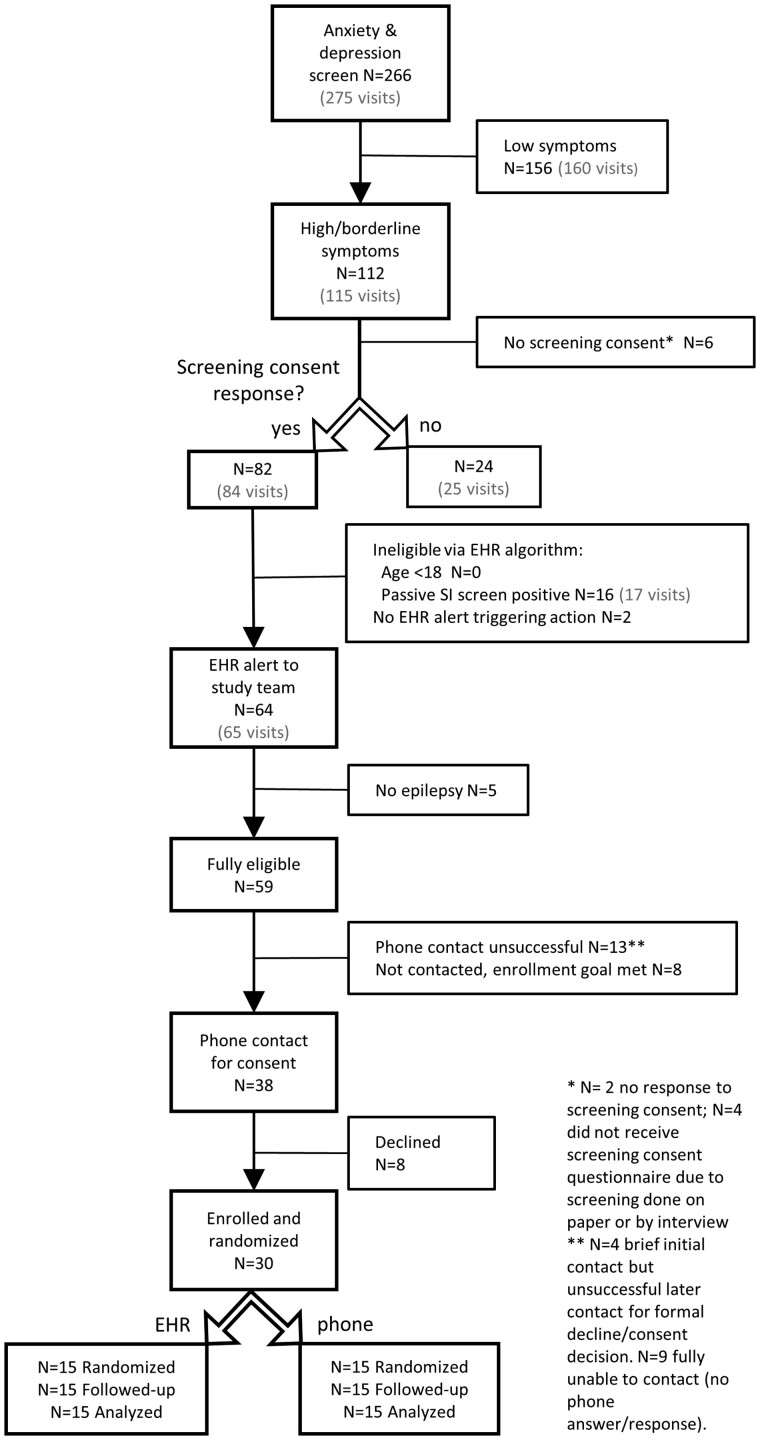

Recruitment occurred from December 12, 2019 to May 14, 2020 and follow-up occurred from March 30, 2020 to November 10, 2020. Recruitment completed nearly 1 month faster than the 6 months projected during trial planning. Figure 4 demonstrates the flow of potential participants from initial clinical anxiety and depression screening, EHR-based automated eligibility assessment, and enrollment through study completion. Of those who completed anxiety and depression screens, 112 (42.1%) had borderline or high scores on at least 1 instrument, and more than 75% of these individuals responded yes to the screening consent (82 of 106 who completed the electronic screening consent question). Nearly 20% of interested individuals were automatically excluded by the EHR algorithm due to their scores on the passive suicidal ideation NDDI-E item. Anxiety and depression scores and NDDI-E passive suicidal ideation item responses did not differ significantly among those who indicated yes versus no to the screening consent, nor for those who ultimately enrolled versus did not enroll (among those with study eligibility EHR alert generated). The group that selected no to the screening consent were younger than those who responded yes (mean age ± SD 30.4 ± 12 years for No group vs 39.6 ± 14.3 for Yes group, P = 0.004, Wilcoxon rank sum test). There was no age difference between those who ultimately enrolled versus did not enroll among individuals with eligibility EHR alert generated. All N = 30 individuals enrolled were analyzed for retention and process outcomes.

Figure 4.

Eligibility and participant recruitment flow diagram. Ns shown are unique individual patients. The total number of clinic visits is shown in gray, when there were repeated visits for individuals in the study period.

Participant characteristics

Table 1 shows sociodemographic and clinical characteristics of the study sample at baseline. Age of participants ranged from 20 to 64; 40% were male and 23% were Black, mixed Black and White, or Native American race. Half were married and fewer than half were employed. Over 80% had focal epilepsy and only one-third had been seizure free in the prior 6 months. Two-thirds of the study sample had positive screens for anxiety or depression (GAD-7 ≥ 10 or NDDI-E ≥ 16) and the remainder had borderline high scores.

Table 1.

Participant characteristics overall and by randomized modality<sup>a</sup>

| Characteristic | Overall (N = 30) | EHR (N = 15) | Telephone (N = 15) |
|---|---|---|---|
| Age at baseline, years | 42.5 ± 12.8 | 42.5 ± 13.4 | 42.5 ± 12.7 |
| | 40 [33, 53] | 38 [33, 57] | 46 [32, 53] |
| 20–29 | 4 (13%) | 2 (13%) | 2 (13%) |
| 30–39 | 10 (33) | 6 (40) | 4 (27) |
| 40–49 | 6 (20) | 2 (13) | 4 (27) |
| 50–59 | 7 (23) | 3 (20) | 4 (27) |
| 60–64 | 3 (10) | 2 (13) | 1 (7) |
| Female | 18 (60%) | 7 (47%) | 11 (73%) |
| Race-ethnicity | | | |
| Non-Hispanic Black only | 5 (17%) | 3 (20%) | 2 (13%) |
| Non-Hispanic white only | 23 (77) | 11 (73) | 12 (80) |
| Other<sup>b</sup> | 2 (7) | 1 (7) | 1 (7) |
| Education | | | |
| High school/GED or less | 7 (23%) | 7 (47%) | 0 |
| Associate’s degree/some college | 15 (50) | 5 (33) | 10 (67%) |
| Bachelor’s degree or greater | 8 (27) | 3 (20) | 5 (33) |
| Marital status | | | |
| Never married | 12 (40%) | 7 (47%) | 5 (33%) |
| Separated/divorced | 3 (10) | 1 (7) | 2 (13) |
| Married | 15 (50) | 7 (47) | 8 (53) |
| Employment status | | | |
| Employed | 9 (30%) | 4 (27%) | 5 (33%) |
| Disabled | 15 (50) | 8 (53) | 7 (47) |
| Student | 2 (7) | 1 (7) | 1 (7) |
| All others<sup>c</sup> | 4 (13) | 2 (13) | 2 (13) |
| Epilepsy type | | | |
| Focal | 25 (83%) | 12 (80%) | 13 (87%) |
| Generalized | 4 (13) | 3 (20) | 1 (7) |
| Unknown | 1 (3) | 0 | 1 (7) |
| Seizure free at least 6 months | 10 (33%) | 4 (27%) | 6 (40%) |
| Number of current antiseizure medications | | | |
| 1 | 13 (43%) | 5 (33%) | 8 (53%) |
| 2 | 9 (30) | 5 (33) | 4 (27) |
| 3 | 5 (17) | 2 (13) | 3 (20) |
| 4 | 3 (10) | 3 (20) | 0 |
| GAD-7 Score | 10.5 ± 4.5 | 10.5 ± 5.3 | 10.5 ± 3.8 |
| | 9 [7, 13] | 9 [7, 14] | 9 [8, 13] |
| GAD-7 ≥ 10 | 13 (43%) | 6 (40%) | 7 (47%) |
| NDDI-E Score | 15.3 ± 3.0 | 15.4 ± 2.8 | 15.3 ± 3.4 |
| | 15 [14, 18] | 15 [14, 18] | 15 [12, 19] |
| NDDI-E ≥ 16 | 14 (47%) | 7 (47%) | 7 (47%) |
| GAD-7 ≥ 10, NDDI-E ≥ 16, or both | 20 (67%) | 9 (60%) | 11 (73%) |

<sup>a</sup> Count (column %), mean ± SD, and median [interquartile range].

<sup>b</sup> One Black and White mixed race individual in the phone group and 1 Native American in the EHR group.

<sup>c</sup> Includes keeping house, temporarily laid off, on leave via Family Medical Leave Act, not working.

EHR: electronic health record; GAD-7: Generalized Anxiety Disorder-7; NDDI-E: Neurological Disorders Depression Inventory-Epilepsy.

Overall, the EHR and telephone arm participants had similar characteristics, except that all of the study participants with a high school or lower level of education were randomized to the EHR arm. Two participants did not have EHR patient portal accounts prior to study enrollment, one in each arm.

Primary and secondary outcomes: EHR patient portal versus telephone

Analyses of the primary and secondary outcomes are shown in Tables 2 and 3. The primary outcome, retention at 6 months (PROM collection at 6 months in the EHR arm within 1 week of the final reminder message), was met in 10 of the 15 participants in the EHR arm (66.7%, CI 41.7%–84.8%). This did not meet the predetermined feasibility goal of 11 participants retained, and the difference in retention between the EHR arm and the telephone arm (100%, CI 79.6%–100%) was statistically significant (P = 0.04). Nearly all 6-month outcomes were obtained when the hybrid method of outcome collection was used for those not retained by randomized modality (telephone for EHR-randomized participants, Table 3). The one individual in Table 3 for whom the outcome was not obtained by hybrid method actually returned most (but not all) outcome measures via patient portal following initiation of telephone contact attempts.
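The between-arm retention comparison can be reproduced from the counts alone with a two-sided Fisher's exact test. The following is a small standard-library sketch (the study used SAS 9.4); the function name is hypothetical, and the two-sided P value sums the probabilities of all tables no more likely than the observed one.

```python
from math import comb


def fisher_exact_two_sided(a, b, c, d):
    """Two-sided Fisher exact P value for the 2x2 table [[a, b], [c, d]]."""
    row1, row2, col1 = a + b, c + d, a + c
    total = comb(row1 + row2, col1)

    def prob(x):
        # Hypergeometric probability of x in the top-left cell
        return comb(row1, x) * comb(row2, col1 - x) / total

    lo, hi = max(0, col1 - row2), min(row1, col1)
    p_obs = prob(a)
    # Small relative tolerance guards against floating-point ties
    return sum(
        prob(x) for x in range(lo, hi + 1) if prob(x) <= p_obs * (1 + 1e-9)
    )


# Rows: EHR, telephone; columns: retained, not retained at 6 months
print(round(fisher_exact_two_sided(10, 5, 15, 0), 2))  # 0.04
```

The same function applied to the hybrid-method counts (14 of 15 versus 15 of 15) returns 1.0, consistent with the reported P > 0.99.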

Table 2.

Retention and process measures by randomized modality with no hybrid method of outcome collection<sup>a</sup>

| Measure | Overall (N = 30) | EHR (N = 15) | Telephone (N = 15) | P value* |
|---|---|---|---|---|
| Retention | | | | |
| 3 months | 25 (83%) | 10 (67%) | 15 (100%) | 0.04 |
| 6 months<sup>b</sup> | 25 (83%) | 10 (67%) | 15 (100%) | 0.04 |
| Staff time for outcome collection (min) | | | | |
| 3 months | 14.4 ± 7.3 | 11.0 ± 7.1 | 17.9 ± 5.8 | 0.004 |
| | 12.4 [8.3, 19.4] | 8.3 [6.0, 16.0] | 18.3 [12.3, 22.0] | |
| 6 months | 13.0 ± 7.7 | 5.9 ± 3.6 | 17.7 ± 5.7 | <0.001 |
| (N = 25, 10, 15) | 12 [6, 20] | 4 [4, 8] | 17.7 [12.2, 22.0] | |
| Staff time for outcome collection and data entry (min) | | | | |
| 6 months | 22.8 ± 13.6 | 13.6 ± 3.7 | 29.0 ± 14.3 | <0.001 |
| (N = 25, 10, 15) | 21 [14, 27] | 12.7 [11.0, 14.0] | 26.0 [22.1, 32.3] | |
| Number of reminders | | | | |
| 3 months | 2.8 ± 2.3 | 3.5 ± 2.5 | 2.1 ± 1.8 | 0.09 |
| | 2 [1, 4] | 3 [1, 7] | 1 [1, 2] | |
| 6 months | 2.3 ± 2.0 | 2.7 ± 2.7 | 2.0 ± 1.4 | 0.79 |
| (N = 25, 10, 15) | 1 [1, 3] | 1.5 [1, 3] | 1 [1, 3] | |
| Observations relative to due date (days) | | | | |
| 3 months | −1.9 ± 6.8 | −2.1 ± 6.2 | −1.8 ± 7.3 | 0.89 |
| (N = 25, 10, 15) | −3 [−6, −1] | −2.5 [−7, 1] | −3 [−6, −1] | |
| 6 months | −1.7 ± 8.1 | −1.9 ± 10.7 | −1.6 ± 6.2 | 0.34 |
| (N = 25, 10, 15) | −5 [−7, 0] | −5 [−10, 0] | −5 [−6, 1] | |

<sup>a</sup> Count (column %), mean ± SD, and median [interquartile range]; data are complete unless otherwise noted.

<sup>b</sup> Primary feasibility outcome.

\* P values are for comparison of EHR and telephone groups, based on Fisher’s exact and Wilcoxon rank sum tests.

EHR: electronic health record.

Table 3.

Retention and process measures by randomized modality with hybrid method of outcome collection<sup>a</sup>

| Measure | Overall (N = 30) | EHR (N = 15)<sup>b</sup> | Telephone (N = 15) | P value* |
|---|---|---|---|---|
| Outcome obtained by dual method protocol | | | | |
| 6 months | 29 (97%) | 14 (93%) | 15 (100%) | >0.99 |
| Staff time for outcome collection (min) | | | | |
| 6 months | 15.6 ± 9.8 | 13.5 ± 12.5 | 17.7 ± 5.7 | 0.09 |
| | 14.7 [8.0, 22.0] | 8 [4, 25] | 17.7 [12.2, 22.0] | |
| Staff time for outcome collection and data entry (min) | | | | |
| 6 months | 25.2 ± 14.0 | 21.4 ± 13.0 | 29.0 ± 14.3 | 0.04 |
| | 23.2 [14.0, 29.0] | 14.0 [11.5, 29.0] | 26.0 [22.1, 32.3] | |
| Number of reminders | | | | |
| 6 months | 3.5 ± 3.3 | 4.9 ± 4.0 | 2.0 ± 1.4 | 0.07 |
| | 2 [1, 6] | 3 [1, 9] | 1 [1, 3] | |
| Observations relative to due date (days) | | | | |
| 6 months | 3.3 ± 13.7 | 8.3 ± 17.3 | −1.6 ± 6.2 | 0.39 |
| | −2.5 [−6, 11] | 0 [−10, 27] | −5 [−6, 1] | |

<sup>a</sup> Count (column %), mean ± SD, and median [interquartile range]; data are complete unless otherwise noted.

<sup>b</sup> Total 5 individuals in this group had outcomes collected by hybrid method.

\* P values are for comparison of EHR and telephone groups, based on Fisher’s exact and Wilcoxon rank sum tests.

EHR: electronic health record.

Staff time required for 6-month data collection by randomized modality was 11.8 min less per participant in the EHR arm (mean 5.9 min, CI 3.3–7.7) than in the phone arm (mean 17.7 min, CI 14.1–20.2). Time for data collection and entry was 15.4 min less per participant by randomized modality at 6 months (EHR mean 13.6 min, CI 11–15.8; phone mean 29.0 min, CI 22.1–32.9; Table 2). When time for hybrid method follow-up (phone calls among those not retained by EHR method) was considered (Table 3), the EHR arm required 4.2 fewer minutes of staff time per participant for data collection (EHR mean 13.5 min, CI 5.2–15.1) and 7.6 fewer minutes for collection and entry (EHR mean 21.4 min, CI 13.6–25.0). Number of reminders and timing of outcome collection did not significantly differ between groups (Tables 2 and 3).
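The staff time savings quoted above are simple differences of the arm means in Tables 2 and 3. As a quick arithmetic check, the sketch below recomputes them; the dictionary layout and function name are illustrative only, and the values are the 6-month means copied from the tables.

```python
# Mean staff minutes per participant at 6 months, copied from Tables 2 and 3.
MEANS = {
    "collection": {"ehr_only": 5.9, "ehr_hybrid": 13.5, "telephone": 17.7},
    "collection_and_entry": {"ehr_only": 13.6, "ehr_hybrid": 21.4, "telephone": 29.0},
}


def minutes_saved(task, ehr_variant):
    """Telephone mean minus EHR-arm mean, rounded to 0.1 min."""
    row = MEANS[task]
    return round(row["telephone"] - row[ehr_variant], 1)


for task in MEANS:
    for variant in ("ehr_only", "ehr_hybrid"):
        print(task, variant, minutes_saved(task, variant))
```

These are differences in unadjusted means; the confidence intervals in the text describe each arm's mean separately, not the difference.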

Potential factors associated with outcome collection by EHR portal were explored. Four individuals did not return EHR portal outcomes at either 3 or 6 months. Of these, 3 (75%) had high school education. Overall, retention was 4/7 (57%) at 6 months among those with high school or lower education, and the other 2 individuals not retained in the EHR arm at 6 months had some college but not a bachelor’s or higher degree. Weekly reviews of whether the patient portal messages had been read by participants in the EHR arm demonstrated 2 individuals who did not return outcomes at 3 or 6 months never read any patient portal messages during the review period; these individuals both had high school education. One individual who did not return outcomes at 3 or 6 months by EHR read some messages at 3 months, but none at 6 months. The others read some or all messages at one outcome time point only (N = 2), or at both time points (N = 1). The EHR-arm individual who did not have a patient portal account before study enrollment returned all outcomes in the EHR. Process outcomes were similar among participants with only borderline anxiety or depression scores at baseline (GAD-7 of 8-9 and/or NDDI-E of 14-15 but not higher for either instrument, N = 10) versus high scores at baseline (GAD-7 ≥ 10 and/or NDDI-E ≥ 16, N = 20). In this study, there were no adverse events related to study activities.

DISCUSSION

This novel pilot trial demonstrated feasibility of recruiting individuals with anxiety or depression symptoms from a comprehensive epilepsy clinic for a pragmatic 6-month outcome study using EHR-embedded screening consent and automated rules for preliminary eligibility assessment. In this trial, the EHR-based eligibility screening approach facilitated efficient and successful enrollment, with recruitment completing early in spite of clinic volume reductions over the final 7 weeks due to COVID-19. The initial stages of eligibility assessment and screening consent were embedded in routine care processes, and these were largely automated or self-completed by patients. Thus, dedicated research staff resources were not required until potential participants had already indicated interest and met all but one of the eligibility criteria. This builds on our prior work, eliminating the in-clinic time spent by research coordinators to screen potential participants during prior studies,9,33 and could serve as a readily implementable model for efficient trial recruitment from routine care settings, in contrast to exploratory automated methods utilizing artificial intelligence which require further development prior to routine use.34–36 Use of EHR-embedded questionnaires for both clinical screening and trial recruitment supports a learning health system approach,1,37 as anxiety and depression screening results remain accessible for clinical care using existing clinical EHR documentation and review tools, and research results can be used rapidly to further refine care processes. 
EHR-based screening tools also have potential to support implementation research and scaling of strategies in real-world care settings.38 Limitations to this approach include the need for EHR analyst expertise and time to build these tools into the EHR, general institutional information technology support needs,39 and variability40 or lack of interoperability across different EHRs (and within the same EHR across different institutions). However, these EHR discrete data approaches pose an advantage over pen-and-paper measures that require staff time to scan into the EHR and that are often not entered as discrete data elements.

Analysis of the primary feasibility objective in this study, successful 6-month outcome collection (retention) in the EHR patient portal arm, demonstrated actual retention of 67% (10 of 15) by randomized modality following 5 postdue date reminders and a mailed instruction handout on how to access the portal-based outcomes from email links. This was significantly lower than in the telephone arm, and it did not meet the a priori target of at least 11 retained in the EHR arm to support our hypothesis that true retention is at least 60% for the EHR portal method. However, during follow-up phone calls for those who did not return EHR-based outcomes, patient-reported outcomes were ultimately collected among all participants at 6 months (albeit with one individual returning most but not all responses). The EHR method required less research staff time for outcome collection (>11 min less per participant when outcomes were obtained by EHR and 4.2–7.6 min less per participant with the hybrid method). Reasons for lower retention in the EHR portal arm compared with telephone arm may include: (1) limited notification capabilities of the EHR platform (general email message for all portal messages; actual content not visible until portal login); (2) portal message fatigue due to higher than usual message volume during follow-up (system-wide COVID-19 notifications); (3) education level imbalance between the 2 study arms; (4) differences in skills for information technology; and (5) reduced appeal of this method among these study participants compared to telephone. One Australian smoking cessation trial did find an association of trial retention with higher education,41 and low health literacy (which often correlates with low education) may pose a barrier to patient portal use.42 Overall, the retention and process measure results in our study suggest an Epic EHR portal-based outcome collection approach may not yield sufficient retention if used as a sole method of PROM collection.
A hybrid approach using EHR portal methods and telephone follow-up may result in excellent retention and reduced research staff resources compared with telephone alone. Refined electronic or EHR-based methods including MyChart app with app-based notifications warrant further study, and future studies should incorporate explicit plans to account for education level imbalances that might potentially affect retention.
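The retention figures discussed above can be checked with a short calculation. The sketch below is illustrative only: the article does not state which interval method or significance test was used, so the Wilson score interval and the two-sided Fisher exact test are assumptions chosen because they are common for small samples. The function names are hypothetical, and the counts (10 of 15 retained by EHR portal, 15 of 15 by telephone) come from the reported results.

```python
from math import comb, sqrt


def wilson_interval(successes, n, z=1.959964):
    """Wilson score interval for a binomial proportion (assumed method)."""
    p = successes / n
    denom = 1 + z**2 / n
    center = (p + z**2 / (2 * n)) / denom
    half = z * sqrt(p * (1 - p) / n + z**2 / (4 * n**2)) / denom
    # Clamp to [0, 1] to guard against floating-point overshoot.
    return max(0.0, center - half), min(1.0, center + half)


def fisher_exact_two_sided(a, b, c, d):
    """Two-sided Fisher exact p-value for the 2x2 table [[a, b], [c, d]]."""
    row1, row2, col1 = a + b, c + d, a + c
    total = row1 + row2

    def prob(x):
        # Hypergeometric probability of x in the top-left cell.
        return comb(row1, x) * comb(row2, col1 - x) / comb(total, col1)

    p_obs = prob(a)
    lo, hi = max(0, col1 - row2), min(row1, col1)
    # Sum all tables at least as unlikely as the observed one.
    return sum(prob(x) for x in range(lo, hi + 1)
               if prob(x) <= p_obs * (1 + 1e-9))


# Counts taken from the reported results.
ehr_retained, ehr_n = 10, 15
tel_retained, tel_n = 15, 15

ehr_ci = wilson_interval(ehr_retained, ehr_n)   # about 0.417 to 0.848
tel_ci = wilson_interval(tel_retained, tel_n)   # about 0.796 to 1.000
p_value = fisher_exact_two_sided(
    ehr_retained, ehr_n - ehr_retained,
    tel_retained, tel_n - tel_retained,
)                                               # about 0.042

print(f"EHR retention: {ehr_retained / ehr_n:.0%}, "
      f"CI {ehr_ci[0]:.1%} to {ehr_ci[1]:.1%}")
print(f"Telephone retention: {tel_retained / tel_n:.0%}, "
      f"CI {tel_ci[0]:.1%} to {tel_ci[1]:.1%}")
print(f"Two-sided Fisher exact P = {p_value:.3f}")
```

Under these assumptions, the computed values agree with the intervals and P value given in the abstract, although that agreement does not confirm these were the methods the authors actually used.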

Limitations and future directions

While this study is important in demonstrating efficient trial recruitment using EHR-embedded tools and research staff time savings from EHR portal-based outcome measures, limitations include small sample size, lack of balance in education levels across the 2 arms, the single site and single EHR examined, and possible confounding of outcome collection by COVID-19-related factors. Although the sample size was small, it was powered a priori to assess the primary retention outcome in the EHR arm. If lower education is associated with reduced capabilities to access and use electronic tools such as patient portals, then the predominance of individuals with lower education in the EHR portal arm could have resulted in lower EHR arm retention than would have been observed in a balanced sample. Given COVID-19-related changes in daily living during the follow-up period, it is possible that retention and process measures were affected by increased stay-at-home time, potentially enhancing retention by telephone or overall, though the direction of potential COVID-related impact is unclear, nor is it clear whether this would have a differential effect on the 2 study arms. The single site and single EHR used in this study limit generalizability of the results, and thus future work in additional settings and using interoperable tools or additional EHR systems is warranted. Future investigation comparing artificial intelligence-based trial recruitment methods to the manually programmed EHR-based methods used in this trial would also be beneficial.

CONCLUSION

Overall, this pragmatic randomized outcome measurement trial demonstrated recruitment ahead of schedule with low initial research staff effort. Better retention occurred at 6 months using telephone assessment compared to EHR portal. Near complete outcome capture was achieved, and >4–7 min of research staff time per participant was saved when a hybrid method of EHR outcome assessment followed by telephone was used. This hybrid approach may be promising for future investigation and use in pragmatic trials. Future work is warranted to investigate refined, EHR portal app-based approaches and to compare AI-based recruitment to this study’s recruitment methods.

FUNDING

This work was supported by the National Institutes of Health (National Center for Advancing Translational Sciences and National Institute of Neurological Disorders and Stroke), grant numbers KL2 TR001421, UL1 TR001420, and R25 NS088248. Early development of some EHR-based tools used in the study was supported by the Agency for Healthcare Research and Quality under award number R01 HS24057. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health.

AUTHOR CONTRIBUTIONS

Specific individual contributions of the authors include: HMMC: conception and design, acquisition, analysis and interpretation of data, drafting the work. BMS: conception and design, analysis and interpretation of the data, revising the work critically for important intellectual content. UT: analysis and interpretation of the data, revising the work critically for important intellectual content. PD: conception and design, interpretation of data for the work, revising the work critically for important intellectual content. JK: interpretation of the data, revising the work critically for important intellectual content. HA: interpretation of data, drafting the work, and revising critically for intellectual content. GAB: conception and design, acquisition, analysis, and interpretation of the data, revising the work critically for important intellectual content. In addition, all authors have provided final approval of the version to be published and agree to be accountable for all aspects of the work in ensuring that questions related to the accuracy or integrity of any part of the work are appropriately investigated and resolved.

ACKNOWLEDGMENTS

We would like to acknowledge Nancy Lawlor, Nicole Rojas, Sandra Norona, Amy Ayler, Jennifer Newsome, Kelly Conner, PhD, PA-C, Cormac O’Donovan, MD, Maria Sam, MD, Jane Boggs, MD, Christian Robles, MD, Mingyu Wan, and Eric Foster, PhD, for technical assistance, data collection, and patient recruitment support.

CONFLICT OF INTEREST STATEMENT

PD is a founding partner of Care Directions, LLC. The remaining authors have no relevant conflicts of interest to disclose.

Contributor Information

Heidi M Munger Clary, Department of Neurology, Wake Forest School of Medicine, Winston-Salem, North Carolina, USA.

Beverly M Snively, Department of Biostatistics and Data Science, Wake Forest School of Medicine, Winston-Salem, North Carolina, USA.

Umit Topaloglu, Department of Cancer Biology, Wake Forest School of Medicine, Winston-Salem, North Carolina, USA.

Pamela Duncan, Department of Neurology, Wake Forest School of Medicine, Winston-Salem, North Carolina, USA.

James Kimball, Department of Psychiatry, Wake Forest School of Medicine, Winston-Salem, North Carolina, USA.

Halley Alexander, Department of Neurology, Wake Forest School of Medicine, Winston-Salem, North Carolina, USA.

Gretchen A Brenes, Department of Internal Medicine, Section of Gerontology and Geriatric Medicine, Wake Forest School of Medicine, Winston-Salem, North Carolina, USA.

Data Availability

The data underlying this article are available in the Dryad Digital Repository, at https://doi.org/10.5061/dryad.qz612jmk3.43

REFERENCES

- 1. Institute of Medicine. The Learning Healthcare System: Workshop Summary. Washington, DC: The National Academies Press; 2007.

- 2. Steinhoff BJ, Staack AM, Hillenbrand BC. Randomized controlled antiepileptic drug trials miss almost all patients with ongoing seizures. Epilepsy Behav 2017; 66: 45–8.

- 3. Perucca E. From clinical trials of antiepileptic drugs to treatment. Epilepsia Open 2018; 3 (Suppl 2): 220–30.

- 4. Sanson-Fisher RW, Bonevski B, Green LW, D’Este C. Limitations of the randomized controlled trial in evaluating population-based health interventions. Am J Prev Med 2007; 33 (2): 155–61.

- 5. Walker MC, Sander JW. Difficulties in extrapolating from clinical trial data to clinical practice: the case of antiepileptic drugs. Neurology 1997; 49 (2): 333–7.

- 6. Lavallee DC, Chenok KE, Love RM, et al. Incorporating patient-reported outcomes into health care to engage patients and enhance care. Health Aff (Millwood) 2016; 35 (4): 575–82.

- 7. Sacristan JA. Patient-centered medicine and patient-oriented research: improving health outcomes for individual patients. BMC Med Inform Decis Mak 2013; 13: 6.

- 8. Thorpe KE, Zwarenstein M, Oxman AD, et al. A pragmatic-explanatory continuum indicator summary (PRECIS): a tool to help trial designers. J Clin Epidemiol 2009; 62 (5): 464–75.

- 9. Munger Clary HM, Croxton RD, Allan J, et al. Who is willing to participate in research? A screening model for an anxiety and depression trial in the epilepsy clinic. Epilepsy Behav 2020; 104 (Pt A): 106907.

- 10. Hatcher-Martin JM, Adams JL, Anderson ER, et al. Telemedicine in neurology. Neurology 2020; 94 (1): 30–8.

- 11. Haddad N, Grant I, Eswaran H. Telemedicine for patients with epilepsy: a pilot experience. Epilepsy Behav 2015; 44: 1–4.

- 12. Bahrani K, Singh MB, Bhatia R, et al. Telephonic review for outpatients with epilepsy—a prospective randomized, parallel group study. Seizure 2017; 53: 55–61.

- 13. Rasmusson KA, Hartshorn JC. A comparison of epilepsy patients in a traditional ambulatory clinic and a telemedicine clinic. Epilepsia 2005; 46 (5): 767–70.

- 14. Lavin B, Dormond C, Scantlebury MH, Frouin PY, Brodie MJ. Bridging the healthcare gap: building the case for epilepsy virtual clinics in the current healthcare environment. Epilepsy Behav 2020; 111: 107262.

- 15. Boylan LS, Flint LA, Labovitz DL, Jackson SC, Starner K, Devinsky O. Depression but not seizure frequency predicts quality of life in treatment-resistant epilepsy. Neurology 2004; 62 (2): 258–61.

- 16. Kwan P, Yu E, Leung H, Leon T, Mychaskiw MA. Association of subjective anxiety, depression, and sleep disturbance with quality-of-life ratings in adults with epilepsy. Epilepsia 2009; 50 (5): 1059–66.

- 17. Patel AD, Baca C, Franklin G, et al. Quality improvement in neurology: Epilepsy Quality Measurement Set 2017 update. Neurology 2018; 91 (18): 829–36.

- 18. Epileptologist-Treated Anxiety and Depression: Methods and Feasibility of a Learning Health System Trial. American Epilepsy Society Annual Meeting; Baltimore, MD; 2019.

- 19. Micoulaud-Franchi JA, Lagarde S, Barkate G, et al. Rapid detection of generalized anxiety disorder and major depression in epilepsy: validation of the GAD-7 as a complementary tool to the NDDI-E in a French sample. Epilepsy Behav 2016; 57 (Pt A): 211–6.

- 20. Spitzer RL, Kroenke K, Williams JB, Lowe B. A brief measure for assessing generalized anxiety disorder: the GAD-7. Arch Intern Med 2006; 166 (10): 1092–7.

- 21. Gill SJ, Lukmanji S, Fiest KM, Patten SB, Wiebe S, Jetté N. Depression screening tools in persons with epilepsy: a systematic review of validated tools. Epilepsia 2017; 58 (5): 695–705.

- 22. Gilliam FG, Barry JJ, Hermann BP, Meador KJ, Vahle V, Kanner AM. Rapid detection of major depression in epilepsy: a multicentre study. Lancet Neurol 2006; 5 (5): 399–405.

- 23. Mula M, McGonigal A, Micoulaud-Franchi JA, May TW, Labudda K, Brandt C. Validation of rapid suicidality screening in epilepsy using the NDDIE. Epilepsia 2016; 57 (6): 949–55.

- 24. Maraganore DM, Frigerio R, Kazmi N, et al. Quality improvement and practice-based research in neurology using the electronic medical record. Neurol Clin Pract 2015; 5 (5): 419–29.

- 25. Narayanan J, Dobrin S, Choi J, et al. Structured clinical documentation in the electronic medical record to improve quality and to support practice-based research in epilepsy. Epilepsia 2017; 58 (1): 68–76.

- 26. Cramer JA, Perrine K, Devinsky O, Meador K. A brief questionnaire to screen for quality of life in epilepsy: the QOLIE-10. Epilepsia 1996; 37 (6): 577–82.

- 27. Harris PA, Taylor R, Thielke R, Payne J, Gonzalez N, Conde JG. Research electronic data capture (REDCap) – a metadata-driven methodology and workflow process for providing translational research informatics support. J Biomed Inform 2009; 42 (2): 377–81.

- 28. Scheffer IE, Berkovic S, Capovilla G, et al. ILAE classification of the epilepsies: position paper of the ILAE Commission for Classification and Terminology. Epilepsia 2017; 58 (4): 512–21.

- 29. Gilbody S, Lewis H, Adamson J, et al. Effect of collaborative care vs usual care on depressive symptoms in older adults with subthreshold depression: the CASPER randomized clinical trial. JAMA 2017; 317 (7): 728–37.

- 30. Rollman BL, Herbeck Belnap B, Abebe KZ, et al. Effectiveness of online collaborative care for treating mood and anxiety disorders in primary care: a randomized clinical trial. JAMA Psychiatry 2018; 75 (1): 56–64.

- 31. Mavandadi S, Benson A, DiFilippo S, Streim JE, Oslin D. A telephone-based program to provide symptom monitoring alone vs symptom monitoring plus care management for late-life depression and anxiety: a randomized clinical trial. JAMA Psychiatry 2015; 72 (12): 1211–8.

- 32. Duncan PW, Bushnell CD, Jones SB, et al.; COMPASS Site Investigators and Teams. Randomized pragmatic trial of stroke transitional care: the COMPASS study. Circ Cardiovasc Qual Outcomes 2020; 13 (6): e006285.

- 33. Munger Clary HM, Croxton RD, Snively BM, et al. Neurologist prescribing versus psychiatry referral: examining patient preferences for anxiety and depression management in a symptomatic epilepsy clinic sample. Epilepsy Behav 2021; 114 (Pt A): 107543.

- 34. Tissot HC, Shah AD, Brealey D, et al. Natural language processing for mimicking clinical trial recruitment in critical care: a semi-automated simulation based on the LeoPARDS trial. IEEE J Biomed Health Inform 2020; 24 (10): 2950–9.

- 35. Umberger R, Indranoi CY, Simpson M, Jensen R, Shamiyeh J, Yende S. Enhanced screening and research data collection via automated EHR data capture and early identification of sepsis. SAGE Open Nurs 2019; 5: 2377960819850972.

- 36. Ni Y, Bermudez M, Kennebeck S, Liddy-Hicks S, Dexheimer J. A real-time automated patient screening system for clinical trials eligibility in an emergency department: design and evaluation. JMIR Med Inform 2019; 7 (3): e14185.

- 37. Greene SM, Reid RJ, Larson EB. Implementing the learning health system: from concept to action. Ann Intern Med 2012; 157 (3): 207–10.

- 38. Satre DD, Anderson AN, Leibowitz AS, et al. Implementing electronic substance use disorder and depression and anxiety screening and behavioral interventions in primary care clinics serving people with HIV: protocol for the Promoting Access to Care Engagement (PACE) trial. Contemp Clin Trials 2019; 84: 105833.

- 39. Van Eaton EG, Devlin AB, Devine EB, Flum DR, Tarczy-Hornoch P. Achieving and sustaining automated health data linkages for learning systems: barriers and solutions. EGEMS (Wash DC) 2014; 2 (2): 1069.

- 40. Wright A, Sittig DF, Ash JS, et al. Development and evaluation of a comprehensive clinical decision support taxonomy: comparison of front-end tools in commercial and internally developed electronic health record systems. J Am Med Inform Assoc 2011; 18 (3): 232–42.

- 41. Courtney RJ, Clare P, Boland V, et al. Predictors of retention in a randomised trial of smoking cessation in low-socioeconomic status Australian smokers. Addict Behav 2017; 64: 13–20.

- 42. Sakaguchi-Tang DK, Bosold AL, Choi YK, Turner AM. Patient portal use and experience among older adults: systematic review. JMIR Med Inform 2017; 5 (4): e38.

- 43. [dataset] Munger Clary H, Snively B. Patient-reported outcomes via electronic health record portal vs. telephone: process and retention data in a pilot trial of anxiety or depression symptoms in epilepsy. 2022. Dryad, doi: 10.5061/dryad.qz612jmk3.
