Abstract

Background

This article summarizes the work of the quantitative imaging subgroup of the 2017 AAPM Practical Big Data Workshop (PBDW‐2017) on progress and challenges in big data applied to cancer treatment and research. It is supplemented by a draft white paper that followed an American Association of Physicists in Medicine FOREM meeting on Imaging Genomics in 2014.

Aims

The goal of PBDW‐2017 was to close the gap between the theoretical vision of big data and the practical experience of encountering and solving challenges in curating and analyzing data.

Conclusions

Recommendations based on the meetings are summarized.

Keywords: big data, deep learning, imaging biomarkers, quantitative imaging, radiomics

1. Introduction

The 1st AAPM Practical Big Data Workshop was held in May 2017 in Ann Arbor, MI, to discuss the need to make progress in “Big Data” applied to cancer treatment and research. This article is a summary of the workshop's quantitative imaging subgroup, supplemented with observations from a draft white paper that followed an American Association of Physicists in Medicine FOREM meeting on Imaging Genomics in 2014 in Houston, Texas.1 Imaging genomics is concerned with the association of image‐based features, that is, “radiomics”, with gene expression or genomics. Both meetings focused on how quantitative medical imaging can become integral to big data applied to cancer. While the AAPM Practical Big Data Workshop focused on cancer, the techniques may provide insight into other diseases, including cardiac disease, neurodegenerative diseases such as multiple sclerosis, and arthritis. Recommendations based on the meetings are summarized at the end of the article.

Radiology has been at the center of healthcare transformation for two generations. Before 1970, exploratory surgery was responsible for a large fraction of hospital beds, which were filled with patients preparing for or recovering from such procedures. The advent of the CT scanner and other three‐dimensional (3D) imaging systems virtually eliminated exploratory surgery, brought about outpatient minimally invasive surgery, and enabled targeting of cancer radiotherapy and tumor assessment for medical oncology. Today, 3D imaging modalities provide the primary means by which disease phenotypes are assessed for screening, diagnosis, and treatment evaluation. Despite its major impact thus far, medical imaging could be made even more useful if images could be processed to yield quantitative descriptive, prognostic, and prescriptive information. Although computer‐aided detection/diagnosis (CADe/CADx) algorithms have extracted quantitative features from images of tumors for use in clinical decision‐support systems for decades, their use in associative data mining is more recent.2, 3 Such image analysis can be used in imaging decision support. The term radiomics was coined to describe the conversion of medical images into mineable data.4, 5, 6 Figure 1 summarizes the methodology of radiomics.

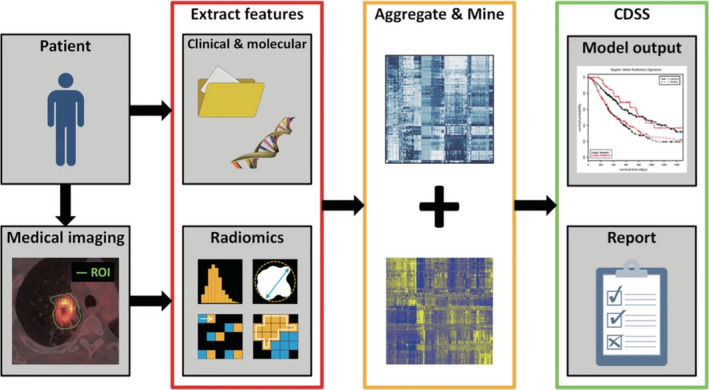

Figure 1.

Methodology of radiomics. Medical images are segmented to extract imaging biomarker features (i.e., phenotypes). These are aggregated with other biomarkers and the resulting quantitative data are mined with techniques borrowed from clinical trials, genomics discovery, and machine learning to achieve actionable clinical decision‐support systems (CDSS) and quantitative reports, which can be used to manage cancer longitudinally and form a rational taxonomy of disease. From Ref. 7.

Big data is an accumulation of data that are too large and complex for processing by traditional database management tools. Big data has not lived up to its hype in medicine, and this may also be true in radiology. Approaches to handling big data in imaging fall into two classes: (a) direct analysis of the image and medical records data through techniques such as machine learning, and (b) extraction of quantitative data from images, sometimes augmented with natural language processing of reports, followed by analysis in combination with other quantitative medical records data. This article examines the extraction of information using both approaches (see Fig. 2).

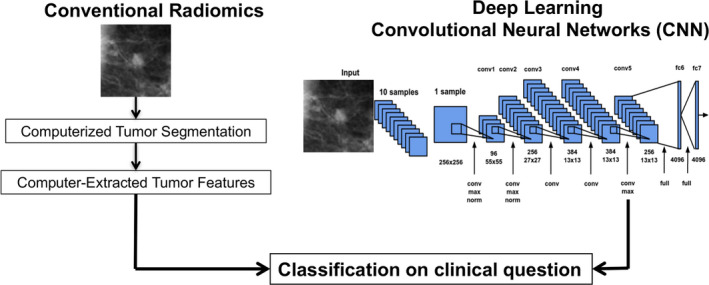

Figure 2.

Two general classification approaches to obtaining quantitative data for radiomics: (left) conventional manual or semiautomated lesion segmentation followed by computer‐extracted features, and (right) fully automated deep convolutional neural networks. Here, the clinical task is distinguishing between malignant and benign breast lesions. Both methods can take advantage of machine learning techniques, and both need to be trained on annotated cases with known outcomes. From Ref. 8.

If art is in the eye of the beholder, then in clinical radiology, interpretation is in the eye of the radiologist. Qualitative radiology reports are more akin to the written critique of a painting than to the detailed quantitative information of a weather report. Transforming radiological interpretation from an art into a science requires quantitative measurements along with minimization of known uncertainties. This transformation will require effort from all of the direct imaging stakeholders, including medical imaging device and software vendors, radiologists, referring medical specialists, medical physicists and biomedical engineers, biostatisticians, and informatics professionals. Vendors need to focus on providing accurate and reproducible quantitative measures rather than just “pretty pictures”. Getting imaging vendors more interested in image quantitation will require pressure from radiologists and imaging scientists, and from the downstream clinicians who will rely on the resulting quantitative data. Busy radiologists may be satisfied giving only qualitative interpretations until their referring physicians start demanding quantitative assessments. Many medical physicists and other imaging scientists, as well as radiologists, have been at the forefront of the Radiological Society of North America's (RSNA) Quantitative Imaging Biomarkers Alliance (QIBA®) (https://www.rsna.org/QIBA) and the NCI's Quantitative Imaging Network (QIN) (https://imaging.cancer.gov/informatics/qin.htm). In addition to imaging scientists and radiologists, representatives of other key quantitative imaging stakeholders, including imaging device and software vendors, pharmaceutical companies, regulatory agencies (e.g., FDA), standards agencies (e.g., NIST, MITA), biostatisticians, and metrologists, also contribute to RSNA QIBA efforts.
Radiomics and machine learning have also been prominent at many scientific meetings, including those of the RSNA and the American Association of Physicists in Medicine. However, with the exception of CT, the majority of 3D imaging equipment today is not sufficiently calibrated, assessed for image quantitation, or harmonized for use by image quantification systems. In fact, with the increasing use and varying implementations of iterative reconstruction techniques, even CT‐based measurements can be challenging in certain applications, for example, reproducible quantitative assessment of lung density in asthma or COPD. Healthcare IT professionals face demands on their time from all quarters. The digitization of medical records has improved the efficiency of clinical and business assessment, but the promised research breakthrough of big data applied to imaging has been largely elusive, mainly because of the lack of available and vetted quantitative data outside of the needs of clinical trials. Medical images represent about 90% of the bytes in a typical hospital data storage system, but until they are quantified, they will be neither searchable nor actionable.

In most applications of big data to medicine, more analysis means a greater need for data storage. This is not true in medical imaging. Medical image storage has been overwhelming for a long time and keeps growing, with local and cloud storage competing on the basis of cost and Internet bandwidth.9 Anatomic and temporal resolutions continue to improve, more types of images are acquired in one examination, more cine images are created, and patients are living longer and undergoing more exams. Quantitative imaging applied to radiology is likely to increase overall data storage requirements only negligibly, and may even reduce them by averting needless exams, while the resulting data analysis could add enormously to the interpretability of the disease state.
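A back‐of‐the‐envelope comparison illustrates why quantitative features add so little to image storage. The matrix size, slice count, bit depth, and feature count below are illustrative assumptions, not figures reported by the workshop.

```python
# Illustrative assumptions (not from the workshop): one CT series of 300
# slices at 512 x 512 pixels with 2 bytes per pixel, and a radiomic
# feature vector of 1,000 values stored as 8-byte floats.
ROWS, COLS, SLICES, BYTES_PER_PIXEL = 512, 512, 300, 2
N_FEATURES, BYTES_PER_FEATURE = 1_000, 8

image_bytes = ROWS * COLS * SLICES * BYTES_PER_PIXEL
feature_bytes = N_FEATURES * BYTES_PER_FEATURE

print(f"image series:   {image_bytes / 1e6:.1f} MB")
print(f"feature vector: {feature_bytes / 1e3:.1f} kB")
print(f"features add {100 * feature_bytes / image_bytes:.4f}% to storage")
```

Under these assumptions the series occupies about 157 MB and the feature vector about 8 kB, so storing the features adds roughly 0.005% to the archive.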

The existence of DICOM, Digital Imaging and Communications in Medicine (https://www.dicomstandard.org/), and organized Picture Archiving and Communication Systems (PACS) has put radiology “ahead in the game” for big data analysis, because they have made medical imaging substantially more accessible and standardized for image transmission, sharing, archival, and retrieval than nonimaging medical data. In addition, current CADe/CADx systems output structured reports of quantitative lesion features, furthering the readiness of clinical radiomics for data mining.

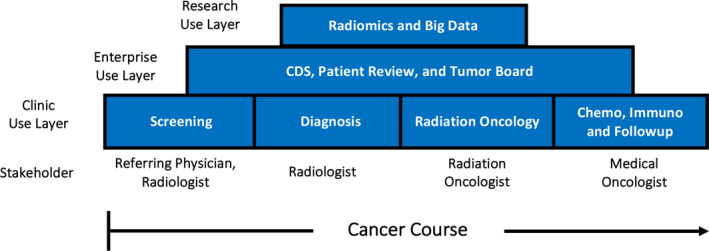

Medical imaging is used today to make tough decisions throughout the course of cancer, from screening to diagnosis, treatment planning in radiation oncology, and determining whether a course of drugs is working in medical oncology. However, most of that information is not in a quantitative structured form and is therefore not actionable. Medical imaging could be key not only to describing the solid tumor cancer phenotype but also to guiding biopsies, which are considered the gold standard for phenotypic description. Interestingly, tumor heterogeneity was traditionally seen as noise in genomics research, but it is now recognized as an important tumor characteristic. Until the science of circulating tumor cells (CTC) or cancer metabolomics is mature, medical imaging will be the only noninvasive basis on which decisions for cancer management rest. Figure 3 illustrates the foundational role of medical imaging. From left to right, the use of medical imaging in managing the treatment course of cancer is indicated in the bottom “clinical use” layer. Quantitative imaging data analysis cannot be done in isolation but needs to be aggregated with other electronic medical records, pathology, and genomics data in the “enterprise use” layer. This is the layer in which a multidisciplinary tumor board should be making major collaborative decisions. On the top layer is research. By aggregating all of the quantitative information, including quantitative medical imaging data, the taxonomy of cancer will become more apparent (i.e., making classifications based on phenotype and expressed genotype). This taxonomic description will be useful for determining the disease prognosis, which will enable patients and clinicians to make more rational decisions.
Finally, by having enough curated data on outcomes from a wide arsenal of available treatments, radiomics or quantitative imaging decision support will be useful for prescribing the best treatment for the disease expressed and the individual patient afflicted, and for the early assessment of treatment efficacy.

Figure 3.

Layer diagram of quantitative imaging data gathering in the clinical use layer (bottom), the use of the clinical data for multidisciplinary cancer management (middle layer), and the aggregation of the patient and population data for research (top layer).

1.A. Challenges with transforming cancer imaging into metrology

The requirements for stability, accuracy, and precision of quantitative values depend on how the images are used.10 Images used to delineate anatomical boundaries, such as that of a tumor in a lung, may depend more on the relative gradient of voxel values across the boundary than on the exact absolute voxel values. This reduces, but does not eliminate, the need for reliable voxel values. If the actual value itself is important, then the biases and variances in the voxel value measurements must be understood in order to mitigate their effects to the degree possible and to establish confidence intervals on the measured values. In addition, image distortions might directly affect the position of anatomic boundaries and thereby alter a small fraction of voxel values. The concepts of “distance to agreement” and “dose agreement” borrowed from radiation therapy dosimetry are useful for describing the differing accuracy requirements of quantity vs. geometry measures.11
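In dosimetry the two criteria are commonly combined in the gamma index, which scores each reference point by the smallest normalized distance‐and‐value discrepancy to any evaluated point. The sketch below applies that idea to a 1D profile of an arbitrary quantity. The 3 mm / 3% tolerances and global normalization are assumed defaults for illustration, and a brute‐force search over the sample points stands in for the interpolation a production tool would use.

```python
import math

def gamma_1d(positions, reference, evaluated, dta_mm=3.0, value_tol=0.03):
    """Global 1D gamma index for two profiles sampled at the same positions.

    dta_mm: distance-to-agreement tolerance in mm.
    value_tol: value-agreement tolerance as a fraction of the reference maximum.
    Returns one gamma value per reference point; gamma <= 1 counts as a pass.
    """
    norm = value_tol * max(reference)
    gammas = []
    for x_r, v_r in zip(positions, reference):
        best = math.inf
        for x_e, v_e in zip(positions, evaluated):
            distance_term = ((x_e - x_r) / dta_mm) ** 2   # geometric disagreement
            value_term = ((v_e - v_r) / norm) ** 2        # quantity disagreement
            best = min(best, math.sqrt(distance_term + value_term))
        gammas.append(best)
    return gammas

# Synthetic profile on a 1 mm grid and a copy with a small uniform offset.
x = [0, 1, 2, 3, 4]
ref = [10.0, 20.0, 30.0, 20.0, 10.0]
offset = [v + 0.3 for v in ref]
print(gamma_1d(x, ref, offset))
```

A small value error that is well within tolerance yields gamma values below 1, while a geometric shift would be absorbed by the distance term instead.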

CT scanners are the most quantitative of the common 3D imaging systems. The vendors long ago settled on Hounsfield units (HU) as the basis for the displayed grayscale images. Named after Godfrey Hounsfield, a co‐inventor of CT, Hounsfield units are defined as:

$$\mathrm{HU} = 1000 \times \frac{\mu - \mu_{\mathrm{water}}}{\mu_{\mathrm{water}}}$$

where $\mu$ is the attenuation coefficient of a voxel and $\mu_{\mathrm{water}}$ is the attenuation coefficient of water.12 A large number of corrections are made in a CT scanner in order to achieve relatively accurate and precise HU. These corrections are based on knowledge of the reconstruction process, including normalization to remove any detector sensitivity or projection angle variation, deconvolution to take into account a finite source size, and filtering of the projection data to remove blurring inherent in backprojection. Attenuation‐corrected backprojection is used to correct for beam hardening. Regular use of “air scans” accounts for time‐dependent variations. Inherent noise reduction can be accomplished by estimating the noise characteristics and applying adaptive filtering such that real gradients are preserved and the images are only negligibly blurred. Image stability and quality do require routine quality assurance, now largely built into the operation of the scanners and monitored by medical physicists. Iterative reconstruction techniques, and variation in the algorithms used by vendors, have the potential to impact the reproducibility of certain quantitative CT measures, as mentioned previously, and should be considered in such applications.
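The HU definition, HU = 1000 × (μ − μ_water)/μ_water, can be sketched directly. The second function illustrates that CT DICOM files usually store integer pixel values that are mapped to HU through the linear Rescale Slope and Rescale Intercept attributes; both function names are hypothetical.

```python
def hounsfield(mu, mu_water):
    """Hounsfield units from linear attenuation coefficients (same units)."""
    return 1000.0 * (mu - mu_water) / mu_water

def stored_to_hu(stored_value, rescale_slope, rescale_intercept):
    """Linear modality rescale from a DICOM stored pixel value to HU."""
    return stored_value * rescale_slope + rescale_intercept

# Water maps to 0 HU and a vacuum (mu = 0) maps to -1000 HU by construction.
print(hounsfield(0.19, 0.19), hounsfield(0.0, 0.19))
print(stored_to_hu(1024, 1.0, -1024.0))
```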

Much of the improvement in accuracy and precision in CT applications was driven by radiotherapy, because accurate dose calculation requires accurate CT numbers. For photon beam radiotherapy, a 10% density uncertainty in a region translates to a 2% dose uncertainty for a 5 cm long region.13 In proton radiotherapy, however, the same density uncertainty shifts the expected range of the protons and can result in a geographical miss. Proton dose calculation methods require accurate stopping powers, which may even require dual‐energy CT to separate density and atomic number. Phantoms with inserts of known density, such as the ACR phantom,14 are used to calibrate HU to density. HU‐to‐density calibration curves are inherently kVp dependent but are not strongly dependent on phantom size.
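Phantom calibration is typically applied as a piecewise‐linear lookup from HU to relative density. The calibration points below are hypothetical placeholders, not measured values for any scanner, phantom, or kVp. The second function shows, under the simplifying assumption that relative stopping power equals relative density, how a 10% density error over 5 cm shifts the water‐equivalent depth by about 5 mm, which for protons becomes a range error.

```python
import numpy as np

# Hypothetical calibration points (HU, relative density) for illustration only;
# a real curve comes from scanning a density phantom at the clinical kVp.
CAL_HU = np.array([-1000.0, -100.0, 0.0, 1000.0])
CAL_DENSITY = np.array([0.0, 0.93, 1.0, 1.6])

def hu_to_density(hu):
    """Piecewise-linear HU-to-density lookup, clamped at the curve ends."""
    return np.interp(hu, CAL_HU, CAL_DENSITY)

def water_equivalent_path_mm(densities, voxel_mm):
    """Water-equivalent path length along a line of voxels, assuming
    relative stopping power equals relative density (a simplification)."""
    return float(np.sum(densities) * voxel_mm)

# A 5 cm path (50 voxels of 1 mm) with and without a 10% density error.
true_path = water_equivalent_path_mm(np.full(50, 1.0), voxel_mm=1.0)
biased_path = water_equivalent_path_mm(np.full(50, 1.1), voxel_mm=1.0)
print(f"water-equivalent shift: {biased_path - true_path:.1f} mm")
```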

PET images provide some of the most convincing depictions of the spread and aggressiveness of cancer. In the past, PET molecular imaging employed only 18F‐FDG (fluorodeoxyglucose) to assess glucose uptake. More recently, other agents that mark a variety of aspects of tumor function have been tagged with positron emitters, such as 18F‐FLT (fluorothymidine), which allows assessment of proliferation, and 18F‐FMISO (fluoromisonidazole), which allows assessment of hypoxia. PET images are not as quantitative as CT images. The Standardized Uptake Value (SUV) is typically the quantitative measurand of a PET scan. SUV is defined as15:

$$\mathrm{SUV} = \frac{A}{D / w}$$

where $A$ is the activity concentration in kBq/ml, $D$ is the decay‐corrected amount of injected radiolabel in kBq, and $w$ is the mass of the patient in g. If the average density of a patient is assumed to be 1 g/ml, SUV is dimensionless. The maximum SUV in a small region of interest, SUVmax, is often used in studies of cancer outcome. A quantitative PET image requires attenuation corrections obtained from an attenuation map derived from CT or, more recently, from an accompanying MR image.16 Filtering operations applied to PET reconstructions are similar to those used for CT; however, PET has far more inherent noise than CT, and the variability in PET images obtained from test/retest experiments suggests that SUV is only precise to about 20%.17 The use of image‐based dose boosting and dose painting18, 19 in radiotherapy is applying pressure for more accurate quantification of PET images.
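Body‐weight SUV, SUV = A/(D/w), can be sketched as follows with the injected activity decayed to the scan time. Decaying the injected activity forward is one common convention (decay‐correcting the image back to injection time is another), the 109.77 min half‐life assumes an 18F tracer, and the helper names are hypothetical.

```python
import math

F18_HALF_LIFE_MIN = 109.77  # physical half-life of fluorine-18 in minutes

def decay_corrected_dose(injected_kbq, minutes_elapsed, half_life_min=F18_HALF_LIFE_MIN):
    """Activity remaining at scan time, given the activity at injection."""
    return injected_kbq * math.exp(-math.log(2.0) * minutes_elapsed / half_life_min)

def suv(activity_conc_kbq_per_ml, dose_kbq, body_mass_g):
    """Body-weight SUV; dimensionless if tissue density is taken as 1 g/ml."""
    return activity_conc_kbq_per_ml / (dose_kbq / body_mass_g)

# Synthetic example: 370 MBq injected, scan at 60 min, 70 kg patient.
dose_at_scan = decay_corrected_dose(370_000.0, 60.0)
print(round(suv(8.0, dose_at_scan, 70_000.0), 2))
```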

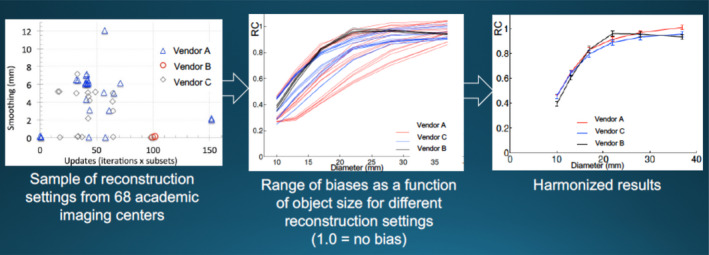

The inherent resolution of PET is relatively poor and its signal‐to‐noise ratio is relatively small. This is due to the finite range that positrons travel before they annihilate, the high inherent quantum noise, and the large detector size, which is chosen to achieve acceptable signal‐to‐noise at an affordable cost. A point source is reconstructed as an approximately Gaussian distribution several millimeters wide (full width at half maximum, FWHM). This means that small objects with a given activity concentration will be blurred and their SUV values underreported compared with large objects of the same concentration. Figure 4 shows that contrast‐resolution phantoms containing vials of different diameters and isotope concentrations can characterize the activity concentration bias due to this volume averaging; such measurements can, in principle, be used as input to deblur SUV values. The figure also shows that harmonization can account for some differences in image reconstruction parameters (e.g., smoothing, iterations, subsets) so that units from different vendors have similar SUV size variations.
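An idealized model reproduces this size‐dependent bias. Assuming an isotropic 3D Gaussian point‐spread function, a uniform sphere, and no noise, the blurred value at the sphere center equals the fraction of the Gaussian that falls inside the sphere, which has a closed form. The 6 mm FWHM and the sphere diameters are assumptions chosen for illustration, not the phantom or scanner data behind Figure 4.

```python
import math

def fwhm_to_sigma(fwhm_mm):
    """Convert a Gaussian full width at half maximum to its standard deviation."""
    return fwhm_mm / (2.0 * math.sqrt(2.0 * math.log(2.0)))

def sphere_center_recovery(diameter_mm, fwhm_mm):
    """Blurred-to-true activity ratio at the center of a uniform sphere.

    Assumes an isotropic 3D Gaussian PSF and no noise. The value is the
    probability mass of that Gaussian inside the sphere radius
    (the chi distribution CDF with 3 degrees of freedom).
    """
    r = (diameter_mm / 2.0) / fwhm_to_sigma(fwhm_mm)
    return math.erf(r / math.sqrt(2.0)) - math.sqrt(2.0 / math.pi) * r * math.exp(-r * r / 2.0)

diameters_mm = (10, 13, 17, 22, 28, 37)  # assumed sphere sizes
recoveries = [sphere_center_recovery(d, fwhm_mm=6.0) for d in diameters_mm]
for d, rc in zip(diameters_mm, recoveries):
    print(f"{d:3d} mm sphere: recovery {rc:.3f}")
```

Smaller spheres recover a smaller fraction of their true concentration, which is the trend a phantom measurement characterizes empirically.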

Figure 4.

Example of harmonization of measurements from equipment from multiple vendors and reconstruction parameters to provide similar measures of activity concentration for a range of object sizes. RC is the ratio of observed activity concentration to the actual activity concentration. Data from Paul Kinahan, University of Washington.

Understanding the stability, accuracy, and precision of quantitative values in MRI is far more complicated than in CT or PET. The gray levels of an MR image depend on a large range of intrinsic and extrinsic parameters, including the density of the nucleus under study (usually protons) in a voxel (intrinsic), the longitudinal and transverse spin relaxation times (T1 and T2, respectively; intrinsic), the magnetic field strength (extrinsic), acquisition parameters such as echo and repetition times, resolution, and bandwidth (extrinsic), and the radiofrequency coil used for signal detection (extrinsic).20 Image formation is much more complicated than for CT or PET, using both frequency‐ and phase‐encoding of the readout signals to produce a 2D image. Slice‐encoding techniques, in addition, allow the acquisition of 3D anatomic image data, commonly used for surgical and radiation therapy treatment planning and for MR angiography. Complexity is further increased by a wide range of image types, including, but not limited to, T1, T2, T2*, proton density, and diffusion‐weighted imaging. Dynamic contrast‐enhanced (DCE) and dynamic susceptibility contrast (DSC) MRI techniques allow the assessment of microvascular changes, and MR spectroscopy (MRS) techniques allow the assessment of biochemical and metabolic changes.
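A simplified spin‐echo signal model, S = PD·(1 − e^(−TR/T1))·e^(−TE/T2), shows how intrinsic tissue properties (PD, T1, T2) and extrinsic acquisition choices (TR, TE) jointly set the gray level. The model omits coil sensitivity, field strength, receiver scaling, and many other effects, and the relaxation times in the usage lines are illustrative, not reference values.

```python
import math

def spin_echo_signal(proton_density, t1_ms, t2_ms, tr_ms, te_ms):
    """Simplified spin-echo signal: PD * (1 - exp(-TR/T1)) * exp(-TE/T2).

    Ignores coil sensitivity, field strength, receiver gain, and other
    scanner-dependent scaling, so the result is not an absolute quantity.
    """
    return proton_density * (1.0 - math.exp(-tr_ms / t1_ms)) * math.exp(-te_ms / t2_ms)

# Two hypothetical tissues: "a" (shorter T1/T2) and "b" (fluid-like, long T1/T2).
t1w_a = spin_echo_signal(1.0, t1_ms=800, t2_ms=80, tr_ms=500, te_ms=15)
t1w_b = spin_echo_signal(1.0, t1_ms=3000, t2_ms=1500, tr_ms=500, te_ms=15)
t2w_a = spin_echo_signal(1.0, t1_ms=800, t2_ms=80, tr_ms=4000, te_ms=100)
t2w_b = spin_echo_signal(1.0, t1_ms=3000, t2_ms=1500, tr_ms=4000, te_ms=100)
print(f"T1-weighted: a={t1w_a:.3f}, b={t1w_b:.3f}")
print(f"T2-weighted: a={t2w_a:.3f}, b={t2w_b:.3f}")
```

The same two tissues swap their relative brightness when only TR and TE change, which is why MR gray levels are not directly comparable across protocols.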

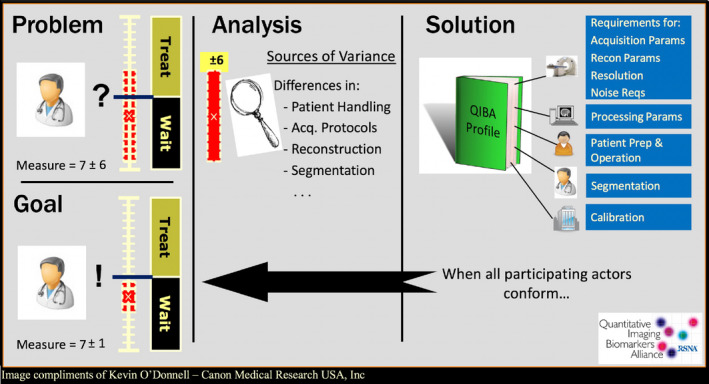

The complex image formation process and the large variety of image types mean that quality management is also more complex. Furthermore, relevant differences in magnetic field homogeneity and magnetic field gradient linearity between scanner models and vendors produce nonlinear effects on image values and cause image distortion. Characterization and calibration of MR are correspondingly complex. Phantom vendors are starting to implement recommendations of RSNA QIBA and other quantitative imaging initiatives. For example, a NIST/ISMRM MR System Phantom and a NIST/RSNA QIBA DWI Phantom are available commercially (https://www.qalibre-md.com/) and contain NIST‐traceable components. Figure 5 illustrates the goal of QIBA, whose mission is “to improve the value and practicality of quantitative imaging biomarkers by reducing variability across devices, sites, patients, and time”. PET/MRI units demonstrate that, when the stakes are high enough, MRI images can be made more quantitative in order to provide attenuation corrections for PET. Machine learning transformations of the MRI image data, such as the random forest technique, can produce attenuation coefficients accurate enough to correct the accompanying PET scans for attenuation.21 We recommend that device and software manufacturers, phantom vendors, and others work just as diligently with QIBA and other quantitative imaging initiatives, such as the NCI Quantitative Imaging Network, to achieve reproducible quantitative imaging biomarker goals in MRI applications.

Figure 5.

The goal of QIBA is to reduce the variability in quantitative imaging biomarker across devices, sites, patients, and time by applying imaging metrology principles to improve the accuracy and precision of medical image data. Image from Kevin O'Donnell, Canon Medical Research USA, Inc; reproduced with permission.
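Test‐retest variability, such as the roughly 20% SUV precision noted earlier, is often summarized by the within‐subject standard deviation (wSD), the within‐subject coefficient of variation (wCV), and the repeatability coefficient 1.96·√2·wSD ≈ 2.77·wSD. A minimal sketch of these estimators for paired scans follows; the example pairs are synthetic.

```python
import math

def within_subject_sd(pairs):
    """Within-subject SD from test-retest pairs: sqrt(mean(d^2) / 2)."""
    sq_diffs = [(a - b) ** 2 for a, b in pairs]
    return math.sqrt(sum(sq_diffs) / (2 * len(sq_diffs)))

def within_subject_cv(pairs):
    """Within-subject CV: root mean of per-subject variance / mean^2."""
    terms = [((a - b) ** 2 / 2.0) / ((a + b) / 2.0) ** 2 for a, b in pairs]
    return math.sqrt(sum(terms) / len(terms))

def repeatability_coefficient(pairs):
    """About 95% of test-retest differences should lie within +/- this value."""
    return 1.96 * math.sqrt(2.0) * within_subject_sd(pairs)

# Synthetic SUV pairs (scan, rescan) for two subjects.
pairs = [(10.0, 12.0), (20.0, 18.0)]
print(within_subject_sd(pairs), within_subject_cv(pairs), repeatability_coefficient(pairs))
```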

For all of these modalities (CT, PET, and MRI), additional quantitative radiomic features that indirectly measure morphology or function can also be extracted and used effectively in clinical decision‐making.2 Such features include tumor shape, margin sharpness, texture patterns, and kinetics.
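As one example of a texture feature, the sketch below builds a gray‐level co‐occurrence matrix (GLCM) for a one‐pixel horizontal offset and derives its contrast and energy. It assumes the region has already been quantized to a small number of gray levels; radiomics toolkits typically aggregate several offsets and directions and define many more features.

```python
import numpy as np

def glcm_horizontal(image, levels):
    """Symmetric, normalized GLCM for a one-pixel horizontal offset.

    `image` must already be quantized to integers 0..levels-1.
    """
    img = np.asarray(image, dtype=int)
    left = img[:, :-1].ravel()
    right = img[:, 1:].ravel()
    glcm = np.zeros((levels, levels), dtype=float)
    np.add.at(glcm, (left, right), 1.0)   # count each neighboring pair
    glcm = glcm + glcm.T                  # make the matrix symmetric
    return glcm / glcm.sum()

def glcm_contrast(p):
    """Sum of p(i, j) * (i - j)^2: large when neighbors differ strongly."""
    i, j = np.indices(p.shape)
    return float(np.sum(p * (i - j) ** 2))

def glcm_energy(p):
    """Sum of p(i, j)^2: large for uniform, ordered textures."""
    return float(np.sum(p ** 2))

uniform = np.zeros((4, 4), dtype=int)
checker = np.indices((4, 4)).sum(axis=0) % 2
for name, img in (("uniform", uniform), ("checkerboard", checker)):
    p = glcm_horizontal(img, levels=2)
    print(name, glcm_contrast(p), glcm_energy(p))
```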

1.B. Challenges and opportunities of introducing quantitative imaging into clinical workflow

Medicine has yet to transform itself into a “knowledge‐based” discipline. In data‐poor disciplines like archeology or paleontology, information is carefully curated. Medicine has plenty of data, but much of it is not curated well enough to be actionable. Data generation for radiomics (or quantitative imaging decision support) in cancer applications has, up to now, been the domain of “quant labs,” sometimes in radiology, but most often associated with the labs of cancer researchers in large cancer centers. These labs usually use data collected from clinical trials that enforce consistent imaging protocols. This is manageable for clinical trials with a few hundred to a thousand image datasets, but to have quantitative results for the more than a million US cancer patients with solid tumors diagnosed every year, let alone the nearly 15 million living with cancer (https://seer.cancer.gov/statfacts/html/all.html), the capture of quantitative results must become fully automatic or part of the routine workflow in state‐of‐the‐art management of cancer patients. The quality vs. quantity dichotomy must be replaced with quality and quantity.

There has been considerable progress. In 2001, the National Cancer Institute funded the creation, annotation, and study of a common thoracic computed tomography database, which led to the creation of LIDC: The Lung Imaging Database Consortium.22 This multi‐institutional initiative collected 1018 CT scans with careful annotations of nodule locations and radiologists' interpretations. The effort produced detailed descriptions of clinical “truth” and quality assurance methods, as well as LIDC spinoffs, including NCI's RIDER (Reference Image Database to Evaluate Response) Project, which assembled reference image databases for evaluating response and harmonized them to permit common methods of data collection and analysis across different commercial imaging platforms. The benefit of such a carefully constructed dataset is evidenced by its public availability on The Cancer Imaging Archive sponsored by the NCI (www.cancerimagingarchive.net) and by its citation in more than 70 peer‐reviewed publications.23, 24 This effort can potentially serve as a model for future datasets to be used as training sets for machine learning or with conventional radiomics methodologies. A task of this size can only be tackled through a culture of data sharing and cooperation among knowledgeable academics, healthcare IT, and companies operating in radiology and oncology.

One approach to tackling the scale of the problem is to centralize the data in the cloud or to use distributed networks to process all of the big data automatically. Machine vision is one aspect of machine learning that may someday reliably determine the location of tumors. Machine vision has long been applied in the well‐defined setting of mammography through computer‐aided detection (CADe). However, false‐positive rates are too high for these systems to operate fully automatically. The radiologist has to indicate which marked lesions are not cancerous, which can be time consuming. More importantly, false positives that remain after the radiologist's inspection may result in unnecessary biopsies, which are stressful and uncomfortable for patients and costly for society.

Computer‐aided detection using 3D image sets shows promise. Convolutional neural networks (CNNs) are widely used for image classification in other industries, such as social media, security, and law enforcement, to distinguish people from objects or even to identify an individual in a group.25 They “learn” from a “training set” of images and can be trained to select a class or an individual. When fully trained, machine learning has been shown to perform as well as or better than other state‐of‐the‐art approaches for image classification of brain lesions.26 CNN systems have even been shown to perform well in tumor characterization when trained not on medical images but on everyday objects, such as cats, dogs, and cars.27 They are expected to do much better if trained with a large number of curated cases. Deep learning CNNs for identifying human subjects have been trained with hundreds of thousands of images,25 and medical image classification will improve when comparably sized curated datasets are available. To make progress today and to obtain the number of curated datasets needed for training machine learning, quantitative imaging has to move into the everyday clinical workflow of radiology and/or oncology.

Current PACS are a testament to the digitization of imaging. Gone are the light box view panels with a grid of cellulose acetate radiographs. PACS have provided optimized image quality, lower staff costs, and faster reading throughput. They work well for producing qualitative reports from a large number of high‐quality images. While current PACS do not generate much quantitative information, they are designed to be extensible, allowing separate applications that use the same DICOM archive to be launched from them, so that radiologist‐assisted machine vision may be incorporated into the workflow.

For decades, most analyses of cancerous tumors have depended on one‐dimensional (1D) features, that is, the lesion's long and short axes, as the sole quantitative reporting features. The critical step for unlocking a flood of quantitative features is lesion segmentation. Given a tumor boundary, simple quantitative metrics describing the tumor, such as volume and average density, and other metrics, such as gray‐level heterogeneity, boundary spiculation, texture, and the degree of ground glass opacity, can be determined. Various measurements that may be important for describing the phenotype of the disease have been defined in the literature. As more semiautomated and fully automated segmentation systems obtain FDA clearance, radiomic analyses become possible within the current radiology workflow. Segmentation is mandatory for 3D radiotherapy techniques, and the gross tumor volume (GTV) can take many minutes to contour manually. Such features are currently included in various CADe and CADx systems that perform almost real‐time tumor segmentation, and thus can be applied to clinical tasks in radiation therapy. While reliable fully automated segmentation is a promising research area and a long‐term goal, semiautomated segmentation is currently required for feature extraction.
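Once a segmentation mask exists, the simplest features follow directly. The sketch assumes a CT volume in HU, a binary mask of the same shape from any manual or semiautomated segmentation, and known voxel spacing; the function name is hypothetical.

```python
import numpy as np

def basic_lesion_features(image_hu, mask, voxel_spacing_mm):
    """Volume (ml), mean HU, and HU standard deviation inside a binary mask."""
    mask = np.asarray(mask, dtype=bool)
    values = np.asarray(image_hu, dtype=float)[mask]
    voxel_ml = float(np.prod(voxel_spacing_mm)) / 1000.0  # 1 ml = 1000 mm^3
    return {
        "volume_ml": values.size * voxel_ml,
        "mean_hu": float(values.mean()),
        "sd_hu": float(values.std()),  # a crude gray-level heterogeneity measure
    }

# Synthetic volume: lung-like background with a 4 x 4 x 4 soft-tissue cube.
image = np.full((10, 10, 10), -800.0)
image[2:6, 2:6, 2:6] = 40.0
lesion_mask = image > 0
features = basic_lesion_features(image, lesion_mask, voxel_spacing_mm=(1.0, 1.0, 2.0))
print(features)
```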

As shown in Fig. 3, cancer management should be inherently longitudinal in nature. Today's evaluation of a tumor should be compared to the same lesion from a prior image taken on the same modality. Current PACS systems are well tuned to fast reading of the current day's images but become slow and cumbersome if prior images have to be found, registered, and normalized for comparison. If long and short axes are the only quantitative measure, they often have to be remeasured for the sake of consistency, because, while they may be recorded in a note, it is not obvious on an image where these measurements were made. Having consistent tracking of lesions and not having to remeasure lesions will save significant time for radiologists. Adopting a longitudinal view of cancer progression into the workflow will also make quantitative information more useful to the radiologist and oncologist because comparisons can be displayed as tables and graphs and compared to cohorts of patients who have the same diagnosis and stage.

1.C. Analysis of quantitative images to find biomarkers of disease

A biomarker or set of biomarkers describing a signature of a disease phenotype with sufficient specificity and accuracy can be used to define or describe the disease, prognosticate its course, or prescribe treatment. An imaging feature is a mathematical description of a region of interest that may be indicative of the disease. Hundreds of imaging features have been mathematically defined for medical imaging or repurposed from quantitative image analysis in remote sensing or other data sciences. The features might correspond to tumor size, shape, morphology, or texture. The term “delta‐radiomics” has been used to describe the relative change in a feature over time, which provides a longitudinal view useful for judging the change in the disease. The first task of radiomics research is to assemble enough curated cases to find statistically meaningful correlations of a set of features with data from other “omics”, such as genomics, proteomics, and metabolomics, and with patient records that include confirmed diagnoses and treatment outcomes in an actionable data form (text records in reports are typically not actionable data).28, 29 If deep learning is to be used for analyzing the imaging features, data curation should be done only by experts, typically experienced physicians, to minimize false classification in the training sets. For example, CNNs have been shown to misclassify images when intentional false perturbations are introduced into training set images, whereas human experts can easily recognize the perturbations.30
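Delta‐radiomics, taken here as the relative change of each feature between two time points, can be sketched as below. The feature names are hypothetical, and features with a zero baseline are skipped because their relative change is undefined.

```python
def delta_radiomics(baseline, follow_up):
    """Relative change (follow_up - baseline) / baseline for each shared feature.

    Features missing from either time point, or with a zero baseline, are skipped.
    """
    return {
        name: (follow_up[name] - baseline[name]) / baseline[name]
        for name in baseline
        if name in follow_up and baseline[name] != 0
    }

# Hypothetical feature values at baseline and at a follow-up scan.
baseline = {"volume_ml": 10.0, "mean_hu": 40.0}
follow_up = {"volume_ml": 7.5, "mean_hu": 30.0}
print(delta_radiomics(baseline, follow_up))
```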

It is likely that single biomarkers will not be reliable enough to be actionable and that a set of biomarkers defining a phenotypic signature will be needed. If one relevant biomarker requires a large dataset to be reliable, then a collection of biomarkers defining a signature of disease will require much more curated data.

Tumors are not homogeneous over space and time. One goal of radiomics is to use noninvasive imaging to assess phenotypic heterogeneity within the tumor, and perhaps also in the surrounding microenvironments, and to follow it over time to investigate tumor evolution. For example, a tumor might not simply be called a “HER2+ tumor”; instead, it might be described by the percentage of its cells that are HER2+. As tumors evolve, they tend to express greater heterogeneity.31 Microenvironments in the tumor as well as in the surrounding tissue may also be investigated by combining information from multimodality images that express the “habitat” of the tumor. For example, heterogeneity in the microenvironment of the tumor may correspond to differences in cell response to treatment.32 Just as biopsies should reflect tumor heterogeneity, imaging biomarkers can be viewed as “virtual biopsies” that have the advantage of being noninvasive.29, 33
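Analogous to reporting the percentage of HER2+ cells, an imaging region can be summarized by the fraction of voxels meeting a criterion and by the spread of its intensity histogram. The sketch computes both; the threshold and bin count are arbitrary assumptions, and histogram entropy is only one of many possible heterogeneity measures.

```python
import numpy as np

def histogram_entropy(values, bins=32, value_range=None):
    """Shannon entropy (bits) of the intensity histogram within a region."""
    counts, _ = np.histogram(values, bins=bins, range=value_range)
    p = counts[counts > 0] / counts.sum()
    return float(-np.sum(p * np.log2(p)))

def fraction_above(values, threshold):
    """Fraction of region voxels above a threshold (a candidate sub-region)."""
    values = np.asarray(values, dtype=float)
    return float(np.mean(values > threshold))

homogeneous = [5.0] * 100
two_habitats = [0.0] * 50 + [1.0] * 50
print(histogram_entropy(homogeneous), histogram_entropy(two_habitats, bins=2))
print(fraction_above([1.0, 2.0, 3.0, 4.0], threshold=2.5))
```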

To date, only relatively small clinical trials have been used to pilot radiomics. Table 1 summarizes five clinical lung cancer trials.7 Once sufficiently large, reliable datasets exist, they can be input into machine learning systems, but even simple traditional analyses, of the kind typically used in prospective clinical trials, will greatly advance clinical medicine. For example, image phenotypes show promise for high‐throughput discrimination of breast cancer subtypes.29 As another example, finding a cohort of patients whose biomarkers are similar to those of an individual patient will be useful in gauging that patient's prognosis. With enough cases, it may be possible to assemble a matching cohort large enough that Kaplan–Meier analysis of competing viable therapies can help identify the best treatment approach.
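The cohort‐matching idea can be sketched in two steps: find the k patients whose z‐scored imaging features are closest (in Euclidean distance) to those of the index patient, then estimate survival separately for each therapy within that matched cohort using the Kaplan–Meier product‐limit estimator. The distance metric, the choice of k, the toy data, and the function names are assumptions for illustration, not a method prescribed by the workshop.

```python
import math


def zscore_stats(rows):
    """Per-feature (mean, population SD) over the cohort; SD falls back to 1 if constant."""
    stats = []
    for col in zip(*rows):
        m = sum(col) / len(col)
        sd = math.sqrt(sum((x - m) ** 2 for x in col) / len(col)) or 1.0
        stats.append((m, sd))
    return stats


def nearest_patients(query, cohort_features, k):
    """Indices of the k cohort patients closest to the query in z-scored feature space."""
    stats = zscore_stats(cohort_features)

    def z(row):
        return [(x - m) / sd for x, (m, sd) in zip(row, stats)]

    q = z(query)
    dists = sorted((math.dist(q, z(row)), i) for i, row in enumerate(cohort_features))
    return [i for _, i in dists[:k]]


def kaplan_meier(times, events):
    """Kaplan-Meier survival estimate as a list of (time, S(t)) at each event time.

    events[i] is True for an observed event (e.g., death), False if censored.
    """
    survival, curve = 1.0, []
    for t in sorted({t for t, e in zip(times, events) if e}):
        at_risk = sum(1 for ti in times if ti >= t)
        deaths = sum(1 for ti, e in zip(times, events) if ti == t and e)
        survival *= 1 - deaths / at_risk
        curve.append((t, survival))
    return curve


# Toy data (hypothetical): two features per patient, therapy, follow-up (months), event flag.
features = [[0, 0], [1, 1], [10, 10], [0.5, 0.4], [0.2, 0.6], [0.9, 0.1]]
therapy = ["A", "B", "A", "A", "B", "B"]
months = [12, 30, 5, 24, 18, 40]
died = [True, False, True, True, True, False]

matched = nearest_patients([0.4, 0.4], features, k=5)
for arm in ("A", "B"):
    idx = [i for i in matched if therapy[i] == arm]
    print(arm, kaplan_meier([months[i] for i in idx], [died[i] for i in idx]))
```

In practice, small matched arms give wide confidence intervals, and comparing arms would additionally require a formal test (such as the log‐rank test) and attention to confounding, since therapies in observational data are not randomly assigned.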

Table 1.

Externally verified radiomics clinical trials for lung cancer. N refers to the number of patients. Summarized from Ref. 7

| Author | Description | Year | N | Endpoint | Reported result |
|---|---|---|---|---|---|
| Carvalho et al. | Early variation in FDG‐PET radiomics features in NSCLC is related to survival | 2016 | 54 | Overall survival | Concordance index = 0.61 |
| Coroller et al. | Prediction of distant metastases in lung carcinoma using CT‐based radiomic signature | 2015 | 98 | Distant metastases | P ≪ 0.05 |
| Parmar et al. | Radiomics feature clusters and prognostic signatures | 2015 | 878 | Histology stage | Lung stage AUC = 0.61 |
| Aerts et al. | Using a radiomics approach to decode tumor phenotype | 2014 | 1,019 | Overall survival | Concordance index = 0.65 to 0.69 |
| Balagurunathan et al. | Reproducibility and prognosis of quantitative features | 2014 | 32 | Overall survival | P < 0.046 |
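Several entries in Table 1 report a concordance index. As a reminder of what these values mean, the sketch below implements a simplified Harrell's C‐index: among pairs of patients whose survival times can be ordered (the shorter time corresponds to an observed event), it is the fraction in which the patient with the higher predicted risk had the shorter survival. A value of 0.5 corresponds to random ordering and 1.0 to perfect ordering, so the values of 0.61 to 0.69 in Table 1 indicate discrimination that is better than chance but modest. The function name is hypothetical, tied survival times are simply skipped here, and established survival‐analysis libraries handle ties and sign conventions in their own ways.

```python
from itertools import combinations


def concordance_index(times, events, risk_scores):
    """Simplified Harrell's C-index.

    A pair is comparable if the patient with the shorter time had an observed event.
    Higher risk_scores are assumed to mean shorter expected survival.
    Ties in risk count as half-concordant; ties in time are skipped.
    """
    concordant, comparable = 0.0, 0
    for i, j in combinations(range(len(times)), 2):
        if times[i] == times[j]:
            continue  # tied survival times are not handled in this sketch
        short, long_ = (i, j) if times[i] < times[j] else (j, i)
        if not events[short]:
            continue  # shorter time is censored, so the true ordering is unknown
        comparable += 1
        if risk_scores[short] > risk_scores[long_]:
            concordant += 1.0
        elif risk_scores[short] == risk_scores[long_]:
            concordant += 0.5
    if comparable == 0:
        raise ValueError("no comparable pairs")
    return concordant / comparable


# Toy example: one of six comparable pairs is ranked in the wrong order.
print(concordance_index([1, 2, 3, 4], [True] * 4, [4, 3, 1, 2]))
```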

As in all metrology applications, uncertainty quantification is imperative so that false interpretations of the data do not misguide patient care. Candidate imaging biomarkers will need more than just peer review for validation. Academia and industry need to work together to define protocols and standards specific to quantitative imaging for verifying the validity of biomarkers and signatures, which can then be cleared by the FDA for safe and effective clinical use. This will enable radiomics‐derived biomarkers to be treated, as far as possible, on an equal footing with those obtained from genomics and electronic medical records.
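Two basic metrology quantities illustrate what uncertainty quantification involves in practice. From paired test‐retest scans of the same subjects, the within‐subject standard deviation (wSD) estimates measurement variance, and the repeatability coefficient RC = 1.96 × √2 × wSD ≈ 2.77 wSD is the limit that the absolute difference between two repeat measurements is expected to stay below in 95% of cases. Repeated measurements of a phantom with a known value estimate bias. The sketch assumes two replicates per subject and normally distributed measurement errors, and the function names and toy values are hypothetical.

```python
import math


def within_subject_sd(test, retest):
    """Within-subject SD from paired test-retest values: sqrt(sum(d^2) / (2n))."""
    diffs = [a - b for a, b in zip(test, retest)]
    return math.sqrt(sum(d * d for d in diffs) / (2 * len(diffs)))


def repeatability_coefficient(test, retest):
    """RC = 1.96 * sqrt(2) * wSD (about 2.77 wSD), assuming normally distributed errors."""
    return 1.96 * math.sqrt(2) * within_subject_sd(test, retest)


def bias_vs_reference(measured, true_value):
    """Mean bias and percent bias of repeated phantom measurements against a known value."""
    mean_measured = sum(measured) / len(measured)
    bias = mean_measured - true_value
    return bias, 100 * bias / true_value


# Toy test-retest feature values for two subjects, and three phantom scans (hypothetical).
print(repeatability_coefficient([10, 20], [12, 18]))
print(bias_vs_reference([101, 99, 103], true_value=100))
```

A change in a feature between two scans that is smaller than the RC cannot be distinguished from measurement noise, which is why delta‐radiomics depends on this kind of characterization.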

1.D. Recommendations

Images contain quantitative data that represent noninvasive measurement of the cancer phenotype.

Oncology professionals must communicate their need for quantitative imaging in managing their patients, thereby motivating radiomics research.

Radiology must embrace oncology's need for quantitative imaging features beyond one‐dimensional (1D) linear measurements of lesion size.

Segmentation of lesions is a requirement for computerized hand‐crafted feature extraction, although deep learning may yield additional features.

Lessons learned from the development and translation of CADe and CADx systems, which have inherently included radiomics, should be applied to the development of radiomics for radiation oncology assessment.

The extraction of quantitative information should either be integrated into radiology and oncology workflows without impeding productivity or be performed by fully automated machine learning systems that can extract the needed information.

Uncertainty quantification, with systematic reduction of the uncertainties, is essential for progress in quantitative imaging. Imaging metrology principles are required to assess measurement bias and variance, to identify means of mitigating such errors, and subsequently to define well‐characterized confidence intervals for such measurements.

The goals of RSNA QIBA and other quantitative imaging biomarker initiatives for improving quantitative imaging reproducibility and accuracy should become the goals of vendors and the demands of purchasers of medical imaging equipment.

Data curation is essential to ensure the accuracy and reliability of data for clinical use, for research integrity, and for use as training sets for machine learning and discovery.

Longitudinal tracking of registered lesions and the tumor environment is both efficient and efficacious for interpreting the progress of treatment.

Analysis of imaging big data linked to other data sources, using machine learning as well as techniques from conventional clinical trial analysis, is expected to create a data‐driven taxonomy of cancer, optimize treatment decisions, and improve cancer prognosis.

Imaging professionals will remain essential to the production and highest quality interpretation of data.

Imaging biomarker signatures of disease should be treated by regulatory authorities in the same way as other reliable biomarkers obtained from genomics and medical records data.

Conflict of interest

Thomas R Mackie is a cofounder and stockholder in HealthMyne. He is a stockholder in Asto CT, IVMD, Leo Cancer Care, Oncora, OnLume, Redox, and ImageMoverMD. Maryellen Giger is a cofounder of and equity holder in Quantitative Insights, a stockholder in R2 Technology/Hologic, and receives royalties from Hologic, GE Medical Systems, MEDIAN Technologies, Riverain Medical, Mitsubishi, and Toshiba.

Acknowledgments

The authors acknowledge the contributions of Sandy Napel, John Hazle, and Paul Kinahan in the organization of the 2014 AAPM FOREM on Imaging Genomics. The authors also thank the American Association of Physicists in Medicine (AAPM). Support of NIBIB contracts HHSN268201000050C, HHSN268201300071C, and HHSN268201500021C is gratefully acknowledged.

References

- 1. Giger ML, Napel S, Hazle J, Kinahan P. AAPM FOREM on imaging genomics. AAPM Newsletter. January 2015:44–46.

- 2. Giger ML, Chan H‐P, Boone J. Anniversary paper: history and status of CAD and quantitative image analysis: the role of Medical Physics and AAPM. Med Phys. 2008;35:5799–5820.

- 3. Giger ML, Karssemeijer N, Schnabel J. Breast image analysis for risk assessment, detection, diagnosis, and treatment of cancer. Annu Rev Biomed Eng. 2013;15:327–357.

- 4. Gillies RJ, Anderson AR, Gatenby RA, Morse DL. The biology underlying molecular imaging in oncology: from genome to anatome and back again. Clin Radiol. 2010;65:517–521.

- 5. Lambin P, Rios‐Velazquez E, Leijenaar R, et al. Radiomics: extracting more information from medical images using advanced feature analysis. Eur J Cancer. 2012;48:441–446.

- 6. Kumar V. Radiomics: the process and the challenges. Magn Reson Imaging. 2012;30:1234–1248.

- 7. Scrivener M, de Jong EEC, van Timmeren JE, Pieters T, Ghaye B, Geets X. Radiomics applied to lung cancer: a review. Transl Cancer Res. 2016;5:398–409.

- 8. Huynh B, Li H, Giger ML. Digital mammographic tumor classification using transfer learning from deep convolutional neural networks. J Med Imaging. 2016;3:034501.

- 9. Langer SG. Challenges for data storage in medical imaging research. J Digit Imaging. 2011;24:203–207.

- 10. McShane LM, Pennello G, PhD GC, et al. Quantitative imaging biomarkers: a review of statistical methods for technical performance assessment. Stat Methods Med Res. 2015;24:27–67.

- 11. Grégoire V, Mackie TR, De Neve W. ICRU Report 83: prescribing, recording, and reporting photon‐beam intensity‐modulated radiation therapy (IMRT). J ICRU. 2010;10:1–93.

- 12. Wolbarst AB. Physics of Radiology. New York, NY: Appleton and Lange; 1993.

- 13. Siebers J. Treatment planning dose computation. In: Palta JR, Mackie TR, eds. Uncertainties in External Beam Radiation Therapy. Madison, WI: Medical Physics Publishing; 2011.

- 14. McCollough CH, Bruesewitz MR, McNitt‐Gray MF, et al. The phantom portion of the American College of Radiology (ACR) computed tomography (CT) accreditation program: practical tips, artifact examples, and pitfalls to avoid. Med Phys. 2004;31:2423–2442.

- 15. Kinahan PE, Fletcher JW. PET/CT standardized uptake values (SUVs) in clinical practice and assessing response to therapy. Semin Ultrasound CT MR. 2010;31:496–505.

- 16. Vandenberghe S, Marsden PK. PET‐MRI: a review of challenges and solutions in the development of integrated multimodality imaging. Phys Med Biol. 2015;60:R115–R154.

- 17. Lu WJ. HH. Computerized PET/CT image analysis in the evaluation of tumour response to therapy. Br J Radiol. 2015;88:20140625.

- 18. Ling CC, Humm J, Larson S, et al. Towards multidimensional radiotherapy (MD‐CRT): biological imaging and biological conformality. Int J Radiat Oncol Biol Phys. 2000;47:551–560.

- 19. Bentzen SM. Theragnostic imaging for radiation oncology: dose‐painting by numbers. Lancet Oncol. 2005;6:112–117.

- 20. Abramson RG, Arlinghaus LR, Weis JA, et al. Current and emerging quantitative magnetic resonance imaging methods for assessing and predicting the response of breast cancer to neoadjuvant therapy. Breast Cancer: Targets and Therapy. 2012;4:139–154.

- 21. Huynh T, Gao Y, Kang J, et al. Estimating CT image from MRI data using structured random forest and auto‐context model. IEEE Trans Med Imaging. 2016;35:174–183.

- 22. Armato SG III, McLennan G, McNitt‐Gray MF, et al. Lung image database consortium: developing a resource for the medical imaging research community. Radiology. 2004;232:739–748.

- 23. Clark K, Vendt B, Smith K, et al. The cancer imaging archive (TCIA): maintaining and operating a public information repository. J Digit Imaging. 2013;26:1045–1057.

- 24. Gutman DA, Cooper LAD, Hwang SN, et al. MR imaging predictors of molecular profile and survival: multi‐institutional study of the TCGA glioblastoma data set. Radiology. 2013;267:560–569.

- 25. Rawat W, Wang Z. Deep convolutional neural networks for image classification: a comprehensive review. Neural Comput. 2017;29:2352–2449.

- 26. Kamnitsas K, Ledig C, Newcombe VFJ, et al. Efficient multi‐scale 3D CNN with fully connected CRF for accurate brain lesion segmentation. Med Image Anal. 2017;36:61–78.

- 27. Antropova N, Huynh BQ, Giger ML. A deep feature fusion methodology for breast cancer diagnosis demonstrated on three imaging modality datasets. Med Phys. 2017;44:5162–5171.

- 28. Zhu Y, Li H, Guo W, et al. Deciphering genomic underpinnings of quantitative MRI‐based radiomic phenotypes of invasive breast carcinoma. Sci Rep. 2015;5:17787.

- 29. Li H, Zhu Y, Burnside ES, et al. Quantitative MRI radiomics in the prediction of molecular classifications of breast cancer subtypes in the TCGA/TCIA data set. NPJ Breast Cancer. 2016;2:16012.

- 30. Goodfellow IJ, Shlens J, Szegedy C. Explaining and harnessing adversarial examples. In: Proceedings of the 3rd International Conference on Learning Representations. Computational and Biological Learning Society; 2015:1–11.

- 31. Gerlinger M, Rowan AJ, Horswell S, et al. Intratumor heterogeneity and branched evolution revealed by multiregion sequencing. N Engl J Med. 2012;366:883–892.

- 32. Sottoriva A, Spiteri I, Piccirillo SGM, et al. Intratumor heterogeneity in human glioblastoma reflects cancer evolutionary dynamics. Proc Natl Acad Sci U S A. 2013;110:4009–4014.

- 33. Giger ML. Machine learning in medical imaging. J Am Coll Radiol. 2018;15:512–520.