Abstract

The goals of this review paper on deep learning (DL) in medical imaging and radiation therapy are to (a) summarize what has been achieved to date; (b) identify common and unique challenges, and the strategies that researchers have taken to address these challenges; and (c) identify some of the promising avenues for the future in terms of both applications and technical innovations. We introduce the general principles of DL and convolutional neural networks, survey five major areas of application of DL in medical imaging and radiation therapy, identify common themes, discuss methods for dataset expansion, and conclude by summarizing lessons learned, remaining challenges, and future directions.

Keywords: computer‐aided detection/characterization; deep learning, machine learning; reconstruction; segmentation; treatment

1. Introduction

In the last few years, artificial intelligence (AI) has been rapidly expanding and permeating both industry and academia. Many applications such as object classification, natural language processing, and speech recognition, which until recently seemed to be many years away from achieving human levels of performance, have suddenly become viable.1, 2, 3 Every week, there is a news story about an AI system that has surpassed humans at tasks ranging from playing board games4 to flying autonomous drones.5 One report projected that revenues from AI would increase by around 55% annually over the 2016–2020 period, from roughly $8 billion to $47 billion.6 Together with breakthroughs in other areas such as biotechnology and nanotechnology, the advances in AI are leading to what the World Economic Forum refers to as the fourth industrial revolution.7 Economists and other experts are already seriously discussing the disruptive changes associated with AI and automation, which have the potential both to improve our everyday lives, for example, by reducing healthcare costs, and to harm society, for example, by causing large‐scale unemployment and rising income inequality8, 9 (according to one estimate, half of all working activities can be automated by existing technologies10). The advances in AI discussed above have been driven almost entirely by the groundbreaking performance of systems based on deep learning (DL). We now use DL‐based systems on a daily basis when we use search engines to find images on the web or talk to digital assistants on smart phones and home entertainment systems. Given its widespread success in various computer vision applications (among other areas), DL is now poised to dominate medical image analysis and has already transformed the field, both in the performance levels achieved across various tasks and in the range of its application areas.

1.A. Deep learning, history, and techniques

Deep learning is a subfield of machine learning, which in turn is a field within AI. In general, DL consists of massive multilayer networks of artificial neurons that can automatically discover useful features, that is, representations of input data (in our case images) needed for tasks such as detection and classification, given large amounts of unlabeled or labeled data.11, 12

Traditional applications of machine learning using techniques such as support vector machines (SVMs) or random forests (RF) took as input handcrafted features, which are often developed with a reliance on domain expertise, for each separate application such as object classification or speech recognition. In imaging, handcrafted features are extracted from the image data and reduce its dimensionality by summarizing the input into what is deemed to be the most relevant information for distinguishing one class of input data from another. If the image pixels are used directly as the input, the image data can be flattened into a high‐dimensional vector; for example, in mammographic mass classification, a 500 × 500 pixel region of interest results in a vector with 250,000 elements. Given all the possible variations of a mass's appearance due to differences in breast type, dose, type and size of the mass, etc., finding a hyperplane that separates the high‐dimensional vectors of malignant and benign masses would require a very large number of examples if the original pixel values were used. However, each image can be summarized into a vector of a few dozen or a few hundred elements (as opposed to 250,000 elements in the original format) by extracting specialized features that, for instance, describe the shape of the mass. This lower dimensional representation is more easily separable using fewer examples, provided the features are relevant. A key problem with this general approach is that useful features are difficult to design, often taking the collective efforts of many researchers over years or even decades to optimize. The other issue is that the features are domain or problem specific.
One would not generally expect that features developed for image recognition should be relevant for speech recognition, but even within image recognition, different types of problems such as lesion classification and texture identification require separate sets of features. The impact of these limitations has been well demonstrated in experiments that show the performance of top machine learning algorithms to be very similar when they are used to perform the same task using the same set of input features.13 In other words, traditional machine learning algorithms were heavily dependent on having access to good feature representations; otherwise, it was very difficult to improve the state‐of‐the‐art results on a given dataset.
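To make the dimensionality argument concrete, the sketch below flattens a synthetic 500 × 500 region of interest into a raw pixel vector and, alternatively, summarizes the binary mask of the "mass" with three simple handcrafted shape descriptors (area, perimeter, and compactness). The disk‐shaped synthetic mass and the particular descriptors are illustrative assumptions, not the features used in any cited study.

```python
import numpy as np

# Synthetic 500 x 500 region of interest with a disk-shaped "mass"
# (illustrative assumption, not real mammographic data).
size, radius = 500, 60
yy, xx = np.mgrid[0:size, 0:size]
mask = (yy - size // 2) ** 2 + (xx - size // 2) ** 2 <= radius ** 2
roi = np.where(mask, 0.8, 0.2) + 0.05 * np.random.default_rng(0).standard_normal((size, size))

# Raw-pixel representation: one vector element per pixel.
raw_vector = roi.ravel()

def shape_features(binary_mask):
    """Return a tiny handcrafted feature vector: area, perimeter, compactness."""
    area = binary_mask.sum()
    # A pixel is interior if all four of its 4-neighbors are inside the mask.
    padded = np.pad(binary_mask, 1)
    interior = (padded[:-2, 1:-1] & padded[2:, 1:-1] &
                padded[1:-1, :-2] & padded[1:-1, 2:])
    # Boundary pixels: inside the mask but not interior.
    perimeter = (binary_mask & ~interior).sum()
    # Equals 1 for an ideal continuous disk; only approximate with pixel counts.
    compactness = perimeter ** 2 / (4 * np.pi * area)
    return np.array([area, perimeter, compactness], dtype=float)

features = shape_features(mask)
print(raw_vector.size, features.size)  # 250000 3
```

A classifier such as an SVM would then operate on the 3‐element `features` vector instead of the 250,000‐element `raw_vector`.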

The key difference between DL and traditional machine learning techniques is that the former can automatically learn useful representations of the data, thereby eliminating the need for handcrafted features. What is more interesting is that the representations learned from one dataset can be useful even when they are applied to a different set of data. This property, referred to as transfer learning14, 15, is not unique to DL, but the large training data requirements of DL make it particularly useful in cases where relevant data for a particular task are scarce. For instance, in medical imaging, a DL system can be trained on a large number of natural images or those in a different modality to learn proper feature representations that allow it to “see.” The pretrained system can subsequently use these representations to produce an encoding of a medical image that is used for classification.16, 17, 18 Systems using transfer learning often outperform the state‐of‐the‐art methods based on traditional handcrafted features that were developed over many years with a great deal of expertise.

The success of DL compared to traditional machine learning methods is primarily based on two interrelated factors: depth and compositionality.11, 12, 19 A function is said to have a compact expression if it has few computational elements used to represent it (“few” here is a relative term that depends on the complexity of the function). An architecture with sufficient depth can produce a compact representation, whereas an insufficiently deep one may require an exponentially larger architecture (in terms of the number of computational elements that need to be learned) to represent the same function. A compact representation requires fewer training examples to tune the parameters and produces better generalization to unseen examples. This is critically important in complex tasks such as computer vision where each object class can exhibit many variations in appearance which would potentially require several examples per type of variation in the training set if a compact representation is not used. The second advantage of deep architectures has to do with how successive layers of the network can utilize the representations from previous layers to compose more complex representations that better capture critical characteristics of the input data and suppress the irrelevant variations (for instance, simple translations of an object in the image should result in the same classification). In image recognition, deep networks have been shown to capture simple information such as the presence or absence of edges at different locations and orientations in the first layer. Successive layers of the network assemble the edges into compound edges and corners of shapes, and then into more and more complex shapes that resemble object parts. 
Hierarchical representation learning is very useful in complicated tasks such as computer vision, where adjacent pixels and object parts are correlated with each other and their relative locations provide clues about each class of object, or speech recognition and natural language processing, where the sequence of words follows contextual and grammatical rules that can be learned from the data. This distributed hierarchical representation has similarities with the function of the visual and auditory cortices in the human brain, where basic features are integrated into more complex representations that are used for perception.20, 21
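A classic illustration of the compactness argument is the parity function of n binary inputs. A deep, balanced tree of two‐input XOR units computes it with n − 1 units arranged in about log2(n) layers, whereas a two‐layer sum‐of‐products (OR of ANDs) representation needs one AND term for every odd‐parity input pattern, that is, 2^(n−1) terms. The sketch below, a toy example using Boolean units rather than neurons, counts both and checks that the deep tree is correct for n = 10.

```python
import itertools

def parity_deep(bits):
    """Compute parity with a balanced tree of two-input XOR units.

    Returns (parity, number_of_xor_units, depth)."""
    layer, units, depth = list(bits), 0, 0
    while len(layer) > 1:
        nxt = []
        for i in range(0, len(layer) - 1, 2):
            nxt.append(layer[i] ^ layer[i + 1])
            units += 1
        if len(layer) % 2:               # an odd leftover input passes through unchanged
            nxt.append(layer[-1])
        layer, depth = nxt, depth + 1
    return layer[0], units, depth

def shallow_term_count(n):
    """Number of AND terms in the minimal two-layer (DNF) form of n-bit parity."""
    return sum(1 for p in itertools.product([0, 1], repeat=n) if sum(p) % 2)

n = 10
for bits in itertools.product([0, 1], repeat=n):
    assert parity_deep(bits)[0] == sum(bits) % 2   # the deep tree is exact
_, units, depth = parity_deep([0] * n)
print(units, depth, shallow_term_count(n))  # 9 4 512
```

Doubling n only adds roughly n units and one layer to the deep tree, but squares the number of terms required by the shallow form.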

As discussed earlier, DL is not a completely new concept, but rather mostly an extension of previously existing forms of artificial neural networks (ANNs) to a larger number of hidden layers and of nodes in each layer. From the late 1990s until the early 2000s, ANNs lost popularity in favor of SVMs and decision‐tree‐based methods such as random forests and gradient boosting trees, which seemed to outperform other learning methods more consistently.22 The reason was that, apart from shallow networks with one or two hidden layers, ANNs were found to be slow and difficult to train, as well as prone to getting stuck in local minima. However, starting around 2006, a combination of several factors led to faster and more reliable training of deep networks. One of the first influential papers described a method for efficient unsupervised (i.e., using unlabeled data, as opposed to supervised training that uses data labeled based on the ground truth) layer‐by‐layer training of deep restricted Boltzmann machines.23 As larger datasets became more commonplace, and with the availability of commercial gaming graphics processing units (GPUs), it became possible to train larger, deeper architectures faster. At the same time, several innovations and best practices in network architecture and training led to faster training of deep networks with excellent generalization performance using stochastic gradient descent. Some examples include improved methods for network initialization and weight updates,24 new neuron activation functions,25 randomly cutting connections or zeroing weights during training,26, 27 and data augmentation strategies that render the network invariant to simple transformations of the input data. Attention to these improvements was still mostly concentrated within the machine learning community, and they were not yet seriously considered in other fields such as computer vision.
This changed in 2012 in the ImageNet28 competition in which more than a million training images with 1000 different object classes were made available to the challenge participants. A DL architecture that has since been dubbed AlexNet outperformed the state‐of‐the‐art results from the computer vision community by a large margin and convinced the general community that traditional methods were on their way out.29
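Two of the training innovations listed above, new activation functions and randomly zeroing units during training, can each be written in a few lines. The sketch assumes the rectified linear unit (ReLU) as the example activation and implements "inverted" dropout, a common variant in which the surviving activations are rescaled during training so that no rescaling is needed at test time; the keep probability of 0.5 is an illustrative choice.

```python
import numpy as np

def relu(x):
    """Rectified linear unit: max(0, x), applied elementwise."""
    return np.maximum(0.0, x)

def dropout(activations, keep_prob, rng, training=True):
    """Inverted dropout: randomly zero units during training and rescale the rest.

    At test time (training=False) the activations pass through unchanged."""
    if not training:
        return activations
    mask = rng.random(activations.shape) < keep_prob
    return activations * mask / keep_prob

rng = np.random.default_rng(0)
h = relu(np.array([-1.0, 0.5, 2.0, -0.3]))
print(h)                       # values 0, 0.5, 2, 0
big = np.ones(100_000)
print(dropout(big, 0.5, rng).mean())   # close to 1.0: the expected activation is preserved
```

Because each unit is kept with probability 0.5 and doubled when kept, the expected value of every activation is unchanged, which is why the network can be used at test time without dropout.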

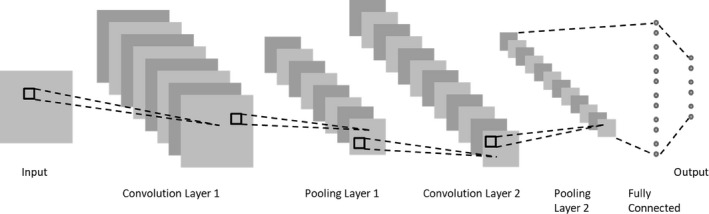

The most successful and popular DL architecture in imaging is the convolutional neural network (CNN).30 Nearby pixels in an image are correlated with one another both in areas that exhibit local smoothness and in areas consisting of structures (e.g., edges of objects or textured regions). Such local patterns typically recur in different parts of the same image. Accordingly, instead of having a fully connected network where every pixel is processed by a different weight, every location can be processed using the same set of weights to extract various repeating patterns across the entire image. These sets of trainable weights, referred to as kernels or filters, are applied to the image using a dot product or convolution, and the result is then processed by a nonlinearity (e.g., a sigmoid or tanh function). Each convolution layer can consist of many such filters, resulting in the extraction of multiple sets of patterns at each layer. A pooling layer (e.g., max pooling, where the output is the maximum value within a window) often follows each convolution layer both to reduce the dimensionality and to impose translation invariance, so that the network becomes insensitive to small shifts in the location of patterns in the input image. These convolution and pooling layers can be stacked to form a multilayer network, often ending in one or more fully connected layers as shown in Fig. 1, followed by a softmax layer. The same concepts can be applied in one and three dimensions (1D and 3D) to accommodate time series and volumetric data, respectively. Compared to a fully connected network, CNNs contain far fewer trainable parameters and therefore require less training time and fewer training examples. Moreover, since their architecture is specifically designed to take advantage of the presence of local structures in images, they are a natural choice for imaging applications and a regular winner of various imaging challenges.

Figure 1.

CNN with two convolution layers each followed by a pooling layer and one fully connected layer.
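The convolution, nonlinearity, and pooling steps described above, together with the parameter saving relative to a fully connected layer, can be illustrated with a minimal single‐channel NumPy sketch. As in most DL libraries, the "convolution" is implemented as a sliding dot product (cross‐correlation); the 3 × 3 edge kernel, the 28 × 28 input, and the 32 filters used in the parameter count are illustrative assumptions.

```python
import numpy as np

def conv2d_valid(image, kernel):
    """Slide one shared kernel over the image (no padding, stride 1)."""
    kh, kw = kernel.shape
    out_h, out_w = image.shape[0] - kh + 1, image.shape[1] - kw + 1
    out = np.empty((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

def max_pool(feature_map, size=2):
    """Non-overlapping max pooling; rows/columns that do not fill a window are dropped."""
    h, w = feature_map.shape[0] // size, feature_map.shape[1] // size
    trimmed = feature_map[:h * size, :w * size]
    return trimmed.reshape(h, size, w, size).max(axis=(1, 3))

image = np.zeros((28, 28))
image[:, 14:] = 1.0                                # a vertical edge
edge_kernel = np.array([[-1.0, 0.0, 1.0]] * 3)     # responds to left-to-right increases
fmap = np.tanh(conv2d_valid(image, edge_kernel))   # convolution + nonlinearity
pooled = max_pool(fmap)                            # dimensionality reduction
print(fmap.shape, pooled.shape)  # (26, 26) (13, 13)

# Trainable weights (biases ignored) to map a 28x28 input to 32 feature maps of 26x26:
conv_params = 32 * 3 * 3                   # 32 shared 3x3 kernels
fc_params = (28 * 28) * (32 * 26 * 26)     # one weight per input-output pixel pair
print(conv_params, fc_params)  # 288 16959488
```

Weight sharing is what makes the difference: the same nine weights per filter are reused at every one of the 676 output positions.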

Another very interesting type of network is the recurrent neural network (RNN), which is well suited to analyzing sequential data (e.g., text or speech) because it maintains an internal memory state that can store information about previous data points. A variant of RNNs, referred to as long short‐term memory (LSTM),31 has improved memory retention compared to a regular RNN and has demonstrated great success across a range of tasks from image captioning32, 33 to speech recognition1, 34 and machine translation.35
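The "internal memory state" of an LSTM can be made concrete with a single forward step of an LSTM cell, in which input, forget, and output gates control what is written to, kept in, and read from the cell state c. The NumPy sketch below assumes the widely used variant that includes a forget gate; the sizes and random weights are placeholders, not a trained model.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def lstm_step(x, h_prev, c_prev, W, U, b):
    """One LSTM time step.

    W: (4H, D) input weights, U: (4H, H) recurrent weights, b: (4H,) biases,
    stacked in the order [input gate, forget gate, output gate, candidate]."""
    H = h_prev.shape[0]
    z = W @ x + U @ h_prev + b
    i = sigmoid(z[0:H])          # how much new information to write
    f = sigmoid(z[H:2 * H])      # how much of the old cell state to keep
    o = sigmoid(z[2 * H:3 * H])  # how much of the cell state to expose
    g = np.tanh(z[3 * H:])       # candidate values to write
    c = f * c_prev + i * g       # updated memory (cell state)
    h = o * np.tanh(c)           # new hidden state / output
    return h, c

rng = np.random.default_rng(0)
D, H, T = 3, 5, 7                # input size, hidden size, sequence length
W = 0.1 * rng.standard_normal((4 * H, D))
U = 0.1 * rng.standard_normal((4 * H, H))
b = np.zeros(4 * H)
h, c = np.zeros(H), np.zeros(H)
for x in rng.standard_normal((T, D)):   # process the sequence one element at a time
    h, c = lstm_step(x, h, c, W, U, b)
print(h.shape, c.shape)  # (5,) (5,)
```

The additive update of `c` (rather than repeated multiplication by a weight matrix) is what lets information persist over many time steps.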

Generative adversarial networks (GANs) and their different variants (e.g., WGAN36, CycleGAN37, etc.) are another promising class of DL architectures that consist of two networks: a generator and a discriminator.38 The generator network produces new data instances that try to mimic the data used in training, while the discriminator network estimates the probability that a given sample comes from the training data rather than from the generator. The two networks are trained jointly with backpropagation, with the generator becoming better at generating realistic samples and the discriminator becoming better at detecting artificially generated samples. GANs have recently demonstrated great potential in medical imaging applications such as image reconstruction for compressed sensing in magnetic resonance imaging (MRI).39
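In the standard GAN formulation, this joint training is a two‐player minimax game over a value function V(D, G), where D(x) is the discriminator's estimated probability that x is a real training sample, G(z) maps a random noise vector z to a synthetic sample, and p_data and p_z are the data and noise distributions:

$$
\min_{G}\,\max_{D}\; V(D,G) \;=\; \mathbb{E}_{x \sim p_{\text{data}}}\!\left[\log D(x)\right] \;+\; \mathbb{E}_{z \sim p_{z}}\!\left[\log\!\left(1 - D\!\left(G(z)\right)\right)\right]
$$

The discriminator ascends V, pushing D(x) toward 1 on training data and toward 0 on generated samples, while the generator descends it. In practice, the generator is often trained to maximize log D(G(z)) instead, which gives stronger gradients early in training.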

1.B. Deep learning in medical imaging

In medical imaging, machine learning algorithms have been used for decades, starting with algorithms to analyze or help interpret radiographic images in the mid‐1960s.40, 41, 42 Computer‐aided detection/diagnosis (CAD) algorithms started to make advances in the mid‐1980s, first with algorithms dedicated to cancer detection and diagnosis on chest radiographs and mammograms,43, 44 and then widening in scope to other modalities such as computed tomography (CT) and ultrasound.45, 46 CAD algorithms in the early days predominantly used a data‐driven approach, as most DL algorithms do today. However, unlike most DL algorithms, most of these early CAD methods heavily depended on feature engineering. A typical workflow for developing an algorithm for a new task consisted of understanding what types of imaging and clinical evidence clinicians use for the interpretation task, translating that knowledge into computer code to automatically extract relevant features, and then using machine learning algorithms to combine the features into a computer score. There were, however, some notable exceptions. Inspired by the neocognitron architecture,47 a number of researchers investigated the use of CNNs48, 49, 50, 51 or shift‐invariant ANNs52, 53 in the early and mid‐1990s, and massively trained artificial neural networks (MTANNs)54, 55 in the 2000s, for detection and characterization tasks in medical imaging. These methods all shared common properties with current deep CNNs (DCNNs): data propagated through the networks via convolutions, the networks learned filter kernels, and the methods did not require feature engineering, that is, the inputs into the networks were image pixel values. However, severely restricted by the computational resources of the time, most of these networks were not deep, that is, they mostly consisted of only one or two hidden layers.
In addition, they were trained using much smaller datasets compared to a number of high‐profile DCNNs that were trained using millions of natural images. Concepts such as transfer learning,14 residual learning,56 and fully convolutional networks with skip connections57 were generally not well developed. Thus, these earlier CNNs in medical imaging, as competitive as they were compared to other methods, did not result in a massive transformation in machine learning for medical imaging.

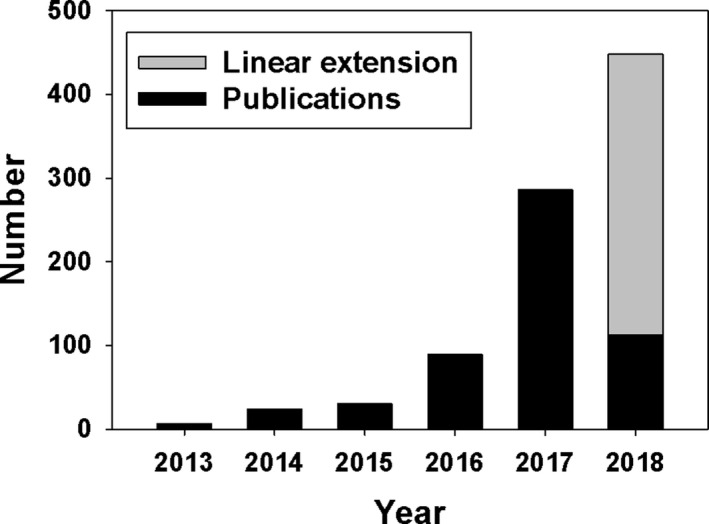

With the advent of DL, applications of machine learning in medical imaging have dramatically increased, paralleling other scientific domains such as natural image and speech processing. Investigations accelerated not only in traditional machine learning topics such as segmentation, lesion detection, and classification58 but also in other areas, such as image reconstruction and artifact reduction, that were previously not considered data‐driven topics of investigation. Figure 2 shows the number of peer‐reviewed publications over the last 6 yr in the focus area of this paper, DL for radiological images, and reveals a very strong upward trend: for example, more papers were published on this topic in the first 3 months of 2018 than in the whole of 2016.

Figure 2.

Number of peer‐reviewed publications in radiologic medical imaging that involved DL. Peer‐reviewed publications were searched on PubMed using the criteria (“deep learning” OR “deep neural network” OR deep convolution OR deep convolutional OR convolution neural network OR “shift‐invariant artificial neural network” OR MTANN) AND (radiography OR x‐ray OR mammography OR CT OR MRI OR PET OR ultrasound OR therapy OR radiology OR MR OR mammogram OR SPECT). The search only covered the first 3 months of 2018 and the result was linearly extended to the rest of 2018.

Using DL involves making a very large number of design decisions, such as the number of layers, the number of nodes in each layer (or the number and size of kernels in the case of CNNs), the type of activation function, the type and level of regularization, the type of network initialization, whether to include pooling layers and if so what type of pooling, the type of loss function, and so on. One way to avoid trial and error in devising the best architecture is to follow the exact architectures that have been shown to be successful in natural image analysis, such as AlexNet,29 VGGNet,59 ResNet,56 DenseNet,60 Xception,61 or Inception V3.62 These networks can be trained from scratch for the new task.63, 64, 65, 66, 67 Alternatively, they can be pretrained on natural images, which are more plentiful than medical images, so that the weights in the feature extraction layers are properly set during training (see Section 3.B for more details). Only the weights in the last fully connected layer or the last few layers (including some of the convolutional layers) are then retrained using medical images to learn the class associations for the desired task.
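The "freeze the feature extractor, retrain the last layer" strategy can be sketched without any DL framework. In the hypothetical example below, a fixed random projection followed by ReLU stands in for the frozen, pretrained convolutional layers (a real application would load weights pretrained on natural images), and only a logistic‐regression output layer is trained on a small synthetic two‐class dataset. All data, sizes, and the learning rate are illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical stand-in for frozen pretrained layers: fixed weights, never updated.
W_frozen = rng.standard_normal((64, 20)) / np.sqrt(20)
W_before = W_frozen.copy()

def frozen_features(x):
    """ReLU(W_frozen x) for each row of x; output shape (n_samples, 64)."""
    return np.maximum(0.0, x @ W_frozen.T)

# Small synthetic two-class dataset in 20 dimensions (class means at -1 and +1).
n = 200
y = rng.integers(0, 2, n)
X = rng.standard_normal((n, 20)) + np.where(y[:, None] == 1, 1.0, -1.0)
F = frozen_features(X)

# Only the new output layer (logistic regression) is trained.
w, b, lr = np.zeros(F.shape[1]), 0.0, 0.1
for _ in range(1000):
    p = 1.0 / (1.0 + np.exp(-(F @ w + b)))   # predicted probability of class 1
    grad = p - y                              # gradient of cross-entropy w.r.t. the logit
    w -= lr * F.T @ grad / n
    b -= lr * grad.mean()

accuracy = np.mean((F @ w + b > 0) == y)
print(np.array_equal(W_frozen, W_before), accuracy)  # True, followed by the training accuracy
```

Fine‐tuning "the last few layers" would simply move some of the frozen weights into the trainable set, usually with a smaller learning rate.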

1.C. Existing platforms and resources

A large number of training examples is required to estimate the large number of parameters of a DL system. Training hundreds of thousands to hundreds of millions, or even billions, of parameters requires many iterations of backpropagation and stochastic gradient descent, each computed over a minibatch consisting of a small subset of the training samples. A single or multicore central processing unit (CPU) or a cluster of CPU nodes in a high‐performance computing (HPC) environment could be used for training, but the former approach would take an extremely long time, while the latter requires access to costly infrastructure.
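The minibatch training loop described above can be sketched for the simplest possible "network", a single linear unit trained with a squared‐error loss; in a deep network, the gradient would instead be obtained by backpropagation through all layers. The synthetic data, batch size, and learning rate below are illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)
n_samples, n_features = 1000, 5
true_w = rng.standard_normal(n_features)
X = rng.standard_normal((n_samples, n_features))
y = X @ true_w + 0.01 * rng.standard_normal(n_samples)

w = np.zeros(n_features)
batch_size, learning_rate, epochs = 32, 0.05, 20
for epoch in range(epochs):
    order = rng.permutation(n_samples)             # reshuffle the data every epoch
    for start in range(0, n_samples, batch_size):
        idx = order[start:start + batch_size]      # indices of one minibatch
        residual = X[idx] @ w - y[idx]
        grad = 2 * X[idx].T @ residual / len(idx)  # gradient of the minibatch mean squared error
        w -= learning_rate * grad                  # stochastic gradient descent update

print(np.abs(w - true_w).max())  # small: the learned weights approach true_w
```

Each update touches only `batch_size` samples, which is what keeps the per‐iteration memory and compute small enough for a single GPU even when the full dataset is large.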

Fortunately, in the last 10 yr, gaming GPUs have become cheaper, increasingly powerful, and easier to program. This has resulted simultaneously in far cheaper hardware requirements for running DL (compared to HPC solutions) and in training times that are several orders of magnitude shorter than those of a solution run on a CPU.27, 68 The most common setup for training DL models is therefore a desktop workstation containing one or more powerful gaming GPUs, which can be easily configured for a reasonable price. There are also several cloud‐based solutions, including Amazon Web Services (AWS)69 and NVIDIA GPU Cloud,70 that allow users to train or deploy their models remotely. Recently, Google has developed an application‐specific integrated circuit (ASIC) for neural networks to run its wide variety of applications that utilize DL. These accelerators, referred to as tensor processing units (TPUs), are several times faster than CPU or GPU solutions and have recently been made available to general users via Google Cloud.71

In line with the rapid improvements in the performance of GPUs, several open‐source DL libraries have been developed and made public that free the user from directly programming GPUs. These frameworks allow users to focus on how to set up a particular network and explore different training strategies. The most popular DL libraries are TensorFlow,72 Caffe,73 Torch,74 and Theano.75 They all have application programming interfaces (APIs) in different programming languages, the most popular language being Python.

1.D. Organization of the paper

Throughout the paper, we have strived to cite published journal articles as much as possible. However, DL is a very fast‐changing field, and many excellent new studies appear only as conference proceedings papers or as preprints in online resources such as arXiv. We did not refrain from citing articles from these resources whenever necessary. To better summarize the state of the art in sections other than Section 2, we have included publications from many different areas of medical and natural imaging. However, to keep the length of the paper reasonable, in Section 2 we focused only on applications in radiological imaging and radiation therapy, although other areas of medical imaging, such as digital pathology and optical imaging, have also seen an influx of DL applications. This paper is organized as follows. In Section 2, we summarize applications of DL to radiological imaging and radiation therapy. In Section 3, we describe some of the common themes among DL applications, which include training and testing with small dataset sizes, pretraining and fine tuning, combining DL with radiomics applications, and different types of training, such as supervised, unsupervised, and weakly supervised. Since dataset size is a major bottleneck for DL applications in medical imaging, we have devoted Section 4 to special methods for dataset expansion. In Section 5, we summarize some of the perceived challenges, lessons learned, and possible trends for the future of DL in medical imaging and radiation therapy.

2. Application areas in radiological imaging and radiation therapy

2.A. Image segmentation

DL has been used to segment many different organs in different imaging modalities, including single‐view radiographic images, CT, MR, and ultrasound images.

Image segmentation in medical imaging based on DL generally uses two different input methods: (a) patches of an input image and (b) the entire image. Both methods generate an output map that provides the likelihood that a given region is part of the object being segmented. While patch‐based segmentation methods were initially used, most recent studies use the entire input image to give contextual information and reduce redundant calculations. Multiple works subsequently refine these likelihood maps using classic segmentation methods, such as level sets,76, 77, 78, 79, graph cuts,80 and model‐based methods,81, 82 to achieve a more accurate segmentation than using the likelihood maps alone. Popular DL frameworks used for segmentation tasks include Caffe, Matlab™, and cuda‐convnet.
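The difference between the two input strategies can be sketched as follows. In the patch‐based variant, a classifier is applied to a patch centered on every pixel to build the likelihood map, so neighboring patches overlap almost entirely and much of the computation is repeated; a whole‐image (fully convolutional) network produces the same kind of map in a single pass. The "classifier" here is a hypothetical stand‐in (mean patch intensity passed through a sigmoid), not a trained network.

```python
import numpy as np

def patch_classifier(patch):
    """Hypothetical stand-in for a trained patch CNN: returns P(object | patch)."""
    return 1.0 / (1.0 + np.exp(-10.0 * (patch.mean() - 0.5)))

def patch_based_likelihood_map(image, half=3):
    """Classify a (2*half+1) x (2*half+1) patch centered on every pixel (edge-padded)."""
    padded = np.pad(image, half, mode="edge")
    out = np.empty_like(image, dtype=float)
    for i in range(image.shape[0]):
        for j in range(image.shape[1]):
            out[i, j] = patch_classifier(padded[i:i + 2 * half + 1, j:j + 2 * half + 1])
    return out

image = np.zeros((32, 32))
image[8:24, 8:24] = 1.0                      # a bright square "organ"
likelihood = patch_based_likelihood_map(image)
segmentation = likelihood > 0.5              # simple threshold; could be refined, e.g., by level sets
n_patches = image.size                       # one full patch evaluation per pixel
print(segmentation[16, 16], segmentation[2, 2], n_patches)  # True False 1024
```

With 7 × 7 patches, adjacent evaluations share 42 of their 49 pixels, which illustrates the redundant calculations that whole‐image methods avoid.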

2.A.1. Organ and substructure segmentation

Segmentation of organs and their substructures may be used to calculate clinical parameters such as volume, as well as to define the search region for computer‐aided detection tasks to improve their performance. Patch‐based segmentation methods, with refinements using traditional segmentation methods, have been shown to perform well for different segmentation tasks.76, 83 Table 1 briefly summarizes published performance of DL methods in organ and substructure segmentation tasks using either Dice coefficient or Jaccard index, if given, as the performance metric.

Table 1.

Organ and substructure segmentation summary and performance using DL

| Region | Segmentation object | Network input | Network architecture basis | Dataset (train/test) | Dice coefficient on test set |
|---|---|---|---|---|---|
| Abdomen | Skeletal muscle89 | Whole image | FCN | 250/150 patients | 0.93 |
| | Subcutaneous and visceral fat areas90 | Image patch | Custom | 20/20 patients | 0.92–0.98 |
| | Liver, spleen, kidneys91 | Whole image | Custom | 140 scans fivefold CV | 0.94–0.96 |
| Bladder | Bladder76 | Image patch | CifarNet | 81/93 patients | 0.86 |
| Brain | Anterior visual pathway92 | Whole image | AE | 165 patients LOO CV | 0.78 |
| | Bones86 | Whole image | U‐net | 16 patients LOO CV | 0.94 |
| | Striatum93 | Whole image | Custom | 15/18 patients | 0.83 |
| | Substructures94 | Image patch | Custom | 15/20 patients | 0.86–0.95 |
| | Substructures95 | Image patch | Custom | 20/10 patients | 0.92 |
| | Substructures96 | Image patch | Deep Residual Network92 | 18 patients sixfold CV | 0.69–0.83 |
| | Substructures97 | Whole image | FCN | 150/947 patients | 0.86–0.92 |
| Breast | Dense tissue and fat98 | Image patch | Custom | 493 images fivefold CV | 0.63–0.95 |
| | Breast and fibroglandular tissue85 | Whole image | U‐net | 66 patients threefold CV | 0.85–0.94 |
| Head and neck | Organs‐at‐risk83 | Image patch | Custom | 50 patients fivefold CV | 0.37–0.90 |
| Heart | Left ventricle79 | Whole image | AE | 15/15 patients | 0.93 |
| | Left ventricle82 | Whole image | AE | 15/15 patients | 0.94 |
| | Left ventricle99 | Image patch | Custom | 100/100 patients | 0.86 |
| | Left ventricle100 | Image patch | Custom | 100/100 patients | 0.88 |
| | Fetal left ventricle101 | Image patch | Custom | 10/41 patients | 0.95 |
| | Right ventricle78 | Whole image | AE | 16/16 patients | 0.82 |
| Kidney | Kidney102 | Whole image | Custom | 2000/400 patients | 0.97 |
| | Kidney103 | Whole image | FCN | 165/79 patients | 0.86 |
| Knee | Femur, femoral cartilage, tibia, tibial cartilage81 | Whole image | Custom | 60/40 images | – |
| Liver | Liver80 | Image patch | Custom | 78/40 patients | – |
| | Liver104 | Image patch | Custom | 109/32 patients | 0.97 |
| | Portal vein83 | Image patch | Custom | 72 scans eightfold CV | 0.70 |
| Lung | Lung105 | Whole image | HNN | 62 slices/31 patients | 0.96–0.97 |
| Pancreas | Pancreas106 | Image patch | Custom | 80 patients sixfold CV | 0.71 |
| | Pancreas107 | Image patch | Custom | 82 patients fourfold CV | 0.72 |
| Prostate | Prostate108 | Image patch | AE | 66 patients twofold CV | 0.87 |
| | Prostate109 | Image patch | Custom | 30 patients LOO CV | 0.87 |
| | Prostate110 | Whole image | FCN | 41/99 patients | 0.85 |
| | Prostate87 | Whole image | HNN | 250 patients fivefold CV | 0.90 |
| Rectum | Organs‐at‐risk111 | Whole image | VGG‐16 | 218/60 patients | 0.88–0.93 |
| Spine | Intervertebral disk112 | Image patch | Custom | 18/6 scans | 0.91 |
| Whole body | Multiple organs113 | Whole image | FCN | 228/12 scans | – |
| Multiple organs | Liver and heart (blood pool, myocardium)114 | Whole image | Custom | Liver: 20/10 patients; Heart: 10/10 patients | 0.74–0.93 |

A “–” on the performance metrics means that the authors report different segmentation accuracy metrics. AE, autoencoder; FCN, fully convolutional network; HNN, holistically nested network; LOO, leave‐one‐out; CV, cross‐validation.
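For reference, the two overlap metrics used in Table 1 compare a predicted binary mask A with a reference mask B: Dice = 2|A ∩ B| / (|A| + |B|) and Jaccard = |A ∩ B| / |A ∪ B|. They are monotonically related by J = D / (2 − D), so a Dice value of 0.90 corresponds to a Jaccard index of about 0.82. A minimal NumPy sketch with illustrative toy masks:

```python
import numpy as np

def dice(pred, ref):
    """Dice coefficient 2|A∩B| / (|A| + |B|); assumes at least one mask is non-empty."""
    inter = np.logical_and(pred, ref).sum()
    return 2.0 * inter / (pred.sum() + ref.sum())

def jaccard(pred, ref):
    """Jaccard index |A∩B| / |A∪B|; assumes at least one mask is non-empty."""
    inter = np.logical_and(pred, ref).sum()
    return inter / np.logical_or(pred, ref).sum()

ref = np.zeros((10, 10), dtype=bool)
ref[2:8, 2:8] = True              # 36-pixel reference square
pred = np.zeros_like(ref)
pred[3:9, 2:8] = True             # same size, shifted down by one row
d, j = dice(pred, ref), jaccard(pred, ref)
print(round(d, 3), round(j, 3))   # 0.833 0.714
assert abs(j - d / (2 - d)) < 1e-12   # the two metrics carry the same information
```

Because of this one‐to‐one relationship, values reported as Jaccard indices can be converted to Dice coefficients (and vice versa) when comparing studies.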

A popular network architecture for segmentation is the U‐net,84 which was originally developed for segmentation of neuronal structures in electron microscopy stacks. U‐nets consist of several convolution layers followed by deconvolution layers, with connections between opposing convolution and deconvolution layers (skip connections). This design allows the network to analyze the entire image during training and to obtain segmentation likelihood maps directly, unlike patch‐based methods. Derivatives of U‐net have been used for multiple tasks, including segmenting breast and fibroglandular tissue85 and craniomaxillofacial bony structures.86
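The data flow through one level of a U‐net can be sketched with array shapes alone. In this hypothetical NumPy sketch, 2 × 2 max pooling stands in for the contracting path, nearest‐neighbor upsampling stands in for the learned deconvolution, and the skip connection concatenates the high‐resolution encoder features with the upsampled decoder features along the channel axis. The learned convolutions of a real U‐net are omitted, and channel counts are kept constant for simplicity.

```python
import numpy as np

def downsample(x):
    """2x2 max pooling on a (channels, H, W) array (H and W assumed even)."""
    c, h, w = x.shape
    return x.reshape(c, h // 2, 2, w // 2, 2).max(axis=(2, 4))

def upsample(x):
    """Nearest-neighbor 2x upsampling, a stand-in for a learned deconvolution."""
    return x.repeat(2, axis=1).repeat(2, axis=2)

rng = np.random.default_rng(0)
encoder_features = rng.standard_normal((16, 64, 64))   # high-resolution features
bottleneck = downsample(encoder_features)              # (16, 32, 32): coarser, more context
decoder_features = upsample(bottleneck)                # back to (16, 64, 64)
merged = np.concatenate([encoder_features, decoder_features], axis=0)  # skip connection
print(bottleneck.shape, merged.shape)  # (16, 32, 32) (32, 64, 64)
```

The concatenation reintroduces fine spatial detail lost by pooling, which is what lets the decoder produce sharp, full‐resolution likelihood maps.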

Another DL architecture used for organ segmentation is the holistically nested network (HNN). An HNN attaches side outputs to its convolutional layers; these side outputs are multiscale and multilevel and produce corresponding edge maps at different scales. A weighted average of the side outputs is used to generate the final output, and the weights of the average are learned during training of the network. HNN has been successfully applied to segmentation of the prostate87 and brain tumors.88
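The fusion step of an HNN can be illustrated as follows: each side output is a probability‐like map at its own scale, the maps are upsampled to the input resolution, and their weighted average forms the final output. In this hypothetical sketch, the side outputs are random maps and the fusion weights come from fixed logits passed through a softmax so that they are positive and sum to one; in an actual HNN, the weights are learned during training, and the exact parameterization may differ.

```python
import numpy as np

def upsample(x, factor):
    """Nearest-neighbor upsampling of a 2D map by an integer factor."""
    return x.repeat(factor, axis=0).repeat(factor, axis=1)

rng = np.random.default_rng(0)
H = W = 64
# Hypothetical side outputs from three depths of the network, keyed by downsampling factor.
side_outputs = {1: rng.random((H, W)),
                2: rng.random((H // 2, W // 2)),
                4: rng.random((H // 4, W // 4))}

# Fusion weights: fixed logits for illustration only; in an HNN they are learned.
fusion_logits = np.array([0.5, 0.0, -0.5])
weights = np.exp(fusion_logits) / np.exp(fusion_logits).sum()   # positive, sum to 1

upsampled = [upsample(m, f) for f, m in side_outputs.items()]
fused = sum(wt * m for wt, m in zip(weights, upsampled))        # weighted average
print(fused.shape, round(weights.sum(), 6))  # (64, 64) 1.0
```

Because each side output sees a different receptive field, the fused map combines fine boundary detail from shallow layers with the more global context of deeper layers.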

2.A.2. Lesion segmentation

Lesion segmentation is similar to organ segmentation; however, it is generally more difficult, because lesions can vary widely in shape and size. Multiple papers covering many different lesion types have been published on DL lesion segmentation (Table 2). Brain tumor segmentation is a particularly common task, which may be attributed to the availability of a public database with dedicated training and test sets for the brain tumor segmentation challenge held at the Medical Image Computing and Computer Assisted Intervention (MICCAI) conference from 2014 to 2016 and continuing in 2017 and 2018. Methods evaluated on this dataset include patch‐based autoencoders,115, 116 U‐net‐based structures,117 and HNN.88

Table 2.

Lesion segmentation summary and performance using DL

| Region | Segmentation object | Network input | Network architecture basis | Dataset (train/test) | Dice coefficient on test set |
|---|---|---|---|---|---|
| Bladder | Bladder lesion77 | Image patch | CifarNet | 62 patients LOO CV | 0.51 |
| Breast | Breast lesion118 | Image patch | Custom | 107 patients fourfold CV | 0.93 |
| Bone | Osteosarcoma119 | Whole image | ResNet‐50 | 15/8 patients | 0.89 |
| | Osteosarcoma120 | Whole image | FCN | 1900/405 images from 23 patients | 0.90 |
| Brain | Brain lesion121 | Image patch | Custom | 61 patients fivefold CV | 0.65 |
| | Brain metastases122 | Image patch | Custom | 225 patients fivefold CV | 0.67 |
| | Brain tumor115 | Image patch | AE | HGG: 150/69 patients; LGG: 20/23 patients | HGG: 0.86; LGG: 0.82 |
| | Brain tumor117 | Image patch | Custom | HGG: 220, LGG: 54, fivefold CV | HGG: 0.85–0.91; LGG: 0.83–0.86 |
| | Brain tumor123 | Whole image | Custom | 30/25 patients | 0.88 |
| | Brain tumor124 | Whole image | FCN | 274/110 patients | 0.82 |
| | Brain tumor88 | Whole image | HNN | 20/10 patients | 0.83 |
| | Ischemic lesions125 | Whole image | DeConvNet | 380/381 patients | 0.88 |
| | Multiple sclerosis lesion126 | Whole image | Custom | 250/77 patients | 0.64 |
| | White matter hyperintensities116 | Image patch | AE | 100/135 patients | 0.88 |
| | White matter hyperintensities127 | Image patch | Custom | 378/50 patients | 0.79 |
| Head and neck | Nasopharyngeal cancer128 | Whole image | VGG‐16 | 184/46 patients | 0.81–0.83 |
| | Thyroid nodule129 | Image patch | HNN | 250 patients fivefold CV | 0.92 |
| Liver | Liver lesion130 | Image patch | Custom | 26 patients LOO CV | 0.80 |
| Lung | Lung nodule131 | Image patch | Custom | 350/493 nodules | 0.82 |
| Lymph nodes | Lymph nodes132 | Whole image | HNN | 171 patients fourfold CV | 0.82 |
| Rectum | Rectal cancer133 | Image patch | Custom | 70/70 patients | 0.68 |
| Skin | Melanoma134 | Image patch | Custom | 126 images fourfold CV | – |

A dash (–) in the last column means that the authors reported segmentation accuracy metrics other than the Dice coefficient. AE, autoencoder; FCN, fully convolutional network; HNN, holistically nested network; LOO, leave‐one‐out; CV, cross‐validation; HGG, high‐grade glioma; LGG, low‐grade glioma.
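The Dice coefficient reported in Table 2 measures the overlap between an automatically produced mask A and a reference mask B as 2|A ∩ B|/(|A| + |B|), ranging from 0 (no overlap) to 1 (perfect agreement). A minimal NumPy sketch follows; returning 1.0 when both masks are empty is an assumed convention, since studies handle that case differently.

```python
import numpy as np


def dice_coefficient(pred, truth):
    """Dice = 2|A ∩ B| / (|A| + |B|) for two binary masks of equal shape."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    if pred.shape != truth.shape:
        raise ValueError("masks must have the same shape")
    denom = pred.sum() + truth.sum()
    if denom == 0:
        # Both masks empty: treated as perfect agreement (an assumed convention).
        return 1.0
    return float(2.0 * np.logical_and(pred, truth).sum() / denom)


# Prediction covers 4 pixels, reference covers 6, and they overlap on 4.
pred = np.zeros((4, 4), dtype=bool)
pred[1:3, 1:3] = True
truth = np.zeros((4, 4), dtype=bool)
truth[1:3, 1:4] = True
print(round(dice_coefficient(pred, truth), 3))  # 0.8
```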

2.B. Detection

2.B.1. Organ detection

Anatomical structure detection is a fundamental task in medical image analysis; it involves computing the locations of organs and landmarks in 2D or 3D image data. Localized anatomical information can guide more advanced analysis of specific body parts or of pathologies present in the images, such as organ segmentation, lesion detection, and radiotherapy planning. As with their counterparts based on traditional machine learning, DL‐based organ/landmark detection approaches fall into two main groups: classification‐based methods and regression‐based methods. Classification‐based methods discriminate body parts/organs at the image or patch level, whereas regression‐based methods aim to recover more detailed location information, for example, the coordinates of landmarks. Table 3 lists DL‐based anatomical structure detection methods together with their performance in different evaluation settings.

Table 3.

Organ and anatomical structure detection summary and performance

| Organ | Detection object | Network input | Network architecture basis | Dataset (train/test) | Performance (error, mean ± SD, unless another metric is given) |
|---|---|---|---|---|---|
| Bone | 37 hand landmarks147 | X‐ray images | Custom CNN | 895 images threefold CV | 1.19 ± 1.14 mm |
| | Femur bone135 | MR 2.5D image patches | Custom 3D CNN | 40/10 volumes | 4.53 ± 2.31 mm |
| | Vertebrae148 | MR/CT image patches | Custom CNN | 1150 patches/110 images | 3.81 ± 2.98 mm |
| | Vertebrae149 | US/x‐ray images | U‐Net | 22/19 patients | F1: 0.90 |
| Vessel | Carotid artery150 | CT 3D image patches | Custom 3D CNN | 455 patients fourfold CV | 2.64 ± 4.98 mm |
| | Ascending aorta139 | 3D US | Custom CNN | 719/150 patients | 1.04 ± 0.50 mm |
| Fetal anatomy | Abdominal standard scan plane136, 151 | US image patches | Custom CNN | 11942/8718 images | F1: 0.71 (ref. 136), 0.75 (ref. 151) |
| | 12 standard scan planes137 | US images | Custom CNN | 800/200 images | F1: 0.42–0.93 |
| | 13 standard scan planes138 | US images | AlexNet | 5229/2339 images | Acc: 0.10–0.94 |
| Body | Body parts152 | CT images | AlexNet + FCN | 450/49 patients | 3.9 ± 4.7 voxels |
| | Body parts153 | CT images | AlexNet | 3438/860 images | AUC: 0.998 |
| | Multiple organs154 | 3D CT images | Custom CNN | 200/200 scans | F1: 0.97 |
| | Body parts141, 142 | CT images | LeNet | 2413/4043 images | F1: 0.92 |
| Brain | Brain landmarks155 | MR images | FCN | 350/350 images | 2.94 ± 1.58 mm |
| Lung | Pathologic lung156 | CT images | FCN | 929 scans fivefold CV | 0.76 ± 0.53 mm |
| Extremities | Thigh muscle157 | MR images | FCN | 15/10 patients | 1.4 ± 0.8 mm |
| Heart | Ventricle landmarks143, 144, 145 | MR images | Custom CNN + RL | 801/90 images | 2.9 ± 2.4 mm |

FCN, fully convolutional network; RL, reinforcement learning; F1, harmonic mean of precision (positive predictive value) and recall (sensitivity); AUC, area under the receiver operating characteristic curve; Acc, accuracy; CV, cross‐validation; US, ultrasound.
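Most entries in Table 3 are localization errors, that is, Euclidean distances between predicted and reference landmark positions, summarized as mean ± SD. The sketch below shows one way to compute them; converting voxel indices to millimeters with the voxel spacing, and using the sample SD (`ddof=1`), are assumptions, since the cited studies do not necessarily report errors the same way.

```python
import numpy as np


def landmark_errors_mm(pred_vox, true_vox, spacing_mm):
    """Euclidean distance in mm between predicted and reference landmarks.

    pred_vox, true_vox: (n_landmarks, n_dims) positions in voxel indices.
    spacing_mm: physical voxel size along each dimension, in mm.
    """
    diff = np.asarray(pred_vox, float) - np.asarray(true_vox, float)
    return np.linalg.norm(diff * np.asarray(spacing_mm, float), axis=1)


pred = [[10, 20, 30], [41, 50, 60]]
true = [[10, 23, 34], [40, 50, 60]]
errors = landmark_errors_mm(pred, true, spacing_mm=[2.5, 1.0, 1.0])  # anisotropic voxels
print(f"{errors.mean():.2f} ± {errors.std(ddof=1):.2f} mm")  # 3.75 ± 1.77 mm
```

Ignoring anisotropic spacing would understate the second error (1 voxel instead of 2.5 mm), which is why errors are usually reported in physical units.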

Early classification‐based approaches often used off‐the‐shelf CNN features to classify images or image patches that contain anatomical structures. Yang et al.135 used a CNN classifier to locate two‐dimensional (2D) image patches (extracted from 3D MR volumes) that contain possible landmarks, which then initialized the subsequent segmentation of the femur. Chen et al.136 fine‐tuned an ImageNet‐pretrained model on fetal ultrasound frames from recorded scan videos to classify fetal abdominal standard plane images.

Information beyond the original images can also be incorporated to help the detection task. For the same standard plane detection task in fetal ultrasound, Baumgartner et al.137 proposed a joint CNN framework that classifies 12 standard scan planes and also localizes the fetal anatomy, using a series of midpregnancy fetal ultrasound scans. The final bounding boxes were generated from saliency maps, computed for each plane class to visualize the network activations.
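The saliency‐to‐box step can be illustrated with a simple rule. The sketch below is a hypothetical simplification, not Baumgartner et al.'s procedure: it thresholds the saliency magnitude at a fraction of its maximum (`rel_threshold` is an assumed parameter) and returns the box enclosing all pixels above the threshold.

```python
import numpy as np


def bbox_from_saliency(saliency, rel_threshold=0.5):
    """Bounding box (row_min, row_max, col_min, col_max) of pixels whose saliency
    magnitude is at least rel_threshold times the maximum; None for an all-zero map."""
    s = np.abs(np.asarray(saliency, float))
    if s.max() == 0:
        return None
    rows, cols = np.nonzero(s >= rel_threshold * s.max())
    return int(rows.min()), int(rows.max()), int(cols.min()), int(cols.max())


saliency = np.zeros((8, 8))
saliency[2:5, 3:7] = 1.0    # strong response over the anatomy of interest
saliency[0, 0] = 0.2        # weak background response, below the threshold
print(bbox_from_saliency(saliency))  # (2, 4, 3, 6)
```

A single global threshold is the simplest choice; keeping only the largest connected region above the threshold would make the box less sensitive to isolated background responses.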

Further improvements were achieved with more advanced CNN architectures and components. Kumar et al.138 built a two‐path CNN that computes features from both the original images and pregenerated saliency maps. The final standard plane classification was performed with an SVM on a set of selected features.

Another category of methods tackles anatomy detection with regression techniques. Ghesu et al.139 formulated 3D heart detection as a regression problem, targeting the 3D bounding box coordinates and affine transform parameters in transesophageal echocardiogram images. This approach integrated marginal space learning into the DL framework and learned sparse sampling to reduce the computational cost of processing volumetric data.140

Yan et al.141, 142 formulated body part localization as a DL regression problem. Their system was trained with an unsupervised learning method that uses two intersample CNN loss functions, so no manual annotation of body parts is required. This unsupervised body part regression builds a coordinate system for the body and outputs a continuous score for each axial slice, representing the normalized position of the body part in that slice.
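One way to see how such training can work without manual labels is to write down losses that depend only on the known order of slices within a volume. The sketch below is an illustrative reconstruction under stated assumptions, not the published formulation: an order term penalizes scores that fail to increase along the body axis, and a distance term, here with an assumed smooth‐L1 penalty, asks equally spaced slices to have equally spaced scores.

```python
import numpy as np


def smooth_l1(x, beta=1.0):
    """Quadratic near zero, linear for |x| >= beta (assumed penalty shape)."""
    ax = np.abs(x)
    return np.where(ax < beta, 0.5 * ax ** 2 / beta, ax - 0.5 * beta)


def body_part_regression_losses(scores):
    """Label-free losses for per-slice scores of one volume.

    scores: network outputs for slices sampled at equal spacing and listed in
    their anatomical order (an assumed input convention).
    """
    s = np.asarray(scores, dtype=float)
    steps = np.diff(s)                                   # s[j+1] - s[j]
    order_loss = np.mean(np.logaddexp(0.0, -steps))      # mean of -log(sigmoid(step))
    distance_loss = np.mean(smooth_l1(np.diff(steps)))   # equal spacing -> equal steps
    return float(order_loss), float(distance_loss)


print(body_part_regression_losses([0.0, 1.0, 2.0, 3.0]))  # ordered and evenly spaced
print(body_part_regression_losses([3.0, 2.5, 0.0, 1.0]))  # misordered and uneven
```

Only the relative positions of slices within each volume enter the losses, which is what allows training on unannotated scans.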

Beyond these two common categories, newer techniques such as reinforcement learning have also been adopted to approach the problem from a different direction. Ghesu et al.143 present a good example of combining reinforcement learning and DL for anatomical detection. Applied to multiple image datasets across several modalities, the method uses reinforcement learning to search for an optimal path from a random starting point to a predefined anatomical landmark, guided by effective hierarchical features extracted by DCNN models. The system was later extended to search for 3D landmark positions using 3D volumetric CNN features.144, 145 Xu et al.146 extended this approach further by recasting the optimal action‐path search as an image partitioning problem, in which a DCNN constructs and learns a global action map across the whole image to guide the search.
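The reinforcement learning idea can be reduced to a toy problem. The sketch below uses tabular Q‐learning on a 10 × 10 grid in which the agent moves one pixel at a time and is rewarded by how much closer it gets to the landmark; the grid size, the learning parameters, and the distance‐based reward are assumptions for illustration. Because the state here is only the agent's position, the learned table is valid for one image only, whereas the cited methods estimate action values from image content with a DCNN so that the policy generalizes to new images.

```python
import numpy as np

ACTIONS = [(-1, 0), (1, 0), (0, -1), (0, 1)]  # up, down, left, right


def step(pos, action, size, target):
    """Move one pixel (clipped at the border); reward = reduction in distance to target."""
    r, c = pos
    dr, dc = ACTIONS[action]
    new = (min(max(r + dr, 0), size - 1), min(max(c + dc, 0), size - 1))
    old_d = np.hypot(r - target[0], c - target[1])
    new_d = np.hypot(new[0] - target[0], new[1] - target[1])
    return new, old_d - new_d


def train_q_table(size=10, target=(7, 2), episodes=1000, max_steps=50,
                  alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Tabular Q-learning with epsilon-greedy exploration from random starts."""
    rng = np.random.default_rng(seed)
    q = np.zeros((size, size, len(ACTIONS)))
    for _ in range(episodes):
        pos = (int(rng.integers(size)), int(rng.integers(size)))
        for _ in range(max_steps):
            if pos == target:
                break
            if rng.random() < epsilon:
                a = int(rng.integers(len(ACTIONS)))       # explore
            else:
                a = int(np.argmax(q[pos[0], pos[1]]))     # exploit
            new, reward = step(pos, a, size, target)
            future = 0.0 if new == target else np.max(q[new[0], new[1]])
            q[pos[0], pos[1], a] += alpha * (reward + gamma * future - q[pos[0], pos[1], a])
            pos = new
    return q


def greedy_search(q, start, target, max_steps=50):
    """Follow the highest-valued action until the landmark is reached."""
    pos, path = start, [start]
    for _ in range(max_steps):
        if pos == target:
            break
        pos, _ = step(pos, int(np.argmax(q[pos[0], pos[1]])), q.shape[0], target)
        path.append(pos)
    return path


q = train_q_table()
path = greedy_search(q, start=(0, 9), target=(7, 2))
print(path[-1], len(path) - 1)
```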

2.B.2. Lesion detection

Detection of abnormalities (including tumors and other suspicious growths) in medical images is a common but costly and time‐consuming part of the daily routine of physicians, especially radiologists and pathologists. Because the location is often not known a priori, the physician must search across the 2D image or 3D volume for deviations from the surrounding tissue and then determine whether each deviation is an abnormality that requires follow‐up or something that can be dismissed from further investigation. This task is often difficult and error‐prone, either because of the vast amount of data that must be searched (e.g., volumetric data or whole‐slide images) or because abnormal tissue can look visually similar to normal tissue (e.g., low‐contrast lesions in mammography). Automated computer detection algorithms have therefore long been of great interest to the research community because of their potential to reduce reading costs, shorten reading times and thereby streamline the clinical workflow, and provide quality care to people in remote areas with limited access to specialists.

Traditional lesion detection systems often consist of long processing pipelines with many steps.158, 159 Typical steps include preprocessing the input data (e.g., rescaling the pixel values or removing irrelevant parts of the image), identifying candidate locations that resemble the object of interest using rule‐based methods, extracting handcrafted features, and classifying the candidates with a classifier such as an SVM or a random forest (RF). In contrast, DL approaches to lesion detection learn features and classification directly from the data and can therefore avoid much of this time‐consuming, stage‐by‐stage pipeline design. Table 4 lists studies that used DL for lesion detection, along with some details of the DL architectures.

Table 4.

Lesion detection using DL

| Detection organ | Lesion type | Dataset (train/test) | Network input | Network architecture basis |
|---|---|---|---|---|
| Lung and thorax | Pulmonary nodule | 888 patients fivefold CV168 | Image patch168, 169, 173, 174, 175, 176, 177; whole image178, 179, 180 | CNN168, 169, 173, 175, 176, 177, 178, 179, 180; SDAE/CNN174 |
| | | 888 patients tenfold CV169 | | |
| | | 303 patients tenfold CV173 | | |
| | | 2400 images tenfold CV174 | | |
| | | 104 patients fivefold CV175 | | |
| | | 1006 patients tenfold CV176 | | |
| | Multiple pathologies | 35,038/2,443 radiographs178 | | |
| | | 76,000/22,000 chest x rays180 | | |
| | | ImageNet pretraining, 433 patients LOO CV181 | | |
| | Tuberculosis | 685/151 chest radiographs179 | | |
| Brain | Cerebral aneurysm | 300/100 magnetic resonance angiography images182 | Image patch182; whole image170, 172 | CNN182; FCN/CNN170, 172 |
| | Cerebral microbleed | 230/50 brain MR scans172 | | |
| | Lacune | 868/111 brain MR scans170 | | |
| Breast | Solid cancer | 40,000/18,000 mammographic images64 | Image patch17, 64, 183; whole image66, 161 | CNN17, 64, 66, 183; FCN/CNN161 |
| | | 161/160 breast MR images183 | | |
| | Mass | Pretraining on ~2300 mammography images, 277/47 DBT cases17 | | |
| | | ImageNet pretraining, 306/163 breast ultrasound images161 | | |
| | Malignant mass and microcalcification | ImageNet pretraining, 3476/115 FFDM images66 | | |
| Colon | Polyp | 394/792 CT colonography cases166 | Whole image184; image patch166, 185 | CNN166, 184, 185 |
| | | 101 CT colonography cases; tenfold CV185 | | |
| | Colitis | ImageNet pretraining, 160 abdominal CT cases; fourfold CV184 | | |
| Multiple | Lymph node | ImageNet pretraining, 176 CT cases; threefold CV160 | Image patch160, 166, 186 | CNN160, 166, 186 |
| | | 69/17 abdominal CT cases166 | | |
| | | 176 abdominal CT cases; threefold CV186 | | |
| Liver | Tumor | NA/37 (ref. 187) | Image patch187 | CNN187 |
| Thyroid | Nodule | 21,523 ultrasound images; tenfold CV188 | Image patch188 | CNN188 |
| Prostate | Cancer | 196 MR cases; tenfold CV189 | Whole image189 | FCN189 |
| Pericardium | Effusion | 20/5 CT cases190 | Whole image190 | FCN190 |
| Vascular | Calcification | ImageNet pretraining; 84/28 (ref. 191) | Image patch191 | FCN191 |

SDAE, stacked denoising autoencoder; FCN, fully convolutional network; DBT, digital breast tomosynthesis; FFDM, full‐field digital mammography; LOO, leave‐one‐out; CV, cross‐validation.

Many detection papers use transfer learning with architectures from computer vision.160 Examples include lesion detection in breast ultrasound,161 detection of bowel obstructions in radiography,162 and detection of the third lumbar vertebra slice in CT scans.163 CNN‐based lesion detection is not limited to architectures taken directly from computer vision, however; some applications use custom architectures.164, 165, 166, 167

Most early applications used 2D CNNs, even for 3D data. Because of prior experience with 2D architectures, the limited memory of GPUs, and the larger number of training samples needed to fit the many more parameters of a 3D architecture, many DL systems analyzed CT and MRI datasets with multiview 2D CNNs, an approach referred to as 2.5D analysis. In these methods, orthogonal views of a lesion, or multiple views at different angles through the lesion, were used to train an ensemble of 2D CNNs whose scores were merged to obtain the final classification score.166, 168 More recently, 3D CNNs that use 3D convolution kernels have been successfully replacing 2D CNNs for volumetric data. A common way to deal with the small number of available cases is to train 3D CNNs on 3D patches extracted from each case, so that each case yields hundreds or thousands of patches. Combined with various data augmentation methods, this can generate enough samples to train 3D CNNs. Examples include the detection of pulmonary nodules in chest CT169 and of lacunar strokes in brain MRI.170 Because volumetric data are large, applying the CNN in a sliding‐window fashion across the entire volume would be very inefficient. Instead, once the model is trained on patches, the entire network can be converted into a fully convolutional network171 that acts as a single convolution kernel and can be applied efficiently to an input of arbitrary size. Since convolution operations are highly optimized, this allows a 3D CNN to process an entire volume quickly.172
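The equivalence behind the fully convolutional conversion can be checked numerically for the simplest case, a linear scorer applied to k × k × k patches. The sketch below (random stand‐in data and weights, with SciPy assumed to be available) scores every patch in a loop and then obtains the same scores with a single 3D cross‐correlation. In a real CNN, the same reasoning is applied layer by layer, with fully connected layers rewritten as convolutions.

```python
import numpy as np
from scipy.signal import correlate

rng = np.random.default_rng(0)
volume = rng.normal(size=(12, 12, 12))   # stand-in for a CT/MR volume
k = 5                                    # patch edge length in voxels
w = rng.normal(size=(k, k, k))           # weights of a hypothetical linear patch scorer
b = 0.1                                  # its bias

# Sliding-window evaluation: score every k x k x k patch separately.
out_shape = tuple(n - k + 1 for n in volume.shape)
slow = np.empty(out_shape)
for i in range(out_shape[0]):
    for j in range(out_shape[1]):
        for m in range(out_shape[2]):
            patch = volume[i:i + k, j:j + k, m:m + k]
            slow[i, j, m] = np.sum(patch * w) + b

# Fully convolutional evaluation: the same weights applied once as a 3D kernel.
fast = correlate(volume, w, mode="valid") + b

print(fast.shape, np.allclose(slow, fast))  # (8, 8, 8) True
```

The loop recomputes overlapping products for every window, whereas the single correlation call can use optimized (or FFT‐based) routines over the whole volume.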

2.C. Characterization

Over the past decades, machine learning has been used to characterize diseases, leading to computer‐aided diagnosis (CADx) systems. Radiomics, the –omics of images, extends CADx to other tasks such as prognosis and cancer subtyping. Radiomic features can be described as (a) “hand‐crafted”/“engineered”/“intuitive” features or (b) deep‐learned features. How a disease is characterized depends on the specific disease and the clinical question. Handcrafted radiomic features are devised from the imaging characteristics that radiologists typically use when interpreting a medical image, such as tumor size, shape, texture, and/or kinetics (for dynamic contrast‐enhanced imaging). Several review papers have covered these handcrafted radiomic features, which are combined with classifiers to estimate, for example, the likelihood of malignancy, tumor aggressiveness, or the risk of developing cancer in the future.158, 159

DL characterization methods may take as input a region of the image around the potential disease site, such as a region of interest (ROI) around a suspected lesion. How that ROI is determined will likely affect the training and performance of the DL system. Considering how a radiologist is trained during residency can help clarify how a DL system needs to be trained. For example, an ROI cropped tightly around a tumor gives a DL system different information than an ROI much larger than the tumor, because the larger ROI also includes more of the surrounding anatomical background.
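The difference between a tight and a generous ROI comes down to how much margin is added around the lesion. The sketch below assumes that a binary lesion mask is available; `margin` is a hypothetical parameter, and in practice the crop would usually be resized to the fixed input size of the network.

```python
import numpy as np


def crop_roi(image, mask, margin=0):
    """Crop the bounding box of a binary lesion mask, enlarged by `margin`
    pixels on every side and clipped at the image border."""
    rows, cols = np.nonzero(mask)
    if rows.size == 0:
        raise ValueError("mask is empty")
    r0 = max(int(rows.min()) - margin, 0)
    r1 = min(int(rows.max()) + margin + 1, image.shape[0])
    c0 = max(int(cols.min()) - margin, 0)
    c1 = min(int(cols.max()) + margin + 1, image.shape[1])
    return image[r0:r1, c0:c1]


image = np.arange(100).reshape(10, 10)
mask = np.zeros((10, 10), dtype=bool)
mask[4:6, 4:7] = True                        # a 2 x 3 pixel "lesion"
tight = crop_roi(image, mask)                # lesion only
context = crop_roi(image, mask, margin=2)    # lesion plus surrounding tissue
print(tight.shape, context.shape)            # (2, 3) (6, 7)
```

After resizing to a common input size, the lesion occupies most of the tight crop but only part of the context crop, so the two choices change both the apparent lesion scale and the amount of background the network sees.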

Although more DL imaging papers are published each year, only a few methods can yet distinguish among the vast range of radiological presentations across subtle disease states. Table 5 lists published DL characterization studies in radiological imaging.

Table 5.

Characterization using DL

| Anatomic site | Object or task | Network input | Network architecture | Dataset (train/test) |
|---|---|---|---|---|
| Breast | Cancer risk assessment192 | Mammograms | Pretrained AlexNet followed by SVM | 456 patients LOO CV |
| | Cancer risk assessment193 | Mammograms | Modified AlexNet | 14,000/1850 images randomly selected 20 times |
| | Cancer risk assessment194 | Mammograms | Custom DCNN | 478/183 mammograms |
| | Cancer risk assessment195 | Mammograms | Fine‐tuned pretrained VGG16Net | 513/91 women |
| | Diagnosis196 | Mammograms | Pretrained AlexNet followed by SVM | 607 cases fivefold CV |
| | Diagnosis197 | Mammograms, MRI, US | Pretrained VGG19Net followed by SVM | 690 MRI, 245 FFDM, 1125 US; LOO CV |
| | Diagnosis198 | Breast tomosynthesis | Pretrained AlexNet followed by evolutionary pruning | 2682/89 masses |
| | Diagnosis199 | Mammograms | Pretrained AlexNet | 1545/909 masses |
| | Diagnosis200 | MRI MIP | Pretrained VGG19Net followed by SVM | 690 cases fivefold CV |
| | Diagnosis201 | DCE‐MRI | LSTM | 562/141 cases |
| | Solitary cyst diagnosis202 | Mammograms | Modified VGG Net | 1600 lesions eightfold CV |
| | Prognosis203 | Mammograms | VGG16Net followed by logistic regression classifier | 79/20 cases randomly selected 100 times |
| Chest (lung) | Pulmonary nodule classification204 | CT patches | ResNet | 665/166 nodules |
| | Tissue classification205 | CT patches | Restricted Boltzmann machines | Training 50/100/150/200; testing 20,000/1000/20,000/20,000 image patches |
| | Interstitial disease206 | CT patches | Modified AlexNet | 100/20 patients |
| | Interstitial disease207 | CT patches | Modified VGG | Public: 71/23 scans; local: 20/6 scans |
| | Interstitial disease208 | CT patches | Custom | 480/(120 and 240) |
| | Interstitial disease209 | CT patches | Custom | 36,106/1050 patches |
| | Pulmonary nodule staging210 | CT | DFCNet | 11/7 patients |
| | Prognosis211 | CT | Custom | 7983/(1000 and 2164) subjects |
| Chest (cardiac) | Calcium scoring212 | CT | Custom | 1181/506 scans |
| | Ventricle quantification213 | MR | Custom (CNN + RNN + Bayesian multitask) | 145 cases, fivefold CV |
| Abdomen | Tissue classification214 | Ultrasound | CaffeNet and VGGNet | 136/49 studies |
| | Liver tumor classification215 | Portal‐phase 2D CT | GAN | 182 cases, threefold CV |
| | Liver fibrosis216 | DCE‐CT | Custom CNN | 460/100 scans |
| | Fatty liver disease217 | US | Invariant scattering convolution network | 650 patients, five‐ and tenfold CV |
| Brain | Survival218 | Multiparametric MR | Transfer learning as feature extractor, CNN‐S | 75/37 patients |
| Skeletal | Maturity219 | Hand radiographs | Deep residual network | 14,036/(200 and 913) examinations |

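LOO, leave‐one‐out; CV, cross‐validation; DCE, dynamic contrast‐enhanced; DCNN, deep convolutional neural network; FFDM, full‐field digital mammography; GAN, generative adversarial network; LSTM, long short‐term memory; MIP, maximum intensity projection; RNN, recurrent neural network; SVM, support vector machine; US, ultrasound.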

2.C.1. Lesion characterization

When it comes to computer algorithms for specific radiological interpretation tasks, there is no one‐size‐fits‐all solution, for either conventional radiomic machine learning methods or DL approaches. Each computerized image analysis method requires customization specific to the task as well as to the imaging modality.

Lesion characterization is mainly conducted with conventional CAD/radiomics algorithms, especially when the goal is to characterize (i.e., describe) a lesion rather than to perform further machine learning for disease assessment. For example, lung nodules and their changes over time are characterized to track nodule growth and thereby reduce false‐positive diagnoses of lung cancer.

Other examples of computer characterization of tumors come from imaging genomics research. Here, radiomic features of tumors are used as image‐based phenotypes in correlation and association analyses with genomics and histopathology. A well‐documented, multi‐institutional collaboration on this topic was conducted through the TCGA/TCIA Breast Phenotype Group.220, 221, 222, 223, 224

DL methods used as feature extractors lend themselves to tumor characterization; however, the extracted descriptors (e.g., CNN‐based features) are not intuitive. As with “conventional” methods that use handcrafted features, DL‐extracted features can be trained, in a supervised manner, to characterize a tumor relative to a known trait such as receptor status, and the resulting output can then be used in imaging genomics discovery studies.

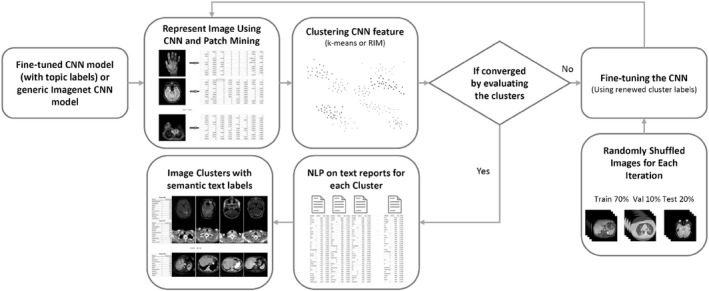

Additional preprocessing and data‐use strategies can further improve characterization, as in earlier work that used unlabeled data together with conventional features to enhance machine learning training.225, 226 In this approach, the system learns aspects of the data structure without knowledge of the disease state, reserving the labeled information for the final classification step.
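The two‐step idea can be sketched with simple tools. The cited studies used their own unsupervised methods; the sketch below swaps in principal component analysis (PCA), fitted on labeled and unlabeled cases together, followed by a nearest‐centroid classifier trained on the few labeled cases. The feature vectors are simulated, and all names are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
N_FEATURES = 20
DIRECTION = np.ones(N_FEATURES) / np.sqrt(N_FEATURES)  # hypothetical axis separating the classes


def simulate(n):
    """Toy feature vectors: isotropic noise plus a shift for class-1 cases."""
    y = np.arange(n) % 2
    x = rng.normal(size=(n, N_FEATURES)) + 3.0 * y[:, None] * DIRECTION
    return x, y


def fit_pca(x, n_components):
    """Mean and leading principal axes (as rows) of the data matrix x."""
    mean = x.mean(axis=0)
    _, _, vt = np.linalg.svd(x - mean, full_matrices=False)
    return mean, vt[:n_components]


unlabeled, _ = simulate(500)   # disease state treated as unknown
labeled, labels = simulate(20)

# Step 1: learn the structure of the data from all cases, without labels.
mean, axes = fit_pca(np.vstack([unlabeled, labeled]), n_components=3)

# Step 2: use the labels only to fit the final classifier (nearest class centroid).
z = (labeled - mean) @ axes.T
centroids = np.stack([z[labels == c].mean(axis=0) for c in (0, 1)])


def predict(x):
    zx = (x - mean) @ axes.T
    dists = np.linalg.norm(zx[:, None, :] - centroids[None, :, :], axis=2)
    return dists.argmin(axis=1)


test_x, test_y = simulate(200)
accuracy = np.mean(predict(test_x) == test_y)
print(f"held-out accuracy: {accuracy:.2f}")
```

The 500 unlabeled cases determine which directions in feature space carry most of the variation; the 20 labeled cases are needed only to place the two class centroids in that reduced space.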

2.C.2. Tissue characterization

Tissue characterization is sought when specific tumor regions are not relevant. Here, we focus on the analysis of nondiseased tissue to predict a future disease state (such as texture analysis on mammograms in order to assess the parenchyma with the goal to predict breast cancer risk159) and the characterization of tissue that includes diffuse disease, such as in various types of interstitial lung disease and liver disease.227, 228

In breast cancer risk assessment, computer‐extracted characteristics of breast density and/or breast parenchymal patterns are computed and related to breast cancer risk factors. Using radiomic texture analysis, Li et al. found that women at high risk of breast cancer have dense breasts with parenchymal patterns that are coarse and low in contrast.229 DL is now being used to assess breast density.194, 195 Parenchymal characterization is also being conducted with DL, in which the CNN relates parenchymal patterns to groups of women defined by surrogate markers of risk. For example, Li et al. assessed how well DL distinguishes women at normal risk of breast cancer from those at high risk based on their BRCA1/2 status.192

Lung tissue has been analyzed with conventional texture analysis and DL for a variety of diseases. Characterization of the lung pattern lends itself to DL because patches of lung tissue, which the radiologist's eye–brain system commonly interprets, may be informative of the underlying disease. Various investigators have developed CNNs, including ones that classify interstitial lung diseases characterized by inflammation of the lung tissue.207, 208, 209 The patterns characterized can include healthy tissue, ground glass opacity, micronodules, consolidation, reticulation, and honeycombing.179

DCNNs have also been applied to liver tissue assessment, for example, to stage liver fibrosis on MRI (Yasaka et al.216) and to characterize fatty liver disease on ultrasound (Bharath et al.217).

2.C.3. Diagnosis

Computer‐aided diagnosis (CADx) involves the characterization of a region or tumor, initially indicated by either a radiologist or a computer, after which the computer characterizes the suspicious region or lesion and/or estimates the likelihood of being diseased (e.g., cancerous) or nondiseased (e.g., non‐cancerous), leaving the patient management to the physician.158, 159 Note that CADx is not a localization task but rather a characterization/classification task. The subtle difference between this section and the preceding two sections is that here the output of the machine learning system is related to the likelihood of disease and not just a characteristic feature of the disease presentation.

Many review papers have been written over the past two decades on CADx, radiomic features, and machine learning,158, 159 and thus, details will not be presented in this paper.

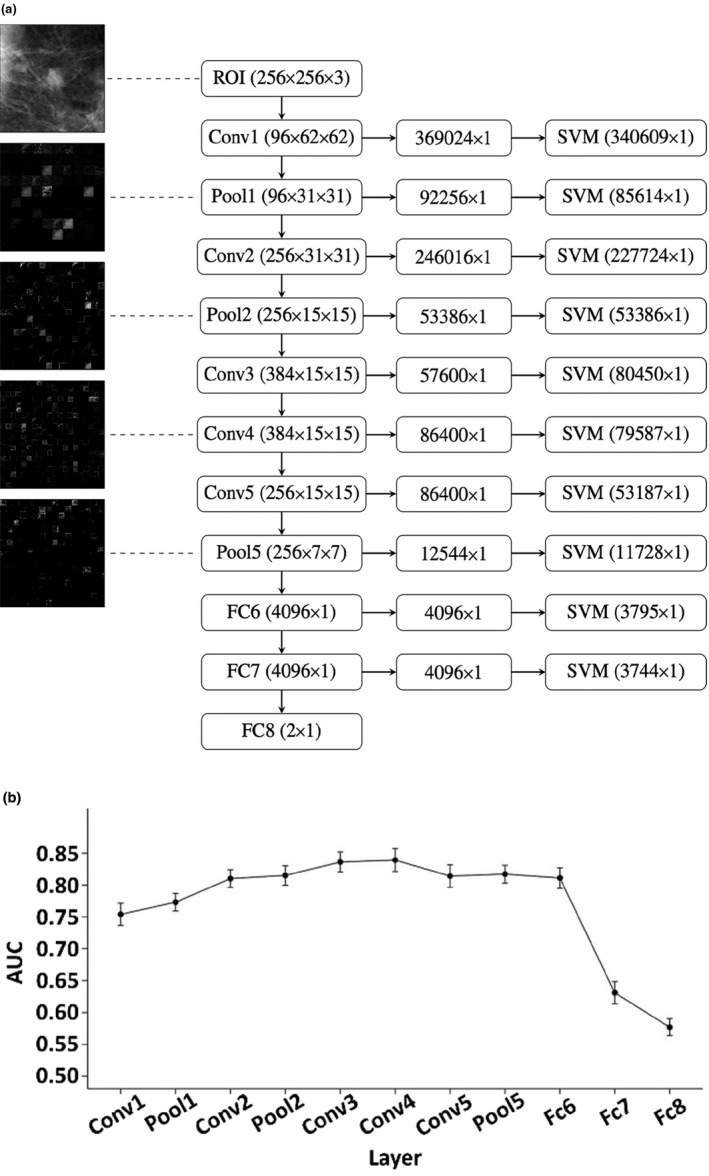

CADx of breast cancer is an active area of DL research. Training CNNs “from scratch” is often not possible for CAD and other medical image interpretation tasks, so methods that use CNNs trained on other data (transfer learning) are considered. Given the initially limited datasets and the variation in tumor presentations, investigators explored transfer learning to extract tumor characteristics with CNNs trained on nonmedical tasks. The outputs of the network layers can be treated as characteristic features of the lesion and used as input to classifiers such as linear discriminant analysis and SVMs. Figure 3(a) shows an example in which AlexNet is used as a feature extractor for an SVM, and Fig. 3(b) shows the performance of the SVM based on features from each layer of AlexNet.
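The feature‐extraction pipeline can be sketched without a deep learning framework. In the sketch below, eight fixed random 3 × 3 filters stand in for one layer of a pretrained network such as AlexNet (an assumption; real use would load pretrained weights), each response map is rectified and average‐pooled into one feature, and only a two‐class Fisher linear discriminant is trained. The ROIs are simulated.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

rng = np.random.default_rng(0)
# Placeholder for one pretrained convolutional layer: 8 fixed 3x3 filters.
# In practice these weights would come from an ImageNet-trained network.
FILTERS = rng.normal(size=(8, 3, 3))


def frozen_features(roi, filters=FILTERS):
    """Correlate the ROI with fixed filters, apply ReLU, and average-pool each map."""
    windows = sliding_window_view(roi, filters.shape[1:])      # (H', W', 3, 3)
    maps = np.einsum("ijkl,fkl->fij", windows, filters)        # (n_filters, H', W')
    return np.maximum(maps, 0.0).mean(axis=(1, 2))             # one feature per filter


def fit_lda(x, y):
    """Two-class Fisher discriminant: w = Sw^-1 (m1 - m0), threshold at the midpoint."""
    m0, m1 = x[y == 0].mean(axis=0), x[y == 1].mean(axis=0)
    sw = np.cov(x[y == 0], rowvar=False) + np.cov(x[y == 1], rowvar=False)
    w = np.linalg.solve(sw + 1e-6 * np.eye(len(m0)), m1 - m0)
    return w, w @ (m0 + m1) / 2


def make_rois(n):
    """Toy 16x16 ROIs; class-1 'lesions' are brighter on average."""
    y = np.arange(n) % 2
    rois = rng.normal(size=(n, 16, 16)) + 1.0 * y[:, None, None]
    return rois, y


train_rois, train_y = make_rois(60)
x_train = np.stack([frozen_features(r) for r in train_rois])
w, threshold = fit_lda(x_train, train_y)      # only the classifier is trained

test_rois, test_y = make_rois(40)
x_test = np.stack([frozen_features(r) for r in test_rois])
accuracy = np.mean((x_test @ w > threshold).astype(int) == test_y)
print(f"test accuracy: {accuracy:.2f}")
```

Because the filters are never updated, the only trainable parameters are the discriminant weights, which is what makes this approach workable with small labeled datasets.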

Figure 3.

Use of CNN as a feature extractor.196 (a) Each ROI is sent through AlexNet and the outputs from each layer are preprocessed to be used as sets of features for an SVM. The filtered image outputs from some of the layers can be seen in the left column. The numbers in parentheses for the center column denote the dimensionality of the outputs from each layer. The numbers in parentheses for the right column denote the length of the feature vector per ROI used as an input for the SVM after zero‐variance removal. (b) Performance in terms of area under the receiver operating characteristic curve for classifiers based on features from each layer of AlexNet in the task of distinguishing between malignant and benign breast tumors.

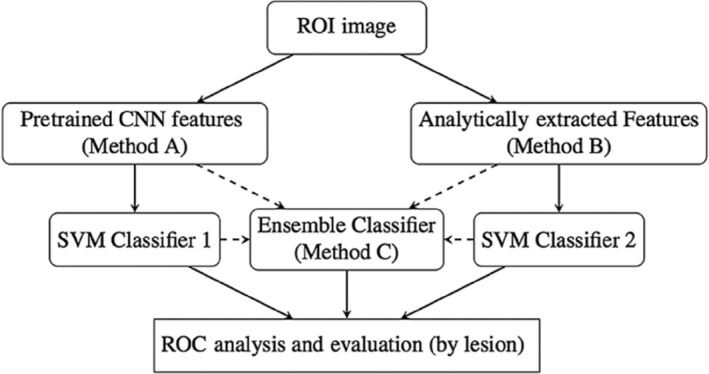

Researchers have found that conventional radiomics CADx and CNN‐based CADx yield similar levels of diagnostic performance in distinguishing between malignant and benign breast lesions, and that combining the two via a deep feature fusion methodology gave a statistically significant improvement in performance.196, 197 Figure 4 shows one possible method for combining CNN‐extracted and conventional radiomic features.

Figure 4.

CNN‐extracted and conventional features can be combined in a number of ways, including a traditional classifier such as an SVM.196
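One simple way to combine the two systems is at the score level: standardize each classifier's output and average them. The sketch below is an assumption about the fusion rule, not necessarily the cited method, and it uses simulated outputs whose errors are independent; in practice CNN and radiomic features are correlated, and any gain depends on how complementary they are. The AUC is computed as the Mann‐Whitney statistic.

```python
import numpy as np


def standardize(scores):
    """Z-score a classifier's outputs so that two classifiers share a common scale.
    (Here the statistics come from the scores themselves; in practice they would
    be estimated on training data.)"""
    scores = np.asarray(scores, float)
    return (scores - scores.mean()) / scores.std()


def auc(scores, labels):
    """Empirical AUC (Mann-Whitney statistic), counting ties as one half."""
    scores, labels = np.asarray(scores, float), np.asarray(labels)
    diff = scores[labels == 1][:, None] - scores[labels == 0][None, :]
    return float(np.mean((diff > 0) + 0.5 * (diff == 0)))


rng = np.random.default_rng(1)
labels = np.repeat([0, 1], 200)
# Toy outputs of two classifiers whose errors are assumed independent.
cnn_scores = labels + rng.normal(size=labels.size)
radiomic_scores = labels + rng.normal(size=labels.size)

fused = 0.5 * (standardize(cnn_scores) + standardize(radiomic_scores))  # score-level fusion
print(auc(cnn_scores, labels), auc(radiomic_scores, labels), auc(fused, labels))
```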

To improve CNN performance on dynamic contrast‐enhanced (DCE) MRI under limited‐dataset constraints, investigators have varied the types of images input to the CNN. For example, instead of replicating a single image region across the three RGB channels of VGG19Net, investigators have input the temporal images obtained from DCE‐MRI, assigning the precontrast, first postcontrast, and second postcontrast MR images to the R, G, and B channels, respectively. In addition, to exploit the four‐dimensional nature of DCE‐MRI (3D plus time), Antropova et al. have input maximum intensity projection (MIP) images to the CNN.200 Incorporating temporal information has also led to the use of recurrent neural networks (RNNs), such as long short‐term memory (LSTM) networks.201, 230
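Both input variants amount to simple array operations. In the sketch below, the channel order follows the text (precontrast, first postcontrast, second postcontrast); the projection axis and the use of a (slices, rows, columns) volume are assumptions, and whether the MIP is taken from original or subtraction images depends on the study.

```python
import numpy as np


def dce_to_rgb(pre, post1, post2):
    """Stack three DCE-MRI time points of one 2D ROI into a 3-channel image
    (channels last), instead of copying one gray-scale image three times."""
    return np.stack([pre, post1, post2], axis=-1)


def mip(volume, axis=0):
    """Maximum intensity projection of a 3D volume along one axis."""
    return np.asarray(volume).max(axis=axis)


rng = np.random.default_rng(0)
pre, post1, post2 = (rng.random((64, 64)) for _ in range(3))
print(dce_to_rgb(pre, post1, post2).shape)  # (64, 64, 3)

volume = np.zeros((4, 3, 3))   # hypothetical (slices, rows, columns) volume
volume[2, 1, 1] = 5.0          # one enhancing voxel on slice 2
print(mip(volume))             # 5.0 at the center, zeros elsewhere
```

The RGB stacking keeps the input shape that an ImageNet‐pretrained network expects while giving each channel different (temporal) information, and the MIP collapses a 3D volume into a single 2D image that such a network can accept.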

Instead of using transfer learning only for feature extraction, investigators have also used it for fine‐tuning, either (a) by freezing the earlier layers of a pretrained CNN and training the later layers or (b) by training on one modality, such as digitized screen/film mammography (dSFM), for use on a related modality, such as full‐field digital mammography (FFDM). Samala et al.199 showed that the latter approach is useful for training CNN‐based CADx for lesion diagnosis on FFDMs.

Investigations of DL for CADx are continuing for other cancers, such as lung cancer, and for other disease types, where similar methods can be used.204, 205, 206, 207, 208, 209, 210, 211, 212, 213, 214, 215, 216, 217, 218, 219 Comparisons with conventional radiomics‐based CADx are also being reported; such comparisons are potentially useful both for understanding the CNN outputs and for providing additional decision support.

2.C.4. Prognosis and staging

Once a cancer is identified, further workup through biopsies provides information on stage, molecular subtype, and/or genomics, which informs prognosis and potential treatment options. Cancers are spatially heterogeneous, and investigators are therefore interested in whether imaging can capture that spatial variation. Currently, many imaging biomarkers of cancerous tumors include only size and simple enhancement measures (if dynamic imaging is employed), so there is interest in using radiomic features to expand the knowledge that can be obtained from images. Various investigators have used radiomics and machine learning to assess the stage and prognosis of cancerous tumors,220, 231 and these analyses are now being extended with DL. Note that when using DL to assess prognosis, one can analyze the tumor on medical images, such as MRI or ultrasound, or on pathological images. In the evaluation, one also needs to choose the appropriate comparison: a radiologist, a pathologist, or some other histopathological/genomic test.

The goal is to better understand the imaging presentation of cancer, that is, to obtain prognostic biomarkers from image‐based phenotypes, including size, shape, margin morphology, enhancement texture, kinetics, and variance kinetic phenotypes. For example, enhancement texture phenotypes can characterize the texture pattern of contrast‐enhanced tumors on DCE‐MRI through an analysis of the first postcontrast images, and thus quantitatively characterize the heterogeneity of contrast uptake within a breast tumor.220 The larger the enhancement texture entropy, the more heterogeneous the vascular uptake pattern within the tumor, which potentially reflects the heterogeneous nature of angiogenesis and treatment susceptibility and serves as a location‐specific “virtual digital biopsy.” Understanding the relationships between image‐based phenotypes and the corresponding biopsy information could lead to discoveries useful for assessing images obtained during screening and during treatment follow‐up, that is, when an actual biopsy is not practical.
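Texture entropy of this kind is commonly computed from a gray‐level co‐occurrence matrix (GLCM). The sketch below computes a 2D GLCM entropy inside a tumor mask; the number of gray levels, the single horizontal offset, and the 2D (rather than 3D) analysis are assumptions, and published enhancement texture features may be defined differently.

```python
import numpy as np


def glcm_entropy(image, mask, levels=8, offset=(0, 1)):
    """Entropy (bits) of a gray-level co-occurrence matrix restricted to a mask."""
    image = np.asarray(image, float)
    mask = np.asarray(mask, bool)
    vals = image[mask]
    lo, hi = vals.min(), vals.max()
    if hi == lo:
        return 0.0                              # perfectly homogeneous uptake
    # Quantize intensities (scaled by the range inside the mask) into `levels` bins.
    q = np.clip(((image - lo) / (hi - lo) * levels).astype(int), 0, levels - 1)
    dr, dc = offset
    rows, cols = image.shape
    glcm = np.zeros((levels, levels))
    for r in range(max(0, -dr), min(rows, rows - dr)):
        for c in range(max(0, -dc), min(cols, cols - dc)):
            if mask[r, c] and mask[r + dr, c + dc]:   # count pairs fully inside the tumor
                glcm[q[r, c], q[r + dr, c + dc]] += 1
    if glcm.sum() == 0:
        return 0.0
    p = glcm[glcm > 0] / glcm.sum()
    return float(-(p * np.log2(p)).sum())


mask = np.ones((4, 4), bool)
uniform = np.full((4, 4), 100.0)
checker = np.indices((4, 4)).sum(axis=0) % 2 * 100.0
print(glcm_entropy(uniform, mask), glcm_entropy(checker, mask))  # 0.0 1.0
```

A homogeneous tumor produces a single co‐occurring pair of gray levels and zero entropy, whereas more varied uptake spreads the counts over more cells of the matrix and raises the entropy.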

Shi et al.203 demonstrated the prediction of prognostic markers with DL on mammography, distinguishing ductal carcinoma in situ (DCIS) with occult invasion from pure DCIS. Masood et al. are investigating staging on thoracic CTs with DL by relating the CNN output to metastasis information for pulmonary nodules.210 In addition, Gonzalez et al. evaluated DL on thoracic CTs for the detection and staging of chronic obstructive pulmonary disease and acute respiratory disease.211 While DL for the evaluation of thoracic CTs is promising, further development is needed before it reaches clinical applicability.

2.C.5. Quantification

Using DL for quantification requires a CNN output that correlates significantly with a known quantitative medical measurement. For example, DL has been used for automatic calcium scoring in low‐dose CTs by Lessmann et al.212 and for cardiac left ventricle quantification by Xue et al.213 As in cancer workup, DL in cardiovascular imaging is expected to augment the clinical assessment of cardiac defects and function or to uncover new clinical insights.232 Larson et al. used DL to assess skeletal maturity on pediatric hand radiographs, with performance rivaling that of an expert radiologist.219 DL has also been used to predict growth rates of pancreatic neuroendocrine tumors on PET‐CT scans.233
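To connect a segmentation‐style CNN output with a clinical quantity, the sketch below computes an Agatston‐type calcium score from a calcium mask that a hypothetical CNN has produced. It uses the standard density weights (1 for 130–199 HU, 2 for 200–299 HU, 3 for 300–399 HU, 4 for 400 HU and above); the 1 mm² minimum lesion area is a commonly used but assumed choice, and the original definition assumes 3 mm slices, which low‐dose chest CT protocols do not always use. SciPy is assumed to be available.

```python
import numpy as np
from scipy import ndimage


def density_weight(peak_hu):
    """Agatston weighting factor from a lesion's peak attenuation (HU)."""
    if peak_hu >= 400:
        return 4
    if peak_hu >= 300:
        return 3
    if peak_hu >= 200:
        return 2
    if peak_hu >= 130:
        return 1
    return 0


def agatston_score(slices_hu, calcium_mask, pixel_area_mm2, min_area_mm2=1.0):
    """Sum of (lesion area in mm^2) x (density weight) over 2D lesions in every axial slice.

    slices_hu: (n_slices, H, W) CT values in HU.
    calcium_mask: same shape, True where a (hypothetical) CNN labeled coronary calcium.
    """
    total = 0.0
    for hu, mask in zip(np.asarray(slices_hu, float), np.asarray(calcium_mask, bool)):
        lesions, n = ndimage.label(mask & (hu >= 130))    # 2D connected components
        for k in range(1, n + 1):
            region = lesions == k
            area = region.sum() * pixel_area_mm2
            if area >= min_area_mm2:                       # ignore tiny, noise-like specks
                total += area * density_weight(hu[region].max())
    return float(total)


hu = np.zeros((1, 8, 8))
mask = np.zeros((1, 8, 8), bool)
hu[0, 1:3, 1:3] = [[150, 250], [180, 160]]   # 4-pixel lesion, peak 250 HU -> weight 2
mask[0, 1:3, 1:3] = True
hu[0, 6, 5:7] = 500                          # 2-pixel lesion: 0.5 mm^2, below min area
mask[0, 6, 5:7] = True
print(agatston_score(hu, mask, pixel_area_mm2=0.25))  # 2.0
```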

2.D. Processing and reconstruction

In the previous parts of this section, we focused on applications in which image pixels or ROIs are classified into multiple classes (e.g., segmentation, lesion detection, and characterization), the subject is classified into multiple classes (e.g., prognosis and staging), or a feature in the image (or the ROI) is quantified. In this part, we focus on applications in which the output of the machine learning algorithm is also an image (or a transformation) that potentially has a quantifiable advantage over no processing or traditional processing methods. Table 6 lists studies that used DL for image processing or reconstruction and that produced an image as the output of the DL algorithm.
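Several performance measures listed in Table 6, such as RMSE and PSNR, compare an output image with a reference image. A minimal sketch follows; the choice of `data_range` (e.g., the full dynamic range or a display window for CT) is an assumption that strongly affects PSNR values, so values from different studies are not directly comparable. SSIM is more involved; scikit‐image provides it as `skimage.metrics.structural_similarity`.

```python
import numpy as np


def rmse(reference, test):
    """Root-mean-square error between two images of the same shape."""
    diff = np.asarray(reference, float) - np.asarray(test, float)
    return float(np.sqrt(np.mean(diff ** 2)))


def psnr(reference, test, data_range):
    """Peak signal-to-noise ratio in dB: 10 * log10(data_range**2 / MSE)."""
    mse = rmse(reference, test) ** 2
    if mse == 0:
        return float("inf")
    return float(10 * np.log10(data_range ** 2 / mse))


reference = np.zeros((4, 4))
denoised = reference + 0.1       # uniform error of 0.1
print(round(rmse(reference, denoised), 6), round(psnr(reference, denoised, data_range=1.0), 6))  # 0.1 20.0
```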

Table 6.

Image processing and reconstruction with DL

| Task | Imaging modality | Performance measure | Network output | Network architecture basis |
|---|---|---|---|---|
| Filtering | CT,234 chest x‐ray,235 x‐ray fluoroscopy236 | MSE,234 CAD performance,234 PSNR,235, 236 SSIM,235, 236 runtime236 | Likelihood of nodule,234 bone image,235 CLAHE filtering236 | Custom CNN,234, 235 residual CNN,236 residual AE236 |
| Noise reduction | CT,237, 238, 239, 240 PET241 | PSNR,237, 238, 239, 240, 241 RMSE,237, 238 SSIM,237, 238, 240 NRMSE,239 NMSE241 | Noise‐reduced image237, 238, 239, 240, 241 | Custom CNN,237, 238, 239 residual AE,237, 238 concatenated CNNs,241 U‐net240 |
| Artifact reduction | CT,242, 243 MRI244 | SNR,242, 243 NMSE,244 qualitative,243 runtime244 | Sparse‐view recon,242, 244 metal‐artifact‐reduced image243 | U‐net,242, 244 custom CNN243 |
| Reconstruction | MRI245, 246, 247, 248 | RMSE,245, 248 runtime,245 MSE,246, 247 NRMSE,246 SSIM,246 SNR248 | Image of scalar measures,245 MR reconstruction246, 247, 248 | Custom CNN,245, 248 custom NN,246 cascade of CNNs247 |
| Registration | MRI,249, 250, 251, 252 x‐ray to 3D253, 254 | DICE,249, 250 runtime,250 target overlap,251 SNR,252 TRE,254 image and vessel sharpness,252 mTREproj253 | Deformable registration,249, 250, 251, 252 rigid‐body 3D transformation253, 254 | Custom CNN,249, 251, 252, 253, 254 SAE250 |
| Synthesis of one modality from another | CT from MRI,255, 256, 257, 258, 259 MRI from PET,260 PET from CT261 | MAE,255, 256 PSNR,255, 259 ME,256 MSE,256 Pearson correlation,256 PET image quality,257, 258 SSIM,260 SUVR of MR‐less methods,260 tumor detection by radiologist261 | Synthetic CT,255, 256, 257, 258 synthetic MRI,260 synthetic PET261 | Custom 3D FCN,255 GAN,259, 260, 261 U‐net,256, 257 AE258 |
| Image quality assessment | US,262 MRI265 | AUC,262, 264 IOU,262 correlation between TRE estimation and ground truth,263 concordance with readers265 | ROI localization and classification,262 TRE estimation,263 estimate of image diagnostic value264, 265 | Custom CNN,262, 265 custom NN,263 VGG19264 |

MSE, mean‐squared error; RMSE, root MSE; NMSE, normalized MSE; NRMSE, normalized RMSE; SNR, signal‐to‐noise ratio; PSNR, peak SNR; SSIM, structural similarity; DICE, segmentation overlap index; TRE, target registration error; mTREproj, mean TRE in projection direction; MAE, mean absolute error; ME, mean error; SUVR, standardized uptake value ratio; AUC, area under the receiver operating characteristic curve; IOU, intersection over union; CLAHE, contrast‐limited adaptive histogram equalization.

2.D.1. Filtering, noise/artifact reduction, and reconstruction

Filtering

Going back to the early days of applying CNNs to medical images, one can find examples of CNNs that produced output images for further processing. Zhang et al.52 trained a shift‐invariant ANN to produce a high or low pixel value in an output image depending on whether an expert mammographer had identified the pixel as the center of a microcalcification. Suzuki et al.234 trained an MTANN as a supervised filter for the enhancement of lung nodules on thoracic CT scans. More recently, Yang et al.235 used a cascade of CNNs for bone suppression in chest radiography. Using ground truth images extracted from dual‐energy subtraction chest x rays, the authors trained a set of multiscale networks to predict bone gradients at different scales and fused these results to obtain a bone image from a standard chest x ray. Another advantage of CNNs for image filtering is speed: Mori236 investigated several types of residual convolutional autoencoders and residual CNNs for contrast‐limited adaptive histogram equalization filtering and denoising of x‐ray fluoroscopic images acquired during treatment, and reported runtimes achieved without specialized hardware.
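The supervised-filter idea behind these studies (train a model so that its output image matches a desired target image pixel by pixel) can be illustrated with its simplest possible instance. The sketch below fits a single linear 3 × 3 kernel by least squares; it is a deliberately simplified stand-in, not an MTANN or CNN, which replace the one linear kernel with a nonlinear multilayer model trained on the same kind of pixelwise error. All function names are hypothetical.

```python
import numpy as np


def extract_patches(image, k):
    """Return an (N, k*k) matrix of all k x k patches (valid region, no padding)."""
    h, w = image.shape
    rows = []
    for i in range(h - k + 1):
        for j in range(w - k + 1):
            rows.append(image[i:i + k, j:j + k].ravel())
    return np.array(rows)


def apply_filter(image, kernel):
    """Valid-region correlation (as in CNN layers) of an image with a kernel."""
    k = kernel.shape[0]
    h, w = image.shape
    out = extract_patches(image, k) @ kernel.ravel()
    return out.reshape(h - k + 1, w - k + 1)


def fit_linear_filter(inputs, targets, k=3):
    """Least-squares fit of one k x k kernel so that apply_filter(input)
    approximates the target image (targets have valid-region shape)."""
    X = np.vstack([extract_patches(img, k) for img in inputs])
    y = np.concatenate([t.ravel() for t in targets])
    w, *_ = np.linalg.lstsq(X, y, rcond=None)  # minimizes pixelwise squared error
    return w.reshape(k, k)
```

In a real application, the targets would be images such as nodule-likelihood maps or dual-energy bone images, and a linear kernel would fit them poorly. That gap is what motivates deeper, nonlinear networks.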

Noise reduction

The past couple of years have seen a proliferation of DL applications for reducing noise in reconstructed medical images. One application area is low‐dose image reconstruction, which is important in modalities with ionizing radiation such as CT or PET for limiting patient dose,237, 238, 239, 241 or for limiting damage to samples in synchrotron‐based x‐ray CT.240 Chen et al.237 designed a DL algorithm for noise reduction in reconstructed CT images. They used the mean‐squared pixelwise error between the ideal image and the denoised image as the loss function, and synthesized noisy projections based on patient images to generate training data.238 They later combined a residual autoencoder with a CNN in an architecture called RED‐CNN,238 which has a stack of encoders and a symmetrical stack of decoders, with shortcut connections between matching layers. Kang et al.239 applied a DCNN to the wavelet transform coefficients of low‐dose CT images and used a residual learning architecture for faster network training and better performance. Their method placed second in the 2016 “Low‐Dose CT Grand Challenge.”266 Xiang et al. used low‐dose PET images combined with T1‐weighted images acquired on a PET/MRI scanner to obtain PET images of standard‐acquisition quality. In contrast to the studies above, which started denoising from reconstructed images, Yang et al. aimed at improving the quality of the recorded projections. They used a CNN‐based approach to learn the mapping between pairs of low‐ and high‐dose projections. After training with a limited number of high‐dose examples, they used the trained network to predict high‐dose projections from low‐dose projections and then reconstructed images from the predicted projections.
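Training pairs for low‐dose denoising are often created by inserting noise into full‐dose projection data. The sketch below shows one common simplification, which is assumed here and is not necessarily the procedure of Chen et al.: a monoenergetic Beer–Lambert model with Poisson photon counts, with electronic noise and beam hardening ignored. The incident photon count `incident_photons` and the function names are hypothetical. The sketch also shows PSNR, one of the measures listed in Table 6.

```python
import numpy as np


def simulate_low_dose(projections, incident_photons, rng):
    """Simulate a noisy line-integral sinogram from a clean one.

    Assumed model: counts ~ Poisson(I0 * exp(-p)), noisy p = -log(counts / I0).
    Electronic noise and beam hardening are ignored.
    """
    expected = incident_photons * np.exp(-projections)
    counts = rng.poisson(expected)
    counts = np.maximum(counts, 1)  # avoid log(0) for photon-starved rays
    return -np.log(counts / incident_photons)


def psnr(reference, test, data_range):
    """Peak signal-to-noise ratio in dB for a given dynamic range."""
    mse = np.mean((reference - test) ** 2)
    return 10.0 * np.log10(data_range ** 2 / mse)
```

Lowering `incident_photons` raises the noise variance roughly in proportion to 1/I0, which mimics a lower tube current. A denoising network would then be trained on (low‐dose, full‐dose) pairs. In a residual design such as that used by Kang et al., the network predicts the noise component, which is subtracted from the input.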

Artifact reduction

Techniques similar to those described for denoising have been applied to artifact reduction. Jin et al.242 described a general framework for using CNNs to solve inverse problems, applied the framework to reduce streaking artifacts in sparse‐view reconstruction on parallel‐beam CT, and compared their approach with filtered back projection (FBP) and total variation (TV) techniques. Han et al.244 used DL to reduce streak artifacts resulting from a limited number of radial lines in radial k‐space sampling in MRI. Zhang et al.243 used a CNN‐based approach to reduce metal artifacts on CT images. They combined the original uncorrected image with images corrected by the linear interpolation and beam hardening correction methods to obtain a three‐channel input. This input was fed into a CNN, whose output was further processed to obtain “replacement” projections for the metal‐affected projections.
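The linear interpolation (LI) correction that supplies one of the three input channels can be sketched in a few lines. This minimal version assumes a parallel‐beam sinogram stored as (views, detector bins) and a known boolean metal trace. For each view, it replaces metal‐affected bins by interpolating between the nearest unaffected bins. The reconstruction (e.g., FBP) of each corrected sinogram is omitted, and both function names and the channel order in `stack_channels` are hypothetical.

```python
import numpy as np


def linear_interpolation_mar(sinogram, metal_trace):
    """Replace metal-affected detector bins by per-view linear interpolation.

    sinogram: (n_views, n_bins) line integrals.
    metal_trace: boolean array of the same shape, True where the ray hits metal.
    """
    corrected = np.array(sinogram, dtype=float)  # work on a float copy
    bins = np.arange(corrected.shape[1])
    for v in range(corrected.shape[0]):
        bad = metal_trace[v]
        if bad.any() and (~bad).any():
            # interpolate across the trace from unaffected bins in this view
            corrected[v, bad] = np.interp(bins[bad], bins[~bad], corrected[v, ~bad])
    return corrected


def stack_channels(uncorrected, li_corrected, bhc_corrected):
    """Stack three reconstructed images into a (3, H, W) network input."""
    return np.stack([uncorrected, li_corrected, bhc_corrected], axis=0)
```

LI removes the metal trace but tends to introduce new streaks, while beam hardening correction preserves more structure. Supplying both to the network alongside the uncorrected image lets it draw on their complementary strengths.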

Reconstruction

Several studies indicate that DL may be useful for directly addressing the image reconstruction problem. In one of the early publications in this area, Golkov et al.245 applied DL to diffusion‐weighted MR images (DWI) to derive rotationally invariant scalar measures for each pixel. Hammernik et al.246 designed a variational network that learns a complete reconstruction procedure for multichannel MR data, including all free parameters that would otherwise have to be set empirically. To obtain a reconstruction, the undersampled k‐space data, the coil sensitivity maps, and the zero‐filling solution are fed into the network. Schlemper et al.247 evaluated the applicability of CNNs for reconstructing undersampled dynamic cardiac MR data. Zhu et al.248 introduced automated transform by manifold approximation (AUTOMAP), a unified reconstruction framework that replaces conventional image reconstruction by learning the mapping between the sensor and image domains without expert knowledge. They showed examples in which their approach provided superior immunity to noise and fewer reconstruction artifacts than conventional reconstruction methods.
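Two building blocks recur in learned MR reconstruction: the zero‐filled solution used as network input, and a data‐consistency step of the kind used in cascaded CNN reconstruction such as that of Schlemper et al. The latter forces the estimate to agree with the measured k‐space samples. The sketch below assumes a single coil, a Cartesian sampling mask, and noiseless data. Hammernik et al.'s multichannel setting would additionally use coil sensitivity maps, which are not modeled here. The function names are hypothetical.

```python
import numpy as np


def undersample(image, mask):
    """Simulate Cartesian undersampling: k-space with zeros where not sampled."""
    return np.fft.fft2(image) * mask


def zero_filled_recon(kspace_sampled):
    """Inverse FFT of zero-filled k-space (aliased, complex-valued image)."""
    return np.fft.ifft2(kspace_sampled)


def data_consistency(estimate, kspace_sampled, mask):
    """Hard data consistency: keep the network's k-space values where no
    measurement exists and restore measured values where mask is True."""
    k_est = np.fft.fft2(estimate)
    k_dc = np.where(mask, kspace_sampled, k_est)
    return np.fft.ifft2(k_dc)
```

In a cascade, a CNN refines the current image, `data_consistency` re‐imposes the measurements, and the pair is repeated several times. With noisy data, a soft (weighted) version of the same step is often preferred over the hard replacement shown here.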

2.D.2. Image registration

To establish accurate anatomical correspondences between two medical images, deformable image registration frequently employs either handcrafted features or features selected by a supervised method. However, both types of features have drawbacks.249 Wu et al.249 designed an unsupervised DL approach to directly learn basis filters that can effectively represent all observed image patches, and used the coefficients obtained with these filters for correspondence detection during image registration. They subsequently refined the registration performance using a more advanced convolutional stacked autoencoder and comprehensively evaluated the results against state‐of‐the‐art deformable registration methods.250 Yang et al.251 used a deep encoder–decoder network to predict the parameters of the large deformation diffeomorphic metric mapping (LDDMM) model for fast deformable image registration. In a feasibility study, Lv et al.252 trained a CNN for respiratory motion correction in free‐breathing 3D abdominal MRI. For 2D–3D registration, Miao et al.253 used a supervised CNN regression approach to find a rigid transformation from the object coordinate system to the x‐ray imaging coordinate system. The CNNs were trained using synthetic data only. The authors compared their method with intensity‐based 2D–3D registration methods and a linear regression‐based method and showed that their approach achieved higher robustness, a larger capture range, and higher computational efficiency. A later study by the same research group identified a performance gap when a model trained with synthetic data was tested on clinical data.254 To narrow the gap, the authors proposed a domain adaptation method that learns domain‐invariant features from only a few pairs of real and synthetic data.
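A CNN that regresses a rigid transformation ultimately outputs six numbers (three rotations, three translations). The resulting registration is commonly scored by target registration error (TRE), one of the measures in Table 6. The sketch below builds a homogeneous 4 × 4 matrix from those six parameters and computes mean TRE over a set of target points. The rotation order (R = Rz·Ry·Rx, angles in radians) and the millimeter units are assumed conventions, not necessarily those of Miao et al. The projection‐direction variant (mTREproj) is not computed here, and the function names are hypothetical.

```python
import numpy as np


def rigid_matrix(params):
    """4x4 homogeneous matrix from (rx, ry, rz, tx, ty, tz).

    Assumed convention: rotations in radians applied about x, then y, then z
    (R = Rz @ Ry @ Rx); translations in mm.
    """
    rx, ry, rz, tx, ty, tz = params
    cx, sx = np.cos(rx), np.sin(rx)
    cy, sy = np.cos(ry), np.sin(ry)
    cz, sz = np.cos(rz), np.sin(rz)
    Rx = np.array([[1, 0, 0], [0, cx, -sx], [0, sx, cx]])
    Ry = np.array([[cy, 0, sy], [0, 1, 0], [-sy, 0, cy]])
    Rz = np.array([[cz, -sz, 0], [sz, cz, 0], [0, 0, 1]])
    T = np.eye(4)
    T[:3, :3] = Rz @ Ry @ Rx
    T[:3, 3] = [tx, ty, tz]
    return T


def target_registration_error(estimated, ground_truth, targets):
    """Mean Euclidean distance (mm) between target points mapped by the
    estimated and ground-truth transforms; targets is an (N, 3) array."""
    homog = np.hstack([targets, np.ones((len(targets), 1))])
    diff = (homog @ estimated.T)[:, :3] - (homog @ ground_truth.T)[:, :3]
    return np.linalg.norm(diff, axis=1).mean()
```

Because TRE is evaluated at clinically relevant target points rather than on the parameters themselves, it weights rotation errors by their distance from the rotation center, which matches how misregistration is experienced in practice.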

2.D.3. Synthesis of one modality from another

A number of studies have recently investigated using DL to generate synthetic CT (sCT) images from MRI. This is important for at least two applications. First, accurate PET image reconstruction and uptake quantification require tissue attenuation coefficients, which can be readily estimated from CT images; because a PET/MRI scanner does not acquire a CT, estimating sCT from MRI is desirable in PET/MRI imaging. Second, there is interest in replacing CT with MRI in the treatment planning process, mainly because MRI does not use ionizing radiation. Nie et al.255 used a 3D CNN to learn an end‐to‐end nonlinear mapping from an MR image to a CT image, and the same group later added a context‐aware GAN to improve the results.259 Han et al.256 adopted and modified the U‐net architecture for sCT generation from MRI. Current commercially available MR attenuation correction (MRAC) methods for body PET imaging use a fat/water map derived from a two‐echo Dixon MRI sequence. Leynes et al.257 used multiparametric MRI consisting of Dixon MRI and proton‐density‐weighted zero‐echo‐time (ZTE) MRI to generate sCT images with a DL model that also adopted the U‐net architecture.267 Liu et al.258 trained a deep network (deep MRAC) to generate sCT from T1‐weighted MRI and compared deep MRAC with Dixon MRAC; deep MRAC yielded significantly lower PET reconstruction errors. Choi et al.260 investigated a different type of synthetic image generation. Noting that although PET combined with MRI is useful for precise quantitative analysis, not all subjects have both PET and MR images in the clinical setting, they used a GAN‐based method to generate realistic structural MR images from amyloid PET images.

Ben‐Cohen et al.261 aimed to develop a system that generates PET images from CT, for use in applications such as evaluating drug therapies and detecting malignant tumors that require PET imaging, and found that a conditional GAN can create realistic‐looking PET images from CT.
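Synthetic CT studies in Table 6 report voxelwise errors such as MAE and ME against a reference CT. The following minimal sketch assumes that the synthetic and reference CT volumes are already co‐registered, expressed in Hounsfield units, and restricted to a body mask so that air outside the patient does not dilute the error. The function name is hypothetical.

```python
import numpy as np


def sct_errors(synthetic_ct, reference_ct, body_mask):
    """Voxelwise error of a synthetic CT against the reference CT (both in HU),
    restricted to a boolean body mask: mean absolute error and mean (signed) error."""
    diff = synthetic_ct[body_mask].astype(float) - reference_ct[body_mask].astype(float)
    return {"mae_hu": np.abs(diff).mean(), "me_hu": diff.mean()}
```

MAE measures overall fidelity, while the signed ME reveals systematic over‐ or underestimation (for example, of bone density). For attenuation correction, a systematic bias of this kind can propagate into PET uptake values.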

2.D.4. Quality assessment

In addition to traditional characterization tasks in medical imaging, such as classifying ROIs as normal or abnormal, DL has been applied to image quality assessment. Wu et al.262 proposed a DCNN for computerized fetal US image quality assessment to support US image quality control in clinical obstetric examinations. The proposed system has two components: an L‐CNN that locates the ROI of the fetal abdominal region in the US image and a C‐CNN that evaluates image quality by assessing how well the key structures of the stomach bubble and umbilical vein are depicted. Neylon et al.263 used a deep neural network as an alternative to image similarity metrics to quantify deformable image registration performance.

Because image quality depends strongly on the characteristics of both the patient and the imager, and both are highly variable, it is challenging to set a quality threshold using simple parameters such as noise. Lee et al.264 showed that fine‐tuning a pretrained VGG19 CNN could predict whether CT scans met the minimum image quality threshold for diagnosis, as judged by a chest radiologist.

Esses et al.265 used a DCNN for automated, task‐based image quality evaluation of T2‐weighted liver MRI acquisitions and compared this automated approach with image quality evaluation by two radiologists. Both the CNN and the readers classified a set of test images as diagnostic or nondiagnostic. The concordance was 0.79 between the CNN and reader 1, 0.73 between the CNN and reader 2, and 0.88 between the two readers. The relatively lower concordance of the CNN with the readers was mostly due to cases that both readers rated as diagnostic but the CNN classified as nondiagnostic. The authors concluded that although the algorithm's accuracy needs to be improved, it could be used to flag low‐quality images for technologist review.
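Agreement between a classifier and human readers on a binary diagnostic/nondiagnostic call can be summarized in more than one way. This section does not state which definition of "concordance" was used. The sketch below therefore shows two common choices as an assumption: raw percent agreement, and Cohen's kappa, which corrects that agreement for the agreement expected by chance from each rater's label frequencies. Labels are assumed to be coded 1 (diagnostic) and 0 (nondiagnostic), and the function names are hypothetical.

```python
import numpy as np


def percent_agreement(a, b):
    """Fraction of cases on which two raters give the same label."""
    a, b = np.asarray(a), np.asarray(b)
    return np.mean(a == b)


def cohens_kappa(a, b):
    """Cohen's kappa for two raters' binary labels (1 = diagnostic).

    Undefined (division by zero) when expected chance agreement is 1,
    e.g., when both raters assign the same single label to every case.
    """
    a, b = np.asarray(a), np.asarray(b)
    po = np.mean(a == b)                       # observed agreement
    p_a1, p_b1 = np.mean(a == 1), np.mean(b == 1)
    pe = p_a1 * p_b1 + (1 - p_a1) * (1 - p_b1)  # chance agreement
    return (po - pe) / (1 - pe)
```

When most images are diagnostic, percent agreement can look high even for an uninformative rater, whereas kappa accounts for that imbalance. Reporting both makes reader comparisons such as those above easier to interpret.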

2.E. Tasks involving imaging and treatment

Radiotherapy and assessment of response to treatment are not areas that are traditionally addressed using neural networks or data‐driven approaches. However, these areas have recently seen a strong increase in the application of DL techniques. Table 7 summarizes studies in this fast‐developing DL application area.

Table 7.

Radiotherapy and assessment of response to treatment with DL

| Anatomic site | Object or task | Network input | Network architecture | Dataset (train/test) |

|---|---|---|---|---|

| Bladder | Treatment response assessment268 | CT | CifarNet | 82/41 patients |

| Brain | Glioblastoma multiforme treatment options and survival prediction218 | MRI | Custom | 75/37 patients |

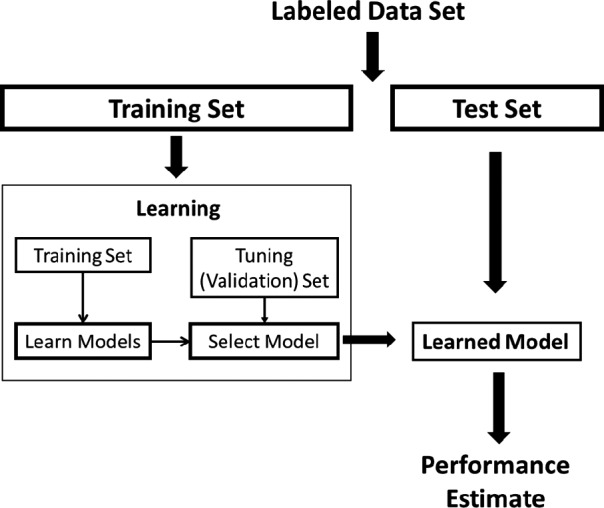

| Assessment of treatment effect in acute ischemic stroke269 | MRI | CNN based on SegNet | 158/29 patients | |