Abstract

Purpose

Despite its recent exponential growth, teleophthalmology has been limited in part by the lack of a validated method to measure visual acuity (VA) remotely. We investigated the validity of a self-administered Early Treatment Diabetic Retinopathy Study (ETDRS) home VA test. We hypothesized that a home VA test with a printout ETDRS chart is equivalent to a standard technician-administered VA test in clinic.

Design

Prospective cohort study.

Participants

Two hundred nine eyes from 108 patients who had a scheduled in-person outpatient ophthalmology clinic visit at an academic medical center.

Methods

Enrolled patients were sent a .pdf document consisting of instructions and a printout ETDRS vision chart calibrated for 5 feet. Patients completed the VA test at home before the in-person appointment, where their VA was measured by an ophthalmic technician using a standard ETDRS chart. Survey questions about the ease of testing and barriers to completion were administered. For the bioequivalence test with a 5% nominal level, the 2 1-sided tests procedure was used, and the corresponding 90% confidence interval (CI) was constructed and compared with the prespecified 7-letter equivalence margin.

Main Outcome Measures

The primary outcome was the mean adjusted letter score difference between the home and clinic tests. Secondary outcomes included the unadjusted letter difference, absolute letter difference, and survey question responses.

Results

The mean adjusted VA letter score difference was 4.1 letters (90% CI, 3.2–4.9 letters), well within the 7-letter equivalence margin. Average unadjusted VA scores in clinic were 3.9 letters (90% CI, 3.1–4.7 letters) higher than scores at home. The mean absolute difference was 5.2 letters (90% CI, 4.6–5.9 letters). Ninety-eight percent of patients agreed that the home test was easy to perform.

Conclusions

An ETDRS VA test self-administered at home following a standardized protocol was equivalent to a standard technician-administered VA test in clinic in the examined population.

Keywords: Home visual acuity chart, Remote ETDRS chart, Telehealth, Teleophthalmology, Visual acuity

Abbreviations and Acronyms: CI, confidence interval; COVID-19, coronavirus disease 2019; ETDRS, Early Treatment Diabetic Retinopathy Study; logMAR, logarithm of the minimum angle of resolution; VA, visual acuity

With increased patient anxiety about traveling for in-person clinic visits, the coronavirus disease 2019 (COVID-19) pandemic has forced health care providers worldwide to adopt creative models of medicine that provide effective and timely care while adhering to pandemic safety protocols. As a result of the need to protect patients, providers, and affiliate staff, many ambulatory practices incorporated the use of telemedicine, which has led to a transformation in the realm of care delivery. Telemedicine was adopted rapidly among almost all specialties, and the number of Medicare beneficiaries receiving telemedicine services per week rose from 13 000 before the COVID-19 pandemic to 1.7 million in just a couple of months.1 In mid-March 2020, the American Academy of Ophthalmology recommended that ophthalmologists cease providing nonurgent patient care in person.2, 3, 4 Over a 4-week period from early March to early April 2020, daily telemedicine visits at our medical center increased from the single digits to more than 3000.

Telemedicine, defined as the “use of electronic information and communications technologies to provide and support health care when distance separates the participants,” has emerged across medicine as a well-established method to diagnose disease remotely as well as to monitor patient data such as glucose levels, blood pressure, electrocardiography results, international normalized ratios, and even sleep patterns.5,6 In ophthalmology practices, telemedicine, also known as teleophthalmology, has been used predominantly through store-and-forward models to screen for retinopathy of prematurity, diabetic retinopathy, and age-related macular degeneration.7,8 Beyond this scope, the role of live video conferencing for face-to-face clinician visits within ophthalmology traditionally has been limited.3 However, recent reports of live teleophthalmology clinic visits during the COVID-19 pandemic have highlighted its usefulness in the specialty, citing benefits of promoting clinical safety and reaching patients in remote locations.3,4 Despite the many successes, live video visits still present challenges for eye care clinicians that have limited the cadence and scope of teleophthalmology implementation. Among the greatest barriers has been finding a reliable way to measure the visual acuity (VA) of patients remotely.9

Visual acuity is the most basic ocular vital sign and is essential to the evaluation and management of most ophthalmic diseases. However, the best way to measure VA remotely is not clear. Several applications (“apps”) for measuring VA are available online, but evidence of their accuracy compared with office measurements is mixed.4,10 The lack of standardization across mobile and computer devices and the fact that not all patients can navigate such technology further limit their usefulness.4,11,12 Printable versions of eye charts, such as the American Academy of Ophthalmology downloadable vision chart,13 have similar limitations. Validation of a universal, simple home VA test is urgently needed to meet the needs of patients and ophthalmologists across the globe.3 This study aimed to validate a printable home Early Treatment Diabetic Retinopathy Study (ETDRS) vision chart that can be incorporated into future teleophthalmology services. We hypothesized that among patients seen within an all-specialty academic eye institution, a home VA test with a printout ETDRS chart is equivalent to a VA test performed by a trained technician in clinic with a standard ETDRS chart.

Methods

Study Design, Setting, and Data Sources

We performed a prospective cohort study with an equivalence design in adult patients scheduled for in-person visits in various subspecialties at the Vanderbilt Eye Institute between May 20 and July 10, 2020. Patient outcomes and covariates were extracted directly from the electronic medical record. Prospective approval was obtained from the Vanderbilt University Institutional Review Board/Ethics Committee. This study adhered to the tenets of the Declaration of Helsinki and complied with Health Insurance Portability and Accountability Act regulations. The study is registered in the National Institutes of Health ClinicalTrials.gov registry (identifier, NCT04391166).

Study Population

The study population consisted of adult patients with scheduled in-person outpatient visits for eye examinations during the study period at the main campus of the Vanderbilt Eye Institute. Patients 18 to 85 years of age were included. Patients were excluded if their primary language was not English, if they did not have an active online patient portal account, or if they were being examined after ocular surgery. Patients with a documented prior best-corrected VA worse than 20/200 in both eyes also were excluded because the home chart cannot assess VA worse than 20/200.

Patient Enrollment and Study Protocol

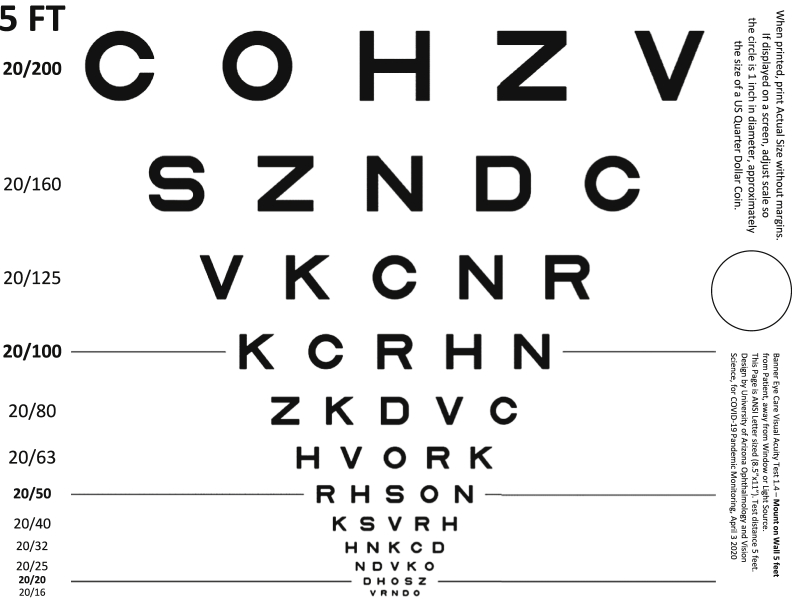

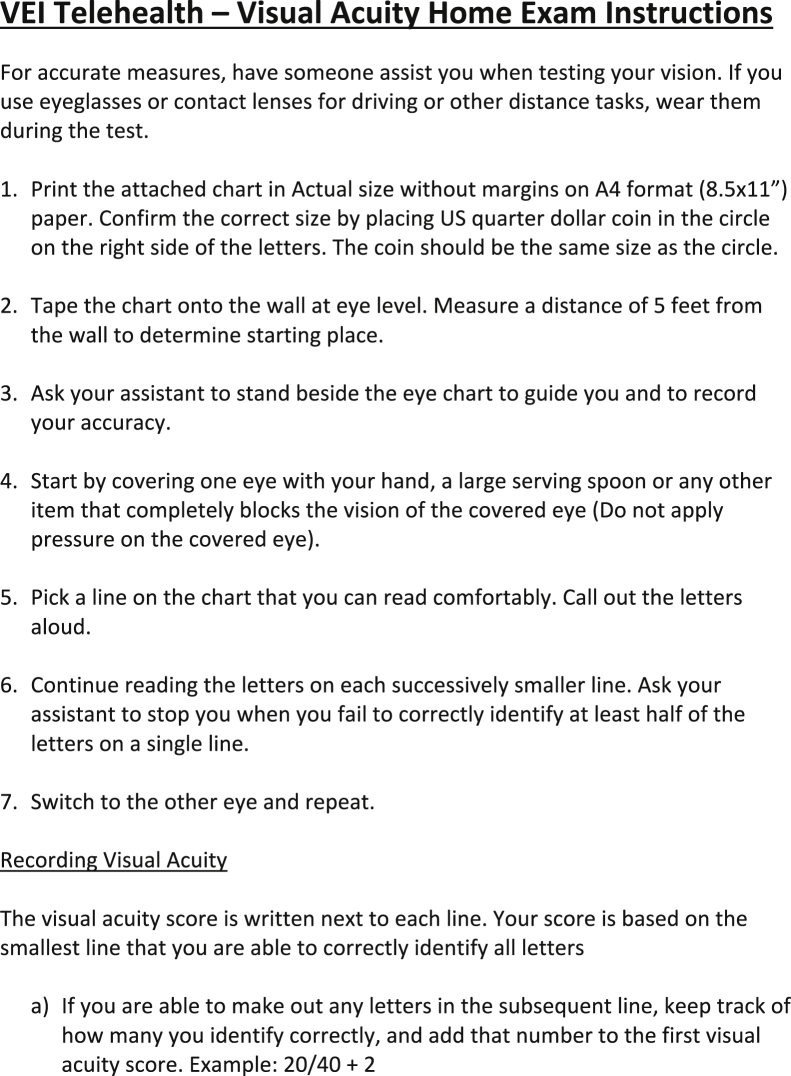

Patients with clinic visits scheduled within the next week were contacted by phone and offered the opportunity to participate in the study. Written informed consent was obtained from all participants through the patient portal while a research technician was on the phone. Enrolled patients then were sent a .pdf document containing the ETDRS vision chart and instructions via the online patient portal (Figs 1 and 2). The chart was designed by Banner Eye Care and the University of Arizona Department of Ophthalmology and Vision Science and was placed in the public domain in April 2020 via an e-mail to the Association of University Professors of Ophthalmology community from the then-head of the University of Arizona Department of Ophthalmology, Dr. Joseph M. Miller. It includes Snellen equivalents and assesses VA down to 20/200.14 It fits on 1 standard letter page (8.5 × 11 inches) and was calibrated for a 5-foot testing distance. Patients followed the instructions to complete the VA test at home before coming to clinic. Visual acuity then was measured in clinic by a trained technician using a standard ETDRS chart calibrated for 4 m. Patients who wear glasses or contact lenses were instructed to wear them during both the home and clinic tests. Questions about the ease of self-testing and barriers faced during the home test were administered by the technician in clinic.

Figure 1.

Early Treatment Diabetic Retinopathy Study home visual acuity chart.

Figure 2.

Early Treatment Diabetic Retinopathy Study home visual acuity examination instructions.

Outcomes

The primary outcome was the mean adjusted letter difference between the home and clinic tests. Secondary outcomes included the mean unadjusted letter difference, mean absolute letter difference, distribution of letter differences, and responses to survey questions regarding ease of testing and barriers to completion.

Covariates

Patient-level variables were selected based on their suspected association with VA performance and to assess generalizability across populations. Covariates were recorded during the index encounter and were extracted from the electronic medical record. Data collected included age, gender, race, zip code, baseline intraocular pressure, baseline VA, primary diagnosis, and clinic department. Baseline VA was defined as the last recorded best-corrected VA and was converted from Snellen values to ETDRS letter scores using a published algorithm.15 Each patient's zip code was used to identify the area deprivation index, a composite of census tract variables pertaining to material deprivation.16 Index scores range from 0 to 1, with higher scores indicating greater deprivation and lower socioeconomic status. The primary diagnosis of each patient was classified into 1 of the following categories: cataract, corneal disease, glaucoma, macular disease, neuro-ophthalmology disease, oculoplastics disease, refractive error, retinal vascular disease, uveitis, and visual impairment or screening.
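The Snellen-to-letter conversion can be illustrated with a short Python sketch. We assume the widely used log-linear form, letters = 85 + 50 × log10(Snellen fraction), which anchors 20/20 at 85 letters and assigns 5 letters to each 0.1 logMAR line; the published algorithm15 may differ in details such as rounding, and the function names here are hypothetical.

```python
import math


def snellen_to_etdrs_letters(numerator: float, denominator: float) -> float:
    """Approximate ETDRS letter score from a Snellen fraction (e.g. 20/40).

    Assumed relation: letters = 85 + 50 * log10(numerator / denominator),
    so 20/20 (logMAR 0.0) maps to 85 letters and each 0.1 logMAR line is
    worth 5 letters.
    """
    if numerator <= 0 or denominator <= 0:
        raise ValueError("Snellen numerator and denominator must be positive")
    return 85 + 50 * math.log10(numerator / denominator)


def etdrs_letters_to_snellen_denominator(letters: float, numerator: float = 20) -> float:
    """Inverse of the relation above: the x in a numerator/x Snellen fraction."""
    logmar = (85 - letters) / 50
    return numerator * 10 ** logmar


if __name__ == "__main__":
    for num, den in [(20, 20), (20, 40), (20, 200)]:
        print(f"{num}/{den} -> {snellen_to_etdrs_letters(num, den):.1f} letters")
    # Mean letter scores reported in Table 1, mapped back to Snellen notation
    for letters in (80.9, 75.3):
        print(f"{letters} letters -> 20/{etdrs_letters_to_snellen_denominator(letters):.0f}")
```

Applied in reverse, the mean letter scores in Table 1 (80.9 and 75.3 letters) map to 20/24 and 20/31, the Snellen equivalents reported there.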

Statistical Analysis

Patients who completed the vision tests both at home and in clinic were classified as having participated, whereas those who declined to participate or did not complete one or both tests were classified as having declined. Differences in demographic and ophthalmic measurements between these groups were compared using a Wilcoxon rank-sum test for continuous variables and a Pearson chi-square test for categorical variables to assess selection bias.
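As an illustration of the categorical comparison, the following sketch computes a Pearson chi-square test for a 2 × 2 table using only the Python standard library (the Wilcoxon rank-sum comparison is not shown). It applies no Yates continuity correction, which is an assumption on our part; the function name is hypothetical.

```python
import math


def pearson_chi_square_2x2(table):
    """Pearson chi-square test of independence for a 2 x 2 table.

    `table` is [[a, b], [c, d]] of observed counts. No Yates continuity
    correction is applied. Returns (statistic, p_value) with 1 degree of freedom.
    """
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    grand_total = sum(row_totals)
    statistic = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / grand_total
            statistic += (observed - expected) ** 2 / expected
    # For 1 df, P(chi2 > x) = P(|Z| > sqrt(x)) = erfc(sqrt(x / 2)).
    p_value = math.erfc(math.sqrt(statistic / 2))
    return statistic, p_value


if __name__ == "__main__":
    # Gender counts from Table 1: rows = declined, participated; cols = female, male
    gender = [[118, 77], [70, 38]]
    stat, p = pearson_chi_square_2x2(gender)
    print(f"chi-square = {stat:.3f}, P = {p:.2f}")
```

With the Table 1 gender counts (declined: 118 women, 77 men; participated: 70 women, 38 men), it gives a chi-square statistic of about 0.55 and P ≈ 0.46, in line with the P value reported in Table 1. Variables with more than 2 categories, such as race, would need the general r × c form with (r − 1)(c − 1) degrees of freedom.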

For the bioequivalence test with a 5% nominal level, the 2 1-sided tests procedure (rather than a conventional 2-sided test of significance) was used, and 90% confidence intervals (CIs) were constructed for the unadjusted, adjusted, and absolute differences between letter scores on the home and clinic tests.17,18 Note that at a 5% nominal level, a conventional significance test checks whether the 95% CI excludes 0, whereas a bioequivalence test such as the one used in this study checks whether the 90% CI (not the 95% CI) lies within the prespecified equivalence boundary. We refer to Chow and Zheng18 for more technical details. The bootstrap method with 5000 resamples was used to construct the 90% CIs for the unadjusted and absolute letter differences. We resampled patients rather than eyes to account for the correlation between the left and right eyes of the same patient. A generalized estimating equation model clustered on patient and adjusted for demographic variables, intraocular pressure, and baseline VA was used to derive a 90% CI for the adjusted letter difference between the home and clinic tests. The CIs then were compared with the prespecified overall average equivalence margin of ±7 letters. Although no established equivalence margin was found in the literature, this boundary is conservative given that previously reported test–retest variability values for ETDRS charts used in clinics range from 0.07 to 0.19 logarithm of the minimum angle of resolution (logMAR), or up to approximately 9.5 letters.19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29 If the 90% CI for the score difference fell within –7 to 7 letters, the 2 methods would be deemed equivalent; otherwise, they would be deemed nonequivalent. We also fit generalized estimating equation models for the score difference and the absolute score difference, adjusting for the same set of covariates as well as clinic VA, to evaluate the association between clinic-measured VA and score difference.

Data analysis was performed with R software version 3.6.1 (R Foundation for Statistical Computing).
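The patient-level resampling described above can be sketched as a cluster bootstrap in Python. The text does not say which bootstrap interval was used, so the percentile interval here is an assumption, as are the function names and the example data.

```python
import random
import statistics


def cluster_bootstrap_ci(patients, stat, n_boot=5000, level=0.90, seed=0):
    """Percentile bootstrap CI that resamples whole patients (clusters).

    `patients` is a list with one inner list of eye-level values per patient
    (e.g. clinic minus home letter scores). Drawing patients with replacement,
    rather than eyes, keeps both eyes of a patient together and so respects
    their correlation.
    """
    rng = random.Random(seed)
    n = len(patients)
    estimates = []
    for _ in range(n_boot):
        resampled = [patients[rng.randrange(n)] for _ in range(n)]
        eyes = [value for patient in resampled for value in patient]
        estimates.append(stat(eyes))
    estimates.sort()
    alpha = (1 - level) / 2
    # Nearest-rank percentiles of the sorted bootstrap estimates
    lower = estimates[round(alpha * (n_boot - 1))]
    upper = estimates[round((1 - alpha) * (n_boot - 1))]
    return lower, upper


def mean_absolute(values):
    """Mean absolute difference, the statistic behind the 'absolute' CI."""
    return statistics.fmean([abs(v) for v in values])


if __name__ == "__main__":
    # Hypothetical clinic-minus-home differences for six patients (not study
    # data); a patient with one eligible eye contributes a single value.
    diffs = [[3, 5], [0, 2], [8, 6], [-2], [4, 4], [10, 7]]
    print("mean difference 90% CI:", cluster_bootstrap_ci(diffs, statistics.fmean))
    print("mean |difference| 90% CI:", cluster_bootstrap_ci(diffs, mean_absolute))
```

The adjusted estimate from the generalized estimating equation model is not reproduced here; the bootstrap covers only the unadjusted and absolute differences.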

Sample Size Calculation

Assuming an average difference of 5 ETDRS letters between home and clinic measurements, a correlation coefficient of 0.2 between left and right eyes of the same patient, a standard deviation of 8 letters, an equivalence margin of 7 letters, and a type I error rate of 5%, we needed 148 patients (or 276 eyes) to achieve 90% power and 105 patients (or 210 eyes) to achieve 80% power.17 The sample size calculation was based on 5000 Markov chain Monte Carlo simulations.
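A simulation-based power calculation of this kind can be sketched as follows. The authors' simulation model is not described in detail, so this sketch makes its own simplifying assumptions: it uses ordinary (independent-draw) Monte Carlo, averages the two eyes within each patient, and uses a normal-approximation 90% CI instead of a generalized estimating equation. The defaults mirror the stated assumptions (5-letter mean difference, SD of 8 letters, between-eye correlation of 0.2, 7-letter margin), but the power values it returns need not reproduce the 80% and 90% figures above; the function name is hypothetical.

```python
import math
import random
import statistics


def simulate_tost_power(n_patients, mean_diff=5.0, sd=8.0, rho=0.2,
                        margin=7.0, n_sims=5000, seed=1):
    """Monte Carlo power of a TOST equivalence test with two eyes per patient.

    Each simulated patient contributes two eye-level differences from a
    bivariate normal distribution (mean `mean_diff`, SD `sd`, between-eye
    correlation `rho`). Eyes are averaged within patient, and equivalence is
    declared when the normal-approximation 90% CI of the mean difference lies
    inside (-margin, margin).
    """
    rng = random.Random(seed)
    z = statistics.NormalDist().inv_cdf(0.95)  # two 5% one-sided tests <-> 90% CI
    a, b = math.sqrt(rho), math.sqrt(1 - rho)
    successes = 0
    for _ in range(n_sims):
        patient_means = []
        for _ in range(n_patients):
            shared = rng.gauss(0, 1)  # component common to both eyes
            eye1 = a * shared + b * rng.gauss(0, 1)
            eye2 = a * shared + b * rng.gauss(0, 1)
            patient_means.append(mean_diff + sd * (eye1 + eye2) / 2)
        m = statistics.fmean(patient_means)
        se = statistics.stdev(patient_means) / math.sqrt(n_patients)
        if -margin < m - z * se and m + z * se < margin:
            successes += 1
    return successes / n_sims


if __name__ == "__main__":
    for n in (105, 148):
        print(n, "patients:", simulate_tost_power(n, n_sims=1000))
```

Each eye is built as a weighted sum of a shared and an eye-specific standard normal draw, which gives unit variance and a correlation of exactly `rho` between the two eyes.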

Results

Study Population

Three hundred three patients were contacted and offered the chance to enroll in the study. Of these, 195 patients declined or did not complete the study, leaving a study population of 108 patients (209 eyes) who completed the measurements both at home and in clinic. Demographic and ocular characteristics of all patients who were offered participation are summarized in Table 1. The average age of the study population was 52 years (range, 19–83 years), and 65% of the patients were women. The clinics attended by the study population included comprehensive ophthalmology (28%), glaucoma (19%), retina (18%), cornea (16%), neuro-ophthalmology (10%), and oculoplastics (10%). The study population was diverse in primary diagnoses, with the 2 most common diagnosis categories being refractive error (20%) and glaucoma (19%). The only covariate associated with participation was VA in the worse-seeing eye: patients who completed the study had better VA in their worse eye than those who declined or did not complete the study (P = 0.01).

Table 1.

Characteristics of Patients Who Participated and Who Declined

| Characteristic | Contacted (n = 303) | Declined (n = 195) | Participated (n = 108) | P Value |
|---|---|---|---|---|
| Age (yrs) | | | | 0.17∗ |
| Mean ± SD | 54 ± 17 | 55 ± 16 | 52 ± 17 | |
| Range | 18–89 | 18–89 | 19–83 | |
| Gender | | | | 0.46† |
| Female | 188 (62) | 118 (61) | 70 (65) | |
| Male | 115 (38) | 77 (39) | 38 (35) | |
| Race | | | | 0.64† |
| Asian | 9 (3) | 6 (3) | 3 (3) | |
| Black | 42 (14) | 29 (15) | 13 (12) | |
| Other | 2 (1) | 1 (1) | 1 (1) | |
| Unknown | 28 (9) | 16 (8) | 12 (11) | |
| White | 222 (73) | 143 (73) | 79 (73) | |
| Clinic department | | | | 0.17† |
| Comprehensive | 63 (21) | 33 (17) | 30 (28) | |
| Cornea | 48 (16) | 31 (16) | 17 (16) | |
| Glaucoma | 60 (20) | 40 (21) | 20 (19) | |
| Neuro-ophthalmology | 32 (11) | 21 (11) | 11 (10) | |
| Oculoplastics | 26 (9) | 15 (8) | 11 (10) | |
| Retina | 74 (24) | 55 (28) | 19 (18) | |
| Primary diagnosis category | | | | 0.18† |
| Cataract | 17 (6) | 12 (6) | 5 (5) | |
| Corneal disease | 36 (12) | 23 (12) | 13 (12) | |
| Glaucoma | 57 (19) | 36 (18) | 21 (19) | |
| Macular disease | 15 (5) | 11 (6) | 4 (4) | |
| Neuro-ophthalmology disease | 29 (10) | 20 (10) | 9 (8) | |
| None | 11 (4) | 11 (6) | 0 (0) | |
| Oculoplastics disease | 19 (6) | 11 (6) | 8 (7) | |
| Refractive error | 43 (14) | 21 (11) | 22 (20) | |
| Retinal vascular disease | 17 (6) | 11 (6) | 6 (6) | |
| Uveitis | 15 (5) | 12 (6) | 3 (3) | |
| Visual impairment/screening | 44 (15) | 27 (14) | 17 (16) | |
| Area deprivation index score (n = 300) | 0.329 ± 0.097 | 0.336 ± 0.096 | 0.317 ± 0.099 | 0.11∗ |
| Intraocular pressure (mmHg) | | | | |
| Right eye (n = 254) | 15.3 ± 4.0 | 15.6 ± 4.1 | 14.8 ± 3.6 | 0.16∗ |
| Left eye (n = 255) | 15.4 ± 3.9 | 15.7 ± 3.9 | 14.9 ± 3.7 | 0.06∗ |
| Visual acuity of better-seeing eye (n = 264) | | | | |
| ETDRS letters | 80.9 ± 8.7 | 80.0 ± 9.6 | 82.2 ± 6.7 | 0.12∗ |
| Snellen equivalent | 20/24 | 20/25 | 20/23 | |
| Visual acuity of worse-seeing eye (n = 264) | | | | |
| ETDRS letters | 75.3 ± 11.4 | 74.0 ± 12.1 | 77.4 ± 9.9 | 0.01∗‡ |
| Snellen equivalent | 20/31 | 20/33 | 20/28 | |

ETDRS = Early Treatment Diabetic Retinopathy Study; SD = standard deviation.

Data are presented as mean ± SD or no. of patients (%), unless otherwise indicated.

∗Wilcoxon rank-sum test.

†Pearson chi-square test.

‡Statistically significant.

Visual Acuity at Home versus in Clinic

Visual acuity results at home and in clinic are summarized in Table 2. The mean ± standard deviation ETDRS letter scores at home and in clinic were 75 ± 11 letters (Snellen equivalent, 20/32) and 79 ± 11 letters (Snellen equivalent, 20/26), respectively. The unadjusted difference in letter score (clinic minus home) was 3.9 ± 5.8 letters (90% CI, 3.1–4.7 letters). The adjusted letter difference, estimated with a model controlling for demographic variables, intraocular pressure, and baseline VA, was 4.1 letters (90% CI, 3.2–4.9 letters). Both 90% CIs lie within the study’s prespecified 7-letter equivalence margin. Therefore, the home test was equivalent to the clinic test in our cohort.
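The equivalence decision itself reduces to an interval check: rejecting both 1-sided null hypotheses (difference ≤ −7 letters and difference ≥ 7 letters) at the 5% level is the same as finding that the 90% CI lies inside the margin. Below is a minimal Python sketch, with a hypothetical function name and the boundary treated as exclusive (an assumption), applied to CIs reported in this article.

```python
from typing import Tuple


def tost_equivalent(ci: Tuple[float, float], margin: float = 7.0) -> bool:
    """Equivalence decision for the 2 one-sided tests (TOST) procedure.

    Testing H0: diff <= -margin and H0: diff >= margin, each at the 5% level,
    is equivalent to checking that the 90% CI lies entirely inside
    (-margin, margin). The boundary is treated as exclusive here.
    """
    lower, upper = ci
    return -margin < lower and upper < margin


if __name__ == "__main__":
    reported = {
        "unadjusted": (3.1, 4.7),
        "adjusted": (3.2, 4.9),
        "absolute": (4.6, 5.9),
        "absolute, clinic VA 61-70": (3.7, 8.2),
    }
    for label, ci in reported.items():
        print(f"{label}: {ci} -> equivalent = {tost_equivalent(ci)}")
```

The unadjusted, adjusted, and absolute CIs pass the check, whereas the absolute-difference CI for the 61 to 70 letter subgroup (3.7–8.2 letters, Table 3) does not.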

Table 2.

Visual Acuity Letter Scores at Home and in Clinic

| Variable | Data |
|---|---|
| Home | |
| ETDRS letter score | 75 ± 11 |
| Snellen equivalent | 20/32 |
| Clinic | |
| ETDRS letter score | 79 ± 11 |
| Snellen equivalent | 20/26 |
| Unadjusted difference in letter score | 3.9 ± 5.8 |
| Absolute difference in letter score | 5.2 ± 4.6 |
| 90% Confidence interval | |
| Unadjusted difference in letter score | 3.1–4.7 |
| Absolute difference in letter score | 4.6–5.9 |
| Adjusted difference in letter score | 3.2–4.9 |
| Distribution of eyes by absolute difference in letter score (letters) | |
| ≤ 5 | 136 (65) |
| ≤ 10 | 185 (89) |
| ≤ 15 | 204 (98) |
| ≤ 20 | 209 (100) |
| Distribution of eyes by difference in letter score∗ (letters) | |
| –16 to –20 | 1 (0) |
| –11 to –15 | 0 (0) |
| –6 to –10 | 5 (2) |
| –1 to –5 | 31 (15) |
| 0 | 25 (12) |
| 1–5 | 80 (38) |
| 6–10 | 44 (21) |
| 11–15 | 19 (9) |
| 16–20 | 4 (2) |

ETDRS = Early Treatment Diabetic Retinopathy Study.

Data are presented as mean ± standard deviation or no. of eyes (%), unless otherwise indicated.

∗Difference is presented as clinic score minus home score.

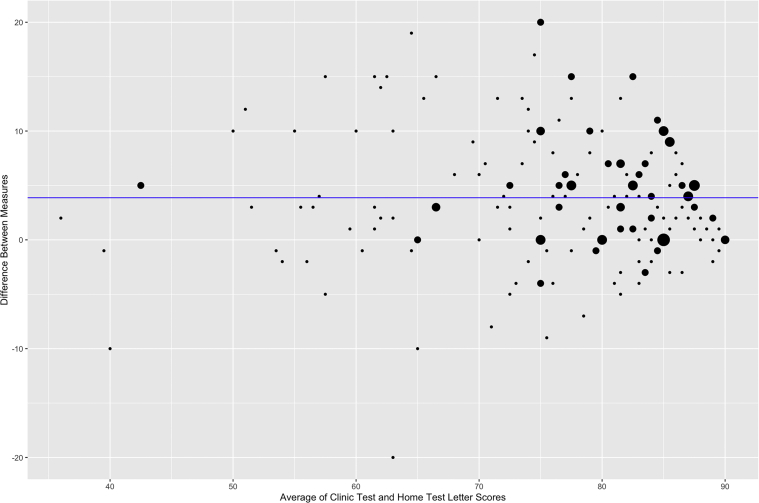

Figure 3 shows a Bland-Altman plot of the differences in letter scores at home and in clinic. The mean absolute difference in letter score (absolute value of clinic letter score minus home letter score) was 5.2 ± 4.6 letters (90% CI, 4.6–5.9 letters). Eighty-nine percent of eyes showed an absolute difference of 10 letters or fewer, and 98% of eyes showed an absolute difference of 15 letters or fewer. Overall, scores tended to be higher on the clinic test than on the home test; only 2.9% of eyes had home scores that exceeded clinic scores by more than 5 letters. Table 3 shows the unadjusted letter differences and absolute letter differences broken down by clinic VA. Although this study was not powered to evaluate equivalence within individual subgroups, the 90% CIs for letter score difference fell within the equivalence interval of –7 to 7 letters for all groups except the group with clinic VA of 61 to 70 letters, whose 90% CI (1.8–7.1 letters) fell slightly outside the boundary. For absolute score difference, the 90% CIs for the groups with clinic VA of 0 to 60 letters and 61 to 70 letters fell outside the equivalence interval (90% CIs, 3.75–7.62 and 3.67–8.22 letters, respectively). Independent of the other covariates, higher clinic VA was significantly associated with a larger letter difference (β = 0.206; P = 0.006) but not with absolute letter difference (β = 0.003; P = 0.95).
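The quantities behind a Bland-Altman plot and the cumulative distribution in Table 2 can be computed as in the Python sketch below. The article does not report limits of agreement, so the conventional bias ± 1.96 SD limits are included only for illustration; the sketch also treats every eye as independent, ignoring the pairing of eyes within patients. Function names and example scores are hypothetical.

```python
import statistics


def bland_altman_summary(home, clinic):
    """Bias and 95% limits of agreement for paired home/clinic letter scores.

    Differences are clinic minus home, the sign convention of Table 2.
    Limits use the conventional bias +/- 1.96 SD; each pair is treated as
    independent, i.e. the two eyes of a patient are not modeled jointly.
    """
    if len(home) != len(clinic) or len(home) < 2:
        raise ValueError("need at least two paired measurements")
    diffs = [c - h for h, c in zip(home, clinic)]
    averages = [(c + h) / 2 for h, c in zip(home, clinic)]  # plot x-axis
    bias = statistics.fmean(diffs)
    sd = statistics.stdev(diffs)
    return {
        "bias": bias,
        "limits": (bias - 1.96 * sd, bias + 1.96 * sd),
        "points": list(zip(averages, diffs)),  # (x, y) pairs for the plot
    }


def share_within(diffs, thresholds=(5, 10, 15, 20)):
    """Fraction of eyes whose absolute difference is <= each threshold."""
    return {t: sum(abs(d) <= t for d in diffs) / len(diffs) for t in thresholds}


if __name__ == "__main__":
    # Hypothetical letter scores for six eyes (not study data)
    home = [70, 75, 80, 82, 85, 60]
    clinic = [74, 79, 80, 88, 86, 59]
    summary = bland_altman_summary(home, clinic)
    print("bias:", round(summary["bias"], 2), "limits:", summary["limits"])
    print(share_within([c - h for h, c in zip(home, clinic)]))
```

In this toy example, 5 of the 6 eyes fall within 5 letters and all 6 fall within 10 letters, which is how the "≤ 5" and "≤ 10" rows of Table 2 are tallied.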

Figure 3.

Bland-Altman plot showing the differences in letter scores at home and in clinic. Dot size is proportional to the number of patients. Blue line shows the mean signed difference in letter score (clinic minus home).

Table 3.

Adjusted Difference in Visual Acuity Letter Score by Clinic Letter Score

| Clinic Letter Score | Adjusted Difference∗ in Letter Score: Mean ± Standard Deviation | Adjusted Difference∗ in Letter Score: 90% Confidence Interval | Absolute Difference in Letter Score: Mean ± Standard Deviation | Absolute Difference in Letter Score: 90% Confidence Interval |
|---|---|---|---|---|
| 0–60 | 0.3 ± 7.5 | –2.6 to 3.2 | 5.5 ± 5.0 | 3.8–7.6 |
| 61–70 | 4.3 ± 6.9 | 1.8–7.1 | 5.8 ± 5.6 | 3.7–8.2 |
| 71–80 | 3.5 ± 5.7 | 2.2–4.8 | 5.2 ± 4.2 | 4.3–6.1 |
| 81–85 | 4.2 ± 5.9 | 2.8–5.7 | 5.0 ± 5.2 | 3.8–6.4 |
| 86–90 | 5.1 ± 4.0 | 4.6–6.2 | 5.2 ± 3.9 | 4.2–6.3 |

∗Presented as clinic score minus home score.

Barriers and Ease of Use

Few study participants reported barriers to using the printout chart at home, as shown in Table 4. Ninety-eight percent of patients agreed or strongly agreed that the VA test was easy to set up and perform, and 94% of patients reported that they would be willing to perform the test at home. Technology access, confidence in accurately completing the test, clarity of instructions, and level of vision were barriers in less than 5% of participants.

Table 4.

Survey Responses

| Question | No. of Patients (%) | |
|---|---|---|
| Ease of use | | |
| “The home visual acuity test was easy to set up and perform” | | |
| Strongly disagree | 0 (0) | |
| Disagree | 1 (1) | |
| Neither agree nor disagree | 1 (1) | |
| Agree | 26 (24) | |
| Strongly agree | 80 (74) | |
| Barriers to completion | | |
| Barrier experienced | Yes | No |
| Lack of access to technology | 2 (2) | 106 (98) |
| Lack of confidence to perform accurately at home | 4 (4) | 104 (96) |
| Lack of clear instructions | 5 (5) | 103 (95) |
| Reluctance to try at home | 6 (6) | 102 (94) |
| Vision is too poor | 0 (0) | 108 (100) |

Discussion

This study is important because it evaluated a simple, scalable method to measure the VA of patients for teleophthalmology visits. We found that VA measured at home with the printout ETDRS chart was equivalent to VA measured in clinic in our cohort, with an adjusted letter difference of 4.1 letters (90% CI, 3.2–4.9 letters), inside the prespecified 7-letter equivalence margin.

These findings support the validity of the home testing protocol because they are consistent with previously reported test–retest variability values for ETDRS charts used in clinic. Our equivalence margin of 7 letters (0.14 logMAR) lies within the range of reported test–retest variability for ETDRS charts, 0.07 to 0.19 logMAR, or up to approximately 2 lines of vision.19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29 The test–retest variability of Snellen charts has been reported to be worse, as high as 0.24 logMAR and 0.29 logMAR when scoring by letter and 0.33 logMAR when scoring by line.22,23 That is, on average in our cohort, the difference between the home and clinic ETDRS vision charts is no greater than the variability typically seen between repeated measurements performed in clinic with Snellen or ETDRS charts.
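The conversions behind these figures are simple arithmetic on the ETDRS scale, on which each 0.1 logMAR line contains 5 letters (1 letter = 0.02 logMAR). Here Δ denotes a difference between 2 measurements:

$$
\begin{aligned}
\Delta\text{letters} &= 50 \times \Delta\text{logMAR}, \qquad 1 \text{ line} = 0.1 \text{ logMAR} = 5 \text{ letters},\\
0.07 \text{ to } 0.19 \text{ logMAR} &= 3.5 \text{ to } 9.5 \text{ letters} \approx 0.7 \text{ to } 1.9 \text{ lines},\\
7 \text{ letters} &= 7/50 = 0.14 \text{ logMAR},\\
0.24,\ 0.29,\ 0.33 \text{ logMAR} &= 12,\ 14.5,\ 16.5 \text{ letters}.
\end{aligned}
$$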

Our findings also are consistent with a recently published study from England by Crossland et al,30 the only other existing research on a home VA test of which we are aware. In that study of 100 adult patients, VA measured with a home chart similar to the one used in our study was compared with the last VA recorded in clinic. The mean difference was 0.10 logMAR, the equivalent of 5.0 ETDRS letters,30 and, as in our study, the clinic VA score was higher than the home VA score.

One limitation of this study is its limited generalizability. The study population may have had a self-selection bias, because patients more familiar with technology may have been more likely to participate. Additionally, the exclusion of eyes with a baseline VA worse than 20/200 limits generalizability to patients with very poor vision, because the chart cannot assess VA worse than 20/200. Furthermore, the results do not extend to patients whose primary language is not English, because these patients were excluded. Future studies could test the validity of a VA test in another language, such as Spanish. In summary, this study’s generalizability is limited to patients 18 to 85 years of age whose primary language is English, who have access to a computer and printer, and whose VA is 20/200 or better.

Other limitations include the variable time between the home and clinic measurements, which ranged from a few hours to 7 days among patients, and possible differences in effort and fatigue between the home and clinic measurements. It should be noted that the VA assessments were tests of habitual VA, with patients instructed to wear their current glasses or contact lenses. Because patients could not assess pinhole VA at home, we cannot confirm whether their refractive error was always fully corrected. Patients who wear glasses could have forgotten to wear them or could have worn the wrong pair during the home test, despite the instructions. In addition, the use of different printers and toner by patients could have produced different degrees of contrast on the eye charts. Lighting at home also could have varied among patients, and not all patients may have measured the 5-foot testing distance exactly. Still, this study is strengthened by its prospective design, large population size, and diversity of demographic factors and ocular diagnoses.

Nonetheless, the home test is not suitable for every patient, particularly those with VA worse than 20/200. Patients with severe eye disease need in-person appointments to monitor their progression. For patients who are undergoing screening for diabetic retinopathy or glaucoma or who have stable, well-managed eye conditions, however, this home test can enhance the quality of teleophthalmology visits. Thus, the home test should not replace in-person assessments when they are indicated, but rather should be used to defer routine visits or as a tool that enhances the value of teleophthalmology visits.

Beyond demonstrating the validity of a home VA assessment, this study also highlighted the ease of use of the test among patients willing to embrace the new methodology. The simple, clear instructions attached to the vision chart likely contributed to the favorable responses on the feedback surveys. The low frequency of barriers reported by participants across different ages, diagnoses, and VAs further indicates that the home testing protocol is feasible for a wide range of patient populations. Although we did not systematically record why patients declined to participate or did not complete the study, the most commonly reported reason was lack of access to necessary technology, such as a computer or a printer. However, many of these patients were still able to participate in telemedicine appointments through smartphones and tablets. We suggest that home VA testing programs include an option to mail physical copies of the chart to address this barrier. Additionally, having the eye care provider teach patients how to test VA at home may bolster their confidence and willingness to self-administer tests in the future.

In conclusion, this study showed that ophthalmologists can use a home testing protocol as part of their teleophthalmology routine and anticipate reliable measurements of VA. This finding could improve the effectiveness and feasibility of teleophthalmology and support its expansion across the world, both during the COVID-19 pandemic and beyond.

Acknowledgments

The authors thank Dr. Joseph M. Miller, Banner Eye Care, and the University of Arizona Department of Ophthalmology and Vision Science for the design of the home visual acuity chart. This chart was placed in the public domain by Dr. Miller via an e-mail through the Association of University Professors of Ophthalmology in April 2020. In addition, research reported in this publication was supported by the Vanderbilt Institute for Clinical and Translational Research (VICTR). VICTR is funded by the National Center for Advancing Translational Sciences (NCATS) Clinical Translational Science Award (CTSA) Program, Award Number 5UL1TR002243-03. The content is solely the responsibility of the authors and does not necessarily represent the official views of the NIH.

Manuscript no. D-21-00004.

Footnotes

Disclosure(s): All authors have completed and submitted the ICMJE disclosures form.

The author(s) have made the following disclosure(s): S.N.P.: Financial support – Alcon

Supported in part by Research to Prevent Blindness, Inc., New York, New York (unrestricted grant to the Vanderbilt Eye Institute). The sponsor or funding organization had no role in the design or conduct of this research.

HUMAN SUBJECTS: Human subjects were included in this study. The human ethics committees at Vanderbilt University approved the study. All research complied with the Health Insurance Portability and Accountability Act (HIPAA) of 1996 and adhered to the tenets of the Declaration of Helsinki. All participants provided informed consent.

No animal subjects were included in this study.

Author Contributions:

Conception and design: Donahue, Patel, Sternberg, Robinson, Kammer, Gangaputra

Analysis and interpretation: Siktberg, Hamdan, Liu, Chen, Gangaputra

Data collection: Siktberg, Hamdan, Gangaputra

Obtained funding: N/A

Overall responsibility: Siktberg, Hamdan, Liu, Chen, Donahue, Patel, Sternberg, Robinson, Kammer, Gangaputra

References

- 1.Verma S. Early impact of CMS expansion of Medicare telehealth during COVID-19. Health Affairs Blog. Published July 15, 2020. https://www.healthaffairs.org/do/10.1377/hblog20200715.454789/full/ Available at: Accessed 07.08.20.

- 2.American Academy of Ophthalmology Recommendations for urgent and nonurgent patient care. Published March 18, 2020. https://www.aao.org/headline/new-recommendations-urgent-nonurgent-patient-care Accessed 07.08.20.

- 3.Saleem S.M., Pasquale L.R., Sidoti P.A., Tsai J.C. Virtual ophthalmology: telemedicine in a COVID-19 era. Am J Ophthalmol. 2020;216:237–242. doi: 10.1016/j.ajo.2020.04.029. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Williams A.M., Kalra G., Commiskey P.W., et al. Ophthalmology practice during the coronavirus disease 2019 pandemic: the University of Pittsburgh experience in promoting clinic safety and embracing video visits. Ophthalmol Ther. 2020;9(3):1–9. doi: 10.1007/s40123-020-00255-9. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Institute of Medicine . The National Academies Press; Washington, DC: 1996. Telemedicine: A Guide to Assessing Telecommunications for Health Care. [PubMed] [Google Scholar]

- 6.Holekamp N.M. Moving from clinic to home: what the future holds for ophthalmic telemedicine. Am J Ophthalmol. 2018;187 doi: 10.1016/j.ajo.2017.11.003. xxviii–xxxv. [DOI] [PubMed] [Google Scholar]

- 7.Adams M.K., Date R.C., Weng C.Y. The emergence of telemedicine in retina. Int Ophthalmol Clin. 2016;56(4):47–66. doi: 10.1097/IIO.0000000000000138. [DOI] [PubMed] [Google Scholar]

- 8.Strul S., Zheng Y., Gangaputra S., et al. Pediatric diabetic retinopathy telescreening. J AAPOS. 2020;24(1) doi: 10.1016/j.jaapos.2019.10.010. 10.e11–10.e15. [DOI] [PubMed] [Google Scholar]

- 9.Patel S., Hamdan S., Donahue S. Optimising telemedicine in ophthalmology during the COVID-19 pandemic. J Telemed Telecare. 2020 Aug 16 doi: 10.1177/1357633X20949796. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.Silverstein E., Williams J.S., Brown J.R., et al. Teleophthalmology: evaluation of phone-based visual acuity in a pediatric population. Am J Ophthalmol. 2021;221:199–206. doi: 10.1016/j.ajo.2020.08.007. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11. Han X., Scheetz J., Keel S., et al. Development and validation of a smartphone-based visual acuity test (vision at home). Transl Vis Sci Technol. 2019;8(4):27. doi: 10.1167/tvst.8.4.27.

- 12. Brady C.J., Eghrari A.O., Labrique A.B. Smartphone-based visual acuity measurement for screening and clinical assessment. JAMA. 2015;314(24):2682–2683. doi: 10.1001/jama.2015.15855.

- 13. American Academy of Ophthalmology. Home eye test for children and adults. Published May 19, 2020. https://www.aao.org/eye-health/tips-prevention/home-eye-test-children-adults Accessed 07.08.20.

- 14. Miller J.M., Jang H.S., Ramesh D., et al. Telemedicine distance and near visual acuity tests for adults and children. J AAPOS. 2020;24(4):235–236. doi: 10.1016/j.jaapos.2020.06.003.

- 15. Gregori N.Z., Feuer W., Rosenfeld P.J. Novel method for analyzing Snellen visual acuity measurements. Retina. 2010;30(7):1046–1050. doi: 10.1097/IAE.0b013e3181d87e04.

- 16. Brokamp C., Beck A.F., Goyal N.K., et al. Material community deprivation and hospital utilization during the first year of life: an urban population-based cohort study. Ann Epidemiol. 2019;30:37–43. doi: 10.1016/j.annepidem.2018.11.008.

- 17. Phillips K.F. Power of the two one-sided tests procedure in bioequivalence. J Pharmacokinet Biopharm. 1990;18(2):137–144. doi: 10.1007/BF01063556.

- 18. Chow S.-C., Zheng J. The use of 95% CI or 90% CI for drug product development—a controversial issue? J Biopharm Stat. 2019;29(5):834–844. doi: 10.1080/10543406.2019.1657141.

- 19. Reeves B.C., Wood J.M., Hill A.R. Vistech VCTS 6500 charts—within- and between-session reliability. Optom Vis Sci. 1991;68(9):728–737. doi: 10.1097/00006324-199109000-00010.

- 20. Elliott D.B., Sheridan M. The use of accurate visual acuity measurements in clinical anti-cataract formulation trials. Ophthalmic Physiol Opt. 1988;8(4):397–401. doi: 10.1111/j.1475-1313.1988.tb01176.x.

- 21. Vanden Bosch M.E., Wall M. Visual acuity scored by the letter-by-letter or probit methods has lower retest variability than the line assignment method. Eye (Lond). 1997;11(3):411–417. doi: 10.1038/eye.1997.87.

- 22. Rosser D.A., Laidlaw D.A., Murdoch I.E. The development of a “reduced logMAR” visual acuity chart for use in routine clinical practice. Br J Ophthalmol. 2001;85(4):432–436. doi: 10.1136/bjo.85.4.432.

- 23. Laidlaw D.A., Abbott A., Rosser D.A. Development of a clinically feasible logMAR alternative to the Snellen chart: performance of the “compact reduced logMAR” visual acuity chart in amblyopic children. Br J Ophthalmol. 2003;87(10):1232–1234. doi: 10.1136/bjo.87.10.1232.

- 24. Beck R.W., Moke P.S., Turpin A.H., et al. A computerized method of visual acuity testing: adaptation of the early treatment of diabetic retinopathy study testing protocol. Am J Ophthalmol. 2003;135(2):194–205. doi: 10.1016/s0002-9394(02)01825-1.

- 25. Rosser D.A., Cousens S.N., Murdoch I.E., et al. How sensitive to clinical change are ETDRS logMAR visual acuity measurements? Invest Ophthalmol Vis Sci. 2003;44(8):3278–3281. doi: 10.1167/iovs.02-1100.

- 26. Laidlaw D.A., Tailor V., Shah N., et al. Validation of a computerised logMAR visual acuity measurement system (COMPlog): comparison with ETDRS and the electronic ETDRS testing algorithm in adults and amblyopic children. Br J Ophthalmol. 2008;92(2):241–244. doi: 10.1136/bjo.2007.121715.

- 27. Shah N., Laidlaw D.A., Rashid S., Hysi P. Validation of printed and computerised crowded Kay picture logMAR tests against gold standard ETDRS acuity test chart measurements in adult and amblyopic paediatric subjects. Eye (Lond). 2012;26(4):593–600. doi: 10.1038/eye.2011.333.

- 28. Cooke M.D., Winter P.A., McKenney K.C., et al. An innovative visual acuity chart for urgent and primary care settings: validation of the Runge near vision card. Eye (Lond). 2019;33(7):1104–1110. doi: 10.1038/s41433-019-0372-8.

- 29. Falkenstein I.A., Cochran D.E., Azen S.P., et al. Comparison of visual acuity in macular degeneration patients measured with Snellen and early treatment diabetic retinopathy study charts. Ophthalmology. 2008;115(2):319–323. doi: 10.1016/j.ophtha.2007.05.028.

- 30. Crossland M.D., Dekker T.M., Hancox J., et al. Evaluation of a home-printable vision screening test for telemedicine. JAMA Ophthalmol. 2021;139(3):271–277. doi: 10.1001/jamaophthalmol.2020.5972.