Abstract

Purpose

To develop a novel evaluation system for retinal vessel alterations caused by hypertension using a deep learning algorithm.

Design

Retrospective study.

Participants

Fundus photographs (n = 10 571) of health-check participants (n = 5598).

Methods

The fundus photographs were analyzed using a fully automatic architecture assisted by a deep learning system, and the total areas of retinal arterioles and venules were assessed separately. The retinal vessels were extracted automatically from each photograph and classified as arterioles or venules. Subsequently, the total arteriolar area (AA) and total venular area (VA) were measured. The correlations among AA, VA, age, systolic blood pressure (SBP), and diastolic blood pressure were analyzed. Six ophthalmologists manually evaluated the arteriovenous ratio (AVR) in a subset of fundus images (n = 102), and the correlation between SBP and the manually evaluated AVR was analyzed.

Main Outcome Measures

Total arteriolar area and VA.

Results

The deep learning algorithm demonstrated favorable vessel segmentation and arteriovenous classification performance, comparable with that of pre-existing techniques. Using the algorithm, a significant positive correlation was found between AA and VA. Both AA and VA showed negative correlations with age and blood pressure. Furthermore, SBP showed a stronger negative correlation with AA measured by the algorithm than with manually evaluated AVR.

Conclusions

The current data suggest that the retinal vascular area measured with the deep learning system could serve as a novel index of hypertension-related vascular changes.

Keywords: Arteriosclerosis, Deep learning system, Hypertensive retinopathy, Imaging, Retinal arteriolar narrowing

Abbreviations and Acronyms: AA, total arteriolar area; AVR, arteriovenous ratio; AUC, area under the receiver operating characteristic curve; BP, blood pressure; DBP, diastolic blood pressure; DRIVE, Digital Retinal Images for Vessel Extraction; FN, false-negative; FP, false-positive; FPa, FP arterioles; FPv, FP venules; MISCa, misclassification rates of arterioles; MISCv, misclassification rates of venules; RGB, red-green-blue; SBP, systolic blood pressure; TN, true-negative; TP, true-positive; TPa, TP arterioles; TPv, TP venules; VA, total venular area

Hypertension and arteriosclerosis are major public health problems worldwide.1,2 Approximately 30% of adults worldwide have hypertension,3 and high systolic blood pressure (SBP) accounted for an estimated 10.4 million deaths in 2013.4 Because hypertension causes morphologic changes in the microvasculature, practical techniques to evaluate hypertension-related vessel alterations have been explored.5,6 The transparent structure of the eye enables direct examination of the retinal vasculature; therefore, fundus examination has been used to assess microvascular alterations in patients with hypertension.

The retinal arteriovenous ratio (AVR), the ratio between retinal arteriolar and venular diameters, is a classic index of retinal arteriolar narrowing that is used widely and routinely in clinical settings. An AVR of 2:3 is considered healthy, and AVR decreases with age and blood pressure (BP) elevation.7 Because retinal arteriolar narrowing is related to the risk of various systemic diseases, including diabetes,8 cardiovascular disease,8,9 and cerebrovascular complications,10 AVR estimation has been a simple but useful technique in routine ophthalmic practice. However, although AVR is easy to use, ophthalmoscopic evaluation of retinal AVR is subjective and has poor intragrader and intergrader repeatability. Therefore, extensive efforts have been made to overcome these shortcomings and to make AVR estimation more objective. Consequently, a semiautomated system was developed to calculate the retinal AVR from the diameters of all arterioles and venules coursing through a specified area surrounding the optic disc in fundus photographs.11 However, this method still relies on human graders to choose the vessel segments, which may limit the objectivity of the analysis. Therefore, to establish a more accurate and standardized vascular measurement method and to assess large numbers of subjects, a highly accurate automatic vessel segmentation method is necessary. The aim of the present study was to develop a fully automatic architecture, assisted by a deep learning system, to measure separately the total areas of retinal arterioles and venules in fundus images.

Methods

Deep Learning Architecture

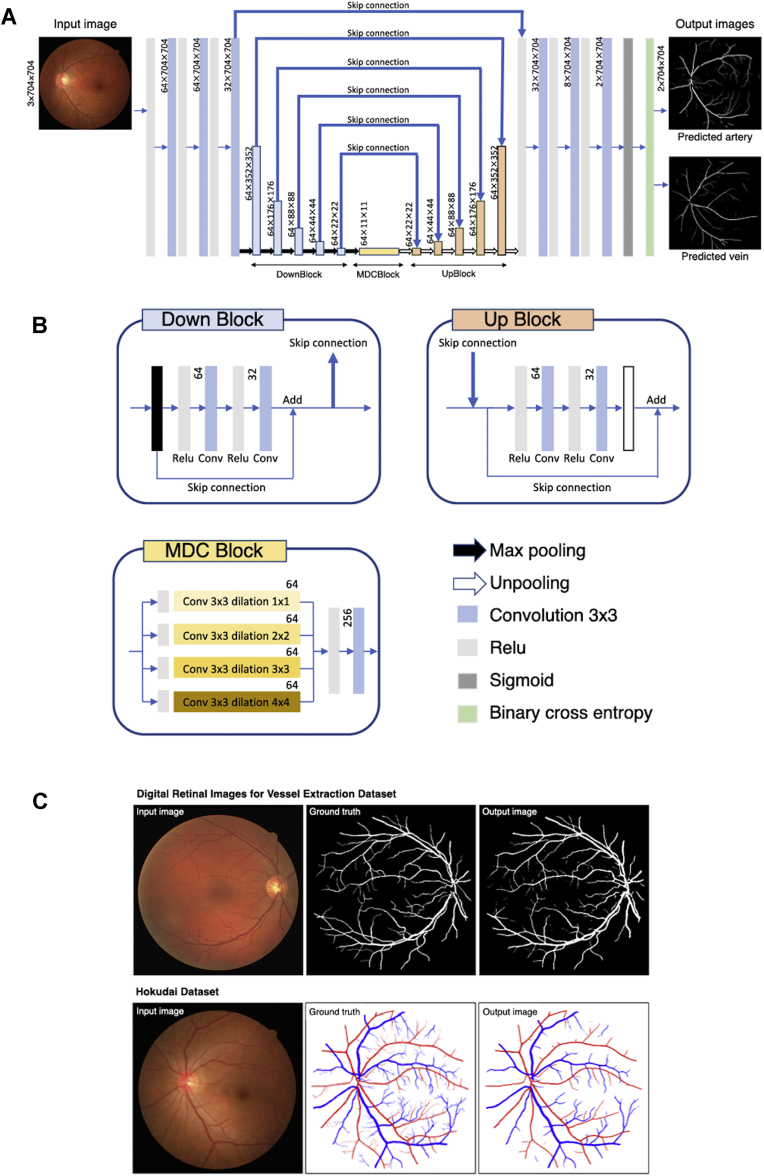

Figure 1A shows the neural network used in this study. The network has an encoder–decoder structure similar to that of U-Net, a neural network model widely used for semantic segmentation.12 A fundus photograph with red-green-blue (RGB) channels measuring 704 × 704 pixels is used as the input image. The single network produces 2 probability maps, 1 for arterioles and 1 for venules, as outputs. Each probability map is binarized with a threshold of 125, a value determined experimentally. In Figure 1A, the blue bars with black borders represent DownBlocks, whereas the orange and yellow bars represent UpBlocks and the multiple dilated convolutional block,13 respectively. The left-side path consists of repeated DownBlocks, each connected to the corresponding UpBlock; these connections are called skip connections (Fig 1B, bold blue arrows). In addition to the skip connections between DownBlocks and UpBlocks, each DownBlock and UpBlock has additional short internal connections, similar to a ResBlock.14 These skip connections facilitate gradient flow during backpropagation and mitigate the vanishing gradient problem as the network becomes deeper. The multiple dilated convolutional block consists of 4 dilated convolution layers with different dilation rates. It is placed between the left-side encoder and the right-side decoder and helps to capture global features. A sigmoid function is used to transform the output of the network into a probability map.
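The output stage described above can be illustrated with a minimal pure-Python sketch. It is not the authors' NNabla implementation: the function names are hypothetical, and we assume that the threshold of 125 is applied after the sigmoid probabilities are rescaled to an 8-bit range of 0 to 255, which the text does not state. Because the arteriole and venule channels are thresholded independently, a pixel can exceed the threshold in both maps.

```python
import math

THRESHOLD = 125  # value reported in the text; assumed here to be on a 0-255 scale


def sigmoid(x):
    """Map a raw network output (logit) to a probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def binarize(logit_map, threshold=THRESHOLD):
    """Convert a 2-D list of logits into a binary mask (1 = class present).

    Probabilities are rescaled to 0-255 before thresholding (an assumption).
    """
    return [[1 if sigmoid(v) * 255 > threshold else 0 for v in row]
            for row in logit_map]


# Hypothetical logits from the two output channels of one network.
arteriole_logits = [[-4.0, 2.5], [0.1, -1.0]]
venule_logits = [[-3.0, 0.2], [-2.0, 3.0]]

arteriole_mask = binarize(arteriole_logits)
venule_mask = binarize(venule_logits)

# Multilabel output: a pixel may exceed the threshold in both channels.
both = [[a & v for a, v in zip(ra, rv)]
        for ra, rv in zip(arteriole_mask, venule_mask)]
print(arteriole_mask, venule_mask, both)
```

Thresholding each channel independently is what makes the output multilabel rather than mutually exclusive; a softmax over arteriole, venule, and background would instead force a single label per pixel.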

Figure 1.

Proposed deep learning method. A, Schematic view of the deep learning model. The numbers beside each layer represent the number of feature maps × width (pixels) × height (pixels). B, Detailed illustrations of the DownBlock, UpBlock, multiple dilated convolutional (MDC) block, and symbols (arrows and layers). C, Representative input image, manually annotated ground truth, and automatic vessel segmentation from the Digital Retinal Images for Vessel Extraction (DRIVE) dataset (top row) and from the Hokudai dataset (bottom row).

Training Methods

We implemented the neural network in NNabla version 0.9.9 (Sony Corporation). Training images were augmented randomly by horizontal flipping and by rotation within 0.26 radians (approximately 15°) before being input into the neural network. To minimize overhead and make maximal use of graphics processing unit (GPU) memory, we prioritized input image size over batch size. For the NVIDIA GTX1080 GPU, we chose an input size of 704 × 704 pixels and reduced the batch size to 2 samples. The maximum number of epochs was set to 1000, with early stopping. A binary cross-entropy loss function and the Adam optimizer were used with the following parameters: initial learning rate, 0.001; α, 0.001; β1, 0.9; β2, 0.999; and ε, 1E-8.
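The loss and optimizer settings listed above can be sketched in pure Python on a single scalar logit. This illustrates binary cross-entropy with the standard Adam update using the reported hyperparameters; it is not the NNabla solver itself, and the toy problem and names are assumptions.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def bce(p, y, eps=1e-7):
    """Binary cross-entropy for a single pixel (p: predicted probability, y: 0 or 1)."""
    p = min(max(p, eps), 1.0 - eps)  # clip to avoid log(0)
    return -(y * math.log(p) + (1 - y) * math.log(1 - p))


class Adam:
    """Scalar Adam optimizer with the hyperparameters reported in the text."""

    def __init__(self, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-8):
        self.lr, self.beta1, self.beta2, self.eps = lr, beta1, beta2, eps
        self.m = 0.0  # first-moment (mean) estimate
        self.v = 0.0  # second-moment (uncentered variance) estimate
        self.t = 0    # time step

    def step(self, param, grad):
        self.t += 1
        self.m = self.beta1 * self.m + (1 - self.beta1) * grad
        self.v = self.beta2 * self.v + (1 - self.beta2) * grad * grad
        m_hat = self.m / (1 - self.beta1 ** self.t)  # bias correction
        v_hat = self.v / (1 - self.beta2 ** self.t)
        return param - self.lr * m_hat / (math.sqrt(v_hat) + self.eps)


# Toy problem: learn one logit w so that sigmoid(w) predicts a vessel pixel (y = 1).
w, y = 0.0, 1
opt = Adam()
loss_before = bce(sigmoid(w), y)
for _ in range(500):
    grad = sigmoid(w) - y  # d(BCE)/d(logit) for a sigmoid output
    w = opt.step(w, grad)
loss_after = bce(sigmoid(w), y)
print(round(loss_before, 4), loss_after < loss_before)
```

Because Adam divides the bias-corrected first moment by the square root of the second, the first step moves the parameter by approximately the learning rate (0.001) regardless of the gradient's scale.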

Datasets

A public dataset known as Digital Retinal Images for Vessel Extraction (DRIVE) was used to evaluate our deep learning algorithm for comparison with other methods. Our original Hokudai dataset consisting of fundus images acquired at the Keijinkai Maruyama Clinic and Hokkaido University Hospital also was used to develop the deep learning algorithm. Blurred fundus images resulting from media opacities or inadequate imaging conditions were excluded. The institutional review boards for clinical research of the Keijinkai Maruyama Clinic (identifier, 20120626-1) and Hokkaido University Hospital (identifier, 012-0106) approved the study protocol. The requirement for informed consent was waived because of the retrospective nature of the study. This research adhered to the tenets of the Declaration of Helsinki.

The Hokudai dataset contained 102 color fundus photographs obtained from patients who visited the Keijinkai Maruyama Clinic for regular health checkups using an autofundus camera (AFC-330; Nidek, LLC, Tokyo, Japan). The mean age was 52 ± 8 years, the mean SBP was 124 ± 13 mmHg, and the mean diastolic BP (DBP) was 79 ± 10 mmHg. The corresponding ground truth images were generated by precise manual annotation of retinal vessels by 2 ophthalmologists (M.S. and K.F.). The Hokudai dataset then was divided into 82 images as the training set, 10 images as the validation set, and 10 images as the test set.

The DRIVE dataset, a public dataset containing 40 color fundus photographs from a diabetic retinopathy screening program in The Netherlands,15 has been used widely to evaluate the accuracy of automatic retinal vessel segmentation methods.15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27 It contains 20 images for training and 20 images for validation and testing of the deep learning algorithm. Each photograph in the DRIVE dataset measures 565 × 584 pixels. Because our deep learning method accepts input images of 704 × 704 pixels, each image was pasted onto a black background measuring 704 × 704 pixels. Verification was conducted at the original image size.
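The padding step can be sketched as follows, with images represented as nested lists of RGB tuples so that the example needs only the standard library. Centering the 565 × 584 image on the 704 × 704 black mount is an assumption (the text does not state where the image was placed), and the helper names are hypothetical. The recorded offset lets the network output be cropped back to the original region, in line with evaluation at the original size.

```python
CANVAS = 704  # network input size (pixels)


def paste_on_canvas(image, canvas=CANVAS, fill=(0, 0, 0)):
    """Paste an H x W RGB image (nested lists of tuples) onto a black canvas x canvas mount.

    Centering is an assumption. Returns the padded image and the (top, left)
    offset needed to undo the padding.
    """
    h, w = len(image), len(image[0])
    if h > canvas or w > canvas:
        raise ValueError("image larger than canvas")
    top, left = (canvas - h) // 2, (canvas - w) // 2
    padded = [[fill] * canvas for _ in range(canvas)]
    for r in range(h):
        padded[top + r][left:left + w] = image[r]
    return padded, (top, left)


def crop_to_original(output, offset, h, w):
    """Crop a network-sized output back to the original h x w region for evaluation."""
    top, left = offset
    return [row[left:left + w] for row in output[top:top + h]]


# DRIVE images are 565 (width) x 584 (height) pixels; synthetic pixel values here.
drive_like = [[(r % 256, c % 256, 0) for c in range(565)] for r in range(584)]
padded, offset = paste_on_canvas(drive_like)
restored = crop_to_original(padded, offset, 584, 565)
print(len(padded), len(padded[0]), offset, restored == drive_like)
```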

Verification of Vessel Segmentation Algorithms

To evaluate the vessel segmentation ability of our deep learning architecture on the DRIVE dataset, the algorithm was configured to produce 1 output image. To evaluate the neural network trained on the Hokudai dataset, we combined the arteriolar and venular probability maps output by the network into a single vessel probability map.

The accuracy of vessel segmentation in the predicted images was evaluated by counting the false-positive (FP), false-negative (FN), true-positive (TP), and true-negative (TN) pixels in the retinal vessel maps. From these counts, indices of the accuracy of the deep learning system were calculated as follows: sensitivity = TP / (TP + FN); specificity = TN / (TN + FP); overall accuracy = (TP + TN) / (TP + TN + FP + FN); and Dice coefficient = 2TP / (2TP + FP + FN). The area under the receiver operating characteristic curve (AUC) also was calculated.

The AUC was calculated from sensitivity and specificity using scikit-learn version 0.19.1 in Python version 3.6.4.
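The pixel-level indices above can be computed with the sketch below. The pixelwise maximum used to merge the arteriolar and venular probability maps into one vessel map is an assumed rule (the text does not specify how the maps were combined), the 0.5 binarization threshold is used only for simplicity, and the data are toy values. The AUC is obtained by sweeping the threshold and integrating the ROC curve with the trapezoidal rule; in practice, scikit-learn's `sklearn.metrics.roc_auc_score` computes the same quantity.

```python
def confusion(pred, truth):
    """Count TP, FP, FN, TN over flattened binary pixel lists."""
    tp = sum(1 for p, t in zip(pred, truth) if p and t)
    fp = sum(1 for p, t in zip(pred, truth) if p and not t)
    fn = sum(1 for p, t in zip(pred, truth) if not p and t)
    tn = sum(1 for p, t in zip(pred, truth) if not p and not t)
    return tp, fp, fn, tn


def segmentation_metrics(pred, truth):
    tp, fp, fn, tn = confusion(pred, truth)
    return {
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
        "accuracy": (tp + tn) / (tp + fp + fn + tn),
        "dice": 2 * tp / (2 * tp + fp + fn),
    }


def roc_auc(scores, truth):
    """AUC from a threshold sweep, integrated with the trapezoidal rule."""
    pos = sum(truth)
    neg = len(truth) - pos
    points = [(0.0, 0.0)]  # (1 - specificity, sensitivity)
    for thr in sorted(set(scores), reverse=True):
        tp, fp, fn, tn = confusion([s >= thr for s in scores], truth)
        points.append((fp / neg, tp / pos))
    points.append((1.0, 1.0))
    return sum((x1 - x0) * (y0 + y1) / 2
               for (x0, y0), (x1, y1) in zip(points, points[1:]))


# Hypothetical per-pixel probabilities from the two output channels (flattened).
arteriole_prob = [0.9, 0.1, 0.2, 0.05, 0.6, 0.0]
venule_prob = [0.1, 0.8, 0.1, 0.05, 0.3, 0.4]
truth = [1, 1, 0, 0, 0, 1]  # manual vessel annotation (1 = vessel)

# Combine channels into one vessel map; the pixelwise maximum is an assumed rule.
vessel_prob = [max(a, v) for a, v in zip(arteriole_prob, venule_prob)]
pred = [p >= 0.5 for p in vessel_prob]

metrics = segmentation_metrics(pred, truth)
auc = roc_auc(vessel_prob, truth)
print(metrics, round(auc, 3))
```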

Verification of Arteriovenous Classification Algorithms

The accuracy of the arteriovenous classification in the predicted images was assessed by the misclassification rates of arterioles (MISCa) and venules (MISCv) and by the overall accuracy of the arteriovenous classification, calculated from TP arterioles (TPa), TP venules (TPv), FP arterioles (FPa), and FP venules (FPv) as described previously.26
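Because the equations of ref 26 are not reproduced here, the sketch below uses an assumed convention consistent with the Figure 2B legend: FPa counts ground-truth venule pixels predicted as arteriole, and FPv counts ground-truth arteriole pixels predicted as venule. Under that assumption, MISCa is the fraction of true arteriole pixels labeled as venule, MISCv is the fraction of true venule pixels labeled as arteriole, and overall accuracy is the fraction of vessel pixels assigned the correct class. The function name and data are hypothetical.

```python
def av_classification_metrics(pred_labels, true_labels):
    """Arteriole/venule classification metrics over vessel pixels.

    Labels are 'a' (arteriole) or 'v' (venule). Assumed convention (not from the source):
      FPa = true venule predicted as arteriole; FPv = true arteriole predicted as venule.
    """
    tpa = tpv = fpa = fpv = 0
    for p, t in zip(pred_labels, true_labels):
        if t == "a" and p == "a":
            tpa += 1
        elif t == "v" and p == "v":
            tpv += 1
        elif t == "v" and p == "a":
            fpa += 1
        elif t == "a" and p == "v":
            fpv += 1
    return {
        "MISCa": fpv / (tpa + fpv),  # share of true arteriole pixels labeled venule
        "MISCv": fpa / (tpv + fpa),  # share of true venule pixels labeled arteriole
        "accuracy": (tpa + tpv) / (tpa + tpv + fpa + fpv),
    }


truth = ["a"] * 50 + ["v"] * 50
pred = ["a"] * 49 + ["v"] + ["v"] * 48 + ["a"] * 2
metrics = av_classification_metrics(pred, truth)
print(metrics)
```

Multiplying MISCa and MISCv by 100 gives percentages in the form reported in the Results.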

The number of pixels identified as both arteriole and venule was calculated in the output images produced from the test set of the Hokudai dataset (10 images).
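A minimal sketch of this overlap check, assuming a binary 2-D mask for each output channel (hypothetical helper and toy data). It expresses the number of doubly labeled pixels relative to the arteriolar area, the venular area, and the whole image; per-image values would then be averaged over the 10 test images.

```python
def overlap_stats(arteriole_mask, venule_mask):
    """Count pixels labeled as both arteriole and venule in two binary 2-D masks and
    express the count as a percentage of each area and of the whole image."""
    a_area = sum(map(sum, arteriole_mask))
    v_area = sum(map(sum, venule_mask))
    both = sum(a & v
               for ra, rv in zip(arteriole_mask, venule_mask)
               for a, v in zip(ra, rv))
    total = len(arteriole_mask) * len(arteriole_mask[0])
    return {
        "overlap_pixels": both,
        "pct_of_arteriole_area": 100 * both / a_area,
        "pct_of_venule_area": 100 * both / v_area,
        "pct_of_all_pixels": 100 * both / total,
    }


# Toy 10 x 10 masks: a horizontal "arteriole" crossing a vertical "venule".
arteriole = [[1 if r == 2 else 0 for c in range(10)] for r in range(10)]
venule = [[1 if c == 3 else 0 for c in range(10)] for r in range(10)]
stats = overlap_stats(arteriole, venule)
print(stats)
```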

Vascular Area Measurement

Color fundus photographs (n = 10 571) obtained from patients who visited the Keijinkai Maruyama Clinic for regular health checkups were used to analyze the vascular area measured by the deep learning algorithm. These included the 102 images of the Hokudai dataset used for training the deep learning system. The mean age was 49 ± 10 years, the mean SBP was 117 ± 16 mmHg, and the mean DBP was 74 ± 11 mmHg. Predicted arteriole and venule images were generated from the color fundus photographs using the trained neural network. The sums of the arteriolar and venular probability maps were defined as the total arteriolar area and total venular area, respectively.
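The area definition above, a sum over the probability map, can be written as below. The second function, which counts pixels above the threshold, is a hypothetical point of comparison that the text does not describe; it shows that the probability-sum area need not equal an integer pixel count. The threshold value 125/255 is likewise an assumption about the scale of the reported threshold.

```python
def vascular_area(prob_map):
    """Total vascular area as the sum of a probability map (2-D list of values in [0, 1]),
    expressed in pixel units, following the definition in the text."""
    return sum(sum(row) for row in prob_map)


def binary_area(prob_map, threshold=125 / 255):
    """Hypothetical comparison: number of pixels whose probability exceeds a threshold."""
    return sum(1 for row in prob_map for p in row if p > threshold)


# Toy probability maps for one image.
arteriole_prob = [[0.0, 0.9, 1.0], [0.2, 0.6, 0.0]]
venule_prob = [[0.5, 0.5, 0.0], [0.0, 1.0, 0.25]]

aa = vascular_area(arteriole_prob)
va = vascular_area(venule_prob)
print(round(aa, 2), round(va, 2), binary_area(arteriole_prob), binary_area(venule_prob))
```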

Repeatability of Vascular Area Measurement

To examine the repeatability of vascular area measurement by the deep learning algorithm, 2 consecutive fundus photographs were obtained from both eyes of 10 healthy volunteers, and the arteriolar and venular areas in each photograph were measured.

Arteriovenous Ratio Measurement

For manual AVR measurement, we aimed to extract approximately 100 photographs from the original set of fundus photographs (n = 10 571). We used stratified random sampling so that the extracted photographs had the same distributions of age and BP as the original population; a total of 102 photographs were extracted. The mean age was 52 ± 9 years, the mean SBP was 117 ± 14 mmHg, and the mean DBP was 73 ± 9 mmHg. Subsequently, well-trained ophthalmologists manually graded the AVR of these photographs on a scale from 0 to 1 in steps of 0.1, and the mean of their grades was used as the representative AVR value. In accordance with a previous study,7 the evaluation was performed visually after choosing a pair of an arteriole and the matching venule in each photograph. Intergrader agreement on AVR was analyzed by calculating the intraclass correlation coefficient using RStudio version 1.1.456.
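The text does not specify which form of the intraclass correlation coefficient was used. As an illustration only, the sketch below computes the one-way random-effects, single-rater form, ICC(1,1), from the between-photograph and within-photograph mean squares; other forms (for example, 2-way models) are available in R through the `ICC` function of the psych package. The ratings are toy AVR grades in steps of 0.1.

```python
def icc_oneway(ratings):
    """One-way random-effects, single-rater ICC(1,1).

    ratings: list of photographs, each a list of k grades (one per grader).
    """
    n, k = len(ratings), len(ratings[0])
    grand = sum(sum(r) for r in ratings) / (n * k)
    means = [sum(r) / k for r in ratings]
    # Between-photograph and within-photograph mean squares.
    ms_between = k * sum((m - grand) ** 2 for m in means) / (n - 1)
    ms_within = sum((x - m) ** 2
                    for r, m in zip(ratings, means) for x in r) / (n * (k - 1))
    return (ms_between - ms_within) / (ms_between + (k - 1) * ms_within)


# Toy AVR grades from 2 graders for 3 photographs.
partial = icc_oneway([[0.6, 0.7], [0.5, 0.6], [0.7, 0.8]])
perfect = icc_oneway([[0.6, 0.6], [0.5, 0.5], [0.7, 0.7]])
print(round(partial, 3), perfect)
```

Values near 0, such as the 0.104 reported in the Results, indicate that most of the variance in the grades comes from disagreement between graders rather than from differences between photographs.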

Statistical Analysis

Pearson’s product-moment correlation was used to calculate the correlation coefficients between vessel areas and other parameters, using RStudio version 1.1.456.
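For reference, the Pearson product-moment coefficient and the t statistic used to test it against zero (with n − 2 degrees of freedom) can be computed as below. This is a generic sketch, not the R code used in the study; in R, `cor.test(x, y, method = "pearson")` reports r, t, and the P value, and Python 3.10 or later offers `statistics.correlation` for r alone.

```python
import math


def pearson_r(x, y):
    """Pearson product-moment correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def t_statistic(r, n):
    """t statistic for the null hypothesis rho = 0, with n - 2 degrees of freedom."""
    return r * math.sqrt((n - 2) / (1 - r * r))


x = [1, 2, 3, 4, 5]
y = [2, 4, 5, 4, 5]
r = pearson_r(x, y)
t = t_statistic(r, len(x))
print(round(r, 4), round(t, 4))
```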

Results

Verification of the Vessel Segmentation Ability

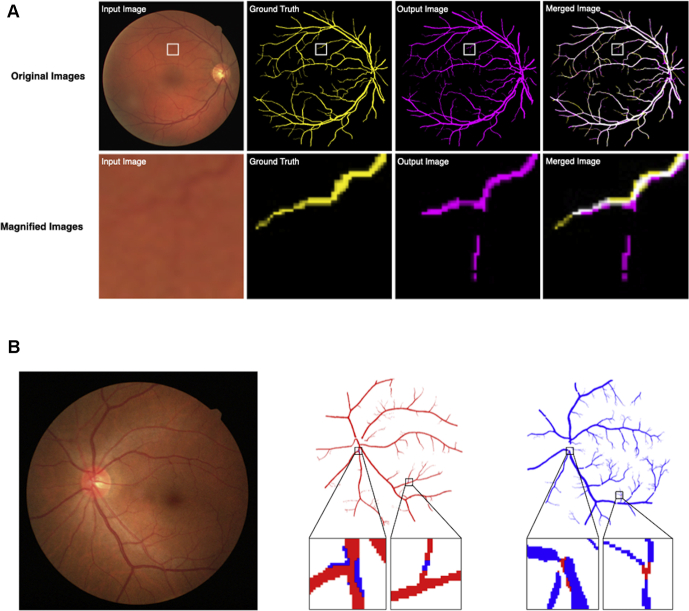

In the present study, the proposed deep learning system output predicted images in which retinal vessels were classified as arterioles or venules (Fig 1C). Using the predicted images, we assessed the accuracy of the newly developed deep learning system in automatically segmenting arterioles and venules directly from fundus images, as reported previously (Fig 2A).26 For vessel segmentation on the DRIVE dataset, the performance metrics were as follows: sensitivity, 0.778; specificity, 0.985; overall accuracy, 0.967; Dice coefficient, 0.800; and AUC, 0.98. These data indicate a favorable vessel segmentation ability of the deep learning system (Table 1). On the Hokudai dataset, the metrics were as follows: sensitivity, 0.833; specificity, 0.994; overall accuracy, 0.983; Dice coefficient, 0.871; and AUC, 0.99.

Figure 2.

Verification of automatic vessel segmentation and arteriovenous classification. A, Representative input image, ground truth image, output image by automatic vessel segmentation, and merged image (top row). In merged images, yellow pixels represent false-negative results, pink pixels represent false-positive results, white pixels represent true-positive results, and black pixels represent true-negative results. Magnified images of the boxed area in each image above appear in the bottom row. B, Representative input image (left). Representative predicted arteriole image (middle): red pixels represent areas labeled as arteriole in the ground truth and predicted as arteriole by the deep learning program; blue pixels represent areas labeled as venule in the ground truth but predicted as arteriole. Representative predicted venule image (right): blue pixels represent areas labeled as venule in the ground truth and predicted as venule by the deep learning program; red pixels represent areas labeled as arteriole in the ground truth but predicted as venule.

Table 1.

Comparison of Vessel Segmentation Algorithms

| Method | Authors | Year | Data Set | Sensitivity | Specificity | Overall Accuracy |
|---|---|---|---|---|---|---|
| Ensemble classifiers-based methods | Orlando et al | 2014 | DRIVE | 0.78 | 0.97 | N/A |
| | Orlando et al | 2017 | DRIVE | 0.79 | 0.97 | N/A |
| | Lupascu et al | 2010 | DRIVE | 0.67 | 0.99 | 0.96 |
| | Fraz et al | 2012 | DRIVE | 0.74 | 0.98 | 0.95 |
| Statistical learning-based methods | Staal et al | 2004 | DRIVE | N/A | N/A | 0.94 |
| | Soares et al | 2006 | DRIVE | N/A | N/A | 0.95 |
| Neural network | Marin et al | 2011 | DRIVE | 0.71 | 0.98 | 0.94 |
| | Vega et al | 2014 | DRIVE | 0.74 | 0.96 | 0.94 |
| | Wang et al | 2015 | DRIVE | 0.82 | 0.97 | 0.98 |
| | Li et al | 2016 | DRIVE | 0.76 | 0.98 | 0.95 |
| | Mo et al | 2017 | DRIVE | 0.78 | 0.98 | 0.95 |
| | Xu et al | 2018 | DRIVE | 0.94 | 0.96 | 0.95 |
| | Yan et al | 2018 | DRIVE | 0.76 | 0.98 | 0.95 |
| Proposed method | | 2021 | DRIVE | 0.78 | 0.99 | 0.97 |

DRIVE = Digital Retinal Images for Vessel Extraction; N/A = not available.

Verification of the Arteriovenous Classification Ability

We assessed the ability of the deep learning system to classify vessels into arterioles and venules using validity indices reported previously (Fig 2B).26 For arteriovenous classification on the Hokudai dataset, the performance metrics were as follows: MISCa, 1.065%; MISCv, 0.930%; and overall accuracy of the arteriovenous classification, 0.99. Compared with the indices of previously reported deep learning systems for classifying arterioles and venules in fundus images,26,28, 29, 30, 31 the current deep learning system also showed favorable arteriovenous classification ability. For further verification, we calculated the number of pixels identified as both arteriole and venule. On average, these overlapping pixels accounted for 0.18% of the total arteriolar area, 0.14% of the total venular area, and 0.006% of all pixels.

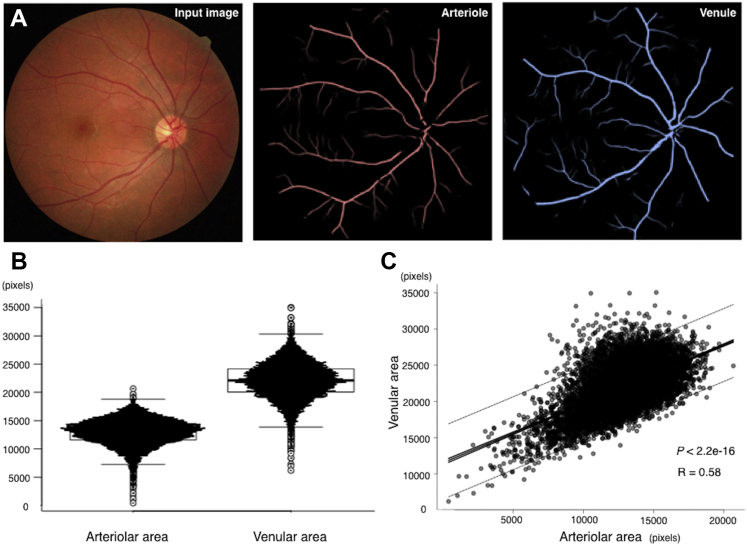

Total Arteriolar and Venular Areas

Using the deep learning system, we automatically measured the total arteriolar and venular areas in fundus images (n = 10 571). The mean total area of arterioles was 12 929 ± 287 pixels per fundus image, whereas that of venules was 22 046 ± 3169 pixels per fundus image (Fig 3A, B). In addition, the arteriolar and venular areas showed a moderate positive correlation (R = 0.59; n = 10 571; P < 0.001; Fig 3C). The repeatability of vascular area measurement was evaluated using 2 consecutive fundus photographs of both eyes of 10 healthy volunteers; the correlation coefficients between paired measurements were R = 0.88 (n = 20; P < 0.001) for the arteriolar area and R = 0.68 (n = 20; P < 0.001) for the venular area.

Figure 3.

Total arteriolar and venular areas. A, Representative visualization of the input image and predicted arteriole and venule images using the proposed deep learning method. B, Graph showing distributions of the total arteriolar and venular areas measured by the proposed algorithm. C, Graph showing correlation between the total arteriolar and venular areas. Solid lines show 95% confidence intervals. Dotted lines show 95% prediction intervals. R = 0.58, n = 10 571, and P < 0.001.

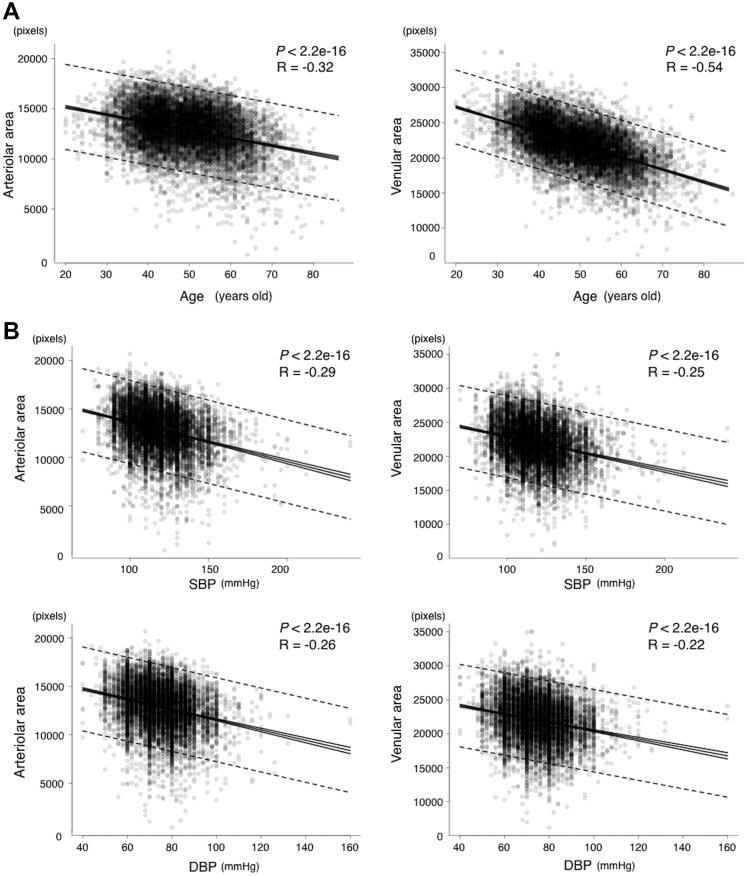

Correlation between the Retinal Vascular Area and Age

To investigate the relationship between the retinal vascular area and age, we assessed the correlation of age with the arteriolar and venular areas separately. Age showed negative correlations with the retinal arteriolar area (R = –0.32; n = 10 571; P < 0.001) and the retinal venular area (R = –0.54; n = 10 571; P < 0.001; Fig 4A).

Figure 4.

Graphs showing the correlation between the retinal vascular area and age or blood pressure. A, Correlation between the total arteriolar area and age (left); R = –0.32, n = 10 571, and P < 0.001. Correlation between the total venular area and age (right); R = –0.54, n = 10 571, and P < 0.001. B, Correlation between (top row) systolic blood pressure (SBP) and the total arteriolar area (R = –0.29, n = 10 571, and P < 0.001) or the total venular area (R = –0.25, n = 10 571, and P < 0.001) and between (bottom row) diastolic blood pressure (DBP) and the total arteriolar area (R = –0.26, n = 10 571, and P < 0.001) or the total venular area (R = –0.22, n = 10 571, and P < 0.001).

Correlation between the Retinal Vascular Area and Blood Pressure

To investigate the relationship between the retinal vascular area and BP, we calculated the correlations of SBP and DBP with the arteriolar and venular areas separately. Systolic BP showed negative correlations with both the retinal arteriolar area (R = –0.29; n = 10 571; P < 0.001) and the retinal venular area (R = –0.25; n = 10 571; P < 0.001; Fig 4B). Similarly, DBP showed negative correlations with both the retinal arteriolar area (R = –0.26; n = 10 571; P < 0.001) and the retinal venular area (R = –0.22; n = 10 571; P < 0.001; Fig 4B).

Arteriovenous Ratio versus Retinal Vascular Area Accuracy as an Index of Blood Pressure and Age

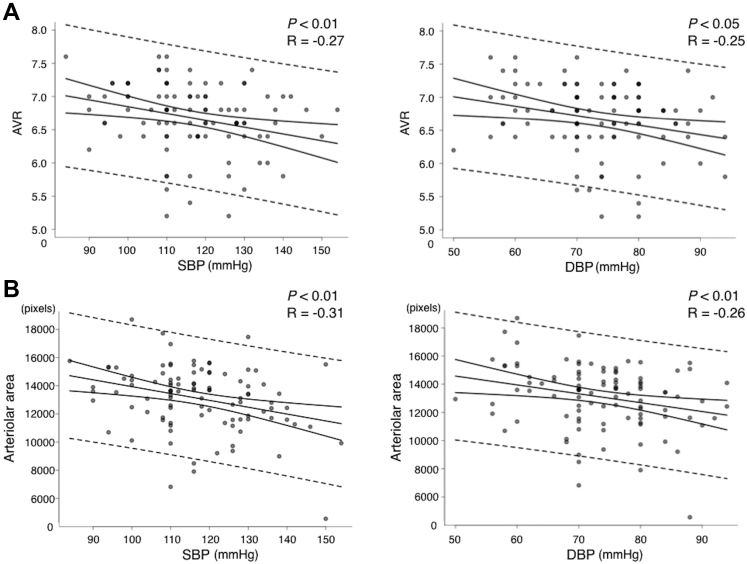

To assess the clinical significance of the retinal vascular area as an index of hypertension-related alterations in retinal vessels, the correlation coefficients between SBP or DBP and the retinal vascular area were compared with those between SBP or DBP and AVR. The AVR, evaluated manually by well-trained ophthalmologists, showed negative correlations with SBP (R = –0.27; n = 102; P < 0.01) and DBP (R = –0.25; n = 102; P < 0.05; Fig 5A). Likewise, the retinal arteriolar area showed negative correlations with SBP (R = –0.31; n = 102; P < 0.01) and DBP (R = –0.26; n = 102; P < 0.01; Fig 5B), suggesting that retinal vascular area measurement by the deep learning architecture could be applied clinically to evaluate hypertension-related vessel alterations. The intergrader intraclass correlation coefficient of the manually evaluated AVR was low (0.104).

Figure 5.

Graphs showing arteriovenous ratio (AVR) versus the arteriolar area as an index of blood pressure. A, Correlations between (left) systolic blood pressure (SBP; R = –0.27, n = 102, and P < 0.01) or (right) diastolic blood pressure (DBP; R = –0.25, n = 102, and P < 0.05) and AVR. B, Correlations between (left) SBP (R = –0.31, n = 102, and P < 0.01) or (right) DBP (R = –0.26, n = 102, and P < 0.01) and the total arteriolar area.

Discussion

In the present study, we investigated the clinical usefulness of a novel deep learning architecture for retinal vessel segmentation and arteriovenous classification that enables 2-dimensional assessment of the retinal vasculature and may provide a more accurate index of hypertension-related vascular changes than pre-existing AVR evaluation methods. Using the architecture, we showed that the retinal venular area is larger than the retinal arteriolar area in fundus images, as expected from previous vascular caliber measurements.32,33 In addition, we found that the correlation between the retinal arteriolar area and BP was stronger than that between manually evaluated AVR and BP. These data indicate that automatic measurement of the retinal vascular area in fundus images could be an alternative index of hypertension-related retinal changes, which have conventionally been evaluated using AVR.

Segmentation and classification of the retinal vasculature are indispensable for the automated measurement of the total areas of retinal arterioles and venules. Several approaches, such as a graph-based approach,28 have been used to develop semiautomated systems for segmenting and discriminating arterioles and venules in fundus images. Subsequently, deep learning strategies were proposed for automated segmentation and discrimination.17,19,20,24,26 Compared with these previous systems, the current architecture achieved comparable accuracy in vessel segmentation and arteriovenous classification. The robustness of our method may reflect the detailed manual annotation of the ground truth images by well-trained ophthalmologists. In addition, the combined models and the multitude of skip connections might have contributed to the high accuracy of the current architecture.

In the present study, using the novel deep learning architecture, we found, as expected, that the retinal venular area was larger than the retinal arteriolar area. Because calculating the area of the retinal vasculature by manual image analysis has been technically impractical, the present work is, to the best of our knowledge, the first attempt to measure the total areas of retinal arterioles and venules. Anatomically, the retinal arterioles and venules depicted in fundus photographs lie within the superficial nerve fiber layer of the retina, and the arteriolar diameter is invariably smaller than that of the venule running parallel to it. Previous image analysis data demonstrated that the retinal venular caliber is larger than the retinal arteriolar caliber. In full-term infants, the mean retinal arteriolar diameter is 85.5 μm, whereas the venular diameter is 130.0 μm.34 The mean retinal arteriolar and venular calibers increase to 162.7 and 226.8 μm, respectively, at 6 years of age,35 and both calibers subsequently decrease after middle age.36 First, in accordance with these previous findings, the current data showed that the retinal venular area is larger than the retinal arteriolar area in fundus images. Second, we found negative correlations between age and the retinal vascular areas. In particular, the venular area showed a stronger correlation with age than the arteriolar area did, possibly because retinal arterioles are more susceptible to systemic variability, such as variations in BP.

Blood pressure is associated with retinal vascular caliber. Narrowing or attenuation of the retinal arterioles has been reported to be proportional to the degree of BP elevation, and AVR evaluation has been used in clinical settings.7,37,38 However, ophthalmoscopic evaluation of retinal AVR has been criticized as subjective and lacking intergrader repeatability, which was also low in this study. More recently, a more generalized method was established to calculate summary indices reflecting the average widths of retinal arterioles and venules, namely, the central retinal artery equivalent and the central retinal vein equivalent. Using these indices, arteriolar narrowing was strongly associated with higher BP,11,39,40 and venular narrowing was also associated with BP elevation, independent of age.41 Our novel indices, the retinal arteriolar and venular areas, showed the same tendencies. Furthermore, our method has several advantages over previous ones. Whereas the central retinal artery and vein equivalents are defined by measuring the widths of retinal vessels between 0.5 and 1 disc diameters from the disc margin, evidence is insufficient to establish that this zone is the optimal region for evaluating retinal vessel alterations caused by systemic disorders. In contrast, our method automatically assessed the vascular areas over the entire fundus photograph, without manual selection of vessel segments. Therefore, vascular area measurement theoretically has the potential to assess the overall condition of the retinal vasculature more accurately than pre-existing methods.
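The summary indices mentioned above, the central retinal artery equivalent (CRAE) and central retinal vein equivalent (CRVE), can be illustrated with the revised Knudtson formulas, which are widely used for these indices. Whether the cited studies used these or the earlier Parr-Hubbard formulas is not stated here, so treat this as an illustrative assumption. The 6 largest vessel widths measured in the zone 0.5 to 1 disc diameters from the disc margin are combined iteratively, pairing the largest with the smallest, with a coefficient of 0.88 for arterioles and 0.95 for venules. The function name and widths are hypothetical.

```python
import math

ARTERIOLE_COEFF = 0.88  # revised Knudtson coefficient for arterioles
VENULE_COEFF = 0.95     # revised Knudtson coefficient for venules


def knudtson_summary(widths, coeff):
    """Combine individual vessel widths into one equivalent caliber.

    Uses the 6 largest widths and iteratively pairs the smallest with the largest,
    combining each pair as coeff * sqrt(w1**2 + w2**2) until one value remains.
    """
    values = sorted(widths, reverse=True)[:6]
    while len(values) > 1:
        values.sort()
        combined = []
        while len(values) > 1:
            smallest = values.pop(0)
            largest = values.pop()
            combined.append(coeff * math.sqrt(smallest ** 2 + largest ** 2))
        combined.extend(values)  # with an odd count, the middle value is carried forward
        values = combined
    return values[0]


# Hypothetical vessel widths (micrometers) measured 0.5 to 1 disc diameters from the disc margin.
arteriole_widths = [120, 112, 105, 98, 95, 90, 70]
venule_widths = [165, 150, 148, 140, 132, 125]

crae = knudtson_summary(arteriole_widths, ARTERIOLE_COEFF)
crve = knudtson_summary(venule_widths, VENULE_COEFF)
print(round(crae, 1), round(crve, 1), round(crae / crve, 2))  # last value: AVR as CRAE/CRVE
```

Only a fixed subset of vessel segments in a ring around the disc enters these indices, which is the restriction that the whole-image area measurement described above avoids.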

A meta-analysis revealed that the association between narrowed retinal arteriolar diameter and BP or hypertension was consistent across different ethnic groups and age groups, from children to older adults, and in both cross-sectional and longitudinal studies.42 In the present study, we also found a robust association between the retinal arteriolar area and elevated BP. Moreover, the arteriolar area showed a stronger correlation with SBP than manually evaluated AVR did, suggesting that our novel approach is at least comparable with manual AVR estimation; a direct comparison with semiautomated calculation of the central retinal artery and vein equivalents was not performed in this study.

This study has several limitations. First, because the deep learning system performs multilabel classification, a single pixel can, although rarely, be classified as both arteriolar and venular when its score exceeds the threshold in both the arteriole and venule output images. Second, the vascular area measurable in fundus images using this architecture was restricted by several conditions, such as the angle of view. Ultra-widefield retinal imaging may extend the current concept of using retinal vessel area instead of vessel caliber; however, the applicability of our deep learning architecture to imaging methods with other settings was not examined in the current study. Third, participants in this study ranged from middle-aged to elderly, because young people generally do not undergo fundus photography at regular health checkups. Investigating the retinal vascular area across a wider age range may clarify the characteristics of this novel index. Finally, in the present study, the vascular area was expressed in pixels rather than in absolute metric units, because refraction and axial length data, neither of which is measured at routine health checkups in Japan, were not available. Further studies are needed to improve this deep learning architecture.

In summary, we developed a novel deep learning architecture for retinal vessel segmentation that showed accuracy comparable with that of previous methods. The automatic classification of vessels into arterioles and venules enables objective, automated assessment of hypertensive alterations of retinal vessels via vascular area measurement. A meta-analysis of longitudinal studies previously demonstrated an association between an antecedent increase in peripheral vascular resistance and the subsequent development of hypertension.42 Therefore, this newly developed deep learning system may be useful for predicting hypertension.

Acknowledgments

The authors thank Drs Mayuko Shinagawa, Saori Inafuku, Yusuke Matsumoto, and Yukiko Seino for volunteering to evaluate arteriovenous ratios.

Manuscript no. D-20-00011.

Footnotes

Disclosure(s): All authors have completed and submitted the ICMJE disclosures form.

The author(s) have made the following disclosure(s): K.F.: Patent – NIDEK Co., Ltd.

M.S.: Patent – NIDEK Co., Ltd.

K.N.: Patent – NIDEK Co., Ltd.

S.I.: Patent – NIDEK Co., Ltd.

Financial support - NIDEK Co., Ltd. (to establish the endowed course “Department of Ocular Circulation and Metabolism”) to the Department of Ophthalmology, Faculty of Medicine and Graduate School of Medicine, Hokkaido University.

Supported by NIDEK Co., Ltd, Tokyo, Japan. NIDEK Co., Ltd (Gamagori, Japan), had no role in the design or conduct of this research.

HUMAN SUBJECTS: Human subjects were included in this study. The institutional review boards for clinical research of the Keijinkai Maruyama Clinic and Hokkaido University Hospital approved the study protocol. The requirement for informed consent was waived because of the retrospective nature of the study. This research adhered to the tenets of the Declaration of Helsinki.

No animal subjects were included in this study.

Author Contributions:

Conception and design: Fukutsu, Saito, Noda, Murata, S.Kase, M.Kase, Ishida

Analysis and interpretation: Fukutsu, Saito, Noda, Shiba, Isogai

Data collection: Fukutsu, Saito, Shiba, Isogai, Asano, Hanawa, Dohke

Obtained funding: N/A; Study was performed as part of regular employment duties at Department of Ocular Circulation and Metabolism, Hokkaido University. No additional funding was provided.

Overall responsibility: Fukutsu, Saito, Noda, Murata, S.Kase, Shiba, Isogai, Asano, Hanawa, Dohke, M.Kase, Ishida

References

- 1. Herrington W., Lacey B., Sherliker P., et al. Epidemiology of atherosclerosis and the potential to reduce the global burden of atherothrombotic disease. Circ Res. 2016;118(4):535–546. doi: 10.1161/CIRCRESAHA.115.307611.

- 2. Kearney P.M., Whelton M., Reynolds K., et al. Global burden of hypertension: analysis of worldwide data. Lancet. 2005;365(9455):217–223. doi: 10.1016/S0140-6736(05)17741-1.

- 3. Mills K.T., Bundy J.D., Kelly T.N., et al. Global disparities of hypertension prevalence and control: a systematic analysis of population-based studies from 90 countries. Circulation. 2016;134(6):441–450. doi: 10.1161/CIRCULATIONAHA.115.018912.

- 4. Global Burden of Disease 2013 Risk Factors Collaborators, Forouzanfar M.H., Alexander L., et al. Global, regional, and national comparative risk assessment of 79 behavioural, environmental and occupational, and metabolic risks or clusters of risks in 188 countries, 1990–2013: a systematic analysis for the Global Burden of Disease Study 2013. Lancet. 2015;386(10010):2287–2323. doi: 10.1016/S0140-6736(15)00128-2.

- 5. Feihl F., Liaudet L., Waeber B. The macrocirculation and microcirculation of hypertension. Curr Hypertens Rep. 2009;11(3):182–189. doi: 10.1007/s11906-009-0033-6.

- 6. Schiffrin E.L. Remodeling of resistance arteries in essential hypertension and effects of antihypertensive treatment. Am J Hypertens. 2004;17(12 Pt 1):1192–1200. doi: 10.1016/j.amjhyper.2004.05.023.

- 7. Stokoe N.L., Turner R.W. Normal retinal vascular pattern. Arteriovenous ratio as a measure of arterial calibre. Br J Ophthalmol. 1966;50(1):21–40. doi: 10.1136/bjo.50.1.21.

- 8. Wong T.Y., Klein R., Sharrett A.R., et al. Retinal arteriolar narrowing and risk of diabetes mellitus in middle-aged persons. JAMA. 2002;287(19):2528–2533. doi: 10.1001/jama.287.19.2528.

- 9. Wang J.J., Liew G., Wong T.Y., et al. Retinal vascular calibre and the risk of coronary heart disease-related death. Heart. 2006;92(11):1583–1587. doi: 10.1136/hrt.2006.090522.

- 10. Cooper L.S., Wong T.Y., Klein R., et al. Retinal microvascular abnormalities and MRI-defined subclinical cerebral infarction: the Atherosclerosis Risk in Communities Study. Stroke. 2006;37(1):82–86. doi: 10.1161/01.STR.0000195134.04355.e5.

- 11. Hubbard L.D., Brothers R.J., King W.N., et al. Methods for evaluation of retinal microvascular abnormalities associated with hypertension/sclerosis in the Atherosclerosis Risk in Communities Study. Ophthalmology. 1999;106(12):2269–2280. doi: 10.1016/s0161-6420(99)90525-0.

- 12. Maloca P.M., Lee A.Y., de Carvalho E.R., et al. Validation of automated artificial intelligence segmentation of optical coherence tomography images. PLoS One. 2019;14(8):e0220063. doi: 10.1371/journal.pone.0220063.

- 13. Yamashita T., Furukawa H., Fujiyoshi H. Multiple skip connections of dilated convolution network for semantic segmentation. Proceedings of the 2018 25th IEEE International Conference on Image Processing. 2018:1593–1597.

- 14. He K., Zhang X., Ren S., Sun J. Deep residual learning for image recognition. Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition. 2016:770–778.

- 15. Staal J., Abramoff M.D., Niemeijer M., et al. Ridge-based vessel segmentation in color images of the retina. IEEE Trans Med Imaging. 2004;23(4):501–509. doi: 10.1109/TMI.2004.825627.

- 16. Fraz M.M., Remagnino P., Hoppe A., et al. An ensemble classification-based approach applied to retinal blood vessel segmentation. IEEE Trans Biomed Eng. 2012;59(9):2538–2548. doi: 10.1109/TBME.2012.2205687.

- 17. Li Q., Feng B., Xie L., et al. A cross-modality learning approach for vessel segmentation in retinal images. IEEE Trans Med Imaging. 2016;35(1):109–118. doi: 10.1109/TMI.2015.2457891.

- 18. Lupascu C.A., Tegolo D., Trucco E. FABC: retinal vessel segmentation using AdaBoost. IEEE Trans Inf Technol Biomed. 2010;14(5):1267–1274. doi: 10.1109/TITB.2010.2052282.

- 19. Marin D., Aquino A., Gegundez-Arias M.E., Bravo J.M. A new supervised method for blood vessel segmentation in retinal images by using gray-level and moment invariants-based features. IEEE Trans Med Imaging. 2011;30(1):146–158. doi: 10.1109/TMI.2010.2064333.

- 20. Mo J., Zhang L. Multi-level deep supervised networks for retinal vessel segmentation. Int J Comput Assist Radiol Surg. 2017;12(12):2181–2193. doi: 10.1007/s11548-017-1619-0.

- 21. Orlando J.I., Blaschko M. Learning fully-connected CRFs for blood vessel segmentation in retinal images. Med Image Comput Comput Assist Interv. 2014;17(Pt 1):634–641. doi: 10.1007/978-3-319-10404-1_79.

- 22. Orlando J.I., Prokofyeva E., Blaschko M.B. A discriminatively trained fully connected conditional random field model for blood vessel segmentation in fundus images. IEEE Trans Biomed Eng. 2017;64(1):16–27. doi: 10.1109/TBME.2016.2535311.

- 23. Soares J.V., Leandro J.J., Cesar Junior R.M., et al. Retinal vessel segmentation using the 2-D Gabor wavelet and supervised classification. IEEE Trans Med Imaging. 2006;25(9):1214–1222. doi: 10.1109/tmi.2006.879967.

- 24. Vega R., Sanchez-Ante G., Falcon-Morales L.E., et al. Retinal vessel extraction using lattice neural networks with dendritic processing. Comput Biol Med. 2015;58:20–30. doi: 10.1016/j.compbiomed.2014.12.016.

- 25. Wang S., Yin Y., Cao G., et al. Hierarchical retinal blood vessel segmentation based on feature and ensemble learning. Neurocomputing. 2015;149:708–717.

- 26. Xu X., Wang R., Lv P., et al. Simultaneous arteriole and venule segmentation with domain-specific loss function on a new public database. Biomed Opt Express. 2018;9(7):3153–3166. doi: 10.1364/BOE.9.003153.

- 27. Yan Z., Yang X., Cheng K.T. A three-stage deep learning model for accurate retinal vessel segmentation. IEEE J Biomed Health Inform. 2019;23(4):1427–1436. doi: 10.1109/JBHI.2018.2872813.

- 28. Dashtbozorg B., Mendonca A.M., Campilho A. An automatic graph-based approach for artery/vein classification in retinal images. IEEE Trans Image Process. 2014;23(3):1073–1083. doi: 10.1109/TIP.2013.2263809.

- 29. Estrada R., Allingham M.J., Mettu P.S., et al. Retinal artery-vein classification via topology estimation. IEEE Trans Med Imaging. 2015;34(12):2518–2534. doi: 10.1109/TMI.2015.2443117.

- 30. Mirsharif Q., Tajeripour F., Pourreza H. Automated characterization of blood vessels as arteries and veins in retinal images. Comput Med Imaging Graph. 2013;37(7–8):607–617. doi: 10.1016/j.compmedimag.2013.06.003.

- 31. Xu X., Ding W., Abramoff M.D., Cao R. An improved arteriovenous classification method for the early diagnostics of various diseases in retinal image. Comput Methods Programs Biomed. 2017;141:3–9. doi: 10.1016/j.cmpb.2017.01.007.

- 32. Antonio P.R., Marta P.S., Luis D.D., et al. Factors associated with changes in retinal microcirculation after antihypertensive treatment. J Hum Hypertens. 2014;28(5):310–315. doi: 10.1038/jhh.2013.108.

- 33. Pakter H.M., Fuchs S.C., Maestri M.K., et al. Computer-assisted methods to evaluate retinal vascular caliber: what are they measuring? Invest Ophthalmol Vis Sci. 2011;52(2):810–815. doi: 10.1167/iovs.10-5876.

- 34. Kandasamy Y., Smith R., Wright I.M. Retinal microvasculature measurements in full-term newborn infants. Microvasc Res. 2011;82(3):381–384. doi: 10.1016/j.mvr.2011.07.011.

- 35. Mitchell P., Liew G., Rochtchina E., et al. Evidence of arteriolar narrowing in low-birth-weight children. Circulation. 2008;118(5):518–524. doi: 10.1161/CIRCULATIONAHA.107.747329.

- 36. Ikram M.K., Ong Y.T., Cheung C.Y., Wong T.Y. Retinal vascular caliber measurements: clinical significance, current knowledge and future perspectives. Ophthalmologica. 2013;229(3):125–136. doi: 10.1159/000342158.

- 37. Scheie H.G. Evaluation of ophthalmoscopic changes of hypertension and arteriolar sclerosis. AMA Arch Ophthalmol. 1953;49(2):117–138. doi: 10.1001/archopht.1953.00920020122001.

- 38. Wagener H.P., Clay G.E., Gipner J.F. Classification of retinal lesions in the presence of vascular hypertension: report submitted to the American Ophthalmological Society by the committee on Classification of Hypertensive Disease of the Retina. Trans Am Ophthalmol Soc. 1947;45:57–73.

- 39. Parr J.C., Spears G.F. General caliber of the retinal arteries expressed as the equivalent width of the central retinal artery. Am J Ophthalmol. 1974;77(4):472–477. doi: 10.1016/0002-9394(74)90457-7.

- 40. Sharrett A.R., Hubbard L.D., Cooper L.S., et al. Retinal arteriolar diameters and elevated blood pressure: the Atherosclerosis Risk in Communities Study. Am J Epidemiol. 1999;150(3):263–270. doi: 10.1093/oxfordjournals.aje.a009997.

- 41. Leung H., Wang J.J., Rochtchina E., et al. Impact of current and past blood pressure on retinal arteriolar diameter in an older population. J Hypertens. 2004;22(8):1543–1549. doi: 10.1097/01.hjh.0000125455.28861.3f.

- 42. Chew S.K., Xie J., Wang J.J. Retinal arteriolar diameter and the prevalence and incidence of hypertension: a systematic review and meta-analysis of their association. Curr Hypertens Rep. 2012;14(2):144–151. doi: 10.1007/s11906-012-0252-0.