Abstract

Lung abnormalities in humans are steadily increasing due to various causes, and early recognition and treatment are strongly recommended. Tuberculosis (TB) is one such lung disease, and because of its incidence and severity, the World Health Organization (WHO) lists TB among the top ten causes of death. Clinical detection of TB is usually performed using biomedical imaging methods, and chest X-ray is a commonly adopted imaging modality. This work aims to develop an automated procedure to detect TB from X-ray images using VGG-UNet-supported joint segmentation and classification. The phases of the proposed scheme are (i) image collection and resizing, (ii) deep-feature mining, (iii) segmentation of the lung section, (iv) local binary pattern (LBP) generation and feature extraction, (v) optimal feature selection using the spotted hyena algorithm (SHA), (vi) serial feature concatenation, and (vii) classification and validation. This research considered 3000 test images (1500 healthy and 1500 TB class) for the assessment, and the proposed experiment is implemented using Matlab®. This work uses pretrained models to detect TB in X-rays with improved accuracy and achieves a classification accuracy of >99% with a fine-tree classifier.

1. Introduction

The healthcare industry faces a heavy diagnostic burden because disease incidence in humans is steadily increasing for various reasons. This burden can be reduced in hospitals by developing and implementing automated disease detection systems using artificial intelligence (AI) [1–5].

The lungs are vital internal organs, and a lung infection can cause severe illness, including death [6–8]. Tuberculosis (TB) is a severe lung disease caused by Mycobacterium tuberculosis (M. tuberculosis), and it can cause serious breathing problems. Therefore, timely detection and treatment of TB are imperative. TB is also a communicable disease that spreads readily, and people with a weak immune system are particularly susceptible to it.

A recent report by the World Health Organization (WHO) lists TB as one of the top 10 causes of death globally and the leading cause of death from a single infectious agent [9]. This report also confirms that TB caused 1.4 million deaths worldwide in 2019, and it estimated that ten million people would be diagnosed with TB. Most people infected with TB (>90%) are adults, and the infection rate is higher in men than in women. A high TB rate in a country is linked to poverty, which brings financial distress, vulnerability, marginalization, and discrimination against TB-infected people. Furthermore, the report states that about a quarter of the world's population is infected with TB. TB is usually curable and preventable when diagnosed early, and more than 85% of people who develop TB can be cured with a 6-month drug regimen [10, 11].

Clinical diagnosis of TB usually involves various tests, including biomedical images. The infected lung section is typically recorded using computed tomography (CT) or radiographs (X-ray). The recorded image is then examined by a computer algorithm or an experienced doctor to identify the severity of TB. Earlier research on TB detection confirms that early diagnosis is essential to reduce the disease burden; hence, researchers have suggested several automated diagnostic procedures [12, 13]. In the literature, TB detection with chest X-rays is widely discussed due to its clinical significance, and several machine learning (ML) and deep learning (DL) procedures have been developed to assess chest X-ray images. DL-supported schemes generally achieve better detection accuracy than ML schemes, and hence, DL-supported TB detection is considered in this research. This research proposes a TB detection framework using a pretrained DL scheme that combines segmentation and classification to achieve better detection, as discussed in [14]. The earlier work by Rahman et al. [14] used UNet for segmentation and pretrained DL schemes for classification, but it did not examine the performance of VGG16; hence, this research attempts TB detection using a VGG-UNet-based technique. The stages of this framework are (i) image collection and resizing, (ii) implementation of the pretrained VGG-UNet to segment the lung section from the X-ray, (iii) collection of deep features (DF), (iv) local binary pattern (LBP) generation using different weights and extraction of LBP-based handcrafted features (HF), (v) spotted hyena algorithm (SHA)-based DF and HF reduction, (vi) generation of a new feature vector by serial concatenation of the features, and (vii) binary classification and validation.

In this work, 3000 test images (1500 healthy and 1500 TB) are collected from the dataset provided by Rahman et al. [14, 15]. Initially, every test image is resized to 224 × 224 × 3 pixels, and the resized images are then evaluated using the pretrained VGG-UNet. UNet is a well-known convolutional neural network (CNN)-based encoder-decoder architecture, and enhancements of this scheme have been reported in the literature. Enhanced variants, such as VGG-UNet [16] and ResNet [17], modify the encoder section using a DL scheme. In the considered VGG-UNet, the well-known VGG16 architecture is used to implement the encoder-decoder assembly, and earlier work on this scheme can be found in [4]. In this work, the encoder section provides the necessary DF, and the decoder section supplies the segmented lung section, from which the HF are extracted. The optimal DF and HF are then identified using the SHA, and serial concatenation combines these optimal features (DF + HF). This feature vector is then used to validate the performance of binary classifiers with 5-fold cross-validation, and the employed scheme achieves a classification accuracy of 99.22% with the fine-tree classifier.

The main contribution of this research includes the following:

Implementation of CNN-based joint segmentation and classification using VGG16

Generation of LBP patterns with various weights

SHA-based feature selection and serial feature concatenation

The remaining sections are arranged as follows: Section 2 reviews related work, Section 3 describes the methodology, and Sections 4 and 5 present the experimental outcome and conclusion of this research.

2. Related Research

Automated disease detection schemes are developed to reduce the diagnostic burden in hospitals, and most of these schemes also support decision-making and treatment planning. In the literature, several ML and DL schemes have been proposed to identify TB from chest X-rays using benchmark and clinically collected images, each aiming to improve detection accuracy. This section summarizes selected procedures employed to examine X-rays, and the relevant information is presented in Table 1.

Table 1.

Summary of automated TB detection schemes employed to examine X-ray images.

| Reference | Developed procedure |
|---|---|
| Rajaraman and Antani [18] | A customized DL system is proposed to examine the Shenzhen CXR images, and it provided an accuracy of 83.7%. However, this work confirms that implementing a customized DL approach is complex and time-consuming. |
| Hwa et al. [19] | TB is detected from X-rays using an ensemble DL system with Canny edge detection, achieving an accuracy of 89.77%, sensitivity of 90.91%, and specificity of 88.64%. However, combining Canny edge detection with the ensemble DL scheme requires additional image preprocessing, which increases the detection time. |
| Wong et al. [20] | A customized DL technique called TB-Net is proposed, achieving an accuracy of 99.86%, sensitivity of 100%, and specificity of 99.71%. However, this custom model is relatively more complex than pretrained models. |
| Hooda et al. [21] | A customized DL method with seven convolutional layers and three fully connected layers is proposed for TB detection, achieving a classification accuracy of 94.73%. |
| Rohilla et al. [22] | The conventional AlexNet and VGG16 networks are employed to examine X-ray images, attaining an accuracy of >81%. |
| Nguyen et al. [23] | The X-ray diagnosis performance of pretrained DL schemes is presented, and the employed technique provides improved TB recognition. |
| Afzali et al. [24] | A contour-based silhouette descriptor technique is employed to detect TB, and the selected features provided an accuracy of 92.86%. |
| Stirenko et al. [25] | CNN-based disease diagnosis with lossless and lossy data augmentation is employed, and the method offers improved TB diagnosis from X-ray images. |
| Rahman et al. [14] | Combined CNN segmentation and classification is presented to identify TB from X-ray images. The classification task is implemented without and with segmentation, achieving TB detection accuracies of 96.47% and 98.6%, respectively. This work also presents a detailed evaluation of TB detection using various pretrained DL methods. |

The research by Rahman et al. [14] employed a combined segmentation and classification concept to improve disease detection performance. This work applied the technique to 7000 images (3500 healthy and 3500 TB class) and presented a detailed examination using various pretrained CNN methods from the literature. Through experimental investigation, it confirmed that joint segmentation and classification lead to better disease diagnosis. Motivated by this, the present work also adopts the joint segmentation and classification concept to examine TB in the database provided by Rahman et al. [15]. Because the earlier work did not employ VGG16 for the segmentation and classification task, this work adopts the VGG-UNet scheme, in which VGG16 acts as the encoder unit. The experimental outcome of this study confirms that the proposed scheme works well on the chest X-ray database and achieves a classification accuracy of >99% with the fine-tree classifier.

3. Methodology

This section describes the scheme developed to identify TB by joint segmentation and classification. First, the necessary test images are collected from the benchmark image database presented by Rahman et al. [15], and every image is resized to 224 × 224 × 3 pixels. Every resized image is then evaluated by the VGG-UNet: the encoder section provides the necessary DF, and the final (SoftMax) layer of the decoder section provides the binary form of the segmented lung section. The encoder unit outputs a DF vector of dimension 1 × 1 × 4096, which is reduced to 1 × 1 × 1024 by the fully connected layers (with two dropout layers at a 50% dropout rate), and these features are further reduced using the SHA to obtain a DF vector of a chosen dimension. The binary image obtained at the decoder section is then combined (pixel-wise multiplication) with its original test image to extract the lung section. The LBP features are extracted from this lung section and further reduced with the SHA. Finally, serial concatenation is implemented to obtain DF + HF, and these features are used to test and validate the performance of the developed system on the considered database.

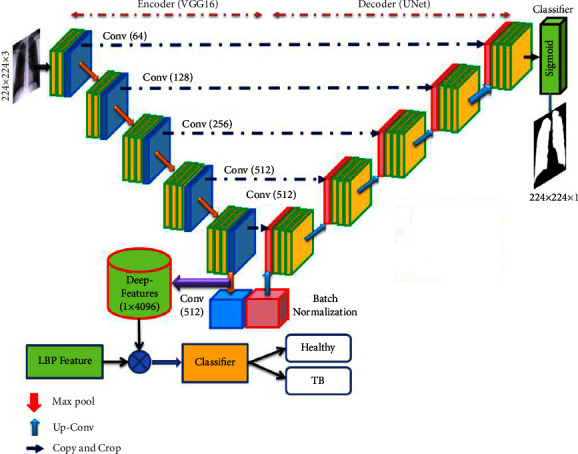

The performance of the proposed scheme is tested using (i) DF alone and (ii) SHA-optimized DF + HF. SoftMax-based binary classification is employed first, and other binary classifiers from the literature are then used to test the performance of the proposed scheme. The stages of this scheme are shown in Figure 1, and the concatenated features are used to classify the X-ray images into healthy/TB classes.

Figure 1.

Joint segmentation and classification implemented for TB detection using X-ray.

3.1. Image Dataset

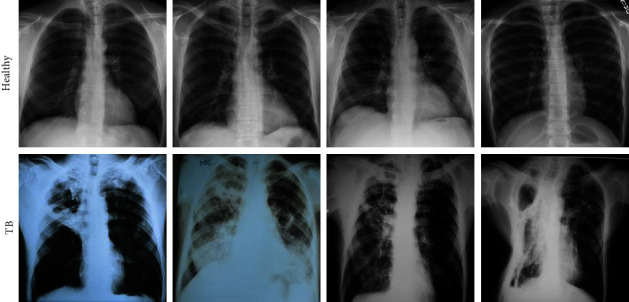

The merit of an automated disease diagnosis system must be tested and verified using clinical-grade or benchmark medical data. In this research, the chest X-ray images provided by Rahman et al. [15] are adopted. From this dataset, 3000 images are collected, of which 1500 belong to the healthy group and the remaining 1500 show TB traces. Every collected image is resized to 224 × 224 × 3 pixels (the input size required by VGG16). For each class, 70% of the images (1050) are used to train the developed scheme, and the remaining 30% (450) are used for validation. The information about the test images is shown in Table 2, and sample test images for the healthy/TB classes are presented in Figure 2.

Table 2.

Chest X-ray image dataset information.

| Class | Dimension | Total images | Training images | Validation images |
|---|---|---|---|---|
| Healthy | 224 × 224 × 3 | 1500 | 1050 | 450 |
| TB | 224 × 224 × 3 | 1500 | 1050 | 450 |

Figure 2.

Sample X-ray images of healthy/TB class.
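The per-class 70/30 split summarized in Table 2 can be sketched with the Python standard library as follows. The index-based representation, the shuffling, and the seed are assumptions, since the text does not state how individual images were assigned to the training and validation subsets.

```python
import random


def per_class_split(labels, train_fraction=0.7, seed=0):
    """Split sample indices into train/validation lists, applying the same fraction to each class."""
    rng = random.Random(seed)
    train, val = [], []
    for cls in sorted(set(labels)):
        idx = [i for i, y in enumerate(labels) if y == cls]
        rng.shuffle(idx)
        cut = round(train_fraction * len(idx))  # 0.7 * 1500 = 1050 per class
        train.extend(idx[:cut])
        val.extend(idx[cut:])
    return train, val


# Hypothetical label list: 0 = healthy, 1 = TB, 1500 images each (as in Table 2).
labels = [0] * 1500 + [1] * 1500
train_idx, val_idx = per_class_split(labels)
print(len(train_idx), len(val_idx))  # 2100 900
```

Splitting each class separately keeps the healthy/TB balance identical in both subsets, which matches the 1050/450 counts reported for both classes.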

3.2. Pretrained VGG-UNet

Deep-learning-supported medical data assessment is a commonly employed technique, and most of these approaches implement automatic segmentation and classification [26–28]. CNN-based segmentation using the traditional UNet [29] and SegNet [30] has been employed in the literature to extract and evaluate the disease-infected section from medical images of various modalities. The limitations of these traditional CNN segmentation schemes can be addressed by building them on pretrained DL schemes, which form the encoder and decoder sections and support feature extraction and segmentation for medical images of a chosen dimension. In this work, the pretrained VGG16 is used to implement the VGG-UNet scheme, and further information about this architecture can be found in [4, 31].

Initially, the considered VGG-UNet is trained using X-ray images with the following settings.

Traditional augmentation (rotation and zoom) to increase the number of training images

A learning rate of 1e-4 for better accuracy

Training with a linear dropout rate (LDR) and Adam optimization

The other vital parameters are assigned as follows: total iterations = 2000, total epochs = 50, dropout rate in the fully connected layers = 50%, and the final layer is a SoftMax unit, with 5-fold cross-validation.
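The text does not specify how the 5-fold cross-validation relates to the 70/30 split, so the standard-library sketch below shows only a generic stratified 5-fold partition. The round-robin assignment and the seed are assumptions; every fold keeps the healthy/TB ratio, and each fold serves once as the held-out set.

```python
import random


def stratified_k_folds(labels, k=5, seed=0):
    """Partition sample indices into k folds, dealing each class round-robin so folds stay balanced."""
    rng = random.Random(seed)
    folds = [[] for _ in range(k)]
    for cls in sorted(set(labels)):
        idx = [i for i, y in enumerate(labels) if y == cls]
        rng.shuffle(idx)
        for j, i in enumerate(idx):
            folds[j % k].append(i)
    return folds


def cross_validation_splits(labels, k=5, seed=0):
    """Yield (train_indices, test_indices); each fold is held out exactly once."""
    folds = stratified_k_folds(labels, k, seed)
    for f in range(k):
        train = [i for g, fold in enumerate(folds) if g != f for i in fold]
        yield train, folds[f]


labels = [0] * 1500 + [1] * 1500  # hypothetical: 0 = healthy, 1 = TB
splits = list(cross_validation_splits(labels, k=5))
for train, test in splits:
    print(len(train), len(test), sum(labels[i] for i in test))  # 2400 600 300
```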

3.3. Feature Extraction

This section presents the outline of the DF and HF extraction procedure.

3.3.1. Deep Feature

The deep features are extracted from the encoder section (VGG16) of the VGG-UNet. This section provides a feature vector of dimension 1 × 1 × 4096, which is passed through three fully connected (FC) layers with a dropout rate of 50% to obtain a reduced feature vector of dimension 1 × 1 × 1024. This vector is the DF, which is then used to classify the X-ray images with a chosen binary classifier. In this work, the classification task is executed using both the conventional DF and the SHA-optimized DF, and the experimental outcome confirms that the optimized DF yields better classification accuracy than the conventional DF.
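The reduction from 1 × 1 × 4096 to 1 × 1 × 1024 comes from the weight shapes of the FC layers; dropout only zeroes activations during training and does not change the vector length. The NumPy sketch below illustrates this with randomly initialized weights. The intermediate widths (2048), the ReLU activation, and the placement of dropout between FC layers are hypothetical, since the text gives only the input size, the output size, the number of FC layers, and the 50% rate.

```python
import numpy as np


def dropout(x, rate, training, rng):
    """Inverted dropout: zero a fraction `rate` of activations during training only."""
    if not training or rate == 0.0:
        return x
    keep = rng.random(x.shape) >= rate
    return x * keep / (1.0 - rate)  # rescale so the expected activation is unchanged


def reduce_deep_features(x, layers, rate=0.5, training=False, rng=None):
    """Pass a 1 x 4096 encoder vector through FC+ReLU layers; W.shape[1] sets each output width."""
    if rng is None:
        rng = np.random.default_rng()
    for i, (W, b) in enumerate(layers):
        x = np.maximum(x @ W + b, 0.0)  # FC layer followed by ReLU
        if i < len(layers) - 1:
            x = dropout(x, rate, training, rng)  # 50% dropout between FC layers (training only)
    return x


# Hypothetical widths: three FC layers 4096 -> 2048 -> 2048 -> 1024.
rng = np.random.default_rng(0)
sizes = [4096, 2048, 2048, 1024]
layers = [(rng.normal(0.0, 0.01, (m, n)).astype(np.float32), np.zeros(n, dtype=np.float32))
          for m, n in zip(sizes[:-1], sizes[1:])]
encoder_out = rng.random((1, 4096)).astype(np.float32)
df = reduce_deep_features(encoder_out, layers)
print(df.shape)  # (1, 1024)
```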

3.3.2. Handcrafted Feature

HF are commonly used in ML-based automatic disease detection systems, and in this work, the HF are obtained using LBP with various weights, as discussed in [32]. The HF are extracted from the chosen X-ray as follows: the implemented VGG-UNet extracts the lung section in binary form, and this binary image is combined with its original test image to obtain the lung section without artifacts. The LBP patterns of this lung image are then generated with weights W = 1, 2, 3, and 4, LBP features of dimension 1 × 1 × 59 are extracted from each pattern, and the extracted features are then optimized using the SHA.

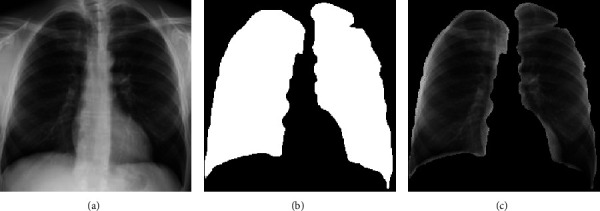

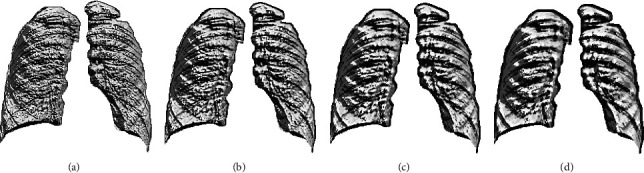

Figure 3 depicts the artifact-removal procedure. Figures 3(a) and 3(b) show the sample test image and the binary region extracted by the decoder section of the VGG-UNet. Combining Figures 3(a) and 3(b) by pixel-wise multiplication yields Figure 3(c), which is the lung section without artifacts. This section is then used to generate the LBP patterns with various weights. The LBP patterns generated for a sample image are presented in Figure 4, in which Figures 4(a)–4(d) depict the outcome for the chosen weights. Every pattern provides 59 one-dimensional (1D) features, giving a total of 236 LBP features (59 × 4), which are then reduced using the SHA to avoid overfitting in X-ray classification. Further information about this task can be found in earlier research works [4, 16].

Figure 3.

The result achieved with VGG-UNet and the extracted lung section. (a) Test image, (b) binary image, (c) section to be examined.

Figure 4.

Generated LBP pattern of X-ray for various weights. (a) W = 1, (b) W = 2, (c) W = 3, (d) W = 4.
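A minimal NumPy sketch of the masking and LBP steps described in Section 3.3.2 is given below. Several details are assumptions: the weight W is interpreted as the neighborhood radius (a hypothetical reading, since W is not defined in the text), eight neighbors are sampled on a square ring without interpolation, and the 59 bins come from the standard uniform-pattern mapping (58 uniform codes plus one shared bin for all non-uniform codes), which is one common way to obtain 59 LBP features. Background pixels zeroed by the mask are kept in the histogram.

```python
import numpy as np


def uniform_lbp_table():
    """Map each 8-bit LBP code to one of 59 bins: 58 uniform codes, plus bin 58 for all non-uniform codes."""
    table = np.full(256, 58, dtype=np.int64)
    next_bin = 0
    for code in range(256):
        bits = [(code >> i) & 1 for i in range(8)]
        transitions = sum(bits[i] != bits[(i + 1) % 8] for i in range(8))
        if transitions <= 2:  # uniform: at most two circular 0/1 transitions
            table[code] = next_bin
            next_bin += 1
    return table


UNIFORM_TABLE = uniform_lbp_table()


def lbp_histogram(img, radius):
    """Normalized 59-bin uniform-LBP histogram; 8 neighbors at a square offset of `radius` pixels."""
    img = np.asarray(img, dtype=float)
    h, w = img.shape
    r = radius
    centre = img[r:h - r, r:w - r]
    offsets = [(-r, -r), (-r, 0), (-r, r), (0, r), (r, r), (r, 0), (r, -r), (0, -r)]
    codes = np.zeros(centre.shape, dtype=np.int64)
    for bit, (dy, dx) in enumerate(offsets):
        neighbour = img[r + dy:h - r + dy, r + dx:w - r + dx]
        codes |= (neighbour >= centre).astype(np.int64) << bit  # set bit if neighbor >= centre
    hist = np.bincount(UNIFORM_TABLE[codes].ravel(), minlength=59).astype(float)
    return hist / hist.sum()


def lung_lbp_features(xray, lung_mask, weights=(1, 2, 3, 4)):
    """Mask the X-ray pixel-wise, then concatenate one 59-bin histogram per weight (4 x 59 = 236)."""
    lung = np.asarray(xray, dtype=float) * (np.asarray(lung_mask) > 0)
    return np.concatenate([lbp_histogram(lung, w) for w in weights])


rng = np.random.default_rng(0)
xray = rng.random((64, 64))           # stand-in for a grayscale X-ray
mask = np.zeros((64, 64))
mask[8:56, 8:56] = 1                  # stand-in for the VGG-UNet binary lung mask
features = lung_lbp_features(xray, mask)
print(features.shape)  # (236,)
```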

3.4. Feature Reduction with Spotted Hyena Algorithm

Metaheuristic algorithms (MA) are adopted in the literature to find optimal solutions for various real-world problems. Earlier works on medical image assessment confirm that MA are widely used in various image examination tasks, such as thresholding, segmentation, and feature selection [33, 34]. MA-based feature selection has already been discussed for various ML and DL techniques, and this procedure helps to obtain an optimal feature vector that avoids overfitting during automated classification. MA-based feature selection can be used as an alternative to the traditional feature reduction procedures discussed in [35].

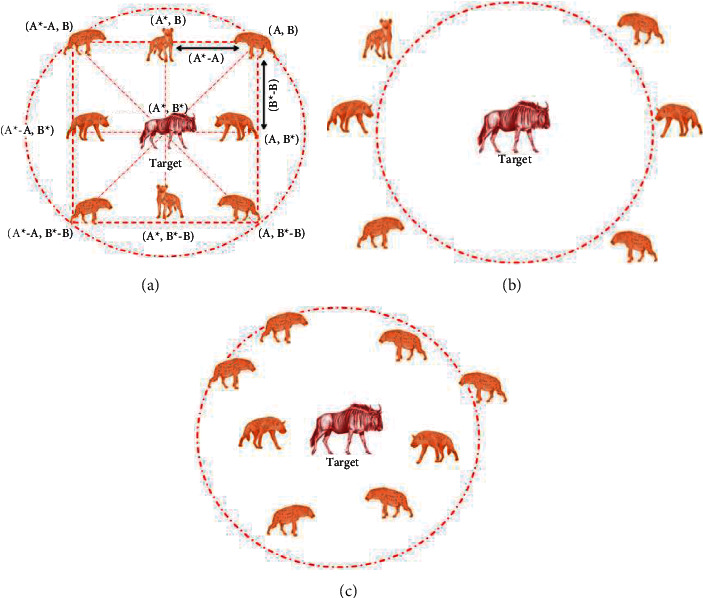

In this work, the feature reduction task is implemented for both the DF and HF using the SHA, a nature-inspired procedure introduced in 2017 that mimics the hunting behavior of spotted hyena (SH) packs. Spotted hyenas are skilled animals that hunt in packs, and this operation consists of the following stages: (i) searching for and tracking the prey, (ii) chasing the prey, (iii) encircling the prey, and (iv) killing. The mathematical model developed by Dhiman and Kumar [36, 37] considers all of these constraints to improve the convergence capability of the SHA. A similar algorithm, known as the Dingo optimizer, has also been developed and implemented by Bairwa et al. [38].

The stages of the SHA are depicted in Figure 5, in which Figures 5(a)–5(c) present encircling, hunting, and attacking the prey, respectively. The operation is as follows: the leader of the pack identifies the prey, and the leader and its pack chase it until it is tired. When the prey is tired, the leader and its group encircle it, as depicted in Figure 5, and every group member adjusts its location relative to the prey. This process is depicted in the figure using the notations A and B, and the adjustment is carried out in the algorithm using mathematical operations such as multiplication and subtraction.

Figure 5.

Various stages of SHA. (a) Encircling, (b) hunting, (c) attacking.

The encircling process is mathematically represented as follows:

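$$\vec{D}_h = \left|\vec{B}\cdot\vec{P}_p(x) - \vec{P}(x)\right| \tag{1}$$

$$\vec{P}(x+1) = \vec{P}_p(x) - \vec{E}\cdot\vec{D}_h \tag{2}$$

where $\vec{D}_h$ = distance between the hyena and the prey, $x$ = current iteration, $\vec{P}_p$ = position vector of the prey, and $\vec{P}$ = position vector of the hyena.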

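The coefficient vectors $\vec{B}$ and $\vec{E}$ are computed as follows:

$$\vec{B} = 2\cdot\vec{rd}_1 \tag{3}$$

$$\vec{E} = 2\vec{h}\cdot\vec{rd}_2 - \vec{h} \tag{4}$$

$$\vec{h} = 5 - \left(x \times \frac{5}{Iter_{max}}\right) \tag{5}$$

where $Iter_{max}$ = maximum number of iterations, $\vec{h}$ = a value that decreases linearly from 5 to 0 over the iterations, and $\vec{rd}_1$, $\vec{rd}_2$ = random vectors with components in [0, 1].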

In Figure 5, the hyena at position (A, B) adjusts its location towards the prey at (A∗, B∗) based on the values of Eqs. (3) to (5).

In the hunting stage, the hyena pack will move close to the prey and proceed for the attack. This phase is represented as follows:

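$$\vec{D}_h = \left|\vec{B}\cdot\vec{P}_h - \vec{P}_k\right| \tag{6}$$

$$\vec{P}_k = \vec{P}_h - \vec{E}\cdot\vec{D}_h \tag{7}$$

$$\vec{C}_h = \vec{P}_k + \vec{P}_{k+1} + \cdots + \vec{P}_{k+N} \tag{8}$$

where $\vec{P}_h$ = position of the leader, which moves closer to the prey, $\vec{P}_k$ = positions of the other hyenas in the pack, $\vec{C}_h$ = cluster of optimal solutions, and N = total number of hyenas in the pack.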

In the attacking phase, the leader moves in and attacks the prey, the other hyenas in the group follow the same technique, and the group attack kills the prey. When the prey is dead, every hyena in the pack is on or near the prey. This process represents the convergence of the search agents towards the optimal location, as expressed in Eq. (9).

$$\vec{P}(x+1) = \frac{\vec{C}_h}{N} \tag{9}$$

where $\vec{P}(x+1)$ is the best position, towards which every hyena of the pack converges. In this work, the SHA is initiated with the following parameters: number of hyenas (agents) N = 20, search dimension D = 2, $Iter_{max}$ = 2000, and stopping criterion = maximization of the Cartesian distance (CD) between features or reaching $Iter_{max}$.
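To make Eqs. (1)–(9) concrete, the NumPy sketch below implements a simplified spotted-hyena search that minimizes a continuous function. It is not Dhiman and Kumar's reference implementation: the leader is simply the best position found so far, Eqs. (8)–(9) are approximated by averaging a best-ranked cluster of five hyenas (a hypothetical cluster size), positions are clipped to the search bounds, and only 200 iterations are used for a quick demonstration. A maximization criterion such as the CD can be handled by minimizing its negative.

```python
import numpy as np


def sho_minimize(f, dim, lower, upper, n_agents=20, max_iter=200, cluster_size=5, seed=0):
    """Simplified spotted-hyena search (after Eqs. (1)-(9)); returns (best_position, best_value)."""
    rng = np.random.default_rng(seed)
    P = rng.uniform(lower, upper, size=(n_agents, dim))      # hyena positions
    fit = np.array([f(p) for p in P])
    leader, leader_fit = P[fit.argmin()].copy(), fit.min()   # P_h: best position found so far
    for x in range(max_iter):
        h = 5.0 - x * (5.0 / max_iter)                       # Eq. (5): decreases linearly from 5 to 0
        B = 2.0 * rng.random((n_agents, dim))                # Eq. (3)
        E = 2.0 * h * rng.random((n_agents, dim)) - h        # Eq. (4)
        D = np.abs(B * leader - P)                           # Eqs. (1)/(6): distance to the leader
        P = np.clip(leader - E * D, lower, upper)            # Eqs. (2)/(7): reposition around the leader
        fit = np.array([f(p) for p in P])
        # Eqs. (8)-(9): the mean of the best-ranked cluster is tried as an extra candidate
        centroid = P[np.argsort(fit)[:cluster_size]].mean(axis=0)
        for cand, cand_fit in ((P[fit.argmin()], fit.min()), (centroid, f(centroid))):
            if cand_fit < leader_fit:                        # keep the best solution found (elitism)
                leader, leader_fit = cand.copy(), cand_fit
    return leader, float(leader_fit)


def sphere(p):
    """Test objective with its minimum value 0 at the origin."""
    return float(np.sum(p ** 2))


best_pos, best_val = sho_minimize(sphere, dim=2, lower=-10.0, upper=10.0)
print(best_pos, best_val)
```

Because the update is scaled by the distance to the leader and by the shrinking coefficient h, the pack explores widely in early iterations and contracts around the leader near the end, which is the behavior Figure 5 depicts.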

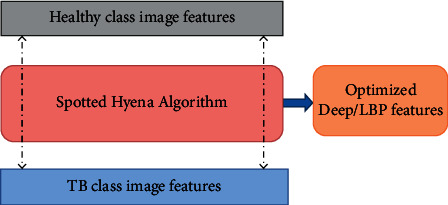

The feature reduction with SHA is graphically depicted in Figure 6: this task selects the image features of the healthy/TB classes based on the maximal CD, a procedure already discussed in earlier works [5, 34, 39]. As depicted in Figure 6, the SHA-based feature selection is employed separately on the DF and HF to identify the optimal features, which are then serially concatenated and used for classifier training and validation.

Figure 6.

The SHA-based feature selection process.
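The text states that features are selected by maximizing the Cartesian distance (CD) between the healthy and TB classes but does not give the fitness function. One hypothetical formulation is sketched below: a candidate subset is scored by the Euclidean distance between the class-mean vectors of the selected features, divided by the square root of the subset size, because the raw distance can only grow as features are added and would otherwise favor selecting every feature. The thresholded encoding of an agent position into a feature mask is also an assumption and does not reproduce the search dimension D = 2 stated above.

```python
import numpy as np


def cd_fitness(mask, X_healthy, X_tb):
    """Size-normalized Euclidean distance between class-mean vectors of the selected features."""
    mask = np.asarray(mask, dtype=bool)
    k = int(mask.sum())
    if k == 0:
        return 0.0
    diff = X_healthy[:, mask].mean(axis=0) - X_tb[:, mask].mean(axis=0)
    return float(np.linalg.norm(diff) / np.sqrt(k))


def position_to_mask(position, threshold=0.5):
    """Hypothetical binarization of an agent position in [0, 1]^d into a feature-selection mask."""
    return np.asarray(position) > threshold


# Toy data: only feature 0 separates the two classes.
X_healthy = np.zeros((4, 3))
X_tb = np.zeros((4, 3))
X_tb[:, 0] = 3.0
print(cd_fitness([1, 0, 0], X_healthy, X_tb))  # 3.0
print(cd_fitness([1, 1, 1], X_healthy, X_tb))  # about 1.732
```

A metaheuristic such as the SHA could then search over agent positions, converting each to a mask and minimizing the negative of this fitness.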

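The numbers of DF and HF available for optimization are given by the following equations:

$$DF_{(1\times 1024)} = \{DF_{(1,1)}, DF_{(1,2)}, \ldots, DF_{(1,1024)}\} \tag{10}$$

$$LBP1_{(1\times 59)} = \{LBP1_{(1,1)}, LBP1_{(1,2)}, \ldots, LBP1_{(1,59)}\} \tag{11}$$

$$LBP2_{(1\times 59)} = \{LBP2_{(1,1)}, LBP2_{(1,2)}, \ldots, LBP2_{(1,59)}\} \tag{12}$$

$$LBP3_{(1\times 59)} = \{LBP3_{(1,1)}, LBP3_{(1,2)}, \ldots, LBP3_{(1,59)}\} \tag{13}$$

$$LBP4_{(1\times 59)} = \{LBP4_{(1,1)}, LBP4_{(1,2)}, \ldots, LBP4_{(1,59)}\} \tag{14}$$

$$HF_{(1\times 236)} = LBP1_{(1\times 59)} + LBP2_{(1\times 59)} + LBP3_{(1\times 59)} + LBP4_{(1\times 59)} \tag{15}$$

where LBP1 to LBP4 denote the LBP features obtained with W = 1 to 4, and "+" denotes serial concatenation.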

During the feature reduction process, the DF in Eq. (10) and the HF in Eq. (15) are examined by the SHA, and the reduced features are serially concatenated to obtain a hybrid feature vector (DF + HF). In this work, the SHA reduces the DF to 1 × 1 × 427 and the HF to 1 × 1 × 166, and these features are combined as in Eq. (16) to obtain the new feature vector of dimension 1 × 1 × 593.

$$(DF+HF)_{(1\times 593)} = DF_{(1\times 427)} + HF_{(1\times 166)} \tag{16}$$

The feature vector presented in Eq. (16) is then used to train and test the classifiers considered in this study, which include SoftMax, Naïve Bayes (NB), random forest (RF), decision tree (DT) variants, K-nearest neighbors (KNN) variants, and SVM with a linear kernel [40–43].
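Serial concatenation as in Eq. (16) places the 427 selected DF and the 166 selected HF side by side, giving 593 features per image. The NumPy sketch below also z-scores each column; this normalization is an added assumption (the text does not mention it) that keeps the DF and HF blocks on comparable scales for distance-based classifiers such as KNN.

```python
import numpy as np


def build_feature_matrix(df_selected, hf_selected):
    """Serially concatenate per-image DF and HF rows, e.g. (n x 427) and (n x 166) -> (n x 593)."""
    X = np.hstack([df_selected, hf_selected])
    # Assumption: z-score each column so the DF and HF blocks have comparable scales.
    mean, std = X.mean(axis=0), X.std(axis=0)
    return (X - mean) / np.where(std > 0, std, 1.0)


rng = np.random.default_rng(0)
X = build_feature_matrix(rng.random((10, 427)), rng.random((10, 166)))  # 10 hypothetical images
print(X.shape)  # (10, 593)
```

In a cross-validated setting, the column means and standard deviations should be computed on the training fold only and reused on the held-out fold.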

3.5. Performance Validation

The merit of an automated disease detection system must be verified by computing the relevant performance measures. In this work, measures derived from the confusion matrix are used to confirm the quality of the proposed scheme. These include the counts of true positives (TP), false negatives (FN), true negatives (TN), and false positives (FP), together with accuracy (ACC), precision (PRE), sensitivity (SEN), specificity (SPE), and negative predictive value (NPV). The mathematical expressions for these measures are presented in the following equations [42–46]:

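$$ACC = \frac{TP + TN}{TP + TN + FP + FN} \tag{17}$$

$$PRE = \frac{TP}{TP + FP} \tag{18}$$

$$SEN = \frac{TP}{TP + FN} \tag{19}$$

$$SPE = \frac{TN}{TN + FP} \tag{20}$$

$$NPV = \frac{TN}{TN + FN} \tag{21}$$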
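The measures in Eqs. (17)–(21) follow directly from the confusion-matrix counts. The short sketch below computes them as percentages and, as a check, reproduces the fine-tree row of Table 4.

```python
def confusion_metrics(tp, fn, tn, fp):
    """Return Eqs. (17)-(21) as percentages: ACC, PRE, SEN, SPE, NPV."""
    return {
        "ACC": 100.0 * (tp + tn) / (tp + tn + fp + fn),
        "PRE": 100.0 * tp / (tp + fp),
        "SEN": 100.0 * tp / (tp + fn),
        "SPE": 100.0 * tn / (tn + fp),
        "NPV": 100.0 * tn / (tn + fn),
    }


# Fine-tree row of Table 4: TP = 446, FN = 4, TN = 447, FP = 3.
metrics = confusion_metrics(446, 4, 447, 3)
print({k: round(v, 2) for k, v in metrics.items()})
# {'ACC': 99.22, 'PRE': 99.33, 'SEN': 99.11, 'SPE': 99.33, 'NPV': 99.11}
```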

4. Results and Discussion

This section presents the experimental outcome of this research. This work is executed on a workstation with an Intel i7 2.9 GHz processor, 20 GB RAM, and 4 GB VRAM, equipped with Matlab®.

Initially, the pretrained VGG-UNet scheme is trained using the resized chest X-ray images until it extracts the lung section with adequate accuracy. After training, its segmentation performance is tested using test images, and the outcome is recorded. The extracted section is then combined with the original image to obtain the lung section without artifacts, from which the LBP images are generated and the HF are extracted. Similarly, the DF are extracted from the encoder section (VGG16) and passed through the fully connected layers to obtain a feature vector of size 1 × 1 × 1024. This procedure is equivalent to employing a traditional VGG16 scheme; this feature is first used to classify the images with a SoftMax classifier, and the resulting performance is recorded.

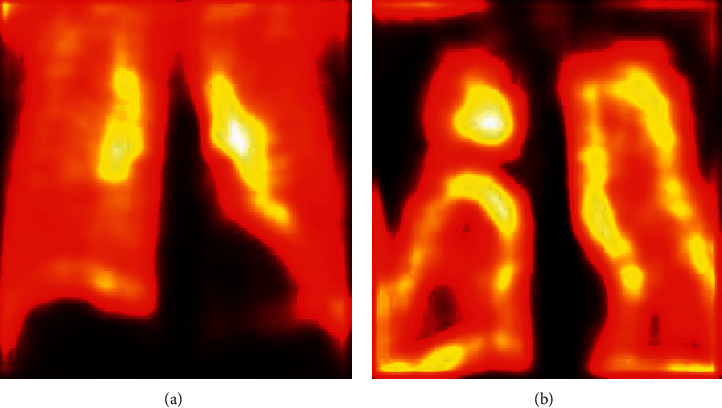

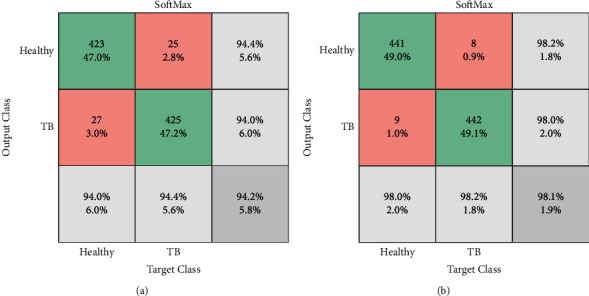

During the convolutional operation, the layers of the VGG-UNet recognize the image features that support feature extraction and segmentation. Sample test-image textures identified during a convolutional operation are presented in Figure 7, in which Figures 7(a) and 7(b) depict the hot color map images obtained for healthy and TB class sample images, respectively. After the deep features are extracted from the test images (with a VGG16-like scheme), classification is implemented using the SoftMax classifier with 5-fold cross-validation, and the results are presented in Table 3. This table confirms that when the DF vector of dimension 1 × 1 × 1024 is considered, SoftMax provides a best-fold classification accuracy of 94.22%. This procedure is then repeated using the SHA-selected DF + HF presented in Eq. (16), and the resulting confusion matrices (CM) are presented in Figure 8. Figure 8(a) shows the CM of the DF case, and Figure 8(b) shows the CM of the optimized DF + HF, confirming that the proposed method achieves higher accuracy than the traditional technique. Hence, the performance of the proposed scheme is further examined with various binary classifiers using the DF + HF.

Figure 7.

Sample test images obtained during the convolution operation. (a) Normal, (b) TB.

Table 3.

Classification result achieved with DF alone for a 5-fold cross-validation.

| Fold | TP | FN | TN | FP | ACC (%) | PRE (%) | SEN (%) | SPE (%) | NPV (%) |
|---|---|---|---|---|---|---|---|---|---|
| Fold 1 | 418 | 32 | 414 | 36 | 92.44 | 92.07 | 92.89 | 92.00 | 92.82 |
| Fold 2 | 421 | 29 | 419 | 31 | 93.33 | 93.14 | 93.56 | 93.11 | 93.53 |
| Fold 3 | 420 | 30 | 424 | 26 | 93.78 | 94.17 | 93.33 | 94.22 | 93.39 |
| Fold 4 | 423 | 27 | 425 | 25 | 94.22 | 94.42 | 94.00 | 94.44 | 94.03 |
| Fold 5 | 421 | 29 | 423 | 27 | 93.78 | 93.97 | 93.56 | 94.00 | 93.58 |

Figure 8.

Confusion matrix attained with traditional and optimized features. (a) DF with SoftMax, (b) DF + HF with SoftMax.

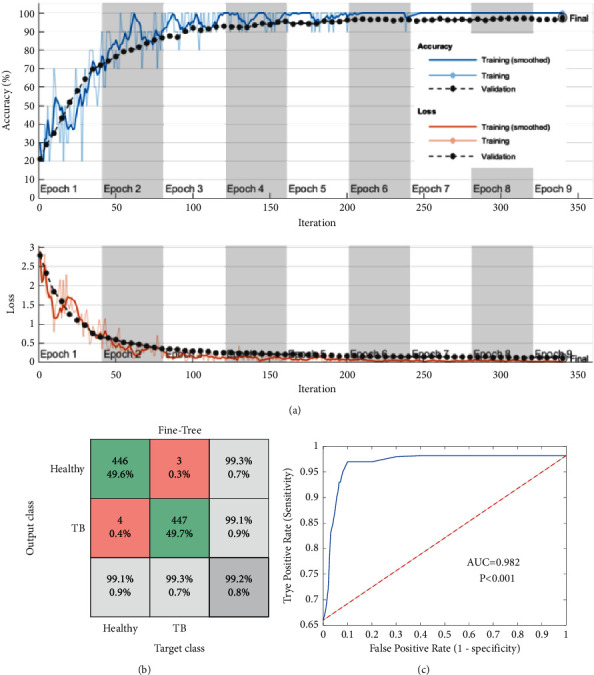

Figure 9 presents the experimental outcome achieved with the DT variant known as the fine-tree classifier. Figure 9(a) depicts the convergence of the search, and Figures 9(b) and 9(c) show the CM and the receiver operating characteristic (ROC) curve, respectively. The results achieved with the other chosen classifiers are presented in Table 4, which confirms that the classification accuracy of the fine tree is >99%, higher than that of the other techniques. To verify the performance of the proposed scheme, its best result is compared with the results of Rahman et al. [14], confirming that the proposed joint segmentation and classification scheme with SHA-selected DF + HF achieves a better outcome than the earlier work.

Figure 9.

Investigational outcome of the fine-tree classifier with DF + HF. (a) Convergence, (b) confusion matrix, (c) ROC.

Table 4.

Classification results attained with the SHA-selected DF + HF for various binary classifiers.

| Classifier | TP | FN | TN | FP | ACC (%) | PRE (%) | SEN (%) | SPE (%) | NPV (%) |
|---|---|---|---|---|---|---|---|---|---|
| SoftMax | 441 | 9 | 442 | 8 | 98.11 | 98.22 | 98.00 | 98.22 | 98.00 |
| NB | 442 | 8 | 443 | 7 | 98.33 | 98.44 | 98.22 | 98.44 | 98.23 |
| RF | 441 | 9 | 443 | 7 | 98.22 | 98.44 | 98.00 | 98.44 | 98.01 |
| Coarse tree | 442 | 8 | 442 | 8 | 98.22 | 98.22 | 98.22 | 98.22 | 98.22 |
| Medium tree | 442 | 8 | 444 | 6 | 98.44 | 98.66 | 98.22 | 98.67 | 98.23 |
| Fine tree | 446 | 4 | 447 | 3 | 99.22 | 99.33 | 99.11 | 99.33 | 99.11 |
| Coarse KNN | 445 | 5 | 446 | 4 | 99.00 | 99.11 | 98.89 | 99.11 | 98.89 |
| Medium KNN | 444 | 6 | 445 | 5 | 98.78 | 98.88 | 98.67 | 98.89 | 98.66 |
| Fine KNN | 447 | 3 | 442 | 8 | 98.78 | 98.24 | 99.33 | 98.22 | 99.32 |
| SVM linear | 441 | 9 | 445 | 5 | 98.44 | 98.88 | 98.00 | 98.89 | 98.02 |

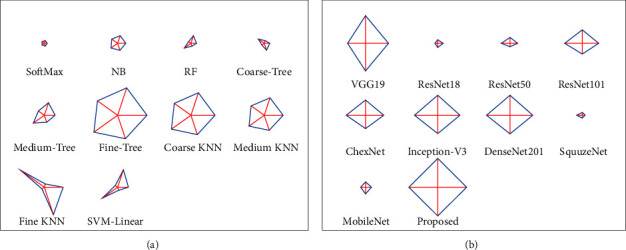

Table 4 confirms that the fine tree outperforms the other binary classifiers, and the coarse KNN achieves the second-highest classification accuracy (99.00%). This confirms that classification supported by the optimized DF + HF yields a better overall result, as presented in Figure 10. Figure 10(a) shows the glyph plot of the overall performance of the binary classifiers, in which the pattern covering the maximum area is considered superior. Figure 10(b) compares the ACC, PRE, SEN, and SPE of the earlier research by Rahman et al. [14] with the fine-tree result, and this comparison confirms that the proposed system's outcome is better.

Figure 10.

Overall performance of TB detection system demonstrated with glyph plot. (a) Results with DF + HF, (b) comparison with earlier work.

This research implemented a joint segmentation and classification scheme to detect TB from chest X-rays with better accuracy. The main limitation of the proposed scheme is that it considered the artifact-removed image for getting the necessary HF from the LBP images. In the future, the LBP can be combined with other HF existing in the literature. Furthermore, the performance of the proposed scheme can be tested and verified with other benchmark chest X-ray images with various lung abnormalities.

5. Conclusion

TB is a severe disease that mainly affects the lungs, and early diagnosis and treatment help to reduce its severity. Furthermore, timely detection and the recommended medication can cure TB completely. Because of its significance, a considerable number of research works have been performed to support automated diagnosis. This research develops and implements a joint segmentation and classification scheme with the help of a pretrained VGG-UNet. The VGG-UNet consists of an encoder-decoder assembly, in which the encoder provides the DL features as in the traditional VGG16 system, and the decoder, together with its SoftMax layer, extracts the binary form of the lung image. This work used the LBP patterns of the lung image, generated with varied weights, to extract the HF, yielding a 1D feature vector of size 236. The extracted DF and HF are then optimized using the SHA and serially concatenated to obtain the feature vector (DF + HF). This feature vector is used to test and validate the performance of binary classifiers using 5-fold cross-validation. The experimental outcome confirms that the fine-tree classifier achieves an accuracy of >99% on the considered chest X-ray images. This result is compared with the results of other DL methods available in the literature, confirming the merit of the proposed DF + HF-based TB detection from chest X-ray images. In the future, this scheme can be enhanced with other HF available in the literature, and its performance can be tested and validated on other chest X-ray datasets.

Acknowledgments

This work was supported in part by the National Research Foundation of Korea (NRF) grant funded by the Korean government (MSIT) (No. 2020R1G1A1099559).

Data Availability

The X-ray images considered in this research work can be accessed from https://ieee-dataport.org/documents/tuberculosis-tb-chest-x-ray-database.

Conflicts of Interest

The authors declare that they have no conflicts of interest to report regarding the present study.

References

- 1. Kumar V., Singh D., Kaur M., Damaševičius R. Overview of current state of research on the application of artificial intelligence techniques for COVID-19. PeerJ Computer Science. 2021;7:e564. doi: 10.7717/peerj-cs.564.

- 2. Chouhan V., Singh S. K., Khamparia A., et al. A novel transfer learning based approach for pneumonia detection in chest X-ray images. Applied Sciences. 2020;10(2):559. doi: 10.3390/app10020559.

- 3. Priya S. J., Rani A. J., Subathra M. S. P., Mohammed M. A., Damaševičius R., Ubendran N. Local pattern transformation based feature extraction for recognition of Parkinson's disease based on gait signals. Diagnostics. 2021;11(8):1395. doi: 10.3390/diagnostics11081395.

- 4. Rajinikanth V., Kadry S., Nam Y. Convolutional-Neural-Network assisted segmentation and SVM classification of brain tumor in clinical MRI slices. Information Technology and Control. 2021;50(2):342–356. doi: 10.5755/j01.itc.50.2.28087.

- 5. Khan Z. F., Alotaibi S. R. Applications of artificial intelligence and big data analytics in m-health: a healthcare system perspective. Journal of Healthcare Engineering. 2020:1–15. doi: 10.1155/2020/8894694.

- 6. Vj S., D J. F. Deep learning algorithm for COVID-19 classification using chest X-ray images. Computational and Mathematical Methods in Medicine. 2021;2021(10):9269173. doi: 10.1155/2021/9269173.

- 7. Akram T., Attique M., Gul S., et al. A novel framework for rapid diagnosis of COVID-19 on computed tomography scans. Pattern Analysis & Applications. 2021;24(3):951–964. doi: 10.1007/s10044-020-00950-0.

- 8. Singh D., Kumar V., Kaur M. Densely connected convolutional networks-based COVID-19 screening model. Applied Intelligence. 2021;51(5):3044–3051. doi: 10.1007/s10489-020-02149-6.

- 9. World Health Organization. Tuberculosis. https://www.who.int/health-topics/tuberculosis#tab=tab_1.

- 10. World Health Organization. Global Tuberculosis Report 2020. Geneva: World Health Organization; 2020.

- 11. Harding E. WHO global progress report on tuberculosis elimination. The Lancet Respiratory Medicine. 2020;8(1):19–30. doi: 10.1016/s2213-2600(19)30418-7.

- 12. Al-Timemy A. H., Khushaba R. N., Mosa Z. M., Escudero J. An efficient mixture of deep and machine learning models for COVID-19 and tuberculosis detection using X-ray images in resource limited settings. In: Artificial Intelligence for COVID-19. 2021:77–100. doi: 10.1007/978-3-030-69744-0_6.

- 13. Azmi U. Z. M., Yusof N. A., Abdullah J., Ahmad S. A. A., Faudzi F. N. M., et al. Portable electrochemical immunosensor for detection of Mycobacterium tuberculosis secreted protein CFP10-ESAT6 in clinical sputum samples. Microchimica Acta. 2021;188(1):1–11. doi: 10.1007/s00604-020-04669-x.

- 14. Rahman T., Khandakar A., Kadir M. A., et al. Reliable tuberculosis detection using chest X-ray with deep learning, segmentation and visualization. IEEE Access. 2020;8(2):191586–191601. doi: 10.1109/access.2020.3031384.

- 15. https://ieee-dataport.org/documents/tuberculosis-tb-chest-x-ray-database.

- 16. Rajinikanth V., Kadry S. Development of a framework for preserving the disease-evidence-information to support efficient disease diagnosis. International Journal of Data Warehousing and Mining. 2021;17(2):63–84. doi: 10.4018/ijdwm.2021040104.

- 17. Diakogiannis F. I., Waldner F., Caccetta P., Wu C. ResUNet-a: a deep learning framework for semantic segmentation of remotely sensed data. ISPRS Journal of Photogrammetry and Remote Sensing. 2020;162(2):94–114. doi: 10.1016/j.isprsjprs.2020.01.013.

- 18. Rajaraman S., Antani S. K. Modality-specific deep learning model ensembles toward improving TB detection in chest radiographs. IEEE Access. 2020;8(2):27318–27326. doi: 10.1109/access.2020.2971257.

- 19. Hijazi M. H. A., Kieu Tao Hwa S., Bade A., Yaakob R., Saffree Jeffree M. Ensemble deep learning for tuberculosis detection using chest X-ray and canny edge detected images. IAES International Journal of Artificial Intelligence. 2019;8(4):429–435. doi: 10.11591/ijai.v8.i4.pp429-435.

- 20. Wong A., Lee J. R. H., Rahmat-Khah H., Alaref A. S. A. TB-Net: a tailored, self-attention deep convolutional neural network design for detection of tuberculosis cases from chest X-ray images. 2021. https://arxiv.org/abs/2104.03165.

- 21. Hooda R., Sofat S., Kaur S., Mittal A., Meriaudeau F. Deep-learning: a potential method for tuberculosis detection using chest radiography. Proceedings of the 2017 IEEE International Conference on Signal and Image Processing Applications (ICSIPA); September 2017; Kuching, Malaysia. IEEE; pp. 497–502.

- 22. Rohilla A., Hooda R., Mittal A. TB detection in chest radiograph using deep learning architecture. ICETETSM. 2017;17:136–147.

- 23. Nguyen Q. H., Nguyen B. P., Dao S. D., Unnikrishnan B., Dhingra R., et al. Deep learning models for tuberculosis detection from chest X-ray images. Proceedings of the 2019 26th International Conference on Telecommunications (ICT); April 2019; Hanoi, Vietnam. IEEE; pp. 381–385.

- 24. Afzali A., Mofrad F. B., Pouladian M. Feature selection for contour-based tuberculosis detection from chest X-ray images. Proceedings of the 2019 26th National and 4th International Iranian Conference on Biomedical Engineering (ICBME); November 2019; Tehran, Iran. IEEE; pp. 194–198.

- 25. Stirenko S., Kochura Y., Alienin O., Rokovyi O., Gordienko Y., et al. Chest X-ray analysis of tuberculosis by deep learning with segmentation and augmentation. Proceedings of the 2018 IEEE 38th International Conference on Electronics and Nanotechnology (ELNANO); April 2018; Kyiv, Ukraine. IEEE; pp. 422–428.

- 26. Rajinikanth V., Joseph Raj A. N., Thanaraj K. P., Naik G. R. A customized VGG19 network with concatenation of deep and handcrafted features for brain tumor detection. Applied Sciences. 2020;10(10):3429. doi: 10.3390/app10103429.

- 27. Ramasamy L. K., Padinjappurathu S. G., Kadry S., Damaševičius R. Detection of diabetic retinopathy using a fusion of textural and ridgelet features of retinal images and sequential minimal optimization classifier. PeerJ Computer Science. 2021;7:e456. doi: 10.7717/peerj-cs.456.

- 28. Wieczorek M., Siłka J., Połap D., Woźniak M., Damaševičius R. Real-time neural network-based predictor for cov19 virus spread. PLoS One. 2020;15(12):e0243189. doi: 10.1371/journal.pone.0243189.

- 29. Ronneberger O., Fischer P., Brox T. U-Net: convolutional networks for biomedical image segmentation. Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention (MICCAI); October 2015; Cham: Springer; pp. 234–241.

- 30. Badrinarayanan V., Kendall A., Cipolla R. SegNet: a deep convolutional encoder-decoder architecture for image segmentation. IEEE Transactions on Pattern Analysis and Machine Intelligence. 2017;39(12):2481–2495. doi: 10.1109/tpami.2016.2644615.

- 31. Kadry S., Damaševičius R., Taniar D., Rajinikanth V., Lawal I. A. U-net supported segmentation of ischemic-stroke-lesion from brain MRI slices. Proceedings of the 2021 Seventh International Conference on Bio Signals, Images, and Instrumentation (ICBSII); March 2021; Chennai, India. IEEE; pp. 1–5.

- 32. Gudigar A., Raghavendra U., Devasia T., et al. Global weighted LBP based entropy features for the assessment of pulmonary hypertension. Pattern Recognition Letters. 2019;125(2):35–41. doi: 10.1016/j.patrec.2019.03.027.

- 33. Wei L., Pan S. X., Nanehkaran Y. A., Rajinikanth V. An optimized method for skin cancer diagnosis using modified thermal exchange optimization algorithm. Computational and Mathematical Methods in Medicine. 2021;2021(2):1–11. doi: 10.1155/2021/5527698.

- 34. Wang Y. Y., Zhang H., Qiu C. H., Xia S. R. A novel feature selection method based on extreme learning machine and fractional-order Darwinian PSO. Computational Intelligence and Neuroscience. 2018;2018:1–8. doi: 10.1155/2018/5078268.

- 35. Cheong K. H., Tang K. J. W., Zhao X., et al. An automated skin melanoma detection system with melanoma-index based on entropy features. Biocybernetics and Biomedical Engineering. 2021;41(3):997–1012. doi: 10.1016/j.bbe.2021.05.010.

- 36. Dhiman G., Kumar V. Spotted hyena optimizer: a novel bio-inspired based metaheuristic technique for engineering applications. Advances in Engineering Software. 2017;114(2):48–70. doi: 10.1016/j.advengsoft.2017.05.014.

- 37. Dhiman G., Kumar V. Spotted hyena optimizer for solving complex and non-linear constrained engineering problems. In: Harmony Search and Nature Inspired Optimization Algorithms. Singapore: Springer; 2019. pp. 857–867.

- 38. Bairwa A. K., Joshi S., Singh D. Dingo optimizer: a nature-inspired metaheuristic approach for engineering problems. Mathematical Problems in Engineering. 2021;2021(2):1–12. doi: 10.1155/2021/2571863.

- 39. Rajinikanth V., Dey N. Magnetic Resonance Imaging: Recording, Reconstruction and Assessment. Amsterdam, Netherlands: Elsevier; 2022.

- 40. Ramya J., Rajakumar M. P., Maheswari B. U. Deep CNN with hybrid binary local search and particle swarm optimizer for exudates classification from fundus images. Journal of Digital Imaging. 2022;35:56–67. doi: 10.1007/s10278-021-00534-2.

- 41. Ahmed M., Ramzan M., Ullah Khan H., et al. Real-Time violent action recognition using key frames extraction and deep learning. Computers, Materials & Continua. 2021;69(2):2217–2230. doi: 10.32604/cmc.2021.018103.

- 42. Rajakumar M. P., Sonia R., Uma Maheswari B., Karuppiah S. P. Tuberculosis detection in chest X-ray using Mayfly-algorithm optimized dual-deep-learning features. Journal of X-Ray Science and Technology. 2021;29(6):961–974. doi: 10.3233/xst-210976.

- 43. Rajesh Kannan S., Sivakumar J., Ezhilarasi P. Automatic detection of COVID-19 in chest radiographs using serially concatenated deep and handcrafted features. Journal of X-Ray Science and Technology. 2022;30(2):231–244. doi: 10.3233/xst-211050.

- 44. Hmoud Al-Adhaileh M., Mohammed Senan E., Alsaade W., et al. Deep learning algorithms for detection and classification of gastrointestinal diseases. Complexity. 2021;2021:6170416. doi: 10.1155/2021/6170416.

- 45. Wen T., Du Y., Pan T., Huang C., Zhang Z. A deep learning-based classification method for different frequency EEG data. Computational and Mathematical Methods in Medicine. 2021;2021:1–13. doi: 10.1155/2021/1972662.

- 46. Sharma S., Gupta S., Gupta D., et al. Deep learning model for the automatic classification of white blood cells. Computational Intelligence and Neuroscience. 2022;2022:7384131. doi: 10.1155/2022/7384131. [Retracted]
