Abstract

Developmental language disorder (DLD) and dyslexia are common but under-identified conditions that affect children’s ability to read and comprehend text. Universal screening is a promising solution to the under-identification of DLD and dyslexia; however, we lack evidence for how to effectively implement and sustain screening procedures in schools. In the current study, we solicited input from educators in the U.S. about perceived barriers and facilitators to the implementation of researcher-developed screeners for DLD and dyslexia. Using thematic analysis, we identified barriers and facilitators within five domains: (1) features of the screeners, (2) preparation for screening procedures, (3) administration of the screeners, (4) demands on users, and (5) screening results. We discuss these findings and ways we can continue improving our efforts to maximize the contextual fit and utility of screening practices in schools.

Keywords: universal screening, developmental language disorder, dyslexia, implementation, thematic analysis

Developmental language disorder (DLD) and dyslexia are common but under-identified disorders. Each occurs in around 10% of the population (Norbury et al., 2016; Tomblin et al., 1997; Wagner et al., 2020). Children with DLD experience difficulties understanding and/or using spoken language in the absence of other medical conditions, such as cognitive impairment, traumatic brain injury, or hearing loss (Leonard, 2014; Tomblin et al., 1997). Children with dyslexia experience difficulties in word reading despite adequate instruction and average intelligence (Lyon et al., 2003). Approximately 50% of children with dyslexia have DLD and vice versa (McArthur et al., 2000). In addition to significant learning difficulties, children with DLD and dyslexia experience behavioral and emotional problems and are at higher risk for suicide, delinquency, and incarceration (Arnold et al., 2005; Brownlie et al., 2004; Conti-Ramsden & Botting, 2008; McArthur et al., 2020).

The good news is that we can help prevent negative consequences associated with DLD and dyslexia by promoting school-wide systems of early identification and remediation (Adlof & Hogan, 2019; Catts & Hogan, 2020). Implementation of preventive approaches is complex and requires systematic efforts characterized by collaboration, awareness of contextual barriers, targeted strategies to overcome them, and continuous quality improvement (Goldstein & Olszewski, 2015). To achieve these goals, we can rely on methods of implementation science that aim to promote the systematic uptake of evidence-based practices into routine settings (Bauer et al., 2015). In this paper, we discuss our initial efforts to leverage implementation science and understand what it takes to implement universal screening for DLD and dyslexia in U.S. schools.

Early Identification of DLD and Dyslexia

Universal screening is a critical first step of preventive approaches. Screening procedures are commonly used in healthcare to identify individuals who show early indicators of a disease (e.g., diabetes, cardiovascular disease, breast cancer) and who could benefit from preventive treatment and further assessment. Similarly, U.S. schools conduct periodic health screenings (e.g., vision, hearing, dental health, mental health, communication, motor skills) and, currently, dyslexia screenings as mandated by new state laws (Ward-Lonergan & Duthie, 2018). Early childhood programs (birth to five) include developmental screenings to support children’s readiness for school. However, due to the lack of universal preschool in the U.S., there is no guarantee that every child will access screenings before primary school entry (Kamerman & Gatenio-Gabel, 2007). Primary school, by contrast, is compulsory for most children, which provides a consistent structure to support universal screening from kindergarten onwards.

Unfortunately, systematic screening for DLD for all children at school entry does not yet exist. In the U.S., a child is diagnosed with DLD after being referred to a speech-language pathologist by a concerned adult, such as a parent, teacher, or pediatrician. This referral-based pathway, however, may contribute to the under-identification of DLD by leaving out many children with DLD who experience mild symptoms that are not readily noticeable (Adlof & Hogan, 2019; Tomblin et al., 1997). One way to address under-identification of DLD is to include measures of oral language in universal screening (Adlof & Hogan, 2019; Hendricks et al., 2019).

Understanding the Implementation of Universal Screening for DLD and Dyslexia

Early screening for DLD and dyslexia is important. However, we know very little about the actual implementation of school-based screenings. This is not surprising because, in general, more effort has been devoted to generating scientific knowledge than to supporting evidence-based practice in routine settings (Bauer & Kirchner, 2019; Cook & Odom, 2013). For example, we can reliably identify DLD after the age of five and we know more about clinical markers that can indicate the presence of DLD during testing (e.g., sentence recall, grammar, nonword repetition), yet about 50%–70% of children with DLD still go undetected (McGregor, 2020; Paul, 1996; Tomblin et al., 1997). We also know that dyslexia is best remediated in kindergarten or first grade, yet most children with dyslexia are not diagnosed until second or third grade or even later (Catts & Hogan, 2020; Ozernov-Palchik & Gaab, 2016).

These examples illustrate that, regardless of the amount of research evidence, we cannot guarantee its routine uptake without an intentional focus on implementation (Bauer & Kirchner, 2019). We also cannot expect educators to apply research findings in their settings by merely being exposed to them through scientific journals or conferences (Cook & Odom, 2013; Goldstein & Olswang, 2017). Educators often face many challenges trying to balance student needs with limited capacity and resources, which leaves little room for implementing new practices (Cook & Odom, 2013). What we should expect are systematic efforts to facilitate the translation of research evidence into routine practice that are grounded in researcher-practitioner collaborations, knowledge of school contexts, and tailored implementation strategies (Douglas et al., 2015).

An essential element of implementation is the continuous evaluation of its progress and quality to determine what works and what needs improvement (Damschroder et al., 2009). Various factors can facilitate or impede implementation. These factors may be related to characteristics of the inner setting (e.g., resources, capacity, organizational structure, leadership, communication channels), characteristics of educators (e.g., buy-in, knowledge, training), characteristics of students (e.g., demographics, language, views and perspectives), characteristics of the evidence-based program itself (e.g., evidence quality, length, usability), characteristics of the outer setting (e.g., community networks, policy), and characteristics of the process (e.g., planning, evaluation) (Damschroder et al., 2009; Woodward et al., 2021; Petscher et al., 2019).

During evaluation of implementation processes, it is important to recognize the value of soliciting educators’ input. Educators play a central role in shaping the uptake of screening programs, and soliciting their input is necessary for three main reasons. First, as primary service providers, educators can bring important insights into the range of contextual factors that may influence implementation and sustainability of screening procedures. Second, providing input allows educators to take a more active and participatory role in implementation, which is known to advance the adoption of evidence-based practices (Clarke et al., 2010; Green, 2008; Green & Glasgow, 2006). Third, soliciting input can promote collaborative decision making, knowledge exchange, and trust in the context of researcher-practitioner partnerships (Aspland et al., 1996; Henrick et al., 2017).

Our Study

In the current study, we sought educators’ input about perceived barriers and facilitators to the implementation of researcher-developed screeners for DLD and dyslexia. This study is part of a multi-site, longitudinal investigation that aims to examine language and reading trajectories in children with and without DLD. An important objective of our work is to help school districts implement and sustain screenings for DLD and dyslexia. Over the past three years, we worked together to understand the needs and goals of each district and to examine how to best optimize the fit between screening measures and contexts (Alonzo et al., 2021). However, the COVID-19 pandemic forced us to pivot to online screenings and brought new opportunities and challenges to our partnerships.

To prepare for implementation, we (1) created digital screeners (see Appendix A), (2) met regularly with district teams for planning, (3) developed and provided online trainings to district staff, and (4) determined district-specific accommodations, such as incorporating our screeners into their online platforms and translating instructions for families who speak other languages. At that time, school districts in the U.S. operated under either a remote learning model (i.e., all students accessed online learning from their homes) or a hybrid learning model (i.e., students accessed online and in-person learning). In the current study, a total of 2,097 students, 1,995 kindergartners (between 5 and 6 years old) and 102 first graders (between 6 and 7 years old), completed the screening. Sixty-six percent of students completed the screening during remote learning and 34% of students completed the screening during in-person learning. To maintain consistency in administration methods, students in both remote and in-person learning completed the same digital screeners on their personal devices (e.g., laptops, tablets). For remote students, educators initiated and supported screening procedures, but families were naturally involved in the process, as with any other aspect of remote learning. However, we solely focused on educators and their experiences because timeline delays related to COVID-19 school closures did not allow us to solicit feedback from families.

Educators supported implementation in various ways, including communicating screening logistics to families, overseeing screening for remote and in-person students, assisting families during screening, and troubleshooting. During follow-up meetings, we asked educators to complete an informal survey about the implementation process. The purpose of this study is to use data from the post-screening survey to evaluate and report educators’ experiences, and specifically, their perceptions of barriers and facilitators to the implementation of our screeners. Our goal is to use these findings to develop appropriate screening avenues for both in-person and remote students and to continue optimizing implementation of universal screening for DLD and dyslexia in schools.

Method

Participants

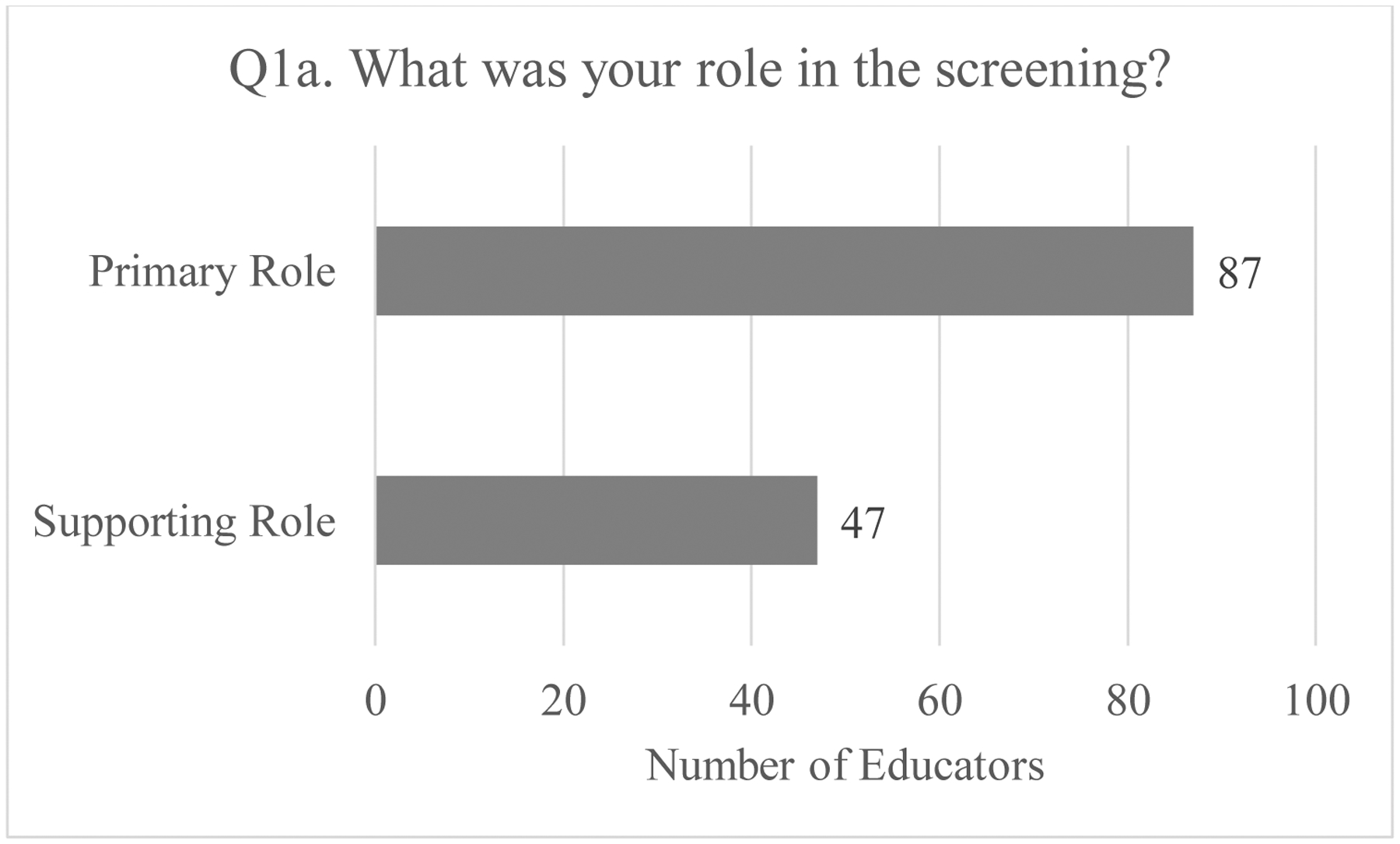

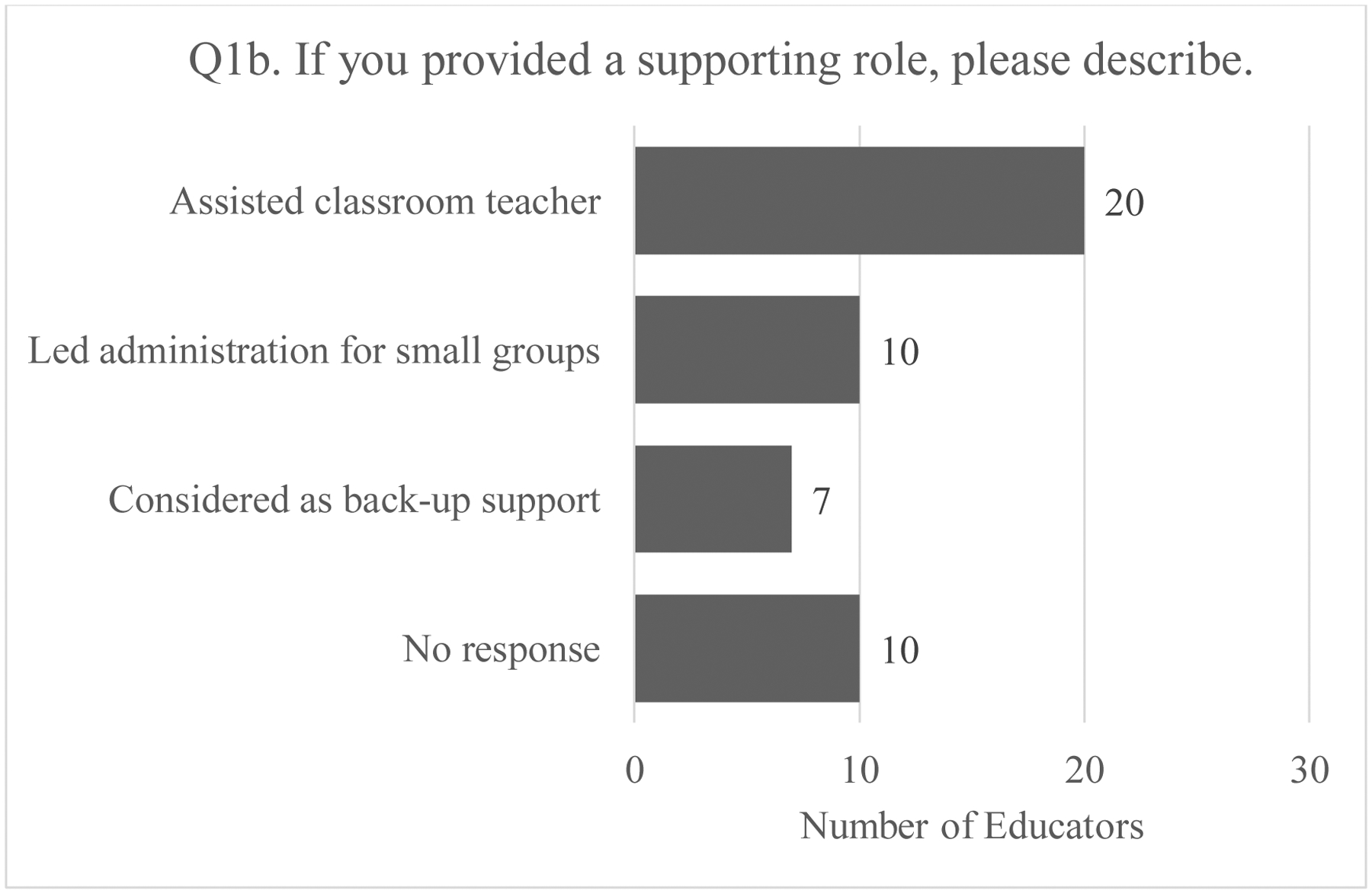

One hundred and thirty-four educators from three school districts in two U.S. states participated in the survey. We use “educators” as an umbrella term that includes classroom teachers who had a primary role in implementation and other school staff who had a supporting role, such as speech-language pathologists, special educators, teaching assistants, ESL (i.e., English as a second language) teachers, and administrative staff. Eighty-seven of the respondents were classroom teachers and 47 were other school staff (see Figure 1). Supporting roles were grouped into the following categories: (1) assisted classroom teachers (i.e., communication with families, technology support, student monitoring), (2) led screening administration for small groups (e.g., Spanish-speaking students), (3) were considered back-up support but did not actively assist classroom teachers, and (4) no response. Figure 2 shows the number of educators in each category.

Figure 1.

Total Number of Educators in Primary and Supporting Roles

Figure 2. Total Number of Educators in Each Category of Supporting Role.

Note. The ‘assisted classroom teacher’ category included supporting the classroom teacher with parent communication, supporting with technology, and monitoring students.

Measures

Educators completed a short survey after children in their care had completed our DLD and dyslexia screening measures. We want to emphasize that the survey was informal and was used as a quick method to evaluate our implementation efforts. Table 1 lists the six questions included in the survey.

Table 1.

OWL Language and Literacy Screening: Educator Feedback Survey

| Survey Question | Response Choices |
|---|---|
| Q1a. What was your role in the screening? | |
| Q1b. If you provided a supporting role, please describe. | |
| Q2a. How well prepared did you feel to facilitate the screening for your students? | |
| Q2b. If you selected “somewhat prepared” or “not prepared,” what additional supports would have been useful? | |
| Q3. Which issues or challenges did you or your students encounter? (Select all that apply) | |
| Q4. What did you like about the screening? | |
| Q5. What did you not like about the screening? | |
| Q6. Please provide any additional comments you feel would be useful for the research team to know about the screening process. | |

Data analysis

We used inductive thematic analysis to provide a detailed account of educators’ perceptions of barriers and facilitators to the implementation of DLD and dyslexia screeners. An inductive thematic analysis is defined as an analysis where “…the themes identified are strongly linked to the data themselves” (Braun & Clarke, 2006, p. 12), and it differs from a deductive thematic analysis, which is driven by preconceived themes (Braun & Clarke, 2006). For the thematic analysis, we compiled educators’ responses to questions 2b (If you selected “somewhat prepared” or “not prepared”, what additional supports would have been useful?), 3 (Which issues or challenges did you or your students encounter?), 4 (What did you like about the screening?), 5 (What did you not like about the screening?), and 6 (Please provide any additional comments you feel would be useful for the research team to know about the screening process) because they were deemed relevant for identifying barriers and facilitators. Responses were compiled and analyzed in an Excel spreadsheet. We followed Braun and Clarke’s (2006) approach to thematic analysis, which includes the following six phases: (1) familiarizing ourselves with our data, (2) generating initial codes, (3) searching for themes, (4) reviewing themes, (5) defining and naming themes, and (6) producing the report.

The second author independently generated initial codes and reviewed them with the first author (i.e., Phase 2) (see Appendix B for a complete list of codes). The second author then proceeded to categorize responses into themes and sub-themes (i.e., Phase 3). Over several meetings, the first and second authors reviewed together the initial themes and sub-themes to determine their coherence, re-code where necessary, and refine them (i.e., Phase 4). In the next section we define themes and sub-themes, and we discuss them in the context of barriers and facilitators to implementing universal screening for DLD and dyslexia in school settings (i.e., Phases 5 and 6).
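The bookkeeping behind Phases 2 to 4 can be sketched in a few lines of Python. This is an illustrative sketch only, not the procedure used in the study (responses were compiled and analyzed in an Excel spreadsheet). The quoted responses and theme names come from this paper; the code labels, the barrier/facilitator tags attached to each response, and the function name `tally_by_theme` are hypothetical.

```python
from collections import Counter, defaultdict

# Hypothetical code labels mapped to (theme, sub-theme).
# The actual codes are listed in Appendix B and are not reproduced here.
CODE_TO_THEME = {
    "quick to complete": ("Features of the screeners", "Duration"),
    "prefers paper format": ("Features of the screeners", "Format"),
    "short notice": ("Preparation for screening procedures", "Preparation timeline"),
    "role was easy": ("Demands on users", None),
}

# (response text, assigned code, valence). The valence tags are
# illustrative assumptions, not the study's own coding decisions.
CODED_RESPONSES = [
    ("It didn't take too much time out of our regular schedule.",
     "quick to complete", "facilitator"),
    ("I wish it was paper/pencil instead.",
     "prefers paper format", "barrier"),
    ("I felt like I received the link last minute and felt rushed "
     "trying to figure it out and contact parents.",
     "short notice", "barrier"),
    ("The role was easy. Just get them logged in and I was done.",
     "role was easy", "facilitator"),
]


def tally_by_theme(coded, code_to_theme):
    """Count barrier/facilitator responses under each theme."""
    counts = defaultdict(Counter)
    for _text, code, valence in coded:
        theme, _sub_theme = code_to_theme[code]
        counts[theme][valence] += 1
    return {theme: dict(c) for theme, c in counts.items()}


summary = tally_by_theme(CODED_RESPONSES, CODE_TO_THEME)
for theme, valence_counts in summary.items():
    print(theme, valence_counts)
```

A tally of this kind only supports the review step; deciding whether a theme is coherent still rests on reading the responses themselves.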

Results

We identified contextual factors that were perceived to act as barriers and/or facilitators within five main themes: (1) features of the screeners, (2) preparation for screening procedures, (3) administration of the screeners, (4) demands on users, and (5) screening results. A complete list of all themes, sub-themes, and codes can be found in Appendix B.

Theme 1: Features of the Screeners

This theme concerns the design characteristics of the screeners that acted as barriers and/or facilitators to implementation. It contains five sub-themes: (1) duration, (2) features of items, (3) format, (4) result turnaround time, and (5) usability.

Duration.

Overall, educators appreciated that the screeners were quick and could be completed in just one session. One educator wrote: “It didn’t take too much time out of our regular schedule.”

Features of items.

Educators reported several design features of the screening items that they perceived as problematic. One such feature was the difference in function between practice items and test items. That is, children received feedback during the practice items (i.e., correct answers were highlighted), but not during the test items. One educator wrote: “It was a little different than the sample. Students were waiting for the highlighted pictures to show.” Educators also expressed concerns about the language level of the screening items and the short time lag between items. One educator wrote: “The questions are sometimes worded in a way that could be confusing to a 5-year-old.” Another educator wrote: “The timer was very quick. I feel like students didn’t get a chance to think about the question before it quickly went to the next one.”

Format.

Some educators reported that they liked the digital, automatically-paced, and standardized format of the screeners, while others preferred the original paper and pencil format. One educator wrote: “I liked having it on the computer this year.” Another educator wrote: “I wish it was paper/pencil instead.”

Result turnaround time.

Educators indicated that the result turnaround time was a barrier because they could not receive real-time results after a child had completed the screeners and the research team delayed releasing results. One educator wrote: “Timeline was crunched, no real time feedback on data- data spreadsheet should have been updated a couple times during the week.”

Usability.

Usability refers to the degree to which a user can use a product in an effective, efficient, and satisfactory manner. Educators reported several features related to usability that acted as facilitators to implementation. According to them, the screeners were easy to access and use, the directions were clear and easy to follow, and students were able to complete the screeners on their own. One educator wrote: “Once the students were logged on, it seems as though even our youngest students were able to access the assessment.” Another educator wrote: “I liked that once set up, the screening was online for students to complete on their own.” Some educators, however, reported that the screeners were difficult to access and use for different groups, including caregivers who spoke other languages, students who did not have enough support at home, and students with identified disabilities. Moreover, the screeners were not deemed appropriate for multilingual learners. One educator wrote: “This screener does not support multi language learners. Students who are just beginning to learn English, especially young students, are already at a disadvantage when it comes to this screener. As a teacher, it is very frustrating to have to give my students an assessment that I know they are not going to do well with because they have not been accounted for when creating the assessment.” Educators also reported that they could not discern if students actually completed the screeners. One educator wrote: “There was no way of knowing if the child really completed the screener or not. It would be better if the teacher got immediate confirmation.”

Theme 2: Preparation for Screening Procedures

This theme concerns all activities that researchers and educators undertook to prepare for implementation. It contains three sub-themes: (1) communication, (2) preparation timeline, and (3) training.

Communication.

Educators indicated that researchers did not communicate information about the screening clearly and effectively. Educators did not feel that they received sufficient notice to administer the screeners and were unsure about logistical details. One educator wrote: “I would have liked more time to prepare and to know that it was happening. Notification, training happened within days of being told it need[s] to be done the next week. We have worked hard to build routines with families all year and this caused a need for last minute communication and changes to our routine. Being able to give them a full week[‘s] notice, would have been helpful. Many of my families work or students are home with grandparents.” A few educators appreciated that directions for logging in and completing the screeners were available in multiple languages. One educator wrote: “I like that the screening was shorter. I also liked that the directions could be assigned in different languages to help parents get their child to the screener.”

Preparation timeline.

Educators expressed that the short timeline prevented them from adequately preparing for implementation and familiarizing themselves with the screeners. One educator wrote: “I felt like I received the link last minute and felt rushed trying to figure it out and contact parents.”

Training.

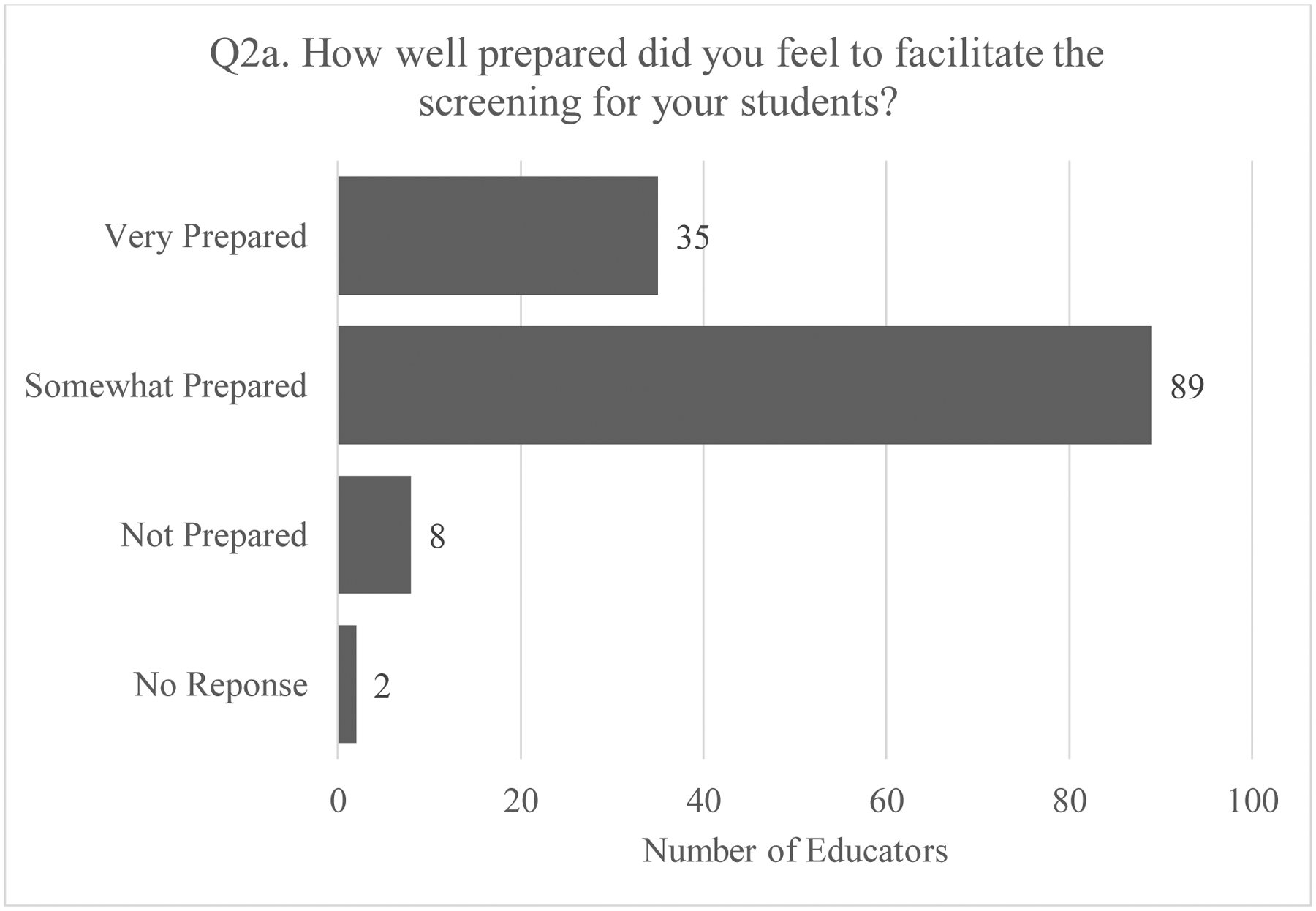

Educators reported that the training delivered by researchers was insufficient because they did not receive enough information about the screeners and their implementation, they did not have a chance to interact with the screeners beforehand, and they did not participate in a practice session. One educator wrote: “There wasn’t much direction given about how it was to be implemented virtually.” Another educator wrote: “Having the opportunity to administer a practice session would have helped.” These qualitative findings resonate with the level of preparedness that was reported by educators in question 2a (see Figure 3). According to the results, the majority of educators (89 out of 134) felt somewhat prepared to administer the screening, 35 educators felt very prepared, and eight educators did not feel prepared.

Figure 3.

Total Number of Educators at Each Level of Preparedness to Administer the Screeners
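The preparedness counts reported for question 2a can be checked with a short calculation. The sketch below is illustrative: the variable names are assumptions, and the percentages are derived here rather than taken from the survey results. The three reported counts sum to 132, so two of the 134 respondents do not appear in the reported categories; the reason is not stated.

```python
# Counts as reported for question 2a; 134 educators took part in the survey.
TOTAL_RESPONDENTS = 134
preparedness = {
    "very prepared": 35,
    "somewhat prepared": 89,
    "not prepared": 8,
}

# Respondents covered by the three reported levels.
reported = sum(preparedness.values())

# Respondents not covered by any reported level (reason not stated).
unaccounted = TOTAL_RESPONDENTS - reported

# Share of all respondents at each level, rounded to one decimal place.
shares = {
    level: round(100 * n / TOTAL_RESPONDENTS, 1)
    for level, n in preparedness.items()
}

print(reported, unaccounted, shares)
```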

Theme 3: Administration of the Screeners

This theme concerns the active process of administering the DLD and dyslexia screeners to kindergarten students. It contains four sub-themes: (1) mode of administration, (2) screening timeline, (3) technical considerations, and (4) teacher and family involvement.

Mode of administration.

Educators perceived that the remote administration of the screeners acted as a barrier to their implementation. They reported that remote administration was overall a difficult process and that they were unable to observe students or assist families through technical difficulties. One educator wrote: “It was hard to see what they were seeing and to help them through technical difficulties because it was remotely completed.” Some educators also reported that they preferred in-person administration of the screeners.

Screening timeline.

Educators reported that the timing of the screening was not ideal, especially since it coincided with remote learning. They also reported that the timeframe for completing the process was relatively short and that they would have preferred to have more time. One educator wrote: “It felt extremely thrown together and random during remote learning.”

Technical considerations.

Educators reported several technical factors that influenced the implementation process, including audio quality, system technology, and screener completion. Educators reported that families complained about audio and other technical problems and that completed screeners were sometimes not marked as such. One educator wrote: “Somehow it kept saying my students did not complete when I watched them share their screen and complete it. Some kiddos had to do it more than once for it to register that they did it.”

Teacher and family involvement.

Since most students participated in the screening while at home, families played a bigger role than educators during implementation. This was perceived as both a barrier and a facilitator by educators. On the one hand, some educators reported that they did not like relying on families to complete the screening. When asked what they did not like about the screening (i.e., Q5), one educator wrote: “Having to send many reminders and getting families to participate.” Educators also reported that families found the process challenging and confusing. On the other hand, some educators reported that they appreciated that their involvement was minimal as they were only required to help students access the link and log in. They also appreciated that parents were able to participate in the screening with their children. One educator wrote: “The parents provided the majority of the process and were able to see it for themselves.”

Theme 4: Demands on Users

This theme concerns the demands that the screening process placed on educators, students, and families. Some educators reported that the screening was overwhelming for families and students, and for themselves as it overlapped with other responsibilities. One educator wrote: “To be honest, none of this made sense this school year…there was way too much put [on] students and families.” Another educator wrote: “Another responsibility for the teacher to direct and make sure they are completed.” However, others suggested that the demands placed on them were minimal and prior experiences with screeners facilitated their involvement. One educator wrote: “The role was easy. Just get them logged in and I was done.”

Theme 5: Screening Results

This theme concerns educators’ perceptions of the relevance of screening results. It contains two sub-themes: (1) validity of screening results, and (2) use of screening results.

Validity of screening results.

Educators questioned the validity of the screening results due to various reasons. Some of them raised concerns over the validity of the results due to the challenges that arose during remote administration. One educator wrote: “I don’t think these results are particularly valid due to the screening circumstances. I would proceed with caution.” Others suggested that parents may have assisted their children by providing them with the correct answers. One educator wrote: “I am not sure how reliable results will be with the students taking it at home. We have found parents to give children support on other assessments even when they have been directed not to.” Finally, some educators were concerned that the screening results included many false positives. One educator wrote: “Many of my kiddos came up as at risk for language impairment. Some of them are my high kids so it was a little confusing to me.”

Use of screening results.

Overall, educators indicated that the screeners were beneficial to their students because they provided useful information about their literacy development and promoted early identification of at-risk students. One educator wrote: “I do like there is a screening to help provide early intervention supports for children.”

Discussion

This study aimed to capture educators’ perceptions of barriers and facilitators to the implementation of DLD and dyslexia screeners. Universal screening can promote early identification of DLD and dyslexia and prevent long-term adverse effects (Adlof & Hogan, 2019; Catts & Hogan, 2020). However, the successful uptake of universal screening into school-based practice is not guaranteed without a comprehensive evaluation of contextual and process factors that may facilitate or hinder implementation (Bauer & Kirchner, 2019). Such evaluation must include feedback from educators who know their settings better than researchers and can provide important and practical insights for ongoing quality improvement. In our study, educators reported different factors that acted as barriers and/or facilitators to implementing DLD and dyslexia screeners within five main themes: (1) features of the screeners, (2) preparation for screening procedures, (3) administration of the screeners, (4) demands on users, and (5) screening results.

Perceived Barriers to the Implementation of DLD and Dyslexia Screeners

In terms of barriers, educators shared that the screening timeline was not ideal and that it placed unrealistic demands on them. We acknowledge that this process asked for additional time from educators, especially during a year in which schools in the U.S., and in the rest of the world, faced unprecedented challenges. Due to the uncertainty caused by the COVID-19 pandemic and constant changes in operational guidance for schools, we had little room for timely implementation of screenings. However, even without a pandemic, time constraints are a common barrier to the implementation of school-based practices (Fohlin et al., 2021; Fulcher-Rood et al., 2020). Useful strategies to support the integration of screenings into routine schedules may include changes in workload policies and dedicated time (Fulcher-Rood et al., 2020).

Educators also expressed concerns about technical issues and specific features of the screeners, such as the timing between items and recording of responses. We are currently working to implement changes to our digital screeners, including adjusting timing between items, confirming response choices, and identifying solutions to increase compatibility with different devices. In addition, we must reinforce collaborative decision making around technical considerations to increase our screeners’ usability (Henrick et al., 2017). Educators have a plethora of experience with digital learning platforms and can provide valuable insights on what makes an application user-friendly, accessible, and appropriate for kindergarteners.

Educators reported that many of their students, for whom they did not have any concerns, were identified as at-risk. The concern over false positives is understandable and we are in the process of validating our screeners to improve the detection of DLD and dyslexia risk. However, it is worth noting that screening alone is not enough to determine which students are likely to have DLD and dyslexia. Additional steps must follow, such as further testing, targeted intervention, and progress monitoring, to address false positives and provide an accurate estimation of students’ skills (Catts & Hogan, 2020; Maxim et al., 2014). Educators also raised concerns about the appropriateness of the screeners, particularly for students with diverse linguistic backgrounds. One potential solution is to supplement our screeners with dynamic assessments to account for the language and reading abilities of bilingual students. Evidence suggests that dynamic assessments are better options for students who speak other languages because they evaluate learning potential instead of prior language experiences (Castilla-Earls et al., 2020).

Educators perceived several barriers related to remote screening, such as technology and internet connection problems, caregivers potentially helping their children with correct answers, and delayed results. Technology and internet connection problems are common barriers in the context of telepractice (Tucker, 2012). However, the pandemic and the dependence on digital education have exacerbated these problems, especially for students from low-income communities (Benda et al., 2020; Campbell & Goldstein, 2021). Potential solutions to overcome limitations of remote screenings include improving compatibility of our screeners with different devices and supporting community efforts to extend the provision of high-speed free internet to those who need it (Benda et al., 2020). In addition, we must communicate better to families the importance of letting their children complete the screening on their own, for results to reflect their true skills.

Finally, educators expressed concerns about the preparation phase that preceded implementation and the quality of the training that was provided by the research team. Educators felt that we rushed through the preparation, which caused many of them to feel underprepared to support implementation efforts. Our timeline delays affected pre-implementation activities, such as communication, the breadth and depth of our training, and the possibility for educators to interact with the screeners beforehand. Unfortunately, because of frequently changing timelines, the screeners were not ready for demonstration at the time of the training. Nonetheless, these findings show the importance of the preparation phase to implementation success (Damschroder et al., 2009). We must increase the confidence and competencies of educators through careful planning, robust training, effective communication outlets, and standardized procedures.

Perceived Facilitators to the Implementation of DLD and Dyslexia Screeners

In terms of facilitators, educators expressed that the screeners were quick, easy to access and to use, and allowed students to work independently. In addition, some educators suggested that their role was easy, particularly since the screeners were in digital format. This feedback is very encouraging and resonates with general recommendations about desirable features of universal screeners (Miles et al., 2018). Considering the many contextual constraints that educators usually face, including limited time and resources, it is ideal if screening assessments are brief and efficient to guarantee their viability within overburdened systems.

Educators also agreed that the screening process was important and provided useful information for the early identification and support of students at risk of DLD and dyslexia. We consider educators’ buy-in an important aspect of this partnership; without it, we cannot guarantee the sustainability of screening practices (Henrick et al., 2017). We must continue cultivating their trust as we improve our processes, to help them leverage the value of periodic screenings to identify early risk signs of DLD and dyslexia.

Finally, educators appreciated that families were able to experience the screening process with their children and that screening instructions were available in multiple languages. To clarify, families’ direct involvement in screening procedures was not part of our regular implementation activities, but rather, a byproduct of remote learning. Remote learning has forced families everywhere to take more active roles in their children’s education, including facilitating the administration of academic assessments (Ribeiro et al., 2021). It is possible that the perceived benefit of family involvement outweighs caregivers’ potential interference with student responses. Given the benefits of active family involvement in educational practices, future work will need to address this question. In the context of implementation, it is not enough to achieve buy-in from educators and administrators only. Families are also important stakeholders whose active participation must be encouraged, especially when proposed initiatives, such as universal screening, aim to directly benefit their children (Ash et al., 2020; Porter et al., 2020). With more opportunities for active involvement, families can learn about early screening for DLD and dyslexia, help fight stigma and harmful misperceptions, collaborate with educators, share their opinions, and feel reassured that school districts are doing everything in their capacity to support their children’s learning.

Overall, these findings suggest the need for continuous improvement of our implementation efforts, with a particular emphasis on increasing collaborative decision-making among educators, families, and researchers. Although these findings speak to the implementation of our researcher-developed screeners, we believe that they can generalize to any type of educational implementation work. Aside from the strengths and weaknesses of our screeners, we report findings on contextual and process factors that are likely to affect the quality of implementation in schools. Other researchers and educators who engage in similar work can use our findings as examples of what works (and what does not) for whom under what circumstances.

Limitations

The current study has several notable limitations. First, we solicited educators’ input only after the completion of the screening process. In the future, we must seek educators’ input before, during, and after implementation to address limitations in planning and delivery of screening procedures. Second, we used a brief survey to capture educators’ perceptions of barriers and facilitators to implementing DLD and dyslexia screeners. While this can be a quick and easy way to evaluate implementation, it is not enough. We must supplement with additional approaches, like focus groups or interviews, to gather detailed perspectives. Focus groups or interviews can be particularly useful in the early stages of planning as they can uncover preventable problems (Hamilton & Finley, 2020). Third, we did not seek input from families about their perceptions of the screening process. We cannot be confident that educators fully captured the views and needs of families, and direct input from families would have strengthened the scope of this study. We must identify opportunities to involve families and use their voices to create more pragmatic screening procedures, especially in the context of remote learning (Ribeiro et al., 2021). Finally, our survey did not distinguish responses from educators who facilitated implementation during remote administration versus those who facilitated implementation during in-person administration. These types of administration may involve different facilitators and barriers; thus, future inquiries will need to characterize their differences.

Conclusion

To improve uptake of universal screening for DLD and dyslexia in schools, we must identify and understand contextual and process factors that facilitate or hinder implementation. Educators in the current study provided useful input on barriers and facilitators related to characteristics of the screeners, characteristics of the users, and characteristics of the planning process. Future implementation work should consider these factors to guide improvement efforts. In addition, we must enhance participation of educators and families in educational implementation to ensure that evidence-based practices are meaningful to them, sustainable, and beneficial to long-term outcomes of children with DLD and dyslexia.

Implications for Practice

What is already known about this topic?

DLD and dyslexia are common but under-identified disorders.

Universal screening can improve under-identification of DLD and dyslexia.

What does this paper add?

This paper presents useful information about contextual and process factors that may facilitate or hinder implementation of universal screening for DLD and dyslexia in schools.

Implications for theory, policy, or practice

This paper is an important starting point in understanding what it takes to implement universal screening for DLD and dyslexia in schools. Our findings offer several suggestions of contextual and process factors that might influence the implementation trajectory. They also highlight the importance of leveraging educators’ knowledge of routine practice to improve uptake of evidence-based programs for children with DLD and dyslexia.

Acknowledgements

Research reported in this publication was supported by the National Institute on Deafness and Other Communication Disorders of the National Institutes of Health Grant R01DC016895, awarded to co-PIs Tiffany P. Hogan and Julie A. Wolter. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health. We sincerely thank the children and their families who participated in this research and the following school districts and educational organizations from Massachusetts and Montana who partnered with us to recruit participants: Worcester Public Schools, Amesbury Public Schools, and Missoula County Public Schools. We also thank research staff members: Alexandra Armstrong, Xue Bao, Tim DeLuca, Sarah Floyd, Melissa Phelan, Kate Radville, Julia Swanson, and Emma Wyatt.

Biography

Rouzana Komesidou, Ph.D. is a Postdoctoral Research Fellow in the Department of Communication Sciences and Disorders and Project Manager at the Speech and Language (SAiL) Literacy Lab at the MGH Institute of Health Professions, in Boston, MA. Her research focuses on language and literacy development and using implementation science to improve educational and clinical practices.

Melissa Feller, M.A., CCC-SLP is a PhD student in the MGH Institute’s PhD in Rehabilitation Sciences program. She is a speech-language pathologist and reading specialist with extensive clinical experience in public school and private interdisciplinary practice settings. Melissa’s research interests include oral/written language relationships, implementation science, and the role of risk and resilience in learning.

Julie A. Wolter, Ph.D., CCC-SLP is Professor and Chair of the School of Speech, Language, Hearing and Occupational Sciences at the University of Montana and a recognized Fellow of the American Speech-Language-Hearing Association. Dr. Wolter directs the Language Literacy Essentials in Academic Development (LLEAD) Lab. Dr. Wolter studies the multiple linguistic or language skills of phonological awareness (i.e., awareness of the sound system of language), morphological awareness (i.e., understanding of the meaningful units in language such as suffixes and prefixes), orthographic awareness (i.e., knowledge about spelling patterns and rules) and vocabulary, as they relate to how children with and without language and literacy disorders develop reading and writing skills.

Jessie Ricketts, Ph.D. is a Senior Lecturer in the Department of Psychology, Royal Holloway, University of London. Her research combines longitudinal and experimental designs to disentangle the causal relationships between oral vocabulary knowledge and reading in children and adolescents.

Mary Rasner, MLIS is Lab Manager of the Speech and Language (SAiL) Literacy Lab at MGH Institute of Health Professions under the direction of Dr. Tiffany Hogan. Before joining the MGH Institute, Mary was a librarian and Head of the Reference Department at Melrose Public Library, in Melrose, MA.

Coille Putman, M.S., CCC-SLP is a Clinical Instructor and Lab Coordinator in the Language Literacy Essentials in Academic Development (LLEAD) Lab, in the School of Speech, Language, Hearing and Occupational Sciences at the University of Montana.

Tiffany P. Hogan, Ph.D., CCC-SLP, is Director of the Speech and Language (SAiL) Literacy Lab and Professor in the Department of Communication Sciences and Disorders at MGH Institute of Health Professions in Boston, MA. Dr. Hogan studies the genetic, neurologic, and behavioral links between oral and written language development, with a focus on implementation science for improving assessment and intervention for young children with speech, language and/or literacy impairments.

Appendix A

The OWL Language and Literacy Screeners were developed as part of the Orthography and Word Learning (OWL) project funded by the National Institutes of Health – National Institute on Deafness and Other Communication Disorders (NIDCD) Grant R01 DC016895.

The OWL Language and Literacy Screeners were originally designed to be administered in a paper format to all students in a classroom at the same time. However, we decided to develop digital versions of the screeners for individual administration since the majority of students have been learning remotely, either full time or part time (i.e., in-person classes a few hours per week), during the COVID-19 pandemic. We used the Gorilla Experiment Builder (www.gorilla.sc) to create and host our screeners (Anwyl-Irvine et al., 2019). Data were collected between 01 March 2021 and 14 May 2021.

Each screener contains two practice items and 10 test items. The language screener examines knowledge of grammatical structures and aims to identify students who may be at risk for DLD. Each language item is accompanied by four pictures. Students hear a sentence and they must select one of the four pictures that illustrates the sentence. The literacy screener examines code-based skills (e.g., phonological awareness, orthographic awareness, letter-sound correspondence) and aims to identify students who may be at risk for dyslexia. Each literacy item is accompanied by four pictures. Students hear a sound or a word and they must select one of the four pictures that illustrates what they hear. Students hear each item twice before selecting an answer. For every item, students have 10 seconds to select an answer. If they do not select an answer within 10 seconds, the screen automatically moves to the next item. Students receive feedback for their responses only in the practice sections of the screeners.
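The administration rules described above can be summarized in a brief sketch. This is not the Gorilla implementation of the screeners; it is a minimal model written under stated assumptions. The item counts, the 10-second limit, and practice-only feedback come from the description above, whereas the names `Item`, `Outcome`, `administer`, and `score_test_items`, the `respond` callback, and the scoring rule (a count of correct test items, with timed-out items counted as incorrect) are hypothetical and introduced only for illustration.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple

TIME_LIMIT_S = 10.0  # seconds allowed per item (Appendix A)
N_PRACTICE = 2       # practice items per screener (Appendix A)
N_TEST = 10          # test items per screener (Appendix A)


@dataclass
class Item:
    prompt: str           # sentence, sound, or word played twice to the student
    correct_picture: int  # index 0-3 of the matching picture
    is_practice: bool


@dataclass
class Outcome:
    choice: Optional[int]  # None when the item timed out
    correct: bool
    feedback_shown: bool   # feedback is given on practice items only


# Hypothetical callback: returns (picture chosen or None, seconds taken).
Responder = Callable[[Item], Tuple[Optional[int], float]]


def administer(items: List[Item], respond: Responder) -> List[Outcome]:
    """Apply the timing and feedback rules to each item in order."""
    outcomes = []
    for item in items:
        choice, seconds = respond(item)
        if seconds > TIME_LIMIT_S:
            choice = None  # the screen advances automatically after 10 seconds
        correct = choice == item.correct_picture
        outcomes.append(Outcome(choice, correct, feedback_shown=item.is_practice))
    return outcomes


def score_test_items(items: List[Item], outcomes: List[Outcome]) -> int:
    """ASSUMED scoring rule: number of correct test items (practice excluded)."""
    return sum(o.correct for i, o in zip(items, outcomes) if not i.is_practice)


# Illustrative run with made-up items and a made-up student.
items = [Item(f"item {n}", n % 4, is_practice=n < N_PRACTICE)
         for n in range(N_PRACTICE + N_TEST)]


def example_student(item: Item) -> Tuple[Optional[int], float]:
    # Answers correctly in 3 seconds, except one slow response that times out.
    seconds = 12.0 if item.prompt == "item 5" else 3.0
    return item.correct_picture, seconds


outcomes = administer(items, example_student)
print(score_test_items(items, outcomes), "of", N_TEST, "test items correct")
```

Modeling a timed-out item as a missing choice keeps it distinguishable from an incorrect selection, which may matter when interpreting concerns such as items advancing too quickly.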

Appendix B

This appendix lists the themes, sub-themes, and codes created during the thematic analysis. The fourth column labels each code as a barrier and/or a facilitator, based on educators’ perceptions.

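| Themes | Sub-themes | Codes | Barriers/Facilitators |
|---|---|---|---|
| Features of the screeners | Duration | Only one session to complete | Facilitator |
| Features of the screeners | Duration | Quick | Facilitator |
| Features of the screeners | Features of items | Concern about distribution of answer choices | Barrier |
| Features of the screeners | Features of items | Could only select answer after repetition | Barrier |
| Features of the screeners | Features of items | Developmentally appropriate | Facilitator |
| Features of the screeners | Features of items | Different functionality between practice and test items | Barrier |
| Features of the screeners | Features of items | Items advanced too quickly | Barrier |
| Features of the screeners | Features of items | Language level was too difficult | Barrier |
| Features of the screeners | Features of items | Content | Facilitator |
| Features of the screeners | Format | Automatically paced | Facilitator |
| Features of the screeners | Format | Digital | Facilitator |
| Features of the screeners | Format | Efficient | Facilitator |
| Features of the screeners | Format | Prefer paper/pencil | Barrier |
| Features of the screeners | Format | Simple format | Facilitator |
| Features of the screeners | Format | Standardized | Facilitator |
| Features of the screeners | Result turnaround time | Delayed results | Barrier |
| Features of the screeners | Result turnaround time | No real-time data | Barrier |
| Features of the screeners | Usability | Difficult for students with identified LDs to complete independently | Barrier |
| Features of the screeners | Usability | Difficult to access for caregivers | Barrier |
| Features of the screeners | Usability | Difficult to access for multilingual speakers | Barrier |
| Features of the screeners | Usability | Difficult to access for students without home support | Barrier |
| Features of the screeners | Usability | Directions were clear | Facilitator |
| Features of the screeners | Usability | Directions were easy to follow | Facilitator |
| Features of the screeners | Usability | Easy to access | Facilitator |
| Features of the screeners | Usability | Easy to use | Facilitator |
| Features of the screeners | Usability | Hard for parents to tell if screeners were working properly | Barrier |
| Features of the screeners | Usability | Hard to tell if completed | Barrier |
| Features of the screeners | Usability | Not appropriate for legally blind students | Barrier |
| Features of the screeners | Usability | Not appropriate for multilingual learners | Barrier |
| Features of the screeners | Usability | Not motivating for students | Barrier |
| Features of the screeners | Usability | Streamlined | Facilitator |
| Features of the screeners | Usability | Student could complete it at home | Facilitator |
| Features of the screeners | Usability | Students could complete it independently | Facilitator |
| Features of the screeners | Usability | Students not finishing due to parent errors | Barrier |
| Features of the screeners | Usability | Students unsure if answered a question | Barrier |
| Features of the screeners | Usability | Students were already familiar with devices | Facilitator |
| Features of the screeners | Usability | Too many steps for log in | Barrier |
| Features of the screeners | Usability | Typing demands exceeded students’ ability | Barrier |
| Preparation for screening procedures | Communication | Confusion about start | Barrier |
| Preparation for screening procedures | Communication | Directions were available in multiple languages | Facilitator |
| Preparation for screening procedures | Communication | Execution was ineffective | Barrier |
| Preparation for screening procedures | Communication | Limited advanced notice | Barrier |
| Preparation for screening procedures | Communication | Not enough information for parents | Barrier |
| Preparation for screening procedures | Communication | Parents did not see value in the screeners | Barrier |
| Preparation for screening procedures | Communication | Unclear about implementation logistics | Barrier |
| Preparation for screening procedures | Communication | Unclear what to expect | Barrier |
| Preparation for screening procedures | Preparation timeline | Limited preparation time | Barrier |
| Preparation for screening procedures | Preparation timeline | Need time to go over screening with parents | Barrier |
| Preparation for screening procedures | Preparation timeline | Need time to look at screeners | Barrier |
| Preparation for screening procedures | Training | Inadequate training | Barrier |
| Preparation for screening procedures | Training | Lack of practice session | Barrier |
| Preparation for screening procedures | Training | Limited understanding of the screeners | Barrier |
| Preparation for screening procedures | Training | Not enough information about screener functionality | Barrier |
| Preparation for screening procedures | Training | Not enough information on logistics | Barrier |
| Preparation for screening procedures | Training | Purpose of screeners was unclear | Barrier |
| Preparation for screening procedures | Training | Questions left unanswered by researchers | Barrier |
| Preparation for screening procedures | Training | Unable to answer questions for parents | Barrier |
| Preparation for screening procedures | Training | Unable to see the screeners in advance | Barrier |
| Preparation for screening procedures | Training | Unable to share examples with students | Barrier |
| Preparation for screening procedures | Training | Unsure if applicable with ERC students | Barrier |
| Administration of the screeners | Mode of administration | Difficult to support families | Barrier |
| Administration of the screeners | Mode of administration | Limited technical support | Barrier |
| Administration of the screeners | Mode of administration | Not able to see students answering questions | Barrier |
| Administration of the screeners | Mode of administration | Preference for in-person administration | Barrier |
| Administration of the screeners | Mode of administration | Remote administration was difficult | Barrier |
| Administration of the screeners | Mode of administration | Teacher felt discomfort with remote administration | Barrier |
| Administration of the screeners | Mode of administration | Unable to monitor students | Barrier |
| Administration of the screeners | Screening timeline | Rushed timeframe | Barrier |
| Administration of the screeners | Screening timeline | Timing was not ideal | Barrier |
| Administration of the screeners | Technical considerations | Administration via Seesaw | Barrier/Facilitator |
| Administration of the screeners | Technical considerations | Audio issues | Barrier |
| Administration of the screeners | Technical considerations | Completed screeners were not counted | Barrier |
| Administration of the screeners | Technical considerations | Technical issues | Barrier |
| Administration of the screeners | Educator and family involvement | Difficult for families to administer | Barrier |
| Administration of the screeners | Educator and family involvement | Easy to facilitate | Facilitator |
| Administration of the screeners | Educator and family involvement | Parents were able to participate in the process | Facilitator |
| Administration of the screeners | Educator and family involvement | Parents were confused | Barrier |
| Administration of the screeners | Educator and family involvement | Preference for more involvement | Barrier |
| Administration of the screeners | Educator and family involvement | Relying on families | Barrier |
| Demands on users | | A lot to handle | Barrier |
| Demands on users | | Added responsibility for teacher | Barrier |
| Demands on users | | Amount of time | Barrier |
| Demands on users | | Easy role | Facilitator |
| Demands on users | | Need for last minute changes to routines with families | Barrier |
| Demands on users | | Overwhelmed due to number of different district-required trainings | Barrier |
| Demands on users | | Preference for outside administrators | Barrier |
| Demands on users | | Prior experience with the screeners | Facilitator |
| Demands on users | | The screening added to screen time | Barrier |
| Screening results | Validity of screening results | False positives | Barrier |
| Screening results | Validity of screening results | Impulsive response styles | Barrier |
| Screening results | Validity of screening results | Invalid due to mode of administration | Barrier |
| Screening results | Validity of screening results | Invalid for certain populations | Barrier |
| Screening results | Validity of screening results | Invalid results | Barrier |
| Screening results | Validity of screening results | Invalid results due to features of the screeners | Barrier |
| Screening results | Validity of screening results | Parents may have helped with answers | Barrier |
| Screening results | Use of screening results | Early identification | Facilitator |
| Screening results | Use of screening results | Provides early literacy data | Facilitator |
| Screening results | Use of screening results | Provides useful data | Facilitator |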

Footnotes

Conflicts of interest: The authors declare that there are no competing interests at the time of publication.

Data availability statement:

The data that support the findings of this study are available from the corresponding author upon reasonable request.

References

- Adlof SM, & Hogan TP (2019). If We Don’t Look, We Won’t See: Measuring Language Development to Inform Literacy Instruction. Policy Insights from the Behavioral and Brain Sciences, 6(2), 210–217. 10.1177/2372732219839075

- Alonzo CN, Komesidou R, Wolter JA, Curran M, Ricketts J, & Hogan TP (2021). Building sustainable models of research practice partnerships within educational systems. Manuscript in revision.

- Anwyl-Irvine AL, Massonié J, Flitton A, Kirkham NZ, & Evershed JK (2019). Gorilla in our midst: An online behavioural experiment builder. Behavior Research Methods, 52(1), 388–407. 10.3758/s13428-019-01237-x

- Arnold EM, Goldston DB, Walsh AK, Reboussin BA, Daniel SS, Hickman E, & Wood FB (2005). Severity of Emotional and Behavioral Problems Among Poor and Typical Readers. Journal of Abnormal Child Psychology, 33(2), 205–217. 10.1007/s10802-005-1828-9

- Ash AC, Christopulos TT, & Redmond SM (2020). “Tell Me About Your Child”: A Grounded Theory Study of Mothers’ Understanding of Language Disorder. American Journal of Speech-Language Pathology, 29(2), 819–840. 10.1044/2020_AJSLP-19-00064

- Aspland T, Macpherson I, Proudford C, & Whitmore L (1996). Critical Collaborative Action Research as a Means of Curriculum Inquiry and Empowerment. Educational Action Research, 4(1), 93–104. 10.1080/0965079960040108

- Bauer MS, Damschroder L, Hagedorn H, Smith J, & Kilbourne AM (2015). An introduction to implementation science for the non-specialist. BMC Psychology, 3(1), 32. 10.1186/s40359-015-0089-9

- Bauer MS, & Kirchner J (2019). Implementation science: What is it and why should I care? Psychiatry Research, 112376. 10.1016/j.psychres.2019.04.025

- Benda NC, Veinot TC, Sieck CJ, & Ancker JS (2020). Broadband Internet Access Is a Social Determinant of Health! American Journal of Public Health, 110(8), 1123–1125. 10.2105/AJPH.2020.305784

- Braun V, & Clarke V (2006). Using thematic analysis in psychology. Qualitative Research in Psychology, 3(2), 77–101. 10.1191/1478088706qp063oa

- Brownlie EB, Beitchman JH, Escobar M, Young A, Atkinson L, Johnson C, Wilson B, & Douglas L (2004). Early Language Impairment and Young Adult Delinquent and Aggressive Behavior. Journal of Abnormal Child Psychology, 32(4), 453–467. 10.1023/B:JACP.0000030297.91759.74

- Campbell DR, & Goldstein H (2021). Evolution of Telehealth Technology, Evaluations, and Therapy: Effects of the COVID-19 Pandemic on Pediatric Speech-Language Pathology Services. American Journal of Speech-Language Pathology, 1–16. 10.1044/2021_AJSLP-21-00069

- Castilla-Earls A, Bedore L, Rojas R, Fabiano-Smith L, Pruitt-Lord S, Restrepo MA, & Peña E (2020). Beyond Scores: Using Converging Evidence to Determine Speech and Language Services Eligibility for Dual Language Learners. American Journal of Speech-Language Pathology, 29(3), 1116–1132. 10.1044/2020_AJSLP-19-00179

- Catts HW, & Hogan TP (2020). Dyslexia: An ounce of prevention is better than a pound of diagnosis and treatment [Preprint]. PsyArXiv. 10.31234/osf.io/nvgje

- Clarke ALL, Shearer W, McMillan AJ, & Ireland PD (2010). Investigating apparent variation in quality of care: The critical role of clinician engagement. Medical Journal of Australia, 193(S8). 10.5694/j.1326-5377.2010.tb04025.x

- Conti-Ramsden G, & Botting N (2008). Emotional health in adolescents with and without a history of specific language impairment (SLI). Journal of Child Psychology and Psychiatry, 49(5), 516–525. 10.1111/j.1469-7610.2007.01858.x

- Cook BG, & Odom SL (2013). Evidence-Based Practices and Implementation Science in Special Education. Exceptional Children, 79(3), 135–144. 10.1177/001440291307900201

- Damschroder LJ, Aron DC, Keith RE, Kirsh SR, Alexander JA, & Lowery JC (2009). Fostering implementation of health services research findings into practice: A consolidated framework for advancing implementation science. Implementation Science, 4(1), 50. 10.1186/1748-5908-4-50

- Douglas NF, Campbell WN, & Hinckley JJ (2015). Implementation Science: Buzzword or Game Changer? Journal of Speech, Language, and Hearing Research, 58(6). 10.1044/2015_JSLHR-L-15-0302

- Fohlin L, Sedem M, & Allodi MW (2021). Teachers’ Experiences of Facilitators and Barriers to Implement Theme-Based Cooperative Learning in a Swedish Context. Frontiers in Education, 6, 139. 10.3389/feduc.2021.663846

- Fulcher-Rood K, Castilla-Earls A, & Higginbotham J (2020). What Does Evidence-Based Practice Mean to You? A Follow-Up Study Examining School-Based Speech-Language Pathologists’ Perspectives on Evidence-Based Practice. American Journal of Speech-Language Pathology, 29(2), 688–704. 10.1044/2019_AJSLP-19-00171

- Goldstein H, & Olswang L (2017). Is there a science to facilitate implementation of evidence-based practices and programs? Evidence-Based Communication Assessment and Intervention, 11(3–4), 55–60. 10.1080/17489539.2017.1416768

- Goldstein H, & Olszewski A (2015). Developing a Phonological Awareness Curriculum: Reflections on an Implementation Science Framework. Journal of Speech, Language, and Hearing Research, 58(6). 10.1044/2015_JSLHR-L-14-0351

- Green LW (2008). Making research relevant: If it is an evidence-based practice, where’s the practice-based evidence? Family Practice, 25(Supplement 1), i20–i24. 10.1093/fampra/cmn055

- Green LW, & Glasgow RE (2006). Evaluating the Relevance, Generalization, and Applicability of Research: Issues in External Validation and Translation Methodology. Evaluation & the Health Professions, 29(1), 126–153. 10.1177/0163278705284445

- Hamilton AB, & Finley EP (2020). Reprint of: Qualitative methods in implementation research: An introduction. Psychiatry Research, 283, 112629. 10.1016/j.psychres.2019.112629

- Hendricks AE, Adlof SM, Alonzo CN, Fox AB, & Hogan TP (2019). Identifying Children at Risk for Developmental Language Disorder Using a Brief, Whole-Classroom Screen. Journal of Speech, Language, and Hearing Research, 62(4), 896–908. 10.1044/2018_JSLHR-L-18-0093

- Henrick EC, Cobb P, Penuel WR, Jackson K, & Clark T (n.d.). Assessing Research-Practice Partnerships. 31.

- Kamerman SB, & Gatenio-Gabel S (2007). Early Childhood Education and Care in the United States: An Overview of the Current Policy Picture. International Journal of Child Care and Education Policy, 1(1), 23–34. 10.1007/2288-6729-1-1-23

- Maxim LD, Niebo R, & Utell MJ (2014). Screening tests: A review with examples. Inhalation Toxicology, 26(13), 811–828. 10.3109/08958378.2014.955932

- McArthur GM, Filardi N, Francis DA, Boyes ME, & Badcock NA (2020). Self-concept in poor readers: A systematic review and meta-analysis. PeerJ, 8, e8772. 10.7717/peerj.8772

- McArthur GM, Hogben JH, Edwards VT, Heath SM, & Mengler ED (2000). On the “Specifics” of Specific Reading Disability and Specific Language Impairment. Journal of Child Psychology and Psychiatry, 41(7), 869–874. 10.1111/1469-7610.00674

- McGregor KK (2020). How We Fail Children With Developmental Language Disorder. Language, Speech, and Hearing Services in Schools, 1–12. 10.1044/2020_LSHSS-20-00003

- Miles S, Fulbrook P, & Mainwaring-Mägi D (2018). Evaluation of Standardized Instruments for Use in Universal Screening of Very Early School-Age Children: Suitability, Technical Adequacy, and Usability. Journal of Psychoeducational Assessment, 36(2), 99–119. 10.1177/0734282916669246

- Norbury CF, Gooch D, Wray C, Baird G, Charman T, Simonoff E, Vamvakas G, & Pickles A (2016). The impact of nonverbal ability on prevalence and clinical presentation of language disorder: Evidence from a population study. Journal of Child Psychology and Psychiatry, 57(11), 1247–1257. 10.1111/jcpp.12573

- Ozernov-Palchik O, & Gaab N (2016). Tackling the ‘dyslexia paradox’: Reading brain and behavior for early markers of developmental dyslexia. Wiley Interdisciplinary Reviews: Cognitive Science, 7(2), 156–176. 10.1002/wcs.1383

- Paul R (1996). Clinical Implications of the Natural History of Slow Expressive Language Development. American Journal of Speech-Language Pathology, 5(2), 5–21. 10.1044/1058-0360.0502.05

- Petscher Y, Fien H, Stanley C, Gearin B, Gaab N, Fletcher JM, & Johnson E (2019). Screening for dyslexia. Retrieved from improvingliteracy.org.

- Porter KL, Oetting JB, & Pecchioni L (2020). Caregivers’ Perceptions of Speech-Language Pathologist Talk About Child Language and Literacy Disorders. American Journal of Speech-Language Pathology, 29(4), 2049–2067. 10.1044/2020_AJSLP-20-00049

- Ribeiro LM, Cunha RS, Silva M. C. A. e, Carvalho M, & Vital ML (2021). Parental Involvement during Pandemic Times: Challenges and Opportunities. Education Sciences, 11(6), 302. 10.3390/educsci11060302

- Tomblin JB, Records NL, Buckwalter P, Zhang X, Smith E, & O’Brien M (1997). Prevalence of Specific Language Impairment in Kindergarten Children. Journal of Speech, Language, and Hearing Research, 40(6), 1245–1260. 10.1044/jslhr.4006.1245

- Tucker JK (2012). Perspectives of Speech-Language Pathologists on the Use of Telepractice in Schools: The Qualitative View. International Journal of Telerehabilitation, 4(2), 47–60. 10.5195/ijt.2012.6102

- Wagner RK, Zirps FA, Edwards AA, Wood SG, Joyner RE, Becker BJ, Liu G, & Beal B (2020). The Prevalence of Dyslexia: A New Approach to Its Estimation. Journal of Learning Disabilities, 53(5), 354–365. 10.1177/0022219420920377

- Ward-Lonergan JM, & Duthie JK (2018). The State of Dyslexia: Recent Legislation and Guidelines for Serving School-Age Children and Adolescents With Dyslexia. Language, Speech, and Hearing Services in Schools, 49(4), 810–816. 10.1044/2018_LSHSS-DYSLC-18-0002

- Woodward EN, Singh RS, Ndebele-Ngwenya P, Melgar Castillo A, Dickson KS, & Kirchner JE (2021). A more practical guide to incorporating health equity domains in implementation determinant frameworks. Implementation Science Communications, 2(1), 61. 10.1186/s43058-021-00146-5
