SUMMARY

Integrative analysis of multiple data sets has the potential of fully leveraging the vast amount of high throughput biological data being generated. In particular such analysis will be powerful in making inference from publicly available collections of genetic, transcriptomic and epigenetic data sets which are designed to study shared biological processes, but which vary in their target measurements, biological variation, unwanted noise, and batch variation. Thus, methods that enable the joint analysis of multiple data sets are needed to gain insights into shared biological processes that would otherwise be hidden by unwanted intra-data set variation. Here, we propose a method called two-stage linked component analysis (2s-LCA) to jointly decompose multiple biologically related experimental data sets with biological and technological relationships that can be structured into the decomposition. The consistency of the proposed method is established and its empirical performance is evaluated via simulation studies. We apply 2s-LCA to jointly analyze four data sets focused on human brain development and identify meaningful patterns of gene expression in human neurogenesis that have shared structure across these data sets.

Keywords: Integrative methods, Joint decomposition, Low rank models, Multiview data, Principal component analysis

1. Introduction

1.1. Background

Heterogeneous data of various types are now being collected from scientific experiments and/or large scale health initiatives. Thus, it is of scientific interest to harness the collective discovery potential of such complex and growing data resources. For example, in biomedical research, high-dimensional gene expression and epigenetic data are produced to gain insight into cellular processes and disease mechanisms (Stein-O’Brien and others, 2019). Another example is in the study of Alzheimer’s disease, where recent efforts have focused on combining brain imaging data, genetic data, and clinical outcomes in predicting disease (Nathoo and others, 2019). A third example is a large study profiling different states of wellness, where genetic, proteomic, and metabolic data, among other types of data, are collected (Gao and others, 2020). Together, the different types of data provide a more comprehensive picture, which has the potential of better characterizing and optimizing what it is to be healthy. In addition to these examples of studies with multimodal data collection, many public repositories are full of experimental data from single modalities that are related biologically. Therefore, in recent years, there has been growing interest in, and demand for, understanding and utilizing these data in an integrative way (Lock and others, 2013; Yang and Michailidis, 2016; Li and Jung, 2017; Gaynanova and Li, 2019; Gao and others, 2020).

1.2. Motivating data

Advances in RNA sequencing technologies have produced a large amount of data, creating unprecedented opportunities for scientific discovery through data integration. We put together four experimental paradigms used to study brain development: (i) van de Leemput and others (2014); (ii) Yao and others (2017); (iii) BrainSpan (2011); and (iv) Nowakowski and others (2017). Specifically, we use two in vitro data sets from cultured human pluripotent stem cells subjected to neural differentiation, and two in vivo data sets of brain tissue across varying ages of human fetal development. Within the in vitro and in vivo studies, two different RNA sequencing technologies were used: the two bulk studies extracted RNA from all the cells in tissue samples and expression levels were measured in aggregate for each tissue sample, while in the two single-cell studies, the RNA from each cell in a tissue sample was extracted and quantified individually. All four data sets focus on the creation of neurons from neural precursor cells across these times and hence are highly interrelated. However, there are sample-specific details that introduce experiment-specific variation beyond the expected technical batch effects. In the van de Leemput and others (2014) bulk data set, data were collected at 9 time points across 77 days of neural differentiation with a total number of 24 in vitro samples. The Yao and others (2017) data set was collected to model the production of human cortical neurons in vitro. There are about 2.7 thousand cells collected across 54 days in vitro. In the BrainSpan (2011) bulk data set, there are a total of 35 in vivo brain tissue samples. The Nowakowski and others (2017) data set contains around 3.5 thousand single cells across different ages in the fetal brain. For visualization of these data sets, see the NeMO Analytics portal at https://nemoanalytics.org/p?l=ChenEtAl2021&g=DCX, where the gEAR platform is leveraged to construct an integrated gene expression data viewer including all data sets used in the report (Orvis and others, 2021).

The primary goal of assembling these different data sets together is to define molecular dynamics that are common to all of them and are central in the creation of human neocortical neurons. In addition, we are interested in learning what cellular processes during in vivo development of the brain can be recapitulated in the in vitro systems and what processes are only present in the in vivo data. To achieve this goal, we need a new joint model that can properly model the two-way design exhibited by the four data sets and separate the different cellular processes.

1.3. Existing literature

We distinguish two distinct types of data structure for multiple data sets: multiview data and linked data. For multiview data, for example, multiomics data, different sets of features are measured for each subject or unit with subject being the linking entity across data sets. For linked data, for example, gene expression data for different types of cancer patients in The Cancer Genome Atlas, the same set of features are measured for different groups of subjects. When data sets are organized such that each column corresponds to one sample, the former can be called vertically linked data as the data sets can be aligned vertically, while the latter can be called horizontally linked data as the data sets can be aligned horizontally (Richardson and others, 2016). For both types of data structures, a central goal of statistical analysis is to identify meaningful decomposition of variations across data sets.

Canonical correlation analysis (CCA) is a useful method for extracting common variation across multiview data. Various variants of CCA for high dimensional data sets have been proposed; see, for example, Gao and others (2020) and Min and Long (2020). However, CCA ignores potentially substantial variation present only in individual data sets. To remedy this, the joint and individual variations explained (JIVE) method (Lock and others, 2013) and subsequent variants of JIVE, for example, AJIVE (Feng and others, 2018), were developed to identify common variation shared across multiple data sets as well as individual variation specific to each data set. Going further, the structural learning and integrative decomposition (SLIDE) method (Gaynanova and Li, 2019) also allows variations that are shared by only a subset of data sets. Other relevant works include Li and Jung (2017), Li and others (2018), and Argelaguet and others (2018).

A pioneering method for linked data is common principal component analysis (CPCA; Flury, 1984), which can identify the same set of orthogonal eigenvectors shared across data sets. CPCA was extended to extract a shared signal subspace present across data sets (Flury, 1987) and to determine the number of shared components (Wang and others, 2020). Another extension based on matrix decomposition, population value decomposition (Crainiceanu and others, 2011), extends CPCA to matrix-valued data, for example, neuroimaging and electrophysiology data.

We mention a few other related works. Lock and others (2020) and Park and Lock (2020) jointly analyzed multiple data sets for heterogeneous groups of objects with heterogeneous feature sets. Kallus and others (2019) developed a data adaptive method which allows structure in data to be shared across an arbitrary subset of views and cohorts. Gao and others (2020) and Wang and Allen (2021) considered clustering problems for multiview data. Li and others (2019) proposed a regression model with multiview data as covariates.

1.4. Our contribution

We propose a joint decomposition model for multiple linked data sets with a general design, for example, the 2 by 2 factorial design in our motivating data, thus extending the aforementioned CPCA method, which assumes that the linked data sets have a one-way design. The goal of the joint decomposition is to identify signal subspaces that are either shared or not shared across data sets. Similar in spirit to the underlying model for the SLIDE method for multiview data, our model allows for a common signal subspace that is shared by all data sets, partially shared signal subspaces that are shared by only subsets of the data sets, and individual signal subspaces specific to each data set. It is important to note that, for our method, it is fixed signal subspaces rather than latent random scores that are shared across data sets. Another significant difference is that the existence of partially shared signal subspaces for the proposed model is determined by design, which is scientifically meaningful for our motivating data. While not designed explicitly for linked data and the use case described, other methods can also be used in this setting. The BIDIFAC+ method (Lock and others, 2020), an extension of the BIDIFAC method for bidimensional data (Park and Lock, 2020), could also accommodate partially shared subspaces by design for linked data. Both methods were proposed for decomposing bidimensional data. Specifically, the BIDIFAC+ method decomposes data into the sum of matrices with varying structures and imposes matrix nuclear norm penalties for model selection. Additionally, the SLIDE method, while designed for multiview data, may still be applied to linked data via transposing the data. Indeed, existing multiview methods (e.g., JIVE) that are based on matrix decomposition are also applicable to linked data. However, because SLIDE is a fully unsupervised method and due to the specific penalty it employs to learn different types of components, it is unclear how to directly incorporate design of components into SLIDE. Nevertheless, it is worth mentioning that SLIDE, BIDIFAC+, and the proposed model all allow partially shared components.

Our model estimation consists of a simple and intuitive two-stage procedure and is built on existing literature in principal component analysis. From a computational perspective, it is straightforward and avoids complex and computationally challenging optimization methods, for example, large matrix decomposition and iterative optimization methods as in BIDIFAC+ and SLIDE. In particular, the proposed method avoids joint estimation of the dimensions of different types of spaces, which can be challenging for both BIDIFAC+ and SLIDE, as is shown in the simulation study. We also study the theoretical properties of the proposed method for a general class of decomposition models. Specifically, we establish the consistency of the proposed method for estimating the signal subspaces. In particular, we prove the consistency of the proposed method for estimating the dimensions of the signal subspaces. Note that most existing methods in the literature lack theoretical justification for the choice of dimensions (or ranks of matrices).

The rest of the article is organized as follows. In Section 2, we first describe our model for linked data sets with a 2 by 2 design, as motivated by our data example, and then extend the model to data sets with a general design. A two-stage model estimation method is also given in that section. In Section 3, we study the theoretical properties of the proposed model estimation method. In Section 4, we carry out a simulation study to evaluate the empirical performance of our proposed method and compare it with existing methods. In Section 5, we apply the proposed method to the motivating data and also carry out a validation study using additional data sets. Finally, in Section 6, we discuss how our work can be extended, for example, to high-dimensional data.

2. Models

2.1. Model formulation

We first consider a 2 by 2 factorial design, as in our motivating data, and later generalize the model to more general designs. Let $i \in \{1, 2\}$ be the index for the cells’ system, with $i = 1$ for in vitro and $i = 2$ for in vivo. Similarly, let $j \in \{1, 2\}$ be the index for the RNA sequencing technology, with $j = 1$ for single cell and $j = 2$ for bulk. Let $X_{ij} \in \mathbb{R}^{p \times n_{ij}}$ be the $(i, j)$th data set consisting of $n_{ij}$ tissue samples or cells of dimension $p$. For simplicity, hereafter we shall use sample to refer to either tissue sample or cell. Denote by $x_{ijk} \in \mathbb{R}^{p}$ the $k$th sample for the $(i, j)$th data set so that $X_{ij} = [x_{ij1}, \ldots, x_{ijn_{ij}}]$. Thus, the samples are of the same dimension for all data sets, and they are assumed independent between the data sets and from each other.

Consider a two-way latent factor model,

$$x_{ijk} = V_{0} a_{ijk} + V_{i\cdot} b_{ijk} + V_{\cdot j} c_{ijk} + W_{ij} d_{ijk} + e_{ijk}, \tag{2.1}$$

where $V_{0}$, $V_{i\cdot}$, $V_{\cdot j}$, and $W_{ij}$ are fixed orthonormal matrices of components with associated random scores $a_{ijk}$, $b_{ijk}$, $c_{ijk}$, and $d_{ijk}$, respectively. The vector $e_{ijk}$ consists of uncorrelated, mean zero error terms, with variance $\sigma_{ij}^{2}$. We assume that all random scores have zero means and covariances $\mathrm{Cov}(a_{ijk}) = \Sigma^{a}_{ij}$, $\mathrm{Cov}(b_{ijk}) = \Sigma^{b}_{ij}$, $\mathrm{Cov}(c_{ijk}) = \Sigma^{c}_{ij}$, and $\mathrm{Cov}(d_{ijk}) = \Sigma^{d}_{ij}$, with positive diagonal elements in all covariances. In particular, we assume without loss of generality that, for each matrix of components, the score covariance in one pre-specified data set containing those components is a diagonal matrix, so that the matrices can be identified. The other covariance matrices can be nondiagonal, implying that components do not have to align component-wise across the data sets, but the spaces spanned by them are the same. Finally, the random terms $a_{ijk}$, $b_{ijk}$, $c_{ijk}$, $d_{ijk}$, and $e_{ijk}$ are assumed uncorrelated across $i$, $j$, and $k$.

For model identifiability, we impose the condition that the orthonormal matrices $V_{0}$, $V_{1\cdot}$, $V_{2\cdot}$, $V_{\cdot 1}$, $V_{\cdot 2}$, and $W_{ij}$ ($i, j = 1, 2$) are also mutually orthogonal. This condition ensures that the components are not movable; for example, components in one matrix cannot be transferred to other matrices without changing the model.

Model (2.1) can be interpreted as a multivariate ANOVA model. To elaborate, we use the notation $\mathrm{span}(\cdot)$ to denote the column space of a matrix. Then, $\mathrm{span}(V_{0})$ is the space of common components, the space of variation that is shared by all data sets. Next, $\mathrm{span}(V_{1\cdot})$ is the space of partially shared components specific to the in vitro system, that is, the two in vitro data sets. Thus, it is orthogonal to both the common components, that is, $\mathrm{span}(V_{0})$, and the space of variation specific to the in vivo system, that is, $\mathrm{span}(V_{2\cdot})$. Third, $\mathrm{span}(V_{\cdot 1})$ is the space of partially shared components specific to the single cell sequencing technology and $\mathrm{span}(V_{\cdot 2})$ is for the bulk sequencing method. Finally, $\mathrm{span}(W_{ij})$ are the individual components specific to each data set.

For data set $(i, j)$, model (2.1) is a latent factor model with the signal space being the column space of $U_{ij} = [V_{0}, V_{i\cdot}, V_{\cdot j}, W_{ij}]$, which can be denoted by $\mathrm{span}(U_{ij})$.
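To make the structure of the two-way latent factor model concrete, the following Python sketch simulates four data sets that share a common block, system-specific blocks, technology-specific blocks, and individual blocks of components. It is only an illustration under stated assumptions: the function name `simulate_two_way`, the dimensions, the Gaussian scores, and the noise level are hypothetical choices made for the example and are not taken from the study.

```python
import numpy as np

def simulate_two_way(p=60, n=300, r=2, score_sd=3.0, noise_sd=0.1, seed=0):
    """Simulate four p x n data sets from a 2 by 2 latent factor model.

    All settings are hypothetical and chosen only for illustration.
    """
    rng = np.random.default_rng(seed)
    # One QR factorization gives 9 mutually orthogonal blocks of r columns:
    # 1 common, 2 system-specific, 2 technology-specific, 4 individual.
    Q, _ = np.linalg.qr(rng.standard_normal((p, 9 * r)))
    blocks = [Q[:, b * r:(b + 1) * r] for b in range(9)]
    common = blocks[0]
    system = {1: blocks[1], 2: blocks[2]}
    tech = {1: blocks[3], 2: blocks[4]}
    keys = [(1, 1), (1, 2), (2, 1), (2, 2)]
    individual = dict(zip(keys, blocks[5:]))

    data, bases = {}, {}
    for (i, j) in keys:
        # Signal basis of data set (i, j): common, system, technology, individual.
        basis = np.hstack([common, system[i], tech[j], individual[(i, j)]])
        scores = score_sd * rng.standard_normal((4 * r, n))
        noise = noise_sd * rng.standard_normal((p, n))
        data[(i, j)] = basis @ scores + noise
        bases[(i, j)] = basis
    return data, bases

data, bases = simulate_two_way()
```

Drawing all blocks from a single QR factorization is a convenient way to enforce the mutual orthogonality required for identifiability; other constructions would serve equally well.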

2.2. Model estimation

We propose a two-stage estimation method, called two-stage linked component analysis (2s-LCA). The first stage is to estimate the signal space from each data set separately using PCA. The second stage combines the signal spaces to extract the common subspace, the partially shared subspaces and the individual subspaces, also using PCA. Note that the first stage of 2s-LCA is essentially the same as the first step of AJIVE (Feng and others, 2018), which first extracts signal spaces from each data set and then decomposes the variations of the obtained latent scores into common variation and individual variation.

For the first stage, the key is to estimate the number of components in each data set, that is, the dimension of the signal space $\mathrm{span}(U_{ij})$ of data set $(i, j)$. We use the BEMA method (Ke and others, 2021) (see Supplementary material available at Biostatistics online for detail), which fits a distribution to the eigenvalues (putatively) arising from noise using the observed bulk eigenvalues and then determines the number of eigenvalues arising from signals via extrapolation of the fitted distribution. This method yields consistent estimation and worked well in our simulations. Denote by $\hat{U}_{ij}$ the matrices of the estimated eigenvectors associated with the top eigenvalues from the sample covariance of data set $(i, j)$.
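The first stage can be sketched in Python as a per-data-set PCA. This is a minimal sketch, not the authors' implementation: the function name `estimate_signal_basis` is hypothetical, and the number of components `rank` is passed in by the caller as a stand-in for the output of a rank-selection method such as BEMA, which is not implemented here.

```python
import numpy as np

def estimate_signal_basis(X, rank):
    """Top `rank` eigenvectors of the sample covariance of X (p x n, columns are samples).

    `rank` is supplied by the caller; in the text it would come from a
    rank-selection method such as BEMA, which is not implemented here.
    """
    Xc = X - X.mean(axis=1, keepdims=True)  # center each feature
    # Left singular vectors of the centered data are the eigenvectors of the
    # sample covariance; squared singular values / (n - 1) are its eigenvalues.
    U, s, _ = np.linalg.svd(Xc, full_matrices=False)
    eigvals = s ** 2 / (X.shape[1] - 1)
    return U[:, :rank], eigvals

# Toy check on synthetic data with a known 3-dimensional signal space.
rng = np.random.default_rng(1)
Q, _ = np.linalg.qr(rng.standard_normal((30, 3)))
X = Q @ (5.0 * rng.standard_normal((3, 500))) + 0.1 * rng.standard_normal((30, 500))
U_hat, eigvals = estimate_signal_basis(X, rank=3)
# Spectral-norm distance between the estimated and true projection matrices.
subspace_error = np.linalg.norm(U_hat @ U_hat.T - Q @ Q.T, 2)
```

The estimated basis is compared with the truth through projection matrices because a basis of a subspace is only determined up to rotation.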

For the second stage, we extract the common subspace, the partially shared subspaces, and the individual subspaces, sequentially. Assume that we extract the subspaces in the following order: $V_{0} \to V_{1\cdot} \to V_{2\cdot} \to V_{\cdot 1} \to V_{\cdot 2} \to W_{ij}$. Here, to simplify notation, we use the matrices to denote the subspaces.

The estimation of the common subspace is based on the following observation. Let $M = \sum_{i=1}^{2} \sum_{j=1}^{2} U_{ij} U_{ij}^{\top}$, where $U_{ij}$ is an orthonormal basis of the signal space of data set $(i, j)$. Then, it is easy to show that for any unit eigenvector $v$ of $M$, the associated eigenvalue $v^{\top} M v$ equals $4$ if $v \in \mathrm{span}(V_{0})$ and is at most $2$ otherwise. Thus, there exists a natural gap between the eigenvalues associated with the common subspace and those of other subspaces for the sample version $\hat{M} = \sum_{i=1}^{2} \sum_{j=1}^{2} \hat{U}_{ij} \hat{U}_{ij}^{\top}$. From a theoretical perspective, any cut-off value between 2 and 4 for the eigenvalues may lead to consistent estimation. In practice, a scree plot of the eigenvalues of $\hat{M}$ can be used to determine the dimension of the common subspace, that is, considering the relative amount of variation explained among the top eigenvalues. Once the dimension of the common subspace is determined, the eigenvectors of $\hat{M}$ associated with these top eigenvalues give us an estimate of the space of common components. Let $\hat{V}_{0}$ be the matrix with columns corresponding to the retained eigenvectors of $\hat{M}$. Note that $\hat{V}_{0}$ may not be a good estimate of $V_{0}$ elementwise; rather, the notation is used to represent that $\mathrm{span}(\hat{V}_{0})$ is an estimate of $\mathrm{span}(V_{0})$ and $\hat{V}_{0}$ is one of many matrix representations of the former.
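The eigenvalue gap described above can be checked numerically. The sketch below sums the projection matrices of four orthonormal signal bases and keeps the eigenvectors whose eigenvalues exceed a cut-off. The function name `common_subspace` is hypothetical, and the default cut-off of 3.0 is an arbitrary illustrative value between 2 and 4; in practice the text recommends inspecting a scree plot instead.

```python
import numpy as np

def common_subspace(bases, cutoff=3.0):
    """Eigenvectors of the summed projection matrices with eigenvalue > cutoff.

    bases: list of four p x r matrices with orthonormal columns.
    cutoff: any value between 2 and 4; 3.0 is an arbitrary illustrative choice.
    """
    M = sum(U @ U.T for U in bases)
    eigvals, eigvecs = np.linalg.eigh(M)  # eigenvalues in ascending order
    keep = eigvals > cutoff
    return eigvecs[:, keep], eigvals[::-1]  # eigenvalues returned in descending order

# Noise-free toy example: 9 mutually orthogonal blocks of 2 columns each
# (1 common, 2 system-specific, 2 technology-specific, 4 individual).
rng = np.random.default_rng(2)
Q, _ = np.linalg.qr(rng.standard_normal((40, 18)))
B = [Q[:, 2 * b:2 * b + 2] for b in range(9)]
bases = [np.hstack([B[0], B[1 + i], B[3 + j], B[5 + 2 * i + j]])
         for i in range(2) for j in range(2)]
V0_hat, eigvals = common_subspace(bases)
```

In this noise-free setting the nonzero eigenvalues are exactly 4 (common block), 2 (partially shared blocks), and 1 (individual blocks), so the retained eigenvectors span the common block.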

Next we estimate the partially shared subspaces. We first project $\hat{U}_{ij}$ onto the orthogonal complement of $\hat{V}_{0}$, that is, $(I_{p} - \hat{V}_{0} \hat{V}_{0}^{\top}) \hat{U}_{ij}$. For simplicity, we still denote it by $\hat{U}_{ij}$, but the common subspace has been removed. We now consider estimation of $V_{1\cdot}$, the space of partially shared components corresponding to the in vitro system. With similar arguments as before, now the matrix $\hat{U}_{11} \hat{U}_{11}^{\top} + \hat{U}_{12} \hat{U}_{12}^{\top}$ has one set of eigenvalues close to $2$ and another set of eigenvalues close to or smaller than $1$. Indeed, the theoretical counterpart of the above matrix has two sets of nonzero eigenvalues: one set of eigenvalues of 2 with associated eigenvectors forming the space $\mathrm{span}(V_{1\cdot})$ and another set of eigenvalues of 1 with associated eigenvectors from the space specific to either data set $(1, 1)$ or data set $(1, 2)$. Therefore, another scree plot of the eigenvalues of $\hat{U}_{11} \hat{U}_{11}^{\top} + \hat{U}_{12} \hat{U}_{12}^{\top}$ will determine the dimension of $V_{1\cdot}$ and also give an estimate of the space.
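The projection-then-eigendecomposition step for a partially shared subspace can be sketched as follows. The function name `partially_shared_subspace` and the cut-off of 1.5 (an arbitrary value between 1 and 2) are assumptions made for illustration; the sketch takes the already-extracted common basis as given.

```python
import numpy as np

def partially_shared_subspace(pair_bases, extracted, cutoff=1.5):
    """Estimate a subspace shared by two data sets after removing `extracted`.

    pair_bases: two p x r matrices with orthonormal columns.
    extracted: p x q orthonormal basis of the subspaces already extracted.
    cutoff: illustrative value between 1 and 2 (an assumption, not from the text).
    """
    p = extracted.shape[0]
    P_perp = np.eye(p) - extracted @ extracted.T  # projector onto the orthogonal complement
    M = np.zeros((p, p))
    for U in pair_bases:
        U_res = P_perp @ U  # remove the already-extracted directions
        M += U_res @ U_res.T
    eigvals, eigvecs = np.linalg.eigh(M)
    return eigvecs[:, eigvals > cutoff]

# Noise-free toy example with mutually orthogonal 2-column blocks.
rng = np.random.default_rng(3)
Q, _ = np.linalg.qr(rng.standard_normal((40, 12)))
common, shared, tech1, tech2, ind1, ind2 = (Q[:, 2 * b:2 * b + 2] for b in range(6))
U_a = np.hstack([common, shared, tech1, ind1])
U_b = np.hstack([common, shared, tech2, ind2])
shared_hat = partially_shared_subspace([U_a, U_b], extracted=common)
```

After the common block is projected out, the shared block has eigenvalue 2 while every remaining block has eigenvalue 1, which is why a threshold between the two isolates it.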

Similar analysis can be used to estimate the other partially shared subspaces. For example, a spectral analysis of $\hat{U}_{21} \hat{U}_{21}^{\top} + \hat{U}_{22} \hat{U}_{22}^{\top}$ can be used to estimate $V_{2\cdot}$. Further steps follow similarly and thus are omitted. To ensure that the estimated spaces are orthogonal, orthogonal projection is always used after each estimation of the partially shared subspaces. After the projection of $\hat{U}_{ij}$ onto the complement of the estimates of $V_{i\cdot}$ and $V_{\cdot j}$, the remaining space is an estimate of the individual space $\mathrm{span}(W_{ij})$.

A remaining problem lies in determining the order in which the subspaces are estimated. First, it is clear that components shared by more data sets should be prioritized and estimated first. The problem then lies in the order in which to extract the partially shared components. While this is not a problem in our theoretical analysis, in real data analysis, different orders produce different estimates because of the orthogonality condition imposed on the model and the orthogonal projection used in model estimation. A reasonable approach is to consider the quality of the data sets (e.g., the sample size) and to prioritize the spaces of shared components with higher perceived quality in the estimation order.

2.3. Model and estimation for general design

We extend the above model for a two-way factorial design to a model with a general design. To accommodate the more general setting, the notation here is slightly different from the previous sections. Assume that there are $I$ data sets, with the $i$th data set containing $n_{i}$ samples. Denote by $x_{ik} \in \mathbb{R}^{p}$ the $k$th sample for the $i$th data set. We consider a latent factor model with general design as follows:

$$x_{ik} = \sum_{j=1}^{J} \delta_{ij} V_{j} u_{ijk} + e_{ik}. \tag{2.2}$$

The orthonormal matrices $V_{j} \in \mathbb{R}^{p \times r_{j}}$ correspond to the $j$th space of components with associated scores, $u_{ijk} \in \mathbb{R}^{r_{j}}$. So the $j$th space is of dimension $r_{j}$, which is unknown and needs to be determined. The indicator variables, $\delta_{ij} \in \{0, 1\}$, denote if the $i$th data set contains the $j$th space of components, and $e_{ik}$ are random errors. Here the number of spaces, $J$, and the indicator variables are determined by design. For example, for the two-way factorial design considered above, $I = 4$ and $J = 9$ (one common space, four partially shared spaces, and four individual spaces). Two clear restrictions on the indicator variables are: (i) for each $j$, there exists at least one $i$ such that $\delta_{ij} = 1$; (ii) there does not exist $j \neq j'$ with identical indicator values across all data sets. Let $m_{j} = \sum_{i=1}^{I} \delta_{ij}$, which is the number of data sets sharing the $j$th space of components. Without loss of generality, we assume that $m_{1} \geq m_{2} \geq \cdots \geq m_{J}$.
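As an illustration of how a design determines the indicator variables, the following Python sketch builds the indicator matrix for the two-way factorial design, counts how many data sets share each space, and checks the two restrictions stated above. The labels and the helper name `contains` are hypothetical and introduced only for this example.

```python
import numpy as np

# Rows: data sets labelled by (system, technology); columns: spaces of components.
datasets = [(1, 1), (1, 2), (2, 1), (2, 2)]
spaces = (["common"]
          + [("system", i) for i in (1, 2)]
          + [("technology", j) for j in (1, 2)]
          + [("individual", d) for d in datasets])

def contains(dataset, space):
    """Return 1 if the data set contains the space under the 2 by 2 design, else 0."""
    if space == "common":
        return 1
    kind, label = space
    if kind == "system":
        return int(dataset[0] == label)
    if kind == "technology":
        return int(dataset[1] == label)
    return int(dataset == label)

delta = np.array([[contains(d, s) for s in spaces] for d in datasets])
m = delta.sum(axis=0)  # number of data sets sharing each space

# Restriction (i): every space appears in at least one data set.
every_space_used = bool((m >= 1).all())
# Restriction (ii): no two spaces have identical indicator columns.
columns_distinct = len({tuple(col) for col in delta.T}) == delta.shape[1]
# The spaces are listed so that the counts are non-increasing.
ordered = bool((np.diff(m) <= 0).all())
```

With this ordering the counts are 4 for the common space, 2 for each partially shared space, and 1 for each individual space, which matches the extraction order used in the two-way case.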

We assume that all random scores have zero means and $\mathrm{Cov}(u_{ijk}) = \Sigma_{ij}$ with positive diagonal elements in the covariance. In particular, for each $j$, there exists a pre-determined $i_{j}$ such that $\Sigma_{i_{j} j}$ is a diagonal matrix, that is, the corresponding random vector $u_{i_{j} j k}$ has uncorrelated elements. We also assume that all random terms are uncorrelated from each other and across $i$ and $k$. For model identifiability, we impose that $V_{1}, \ldots, V_{J}$ are mutually orthogonal. Let $R_{i} = \sum_{j=1}^{J} \delta_{ij} r_{j}$, the total dimension of signal subspaces in data set $i$.

We now extend the model estimation proposed in the previous subsection. The first step remains the same, and we denote by $\hat{U}_{i}$ the matrices of estimated eigenvectors. Consider the second step, where the critical issue is the order of components to be extracted. The key idea is that spaces shared by more data sets should be extracted before those shared by fewer data sets. For each $j$, denote by  the index set for which if  , then  and for at least one  the inequality is strict. Then let  be the smallest element in  . If  is empty then let  be 0. Obviously,  for all $j$. Suppose we have estimated the first $j - 1$ spaces of shared components and have projected $\hat{U}_{i}$ onto the orthogonal space of the previously extracted spaces. For simplicity, still denote those spaces by $\hat{U}_{i}$. To estimate the $j$th space, compute $\sum_{i: \delta_{ij} = 1} \hat{U}_{i} \hat{U}_{i}^{\top}$ and its eigendecomposition. Let $\hat{V}_{j}$ consist of the resulting eigenvectors with associated eigenvalues larger than  . Then $\mathrm{span}(\hat{V}_{j})$ is our estimate of $\mathrm{span}(V_{j})$.

The above estimation procedure is unique if $m_{1}, \ldots, m_{J}$ are all distinct. When there exist ties, the order of estimation can be determined similarly as in the two-way design. Note that our theoretical derivation remains valid no matter what order of estimation is taken within the ties.

After obtaining the spaces of components, to further analyze or visualize the data, we project the data sets onto the estimated subspaces to obtain scores; see, for example, our real data analysis.
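Obtaining scores amounts to multiplying the data by the transpose of an orthonormal basis of the estimated subspace. The sketch below shows this on noise-free toy data; the function name `project_scores` is hypothetical, and whether to center the data before projecting is left to the analyst and is not specified here.

```python
import numpy as np

def project_scores(X, V_hat):
    """Scores of the samples in X (p x n) on an orthonormal basis V_hat (p x r)."""
    return V_hat.T @ X  # r x n matrix, one column of scores per sample

# Toy example: data generated exactly inside a 2-dimensional subspace.
rng = np.random.default_rng(4)
V_hat, _ = np.linalg.qr(rng.standard_normal((20, 2)))  # stand-in for an estimated basis
true_scores = rng.standard_normal((2, 50))
X = V_hat @ true_scores
scores = project_scores(X, V_hat)
```

Because the basis has orthonormal columns, the projection recovers the generating scores exactly in this noise-free example; with real data the scores are specific to the chosen basis of the subspace.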

3. Theoretical properties

We establish the consistency of the proposed estimation method for the general design in model (2.2). Specifically, we prove the consistency of $\mathrm{span}(\hat{V}_{j})$ for estimating the signal space $\mathrm{span}(V_{j})$, for each $j$, which implies the consistency of dimension estimation for each signal subspace as well.

We use the following notation. For a square matrix $A$, denote by $\mathrm{tr}(A)$ the sum of diagonals in $A$ and $\|A\|$ the operator norm of $A$. Let $r_e(A) = \mathrm{tr}(A)/\|A\|$, the effective rank of $A$.
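As a small numerical illustration of the effective rank, the sketch below assumes the usual definition (trace divided by operator norm, as in Bunea and Xiao, 2015). The function name `effective_rank` is hypothetical.

```python
import numpy as np

def effective_rank(A):
    """Effective rank of a square matrix: trace divided by operator norm.

    Intended for covariance-type (symmetric, nonnegative definite) matrices.
    """
    A = np.asarray(A, dtype=float)
    if A.ndim != 2 or A.shape[0] != A.shape[1]:
        raise ValueError("A must be a square matrix")
    op_norm = np.linalg.norm(A, ord=2)   # largest singular value
    if op_norm == 0.0:
        raise ValueError("effective rank is undefined for the zero matrix")
    return np.trace(A) / op_norm

flat = effective_rank(np.eye(5))                     # all eigenvalues equal
spiked = effective_rank(np.diag([10.0, 1.0, 1.0]))   # one dominant eigenvalue
```

A matrix with equal eigenvalues has effective rank equal to its dimension, while a matrix dominated by one eigenvalue has effective rank close to 1.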

Consider the following assumptions.

Assumption 3.1

is a constant.

Assumption 3.2

The minimal nonzero eigenvalue of  , denoted by  , satisfies  , for a positive constant  and  in Assumption 3.1, for all  and  .

Assumption 3.3

Assume that for each signal subspace  , the random scores  and the noise vector  and that the random scores and noises are independent from each other and across subjects.

Assumption 3.4

Let  for  . Assume that these covariance matrices have bounded effective ranks, that is,  for all  and some fixed positive constant  .

Assumptions 3.1 and 3.2 are needed for the consistency of the BEMA method (Ke and others, 2021) for estimating the dimension of the signal space for each data matrix in the first step of model estimation. Assumption 3.2 means the signals can be separated from the noises. Assumptions 3.3 and 3.4 are needed to establish the consistency of the sample covariance matrix estimators (Bunea and Xiao, 2015). Assumption 3.4 means the signals have to be sufficiently strong so that they can be consistently estimated. When Assumption 3.4 is invalid, such as in the instance of a high-dimensional spiked covariance matrix, additional sparsity assumptions are needed and then the sample covariances have to be replaced by sparsity-inducing estimators in our estimation method; see the Discussion section for more details.

Let $\hat{V}, V \in \mathbb{R}^{p \times d}$ both have orthonormal columns; then the vector of $d$ principal angles between their column spaces is given by $(\cos^{-1}\sigma_1, \ldots, \cos^{-1}\sigma_d)^{\top}$, where $\sigma_1 \ge \cdots \ge \sigma_d$ are the singular values of $\hat{V}^{\top} V$. Let $\Theta(\hat{V}, V)$ denote the $d \times d$ diagonal matrix whose $j$th diagonal entry is the $j$th principal angle, and let $\sin\Theta(\hat{V}, V)$ be defined entry-wise. Then, for two signal subspaces  and  , define convergence in space as

Theorem 3.1

Under Model (2.2), if Assumptions 3.1–3.4 hold, then

The proof of Theorem 3.1 is provided in Section S.3 of the Supplementary material available at Biostatistics online. Note that this theorem also implies the consistency of the estimated dimension of each signal subspace.
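The principal angles used to define convergence in space can be computed from the singular values of the product of two orthonormal bases. The sketch below is illustrative only; the function name `principal_angles` and the toy subspaces are assumptions.

```python
import numpy as np

def principal_angles(U, V):
    """Principal angles (radians) between the column spaces of U and V.

    Both inputs are assumed to have orthonormal columns and the same shape.
    The angles are the arccosines of the singular values of U^T V.
    """
    U = np.asarray(U, dtype=float)
    V = np.asarray(V, dtype=float)
    if U.shape != V.shape:
        raise ValueError("U and V must have the same shape")
    sigma = np.linalg.svd(U.T @ V, compute_uv=False)
    sigma = np.clip(sigma, 0.0, 1.0)   # guard against rounding slightly above 1
    return np.arccos(sigma)

# Toy example in R^3: the two planes share one direction (e1) and differ in the other.
U = np.eye(3)[:, [0, 1]]   # span{e1, e2}
V = np.eye(3)[:, [0, 2]]   # span{e1, e3}
angles = principal_angles(U, V)
sin_theta_norm = np.linalg.norm(np.sin(angles))   # Frobenius norm of sin(Theta)
```

Here one angle is zero (the shared direction) and the other is a right angle, so the norm of the sine matrix equals 1; it shrinks to zero as the two subspaces coincide.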

4. Simulation studies

4.1. Settings

Consider the two-way model (2.1) for which the spaces of common components, partially shared components, as well as individual components are all of dimension 2. We sample the orthonormal matrices ( ) from a Stiefel manifold and generate the random scores and noises from normal distributions. For the diagonal covariance matrices ( ), we sample the diagonals uniformly from the interval  and then multiply them by the dimension  . To ensure the desired signal-to-noise ratio (SNR) as defined below, the diagonals are multiplied by a scale parameter. For the other covariance matrices, we randomly rotate  to  with a rotation angle  . Similarly, we randomly rotate  and  to  and  respectively with a rotation angle  . We generate noises  for each data set from  with  .
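One common way to draw a matrix with orthonormal columns uniformly from a Stiefel manifold is a QR decomposition of a Gaussian matrix with a sign correction. The sketch below shows that technique; it is not necessarily how the simulations were implemented, and the function name `sample_stiefel` and the dimensions are assumptions.

```python
import numpy as np

def sample_stiefel(p, r, rng):
    """Draw a p x r matrix with orthonormal columns, uniformly on the Stiefel manifold.

    Uses the QR decomposition of a standard Gaussian matrix; flipping column
    signs so that diag(R) is positive makes the draw uniform (Haar).
    """
    if r > p:
        raise ValueError("r must not exceed p")
    Z = rng.standard_normal((p, r))
    Q, R = np.linalg.qr(Z)            # reduced QR: Q is p x r, R is r x r
    signs = np.sign(np.diag(R))
    signs[signs == 0] = 1.0
    return Q * signs                  # multiplies column j of Q by signs[j]

rng = np.random.default_rng(2021)
Q = sample_stiefel(200, 6, rng)       # hypothetical: 6 orthonormal directions in dimension 200
V_common, V_partial, V_indiv = Q[:, :2], Q[:, 2:4], Q[:, 4:]   # three mutually orthogonal 2-dim blocks
```

Splitting one draw into blocks, as in the last line, is a convenient way to obtain mutually orthogonal subspaces of dimension 2 each.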

We set the sample sizes  for all data sets and consider two cases. Case 1 is a high dimensional setting with  and case 2 is a low dimensional setting with  . Finally, we define the SNR for the  th data set as  We set the same SNR for all data sets and use three different values of SNR,  . Therefore, there are a total of 6 model conditions. Under each model condition, we conduct 1000 simulations in a cluster computing environment.

We compare 2s-LCA with several existing methods. First, we fix the dimensions of all subspaces at their true values, that is, 2, and compare 2s-LCA with JIVE (Lock and others, 2013), AJIVE (Feng and others, 2018), SLIDE (Gaynanova and Li, 2019), BIDIFAC (Park and Lock, 2020), and BIDIFAC+ (Lock and others, 2020) for subspace estimation. Second, we repeat this comparison with data generated such that the variances of scores associated with individual subspaces are much larger than those for common and partially shared subspaces. Third and most importantly, we compare SLIDE, BIDIFAC+, and 2s-LCA without pre-fixing dimensions of the subspaces.

To evaluate the performance of the methods, we use a metric called space alignment (SA) to measure the alignment of two spaces. For instance, if  is the estimated common subspace and  is the population common subspace, then the SA between them is

Here  denotes the nuclear norm, which is invariant to matrix rotation. Note that SA always lies in  , with  indicating that the two spaces are identical and  indicating that the two spaces are orthogonal to each other. We also compare the computational times of the proposed method and a few existing methods.
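To make the idea of a nuclear-norm alignment measure concrete, the sketch below computes one plausible version. The normalization by the larger of the two subspace dimensions is an assumption of this example, as is the function name `space_alignment`; the article's exact formula should be consulted before relying on it.

```python
import numpy as np

def space_alignment(U_hat, U):
    """Nuclear-norm alignment between two subspaces given by orthonormal bases.

    ASSUMPTION: the nuclear norm of U_hat^T U is divided by the larger of the
    two subspace dimensions, so that the value lies between 0 and 1. This
    normalization is chosen for illustration only.
    """
    U_hat = np.asarray(U_hat, dtype=float)
    U = np.asarray(U, dtype=float)
    r = max(U_hat.shape[1], U.shape[1])
    return np.linalg.norm(U_hat.T @ U, ord="nuc") / r

U = np.eye(4)[:, :2]            # span{e1, e2}
W = np.eye(4)[:, 2:]            # span{e3, e4}, orthogonal to U
theta = 0.7
R = np.array([[np.cos(theta), -np.sin(theta)],
              [np.sin(theta),  np.cos(theta)]])
sa_same = space_alignment(U @ R, U)   # same subspace, rotated basis
sa_orth = space_alignment(W, U)       # orthogonal subspaces
```

Because the nuclear norm is unchanged by rotating either basis, the rotated basis `U @ R` is scored as perfectly aligned with `U`, while the orthogonal subspace `W` receives the lowest possible score.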

4.2. Simulation results

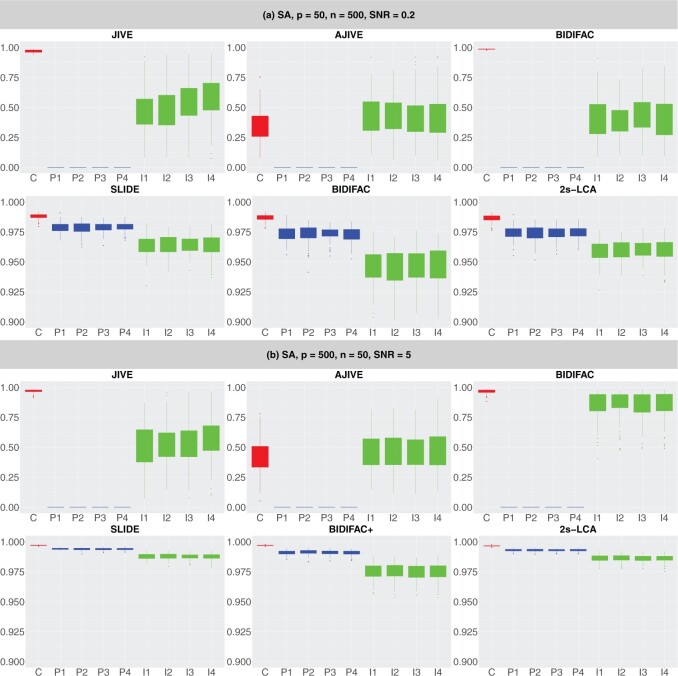

We first summarize simulation results when the dimensions of the subspaces are fixed at their true values; see Figure 1 for a summary, with further details provided in Section S.4.1 of the Supplementary material available at Biostatistics online. Because JIVE, AJIVE, and BIDIFAC do not consider partially shared components, they do not perform well in this setting. In contrast, SLIDE, BIDIFAC+, and 2s-LCA all perform well and have comparable values of SA across all model conditions.

Fig. 1.

Comparisons between JIVE, AJIVE, BIDIFAC, SLIDE, BIDIFAC+, and 2s-LCA with known dimensions of spaces. (a) and (b) are both under the low-dimensional setting with  ; (c) and (d) are under high dimensional setting with  . For each setting,  simulations are run.

However, when the variances of individual components are much larger than those of the common and partially shared components, both SLIDE and BIDIFAC+ can have difficulty recovering common and partially shared spaces. In contrast, 2s-LCA is capable of accurately estimating these spaces (Section S.4.2 of the Supplementary material available at Biostatistics online). A likely explanation is that both SLIDE and BIDIFAC+ focus on recovering the overall signal space in the data, in this case dominated by the individual components, while 2s-LCA prioritizes and first estimates common and partially shared components.

Now we compare SLIDE, BIDIFAC+, and 2s-LCA for model estimation without knowing the dimension of each space. As discussed in the Introduction section, SLIDE does not allow direct specification of types of spaces and hence might be slightly disadvantaged compared with 2s-LCA and BIDIFAC+, as the latter two methods accommodate the specification of types of subspaces. Figure 2 summarizes the performance of the methods under two model conditions. First, BIDIFAC+ tends to overestimate the dimensions of common and partially shared spaces, which negatively impacts SA. Second, SLIDE underestimates the dimensions of these spaces in low dimensional settings with small SNRs. Under both model conditions, 2s-LCA yields accurate estimates of the dimensions of the subspaces and high values of SA. Similar numerical results were found for the other four model conditions (Section S.4.3 of the Supplementary material available at Biostatistics online). In particular, 2s-LCA estimates the dimensions of spaces accurately in most settings, except the high dimensional setting with a low SNR (Figure S.16 of the Supplementary material available at Biostatistics online). However, it still has overall higher SA values than SLIDE and BIDIFAC+.

Fig. 2.

Comparisons between SLIDE, BIDIFAC+ and 2s-LCA. (a) and (b) are both under the low-dimensional setting with  ; (c) and (d) are under high dimensional setting with  . For each setting,  simulations are run.

Lastly, we simulate the data as above except that the data dimensions are set to match those of the motivating data. We assume that the dimensions of spaces are known and fixed and run SLIDE, BIDIFAC+ and 2s-LCA. The proposed 2s-LCA takes about 32 s (s.d. 16 s) on average for one run while SLIDE takes about 6.5 h (s.d. 3.0 h) on average to reach convergence. We were unable to obtain a convergent result for even a single run after running the BIDIFAC+ code for 24 h. We believe that the computational time of SLIDE can potentially be reduced by utilizing the inherent low rank property of the estimated signal matrix. As for BIDIFAC+, we believe its computational difficulties arise because it relies on eigendecompositions, which are difficult for high-dimensional matrices. Consequently, BIDIFAC+ has a large RAM requirement, which may slow it down substantially if disk swapping becomes required. Finally, the iterative nature of both SLIDE and BIDIFAC+ may increase their computational time substantially.

5. Experimental data analysis

5.1. Analysis of four data sets

We apply 2s-LCA to the four brain tissue data sets focused on brain development. As described in Section 1, the data sets were collected using two technologies, bulk and single cell RNA sequencing, and under two cellular environments, in vitro and in vivo. Thus, this exhibits a two-way design as in model (2.1), which includes one subspace of common components, four subspaces of partially shared components, and four subspaces of individual components. As 2D visualizations of the data are of interest, a natural choice of the number of components for each subspace is 2.

For 2s-LCA, normalization within each data set is needed to mitigate technical effects. For each data set, we center the expression values of each gene, and then center and scale the expression levels of each sample by its standard deviation across genes. For the second stage of 2s-LCA, we first estimate common components and then the partially shared components sequentially in the following order:  ,  , single cell, and bulk.
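The within-data-set normalization described above can be sketched as follows. The genes-by-samples orientation, the use of the sample standard deviation (`ddof=1`), and the function name `normalize_expression` are assumptions of this example rather than details taken from the SJD package.

```python
import numpy as np

def normalize_expression(X):
    """Normalize one expression matrix X (genes x samples).

    Step 1: center each gene (row) across samples.
    Step 2: center each sample (column) across genes, then divide it by its
            standard deviation across genes.
    """
    X = np.asarray(X, dtype=float)
    X = X - X.mean(axis=1, keepdims=True)        # gene-wise centering
    X = X - X.mean(axis=0, keepdims=True)        # sample-wise centering
    sd = X.std(axis=0, ddof=1, keepdims=True)    # per-sample spread across genes
    if np.any(sd == 0):
        raise ValueError("a sample has zero variance across genes")
    return X / sd

rng = np.random.default_rng(3)
raw = rng.lognormal(size=(30, 8))                # toy data: 30 genes, 8 samples
Z = normalize_expression(raw)
```

After this step every sample has mean zero and unit standard deviation across genes, which puts samples from different technologies on a comparable scale.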

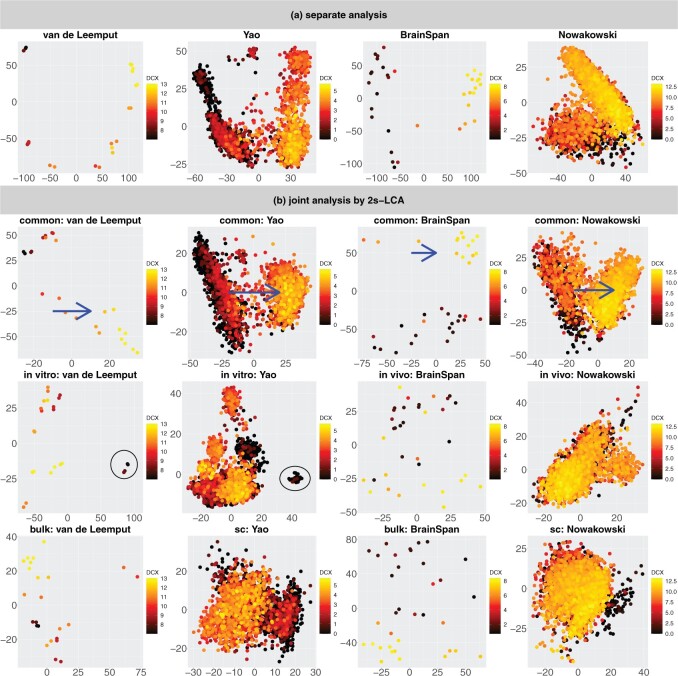

Figure 3(a) shows the projection of every data set onto its top two eigenvectors, that is, a separate analysis. We then apply 2s-LCA to jointly analyze the four data sets and obtain common, partially shared, and individual subspaces, each of which is of dimension 2. Then, we project every data set onto these subspaces to investigate biological processes associated with the scores; see Figure 3(b) for plots of the scores for common and partially shared subspaces. Note that the axes in each plot have different ranges and, to facilitate interpretation, we have rotated the scores associated with the common subspace (top panel of Figure 3(b)) simultaneously for all four data sets.

Fig. 3.

(a) Scatterplots of scores corresponding to the top two principal components of each data set by separate PCA and (b) Scatterplots of scores of each data set corresponding to common components (top panel), partially shared components associated with environments (middle panel: in vitro on the left two plots and in vivo on the right two plots), and partially shared components associated with technologies (bottom panel: bulk on the left two plots and single cell on the right two plots) by the proposed 2s-LCA. The 4 columns in both parts correspond to the data sets: (1) van de Leemput: in vitro + bulk; (2) Yao: in vitro + single cell; (3) BrainSpan: in vivo + bulk; and (4) Nowakowski: in vivo + single cell. Each point corresponds to either one tissue sample or one cell and is colored by the  transformed expression level of the DCX gene. Blue arrows indicate alignment of the first common component ( -axis in top row of panels in (b)) with DCX expression (neurogenesis) in all four data sets. Black circles indicate pluripotent stem cells, which are present only in the in vitro data sets.

To evaluate and interpret results, we shall use the DCX gene, which is turned on as neural progenitors transition to being neurons. According to Liu (2011), “The DCX gene provides instructions for producing a protein called doublecortin. This protein is involved in the movement of nerve cells (neurons) to their proper locations in the developing brain, a process called neuronal migration.” The  transformed expression level of the DCX gene is used to color the cells, with the dark color indicating low expression level corresponding to neural progenitors and the yellow color indicating high expression level corresponding to neurons.

We first focus on the comparison of the separate components with the common components; see Figure 3(a) and the top row of panels in Figure 3(b). For the separate analysis, the plots show that cells with different expression levels of the DCX gene are clustered and separated; however, the extent to which the scores and gene loadings align across the data sets is unclear. In contrast to the separate analysis, 2s-LCA produces jointly derived components, allowing direct assessment of how effects across the different data sets align. The plots for common components show that cells with similar expression levels in the DCX gene tend to cluster together, which means that the local structure of the cells is preserved. In addition, by projecting the data sets onto the same subspace shared among them, a similar pattern can be observed among data sets, indicating shared global structure across the data sets. For example, the  -axis along which DCX expression increases during fetal development as neural progenitors become neurons (blue arrows) can be seen precisely aligned across the common components in each of the four data sets. Moreover, a visual comparison of the separate components with those of the proposed joint method suggests that some of the order defined in the separate components is preserved in the joint analysis (in the sense of the overall shape of the distribution of cells and clustering of cells with similar expression levels), consistent with the knowledge that these four diverse experiments capture common molecular elements of neurogenesis. In addition to the visual comparison, we also compute the ratio of the variances explained by the common components versus those explained by the separate analysis for each data set. The ratios are: (i) van de Leemput: 0.195; (ii) Yao: 0.524; (iii) BrainSpan: 0.382; and (iv) Nowakowski: 0.538. The values indicate strong presence of common spaces shared by all data sets.

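One way such a variance ratio could be computed is sketched below: the variance captured by a given two-dimensional common subspace is divided by the variance captured by the top two principal components of the same data set. The samples-by-genes orientation, the function name `variance_ratio`, and the toy data are assumptions; the article does not spell out the computation.

```python
import numpy as np

def variance_ratio(X, V_common):
    """Variance explained by V_common relative to the top principal components.

    X is samples x genes; V_common is genes x r with orthonormal columns.
    The denominator uses the top r principal directions of the centered data,
    so the ratio cannot exceed 1.
    """
    X = np.asarray(X, dtype=float)
    Xc = X - X.mean(axis=0, keepdims=True)            # center each gene
    r = V_common.shape[1]
    _, _, Vt = np.linalg.svd(Xc, full_matrices=False)
    V_separate = Vt[:r].T                             # top r principal directions
    var_common = np.sum((Xc @ V_common) ** 2)
    var_separate = np.sum((Xc @ V_separate) ** 2)
    return var_common / var_separate

rng = np.random.default_rng(1)
X = rng.standard_normal((100, 30)) * np.linspace(3.0, 0.5, 30)   # toy data with unequal gene variances
V_common, _ = np.linalg.qr(rng.standard_normal((30, 2)))         # hypothetical common subspace
ratio = variance_ratio(X, V_common)
```

A ratio near 1 means the common subspace captures almost as much variation as the data set's own leading principal components; a ratio near 0 means it captures very little.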

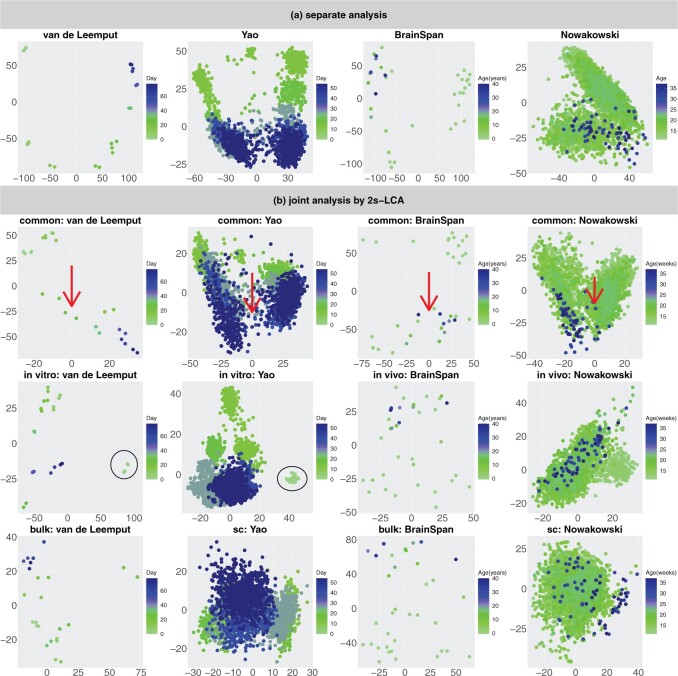

We have also evaluated the common components using the time of the samples: days of neural differentiation for the in vitro data sets and age (years) for the in vivo data sets; see Figure 4, which is a recoloring of Figure 3. Interestingly, the  -axis (red arrows) appears to be a common temporal dimension orthogonal to the DCX expression component, which is along the  -axis in Figure 3. As developmental time progresses, it appears that the cellular identities of the neural precursors and their post-mitotic neuronal progeny become less distinct, that is, the clusters of low and high DCX expressing cells begin to merge. The cellular basis underlying this dimension is not known and might be of significant biological interest to explore further. In the top 50 gene specific loadings for the first common component, we identify, in addition to the DCX gene, many markers of nascent neurons, for example, MYT1L and NRXN1, synaptic components, for example, PSD2 and SYT1, and the channels SCN2A, SCN3A and SCN3B. Many of these identified genes have been implicated in human neurodevelopmental disorders (see Table S1 of the Supplementary material available at Biostatistics online), which further demonstrates that this component parallels neurogenesis in each of the four data sets.

Fig. 4.

This figure is a recoloring of the data shown in Figure 3, in order to show effects across time. Each point corresponds to either one tissue sample or one cell and is colored by days of neural differentiation for the in vitro data sets and age in years or gestational weeks for the in vivo data sets. Red arrows indicate alignment of the second common component ( -axis in top row of panels in (b)) with developmental time in all four data sets. Black circles indicate pluripotent stem cells, which are present only in the in vitro data sets.

We next evaluate the partially shared components and focus on the components shared only by the two in vitro data sets; see the left two plots on the second row of Figure 3(b). In both plots, those few cells circled in blue are pluripotent cells, which are present only in the in vitro data sets as they would have disappeared prior to the developmental time points measured in the in vivo system. This result demonstrates that the proposed joint decomposition method captures known biological effects unique to the in vitro experimental paradigm and not represented in the in vivo data sets. The top 10 gene specific loadings for the first in vitro component identify pluripotent stem cells in the in vitro systems, as desired. Specifically, we find pluripotency markers: POU5F1, PRDM14, and CDH1, demonstrating the identification of a pluripotency transcriptional program common to the two in vitro data sets.

Finally, we also applied the BIDIFAC+ and SLIDE methods to the data sets. In a shared cluster computing environment, it took SLIDE about 1 day to yield a convergent result. The results from SLIDE are provided in the Supplementary material available at Biostatistics online. The results have similar apparent scientific validity as 2s-LCA. The results for BIDIFAC+ are unavailable, as we could not obtain convergent results in several days, again likely due to the requirements of the eigenvalue decomposition. We believe the computational efficiency of 2s-LCA is a key aspect of the method. For example, for these data, 2s-LCA took only about two minutes.

5.2. A validation study

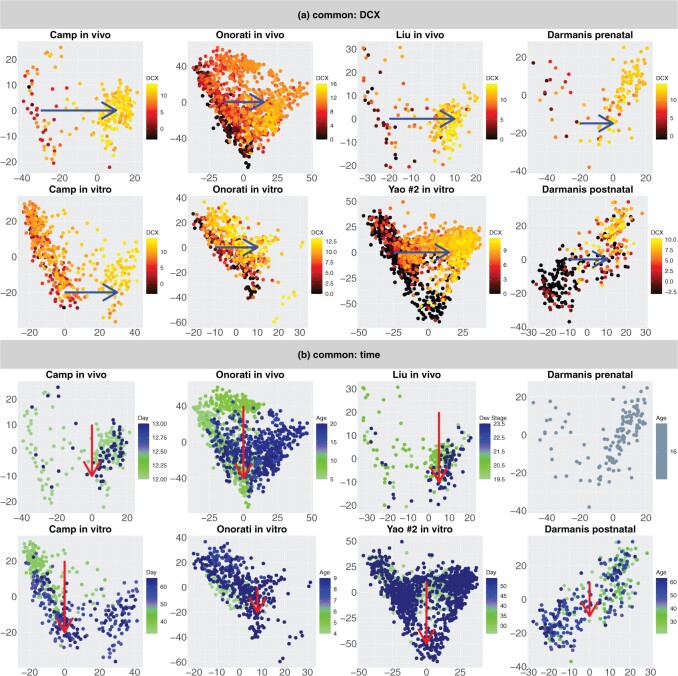

To validate the common components defined by the joint 2s-LCA decomposition (top row of panels in Figures 3(b) and 4(b)), we use eight additional single cell RNA-seq data sets from five studies; see the Supplementary material available at Biostatistics online for details about these studies and the data sets. We project the additional data sets onto the estimated common and in vitro components through the “projectR” package (Sharma and others, 2020). Projection of these data onto the common components clearly demonstrates recapitulation of the biological effect captured in the first component that is aligned with DCX expression and neurogenesis, and to a lesser extent the second that is aligned with time (Figure 5(a) and (b), blue and red arrows, respectively).

Fig. 5.

Biological validation by projecting additional data sets onto common components obtained from 2s-LCA. (a) Projection of eight additional scRNA-seq data sets onto the common components with cells colored by the  transformed expression level of the DCX gene. Blue arrows indicate alignment of the first common component with DCX expression (neurogenesis). (b) Projection of the same data sets onto the common components with cells colored by time: days of neural differentiation for the in vitro data sets and age in years or gestational weeks for the in vivo data sets. Red arrows indicate alignment of the second common component with developmental time. The Darmanis and others (2015) prenatal study did not specify the exact age of the 4 fetal tissue donors used, indicating only 16–18 gestational weeks for all samples.

The in vitro components generated by 2s-LCA (first two panels in the second row of Figures 3(b) and 4(b)) were validated by projecting data from another bulk RNA-seq study of neural differentiation onto these components. This replicated the segregation of pluripotent stem cells with high values for the first component, away from other cells in this study that proceeded through neural progenitor and neuronal states, validating the identification of this cell type specific to the in vitro studies (see Supplementary material available at Biostatistics online, black circles).

6. Discussion

In this article, we proposed 2s-LCA for the joint analysis of multiple data sets that are independent but have shared underlying structure resulting from common biological processes and/or shared measurement technologies. The proposed method extracts signal spaces that can be characterized as common, partially shared or individual, which enhances the understanding of the underlying biology between the data sets. Our experimental data results indicate that the 2s-LCA joint decomposition can be a useful tool to define shared molecular dynamics across biologically related data sets, while avoiding unwanted artifacts.

The proposed method remains valid for high dimensional data, as long as the sample covariance matrices are consistent. For the four experimental data sets, we found that the trace and top eigenvalue of the sample covariance matrix for each data set are comparable. Hence, it is not unreasonable to assume that the population covariance matrices are of reduced effective ranks, for which the sample covariance matrices are consistent.

The proposed method can be easily extended to high dimensional data sets, where sparsity is necessary or desired. In such cases, the sample covariance matrix estimator used in the proposed method can be replaced by any consistent covariance estimator (e.g., Bickel and Levina, 2008; Bien and others, 2016). Then the consistency of the proposed 2s-LCA can still be established.

The proposed 2s-LCA depends on a general, but fixed, design. It might also be of interest to extend 2s-LCA to situations without a fixed design or where the design is only partially fixed. There the existence and estimation of subspaces would have to be empirically determined.

7. Software

The code to conduct 2s-LCA can be found in the SJD package (https://github.com/CHuanSite/SJD). The four experimental data sets in this article can be explored at the individual gene level through the NeMO Analytics portal at https://nemoanalytics.org/p?l=ChenEtAl2021&g=DCX.

Supplementary Material

Acknowledgments

We gratefully acknowledge the comments and suggestions made by the Associate Editor and two referees that led to a much improved article.

Conflict of Interest: None declared.

Contributor Information

Huan Chen, Department of Biostatistics, Johns Hopkins Bloomberg School of Public Health, Baltimore, MD, 21205, USA.

Brian Caffo, Department of Biostatistics, Johns Hopkins Bloomberg School of Public Health, Baltimore, MD, 21205, USA.

Genevieve Stein-O’Brien, Department of Neuroscience, Johns Hopkins University, Baltimore, MD, 21205, USA.

Jinrui Liu, Department of Neurology, Johns Hopkins University, Baltimore, MD, 21287, USA.

Ben Langmead, Department of Computer Science, Johns Hopkins University, Baltimore, MD, 21218, USA.

Carlo Colantuoni, Department of Neuroscience, Johns Hopkins University, Baltimore, MD, 21205, USA, Department of Neurology, Johns Hopkins University, Baltimore, MD, 21287, USA and Institute for Genome Sciences, University of Maryland School of Medicine, Baltimore, MD, 21201, USA.

Luo Xiao, Department of Statistics, North Carolina State University, Raleigh, North Carolina, 27607, USA.

Supplementary material

Supplementary material is available online at http://biostatistics.oxfordjournals.org.

Funding

National Institutes of Health (NIH) (R01 NS112303, R56 AG064803, and R01 AG064803 to L.X., in part); National Institute of Biomedical Imaging and Bioengineering (NIBIB) (R01 EB029977 and P41 EB031771 to B.C.); National Institutes of Health (NIH) (K99 NS122085 to G.S.) from BRAIN Initiative in partnership with the National Institute of Neurological Disorders; Kavli NDS Distinguished Postdoctoral Fellowship and Johns Hopkins Provost Postdoctoral Fellowship (G.S.); Johns Hopkins University Discovery Award 2019 (C.C., B.C., and B.L.). Data sharing and visualization via NeMO Analytics was supported by grants R24MH114815 and R01DC019370.

References

- Argelaguet, R., Velten, B., Arnol, D., Dietrich, S., Zenz, T., Marioni, J. C., Buettner, F., Huber, W. and Stegle, O. (2018). Multi-omics factor analysis—a framework for unsupervised integration of multi-omics data sets. Molecular Systems Biology 14, e8124. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bickel, P. J. and Levina, E. (2008). Regularized estimation of large covariance matrices. The Annals of Statistics 36, 199–227.

- Bien, J., Bunea, F. and Xiao, L. (2016). Convex banding of the covariance matrix. Journal of the American Statistical Association 111, 834–845.

- BrainSpan. (2011). BrainSpan: Atlas of the Developing Human Brain.

- Bunea, F. and Xiao, L. (2015). On the sample covariance matrix estimator of reduced effective rank population matrices, with applications to fPCA. Bernoulli 21, 1200–1230.

- Crainiceanu, C. M., Caffo, B. S., Luo, S., Zipunnikov, V. M. and Punjabi, N. M. (2011). Population value decomposition, a framework for the analysis of image populations. Journal of the American Statistical Association 106, 775–790.

- Darmanis, S., Sloan, S. A., Zhang, Y., Enge, M., Caneda, C., Shuer, L. M., Gephart, M. G. H., Barres, B. A. and Quake, S. R. (2015). A survey of human brain transcriptome diversity at the single cell level. Proceedings of the National Academy of Sciences of the United States of America 112, 7285–7290.

- Feng, Q., Jiang, M., Hannig, J. and Marron, J. S. (2018). Angle-based joint and individual variation explained. Journal of Multivariate Analysis 166, 241–265.

- Flury, B. K. (1987). Two generalizations of the common principal component model. Biometrika 74, 59–69.

- Flury, B. N. (1984). Common principal components in k groups. Journal of the American Statistical Association 79, 892–898.

- Gao, L. L., Bien, J. and Witten, D. (2020). Are clusterings of multiple data views independent? Biostatistics 21, 692–708.

- Gaynanova, I. and Li, G. (2019). Structural learning and integrative decomposition of multi-view data. Biometrics 75, 1121–1132.

- Kallus, J., Johansson, P., Nelander, S. and Jörnsten, R. (2019). MM-PCA: integrative analysis of multi-group and multi-view data. arXiv preprint arXiv:1911.04927.

- Ke, Z. T., Ma, Y. and Lin, X. (2021). Estimation of the number of spiked eigenvalues in a covariance matrix by bulk eigenvalue matching analysis. Journal of the American Statistical Association 1–19.

- Li, G., Gaynanova, I. and others. (2018). A general framework for association analysis of heterogeneous data. The Annals of Applied Statistics 12, 1700–1726.

- Li, G. and Jung, S. (2017). Incorporating covariates into integrated factor analysis of multi-view data. Biometrics 73, 1433–1442.

- Li, G., Liu, X. and Chen, K. (2019). Integrative multi-view regression: bridging group-sparse and low-rank models. Biometrics 75, 593–602.

- Liu, J. S. (2011). Molecular genetics of neuronal migration disorders. Current Neurology and Neuroscience Reports 11, 171–178.

- Lock, E. F., Hoadley, K. A., Marron, J. S. and Nobel, A. B. (2013). Joint and individual variation explained (JIVE) for integrated analysis of multiple data types. The Annals of Applied Statistics 7, 523.

- Lock, E. F., Park, J. Y. and Hoadley, K. A. (2020). Bidimensional linked matrix factorization for pan-omics pan-cancer analysis. The Annals of Applied Statistics.

- Min, E. J. and Long, Q. (2020). Sparse multiple co-inertia analysis with application to integrative analysis of multi-omics data. BMC Bioinformatics 21, 1–12.

- Nathoo, F. S., Kong, L., Zhu, H. and Alzheimer’s Disease Neuroimaging Initiative. (2019). A review of statistical methods in imaging genetics. Canadian Journal of Statistics 47, 108–131.

- Nowakowski, T. J., Bhaduri, A., Pollen, A. A., Alvarado, B., Mostajo-Radji, M. A., Di Lullo, E., Haeussler, M., Sandoval-Espinosa, C., Liu, S. J., Velmeshev, D. and others. (2017). Spatiotemporal gene expression trajectories reveal developmental hierarchies of the human cortex. Science 358, 1318–1323.

- Orvis, J., Gottfried, B., Kancherla, J., Adkins, R. S., Song, Y., Dror, A. A., Olley, D., Rose, K., Chrysostomou, E., Kelly, M. C. and others. (2021). gEAR: Gene Expression Analysis Resource portal for community-driven, multi-omic data exploration. Nature Methods 18, 843–844.

- Park, J. Y. and Lock, E. F. (2020). Integrative factorization of bidimensionally linked matrices. Biometrics 76, 61–74.

- Richardson, S., Tseng, G. C. and Sun, W. (2016). Statistical methods in integrative genomics. Annual Review of Statistics and its Application 3, 181–209.

- Sharma, G., Colantuoni, C., Goff, L. A., Fertig, E. J. and Stein-O’Brien, G. (2020). projectR: an R/Bioconductor package for transfer learning via PCA, NMF, correlation and clustering. Bioinformatics 36, 3592–3593.

- Stein-O’Brien, G. L., Clark, B. S., Sherman, T., Zibetti, C., Hu, Q., Sealfon, R., Liu, S., Qian, J., Colantuoni, C., Blackshaw, S. and others. (2019). Decomposing cell identity for transfer learning across cellular measurements, platforms, tissues, and species. Cell Systems 8, 395–411.

- van de Leemput, J., Boles, N. C., Kiehl, T. R., Corneo, B., Lederman, P., Menon, V., Lee, C., Martinez, R. A., Levi, B. P., Thompson, C. L. and others. (2014). Cortecon: a temporal transcriptome analysis of in vitro human cerebral cortex development from human embryonic stem cells. Neuron 83, 51–68.

- Wang, B., Luo, X., Zhao, Y. and Caffo, B. (2020). Semiparametric partial common principal component analysis for covariance matrices. Biometrics.

- Wang, M. and Allen, G. I. (2021). Integrative generalized convex clustering optimization and feature selection for mixed multi-view data. Journal of Machine Learning Research 22, 1–73.

- Yang, Z. and Michailidis, G. (2016). A non-negative matrix factorization method for detecting modules in heterogeneous omics multi-modal data. Bioinformatics 32, 1–8.

- Yao, Z., Mich, J. K., Ku, S., Menon, V., Krostag, A.-R., Martinez, R. A., Furchtgott, L., Mulholland, H., Bort, S., Fuqua, M. A. and others. (2017). A single-cell roadmap of lineage bifurcation in human ESC models of embryonic brain development. Cell Stem Cell 20, 120–134.
