Abstract

Although few performance evaluation instruments have been used conventionally in different machine learning-based classification problem domains, there are numerous ones defined in the literature. This study reviews and describes performance instruments via formally defined novel concepts and clarifies the terminology. The study first highlights the issues in performance evaluation via a survey of 78 mobile-malware classification studies and reviews terminology. Based on three research questions, it proposes novel concepts to identify characteristics, similarities, and differences of instruments that are categorized into ‘performance measures’ and ‘performance metrics’ in the classification context for the first time. The concepts reflecting the intrinsic properties of instruments such as canonical form, geometry, duality, complementation, dependency, and leveling, aim to reveal similarities and differences of numerous instruments, such as redundancy and ground-truth versus prediction focuses. As an application of knowledge representation, we introduced a new exploratory table called PToPI (Periodic Table of Performance Instruments) for 29 measures and 28 metrics (69 instruments including variant and parametric ones). Visualizing proposed concepts, PToPI provides a new relational structure for the instruments including graphical, probabilistic, and entropic ones to see their properties and dependencies all in one place. Applications of the exploratory table in six examples from different domains in the literature have shown that PToPI aids overall instrument analysis and selection of the proper performance metrics according to the specific requirements of a classification problem. We expect that the proposed concepts and PToPI will help researchers comprehend and use the instruments and follow a systematic approach to classification performance evaluation and publication.

Keywords: Classification, Knowledge representation, Machine learning, Performance evaluation, Performance measures, Performance metrics, Periodic table

Introduction

Numerous binary-classification performance evaluation instruments have been developed and used independently for different requirements of classification problems in various domains for decades. The choice of an instrument for classification performance evaluation (i.e. measurement of closeness between a classifier’s prediction and ground truth) generally relies on domain knowledge and previous related studies. Hence, although these instruments might be frequently used in their specific domain, they could be unknown to the researchers working in the other domains. This paper aims to fill an elemental gap in the literature by reviewing 57 performance instruments compiled from different domains in-depth, and proposes a methodology to reveal their intrinsic properties, similarities, and differences. To our knowledge, this is the first time that such an ultimate set of classification performance instruments is addressed in the literature.

Performance instruments originated, and the related terms and notations appeared in different domains including statistics (i.e. 2 × 2 contingency table, binary similarity/distance measures), biology (i.e. binary association measures), signal processing (e.g., area under the receiver operating characteristic curve), information retrieval (e.g., ‘precision’, ‘recall’), medical diagnosis (e.g., ‘sensitivity’, ‘specificity’), statistical pattern recognition (e.g., ‘accuracy’) and marketing analysis (e.g., ‘lift’) at different times. For example, ‘precision’ and ‘recall’ metrics were proposed by Mooers as a necessity for measuring performance in the information retrieval domain [1]. Then, the terminology was established among different alternatives gradually.

In 1964, a group of researchers discussing the proper terminology for ‘positive predictive value’ (PPV) suggested the use of ‘acceptance rate’ instead of ‘relevance ratio’ to avoid confusion [2]. They also discussed the use of terms under different names in different domains, most of which are still used today. For example, ‘recall ratio’, ‘sensitivity’, ‘hit rate’ for ‘true positive rate’ (TPR), ‘Snobbery ratio’ for ‘false-negative rate’ (FNR), ‘precision ratio’, ‘relevance ratio’, ‘pertinency factor’, ‘acceptance rate’ for PPV, ‘noise factor’ (borrowed from signal processing) for ‘false discovery rate’ (FDR), ‘specificity’ for ‘true negative rate’ (TNR), and ‘fallout ratio’ for ‘false positive rate’ (FPR). Additionally, ‘inverse recall’ and ‘inverse precision’ are used for TNR and ‘negative predictive value’ (NPV), respectively [3]. Based on the inverse relationship (i.e. increasing the classification performance in terms of one metric generally decreases the performance in terms of the other) between recall (TPR) and precision (PPV), some individual metrics have been proposed, for example, F, which is a transformation of F1 metric [4]. It was later expressed as an unnamed coefficient version of F1 by Sokal and Sneath [5]. Note that the earliest record of the F1 metric’s usage is by Jaccard, as he called it ‘coefficient de communauté’ [6].

Classification research problems requiring Machine Learning (ML)-based solutions have increased and varied significantly in recent years. Researchers mostly used the ones they have already known for the problem they have been studying. Otherwise, they either adapted the ones used in similar domains or even implemented new performance evaluation instruments for specific research problems. Besides instrument choice, different approaches in the literature to describe performance evaluation instruments also cause variations in terminology. In the literature, the definitions of some performance instruments are not clear, such as ‘performance metrics’ and ‘performance measures’ are undistinguished.

As a result, each community conventionally adapted the performance instruments according to their domain-specific practices and considerations [7]. Although numerous instruments have been proposed and used, their properties, similarities, and differences have not been thoroughly analyzed in the literature. Several works reviewed in “Semantic/formal definitions and organization of performance evaluation instruments” address the class imbalance effect on well-known instruments, especially accuracy (ACC). Relationships between different instrument types, as well as confusion-matrix-derived instruments (i.e., entropic, graphical-based, and probabilistic instruments) were not studied together. The review studies express performance evaluation instruments with different notations, abbreviations, and symbols [8–10]. The contributions of this research can be summarized as follows:

To the best of our knowledge, this study is the first to review the interchangeable naming of the instruments in general and the alternating terminology of the ones from both semantic and formal perspectives.

The study also identifies three relationships between the instruments, namely duality, complementation, and class counterparts.

Instruments are categorized into metrics and measures by determining whether they can be used to evaluate classification results directly in the performance context (e.g., ACC) or they are related to the non-performance aspects such as ground-truth or prediction class-related measurements (e.g., PREV or IMB for ground-truth and BIAS for prediction). The categorization is described semantically and defined formally.

We also propose different forms by which the instruments' equations are expressed, such as canonical, high-level, equivalent, and base-measure forms.

The axioms about the summarization of the confusion matrix to reflect the performance and the leveling of the instruments per instrument category to observe their dependencies are defined.

A geometry concept is developed to identify ground-truth or prediction dimensions of the instruments.

Finally, a visual exploratory table is proposed to represent all the concepts for the comprehensive set of performance instruments in one place, including the instruments for single-threshold ML models or crisp binary classifiers, as well as graphical-based performance metrics based on varying model thresholds, entropy-based instruments, and the measures based on the probabilistic interpretation of classification error or loss. The table covers 57 instruments along with 12 variant and parametric instruments.

The rest of the paper is structured as follows. The next section presents and discusses the results from the conducted case study, summarizes the related works in the literature, and describes the research questions addressed in this study. The subsequent section categorizes performance instruments, and describes the axioms and formal definitions for the proposed concepts about them. It also presents a new leveling approach by defining the base and higher-level measures/metrics and introducing new measures followed by which the proposed binary-classification performance instrument table PToPI as a knowledge organization tool is introduced. It describes the design methodology, meaning of visual design elements, and its practical applications by examples in the literature. The final section outlines the contribution of this study and summarizes its potential use. Appendix 1 lists abbreviations and alternative names of performance measures and metrics in levels. Appendix 2 presents the instrument equations as a complete reference. Appendix 3 gives a full view of the proposed exploratory table. Appendix 4 describes the selection methodology for Android malware classification studies surveyed. Finally, Appendix 5 lists survey references.

Online Data and Materials

The following data and materials are provided online for researchers:

The detailed data and findings for the case study in “Case study: performance evaluation in android mobile-malware classification” are made online at 10.17632/5c442vbjzg.3 via the Mendeley Data platform.

An online repository is also maintained at https://www.github.com/gurol/ptopi, which includes the proposed exploratory table as a spreadsheet (PToPI.xlsx) along with full-resolution PToPI images (full view: online Fig. C.1 and plain view: Fig. 4).

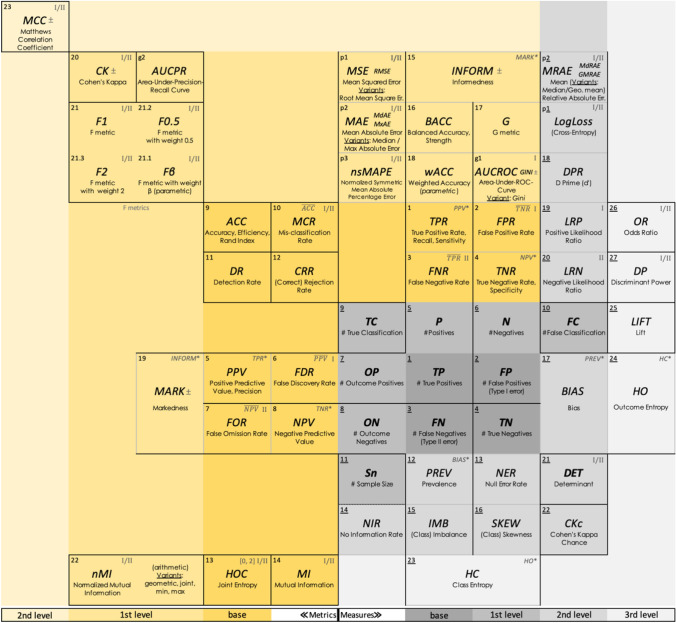

Fig. 4.

Plain view of PToPI (see online Fig. C.1 for the full view)

The spreadsheet also provides extra materials such as an instrument list (showing proposed concepts: instrument category, level, symbol, derivatives, complements, duals, geometries, alternating terms, range, error types, etc.), a probabilistic error instrument list, and probabilistic error/loss, confusion matrix-based, and entropic instruments calculator/simulation tool for hypothetical classifiers on synthetic datasets.

Case Study: Performance Evaluation in Android Mobile-Malware Classification

A case study was conducted to clarify the issues described above. We selected “mobile-malware classification” as a case study domain to analyze performance evaluation approaches systematically. The domain was chosen because it is a recent rapidly changing classification research problem, where ML-based binary classification (whether it is malicious (positive) or benign (negative) software) is frequently used [11, 12]. Our survey included 78 studies from 2012 to 2018, which reported their performances with different ML algorithms on Android mobile-malware binary classification problems. The results showed that even within the same domain, several researchers used alternative terminology, as listed in Table 1 (see Appendix 4 for the details of the systematic literature review and online Table E.1 for the references of the studies).

Table 1.

The distribution of alternative terms per individual performance metrics referred by the surveyed studies

| Metrics | Terms |

|---|---|

| ‘Accuracy’ (ACC) | ‘Accuracy’ (80%), ‘Detection Rate/Ratio’ (11%), ‘Detection Accuracy’ (7%). ‘Success Rate/Ratio’, and ‘Overall Accuracy/Efficiency’ |

| ‘F metric’ (F1) | ‘F-measure’ (43%), ‘F-score’ or ‘F1 score’ (39%), F1 (22%), Fm |

| ‘True Positive Rate’ (TPR) | ‘True Positive Rate’ (39%), ‘Recall’ (26%), ‘True Positive Rate’ and ‘Recall’ (at the same time) (15%), ‘Detection Rate’ (9%), ‘Sensitivity’ (5%), and ‘Accuracy Rate’ |

| ‘Positive Predictive Value’ (PPV) | ‘Precision’ (86%), ‘Positive Predictive Value’ (8%), and ‘Detection Rate’ |

| ‘False Positive Rate’ (FPR) | ‘False Positive Rate (96%) and ‘False Alarm Rate’ (7%) |

| ‘True Negative Rate’ (TNR) | ‘True Negative Rate (60%), ‘Specificity’ (27%), and ‘Recall Benign’ (13%) |

The metrics referred to with a single term are: FNR (‘False Negative Rate’), NPV (‘Negative Predictive Value’), CK (‘Cohen’s Kappa’), MCC (‘Matthews Correlation Coefficient’), MCR as ERR (‘Misclassification Rate’)

In mobile-malware classification, positive class detection success in class-imbalanced datasets is referred to as ‘detection rate’. The overall performance is stated as ‘malware detection’. Besides, “malware classification” corresponds to “binary classification” encapsulating “malware detection”. This is due to the reason that the studies we surveyed reported other binary classification metrics such as CK and MCC. The researchers also used a limited number of instruments to evaluate and compare the performance of their classifiers, as listed in “Case study: performance evaluation in android mobile-malware classification”. Additionally, the findings show that they prefer using different terms for the instruments. Mainly, some used the same term (e.g., ‘detection rate’) to refer to various instruments (ACC, TPR, and PPV, as shown double underlined). Moreover, different terms for the same metrics were expressed in the same study. For example, 15% used both ‘true positive rate’ and ‘recall’ in the same context as shown in underlined.

More interestingly, we even found that six of the surveyed studies (7.7%) published the same metrics (referring to as TPR and ‘recall’) with the same values redundantly.1 Although it is a typical binary classification domain, some researchers used conventional terms that are rather semantic, namely ‘precision’, ‘recall’, ‘sensitivity’, and ‘specificity’. For the rest, the findings show that researchers are familiar with syntactic terms. For example, (‘True’/‘False’) + ‘Positive’/‘Negative’ + ‘Rate’/‘Predictive Value’ (e.g., ‘True Positive Rate’, ‘Positive Predictive Value’).

The above brief history and our survey on the mobile-malware classification domain showed that classification performance evaluation approaches in different domains were affected by the other deep-rooted domains such as information retrieval, biology, and medicine. Note that referring to one alternative instrument naming instead of the others is not wrong essentially. However, these alternative terms can lead to misunderstanding or unnecessary use of equivalent terms in knowledge transfer and communication between researchers from different disciplines.

Related Works

A comprehensive study by Japkowicz and Shah provided an ontology of performance instruments and a general classifier evaluation framework, including selecting a performance metric [7]. Some studies compared metrics by testing standard ML algorithms on real-world or synthetic datasets. The examples of such experimental studies are as follows: Sokolova et al. covered three measures and six metrics using naïve Bayes and support vector machine classifiers [10]. Tharwat gives preliminary information for four measures and thirteen metrics [3]. Luque et al. analyzed the symmetry of ten metrics under three types of transformation, such as labeling transformation that exchanges positive and negative class labels [9].

Most of the related literature addressed the issues researchers encounter when they seek to use performance instruments, especially class imbalance, where the number of examples in positive and negative classes is not the same or close [13–17]. Valverde-Albacete and Peláez-Moreno focused on the so-called “accuracy paradox,” where a classifier with lower accuracy might have higher predictive power and vice versa [18]. Bradley earlier addressed several desirable properties of AUCROC over ACC [19]. Chicco and Jurman suggested MCC as a more informative metric compared with F1 and ACC [20]. Hu and Dong studied the cost-based evaluation of twelve metrics for class-imbalance conditions [21]. They individually check whether a misclassification from the class with fewer number of examples (e.g., positive, P = 100) will cause a higher cost than that from the other class with a higher number of examples (e.g., negative, N = 900). Another aspect reviewed in the literature is the chance correction in metrics (e.g., CK) that eliminates a potentially high performance exhibited by a random classifier [22]. Wang and Yao focused on the relationship between diversity (i.e. the degree of disagreement within classification ensembles) and performance metrics [23].

Other studies examined instruments and their properties from specific perspectives such as invariance in switching confusion matrix elements [24], a chronology of the instruments [25], and the patterns in the instruments’ equations [26]. Yan et al. discussed the metrics’ decomposability into the sum or average of individual losses on each example in the dataset due to incorrect classifications [27]. Forbes proposed constraints, which evaluate metrics in terms of whether they are statistically principled, readily interpretable, and generalizable to k-class situations [28]. Straube and Krell suggested the following criteria for choosing a proper metric: (i) performance-oriented (not data-oriented), (ii) intuitive (interpretable), and (iii) comparable (accepted in the literature) [13]. Huang and Ling recommended “consistency” and “discriminancy” degrees for comparing performance metrics through ACC and AUCROC example metrics in balanced and imbalanced datasets [29]. The robustness of binary classification performance instruments is examined via a benchmarking method [30]. Multi-class/multi-labeled performance evaluation was also addressed [24, 31, 32].

Many binary-classification performance instruments are the same as binary similarity or distance metrics [33] because all are derived from a 2 × 2 contingency table. For example, F1 and ACC were referred to as ‘Sørensen–Dice coefficient’ and ‘simple matching coefficient’, respectively. Tulloss suggested requirements and recommendations for binary similarity instruments such as sensitivity to the relative size of two compared lists (similar to the class imbalance in classification) and having a lower and upper bound for identical and unequal lists, respectively [34].

Theoretically, performance instruments, as well as similarity/distance and association instruments, can be formed in numerous ways by changing the coefficients or weights in the equations. Hence, generalized instruments (i.e. representing the instruments in other forms) are suggested. Koyejo et al. described the equations as the ratio of two polynomials with one degree in four variables: TP, FP, FN, and TN [35]. Paradowski formed a function combining joint probability, marginal probabilities, and the mean of marginal probabilities for binary class variables (P and N) [36]. These forms might also be used in classification performance evaluation.

We observe that in the literature on performance evaluation instruments, limited issues were examined, most of which were related to class imbalance on a few customary metrics, especially F1 and ACC. Besides, the instruments were compared at a high level without taking their intrinsic properties into account. To the best of our knowledge, an extensive analysis of performance evaluation instruments in broad coverage has not been conducted in the literature. This study also provides a baseline for the performance evaluation of classifications with a higher number of classes, because binary-classification evaluation metrics can be used for multi-class or multi-label classification by micro- or macro-averaging binary metrics [37, 38] or making specific adaptions such as one-versus-all approach [31, 32, 39]. Moreover, the literature has recently focused on the need for reliable measurement [40]. Note that the preliminary work of this study provides only a few concepts without formal definitions with a limited scope [41].

Research Questions

The paper has three main research questions:

RQ.1. How can we differentiate performance instruments semantically and formally?

‘Performance evaluation instruments’ (shortly ‘performance instruments’) are generally expressed by various terms such as ‘performance metrics’, ‘performance measures’, ‘evaluation measures’, and ‘prediction scores’. The evaluation based on a 2 × 2 contingency table is named ‘diagnostic accuracy’ or ‘test accuracy’ in medicine [42] or ‘skill score’ or ‘forecast skill’ in meteorology (forecast vs. observation classes) [43]. Concerning the literature, we observed that.

Performance ‘measures’, ‘indicators’, ‘metrics’, ‘scores’, ‘criteria’, ‘factors’ or ‘indices’ terms are used interchangeably.

Despite the semantic differences between the terms, the studies directly related to classification performance use the terms interchangeably [22, 24, 29, 44, 45].

In our surveyed studies, 42% use ‘performance metrics’, 15% use ‘performance measures’, and 25% use both terms interchangeably.

In this paper, we present a recommendation to clarify the definitions of these terms to express and distinguish the instruments. We also give conventional naming and abbreviations in a generic classification context for the instruments.

RQ.2 How can we formally identify the properties of binary-class performance instruments and their similarities, redundancies, and dependencies?

Performance evaluation instruments summarize the confusion matrix via a mathematical function that can be expressed in numerous ways in terms of other instruments. Interpreting the functions and the dependencies among the instruments brings out difficulties in comprehension and comparison of the instruments. In this study, we examine 57 instruments methodically and present novel concepts that reveal their inherent properties formally. We refer to the intrinsic properties enabling the comparison of those instruments as “concepts”. This study introduces canonical forms and two basic measures, namely TC (True Classification) and FC (False Classification), to enhance comprehension and interpretation of instrument equations. Then, it defines geometry, duality, complementation, and leveling concepts formally to uncover the similarity, redundancy, and dependency among instruments.

RQ.3 How can we effectively select instruments in performance reporting and publication?

For performance reporting, it is not clear what and how many instruments should be used even in a specific application domain. The performance of a classifier can be examined from the standpoint of failure instead of success. In this case, the number of false classifications, namely FP or FN (or both), becomes the primary concern. The choice of metrics in performance reporting depends on the classification problem domain. Type I error (false positives) is critical in many binary classification problems. For example;

In information retrieval applications such as document filtering [37], FP might be critical.

In malware (also known as “malicious software”, e.g., computer viruses) analysis, it might be better to label a “benign” software example incorrectly as “malign” (“malware”) than to omit malware by labeling benign incorrectly. Because the examples labeled as malware could be prioritized, then an expert could later go through further manual analysis to eliminate FP [46].

An anti-malware product (also known as “anti-virus”) classifying a given computer file instance as malware or benign should behave with decreased FPs to prevent displaying excessive malware warnings.

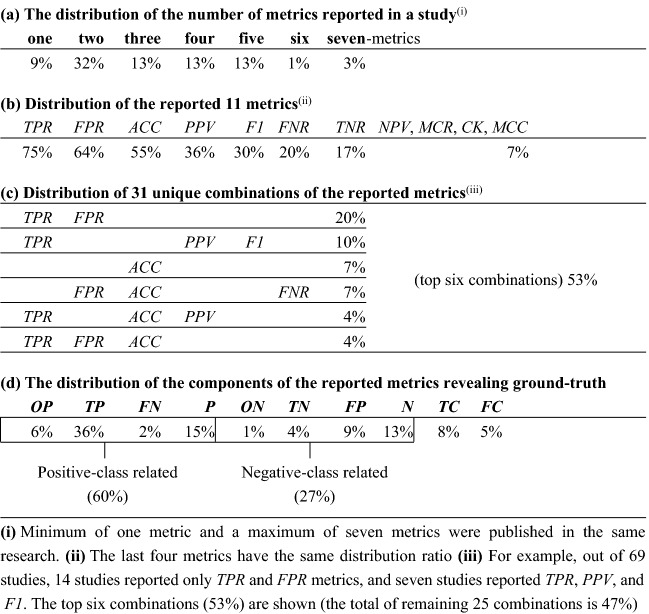

However, according to our survey of studies involving Android mobile-malware classification, we found that most studies focused more on type I errors (63% report FPR, whereas 19% report FNR). Table 2 shows the key findings of our survey in reporting ML-based malware classification performance to answer “how many”, “which”, and “what combination of” metrics the researchers report.

Table 2.

The statistics of performance metrics reported from 69 applicable studies of 78 surveyed studies

As seen in Table 2a, the number of performance evaluation instruments reported in a single study has a wide range. The studies tend to publish two or three instruments, but they may choose from only one instrument (ACC or F1) to seven instruments inclusive. TPR, FPR, and ACC are the most reported metrics, as shown in Table 2b. Note that the same variance in selected metrics was also observed in multi-labeled performance reporting [31].

Consequently, we develop a solution to facilitate the selection of performance measures with an exploratory table called PToPI, which is similar to a periodic table of elements to represent the concepts proposed in this study. The periodic table of elements can be considered an unprecedented example application of information or knowledge organization. The classification of the elements (i.e. grouping, ordering, positioning the elements) is pragmatic (e.g., producing the most helpful one) and methodological suggesting new hypotheses, explanations, and theories [47]. Likewise, PToPI is also a schematic representation of available performance evaluation instruments conveying different forms of intrinsic properties (i.e. concepts) [48, 49]. Additionally, as people are familiar with the periodic table, there are other adaptations of periodic tables in different scopes, such as in data science [50]. Covering these research questions, in this paper, we studied 57 instruments including the following instrument types:

confusion-matrix derived instruments for a single/final classification model-threshold;

entropy-based instruments (a subset of confusion-matrix derived instruments) such as mutual information (MI), outcome entropy (HO), class entropy (HC), joint entropy (HOC), and normalized mutual information (nMI);

graphical-based performance metrics such as area-under-ROC-curve (AUCROC, ROC: receiver operating characteristic) or area-under-precision-recall-curve (AUCPR); and

the instruments dependent on classification error’s probabilistic interpretation such as mean squared error (MSE, also known as Brier score), mean absolute error (MAE), root mean square error (RMSE), and LogLoss (also known as binary cross-entropy or relative entropy).

Considering entropy as an information-theoretic concept, mutual information indicating the strength of association in the contingency table [51] is also used for binary classification, namely prior (“ground truth”: P or N) and posterior (“prediction”: OP or ON) distributions [44]. For the entropy-based instruments, which are the subtype of confusion-matrix-derived instruments, the following equation is valid: MI = HC + HO − HOC [52]. Note that entropy-based instruments are measures of uncertainty with the true distribution of a random variable: HC for positive class distribution (PREV), HO for outcome-positive distribution (BIAS), and HOC for confusion-matrix elements (TP, FP, FN, TN). On the other hand, binary cross-entropy (LogLoss) is about the uncertainty with the approximate distribution of the variable with a probability function.

Although graphical-based performance metrics are not based on a single instance of a confusion matrix, they are calculated by varying a decision threshold (i.e. full operating range of a classifier) for different TPR and false-positive rate (FPR) or positive predictive value (PPV) and TPR pairs in a specific binary-classification application [53, 54]. Such metrics are the summary statistics of the graphical measurements of paired base metrics to rank classifiers according to performances [7]. Graphical-based metrics give insight into the performance of a classifier modeled for the whole possible model threshold range. In contrast, confusion-matrix-derived instruments represent the final performance of the classifier for an optimum threshold. In other words, the former is for model development, and the latter is for production.

Although probabilistic error/loss instruments are not based on a confusion matrix generated for single-threshold classification models or crisp classifiers [55], most of the proposed concepts and definitions are applicable. For example, they can be categorized as ‘measures’ with a half-bounded/unbounded interval or ‘metrics’ with a finite interval, indicating the performance failure (i.e. the smaller values, the better predictions). The instruments and their variants that summarize the deviation from the true probability are for regression problems rather than classification. While MAE (also known as Mean Absolute Deviation and abbreviated as MAD) is computationally less expensive and more resistant to outlier errors, MSE is more often used in practice [56]. LogLoss that is the predicted probability of the ‘true’ class measures the prediction uncertainty. It is also preferred for multi-class classification and modeling with artificial neural networks. Contrary to zero–one loss metrics (e.g., MCR, FPR, FNR, FDR, and FOR), probabilistic error/loss instruments evaluate the performance error of scoring or non-crisp classifiers that label instances with a reported or attached belief value (score, probability, or likelihood) according to a decision boundary.

For example, instead of labeling an instance as positive (one) or negative (zero) absolutely (also known as a “hard label”), a classifier model with a 0.5 internal decision-boundary value (the right side is for positive labels, the left side is for negative ones) in [0, 1] interval can label an instance as positive correctly with a 0.85 score (also known as “soft label”). In contrast, it labels another instance as negative correctly with a 0.40 score. Hence, we can interpret the probabilistic classification error for those instances such that the former labeling is more probable than the latter (|0.85 − 0.50|= 0.35 > 0.10 =|0.40 − 0.50|). A significant difference in probabilistic error/loss instruments measured for test and training datasets might reveal over/underfitting (bias-variance trade-off) unless it appeared due to the different statistical properties of the datasets or modeling errors [57] (e.g., RMSEtest > RMSEtraining for overfitting).

Note that probabilistic instruments are also used in the evaluation of ordinal classification, where there is an inherent (but without a meaningful numeric difference), order between the classes [58]. Another related use of probabilistic instruments is to assess candidate classification models in the same dataset by checking the trade-off between models’ fit and complexity. Akaike Information Criterion (AIC) and Bayesian Information Criterion (BIC), two inter-model complexity criteria, put a penalty for the number of model parameters (i.e. model complexity) and reward goodness-of-fit via negative MSE as a likelihood function (the equations are given in Appendix 2) [59, 60].

We reviewed 30 probabilistic instruments based on the summary of errors via different summary functions (see the last row for the summary functions of Probabilistic Error/Loss Measures/Metrics equations in Appendix 2). Then we prepared a calculator and analyzed these instruments based on the hypothetical classifiers using synthetic datasets in ten cases. The calculator and example simulation results provided in the online PToPI spreadsheet showed the following specific deficiencies exhibited in some of the instruments:

In ME and MPE, positive and negative errors are neutralized in summation.

In all percentage error instruments (e.g., MPE and MAPE), division-by-zero occurs when the samples have at least one negative class (N > 0).

Scaled error instruments (MASE, MdASE, and RMSSE) for time series regressions and forecasting are not applicable in binary classification because there is no innate sequence/order in classification dataset samples.

Critically, Normalized Mean Squared Error (nMSE) in five variants, ME, and percentage error instruments yield unbalanced over-prediction (pi > ci, false positive) − under-prediction (pi < ci, false negative) errors.

Based on the findings, out of 30 instruments, we distinguished and presented five instruments (with a total of 9 variants) that are proper for binary classification, namely LogLoss, MRAE (MdRAE, GMRAE), MSE (RMSE), MAE (MdAE, MxAE), and nsMAPE. Note that we excluded recently proposed binary-classification performance metrics such as

SAR (an abbreviation of Squared Error, Accuracy, and ROC area) by Caruana and Niculescu-Mizil [61],

Optimized Precision (OACC) by Ranawana and Palade [62],

Index of Balanced Accuracy (e.g., IBAα(G)) by Garcia, Mollineda, and Sanchez [63] and

probabilistic error instruments with unconventional summary functions such as Mean Arctangent Absolute Percentage Error (MAAPE) by Kim and Kim [64],

because they are derived from well-known standard metrics, or their use in the literature is limited. However, the concepts proposed in this study are also applicable to those metrics. Finally, this study focused on the performance instruments’ properties and their relations, but it does not aim to compare the superiority of an instrument over the other.

Semantic/Formal Definitions and Organization of Performance Evaluation Instruments

This section, first, proposes a semantic categorization of performance evaluation instruments as ‘measure’ and ‘metric’. Second, we provide a formal definition of the categories consistent with measure and metric in mathematics and the semantic approach. We also propose another organization of multiple instruments per category to see their similarities and dependencies.

Semantic Categorization of Performance Evaluation Instruments

This section addressing RQ.1 aims to clarify ‘measure’ and ‘metric’ terms that are used interchangeably. By definition at a high level,

A measure is defined as “the dimensions, capacity, or amount of something ascertained by measuring”2 and a metric (often metrics) is “a standard of measurement”.3

A measure is quantitatively derived from measurement, while a metric is close to inferring qualitative subjects.

A metric is a calculated or composite measure based on two or more measures and is typically stated as percentages, ratios, or fractions.

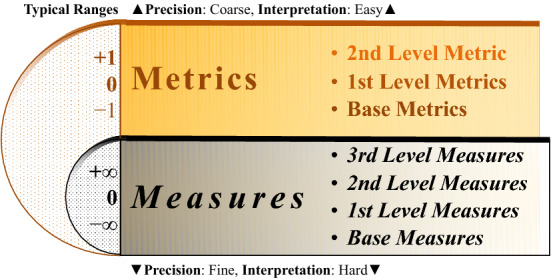

We distinguish ‘measures’ and ‘metrics’ referring to different but dependent concepts. This categorization was also examined by Texel from a general perspective [65]. Measures are numerical values providing incomprehensible or no context. In contrast, metrics have a compilation of measures within a comprehensible context. Figure 1 illustrates our proposed categorization with performance measures/metrics with their relative characteristics and typical intervals. Note that the literature in specific disciplines also focuses on similar terminologies. For example, Olsina and de Los Angeles Martín pointed out the lack of consensus in terminology about the assurance of the non-functional requirements of software such as quality, accessibility, and productivity (like classification performance) [66]. They proposed a comprehensive ontology covering various terms, including ‘measures’, ‘metrics’, and ‘indicators’. The ontology exhibits a metric's dependency on one or more measures via value interpretation.

Fig. 1.

Dependency and relative characteristics of performance evaluation instrument categories. The attached semicircles on the left show the typical intervals for each category. For classification performance measures and metrics, the intervals are usually [0, ∞) and [0, 1], respectively

Measures are produced by a measurement activity, whereas metrics quantify an attribute of any entity in software domain such as process, product, and resource. The analogies ‘data’ to ‘measures’ and ‘information’ to ‘metrics’ might also be insightful. García et al. mainly reviewed and discussed ‘measures’ and ‘metrics’ terms concerning software management and proposed another ontology [67]. Our interpretation of ‘measures’ and ‘metrics’ described above is also in line with those studies.

Formal Definitions and Organization of Performance Evaluation Instruments

This section addresses RQ.1 for distinguishing instruments formally and RQ.2 for the identification of their intrinsic properties formally. Table 3 shows the notation proposed for instruments as well as their transformations (dual and complement) described in this study.

Table 3.

Performance instrument notations

| Notation | Style | Meaning | Examples |

|---|---|---|---|

| M | Italic | Any instrument (measure or metric) | ACC in [0, 1], MCC in [− 1, 1], PREV in [0, 1] |

| M | +Bold | Unlimited measures having positive integer values (recommended when used in a text, the notation optional in equations) | TP, P, Sn, DET |

| M* | * Super-script | The dual of an instrument | PREV = BIAS*, HC = HO* |

| Over bar | The complement of an instrument |

First, we propose the following axioms setting out a base for the formal definitions for the confusion-matrix-derived instruments, including entropic instruments.

Axiom 1

(Atomicity). The only indivisible instruments to evaluate binary-classification performance are TP, FP, FN, and TN, which are the elements of a confusion matrix (2 × 2 contingency table).

Axiom 2

(Atomic Expression). Any binary-classification performance evaluation instrument can be expressed with the confusion matrix elements.

Axiom 3

(Basic Summary). A basic summary includes the summation of the pairwise or all of the confusion matrix elements.

Axiom 4

(Binary-classification Performance Instrument Expression). All performance instruments can be expressed via functions of individual or basic summaries of confusion matrix elements.

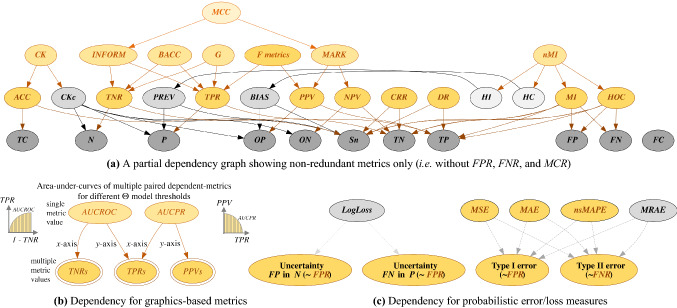

The axioms are valid for graphical-based metrics and probabilistic error/loss measures, which also depend on or have a similar relationship with the confusion-matrix-derived instruments, as depicted in Fig. 3b and c. Namely, AUCROC and AUCPR depend on multiple TPRs vs. TNRs and TPRs vs.. PPVs, respectively. Probabilistic instruments measuring classification uncertainty or type I/II errors are similar to FPR and FNR. An example of Axiom 2 could be given for PREV: PREV = P/Sn can be expressed in an atomic manner as PREV = (TP + FN)/(TP + FP + FN + TN). The other axioms are described in the sections below. We proposed the following formal definitions for organizing and describing binary-classification performance evaluation instruments.

Fig. 3.

Instrument dependencies graphs. The full-resolution graphs and the DOT (graph description language) files to produce them via Graphviz are provided online at https://github.com/gurol/ptopi

The Base Measures (TP, FP, TN, FN)

Based on Axiom 1, we called the four atomic confusion matrix elements “base measures”. As stated in Axiom 2, all other instruments can be expressed by these base measures. PToPI full-view in online Fig. C.1 provides different names of the base measures.

The First-Level Measures (P, N, OP, ON, TC, FC, Sn)

Based on Axiom 3, the first-level measures are the composition of the four base measures by summation (pairwise and total):

P and N are column (marginal) totals of a confusion matrix. They represent the ground truth denoting the real number of the two observed classes (known labels). For example, a classification test dataset with 3000 malign and 2000 benign application samples is expressed as P = 3000 and N = 2000.

OP and ON measures that are row totals (the other marginal totals in probability theory) of a confusion matrix represent the prediction (test or classification result/outcome) of the two classes, where OP = TP + FP and ON = FN + TN. For the same example, the outcome of a decision tree classifier that predicts 3,100 malign and 1,900 benign applications is expressed as OP = 3100 and ON = 1900. These measures correspond to predicted, hypothesized, or estimated (classification) output.

Moreover, True Classification (TC) and False Classification (FC) are defined as the totals of diagonal base measures (TP and TN) and off-diagonal ones (FP and FN), respectively. Substituting those totals simplifies the instruments’ equations and their interpretation. For instance, ACC that is defined as (TP + TN)/Sn (even as (TP + TN)/(TP + FP + FN + TN)) could be expressed merely as TC/Sn with TC. Including TC and FC, where appropriate, makes the equation easy to interpret. Note that this notation also simplifies the multi-class performance instruments. For example, the accuracy of a ternary classification is again TC/Sn.

Finally, Sn is the total of all the base measures (Sn = TP + FP + FN + TN). As specified in Axiom 3, P, N OP, ON, TC, and FC are pairwise and Sn is the overall summation of confusion matrix elements.

Canonical Form and Formal Instrument Categorization

This subsection proposes and defines a formal logic that determines whether a given equation of a performance evaluation instrument is a ‘metric’ or ‘measure’. The first step in the proposed formal definition is to standardize the equations satisfying Axiom 4. In canonical form, the equations are expressed with the base and first level measures (namely TP, FP, FN, TN, P, N, OP, ON, TC, FC, and Sn) that are also called “canonical measures” in this study.

For example, MCR = FC/Sn and F1 = 2TP/(2TP + FC) are expressed in canonical form. Note that any part of the given equations matched in the where clause of the definition above must be reduced into its complete form (P, N, OP, ON, TC, FC, Sn) while converting an equation into canonical form (e.g., a TP + FP + FN + TN should always be reduced into Sn and likewise a remaining TP + FN into P).

Definition 1

(Canonical Form).

M is a binary-classification performance instrument expressed in the canonical form

, and where basic summaries defined in Axiom 3 are reduced for .

To find the canonical form, first, the equations should be in “base-measure form” (i.e. atomic expression in Axiom 2, expanded until all the terms are four base measures, namely TP, FP, FN, TN). Then the substitutions can be carried out according to Definition 1. As stated in Definition 2, a binary-classification performance evaluation measure expressed in canonical form has only P, N, OP, ON, or Sn base measures, or its range is infinite as imposed in semantic interpretation (i.e. numerical values with limited or no context derived from measurement).

Definition 2

(Measure/Metric).

M is a binary-classification performance ‘measure’ if it can be expressed in the canonical form where and ( or ()).

Otherwise, M is a ‘metric’.

The first condition states that an instrument consisting of only any of P, N, OP, ON, or Sn is a ‘measure’. P, N, and Sn, which are even available before a classifier is modeled, depend on datasets (i.e. they are numerical values with no performance context). In contrast, OP and ON show the pure outputs of a classifier (i.e. numerical values with limited performance context). An instrument in canonical form that has any of four base measures (TP, FP, FN, and TN) and two diagonal 1st level measures (TC and FC), represent the performance context. However, if the range of the instrument is unlimited, it is a ‘measure’ as dictated by the second condition because the precision is fine, and interpretation is challenging, as depicted in Fig. 1. For example, PREV = P/Sn and NER = N/Sn are measures because their domains are equal to {P, Sn} and {N, Sn}, respectively, whereas is still a measure even though dom(OR) = {TP, FP, FN, TN} {P, N, OP, ON, Sn} because the interval of OR is left-bounded, i.e. [0, ∞). is a metric because neither dom(G) is a subset of {P, N, OP, ON, Sn} (because of TP and TN) and nor its interval is unbounded (range(G) = [0, 1]).

Comparison with Measures and Metrics in Mathematics

Performance measures and metrics defined formally above have similarities with measures and metrics in mathematics. Measure in mathematics is a function (μ) from Σ (an σ-algebra over a set X) to affinely extended real numbers () like performance measures. Various types of measures are defined according to different properties or conditions, such as negativity, intervals, empty sets, additivity, and monotonicity properties. 26 of 29 performance measures correspond to mathematical (positive) measures. “Signed measures” do not require negativity. Only DET, DPR, and DP are identified as signed (±) measures in (− ∞, ∞).

Canonical performance measures (X in Definition 1, e.g., TP and P) correspond to “counting measures”, another type of measure in mathematics (i.e. number of elements (cardinality), a function to natural numbers: ). The performance measures in [0, 1] for a specific aspect of classification datasets, namely class or outcome ratios (e.g., PREV or BIAS), class or outcome entropies (HC vs. HO), and chance factor (CKc), correspond to “finite measures” (for example, a probability measure in [0, 1] yielding 0 for empty sets and its entire measure (probability) space is 1). Additivity is determined by two operations on subsets in X, namely “union”: and “sum”: . A measure is “countable additive” when union = sum, “subadditive” when union ≤ sum, and “superadditive” when union ≥ sum. For example, PREV satisfies “subadditivity” for two datasets (e.g., Sn = 20): dataset1 with samplei (i = 1, …, Sn/2 = 10) and dataset2 with samplei (i = 11, …, Sn = 20).

We evaluated all performance measures for two datasets and observed that all canonical performance measures satisfy “countable additivity” and the remaining performance measures, except signed ones, satisfy “subadditivity” (see “Measures and additivity” worksheet in the online PToPI file). The additivity of DET, DPR, and DP signed measures is nondeterministic (subadditive, countable additive, or superadditive like the determinant of nonnegative Hermitian matrices).4 The measure values of the sub-datasets might cancel out each other. Canonical measures satisfy all five properties with the countable additive property.

Mathematically, metrics are defined as a distance function D between pairwise elements in a set. Both metrics and performance metrics are in a bounded interval [a, b] where 0 ≤ a < b < ∞.5 However, performance metrics correspond to “similarity functions” as an inverse of (distance) metrics (e.g., S = 1 − D) in mathematics (how similar are classification labels and class labels?). Performance metrics can be expressed as a similarity function S(o, c) between ground-truth (c) and prediction (o, classifier’s outcome) for all the binary examples (i = 1, …, Sn) in a dataset where ci and oi ∈ {0 for positive and outcome positive, 1 for negative or outcome negative}.

The maximum similarity or the minimum distance (dissimilarity) is the better performance (e.g., ACC = 1). Error or loss metrics such as FPR, FNR, FDR, FNR, and MCR, described in “Instrument complement”, as well as probabilistic instruments, are directly distance metrics (D) in mathematics. As described in “Semantic/formal definitions and organization of performance evaluation instruments”, binary similarity measures are a well-studied domain historically in the literature where a fourfold table is used to calculate the similarity/distance between two binary vectors in a convenient manner instead of the comparison of pairwise elements.

The conventional notation concerning binary classification is a = TP, b = FP, c = FN, and d = TN. As addressed in Ref. [26], binary-classification performance metrics had been studied as similarity coefficients, for example, ACC by Sokal and Michener (1958) and Rand (1971), F1 by Gleason (1920), Dice (1945), and Sørenson (1948), CK by Cohen (1960), and MCC by Yule (1912) and Pearson and Heron (1913).

Three axioms are defined for mathematical metric definition:

-

(i)

identity of indiscernibles: D(o, c) = 0 ⇔ o = c,

-

(ii)

symmetry: D(o, c) = D(c, o)), and

-

(iii)

subadditivity or triangle inequality: D(o, c) = D(o, x) + D(x, c).

Performance metrics either satisfy all three axioms (e.g., ACC) or the first and second axioms (“semimetrics” in mathematics, e.g., F1, CK, and MCC). Probabilistic error metrics correspond to Euclidian distance in mathematics (e.g., MAE and its variants are Euclidian distance whereas MSE and RMSE are squared Euclidean distance).

Probabilistic error measures are not metrics in mathematics. MRAE and its variants are not symmetric (exchanging ci ↔ pi, see Eqs. (B.pi, B.piii, and B.piv) and relative absolute error measure equations in Appendix 2). LogLoss is not a mathematical metric because it does not satisfy the first axiom completely (LogLoss = 0 for ci = pi = 1, but LogLoss is undefined for ci = pi = 0). LogLoss > 0 for ci = 0 ≈ pi. For example, LogLoss = 6.64 (➝∞) for ci = 0 and pi = 0.01 (➝0+) whereas MSE = MdSE = 0, RMSE = MAE = 0.01 (➝0+), and nsMAPE = 1. Note that probabilistic metrics such as MAE, MSE, and RMSE in binary classification that are in [0, 1] are usually expressed in a right-open interval [0, ∞) in regression for convenience. Nevertheless, they become bounded according to the dependent variable’s range.

Comparing a classification and a regression model on the weather temperatures on Earth, which range ± 40 °C (100 °F to − 40 °F) annually, for example. The binary scoring classifier with “cold” (0) and “hot” (1) labels yield minimum 0 and maximum 1 absolute errors (ci ∈ {0, 1}, pi [0, 1]). The regression classifier yields a minimum of 0 °C/°F and a maximum of 80 °C (140 °F) absolute errors (a threshold, for example, 20 °C/68 °F, could be used to categorize the scalar outcomes into cold and hot labels). Even MRAE and LogLoss also measure the same classification error; they have a right-open interval in both (binary) classification and regression because of the nature of their summary functions. As addressed by the practitioners, the interpretation of probabilistic performance measures is not convenient, comparing the metrics [68].

The performance measures with open intervals, namely DPR, LRP, LRN, DET, LIFT, OR, DP, MRAE, and LogLoss, violating the first condition in Definition 2 (i.e. having at least one canonical measure different from {P, N, OP, ON, Sn} such as base measures, TC, and FC) are not used in the literature for performance evaluation, comparison, and publishing for different classifiers because they are not easy to interpret (unbounded and having non-linear distribution). The attempts to categorize their unbounded range are subjective, indecisive, and not accepted by the literature (e.g., DP [10], OR [69, 70], LRP/LRN [71]). They have domain-specific usages to reflect the specific aspects of the classifiers.

We propose measure/metric categorization of instruments consistent with the mathematical definition and semantical interpretation of ‘measures’ and ‘metrics’ to clarify the terminology as well as enhance the initial interpretation of the instruments. Metrics can be directly and conveniently used for performance evaluation, whereas measures are auxiliary to evaluate other factors such as datasets. Of the 57 performance instruments covered, almost half of them are measures and half of them are metrics.

Instrument Geometry

Figure 2a depicts the following geometry of the 1st level measures defined above:

P and N are column types (total of elements in vertical cells in confusion matrix) that are related to ground truth only.

OP and ON are row types (total of elements in horizontal cells) related to prediction only.

TC and FC are mixed types (total of elements in diagonal or off-diagonal cells).

Sn is mixed geometry and does not affect determining geometry type when it is involved in other instruments’ equations. In this study, we extend this column/row geometry to any performance instrument apart from canonical measures via Definition 3.

Figure 2b depicts the geometries of all the measures and metrics that are determined via Definition 2. The figure is used as a starting point for the proposed exploratory table to position the different instruments in the table layout. Note that pale and dark solid edges represent geometry types, as depicted in the “Box edges” group as described in “Interpretation of PToPI visual design elements”. In our survey, 26% of the studies published column-geometry metrics (e.g., TPR, TNR, FPR, or FNR). 19% published true-classification-only metrics (i.e. having TP or TN, e.g., TPR, TNR, PPV, NPV, or ACC). Interestingly, 3% published FPR with FNR, which is a subset of false-classification-only metrics (the other one is MCR).

Definition 3

(Column, Row, and Mixed Geometry).

M is a binary-classification performance instrument expressed in a canonical form where

The geometry of M is ‘column’ (depicted as )

if and

The geometry of m is “row” (depicted as )

if and

Otherwise, the geometry of M is ‘mixed’ (depicted as ).

Figure 2c depicts the possible confusion matrix examples for probabilistic error/loss performance measures. The classifier labels both the first and second examples as positives with a score greater than the decision boundary. Nevertheless, the second one is a negative example (i.e. false positive). Likewise, the third example is misclassified as negative (i.e. false negative) even if the classifier’s score falls under the threshold. As described in “PToPI: an exploratory table for binary-classification performance evaluation instruments”, those instruments give a score (pi) about the labeling predictions for the actual class of the example (ci). Figure 2d lists the corresponding calculations for each example for LogLoss and MAE, which is a variant of MSE. Note that the parts yielding zero in the pairs of the sum function in LogLoss are not shown for the sake of simplicity. The evaluation of each case in both measures shows that probabilistic error/loss instruments measure;

either uncertainty/type-II-error in P, namely FN (like FNR = FN / P)

or uncertainty/type-I-error in N, namely FP (like FPR = FP / N).

Fig. 2.

The origin of laying out of performance evaluation instruments in PToPI and probabilistic error/loss instruments for four samples labeled as one TP, FP, FN, and TN

This reveals that the measures are a typical mathematical function for FPR and FNR like (FPR + FNR)/2, which is also examined in “Instrument complement”. The instruments are column-geometry because their domains {P, N} satisfy the first condition in Definition 3.

Transforming Geometry: Instrument Duality

The extended geometry divides classification performance instruments into two orthogonal dimensions besides the mixed ones: column (ground-truth only) vs. row (prediction only). This approach brings about transformations in corresponding instruments expressed in Definition 4.

Definition 4

(M*, Duality).

M is a binary-classification performance instrument expressed in a canonical form, where and the geometry of M is “column”, “row”, or “mixed”. The dual of M, M* is produced by

if the geometry of M is “column” (),

if the geometry of M is “row” (), or

if the geometry of M is “mixed” (),

where , , and .

Essentially, duality is to transform one concept into another concept in a bilateral manner. It could be perceived as an interchanging antecedent and consequent [8]. A transformation via switching column to row geometries and vice versa corresponds to ground-truth versus prediction perspective change. The introduced transformation via duality facilitates researchers to see the special relations in corresponding instruments. A dual of a column/row type instrument is formed by swapping between {P} and {OP} and between {N} and {ON}, respectively. For instance, TPR = PPV* and PPV = TPR* (dual metrics) or HC = HO* and HO = HC* (entropy-based dual measures). As seen in the examples, the symmetry (involution) is valid for duality ( and , i.e. if is the dual of , then is the dual of ). The duality is essential for two dual concepts or dimensions where a mapping identified in one can be transferred into the other by duality. For example, a function (f) of two column-geometry instruments ( and ) could be transformed or sought in their corresponding dual (row-geometry) instruments ( and ) as described below:

| 1 |

For example, LRP is a mapping between TPR and TNR. The dual of LRP = TPR/(1 – TNR) is TPR*/(1 – TNR*) = PPV/(1 – NPV), which is not a common instrument in existing classification performance evaluations. It is called “relative risk” which is mainly used in statistics, epidemiology, clinical research, and diagnostic tests [72]. The relation revealed by duality can connect classification performance evaluation with these domains. The example given for LRP is related to the transformations of the column- or row-geometry instruments. As for mixed geometry, duality transformation of high-level mixed-geometry instruments reveals different dependencies (note that the dual of a mixed type instrument is equal to itself as expressed in the third condition of Definition 4). For instance, the following transformation of mixed-type ACC from Eq. (2) showing PREV dependency further reveals BIAS (the dual of PREV) dependency of ACC:

| 2 |

| 3 |

| 4 |

Increasing prevalence leads to a higher performance value in terms of ACC, as shown in Eq. (2), which also causes a higher bias, as shown in Eq. (4). However, dual instruments should be interpreted correctly. For example, Powers’ statement that the goal of the classification model is achieving the equality of dual instruments such as PREV = BIAS, TPR = PPV, or TNR = NPV should be clarified by adding “in the highest possible metric values” constraint (e.g., TPR = PPV = TNR = NPV ≈ 1.0) [8]. Because a random classifier yielding the base measures equal (e.g., TP = FP = FN = TN = 50) also satisfies all these three equalities. The duals of column-geometry probabilistic error/loss measures depicted in Fig. 2c and d switch ground-truth measures (P and N) to the prediction measures (OP and ON), see Appendix 2 for their equations. They are similar to (FPR* + FNR*)/2 = (FDR + FOR)/2 but not common in the literature.

Instrument Complement

Binary-classification performance metrics and some measures are normalized ratios having bounded intervals, such as [0, 1] (also known as the unit interval) or [− 1, 1]. The complement of those instruments is defined below. For instance, TPR is a metric M, which has an interval [0, max(M) = 1], the complement of TPR is . Likewise, INFORM is a metric M, which has an interval [min(M) = − 1, max(M) = 1].

Definition 5

().

M is a binary-classification performance instrument where . The complement of the M is , where

The second condition is given for the sake of completeness because there is no well-known instrument having zero and negative values (e.g., interval [− 1, 0]). Complements are useful.

to simplify equations,

to change the performance perspective (from a positive class perspective to a negative one (e.g., TPR to FNR or PPV to FDR), or

to switch focus on correctness to both error types (I and II) (i.e. ACC to MCR).

Without any complements and duals practically observed in the literature, all probabilistic error/loss measures focus on classification errors (type I/II) or losses.

As an example of the third condition in Definition 5,

The complement of INFORM in [− 1, 1] is simply − INFORM,

However, the complement of normalized INFORM in [0, 1] is 1 − ((INFORM + 1)/2) = (2 − (TPR + TNR − 1 + 1))/2 = (FPR + FNR)/2.

The former is not a common metric whereas the latter (mean false positive/negative rates or mean type I/II errors) is similar to the probabilistic error/loss measures interpreting the errors for both classes (e.g., mean absolute error). Note that zero–one loss complement metrics (e.g., MCR, FPR, FNR, FDR, and FOR) along with probabilistic error/loss instruments are negatively oriented (i.e. negative performance where zero is the best).

HC and HO entropy-based instruments measure the uncertainty associated with given distributions of PREV and BIAS, respectively, along with their corresponding complements (i.e. 1 − PREV and 1 − BIAS). In comparison to duality, reporting an instrument along with its complement does not provide extra information. This redundancy in performance reporting (i.e. reporting both a metric and its complement) is occasionally observed in the literature. Out of 51 surveyed studies reporting classification performance, 16% have redundant metrics, namely TPR with FNR (14%), TNR with FPR (12%), and ACC with MCR (2%).

Class Counterparts

Class-specific instruments have counterpart instruments that are defined per class (positive class only and negative class only). For example, TPR and TNR are class counterparts. The former is for positive classes and the latter is for negative classes. Likewise, FNR and FPR, the complements of TPR and TNR, are class counterparts. The other examples are PPV with NPV (and their complements, FDR with FOR) and LRP with LRN. Not all counterpart relations are common. For example, the counterpart of PREV (PREV = P/Sn) is NER (NER = N/Sn), which is not commonplace in the literature. However, the counterpart of BIAS (BIAS = OP/Sn) (i.e. ON/Sn) or the counterpart of F1 (i.e. 2TN/(2TN + FC)) is not used at all. Counterparts are also applicable in multi-class performance evaluations above binary classification. Generic examples of n-ary classification are also provided in “Summary functions”.

Duality, complementation, and class counterparts together help to identify the characteristics of performance instruments. For example, in Eq. (B.23) can be re-formulated as and , which is easier to interpret and extendable to multi-class (X ∈ {‘P’, …, ‘N’}).

More Geometries: Dependencies, Levels, and High-Level Dependency Forms

Performance instruments can be expressed in terms of others in numerous ways. For example, in addition to Eqs. (2)–(4), Eq. (5) reported by Powers that expresses ACC in terms of BIAS/PREV and INFORM metrics can be transformed into Eq. (6) in terms of BIAS/PREV and MARK metrics [8].

| 5 |

| 6 |

However, such expression derivations (i.e. equivalent form) are exhaustive and might be confusing. Therefore, we suggest a leveling approach based on high-level dependencies among the existing instruments to simplify their summarization relationships and interdependencies. We prepared a dependency graph among binary classification instruments. Figure 3 shows partial views of the dependency graph. We use high-level equation forms (i.e. substituting instruments other than base level measures/metrics and 1st level measures) where possible to identify direct dependencies. Otherwise, the dependencies are calculated based on the equations in canonical form. For example,

TPR, TNR, PPV, and NPV metrics and their complements depend on canonical measures. Therefore, they are considered base metrics

INFORM depends on TPR and TNR; MARK depends on PPV and NPV base metrics. Therefore, they are 1st level metrics.

shows that MCC has direct dependencies on INFORM and MARK 1st level metrics at a high level. Therefore, MCC is a 2nd level metric.

Beyond the well-known ones, the literature rarely examines the instrument equations with different expressions like in Eqs. (2)–(6). Press, for example, found the equivalent form of PPV and NPV by expressing them with TPR and TNR [73]. Sokolova et al. expressed INFORM and DP in terms of LRP and LRN [10]. The high-level dependency reveals another kind of redundancy observed in performance evaluation publications (i.e. reporting a metric with its direct dependencies). For example, out of 51 surveyed studies reporting classification performance, 27% published F1 along with the two direct dependencies (the harmonic mean of TPR and PPV).

Upper-Level Measures (the 2nd and 3rd Level Measures) and Metrics Leveling (the Base, 1st, and 2nd Level Metrics)

As a result of applying the leveling approach described above, measures have four levels and metrics have three levels, including base levels. The complete list of levels and corresponding instruments is listed in Appendix 1 in alphabetic order.

Summary functions

High-level metrics summarize the dependent metrics into a single figure, as listed in Table 4. In parametric instruments such as wACC or Fβ (see Eqs. (B.18) and (B.21.1) in Appendix 2), the summary function depending on two or more instruments can be adjusted according to the importance given to each dependent [37]. For example, wACC puts more weight on one of the high-level dependent metrics TPR and TNR, as shown in Table 4.

Table 4.

The instruments’ summary functions and their class counterparts or dual high-level dependencies

| Instrument | Summary functions | High-level dependencies | |

|---|---|---|---|

| Column geometry | Class counterparts (positive vs. negative) | ||

| INFORM | Addition | TPR | TNR |

| BACC | Arithmetic mean | TPR | TNR |

| G | Geometric mean | TPR | TNR |

| wACC | Weighted mean | TPR | TNR |

| Mixed geometry | Duals (column vs. row) | ||

| nMI | MI / Arithmetic/geometric means or minimum/maximum of | HC | HO |

| MI / joint (HOC) | – | – | |

| Fβ | Weighted harmonic mean | TPR | PPV |

| MCC | Geometric mean | INFORM | MARK |

| Probabilistic error instrumentsa | |||

| ME, MSE, RMSE, MdSE, SSE, nMSE (in five variants), MAE, MdAE, MxAE, GMAE, MRAE, MdRAE, GMRAE, RAE, RSE, MPE, MAPE, MdAPE, RMSPE, RMdSPE, sMAPE, nsMAPE, nsMdAPE, MASE, MdASE, RMSSE, and LogLoss | |||

| Summary functions | |||

| Normalized/Symmetric/Root/Geometric mean/Mean/Median/Max/Sum/Square(d)/Relative/Absolute/Percentage/Scaled + ‘Error’ along with Logarithmic function for LogLoss | |||

The equations are given in Appendix 2

aTotal of 31 probabilistic error instruments are reviewed. More details are provided in the online PToPI spreadsheet

High-level dependency and summary functions are key to understanding the properties of performance instruments. In information retrieval, for instance, the single metric properly summarizing dual TPR and PPV metrics is Fβ parametric metric (usually F1 where β = 1). Because the datasets available for information retrieval are extremely skewed (over 99% of the documents are irrelevant), some metrics, especially ACC, are not appropriate.

Using harmonic mean in F1 as a summary function instead of simple arithmetic/geometric means suppresses the extreme performance values in cases where PPV is exceedingly high (e.g., by returning all documents for a specific query) [74]. Note that β > 1 emphasizes PPV and type I error whereas 0 < β < 1 emphasizes TPR and type II error.

Leveling not only allows the researchers to distinguish similar instruments from a large number of instruments but also shows the dependencies among levels and their summarization degree. For example, MCC depends on and summarizes the 1st level metrics that depend on and summarize the base metrics. Table 5 summarizes complementation, duality, and class-counterpart concepts applicable to the reviewed performance instruments.

Table 5.

Summary of the concepts for the instruments with column/row geometry and suggested notation and naming for multi-class classification instruments (3-ary, 4-ary, …, n-ary classification)

| Concept | For measures | For metrics | |||

|---|---|---|---|---|---|

| Complements | Positive | Negative | Success | Failure | |

| PREV | NER | TPR | FNR | ||

| TNR | FPR | ||||

| PPV | FDR | ||||

| NPV | FOR | ||||

| ACC | MCR | ||||

| Duals | Column | Row | Column | Row | |

| PREV | BIAS | TPR | PPV | ||

| HC | HO | TNR | NPV | ||

| INFORM | MARK | ||||

| Class Counterparts | Positive | Negative | Positive | Negative | For n-ary classification (e.g., class "X") |

| LRP | LRN | TPR | TNR | TXR (True X Rate) | |

| FPR | FNR | FXR (False X Rate) | |||

| PPV | NPV | XPV (X Predictive Value) | |||

| FDR | FOR | FPXR (False Predictive X Rate) | |||

PToPI: An Exploratory Table for Binary-Classification Performance Evaluation Instruments

We designed a compact exploratory table for a total of 57 binary-classification performance evaluation instruments. The table is the pictorial specification or blueprint of instruments from multiple perspectives covering all the proposed concepts that we described and formally defined in “Conclusion and discussion”. Figure 4 and online Fig. C.1 show its plain (simplified) and full view versions, respectively.

PToPI Design Methodology

The proposed exploratory table is designed with the following methodology:

Reviewing the literature to compile the instruments and related information such as alternative names and equations;

Equations are converted into different forms where possible, such as canonical form (Definition 1) and high-level dependency form (see “More Geometries”);

Measure and metric categories are identified by canonical form equations (via Definition 2);

Geometry types are determined as “column”, “row”, or “mixed” (via Definition 3);

A dependency graph is prepared to formulate the levels and discover the similarities and dependencies (see dependency graph in Fig. 3);

The determined levels and dependencies along with the geometry types are used to position and level the measures/metrics around base measures shown in a 2 × 2 contingency table;

Entropic instruments are noticeable by positioning them beneath or right of the base measures;

After the layout is completed, the dual and complement of measures/metrics are determined (via Definitions 4 and 5, respectively);

Unique background colors are used to distinguish measures (grey) and metrics (gold) along with their levels (shades of grey or gold color);

Geometry is depicted by dark and pale (greyed-out) lines (pale bottom/top edges for column geometry, pale left/right edges for row geometry, and all dark for mixed geometry, see Table 6);

Measures and metrics are separately numbered according to levels and dependencies from the innermost (measures are underlined). Within each level, the numbers are assigned from column to row and mixed geometry and from positive to negative class dependencies. Graphical-based metrics and probabilistic instruments are numbered separately with ‘g’ and ‘p’ prefixes, respectively;

Instrument abbreviations, names, and alternative names (for common instruments) are displayed. The metrics in the [− 1, 1] interval is indicated in name via a ‘±’ suffix (e.g., ‘MCC ± ’).

The complement pairs are displayed only for the instrument indicating classification errors (e.g., is shown in FDR where 0 is the best performance value, but is not shown in PPV);

Dual instrument pairs are stated (e.g., INFORM* is shown in MARK and vice versa);

For the full view:

The error types, if exist, are indicated (type I: I, type II: II, and type I/II errors: I/II);

Instrument value intervals (other than [0, 1]) and whether an instrument yields not-a-number (i.e. 0/0) are calculated and indicated by “NaN” (i.e., instruments yield not-a-number in extreme cases, e.g., on datasets without any positive samples where P = 0); and

Equations are displayed per instrument.

Table 6.

Descriptions of the visual design elements used in PToPI

Note that Appendix 2 also suggests corrections for common performance metrics to avoid indeterminacies.)

Interpretation of PToPI Visual Design Elements

Table 6 lists the visual design elements employed in PToPI to represent the properties of individual instruments or instrument categories. The full view also presents abbreviated names, full names, alternative names, and particular attributes of measures and metrics such as error types, whether having not-a-number value (i.e. no 0/0), intervals that are different from [0, 1].

Recall that the names of measures with integer values are written in bold, as shown in Table 3. Measures are numbered with underlined text. The instruments above or below the confusion matrix frame are the column-geometry type with only these dependencies: base measures, Sn, P, and/or N.

In contrast, the ones located on the left or right of the confusion matrix are the row-geometry type with only base measures and/or Sn but with OP, and/or ON. F0.5 emphasizing TPR is positioned closer to TPR and F2, emphasizing PPV is placed closer to PPV.

Demonstration of PToPI Usage

PToPI enables standardized specifications of a large number of performance evaluation instruments, provides terminological relations, and avoids the uninformed choice of a metric. Knowing the limitations of the instruments eliminates unnecessary performance reporting and allows for the selection of the most appropriate instruments according to specific requirements. The table is intended to be a single comprehensive reference that will be updated upon new instrument proposals. The practical use of PToPI can be described in two pillars:

Overall instrument analysis (addressing RQ.2): Seeing and comparing the relationships, differences, and similarities of all the instruments.

The proper metric choice for performance reporting and comparison (addressing RQ.3): Deciding which instruments are suitable for establishing classification models, comparing different classifiers, and reporting classification performances.

Overall Instrument Analysis

The exploratory table shows the similarities of the performance instruments. For instance, comparing INFORM and MARK dual metrics in the 1st level, three additional column-geometry metrics are shown near INFORM, namely BACC, wACC, and G. However, the duals of those additional metrics corresponding to row geometry are not present near MARK. For example, there is no metric taking the arithmetic mean of PPV and NPV like BACC (arithmetic mean of TPR = PPV* and TNR = NPV*). No metric in row geometry is found that corresponds to G taking the geometric mean of the dependents (i.e. geometric mean of PPV and NPV). Probabilistic error/loss measures are located near INFORM, because they are similar to the normalized complement of INFORM (i.e. (FPR + FNR)/2) as described in “Instrument complement”. The reason for the lack of dual metrics in row geometry is attributed to the fact that performance metrics based on the prediction of a classifier (i.e. depending on OP and ON) are not as significant as the ones based on the ground truth (i.e. depending on P and N). The duals of LRP, LRN, and OR column-type measures are also missing due to the same reason. We revealed such findings that were not addressed in the literature after seeing the big picture via the developed table.

The Proper Metric Choice for Performance Reporting and Comparison

The following performance evaluation examples are compiled from different domains in the recent literature to show the practical assistance of PToPI in selecting suitable metrics in performance comparison and reporting. Note that we used those papers to reflect performance instrument choices in practical in various problem domains in the literature. Therefore, they are selected for demonstration purposes. In the examples below, we present different conventions across different domains.

Example 1: F1 is frequently used as a single metric in many domains, especially in information retrieval. Referring to PToPI, we can see that F1 is the harmonic mean of TPR and PPV, which then depends on positive-class-only measures (TP, P, and OP). While using F1 could be acceptable because of the domain requirements focusing on positive performance (i.e. excluding the negative class counterparts, namely TNR and NPV), it would be better to report a supporting metric with F1 to distinguish the negative class performance. The best alternative is TNR or NPV which is shown near TPR and PPV. Briefly, the primary metric (i.e. used as a single figure in a performance comparison and ranking of different classifiers) is F1 and the supporting metric (i.e. additional metrics used in performance reporting to indicate other perspectives) is TNR in this case. A classifier with higher performance in terms of a primary metric could have a lower performance in terms of supporting metrics.

Example 2: Another common approach in performance reporting, as shown in Table 2c, is reporting F1 along with its direct dependencies, namely TPR and PPV (e.g., in predicting hospital admissions from emergency department medical records [75]). Following the same approach above and addressing the negative class performance, F1 can be reported as the primary metric. Furthermore, TNR and one of the TPR and PPV direct dependent metrics can be published as supporting metrics. In the medical example given, PPV can be selected as a supporting metric along with F1 primary metric because PPV values are less than TPR. Thus, the lower PPV performances are also disclosed to the readers.

Example 3: Some domains prioritize false classifications (either or both FPR and FNR). For example, an intrusion detection system focuses on and reports FPR (type I error) and then FNR (type II error) along with TPR and ACC [76]. Because, high false positives can be annoying for end-users, in the example given, reporting TPR, which is the complement of FNR, is redundant. Reporting a metric (INFORM, BACC, and G groups in PToPI) above FPR and FNR is also redundant unless focusing on both error types. As an alternative to reporting ACC, a mixed geometry metric above FPR and FNR level such as CK or MCC can be used as a primary metric besides supporting FPR and FNR metrics (e.g., reporting three metrics: MCC, FPR, FNR instead of ACC, TPR, FPR, FNR).