Abstract

Background: This paper presents a novel lightweight approach based on machine learning methods supporting COVID-19 diagnostics based on X-ray images. The presented schema offers effective and quick diagnosis of COVID-19. Methods: Real data (X-ray images) from hospital patients were used in this study. All labels, namely those that were COVID-19 positive and negative, were confirmed by a PCR test. Feature extraction was performed using a convolutional neural network, and the subsequent classification of samples used Random Forest, XGBoost, LightGBM and CatBoost. Results: The LightGBM model was the most effective in classifying patients on the basis of features extracted from X-ray images, with an accuracy of 1.00, a precision of 1.00, a recall of 1.00 and an F1-score of 1.00. Conclusion: The proposed schema can potentially be used as a support for radiologists to improve the diagnostic process. The presented approach is efficient and fast. Moreover, it is not excessively complex computationally.

Keywords: feature extraction, X-ray images, COVID-19, machine learning, image processing

1. Introduction

COVID-19 is a disease caused by the SARS-CoV-2 virus. It has a wide range of symptoms, most of which affect the respiratory tract. It can lead to serious inflammation of the lungs and, consequently, pneumonia [1]. The COVID-19 pandemic has exposed healthcare problems around the world. The large number of patients to diagnose and the limited number of tests and staff available turned out to be a significant problem. As a result, the number of diagnostic tests performed was very often too low. The diagnostic method that turned out to be the gold standard for the confirmation of SARS-CoV-2 infection was the polymerase chain reaction (PCR) test. However, this method is not error free and sometimes gives false results [2]. Another difficulty comes from the fact that some people, despite being infected with the SARS-CoV-2 virus, do not develop symptoms of the disease [3] and are not referred for PCR testing. This can cause problems with the correct diagnosis of the disease. Despite its latency, the disease can cause serious changes in the lungs. In these cases, the diagnosis is possible on the basis of an X-ray of the lungs. For this reason, machine learning methods have been used to detect COVID-19 infections on X-ray images. Methods based on machine learning (ML) turned out to be effective and useful during the analysis and assessment of the impact of diseases (e.g., COVID-19 or pneumonia) on X-ray images of the lungs [4,5,6]. The major contributions of this paper are as follows:

We propose a novel approach to chest X-ray image analysis in order to diagnose COVID-19 using an original CNN-based feature extraction method.

We obtained a new dataset containing samples from confirmed COVID-19 cases as well as from uninfected patients. The infection status of both groups was confirmed by a PCR test. We performed an augmentation in order to increase the dataset’s size.

We implemented the proposed feature extraction for different classifiers, obtaining promising results.

The remainder of this paper is organized as follows: (a) a brief review of the state of the art is presented in Section 2; (b) in Section 3, we describe the dataset, the augmentation process and the proposed approach to classification; (c) in Section 4, we present the obtained results; (d) in Section 5 (the Discussion), we compare our results with other state-of-the-art approaches, draw some conclusions and present perspectives for future work on this topic.

2. Related Work

To combat the challenges posed by the pandemic to the healthcare service, Khan et al. [7] proposed a deep-learning-based method of accurate and quick diagnosis of COVID-19 using X-ray images. They proposed a method consisting of two novel deep learning frameworks: Deep Hybrid Learning (DHL) and Deep Boosted Hybrid Learning (DBHL). The use of both of these frameworks led to an improvement in their COVID-19 diagnostic methods. The result was a model capable of identifying COVID-19 in X-ray images with over 98% accuracy on a previously unseen dataset. This method has been shown to be effective in reducing both false positives and false negatives and has proven to be a useful supportive tool for radiologists.

Tahir et al. [8] proposed a model based on a convolutional neural network (CNN) capable of lung segmentation and localization of specific changes caused by COVID-19. The dataset used consisted of nearly 34,000 X-ray images, including lung images of people with COVID-19 and pneumonia and of healthy people. An important element of the study was the appropriate annotation of the images by specialists. This method recognized COVID-19 and its effects on the lung image with sensitivity and specificity values over 99%.

In [9], Brunese et al. used transfer learning to create a model capable of detecting COVID-19 changes in X-ray images of the lungs. This model is applicable (1) to the classification of healthy people and patients with changes in lung X-ray images; (2) to distinguish between COVID-19 and other lung diseases; and (3) to distinguish lung lesions caused by the SARS-CoV-2 infection. This model was based on the VGG-16 (16-layered convolutional neural network) and underwent transfer learning. This study included 6523 X-ray images from healthy individuals, patients with various lung diseases and patients with COVID-19. The model trained on this dataset achieved a sensitivity equal to 0.96 and a specificity of 0.98 (accuracy of 0.96) for distinguishing between healthy individuals and patients with lung diseases, and a sensitivity of 0.87 and a specificity equal to 0.94 (accuracy of 0.98) for distinguishing lung diseases from COVID-19. The image analysis process itself is extremely fast and takes only about 2.5 s. The data presented by the authors indicate that the developed model achieves good and reliable results.

Chakraborty et al. [10] presented a COVID-19 detection method based on a Deep Learning Method (DLM) using X-ray images. The authors used different architectures of deep neural networks in order to achieve optimal results. They combined several pre-trained models such as ResNet18, AlexNet, DenseNet, VGG16, etc. This approach proved to be effective and cost significantly less than standard laboratory diagnostic methods. The dataset consisted of 10,040 chest X-ray images, which included a normal/healthy population, COVID-19 patients and patients with pneumonia. The presented model was highly accurate (96.43%) and sensitive (93.68%). This work showed the high usefulness of ML models for determining changes in X-ray images, which can facilitate the work of radiologists who, as a result of this quick method, can refer patients directly to treatment.

Civit-Masot et al. [11] noted that traditional tests to identify SARS-CoV-2 infection are invasive and time consuming. Imaging, on the other hand, is a useful method for assessing disease symptoms. Due to the limited number of trained medical doctors who can reliably assess X-ray images, it is necessary to invent ways to facilitate this type of assessment. The authors used the VGG-16-based Deep Learning model to identify pneumonia and COVID-19. The presented results indicate high accuracy (close to 100%) and specificity of the model, which qualify it as an effective screening test.

It is also worth mentioning that ML-based methods can support not only radiology specialists. In [12], a transfer learning approach was used to discover the impact of the stringency index on the number of deaths caused by the SARS-CoV-2 virus. As presented in [13], ML can also be applied to predict a COVID-19 diagnosis based on symptoms. Statistical analyses revealed that the most frequent and significant predictive symptoms are fevers (41.1%), coughs (30.3%), lung infections (13.1%) and runny noses (8.43%). A total of 54.4% of people examined did not develop any symptoms that could be used for diagnosis. Moreover, ML can also be a useful tool in vaccine discovery, as presented in [14].

Recently, numerous ML-based approaches for rapid diagnostics have been published. In addition, they have attracted increasing attention from government and international agencies. For example, the European Commission published a White Paper entitled ‘On Artificial Intelligence - A European approach to excellence and trust’ [15]. Seven key requirements were identified and are described in the document:

Human agency and oversight;

Technical robustness and safety;

Privacy and data governance;

Transparency;

Diversity, non-discrimination and fairness;

Societal and environmental well-being;

Accountability.

The following statement in the EC publication is worth noting: AI can and should itself critically examine resource usage and energy consumption and should be trained to make choices that are positive for the environment. It follows from this statement that it is extremely important to focus on providing solutions that are not only cost and time effective but also economical in the energy used for computations. This kind of approach to AI has been introduced as ‘GreenAI’ [16] and has attracted increasing attention recently [17]. Researchers working on GreenAI have also proposed some novel metrics [18]. With these metrics, researchers can compare not only the accuracy and precision of a proposed method, but also its sustainability and eco-friendliness.

3. Materials and Methods

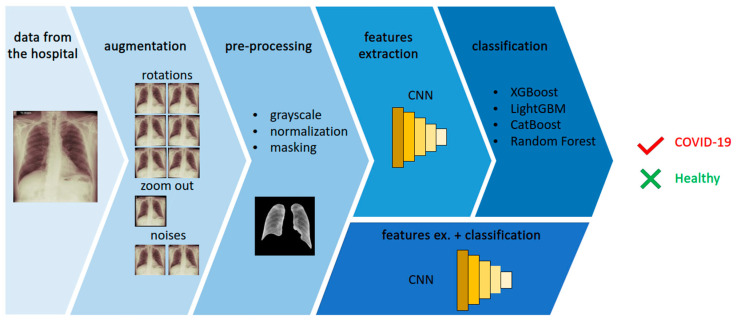

The general overview of the proposed method is presented in Figure 1. The figure presents an example of the data obtained from the hospital and the subsequent steps: the augmentation process and the pre-processing of the sample. It also presents the feature extraction and classification steps. Finally, the proposed method gives the answer of “true” for a COVID-19-positive sample and “false” for a healthy sample. Each step of the process is described in detail in this section. All experiments were carried out with the use of Python 3.7 and the TensorFlow platform. Among others, we used the following libraries: scikit-learn, XGBoost, LightGBM and CatBoost.

Figure 1.

The consecutive processing steps of the proposed method: data acquisition, data augmentation, sample pre-processing, feature extraction and binary classification of COVID-19 as positive or negative (healthy).

3.1. Dataset

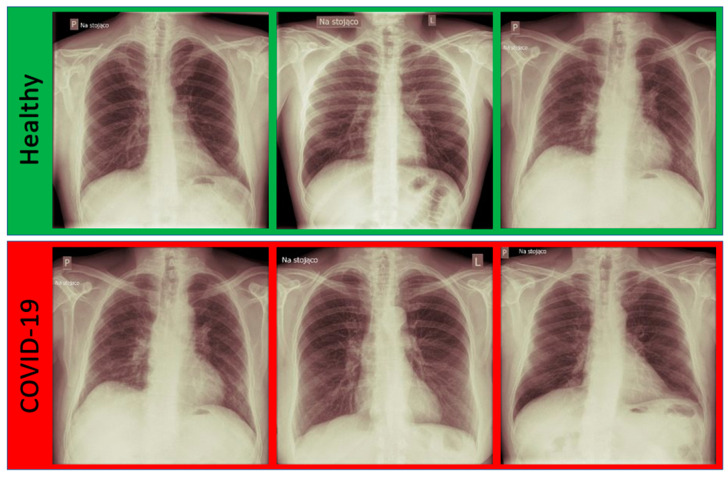

In this research, anonymized real data were used. The data were obtained from Antoni Jurasz University Hospital No. 1 in Bydgoszcz, Department of Radiology and Imaging Diagnostics. A total of 60 chest X-ray images were obtained; 30 were from healthy individuals, and 30 had COVID-19 confirmed by a PCR test. The images were provided in the DICOM format. The images were in a raw form, without masks. Some samples from the dataset are presented in Figure 2.

Figure 2.

The exemplary images from the dataset divided into two classes: Healthy and COVID-19 confirmed by a PCR test.

3.2. Data Augmentation

In order to perform training, the dataset was divided into three disjoint subsets: the training set (80%), validation set (10%) and testing set (10%). Unfortunately, the number of samples was too small to train an ML model reliably. Thus, we decided to use augmentation to increase the size of the training dataset. As a result of the augmentation, 10 samples were obtained from each single image. The initial class balance of the dataset was preserved, so the dataset remained well balanced. The following augmentation methods were implemented:

rotations—1°, 2° and 3° both clockwise and anti-clockwise;

noises—a random Gaussian noise and a salt and pepper noise were added;

zooming out—the image was resized to obtain 95% of its original size.
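The augmentation steps listed above can be sketched as follows. This is a minimal illustration rather than the authors' implementation: it assumes images are 2-D arrays scaled to [0, 1], and the noise level (`sigma=0.02`), the fraction of salt-and-pepper pixels (1%), the interpolation order and the zero-padding of the zoomed-out image back to its original size are all assumed values that the paper does not specify. The function name `augment` is hypothetical.

```python
import numpy as np
from scipy import ndimage


def augment(image, rng, sigma=0.02, sp_fraction=0.01):
    """Return the original image plus nine augmented variants (10 samples)."""
    image = np.asarray(image, dtype=np.float32)
    samples = [image]

    # Rotations by 1, 2 and 3 degrees in both directions (6 variants).
    for angle in (1, 2, 3):
        for sign in (1, -1):
            rotated = ndimage.rotate(image, sign * angle, reshape=False,
                                     order=1, mode="nearest")
            samples.append(rotated)

    # Additive Gaussian noise (sigma is an assumed value).
    noisy = image + rng.normal(0.0, sigma, size=image.shape)
    samples.append(np.clip(noisy, 0.0, 1.0).astype(np.float32))

    # Salt-and-pepper noise: half of the affected pixels go to 0, half to 1.
    salt_pepper = image.copy()
    draw = rng.random(image.shape)
    salt_pepper[draw < sp_fraction / 2] = 0.0
    salt_pepper[draw > 1.0 - sp_fraction / 2] = 1.0
    samples.append(salt_pepper)

    # Zoom out to 95% of the original size, then zero-pad back (assumption).
    small = ndimage.zoom(image, 0.95, order=1)
    pad_h = image.shape[0] - small.shape[0]
    pad_w = image.shape[1] - small.shape[1]
    padded = np.pad(small,
                    ((pad_h // 2, pad_h - pad_h // 2),
                     (pad_w // 2, pad_w - pad_w // 2)),
                    mode="constant")
    samples.append(padded.astype(np.float32))
    return samples
```

Six rotations, two noise variants and one zoomed-out copy, together with the original image, give the 10 samples per image mentioned in the text.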

3.3. Data Pre-Processing

First of all, the samples were converted to grayscale images and normalized. The goal of normalization was to improve the quality of the images, e.g., by enhancing the contrast, as described in [19]. The data obtained from the hospital were not masked. Thus, an essential step of processing was to provide proper masks to help in selecting the region of interest. The goal of this step was to prevent the ML-based model from learning information that is useless from the point of view of COVID-19 diagnostics, such as images of a collar bone or a stomach. Handcrafting masks would be time consuming and would require the involvement of a specialist. On the other hand, proposing a novel method of segmentation can be treated as a separate scientific problem, as presented in [20,21]. Therefore, it was decided to use a pretrained model, which is widely available and very powerful in masking X-ray images [22].
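The pre-processing chain can be illustrated with a short sketch. It is only an approximation under stated assumptions: min-max scaling stands in for the normalization of [19], whose exact form is not given here, and the lung mask is assumed to be already available as a binary array (for example, produced by the pretrained segmentation model [22], whose API is not reproduced). The helper names are hypothetical.

```python
import numpy as np


def to_grayscale(image):
    """Collapse an RGB image (H, W, 3) to one channel; pass 2-D images through."""
    image = np.asarray(image, dtype=np.float32)
    if image.ndim == 3:
        # ITU-R BT.601 luma weights.
        weights = np.array([0.299, 0.587, 0.114], dtype=np.float32)
        image = image[..., :3] @ weights
    return image


def normalize(image):
    """Min-max scaling to [0, 1] (assumed stand-in for the method of [19])."""
    low, high = float(image.min()), float(image.max())
    if high == low:
        return np.zeros_like(image, dtype=np.float32)
    return ((image - low) / (high - low)).astype(np.float32)


def apply_mask(image, mask):
    """Keep pixels inside the binary lung mask and zero out everything else."""
    return image * (np.asarray(mask) > 0)
```

Zeroing the pixels outside the mask is one simple way to keep regions such as the collar bone out of the model's input.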

3.4. ML-Based Methods

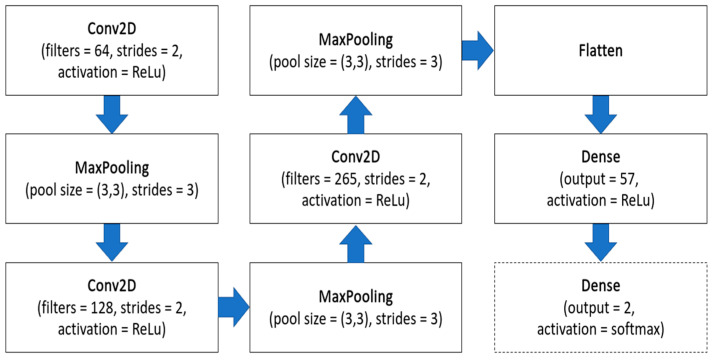

ML-based methods were used in two steps of processing, namely feature extraction and classification. As a baseline, a convolutional neural network (CNN) was used for both steps. Then, almost the same CNN architecture was used solely for extracting features, since this approach was reported as very promising and efficient [23,24].

The feature extraction step can be essential for the whole image processing system. It can reduce the complexity of the problem and, consequently, make the proposed approach more efficient, less time consuming and, therefore, more eco-friendly. The general schema of the CNN is presented in Figure 3. The input to this architecture was a grayscale image with a size of 512 × 512 pixels. Then, three pairs of convolutional and max pooling layers were used. Each convolutional layer performed convolution operations between its filters and its input. The convolutional layers consisted of 64, 128 and 256 filters, respectively. On each layer, a ReLU activation function was used to perform nonlinear transformations. Then, a flatten layer and a dense layer were used. The network was evaluated for different numbers of neurons in the dense layer; values in the range from 5 to 200 were tested. Using the validation subset, it was observed that the most promising configuration used 57 neurons, that is, 57 extracted features. This feature extraction configuration was selected for further research and development. Then, several classifiers were examined: XGBoost [25], Random Forest [26], LightGBM [27] and CatBoost [28]. These classifiers were chosen for two reasons. First, they are tree-based algorithms that perform very well in binary classification problems. Second, they have already been used in similar applications, as presented in [29,30,31], providing promising results.

Figure 3.

The architecture of the CNN used in the research. The dense layer drawn with dashed lines was added only in the purely CNN-based approach.

The approach based solely on the CNN for both feature extraction and classification had one change in the architecture. In this case, an additional dense layer with the softmax activation function (presented in Figure 3 with a dashed line) was added. It enabled the binary classification of samples as COVID-19 positive or negative. This approach was trained, validated and tested using the above-mentioned training, validation and testing datasets, respectively. The training parameters were: 50 epochs, a learning rate equal to 0.00001 and a loss function set to SparseCategoricalCrossentropy.
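The architecture described in this section can be sketched in Keras as shown below. The filter counts, the 57-neuron dense layer, the softmax head, the learning rate and the loss function follow the text; the 3 × 3 kernels, `"same"` padding, 2 × 2 pooling, ReLU on the dense layer and the Adam optimizer are assumptions, since the paper does not state them. The function name `build_cnn` and its parameters are hypothetical.

```python
import tensorflow as tf
from tensorflow.keras import layers, models


def build_cnn(input_size=512, n_features=57, with_classifier=False):
    """Build the CNN feature extractor, optionally with the softmax head."""
    inputs = layers.Input(shape=(input_size, input_size, 1))
    x = inputs
    # Three convolution + max pooling pairs with 64, 128 and 256 filters.
    for filters in (64, 128, 256):
        x = layers.Conv2D(filters, kernel_size=3, padding="same",
                          activation="relu")(x)  # kernel and padding assumed
        x = layers.MaxPooling2D(pool_size=2)(x)  # pool size assumed
    x = layers.Flatten()(x)
    features = layers.Dense(n_features, activation="relu",
                            name="features")(x)
    if not with_classifier:
        return models.Model(inputs, features)

    # Additional dense layer with softmax for the purely CNN-based baseline.
    outputs = layers.Dense(2, activation="softmax")(features)
    model = models.Model(inputs, outputs)
    model.compile(
        optimizer=tf.keras.optimizers.Adam(learning_rate=1e-5),  # optimizer assumed
        loss=tf.keras.losses.SparseCategoricalCrossentropy(),
        metrics=["accuracy"],
    )
    return model
```

In such a setup, the outputs of the `features` layer for each image would form the 57-element vector passed to the tree-based classifiers. With a 512 × 512 input, the flattened tensor is large, so the dense layer dominates the parameter count.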

4. Results

Since the hospital data represent two classes (COVID-19 positive and healthy), the problem of the disease diagnostics can be treated as a binary classification. In this research, the confusion matrices were used in order to evaluate and compare the ML-based methods. Four measures were defined, as follows:

TP—true positives—COVID-19-infected patients classified as sick;

FP—false positives—healthy patient images classified as COVID-19 infected;

FN—false negatives—COVID-19-infected patients classified as healthy;

TN—true negatives—healthy patients classified as healthy.

Each model in the research was evaluated using accuracy (Equation (1)), precision (Equation (2)), recall (Equation (3)) and F1-score (Equation (4)), which use the above-mentioned measures of TP, FP, FN and TN.

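$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \tag{1}$$

$$\text{Precision} = \frac{TP}{TP + FP} \tag{2}$$

$$\text{Recall} = \frac{TP}{TP + FN} \tag{3}$$

$$\text{F1-score} = \frac{2 \cdot \text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}} \tag{4}$$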
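The four measures in Equations (1)–(4) follow directly from the confusion matrix counts. The sketch below uses the standard definitions; the counts in the usage example are hypothetical and are not taken from the study.

```python
def classification_metrics(tp, fp, fn, tn):
    """Compute accuracy, precision, recall and F1-score from TP, FP, FN, TN."""
    total = tp + fp + fn + tn
    accuracy = (tp + tn) / total
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denominator = precision + recall
    f1 = 2 * precision * recall / denominator if denominator else 0.0
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


# Hypothetical counts: 3 true positives, 1 false positive,
# 0 false negatives and 3 true negatives.
metrics = classification_metrics(tp=3, fp=1, fn=0, tn=3)
print({name: round(value, 2) for name, value in metrics.items()})
```

With no false negatives, recall equals 1.0 even though one healthy sample is misclassified, which lowers precision and accuracy instead.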

All experiments were performed on an NVIDIA Tesla GPU system. As a result of their enormous computing power, low price, relatively low demand for electricity and support for the CUDA environment, Tesla systems have become an attractive alternative to traditional high-performance computing systems, such as CPU clusters and supercomputers. This kind of device can be extremely helpful in image processing and also in medical diagnostics.

The results obtained from all the experiments are provided in Table 1. All the evaluated metrics are given: accuracy, precision, recall and F1-score. The approach using the CNN for both feature extraction and classification provided the least promising results. Two of the examined classifiers provided the highest results: XGBoost and LightGBM, with accuracy = 1.0, precision = 1.0, recall = 1.0 and F1-score = 1.0. To select the optimal classifier for the presented solution, the computational time of both classifiers, namely the training time and the prediction time for a single image, was compared. This criterion was chosen because the goal was to provide a light, sustainable and eco-friendly solution. For XGBoost, the average training time was equal to 242 ms, and the prediction time for a single image was above 11 ms. For LightGBM, those times were 132 ms and less than 2 ms, respectively. That is why LightGBM is marked in bold in Table 1.

Table 1.

Obtained results: accuracy, precision, recall and F1-score for all experiments.

| Feature Extractor | Classifier | Accuracy | Precision | Recall | F1-Score |
|---|---|---|---|---|---|
| CNN | CNN | 0.86 | 0.75 | 1.00 | 0.86 |
| CNN | XGBoost | 1.00 | 1.00 | 1.00 | 1.00 |
| CNN | Random Forest | 0.91 | 0.86 | 1.00 | 0.92 |
| **CNN** | **LightGBM** | **1.00** | **1.00** | **1.00** | **1.00** |
| CNN | CatBoost | 0.91 | 0.86 | 1.00 | 0.92 |
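A comparison of training time and single-sample prediction time, as used to choose between the two best classifiers, can be measured with a small helper like the one below. This is a sketch under assumptions: the data are synthetic 57-feature vectors, and scikit-learn's `RandomForestClassifier` is used only as a stand-in. The scikit-learn style wrappers `lightgbm.LGBMClassifier` and `xgboost.XGBClassifier` expose the same `fit`/`predict` interface and could be passed in instead. The helper name `time_classifier` is hypothetical.

```python
import time

import numpy as np
from sklearn.ensemble import RandomForestClassifier


def time_classifier(clf, X_train, y_train, x_single, repeats=5):
    """Return mean training and single-sample prediction times in ms."""
    fit_times, predict_times = [], []
    for _ in range(repeats):
        start = time.perf_counter()
        clf.fit(X_train, y_train)
        fit_times.append(time.perf_counter() - start)

        start = time.perf_counter()
        clf.predict(x_single)
        predict_times.append(time.perf_counter() - start)
    return 1000 * float(np.mean(fit_times)), 1000 * float(np.mean(predict_times))


# Synthetic stand-in for the 57 extracted features (not the study data).
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 57))
y = rng.integers(0, 2, size=200)

fit_ms, predict_ms = time_classifier(
    RandomForestClassifier(n_estimators=50, random_state=0), X, y, X[:1])
print(f"training: {fit_ms:.1f} ms, single prediction: {predict_ms:.2f} ms")
```

Absolute timings depend on the hardware and library versions, so only comparisons made on the same machine are meaningful.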

One could ask ‘what makes LightGBM faster than XGBoost?’. The features of LightGBM that affect its effectiveness, together with the mathematics behind it (Equation (5)), help to answer this question [32,33].

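$$\tilde{V}_j(d) = \frac{1}{n}\left(\frac{\left(\sum_{x_i \in A_l} g_i + \frac{1-a}{b}\sum_{x_i \in B_l} g_i\right)^2}{n_l^j(d)} + \frac{\left(\sum_{x_i \in A_r} g_i + \frac{1-a}{b}\sum_{x_i \in B_r} g_i\right)^2}{n_r^j(d)}\right) \tag{5}$$

where $A_l = \{x_i \in A : x_{ij} \le d\}$, $A_r = \{x_i \in A : x_{ij} > d\}$, $B_l = \{x_i \in B : x_{ij} \le d\}$, $B_r = \{x_i \in B : x_{ij} > d\}$, $d$ is the point in the data where the split is calculated to find the optimal gain in variance and the coefficient $\frac{1-a}{b}$ is used to normalize the sum of the gradients over $B$ back to the size of $A^c$.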

LightGBM grows trees with a leaf-wise technique: at each step, it finds the leaf with the greatest variance gain and splits it. In this way, LightGBM achieves the optimal number of leaves in the trees and uses the minimum amount of data in the tree.
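The variance gain in Equation (5) can be illustrated numerically. The sketch below assumes that Equation (5) is the gradient-based one-side sampling (GOSS) estimate from the LightGBM paper [27]: set A holds the a × 100% of instances with the largest absolute gradients, set B is a random sample of b × 100% of all instances drawn from the remainder, and the counts of instances on each side of the split are taken over all instances at the node. The function names and the synthetic data are hypothetical.

```python
import numpy as np


def goss_sample(gradients, a, b, rng):
    """Select set A (largest |gradient|) and set B (random sample of the rest)."""
    n = len(gradients)
    order = np.argsort(-np.abs(gradients))
    top_n = int(a * n)
    rand_n = int(b * n)
    A = order[:top_n]
    B = rng.choice(order[top_n:], size=rand_n, replace=False)
    return A, B


def estimated_variance_gain(x_j, gradients, A, B, d, a, b):
    """Estimate the variance gain of splitting feature values x_j at point d."""
    n = len(gradients)
    scale = (1.0 - a) / b  # rescales the sampled gradients of B
    n_left = int(np.sum(x_j <= d))
    n_right = n - n_left
    if n_left == 0 or n_right == 0:
        return 0.0
    left = (gradients[A][x_j[A] <= d].sum()
            + scale * gradients[B][x_j[B] <= d].sum())
    right = (gradients[A][x_j[A] > d].sum()
             + scale * gradients[B][x_j[B] > d].sum())
    return (left ** 2 / n_left + right ** 2 / n_right) / n


# Synthetic feature values and gradients (not the study data).
rng = np.random.default_rng(0)
x_j = rng.normal(size=100)
grads = rng.normal(size=100)
A, B = goss_sample(grads, a=0.2, b=0.1, rng=rng)
gain = estimated_variance_gain(x_j, grads, A, B, d=0.0, a=0.2, b=0.1)
print(f"estimated gain at d=0.0 using {len(A) + len(B)} of 100 instances: {gain:.4f}")
```

Because only the instances in A and B contribute to the sums, each candidate split is evaluated on a fraction of the data, which is one source of the speed advantage discussed here.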

5. Discussion

ML methods can be a valuable tool for COVID-19 diagnosis. ML-based methods cannot replace an experienced medical doctor in the final diagnosis, but they help significantly in the process, relieving the burden on health care and improving the diagnostic process. Screening with X-ray images is less expensive and faster than PCR testing [34]. This is one of the reasons why it is worth developing ML-based techniques to assist specialists in diagnostics.

In [35], the authors paid particular attention to explainable AI (xAI), as it is essential in clinical applications. Explainable approaches increase the confidence and trust of the medical community in AI-based methods. They noted that X-ray imaging was not a method of choice when diagnosing COVID-19. However, the changes visible in the X-ray images of the lungs allow for the detection of pathological changes at an early stage of their development. For this reason, the authors indicated the usefulness of models supporting radiologists in their work and improving the decision-making process. The dataset used by the authors contained nearly 900 X-ray images of both COVID-19 patients and healthy patients, which was a significantly bigger dataset than that presented in this paper. Therefore, their model could learn a wider range of differences between the images. In that work, the authors used pre-trained networks (ResNet-18 and DenseNet-121) to perform image classification, with the best AUC score of 0.81. The sensitivity and specificity results obtained by the authors were significantly lower than ours, however, which may be caused by the differences in dataset size. The model proposed in this research is fast and efficient and does not require high computing power; thus, it can be used on ordinary computers in hospital laboratories. The presented model obtained satisfactory results in terms of the evaluation metrics, which confirm its accuracy. These results are comparable to, and potentially better than, those reported in the state-of-the-art review (Section 2). Some detailed results provided in the literature are presented in Table 2. However, we remain concerned about the small number of original images that formed the basis of the dataset used. We believe that the method of data augmentation used may introduce bias; however, with such a small amount of data, this step was necessary. We believe that this is an aspect that could be improved in the course of further cooperation with hospitals that would provide more training data.

Table 2.

Results compared to other state-of-the-art methods, namely accuracy, precision, recall, F1-score and AUC. The results not provided by the authors are marked with ‘-’.

| Authors | Method | Acc. | Prec. | Rec. | F1 | AUC |
|---|---|---|---|---|---|---|
| Rajagopal [23] | CNN + SVM | 0.95 | 0.95 | 0.95 | 0.96 | - |
| Júnior et al. [30] | VGG19 + XGBoost | 0.99 | 0.99 | 0.99 | 0.99 | - |
| Nasiri et al. [29] | DenseNet169 + XGBoost | 0.98 | 0.98 | 0.92 | 0.97 | - |
| Ezzoddin et al. [36] | DenseNet169 + LightGBM | 0.99 | 0.99 | 1.00 | 0.99 | - |
| Laeli et al. [24] | CNN + RF | 0.99 | - | - | - | 0.99 |
| Proposed | CNN + LightGBM | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 |

Table 2 presents numerous approaches providing comparable results. It is essential to mention that some of them are based on very complex architectures, use an extremely large number of training parameters, need excessive computational power and require long training. In contrast, we decided to use fewer than 60 features and a light, fast classifier in our approach. Thus, the approach proposed in this paper is lightweight, efficient and fast. In the literature, we can observe very complex and resource-consuming approaches (such as COVID-Net, as proposed in [37], or ResNet, as implemented in [38]). It is worth emphasizing that the proposed solution is lighter but still equally or more efficient. Unfortunately, it is very difficult to compare the eco-friendliness of different computing-based diagnostic approaches, as it is not customary to provide such information in scientific publications. Hopefully, in the near future, the sustainability of the proposed methods will become more significant for researchers and editors.

We are also aware that the presented model requires some future improvements. It is necessary to validate the model on a larger, different dataset. X-ray images made with the use of various equipment exhibit different features, which may be an obstacle to the universality of the presented model. It is worth checking how the model performs on a new set of data from the same hospital and, alternatively, on a set from a different source. Additional pre-processing is likely to help make all samples uniform. Another potential extension of this work is providing xAI. Its main aim is not only to give the classification but also to explain why such a decision was made by an ML-based method. Implementing xAI can allow radiologists to evaluate the model and verify whether it makes its decisions based on real COVID-19 lesions.

Author Contributions

Conceptualization, A.G., M.T. and A.M.; methodology, A.G.; software, M.T.; validation, A.M., Z.S. and S.M.K.; formal analysis, M.W.; investigation, A.M. and S.M.K.; resources, Z.S. and A.H.; data curation, M.W. and A.H.; writing—original draft preparation, S.M.K.; writing—review and editing, A.G.; visualization, M.T.; supervision, M.W.; project administration, A.G.; funding acquisition, A.G and A.M. All authors have read and agreed to the published version of the manuscript.

Institutional Review Board Statement

The project was approved by the Ethics Committee of the Nicolaus Copernicus University in Toruń Collegium Medicum in Bydgoszcz (decision no. KB 454/2022).

Informed Consent Statement

Not applicable.

Data Availability Statement

The data used in the research in a raw, anonymized form are available at https://github.com/UTP-WTIiE/Xray_data.git (accessed on 2 August 2022).

Conflicts of Interest

The authors declare no conflict of interest.

Funding Statement

This research was funded by Bydgoszcz University of Science and Technology, grant number DNM 32/2022. Part of this research was supported by the National Centre for Research and Development of Poland (Narodowe Centrum Badań i Rozwoju, NCBR) with project number POWR.03.02.00-00-I019/16-00.

Footnotes

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1. Zheng J. SARS-CoV-2: An Emerging Coronavirus that Causes a Global Threat. Int. J. Biol. Sci. 2020;16:1678–1685. doi: 10.7150/ijbs.45053.

- 2. Tahamtan A., Ardebili A. Real-time RT-PCR in COVID-19 detection: Issues affecting the results. Expert Rev. Mol. Diagn. 2020;20:453–454. doi: 10.1080/14737159.2020.1757437.

- 3. Pang L., Liu S., Zhang X., Tian T., Zhao Z. Transmission Dynamics and Control Strategies of COVID-19 in Wuhan, China. J. Biol. Syst. 2020;28:543–560. doi: 10.1142/S0218339020500096.

- 4. Momeny M., Neshat A.A., Hussain M.A., Kia S., Marhamati M., Jahanbakhshi A., Hamarneh G. Learning-to-augment strategy using noisy and denoised data: Improving generalizability of deep CNN for the detection of COVID-19 in X-ray images. Comput. Biol. Med. 2021;136:104704. doi: 10.1016/j.compbiomed.2021.104704.

- 5. Kassani S.H., Kassani P.H., Wesolowski M.J., Schneider K.A., Deters R. Automatic Detection of Coronavirus Disease (COVID-19) in X-ray and CT Images: A Machine Learning Based Approach. Biocybern. Biomed. Eng. 2021;41:867–879. doi: 10.1016/j.bbe.2021.05.013.

- 6. Jain G., Mittal D., Thakur D., Mittal M.K. A deep learning approach to detect Covid-19 coronavirus with X-ray images. Biocybern. Biomed. Eng. 2020;40:1391–1405. doi: 10.1016/j.bbe.2020.08.008.

- 7. Khan S.H., Sohail A., Khan A., Hassan M., Lee Y.S., Alam J., Basit A., Zubair S. COVID-19 detection in chest X-ray images using deep boosted hybrid learning. Comput. Biol. Med. 2021;137:104816. doi: 10.1016/j.compbiomed.2021.104816.

- 8. Tahir A.M., Chowdhury M.E., Khandakar A., Rahman T., Qiblawey Y., Khurshid U., Kiranyaz S., Ibtehaz N., Rahman M.S., Al-Maadeed S., et al. COVID-19 infection localization and severity grading from chest X-ray images. Comput. Biol. Med. 2021;139:105002. doi: 10.1016/j.compbiomed.2021.105002.

- 9. Brunese L., Mercaldo F., Reginelli A., Santone A. Explainable Deep Learning for Pulmonary Disease and Coronavirus COVID-19 Detection from X-rays. Comput. Methods Programs Biomed. 2020;196:105608. doi: 10.1016/j.cmpb.2020.105608.

- 10. Chakraborty S., Murali B., Mitra A.K. An Efficient Deep Learning Model to Detect COVID-19 Using Chest X-ray Images. Int. J. Environ. Res. Public Health. 2022;19:2013. doi: 10.3390/ijerph19042013.

- 11. Civit-Masot J., Luna-Perejón F., Domínguez Morales M., Civit A. Deep Learning System for COVID-19 Diagnosis Aid Using X-ray Pulmonary Images. Appl. Sci. 2020;10:4640. doi: 10.3390/app10134640.

- 12. Ayesha S., Yu Z., Nutini A. COVID-19 Variants and Transfer Learning for the Emerging Stringency Indices. Neural Process. Lett. 2022:1–10. doi: 10.1007/s11063-022-10834-5.

- 13. Ahamad M.M., Aktar S., Rashed-Al-Mahfuz M., Uddin S., Liò P., Xu H., Summers M.A., Quinn J.M.W., Moni M.A. A machine learning model to identify early stage symptoms of SARS-Cov-2 infected patients. Expert Syst. Appl. 2020;160:113661. doi: 10.1016/j.eswa.2020.113661.

- 14. Kannan S., Subbaram K., Ali S., Kannan H. The role of artificial intelligence and machine learning techniques: Race for covid-19 vaccine. Arch. Clin. Infect. Dis. 2020;15:e103232. doi: 10.5812/archcid.103232.

- 15. EC. On Artificial Intelligence - A European Approach to Excellence and Trust. [(accessed on 2 July 2022)]. Available online: https://ec.europa.eu/info/sites/default/files/commission-white-paper-artificial-intelligence-feb2020_en.pdf/

- 16. Schwartz R., Dodge J., Smith N.A., Etzioni O. Green AI. Commun. ACM. 2020;63:54–63. doi: 10.1145/3381831.

- 17. Yigitcanlar T. Greening the artificial intelligence for a sustainable planet: An editorial commentary. Sustainability. 2021;13:13508. doi: 10.3390/su132413508.

- 18. Lenherr N., Pawlitzek R., Michel B. New universal sustainability metrics to assess edge intelligence. Sustain. Comput. Inform. Syst. 2021;31:100580. doi: 10.1016/j.suscom.2021.100580.

- 19. Giełczyk A., Marciniak A., Tarczewska M., Lutowski Z. Pre-processing methods in chest X-ray image classification. PLoS ONE. 2022;17:e0265949. doi: 10.1371/journal.pone.0265949.

- 20. Hasan M.J., Alom M.S., Ali M.S. Deep learning based detection and segmentation of COVID-19 & pneumonia on chest X-ray image; Proceedings of the 2021 International Conference on Information and Communication Technology for Sustainable Development (ICICT4SD); Dhaka, Bangladesh. 27–28 February 2021; Piscataway, NJ, USA: IEEE; 2021. pp. 210–214.

- 21. Munusamy H., Muthukumar K.J., Gnanaprakasam S., Shanmugakani T.R., Sekar A. FractalCovNet architecture for COVID-19 chest X-ray image classification and CT-scan image segmentation. Biocybern. Biomed. Eng. 2021;41:1025–1038. doi: 10.1016/j.bbe.2021.06.011.

- 22. Alimbekov R., Vassilenko I., Turlassov A. Lung Segmentation Library. [(accessed on 15 May 2022)]. Available online: https://github.com/alimbekovKZ/lungs_segmentation/

- 23. Rajagopal R. Comparative analysis of COVID-19 X-ray images classification using convolutional neural network, transfer learning, and machine learning classifiers using deep features. Pattern Recognit. Image Anal. 2021;31:313–322. doi: 10.1134/S1054661821020140.

- 24. Laeli A.R., Rustam Z., Pandelaki J. Tuberculosis Detection based on Chest X-rays using Ensemble Method with CNN Feature Extraction; Proceedings of the 2021 International Conference on Decision Aid Sciences and Application (DASA); Sakheer, Bahrain. 7–8 December 2021; Piscataway, NJ, USA: IEEE; 2021. pp. 682–686.

- 25. Chen T., Guestrin C. Xgboost: A scalable tree boosting system; Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining; San Francisco, CA, USA. 13–17 August 2016; New York, NY, USA: Association for Computing Machinery; 2016. pp. 785–794.

- 26. Breiman L. Bagging predictors. Mach. Learn. 1996;24:123–140. doi: 10.1007/BF00058655.

- 27. Ke G., Meng Q., Finley T., Wang T., Chen W., Ma W., Ye Q., Liu T. Lightgbm: A highly efficient gradient boosting decision tree; Proceedings of the Advances in Neural Information Processing Systems; Long Beach, CA, USA. 4–9 December 2017.

- 28. Prokhorenkova L., Gusev G., Vorobev A., Dorogush A.V., Gulin A. CatBoost: Unbiased boosting with categorical features; Proceedings of the Advances in Neural Information Processing Systems; Montreal, QC, Canada. 3–8 December 2018.

- 29. Nasiri H., Hasani S. Automated detection of COVID-19 cases from chest X-ray images using deep neural network and XGBoost. Radiography. 2022;28:732–738. doi: 10.1016/j.radi.2022.03.011.

- 30. Júnior D.A.D., da Cruz L.B., Diniz J.O.B., da Silva G.L.F., Junior G.B., Silva A.C., de Paiva A.C., Nunes R.A., Gattass M. Automatic method for classifying COVID-19 patients based on chest X-ray images, using deep features and PSO-optimized XGBoost. Expert Syst. Appl. 2021;183:115452. doi: 10.1016/j.eswa.2021.115452.

- 31. Jawahar M., Prassanna J., Ravi V., Anbarasi L.J., Jasmine S.G., Manikandan R., Sekaran R., Kannan S. Computer-aided diagnosis of COVID-19 from chest X-ray images using histogram-oriented gradient features and Random Forest classifier. Multimed. Tools Appl. 2022:1–18. doi: 10.1007/s11042-022-13183-6.

- 32. Machado M.R., Karray S., Sousa I.T.D. LightGBM: An effective decision tree gradient boosting method to predict customer loyalty in the finance industry; Proceedings of the 14th International Conference Science and Education; Toronto, ON, Canada. 19–21 September 2019.

- 33. Misshuari I.W., Herdiana R., Farikhin A., Saputra J. Factors that Affect Customer Credit Payments During COVID-19 Pandemic: An Application of Light Gradient Boosting Machine (LightGBM) and Classification and Regression Tree (CART); Proceedings of the 11th Annual International Conference on Industrial Engineering and Operations Management; Singapore. 7–11 March 2021.

- 34. Nikolaou V., Massaro S., Fakhimi M., Stergioulas L., Garn W. COVID-19 diagnosis from chest x-rays: Developing a simple, fast, and accurate neural network. Health Inf. Sci. Syst. 2021;9:1–11. doi: 10.1007/s13755-021-00166-4.

- 35. Barbano C.A., Tartaglione E., Berzovini C., Calandri M., Grangetto M. A two-step explainable approach for COVID-19 computer-aided diagnosis from chest x-ray images. arXiv. 2021. arXiv:2101.10223.

- 36. Ezzoddin M., Nasiri H., Dorrigiv M. Diagnosis of COVID-19 Cases from Chest X-ray Images Using Deep Neural Network and LightGBM; Proceedings of the 2022 International Conference on Machine Vision and Image Processing (MVIP); Ahvaz, Iran. 23–24 February 2022; Piscataway, NJ, USA: IEEE; 2022. pp. 1–7.

- 37. Wang L., Lin Z.Q., Wong A. Covid-net: A tailored deep convolutional neural network design for detection of covid-19 cases from chest x-ray images. Sci. Rep. 2020;10:19549. doi: 10.1038/s41598-020-76550-z.

- 38. Rajpal S., Lakhyani N., Singh A.K., Kohli R., Kumar N. Using handpicked features in conjunction with ResNet-50 for improved detection of COVID-19 from chest X-ray images. Chaos Solitons Fractals. 2021;145:110749. doi: 10.1016/j.chaos.2021.110749.
