Abstract

Objective

To examine the data quality and usability of visual acuity (VA) data extracted from an electronic health record (EHR) system during ophthalmology encounters and provide recommendations for consideration of relevant VA end points in retrospective analyses.

Design

Retrospective, EHR data analysis.

Participants

All patients with eyecare office encounters at any 1 of the 9 locations of a large academic medical center between August 1, 2013, and December 31, 2015.

Methods

Data from 13 of the 21 VA fields (accounting for 93% of VA data) in EHR encounters were extracted, categorized, recoded, and assessed for conformance and plausibility using an internal data dictionary, a 38-item listing of VA line measurements and observations including 28 line measurements (e.g., 20/30, 20/400) and 10 observations (e.g., no light perception). Entries were classified into usable and unusable data. Usable data were further categorized based on conformance to the internal data dictionary: (1) exact match; (2) conditional conformance, letter count (e.g., 20/30+2-3); (3) convertible conformance (e.g., 5/200 to 20/800); (4) plausible but cannot be conformed (e.g., 5/400). Data were deemed unusable when they were not plausible.

Main Outcome Measures

Proportions of usable and unusable VA entries at the overall and subspecialty levels.

Results

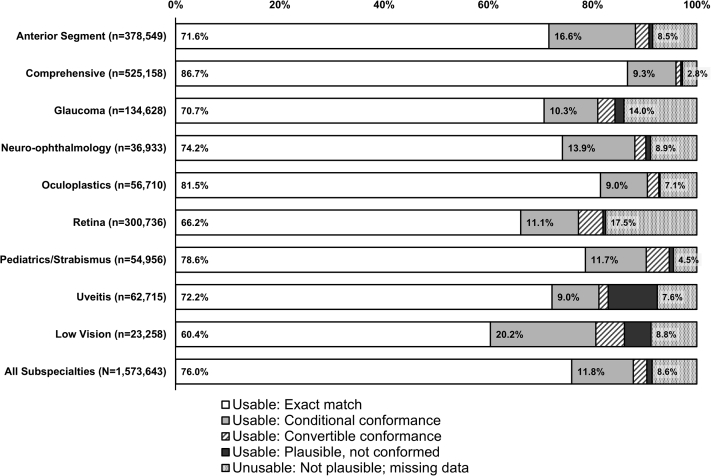

All VA data from 513 036 encounters representing 166 212 patients were included. Of the 1 573 643 VA entries, 1 438 661 (91.4%) contained usable data. There were 1 196 720 (76.0%) exact match (category 1), 185 692 (11.8%) conditional conformance (category 2), 40 270 (2.6%) convertible conformance (category 3), and 15 979 (1.0%) plausible but not conformed entries (category 4). Visual acuity entries during visits with providers from retina (17.5%), glaucoma (14.0%), neuro-ophthalmology (8.9%), and low vision (8.8%) had the highest rates of unusable data. Documented VA entries with providers from comprehensive eyecare (86.7%), oculoplastics (81.5%), and pediatrics/strabismus (78.6%) yielded the highest proportions of exact match with the data dictionary.

Conclusions

Electronic health record VA data quality and usability vary across documented VA measures, observations, and eyecare subspecialty. We proposed a checklist of considerations and recommendations for planning, extracting, analyzing, and reporting retrospective study outcomes using EHR VA data. These are important first steps to standardize analyses enabling comparative research.

Keywords: Data quality, Electronic health record, Retrospective analysis, Usability, Visual acuity

Abbreviations and Acronyms: CSM, central, steady, maintained; CSUM, central, steady, unmaintained; CUSM, central, unsteady, maintained; EHR, electronic health record; VA, visual acuity

The prevalence of electronic health records (EHRs) has increased interest in pooling data sets across practices and institutions. These efforts involve extracting and analyzing available data elements to answer questions, including disease prevalence, treatment effects, and clinical practice patterns. Visual acuity (VA) is a vital sign in eyecare and likely the most measured and recorded data element in clinical encounters. Visual acuity routinely serves as a surrogate for visual ability/disability when evaluating the effects of medical and surgical interventions, and as an end point, may define success or failure in clinical studies and trials.1 With the availability of VA data at nearly every visit, and its importance to overall visual function, VA is perhaps a best-case scenario to examine issues related to EHR data quality in eyecare. An extensive body of work on obtaining VA measurements is well established and relies on technical standards. However, inconsistent application of or failure to apply these guidelines in clinical practice challenges data harmonization practices and usability of recorded data.2, 3, 4 Currently, there are no established best practices for coding VA before combining data sets or for the analytic techniques that should be employed. In contrast to data derived from prospective research, where strict adherence to VA standards exists for measuring and recording findings (except for VA estimates worse than 20/800), retrospective EHR VA data analysis can present serious challenges.

Two studies have reported on EHR-derived VA data analysis using algorithms for harmonization of data.5,6 The aim of both studies was to extract the best documented VA for data analysis from a given clinical encounter. Both studies noted problems where VA exists as free text (instead of a structured data element) lacking formatting constraints and encouraging errors. To tackle the problem, Mbagwu et al5 extracted VA from an EHR by creating keyword searches for text strings that were manually mapped to 18 predefined VA categories (e.g., 20/10, 20/30, counting fingers). They observed 5668 unique VA entries from 8 VA fields in a sample of 295 218 clinic encounters—far exceeding the typical 30+ plausible unique entries one might expect using VA line measurement and observation standards. In contrast, Baughman et al6 developed an algorithm applying natural language processing to inpatient ophthalmology consultation notes. Regardless of the approach, both groups reported “success” comparable with manually extracted data and noted limitations, including data analysis from a single center and inability to characterize VA by method of measurement (e.g., Snellen, ETDRS, HOTV).

Judging data quality remains difficult7 and is often inadequately reported.8, 9, 10, 11, 12 A 2016 systematic review of big data analytics in health care revealed an information gap: none of the EHR studies evaluating data quality discussed quantitative results.13 One framework describing data quality suggests 3 data quality categories be considered when curating, coding, and harmonizing data.14 These categories include conformance (data complying with an internal or external standard, e.g., data dictionary), completeness (absence of data), and plausibility (believability of data). Currently, in eyecare, there is no external standard or definitive data dictionary regarding the conformance of VA data. Completeness (specifically, missingness and omission) has implications for data bias and thus may limit the utility of analyses. Across medical specialties, missing data rates varied from 20% to 80%.15 In eyecare research, the Intelligent Research in Sight registry reported 16% missing VA values from EHR data collected nationally.16 These data included uncorrected and corrected VA in the right eye, left eye, and both eyes. However, details on other VA elements in the EHR (e.g., manifest refraction VA), data conformance, plausibility, methods for harmonization of data from different platforms, or differences by subspecialty have not been described further in relevant publications.17,18 To assist in developing guidelines on the minimum level of acceptable data quality (i.e., conformance, completeness, plausibility),14 an understanding of current variations (type and magnitude) in EHR VA data is needed; with few exceptions, this variation remains largely unknown.13,19, 20, 21 As there is a high adoption rate of EHRs in eyecare22 and the field relies on other structured data elements, eyecare is well-positioned to examine and improve data quality.

In addition to data quality standards, defining VA end points to reflect meaningful clinical outcomes remains a priority for big data analyses and machine learning.8,23 Visual acuity end points in prospective research are commonly reported as the number of letters read, lines read, or impairment category in cross-sectional investigations, whereas changes in VA are typically reported in clinical trials or longitudinal studies.1,24,25 Alternatively, differences in mean changes in VA between groups have been proposed.1 For retrospective EHR analyses, standards have yet to be established.

Our aim is to examine existing VA documentation practices, data quality focusing on conformance and plausibility,14 and thus the usability of EHR VA data for individuals and institutions planning on analyzing “readily available” data. In this work, usability is defined as VA data that meet conformance criteria or characteristics consistent with plausibility. Understanding these practices highlights opportunities for data quality improvement initiatives and may provide guidance when including VA data in analyses and determining study end points.

Methods

This study was approved by the Johns Hopkins University School of Medicine Institutional Review Board. All research adhered to the tenets of the Declaration of Helsinki. Informed consent was waived for the study.

Data Source and Variables

Data from patients having ≥ 1 office visit encounter at any of the 9 locations (1 urban hospital-based clinic and 8 suburban clinics) and seen by any of the 156 eyecare providers of the Johns Hopkins Wilmer Eye Institute between August 1, 2013, and December 31, 2015, were obtained from a single EHR system (EpicCare Ambulatory, Epic Systems). Medical record number, encounter date, visit provider, and VA data were included. Visual acuity entries were extracted from 13 of the 21 available data fields (Table 1) for respective right and left eyes (uncorrected, corrected, pinhole uncorrected, pinhole corrected, and manifest refraction) and both eyes (corrected, uncorrected, and manifest refraction). Our EHR system allows VA to be recorded multiple times for each of these fields by selecting “Add” where allowable. All entries were extracted for analysis from the 13 fields. Note that the 8 available fields not included in the analysis (i.e., cycloplegic refraction, contact lens, contact lens overrefraction, and final refractive prescription in the right and left eyes) contributed 7% of all available VA entries.

Table 1.

Available Fields to Document Distance VA in Our Electronic Health Record Platform

| VA Entry Fields | Right Eye | Left Eye | Both Eyes |
|---|---|---|---|
| Uncorrected | A, I | A, I | A, I |
| Corrected | A, I | A, I | A, I |
| Pinhole uncorrected | A, I | A, I | |
| Pinhole corrected | A, I | A, I | |
| Manifest refraction | A, I | A, I | A, I |
| Cycloplegic refraction | A | A | |
| Contact lens | A | A | |
| Contact lens overrefraction | A | A | |
| Final refractive prescription | A | A | |

A = available; I = included in the current analysis; VA = visual acuity.

Visual acuity assessment and data entry in this health system is typical for eyecare practices and mostly performed by ophthalmic technicians. Visual acuity is recorded by typing free text or by selecting 1 of the 24 predefined menu options (Fig 1). It is not possible to retrospectively discern which method of entry was used. Some technicians work solely within 1 subspecialty clinic, whereas others float between subspecialties. Each eyecare provider was assigned to 1 of the 9 subspecialties: anterior segment, comprehensive, glaucoma, neuro-ophthalmology, oculoplastics, retina, pediatrics/strabismus, uveitis, and low vision. Encounters only associated with provider types of “resident,” “technician,” and “research staff,” but no other subspecialty providers were excluded from the analysis as these providers can deliver care in > 1 subspecialty division.

Figure 1.

Internal data dictionary of visual acuity (VA) line measurements and observations. A, Internal data dictionary VA measurements. B, Internal data dictionary VA observations. Proportions of usable VA entries belonging to categories exact match, conditional conformance, and convertible conformance pertaining to the 38 VA line measurements and observations for the data dictionary. ∗VA line measurements and observations predefined in the drop-down menu in the institutional electronic health record system. Specifically, “CF at 3’” instead of “CF” was the predefined menu item. CF = counting fingers; CSM = central, steady, maintained; CSUM = central, steady, unmaintained; CUSM = central, unsteady, maintained; HM = hand motion; LP = light perception; NLP = no light perception; UCUSUM = uncentral, unsteady, unmaintained.

Data Quality Assessment

The researchers (J.G., X.G., M.B.) discussed and agreed on a comprehensive listing of VA measurements and observations (e.g., 20/30, 20/400, no light perception) that included 28 VA line measurements and 10 observations (Fig 1). This data dictionary served as the internal study standard for data conformance assessment. The researchers also developed outcome categories and the coding rules for VA value conformance and plausibility. Using an automated coding algorithm, the VA entry was first compared with the data dictionary to determine whether it was an exact match. If not, the VA entry was examined for letter count documentation where the algorithm identified “+” and “−” and removed letter count information and any trailing alpha-descriptors. In cases where the VA entry did not match the data dictionary after letter count removal, the entry was manually reviewed to determine whether reasonable conversion to 1 of the 38 data dictionary items could be achieved. Finally, if not convertible, the entry was manually reviewed to determine plausibility (Fig S1, available at www.ophthalmologyscience.org).
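The automated part of this cascade (exact match first, then letter count removal, then hand-off to manual review) can be illustrated with a minimal Python sketch. This is not the authors' algorithm: the abbreviated `DATA_DICTIONARY`, the `LETTER_COUNT` pattern, and the function name `classify_entry` are all hypothetical stand-ins chosen for illustration.

```python
import re
from typing import Optional, Tuple

# Hypothetical, abbreviated stand-in for the 38-item internal data dictionary.
DATA_DICTIONARY = {"20/20", "20/30", "20/50", "20/100", "20/400", "CF", "HM", "LP", "NLP"}

# Assumed letter count pattern: "+" or "-" (ASCII hyphen or Unicode minus) then digits.
LETTER_COUNT = re.compile(r"\s*[+\-\u2212]\s*\d+")


def classify_entry(raw: str) -> Tuple[str, Optional[str]]:
    """Return (category, conformed value) for a single VA entry."""
    entry = raw.strip()
    # Step 1: exact match against the data dictionary.
    if entry in DATA_DICTIONARY:
        return ("exact match", entry)
    # Step 2: remove the letter count and everything after it
    # (which also drops trailing alpha-descriptors), then retry.
    match = LETTER_COUNT.search(entry)
    if match:
        stem = entry[: match.start()].strip()
        if stem in DATA_DICTIONARY:
            return ("conditional conformance", stem)
    # Step 3: anything else is left for manual review (conversion or plausibility).
    return ("manual review", None)
```

Under these assumptions, `classify_entry("20/100-1 moving head around")` yields `("conditional conformance", "20/100")`, whereas `"5/200"` and `"+3.50"` fall through to manual review.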

Visual acuity entries were classified as usable and unusable (Table 2), where usable was further categorized based on conformance and plausibility assessment outcome: (1) exact match; (2) conditional conformance, letter count (“20/50 −2+3”); (3) convertible conformance; for example, testing recorded at distances other than 4 m or 20 feet (“5/200 letters missing” was converted to “20/800,” “Tumbling E@2M” was converted to “20/400”), documentation errors (“20/12” was converted to “20/12.5”), variations in language descriptors or observations (“prosthetic” was converted to “no light perception”); and (4) plausible but not conformed. Visual acuity entries were placed in category 3 when there was high confidence of the recorder’s intent, and the values could reasonably be converted to a VA data value that conformed to the data dictionary. Visual acuity entries that could not be aligned with the data dictionary but were plausible were reported in category 4 (e.g., 5/400, 1/125). Category 4 entries had the potential to be usable in categorical analyses; however, a more expansive data dictionary would be needed. Visual acuity entries that were not plausible after manual review were considered unusable and interpreted as missing data (e.g., “1920/20,” “20/02-2,” “can’t see,” “+3.50”).
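The test-distance conversion in category 3 amounts to rescaling a Snellen fraction so that its numerator is 20 while the ratio stays fixed. The following Python sketch illustrates that arithmetic only; the function name `to_20ft_equivalent` is hypothetical, and in the study such conversions were made by manual review rather than by this code.

```python
from fractions import Fraction


def to_20ft_equivalent(numerator: int, denominator: int) -> str:
    """Rescale a Snellen fraction to a 20-foot numerator, preserving the ratio."""
    if numerator <= 0 or denominator <= 0:
        raise ValueError("Snellen fraction terms must be positive")
    scaled = Fraction(20 * denominator, numerator)
    # Print whole numbers without a decimal point; keep decimals such as 12.5.
    text = str(scaled.numerator) if scaled.denominator == 1 else f"{float(scaled):g}"
    return f"20/{text}"


print(to_20ft_equivalent(5, 200))  # 20/800
print(to_20ft_equivalent(5, 400))  # 20/1600
```

The first result reproduces the “5/200” to “20/800” conversion described above. The second shows why an entry such as 5/400 can be plausible yet not conformable: its 20-foot equivalent lies beyond a dictionary whose line measurements end at 20/800.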

Table 2.

Categories of Electronic Health Record VA Entries for Data Analysis

| Category | | Description | Examples | Interpretation Outcome |
|---|---|---|---|---|
| Usable | 1. Exact match | Conformance to the data dictionary∗ | 20/20, 20/32, 20/500, CF | Use as is |
| | 2. Conditional conformance | Entry references VA lines partially read, reflecting the inclusion of letter count with or without subsequent alpha-descriptors; can be conformed to the data dictionary∗ | • 20/50−2+3 → 20/50 • 20/100−1 moving head around → 20/100 | For categories 2 and 3: • Extract numeric VA measures or standard observations only • Disregard counting letter or narrative information • Conform VA line measures and observations to allowed values defined by the data dictionary∗ |
| | 3. Convertible conformance | Entry can be conformed to the data dictionary∗ after conversion: • Includes alpha-descriptors or modifiers • Recorded at test distances inconsistent with the data dictionary • Reflects typographical documentation errors, variations in language descriptors or observations | • 20/20 blurry → 20/20 • NI 20/50 → 20/50 • 5/200 letters missing → 20/800 • CF@3ft → CF • Tumbling E@2M → 20/400 • 20/12 → 20/12.5 • prosthetic → NLP • posthetic → NLP | |
| | 4. Plausible, not conformed | Entry did not conform to the data dictionary∗ but could be plausible | • 5/400 • 1/125 | May use depending on research question, the data dictionary, and internal documentation idiosyncrasies |
| Not usable | | Number strings, nonsensical fractions, and misplaced data | 20825, 2525, 60/64, 070, 20/0, 820/25, 0.35, +3.50, 1920/20 | Recorded data not plausible and deemed as missing data |
| | | Single number recorded | 15, 18, 20, 35, 40, 070, 200 | |
| | | Interpretation deemed arbitrary | VA recorded as 20/020, possible VA interpretations include 20/20, 20/200, 2/200 (“200-size letter E”) | |

CF = counting fingers; NI = no improvement; NLP = no light perception; VA = visual acuity.

∗Data dictionary: internal standard listing of VA line measurements and observations that meet conformance and plausibility standards (Fig 1).

Statistical Analysis

Proportions of each VA category were calculated by assessing conformance and plausibility of VA line measure and observation and by provider subspecialty. Proportions of usable data and unusable VA data were compared between the 9 subspecialties using chi-square tests. We conducted 36 pairwise chi-square tests to compare the distribution of usable and unusable data between every 2 subspecialties, with the P values considered as statistically significant at < 0.0014 level using Bonferroni correction. All statistical analyses were performed using Stata 17 (Stata Corp).
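The number of pairwise comparisons and the corrected significance threshold follow from simple arithmetic, sketched below in Python. The family-wise alpha of 0.05 is an assumption (the text reports only the corrected threshold), and the variable names are illustrative.

```python
from math import comb

n_subspecialties = 9
alpha = 0.05  # assumed family-wise error rate; only the corrected level is reported

# Every unordered pair of subspecialties is compared once.
n_pairs = comb(n_subspecialties, 2)

# Bonferroni correction: divide alpha by the number of comparisons.
threshold = alpha / n_pairs

print(n_pairs, round(threshold, 4))  # 36 0.0014
```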

Results

We extracted 1 573 643 VA entries from 513 036 encounters, representing 166 212 patients seen by 156 eyecare providers during a 29-month period. Of the 9 subspecialties, comprehensive, anterior segment, and retina had the highest volume of eyecare providers, encounters, and VA entries (Table S1, available at www.ophthalmologyscience.org). Up to 4 entries were observed for a given VA field examined during the same encounter. Visual acuity was documented in 1 eye only in 36 748 of 513 036 (7.2%) encounters. Our analysis found 1 438 661 (91.4%) usable data. Of those, there were 1 196 720 (76.0%) exact match (category 1), 185 692 (11.8%) conditional conformance with letter count (category 2), 40 270 (2.6%) convertible conformance (category 3), and 15 979 (1.0%) plausible but not conformed entries (category 4, Fig 2).
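As a consistency check, the reported percentages can be recomputed from the entry counts given above, taking all 1 573 643 VA entries as the denominator. The Python sketch below uses only figures from this paragraph; the variable names are illustrative.

```python
total_entries = 1_573_643

# Counts of usable entries by category, as reported in the text.
category_counts = {
    "exact match": 1_196_720,
    "conditional conformance": 185_692,
    "convertible conformance": 40_270,
    "plausible, not conformed": 15_979,
}

usable = sum(category_counts.values())
unusable = total_entries - usable

# Percentages of all VA entries, rounded to one decimal place.
shares = {name: round(100 * n / total_entries, 1) for name, n in category_counts.items()}

print(usable, round(100 * usable / total_entries, 1))      # 1438661 91.4
print(unusable, round(100 * unusable / total_entries, 1))  # 134982 8.6
print(shares)
```

The four category counts sum to the reported number of usable entries, and the remaining 134 982 entries correspond to the 8.6% unusable share.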

Figure 2.

Distributions of usable and unusable visual acuity (VA) entries by eyecare subspecialty. Proportions of electronic health record-derived VA entries categorized as usable, exact match of the data dictionary (Fig 1); usable, conditional conformance, VA entry referenced lines partially read and otherwise conformed to the data dictionary; usable, convertible conformance, VA entry included additional alpha-descriptors, modifiers, or performed at test distances inconsistent with the data dictionary and can be conformed to the data dictionary; usable, plausible but not conformed to the data dictionary; and unusable, VA entries that were not plausible after manual review. Proportions shown for VA entries from office visit encounters with providers of 9 eyecare subspecialties and in total. Numbers of VA entries for each subspecialty are shown.

Proportions of VA entry categories (usable, exact match; usable, conditional conformance; usable, convertible conformance; usable, plausible but not conformed; unusable) differed by subspecialty (P < 0.001). The highest percentage of unusable data was among retina providers (17.5%) followed by glaucoma (14.0%), neuro-ophthalmology (8.9%), and low vision (8.8%). The percentage of usable but not exact match (categories 2–4) was the highest among providers in low vision (30.9%), uveitis (20.3%), and anterior segment (19.9%). Of all encounters, 11.8% referenced letter count or line partially read when documenting VA entries, with low vision (20.2%) and anterior segment (16.5%) having the highest percentages. Visual acuity entries during visits with providers from comprehensive eyecare (86.7%), oculoplastics (81.5%), and pediatrics/strabismus (78.6%) yielded the highest proportions of exact VA matches (Fig 2). The proportions of usable and unusable VA entries were similar between anterior segment and neuro-ophthalmology, between oculoplastics and uveitis, between anterior segment and low vision, and between neuro-ophthalmology and low vision (Table S2, available at www.ophthalmologyscience.org).

Using the data dictionary of 38 VA line measurements and observations as a reference and acknowledging that this may include only a subset of all acceptable VA line measurements and observations, we observed that VA entries referencing letter count or line partially read were greatest for line measurements of 20/10 (23.3%), 20/64 (19.1%), and 20/32 (18.3%) (Fig 1A). Note that there were 180 usable entries (categories 1–3) with measurements of 20/10, far fewer than for some of the other line measurements (e.g., 20/15, n = 24 662). On average, VA line measurements 20/10 through 20/100 (16 line measurements) contained 14.2% VA entries with letter count, and VA measurements 20/125 through 20/800 (12 line measurements) contained 6.1% entries with letter count (P < 0.001). There were 1047 VA entries conformed to 20/12.5 by exact match, conditional conformance, or convertible conformance, with 799 (76.3%) belonging to category 3 (convertible conformance), most of which were documented as “20/12.”

Interpreting and recoding VA entries to align with the data dictionary was most likely for largely nonnumeric entries or observations such as counting fingers, hand motion, light perception, no light perception, and observations involving fixation and eye movement in the pediatric population (e.g., central, unsteady, maintained) (Fig 1B). Between 3.3% (uncentral, unsteady, unmaintained) and 94.5% (counting fingers) of observations required additional interpretation to recode to a data dictionary standard (Fig 1B).

Discussion

We observed that nearly one-tenth (8.6%) of the VA entries were unusable based on conformance and plausibility data quality assessment, with retina (17.5%) and glaucoma (14%) subspecialties showing the greatest proportion of unusable data over 29 months. Similar to the findings of the study by Mbagwu et al,5 we observed an excessive number of unique VA entries (n = 11 713), highlighting the continued challenges of free-text data entry, the coding burden on analytic personnel, and the need for internal or external data dictionaries to ensure reliable and comparable end point assessments. Based on this analysis, prior clinical and EHR research experience, and in accordance with existing guidelines on using VA as an end point,1, 2, 3, 4 we have developed a checklist of considerations—Reporting EHR VA Retrospective Data—when designing, extracting, analyzing, and reporting VA outcomes using retrospective EHR data (Table 3).

Table 3.

Reporting EHR VA Retrospective Data Considerations

| Phase/Element | Considerations and Recommendations |
|---|---|
| Study design | |
| Outcome definition | • Define VA outcome (e.g., best VA in the better-eye, change of best VA in the worse-eye, change in both eye VA) • Determine VA outcome unit (e.g., line versus letter count, logMAR, visual impairment categories) • Consider defining a minimum clinically important difference threshold |
| EHR VA elements | • Determine VA data fields that are relevant to the research question (e.g., best documented versus best corrected, see Table 1 for examples of available VA data fields) • Understand the implication of VA chart type (e.g., Snellen, ETDRS) on reporting and system capabilities • Plan for handling of the documentation of letter count and narrative detail |
| Nonquantifiable VA parameters | • Plan for managing observations (e.g., CF, HM, LP, NLP) as VA data - Observation (e.g., CF, HM, LP, NLP, prosthetic) entries and others should be analyzed separately from VA line measures or logMAR units when reporting VA change scores |
| EHR data extraction | |
| VA fields | • Identify available and relevant VA fields for data extraction - Assess for multiple entries in a single data field - Determine need for letter count extraction field (e.g., “+2”, “−1”) |
| Other relevant parameters | • Consider unique system and platform encounter parameters: department, subspecialty, location, provider, etc. • EHR system parameters: available VA fields, VA data entry method (e.g., free-text, drop-down menu) |
| Data quality assessment and analysis | |
| Completeness | • Identify the absence of VA data element(s) • Consider implications on cross-sectional and longitudinal analyses |
| Data dictionary conformance and plausibility | • Apply a data dictionary (VA values and observations) that is consistent with internal formatting constraints or standards until external standards are established • Plan for management of VA data documented at atypical or nonstandard test distances • Plan for manual review to assess plausibility in cases where data values may not meet the conformance standard |
| Change of VA | • Calculations of VA changes should consider eye- versus person-level reporting (completeness or missing data may impact usable data and analysis) • Plan for management of VA change in measures at floor (e.g., CF, HM) or ceiling of the estimate (e.g., 20/25) |
| Reporting | |
| VA outcome | • Outcome definition |
| VA fields examined | • Number of VA fields, entries per field examined • Content and description of VA fields analyzed (e.g., pinhole, refraction, near VA) |
| VA entry means | • Means of VA measure entries available: free text, drop-down menu, etc. • Documentation practices (if known) |
| Data quality | • Number of unique VA entries as a percentage of total entries • Completeness: number and percentage of entries with absent VA data • Definitions and percentage of usable (meeting conformance and plausibility standards) and unusable data (e.g., overall, by subspecialty, by provider, etc.) |
| VA coding | • Management of letter count, entries with test distance that do not convert to data dictionary, observations, narrative information, and implausible entries |

CF = counting fingers; EHR = electronic health record; HM = hand motion; logMAR = logarithmic minimum angle of resolution; LP = light perception; NLP = no light perception; VA = visual acuity.

Conformance into 1 of the 38 plausible VA data dictionary items defined in this study (Fig 1) required interpretation of the qualitative and quantitative modifications to entries documented in the VA fields. Of the usable entries, nearly 15% required recoding to enable categorization. Most of the recoding was for entries documented as partial lines read (letter count), and the highest proportions appeared in anterior segment and low vision encounters, perhaps reflecting these subspecialties’ attentiveness to the precision of the estimates (e.g., changes in VA after cataract extraction, documenting the effects of scotomas). Although counting letters (e.g., +2 or −1) to document partial lines read (for ETDRS, each optotype letter equates to 0.02 log units)2,26 may offer greater precision in the VA estimate and provide clinically useful information, counting letters presumes the entire chart has been read until threshold and applies when there are the same number of optotypes on each line. Additionally, not all EHR VA categories offer designated fields to record partial lines read (e.g., refraction right and left eye, uncorrected both eyes, habitual corrected both eyes); thus, assessing changes in VA by letter count cannot be universally applied when including all VA elements. We expect ongoing system software updates will also challenge longitudinal data analyses. Possible solutions to improve data quality include transitioning to predefined VA structured fields in EHR without a free-text option, or more realistically, implementing computer-adaptive VA testing (similar to automated visual field staircase algorithms). Computer-adaptive VA testing may offer a standardization of testing administration and lead to improved accuracy and precision of VA estimates. The increased interest in testing and monitoring by telehealth may also accelerate opportunities for standardization.27,28 Until computer-adaptive VA testing is ubiquitous, reporting best line read or changes in lines read seems to be the most pragmatic approach when analyzing retrospective EHR data.
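To make the letter count arithmetic concrete, the Python sketch below converts a Snellen line to logMAR and applies the 0.02 log units per letter cited above for ETDRS charts. It is an illustration under stated assumptions, not a method used in this study: the function name `logmar` is hypothetical, and netting letters missed against extra letters read is a simplification that holds only when each line has the same number of optotypes.

```python
import math

LOG_UNITS_PER_LETTER = 0.02  # per-letter value cited in the text for ETDRS charts


def logmar(numerator: float, denominator: float,
           letters_extra: int = 0, letters_missed: int = 0) -> float:
    """logMAR of a Snellen line, adjusted by a net letter count (assumed approach)."""
    if numerator <= 0 or denominator <= 0:
        raise ValueError("Snellen fraction terms must be positive")
    line_value = math.log10(denominator / numerator)
    # Extra letters read lower (improve) logMAR; missed letters raise it.
    return line_value + LOG_UNITS_PER_LETTER * (letters_missed - letters_extra)


print(round(logmar(20, 200), 2))                  # 1.0
print(round(logmar(20, 50, letters_extra=2), 3))  # 0.358
```

Because logMAR differs by 0.1 between adjacent lines, a two-letter adjustment moves the estimate by 0.04, less than half a line. This is the extra precision that line-based reporting gives up.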

Other measurement, documentation, and analytic considerations are included in Table 3. Similar to the findings of the study by Mbagwu et al,5 we observed that it is not possible to reliably discern the documentation method of VA measurement (e.g., ETDRS, Snellen) retrospectively. This creates challenges in developing a data dictionary and judging equivalence (e.g., 20/60, 20/63, 20/64) when categorizing data and examining changes in VA longitudinally. Although not unique to EHR analyses, defining a meaningful change in VA as an outcome measure will vary based on the precision required and ceiling/floor effects (e.g., difficult to improve an eye with 20/25 VA by 2 lines). Floor effects will require careful planning in retrospective analyses of patients with retinal disease, given the higher proportions of documenting observations and unusable data. These findings highlight the opportunity to improve data quality by standardizing practices when VA estimates are worse than 20/800.29, 30, 31

Unlike prospective VA data collection, retrospective EHR VA data analysis from routine clinical care data may include biases from not only the unmasked measurement, the variability in measurement and documentation, but also the use of a post hoc outcome definition. Additionally, automated methods may lead to incomplete extraction and subsequent missing data for analyses when entries do not conform to data dictionaries. One must be wary of any claims that large quantities of data eliminate these types of biases as this assumes any biases are randomly distributed rather than systematic or unidirectional.32 Further study is needed to evaluate the presence and potential implication of biases in VA data by practice type, EHR platform, subspecialty, and patient status (i.e., postoperative measurement).

A major strength of this study is that it provides quantitative estimates of conformance and plausibility of VA data with consideration to eyecare subspecialties under the same health system with the same overarching data collection practices. These findings may be applicable to other medical centers and offer opportunities for quality improvement and improved comparative analyses. Although using data from 1 health system on 1 platform is a strength due to uniformity in software and protocols, we cannot generalize to other practices or platforms with different VA data entry interfaces and methods without further study. Other limitations include the inability to determine differing documentation practice among ophthalmic technicians or the reasons for VA data entry discordances and the discrepancies between different subspecialties with this retrospective analysis. Lastly, the descriptors of VA needing manual review were defined and identified according to the researchers’ discretion and interpretation of the data. Because external VA standards have yet to be specified for EHR analyses, conformance for this study was based on an internal data dictionary as detailed.

The magnitude of usable EHR VA data, including the VA entries requiring additional coding, varies by eyecare subspecialty. Further work will need to set external standards, further define data quality categories, and understand the implications of unusable data on both the encounter and patient-level study outcome. For those interested in planning EHR-related analyses involving VA, it is helpful to consider the Reporting EHR VA Retrospective Data checklist and the aforementioned issues that arise when pooling data and performing comparative assessments, including identifying pragmatic VA end points for longitudinal studies. Transparency in reporting and understanding the limitations may encourage modifications to existing VA measurement and documentation approaches, the development of an external VA data dictionary, coding algorithms, and analytic practices to identify feasible clinical end points and to ensure we have “Big Data” on which we can rely.

Manuscript no. D-22-00055R2.

Footnotes

Supplemental material available at www.ophthalmologyscience.org.

Footnotes and Disclosures

Disclosures:

All authors have completed and submitted the ICMJE disclosures form.

The author(s) have made the following disclosure(s): M.V.B.: Personal fees – Allergan, Carl Zeiss Meditec, Janssen, Topcon Healthcare.

Supported by the Reader’s Digest Partners for Sight Foundation. The sponsor or funding organization had no role in the design or conduct of this research.

HUMAN SUBJECTS: No human subjects were included in this study. This study was approved by the Johns Hopkins University School of Medicine Institutional Review Board. All research adhered to the tenets of the Declaration of Helsinki. Informed consent was waived for the study.

No animal subjects were used in this study.

Author Contributions:

Conception and design: Goldstein, Guo, Boland

Data collection: Goldstein, Guo, Boland, Smith

Analysis and interpretation: Goldstein, Guo, Boland

Obtained funding: Goldstein

Overall responsibility: Goldstein, Guo, Boland, Smith

References

- 1. Csaky K.G., Richman E.A., Ferris F.L. III. Report from the NEI/FDA Ophthalmic Clinical Trial Design and Endpoints Symposium. Invest Ophthalmol Vis Sci. 2008;49:479–489. doi: 10.1167/iovs.07-1132.

- 2. Diabetic retinopathy study. Report number 6. Design, methods, and baseline results. Report Number 7. A modification of the Airlie House classification of diabetic retinopathy. Prepared by the Diabetic Retinopathy. Invest Ophthalmol Vis Sci. 1981;21:1–226.

- 3. Bailey I.L., Lovie-Kitchin J.E. Visual acuity testing. From the laboratory to the clinic. Vision Res. 2013;90:2–9. doi: 10.1016/j.visres.2013.05.004.

- 4. Committee on Vision, Assembly of Behavioral and Social Sciences. Recommended standard procedures for the clinical measurement and specification of visual acuity. Report of Working Group 39. Vol. 41. Washington, DC: National Research Council, National Academy of Sciences; 1980. pp. 103–148.

- 5. Mbagwu M., French D.D., Gill M., et al. Creation of an accurate algorithm to detect Snellen best documented visual acuity from ophthalmology electronic health record notes. JMIR Med Inform. 2016;4:e14. doi: 10.2196/medinform.4732.

- 6. Baughman D.M., Su G.L., Tsui I., et al. Validation of the Total Visual Acuity Extraction Algorithm (TOVA) for automated extraction of visual acuity data from free text, unstructured clinical records. Transl Vis Sci Technol. 2017;6:2. doi: 10.1167/tvst.6.2.2.

- 7. Weiskopf N.G., Weng C. Methods and dimensions of electronic health record data quality assessment: enabling reuse for clinical research. J Am Med Inform Assoc. 2013;20:144–151. doi: 10.1136/amiajnl-2011-000681.

- 8. Alexeeff S.E., Uong S., Liu L., et al. Development and validation of machine learning models: electronic health record data to predict visual acuity after cataract surgery. Perm J. 2020;25:1. doi: 10.7812/TPP/20.188.

- 9. Gui H., Tseng B., Hu W., Wang S.Y. Looking for low vision: predicting visual prognosis by fusing structured and free-text data from electronic health records. Int J Med Inform. 2022;159. doi: 10.1016/j.ijmedinf.2021.104678.

- 10. Parke D.W. II, Coleman A.L. New data sets in population health analytics. JAMA Ophthalmol. 2019;137:640–641. doi: 10.1001/jamaophthalmol.2019.0410.

- 11. Wang S.Y., Pershing S., Lee A.Y. AAO Taskforce on AI and AAO Medical Information Technology Committee. Big data requirements for artificial intelligence. Curr Opin Ophthalmol. 2020;31:318–323. doi: 10.1097/ICU.0000000000000676.

- 12. Dunbar G.E., Titus M., Stein J.D., et al. Patient-reported outcomes after corneal transplantation. Cornea. 2021;40:1316–1321. doi: 10.1097/ICO.0000000000002690.

- 13. Clark A., Ng J.Q., Morlet N., Semmens J.B. Big data and ophthalmic research. Surv Ophthalmol. 2016;61:443–465. doi: 10.1016/j.survophthal.2016.01.003.

- 14. Kahn M.G., Callahan T.J., Barnard J., et al. A harmonized data quality assessment terminology and framework for the secondary use of electronic health record data. eGEMs (Wash DC). 2016;4:1244. doi: 10.13063/2327-9214.1244.

- 15. Chan K.S., Fowles J.B., Weiner J.P. Review: electronic health records and the reliability and validity of quality measures: a review of the literature. Med Care Res Rev. 2010;67:503–527. doi: 10.1177/1077558709359007.

- 16. Wittenborn J., Ahmed F., Rein D. NHIS; Chicago, IL: 2016. IRIS registry summary data report: for the Vision & Eye Health Surveillance System. https://www.norc.org/PDFs/VEHSS/IRISDataSummaryReportVEHSS.pdf

- 17. American Academy of Ophthalmology. IRIS registry data analysis. https://www.aao.org/iris-registry/data-analysis/requirements. Accessed July 27, 2022.

- 18. Chiang M.F., Sommer A., Rich W.L., et al. The 2016 American Academy of Ophthalmology IRIS Registry (Intelligent Research in Sight) database: characteristics and methods. Ophthalmology. 2018;125:1143–1148. doi: 10.1016/j.ophtha.2017.12.001.

- 19. Henriksen B.S., Goldstein I.H., Rule A., et al. Electronic health records in ophthalmology: source and method of documentation. Am J Ophthalmol. 2020;211:191–199. doi: 10.1016/j.ajo.2019.11.030.

- 20. Hicken V.N., Thornton S.N., Rocha R.A. Integration challenges of clinical information systems developed without a shared data dictionary. Stud Health Technol Inform. 2004;107:1053–1057.

- 21. Valikodath N.G., Newman-Casey P.A., Lee P.P., et al. Agreement of ocular symptom reporting between patient-reported outcomes and medical records. JAMA Ophthalmol. 2017;135:225–231. doi: 10.1001/jamaophthalmol.2016.5551.

- 22. Lim M.C., Boland M.V., McCannel C.A., et al. Adoption of electronic health records and perceptions of financial and clinical outcomes among ophthalmologists in the United States. JAMA Ophthalmol. 2018;136:164–170. doi: 10.1001/jamaophthalmol.2017.5978.

- 23. Rohm M., Tresp V., Müller M., et al. Predicting visual acuity by using machine learning in patients treated for neovascular age-related macular degeneration. Ophthalmology. 2018;125:1028–1036. doi: 10.1016/j.ophtha.2017.12.034.

- 24. Martin D.F., Maguire M.G., Ying G.S., et al. Ranibizumab and bevacizumab for neovascular age-related macular degeneration. N Engl J Med. 2011;364:1897–1908. doi: 10.1056/NEJMoa1102673.

- 25. Vitale S., Agrón E., Clemons T.E., et al. Association of 2-year progression along the AREDS AMD scale and development of late age-related macular degeneration or loss of visual acuity: AREDS Report 41. JAMA Ophthalmol. 2020;138:610–617. doi: 10.1001/jamaophthalmol.2020.0824.

- 26. Raasch T.W., Bailey I.L., Bullimore M.A. Repeatability of visual acuity measurement. Optom Vis Sci. 1998;75:342–348. doi: 10.1097/00006324-199805000-00024.

- 27. Beck R.W., Moke P.S., Turpin A.H., et al. A computerized method of visual acuity testing: adaptation of the early treatment of diabetic retinopathy study testing protocol. Am J Ophthalmol. 2003;135:194–205. doi: 10.1016/s0002-9394(02)01825-1.

- 28. Moke P.S., Turpin A.H., Beck R.W., et al. Computerized method of visual acuity testing: adaptation of the amblyopia treatment study visual acuity testing protocol. Am J Ophthalmol. 2001;132:903–909. doi: 10.1016/s0002-9394(01)01256-9.

- 29. Bailey I.L., Jackson A.J., Minto H., et al. The Berkeley Rudimentary Vision Test. Optom Vis Sci. 2012;89:1257–1264. doi: 10.1097/OPX.0b013e318264e85a.

- 30. Bach M. The Freiburg Visual Acuity test--automatic measurement of visual acuity. Optom Vis Sci. 1996;73:49–53. doi: 10.1097/00006324-199601000-00008.

- 31. Bittner A.K., Jeter P., Dagnelie G. Grating acuity and contrast tests for clinical trials of severe vision loss. Optom Vis Sci. 2011;88:1153–1163. doi: 10.1097/OPX.0b013e3182271638.

- 32. Vedula S.S., Tsou B.C., Sikder S. Artificial intelligence in clinical practice is here-now what? JAMA Ophthalmol. 2022;140:306–307. doi: 10.1001/jamaophthalmol.2022.0040.
