Abstract

Introduction

Many healthcare delivery systems have developed clinician‐led quality improvement (QI) initiatives but fewer have also developed in‐house evaluation units. Engagement between the two entities creates unique opportunities. Stanford Medicine funded a collaboration between their Improvement Capability Development Program (ICDP), which coordinates and incentivizes clinician‐led QI efforts, and the Evaluation Sciences Unit (ESU), a multidisciplinary group of embedded researchers with expertise in implementation and evaluation sciences.

Aim

To describe the ICDP‐ESU partnership and report key learnings from the first 2 y of operation (September 2019 to August 2021).

Methods

Department‐level physician and operational QI leaders were offered an ESU consultation to workshop design, methods, and overall scope of their annual QI projects. A steering committee of high‐level stakeholders from operational, clinical, and research perspectives subsequently selected three projects for in‐depth partnered evaluation with the ESU based on evaluability, importance to the health system, and broader relevance. Selected project teams met regularly with the ESU to develop mixed methods evaluations informed by relevant implementation science frameworks, while aligning the evaluation approach with the clinical teams' QI goals.

Results

Sixty and 62 ICDP projects were initiated during the 2 cycles, respectively, across 18 departments, of which the ESU consulted with 15 (83%) each year. Within each annual cycle, evaluators made actionable, summative findings rapidly available to partners to inform ongoing improvement. Other reported benefits of the partnership included rapid adaptation to COVID‐19 needs, expanded clinician evaluation skills, external knowledge dissemination through scholarship, and health system‐wide knowledge exchange. Ongoing considerations for improving the collaboration included the need for multi‐year support to enable nimble response to dynamic health system needs and timely data access.

Conclusion

Presence of embedded evaluation partners in the enterprise‐wide QI program supported identification of analogous endeavors (eg, telemedicine adoption) and cross‐cutting lessons across QI efforts, clinician capacity building, and knowledge dissemination through scholarship.

Keywords: embedded research, learning health systems, partnership, quality improvement

1. BACKGROUND

Learning health systems aim to embed knowledge‐generating scientific, informatics, incentive, and cultural tools into daily clinical practice to continuously improve healthcare. 1 , 2 Many healthcare organizations in the United States of America and abroad have taken steps toward this aim, including developing clinician capacity to lead quality improvement (QI) initiatives, 3 enhancing digital infrastructure to track data, 4 , 5 deepening relationships with patients and caregivers, 6 and aligning incentives and goals of inter‐disciplinary teams, including researchers. 7 , 8 , 9 , 10 , 11 Documenting and analyzing these diverse QI initiatives can offer health systems insight into how to further optimize these efforts.

Clinicians are ideally positioned to identify problems, deploy QI initiatives, anticipate clinical impact, and rapidly adjust interventions given their position on the frontlines of clinical care. 12 Medical training in the United States of America 13 and elsewhere 14 increasingly requires foundational QI skills, but it typically does not include the substantive evaluation methods needed to formally assess QI initiatives. 15 This is concerning because evaluations lacking methodological rigor can generate spurious conclusions and potentially harmful changes within a health system. 16 This gap limits a health system's capacity to implement changes informed by valid, reliable, and actionable evidence produced in real‐world settings.

Embedded mixed methods researchers with expertise in implementation science and health services research offer an antidote to enhance evaluation of QI efforts. 17 , 18 , 19 Although a minority but increasing proportion of health systems have developed in‐house evaluation units, 10 , 20 , 21 , 22 further work is needed to optimize clinician‐researcher partnerships, especially in academic health systems where the medical school and health system are separate entities with aligned but distinct missions. 23 Some health systems have created ongoing clinician‐researcher partnerships within a given clinical area or temporary project‐based collaborations tasked with meeting organizational objectives. 10 , 22 , 23 , 24 We describe a hybrid of these approaches, a formalized clinician‐researcher partnership at Stanford Medicine aiming to enhance the evaluation of clinician‐led QI efforts.

2. METHODS

2.1. Improvement Capability Development Program (ICDP)

Stanford Health Care, a quaternary academic health system, in partnership with its affiliate, the Stanford School of Medicine (Stanford, CA, USA) launched ICDP in 2017 to strengthen their capacity as a learning health system. Herein, “Stanford Medicine” refers to the health system and medical school with affiliate organizations. Clinical faculty physicians are employed through the medical school but provide patient care within the health system.

ICDP is one component of a multi‐faceted effort to embed QI into everyday clinical practice across the health system. It aims to develop improvement infrastructure through a network of designated Physician Improvement Leaders, one for each academic department. Physician Improvement Leaders are responsible for engaging peer clinician faculty in two to five ICDP leadership‐approved QI projects annually. 25 Approved projects are led by either the Physician Improvement Leader or another faculty physician, and executed by teams of physicians and non‐physicians, including nurses, physician assistants, and other care team members (eg, lactation consultants). The health system encourages engagement and success with an incentive of 1% to 4% of the departments' “at‐risk” clinical revenue; these monies can be used to support departmental QI infrastructure by protecting faculty and staff time for QI efforts, training, scholarly work, conference attendance, etc.

2.2. The Evaluation Sciences Unit (ESU) and the ICDP‐ESU collaboration

ICDP established a formal partnership with the ESU in September 2019 to elevate ICDP project evaluation by providing dedicated time of practical, applied researchers with mixed methods expertise.

The ESU was launched within the Stanford School of Medicine's Division of Primary Care and Population Health in an effort to build applied health services research expertise. The ESU is a multidisciplinary group of clinician and non‐clinician, doctorate‐ and masters‐trained faculty, and staff with methodological proficiency in implementation theory and evaluation sciences, health services research, qualitative research, epidemiology, and biostatistics, as well as content expertise across many clinical care settings. 26

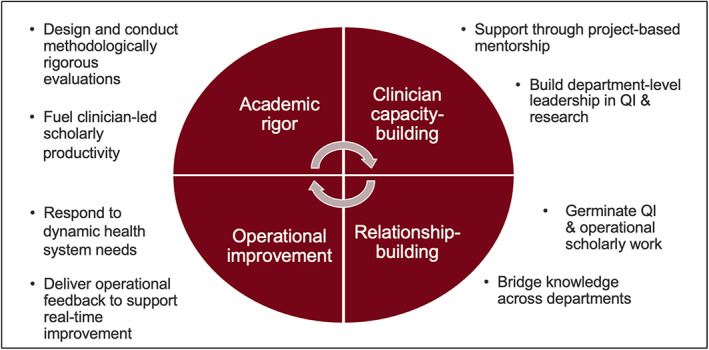

They partner with Stanford Medicine clinicians as well as external health systems to improve healthcare delivery through rigorous quantitative and qualitative evaluations while fostering clinician development and intra‐institutional networks (Figure 1). These efforts culminate in a “double bottom line”—informing operational decisions and disseminating learnings through scholarly work.

FIGURE 1.

Roles of the Evaluation Sciences Unit within the learning health system

The ICDP‐ESU collaboration is in its third annual cycle (September‐August): (1) 2019 to 2020, (2) 2020 to 2021, and (3) 2021 to 2022. The first 2 y are described below; third year activities are ongoing.

Each year, the ESU offered and provided each clinical department's ICDP project teams two distinct types of support: (a) consultations for each interested department, and (b) in‐depth partnered evaluation for a subset of high potential projects.

2.2.1. Consultation

Department Physician Improvement Leaders were invited to a consultation meeting with the ESU during the first months of each cycle. Physician Improvement Leaders were asked to identify one or two approved ICDP projects to discuss with the ESU that they perceived would benefit most from an enhanced evaluation. Project team members (medical, administrative, operational) were highly encouraged to join consultations to meet ESU members, learn about implementation science, and discuss and receive feedback on their project/s.

Preparatory materials were provided prior to each initial consultation, including reading material on implementation science 27 and an ESU‐developed project mapping tool—a structured matrix where QI project teams could map out the problem within its clinical context, its relevance to patient care and the overall organization, goals for improvement, risks and mitigation strategies, any planned interventions, and potential outcomes (Appendix S1). During consultations, the ESU helped teams think through the “why” and “how” of their project/s, with a focus on study design, evaluation methods, operational and investigative priorities, and scope. The ESU welcomed discussions on projects in diverse stages of implementation during these consultations.

Throughout the consultative period, the ESU team met weekly to discuss recent consultations, brainstorm remaining questions or risks, and determine next steps with each ICDP team. Following each consultation, ESU team members individually evaluated each project using an internally developed evaluation tool (Appendix S2) where projects were preliminarily ranked on the following criteria: (a) potential evaluability, that is, strength of study design and potential for rigorous evaluation, (b) alignment with current year's health system operational priorities, and (c) potential for broader application. Consensus discussions regarding project rankings took place at these weekly meetings, which allowed the ESU team to collaboratively identify promising projects that would significantly benefit from rigorous evaluation. Affiliated Physician Improvement Leaders and their team were then proactively invited for a second consultation to further discuss and assess their project's potential for in‐depth evaluation.
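The preliminary ranking step described above can be illustrated with a minimal sketch. It assumes a hypothetical 1 to 5 rating per criterion and a simple unweighted sum of criterion means; the actual evaluation tool (Appendix S2) and its scoring scheme may differ, and the function names, criterion labels, and ratings below are invented for illustration only. The output is a starting point for consensus discussion, not a replacement for it.

```python
from statistics import mean

# Hypothetical labels for the three criteria named in the text.
CRITERIA = ("evaluability", "operational_alignment", "broader_application")


def project_score(ratings):
    """Average each criterion across raters, then sum the criterion means."""
    return sum(mean(r[c] for r in ratings) for c in CRITERIA)


def preliminary_ranking(projects):
    """Return project names ordered from highest to lowest preliminary score."""
    return sorted(projects, key=lambda name: project_score(projects[name]), reverse=True)


# Invented ratings (assumed 1-5 scale) from two evaluators for two invented projects.
example = {
    "project_a": [
        {"evaluability": 4, "operational_alignment": 5, "broader_application": 3},
        {"evaluability": 5, "operational_alignment": 4, "broader_application": 3},
    ],
    "project_b": [
        {"evaluability": 2, "operational_alignment": 5, "broader_application": 2},
        {"evaluability": 3, "operational_alignment": 4, "broader_application": 2},
    ],
}

ranking = preliminary_ranking(example)
print(ranking)
```

Averaging across raters before summing keeps a single evaluator's outlier rating from dominating the order brought to the weekly consensus meeting.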

2.2.2. In‐depth partnered evaluation

Once all consultations were concluded in December of each year, the ESU team shortlisted 5 to 6 projects to present to a steering committee composed of senior representatives from the health system, the ESU, and ICDP, using the above‐mentioned criteria and evaluation tool. The steering committee selected three projects from three different departments each year for in‐depth partnered evaluation. Equitable distribution of opportunity across departments each cycle was considered.

Beginning in January, dedicated project‐specific teams from the ESU (including expertise based on project needs) met with each QI project team weekly to refine the intervention, and develop and execute a methodologically rigorous evaluation plan. The weekly ICDP‐ESU collaboration meetings covered evidence supporting the proposed or ongoing intervention, implementation strategies and updates, project and evaluation aims, incorporation of stakeholder input, research frameworks, data collection and analysis, and other topics as needed. In addition, an ESU representative attended key operational meetings to track project activities, timelines, and challenges encountered in order to identify and recommend adaptations to strengthen implementation strategies. The ESU team also met internally every 2 wks to discuss project evaluation designs and troubleshoot challenges.

Project activities varied by data type. The qualitative team collected data from key stakeholders through interviews, observations, and focus groups, depending on project needs. Clinical partners typically provided lists of eligible stakeholders, including patients and/or clinicians who were then contacted by the qualitative team to gather their perspectives. The qualitative team also collaborated with clinical partners to determine how data were analyzed and shared (eg, de‐identified transcripts, data analysis tools, or summarized reports), depending on the desired involvement and capacity of the clinical team. Validated rapid analytic approaches were pursued where possible. 28

The quantitative team collaborated with the clinical partners to identify existing data sources and establish pathways to procure data in compliance with institutional policies. For example, data could be obtained from dashboards built on Epic Systems (Verona, WI) and the institution's business analytics platforms, the institution's research repository tool, 29 chart reviews conducted by clinical teams, and team‐developed surveys administered through REDCap 30 (Nashville, TN). The ESU‐ICDP collaboration also supported a designated, masters‐level biostatistician to have direct access to EHR‐based data through Epic Clarity to minimize the burden on the institution's Epic and business analytics teams to create dashboards. The team used the following coding languages (and respective software): SAS (Cary, NC), R, 31 and structured query language (SQL). The ESU and clinical teams shared de‐identified data and collaborated on a data analysis plan, but the ESU executed the analysis and presented results to collaborators. The ESU also informed dataset creation and dashboards by third parties to support clinical teams in ongoing evaluation.

Total effort for the consultations and in‐depth partnered evaluations represented approximately 4.5 full time equivalents (FTE): 0.25 FTE each of director and physician scholar, 1.5 FTE quantitative expertise, 1.5 FTE qualitative expertise, and 1.0 FTE project manager; project management responsibilities were shared by two people, both of whom also supported qualitative data collection and analysis. A budget of over half a million USD annually covered evaluation faculty and staff time and other project resources (eg, interview transcription fees). The collaboration was supported through health system funding with a matched start‐up contribution through the Department of Medicine.
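As a quick check of the staffing arithmetic, the reported allocations can be tallied as follows. The figures are those stated above; the dictionary keys are illustrative labels, not official role titles.

```python
# FTE allocations as reported in the text; keys are illustrative labels only.
fte = {
    "director": 0.25,
    "physician_scholar": 0.25,
    "quantitative": 1.5,
    "qualitative": 1.5,
    "project_management": 1.0,
}

# Sum of all allocations, which should match the stated total of about 4.5 FTE.
total_fte = sum(fte.values())
print(total_fte)
```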

2.3. Understanding clinician‐partner experience and lessons learned

To understand the impact of and lessons learned from this process, real‐time feedback was encouraged and discussed periodically at project team meetings or one‐on‐one at the level of ICDP and ESU project leads, as appropriate. At the end of each fiscal year, ICDP project leaders and department Physician Improvement Leaders were asked to provide written feedback on how to improve the collaboration, providing specific examples where possible. Finally, the ESU team held internal bi‐annual reflection sessions to document learnings. Drawing from these sources, the overall impact and key learnings from the first 2 y of collaboration are presented below.

3. RESULTS

3.1. Consultation and selection process for in‐depth partnered evaluation

At the start of the first collaborative year, 60 ICDP projects were launched across 18 departments, ranging from 2‐5 projects per department. Project numbers grew slightly the following year with 62 ICDP projects (2‐8 projects per department) across 18 departments. ESU consultations were offered to all departments, but ultimately, 15 departments (83%) completed the initial consultation each year.

Most projects aligned with health system priorities but only a few had high potential for rigorous evaluation given ongoing confounders in the real‐world healthcare setting. Similarly, only a subset had potential for broader relevance, as many focused on locally responsive, and therefore idiosyncratic, solutions. Ultimately, 7 projects in Year 1 and 6 in Year 2 were shortlisted by the ESU team and presented to the steering committee for consideration, three of which were selected each year.

3.2. In‐depth partnered project evaluations

Table 1 summarizes the projects selected for in‐depth partnered evaluation each year, alignment of their aims with healthcare system domain priorities, their institutional impact, and resulting academic products. Briefly, projects targeted diverse aims within the healthcare system, including improving quality and safety, patient experience, clinician engagement and wellness, clinical care pathways, team‐building, and reducing readmissions. Project evaluations typically informed operational and implementation decisions, including those related to optimizing inpatient and outpatient clinical workflows (ie, Medicine, Dermatology, Pathology), appropriate patient triage (ie, Surgery), patient engagement in health system technology (ie, Neurology, Medicine), and optimizing staff clinical skills (ie, Radiology). Actionable, summative learnings were shared with clinical partners through lightning reports, 32 and internal discussions and presentations, including an end‐of‐year presentation by each department to ICDP leadership. These evaluations also led to external dissemination of findings through the production of 17 manuscripts (published, under review, or in progress) and 9 presentations at academic conferences; citations per paper to date range from 0 to 75, with a median of 2.

TABLE 1.

Quality improvement projects selected for in‐depth partnered evaluation in 2019‐2020 and 2020‐2021 through the ICDP‐ESU partnership, their operational domains, impact, and scholarly outputs

| Department | Project | Health system operational domains | Institutional impact | Scholarly outputs |
|---|---|---|---|---|
| 2019–2020 | | | | |
| Medicine | AI‐informed workflow to detect deterioration of seriously ill inpatients (including COVID‐19). Adapted from the pre‐COVID‐19 project: palliative predictive mortality model for advanced care planning | | Project informed the strategy for implementing and integrating an AI algorithm to predict clinical deterioration of hospitalized patients into the workflow of a multidisciplinary inpatient team. To evaluate its impact, we designed a novel, theory‐driven protocol for a mixed method stepped‐wedge study using the Systems Engineering Initiative for Patient Safety (SEIPS) 2.0 model 51 and design‐thinking to optimize internal learnings and external validity. The evaluation is currently underway and will be used to inform subsequent decision‐making about the future of using AI to predict and respond to clinical deterioration | Articles: Holdsworth LM, Kling SMR, Smith M, et al. (2021) Predicting and responding to clinical deterioration in hospitalized patients by using artificial intelligence: protocol for a mixed methods, stepped wedge study. JMIR Res Protoc., 10(7):e27532. 52 |
| Medicine | Acute care telemedicine to optimize infection control and PPE use. No prior pre‐COVID‐19 project; this project benefited from additional clinical informatics physician fellow support | | Project enhanced understanding of the use and patient and clinician experience of acute care telemedicine and clarified impact on bedside care for patients under isolation precautions. The evaluation identified facilitators and barriers to inpatient telemedicine, which prompted the system to adapt how it was used and expectations of its impact on patient care and infection prevention. This partnership also provided students enrolled in the Stanford Healthcare Consulting Group with a hands‐on opportunity to conduct semi‐structured interviews with patients and write a peer‐reviewed manuscript, supporting the health system's academic mission | Articles: Patel B, Vilendrer S, Kling SMR, et al. (2021) Using a real‐time locating system to evaluate the impact of telemedicine in an emergency department during COVID‐19: observational study. JMIR, 23(7):e29240. 39 Safaeinili N, Vilendrer S, Williamson E, et al. (2021) Inpatient telemedicine implementation as an infection control response to COVID‐19: qualitative process evaluation study. JMIR Formative Research, 5(6):e26452. 40 Vilendrer S, Patel B, Chadwick W, et al. (2020) Rapid deployment of inpatient telemedicine in response to COVID‐19 across three health systems. JAMIA, 27(7):1102‐1109. 44 Vilendrer S, Sackeyfio S, Akinbami E, et al. (2022) Patient perspectives of inpatient telemedicine during the COVID‐19 pandemic: qualitative assessment. JMIR Form Res, 30;6(3):e32933. 42 Vilendrer S, Lough ME, Garvert DW, et al. (2022) Nursing workflow change in a COVID‐19 inpatient unit following the deployment of inpatient telehealth: observational study using a real‐time locating system. JMIR, 24(6):e36882. 53 Conference abstracts: Patel B, Vilendrer S, Kling SMR, et al. Leveraging a real‐time locating system to evaluate the impact of telemedicine in an emergency department during COVID‐19. Poster presentation at: AMIA Virtual Clinical Informatics Conference; May 18‐21, 2021; Online. Safaeinili N, Vilendrer S, Williamson E, et al. Qualitative evaluation of a rapidly deployed inpatient telemedicine to COVID‐19 using RE‐AIM. Poster presented at: 13th Annual Conference on the Science of Dissemination and Implementation in Health; December 15‐17, 2020; Online |
| Neurology | Rapid implementation and evaluation of video visits across ambulatory neurology | | Project informed the rapid uptake of video visits across ambulatory neurology while maintaining patient access to care during pandemic‐related lockdowns. The evaluation identified considerations related to video visit uptake, ongoing needs, barriers, and facilitators to teleneurology across ambulatory neurology, including in both new and return patients. Through key insights from early interviews with clinicians, patients, and caregivers, the evaluation team was able to inform rapid (within weeks) adaptations to teleneurology during the early COVID‐19 response, enabling neurology to be the first fully virtual specialty at our institution. Longer‐term (within months), the evaluation helped inform clinic‐level guidelines for appropriate use of teleneurology. Adjustments were made to the resident experience, and strategies were implemented to overcome identified barriers | Articles: Kling SMR, Falco‐Walter J, Saliba‐Gustafsson EA, et al. (2021) Patient and provider perspectives of new and return neurology patient visits. Neurol Clin Pract, 11(6):472‐483. 33 Ng J, Chen J, Liu M, et al. (2021) Resident‐driven strategies to improve the educational experience of teleneurology (5111). Neurology, 96(15 Suppl):5111. 34 Saliba‐Gustafsson EA, Miller‐Kuhlmann R, Kling SMR, et al. (2020) Rapid implementation of video visits in neurology during COVID‐19: mixed methods evaluation. JMIR, 22(12):e24328. Yang L, Brown‐Johnson CG, Miller‐Kuhlmann R, et al. (2020) Accelerated launch of video visits in ambulatory neurology during COVID‐19: key lessons from the Stanford experience. Neurology, 95(7):305‐311. 36 Conference abstracts: Falco‐Walter J, Kling SMR, Saliba‐Gustafsson EA, et al. Evaluation of patient and clinician perspectives for new and return ambulatory teleneurology visits, with special attention to subspecialty differences. Poster presented at: 2021 American Academy of Neurology Annual Meeting; April 17‐22, 2021; Online. Kling SMR, Saliba‐Gustafsson EA, Brown‐Johnson CG, et al. Overcoming challenges to neurology video visits during the COVID‐19 crisis: a mixed methods evaluation. Poster presented at: 13th Annual Conference on the Science of Dissemination and Implementation in Health; December 15‐17, 2020; Online |
| Surgery | Patient and surgeon experiences with pre‐surgical video visit consults in plastic surgery. Substituted from the pre‐COVID‐19 project: Decreasing total operating room case cycle times (a root cause analysis) | | Interviews with patients and surgeons helped inform a triage tool to determine in‐person vs virtual appointment scheduling to optimize patient preference with clinical need, and established video visits as a viable option for early pre‐operative appointments in addition to follow‐up post‐operative appointments | Articles: Brown‐Johnson CG, Spargo T, Kling SMR, et al. (2021) Patient and surgeon experiences with video visits in plastic surgery‐toward a data‐informed scheduling triage tool. Surgery, 170(2):587‐595. 37 Fu SJ, George E, Maggio PM, et al. (2020) The consequences of delaying elective surgery: surgical perspective. Ann Surg, 272(2):e79‐e80. Conference abstracts: Spargo T, Brown‐Johnson CG, Park M, et al. Understanding the patient and provider experience with telehealth in plastic surgery. Oral presentation at: 16th Annual Academic Surgical Congress; February 2‐4, 2021; Online |
| 2020‐2021 | | | | |
| Dermatology | Digitally‐enabled team‐based inpatient to outpatient dermatology care coordination and transitions | | Project enhanced the department's understanding of challenging patient care transitions from inpatient to outpatient dermatology through patient, clinician, and scheduling staff interviews, improved data capture, and evaluation of the impact of teledermatology on this problem. Early insights from patients, caregivers, schedulers, and dermatologists helped inform a clinician‐developed, EHR‐based template and associated workflow that was successfully implemented to improve the percent of patients receiving outpatient follow‐up within the desired timeframe while reducing clinical and scheduling staff workload and burden. The evaluation identified barriers to the continued use of the EHR‐based template, which were addressed shortly after the evaluation. The mixed methods evaluation revealed to the clinical team that patient care transitions from inpatient to outpatient care is a complex, multi‐factorial problem that needs more than one solution | Articles: Kling SMR, Aleshin MA, Saliba‐Gustafsson EA, et al. Evolution of a project to improve inpatient‐to‐outpatient dermatology care transitions: a mixed methods evaluation [under review]. Kling SMR, Saliba‐Gustafsson EA, Winget M, et al. Teledermatology to facilitate transitions of care from inpatient to outpatient dermatology care: a mixed methods evaluation. JMIR [forthcoming/in press]. Saliba‐Gustafsson EA, Aleshin MA, Brown‐Johnson CG, et al. Inpatient to outpatient dermatology care transitions: a qualitative exploratory study of patient and caregiver experiences. [under review] Conference abstracts: Aleshin MA, Kwong BY, Saliba‐Gustafsson EA, et al. Improving efficiency in scheduling post‐discharge dermatology follow‐up. Oral presentation at: IHI Scientific Symposium; December 5‐8, 2021; Online. Kling SMR, Saliba‐Gustafsson EA, Garvert DW, et al. Unintentional impact of telemedicine: implementation and sustainability of using video visits to improve access during patient care transitions in dermatology. Poster presented at: 14th Annual Conference on the Science of Dissemination and Implementation in Health; December 14‐16, 2021; Online. Saliba‐Gustafsson EA, Kling SMR, Garvert DW, et al. Improving the efficiency of post‐discharge follow‐up scheduling in dermatology: a mixed methods evaluation. Poster presented at: 14th Annual Conference on the Science of Dissemination and Implementation in Health; December 14‐16, 2021; Online |
| Pathology | Reducing the cross‐matched to transfusion ratio of red blood cell products to improve product availability and reduce wastage | | In‐depth analysis of baseline EHR and blood management data resulted in changing the focus of an intervention target from location (ie, new vs old hospital) to clinical departments with inefficient utilization patterns that were contributing to red blood cell product waste during surgery. The more thorough understanding of the efficiency of red blood utilization prompted the team to target specific surgical specialties and individuals in the following fiscal year (2021‐2022) | Articles: Kling SMR, Garvert DW, Virk M, et al. Evolution of an analytic approach to identify inefficiencies in red blood cell ordering and transfusion for surgical cases. [in progress] |
| Radiology | Improving image quality through expansion of coaching model program to all imaging modalities | | The program improved diagnostic accuracy by optimizing technologists' skills capturing images; partnership informed the program rollout from its existence in two imaging modalities (ultrasound and mammography) to all 6 imaging modalities across the health system: ultrasound, mammography, x‐ray, nuclear medicine, computed tomography, magnetic resonance. Learning shared through a bi‐monthly learning collaborative helped to facilitate the dissemination of best practices and resources across all modalities | Articles: Hwang G, Vilendrer S, Amano A, et al. From acceptable to superlative: scaling a technologist coaching intervention to improve image quality. [under review] Conference abstracts: Amano A, Vilendrer S, Brown‐Johnson CG, et al. Adaptation and dissemination of a technologist coaching intervention to improve radiologic imaging exams: a qualitative analysis. Poster presented at: 14th Annual Conference on the Science of Dissemination and Implementation; December 14–16, 2021; Online |

Abbreviations: AI, artificial intelligence; EHR, electronic health record; PPE, personal protective equipment; QI, quality improvement.

3.2.1. Role definition during in‐depth partnered project evaluations

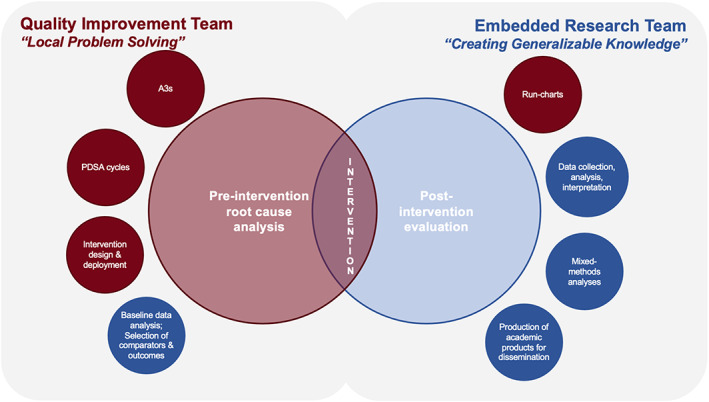

Each ICDP‐ESU project team defined roles and responsibilities at the outset of the collaboration. Internal reflection and partner feedback helped further delineate primary responsibilities as the collaboration progressed. Although both clinical and evaluation team members were involved in multiple aspects of each project, the QI project team typically led activities related to “local problem solving” through the development and implementation of an intervention, and the ESU team led activities related to “creating generalizable knowledge” through evaluation of the intervention and/or implementation strategy (Figure 2). Quantitative data were typically gathered from pre‐existing operational sources, whereas qualitative data were generated de novo through interviews and/or focus groups conducted by the ESU.

FIGURE 2.

Roles and responsibilities of quality improvement teams (left side) and the Evaluation Sciences Unit (right side) in Improvement Capability Development Program (ICDP) projects. Red represents roles and responsibilities typically associated with quality improvement whereas blue represents roles and responsibilities of embedded researchers. The exact balance of these roles and responsibilities was tailored for each ICDP project

The ESU team provided an objective view of each project and shared the common goal of successful intervention launch with QI project teams. The ESU's outside perspective often led to new interpretations of baseline data and/or the need to further define the underlying problem through additional stakeholder conversations, process mapping activities, and/or procurement of alternate data that ultimately helped refine the problem and intervention. Many projects were also re‐scoped to focus on more proximal outcomes and process measures to increase feasibility within the one‐year ICDP timeframe (eg, time to schedule a follow‐up appointment following a hospital discharge rather than readmission reduction).

The weekly QI project team‐ESU meetings provided the QI team an opportunity to expand their implementation and evaluation sciences skills. A subset of team members also accepted the ESU's standing invitation to join additional data analysis sessions, which they did based on availability and level of interest. These sessions involved teasing through detailed analyses of quantitative data or coding and consensus discussions with qualitative data. Although most clinicians engaged with the hands‐on learning the partnership offered, their bandwidth varied, particularly for physician project leaders for whom QI efforts competed against clinical and administrative duties. This led to delays in a subset of projects. One‐on‐one check‐ins between ICDP and ESU project leaders were occasionally required to reassess project status given fluctuating departmental and individual priorities.

3.3. Reflections from the ICDP‐ESU collaboration

3.3.1. Clinician partner feedback

End‐of‐year feedback from clinical partners was positive overall regarding ESU support in evaluation planning and design, building clinician capacity, contributing novel perspectives (including implementation science frameworks), and solidifying QI project teams through intentional team‐building. In one mixed methods project, a clinical partner reflected: “…two things that our ICDP team benefitted the most from the collaboration … assistance with data analysis and guidance with qualitative interviews/discussions. These would have been difficult to accomplish without the ESU team and may have required outside consultation for data analysis.” Another clinician reflected on the expertise gained through the ESU: “…working with the ESU helped our team understand ‘what we didn't know.’ For example, use of a step‐wedge design was never a consideration in the past.” Regarding operating as a learning health system, the increased capacity was reported to inspire clinicians to engage in publishable QI work: “I have learned so much from the team (ie, study design, quantitative vs qualitative research and mixed methods). […] it has really inspired other clinicians too, that publications can be done in QI.”

Improvement areas were also noted, primarily in terms of expectation setting for this novel partnership. Specifically, clinician partners asked for detailed timelines, short guides for introduction to qualitative methods, team roster and roles, and manuscript production expectations. In terms of manuscripts, clinician partners noted the need for flexibility: “…[we needed to be able to] discuss this point again during the middle stages of the project if QI expectations are not going in the expected direction.” Going forward, partners looked for more collaboration through enhanced team member responsibilities related to the evaluation and merging of implementation science and QI frameworks from project initiation.

3.3.2. ESU team insights and reflections

Enterprise‐wide partnership facilitated inter‐departmental learnings

The ICDP‐ESU collaboration enabled the evaluation team to collectively assemble observations across diverse departments and identify lessons from analogous QI efforts across the health system. This was particularly valuable in facilitating cross talk and dissemination of best practices to support nimble transitions during a period when clinical teams were absorbed with the shifting healthcare realities of COVID‐19.

This was exemplified in the implementation and evaluation of telemedicine. Entering the COVID‐19 pandemic, Stanford Medicine had the advantage of several years of experience with forays into telemedicine that, albeit relatively small, were mature and well supported. The health system was committed and well positioned to implement telemedicine swiftly and universally at the start of the pandemic, and the ICDP‐ESU collaboration ensured that this was accompanied by rigorous evaluation. Specifically, the ESU actively supported parallel evaluation of telemedicine deployment in the health system's Departments of Neurology, 33 , 34 , 35 , 36 Dermatology, Surgery, 37 and Primary Care, 38 as well as novel application of telemedicine in the emergency department 39 and inpatient settings. 40 , 41 Learnings were shared across clinical departments to promote telehealth implementation best practices (eg, triage criteria, virtual physical exam skills, trainee integration into virtual visits); equally important was the sharing of research methods, tools, and experience. This was particularly relevant in gathering patient and caregiver perspectives during the pandemic when in‐person interviews in clinic were not feasible; proactive outreach with scheduled phone and video calls helped the teams obtain these perspectives for several projects. 33 , 37 , 42 , 43 Last, the ESU's collective experience and emerging expertise in this domain resulted in a collaborative analysis of telemedicine implementation across multiple healthcare systems. 44

The networks built from the ICDP‐ESU collaboration also facilitated unexpected partnerships in which physician trainees were linked with attendings who shared a similar interest to document changes happening within the healthcare system. These linkages led to additional publications within surgery, 45 radiology, 46 and primary care. 47

Need for multi‐year support to enable nimble response to dynamic health system needs

Funding for each cycle was determined annually, which proved challenging for several reasons. First, projects were necessarily scoped smaller in terms of outcome, target population, or extensiveness of the intervention than might have otherwise been optimal, given the timeline (see examples in Appendix S3). Interventions were often not launched until halfway through the year, given time needed for initial consultations, project selection for in‐depth evaluation, and launch of project‐based clinician/researcher partnerships. Thus, project impact and/or stakeholder experience could only be assessed in the early stages of implementation. Increased emphasis was necessarily placed on proximal outcomes, such as patient acceptance or clinician adoption, rather than distal outcomes such as intervention effectiveness, despite the latter being of additional interest to stakeholders. Time limitations also put projects with small patient populations or that focused on rare outcomes at a disadvantage in the selection process. In addition, some Year 1 activities inadvertently extended into Year 2, including data analysis and manuscript preparation. This often meant that researchers and project leaders were stretched thin extending work beyond the original scope of the project. Finally, projects with promising next steps were not able to pursue a “second round” of funding to support the next stage of their evaluation, leading to possible missed opportunities. Planning for research staff for the longer‐term evaluation needs of the institution overall remained an ongoing challenge. Alternate funding strategies that benefit all stakeholders are currently being explored.

Need for timely access to quantitative data

The annual funding cycle also restricted teams' ability to identify and access appropriate data to meet project goals within a short timeline. Prior to the in‐depth partnership, QI project teams typically had either incomplete baseline data or no data access at all; data acquisition often occurred simultaneously with intervention and evaluation planning. This contributed to delays in understanding the problem, identifying the population and outcomes of interest, and ultimately, launching the intervention and its evaluation. These data resources were also essential for qualitative data collection, specifically in identifying patients for interviews.

A significant benefit, however, was that the ICDP‐ESU collaboration provided critical funding and support to give the ESU additional access to EHR‐based data through Epic and Clarity. This required the ESU biostatistician to complete four certificates from Epic University (ie, Cogito, Caboodle Data Model, Clarity Data Model, and Clinical Data Model) in Year 1. Access to the institution's Clarity required support from the Chief Medical Officer, Chief Analytics Officer, and ICDP leadership; full access was obtained during the second half of Year 2. The ICDP‐ESU collaboration funded the ESU's biostatistician at approximately 0.25 FTE for 18 mo to complete the Epic certifications, pass a Stanford‐based skills test, and obtain Clarity access. The collaboration also funded Epic University tuition for the certificate courses, travel and accommodations to Epic headquarters, and testing fees. Year 2 projects therefore benefited most from this streamlined data access. Putting this new capability to practical use came with a steep learning curve, which was compounded by the simultaneous need to define and refine the populations and outcomes of interest depending on each project's needs.

The ESU identified key characteristics of QI project teams that helped facilitate quantitative evaluation. Of the 7 projects presented, the most successful quantitative evaluations involved QI project teams that were already closely connected with the health system's clinical business analytics center and therefore had pre‐existing access to operational and clinical data (eg, dashboards) for operational purposes. Having a clinician champion familiar with these existing resources was essential to understand available data and generate rough baseline values (eg, population size) with ESU support. With these resources, the ESU could quickly identify remaining needs and subsequently either collaborate with the clinical business analytics center or work with the ESU biostatistician to obtain additional data. These examples of success highlight the need for all QI teams, regardless of ESU partnership, to have data access and/or connection to an entity that can produce operational dashboards to help clinicians evaluate their QI efforts.

4. DISCUSSION

The ICDP‐ESU collaboration embeds researchers into clinician‐led QI teams to strengthen the validity, reliability, and actionability of QI evaluations within an academic learning health system. Within the seven projects selected for in‐depth analysis across the first 2 y of the collaboration, clinician‐researcher teams worked toward implementing and evaluating solutions to ongoing clinical challenges in real‐world settings, combining QI tools and implementation science methodology. Evaluators offered actionable, summative findings to clinical partners and leadership to inform operational improvement and shared learnings through scholarly work.

Recognized benefits reported here, predominantly the co‐production of knowledge that is responsive to the host organization's needs, align with experiences of other embedded research partnerships. 17 The collaboration functioned to a high degree of embeddedness within the health system, 48 where researchers were directly involved in the evolution of QI projects; evaluation learnings were shared on a weekly basis with clinical partners to inform iterative improvement. This is perhaps best exemplified in the collaboration's work to inform a nimble telemedicine response early in the COVID‐19 pandemic when the health system was most vulnerable, with the ESU serving as a central hub in an evolving clinician‐researcher network. The ESU's mixed methods expertise also provided clinicians with new skills to enhance the health system's overall evaluation capacity. Future work may focus on quantifying clinician partner satisfaction and the extent to which these skills foster ongoing rigor in QI evaluation, independent of researcher involvement.

The ICDP‐ESU collaboration functioned at a decentralized, project level relative to some other embedded research models. 17 , 20 Evaluations benefited from involvement of frontline clinician partners, who shared unique insights into clinical challenges and ultimately led the development, implementation, and iterative improvement of discrete QI interventions. However, the decentralized model had limited reach in terms of both organizational impact and clinician capacity building: while all departments were invited to workshop project evaluations during consultations with the ESU, only three projects from three departments received in‐depth evaluation support from the ESU out of approximately 60 possible projects from 18 departments annually. Therefore, the collaboration is necessarily only one aspect of the organization's multi‐faceted QI and clinician development effort. Further, clinician‐led ICDP projects typically align with, but are not determined exclusively by, the broader operational needs of the healthcare system, and the 1 y timeline limited the longitudinal impact of any given project. The collaboration could therefore miss higher yield evaluation opportunities that alternate, centralized evaluation models might capture. 20 Expanding the collaboration timeline and focus to address health system needs at the operational domain level (eg, focusing on a multi‐year patient experience project rather than an annual department‐specific project) may have broader QI and clinician educational impact. Further, supporting an option for follow‐on evaluations of particularly promising projects could also enhance depth of insight; these are areas of ongoing exploration as the partnership evolves.

The tradeoff between responding to local needs, which can be exquisitely nuanced, and the broader relevance of knowledge gained remains a challenge. Often, an evaluation of a local solution may be valid and have significant organizational impact but have reduced relevance to outside entities. We aimed to choose high‐potential projects in terms of evaluability, organizational importance, and broader relevance, but projects that met all three criteria were rare. System‐wide QI efforts, as well as researcher involvement earlier in the QI project pipeline, may increase the pool of high‐potential projects and are actively being pursued. Project scope was also frequently narrowed as we elected to trade off breadth of understanding in favor of timeliness and validity of knowledge. Striking the right balance along the “double bottom line” to both add operational value to the health system and disseminate learnings through scholarship is an ongoing discussion within the collaboration and is reflected more broadly in the wider literature. 49 , 50

Finally, ongoing program challenges include funding sustainability, which has thus far relied upon operational clinical revenue and is therefore subject to market considerations and the operational and clinical leaders' priorities for the health system. Traditional sources of support for academic pursuits (ie, federal or foundation grants) are not often applicable to year‐over‐year health system improvement efforts; thus, the program's survival will likely depend on its ability to demonstrate continual institutional impact over time. Yet, quantifying this impact is its own challenge, given project diversity in improvement area, target process and outcome measures, and degree and breadth of stakeholder involvement. An evaluation of the program overall might be limited to a qualitative analysis and/or focus on broadly applicable quantitative outcomes such as dollars saved or formally measured patient satisfaction. Other program improvements include the need to protect time for clinician project leaders to engage in project activities and ongoing efforts to direct departmental incentive payments toward QI efforts; these changes could help foster a virtuous cycle of continuous improvement. Finally, the collaboration invested in the ESU biostatistician's ability to directly access EHR and operational data; streamlining this cumbersome process for other ESU or department‐based biostatisticians will also speed the institution's ability to capture rapid insights from its data.

5. CONCLUSION

The presence of embedded researchers in a health system‐wide incentivized QI program supported rigorous evaluation of a subset of clinician‐led QI projects, dissemination through scholarly work, and clinician capacity building in mixed methods evaluation and implementation sciences. Frontline clinician insights and clinician‐researcher networks facilitated the dissemination of best practices from analogous efforts (eg, telemedicine). Future considerations include the need for multi‐year support, protected time for participating QI clinical leaders, and streamlined data access.

FUNDING INFORMATION

This project was supported by Stanford Health Care as part of the Improvement Capability Development Program.

CONFLICT OF INTEREST

The authors have no conflicts of interest to report.

Supporting information

APPENDIX S1 Supporting Information.

ACKNOWLEDGEMENTS

The authors acknowledge Stanford Health Care for their financial support of the above collaboration, in particular Nilushka Melnick for her support and ongoing development of ICDP. The authors also acknowledge Dr. Sang‐ick Chang and the Division of Primary Care and Population Health within the Stanford School of Medicine for their financial support of the above collaboration. Finally, the authors also acknowledge the Stanford Medicine Center for Improvement for fostering an inter‐disciplinary improvement environment across Stanford Medicine.

Vilendrer S, Saliba‐Gustafsson EA, Asch SM, et al. Evaluating clinician‐led quality improvement initiatives: A system‐wide embedded research partnership at Stanford Medicine. Learn Health Sys. 2022;6(4):e10335. doi: 10.1002/lrh2.10335

DATA AVAILABILITY STATEMENT

The collaboration between the ESU and ICDP is jointly funded by Stanford Health Care and the Division of Primary Care and Population Health at Stanford School of Medicine.

REFERENCES

- 1. Institute of Medicine (U.S.), Grossmann C, Powers B, McGinnis JM. Digital infrastructure for the learning health system: the Foundation for Continuous Improvement in health and health care: workshop series summary. Washington, DC: National Academies Press; 2011.

- 2. Best care at lower cost: the path to continuously learning health care in America. Washington, DC: National Academies Press; 2013:13444. doi: 10.17226/13444

- 3. Foley TJ, Vale L. What role for learning health systems in quality improvement within healthcare providers? Learn Health Sys. 2017;1(4):e10025. doi: 10.1002/lrh2.10025

- 4. Enticott J, Johnson A, Teede H. Learning health systems using data to drive healthcare improvement and impact: a systematic review. BMC Health Serv Res. 2021;21(1):200. doi: 10.1186/s12913-021-06215-8

- 5. Delaney BC, Curcin V, Andreasson A, et al. Translational medicine and patient safety in Europe: TRANSFoRm—architecture for the learning health system in Europe. Biomed Res Int. 2015;2015:1‐8. doi: 10.1155/2015/961526

- 6. Mullins CD, Wingate LT, Edwards HA, Tofade T, Wutoh A. Transitioning from learning healthcare systems to learning health care communities. J Comp Eff Res. 2018;7(6):603‐614. doi: 10.2217/cer-2017-0105

- 7. Stefan MS, Salvador D, Lagu TK. Pandemic as a catalyst for rapid implementation: how our hospital became a learning health system overnight. Am J Med Qual. 2021;36(1):60‐62. doi: 10.1177/1062860620961171

- 8. Vahidy F, Jones SL, Tano ME, et al. Rapid response to drive COVID‐19 research in a learning health care system: rationale and Design of the Houston Methodist COVID‐19 surveillance and outcomes registry (CURATOR). JMIR Med Inform. 2021;9(2):e26773. doi: 10.2196/26773

- 9. Deans KJ, Sabihi S, Forrest CB. Learning health systems. Semin Pediatr Surg. 2018;27(6):375‐378. doi: 10.1053/j.sempedsurg.2018.10.005

- 10. Psek WA, Stametz RA, Bailey‐Davis LD, et al. Operationalizing the learning health care system in an integrated delivery system. eGEMs. 2015;3(1):6. doi: 10.13063/2327-9214.1122

- 11. Menear M, Blanchette MA, Demers‐Payette O, Roy D. A framework for value‐creating learning health systems. Health Res Policy Syst. 2019;17(1):79. doi: 10.1186/s12961-019-0477-3

- 12. Snell AJ, Briscoe D, Dickson G. From the inside out: the engagement of physicians as leaders in health care settings. Qual Health Res. 2011;21(7):952‐967. doi: 10.1177/1049732311399780

- 13. Herman DD, Weiss CH, Thomson CC. Educational strategies for training in quality improvement and implementation medicine. ATS Sch. 2020;1(1):20‐32. doi: 10.34197/ats-scholar.2019-0012PS

- 14. Davey P, Thakore S, Tully V. How to embed quality improvement into medical training. BMJ. 2022;376:e055084. doi: 10.1136/bmj-2020-055084

- 15. Melder A, Robinson T, McLoughlin I, Iedema R, Teede H. An overview of healthcare improvement: unpacking the complexity for clinicians and managers in a learning health system. Intern Med J. 2020;50(10):1174‐1184. doi: 10.1111/imj.14876

- 16. Walkey AJ, Bor J, Cordella NJ. Novel tools for a learning health system: a combined difference‐in‐difference/regression discontinuity approach to evaluate effectiveness of a readmission reduction initiative. BMJ Qual Saf. 2020;29(2):161‐167. doi: 10.1136/bmjqs-2019-009734

- 17. Vindrola‐Padros C, Pape T, Utley M, Fulop NJ. The role of embedded research in quality improvement: a narrative review. BMJ Qual Saf. 2017;26(1):70‐80. doi: 10.1136/bmjqs-2015-004877

- 18. Grumbach K, Lucey CR, Johnston SC. Transforming from centers of learning to learning health systems: the challenge for academic health centers. JAMA. 2014;311(11):1109‐1110. doi: 10.1001/jama.2014.705

- 19. Chambers DA, Feero WG, Khoury MJ. Convergence of implementation science, precision medicine, and the learning health care system: a new model for biomedical research. JAMA. 2016;315(18):1941‐1942. doi: 10.1001/jama.2016.3867

- 20. Horwitz LI, Kuznetsova M, Jones SA. Creating a learning health system through rapid‐cycle, Randomized Testing. N Engl J Med. 2019;381(12):1175‐1179. doi: 10.1056/NEJMsb1900856

- 21. Lessard L, Michalowski W, Fung‐Kee‐Fung M, Jones L, Grudniewicz A. Architectural frameworks: defining the structures for implementing learning health systems. Implementation Sci. 2017;12(1):78. doi: 10.1186/s13012-017-0607-7

- 22. Allen C, Coleman K, Mettert K, Lewis C, Westbrook E, Lozano P. A roadmap to operationalize and evaluate impact in a learning health system. Learn Health Syst. 2021;5(4):e10258. doi: 10.1002/lrh2.10258

- 23. Mathews SC, Demski R, Hooper JE, et al. A model for the departmental quality management infrastructure within an academic health system. Acad Med. 2017;92(5):608‐613. doi: 10.1097/ACM.0000000000001380

- 24. Pronovost PJ, Mathews SC, Chute CG, Rosen A. Creating a purpose‐driven learning and improving health system: the Johns Hopkins medicine quality and safety experience. Learn Health Syst. 2017;1(1):e10018. doi: 10.1002/lrh2.10018

- 25. Improvement Capability Development Program. Stanford medicine: neurology & neurological sciences. Accessed March 25, 2022. https://med.stanford.edu/neurology/quality/icdp.html

- 26. Evaluation Sciences Unit. Stanford medicine. Accessed March 25, 2022. https://med.stanford.edu/evalsci.html

- 27. Proctor E, Silmere H, Raghavan R, et al. Outcomes for implementation research: conceptual distinctions, measurement challenges, and research agenda. Adm Policy Mental Health Mental Health Serv Res. 2011;38(2):65‐76. doi: 10.1007/s10488-010-0319-7

- 28. Averill JB. Matrix analysis as a complementary analytic strategy in qualitative inquiry. Qual Health Res. 2002;12(6):855‐866. doi: 10.1177/104973230201200611

- 29. STanford Research Repository (STARR) Tools. https://med.stanford.edu/starr-tools.html

- 30. REDCAP@Stanford. http://redcap.stanford.edu

- 31. R Core Team (2021). R: a language and environment for statistical computing. R Foundation for Statistical Computing. Vienna Austria. https://www.r-project.org/

- 32. Brown‐Johnson C, Safaeinili N, Zionts D, et al. The Stanford lightning report method: a comparison of rapid qualitative synthesis results across four implementation evaluations. Learn Health Syst. 2019;4:e10210. doi: 10.1002/lrh2.10210

- 33. Kling SMR, Falco‐Walter JJ, Saliba‐Gustafsson EA, et al. Patient and clinician perspectives of new and return ambulatory Teleneurology visits. Neurol Clin Pract. 2021;11(6):472‐483. doi: 10.1212/CPJ.0000000000001065

- 34. Ng J, Chen J, Liu M, et al. Resident‐driven strategies to improve the educational experience of teleneurology (5111). Neurology. 2021;96(15 Supplement):5111. http://n.neurology.org/content/96/15_Supplement/5111.abstract

- 35. Saliba‐Gustafsson EA, Miller‐Kuhlmann R, Kling SMR, et al. Rapid implementation of video visits in neurology during COVID‐19: mixed methods evaluation. J Med Internet Res. 2020;22(12):e24328. doi: 10.2196/24328

- 36. Yang L, Brown‐Johnson CG, Miller‐Kuhlmann R, et al. Accelerated launch of video visits in ambulatory neurology during COVID‐19: key lessons from the Stanford experience. Neurology. 2020;95(7):305‐311. doi: 10.1212/WNL.0000000000010015

- 37. Brown‐Johnson CG, Spargo T, Kling SMR, et al. Patient and surgeon experiences with video visits in plastic surgery–toward a data‐informed scheduling triage tool. Surgery. 2021;170(2):587‐595. doi: 10.1016/j.surg.2021.03.029

- 38. Srinivasan M, Asch S, Vilendrer S, et al. Qualitative assessment of rapid system transformation to primary care video visits at an Academic Medical Center. Ann Intern Med. 2020;6:527‐535. doi: 10.7326/M20-1814

- 39. Patel B, Vilendrer S, Kling SMR, et al. Using a real‐time locating system to evaluate the impact of telemedicine in an emergency department during COVID‐19: observational study. J Med Internet Res. 2021;23(7):e29240. doi: 10.2196/29240

- 40. Safaeinili N, Vilendrer S, Williamson E, et al. Inpatient telemedicine implementation as an infection control response to COVID‐19: a qualitative process evaluation. JMIR Form Res. 2021;19:e26452. doi: 10.2196/26452

- 41. Vilendrer SM, Sackeyfio S, Akinbami E, et al. Patient perspectives of inpatient telemedicine during COVID‐19: a qualitative assessment. JMIR Form Res. 2021;6(3):e32933.

- 42. Vilendrer S, Sackeyfio S, Akinbami E, et al. Patient perspectives of inpatient telemedicine during the COVID‐19 pandemic: qualitative assessment. JMIR Form Res. 2022;6(3):e32933. doi: 10.2196/32933

- 43. Kling SMR, Saliba‐Gustafsson EA, Winget M, et al. Teledermatology to facilitate patient care transitions from inpatient to outpatient dermatology: a mixed methods evaluation (preprint). J Med Int Res. 2022;24(8):e38792. doi: 10.2196/38792

- 44. Vilendrer S, Patel B, Chadwick W, et al. Rapid deployment of inpatient telemedicine in response to COVID‐19 across three health systems. J Am Med Inform Assoc. 2020;27(7):1102‐1109. doi: 10.1093/jamia/ocaa077

- 45. Fu SJ, George EL, Maggio PM, Hawn M, Nazerali R. The consequences of delaying elective surgery: surgical perspective. Ann Surg. 2020;272(2):e79‐e80. doi: 10.1097/SLA.0000000000003998

- 46. Madhuripan N, Cheung HMC, Alicia Cheong LH, Jawahar A, Willis MH, Larson DB. Variables influencing radiology volume recovery during the next phase of the coronavirus disease 2019 (COVID‐19) pandemic. J Am Coll Radiol. 2020;17(7):855‐864. doi: 10.1016/j.jacr.2020.05.026

- 47. Thomas SC, Carmichael H, Vilendrer S, Artandi M. Integrating telemedicine triage and drive‐through testing for COVID‐19 rapid response. Health Policy Manag Innovat. 2020;3:18.

- 48. Jackson GL, Damschroder LJ, White BS, et al. Balancing reality in embedded research and evaluation: low vs high embeddedness. Learn Health Syst. 2022;6(2):e10294. doi: 10.1002/lrh2.10294

- 49. Atkins D, Kullgren JT, Simpson L. Enhancing the role of research in a learning health care system. Healthcare. 2021;8:100556. doi: 10.1016/j.hjdsi.2021.100556

- 50. Damschroder LJ, Knighton AJ, Griese E, et al. Recommendations for strengthening the role of embedded researchers to accelerate implementation in health systems: findings from a state‐of‐the‐art (SOTA) conference workgroup. Healthcare. 2021;8:100455. doi: 10.1016/j.hjdsi.2020.100455

- 51. Holden RJ, Carayon P, Gurses AP, et al. SEIPS 2.0: a human factors framework for studying and improving the work of healthcare professionals and patients. Ergonomics. 2013;56(11):1669‐1686. doi: 10.1080/00140139.2013.838643

- 52. Holdsworth LM, Kling SMR, Smith M, et al. Predicting and responding to clinical deterioration in hospitalized patients by using artificial intelligence: protocol for a mixed methods, stepped wedge study. JMIR Res Protoc. 2021;10(7):e27532. doi: 10.2196/27532

- 53. Vilendrer S, Lough ME, Garvert DW, et al. Nursing workflow change in a COVID‐19 inpatient unit following the deployment of inpatient telehealth: observational study using a real‐time locating system. J Med Internet Res. 2022;24(6):e36882. doi: 10.2196/36882