Abstract

Many effective methods extract and fuse different protein features to study the relationship between protein sequence, structure, and function, but different methods have preferences in solving the research of protein structure and function, which requires selecting valuable and contributing features to design more effective prediction methods. This work mainly focused on the feature selection methods in the study of protein structure and function, and systematically compared and analyzed the efficiency of different feature selection methods in the prediction of protein structures, protein disorders, protein molecular chaperones, and protein solubility. The results show that the feature selection method based on nonlinear SVM performs best in protein structure prediction, protein solubility prediction, protein molecular chaperone prediction, and protein solubility prediction. After selection, the accuracy of features is improved by 13.16% ~71%, especially the Kmer features and PSSM features of proteins.

1. Introduction

Protein structure and function is the basic research field of protein research, which is of great significance for the study of protein folding rate, DNA binding sites, and protein folding recognition [1–7]. In recent years, the gap between protein sequence and protein structure is becoming larger and larger with the development of sequencing technology, and the speed of identifying protein structure and function through experimental methods is relatively slow. Therefore, it is necessary to develop computational methods to quickly and accurately determine protein structure and function.

The function of a protein is determined by its spatial structure, which is determined by its sequence. Therefore, sequence information can be used to predict protein structure and function directly, so as to further guide biological experiments and reduce experimental costs. After the concept of protein structure class was put forward, several protein structure and function prediction methods were proposed [3–5, 7–11]. Some methods use protein composition information to predict protein structure and function [1, 12, 13]. For example, short peptide composition [14–16], pseudo amino acid composition [17–20], and functional domain composition match [21]. The sequence characteristic information is expressed as amino acid composition (AAC) by calculating the ratio of 20 amino acid residues in the sequence [14–16], but it does not take into account the physicochemical properties and interaction of amino acids. In order to overcome the above problems, pseudo amino acid composition (PseACC) calculates the composition of amino acid residues based on the hydrophobicity and other physical and chemical properties of amino acid residues [17–23].

The above methods are outstanding in high similarity data, but for low similarity data, their performance is ordinary, with prediction accuracy 50%. Therefore, we need to design more effective prediction algorithm. Kurgan et al. predicted protein secondary structures and designed SCPRED method on this basis [24]. Zhang et al. calculated the TPM matrix and took it as the characteristic representation of the protein secondary structures [25]. Dai et al. statistically analyzed the characteristic distribution of protein secondary structures and applied them to protein structure prediction [26]. Ding et al. constructed a multidimensional representation vector of protein secondary structure features, and fused it with existing features to achieve protein structure prediction [27]. Chen et al. and Kumar et al. combined structural information with physical and chemical characteristics to design a protein structure prediction method [28, 29]. Nanni et al. calculated the primary sequence characteristics and secondary structure characteristics of protein, respectively, for protein structure and function prediction [30]. Wang et al. simplified PSSM features and combined them with protein secondary structure features for protein structure prediction [31].

Through the fusion of the above features, the prediction accuracy of some methods on low similarity data sets has been improved to more than 80%, but there are still some problems in the development of protein structure and function prediction. In order to improve the prediction accuracy and efficiency of the model, the existing research is mainly achieved by fusing different types of protein features. However, it is worth noting that a simple combination of different features does not necessarily improve the prediction performance. If the combination is not appropriate, it may even offset the information contained in each other, which will not only lead to information redundancy but also increase the complexity and calculation of the model. This requires selecting valuable and contributing features, and then effective fusion, in order to design more effective prediction methods of protein structure and function.

With the above problems in mind, we introduced 16 feature selection methods based on mutual information, feature selection based on support vector machine, feature selection based on genetic algorithm, feature selection based on kurtosis and skewness, ReliefF, and sequentialfs information selection, and systematically compared their performance in protein structure class prediction, protein disorder prediction, protein molecular chaperone prediction, and protein solubility prediction. Through a comprehensive comparison and discussion, some novel valuable guidelines for use of the feature selection method for protein structure and function prediction are obtained.

2. Materials and Methods

2.1. Datasets

Four standard data sets for protein structure and function prediction were used in this work, which are protein structural class data set, molecular chaperone data set [32], solubility data set [33], and protein disorder data set [34]. The structure data set consists of 278 α structural proteins and 192 β proteins composition of structure. The molecular chaperone data set [35] is composed of 109 proteins that need Dnak/GroEL molecular chaperones to fold correctly, and 39 proteins that can fold autonomously. The solubility data set [36] is composed of 1000 proteins with high solubility and 1000 proteins with low solubility. The protein disorder data set is composed of 630 disordered proteins from DisProt and 3347 structural proteins from SCOP [37]. The detailed information of the data set is shown in Table 1.

Table 1.

Detailed information of protein structure and function data.

| Dataset | Positive | Negative | Total |

|---|---|---|---|

| Protein structural class | 278 | 192 | 470 |

| Molecular chaperone | 109 | 39 | 148 |

| Solubility | 1000 | 1000 | 2000 |

| Protein disorder | 630 | 3347 | 3977 |

2.2. Sequence Feature

Six kinds of different characteristic information of proteins are extracted [26]. They are Kmer, Pseudo Amino Acid Composition (PseAAC), Correlation-based features (correlation), composition-transition-distribution (RCTD), order-based features (order), position-based features (position), GO, and position-specific score matrix (PSSM).

2.3. Feature Selection

2.3.1. Feature Selection Based on Mutual Information

Feature selection based on mutual information has become more and more popular in data mining, especially because of their ease of use, effectiveness, and strong theoretical foundation rooted in information theory. We adopted nine feature selection algorithms based on mutual information [38], which are maxRelFS, MRMRFS, minRedFS, MIQFS, QPFSFS, SPECCMI_Fs, MRMTRFS, CMIMFSand, and CIFEFS. The common point of these methods is that they all focus on the concepts of redundancy and correlation, and use greedy schemes to build the selected feature sets incrementally. Given a sample, the column is the characteristic matrix X, and the corresponding category is C. The calculation formula of mutual information is

| (1) |

If the selected set is S, the calculation formula of redundancy is as follows:

| (2) |

The above nine feature selection algorithms calculate the mutual information value of each feature and category C, and select the feature with the largest mutual information as the optimal feature. Then, according to the feature selection method of quadratic programming, the features with minimum redundancy and maximum correlation are selected one by one. Finally, we can get a feature vector sorted according to the importance of features.

2.3.2. Support Vector Machine Recursive Feature Extraction (SVM-RFE)

Support vector machine recursive feature extraction (SVM-RFE) is divided into linear SVM-RFE and nonlinear SVM-RFE. The details are as follows:

(1) Linear SVM-RFE. For a samples {xi, yi}, the objective function of linear SVM-RFE is

| (3) |

where a is weight factor and b is deviation. Thus, the Lagrangian version of this problem can be expressed as

| (4) |

where αi is Lagrange factor. αi can be calculated by LD maximum under the condition of αi ≥0 and ∑i=1nαiyi = 0. Weighting factors can be calculated by the following formula:

| (5) |

k-th feature sorting criteria is the square of the k-th weighting factor.

| (6) |

In the training process, the feature with the smallest influence factor will be deleted every time, and so on, until all the features are deleted. Then, the importance of features is sorted according to the order in which they are deleted [39].

(2) Nonlinear SVM-RFE. In many cases, the number of features of the sample will be more than the number of samples. At this time, using linear SVM-RFE can avoid the phenomenon of over fitting [40]. However, when the number of samples is greater than the number of features, the selection result of nonlinear SVM-RFE will be better than that of linear SVM-RFE.

Nonlinear SVM-RFE will map features to new spaces with higher dimensions as follows:

| (7) |

In the new space, the samples are expected to be linearly separable. Its Lagrangian form can be expressed as

| (8) |

Thus, we could transform inner product φ(xi)φ(xj) into a Gaussian kernel K(xi, xj) as follows:

| (9) |

Thus, k-th feature sorting criteria could be expressed as

| (10) |

x i (−k) represents that feature k has been removed.

2.3.3. Feature Selection Based on Genetic Algorithm

We adopted the assembled neural network (ASNN) algorithm. This method carries out combinatorial optimization by using the idea of genetic algorithm. For a given data set, a behavior sample can be constructed and listed as the matrix X of features [41], and finally a feature vector will be obtained, which is the optimal feature set, but the ranking of each feature is not related to its importance.

2.3.4. Feature Selection Based on Kurtosis and Skewness

For a vector of length n {x1, x2, ..., xn}, its kurtosis and skewness are calculated as follows:

| (11) |

Kurtosis and skewness are statistics used to measure the distribution of data. In this work, we calculated the skewness and kurtosis of each feature, and then sort them according to their values as a method to measure the importance of features.

2.3.5. Relieff Algorithm

Relieff algorithm randomly takes a sample R from the training sample set every time, then finds k nearest neighbor samples of R from the sample set of the same kind as R, and finds k nearest neighbor samples from the sample set of different classes of each R, and then updates the weight of each feature. The formula is as follows:

| (12) |

where diff(A, R1, R2) means the difference between feature R1 and R2 in feature A. Mj(C) means the j-th nearest neighbor sample in class C. Formula is as follows:

| (13) |

2.3.6. Sequentialfs

We adopted the forward feature selection algorithm of sequence in this work. For a training set {xtrain,ytrain} and validation set {xvalidation,yvalidation}, the evaluation criteria can be expressed as

| (14) |

2.4. Classification Algorithm

Support vector machine is a large-scale edge classifier based on statistical learning theory [42]. It uses the optimal separation hyperplane to separate two kinds of data. For binary support vector machines, the decision function is

| (15) |

where b is a constant, C is a cost parameter controlling the trade-off between allowing training errors and forcing rigid margins, yiϵ{−1, +1}, xi is the support vector, 0 ≤ αi ≤ C, and K(xi, x) is the kernel function. This work chooses the Gauss kernel function of support vector machine because of its superiority in solving nonlinear problems [34, 37]. Furthermore, a simple grid search strategy is used to select the parameters C and gamma with the highest overall prediction. It is designed based on 10 times cross validation of each dataset, and the values of C and gamma are taken from the 2−10 to 210.

2.5. Performance Evaluation

This work adopted different feature selection methods for different data sets, and used the leave one method for evaluation. Finally, the prediction results are compared by calculating accuracy.

For each data set, we compared the efficiency of different feature selection methods through the following steps. The following takes the feature selection method based on genetic algorithm (GA) and PSSM features as examples to introduce the evaluation process:

PSSM information is selected by GA feature selection method

Select the top 10, 20, 30, 40, and 50 features using GA (if the number of features is insufficient, all the information will be taken out), input them into SVM classifier for classification prediction, and calculate the accuracy of prediction ACC1, ACC2, ACC3, ACC4, and ACC5

Subtract the accuracy of the whole PSSM information from ACC1, ACC2, ACC 3, ACC 4, and ACC 5

Compare the changes in accuracy of various special products after 16 selection methods

We also compared and analyzed the characteristics of selection, and the main steps are as follows:

Use the above 16 selection methods to select each type of feature

According to the selection results of 16 feature selection methods, the importance of each type of feature is ranked

Take out the first 10, 20, 30, 40, and 50 features of each type of feature, respectively, (if the number of features is insufficient, all the information will be taken out) and mix them together as five new mixed features (I10, I20, I30, I40, I50);

Then, 16 feature selection methods are used to select the mixed features

According to the results of the fourth step, the importance of the fused features is ranked

Take out the top 10 features and count the type of features from which these 10 features come. Take out the top 20 features and count the categories of features

In five cases, if there are a large number of certain features (or observed), it means that such features are more important

3. Results and Discussion

3.1. Comparison of Feature Selection in Protein Structure Prediction

We first discussed the efficiency of different feature selection methods in protein structure prediction. We adopted the structural data set, which contains 278 items α structural proteins and 192 β structural proteins. In this work, eight kinds of features are selected through 16 feature selection methods, and the selected features are input into the support vector machine to predict the structural class of protein. The quality of feature selection methods is evaluated based on the accuracy of prediction, which are represented in Figure 1 and Supplementary Figures 1–4.

Figure 1.

The comparison between the accuracy of support vector machine prediction and that of single class feature prediction after selecting the top 10 features. For each graph, the selection method is arranged from left to right and from top to bottom. They are GA, and there are nine selection methods of mutual information, relief, sequentialfs, linear SVM, nonlinear SVM, kurtosis, and skewness. The horizontal axis represents sequence features, which are PSSM, go, Kmer, RCTD, PRseAAC, correlation, order, and position, respectively.

From Figure 1 and Supplementary Figures 1–4, it is easy to note that the accuracy of MRMRFS, MRMTRFS, CMIMFS, CIFEFS, and nonlinear SVM feature selection methods changes the most, and the change range is 3.19% for the position feature. By comparing the accuracy of the first 20-50 features selected with that of the unselected features, it can be seen that the biggest change in accuracy is the GO features selected by nonlinear SVM, with changes of 2.13%, 6.39%, 6.17%, and 4.68%, respectively. Therefore, nonlinear SVM feature selection method performs best in protein structure prediction.

For structural data sets [43], we further compared and analyzed the types of selected features. First, eight types of features are fused, and the fused features are selected through 16 feature selection methods, and the top 10-50 features are selected. Then, the number of eight types of features in the top 10-50 total selected features is counted, and the preference of eight types of features is evaluated by proportion. Figure 2 shows the number of 8 types of features in the top 10-50 total selected features in the protein structural data.

Figure 2.

The number of 8 types of features in the top selected features in the protein structural data. From (a) to (e), it means that the number of selected features is 10 to 50, respectively.

Figure 2 show that when the total number of selections is 10, there are 5 order features, accounting for 50%. When the total selection number is 20, there are 8 order features, accounting for 40%. When the selection number is 40, both order and RCTD have 10, accounting for 25% of the top 40 features. When the total selection number is 50, there are 12 orders and RCTD features, respectively, accounting for 24% of the total. The above results show that order feature is the first choice for protein structure prediction, followed by RCTD feature.

3.2. Comparison of Feature Selection in Protein Disorder Prediction

We then discussed the efficiency of different feature selection methods in protein disorder prediction. The protein disorder data set [44] used in this chapter is from two protein databases related to structural classes, including 630 disordered proteins from disProt and 3347 structural proteins from SCOP. In this work, eight kinds of features are selected through 16 feature selection methods, and the selected features are input into KNN to predict protein disorder. The quality of feature selection methods is evaluated based on the accuracy of prediction, which are represented in Figure 3 and Supplementary Figures 5–8.

Figure 3.

The comparison between the accuracy of support vector machine prediction and that of single class feature prediction after selecting the top 10 features. For each graph, the selection method is arranged from left to right and from top to bottom. They are GA, and there are nine selection methods of mutual information, relief, sequentialfs, linear SVM, nonlinear SVM, kurtosis and skewness. The horizontal axis represents sequence features, which are PSSM, go, Kmer, RCTD, PRseAAC, correlation, order, and position, respectively.

It can be seen from Figure 3 and Supplementary Figures 5–8 that when PSSM feature, go feature and Kmer feature are input into KNN algorithm for prediction, the change values of their accuracy are 51.28%, 55.11% and 26.95%, respectively. It can be seen that after feature selection, the accuracy of protein disorder prediction is significantly improved. When selecting 10 features, SPECCMI_FS performs best based on Kmer feature, and its accuracy by 71%. When selecting the first 20 and 30 features, the nonlinear SVM feature selection method is particularly prominent in Kmer features, and its accuracy has increased by 64.19%. Among the top 40 features selected, CIFEFS selection method performs best in Kmer features, and the accuracy is improved to 65.21%. Among the top 50 features selected, CIFEFS and linear SVM selection methods are outstanding, and the accuracy has increased by 59.61%. The above results show that for protein disorder data sets, SPECCMI_FS, CIFEFS, nonlinear SVM, and linear SVM feature selection methods can select core features from Kmer features, which improve its accuracy by 59.61% ~71%.

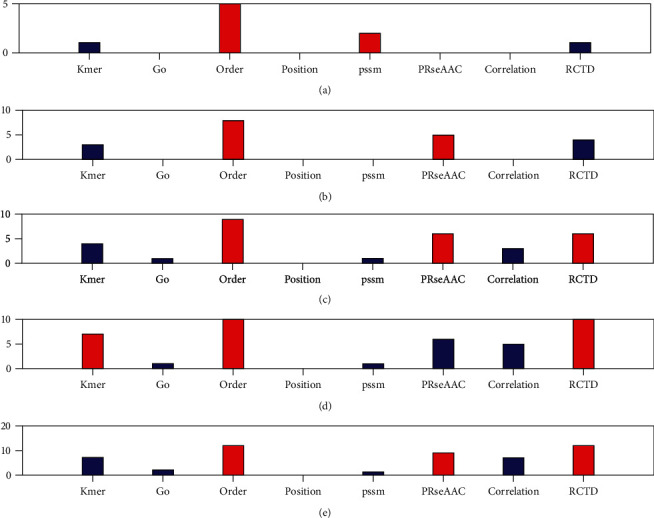

We also further compared the types of selected features. First, eight types of features are fused, and the fused features are selected through 16 feature selection methods, and the top 10-50 features are selected. Then, the number of eight types of features in the top 10-50 total selected features is counted, and the preference of eight types of features is evaluated by proportion. Figure 4 shows the number of 8 types of features in the top 10-50 total selected features in the protein disorder data set.

Figure 4.

The number of 8 types of features in the top selected features in the protein structural data. From (a) to (e), it means that the number of selected features is 10 to 50, respectively.

Figure 4 shows the number of features selected at five levels from top to bottom. If the top 10 fusion features are selected, 5 of them are from order features. If the first 20 fusion features are selected, 8 of them are from order features. If the first 30 fusion features are selected, 9 of them are from order features. If the first 40 fusion features are selected, there are 10 features from order and RCTD, respectively. If the top 50 fusion features are selected, 12 of them are from order and RCTD features, respectively. Therefore, the order and RCTD feature will help to improve the accuracy of the protein disorder prediction.

3.3. Comparison of Feature Selection in Protein Molecular Chaperone Prediction

We then discussed the efficiency of different feature selection methods in protein molecular chaperone prediction. In the data set used in this work, there are 109 proteins that need Dnak/GroEL molecular chaperones to fold correctly, and the remaining 39 proteins that can fold autonomously. In this work, eight kinds of features are selected through 16 feature selection methods, and the selected features are input into KNN to predict protein disorder. The quality of feature selection methods is evaluated based on the accuracy of prediction, which are represented in Figure 5 and Supplementary Figures 9–12.

Figure 5.

The comparison between the accuracy of support vector machine prediction and that of single class feature prediction after selecting the top 10 features. For each graph, the selection method is arranged from left to right and from top to bottom. They are GA, and there are nine selection methods of mutual information, relief, sequentialfs, linear SVM, nonlinear SVM, kurtosis, and skewness. The horizontal axis represents sequence features, which are PSSM, go, Kmer, RCTD, PRseAAC, correlation, order, and position, respectively.

Figure 5 and Supplementary Figures 9–12 show that when selecting the top 10 and 20 features, the accuracy of GO feature selection using nonlinear SVM is improved by 13.16% and 14.48%. When selecting the first 30 and 50 features, the accuracy of using sequentialfs to select RCTD features is improved by 13.16% and 17.17%. When selecting the first 40 features, linear SVM is used to select Kmer features, which improves its accuracy by 14.48%. Therefore, nonlinear SVM, sequentialfs and linear SVM are used to select features in the molecular chaperone prediction, which improves its accuracy by 13.16% ~17.17%.

We also further compared the types of selected features. First, eight types of features are fused, and the fused features are selected through 16 feature selection methods, and the top 10-50 features are selected. Then, the number of eight types of features in the top 10-50 total selected features is counted, and the preference of eight types of features is evaluated by proportion. Figure 6 shows the number of 8 types of features in the top 10-50 total selected features in the protein disorder data set.

Figure 6.

The number of 8 types of features in the top selected features in the protein structural data. From (a) to (e), it means that the number of selected features is 10 to 50, respectively.

When selecting 10 comprehensive features, there are 5 RCTD features, accounting for 50%. When selecting 20-30 comprehensive features, PSSM features have an absolute advantage, with 19, 26, 39, and 47 selected, respectively. It can be seen that PSSM is the preferred feature if you want to check whether a protein sequence is self-folding or molecular chaperone to help complete the correct folding.

3.4. Comparison of Feature Selection in Protein Solubility Prediction

Finally, the efficiency of different feature selection methods in protein solubility prediction is discussed. In this work, more than 7000 proteins from E. coli were selected and sorted according to their solubility. The first 1000 protein sequences with higher solubility and the last 1000 protein sequences with the lowest solubility were taken out to form a protein sequence data set. Through 16 feature selection methods, 8 kinds of features are selected, respectively, and the selected features are input into KNN to predict the solubility of protein. The quality of feature selection methods is evaluated based on the accuracy of prediction, which are represented in Figure 7 and Supplementary Figures 13–16.

Figure 7.

The comparison between the accuracy of support vector machine prediction and that of single class feature prediction after selecting the top 10 features. For each graph, the selection method is arranged from left to right and from top to bottom. They are GA, and there are nine selection methods of mutual information, relief, sequentialfs, linear SVM, nonlinear SVM, kurtosis, and skewness. The horizontal axis represents sequence features, which are PSSM, go, Kmer, RCTD, PRseAAC, correlation, order, and position, respectively.

When selecting 10 and 20 features, using CIFEFS based on mutual information to select RCTD features, the accuracy is improved the most, which is 3.93% and 3.88%, respectively. When selecting 30 features, using sequentialfs to select RCTD features, the accuracy is improved by 3.12%. When 40 and 50 features are selected, the accuracy of nonlinear SVM is improved by 3.12% and 4.76%, respectively. The above results show that CIFEFS, sequentialfs and nonlinear SVM feature selection methods perform well in protein solubility prediction.

We also further compared the types of selected features. First, eight types of features are fused, and the fused features are selected through 16 feature selection methods, and the top 10-50 features are selected. Then, the number of eight types of features in the top 10-50 total selected features is counted, and the preference of eight types of features is evaluated by proportion. Figure 8 shows the number of 8 types of features in the top 10-50 total selected features in the protein disorder data set.

Figure 8.

The number of 8 types of features in the top selected features in the protein structural data. From (a) to (e), it means that the number of selected features is 10 to 50, respectively.

When selecting 10-50 comprehensive features, PSSM features always account for the most, with 6, 7, 11, 23 and 28 PSSM features, accounting for 60%, 35%, 36.67%, 50.75% and 56% of the total. Therefore, using PSSM characteristics as input features to predict the solubility of new protein sequences is more reliable [45].

3.5. Comparison of Calculation Efficiency of Various Methods

The above analysis shows that the nonlinear SVM feature selection method based on support vector machine performs well in the prediction of various protein structures and functions. In order to further study the computational efficiency of feature selection methods, we calculated the time-consuming of various feature selection methods to select 8 types of features, as shown in Table 2. Mutual information represents the average time of the nine selection methods. It is not difficult to find that the nonlinear SVM selection method is related to the size of matrix elements. The larger the data elements, the longer the time required. Therefore, the matrix is normalized before feature selection. Sequentialfs consumes the most time, and the time-consuming ratio of nonlinear SVM, linear SVM, and single mutual information selection method is 2.5: 27.5 : 1. Therefore, the nonlinear SVM selection method is the preferred feature selection method in the prediction of protein structure and function.

Table 2.

Time consumption of feature selection methods.

| Mutual Information (/S) |

Sequentialfs (/S) | Linear-svm (/S) | Nonlinear-svm (/S) | |

|---|---|---|---|---|

| PSSM | 5.8 | 14074 | 23.8 | 2082.4 |

| Go | 360.33 | — | 42.4 | 0.75 |

| RCTD | 4.2 | 5571 | 11.7 | 1.3 |

| Kmer | 6.3 | 7423 | 18.9 | 1.7 |

| PRseAAC | 0.67 | 5.83 | 4.32 | 0.36 |

| Order | 1 | 35.2 | 22.8 | 270.5 |

| Position | 0.75 | 2.09 | 2.04 | 0.17 |

| Correlation | 0.62 | 3.87 | 1.39 | 0.33 |

4. Conclusion

Feature selection can reduce the problem of over fitting, improves the performance of the model, and reduces the time and space cost of the learning algorithm. 16 feature selection methods used in this work are feature selection method based on mutual information, feature selection method based on support vector machine, feature selection method based on genetic algorithm, feature selection method based on kurtosis and skewness, ReliefF ,and sequentialfs information selection methods. Different feature selection methods were compared and analyzed in protein structure class prediction, protein disorder prediction, protein molecular chaperone prediction, and protein solubility prediction.

Through a comprehensive comparison and discussion, we found that nonlinear SVM feature selection method performs best in protein structure prediction, the first choice is order feature, followed by RCTD feature. In protein disorder prediction, SPECCMI_FS, CIFEFS, nonlinear SVM, and linear SVM feature selection methods can select core features from Kmer features, which improves its accuracy by 59.61% ~71%. At the same time, order or RCTD features as input information will help to improve the accuracy of prediction. In protein molecular chaperone prediction, nonlinear SVM, sequentialfs, and linear SVM are used to select features, which improves the accuracy by 13.16% ~17.17%, and the preferred feature is PSSM feature. In protein solubility prediction, CIFEFS, sequentialfs, and nonlinear SVM feature selection methods perform well, and PSSM is the preferred feature. These results can be regarded as some novel valuable guidelines for use of the feature selection method for protein structure and function prediction.

Acknowledgments

This work is supported by the National Natural Science Foundation of China (62172369); Key Research and Development Plan of Zhejiang Province (2021C02039); and Zhejiang Provincial Natural Science Foundation of China (LY20F020016). The authors thank all the anonymous referees for their valuable suggestions and support.

Contributor Information

Wei Zhang, Email: zhangweicse@zstu.edu.cn.

Qi Dai, Email: daiailiu04@yahoo.com.

Data Availability

The data are available in https://github.com/bioinfo0706/RaaMLab.

Conflicts of Interest

The authors declare that there are no conflicts of interest, financial, or otherwise.

Supplementary Materials

Supplementary Figures 1‑16 are the precision comparison between support vector machine prediction and single class feature prediction based on the selected 20, 30, 40 and 50 features.

References

- 1.Klein P., Delisi C. Prediction of protein structural class from the amino acid sequence. Biopolymers . 1986;25(9):1659–1672. doi: 10.1002/bip.360250909. [DOI] [PubMed] [Google Scholar]

- 2.Chou K. C. Structural bioinformatics and its impact to biomedical science and drug discovery. Frontiers in Medicinal Chemistry . 2006;3(1):455–502. doi: 10.2174/978160805206610603010455. [DOI] [PubMed] [Google Scholar]

- 3.Chothia C., Levitt M. Structural patterns in globular proteins. Nature . 1976;261(5561):552–558. doi: 10.1038/261552a0. [DOI] [PubMed] [Google Scholar]

- 4.Andreeva A., Howorth D., Brenner S. E., Hubbard T. J. P., Chothia C., Murzin A. G. SCOP database in 2004: refinements integrate structure and sequence family data. Nucleic Acids Research . 2004;32(90001):226D–2269. doi: 10.1093/nar/gkh039. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Murzin A. G., Brenner S. E., Hubbard T., Chothia C. SCOP: a structural classification of proteins database for the investigation of sequences and structures. Journal of Molecular Biology . 1995;247(4):536–540. doi: 10.1016/S0022-2836(05)80134-2. [DOI] [PubMed] [Google Scholar]

- 6.Ferragina P., Giancarlo R., Greco V., Manzini G., Valiente G. Compression-based classification of biological sequences and structures via the universal similarity metric: experimental assessment. BMC Bioinformatics . 2007;8(1):p. 252. doi: 10.1186/1471-2105-8-252. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Qi D., Wang T. Comparison study on k-word statistical measures for protein: from sequence to 'sequence space'. BMC Bioinformatics . 2008;9(1):394–394. doi: 10.1186/1471-2105-9-394. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Chen C., Tian Y. X., Zou X. Y., Cai P. X., Mo J. Y. Using pseudo-amino acid composition and support vector machine to predict protein structural class. Journal of Theoretical Biology . 2006;243(3):444–448. doi: 10.1016/j.jtbi.2006.06.025. [DOI] [PubMed] [Google Scholar]

- 9.Chou K. C. Prediction of protein structural classes and subcellular locations. Current Protein & Peptide Science . 2000;1(2):171–208. doi: 10.2174/1389203003381379. [DOI] [PubMed] [Google Scholar]

- 10.Kedarisetti K. D., Kurgan L., Dick S. Classifier ensembles for protein structural class prediction with varying homology. Biochemical and Biophysical Research Communications . 2006;348(3):981–988. doi: 10.1016/j.bbrc.2006.07.141. [DOI] [PubMed] [Google Scholar]

- 11.Qi D., Li W., Li L. Improving protein structural class prediction using novel combined sequence information and predicted secondary structural features. Journal of Computational Chemistry . 2011;32(16):3393–3398. doi: 10.1002/jcc.21918. [DOI] [PubMed] [Google Scholar]

- 12.Chou K. C. A key driving force in determination of protein structural classes. Biochemical & Biophysical Research Communications . 1999;264(1):216–224. doi: 10.1006/bbrc.1999.1325. [DOI] [PubMed] [Google Scholar]

- 13.Chou K. C., Shen H. B. Recent progress in protein subcellular location prediction. Analytical Biochemistry . 2007;370(1):1–16. doi: 10.1016/j.ab.2007.07.006. [DOI] [PubMed] [Google Scholar]

- 14.Luo R. Y., Feng Z. P., Liu J. K. Prediction of protein structural class by amino acid and polypeptide composition. European Journal of Biochemistry . 2002;269(17):4219–4225. doi: 10.1046/j.1432-1033.2002.03115.x. [DOI] [PubMed] [Google Scholar]

- 15.Sun X. D., Huang R. B. Prediction of protein structural classes using support vector machines. Amino Acids . 2006;30(4):469–475. doi: 10.1007/s00726-005-0239-0. [DOI] [PubMed] [Google Scholar]

- 16.Zhang S., Liang Y., Yuan X. Improving the prediction accuracy of protein structural class: approached with alternating word frequency and normalized Lempel-Ziv complexity. Journal of Theoretical Biology . 2014;341(1):71–77. doi: 10.1016/j.jtbi.2013.10.002. [DOI] [PubMed] [Google Scholar]

- 17.Ding Y. S., Zhang T. L., Chou K. C. Prediction of protein structure classes with pseudo amino acid composition and fuzzy support vector machine network. Protein and Peptide Letters . 2007;14(8):811–815. doi: 10.2174/092986607781483778. [DOI] [PubMed] [Google Scholar]

- 18.Wu L., Dai Q., Han B., Zhu L., Li L. Combining sequence information and predicted secondary structural feature to predict protein structural classes. 2011 5th International Conference on Bioinformatics and Biomedical Engineering; 2011 May 10; Wuhan, China. pp. 1–4. [DOI] [Google Scholar]

- 19.Liao B., Xiang Q., Li D. Incorporating secondary features into the general form of Chou's PseAAC for predicting protein structural class. Protein & Peptide Letters . 2012;19(11):1133–1138. doi: 10.2174/092986612803217051. [DOI] [PubMed] [Google Scholar]

- 20.Kong L., Zhang L., Lv J. Accurate prediction of protein structural classes by incorporating predicted secondary structure information into the general form of Chou's pseudo amino acid composition. Journal of Theoretical Biology . 2014;344(1):12–18. doi: 10.1016/j.jtbi.2013.11.021. [DOI] [PubMed] [Google Scholar]

- 21.Rahman M. S., Shatabda S., Saha S., Kaykobad M., Rahman M. S. DPP-PseAAC: a DNA-binding protein prediction model using Chou's general PseAAC. Journal of Theoretical Biology . 2018;452:22–34. doi: 10.1016/j.jtbi.2018.05.006. [DOI] [PubMed] [Google Scholar]

- 22.Zuo Y., Li Y., Chen Y., Li G., Yan Z., Yang L. PseKRAAC: a flexible web server for generating pseudo K-tuple reduced amino acids composition. Bioinformatics . 2017;33(1):122–124. doi: 10.1093/bioinformatics/btw564. [DOI] [PubMed] [Google Scholar]

- 23.Chou K. C., Cai Y. D. Prediction of protein subcellular locations by GO-FunD-PseAA predictor. Biochemical and Biophysical Research Communications . 2004;320(4):1236–1239. doi: 10.1016/j.bbrc.2004.06.073. [DOI] [PubMed] [Google Scholar]

- 24.Kurgan L., Cios K., Chen K. SCPRED: accurate prediction of protein structural class for sequences of twilight-zone similarity with predicting sequences. BMC Bioinformatics . 2008;9(1):p. 226. doi: 10.1186/1471-2105-9-226. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 25.Zhang S., Ding S., Wang T. High-accuracy prediction of protein structural class for low-similarity sequences based on predicted secondary structure. Biochimie . 2011;93(4):710–714. doi: 10.1016/j.biochi.2011.01.001. [DOI] [PubMed] [Google Scholar]

- 26.Dai Q., Li Y., Liu X., Yao Y., Cao Y., He P. Comparison study on statistical features of predicted secondary structures for protein structural class prediction: From content to position. BMC Bioinformatics . 2013;14(1):1–4. doi: 10.1186/1471-2105-14-152. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 27.Ding H., Lin H., Chen W., et al. Prediction of protein structural classes based on feature selection technique. Interdisciplinary Sciences: Computational Life Sciences . 2014;6(3):235–240. doi: 10.1007/s12539-013-0205-6. [DOI] [PubMed] [Google Scholar]

- 28.Chen C., Chen L. X., Zou X. Y., Cai P. X. Predicting protein structural class based on multi-features fusion. Journal of Theoretical Biology . 2008;253(2):388–392. doi: 10.1016/j.jtbi.2008.03.009. [DOI] [PubMed] [Google Scholar]

- 29.Kumar A. V., Ali R. F. M., Cao Y., Krishnan V. V. Application of data mining tools for classification of protein structural class from residue based averaged NMR chemical shifts. Biochimica et Biophysica Acta . 2015;1854(10):1545–1552. doi: 10.1016/j.bbapap.2015.02.016. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 30.Nanni L., Brahnam S., Lumini A. Prediction of protein structure classes by incorporating different protein descriptors into general Chou's pseudo amino acid composition. Journal of Theoretical Biology . 2014;360:109–116. doi: 10.1016/j.jtbi.2014.07.003. [DOI] [PubMed] [Google Scholar]

- 31.Wang J., Wang C., Cao J., Liu X., Yao Y., Dai Q. Prediction of protein structural classes for low-similarity sequences using reduced PSSM and position-based secondary structural features. Gene . 2015;554(2):241–248. doi: 10.1016/j.gene.2014.10.037. [DOI] [PubMed] [Google Scholar]

- 32.Antes I., Siu S. W. I., Lengauer T. DynaPred: a structure and sequence based method for the prediction of MHC class I binding peptide sequences and conformations. Bioinformatics . 2006;22(14):e16–e24. doi: 10.1093/bioinformatics/btl216. [DOI] [PubMed] [Google Scholar]

- 33.Klus P., Bolognesi B., Agostini F., Marchese D., Zanzoni A., Tartaglia G. G. The cleverSuite approach for protein characterization: predictions of structural properties, solubility, chaperone requirements and RNA-binding abilities. Bioinformatics . 2014;30(11):1601–1608. doi: 10.1093/bioinformatics/btu074. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 34.Colonna G., Costantini M., Costantini S. Frequencies of specific peptides in intrinsic disordered protein domains. Protein & Peptide Letters . 2010;17(11):1398–1402. doi: 10.2174/0929866511009011398. [DOI] [PubMed] [Google Scholar]

- 35.Boeckmann B., Bairoch A., Apweiler R., et al. The SWISS-PROT protein knowledgebase and its supplement TrEMBL in 2003. Nucleic Acids Research . 2003;31(1):365–370. doi: 10.1093/nar/gkg095. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 36.Chang C. C. H., Song J., Tey B. T., Ramanan R. N. Bioinformatics approaches for improved recombinant protein production in Escherichia coli: protein solubility prediction. Briefings in Bioinformatics . 2014;15(6):953–962. doi: 10.1093/bib/bbt057. [DOI] [PubMed] [Google Scholar]

- 37.Idicula-Thomas S., Balaji P. V. Understanding the relationship between the primary structure of proteins and their amyloidogenic propensity: clues from inclusion body formation. Protein Engineering Design & Selection . 2005;18(4):175–180. doi: 10.1093/protein/gzi022. [DOI] [PubMed] [Google Scholar]

- 38.Nguyen X. V., Chan J., Romano S., Bailey J. Effective global approaches for mutual information based feature selection. Acm Sigkdd International Conference on Knowledge Discovery & Data Mining; 2014; New York, New York, USA. pp. 512–521. [Google Scholar]

- 39.Yang Y., Chen L. Identification of drug-disease associations by using multiple drug and disease networks. Current Bioinformatics . 2022;17(1):48–59. doi: 10.2174/1574893616666210825115406. [DOI] [Google Scholar]

- 40.Li X., Lu L., Chen L. Identification of protein functions in mouse with a label space partition method. Mathematical Biosciences and Engineering . 2021;19(4):3820–3824. doi: 10.3934/mbe.2022176. [DOI] [PubMed] [Google Scholar]

- 41.Pan X., Chen L., Liu, Niu Z., Huang T., Cai Y. D. Identifying protein subcellular locations with embeddings-based node2loc. IEEE/ACM Transactions on Computational Biology and Bioinformatics . 2021;19(1):1–675. doi: 10.1109/TCBB.2021.3080386. [DOI] [PubMed] [Google Scholar]

- 42.Zhou J. P., Chen L., Guo Z. H. iATC-NRAKEL: an efficient multi-label classifier for recognizing anatomical therapeutic chemical classes of drugs. Bioinformatics . 2020;36(5):1391–1396. doi: 10.1093/bioinformatics/btz757. [DOI] [PubMed] [Google Scholar]

- 43.Van Durme J., Maurer-Stroh S., Gallardo R., Wilkinson H., Rousseau F., Schymkowitz J. Accurate prediction of DnaK-peptide binding via homology modelling and experimental data. PLoS Computational Biology . 2009;5(8, article e1000475) doi: 10.1371/journal.pcbi.1000475. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 44.Fernandez-Escamilla A. M., Rousseau F., Schymkowitz J., Serrano L. Prediction of sequence-dependent and mutational effects on the aggregation of peptides and proteins. Nature Biotechnology . 2004;22(10):1302–1306. doi: 10.1038/nbt1012. [DOI] [PubMed] [Google Scholar]

- 45.Winkelmann J., Calloni G., Campioni S., Mannini B., Taddei N., Chiti F. Low-level expression of a folding-incompetent protein in Escherichia coli: search for the molecular determinants of protein aggregation in vivo. Journal of Molecular Biology . 2010;398(4):600–613. doi: 10.1016/j.jmb.2010.03.030. [DOI] [PubMed] [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.

Supplementary Materials

Supplementary Figures 1‑16 are the precision comparison between support vector machine prediction and single class feature prediction based on the selected 20, 30, 40 and 50 features.

Data Availability Statement

The data are available in https://github.com/bioinfo0706/RaaMLab.